Abstract

Purpose

The present study was designed to evaluate use of spectral and temporal cues under conditions in which both types of cues were available.

Method

Participants included adults with normal hearing and adults with hearing loss. We focused on three categories of speech cues: static spectral (spectral shape), dynamic spectral (formant change), and temporal (amplitude envelope). Spectral and/or temporal dimensions of synthetic speech were systematically manipulated along a continuum, and recognition was measured using the manipulated stimuli. Level was controlled to ensure cue audibility. Discriminant function analysis was used to determine the degree to which spectral and temporal information contributed to the identification of each stimulus.

Results

Listeners with normal hearing were influenced to a greater extent by spectral cues for all stimuli. Listeners with hearing impairment generally utilized spectral cues when the information was static (spectral shape) but used temporal cues when the information was dynamic (formant transition). The relative use of spectral and temporal dimensions varied among individuals, especially among listeners with hearing loss.

Conclusion

Information about spectral and temporal cue use may aid in identifying listeners who rely to a greater extent on particular acoustic cues and applying that information toward therapeutic interventions.

Listeners with hearing loss often report that speech sounds distorted. Some of the “distortion” is due to threshold elevation, which reduces consonant audibility (Humes, 2007; Singh & Allen, 2012). However, there are also situations in which speech sounds are audible, but speech recognition is still poor (e.g., Bernstein, Mehraei, et al., 2013; Bernstein, Summers, Grassi, & Grant, 2013; Souza, Boike, Witherell, & Tremblay, 2007). Those problems are often attributed to a generalized problem resolving the spectral and/or temporal cues in speech.

Spectral cues to consonant place of articulation include the frequency characteristics of the release burst or frication noise in obstruents (e.g., LaRiviere, Winitz, & Herriman, 1975) and the onset frequencies of the formants, with the resulting formant transitions, in sonorants and obstruents (e.g., Dorman, Studdert-Kennedy, & Raphael, 1977). Temporal cues derived from the amplitude envelope include the duration of the sound and the rise time at consonant onset. Rise time and duration provide information about consonant manner (e.g., /ʃ/–/tʃ/–/t/), vowel identity (e.g., /ɪ/–/i/; Howell & Rosen, 1983; Rosen, 1992), and consonant voicing (Stevens, Blumstein, Glicksman, Burton, & Kurowski, 1992). It is well known that there are one-to-many and many-to-one relationships between speech cues and speech sounds, resulting in substantial informational redundancy. That redundancy, in turn, underlies the remarkable perceptual robustness of the speech signal (see discussions in Goldinger & Azuma, 2003; Nearey, 1997; Wright, 2004). For listeners with normal hearing presented with everyday speech, when one aspect of the signal is distorted, the listener may be able to shift to an alternative cue (Repp, 1983).

The same level of redundancy is unlikely for listeners with hearing loss. Some listeners with hearing loss have broadened auditory filters (Faulkner, Rosen, & Moore, 1990; Glasberg & Moore, 1986; Souza, Wright, & Bor, 2012), which could limit their ability to resolve and use spectral cues. That limitation is supported by studies that show listeners with hearing loss have difficulty identifying consonants when the frequency content of the consonant falls into a region of broadened auditory filters (Dubno, Dirks, & Ellison, 1989; Preminger & Wiley, 1985) and have more difficulty identifying vowels based on formant patterns as compared to the average performance of listeners with normal hearing (Leek & Summers, 1996; Molis & Leek, 2011; Souza, Wright, & Bor, 2012; Turner & Henn, 1989). Other studies show that formant transitions—when formant frequency varies dynamically across the coarticulation point of two sounds—may be particularly difficult for listeners with hearing loss (Carpenter & Shahin, 2013; Coez et al., 2010; Hedrick, 1997; Hedrick & Younger, 2007; Stelmachowicz, Kopun, Mace, Lewis, & Nittrouer, 1995; Turner, Smith, Aldridge, & Stewart, 1997; Zeng & Turner, 1990). In most of these studies, however, there were also individuals with hearing loss who showed similar abilities to those with normal hearing, suggesting that ability to resolve spectral cues cannot be easily predicted from the individual audiogram.

For listeners for whom spectral cues are less accessible, it seems reasonable to expect greater reliance on temporal cues. The idea that listeners with hearing loss will be forced to shift reliance to temporal cues has been put forth in several articles (Boothroyd, Springer, Smith, & Schulman, 1988; Christensen & Humes, 1997; Davies-Venn & Souza, 2014; Davies-Venn, Souza, Brennan, & Stecker, 2009; Souza, Jenstad, & Folino, 2005). That hypothesis relies, in part, on the idea that temporal cues will be more resistant to degradation from hearing loss than spectral cues, provided the listener can access a sufficiently wide signal bandwidth. However, although many individuals with hearing loss have good temporal cue perception (Reed, Braida, & Zurek, 2009), others—particularly older listeners—demonstrate reduced temporal cue perception (e.g., Brennan, Gallun, Souza, & Stecker, 2013). This makes it difficult to predict the relative use of spectral versus temporal cues with any precision.

Most studies of cue use by listeners with hearing loss used a single-cue paradigm; that is, they varied one dimension (either spectral or temporal) and assessed performance on that basis (e.g., Coez et al., 2010; Hedrick & Rice, 2000; Souza & Kitch, 2001; Turner et al., 1997). With that approach, it is difficult to say with certainty whether a particular listener with hearing loss listening to speech that contains both spectral and temporal information—as would be the case in most everyday listening situations—will be utilizing temporal cues, spectral cues, or both. Individual characterization could provide insight into auditory damage patterns and may also be useful in a practical way. For example, amplification parameters could be set to avoid distorting information useful to that individual. To illustrate this idea, consider two hypothetical listeners: one who has relatively good spectral resolution and can utilize spectral cues to speech and one who has reduced spectral resolution and consequently depends to a greater extent on temporal cues. Without this distinction, a common rehabilitation approach might be to use fast-acting compression amplification to improve audibility of low-intensity phonemes. Because that type of amplification would also distort temporal envelope cues (Jenstad & Souza, 2005), it is possible that such a strategy may be detrimental to the listener who depends to a greater extent on temporal cues (Davies-Venn & Souza, 2014). Conversely, use of a high number of compression channels—which may improve audibility at the expense of smoothed frequency contrasts (Bor, Souza, & Wright, 2008)—may be detrimental to the listener who depends to a greater extent on spectral cues. Although such arguments are speculative, a necessary first step in disentangling these issues is to understand how reliance on spectral and temporal properties varies among individuals with hearing loss.

It seems likely that, as a group, adults with hearing loss will tend to rely more on temporal cues when both spectral and temporal cues are available (e.g., Hedrick & Younger, 2003, 2007; Lindholm, Dorman, Taylor, & Hannley, 1988). Some studies hint that individuals with hearing loss may be accessing different stimulus dimensions. Within a small sample (three participants with hearing loss), Hedrick and Younger (2001) demonstrated three different patterns of responses to synthetically modified speech cues: one listener was sensitive to both amplitude envelope and formant transition (similar to listeners with normal hearing), a second listener was heavily influenced by amplitude envelope and insensitive to the formant transition, and a third listener did not appear to respond to either cue modification. Work with nonspeech signals (Wang & Humes, 2008) found that listeners with hearing loss were able to use an enhanced formant transition to improve sound labeling. However, that study also excluded several listeners who were unable to perform the task to a criterion level. Post hoc examination of the excluded listeners suggested that one of them used temporal cues to a greater extent than the listeners who were retained in the test group. Two of the listeners who were included in the test group but performed at low levels also showed evidence of greater temporal cue use. Taken together, these results suggest that individuals with hearing loss may show different sensitivity to temporal versus spectral cues.

The present study was designed to evaluate individual use of spectral and temporal cues under conditions in which both types of cues were available. We also aimed to test a more complete set of stimulus dimensions (static spectral, dynamic spectral, and temporal; low- and high-frequency cues) in the same set of individuals than previous studies have examined. Synthetic speech stimuli were used to provide precise control over the stimulus dimensions. We focused on three categories of speech cues: static spectral (spectral shape), dynamic spectral (formant change), and temporal (amplitude envelope). Two sets of stimuli were created. In one set, the spectral cue was the location of high-frequency frication noise, and the temporal cue was the envelope rise time and duration of frication. In the second set, the spectral cues were the onset frequencies of the formants and the rate of formant change, and the temporal cue was envelope rise time. In Experiment 1, we confirmed sensitivity to these speech dimensions in listeners with normal hearing. In Experiment 2, we examined variability among adults with sensorineural hearing loss while taking care to ensure sufficient audibility of the available cues.

Experiment 1

As a foundation for our work with listeners with hearing impairment, Experiment 1 was designed to demonstrate that listeners with normal hearing are sensitive to both spectral and temporal aspects of a synthetic speech stimulus. We further hypothesized that all listeners with normal hearing would show similar response patterns.

Method

Participants

Participants included 20 adults with normal hearing (15 women, five men), ages 19–30 years (mean age 22 years). All participants had bilaterally normal hearing, defined as thresholds of 20 dB HL or better (ANSI, 2004) at octave frequencies between 0.25 and 8.00 kHz; spoke English as their first language; completed an informed consent process; and were compensated for their time.

Stimuli

Two sets of stimuli were created to test the interaction between spectral cues and temporal cues. All stimuli were synthesized using the Synthworks implementation of the Klatt parametric synthesizer (Version 1112) running on a MacBook Pro with Mac OS X 10.7.4 and saved as individual sound files on a computer hard drive.

Stimulus Set 1. We first created a set of stimuli that varied in the distribution of energy in the frication spectrum. The frequency values were based on published values in Klatt (1980).¹ At one extreme, the signal spectrum had energy distributed between 3500 Hz and 10,000 Hz with a peak at 4500 Hz (typical of the alveolar consonant /s/; see Table 1, Column 2). At the other extreme, the signal spectrum had energy distributed between 1000 Hz and 5500 Hz with a peak at 3500 Hz (typical of the palatal consonant /ʃ/; see Table 1, Column 6). The resulting waveforms and spectrograms are shown in the top left and lower left panels of Figure 1. Intermediate values (see Table 1, Columns 3–5) were chosen to span the range between the alveolar and palatal “endpoints.”

Table 1.

Formant frequency (F) and bandwidth (b) values (in Hz) and formant-amplitude (a) values (in dB) along the alveolar (/s/–/ts/) to palatal (/ʃ/–/tʃ/) spectral continuum.

| Parameter | Alveolar |  |  |  | Palatal |
|---|---|---|---|---|---|
| F1 | 320 | 315 | 310 | 305 | 300 |
| F2 | 1396 | 1507 | 1618 | 1729 | 1840 |
| F3 | 2530 | 2585 | 2640 | 2695 | 2750 |
| b1 | 200 | 200 | 200 | 200 | 200 |
| b2 | 80 | 84 | 88 | 92 | 100 |
| b3 | 200 | 225 | 250 | 275 | 300 |
| a3 | 0 | 14 | 28 | 42 | 56 |
| a4 | 0 | 12 | 24 | 36 | 48 |
| a5 | 0 | 12 | 24 | 36 | 48 |
| a6 | 51 | 50 | 49 | 48 | 47 |

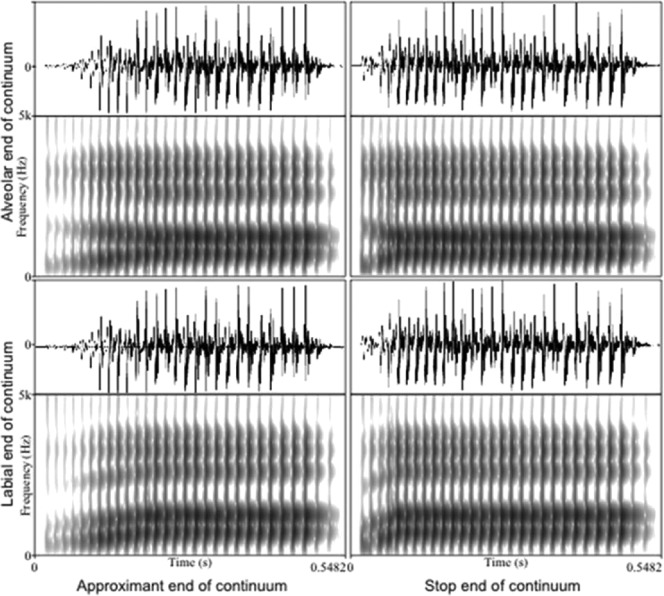

Figure 1.

Waveforms and spectrograms of the four stimulus endpoints with the temporal (fricative–affricate) dimension on the x-axis and the frequency (alveolar–palatal) dimension on the y-axis.

Next, we converted each unique frication spectrum (i.e., each column of Table 1) into five different tokens by manipulating the rise time and duration of the frication noise.² One end of the continuum had a 90-ms rise time and a 190-ms frication noise duration (typical of the fricatives /s/ or /ʃ/; see Figure 1, left panels). The other end had a 2-ms rise time and a 102-ms frication noise duration (typical of the affricates /ts/ or /tʃ/; see Figure 1, right panels). Intermediate values were chosen to span the range between the fricative and affricate “endpoints.”

In all of the stimuli, the vowel portion of the syllable was typical of the vowel /i/, with the following values (F = formant frequency, b = formant bandwidth): duration 325 ms, F1 310 Hz, F2 2100 Hz, F3 2700 Hz, b1 45 Hz, b2 200 Hz, b3 400 Hz. The fundamental frequency (f0) was 150 Hz for the initial 60 ms and then fell linearly to 100 Hz. The amplitude of voicing rose from 0 to 60 dB over the initial 40 ms, remained at 60 dB, and then fell to 0 dB over the final 60 ms of the stimulus.

Combining five steps on the spectral dimension with five steps on the temporal dimension created 25 (5 × 5) stimuli. The combinations of the most extreme values on each continuum created four “endpoints” with spectral and temporal characteristics typical of /si, ʃi, tsi, tʃi/ (see Figure 1).

Stimulus Set 2. The second stimulus set was similarly designed as a two-dimensional continuum with spectral variation on one dimension and temporal variation on the other. For this set, we focused on lower-frequency cues, a region in which participants with hearing loss were likely to have narrower auditory filters, better spectral resolution, and greater audibility. Stimulus Set 2 also used a dynamic spectral cue (formant transitions) rather than the relatively static spectra that constituted the spectral cue in Stimulus Set 1.

We first created a set of stimuli that varied in the onset frequencies and bandwidths of F2 and F3. The formant frequencies (F) and bandwidths (b) were based on values published in Klatt (1980).³ At one extreme, the formants had onset values of F2 1500 Hz, F3 2700 Hz, b2 100 Hz, b3 725 Hz (typical of the alveolar consonant /l/; see Table 2, Column 2). At the other extreme, the formants had onset values of F2 850 Hz, F3 2150 Hz, b2 95 Hz, b3 95 Hz (typical of the labial consonant /w/; see Table 2, Column 6). The remaining formant and bandwidth onset values were fixed: F1 250 Hz, F4 3250 Hz, b1 55 Hz, b4 200 Hz. The resulting waveforms and spectrograms are shown in the top left and lower left panels of Figure 2. Intermediate values (see Table 2, Columns 3–5) were chosen to span the range between the alveolar and labial “endpoints.”

Table 2.

Formant (F) and bandwidth (b) values (in Hz) for Stimulus Set 2.

| Parameter | Alveolar |  |  |  | Labial |
|---|---|---|---|---|---|
| F1 | 250 | 250 | 250 | 250 | 250 |
| F2 | 1500 | 1338 | 1175 | 1013 | 850 |
| F3 | 2700 | 2563 | 2425 | 2288 | 2150 |
| F4 | 3250 | 3250 | 3250 | 3250 | 3250 |
| F5 | 3700 | 3700 | 3700 | 3700 | 3700 |
| b1 | 55 | 55 | 55 | 55 | 55 |
| b2 | 100 | 99 | 98 | 96 | 95 |
| b3 | 725 | 568 | 410 | 253 | 95 |
| b4 | 200 | 200 | 200 | 200 | 200 |
| b5 | 200 | 200 | 200 | 200 | 200 |
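The F2, F3, b2, and b3 onset values in Table 2 are consistent with five equally spaced steps between the two endpoints, rounded to the nearest hertz with halves rounded up. The minimal Python sketch below regenerates those rows under that assumption. The function name `continuum` is ours and is not part of the synthesis software, and the rounding rule is inferred from the table rather than stated in the text.

```python
import math


def continuum(start, end, n_steps=5):
    """Return n_steps equally spaced values from start to end.

    Values are rounded half-up to the nearest whole hertz (an assumption
    inferred from Table 2; Python's built-in round() uses banker's rounding
    and would give 1012 rather than 1013 for the fourth F2 value).
    """
    step = (end - start) / (n_steps - 1)
    return [math.floor(start + i * step + 0.5) for i in range(n_steps)]


# Onset endpoints (Hz) for Stimulus Set 2: alveolar /l/ -> labial /w/.
ENDPOINTS = {"F2": (1500, 850), "F3": (2700, 2150), "b2": (100, 95), "b3": (725, 95)}

for name, (alveolar, labial) in ENDPOINTS.items():
    print(name, continuum(alveolar, labial))
```

The same function reproduces most Table 1 rows (e.g., F2 from 1396 to 1840 Hz in 111-Hz steps), but not the b2 row, whose last step is larger than the others, so the intermediate values there were evidently not all chosen by equal spacing.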

Figure 2.

Waveforms and spectrograms of the four stimulus endpoints with the temporal (stop–approximant) dimension on the x-axis and the frequency (alveolar–labial) dimension on the y-axis. Note the difference in formant location and transition near the onset of each stimulus.

Next, we converted each unique onset spectrum (i.e., each column of Table 2) into five different tokens by manipulating the amplitude rise time and the formant transition duration. One end of the continuum had a 60-ms rise time and a 110-ms formant transition (typical of the approximants /l/ or /w/; see Figure 2, left panels). The other end had a 10-ms rise time and a 40-ms formant transition (typical of the stops /d/ or /b/; see Figure 2, right panels). Intermediate values (Table 3) were chosen to span the range between the approximant and stop “endpoints.”

Table 3.

Starting amplitude of voicing (av), rise time duration to maximum voicing amplitude of 60 dB, and duration of formant transitions for Stimulus Set 2.

| Manner | Starting av value | Rise time | Formant transition |
|---|---|---|---|
| Stop | 60 dB | 10 ms | 40 ms |
|  | 50 dB | 20 ms | 50 ms |
|  | 40 dB | 30 ms | 70 ms |
|  | 40 dB | 40 ms | 90 ms |
| Approximant | 40 dB | 60 ms | 110 ms |

Each stimulus had a steady-state vowel portion that remained fixed as the vowel /a/, with the following formant frequency (F) and bandwidth (b) values: F1 700 Hz, F2 1200 Hz, F3 2600 Hz, b1 130 Hz, b2 70 Hz, b3 160 Hz. The fundamental frequency (f0) started at 120 Hz and fell linearly to 80 Hz across the 300-ms syllable. After the onset rise (Table 3), the amplitude of voicing was held at 60 dB until it fell to 0 dB over the final 60 ms of the syllable.
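The f0 and voicing-amplitude descriptions for Stimulus Set 2, together with Table 3, can be expressed as piecewise-linear parameter tracks. The sketch below assumes a 5-ms parameter-update interval and a rise in amplitude of voicing that is linear in dB from the starting value to 60 dB; neither detail is specified in the text, and the helper name `set2_tracks` is hypothetical.

```python
import numpy as np


def set2_tracks(start_av_db, rise_time_ms, duration_ms=300.0, frame_ms=5.0):
    """Piecewise-linear f0 (Hz) and amplitude-of-voicing (dB) tracks for one Set 2 token.

    Assumptions (not stated in the text): parameters are updated every
    frame_ms, and AV rises linearly in dB from start_av_db to 60 dB.
    """
    # Frame times from 0 to duration_ms inclusive.
    t = np.arange(0.0, duration_ms + frame_ms / 2, frame_ms)
    # f0 falls linearly from 120 Hz to 80 Hz across the whole syllable.
    f0 = np.interp(t, [0.0, duration_ms], [120.0, 80.0])
    # AV: onset rise (Table 3), hold at 60 dB, then fall to 0 dB over the final 60 ms.
    av = np.interp(
        t,
        [0.0, rise_time_ms, duration_ms - 60.0, duration_ms],
        [start_av_db, 60.0, 60.0, 0.0],
    )
    return t, f0, av


# Approximant endpoint from Table 3: AV starts at 40 dB and reaches 60 dB after 60 ms.
t, f0, av = set2_tracks(start_av_db=40.0, rise_time_ms=60.0)
```

For the stop endpoint (starting AV 60 dB, 10-ms rise), the same call yields a flat 60-dB onset, which is simply what the Table 3 values imply under this linear-ramp assumption.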

Combining five steps on the spectral dimension with five steps on the temporal dimension created 25 (5 × 5) stimuli. The combinations of the most extreme values on each continuum created four “endpoints” with spectral and temporal characteristics typical of /ba, da, wa, la/ (see Figure 2). Taken together, the two stimulus sets sampled listeners' ability to use both lower- and higher-frequency spectral cues as well as both static and dynamic spectral cues.

Test Procedure

Testing was conducted in a double-walled, sound-treated booth. The stimuli were converted from digital to analog format (TDT RX6) and passed through a programmable attenuator (TDT PA5) and headphone buffer (TDT HB7) for presentation via an ER-2 insert earphone to the participant's right ear. Participants used a computer mouse to select the endpoint they heard from a graphical display of four alternatives, labeled orthographically as “see,” “shee,” “chee,” and “tsee” (Stimulus Set 1) or “dah,” “lah,” “bah,” and “wah” (Stimulus Set 2).

Stimulus Set 1 was always tested first, followed by Stimulus Set 2. Within each stimulus set, the procedure consisted of three phases: familiarization, training, and testing. Familiarization was included to acquaint the listeners with the synthetic stimuli. During familiarization, participants listened to a block of 40 trials containing 10 presentations of each of the four endpoint stimuli in random order. The appropriate label was highlighted in green, and participants confirmed that they could pair the stimulus and label by clicking on the highlighted label. Following familiarization, the participant completed a training block of 40 trials, again with 10 presentations of each endpoint in random order. On each trial, the participant identified the token from the set of endpoint labels, and visual correct-answer feedback was provided after each response. Training continued until 160 trials (four blocks) had been completed or until the participant achieved 80% correct for each endpoint, whichever occurred first. For Experiment 1, only listeners with normal hearing who achieved 80% correct for each endpoint (n = 20 for Stimulus Set 1, n = 16 for Stimulus Set 2) proceeded to the test phase. During testing, participants completed 375 trials (15 trials of each of the 25 tokens, in random order) without correct-answer feedback. Rest breaks were given after 125 and 250 trials and between the two stimulus sets.
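The test-phase design (25 tokens × 15 repetitions = 375 trials, with breaks after trials 125 and 250) and the 80%-per-endpoint training criterion can be sketched as follows. This is an illustrative Python sketch, not the authors' presentation software: the function names are hypothetical, the endpoint coordinates for Stimulus Set 1 are our reading of the text (spectral Step 1 = alveolar, temporal Step 5 = 2-ms rise time), and we assume the criterion is checked on each 40-trial training block.

```python
import random
from collections import Counter
from itertools import product

# (spectral step, temporal step) for each Stimulus Set 1 endpoint (our reading of the text).
ENDPOINTS_SET1 = {(1, 1): "see", (5, 1): "shee", (1, 5): "tsee", (5, 5): "chee"}


def make_test_blocks(reps_per_token=15, n_blocks=3, seed=None):
    """Shuffle every token reps_per_token times and split into equal blocks."""
    tokens = list(product(range(1, 6), range(1, 6)))  # 25 (spectral, temporal) pairs
    trials = tokens * reps_per_token                    # 375 trials in total
    random.Random(seed).shuffle(trials)
    size = len(trials) // n_blocks                      # 125 trials per block
    return [trials[i * size:(i + 1) * size] for i in range(n_blocks)]


def met_training_criterion(block, criterion=0.80):
    """True if every endpoint presented in the block was labeled correctly >= criterion.

    block: sequence of (presented_label, chosen_label) pairs from one training block.
    """
    presented = Counter(p for p, _ in block)
    correct = Counter(p for p, c in block if p == c)
    return all(correct[label] / n >= criterion for label, n in presented.items())
```

For example, `make_test_blocks(seed=1)` returns three lists of 125 (spectral, temporal) pairs, with each of the 25 tokens appearing exactly 15 times overall; a rest break would fall between consecutive lists.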

Results

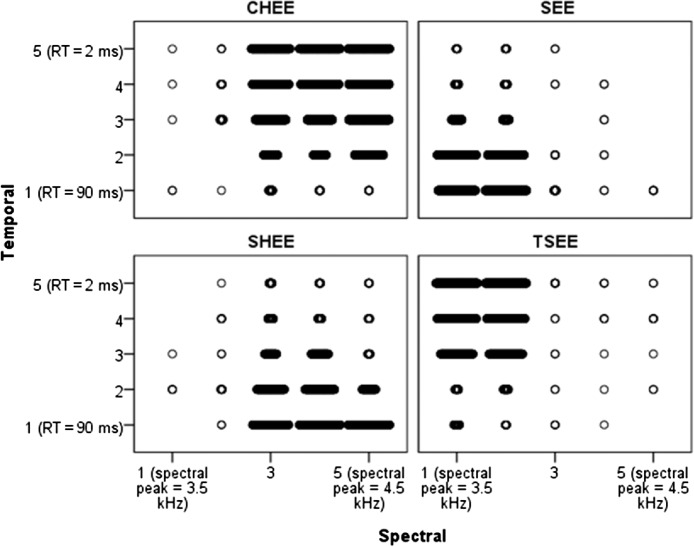

Figure 3 shows the raw data for Stimulus Set 1. Recall that each token varied along two dimensions, spectral (bandwidth and location of spectral energy) and temporal (rise time and duration), each represented by one of five steps along a continuum. The x- and y-axes represent the five steps of these continua. For ease of viewing, each of the four response alternatives is shown in a separate panel. Each point within a panel represents a single response. More points plotted for a given Spectral × Temporal stimulus indicate that the response was chosen more frequently; a single point indicates that it was rarely chosen.

Figure 3.

Stimulus Set 1 responses for listeners with normal hearing. Each panel represents the 25 possible Spectral × Temporal continua steps. Each point represents a single response. More frequent responses to a category are plotted as larger groupings of points. For example, listeners tended to make more “see” responses when the spectrum contained lower-frequency energy and the envelope had a long rise time (lower left corner of the top right “see” panel). For ease of viewing, axes are labeled using one of the acoustic parameters; for full parameter set, see Table 1. RT = rise time.

To interpret these data, consider how the frequency of a response changes as the spectral and/or temporal dimensions are varied. For example, comparing the top right and lower left panels shows that the number of /ʃ/ responses increases (and the number of /s/ responses decreases) as the stimulus moves along the spectral continuum from the alveolar toward the palatal endpoint. The listeners with normal hearing usually made clear distinctions between stimuli, with a high probability of identifying a given endpoint and a clear category boundary at roughly the midpoint of each continuum. For example, for a 2-ms rise time (Step 5 on the temporal continuum), Steps 1 and 2 on the spectral continuum were identified as “tsee” almost all of the time, and Steps 3–5 were identified as “chee” almost all of the time. The clear boundary in the group data also implies similar response patterns across individuals.

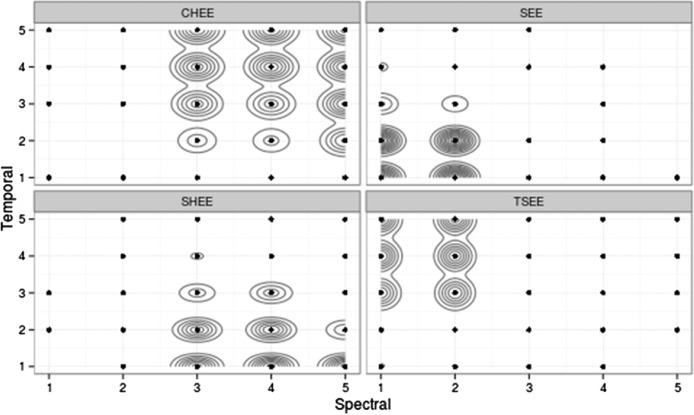

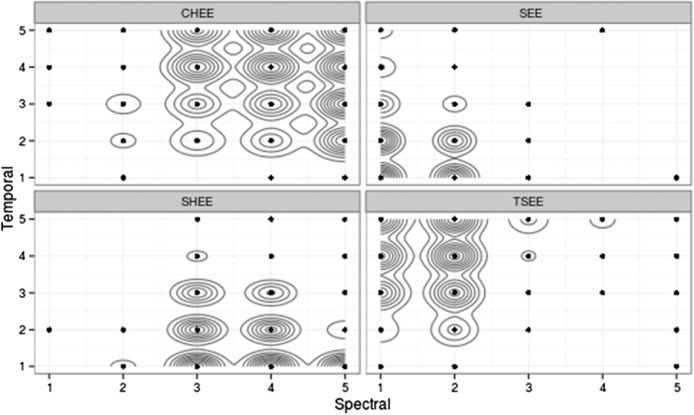

We were most interested in the relative contributions of spectral and temporal cues to consonant identification. Because both the independent and dependent measures were categorical, a nonparametric analysis was appropriate. As a first step, a bivariate kernel density estimate (KDE) was generated.⁴ In brief, the KDE estimates the underlying probability density function of the observed data. It is analogous to a two-dimensional histogram but without the constraints introduced by specifying histogram bin width and origin. Each data point in the two-dimensional (Spectral × Temporal) space was replaced by a smooth kernel centered on that point, and the kernels for all points were summed to obtain the final two-dimensional distribution (Silverman, 1986). The model used a standard bivariate normal kernel, and bandwidths were determined using the normal reference distribution function in R (R Core Team, 2013), based on Venables and Ripley (2002). The outcome of the KDE for Stimulus Set 1 is shown in Figure 4. Each contour line indicates an increase of approximately 10% in the probability that a token within it was assigned to the given response category. Contour size reflects variability: larger contours with more space between them indicate more variability, and smaller, more tightly spaced contours indicate less variability. Contiguous contours indicate a higher probability of confusion along the connected dimension. Figure 5 shows the corresponding results for Stimulus Set 2.
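The KDE step can be sketched in Python. Under our assumptions, the sketch below mirrors the approach described above: a product Gaussian kernel with a normal-reference bandwidth in each dimension, following the conventions of R's `MASS::bandwidth.nrd` and `MASS::kde2d`, on which ggplot2's 2-D density layer builds. The function names are ours, and the zero-IQR fallback is our addition for heavily tied categorical data; it is not part of the R functions.

```python
import numpy as np


def bandwidth_nrd(x):
    """Normal-reference bandwidth in the convention of R's MASS::bandwidth.nrd.

    MASS returns 4 * 1.06 * min(sd, IQR / 1.34) * n^(-1/5); kde2d later
    divides by 4, so the Gaussian kernel SD is a quarter of this value.
    Requires at least two distinct values in x.
    """
    x = np.asarray(x, dtype=float)
    q25, q75 = np.percentile(x, [25, 75])  # same interpolation as R's default quantile()
    spread = min(np.std(x, ddof=1), (q75 - q25) / 1.34)
    if spread == 0:  # our addition: tied categorical data can give IQR = 0
        spread = np.std(x, ddof=1)
    return 4 * 1.06 * spread * len(x) ** (-1 / 5)


def kde2d(x, y, n_grid=50, lims=None):
    """Bivariate Gaussian KDE evaluated on an n_grid x n_grid lattice.

    Returns grid coordinates gx, gy and densities z, where z[i, j] is the
    estimated density at (gx[i], gy[j]).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    hx, hy = bandwidth_nrd(x) / 4, bandwidth_nrd(y) / 4  # kernel SDs
    if lims is None:
        lims = (x.min(), x.max(), y.min(), y.max())
    gx = np.linspace(lims[0], lims[1], n_grid)
    gy = np.linspace(lims[2], lims[3], n_grid)

    def phi(u):  # standard normal density
        return np.exp(-0.5 * u ** 2) / np.sqrt(2 * np.pi)

    kx = phi((gx[:, None] - x[None, :]) / hx)  # shape (n_grid, n_obs)
    ky = phi((gy[:, None] - y[None, :]) / hy)  # shape (n_grid, n_obs)
    # Sum the product kernels over observations and normalize to unit volume.
    z = kx @ ky.T / (len(x) * hx * hy)
    return gx, gy, z
```

As we read the analysis, `x` and `y` would hold the spectral and temporal step values of every trial that received one response (for example, all “see” responses), giving one density surface per response category; contour lines such as those in Figure 4 would then be drawn on `z`.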

Figure 4.

Results of kernel density estimation (KDE) analysis (2d density estimation function from the ggplot2 package; Wickham, 2009) for listeners with normal hearing for Stimulus Set 1. Each classification is shown in its own graph for ease of reading, but the scales and axes are the same. A point in the graph indicates that at least one judgment of that type was observed for that combination of temporal and spectral cues. Each contour line represents approximately a 10% increase in likelihood of the given judgment for each point it encompasses. For example, the probability of a normal-hearing listener judging a token with a spectral and temporal value of 2 as “see” is near 100%.

Figure 5.

Results of KDE analysis for listeners with normal hearing for Stimulus Set 2. Note the contiguous distributions along the temporal dimension, suggesting greater certainty when identifying signals based on spectral cues (formants and/or bandwidth). Judgments were also more variable than for Stimulus Set 1, as shown by the larger contours.

From Figures 4 and 5, we can infer that (a) listeners with normal hearing were able to distinguish the various stimuli in both the spectral and temporal domains with a high degree of certainty, and (b) for both stimulus sets, listeners tended to make more distinct differentiations in the spectral than in the temporal domain, particularly for Stimulus Set 2.

Although the KDE offered a convenient way to visualize and qualitatively examine the data, it did not allow us to compare the relative weighting of cues quantitatively. To do so, we submitted the data to a discriminant function analysis (Lachenbruch & Goldstein, 1979). The output of a discriminant function analysis is an equation or set of equations indicating the degree to which each input variable contributes to the classification and how robust that classification is. Because the classification results of linear discriminant analysis (LDA) and quadratic discriminant analysis were almost identical for these data (correlation = .99 for each stimulus set), the simpler LDA model was selected. Separation between response classes was first tested to confirm that it was large enough to warrant a discriminant analysis; the classes were significantly distinct (Wilks's λ = 0.149, p < .0001).

LDA determines an optimal combination of variables such that the first discriminant function (LD1) provides the greatest discrimination among groups (here, the four response choices) and the second (LD2) provides the next greatest discrimination. The proportion of trace for each function is the proportion of the between-group variance accounted for by that function. Within each function, the absolute value of the coefficient, or “weight,” can be interpreted as reflecting the contribution of that variable (Hair, Black, Babin, & Anderson, 2010). Table 4 summarizes the results of the LDA.
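The quantities in Table 4 can be illustrated with a from-scratch Fisher LDA in Python. This sketch assumes, as we read the analysis, that each trial is one observation whose predictors are the spectral and temporal step values and whose class is the listener's response. Weights are scaled so that discriminant scores have unit pooled within-group variance (the normalization R's `MASS::lda` reports), and the proportion of trace is each eigenvalue's share of their sum. The signs of the weights are arbitrary. The data below are simulated for illustration and are not study data.

```python
import numpy as np


def lda(X, labels):
    """Fisher LDA: discriminant weights, proportion of trace, and Wilks's lambda."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    classes = np.unique(labels)
    n, p = X.shape
    g = len(classes)
    grand_mean = X.mean(axis=0)
    Sw = np.zeros((p, p))  # within-group sums of squares and cross-products
    Sb = np.zeros((p, p))  # between-group sums of squares and cross-products
    for c in classes:
        Xc = X[labels == c]
        mc = Xc.mean(axis=0)
        Sw += (Xc - mc).T @ (Xc - mc)
        d = (mc - grand_mean)[:, None]
        Sb += len(Xc) * (d @ d.T)
    # Discriminant directions are eigenvectors of Sw^-1 Sb, largest eigenvalue first.
    eigvals, eigvecs = np.linalg.eig(np.linalg.solve(Sw, Sb))
    order = np.argsort(eigvals.real)[::-1][: min(p, g - 1)]
    eigvals = eigvals.real[order]
    eigvecs = eigvecs.real[:, order]
    # Scale each function so its scores have unit pooled within-group variance.
    pooled = Sw / (n - g)
    for k in range(eigvecs.shape[1]):
        v = eigvecs[:, k]
        eigvecs[:, k] = v / np.sqrt(v @ pooled @ v)
    return {
        "weights": eigvecs,  # rows: predictors; columns: LD1, LD2, ...
        "prop_trace": eigvals / eigvals.sum(),
        "eigvals": eigvals,
        "wilks": np.linalg.det(Sw) / np.linalg.det(Sw + Sb),
    }


# Simulated responses (illustration only): column 0 = spectral step,
# column 1 = temporal step; categories differ more along the spectral axis.
rng = np.random.default_rng(0)
means = {"see": (1, 1), "shee": (5, 1), "tsee": (1, 2), "chee": (5, 2)}
X = np.vstack([rng.normal(m, 0.5, size=(50, 2)) for m in means.values()])
y = np.repeat(list(means), 50)
result = lda(X, y)
```

In this simulation, LD1 carries most of the trace and its spectral weight exceeds its temporal weight in absolute value, which is the kind of pattern Table 4 reports for listeners with normal hearing.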

Table 4.

Group results of linear discriminant analysis for listeners with normal hearing.

| Stimulus set | Measure | LD1 | LD2 |
|---|---|---|---|
| 1 | Discriminant weight, spectral | −1.32 | −0.11 |
| 1 | Discriminant weight, temporal | −0.14 | 0.97 |
| 1 | Proportion of trace | 0.74 | 0.26 |
| 2 | Discriminant weight, spectral | 0.96 | 0.34 |
| 2 | Discriminant weight, temporal | 0.39 | −0.85 |
| 2 | Proportion of trace | 0.63 | 0.37 |

Stimulus Set 1. LD1 accounted for 74% of the between-group variance (proportion of trace = 0.74) and indicated that spectral information was more influential than temporal information. LD2 accounted for the remaining 26% and indicated that temporal information was more influential than spectral information. When the LDA was calculated for each listener individually, LD1 accounted for 61%–86% of the between-group variance. Spectral cue coefficients ranged from 1.2 to 1.4, and temporal cue coefficients ranged from 0.1 to 0.6. That is, every listener with normal hearing showed a similar pattern, with classification determined to a greater extent by spectral cues. Overall, the analysis suggests that spectral cues were more important for classification than temporal cues but that both played some role.

Stimulus Set 2. For Stimulus Set 2, LD1 accounted for 63% of the between-group variance and indicated that listeners relied more heavily on spectral information. LD2 accounted for 37%, with greater reliance on temporal information, albeit with more variability in cue use than for Stimulus Set 1. When the LDA was calculated for each listener individually, LD1 accounted for 59%–87% of the between-group variance. Spectral cue coefficients ranged from 0.1 to 1.2, and temporal cue coefficients ranged from 0.0 to 1.1. Individual data are discussed later in this article.

Discussion

Results indicated that the listeners with normal hearing were able to use both spectral and temporal cues to classify stimuli with a preference for spectral cues. That was the case whether spectral cues were relatively static and broadband (Stimulus Set 1) or more dynamic (Stimulus Set 2). Moreover, this pattern was typical of all individuals in the group. This seems reasonable considering that all listeners had normal hearing with presumably normal frequency resolution. The pattern might be quite different for listeners with hearing loss whose spectral resolution (and perhaps their relative use of spectral and temporal cues) may not be the same. That population was examined in Experiment 2.

Experiment 2

Results of Experiment 1 indicated that listeners with normal hearing were sensitive to differences in both spectral and temporal dimensions. In this experiment, we asked whether individuals with hearing loss demonstrated patterns of cue use different from those of listeners with normal hearing, and whether variability among individuals was greater than in the normal-hearing group. Audibility of speech cues is an additional concern for this group; accordingly, the design also included measures of signal audibility.

Method

Participants

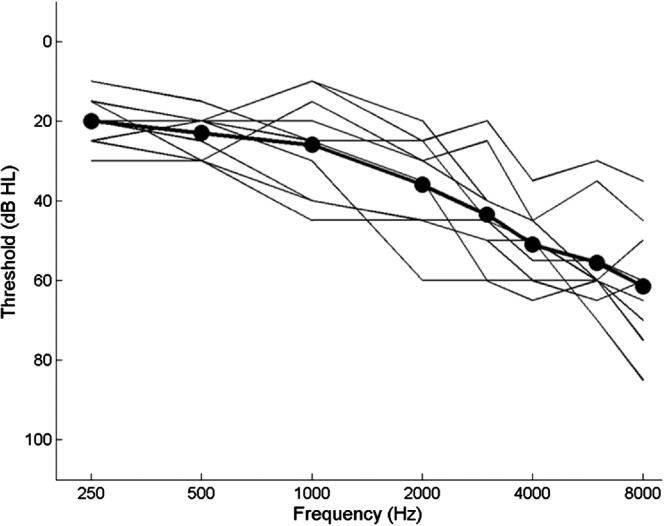

Participants in the group with hearing impairment included 10 adults (four women and six men) with mild to moderately severe sensorineural hearing loss, ages 63–83 years (mean age 71 years). All reported gradual, late-onset hearing loss typical of presbycusis. Eight of the participants had symmetrical loss (defined as between-ear difference ≤ 10 dB at three consecutive frequencies), and one ear was randomly selected for testing. Two participants had an asymmetrical loss, and for those two individuals, the better ear was selected for testing. The participants were a representative sample of adults with hearing loss, with thresholds at octave frequencies from 0.5 to 4.0 kHz that were typical for their age (Cruickshanks et al., 1998). Audiograms for the test ears of the 10 listeners with hearing loss are shown in Figure 6.

Figure 6.

Audiograms for listeners with hearing impairment. Thin lines show individual audiograms; the thick line with filled circles shows the mean audiogram for the group.

Stimuli

Test signals were the Stimulus Set 1 and Stimulus Set 2 tokens described for Experiment 1. To compensate for reduced audibility due to high-frequency threshold elevation, a flat gain was set individually for each listener. Frequency shaping was deliberately avoided to preserve the spectral and temporal properties of the signals as designed. Each listener's gain was based on the spectral content of the stimuli, with the goal of achieving audible bandwidth through 5 kHz. Because 5 kHz is not tested in a routine audiogram, we used a custom program to measure each participant's 5-kHz threshold. This program used a two-down/one-up adaptive tracking procedure (Levitt, 1971) to estimate the level, in dB sound pressure level (SPL), at which the participant could detect approximately 71% of target tones at that frequency. On each trial, a computer monitor displayed two buttons marked A and B. Each button was illuminated for 400 ms, with a 500-ms interstimulus interval. The listener chose the button that had been illuminated while the tone was presented by clicking on it with a computer mouse. Two correct responses in a row led to a decrease in level, and each incorrect response led to an increase in level. A change in direction (an increase followed by a decrease, or a decrease followed by an increase) was termed a reversal. For the first four reversals, level changes were 5 dB; after the fourth reversal, changes were 2 dB, and eight more reversals were obtained. The levels at the final eight reversals were averaged, and that average was used as the estimated 5-kHz threshold. In pilot testing, we verified that thresholds obtained with the custom program were very similar to those obtained via conventional audiometry.
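The two-down/one-up tracking rule can be sketched in Python as follows. This is our sketch, not the custom program used in the study: the function name, the starting level, and the choice to apply the 2-dB step from the fourth reversal onward are assumptions. The idealized listener in the usage example detects every tone at or above 40 dB SPL and none below it, which makes the track easy to follow by hand.

```python
def two_down_one_up(respond, start_level, big_step=5.0, small_step=2.0,
                    n_big_reversals=4, n_small_reversals=8, max_trials=500):
    """Run a two-down/one-up staircase; return (threshold estimate, reversal levels).

    respond(level) -> True for a correct response at that level (dB SPL).
    The estimate is the mean level of the final n_small_reversals reversals.
    """
    level = start_level
    n_correct_in_row = 0
    last_direction = 0  # -1 = last change was down, +1 = up, 0 = no change yet
    reversals = []
    for _ in range(max_trials):
        if len(reversals) >= n_big_reversals + n_small_reversals:
            break
        if respond(level):
            n_correct_in_row += 1
            if n_correct_in_row < 2:
                continue  # need two correct in a row before stepping down
            n_correct_in_row = 0
            direction = -1
        else:
            n_correct_in_row = 0
            direction = +1  # any incorrect response steps up
        if last_direction != 0 and direction != last_direction:
            reversals.append(level)  # record the level at the turnaround
        last_direction = direction
        step = big_step if len(reversals) < n_big_reversals else small_step
        level += direction * step
    if len(reversals) < n_big_reversals + n_small_reversals:
        raise RuntimeError("track ended before reaching the required reversals")
    final = reversals[-n_small_reversals:]
    return sum(final) / len(final), reversals


# Idealized listener who always detects tones at or above 40 dB SPL.
estimate, reversals = two_down_one_up(lambda level: level >= 40.0, start_level=60.0)
print(estimate)  # 39.0
```

With a real, probabilistic listener, the two-down/one-up rule converges on the level yielding about 70.7% correct (Levitt, 1971); the deterministic listener simply makes the track oscillate between 38 and 40 dB SPL.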

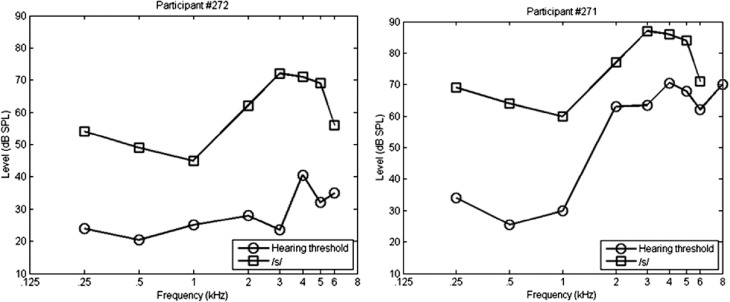

After the 5-kHz threshold was obtained, presentation level was adjusted for each listener. Presentation levels of the speech tokens ranged from 68 to 92 dB SPL across the 10 listeners with hearing loss (see Figure 7). Nine of the 10 listeners reported that the initially selected presentation level was comfortable. For the remaining listener, presentation level was reduced by 5 dB for comfort. The same presentation level was used for both stimulus sets.

Figure 7.

Illustration of speech audibility for two subjects, roughly representing the range of audiograms (least hearing loss to most hearing loss) among the study cohort. Each panel shows pure-tone thresholds (circles) for a single participant, relative to one-third octave band levels measured for /s/ at that participant's individually set presentation level (squares). All levels are expressed as dB SPL measured in a 2-cc coupler.
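
The comparison in Figure 7 (band levels of /s/ against pure-tone thresholds) suggests one way a flat gain could be chosen. The article does not give its exact gain rule, so the sketch below assumes a plausible one: the smallest flat gain that places every one-third octave band up to the nominal 5-kHz band at or above threshold. Thresholds are interpolated linearly on a log-frequency axis (our assumption), and the band levels and audiogram are invented illustration values, not data from any participant.

```python
import math

def third_octave_centers(f_lo=1000.0, f_hi=8000.0):
    """Exact base-10 one-third octave centre frequencies, 1000 * 10**(n/10) Hz."""
    n = round(10 * math.log10(f_lo / 1000.0))
    centers = []
    while 1000.0 * 10 ** (n / 10) <= f_hi * 1.01:
        centers.append(1000.0 * 10 ** (n / 10))
        n += 1
    return centers

def threshold_at(freq, audiogram):
    """Interpolate thresholds (dB SPL) linearly on a log-frequency axis."""
    pts = sorted(audiogram.items())
    if freq <= pts[0][0]:
        return pts[0][1]
    if freq >= pts[-1][0]:
        return pts[-1][1]
    for (f1, t1), (f2, t2) in zip(pts, pts[1:]):
        if f1 <= freq <= f2:
            w = math.log(freq / f1) / math.log(f2 / f1)
            return t1 + w * (t2 - t1)
    raise ValueError("frequency not bracketed by the audiogram")

def min_flat_gain(band_levels, audiogram, f_max=5000.0):
    """Smallest flat gain (dB) lifting every band up to ~f_max to threshold.

    The 3% tolerance admits the band whose nominal centre is 5 kHz
    (exact centre 5011.9 Hz).
    """
    needed = [threshold_at(f, audiogram) - lvl
              for f, lvl in band_levels.items() if f <= f_max * 1.03]
    return max(0.0, max(needed))

centers = third_octave_centers()
# Hypothetical /s/ band levels (dB SPL) at a base presentation level, 1 to 8 kHz.
s_levels = dict(zip(centers, [30, 32, 35, 40, 45, 50, 53, 52, 48, 42]))
# Hypothetical audiogram in dB SPL (not a study participant).
audiogram = {1000: 35, 2000: 45, 3000: 50, 4000: 55, 6000: 65, 8000: 70}

gain = min_flat_gain(s_levels, audiogram)
print(f"flat gain needed: {gain:.1f} dB")
for f, lvl in s_levels.items():
    sl = lvl + gain - threshold_at(f, audiogram)
    print(f"{f:7.0f} Hz  sensation level {sl:6.1f} dB")
```

Because the gain is flat, bands above 5 kHz can remain below threshold, which matches the stated goal of audibility through 5 kHz rather than across the full bandwidth.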

Test Procedure

Test setup was similar to that described for the listeners with normal hearing. Stimulus Set 1 was always tested first, followed by Stimulus Set 2. As in Experiment 1, testing with each stimulus set included three phases: familiarization, training, and testing. In Experiment 1, 80% correct endpoint identification was taken to confirm appropriate labeling and was required to move forward to the test phase. Here, we anticipated that some of the listeners with hearing loss would be unable to use some cues even after training. Accordingly, each participant proceeded to testing after either achieving 80% correct endpoint identification or completing four training blocks. Because of his limited availability, one of the 10 participants did not complete Stimulus Set 2. Final scores were based on 375 randomly selected trials (15 trials of each token) without correct-answer feedback.
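
The training gate and the test-list arithmetic can be sketched as follows. The token count of 25 is inferred from 375 trials divided by 15 trials per token; applying the 80% criterion to the most recent training block is our assumption, as is the shuffled, balanced list construction. The function names are hypothetical.

```python
import random

N_TOKENS = 25            # inferred: 375 trials / 15 trials per token
REPS_PER_TOKEN = 15

def build_test_trials(seed=None):
    """Balanced test list: every token appears REPS_PER_TOKEN times, order shuffled."""
    rng = random.Random(seed)
    trials = [token for token in range(N_TOKENS) for _ in range(REPS_PER_TOKEN)]
    rng.shuffle(trials)
    return trials

def ready_for_testing(endpoint_scores, criterion=0.80, max_blocks=4):
    """Experiment 2 gate, checked after each training block.

    endpoint_scores: proportion correct on the endpoint tokens, one per block.
    Testing starts once the latest block meets criterion or four blocks are done.
    """
    if endpoint_scores and endpoint_scores[-1] >= criterion:
        return True
    return len(endpoint_scores) >= max_blocks

trials = build_test_trials(seed=7)
print(len(trials), ready_for_testing([0.55, 0.70, 0.85]))
```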

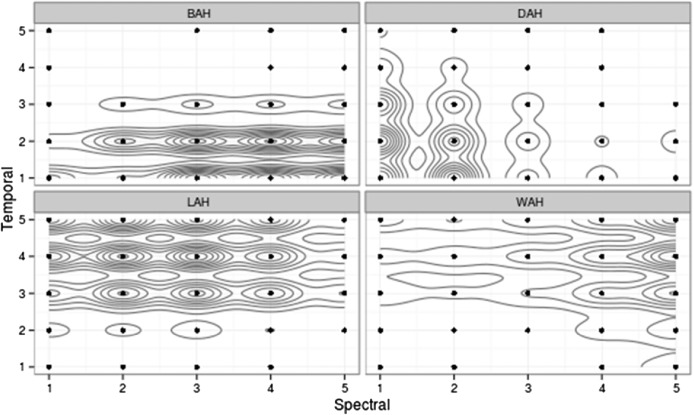

Results

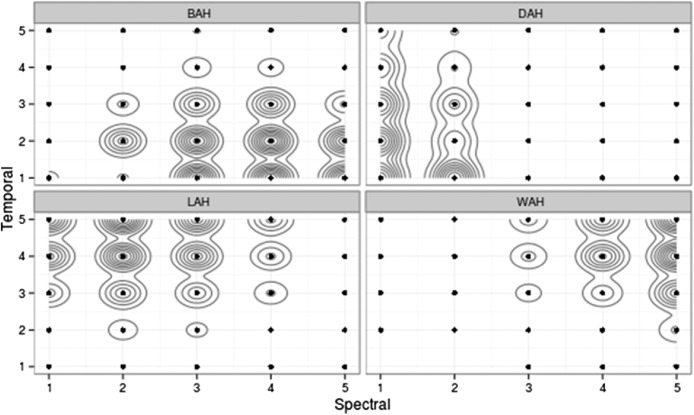

As in Experiment 1, the data were first examined qualitatively using a bivariate KDE. For Stimulus Set 1 (see Figure 8), patterns were similar to those for listeners with normal hearing, albeit with more variation (i.e., greater spread of the contours). The strong vertical pattern suggests that participants with hearing loss were likely to classify a given spectral token value similarly, with greater variation in their classifications along the temporal dimension. For Stimulus Set 2 (see Figure 9), patterns were notably different from those for the listeners with normal hearing (see Figure 5): Figure 9 shows a large amount of variation as well as a very different use of cues. The strong horizontal lines suggest that participants with hearing impairment were more likely to agree about a token's temporal dimension (i.e., manner) than about its spectral dimension (i.e., place).
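
To make the KDE displays concrete, here is a minimal product-Gaussian kernel density estimate evaluated on a 5 × 5 grid of spectral and temporal steps. The study used ggplot2's two-dimensional density function; this sketch instead uses a fixed, assumed bandwidth of 0.75 steps, and the response data are hypothetical, chosen to produce a "vertical" pattern like that described for Stimulus Set 1. Contours drawn at 0.1, 0.2, and so on of the peak would give a display analogous to the figures.

```python
import math

def kde_2d(points, grid, bw_spectral=0.75, bw_temporal=0.75):
    """Product-Gaussian kernel density estimate at each grid location.

    points: (spectral step, temporal step) for every trial given one response label.
    grid:   iterable of (spectral step, temporal step) locations to evaluate.
    """
    norm = 1.0 / (len(points) * 2.0 * math.pi * bw_spectral * bw_temporal)
    density = {}
    for gs, gt in grid:
        total = 0.0
        for s, t in points:
            zs = (gs - s) / bw_spectral
            zt = (gt - t) / bw_temporal
            total += math.exp(-0.5 * (zs * zs + zt * zt))
        density[(gs, gt)] = norm * total
    return density

steps = range(1, 6)
grid = [(s, t) for s in steps for t in steps]
# Hypothetical responses for one label: chosen mainly at spectral steps 4-5,
# regardless of temporal step.
responses = [(s, t) for s in (4, 5) for t in steps] * 3 + [(3, 1), (3, 2)]
dens = kde_2d(responses, grid)
peak = max(dens.values())
for t in reversed(steps):                  # top row = highest temporal step
    print(" ".join(f"{dens[(s, t)] / peak:4.2f}" for s in steps))
```

Density that stays high across temporal steps but falls off sharply across spectral steps is what appears as vertical contours.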

Figure 8.

Results of KDE analysis (2d density estimation function from the ggplot2 package; Wickham, 2009) for listeners with hearing loss for Stimulus Set 1. Each classification is shown in its own graph for ease of reading, but the scales and axes are the same. A point in the graph indicates that at least one judgment of that type was observed for that combination of temporal and spectral cues. Each contour line represents approximately a 10% increase in likelihood of the given judgment for each point it encompasses. Variability is shown by the size and spread of contours; small, tight contours indicate high certainty.

Figure 9.

Results of KDE analysis for listeners with hearing loss for Stimulus Set 2. Note the contiguous distributions along the spectral dimension (i.e., the contours cover a large number of tokens with little separation), suggesting difficulty using spectral cues to identify sounds.

As in Experiment 1, we also examined the data quantitatively using a linear discriminant analysis (LDA). The participants' response categories were significantly separated by the discriminant functions (Wilks's lambda = 0.194, p < .001). Results of the analysis are summarized in Table 5.

Table 5.

Results of linear discriminant analysis for listeners with hearing impairment.

| Stimulus set | Measure | LD1 | LD2 |
|---|---|---|---|
| 1 | Discriminant weight: spectral | 1.17 | 0.13 |
| 1 | Discriminant weight: temporal | 0.16 | −0.95 |
| 1 | Proportion of trace | 0.68 | 0.32 |
| 2 | Discriminant weight: spectral | 0.15 | 0.79 |
| 2 | Discriminant weight: temporal | 1.03 | −0.11 |
| 2 | Proportion of trace | 0.81 | 0.19 |

For Stimulus Set 1, LD1 explained 68% of the variance (proportion of trace). Spectral cues carried most of the weight on this function (coefficient 1.17 compared with 0.16 for temporal cues). LD2 explained the remaining 32% of the variance and weighted temporal cues more heavily (coefficient −0.95 compared with 0.13 for spectral cues). Overall, the analysis suggests that categorization was driven mainly by spectral change, similar to the balance for the listeners with normal hearing.

For Stimulus Set 2, LD1 explained 81% of the variance. Temporal cues carried most of the weight on this function (coefficient 1.03 compared with 0.15 for spectral cues). LD2 explained the remaining 19% of the variance and weighted spectral cues more heavily (coefficient 0.79 compared with −0.11 for temporal cues).
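
The two summary statistics used here can be illustrated for two predictors (spectral step and temporal step). Wilks's lambda is det(W)/det(T), where W is the pooled within-group and T the total sums-of-squares-and-cross-products matrix; the proportion of trace for each discriminant function is its eigenvalue of W⁻¹B (B = T − W) divided by the sum of the eigenvalues, which is the quantity R's MASS `lda` prints under that name. The grouped data below are hypothetical, and the sketch does not compute the discriminant weights themselves.

```python
import math

def mean2(rows):
    n = len(rows)
    return (sum(r[0] for r in rows) / n, sum(r[1] for r in rows) / n)

def sscp(rows, center):
    """2x2 sums of squares and cross-products about `center`."""
    dx = [r[0] - center[0] for r in rows]
    dy = [r[1] - center[1] for r in rows]
    sxy = sum(a * b for a, b in zip(dx, dy))
    return [[sum(a * a for a in dx), sxy], [sxy, sum(b * b for b in dy)]]

def det2(m):
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

def inv2(m):
    d = det2(m)
    return [[m[1][1] / d, -m[0][1] / d], [-m[1][0] / d, m[0][0] / d]]

def lda_summary(groups):
    """Wilks's lambda, eigenvalues of W^-1 B, and proportion of trace.

    groups: {response label: [(spectral step, temporal step), ...]}
    """
    all_rows = [r for rows in groups.values() for r in rows]
    T = sscp(all_rows, mean2(all_rows))                   # total SSCP
    W = [[0.0, 0.0], [0.0, 0.0]]                          # pooled within-group SSCP
    for rows in groups.values():
        S = sscp(rows, mean2(rows))
        W = [[W[i][j] + S[i][j] for j in range(2)] for i in range(2)]
    B = [[T[i][j] - W[i][j] for j in range(2)] for i in range(2)]   # between-group
    Wi = inv2(W)
    M = [[sum(Wi[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]
    tr, dt = M[0][0] + M[1][1], det2(M)
    root = math.sqrt(max(tr * tr - 4.0 * dt, 0.0))        # guard tiny negative rounding
    eig = sorted([(tr + root) / 2.0, (tr - root) / 2.0], reverse=True)
    wilks = det2(W) / det2(T)
    return wilks, eig, [e / sum(eig) for e in eig]

# Hypothetical trials for three response labels, separated mainly by spectral step.
groups = {
    "label_1": [(1, 1), (1, 3), (2, 2), (1, 5), (2, 4)],
    "label_2": [(3, 1), (3, 3), (4, 2), (3, 5), (2, 3)],
    "label_3": [(5, 1), (5, 3), (4, 4), (5, 5), (4, 1)],
}
wilks, eig, prop = lda_summary(groups)
print(f"Wilks's lambda = {wilks:.3f}; proportion of trace = {[round(p, 2) for p in prop]}")
```

The two statistics are linked: Wilks's lambda equals the product of 1/(1 + λ) over the eigenvalues λ of W⁻¹B, so smaller lambda values mean better-separated response categories.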

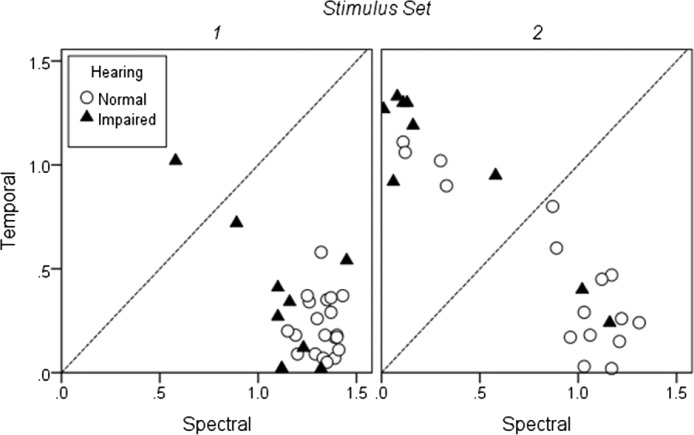

As in Experiment 1, we also calculated the LDA for each individual. Results for LD1 (which explained the highest proportion of variance) are shown in Figure 10 (triangles), along with the Experiment 1 data for the participants with normal hearing (circles). The data are plotted as the absolute values of the coefficients, or “weights,” for temporal versus spectral information. Participants who weighted spectral and temporal cues equally would fall on or very close to the diagonal reference line in each plot. Symbols above the reference line indicate that categorization was based to a greater extent on temporal cues, and symbols below the reference line indicate a greater emphasis on spectral cues. For Stimulus Set 1, all of the listeners with normal hearing and the majority of listeners with hearing loss relied clearly on spectral cues, and the two groups showed similar patterns (χ² = 2.07, p = .15). However, variability across individuals was greater among the listeners with hearing loss than among the listeners with normal hearing: two listeners with hearing loss showed either greater use of temporal cues or nearly equal use of both cues.

Figure 10.

Individual spectral and temporal weights for listeners with normal hearing (open circles) and hearing loss (filled triangles). All values are plotted as the absolute value of the individual coefficients for LD1. Left panel shows results for Stimulus Set 1 and right panel for Stimulus Set 2. Data points falling along the diagonal line in each panel indicate equal consideration of both stimulus dimensions. Data points falling below and to the right of the diagonal indicate greater reliance on spectral cues. Data points falling above and to the left of the diagonal indicate greater reliance on temporal cues.

For Stimulus Set 2, there was much more variability in cue use. The majority of the listeners with normal hearing continued to rely on spectral cues. Nearly all of the listeners with hearing loss were more influenced by temporal cues, and their pattern of cue use differed significantly from that of the listeners with normal hearing (χ² = 6.51, p = .01). Only two listeners with hearing loss showed a normal hearing–like reliance on spectral cues for this stimulus set. Among the listeners with hearing loss, those two participants had relatively better thresholds, particularly in the high frequencies. They did not, however, have greater signal audibility (in terms of presentation level relative to their thresholds). Their greater use of spectral cues might instead reflect better spectral resolution due to narrower auditory filters.
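
Two small checks help in reading Figure 10 and the group comparisons. The article does not state the degrees of freedom for its χ² tests; assuming 1 df (as for a 2 × 2 comparison), the upper-tail p value is erfc(√(χ²/2)), which reproduces the reported values. The classifier places a listener's absolute LD1 weights above, below, or on the diagonal; the "equal weighting" tolerance is a hypothetical choice, and the example weights are the group-level LD1 weights from Table 5.

```python
import math

def chi2_p_1df(stat):
    """Upper-tail p for a chi-square statistic with 1 df: erfc(sqrt(stat / 2))."""
    return math.erfc(math.sqrt(stat / 2.0))

def cue_preference(spectral_w, temporal_w, tol=0.1):
    """Side of the Figure 10 diagonal on which a listener's |LD1| weights fall.

    `tol` is a hypothetical half-width of the "equal weighting" band.
    """
    s, t = abs(spectral_w), abs(temporal_w)
    if abs(t - s) <= tol:
        return "equal"
    return "temporal" if t > s else "spectral"

for stat in (2.07, 6.51):
    print(f"chi2 = {stat}: p = {chi2_p_1df(stat):.3f}")   # p ≈ .150 and ≈ .011
print(cue_preference(1.17, 0.16), cue_preference(0.15, 1.03))  # spectral temporal
```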

Discussion

These data suggest that when audibility was controlled, listeners with hearing loss could use static broadband spectral cues, even at higher frequencies, but were less able to use dynamic spectral cues. This reduced use of dynamic spectral cues occurred even though those cues were at lower frequencies, where the listeners had better thresholds (and, perhaps, better frequency resolution). For Stimulus Set 2, responses of listeners with hearing loss were also based to a relatively greater extent on temporal than on spectral information. Finally, there was considerable variability among individuals.

General Discussion

This study was motivated, in part, by the expectation that individuals with hearing loss would differ in the extent to which they could resolve specific speech cues. If so, such differences could motivate a more customized approach to setting amplification parameters, one that takes individual differences into account (an issue not tested in this study). As a first step in this line of work, we created a set of test stimuli that varied in spectral and temporal information. We hypothesized that (a) listeners with normal hearing would show high sensitivity to both dimensions and would behave similarly to one another in this respect, and (b) listeners with hearing impairment (who we assumed would have broadened auditory filters) would show reduced sensitivity to the spectral dimension, although with varying patterns across listeners.

Experiment 1 data supported the first hypothesis. Listeners with normal hearing made distinctions along both the temporal and spectral dimensions, although with a greater contribution from spectral cues. Experiment 2 partially supported the second hypothesis. For Stimulus Set 1, in which the spectral cue was the frequency location of the frication noise and the temporal cue was envelope rise time, listeners with hearing loss used both spectral and temporal cues, but with a greater contribution from spectral cues (similar to listeners with normal hearing). For Stimulus Set 2, in which the spectral cue was dynamic (i.e., formant transitions) and the temporal cue was envelope rise time, listeners with hearing loss relied more heavily on temporal cues. Thus, listeners with hearing loss weighted the spectral dimension more heavily when spectral cues were static but relied much more on temporal cues when spectral cues were dynamic.

Note that although varying the spectral and temporal dimensions independently produced effects indicating sensitivity to change along the manipulated dimension, those effects may also reflect sensitivity to how the manipulated dimension interacted with the other dimension. In other words, the response to a particular stimulus is presumably determined by the listener's overall percept of both dimensions. Accordingly, the difference between groups may reflect different reliance on temporal cues, different reliance on dynamic cues, or even frequency-dependent differences.

Issues Related to Test Stimuli

We approached this project by devising a controlled test of speech sound identification in which specific temporal and spectral dimensions were varied. Synthetic speech was used to provide precise control over the stimuli and to avoid two issues that may have been present in earlier work: confounding effects of accompanying cues other than the cues under study (e.g., Nittrouer & Studdert-Kennedy, 1986), and artificial manipulation of cues in natural speech. Although manipulated natural speech is a valid approach to the problem, the act of manipulating natural speech cues can itself introduce differences across participants. For example, if stimuli are adjusted for audibility by increasing the level of a specific cue (such as the consonant burst), that higher level may lead listeners to put more weight on the cue than they would in a more natural listening situation. We therefore attempted to put all listeners on an equal footing by creating new signals, presenting them in the same way, and providing equivalent training to all participants.

With that said, synthetic speech may have its own effects. At least one study suggested that older listeners might require more time than younger listeners to acclimatize to synthetic signals (Wang & Humes, 2008). That study deliberately created nonspeech-like stimuli whose acoustic properties could not be interpreted as speech sounds, so its findings might not apply directly to the present data. In addition, age-related differences in synthetic speech recognition have been attributed to the higher thresholds that accompany aging rather than to aging per se (Roring, Hines, & Charness, 2007). The implication is that when audibility is controlled to compensate for threshold elevation (as was the case here), older and younger listeners should respond similarly to synthetic speech materials. During training, the majority of our listeners in both experiments reached criterion performance for the endpoint stimuli after similar numbers of trials, suggesting that there were no overarching differences between groups. Nonetheless, it is possible that the ability to acclimatize to synthetic sounds varied across groups or individuals.

An important factor in speech-cue studies is audibility: if a cue is inaudible to a listener, it cannot be used. Audibility confounds, particularly for high-frequency cues, may have been an unintended part of previous data sets (e.g., Coez et al., 2010). In support of the role of audibility, providing amplification to listeners with hearing loss improves their use of acoustic cues to a level similar to that of listeners with normal hearing (Calandruccio & Doherty, 2008; Harkrider, Plyler, & Hedrick, 2006). In the present study, we used the 5-kHz threshold (an adaptive threshold in a forced-choice paradigm) as a benchmark for audibility. Under those circumstances, use of static spectral cues (Stimulus Set 1) by listeners with hearing loss was very similar to that of listeners with normal hearing. That finding conflicts with earlier studies suggesting that such cues are poorly used by listeners with hearing loss, and it suggests that reduced audibility may have played a role in those earlier data sets.

Normal Hearing and Hearing Loss

The use of dynamic spectral cues (the formant transitions in Stimulus Set 2) differed between the two groups. Listeners with normal hearing used the formant transition to a greater extent than the envelope cue (as shown by the vertical lines in Figure 5, i.e., a greater probability of making a spectral than a temporal differentiation). Those data are in good agreement with earlier work showing that listeners with normal hearing are fully capable of using formant transitions (Carpenter & Shahin, 2013; Hedrick & Younger, 2001, 2007; Lindholm et al., 1988). When both cues were present, the perceptions of listeners with normal hearing were affected by formant transition information to a greater extent than by temporal (envelope) cues (e.g., Nittrouer, Lowenstein, & Tarr, 2013). The difference between listeners with hearing loss and listeners with normal hearing was dramatic; compare Figures 5 and 9, the latter showing the heavy use of temporal cues by listeners with hearing impairment. In a sense, a listener with hearing loss presented with a definite formant transition may be in much the same position as a listener with normal hearing presented with a neutral formant transition: the transition information is no longer available, so other cues must be used. Our data are also consistent with work showing that when formant transition information is neutral, listeners with normal hearing are able to shift to temporal envelope information (Hedrick & Younger, 2001). Note that recent work by Nittrouer et al. (2013) drew a different conclusion, in that their adult listeners with normal hearing did not use envelope cues at all. That finding can be explained by Nittrouer et al.'s stimuli, in which the formant transition was either typical of /ba/ or typical of /wa/ but never neutral. Although such conflicting-cue situations are unlikely to arise in everyday speech, we can infer that in them listeners with normal hearing prefer spectral to temporal information.

All listeners showed sensitivity to temporal cues even when those were used as a secondary cue. This is in good agreement with studies showing that hearing loss produces greater spectral than temporal degradations, at least when the available temporal cues are contained in the speech envelope (e.g., Turner, Souza, & Forget, 1995). One caveat is that the same principles may not apply to other types of temporal cues, such as gaps, which may be affected by hearing loss and/or aging to a greater extent (Walton, 2010).

Individual Patterns

Among listeners with normal hearing, the relative use of spectral versus temporal cues was quite similar across individuals. As expected, individuals with hearing loss showed more listener-to-listener variation. The most interesting effects occurred among listeners with hearing loss presented with Stimulus Set 2. Previous studies that examined only group effects suggested that use of dynamic spectral information might be impaired in all listeners with hearing loss. Instead, two of our listeners with hearing impairment responded much like the listeners with normal hearing. Those two individuals had age, etiology, and duration of loss similar to the rest of the hearing-impaired group but had relatively better thresholds, particularly in the high frequencies. However, they did not have greater signal audibility (in terms of presentation level relative to their thresholds). Perhaps their greater use of spectral cues reflected better spectral resolution due to narrower auditory filters, or some other auditory (or listening strategy) difference.

We recruited a representative sample of adults with hearing loss, who, statistically, were likely to be older. Because this study was not designed to separate the contributions of age and hearing loss, the data leave unresolved whether an older listener with a completely normal audiogram would perform like the Experiment 1 participants or would show variability in specific cue use more like that of the Experiment 2 participants. The answer may depend on one's theoretical view. If greater dependence on temporal cues is a direct consequence of poor spectral resolution, we might expect older listeners with normal hearing, who show little evidence of auditory filter broadening (Ison, Virag, Allen, & Hammond, 2002), to retain good spectral resolution and therefore to perform like the Experiment 1 listeners. On the other hand, some authors (e.g., Pichora-Fuller, Schneider, Macdonald, Pass, & Brown, 2007) have suggested that the asynchronous nerve firing that accompanies aging can degrade the representation of temporal fine structure, which could affect use of the “spectral” cues presented here.

Implications for Hearing Rehabilitation

The stimuli used in this study could potentially be used to identify listeners who are more spectrally dependent versus listeners who are more temporally dependent. Although this area is largely unexplored, the ability to measure a listener's cue “weighting” could help us understand the consequences of that listener's specific listening habits and perhaps tailor therapeutic interventions. Modern amplification technology can distort the signal in the spectral domain, the temporal domain, or both, and many technologies distort one dimension to a greater extent than the other. For example, fast-acting compression can degrade envelope cues. A listener who depends heavily on temporal envelope rather than spectral cues may be affected by even minimal envelope distortion (Souza, Hoover, & Gallun, 2012). Conversely, a listener who bases categorical perception largely on spectral cues may tolerate larger amounts of envelope distortion without impaired recognition. Multichannel compression with a large number of channels and a relatively high compression ratio has several advantages, such as more specific noise management and better loudness equalization, but it can smooth spectral contrasts and degrade recognition when the essential information is carried by spectral contrasts and the listener is sensitive to those contrasts (Souza, Wright, & Bor, 2012). It may even be possible to shift cue dependence with training (Francis, Baldwin, & Nusbaum, 2000). Such topics offer an interesting area for future translational work.
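
The point that fast-acting compression can degrade envelope cues can be illustrated with a toy feed-forward compressor applied to a frame-by-frame level track. All parameters (45-dB threshold, 3:1 ratio, the time constants, and the alternating 50/80-dB envelope) are hypothetical and do not model any particular hearing aid. With near-instantaneous time constants the steady-state loud/soft contrast shrinks from 30 dB toward 30/3 = 10 dB, whereas slow time constants leave most of the contrast intact.

```python
import math

def smoothing_coeff(time_constant_ms, frame_ms=1.0):
    """Per-frame coefficient of a one-pole (exponential) level smoother."""
    return math.exp(-frame_ms / time_constant_ms)

def compress_levels(levels_db, threshold_db=45.0, ratio=3.0,
                    attack_ms=5.0, release_ms=50.0, frame_ms=1.0):
    """Feed-forward compressor applied to a level track in dB.

    The level estimate follows rising input with the attack constant and
    falling input with the release constant; gain reduction above threshold
    is (estimate - threshold) * (1 - 1/ratio).
    """
    a_attack = smoothing_coeff(attack_ms, frame_ms)
    a_release = smoothing_coeff(release_ms, frame_ms)
    estimate = levels_db[0]
    out = []
    for level in levels_db:
        a = a_attack if level > estimate else a_release
        estimate = a * estimate + (1.0 - a) * level
        reduction = max(0.0, estimate - threshold_db) * (1.0 - 1.0 / ratio)
        out.append(level - reduction)
    return out

def steady_contrast(levels_db, seg=50, tail=10):
    """Loud-minus-soft level (dB) over the last `tail` frames of the final
    soft and loud segments, skipping onset transients."""
    loud = levels_db[-tail:]
    soft = levels_db[-seg - tail:-seg]
    return sum(loud) / tail - sum(soft) / tail

# Hypothetical envelope: alternating 50-ms soft (50 dB) and loud (80 dB) segments, 1-ms frames.
envelope = ([50.0] * 50 + [80.0] * 50) * 10

fast = compress_levels(envelope, attack_ms=1.0, release_ms=5.0)
slow = compress_levels(envelope, attack_ms=500.0, release_ms=2000.0)
for name, track in (("input", envelope), ("fast", fast), ("slow", slow)):
    print(f"{name:5s} loud-soft contrast: {steady_contrast(track):5.1f} dB")
```

The contrast is measured away from segment onsets because a real compressor also produces overshoot and undershoot at level changes, which is itself a form of envelope distortion.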

Acknowledgments

This work was supported by National Institutes of Health Grant R01 DC60014.


Footnotes

To remove low-frequency noise that is not characteristic of natural unvoiced fricatives, the fricative portion was high-pass filtered using a Hanning filter with a cutoff of 500 Hz and a smoothing setting of 100 Hz using Praat (Boersma, 2001).

A linear attenuation slope was applied to the onset of the base (fricative), shortening the rise time in 22-ms steps (using Sound Studio 3.6).

Because of the limitations of the synthesizer, the step size was always a multiple of 10 ms.

ggplot2 package (Wickham, 2009) for R (R Core Team, 2013).

References

- American National Standards Institute. (2004). Specification for audiometers (ANSI S3.6-2004). New York, NY: Author.

- Bernstein J. G., Mehraei G., Shamma S., Gallun F. J., Theodoroff S. M., & Leek M. R. (2013). Spectrotemporal modulation sensitivity as a predictor of speech intelligibility for hearing-impaired listeners. Journal of the American Academy of Audiology, 24, 293–306.

- Bernstein J. G., Summers V., Grassi E., & Grant K. W. (2013). Auditory models of suprathreshold distortion and speech intelligibility in persons with impaired hearing. Journal of the American Academy of Audiology, 24, 307–328.

- Boersma P. (2001). Praat, a system for doing phonetics by computer. Glot International, 5(9/10), 341–345.

- Boothroyd A., Springer N., Smith L., & Schulman J. (1988). Amplitude compression and profound hearing loss. Journal of Speech and Hearing Research, 31, 362–376.

- Bor S., Souza P., & Wright R. (2008). Multichannel compression: Effects of reduced spectral contrast on vowel identification. Journal of Speech, Language, and Hearing Research, 51, 1315–1327.

- Brennan M. A., Gallun F. J., Souza P. E., & Stecker G. C. (2013). Temporal resolution with a prescriptive fitting formula. American Journal of Audiology, 22, 216–225.

- Calandruccio L., & Doherty K. A. (2008). Spectral weighting strategies for hearing-impaired listeners measured using a correlational method. The Journal of the Acoustical Society of America, 123, 2367–2378.

- Carpenter A. L., & Shahin A. J. (2013). Development of the N1-P2 auditory evoked response to amplitude rise time and rate of formant transition of speech sounds. Neuroscience Letters, 544, 56–61.

- Christensen L. A., & Humes L. E. (1997). Identification of multidimensional stimuli containing speech cues and the effects of training. The Journal of the Acoustical Society of America, 102, 2297–2310.

- Coez A., Belin P., Bizaguet E., Ferrary E., Zilbovicius M., & Samson Y. (2010). Hearing loss severity: Impaired processing of formant transition duration. Neuropsychologia, 48, 3057–3061.

- Cruickshanks K. J., Wiley T. L., Tweed T. S., Klein B. E., Klein R., Mares-Perlman J. A., & Nondahl D. M. (1998). Prevalence of hearing loss in older adults in Beaver Dam, Wisconsin. The epidemiology of hearing loss study. American Journal of Epidemiology, 148, 879–886.

- Davies-Venn E., & Souza P. (2014). The role of spectral resolution, working memory, and audibility in explaining variance in susceptibility to temporal envelope distortion. Journal of the American Academy of Audiology, 25, 592–604.

- Davies-Venn E., Souza P., Brennan M., & Stecker G. C. (2009). Effects of audibility and multichannel wide dynamic range compression on consonant recognition for listeners with severe hearing loss. Ear and Hearing, 30, 494–504.

- Dorman M. F., Studdert-Kennedy M., & Raphael L. J. (1977). Stop consonant recognition: Release bursts and formant transitions as functionally equivalent, context-dependent cues. Perception & Psychophysics, 22, 109–122.

- Dubno J. R., Dirks D. D., & Ellison D. E. (1989). Stop-consonant recognition for normal-hearing listeners and listeners with high-frequency hearing loss. I: The contribution of selected frequency regions. The Journal of the Acoustical Society of America, 85, 347–354.

- Faulkner A., Rosen S., & Moore B. C. (1990). Residual frequency selectivity in the profoundly hearing-impaired listener. British Journal of Audiology, 24, 381–392.

- Francis A. L., Baldwin K., & Nusbaum H. C. (2000). Effects of training on attention to acoustic cues. Perception & Psychophysics, 62, 1668–1680.

- Glasberg B. R., & Moore B. C. (1986). Auditory filter shapes in subjects with unilateral and bilateral cochlear impairments. The Journal of the Acoustical Society of America, 79, 1020–1033.

- Goldinger S. D., & Azuma T. (2003). Puzzle-solving science: The quixotic quest for units in speech perception. Journal of Phonetics, 31, 305–320.

- Hair J. F., Jr., Black W. C., Babin B. J., & Anderson R. E. (2010). Multivariate data analysis (7th ed.). Upper Saddle River, NJ: Prentice Hall.

- Harkrider A. W., Plyler P. N., & Hedrick M. (2006). Effects of hearing loss and spectral shaping on identification and neural response patterns of stop-consonant stimuli. The Journal of the Acoustical Society of America, 120, 915–925.

- Hedrick M. (1997). Effect of acoustic cues on labeling fricatives and affricates. Journal of Speech, Language, and Hearing Research, 40, 925–938.

- Hedrick M., & Rice T. (2000). Effect of a single-channel wide dynamic range compression circuit on perception of stop consonant place of articulation. Journal of Speech, Language, and Hearing Research, 43, 1174–1184.

- Hedrick M., & Younger M. S. (2001). Perceptual weighting of relative amplitude and formant transition cues in aided CV syllables. Journal of Speech, Language, and Hearing Research, 44, 964–974.

- Hedrick M., & Younger M. S. (2003). Labeling of /s/ and /sh/ by listeners with normal and impaired hearing, revisited. Journal of Speech, Language, and Hearing Research, 46, 636–648.

- Hedrick M., & Younger M. S. (2007). Perceptual weighting of stop consonant cues by normal and impaired listeners in reverberation versus noise. Journal of Speech, Language, and Hearing Research, 50, 254–269.

- Howell P., & Rosen S. (1983). Production and perception of rise time in the voiceless affricate/fricative distinction. The Journal of the Acoustical Society of America, 73, 976–984.

- Humes L. E. (2007). The contributions of audibility and cognitive factors to the benefit provided by amplified speech to older adults. Journal of the American Academy of Audiology, 18, 590–603.

- Ison J. R., Virag T. M., Allen P. D., & Hammond G. R. (2002). The attention filter for tones in noise has the same shape and effective bandwidth in the elderly as it has in young listeners. The Journal of the Acoustical Society of America, 112, 238–246.

- Jenstad L. M., & Souza P. E. (2005). Quantifying the effect of compression hearing aid release time on speech acoustics and intelligibility. Journal of Speech, Language, and Hearing Research, 48, 651–667.

- Klatt D. (1980). Software for a cascade/parallel formant synthesizer. The Journal of the Acoustical Society of America, 67, 971–995.

- Lachenbruch P. A., & Goldstein M. (1979). Discriminant analysis. Biometrics, 35, 69–85.

- LaRiviere C., Winitz H., & Herriman E. (1975). The distribution of perceptual cues in English prevocalic fricatives. Journal of Speech and Hearing Research, 18, 613–622.

- Leek M. R., & Summers V. (1996). Reduced frequency selectivity and the preservation of spectral contrast in noise. The Journal of the Acoustical Society of America, 100, 1796–1806.

- Levitt H. (1971). Transformed up-down methods in psychoacoustics. The Journal of the Acoustical Society of America, 49, 467–477.

- Lindholm J. M., Dorman M. F., Taylor B. E., & Hannley M. T. (1988). Stimulus factors influencing the identification of voiced stop consonants by normal-hearing and hearing-impaired adults. The Journal of the Acoustical Society of America, 83, 1608–1614.

- Molis M. R., & Leek M. R. (2011). Vowel identification by listeners with hearing impairment in response to variation in formant frequencies. Journal of Speech, Language, and Hearing Research, 54, 1211–1223.

- Nearey T. M. (1997). Speech perception as pattern recognition. The Journal of the Acoustical Society of America, 101, 3241–3254.

- Nittrouer S., Lowenstein J. H., & Tarr E. (2013). Amplitude rise time does not cue the /ba/–/wa/ contrast for adults or children. Journal of Speech, Language, and Hearing Research, 56, 427–440.

- Nittrouer S., & Studdert-Kennedy M. (1986). The stop-glide distinction: Acoustic analysis and perceptual effect of variation in syllable amplitude envelope for initial /b/ and /w/. The Journal of the Acoustical Society of America, 80, 1026–1029.

- Pichora-Fuller K., Schneider B. A., Macdonald E. N., Pass H. E., & Brown S. (2007). Temporal jitter disrupts speech intelligibility: A simulation of auditory aging. Hearing Research, 223, 114–121.

- Preminger J., & Wiley T. L. (1985). Frequency selectivity and consonant intelligibility in sensorineural hearing loss. Journal of Speech and Hearing Research, 28, 197–206.

- Reed C. M., Braida L. D., & Zurek P. M. (2009). Review of the literature on temporal resolution in listeners with cochlear hearing impairment: A critical assessment of the role of suprathreshold deficits. Trends in Amplification, 13, 4–43.

- Repp B. H. (1983). Trading relations among acoustic cues in speech perception are largely a result of phonetic categorization. Speech Communication, 2, 341–361.

- Roring R. W., Hines F. G., & Charness N. (2007). Age differences in identifying words in synthetic speech. Human Factors, 49, 25–31.

- Rosen S. (1992). Temporal information in speech: Acoustic, auditory and linguistic aspects. Philosophical Transactions; Biological Sciences, 336, 367–373.

- Silverman B. W. (1986). Density estimation for statistics and data analysis. London, United Kingdom: Chapman & Hall.

- Singh R., & Allen J. B. (2012). The influence of stop consonants' perceptual features on the Articulation Index model. The Journal of the Acoustical Society of America, 131, 3051–3068.

- Souza P., Boike K. T., Witherell K., & Tremblay K. (2007). Prediction of speech recognition from audibility in older listeners with hearing loss: Effects of age, amplification, and background noise. Journal of the American Academy of Audiology, 18, 54–65.

- Souza P., Hoover E., & Gallun F. J. (2012). Application of the envelope difference index to spectrally sparse speech. Journal of Speech, Language, and Hearing Research, 55, 824–837.

- Souza P., Jenstad L. M., & Folino R. (2005). Using multichannel wide-dynamic range compression in severely hearing-impaired listeners: Effects on speech recognition and quality. Ear and Hearing, 26, 120–131.

- Souza P., & Kitch V. (2001). The contribution of amplitude envelope cues to sentence identification in young and aged listeners. Ear and Hearing, 22, 112–119.

- Souza P., Wright R., & Bor S. (2012). Consequences of broad auditory filters for identification of multichannel-compressed vowels. Journal of Speech, Language, and Hearing Research, 55, 474–486.

- Stelmachowicz P. G., Kopun J., Mace A., Lewis D. E., & Nittrouer S. (1995). The perception of amplified speech by listeners with hearing loss: Acoustic correlates. The Journal of the Acoustical Society of America, 98, 1388–1399.

- Stevens K. N., Blumstein S. E., Glicksman L., Burton M., & Kurowski K. (1992). Acoustic and perceptual characteristics of voicing in fricatives and fricative clusters. The Journal of the Acoustical Society of America, 91, 2979–3000.

- R Core Team. (2013). R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing. Retrieved from http://www.R-project.org

- Turner C. W., & Henn C. C. (1989). The relation between vowel recognition and measures of frequency resolution. Journal of Speech and Hearing Research, 32, 49–58.

- Turner C. W., Smith S. J., Aldridge P. L., & Stewart S. L. (1997). Formant transition duration and speech recognition in normal and hearing-impaired listeners. The Journal of the Acoustical Society of America, 101, 2822–2825.

- Turner C. W., Souza P., & Forget L. N. (1995). Use of temporal envelope cues in speech recognition by normal and hearing-impaired listeners. The Journal of the Acoustical Society of America, 97, 2568–2576.

- Venables W. N., & Ripley B. D. (2002). Modern applied statistics with S (4th ed.). New York, NY: Springer.

- Walton J. P. (2010). Timing is everything: Temporal processing deficits in the aged auditory brainstem. Hearing Research, 264, 63–69.

- Wang X., & Humes L. (2008). Classification and cue weighting of multidimensional stimuli with speech-like cues for young normal hearing and elderly hearing-impaired listeners. Ear and Hearing, 29, 725–745.

- Wickham H. (2009). ggplot2: Elegant graphics for data analysis. New York, NY: Springer.

- Wright R. (2004). A review of perceptual cues and cue robustness. In Hayes B., Kirchner R., & Steriade D. (Eds.), Phonetically based phonology (pp. 34–57). Cambridge, United Kingdom: Cambridge University Press.

- Zeng F. G., & Turner C. W. (1990). Recognition of voiceless fricatives by normal and hearing-impaired subjects. Journal of Speech and Hearing Research, 33, 440–449.