Abstract

Hospital executives pursue external recognition to improve market share and demonstrate institutional commitment to quality of care. The Magnet Recognition Program of the American Nurses Credentialing Center identifies hospitals that epitomize nursing excellence, but it is not clear that receiving Magnet recognition improves patient outcomes. Using Medicare data on patients hospitalized for coronary artery bypass graft surgery, colectomy, or lower extremity bypass in 1998–2010, we compared rates of risk-adjusted thirty-day mortality and failure to rescue (death after a postoperative complication) between Magnet hospitals and non-Magnet hospitals matched on hospital characteristics. Surgical patients treated in Magnet hospitals, compared to those treated in non-Magnet hospitals, were 7.7 percent less likely to die within thirty days and 8.6 percent less likely to die after a postoperative complication. Across the thirteen-year study period, patient outcomes were significantly better in Magnet hospitals than in non-Magnet hospitals. However, outcomes did not improve for hospitals after they received Magnet recognition, which suggests that the Magnet program recognizes existing excellence and does not lead to additional improvements in surgical outcomes.

National health policy makers have placed increased emphasis on publicly identifying hospitals with superior outcomes.[1] Amid increased competition for patients and payers, hospital executives face the daunting tasks of ensuring high-quality care, retaining qualified staff, and marketing their facility. Patients express increased interest in using quality rankings to select hospitals for surgical care.

Thus, hospital executives seek external recognition such as that provided in the rankings of U.S. News and World Report[2] and other organizations, including the Leapfrog Group,[3] the Baldrige program,[4] and Truven Health Analytics.[5] These rankings overlap little, which adds to confusion over how consumers can reliably assess hospital quality.[6]

The Magnet Recognition Program of the American Nurses Credentialing Center (ANCC) is one initiative designed to identify health care facilities with a commitment to quality improvement, especially in nursing care delivery.[7] The program was established in 1994 by the ANCC, a subsidiary of the American Nurses Association. Hospitals participate voluntarily and pay a fee for the recognition process, which includes rigorous documentation and site visits to assess adherence to five key principles: transformational leadership, a structure that empowers staff, an established professional nursing practice model, support for knowledge generation and application, and robust quality improvement mechanisms.[8] Magnet recognition lasts for four years. Magnet hospitals have better nurse job outcomes, such as lower burnout and higher job satisfaction,[9] and Magnet recognition has been associated with improved hospital financial performance.[10] According to the program's website, one stated benefit of recognition is to "improve patient care."[11] As of March 2015, 402 facilities in the United States held current Magnet recognition. The number of facilities that apply and are not recognized is not publicly known.

Studies have reported better patient outcomes in Magnet hospitals. Three cross-sectional studies have identified favorable outcomes for Medicare discharges,[12] neonates,[13] and surgical patients.[14] However, it is unclear whether successfully pursuing Magnet recognition leads to improved patient outcomes, and two questions remain. First, these findings have not been replicated with nationally representative longitudinal data. Second, previous studies[12–14] have not determined whether patient outcomes improve after Magnet recognition is obtained.

To address these questions, we investigated patient outcomes in Magnet and non-Magnet hospitals over time. In addition, we examined outcomes in Magnet hospitals both before and after they received recognition.

Study Data And Methods

We analyzed Medicare inpatient claims files for the years 1998–2010 to assemble three cohorts of surgical patients: those who had coronary artery bypass graft surgery, colectomy, or lower extremity bypass.[15] These operations were selected to reflect variation in baseline mortality risk and because they are performed frequently in acute care hospitals. We excluded patients who died on the admission date. The study was deemed exempt from review by the University of Michigan's Institutional Review Board.

The complete sample consisted of 5,057,255 patients who were ages sixty-five and older, enrolled in fee-for-service Medicare, and treated in one of 5,222 hospitals during the thirteen-year study period. After matching each Magnet hospital with two non-Magnet hospitals from the Medicare sample, we restricted our analyses to 1,897,014 patients who were treated in one of 993 hospitals. Details about the matching are provided in the online Appendix.[16]

Thirty-Day Mortality And Failure To Rescue

Thirty-day mortality was defined as all-cause mortality within thirty days of the hospital admission date. Failure to rescue was defined as death within thirty days of hospital admission among patients who also experienced a postoperative complication.[17,18] Multiple definitions of failure to rescue are available. Consistent with our previous work,[18] we used International Classification of Diseases, Ninth Revision, and Current Procedural Terminology codes to identify nine postoperative complications (pulmonary failure, pneumonia, myocardial infarction, venous thromboembolism, acute renal failure, hemorrhage, surgical site infection, gastrointestinal bleed, and reoperation). Patients with any of these codes in the ninety days before admission were excluded from consideration.
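The two outcome definitions differ mainly in their denominators, which a short sketch makes concrete. It is a minimal illustration, not the study's code: the `PatientStay` record and its fields are hypothetical, patients who died on the admission date are assumed to have been removed already, and day 30 is assumed to count as within thirty days. Thirty-day mortality divides deaths by all patients; failure to rescue divides deaths by only the patients who had one of the nine complications.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class PatientStay:
    # Hypothetical fields chosen for illustration; not the study's data layout.
    admission: date
    death: Optional[date]   # None if no death was recorded
    had_complication: bool  # any of the nine postoperative complications


def died_within_30_days(stay: PatientStay) -> bool:
    """All-cause death within thirty days of the admission date (day 30 assumed inclusive)."""
    return stay.death is not None and stay.death - stay.admission <= timedelta(days=30)


def thirty_day_mortality(stays: List[PatientStay]) -> float:
    """Deaths within thirty days divided by all patients."""
    return sum(died_within_30_days(s) for s in stays) / len(stays)


def failure_to_rescue(stays: List[PatientStay]) -> float:
    """Deaths within thirty days divided by patients who had a postoperative complication."""
    complicated = [s for s in stays if s.had_complication]
    return sum(died_within_30_days(s) for s in complicated) / len(complicated)


if __name__ == "__main__":
    stays = [
        PatientStay(date(2005, 3, 1), None, False),
        PatientStay(date(2005, 3, 1), date(2005, 3, 20), True),  # complication, died on day 19
        PatientStay(date(2005, 3, 1), None, True),               # complication, survived
        PatientStay(date(2005, 3, 1), date(2005, 5, 1), True),   # complication, died after day 30
    ]
    print(thirty_day_mortality(stays))  # 1 death out of 4 patients
    print(failure_to_rescue(stays))     # 1 death out of 3 patients with complications
```

With these four hypothetical patients, thirty-day mortality is 1 in 4 and failure to rescue is 1 in 3, because the patient without a complication drops out of the failure-to-rescue denominator.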

Failure to rescue is an attractive quality of care measure because it focuses less on the occurrence of a complication and more on the hospital's capability to recognize and address a complication. Our team's previous work suggests that failure to rescue is more closely associated than complication rates with differences in hospital characteristics.[18] Failure to rescue is also considered a sensitive measure of nursing care delivery.[19]

Magnet Hospital Recognition

The primary exposure variable was a hospital's recognition by the ANCC Magnet Recognition Program.[7] The program's website was used to identify hospitals that obtained Magnet recognition and their year or years of recognition. We identified each hospital's Medicare National Provider Identifier using the National Plan and Provider Enumeration System.[20]

Hospitals were classified by whether they had Magnet recognition in the corresponding year of analysis, had Magnet recognition at any time during the study period (1998-2010), or never had Magnet recognition during the study period. Magnet classifications were updated each year of the study period to account for mergers and closures.
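A sketch of the yearly classification, under stated assumptions: the helper names are hypothetical, each award is assumed to cover the award year plus the following three calendar years (the four-year term described above, ignoring the month of award), and updates for mergers or closures are not modeled. The "current" and "ever" flags together give the three groups: recognized in the year of analysis, recognized at some point during 1998-2010, and never recognized.

```python
from typing import Iterable, Set, Tuple

# Study period 1998 through 2010, inclusive.
STUDY_YEARS = range(1998, 2011)


def covered_years(award_years: Iterable[int], term: int = 4) -> Set[int]:
    """Expand each award year into the calendar years it covers.

    ASSUMPTION: a recognition awarded in year y covers y .. y + term - 1; the real
    program dates recognition by month, which this sketch ignores.
    """
    return {y for start in award_years for y in range(start, start + term)}


def magnet_flags(recognized: Set[int], year: int) -> Tuple[bool, bool]:
    """Return (recognized in the year of analysis, ever recognized during 1998-2010)."""
    in_study = {y for y in recognized if y in STUDY_YEARS}
    return year in in_study, bool(in_study)


if __name__ == "__main__":
    # Hypothetical hospital recognized in 2001 and again in 2009, with a gap in between.
    years = covered_years([2001, 2009])
    for y in (1999, 2003, 2006, 2010):
        current, ever = magnet_flags(years, y)
        print(y, "current" if current else "not current", "ever" if ever else "never")
```

For this hypothetical hospital, 2006 falls in a gap: the hospital is not currently recognized but still counts as ever recognized.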

Hospital Characteristics

Hospital characteristics were obtained from the Medicare Provider of Service files and the Healthcare Cost Report Information System. We measured the following seven hospital characteristics to account for differences between Magnet and non-Magnet hospitals:

1. Location. The hospital's location was categorized as urban or rural, according to whether or not it was in a Metropolitan Statistical Area.
2. Transplant program. Hospitals with active transplant programs were identified from the Medicare Provider of Service files.
3. Teaching status. We identified hospitals that employed medical residents or fellows.
4. Size. We measured the number of staffed beds.
5. Outpatient share. The proportion of hospital revenue attributable to outpatient care was calculated as the revenue obtained from outpatient billing divided by the sum of the revenue obtained from outpatient and inpatient billing.
6. Cost-to-charge ratio. Each hospital's ratio was calculated as the total costs for inpatient acute care reported to Medicare divided by the charges submitted in the same fiscal year.
7. Nurse staffing. Hospital nurse staffing was measured from the Provider of Service files and the Healthcare Cost Report Information System as registered nurse hours per patient day, adjusted for each hospital's outpatient share.[21] This adjustment reduced the variation in reported staffing levels for facilities whose large on-site ambulatory practices skewed inpatient nurse staffing values.
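The three computed characteristics can be written as small functions. The first two follow the definitions above directly. The nurse staffing adjustment is an assumption of this sketch: the text cites Needleman and coauthors[21] without giving the formula, so the proportional allocation below (keeping only the inpatient fraction, 1 − outpatient share, of RN hours) is an illustrative stand-in rather than the study's method.

```python
def outpatient_share(outpatient_revenue: float, inpatient_revenue: float) -> float:
    """Outpatient billing revenue / (outpatient + inpatient billing revenue)."""
    return outpatient_revenue / (outpatient_revenue + inpatient_revenue)


def cost_to_charge_ratio(inpatient_acute_costs: float, inpatient_charges: float) -> float:
    """Inpatient acute care costs reported to Medicare / charges in the same fiscal year."""
    return inpatient_acute_costs / inpatient_charges


def adjusted_rn_hours_per_patient_day(
    total_rn_hours: float, inpatient_days: float, out_share: float
) -> float:
    """RN hours per patient day after removing the outpatient share of RN hours.

    ASSUMPTION: RN hours are allocated to inpatient care in proportion to
    (1 - outpatient share). This is a simplified stand-in, not the cited method.
    """
    return total_rn_hours * (1.0 - out_share) / inpatient_days


if __name__ == "__main__":
    # Hypothetical hospital: $36M outpatient and $64M inpatient revenue.
    share = outpatient_share(36.0, 64.0)
    print(round(share, 2))
    print(round(1_000_000 / 90_000, 2))  # unadjusted RN hours per patient day
    print(round(adjusted_rn_hours_per_patient_day(1_000_000, 90_000, share), 2))
```

For this hypothetical hospital, the unadjusted figure is about 11.1 RN hours per patient day and the adjusted figure about 7.1, which shows how a large ambulatory practice can inflate apparent inpatient staffing.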

Patient Characteristics For Risk Adjustment

Diagnosis and procedure codes and demographic variables were used for severity of illness adjustment. Using methods published by Anne Elixhauser and coauthors,[22] we identified comorbid conditions from diagnosis and procedure codes. Age, sex, race/ethnicity, operation performed, number of comorbid conditions reported, and the presence of twenty-nine specific comorbid conditions were included in all models.

Statistical Analysis

We linked the list of Magnet hospitals to the patient claims and hospital characteristics data by matching Medicare provider identifier and year. All patient data were included if the hospital had relevant operations in the corresponding study year. We first conducted analyses at the patient level to understand the outcomes from the population perspective. We then conducted hospital-level analyses to examine these issues from the perspective of hospital and health system leaders.

Patient-Level Analyses

For thirty-day mortality, we analyzed the sample of 1,897,014 patients. For failure to rescue, we studied a subsample of 669,158 patients who experienced a postoperative complication; these patients were treated in 984 hospitals. We used generalized estimating equations to compare the likelihood of thirty-day mortality and of failure to rescue between patients treated in hospitals that ever received Magnet recognition and patients treated in hospitals that never received it. All models were adjusted for patient characteristics, hospital characteristics, and year of operation.

To reduce differences in hospital characteristics between Magnet and non-Magnet hospitals, we used a propensity score model that compared each Magnet hospital's outcomes with those of two non-Magnet hospitals that were most closely matched on the seven hospital characteristics listed above. Details on the modeling strategies and adjustments are available in the Appendix.[16]
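The text does not specify the matching algorithm beyond pairing each Magnet hospital with the two closest non-Magnet hospitals, so the following is one common way to do it: greedy nearest-neighbor matching on a precomputed propensity score, without replacement and without a caliper. The propensity model itself (for example, a logistic regression of Magnet status on the seven characteristics) is not shown, and the function name is hypothetical. Matching without replacement is consistent with the reported counts: 331 Magnet hospitals and 662 distinct controls give the 993 hospitals analyzed.

```python
from typing import Dict, List


def match_controls(
    treated: Dict[str, float], controls: Dict[str, float], k: int = 2
) -> Dict[str, List[str]]:
    """Greedy k-to-1 nearest-neighbor matching on the propensity score, without replacement.

    `treated` and `controls` map hospital IDs to estimated propensity scores, i.e. the
    modeled probability of Magnet recognition given hospital characteristics.
    """
    available = dict(controls)
    matches: Dict[str, List[str]] = {}
    # Process treated hospitals from highest to lowest score; this ordering is a
    # design choice of the sketch, and greedy results depend on it.
    for hosp in sorted(treated, key=treated.get, reverse=True):
        if len(available) < k:
            raise ValueError("not enough unmatched controls remain")
        score = treated[hosp]
        nearest = sorted(available, key=lambda c: abs(available[c] - score))[:k]
        matches[hosp] = nearest
        for c in nearest:
            del available[c]  # without replacement: each control is used at most once
    return matches


if __name__ == "__main__":
    magnet = {"M1": 0.80, "M2": 0.30}  # hypothetical propensity scores
    pool = {"C1": 0.79, "C2": 0.75, "C3": 0.31, "C4": 0.20, "C5": 0.50}
    print(match_controls(magnet, pool))
```

Greedy matching is order-dependent; optimal matching, or adding a caliper on the score, are common alternatives when close matches are scarce.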

Hospital-Level Analyses

We analyzed patient outcomes across hospitals to assess hospital outcomes over time and to determine whether outcomes improved after hospitals received Magnet recognition. For each study year, risk-adjusted patient outcomes were averaged for each hospital. We plotted the risk-adjusted outcome rates for Magnet and non-Magnet hospitals across all thirteen study years.
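The text does not say how patient-level risk adjustment was turned into hospital-year rates. One standard approach, assumed here, is indirect standardization: sum observed events and model-predicted risks for each hospital-year, then scale the observed-to-expected ratio by the overall rate. The record layout and function name are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Record = Tuple[str, int, int, float]  # (hospital_id, year, died 0/1, predicted risk)


def risk_adjusted_rates(
    records: Iterable[Record], overall_rate: float
) -> Dict[Tuple[str, int], float]:
    """Indirectly standardized outcome rate for each (hospital, year).

    rate = (observed events / expected events) * overall_rate, where expected events
    are the sum of patient-level predicted risks from a risk-adjustment model.
    """
    observed: Dict[Tuple[str, int], float] = defaultdict(float)
    expected: Dict[Tuple[str, int], float] = defaultdict(float)
    for hospital, year, died, risk in records:
        observed[(hospital, year)] += died
        expected[(hospital, year)] += risk
    return {key: observed[key] / expected[key] * overall_rate for key in expected}
```

With an overall rate of 0.061 (the unadjusted thirty-day mortality reported in the results), a hypothetical hospital-year with one death against 0.4 expected deaths would have a risk-adjusted rate of 2.5 × 0.061, or about 15.3 percent.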

First, we examined all Magnet hospitals and their matched controls across all study years. Second, for each study year, we identified hospitals that achieved Magnet recognition in that year, along with their matched controls, and compared outcomes for the years before, during, and after Magnet recognition (and the corresponding observation years for the non-Magnet control hospitals). Finally, we examined outcomes for patients treated in all Magnet hospitals to determine whether outcomes were better if the hospital's recognition was active during the year of the operation than if it was not.
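The before/during/after comparison can be expressed in event time, that is, years measured relative to each hospital's first recognition. The sketch uses the four-years-before and three-years-after window reported for Exhibit 4; treating the recognition year itself as "during" and assigning each matched control its Magnet hospital's recognition year are assumptions, and the function names are hypothetical.

```python
from typing import Optional


def event_time(year: int, first_recognition_year: int) -> int:
    """Years since first Magnet recognition: negative before, 0 in the recognition year."""
    return year - first_recognition_year


def period(
    year: int, first_recognition_year: int, before: int = 4, after: int = 3
) -> Optional[str]:
    """Label a year 'before', 'during', or 'after' recognition; None if outside the window.

    ASSUMPTION: a matched control uses the recognition year of its Magnet hospital.
    """
    t = event_time(year, first_recognition_year)
    if t < -before or t > after:
        return None
    if t < 0:
        return "before"
    return "during" if t == 0 else "after"


if __name__ == "__main__":
    # Hypothetical hospital first recognized in 2005.
    for y in range(2000, 2010):
        print(y, event_time(y, 2005), period(y, 2005))
```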

Sensitivity Analyses

To test the robustness of our findings, we conducted seven sets of sensitivity analyses. First, we replicated our model using Jeffrey Silber and coauthors' definition of failure to rescue.[17] Second, instead of restricting the analysis to Magnet hospitals and their matched controls, we replicated our findings using all hospitals in the national Medicare sample. Third, we replicated our findings using all Magnet hospitals, each matched with five non-Magnet control hospitals.

Fourth, we replicated our patient-level models using a mixed modeling approach.[23] Fifth, we ran the analyses separately for the three operations. Sixth, instead of treating the hospital as a fixed effect in the hospital-level analyses, we treated it as a random effect in mixed models. Seventh, we examined four additional operations (carotid endarterectomy, aortic valve repair, abdominal aortic aneurysm repair, and mitral valve repair).

None of the estimates obtained in the sensitivity analyses differed appreciably from those reported here.

Limitations

This study has several limitations. Between 1998 and 2010, the criteria for Magnet recognition changed. We could not account for these changes or for external factors that might have influenced receipt of Magnet recognition.

Despite careful risk adjustment, there may have been unmeasured differences in hospitals (such as staff perceptions of their work environment and intensive care unit availability) and in patient characteristics (for example, physiological variables) that might influence postoperative outcomes. In addition, results from Medicare claims might not be generalizable to other populations.

The propensity score model matched each Magnet hospital with two similar non-Magnet hospitals. However, some hospital characteristics still differed between the groups after matching, which indicates residual imbalance. This is a common problem in health services research.[24]
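One widely used balance diagnostic after matching is the standardized mean difference. The study does not report it, so the sketch below applies the binary-variable formula to the hospital proportions in Exhibit 1 purely as an illustration; the 0.1 cutoff is a common rule of thumb, not a threshold used by the authors, and the function name is hypothetical.

```python
import math


def smd_binary(p_treated: float, p_control: float) -> float:
    """Standardized mean difference for a binary characteristic.

    Difference in proportions divided by the pooled standard deviation
    sqrt((p1 * (1 - p1) + p0 * (1 - p0)) / 2).
    """
    pooled_sd = math.sqrt((p_treated * (1 - p_treated) + p_control * (1 - p_control)) / 2)
    return (p_treated - p_control) / pooled_sd


if __name__ == "__main__":
    # Hospital proportions from Exhibit 1 (Magnet vs. matched controls).
    exhibit_1 = {
        "urban location": (0.918, 0.950),
        "teaching program": (0.686, 0.710),
        "transplant program": (0.293, 0.187),
    }
    for name, (p1, p0) in exhibit_1.items():
        d = smd_binary(p1, p0)
        flag = "imbalanced" if abs(d) > 0.1 else "balanced"  # common 0.1 rule of thumb
        print(f"{name}: SMD = {d:+.2f} ({flag})")
```

On these figures, the transplant program difference (about 0.25) and the urban location difference (about −0.13) exceed the 0.1 rule of thumb, while teaching status (about −0.05) does not. Continuous characteristics such as staffed beds would need group standard deviations, which Exhibit 1 does not report.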

Including additional hospital characteristics in the propensity score model might have improved the matching. Furthermore, our models estimated the effects of initial or current Magnet recognition, although 48 percent of the Magnet hospitals in the sample had a gap in their recognition.

Finally, because of hospital closures and mergers, not all facilities were included in all thirteen study years.

Study Results

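Of the 1,897,014 patients, 839,802 (44.3 percent) were treated in Magnet hospitals (Exhibit 1). Patients in the two groups were similar in age, number of comorbid conditions, and sex distribution, although with nearly two million patients even these small differences were statistically significant.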

Exhibit 1.

Characteristics Of Patients And Hospitals In The Study, By Magnet Recognition Status

| Characteristic | Magnet hospitals (n = 331) | Non-Magnet matched control hospitals (n = 662) |
|---|---|---|
| Patients (%) | | |
| All | 44.3 | 55.7 |
| Age (years)**** | | |
| 65-69 | 24.2 | 24.7 |
| 70-74 | 26.2 | 26.2 |
| 75-79 | 24.8 | 24.5 |
| 80-84 | 16.0 | 15.8 |
| 85 and older | 8.8 | 8.8 |
| Number of comorbid conditions**** | | |
| 0 | 11.1 | 10.8 |
| 1 | 28.3 | 27.7 |
| 2 or more | 60.6 | 61.5 |
| Sex**** | | |
| Male | 58.1 | 56.8 |
| Female | 41.9 | 43.2 |
| Operation**** | | |
| Coronary artery bypass graft | 55.0 | 53.7 |
| Colectomy | 29.2 | 30.1 |
| Lower extremity bypass | 15.8 | 16.2 |
| Hospitals | | |
| Urban location (%)* | | |
| No | 8.2 | 5.0 |
| Yes | 91.8 | 95.0 |
| Teaching program (%) | | |
| No | 31.4 | 29.0 |
| Yes | 68.6 | 71.0 |
| Transplant program (%)**** | | |
| No | 70.7 | 81.3 |
| Yes | 29.3 | 18.7 |
| Staffed beds (median)** | 421 | 371 |
| Adjusted RN hours/patient day^a (median) | 7.10 | 6.74 |
| Cost-to-charge ratio (median)** | 0.34 | 0.33 |
| Outpatient share (median) | 0.36 | 0.35 |

SOURCE Authors' analysis of Medicare data. NOTES We used chi-square tests to compare categorical variables and the Wilcoxon two-sample test to compare continuous variables. Asterisks displayed with category labels show the results. Outpatient share and cost-to-charge ratio are defined in the text. RN is registered nurse.

^a Adjusted for hospital's outpatient share.

*p < 0.10

**p < 0.05

****p < 0.001

The unadjusted overall thirty-day mortality rate was 6.1 percent. In the subset of patients who experienced a postoperative complication (669,158; 35.3 percent of the sample), the unadjusted failure to rescue rate was 12.0 percent.

Of the 993 hospitals studied, 331 (33.3 percent of the analytic sample) were recognized as Magnet hospitals at any time during the study period. Magnet hospitals were larger than non-Magnet hospitals (median staffed beds: 421 versus 371 beds), and a larger share of Magnet hospitals had transplant programs (29.3 percent versus 18.7 percent; Exhibit 1).

Compared to non-Magnet hospitals, Magnet facilities had higher nurse staffing (in terms of adjusted registered nurse hours per patient day), were less likely to be in an urban location, and were less likely to have a teaching program. However, these differences were not statistically significant.

Magnet Recognition And Patient Mortality

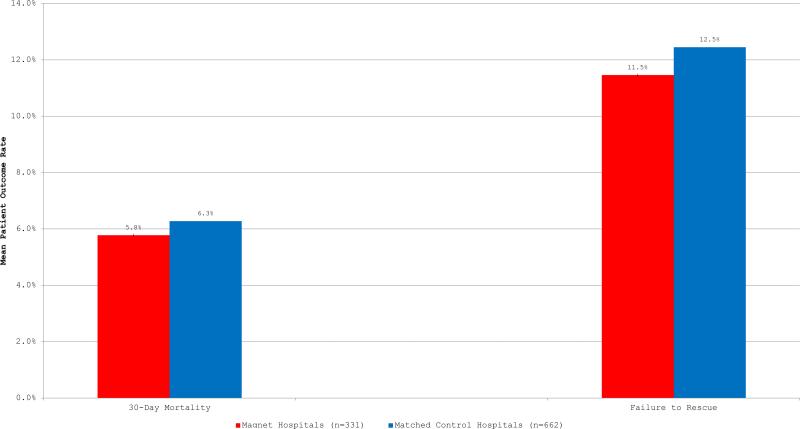

Thirty-day mortality rates were significantly lower in Magnet hospitals than in matched controls (5.8 percent versus 6.3 percent; Exhibit 2). A similar difference was observed for failure to rescue.

Exhibit 2 (figure).

Caption: Mean Patient Outcome Rates In Magnet Hospitals And Non-Magnet Matched Control Hospitals

Source/Notes: SOURCE Authors’ analysis of 1998-2010 Medicare data. NOTES Thirty-day mortality and failure to rescue are defined in the text. Differences between the two groups were significant (p < 0.01).

In multivariable analyses that controlled for patient and hospital characteristics, patients treated in Magnet hospitals were 7.7 percent less likely to die within thirty days than patients treated in non-Magnet hospitals (95% confidence interval: 0.89, 0.96). Patients treated in Magnet hospitals were also 8.6 percent less likely to die after a postoperative complication than patients treated in matched control hospitals (95% CI: 0.88, 0.95).
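The percentages map directly onto the ratio scale of the confidence intervals: 7.7 percent less likely corresponds to a ratio of 0.923 (1 − 0.077), inside the interval 0.89 to 0.96, and 8.6 percent corresponds to 0.914, inside 0.88 to 0.95. The text does not state the model's link function. Assuming these are odds ratios from a logistic model, the Zhang-Yu approximation converts an odds ratio to a risk ratio given the comparison group's risk; using the unadjusted control rate of 6.3 percent from Exhibit 2 as that baseline is a simplification of this sketch, and the function names are hypothetical.

```python
def percent_lower(ratio: float) -> float:
    """Express a ratio below 1 as a percent reduction (0.923 -> about 7.7)."""
    return (1.0 - ratio) * 100.0


def odds_ratio_to_risk_ratio(odds_ratio: float, baseline_risk: float) -> float:
    """Zhang-Yu approximation: RR = OR / (1 - p0 + p0 * OR).

    baseline_risk (p0) is the outcome risk in the comparison group.
    """
    return odds_ratio / (1.0 - baseline_risk + baseline_risk * odds_ratio)


if __name__ == "__main__":
    # Ratios implied by the reported percentages (assumed to be odds ratios).
    for label, ratio in (("thirty-day mortality", 0.923), ("failure to rescue", 0.914)):
        print(label, round(percent_lower(ratio), 1))
    # ASSUMPTION: unadjusted matched-control mortality (6.3 percent) as the baseline risk.
    print(round(odds_ratio_to_risk_ratio(0.923, 0.063), 3))
```

Because thirty-day mortality is uncommon (about 6 percent), the approximate risk ratio (about 0.93) is close to the odds ratio, so reading 0.923 as "7.7 percent less likely" overstates the relative risk reduction only slightly.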

Hospital Outcomes Over Time

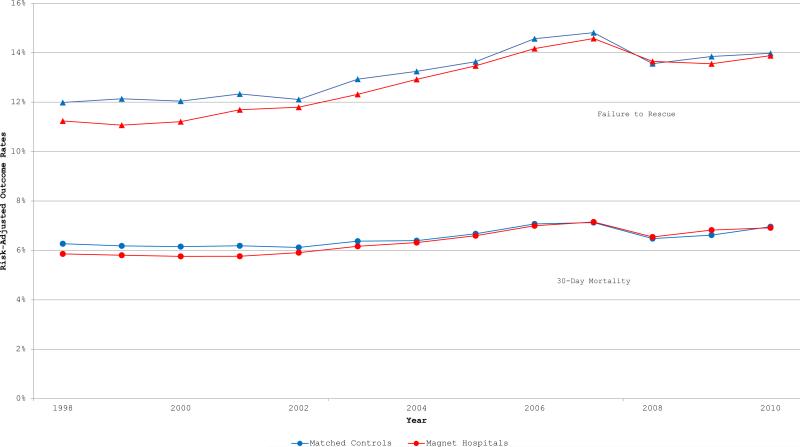

With the exception of two years, Magnet hospitals had lower thirty-day mortality and failure to rescue rates than matched control hospitals (Exhibit 3): Magnet hospitals had higher thirty-day mortality than matched controls in 2009 and higher failure to rescue in 2008. However, in linear mixed models that included all study years, Magnet hospitals had significantly lower rates of risk-adjusted thirty-day mortality and failure to rescue across the study period.

Exhibit 3 (figure).

Caption: Risk-Adjusted Mean Patient Outcome Rates In Magnet Hospitals And Non-Magnet Matched Control Hospitals Across Study Years

Source/Notes: SOURCE Authors’ analysis of 1998-2010 Medicare data. NOTES Thirty-day mortality and failure to rescue are defined in the text. The rates were adjusted for patients’ characteristics.

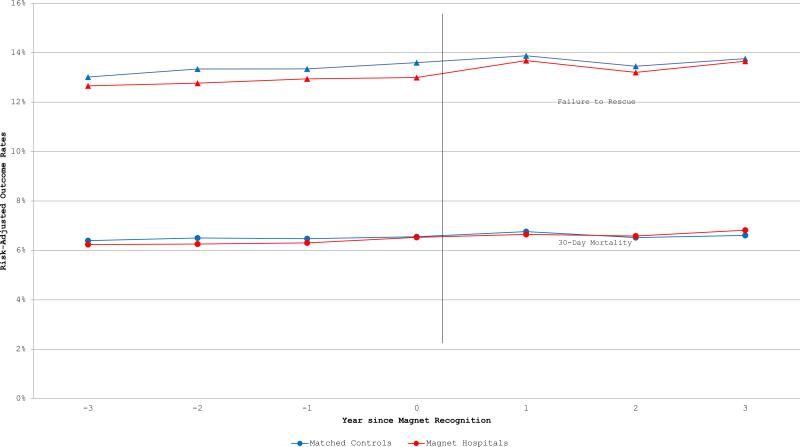

We next examined whether outcomes in Magnet hospitals improved after initial Magnet recognition, and we compared outcomes with matched controls for the four years before and three years after initial recognition (Exhibit 4). In multivariable models, Magnet hospitals had significantly better risk-adjusted failure to rescue rates than non-Magnet hospitals (β = −0.66; 95% CI: −1.20, −0.12). For both Magnet hospitals and their matched controls, outcome rates did not differ significantly over time.

Exhibit 4 (figure).

Caption: Risk-Adjusted Mean Patient Outcome Rates In Magnet Hospitals And Non-Magnet Matched Controls for Four Years Before And Three Years After Magnet Recognition

Source/Notes: SOURCE Authors’ analysis of 1998-2010 Medicare data. NOTE One hundred six hospitals were excluded from this analysis because they had too few years of data.

No noteworthy improvements in outcomes were observed for Magnet hospitals after their first recognition. A final analysis that included only the 331 Magnet hospitals found no significant differences in risk-adjusted thirty-day mortality or failure to rescue rates, according to whether or not patients received their operations during a year in which the hospital's Magnet recognition was active.

Discussion

During a thirteen-year period, surgical patients treated in hospitals recognized by the ANCC Magnet Recognition Program were less likely to experience all-cause mortality within thirty days of admission or failure to rescue (death after a postoperative complication). These results persisted despite adjustments for year of operation, patient severity of illness, and hospital characteristics. Results were also adjusted for hospital nurse staffing, a variable frequently associated with patient mortality.[21,25,26] For hospitals that achieved their first Magnet recognition during the study period, we observed no improvements in outcome rates after recognition.

Our work confirms the findings of previous cross-sectional studies[12–14] and extends the understanding of how organizational factors affect surgical patient outcomes. The 1994 study[12] used the original 1983 cohort of Magnet hospitals, which were identified by reputation rather than through the current formal review process. Using a matched control sample, the researchers found that risk-adjusted mortality rates were lower in Magnet hospitals. However, these original Magnet hospitals had better nurse staffing ratios than non-Magnet hospitals. Our study shows that, independent of nurse staffing levels, Magnet hospitals have lower rates of thirty-day mortality and failure to rescue. It also confirms, with comparable effect sizes, the findings of two studies conducted in convenience samples.[13,14]

Patients undergoing all three operations benefited from receiving their care in Magnet hospitals. Previous work has demonstrated that Magnet hospitals reduce organizational hierarchy, create structures and processes to increase the autonomy of staff nurses, measure and benchmark nursing-sensitive quality indicators, and have more satisfied nursing staff.[9] Organizations with robust quality improvement mechanisms and those that empower front-line clinicians to advocate for patients and facilitate decision making are more likely to deliver evidence-based care, identify patient problems rapidly, and assemble the necessary human and physical resources to rescue patients from crisis.[27]

Overall outcomes were better for patients treated in Magnet hospitals than for those treated in non-Magnet facilities, but for Magnet hospitals, outcome rates did not differ before and after recognition. Additional organizational factors likely contribute to the favorable outcomes observed in Magnet hospitals. In a recent study,[14] a survey-derived measure of nursing quality was significantly associated with lower mortality, independent of Magnet recognition.

Our findings can inform the deliberations of the Institute of Medicine's Standing Committee on Credentialing Research in Nursing.[28] The hospital-level credential of Magnet recognition identifies existing excellence in patient care rather than a trend of improved outcomes after recognition is obtained. Studying Magnet hospitals in greater depth is one important strategy for uncovering how nursing care delivery affects performance.

When our results are considered in the context of the literature, the following important messages emerge. Patients are well served in choosing Magnet hospitals for their surgical care. It does not appear to matter whether hospitals are newly recognized or have been recognized for some time. Hospital leaders should appreciate the benefits to staff when pursuing Magnet recognition but should not expect improvements in patient outcomes after recognition has occurred. Finally, policy makers should support efforts to study how high-performing hospitals achieve their results.

Conclusion

Surgical outcomes are better for patients treated in hospitals recognized by the Magnet Recognition Program of the American Nurses Credentialing Center. Hospitals that obtain Magnet recognition likely have additional organizational features that benefit patient outcomes. To guide further quality improvement, researchers should study how high-performing hospitals achieve better outcomes than hospitals with weaker performance.

Supplementary Material

Hospitals In ‘Magnet’ Program Show Better Patient Outcomes On Mortality Measures Compared To Non-‘Magnet’ Hospitals

Acknowledgment

This research was supported by a Pathway to Independence Award from the National Institute of Nursing Research to Christopher Friese (Award no. R00-NR01570). The authors thank Marie-Claire Roberts for her assistance in verifying Magnet hospital recognition.

Footnotes

Disclaimer

John Birkmeyer is the founder of and holds an equity interest in ArborMetrix, a health care software company. The company had no role in the research described in this article. The other authors have nothing to disclose.

NOTES

1. Medicare.gov. Hospital compare [Internet]. Baltimore (MD): Centers for Medicare and Medicaid Services; [cited 2015 Apr 23]. Available from: http://www.medicare.gov/hospitalcompare/search.html

2. U.S. News and World Report. Health: best hospitals [Internet]. [cited 2015 Apr 23]. Available from: http://health.usnews.com/best-hospitals

3. Leapfrog Group. Top hospitals [Internet]. Washington (DC): Leapfrog Group; 2015 [cited 2015 Apr 23]. Available from: http://www.leapfroggroup.org/tophospitals

4. Baldrige Performance Excellence Program. About us [Internet]. Gaithersburg (MD): National Institute of Standards and Technology; 2015 [cited 2015 Apr 27]. Available from: http://www.nist.gov/baldrige/publications/criteria.cfm

5. Truven Health Analytics. 100 top hospitals [Internet]. Ann Arbor (MI): Truven; 2015 [cited 2015 Apr 23]. Available from: http://www.100tophospitals.com/

6. Austin JM, Jha AK, Romano PS, Singer SJ, Vogus TJ, Wachter RM, et al. National hospital ratings systems share few common scores and may generate confusion instead of clarity. Health Aff (Millwood). 2015;34(3):423–30. doi: 10.1377/hlthaff.2014.0201.

7. American Nurses Credentialing Center. ANCC Magnet Recognition Program [Internet]. Silver Spring (MD): ANCC; [cited 2015 Apr 23]. Available from: http://www.nursecredentialing.org/Magnet

8. American Nurses Credentialing Center. Magnet Recognition Program designation criteria [Internet]. Silver Spring (MD): ANCC; [cited 2015 Apr 23]. Available from: http://www.nursecredentialing.org/Magnet/ResourceCenters/MagnetResearch/MagnetDesignation.html

9. Houston S, Leveille M, Luguire R, Fike A, Ogola GO, Chando S. Decisional involvement in Magnet, magnet-aspiring, and non-magnet hospitals. J Nurs Adm. 2012;42(12):586–91. doi: 10.1097/NNA.0b013e318274b5d5.

10. Jayawardhana J, Welton JM, Lindrooth RC. Is there a business case for magnet hospitals? Estimates of the cost and revenue implications of becoming a magnet. Med Care. 2014;52(5):400–6. doi: 10.1097/MLR.0000000000000092.

11. American Nurses Credentialing Center. Magnet Recognition Program benefits [Internet]. Silver Spring (MD): ANCC; [cited 2015 Apr 27]. Available from: http://www.nursecredentialing.org/Magnet/ProgramOverview/WhyBecomeMagnet

12. Aiken LH, Smith HL, Lake ET. Lower Medicare mortality among a set of hospitals known for good nursing care. Med Care. 1994;32(8):771–87. doi: 10.1097/00005650-199408000-00002.

13. Lake ET, Staiger D, Horbar J, Cheung R, Kenny MJ, Patrick T, et al. Association between hospital recognition for nursing excellence and outcomes of very low-birth-weight infants. JAMA. 2012;307(16):1709–16. doi: 10.1001/jama.2012.504.

14. McHugh MD, Kelly LA, Smith HL, Wu ES, Vanak JM, Aiken LH. Lower mortality in Magnet hospitals. Med Care. 2013;51(5):382–8. doi: 10.1097/MLR.0b013e3182726cc5.

15. Birkmeyer JD, Dimick JB, Birkmeyer NJ. Measuring the quality of surgical care: structure, process, or outcomes? J Am Coll Surg. 2004;198(4):626–32. doi: 10.1016/j.jamcollsurg.2003.11.017.

16. To access the Appendix, click on the Appendix link in the box to the right of the article online.

17. Silber JH, Williams SV, Krakauer H, Schwartz JS. Hospital and patient characteristics associated with death after surgery. A study of adverse occurrence and failure to rescue. Med Care. 1992;30(7):615–29. doi: 10.1097/00005650-199207000-00004.

18. Ghaferi AA, Birkmeyer JD, Dimick JB. Variation in hospital mortality associated with inpatient surgery. N Engl J Med. 2009;361(14):1368–75. doi: 10.1056/NEJMsa0903048.

19. NQF Quality Positioning System: failure to rescue [Internet]. National Quality Forum; 2015 [cited 2015 Apr 27]. Available from: http://www.qualityforum.org/QPS/0353

20. National Plan and Provider Enumeration System. National Provider Identifier [Internet]. Baltimore (MD): Centers for Medicare and Medicaid Services; [cited 2015 Apr 23]. Available from: https://nppes.cms.hhs.gov/NPPES/Welcome.do

21. Needleman J, Buerhaus P, Mattke S, Stewart M, Zelevinsky K. Nurse-staffing levels and the quality of care in hospitals. N Engl J Med. 2002;346(22):1715–22. doi: 10.1056/NEJMsa012247.

22. Elixhauser A, Steiner C, Harris DR, Coffey RM. Comorbidity measures for use with administrative data. Med Care. 1998;36(1):8–27. doi: 10.1097/00005650-199801000-00004.

23. Diggle PJ, Heagerty P, Liang K-Y, Zeger SL. Analysis of longitudinal data. 2nd ed. Oxford: Oxford University Press; 2013.

24. Garrido MM, Kelley AS, Paris J, Roza K, Meier DE, Morrison RS, et al. Methods for constructing and assessing propensity scores. Health Serv Res. 2014;49(5):1701–20. doi: 10.1111/1475-6773.12182.

25. Aiken LH, Clarke SP, Sloane DM, Sochalski J, Silber JH. Hospital nurse staffing and patient mortality, nurse burnout, and job dissatisfaction. JAMA. 2002;288(16):1987–93. doi: 10.1001/jama.288.16.1987.

26. Kane RL, Shamliyan TA, Mueller C, Duval S, Wilt TJ. The association of registered nurse staffing levels and patient outcomes: systematic review and meta-analysis. Med Care. 2007;45(12):1195–204. doi: 10.1097/MLR.0b013e3181468ca3.

27. Clarke SP. Failure to rescue: lessons from missed opportunities in care. Nurs Inq. 2004;11(2):67–71. doi: 10.1111/j.1440-1800.2004.00210.x.

28. Institute of Medicine. Standing Committee on Credentialing Research in Nursing [Internet]. Washington (DC): IOM; [cited 2015 Apr 23]. Available from: http://www.iom.edu/Activities/Workforce/NursingCredentialing.aspx
