Article-at-a-Glance

Background

Despite substantial evidence to support the effectiveness of hand hygiene for preventing health care–associated infections, hand hygiene practice is often inadequate. Hand hygiene product dispensers that can electronically capture hand hygiene events have the potential to improve hand hygiene performance. An automated group monitoring and feedback system was implemented and evaluated from January 2012 through March 2013 at a 140-bed community hospital.

Methods

An electronic system that monitors the use of sanitizer and soap but does not identify individual health care personnel was used to calculate hand hygiene events per patient-hour for each of eight inpatient units and hand hygiene events per patient-visit for the six outpatient units. Hand hygiene was monitored but feedback was not provided during a six-month baseline period and three-month rollout period. During the rollout, focus groups were conducted to determine preferences for feedback frequency and format. During the six-month intervention period, graphical reports were e-mailed monthly to all managers and administrators, and focus groups were repeated.

Results

After the feedback began, hand hygiene increased by a median of 0.17 events/patient-hour in inpatient units (interquartile range = 0.14, p = .008). In outpatient units, hand hygiene performance did not change significantly. A variety of challenges were encountered, including obtaining accurate census and staffing data, engendering confidence in the system, disseminating information in the reports, and using the data to drive improvement.

Conclusions

Feedback via an automated system was associated with improved hand hygiene performance in the short term.

Although there is substantial evidence to support the effectiveness of hand hygiene for preventing health care–associated infections,1–4 research shows that health care personnel (HCP) hand hygiene practice is often inadequate.5 In an effort to improve adherence to recommended hand hygiene practices, The Joint Commission,6 the Centers for Disease Control and Prevention (CDC),7 and the World Health Organization (WHO)8 have called for facilities to monitor hand hygiene compliance and provide the information back to frontline staff so that they can improve their performance. This combination of audit and feedback has been shown to improve compliance.9 However, both audit and feedback processes present challenges.

Audit of hand hygiene is most commonly accomplished through direct observation of practice.10 It is a resource-intensive process and can yield erroneous results if observers are not trained to consistently apply a standard data collection protocol. Compliance can be artificially inflated or deflated by selection bias, observer bias, or the Hawthorne effect.11,12

Feedback of hand hygiene performance is a cornerstone of multimodal approaches to increasing compliance.13,14 Unfortunately, reports of multimodal interventions frequently do not describe the feedback process in sufficient detail to fully understand the methods and allow for replication. Furthermore, it is difficult to judge the effects of feedback independent of other elements of the intervention.15 Reports of hand hygiene audit and feedback have generally not been based on behavioral theories or concepts, so there is little specific evidence about how or why certain types of feedback work under different circumstances.16

Automated hand hygiene monitoring systems that use electronic counters embedded in soap and/or alcohol-based hand rub (ABHR) dispensers are promising because they are less subject to the biases inherent in direct observation and require fewer person-hours for data collection. Systems that can generate reports in a timely manner in formats that are meaningful for staff (that is, provide feedback that staff can readily interpret and then take action) may be an effective means of improving hand hygiene and aligning practice with evidence-based guidelines. In this article, we describe the implementation of an automated group monitoring and feedback system for promoting hand hygiene among HCP and report its impact on the frequency of hand hygiene at a community hospital.

Methods

We assessed the impact of an automated hand hygiene monitoring and feedback system using an interrupted time series design. We hypothesized that hand hygiene frequency would increase when the system was used to deliver feedback.

Setting

The study was conducted from January 2012 through March 2013 at a not-for-profit 140-bed community hospital in the northeastern United States with a staff of 1,700 HCP, 300 affiliated physicians, and 8,000 inpatient admissions and 40,000 emergency visits per year. There were no outbreaks during the course of the study, and no other infection prevention initiatives were undertaken.

Hand Hygiene Policies and Practices Before the Study Began

The hand hygiene policy in place before the study began was based on CDC guidelines.7 Employees completed hand hygiene training on hire and annually. Soap or ABHR was available at more than 800 wall-mounted dispensers. ABHR dispensers were located in corridors at the doorway to each patient room, inside patient rooms near the doorway and/or near the bed, in stretcher bays, in utility rooms, in medication rooms, and at nurses’ stations. Soap dispensers were located inside patient and staff bathrooms. In addition, freestanding ABHR pump bottles were located on anesthesia carts, and pocket dispensers of ABHR were carried by HCP on the psychiatry unit. Visual reminders of when and how to perform hand hygiene were posted on every inpatient unit, in patient bathrooms, and beside elevators, and a life-size image of the chief executive officer using ABHR was displayed at the main entrance to the hospital.

For six years before the study began, hand hygiene monitoring was conducted by direct observation by unit staff using a standard data collection form to record hand hygiene before and after patient contact and between patients in the same room. Each inpatient and outpatient clinical unit was responsible for performing at least 10 observations per month by convenience sampling. Most observations were performed on day shifts during the week. Staff members performing hand hygiene observations were not trained, but the infection prevention program (IP) manager was available to answer questions. Observers were encouraged, but not required, to give immediate feedback to HCP about their hand hygiene performance. Composite compliance reports and graphs were sent monthly to the emergency department (ED) and twice annually to other units. Reports were presented to the Infection Prevention Committee and Medical Executive Committee eight times per year, to the Quality and Patient Safety Council quarterly, and to the Board of Trustees annually.

Before the study began, hand hygiene compliance was reported to be consistently high. For example, in 2011 compliance was reported as > 95% on every inpatient unit. However, these rates did not comport with what the IP manager witnessed on rounds. The organizational culture around hand hygiene was described by one hospital administrator as “committed but complacent,” and administrators did not routinely communicate expectations about hand hygiene to frontline staff. On the advice of the IP manager, because the > 95% compliance was suspect, the hospital administration committed monies toward purchasing an automated hand hygiene monitoring and feedback system. Monitoring by direct observation was discontinued in December 2011.

Approval to study the impact of the new monitoring system was granted by the hospital and the Columbia University Medical Center Institutional Review Board with a waiver of individual consent.

Theoretical Underpinnings for the Study

The model of actionable feedback was used to guide the study.17 It states that facilities with the highest adherence to clinical practice guidelines tend to deliver feedback that is timely, individualized, nonpunitive, and customizable. The model suggests that performance reports should be delivered monthly or more frequently to individual providers and delivered in a collegial manner that focuses on achievement. Reports should be flexible in format to allow HCP to actively engage with the data in a way that is meaningful to them.17 Because hand hygiene feedback to individuals from automated systems can be perceived as potentially punitive by HCP,18 we chose a system that provides quantitative feedback at the unit level rather than by individual. Individuals are not identified by the system. Thus, the feedback delivered in this study met three of the four criteria of the model: It was timely, nonpunitive, and customizable. It was individualized to the specific units, although not to specific individuals, because we were focused on creating a team culture.

Group Monitoring System

An automated group monitoring system (GMS) for hand hygiene was installed throughout the hospital in December 2011. All existing wall-mounted ABHR and soap dispensers were replaced with ABHR and soap dispensers that generate inaudible electronic signals each time a lever is pushed to deliver the hand hygiene product. If a dispenser is pushed more than once within 2.5 seconds, the activations are transmitted as a single event. The dispenser sends the signal to a ceiling-mounted hub/modem in the corridor. There were two or three modems in each unit. The modems transmit event counts along with the locations of the dispensers to a centrally located server. The server automatically generates reports and sends them via e-mail to recipients on a contact list provided by the hospital. If a dispenser battery is at < 20% capacity, or if a dispenser has not sent data for 96 hours, an alert is sent to the central server. When > 1% of dispensers go stale, engineers are dispatched to repair or replace the units. Freestanding pump bottles and pocket dispensers were not monitored.
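The counting and alert rules described above can be sketched as follows. This is a minimal illustration, not the vendor's implementation: the function names are hypothetical, and it assumes that the 2.5-second window is measured from the first push of each counted event, which the text does not specify.

```python
from typing import Iterable, Optional

DEBOUNCE_SECONDS = 2.5  # from the text: repeat pushes within 2.5 s count as one event


def count_events(push_times: Iterable[float], window: float = DEBOUNCE_SECONDS) -> int:
    """Count hand hygiene events from lever-push timestamps given in seconds."""
    events = 0
    last_counted: Optional[float] = None
    for t in sorted(push_times):
        # Assumption: a push starts a new event only if it falls outside
        # the window that began with the last counted push.
        if last_counted is None or t - last_counted > window:
            events += 1
            last_counted = t
    return events


def needs_alert(battery_fraction: float, hours_since_last_data: float) -> bool:
    """Alert rule from the text: battery below 20% or no data for 96 hours."""
    return battery_fraction < 0.20 or hours_since_last_data >= 96


# Pushes at 0, 1, and 2 s merge into one event; 3 s and 10 s each start a new one.
n_events = count_events([0.0, 1.0, 2.0, 3.0, 10.0])
```

Under a different reading of the rule (for example, a window that restarts with every push), the same input would yield fewer events, so the choice of window definition affects the counts.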

Study Time Line

Hand hygiene was monitored continuously by the GMS during three periods: baseline from January through June 2012, rollout from July through September 2012, and intervention from October 2012 through March 2013.

No feedback was provided during the baseline or rollout periods. During the rollout of the GMS, focus groups were conducted with staff and interviews were conducted with administrators to introduce the automated system and to determine preferences for the frequency and format of feedback. Participants discussed how frequently feedback reports should be delivered, who should receive the reports, whether each unit should receive only its own data or that of comparable units and/or the entire hospital, and whether goals should be included. A sample report was shown to the focus groups and interviewees, and their comments and preferences were used to format the reports used in the study. Training sessions were held for managers, department heads, and supervisors to explain how the GMS worked and how to read and interpret the feedback reports.

During the intervention period, feedback was provided monthly to managers and administrators via e-mailed graphical compliance reports. Managers and administrators also had continuous online access to a website where they could create customized reports of hand hygiene events by time or dispenser location, if they chose to do so. The IP manager periodically held informal conversations with unit managers about the reports, encouraging them to share the data with their staff.

At the end of the intervention period, focus groups were repeated with staff and managers separately to gauge their response to the GMS and to assess the extent to which the compliance reports were actually used. All inpatient units and outpatient areas were included in the study except the rehabilitation unit, perioperative areas (operating rooms, postanesthetic care unit, and day surgery unit), and the interventional radiology unit. The rehabilitation unit was being moved off-site. Reports were not generated for the perioperative areas and the interventional radiology unit because census data were inconsistently available for those areas during the study period.

Outcome Measures

Two outcome measures were computed by the GMS server. First, the number of hand hygiene events per patient-hour or per patient-visit was computed using hourly unit-level census data for inpatient units and daily unit-level census data for outpatient areas. Census data were obtained from the preexisting admission/discharge/transfer (ADT) system. In general, the ADT system counted patients in the census when they arrived on the unit; however, patients who were prescheduled for admission (for example, to the orthopedic joint center) could be entered into the census as early as 4 a.m. regardless of the time of their arrival. In the ADT system, inpatients who underwent a procedure or test in an outpatient area were not included in the outpatient unit’s census.
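As a rough sketch of the first outcome measure, the rate for an inpatient unit can be computed by dividing total dispenser events by total patient-hours, where each patient counted in an hourly census contributes one patient-hour. The function name and the sample numbers are hypothetical; how the GMS server actually aggregates the data is not described in detail.

```python
from typing import Optional, Sequence


def events_per_patient_hour(
    hourly_events: Sequence[int], hourly_census: Sequence[int]
) -> Optional[float]:
    """Total dispenser events divided by total patient-hours for one unit."""
    if len(hourly_events) != len(hourly_census):
        raise ValueError("events and census must cover the same hours")
    # One patient on the census for one hour contributes one patient-hour.
    patient_hours = sum(hourly_census)
    if patient_hours == 0:
        return None  # the rate is undefined when no patients were present
    return sum(hourly_events) / patient_hours


# Hypothetical three-hour window: 30 events over 25 patient-hours.
rate = events_per_patient_hour([10, 12, 8], [8, 8, 9])
```

The same calculation applies to outpatient areas if the denominator is replaced by the daily count of patient-visits. Any census error, such as patients entered hours before arrival, inflates the denominator and lowers the computed rate.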

Second, a hand hygiene compliance index (HHCI) was computed by dividing the number of actual hand hygiene events by the number of events to be expected after adjusting for patient census, patient-to-nurse ratio, and unit type (that is, ICU, medical/surgical ward, or ED) on the basis of a previous study.19 The purpose of the earlier study was to measure the number of hand hygiene opportunities in acute care hospitals. Steed et al. observed 437 hours of patient care in general medical ICUs, medical/surgical wards, and EDs at a large tertiary care teaching hospital and a small community hospital, using the WHO “My 5 Moments for Hand Hygiene” monitoring methodology.20,21 Estimates from that study were used to calculate the HHCI denominator: hand hygiene opportunities. Dispenser counts formed the HHCI numerator: hand hygiene events. The HHCI ranges from 0 to 100; an HHCI of 50 indicates that hand hygiene has been performed 50% of the times it could be expected on a given unit during a given time frame.
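The index itself reduces to a simple ratio, sketched below. The expected number of opportunities is taken as an input because the adjustment for census, patient-to-nurse ratio, and unit type comes from the earlier study and is not reproduced here. The function name is hypothetical, and the sketch does not cap the result at 100, since the text does not say how the system handles events that exceed expected opportunities.

```python
def hhci(actual_events: float, expected_opportunities: float) -> float:
    """Hand hygiene compliance index: events as a percentage of expected opportunities."""
    if expected_opportunities <= 0:
        raise ValueError("expected opportunities must be positive")
    return 100.0 * actual_events / expected_opportunities


# Example from the text: hand hygiene performed half of the expected times.
index = hhci(actual_events=50, expected_opportunities=100)
```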

In a separate validation study, the HHCI was highly correlated with hand hygiene compliance judged by trained observers who reviewed 1,511 hours of video surveillance of 26 patients on a medical unit (r = 0.976).22 Because the HHCI had not been validated in outpatient areas or specialty units such as childbirth or psychiatry, feedback was provided to these areas in terms of hand hygiene events per patient-visit or per patient-hour. For all other units, feedback was provided in terms of the HHCI.

Units were defined geographically; subunits (for example, a four-bed medical day care unit housed on the telemetry floor) were not given separate reports. For the purposes of computing the HHCI, the ICU was considered an ICU unit type, even though it houses a 60/40 mix of ICU and medical/surgical patients. Patient-to-nurse ratios for day and night shifts were estimated by the manager of each unit at the beginning of the study.

Study Design and Analysis

We employed an interrupted time series design. Compliance was compared for two study phases defined a priori: baseline and intervention. The rollout period was designated as a phase-in period. Differences in hospital-level HHCI for the two study phases were compared using segmented regression analysis. Differences in unit-level HHCI and hand hygiene events per patient-hour or patient-visit for the two study phases were compared by paired t-test or Wilcoxon signed rank test. All tests were two-tailed, and the significance level was set at p ≤ .05. Data were analyzed using SAS 9.3 (SAS Institute Inc., Cary, North Carolina). The focus groups and interviews were recorded and summarized by two members of the study team (an epidemiologist [B.C.] and a registered nurse [L.J.C.], each of whom was experienced in facilitating focus groups).

Results

Rollout Focus Groups and Training

During the rollout period, 7 administrators were interviewed, and 19 staff from all shifts participated in two focus groups. Input from the participants was used to determine the frequency and format of hand hygiene reports. Three one-hour training sessions were attended by 16 managers, department heads, and supervisors—or roughly one quarter of all those who would receive the reports.

Feedback

Hand hygiene reports were delivered via e-mail directly to managers and administrators during the first week of each month from October 2012 through March 2013. Reports were delivered to 67 unit managers, department directors, physician section chiefs, and executives. The reports presented the most recent six months of unit-level data by month in graphical and tabular formats. The graphs incorporated a histogram and a run chart. The graphs included a baseline, which was the average compliance in the months preceding those in the report, and a goal line, which was set at 10% above the baseline compliance for that unit. Managers received reports for their unit without comparison to other units or to the hospital as a whole. Senior administrators received reports for the hospital and for every unit for which they were responsible. Managers were then expected to provide results to their staff in whatever manner they determined to be most relevant to their unit.

Hand Hygiene Compliance

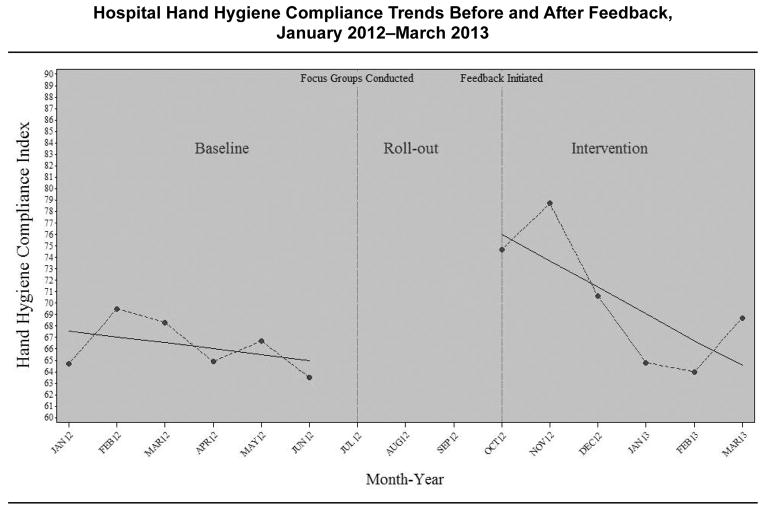

Between January 2012 and March 2013, the GMS recorded 1,778,852 hand hygiene events. Figure 1 illustrates the trends in the HHCI for the hospital before and after feedback. The monthly HHCI ranged from 63.5% to 69.5% before the feedback, and from 64.0% to 78.7% after the feedback began. Hand hygiene compliance did not change significantly from month to month during the baseline period (baseline trend, p = .55). Compliance significantly increased during the feedback period (p = .03) but was not sustained (that is, monthly trend after the feedback compared with the baseline trend; p = .16).

Figure 1.

For 1,778,852 hand hygiene events recorded by the group monitoring system, the monthly hand hygiene compliance index ranged from 63.5 to 69.5 before the feedback and from 64.0 to 78.7 after the feedback began. Hand hygiene compliance did not change significantly from month to month during the baseline period (baseline trend, p = .55).

The differences in the HHCI by unit are summarized in Table 1. In all units except the ED, the HHCI was significantly higher after than before the feedback (mean difference, 4.9; standard deviation [SD], 4.3; p = .02).

Table 1.

Hand Hygiene Compliance Index Values by Unit During Baseline and Intervention Periods, January 2012–March 2013

| Unit | HHCI, Baseline Period (January 2012–June 2012) | HHCI, Intervention Period (October 2012–March 2013) | Difference* |
|---|---|---|---|
| Critical Care | 62.6 | 71.8 | 9.2 |
| Joint Center | 47.2 | 54.9 | 7.7 |
| Medical/Surgical 1 | 82.7 | 85.9 | 3.2 |
| Medical/Surgical 2 | 55.4 | 63.3 | 7.9 |
| Step-down | 27.4 | 34.0 | 6.6 |
| Telemetry | 60.0 | 63.0 | 3.0 |
| Emergency | 79.7 | 76.5 | -3.2 |

\* Mean difference, 4.9; standard deviation, 4.3; paired t-test, p = .02.
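The summary statistics in the Table 1 footnote can be reproduced from the tabulated values. The sketch below uses only the Python standard library and computes the paired t statistic by hand; the analysis reported in this article was run in SAS, so this is an independent check on rounded values rather than the original code.

```python
import math
import statistics

# HHCI values from Table 1, in row order (Critical Care through Emergency).
baseline = [62.6, 47.2, 82.7, 55.4, 27.4, 60.0, 79.7]
intervention = [71.8, 54.9, 85.9, 63.3, 34.0, 63.0, 76.5]

# Paired design: one difference per unit.
diffs = [post - pre for pre, post in zip(baseline, intervention)]

mean_diff = statistics.mean(diffs)
sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
t_stat = mean_diff / (sd_diff / math.sqrt(len(diffs)))

print(round(mean_diff, 1), round(sd_diff, 1), round(t_stat, 2))
# prints: 4.9 4.3 3.03
```

With 7 units there are 6 degrees of freedom, for which the two-tailed critical value at the .05 level is about 2.45. A t statistic near 3.03 exceeds it, in line with the reported p = .02.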

The differences in the number of hand hygiene events per patient-hour or per patient-visit by unit are summarized in Table 2. Hand hygiene frequency significantly increased in inpatient areas (median difference, 0.17 events/patient-hour; interquartile range = 0.14; p = .008) but remained unchanged in outpatient areas (mean difference, 0.40 events/patient-visit; SD, 0.83; p = .29). In perioperative areas that did not receive feedback, the number of hand hygiene events per patient-visit did not change significantly (mean difference, -0.20 events/patient-visit; SD, 0.31; p = .38; data not shown).

Table 2.

Hand Hygiene Frequency by Unit During Baseline and Intervention Periods, January 2012–March 2013

| Inpatient Areas | Events per Patient-Hour, Baseline Period (January 2012–June 2012) | Events per Patient-Hour, Intervention Period (October 2012–March 2013) | Difference* |
|---|---|---|---|
| Childbirth | 1.12 | 1.26 | 0.14 |
| Critical Care | 3.47 | 3.98 | 0.51 |
| Joint Center | 1.17 | 1.36 | 0.19 |
| Medical/Surgical 1 | 1.27 | 1.32 | 0.05 |
| Medical/Surgical 2 | 1.39 | 1.58 | 0.19 |
| Psychiatry | 0.21 | 0.22 | 0.01 |
| Step-down | 0.85 | 1.06 | 0.21 |
| Telemetry | 1.49 | 1.56 | 0.07 |

| Outpatient Areas | Events per Patient-Visit, Baseline Period | Events per Patient-Visit, Intervention Period | Difference† |
|---|---|---|---|
| Computerized Tomography | 7.51 | 7.17 | -0.34 |
| Emergency | 10.05 | 9.63 | -0.42 |
| Endoscopy | 7.11 | 7.96 | 0.85 |
| Lab/Phlebotomy | 0.62 | 0.86 | 0.24 |
| Magnetic Resonance Imaging | 5.08 | 6.89 | 1.81 |
| Mammography | 0.95 | 1.20 | 0.25 |

\* Median difference, 0.17 events/patient-hour; interquartile range, 0.14; Wilcoxon signed rank test, p = .008.

† Mean difference, 0.40 events/patient-visit; standard deviation, 0.83; paired t-test, p = .29.
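The inpatient p-value can be checked with an exact Wilcoxon signed rank calculation on the eight tabulated differences. The sketch below enumerates every possible assignment of signs to the ranks, which is feasible for small samples. It assumes that an exact two-sided test was used; the article does not state whether the software applied an exact or an approximate method.

```python
from itertools import product
from typing import List, Sequence


def midranks(values: Sequence[float]) -> List[float]:
    """Rank absolute values from smallest to largest, averaging ranks for ties."""
    ordered = sorted(values)
    ranks = []
    for v in values:
        positions = [i + 1 for i, a in enumerate(ordered) if a == v]
        ranks.append(sum(positions) / len(positions))
    return ranks


def wilcoxon_exact_p(diffs: Sequence[float]) -> float:
    """Two-sided exact Wilcoxon signed rank p-value by enumerating sign patterns."""
    nonzero = [d for d in diffs if d != 0]  # zero differences are dropped
    ranks = midranks([abs(d) for d in nonzero])
    total = sum(ranks)
    w_plus = sum(r for r, d in zip(ranks, nonzero) if d > 0)
    observed = min(w_plus, total - w_plus)
    extreme = 0
    patterns = 0
    # Under the null hypothesis each rank is equally likely to be + or -.
    for signs in product((0, 1), repeat=len(ranks)):
        w = sum(r for r, s in zip(ranks, signs) if s)
        patterns += 1
        if min(w, total - w) <= observed:
            extreme += 1
    return extreme / patterns


# Inpatient differences from Table 2 (events per patient-hour).
inpatient_diffs = [0.14, 0.51, 0.19, 0.05, 0.19, 0.01, 0.21, 0.07]
p_value = wilcoxon_exact_p(inpatient_diffs)
```

Because all eight inpatient differences are positive, only 2 of the 256 sign patterns are as extreme as the observed one, giving p = 2/256, or about .0078, which agrees with the reported p = .008.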

Postintervention Focus Groups

At the end of the intervention period, 9 staff and 11 managers, including nurses, nurse educators, nurse managers, laboratory technicians, radiology staff, respiratory therapists, and environmental service staff, participated in four focus groups, which we conducted to assess how the compliance reports were used. Focus group members were invited to represent various units and positions within the hospital. The focus groups yielded several findings. First, information in the reports was not disseminated widely. All managers reported having provided the data to their staff, either in staff meetings, via e-mail, or by posting the reports on the unit. However, 7 of 9 staff reported having never or inconsistently received the data. Nurses working the night shift had not received any feedback. A minority of participants (9 of 20) were aware of their unit’s hand hygiene compliance goal.

Second, staff and managers lacked confidence in the HHCI. Many focus group participants were unfamiliar with the WHO 5 Moments for Hand Hygiene on which the expected events were based. As the reported compliance was much lower than the > 95% previously reported, managers and staff questioned the accuracy of the GMS reports. Many managers had difficulty reading and interpreting the reports.

Third, there was little evidence that the reports were used to drive improvement. All the participants in the postintervention focus groups thought that receiving feedback about hand hygiene compliance was essential to improvement, and most agreed that electronic monitoring was more accurate than direct observations. However, few respondents (8/20) had discussed obstacles to improvement with their coworkers because the system was new and they were puzzled by the data reports. Few (6/20) discussed action plans with coworkers because they stated that the reports did not provide data on individual performance, nor contextual information such as whether or not hand hygiene was performed at the correct time or for the correct reason. None of the managers had accessed the website where customizable reports were available by unit/dispenser and by date/hour.

On the basis of these focus group findings and input from the IP manager, several changes were made after the study closed. The hospital’s infection prevention training materials were revised to include the WHO methodology, and an illustration of the 5 Moments for Hand Hygiene was posted as a screen saver on computers in all clinical areas. The hand hygiene report format was simplified. The histogram was removed, leaving only the run chart. The baseline was removed from the run chart, the column names in the summary table were clarified (for example, “Δ” was reworded to “change”), and 10 lines of text intended to explain the report were removed because they were not found to be helpful. Generic tips for hand hygiene improvement were added to the hand hygiene reports each month to stimulate discussion and action plans. One such generic tip was “Identify one barrier to performing hand hygiene on your unit and make an action plan to remove it.”

Discussion

Hand hygiene compliance on inpatient units at the study hospital improved with the initiation of feedback from the GMS. However, the increase was small (median difference, 0.17 events per patient-hour, which is equivalent to 4 more hand hygiene events per inpatient per day), and the clinical significance of this increase is unknown. McGuckin, Waterman, and Govednik suggest that there are 144 hand hygiene opportunities per day for ICU patients and 72 per day for non-ICU ward patients.23 On the basis of this estimate, we achieved only modest effects.
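The arithmetic behind this comparison is shown below as a brief worked example. The variable names are illustrative; the inputs are the median increase reported here and the daily opportunity estimates attributed to McGuckin, Waterman, and Govednik.

```python
HOURS_PER_DAY = 24

# Median increase in inpatient units, in events per patient-hour.
median_increase_per_patient_hour = 0.17

# Convert the hourly increase to additional events per inpatient per day.
extra_events_per_day = median_increase_per_patient_hour * HOURS_PER_DAY

# Estimated hand hygiene opportunities per patient per day (reference 23).
opportunities_per_day = {"ICU": 144, "non-ICU ward": 72}

# Share of the estimated daily opportunities covered by the increase.
share_of_opportunities = {
    unit: extra_events_per_day / opportunities
    for unit, opportunities in opportunities_per_day.items()
}

for unit, share in share_of_opportunities.items():
    print(f"{unit}: {share:.1%} of estimated daily opportunities")
```

The increase works out to about 4 additional events per inpatient per day, which is roughly 3% of the estimated daily opportunities for an ICU patient and roughly 6% for a non-ICU ward patient.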

We identified five studies of hand hygiene compliance in which feedback to individuals or groups was the sole or primary intervention. The two studies that used automated hand hygiene monitoring systems that delivered individual feedback reported different outcomes.24,25 Fisher and colleagues randomly assigned 119 HCP to receive individual feedback weekly from a system composed of ultrasound transmitters in ABHR dispensers and wireless receiver tags worn by staff.24 At the end of the 10-week intervention, hand hygiene compliance was 6.8% higher among HCP who wore the tags compared with those who did not. However, the gains in compliance occurred during the early phase of the study, when HCP received reminder beeps, and not during the later phase, when weekly feedback commenced without audible beeps.24 Marra et al., using data from automated ABHR dispensers in each patient room, provided individual feedback to nurses in a step-down unit twice weekly and did not provide feedback to nurses in a control unit.25 During the six-month study, hand hygiene compliance in the intervention and control units was similar (41.1 versus 35.8 events per patient-day, respectively, p = .56).

The remaining three studies, which used automated hand hygiene monitoring systems to deliver group feedback, all showed improvement in hand hygiene compliance. Armellino et al. used continuous remote video auditing to provide HCP in a medical ICU real-time feedback via two centrally located displays updated every 10 minutes, end-of-shift e-mail summaries, and weekly performance reports.26 Rates were provided by room, shift, and provider type, but individual staff members were not identified. The aggregate compliance increased from < 10% during the 16-week baseline period to > 80% within the first 4 weeks of feedback. Hand hygiene compliance was maintained for the following 18 months of the study. When the study was replicated in a surgical ICU at the same hospital, similar results were obtained.27 Such an intervention, however, is extremely costly and unlikely to be sustainable or generalizable to other settings. Morgan et al. compared hand hygiene compliance using data from automated dispensers before and after providing feedback to groups of HCP in two ICUs, using colorful posters.28 Automated dispenser counts increased significantly in both units after the feedback (differences of 22.7% and 7.3% per unit, p < .001). These studies of individual and group feedback cannot be directly compared because of differences in the automated monitoring systems and feedback delivery methods, but group-level real-time feedback appeared to be effective.

Meeting Implementation Challenges

As described above, we encountered several challenges during GMS implementation. First, determining the number of expected hand hygiene events for the HHCI posed some difficulty. The HHCI can be easily interpreted as the percentage of times that hand hygiene was performed as expected. Because it adjusts for unit type, patient census, and staffing ratios, the HHCI enables a fair comparison of hand hygiene rates across units with different patient acuities and therefore different numbers of opportunities. However, data reported as events per patient-hour or per patient-visit may be less cumbersome to obtain and may be more intuitive to HCP. Had we used the benchmarks for reporting suggested by McGuckin, Waterman, and Govednik (6 hand hygiene opportunities/ICU patient-hour and 3/non-ICU patient-hour),23 the message to HCP would not have changed drastically. For example, during the intervention period, compliance in the step-down unit would have been reported as 1 event out of 3 opportunities rather than as an HHCI of 34. However, feedback using this patient-hour measure would not have been sensitive to differences in patient acuity across units. For example, HCP on Medical/Surgical Units 1 and 2 performed a similar number of hand hygiene events during the intervention period (1.3 and 1.6 events per patient-hour, respectively), but their compliance indices were very different (86 versus 63). This was because patients on Unit 1 required less-intense nursing care (patient-to-nurse ratio approximately 6:1 versus 4:1 on Unit 2), so there were fewer opportunities for hand hygiene on Unit 1.
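The contrast between the two reporting approaches can be illustrated with the intervention-period values from Tables 1 and 2. The sketch below applies the fixed hourly benchmarks to three units and sets the result beside the reported HHCI. The function name is hypothetical, and treating the step-down unit as a non-ICU unit is an assumption that follows the "1 event out of 3 opportunities" example in the text.

```python
# Hourly benchmarks derived from 144 and 72 opportunities per patient-day.
BENCHMARK_PER_PATIENT_HOUR = {"ICU": 6, "non-ICU": 3}


def benchmark_compliance(events_per_patient_hour: float, unit_type: str) -> float:
    """Events as a percentage of a fixed hourly benchmark for the unit type."""
    return 100 * events_per_patient_hour / BENCHMARK_PER_PATIENT_HOUR[unit_type]


# Intervention-period values: (events per patient-hour, reported HHCI).
units = {
    "Step-down": (1.06, 34.0),
    "Medical/Surgical 1": (1.32, 85.9),
    "Medical/Surgical 2": (1.58, 63.3),
}

# Assumption: all three units are treated as non-ICU for the benchmark.
comparison = {
    name: (round(benchmark_compliance(rate, "non-ICU"), 1), reported_hhci)
    for name, (rate, reported_hhci) in units.items()
}

for name, (fixed, reported_hhci) in comparison.items():
    print(f"{name}: fixed benchmark {fixed}%, HHCI {reported_hhci}")
```

For the step-down unit the two measures nearly coincide (about 35% versus an HHCI of 34). For the two medical/surgical units the fixed benchmark ranks Unit 2 above Unit 1, whereas the HHCI, which adjusts for staffing and census, ranks Unit 1 well above Unit 2.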

Also as noted, hand hygiene rates computed by the GMS were lower than previously reported at the facility, leading to considerable consternation among staff and managers and resulting in lack of confidence in the new system. To promote confidence in an automated system, facilities considering a change from direct observation to such a system should prepare staff for a lack of correlation between direct observation and electronic monitoring devices.29

Finally, there were inconsistencies in the GMS reports reaching some groups of HCP, particularly those on the night shift. It may be necessary to deliver unit-based reports directly to staff instead of depending on managers to disseminate the data. Assanasen, Edmond, and Bearman found that hand hygiene did not improve when performance reports were e-mailed quarterly to nurse managers and physician directors, but when additional feedback was provided directly to staff via posters, hand hygiene compliance improved by as much as 36%.30

Without contextual information about noncompliance, managers at the study facility found it difficult to know how to target their improvement efforts. However, they did not use the full capability of the GMS. To determine when and where hand hygiene compliance was low, the managers could have designed reports comparing compliance during periods of peak unit activity versus low unit activity, or they could have identified the location of dispensers that were seldom used. To determine which indications were being missed, they could have compared compliance at dispensers mounted in doorways (WHO moments 1, 4, and 5) to dispensers mounted beside patient beds (WHO moments 2 and 3). Supplementing reports from the automated GMS with direct observations or surveys in low-performing units could also provide the contextual information needed to guide improvement efforts.6,31 Clearly, however, managers also need training in how to maximize use of data generated from automated systems.

Reasons for Minimal Impact

The initial increase in hand hygiene compliance we observed in inpatient areas was not sustained, and there was no significant change in outpatient areas. This may have been a consequence of other simultaneous administrative changes occurring in the institution and/or the fact that use of the data and feedback by clinical staff was minimal. It is possible that the effect of feedback may be greater in hospitals with lower baseline compliance than that recorded at this hospital because there is more room for improvement, or that the feedback effect was modest because it was not individualized. The model of actionable feedback suggests that the individualization of feedback is nearly as important as its timeliness, and is more important than nonpunitive or customizable feedback.17 Although the IP manager actively promoted hand hygiene adherence, evidence suggests that to sustain improvement in hand hygiene compliance, facilities must involve staff in planning interventions and must ensure that executives explicitly support hand hygiene improvement,32,33 possibly by including financial bonuses or other incentives.34,35

One important consideration is that the model of actionable feedback suggests that feedback must be individualized. The monitoring system used in this study provided only group feedback (for example, by unit, dispenser, day). We purposely selected this system for the trial because our goal was to create a team culture, without singling out individuals, in which the entire unit or work group “owned” responsibility for hand hygiene. These initial study results do not permit us to determine whether the minimal impact of the feedback was due to the fact that the staff as a group did not receive it in a meaningful way or that individuals were not identified. As noted in a recent systematic review of automated hand hygiene monitoring systems, the relative effectiveness of individual versus group feedback, which, we believe, may vary on the basis of the safety culture, is one of a number of important issues regarding electronic monitoring that must be further tested.36

Limitations

This study has several limitations. First, the study period was brief for a behavioral intervention. Although the use of an interrupted time series design minimizes the threat of temporal confounding and regression to the mean, a longer follow-up period would have accounted for seasonal effects and would have allowed a more thorough assessment of the institutional response to the first six months of data. Second, the intervention was not randomly assigned. Although the use of perioperative areas as a comparison group strengthens the assertion that the feedback itself was responsible for the improvement, it is possible that the perioperative units differed from the other units in a systematic way that influenced the outcome. Third, it is not clear how many HCP in the intervention units actually received the feedback. Because this would have underestimated the effect, our results are conservative. Fourth, the intervention was performed at a single community hospital, and, therefore, the results may not be generalizable. Finally, the HHCI calculations may have either over- or underestimated actual hand hygiene compliance. The ICU housed a 60/40 mix of ICU and medical/surgical patients, but an ICU benchmark was used. Hence, it is likely that the number of expected events for our patient mix was overestimated. On the orthopedic unit, patients prescheduled for admission were entered into the census several hours before they arrived, which could increase the expected number of hand hygiene events and lead to an underestimate of compliance. In contrast, outpatient areas did not count inpatients undergoing a procedure in their area, which could decrease the expected number of hand hygiene events and thereby lead to an overestimate of compliance. Including distinct subunits within larger geographically defined units may have increased or decreased the expected number of hand hygiene events for the unit as a whole. 
Estimates of patient-to-nurse ratios provided by the unit managers were subsequently found to be different from actual patient-to-nurse ratios determined by payroll records; this may have increased or decreased the expected number of hand hygiene events. Not all units were monitored or provided with feedback, which may have over- or underestimated the hospital-level HHCI. Freestanding ABHR pump bottles and pocket dispensers were not monitored, likely resulting in an underestimation of compliance in the operating rooms and psychiatric unit. Because the data sources for hand hygiene events and census and staffing estimates remained constant across study periods, any over- or underestimation of rates would have been consistent, allowing us to compare changes in rates between periods.
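The direction of these biases can be illustrated with a small worked example. It is a minimal sketch that assumes the index is the ratio of observed to expected events, with expected events taken as census patient-hours multiplied by a benchmark rate. That formula, the function name, and every number below are assumptions made for illustration; they are not the study's data or the vendor's algorithm.

```python
def hhci(observed_events, patient_hours, benchmark_per_patient_hour):
    """Assumed index: observed events as a percentage of expected events."""
    expected = patient_hours * benchmark_per_patient_hour
    if expected <= 0:
        raise ValueError("expected events must be positive")
    return 100.0 * observed_events / expected


OBSERVED = 900    # hypothetical dispenser events recorded on a unit
BENCHMARK = 2.5   # hypothetical expected events per patient-hour

# Census that matches the patients actually present on the unit.
accurate = hhci(OBSERVED, 480, BENCHMARK)

# Census inflated by patients entered hours before they arrive:
# more expected events, so the same observed count yields a lower index.
inflated = hhci(OBSERVED, 600, BENCHMARK)

# Census that omits patients treated in the area:
# fewer expected events, so the index is overstated.
deflated = hhci(OBSERVED, 400, BENCHMARK)

print(accurate, inflated, deflated)
```

Under these assumptions, the same 900 observed events give an index of 75 with the accurate census, 60 with the inflated census, and 90 with the deflated census. If the census error is the same in every study period, the change in the index between periods remains comparable even though its absolute level is biased.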

Summary and Conclusions

Feedback of hand hygiene rates via an automated GMS was associated with minimally improved compliance in the short term. Despite limitations, this real-life field test of an automated GMS for hand hygiene provides detailed information for other facilities considering implementation. Reporting implementation “failures” in addition to “successes” can help others draw the appropriate lessons to plan more effective strategies before implementing their own interventions—in this case, hand hygiene electronic monitoring systems. A substantial investment of human capital is required to fully exploit the benefits of a GMS. A team of champions is likely needed to communicate information about the GMS, answer questions, engender confidence in the automated data, ensure consistent feedback to frontline staff, and optimize use of the data for improvement. Ongoing vendor support is needed to establish and maintain direct data feeds of census data. Leadership support and training in use of the data are essential to sustain gains in compliance. To achieve buy-in, HCP and managers accustomed to hand hygiene being monitored at room entry and exit should be trained in the WHO 5 Moments. Data reported as events per patient-hour or patient-visit may be as meaningful to HCP as an index; however, an index allows comparison across units with different patient acuities. Further research is needed to validate the number of times that hand hygiene can be expected in multiple settings with different patient populations.

Acknowledgments

Funding and equipment for this research were provided by DebMed. The data used in the study were collected on automated DebMed equipment. The funding source had no role or input into the design of the study, analysis, or interpretation of data. Laurie J. Conway was supported by a training grant funded by the National Institute of Nursing Research, T32NR013454 Training in Interdisciplinary Research to Prevent Infections. The authors thank William Fenstermaker and Mimeya Technology for acquiring the data for this study, Daniel English for overseeing the installation of the group monitoring system, and the clinicians and staff of Cooley Dickinson Hospital.

Contributor Information

Laurie J. Conway, Doctoral Candidate, Columbia University School of Nursing, New York City.

Linda Riley, Manager, Department of Infection Prevention and Control, Cooley Dickinson Hospital, Northampton, Massachusetts.

Lisa Saiman, Professor of Clinical Pediatrics, Department of Pediatrics, Columbia University Medical Center, New York City, and Hospital Epidemiologist, Department of Infection Prevention and Control, Morgan Stanley Children’s Hospital of New York-Presbyterian Hospital, New York City.

Bevin Cohen, Student at Columbia University School of Nursing.

Paul Alper, Vice President of Strategy and Business Development, DebMed USA, LLC, Charlotte, North Carolina.

Elaine L. Larson, Associate Dean for Research and Professor of Therapeutic and Pharmaceutical Research, School of Nursing, and Professor of Epidemiology, Mailman School of Public Health, Columbia University, New York City.

References

- 1. Larson E. A causal link between handwashing and risk of infection? Examination of the evidence. Infect Control. 1988;9(1):28–36.

- 2. Larson E. Skin hygiene and infection prevention: More of the same or different approaches? Clin Infect Dis. 1999;29(5):1287–1294. doi: 10.1086/313468.

- 3. Pittet D, et al. Evidence-based model for hand transmission during patient care and the role of improved practices. Lancet Infect Dis. 2006;6(10):641–652. doi: 10.1016/S1473-3099(06)70600-4.

- 4. Allegranzi B, Pittet D. Role of hand hygiene in healthcare-associated infection prevention. J Hosp Infect. 2009;73(4):305–315. doi: 10.1016/j.jhin.2009.04.019.

- 5. Erasmus V, et al. Systematic review of studies on compliance with hand hygiene guidelines in hospital care. Infect Control Hosp Epidemiol. 2010;31(3):283–294. doi: 10.1086/650451.

- 6. The Joint Commission. Measuring Hand Hygiene Adherence: Overcoming the Challenges. Oak Brook, IL: Joint Commission Resources; 2009. [Accessed Jul 27, 2014]. http://www.jointcommission.org/assets/1/18/hh_monograph.pdf.

- 7. Boyce JM, Pittet D; Healthcare Infection Control Practices Advisory Committee; HICPAC/SHEA/APIC/IDSA Hand Hygiene Task Force. Guideline for Hand Hygiene in Health-Care Settings. Recommendations of the Healthcare Infection Control Practices Advisory Committee and the HICPAC/SHEA/APIC/IDSA Hand Hygiene Task Force. Society for Healthcare Epidemiology of America/Association for Professionals in Infection Control/Infectious Diseases Society of America. MMWR Recomm Rep. 2002 Oct 25;51(RR-16):1–45.

- 8. World Health Organization. WHO Guidelines on Hand Hygiene in Health Care. First Global Patient Safety Challenge: Clean Care Is Safer Care. 2009. [Accessed Jul 27, 2014]. http://whqlibdoc.who.int/publications/2009/9789241597906_eng.pdf.

- 9. Ivers N, et al. Audit and feedback: Effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2012 Jun 13;6:CD000259. doi: 10.1002/14651858.CD000259.pub3.

- 10. Braun BI, Kusek L, Larson E. Measuring adherence to hand hygiene guidelines: A field survey for examples of effective practices. Am J Infect Control. 2009;37(4):282–288. doi: 10.1016/j.ajic.2008.09.002.

- 11. Haas JP, Larson EL. Measurement of compliance with hand hygiene. J Hosp Infect. 2007;66(1):6–14. doi: 10.1016/j.jhin.2006.11.013.

- 12. Boyce JM. Measuring healthcare worker hand hygiene activity: Current practices and emerging technologies. Infect Control Hosp Epidemiol. 2011;32(10):1016–1028. doi: 10.1086/662015.

- 13. Larson EL, et al. A multifaceted approach to changing handwashing behavior. Am J Infect Control. 1997;25(1):3–10. doi: 10.1016/s0196-6553(97)90046-8.

- 14. World Health Organization. Guide to Implementation: A Guide to the Implementation of the WHO Multimodal Hand Hygiene Improvement Strategy. 2009. [Accessed Jul 27, 2014]. http://www.who.int/gpsc/5may/Guide_to_Implementation.pdf.

- 15. Kirkland KB. Improving healthcare worker hand hygiene: Show me more! Qual Saf Health Care. 2009;18(6):419. doi: 10.1136/qshc.2009.036798.

- 16. Larson EL, et al. Feedback as a strategy to change behaviour: The devil is in the details. J Eval Clin Pract. 2013;19(2):230–234. doi: 10.1111/j.1365-2753.2011.01801.x.

- 17. Hysong SJ, Best RG, Pugh JA. Audit and feedback and clinical practice guideline adherence: Making feedback actionable. Implement Sci. 2006;1:9. doi: 10.1186/1748-5908-1-9.

- 18. Ellingson K, et al. Healthcare personnel perceptions of hand hygiene monitoring technology. Infect Control Hosp Epidemiol. 2011;32(11):1091–1096. doi: 10.1086/662179.

- 19. Steed C, et al. Hospital hand hygiene opportunities: Where and when (HOW2)? The HOW2 Benchmark Study. Am J Infect Control. 2011;39(1):19–26. doi: 10.1016/j.ajic.2010.10.007.

- 20. Sax H, et al. ‘My five moments for hand hygiene’: A user-centred design approach to understand, train, monitor and report hand hygiene. J Hosp Infect. 2007;67(1):9–21. doi: 10.1016/j.jhin.2007.06.004.

- 21. Sax H, et al. The World Health Organization hand hygiene observation method. Am J Infect Control. 2009;37(10):827–834. doi: 10.1016/j.ajic.2009.07.003.

- 22. Diller T, et al. Electronic hand hygiene monitoring for the WHO 5-moments method. Antimicrob Resist Infect Control. 2013;2(Suppl 1):O16.

- 23. McGuckin M, Waterman R, Govednik J. Hand hygiene compliance rates in the United States—A one-year multicenter collaboration using product/volume usage measurement and feedback. Am J Med Qual. 2009;24(3):205–213. doi: 10.1177/1062860609332369.

- 24. Fisher DA, et al. Automated measures of hand hygiene compliance among healthcare workers using ultrasound: Validation and a randomized controlled trial. Infect Control Hosp Epidemiol. 2013;34(9):919–928. doi: 10.1086/671738.

- 25. Marra AR, et al. Controlled trial measuring the effect of a feedback intervention on hand hygiene compliance in a step-down unit. Infect Control Hosp Epidemiol. 2008;29(8):730–735. doi: 10.1086/590122.

- 26. Armellino D, et al. Using high-technology to enforce low-technology safety measures: The use of third-party remote video auditing and real-time feedback in healthcare. Clin Infect Dis. 2012 Jan 1;54(1):1–7. doi: 10.1093/cid/cis201.

- 27. Armellino D, et al. Replicating changes in hand hygiene in a surgical intensive care unit with remote video auditing and feedback. Am J Infect Control. 2013;41(10):925–927. doi: 10.1016/j.ajic.2012.12.011.

- 28. Morgan DJ, et al. Automated hand hygiene count devices may better measure compliance than human observation. Am J Infect Control. 2012;40(10):955–959. doi: 10.1016/j.ajic.2012.01.026.

- 29. Marra AR, et al. Measuring rates of hand hygiene adherence in the intensive care setting: A comparative study of direct observation, product usage, and electronic counting devices. Infect Control Hosp Epidemiol. 2010;31(8):796–801. doi: 10.1086/653999.

- 30. Assanasen S, Edmond M, Bearman G. Impact of 2 different levels of performance feedback on compliance with infection control process measures in 2 intensive care units. Am J Infect Control. 2008;36(6):407–413. doi: 10.1016/j.ajic.2007.08.008.

- 31. Gould DJ, Drey NS, Creedon S. Routine hand hygiene audit by direct observation: Has nemesis arrived? J Hosp Infect. 2011;77(4):290–293. doi: 10.1016/j.jhin.2010.12.011.

- 32. Larson EL, et al. An organizational climate intervention associated with increased handwashing and decreased nosocomial infections. Behav Med. 2000;26(1):14–22. doi: 10.1080/08964280009595749.

- 33. Saint S, et al. The importance of leadership in preventing healthcare-associated infection: Results of a multisite qualitative study. Infect Control Hosp Epidemiol. 2010;31(9):901–907. doi: 10.1086/655459.

- 34. Crews JD, et al. Sustained improvement in hand hygiene at a children’s hospital. Infect Control Hosp Epidemiol. 2013;34(7):751–753. doi: 10.1086/671002.

- 35. Talbot TR, et al. Sustained improvement in hand hygiene adherence: Utilizing shared accountability and financial incentives. Infect Control Hosp Epidemiol. 2013;34(11):1129–1136. doi: 10.1086/673445.

- 36. Ward MA, et al. Automated and electronically assisted hand hygiene monitoring systems: A systematic review. Am J Infect Control. 2014;42(5):472–478. doi: 10.1016/j.ajic.2014.01.002.