Abstract

Compromised social-perceptual ability has been proposed to contribute to social dysfunction in neurodevelopmental disorders. While such impairments have been identified in Williams syndrome (WS), little is known about emotion processing in auditory and multisensory contexts. Employing a multidimensional approach, individuals with WS and typical development (TD) were tested for emotion identification across fearful, happy, and angry multisensory and unisensory face and voice stimuli. Autonomic responses were monitored in response to unimodal emotion. The WS group was administered an inventory of social functioning. Behaviorally, individuals with WS relative to TD demonstrated impaired processing of unimodal vocalizations and emotionally incongruent audiovisual compounds, reflecting a generalized deficit in social-auditory processing in WS. The TD group outperformed their counterparts with WS in identifying negative (fearful and angry) emotion, with similar between-group performance with happy stimuli. Mirroring this pattern, electrodermal activity (EDA) responses to the emotional content of the stimuli indicated that whereas those with WS showed the highest arousal to happy, and lowest arousal to fearful stimuli, the TD participants demonstrated the contrasting pattern. In WS, more normal social functioning was related to higher autonomic arousal to facial expressions. Implications for underlying neural architecture and emotional functions are discussed.

Keywords: Emotion, Williams syndrome, Multisensory integration, Autonomic nervous system, Electrodermal activity, Social functioning, Facial expression, Vocalization

1. Introduction

Affective communication lies at the heart of successful social interactions and thus inter-personal relationships. Impairments in processing emotional expressions have been suggested to significantly contribute to dysfunctional social behavior and communication in neurodevelopmental disorders, autism spectrum disorder (ASD) being a case in point (Bachevalier & Loveland, 2006). Williams syndrome (WS), resulting from a clearly defined hemideletion of 25-30 genes in the chromosome region 7q11.23 (Ewart et al., 1993; Hillier et al., 2003), is associated with a “hypersocial”, albeit relatively poorly understood, social and emotional phenotype. Individuals with WS display a strong drive to socially engage with others (e.g., an increased propensity to approach strangers), and idiosyncratic language features that facilitate social engagement (e.g., atypically high affective content in speech) (see Järvinen-Pasley et al., 2008; Järvinen, Korenberg, & Bellugi, 2013; Haas & Reiss, 2012, for reviews). Another prominent feature is that social information appears atypically salient to individuals with WS, reflected as an attentional bias toward social over non-social stimuli both in social interaction contexts (e.g., Järvinen-Pasley et al., 2008; Mervis et al., 2003) and experiments (Riby & Hancock, 2008, 2009). These social attributes combine with a full-scale intelligence quotient (IQ) in the mild-to-moderate intellectual disability range (mean of 50-60) (Mervis et al., 2000; Searcy et al., 2004). Notably, there is substantial heterogeneity in skills tapping into both cognitive (perception, attention, spatial construction, and social-emotional ability) (Porter & Coltheart, 2005) and social domains (social approach tendency in conjunction with response inhibition) (Little et al., 2013).

1.1 Social functioning in WS

Perhaps paradoxically, despite hypersociability, profound impairments in reciprocal social communicative and interactive behavior are evident in individuals with WS (Klein-Tasman, Li-Barber, & Magargee, 2011; van der Fluit, Gaffrey, & Klein-Tasman, 2012; Riby et al., 2014), including a lack of interpersonal relationships and subsequent social isolation (Davies, Udwin, & Howlin, 1998; Jawaid, Riby, Owens, White, Tarar, & Schultz, 2011), impacting such individuals’ wellbeing. A growing body of literature has focused on characterizing the nature and extent of social dysfunction evident in WS by utilizing diagnostic instruments commonly employed to screen for ASD. Empirical studies have delineated the socio-communicative impairments in individuals with WS employing the Social Responsiveness Scale (SRS; Constantino & Gruber, 2005). In one such study involving 4- to 16-year-olds with WS, Klein-Tasman et al. (2011) reported more profound impairments in social cognitive domains (communication and cognition) as opposed to pro-social functions (social awareness and motivation). Consistent with this, Riby et al. (2014) reported normative social functioning as measured by the SRS in merely ~17% of their sample of individuals with WS aged 6-36 years; this implies that approximately 80% of the WS population exhibit severe social-communicative deficits. Finally, van der Fluit and colleagues (2012) utilized the SRS in tandem with an experimental social attribution paradigm in 8- to 15-year-olds with WS. On the SRS, the most severe deficits were observed in social cognition, while social motivation appeared unimpaired in those with WS. The results further showed that individuals with WS who performed similarly to typically developing (TD) individuals in interpreting ambiguous social dynamics also demonstrated more normal social functioning in real life.
Notably, these associations remained after controlling for intelligence, suggesting that problems with interpreting social situations may play a unique role in interpersonal difficulties experienced by individuals with WS, beyond intellectual functioning. This profile suggesting more pronounced impairments in social-cognitive over pro-social/motivational functions appears stable across development in WS (cf. Klein-Tasman et al., 2011; Riby et al., 2014; Ng, Järvinen, & Bellugi, 2014).

1.2 Social-perceptual processing in WS

Within the domain of emotion processing, empirical investigations have largely reported deficits in the perception of basic emotions in individuals with WS within both visual and auditory modalities. For example, a study by Plesa-Skwerer et al. (2005) included dynamic face stimuli with happy, sad, angry, fearful, disgusted, surprised, and neutral expressions. The findings showed that chronological age (CA)-matched TD participants were superior at labeling disgusted, neutral, and fearful faces as compared to their counterparts with WS. The performance level of the participants with WS was similar to that of a mental age (MA)-matched group of individuals with mixed developmental disability (DD) conditions. Similarly, a study by Gagliardi et al. (2003) included animated faces displaying neutral, angry, disgusted, afraid, happy, and sad expressions. The results showed that participants with WS relative to CA-matched TD controls demonstrated difficulties particularly with disgusted, fearful, and sad face stimuli, while performance of these individuals was indistinguishable from that of a MA-matched, albeit a significantly younger TD control group. Another study by Plesa-Skwerer et al. (2006) utilized The Diagnostic Analysis of Nonverbal Accuracy – DANVA2 test (Nowicki & Duke, 1994), which includes happy, sad, angry, and fearful expressions, across both vocal and still face stimuli. The results showed that, across modalities, individuals with WS exhibited significantly poorer performance than CA-matched controls with all but the happy expressions. Taken together, in all of the above-mentioned studies, the performance of participants with WS was indistinguishable from that of MA-matched controls, with the exception of processing happy expressions, which appears relatively preserved.

Studies examining the processing of emotional prosody in individuals with WS are sparse; however, compromised ability has been reported with lexically/semantically intact utterances (Catterall, Howard, Stojanovik, Szczerbinski, & Wells, 2006; Plesa Skwerer et al., 2006), while significantly higher performance with emotional filtered speech sentences has been found in such individuals as compared to participants with developmental disabilities matched for IQ and CA (Plesa Skwerer et al., 2006). A dichotic listening study focusing on the hemispheric organization for positive and negative human nonlinguistic vocalizations in participants with WS and CA-matched TD individuals found that abnormalities in auditory processing in WS were restricted to the realm of negative affect (Järvinen-Pasley et al., 2010a; Plesa Skwerer et al., 2006). Taken together, this evidence may suggest relatively more competent affect processing in WS in contexts that are free of semantic/lexical interference. However, a recent ERP study using neutral, positive, and negative utterances with both intact and impoverished syntactic and semantic information reported abnormalities in all ERP components of interest linked to prosodic processing (N100, P200, and N300) in individuals with WS relative to TD controls (Pinheiro, Galdo-Alvarez, Rauber, Sampaio, Niznikiewicz, & Goncalves, 2011). This included diminished N100 for semantically intact emotional sentences, more positive P200 particularly for happy and angry semantically intact stimuli, and diminished N300 for both semantically intact and impoverished information. This suggests atypical localization of early auditory functions in WS, showing a bottom-up contribution to the compromised processing and understanding of affective prosody, as well as top-down influences of semantic processing at the level of sensory processing of speech.
Overall, impairments in social perceptual skills have been postulated to contribute to the increased approachability and inappropriate social engagement in WS (Meyer-Lindenberg et al., 2005; Järvinen-Pasley et al., 2010b; Jawaid et al., 2011), underscoring the need for studies that investigate social-emotional processing in parallel with social functioning in this population. Importantly, however, the evidence discussed above fails to shed light on the affect processing capabilities that individuals with WS require in naturalistic social interaction settings, such as the integration of emotion originating from different sensory modalities.

1.3 Audiovisual integration in social context

As discussed above, emotional messages can be transmitted via both visual (e.g., facial expressions, gestures) and auditory (e.g., affective prosody) channels and, in fact, in naturalistic social settings emotional information is rarely purely unimodal. As humans are constantly exposed to competing, complex audiovisual emotional information in social interaction contexts, a reliable interpretation of others’ affective states requires the integration of multimodal stimuli into a single, coherent percept (see De Gelder & Bertelson, 2003, for a review); an automatic function that is evident already at seven months of age in TD (Grossmann, Striano, & Friederici, 2006). Moreover, multisensory affective perception precedes unisensory affective perception in development (e.g., Flom & Bahrick, 2007). Existing behavioral literature into multisensory emotional face and voice integration in TD indicates that a congruence in affect between the two stimuli aids in the decoding of emotion (Dolan, Morris, & de Gelder, 2001); that multisensory presentation leads to more rapid and accurate emotion processing than unimodal presentation (Collignon et al., 2008); that signals obtained via one sense influence the information-processing of another sensory modality, even in situations where participants are instructed to orient to only one modality (de Gelder & Vroomen, 2000; Ethofer et al., 2006); and that visually presented emotion appears more salient as compared to that presented aurally (Collignon et al., 2008).

Attesting to the importance of the human cognitive ability to successfully integrate socially relevant multisensory emotional information is neurobiological evidence pinpointing a dedicated brain circuitry for such functions in TD. Specifically, the audiovisual integration of emotion has been shown to take place at the overlap of the face and voice sensitive regions of the superior temporal sulcus (STS) over the right hemisphere (Kreifelts, Ethofer, Shiowaza, Grodd, & Wildgruber, 2009; Szycik, Tausche, & Munte, 2008; Wright, Pelphrey, Allison, McKeown, & McCarthy, 2003), and studies of neural connectivity have identified interactions between the relevant regions of the STS and the fusiform face area (FFA) (Kreifelts, Ethofer, Grodd, Erb & Wildgruber, 2007; Muller, Cieslik, Turetsky, & Eickhoff, 2012) and the amygdala (Muller et al., 2012; Jansma, Roebroeck, & Munte, 2014). Interestingly, Blank and colleagues (2011) recently demonstrated direct connections between voice sensitive areas of the STS and the FFA, providing strong support for a dedicated neural system supporting the multisensory integration of emotional face and voice stimuli (Blank, Anwander, & von Kriegstein, 2011).

A central question that arises from the literature reviewed above concerns whether some of the broader social impairments that characterize individuals with WS may at least partially stem from a compromised ability to effectively process simultaneous emotional information originating from multiple sensory modalities. Importantly, studies of TD individuals have demonstrated that the multisensory integration of facial and vocal information typically allows for faster and more accurate recognition of emotion expressions in human observers, and can thus be considered a fundamental skill for typical social function (Collignon et al., 2008; de Gelder & Vroomen, 2000; Dolan et al., 2001; Kreifelts et al., 2007; Massaro & Egan, 1996). This question is of special interest as there are no known studies that have addressed the audiovisual integration of emotion in a purely social context in this population. In a previous audiovisual integration study, individuals with WS and comparison individuals with TD and DD were tested on a paradigm whereby facial expressions and non-social images matched for emotion (happy, fearful, and sad) were paired with affective musical excerpts in emotionally congruent and incongruent conditions (Järvinen-Pasley et al., 2010c). The main findings indicated similar levels of performance in individuals with WS and TD in processing emotion in faces across both congruent and incongruent conditions. Participants with WS uniquely demonstrated slightly superior levels of facial affect identification when paired with the emotionally incongruent relative to the congruent audiovisual stimuli. This finding was interpreted as indicating that the typical interference effect of conflicting emotional information resulting in compromised processing of incongruent pairs was diminished in individuals with WS in conditions in which a face is present, presumably because of an “attentional capture” to face stimuli.
By contrast, DD controls showed poorer performance as compared to the WS and the TD groups, and these effects were particularly apparent when a facial expression was paired with emotionally incongruent music. This suggests that congruent auditory information enhances the processing of social visual information for individuals without WS, while incongruent auditory information has a detrimental effect on performance for such individuals. In a more recent study, individuals with WS and TD comparison individuals were presented with a musical affective priming paradigm, while electroencephalogram (EEG) oscillatory activity was measured (Lense, Gordon, Key, & Dykens, 2014). The participants were required to identify the emotional expression (happy or sad) of face stimuli, which were preceded by short affective music excerpts or neutral sounds. Similarly to the study of Järvinen-Pasley et al. (2010c), the participant groups showed similar levels of emotion identification accuracy of faces. However, unlike in Järvinen-Pasley et al.’s (2010c) study, participants with WS tested by Lense et al. uniquely demonstrated a musical priming effect, manifested as faster processing coupled with greater evoked gamma activity, in response to emotionally congruent as compared to incongruent stimulus pairs. The authors interpreted this finding as suggesting that music and social-emotional processing are unusually strongly intertwined in individuals with WS. However, as the studies of Järvinen-Pasley et al. (2010c) and Lense et al. (2014) involved auditory stimuli that did not originate from human sources (music), it is unclear how the findings may relate to audiovisual emotion integration in purely social contexts in WS.

1.4 Autonomic nervous system and emotional sensitivity in WS

In addition to the literature documenting receptive emotion processing abilities in WS, there are reports of unusual emotional and empathic sensitivity/reactivity in such individuals in the expressive domain (Järvinen-Pasley et al., 2008; Mervis et al., 2003; Fidler, Hepburn, Most, Philofsky, & Rogers, 2007). This aspect of the social profile of WS is currently particularly poorly understood and challenging to pin down. Specifically, amplified emotional responses in individuals with WS have been described in relation to their interactions with other people (Tager-Flusberg & Sullivan, 2000; Reilly, Losh, Bellugi, & Wulfeck, 2004) and to music (Levitin, Cole, Chiles, Lai, Lincoln, & Bellugi, 2004). One potentially useful and relatively novel way of probing this is through measuring autonomic nervous system (ANS) responses of individuals with WS while they attend to emotional information. ANS indices, such as electrodermal activity (EDA) and heart rate (HR), are amygdala-associated non-invasive measures that reflect sensitivity to social-affective information at physiological levels (Adolphs, 2001; Laine, Spitler, Mosher, & Gothard, 2009). Further, the physiological processes indexed by electrodermal responses and HR are regulated by the hypothalamic-pituitary (HPA) axis, which is implicated in a variety of social behaviors across species (Pfaff, 1999). It is thus of significant theoretical and clinical interest to examine the role of ANS sensitivity to emotion in individuals with WS, to obtain an approximate index of emotional reciprocity, and thereby begin to parse the role of ANS sensitivity in the characteristic social and emotional profiles.

Although ANS function represents a growing field in the context of research into WS, the current literature is sparse and mixed, with most studies employing visual paradigms (see Järvinen & Bellugi, 2013, for a review). For example, Plesa Skwerer and coauthors (2009) documented hypoarousal in response to dynamic face stimuli, together with more pronounced HR deceleration, which was interpreted as indexing heightened interest in such stimuli, in adolescents and adults with WS in relation to CA-matched controls with TD and DD. In a similar vein, Doherty-Sneddon et al. (2009) found that while individuals with WS displayed general hypoarousal as indexed by skin conductance level and reduced gaze aversion in a naturalistic context, similar to the TD controls, their arousal levels accelerated in response to face stimuli (Doherty-Sneddon, Riby, Calderwood, & Ainsworth, 2009). Our previous findings from adults with WS contrasted with a TD group showed that when viewing affective face stimuli, individuals with WS demonstrated increased HR reactivity, and a failure for EDA to habituate, suggesting increased arousal (Järvinen et al., 2012). It was further speculated that the lack of habituation may be linked to the increased affiliation and attraction to faces, which is one of the defining features of the syndrome. Riby, Whittle, and Doherty-Sneddon (2012) examined baseline electrodermal activity in response to live and video-mediated displays of happy, sad, and neutral affect in individuals with WS and TD controls matched for CA. The results showed that only live faces increased the level of arousal for those with WS and TD. Participants with WS displayed lower electrodermal baseline activity as compared to the TD group, which the authors interpreted as suggesting hypoarousal in this group. Taken together, despite reports of generally reduced arousal levels in individuals with WS, none of these studies provides evidence of hyporesponsivity to faces per se in such individuals.
Only one known study has examined the ANS sensitivity to affective (happy/fearful/sad) auditory stimuli (vocal/music) in individuals with WS contrasted with TD participants (Järvinen et al., 2012). Participants with WS uniquely exhibited amplified heart rate variability (HRV) to vocal as compared to musical information, which suggests diminished sympathetic arousal and heightened interest in vocal information. The authors interpreted the results to suggest that human vocalizations appeared more engaging than the musical information for individuals with WS. Individuals with WS further demonstrated increased HRV to happy stimuli. This result indexing greater vagal involvement is in line with the positive bias frequently documented in individuals with WS (Dodd & Porter, 2010), as positive emotional stimuli are particularly engaging socially and approach-promoting (Porges, 2007).

1.5 The present study

In light of the diverse strands of evidence discussed above, an important question concerns the extent to which the social difficulties experienced by individuals with WS may be related to social perceptual efficiency, particularly in multisensory contexts, and underlying autonomic reactivity. To this end, the aim of the present study was to construct profiles of basic emotion (fearful, happy, angry) identification across multimodal and unimodal contexts, and examine autonomic nervous system responses to social-emotional visual and auditory stimuli, in individuals with WS and CA-matched TD comparison individuals. Social-perceptual ability and autonomic reactivity were further related to the level of social functioning as measured by the SRS within the WS group, in an effort to elucidate potential sources of heterogeneity in this population. Given that the CA of the TD participants was above the targeted age range for the SRS, combined with the fact that we had no reason to suspect social impairments in this cohort on the basis of screening, the SRS was solely administered to the participants with WS. In the multisensory integration experiment portion of the study, participants were required to respond to the aural emotion. The rationale for this is that in the literature on TD, emotional signals transmitted by a face as compared to voice have been deemed to have higher salience in multisensory contexts, and also individuals with WS have been documented to display a bias toward viewing and a relatively strong skill in recognizing faces (Riby & Hancock, 2008, 2009; Bellugi, Lichtenberger, Jones, Lai, & St. George, 2000; Järvinen-Pasley et al., 2008). Requiring participants to respond to the facial emotion would thus somewhat overlook the more challenging aspect of audiovisual integration, i.e., that of processing the auditory-affective component.

Based on evidence to date, with respect to basic emotion recognition, we hypothesized that emotion judgments of individuals with WS may be biased toward the facial affect with stimuli conveying conflict in emotion between the face and voice, while the TD comparison individuals may demonstrate similar levels of affect identification across the congruent and incongruent conditions. We also predicted that participants with WS would show higher levels of performance with the positive (happy) as compared to negative (fearful and angry) stimuli, while the TD individuals would perform similarly across the different valence categories. This prediction was founded upon previous findings indicating that individuals with WS show difficulties in processing angry face stimuli at several levels, including deficient attention allocation (Santos, Silva, Rosset, & Deruelle, 2010), poor recognition accuracy (Porter, Shaw, & Marsh, 2010), and delayed identification (Porter, Coltheart, & Langdon, 2007). By sharp contrast, such individuals exhibit attentional bias toward positive social stimuli (Dodd & Porter, 2010), which may contribute to their unimpaired ability to identify happy expressions (e.g., Gagliardi et al., 2003; Plesa Skwerer et al., 2006; Järvinen-Pasley et al., 2010a). We further hypothesized that individuals with WS would exhibit significant social dysfunction as measured by the SRS. In light of the findings of van der Fluit et al. (2012), we also hypothesized that for WS, higher levels of real life social functioning may be associated with improved social perceptual ability.

In terms of ANS functioning, although specific hypotheses are difficult to formulate on the basis of the existing literature, which is both scant and mixed, in light of a previous study utilizing stimuli similar to those used in the present study (Järvinen et al., 2012), we hypothesized that within the visual domain, participants with WS would demonstrate both cardiac and electrodermal responses to faces that index greater arousal as compared to TD individuals, as well as a lack of EDA habituation. Within the auditory domain, in line with the findings of Järvinen et al. (2012), we hypothesized that relative to TD participants, individuals with WS would show increased HRV to vocalizations, indexing reduced arousal to vocal information. Individuals with WS were further hypothesized to exhibit emotion-specific autonomic response patterns, as reflected by increased HRV to happy stimuli. While ANS function is relatively little explored in WS, in light of the reported heterogeneity at the behavioral level (Porter & Coltheart, 2005; Little et al., 2013), and mixed literature on autonomic function, it seems reasonable to further predict that heterogeneous ANS functioning overall may be associated with WS. Thus, social functioning, indexed by the SRS, was included as a measure to explore its potential role in emotion processing and associated autonomic activity in individuals with WS contrasted with TD. In this vein, in light of both the aberrant organization of the ANS and atypical emotion processing profile in WS, we also hypothesized that both emotion processing and social functioning may be related to unusual patterns of autonomic functioning as compared to normative development.

2. Material and methods

2.1 Participants

A total of 41 individuals participated in the current study: 24 individuals with WS (12 females), and 17 TD comparison individuals (10 females). The genetic diagnosis of WS was established using fluorescence in situ hybridization (FISH) probes for elastin (ELN), a gene invariably associated with the WS microdeletion (Ewart et al., 1993). In addition, all participants with WS exhibited the medical and clinical features of the WS phenotype, including cognitive, behavioral, and physical features (Bellugi et al., 2000). The TD participants were screened for history of brain trauma, psychiatric concerns, and central nervous system disorders, and were required to be native English speakers. All participants were recruited through the Salk Institute as a part of a multi-site multidisciplinary program of research addressing neurogenetic underpinnings of human sociality. All participants were administered a threshold audiometry test using a Welch Allyn AM232 manual audiometer. Auditory thresholds were assessed at 250, 500, 750, 1000, 1500, 2000, 3000, 4000, 6000, and 8000 Hz, monaurally. The hearing of all participants included in the study was within the normal range. All experimental protocols were approved by the Institutional Review Board (IRB) at the Salk Institute for Biological Studies. Parents or legal guardians of all participants with WS provided written informed consent. Following a brief IRB-approved post-consent quiz describing the purpose and procedures of the study delivered verbally by research personnel, all participants with WS provided verbal assent, in addition to a written assent in the event they were able to do so. As there were no minors in the TD group, all participants provided written consent by themselves.

The participants’ cognitive functioning was assessed using the Wechsler Intelligence Scale. Participants were administered either the Wechsler Adult Intelligence Scale Third Edition (WAIS-III; Wechsler, 1997) or the Wechsler Abbreviated Scale of Intelligence (WASI; Wechsler, 1999). Table 1 shows the demographic characteristics of the sample of participants with WS and TD. The groups did not significantly differ in terms of CA (t (39) = 1.79, p = .082). The TD participants outperformed their counterparts with WS on verbal IQ (VIQ) (t (39) = −7.59, p < .001), performance IQ (PIQ) (t (39) = −11.54, p < .001), and full-scale IQ (FSIQ) (t (39) = −9.59, p < .001).

Table 1.

Mean characteristics of the participant groups

| Group | CA in years (SD; range) | VIQ (SD; range) | PIQ (SD; range) | FSIQ (SD; range) |
|---|---|---|---|---|
| WS (n=24) | 32.36 (10.65; 15.4-56.9) | 70 (9.15; 54-94) | 65 (5.41; 55-75) | 65 (7.10; 50-80) |
| TD (n=17) | 27.18 (6.42; 19.4-43.2) | 103 (17.20; 73-127) | 98 (11.86; 75-121) | 101 (15.47; 77-127) |
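The group comparison on CA can be checked directly from the summary statistics in Table 1. The sketch below is an illustration only: it assumes a pooled-variance (Student's) independent-samples t-test, which is consistent with the reported 39 degrees of freedom but is not stated explicitly in the text, and the function name `pooled_t` is hypothetical.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's t for two independent groups, from summary statistics.

    Assumes equal variances (pooled estimate); returns (t, df).
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error, df


# CA values from Table 1: WS group first, TD group second
t_ca, df_ca = pooled_t(32.36, 10.65, 24, 27.18, 6.42, 17)
print(f"t({df_ca}) = {t_ca:.2f}")
```

Under this assumption the statistic should come out close to the reported t (39) = 1.79. Because the inputs are rounded table values, small discrepancies from the published figures are to be expected for the IQ comparisons.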

Despite the between-group differences in cognitive functioning, such differences were not controlled for in subsequent analyses, as it has been suggested that controlling for IQ does not contribute to the clarity of the meaningful differences seen in the autonomic responses to valence and social content in individuals with neurodevelopmental conditions (see Cohen, Masyn, Mastergeorge, & Hessl, 2013, for a discussion). Specifically, it has been postulated that IQ impairments may stem from shared ANS alterations that lead to the social-emotional processing deficits, and thus, it could be argued that social-emotional processing difficulties are not caused by IQ but rather have shared origins rooted in the underlying neurodevelopmental disorder (Dennis et al., 2009).

For the psychophysiological portion of the study, the sample included 16 TD participants (mean CA = 25.44 years, SD = 6.56, range as above, 11 females) and 24 individuals with WS as indicated in the table above. Data from one TD participant was excluded from analyses due to excessive recording artifacts. The sample who participated in the social functioning portion of the study (i.e., completed the SRS; Constantino & Gruber, 2005) included 22 individuals with WS (mean CA = 33.43 years, SD = 10.59, range as above, 11 females). The remaining two participants with WS did not complete this inventory due to experimenter error.

2.2 Procedure

The stimuli were organized as two separate paradigms according to the sensory modality: (1) the multisensory block, and (2) two unimodal blocks comprised of single randomized presentations of affective vocalizations or facial stimuli. While both multisensory and unimodal paradigms included an active portion during which participants were required to make emotion identification judgments, only the unimodal block was also administered passively, during which psychophysiological recording was obtained. Within the multisensory block, the stimuli were organized with respect to both stimulus type (congruent/incongruent) and affective valence (fearful/happy/angry) into pseudo-random sequences, and were preceded by a blinking fixation cross. The duration of the auditory clips was between 2 and 3 s. The duration of the accompanying visual stimuli was matched to that of the auditory excerpts; thus, in the multisensory paradigm, the visual stimuli were presented for the exact duration of the paired auditory clips. As the same individual visual and auditory stimuli were used in both the multisensory and unimodal paradigms, the stimulus durations were equal between blocks.
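One way a pseudo-random sequence over stimulus type and affective valence could be built is sketched below. This is not the procedure used in the study: the number of repetitions per cell (`reps_per_cell`), the limit on consecutive same-emotion trials (`max_run`), the seed, and all function names are hypothetical choices made for illustration.

```python
import random

EMOTIONS = ("fearful", "happy", "angry")
CONGRUENCE = ("congruent", "incongruent")


def longest_run(values):
    """Length of the longest run of identical consecutive items."""
    best = run = 0
    previous = object()  # sentinel that equals no real item
    for value in values:
        run = run + 1 if value == previous else 1
        best = max(best, run)
        previous = value
    return best


def make_sequence(reps_per_cell=4, max_run=3, seed=0):
    """Shuffle a balanced trial list until no emotion repeats too often.

    reps_per_cell and max_run are illustrative values, not taken from the study.
    """
    rng = random.Random(seed)
    trials = [(c, e) for c in CONGRUENCE for e in EMOTIONS] * reps_per_cell
    while True:
        rng.shuffle(trials)
        if longest_run([emotion for _, emotion in trials]) <= max_run:
            return list(trials)


sequence = make_sequence()
```

Rejection sampling of this kind keeps every congruence-by-emotion cell equally represented while avoiding long streaks of one emotion; fixing the seed makes a given order reproducible across sessions.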

The experiment was conducted in a quiet room. Participants sat in a comfortable chair in a well-lit room, 130 cm away from a TFT monitor (screen resolution of 1680 × 1050 pixels). To prevent autonomic habituation effects, the psychophysiological, passive portion of the unimodal visual and auditory blocks was always administered first, prior to the respective active affect identification portions. More specifically, the experiment had three parts: (1) a passive version involving unimodal visual and auditory paradigms (order counterbalanced within participants), (2) the multisensory active paradigm, and (3) active unimodal visual and auditory paradigms (order counterbalanced within participants). Participants made affect identification judgments during (2) and (3). The order of administration of the three components was counterbalanced with respect to (2) and (3) between participants, with portion (1) invariably being administered first. Only the active version of the multisensory experiment was administered, both because of time restrictions for testing and because the resulting autonomic data would have been difficult to interpret: the contributions of visual, auditory, and congruence-related information to specific responses could not have been determined, rendering the data potentially rather uninformative. The stimuli were presented on a desktop computer running Matlab (The MathWorks Inc., Natick, MA), which delivered a digital pulse embedded in the recording at the onset of each stimulus. To measure physiological responses, after a fixation cross for 1000 ms, each stimulus was presented for 2000 ms (for visual stimuli; between 2000 and 3000 ms for auditory stimuli), separated by an interstimulus interval (ISI) of 8000 ms (blank screen) to allow enough time for autonomic activity to return to near baseline levels.
While our non-stimulus presentation consisted of 8 seconds of blank screen and 1 second of fixation time, yielding 9 seconds ISI total, however, for the purposes of analysis, specifically to adequately capture electrodermal responses to the stimuli which are relatively slow to occur, we sampled 7 seconds after the stimulus onset relative to 3 seconds prior to stimulus onset (which includes 1 second fixation time and 2 seconds of the blank screen). As was stated above, psychophysiological recording was solely obtained in response to the unimodal paradigm.
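The trial timing described above can be laid out as a small schedule to check that the 3-second baseline and 7-second response windows of neighbouring trials do not overlap. This is a minimal sketch, not the authors' Matlab presentation code; the names `Trial` and `build_schedule`, and the choice to begin the session with one blank interval, are assumptions made for illustration.

```python
from dataclasses import dataclass

FIXATION_MS = 1000     # fixation cross shown before each stimulus
BLANK_MS = 8000        # blank-screen interstimulus interval
PRE_WINDOW_MS = 3000   # analysis baseline before stimulus onset
POST_WINDOW_MS = 7000  # analysis window after stimulus onset


@dataclass
class Trial:
    onset_ms: int  # stimulus onset time
    stim_ms: int   # stimulus duration (2000 visual, 2000-3000 auditory)

    @property
    def baseline(self):
        """(start, end) of the pre-stimulus analysis window."""
        return (self.onset_ms - PRE_WINDOW_MS, self.onset_ms)

    @property
    def response(self):
        """(start, end) of the post-onset analysis window."""
        return (self.onset_ms, self.onset_ms + POST_WINDOW_MS)


def build_schedule(stim_durations_ms, start_ms=BLANK_MS):
    """Lay out trials as fixation -> stimulus -> blank, repeated.

    Assumption: the session starts after one leading blank interval so
    that the first baseline window lies inside the recording.
    """
    trials = []
    t = start_ms
    for dur in stim_durations_ms:
        t += FIXATION_MS                      # fixation precedes the stimulus
        trials.append(Trial(onset_ms=t, stim_ms=dur))
        t += dur + BLANK_MS                   # stimulus, then blank screen
    return trials
```

With these durations, consecutive onsets are at least 11 seconds apart, so each 7-second response window ends before the next 3-second baseline begins.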

Within the unimodal visual and auditory blocks, the stimuli were randomized with respect to affective valence. Stimulus blocks were counterbalanced within experimental groups. For this portion of the experiment, participants were told that they would see pictures of faces showing fearful, happy, and angry expressions, or hear short vocal sounds that would express fear, happiness, and anger. For the passive task to measure ANS responses, participants were only instructed to look at the images, or listen to the sounds carefully while attending to a monitor displaying a fixation cross, and staying as quiet and still as possible. Ag/AgCl electrodes were applied to the skin with an isotonic NaCl electrolyte gel placed on the index and middle medial phalanges of the participant’s left hand to record EDA, according to a standard bipolar placement (Venables & Christie, 1980). ECG was recorded from Lead II configuration with two disposable electrodes, one attached to the right forearm and the other attached to the left ankle, below the true ankle joint. The recording sessions were divided into four bins separated by brief pauses, during which participants were allowed to stretch and relax and recordings were checked for misplacement and movement artifacts. The sessions were also preceded by a five-minute baseline period, during which ANS activity at rest was qualitatively inspected and participants were given the time to habituate to the sensors. During the experiment, stimulus onsets were marked with trigger codes, embedded into the recordings.

For the multisensory (active) block, the participants were told that they would see pictures of faces showing fearful, happy, and angry expressions appearing on the computer screen. The participants were also told that they would hear short pieces of human voices accompanying the images. Participants were told that their task would be to decide what emotion the voice is conveying. The experimenter then showed the response screen, which listed the three possible emotions to ensure that the participant understood each of the emotion options (happy, fearful, angry). An emotionally congruent audio-visual training stimulus of an angry female face, paired with angry vocalization, was then played. Neither the visual nor the auditory training stimuli were included in the actual test stimuli. The participant was instructed to verbally label the emotion that s/he thought best matched the emotion conveyed by the voice. If the participant’s response was incorrect (i.e., not “angry” or “mad”), the experimenter corrected this in an encouraging way, e.g., by saying, “What would you be feeling if you made a sound like that? You might also think that the person was angry or mad.” When correcting, the experimenter attempted to avoid teaching the participant a simple correspondence between the vocalization and emotion. An incongruent audiovisual stimulus item was then played to the participant, and the same procedure described above was followed. The training trials were replayed until the participant gave correct responses spontaneously to both of the trials.

For the active unimodal visual and auditory tasks, participants were told that they would again be presented with the same stimuli they had quietly viewed and listened to in the psychophysiological portion, and that this time they would be asked to identify the emotion expressed by each image or sound at a forced-choice response screen. Prior to the onset of the active task, the experimenter showed the response screen to the participant, which listed the three possible emotions to ensure that the participant understood each of the emotion options (scary/scared, happy, and angry/mad). The participants responded verbally, and the experimenter operated the computer keyboard on the participant’s behalf. To ensure that the participants had paid attention during the active portions of the study, they were verbally asked to identify the gender of each face/voice stimulus after making the emotion judgment and before proceeding to the next stimulus item. All participants demonstrated high levels of accuracy in this control task.

2.3 Materials

2.3.1 Experimental measures

For the multisensory portion of the study, the visual stimuli comprised 24 standardized static images of facial expression taken from the Mac Brain / NimStim Face Stimulus Set (Tottenham et al., 2009). There were 4 male and 4 female faces for each of three emotions (fearful, happy, and angry). The faces with the highest validation ratings were selected. As an auditory analogue, the auditory stimuli comprised 24 segments of non-verbal vocal bursts of emotion (2-3 seconds/segment) taken from the “Montreal Affective Voices”, a standardized set of vocal expressions without confounding linguistic information (freely available at http://vnl.psy.gla.ac.uk/resources.php). There were 4 female vocal and 4 male vocal segments for each of three emotions (fearful, happy, and angry). For the multisensory paradigm, image-sound stimuli were compiled as QuickTime movie files in such a way that there was a congruence of gender between the visual and auditory stimuli. Subsequently, the experiment comprised a total of 24 stimulus items. In 12 pairs, there was a match between the emotional content in the image and the voice, and in the remaining 12 image-sound pairs, there was an incongruity. Thus, the multisensory stimuli involved either a congruence between the emotional content in the visual image and the emotional content in voice (e.g., happy vocalization with a happy facial expression), or an incongruity (e.g., happy vocalization with a fearful facial expression). To test the possibility of reduced audiovisual integration in WS, participants were asked to judge the aural emotion. As a control condition, to assess multisensory integration within groups, the multisensory voice and face stimuli were also presented in isolation. In the unisensory task, 18 visual and 18 auditory items from the multisensory study were employed. 
Of note, all 36 unisensory stimuli were presented once in the active and passive tasks; however, when developing the pairs for the multisensory task, 12 of these unisensory stimuli (6 visual, 6 vocalizations) were repeated. For example, the NimStim happy face #27 was paired with a happy vocalization for a congruent multisensory trial, and with an angry vocal stimulus for an incongruent trial.
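The scoring logic implied by the multisensory design, in which participants judge the vocal emotion and the face is either congruent or incongruent with it, can be sketched as follows. The function names and the `face_driven_error` flag are hypothetical and introduced only for illustration; they are not taken from the study's materials.

```python
EMOTIONS = ("fearful", "happy", "angry")


def score_trial(face, voice, response):
    """Score one audiovisual trial against the vocal (target) emotion."""
    for label in (face, voice, response):
        if label not in EMOTIONS:
            raise ValueError(f"unknown emotion label: {label!r}")
    congruent = face == voice
    correct = response == voice  # the task is to judge the voice
    return {
        "congruent": congruent,
        "correct": correct,
        # Incorrect answer that matches the conflicting face.
        "face_driven_error": (not congruent) and response == face,
    }


def percent_correct(trials):
    """Percent correct over (face, voice, response) tuples."""
    scored = [score_trial(*t) for t in trials]
    return 100.0 * sum(s["correct"] for s in scored) / len(scored)
```

Separating face-driven errors from other errors makes it possible to ask whether incorrect responses on incongruent trials follow the facial expression.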

2.3.2 Psychophysiology recordings and ANS measures

EDA and electrocardiogram (ECG) measures were recorded during the passive viewing portion of the experimental paradigm (see the procedure described above) using a BioPac MP150 Psychophysiological Monitoring System (BioPac Systems Inc., Santa Barbara, CA) at a 1000 Hz sampling rate.

The raw ECG signal was filtered and used to calculate HR and inter-beat interval (IBI) measures, after classifying the R peaks of the heartbeat cycles. Besides the mean HR and IBI, we extracted the standard deviation of the inter-beat interval (sdIBI) to assess variability in heartbeats for each experimental condition, as suggested by Mendes (2009). Quantification of the mean IBI is used in conjunction with mean HR since it is a more sensitive and direct measure of parasympathetic and sympathetic system activity (Berntson, Cacioppo, & Quigley, 1995).
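As an illustration of how the three cardiac measures relate to one another, the sketch below derives mean IBI, mean HR, and sdIBI from a list of R-peak times. It assumes the R peaks have already been detected, and it computes mean HR as the average of the instantaneous rates; the source does not specify either step, so both are assumptions.

```python
from statistics import mean, stdev


def ibi_measures(r_peak_times_s):
    """Return (mean IBI in ms, mean HR in bpm, SD of IBI in ms).

    r_peak_times_s: ascending R-peak times in seconds (assumed input).
    """
    if len(r_peak_times_s) < 3:
        raise ValueError("need at least three R peaks for an SD")
    # Inter-beat intervals between successive R peaks, in milliseconds.
    ibis_ms = [
        (later - earlier) * 1000.0
        for earlier, later in zip(r_peak_times_s, r_peak_times_s[1:])
    ]
    mean_ibi = mean(ibis_ms)
    # Assumption: HR is averaged over instantaneous beat-to-beat rates.
    mean_hr = mean(60000.0 / ibi for ibi in ibis_ms)
    sd_ibi = stdev(ibis_ms)
    return mean_ibi, mean_hr, sd_ibi
```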

For all ANS measurements, we sampled the seven seconds following stimulus onset and a three-second pre-stimulus baseline on a trial-by-trial basis in order to compute event-related change scores. This approach yielded weighted, trial-specific percentage variations in autonomic activity. It thereby minimizes the influence of large-scale tonic fluctuations, captures small-scale ANS reactivity and sensitivity, and accounts for differences in relative baseline levels. The EDA measurements were averaged over the 7 seconds, which comprised the stimulus presentation (2 seconds for visual stimuli, 2-3 seconds for auditory stimuli) and the subsequent blank screen (5 seconds following a 2-second stimulus), and were expressed relative to the baseline computed from the 3 seconds prior to stimulus onset.
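One way to read the event-related change score is as a percentage change of the mean post-onset signal relative to the mean pre-stimulus baseline. The sketch below implements that reading; the exact formula, the weighting, and any later standardization are not given in the text, so the function `change_score` and its formula are assumptions for illustration only.

```python
from statistics import mean

FS_HZ = 1000  # sampling rate reported for the recordings


def change_score(signal, onset_idx, pre_s=3, post_s=7, fs=FS_HZ):
    """Percentage change of the post-onset mean relative to baseline.

    signal: sequence of samples; onset_idx: sample index of stimulus onset.
    Assumed formula: 100 * (mean(response) - mean(baseline)) / mean(baseline).
    """
    start = onset_idx - pre_s * fs
    end = onset_idx + post_s * fs
    if start < 0 or end > len(signal):
        raise ValueError("analysis windows fall outside the recording")
    baseline = mean(signal[start:onset_idx])
    response = mean(signal[onset_idx:end])
    if baseline == 0:
        raise ZeroDivisionError("baseline mean is zero")
    return 100.0 * (response - baseline) / baseline
```

Because each trial is referenced to its own baseline, slow drifts in tonic level across the session have little influence on the score.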

2.3.3 Index of social functioning

The Social Responsiveness Scale (SRS) (Constantino & Gruber, 2005) is a 65-item questionnaire that parents or caregivers complete for their child. It is intended for children aged 4-18 years and screens for symptomatology associated with ASD, encompassing atypical communication, interpersonal relationships, and the presence of repetitive/stereotypic behaviors. The SRS was primarily developed as a screening tool for ASD, as it sensitively differentiates the social impairment that characterizes ASD from that which occurs in other childhood psychiatric conditions (Constantino & Gruber, 2005); it can also be useful in identifying and characterizing individuals without ASD who display milder social difficulties. The caregivers’ responses to questionnaire items result in T-scores across the scales: Social Awareness, Social Cognition, Social Communication, Social Motivation, and Autistic Mannerisms, in addition to a Total Score. T-scores below 60 indicate no clinically significant concerns in social functioning; T-scores of 60-75 indicate mild-to-moderate social dysfunction; and T-scores of 76 or higher indicate severe social dysfunction. For participants above the age of 18 years, age norms for 18-year-olds were used as representative of norms for mature individuals.
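The T-score bands can be expressed as a simple classification rule. This is an illustrative sketch rather than the official scoring procedure; the function name, the range labels, and the treatment of a T-score of exactly 76 as severe are assumptions.

```python
def classify_srs(t_score):
    """Map an SRS T-score to a descriptive range.

    Assumption: a T-score of exactly 76 is treated as severe.
    """
    if t_score < 60:
        return "average"            # no clinically significant concerns
    if t_score <= 75:
        return "mild-to-moderate"
    return "severe"
```

For example, `classify_srs(58)` returns `"average"` and `classify_srs(84)` returns `"severe"`.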

As was mentioned in the introduction, consistent with the existing literature (Klein-Tasman et al., 2011; Riby et al., 2014; van der Fluit et al., 2012), this measure was included in the study for the purpose of examining differences in social functioning within the WS population, and thus was only administered to the WS sample. The rationale for this is that all individuals in the TD group were above the targeted age range for this instrument, and were living independently of their families, which would have complicated data collection. Additionally, we had no reason to suspect atypical social functioning in any TD individual on the basis of our screening, interactions with the participants, and their performance in the experimental tasks.

3. Results

3.1 Results of experimental measures

Table 2 displays the mean percent correct emotion identification scores within each affect category (fearful/happy/angry) across the four sensory modality conditions across the experimental paradigms (multisensory congruent/multisensory incongruent/unisensory visual/unisensory auditory) for participants with WS and TD. All participants’ performance was significantly above the chance level (33.33%) with all stimuli (all p values < .002), with the exception of performance of the WS group in the incongruent angry and unimodal angry conditions (p=.50 and .58, respectively).

Table 2.

Mean percent correct emotion identification performance for individuals with WS and TD across the different audiovisual sensory modality conditions of the experimental measures.

| Condition | WS (N=24) Mean % | WS (N=24) SD | TD (N=17) Mean % | TD (N=17) SD |
|---|---|---|---|---|
| Multisensory paradigm | | | | |
| Fearful congruent | 96.88 | 8.46 | 97.06 | 12.13 |
| Happy congruent | 100 | 0 | 100 | 0 |
| Angry congruent | 82.29 | 27.07 | 95.59 | 13.21 |
| Fearful incongruent | 59.38 | 31.93 | 94.12 | 10.93 |
| Happy incongruent | 81.25 | 29.72 | 98.53 | 6.06 |
| Angry incongruent | 29.17 | 35.86 | 77.94 | 23.19 |
| Visual paradigm | | | | |
| Fearful | 95.14 | 9.17 | 99.02 | 4.04 |
| Happy | 99.30 | 3.40 | 100 | 0 |
| Angry | 85.42 | 16.53 | 95.10 | 9.80 |
| Auditory paradigm | | | | |
| Fearful | 83.33 | 21.98 | 95.10 | 9.80 |
| Happy | 98.61 | 4.71 | 99.00 | 4.04 |
| Angry | 37.50 | 29.59 | 58.82 | 28.33 |

A 4×3×2 repeated measures mixed analysis of variance (ANOVA) was conducted on the experimental social-perceptual data, with sensory mode (multisensory congruent/multisensory incongruent/unisensory visual/unisensory auditory) and emotion (angry/happy/fearful) entered as within-subjects variables, and group (WS/TD) entered as a between-subjects variable. This analysis yielded significant main effects of sensory mode (F(3, 117)=39.41, p<.001, η²=.50), emotion (F(2, 78)=56.27, p<.001, η²=.59), and group (F(1, 39)=41.30, p<.001, η²=.51), in addition to interactions between sensory mode and group (F(3, 117)=14.28, p<.001, η²=.27), emotion and group (F(2, 78)=6.27, p=.003, η²=.14), and sensory mode and emotion (F(6, 234)=19.30, p<.001, η²=.33). The significant interactions involving group differences are depicted in Figures 1 and 2.

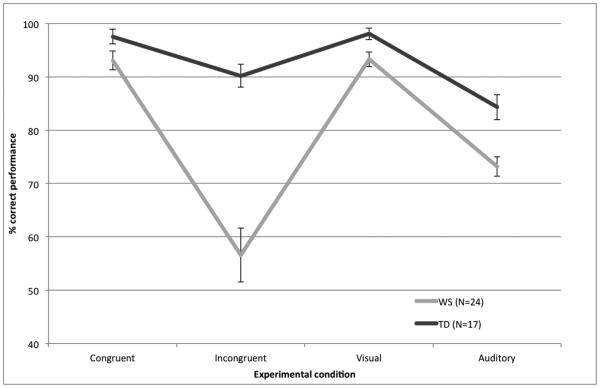

Figure 1.

Mean percent correct performance for individuals with WS and TD across the four audiovisual sensory modality conditions (Error bars represent ± 1 SEM).

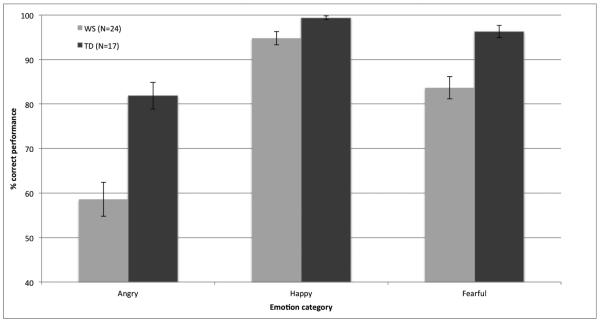

Figure 2.

Mean percent accuracy of identification of fearful, happy, and angry emotion in individuals with WS and TD collapsed across the four experimental conditions (multisensory emotionally congruent; multisensory emotionally incongruent; unisensory visual; unisensory auditory) (Error bars represent ±1 SEM).

Post-hoc Bonferroni-corrected t-tests (threshold alpha level set at p≤.01) examining the interaction effects with group showed that the sensory mode by group interaction was due to the TD individuals outperforming their counterparts with WS with the emotionally incongruent audiovisual stimuli (t(39)=−5.35, p<.001) and the unimodal auditory stimuli (t(39)=−3.81, p<.001), while between-group differences in performance with the emotionally congruent multisensory (t(39)=−1.85, p=.07) and unimodal visual (t(39)=−2.54, p=.02) stimuli did not reach the adjusted significance level. Within groups, for participants with WS, performance was higher with the congruent as compared to the incongruent multisensory stimuli (t(23)=6.67, p<.001), unimodal visual as compared to the unimodal auditory stimuli (t(23)=8.53, p<.001), multisensory congruent as compared to unimodal auditory stimuli (t(23)=9.45, p<.001), unimodal visual as compared to the multisensory incongruent stimuli (t(23)=6.94, p<.001), and unimodal auditory as compared to the multisensory incongruent stimuli (t(23)=−3.49, p=.002). The participants with WS showed similar levels of performance in identifying emotion in audiovisual congruent and unimodal visual stimuli (t(23)=.10, p=.92). For the TD group, performance was higher with unimodal visual as compared to the unimodal auditory stimuli (t(16)=5.17, p<.001), multisensory congruent as compared to unimodal auditory stimuli (t(16)=4.96, p<.001), and unimodal visual as compared to the multisensory incongruent stimuli (t(16)=3.24, p=.005). The TD individuals showed similar levels of performance in identifying emotion across congruent and incongruent multisensory stimuli (t(16)=2.70, p<.02) and audiovisual congruent and unimodal visual stimuli (t(16)=2.56, p=.02).
A comparison of overall performance in audiovisual versus unimodal contexts showed that the TD group outperformed the individuals with WS in both the audiovisual (t(39)=−5.77, p<.001) and unimodal (t(39)=−4.75, p<.001) experiments. Within groups, participants with WS showed significantly higher performance overall with the unimodal as compared to multisensory stimuli (t(23)=−3.39, p=.002), while the TD group showed similar levels of performance across the two contexts (t(16)=2.23, p=.04), i.e., the marginally higher performance with the audiovisual as compared to unimodal sensory stimuli failed to reach the adjusted significance level.
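To make the role of the adjusted threshold concrete, the sketch below flags which of the four between-group comparisons pass the stated criterion of p≤.01. The p-values are those reported in the text, with "p<.001" entered as its upper bound of .001; the dictionary keys and the function name are hypothetical.

```python
ADJUSTED_ALPHA = 0.01  # threshold stated for the post-hoc tests


def passes_threshold(p_values, alpha=ADJUSTED_ALPHA):
    """Return {comparison: True/False} for p <= alpha."""
    return {name: p <= alpha for name, p in p_values.items()}


# Between-group comparisons by sensory mode, as reported in the text.
between_group = {
    "multisensory_incongruent": 0.001,  # reported as p<.001
    "unimodal_auditory": 0.001,         # reported as p<.001
    "multisensory_congruent": 0.07,
    "unimodal_visual": 0.02,
}

flags = passes_threshold(between_group)
```

Under this criterion a result such as p=.02 is treated as non-significant even though it would pass an uncorrected .05 threshold.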

The emotion by group interaction stemmed from the fact that while the TD group outperformed those with WS with identifying fearful (t(39)=−3.93, p<.001) and angry (t(39)=−4.47, p<.001) stimuli, the differences in between-group performance with the happy stimuli did not reach significance (t(39)=−2.53, p=.02). Within groups, for the WS group, performance was higher with happy as compared to fearful stimuli (t(23)=−4.42, p<.001), fearful as compared to angry stimuli (t(23)=5.04, p<.001), and happy as compared to angry stimuli (t(23)=9.01, p<.001). For the TD group, performance was higher with fearful as compared to angry stimuli (t(16)=4.34, p=.001), and happy as compared to angry stimuli (t(16)=5.98, p<.001), while there was no significant difference in the identification of happy and fearful stimuli (t(16)=−1.97, p=.07).

Finally, an inspection of error patterns in the experimental tasks for the WS and TD groups showed that in the emotionally incongruent compounds, the participants’ incorrect responses reflected the facially expressed emotion, and in the unimodal tasks, the errors pertained to fearful/angry confusion.

Given that both groups exhibited relative difficulties with identifying aurally expressed emotion, the main ANOVA was repeated with a variable indexing the participants’ performance in the auditory unisensory condition entered as a covariate. The pattern of significant main effects remained unchanged.

3.2 ANS measures and statistical analyses

Autonomic standardized event-related change scores for EDA, IBI, and sdIBI were analyzed using a linear mixed-effects modeling approach. Statistical analyses were run and checked using R (R Development Core Team, 2008), and the R package nlme (Pinheiro, Bates, DebRoy, Sarkar, & R Development Team, 2013). The model was designed to account for random effects due to between-participant individual differences as well as potential confounding covariates such as gender and age. Further, we controlled for affect identification proficiency (measured as individual accuracy on the behavioral identification task) in order to account for potential confounding effects of inter-individual differences in conscious emotion recognition skills. Moreover, all trials containing outliers, i.e., scores that exceeded 2.5 SDs above or below the individual mean, were removed from analyses. Group (WS/TD), condition (auditory/visual), and emotion (angry/happy/fearful) were included as fixed effects in the mixed models. To investigate and account for time-related confounds, we also included block (2 levels) and trial number (18 levels for both visual and auditory blocks) as discrete variables, and we modeled autocorrelations between subsequent trial measurements. The autocorrelation structure was specified as a first-order autoregressive covariance matrix. As suggested by Pinheiro and Bates (2000), we assessed the significance of terms in the fixed effects by conditional F-tests and report F and p values of the Type III Sum of Squares computations. Here, we chose to report the degrees of freedom of our comparisons, but note that the calculations of the relevant denominator degrees of freedom in mixed-effects models are approximations. Significance levels of pair-wise comparisons (Welch two-sample t-tests) were adjusted using Bonferroni correction. The normality and homogeneity assumptions for linear mixed-effects models were assessed by examining the distribution of residuals.
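The trial-level outlier rule described above (scores more than 2.5 SDs from the individual mean) can be sketched as below. The analyses themselves were run in R; this Python helper is only an illustration, and the use of the sample standard deviation and a single (non-iterative) pass are assumptions.

```python
from statistics import mean, stdev


def drop_outlier_trials(scores, k=2.5):
    """Keep one participant's trials within k SDs of that participant's mean.

    Assumptions: sample SD (n - 1 denominator), applied once, not iteratively.
    """
    scores = list(scores)
    if len(scores) < 2:
        return scores
    m = mean(scores)
    s = stdev(scores)
    if s == 0:
        return scores  # no variability, nothing can be an outlier
    return [x for x in scores if abs(x - m) <= k * s]
```

Applying the rule per participant, rather than to the pooled data, keeps a generally reactive participant from having many trials removed simply for differing from the group.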

3.2.1 Results of ANS data analyses

The statistical model run on EDA standardized change scores highlighted significant two-way interactions between group and modality (F(1,1319)=12.04, p<.001) and between group and emotion (F(2,1319)=6.49, p=.002), in addition to a significant three-way interaction between group, modality and emotion (F(2,1319)=8.65, p<.001); these are illustrated in Figures 3a-c.

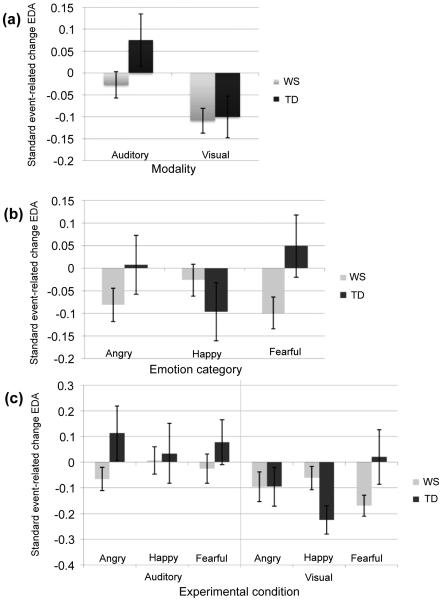

Figure 3a-c.

Mean standardized EDA event-related change scores across: a) groups and modalities; b) groups and emotions; c) groups, modalities, and emotions (Error bars represent ±1 SEM).

With respect to focused analyses, individuals with both WS and TD exhibited negative change scores (MWS =−.11, MTD =−.10, respectively) in response to visual stimuli, indexing decreases in arousal. While TD participants showed an increase in arousal in response to auditory stimuli, no such effect was observed for the WS group (MWS =−.03, MTD =.08, respectively; see Figure 3a). Direct pair-wise comparisons highlighted a significant difference in arousal for the visual versus auditory stimuli for TD participants (p<.05), while no such effect was in evidence for the WS group.

Further, the comparison of EDA responses between groups to specific emotions (see Figure 3b) revealed an interesting difference concerning fearful stimuli regardless of their sensory modality. Namely, participants with WS and TD exhibited opposite patterns of arousal: while an increase in arousal was evident in TD individuals (MTD =.05), diminished arousal characterized the WS group (MWS =−1.0; p=.057). An in-depth analysis of the significant three-way interaction by pair-wise comparisons indicated that while EDA standardized event-related change scores were not significantly different for the various emotional auditory stimuli (all p values >.05), happy and fearful faces elicited contrasting group-specific patterns (see Figure 3c). Namely, TD participants tended to show increases in arousal in response to fearful faces whereas participants with WS showed decreases in arousal to these stimuli (MTD =.02, MWS =−.17; statistical trend: p<.10). The opposite pattern was observed for happy faces, with participants with WS exhibiting smaller decreases in arousal as compared to their TD counterparts (MWS =−.06, MTD =−.23; p<.05).

The analysis of the other ANS reactivity measures based on HR (mean IBI and sdIBI) yielded no significant results.

3.3 SRS

Mean T-scores and SDs for each subscale for participants with WS are displayed in Table 3. Figure 4 illustrates the number of participants with WS with T-scores falling within the different classifications across the six domains of social functioning (including the total score).

Table 3.

Mean SRS T-scores for participants with WS

| SRS domain | WS (n=22) mean T-score (SD) |
|---|---|
| Social Awareness | 60.55 (11.27) |
| Social Cognition | 74.27 (15.71) |
| Social Communication | 66.36 (12.47) |
| Social Motivation | 58.00 (13.62) |
| Autistic Mannerisms | 83.73 (17.66) |
| Total Score | 71.14 (13.16) |

Note: Higher T-scores reflect greater deficits in the domain.

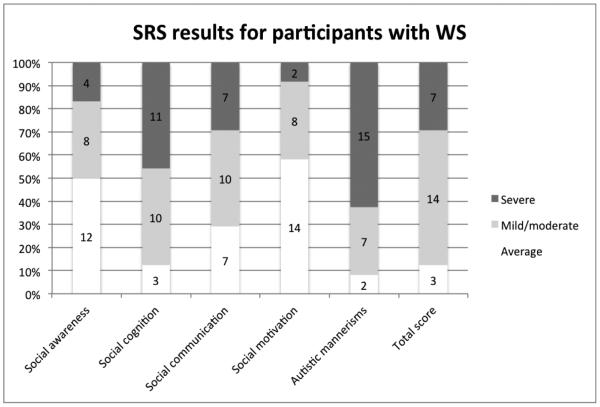

Figure 4.

Percentage of individuals with WS falling in the average, mild-to-moderate, and severe ranges on the SRS parent report inventory (number of participants indicated).

3.4 Relations between ANS functioning and SRS

Pearson correlations (two-tailed) were applied between event-related EDA change scores and SRS data for participants with WS in order to elucidate potential associations between autonomic activity and social functioning in this population, as sources of heterogeneity observed in these domains. The only significant correlations indicated that a more normal (i.e., lower) score on the SRS Social Motivation subtest was associated with a higher EDA response to unimodal emotional facial expression stimuli (r(22)=−.43, p=.046), and that a more normal (i.e., lower) score on the SRS Social Communication subtest was associated with a higher EDA response to the unimodal happy facial stimuli (r(22)=−.47, p=.028).
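For readers less familiar with the statistic, a Pearson correlation between per-participant EDA change scores and SRS T-scores can be computed as in the sketch below. The function is a plain standard-library implementation, and any input values used with it are placeholders, not data from the study.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (>= 2)")
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / sqrt(sxx * syy)
```

A negative coefficient here would mean that higher EDA responses go with lower (more typical) SRS T-scores, which is the direction of the associations reported in this section.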

3.5 Relations between social perceptual ability and SRS

Finally, Pearson correlations (two-tailed) were performed on emotion identification scores and SRS data in order to elucidate relationships between social perceptual ability and social functioning in WS. The results indicated that for these individuals, a more competent identification of unimodally presented angry facial expressions was associated with a more normal (i.e., lower) score on the SRS Social Cognition (r(22)=−.47, p=.028) and Autistic Mannerisms (r(22)=−.44, p=.043) subscales. By contrast, a higher ability to process unimodal angry vocalizations was associated with a more dysfunctional (i.e., higher) Total SRS score (r(22)=.49, p=.021), and additionally with higher scores on Social Motivation (r(22)=.55, p=.008), Social Communication (r(22)=.55, p=.009), and Autistic Mannerisms (r(22)=.44, p=.041) subscales.

4. Discussion

As human communication relies heavily on the integration of multimodal emotional information, affective abnormalities have been proposed to contribute to social dysfunction in developmental disorders. We addressed this question through a multidimensional design that examined the processing of facially and vocally conveyed basic affective states across multimodal and unimodal presentations in individuals with WS, in conjunction with autonomic response patterns and social functioning. The main behavioral findings indicated that whereas the two groups showed high and statistically indistinguishable levels of performance in the emotionally congruent multisensory contexts and in identifying emotion in unisensory face stimuli, the WS group showed a decrement in performance with the emotionally incongruent stimuli and in identifying unisensory vocal emotion. This response pattern suggests that, at least for the WS group, the competent processing of the congruent multisensory stimuli was partially driven by the salience of the accompanying face stimuli, as both of these conditions resulted in high emotion identification accuracy across groups. Although the vocal stimuli were clearly experienced as substantially more challenging than the counterpart facial expression stimuli, supporting the notion that face information does hold an increased salience over voice stimuli in social information processing (Collignon et al., 2008), an interesting difference emerged between individuals with WS and TD in recognizing vocal emotion in multisensory versus unisensory contexts. Namely, whereas TD individuals clearly benefited from the multisensory context even in emotionally incongruent conditions in identifying vocal emotion, those with WS showed superior performance with the unisensory auditory as compared to multisensory incongruent stimuli; both of these conditions directly assessed the ability to process vocal emotion.
While TD individuals were able to flexibly attend to selective social stimuli despite being presented with interfering faces, participants with WS were clearly distracted by this information, as their performance with the multisensory incongruent stimuli was substantially poorer than with unimodal vocalizations. In fact, this finding provides support for the idea that face stimuli hold atypically high salience for individuals with WS (Mervis et al., 2003; Järvinen-Pasley et al., 2008; Riby & Hancock, 2008, 2009), as their responses suggest that they were unable to suppress the emotional information supplied by the accompanying face. These findings have important implications for understanding the atypical emotion processing profile associated with WS: in everyday life, emotional displays are rarely expressed by the face alone, and if critical information supplied by voice is not given sufficient attention, overall emotion understanding would indeed be expected to be compromised. This deficit in auditory social processing may thus contribute to the widely reported impairments in individuals with WS, ranging from basic emotion perception and unusual empathic responses to mental state reasoning (e.g., Gagliardi et al., 2003; Plesa Skwerer et al., 2006; Tager-Flusberg & Sullivan, 2000; Hanley, Riby, Caswell, Rooney, & Back, 2013).

The superior performance of the TD participants with the multisensory incongruent angry as compared to the unimodal auditory angry stimuli may be due to the multisensory context “highlighting” the auditory information better, or bringing it more strongly to the forefront of participants’ attention. For example, if an angry vocalization is accompanied by a happy face, the participants are immediately alerted by the clear conflict in emotions provided by the different modalities, which may result in them making more careful judgments of the emotional content of the auditory information than when the auditory information is presented in isolation. An inspection of error patterns confirmed that all errors in the identification of angry voices pertained to confusion with fear. By contrast, in the unimodal auditory paradigm, no “supporting” or “conflicting” emotional information was provided in another sensory modality, thus making the task somewhat more demanding. As discussed above, for individuals with WS, the fact that performance was somewhat higher with the unimodal angry as compared with the multisensory incongruent angry stimuli suggests that the presence of a face in the multisensory context interfered with their processing of vocal information, while the opposite effect was observed in the TD group.

The current pattern of results fails to provide evidence of the “optimal” effect of emotion decoding, whereby audiovisual congruent information results in more accurate (and faster) emotion processing than that provided by either sensory modality alone (De Gelder & Vroomen, 2000), globally for either group of participants, as performance across multimodal congruent, unimodal visual, and auditory did not noticeably differ for fearful and happy stimuli. The TD participants only demonstrated this effect for the angry stimuli, as performance with the multimodal congruent stimuli exceeded that observed in the unimodal angry conditions. However, ceiling level performance particularly in the TD group may have masked both between-group differences as well as those relating to different experimental conditions within groups. For the WS group, overall performance level was higher with unimodal as compared to multimodal stimuli, and clear deficits were apparent in vocal emotion processing. The TD individuals, by contrast, showed a modest enhancement in affect identification performance in multimodal versus unimodal contexts; however, difficulties were almost entirely limited to the identification of angry stimuli, with high levels of performance with fearful and happy stimuli.

Recent neuroimaging investigations in normative development have mapped out brain regions that are specifically implicated in audiovisual integration of affective information implicating the STS over the right hemisphere (Kreifelts et al., 2009; Szycik et al., 2008; Wright et al., 2003), and interactions between the STS and the FFA (Kreifelts et al., 2007; Muller et al., 2012; Blank et al., 2011) and the amygdala (Muller et al., 2012; Jansma et al., 2014). The neurobiological literature on WS has identified widespread abnormalities in these structures. For example, in an MRI study, individuals with WS relative to age-matched TD controls displayed significantly reduced volumes of the right and left superior temporal gyrus (STG), an absence of the typical left>right STG asymmetry, as well as a lack of the typical positive correlation between verbal ability and the left STG volume (Sampaio, Sousa, Férnandez, Vasconcelos, Shenton, & Gonçalves, 2008). However, evidence with respect to STG volumes in individuals with WS relative to healthy controls is inconsistent (see e.g., Reiss et al., 2000). Another study employing fMRI to examine neural correlates of auditory processing reported significantly reduced activation bilaterally in STS in participants with WS relative to TD controls (Levitin et al., 2003). Further, in line with the preoccupation with face stimuli associated with WS, a recent fMRI investigation reported evidence of over two-fold absolute volume increase of the FFA in individuals with WS relative to TD participants, with the functional volume correlating positively with face processing accuracy (Golarai et al., 2010). While this study failed to find differences in the amplitude of responses of the amygdala, STS, and FFA between the WS and TD groups, increased FFA response to faces has also been reported in WS relative to TD participants (Paul et al., 2009). 
It has been postulated that the disproportionately large FFA in WS may reflect abnormally rapid specialization and development of the face sensitive regions of the FFA due to the robust attentional bias toward such stimuli beginning in early childhood (Haas & Reiss, 2012). Studies have also reported altered connectivity between the fusiform cortex and amygdala in WS (Sarpal et al., 2008; Haas et al., 2010). Taken together with the current behavioral data, this evidence suggests that multisensory emotion integration in individuals with WS may be supported by both structural and functional abnormalities of the key brain regions implicated in such processes in neurotypical individuals. Further research is thus warranted to clarify the exact neurobiological correlates of multimodal emotion integration in individuals with WS.

In line with extant literature (e.g., Gagliardi et al., 2003; Plesa Skwerer et al., 2005, 2006), a further behavioral result indicated a global deficit in individuals with WS in processing negative social-emotional expressions (both fearful and angry) as compared to their TD counterparts, while no between-group differences emerged in processing happy expressions. Performance patterns across the various experimental conditions, however, suggested that this largely resulted from a specific detriment in processing angry expressions. In the ANS analyses, the data revealed contrasting, emotion-specific EDA responsivity patterns in individuals with WS and TD within the visual domain. Participants with WS exhibited the least relative decrease in arousal, as indexed by the EDA, to happy stimuli and the greatest relative decrease in arousal to fearful stimuli, implicating greater arousal in response to happy as compared to fearful stimuli. The TD individuals, by contrast, demonstrated the highest relative arousal in response to fearful stimuli and the lowest arousal to happy stimuli. These results were significant both within and between subjects. This pattern is consistent with previous fMRI studies, which showed that individuals with WS demonstrate diminished amygdala and OFC activation in response to negative facial expressions relative to TD controls (Meyer-Lindenberg et al., 2005). Additionally, combined event-related potentials (ERP) and fMRI data indicate that neural activity to negative face stimuli is reduced in WS, while brain responses to happy expressions are increased, relative to TD controls (Haas, Mills, Yam, Hoeft, Bellugi, & Reiss, 2009).
It may thus be that “over-vigilance” of the amygdala and other brain regions linked to emotion processing of positive affect supports the competent behavioral performance of individuals with WS (e.g., Gagliardi et al., 2003; Plesa Skwerer et al., 2006), which may also serve to enhance the perceptual salience of positive stimuli (Dodd & Porter, 2010). Moreover, positive affective stimuli are more socially engaging than those of negative valence, as they promote approach-related behaviors (Porges, 2007). The current finding that individuals with WS uniquely showed the highest relative arousal in response to happy faces is further consistent with these postulations. Taken together with the neurobiological data, this evidence raises the possibility that the valence-specific neural activation patterns observed in individuals with WS also manifest at the level of autonomic arousal, and may thus be a deeply ingrained feature of the WS social profile.

The experimental groups exhibited similar EDA in response to angry visual stimuli, despite the substantially different behavioral identification performance. However, while the participants with WS showed attenuated arousal to both classes of negative stimuli, i.e., fearful and angry, those with TD showed this pattern only for the angry visual stimuli. By contrast, the TD group exhibited the highest increases in arousal in response to angry vocalizations of all auditory stimuli, suggesting that fearful images and angry voices were perceived as most emotionally charged and arousing. Individuals with WS, conversely, showed the lowest arousal in response to the angry vocalizations and fearful facial expressions in the visual domain, and the highest relative EDA to happy expressions across the visual and auditory domains. This pattern is in agreement with previous behavioral reports of “positive affective bias” in WS (Dodd & Porter, 2010) as well as combined ERP and fMRI evidence that highlights significantly increased neural activity in individuals with WS relative to TD controls to happy faces (Haas et al., 2009), combined with a neglect of negatively valenced information (e.g., Meyer-Lindenberg et al., 2005; Santos et al., 2010). The decreased autonomic sensitivity to threatening and angry social information may represent an autonomic correlate for the general hyposensitivity to socially relevant fear and threat, and associated amygdala hypoactivation, which have been well established as some of the distinguishing features of WS (Meyer-Lindenberg et al., 2005). The lack of arousal may thus correspond to neural hypoactivation, and potentially behavioral neglect of the stimuli. This interesting initial finding warrants further research with fine-grained analysis techniques of ANS measures into fear and threat perception in WS, which is a landmark characteristic of the syndrome.
As such, this line of work may have implications for understanding the mechanisms underlying the increased approach behavior characteristic of WS and, moreover, for potential intervention techniques, as this behavior predisposes individuals with WS to social vulnerability (cf. Jawaid et al., 2011).

At the level of psychophysiology, an additional result indicated significantly increased autonomic arousal in response to auditory as compared to visual stimuli in TD, implicating hypoarousal to human affective vocalizations in individuals with WS, while overall EDA responses to visual stimuli were indistinguishable between groups. The finding from the auditory domain may further be reflected at the behavioral level as compromised recognition of these stimuli by individuals with WS. The lack of arousal to human voices may further contribute to the deficits in prosodic processing that have been reported for the WS population (Catterall et al., 2006; Plesa Skwerer et al., 2006), particularly for negatively valenced utterances. Clues for the possibility that altered neurobiological functions may support the processing of human vocalizations were provided by a dichotic listening study (Järvinen-Pasley et al., 2010a), in which aberrant hemispheric organization in participants with WS relative to TD individuals was found for negative, but not for positive, vocalizations. While there are no known fMRI studies directly targeting the neurobiological substrates of processing of vocal stimuli in WS, literature on general auditory processing in WS nevertheless suggests widespread brain abnormalities (see Eckert et al., 2006; Gothelf et al., 2008). Interestingly, Holinger et al. (2005) reported an abnormally sizeable layer of neurons in a region of the auditory cortex receiving projections from the amygdala in individuals with WS, and suggested that the auditory cortex may be more densely connected to the limbic system than typical in WS, which may be linked to heightened emotional reactivity to specific auditory material. The current finding of hyporesponsive EDA in response to vocalizations in individuals with WS is at odds with this result; it is possible, though, that the neurons are responsive to specific classes of auditory stimuli only, such as music.
It is also possible that the main role of the amygdala involves connoting emotional significance to auditory experience during the encoding phase. Given that the accurate decoding of human vocalizations is fundamental for being able to adequately navigate the social world, future studies are warranted to probe the neurobiological underpinnings of human voice processing in individuals with WS.

The current study failed to reveal significant between-group effects based on cardiac indices of ANS activity. Previously, Plesa Skwerer et al. (2009) reported increased HR deceleration in individuals with WS relative to those without the condition in response to dynamic emotional face stimuli, thought to index enhanced interest in such information. The lack of such a finding in the current study may stem from differences in stimuli between the studies, such as the current study employing static images instead of dynamic ones, as well as differences in data analytic techniques. Another study by Järvinen et al. (2012) used static facial expressions together with affective non-verbal vocalizations, and within the visual domain, the results indicated that the WS group relative to the TD participants showed increased HR reactivity and a non-habituating EDA pattern, reflecting increased interest in faces. Within the auditory domain, the WS group showed increased interest in human vocal stimuli as compared to music. However, as this study specifically compared ANS responses across social and non-social domains in WS, the lack of such effects in the current study may reflect differences between the paradigms. The current findings indicating valence-specific autonomic response patterns to visual and auditory social stimuli in WS may have been specifically afforded by the multi-dimensional experimental design that solely focused on social processing across different sensory modalities, and that further presented the face and voice stimuli as separate blocks. This may have enabled more robust autonomic effects to be formed and measured specifically in response to these types of stimuli, without the potential confounding or interfering effect of, e.g., non-social stimuli.
A major difficulty in attempting to consolidate findings across studies of ANS function in WS concerns the rarity of such investigations, as well as wide differences in methodologies and paradigms employed (see Järvinen & Bellugi, 2013, for discussion).

For the WS population, the pattern of autonomic responses and social-perceptual capacity were related to their level of social functioning as measured by the SRS. The results from the SRS were largely in agreement with the current literature (Klein-Tasman et al., 2011; van der Fluit et al., 2012; Riby et al., 2014). In the present sample, the population mean for Social Motivation was within the normative range, and that for Social Awareness narrowly missed the normative cut-off score. The most severe impairments pertained to the Social Cognition and Autistic Mannerisms domains. When these data were considered together with autonomic responsivity data, more normal social functioning as indexed by Social Motivation and Social Communication was associated with higher EDA responses to emotional face stimuli in general, and to happy facial expressions, respectively. In the context of previous reports of hypoarousal in response to social stimuli in individuals with WS (Plesa Skwerer et al., 2009; Doherty-Sneddon et al., 2009), this pattern may suggest that when autonomic responses of individuals with WS accelerate to a more normal level, social functioning is also improved (cf. Mathersul, McDonald, & Rusby, 2013). Namely, individuals with WS who demonstrate higher autonomic sensitivity to others’ facial expressions of emotion may also exhibit less inappropriate social-affiliative behavior including better inhibition of approach, less social anxiety, and more normal empathic responses. The other significant finding, indicating an association between higher autonomic arousal to happy facial expressions and more normal Social Communication as indexed by the SRS, may suggest that a greater sensitivity to others’ happy facial expressions corresponds to more appropriate and/or sensitive expressive social behaviors. Further investigations should explore the possibility that this result may represent an ANS marker for social communicative capacity in WS.