Abstract

Medication mishaps are a common cause of morbidity and mortality both within and outside of hospitals. While a variety of technologies and techniques have promised to improve these statistics, they have not eliminated errors; new ones have appeared as quickly as old ones have been addressed. To truly improve safety across the entire enterprise, we must create a culture that is willing to accept that errors occur in the normal course of operation, even to the best of people. The focus must not be on punishment and shame, but rather on building a fault-tolerant system that maintains the safety of both staff and patients.

Keywords: Patient safety, Culture of safety, Medication error, Complexity, System design

Introduction

Despite years of attention and focus, the healthcare system remains an odd combination of near miraculous cures and unbelievable harms. As a whole, we have yet to achieve a consistent level of harm-free care for our patients, and in few areas is safety as difficult to assure as in the administration of medications. Too often it takes acts of individual heroism to keep patients from harm, and when those individuals inevitably fail, we blame them for their acts of apparent inattention. As medications proliferate and patients become increasingly dependent upon a variety of medications, we need to find opportunities beyond individual competence and diligence to keep our patients safe. The purpose of this article is to discuss the importance of culture in improving the safe delivery of care, particularly the high-risk activity of medication administration.

High Reliability and Culture

While few systems are comparable to healthcare, lessons may be learned from industries that are recognized as high reliability organizations (HROs). These are organizations that, despite ample opportunity for failure, have lower rates of accidents than expected given the complexity of their day-to-day activities. The best recognized HRO is the airline industry, and while it is obvious that a hospital is not an airplane, research has found that all HROs have five things in common: preoccupation with failure, reluctance to simplify, sensitivity to operations, commitment to resilience, and deference to expertise [1]. While no exact roadmap exists for becoming an HRO, organizational culture, specifically a culture focused intently on safety, plays a major role.

Culture of Safety

A safe culture is one in which those in charge are not only willing to hear bad news but also welcome that news as an opportunity to prevent or mitigate potential harms. Developing such a culture is essential to improve both the safety and the quality of care delivery [2, 3]. A culture of safety is comprised of several elements, some of which are discussed below in greater detail.

Just Culture

A Just Culture recognizes that even the most committed and intelligent individuals make mistakes and acknowledges that these same professionals may develop unhealthy norms such as shortcuts, rule violations, or workarounds. However, this culture has no tolerance for behaviors that repeatedly or purposefully violate the policies and procedures put in place to maintain the safety of patients [4]. When an error, close call, or adverse event occurs, rather than asking “who is to blame,” a Just Culture asks “why or how did this happen.” Human error can be viewed in one of two ways: (1) it is the fault of the people working in the system (disregard for procedure or policy, carelessness) or (2) it is a symptom of a system that has latent vulnerabilities dispersed throughout. Latent vulnerabilities are faults created by policies, directives, equipment, and/or decisions, many of which may be far removed from the immediate patient care setting where the actual error plays out. These latent failures typically have their genesis at higher levels within the organization, and they may be difficult to see until the moment when they combine with active failures (a tired provider, a sound-alike medication, etc.) and triggering factors to overwhelm the barriers put in place to protect a patient. The result is a near miss or actual harm. Examples of latent failures that may impact the safe delivery of medications include high patient volumes resulting in cognitive overload, similar medication names, multiple interruptions during medication ordering or administration, limited (often inaccurate) information on current patient medications, the proliferation of new medications, inadequate staffing, frequent overtime or long shifts, complex medical equipment, limited standardization of medical equipment, and confusing, poorly designed computer interfaces, among others.

If we assume that healthcare providers do not come to work with the intent to cause harm, then it is important to move beyond blaming the individual and instead look for the system vulnerabilities (latent errors) that contribute to adverse events. HROs do not look for the simple or obvious cause when considering adverse events; they assiduously dig beyond the obvious to find latent problems within the system that if uncorrected will almost certainly cause a recurrent or similar event in the future. Punishing the individual involved in the event may be emotionally rewarding and feel justified, particularly if a patient is grievously harmed, but it will not identify the many underlying factors and vulnerabilities that contributed to the harm. Further, other providers who witness punitive actions will be unlikely to disclose their own errors or near misses. Opportunities to learn from and deeply understand failures, core principles of HROs, will be lost. Put simply, it is inappropriate and unhelpful to blame people who find themselves at the end of a series of events, most of which are beyond their control, that make it so very easy to err.

While a Just Culture does not punish people for making mistakes or speaking up, it does have clearly stated accountability principles. Individuals who purposefully violate policies that are intended to protect patients should be counseled and disciplined appropriately. Further, accountability in a Just Culture expects managers and supervisors to address problem employees before they make an error that harms a patient, rather than waiting to act until the predictable error has occurred. Failure to appropriately hold people accountable for reckless behavior will harm morale in a manner similar to punishing people for making honest errors.

Before leaving this topic, it is appropriate to question whether we can ever fully understand the intention of individuals after an adverse event has occurred. When reviewing an error that has harmed a patient, it is almost impossible to avoid hindsight bias. All the actions that an individual should have taken are clear when looking backwards from a disastrous outcome. From this vantage point, it can seem as though some of the past actions and choices (now known to have been wrong) rise to the level of a willful disregard for the safety of the patient. But such confident leaps should be approached cautiously. Catastrophic failures are not necessarily caused by random and independent events, human error, and intentional choices. Rather, they often result from a systematic migration of organizational behaviors that are the result of operating in under-resourced environments where cutting corners and developing workarounds that appear to improve efficiency are not only valued but also openly celebrated. The more success that is attained at this limit of safe operating capacity, the more accepted (and even expected) it becomes until a tragedy occurs. This is called the normalization of deviance and is very difficult to detect as it is developing. Only in hindsight does it become abundantly clear that limits were being pushed well beyond what was safe. And when all these events conspire together to cause the now clearly predictable harm, there is a single individual seemingly at fault.

Engaged Leadership

Leadership is the driving force behind a safety culture, and senior leaders have the responsibility as well as the authority to make safety a priority. It is critical for leadership to make safety part of the daily discussion and a center point of major meetings and strategy sessions [5]. Frontline staff will only believe that safety is important to the organization’s senior leadership if those leaders visit the departments where the work occurs and engage directly with the staff about what they see and what concerns them. This allows for a cyclical flow of information [2], from staff to leaders and back. Leaders must make clear their expectation that the safety of patients is the highest priority and show support for directives and policies that enhance safety (time outs, checklists, follow-through on actions from root cause analysis, daily safety huddles, etc.). It is also important for leadership to support the transparent reporting of adverse events to impacted patients and families. Finally, only through the unwavering support of non-punitive responses to those who make mistakes or who raise concerns about the safe care of patients will leaders be able to make frontline staff believe that it is safe to report their own errors and concerns.

Understanding Complexity and Improving the Environment of Care

Healthcare is complex. This means that it consists of a multitude of interdependent and diverse components that adapt to changes in the environment. This is distinct from complicated systems, which are not able to adapt to change. As an example, your television is a complicated device with many interdependent parts that together yield a delightful visual experience. However, if you unplug it from the wall, it cannot adapt to restore its power source. The hospital, on the other hand, has innumerable interdependent parts, but if an area alters its staffing for a few hours during lunch breaks, it does not shut down; it adapts. Sometimes those adaptations work just fine. Other times, the workarounds or methods devised to make up for the shortfall combine in unexpected ways, causing harm that is very difficult to understand or predict. If new technology is introduced, it may enhance care in several areas but impact other areas in unexpected ways. This means that even the best-intended changes in people, technologies, or policies can ripple through the system and manifest in unexpected ways. At times, the unintended ripples interact in beneficial ways, yet in other instances, the interactions cause terrible harms. If luck is with us, the unexpected adaptation will be visible and easily fixed. But in other instances, the new vulnerability lurks just below the surface, waiting for the moment when it aligns with other vulnerabilities and together they result in an adverse event that harms a patient. If one understands that the components of the healthcare system will interact and adapt as change is introduced into the environment, then it should be clear that a culture that values the reporting of errors, concerns, risks, or near misses is imperative to prevent or mitigate harms that may emerge in this system. The only way to identify harms that may be emerging is to have an organization that is willing to discuss and face its problems. This is why the Just Culture is such a critical part of any complex system. If we have any hope of catching these vulnerabilities before they harm a patient, we must create a vigilant staff that is “focused intently on failure” and a leadership that is willing to hear about the errors and near misses so that the system can be redesigned, organizational learning can be achieved, and safety improved.

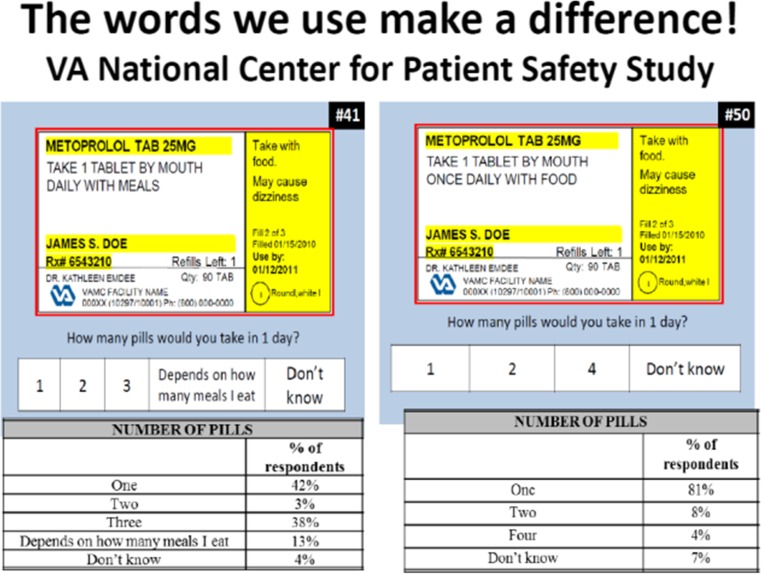

If individuals are punished for errors, it may seem that a problem has been solved, but organizational failures almost never have a single cause. Unless the less obvious latent errors that contributed to the problem are discovered, the risk of recurrent harm remains. Further, when individuals see others punished for making errors, they are unlikely to report their own errors or near misses. When these types of events occur, they should be treated not as occasions for punishment but as a moment to make amends to the patient (if needed) and to learn from the event. Tools such as root cause analysis (RCA) may be used to more fully understand and trace back the system vulnerabilities that may have contributed to the event. Other tools such as Healthcare Failure Mode and Effect Analysis (HFMEA) offer the opportunity to assess for system vulnerabilities in advance of a close call or adverse event. Each of these tools offers the possibility to recognize contributors to harm and to use this recognition to improve the environment of care. In the case of the RCA, the lessons learned are retrospective but can still be used to better understand why an error occurred and to develop actions that may decrease the likelihood of recurrence. In both the RCA and the HFMEA, the goal is to recognize system vulnerabilities and put into place defenses that may prevent or mitigate harms from reaching the patient. Such improvements should be informed by human factors techniques when possible, so that the system is designed with a greater understanding of how humans interface with the surrounding environment, team members, and technologies. Well-designed environments and technologies make it easier to do the right thing, for both patients and providers. An example of improved medication safety has been the use of computerized order entry that removed medication abbreviations from physician orders. This has decreased many medication mix-ups that had resulted from commonly used medication abbreviations [6]. However, it is worth noting that in complex systems, a new technology that helps reduce errors in one domain may cause new types of errors in other domains. For patients, changing the way medication labels are designed can help them better understand how they are supposed to take their medications (see Fig. 1).

Fig. 1.

Study showing improvement in understanding of prescription labels once the wording of the prescription is changed to make it clear that the patient should take only one pill per day

While such redesigns of systems present distinct challenges and are not always possible, these types of solutions should be the goal. Interventions such as remedial training programs, letters of reprimand, or firing people will not improve safety over the long term.

Approaches to Improving Patient Safety in the Delivery of Medications

Make a discussion of safety and quality a part of major meetings.

Commit to a Just Culture where adverse events and near misses are freely discussed without punishment and accountability processes are clearly defined.

Focus on improving the environment of care using human factors approaches to make it easier to do the right thing.

Complexity is the enemy of safety—focus on ways to minimize unneeded process steps.

Reduce reliance on memory—use checklists, algorithms, and easy access to current references.

Minimize interruptions.

Standardize processes for medication delivery when appropriate.

Be familiar with The Joint Commission National Patient Safety Goals for 2015 and develop strategies to address those relevant to medication safety [7].

Conclusion

Despite years of effort and focus on improving safety and quality, the healthcare system still has far to go. It seems fair to say that what we have done in the past is unlikely to get us where we need to be. The model of the physician offering something unique for each patient has taken us as far as it can, and in an increasingly complex world, we need a new model in which the patient can depend on a highly functional team of individuals willing to admit errors and discuss problems before they reach patients.

Acknowledgments

Sources of Funding

None.

References

- 1. Wolk S, Paull DE, Mazzia LM, DeLeeuw LD, Paige JT, Chauvin SW, et al. Simulation-based team training for staff. Irvine: Association of VA Surgeons; 2011.

- 2. Frankel AS, Leonard MW, Denham CR. Fair and just culture, team behavior, and leadership engagement: the tools to achieve high reliability. Health Serv Res. 2006;41:1690–709. doi: 10.1111/j.1475-6773.2006.00572.x.

- 3. Weick KE, Sutcliffe KM. Managing the unexpected: assuring high performance in an age of complexity. San Francisco: Jossey-Bass; 2001.

- 4. Reason J. Achieving a safe culture: theory and practice. Work Stress Int J Work, Health Organ. 1998;12:293–306.

- 5. Botwinick L, Bisognano M, Harden C. Leadership guide to patient safety. IHI innovation. Cambridge: Institute for Healthcare Improvement; 2006.

- 6. Radley DC, Wasserman MR, Olsho LE, Shoemaker SJ, Spranca MD, Bradshaw B. Reduction in medication errors in hospitals due to adoption of computerized provider order entry systems. J Am Med Inform Assoc. 2013;00:1–7. doi: 10.1136/amiajnl-2012-001241.

- 7. Vincent C, Burnett S, Carthey J. The measurement and monitoring of safety. Health Foundation 2013. Available at http://www.health.org.uk/publications/the-measurement-and-monitoring-of-safety/.