Abstract

High reliability organizations (HROs), such as the aviation industry, successfully engage in high-risk endeavors and have a low incidence of adverse events. HROs have a preoccupation with failure and errors. They analyze each event to effect system-wide change in an attempt to mitigate the occurrence of similar errors. The healthcare industry can adapt HRO practices, specifically with regard to teamwork and communication. Crew resource management concepts can be adapted to healthcare with the use of certain tools, such as checklists and the sterile cockpit, to reduce medication errors. HROs also use the Swiss Cheese Model to evaluate risk and look for vulnerabilities in multiple protective barriers, instead of focusing on one failure. This model can be used in medication safety to evaluate medication management in addition to using the teamwork and communication tools of HROs.

Keywords: High reliability organization, Crew resource management, Patient safety, Checklist, Sterile cockpit

Introduction

In 2000, the Institute of Medicine (IOM) began publishing a series of reports on quality in healthcare that ignited public interest in the problem of medical safety [1–3]. Four major points were made: errors are common and costly, systems cause errors, errors can be prevented and safety can be improved, and medication-related adverse events are the single leading cause of injury [2]. An epidemiological review conservatively estimates that at least 1.5 million Americans are injured by medication errors every year [3]. On average, every hospitalized patient is subjected to at least one medication error every day, and medication errors were estimated to have accounted for over 7000 deaths in 1993 [1, 3]. The estimated annual cost of treating the consequences of medication errors is US$3.5 billion, which does not include lost wages and worker productivity. These reports suggest that a chasm lies between the healthcare that exists and the healthcare that is possible, and offer a road map to traverse this chasm with the premise that keeping patients safe from harm cannot depend on human perfection [1]. Additionally, the path to achieving highly safe operations needs to take human fallibility into account when people are trained, systems are designed, and organizations are managed [4–6]. One recommendation from the Institute of Medicine’s Committee on Quality of Health Care in America is to adapt proven concepts and tools from high reliability organizations, including crew resource management and simulations [1].

High Reliability

Pacific Gas and Electric Company’s electrical distribution system, the United States Navy nuclear aircraft carriers USS Carl Vinson and USS Enterprise, and the Federal Aviation Administration Air Traffic Control are three organizations that have been specifically studied for their impressive ability to maintain reliability, performance, and safety simultaneously at extremely high levels. Like healthcare organizations, they depend on, and place an extremely high degree of responsibility on, the individual and collective skills of human operators [7]. These organizations have come to be known as “high reliability” organizations (HRO). “High reliability” is based on the response to the question “How many times could this operation [whatever you are doing] have failed with catastrophic results that it did not fail?” [8]. If the answer is “repeatedly,” the organization qualifies for membership in the “high reliability” group. In other words, “high reliability” means successfully engaging in high-risk endeavors with a low incidence of adverse events.

What are some distinguishing qualities of HRO? How do HRO maintain membership in the “high reliability” group? What do HRO do with errors, near failures, and failures? Processes in HRO are distinctive; people function as a collective and focus on certain practices (Table 1) [6]. One of these practices is preoccupation with failure or the possibility of failure. Because failure is an extremely rare event in the operations of HRO, there are very limited opportunities to learn from trial and error. Thus, they have to be ever vigilant for errors, possible failures, and actual failures so that they are prepared, or flexible enough to adapt, when a crisis or catastrophe occurs. HRO view errors, however unrelated, as having a cumulative effect that will culminate in a system failure. In addition, dysfunctional responses to success (e.g., restricted search, reduced attention, and complacency) are defined as failures. Consequently, errors and failures are high-value opportunities for HRO to effect system-wide reform. Every failure and near failure is analyzed. People are encouraged and rewarded for reporting errors. For example, Wernher von Braun, father of the US space program, sent a bottle of champagne to an engineer who, when a Redstone missile went out of control, reported that he might have caused a short circuit during pre-launch testing. Investigation into this incident revealed that this was indeed the case, which meant that an expensive redesign was avoided. In another example, a navy seaman on a nuclear aircraft carrier reported the loss of a tool on the flight deck, and all aircraft were diverted to land bases until the tool was found; the seaman was commended for his actions the next day at a formal deck ceremony. In most organizations, such a culture would not exist, and such admissions would have received a very different response.

Table 1.

Collective mindfulness

| Practice |
|---|
| Preoccupation with failure |
| Reluctance to simplify interpretations |
| Sensitivity to operations |
| Resilience |
| Deference to expertise |

Is the HRO culture comparable to the culture in healthcare (Table 2)? The aviation industry, with its impressive safety record, is considered to be a high reliability organization. One emphasis in the aviation industry is on “team,” and team members usually address each other by their first names. In healthcare, there are traditionally “hierarchical” relationships (e.g., medical students, residents, and attendings). It would be highly unlikely for a medical student to be on a first-name basis with an attending physician. The aviation industry is sensitive to human factors such as stress, fatigue, and sleep deprivation, and there are regulations that govern such things as duty time (i.e., hours off between duty periods) and mitigation of distractions while working on critical tasks. In healthcare, there is human factor awareness; however, human factors are often pushed to their limits. It is not uncommon to miss meals, to work while fatigued or while multitasking heavily, and to be subject to distractions or interruptions when performing critical tasks. In the aviation industry, the captain and first officer are responsible for the safe operation of an airplane and are focused only on those activities that will result in its safe operation. For example, the captain and first officer will not engage in any aspect of food service during a flight. In contrast, healthcare providers engage in an inordinate amount of non-core work and work outside of their training.

Table 2.

Culture

| Aviation | Health Care |
|---|---|
| “Team” emphasis | “Hierarchical” relationships |
| Human factor sensitivity | Human factors emphasized? |
| Regulatory protection | Regulatory protection (some) |
| Core work | Non-core work |
| “Indemnity” reporting | Fear/shame reporting errors |

The emphasis in the HRO culture is on “team.” What is the quality of teamwork in healthcare? Collaboration and communication have been shown to correlate with how well people work together. Two studies, one in a medical intensive care unit and the other in an operating theatre, looked at how doctors and nurses perceive the quality of collaboration and communication within and outside their own discipline [9, 10]. Both studies found that most nurses do not perceive a high quality of collaboration and communication between nurses and doctors. Does this translate to patient safety? Among the root causes of sentinel events reported to the Joint Commission over the past 5 years, “communication” has consistently been one of the top four [11]. The others were leadership, human factors, and assessment (e.g., care decisions).

Crew Resource Management

In 1979, NASA offered a workshop, “Resource Management on the Flight Deck,” based on its review and analysis of more than 60 jet transport accidents in which resource management problems played a significant role [12]. The majority of air crashes were related to failures in effective management of available resources (e.g., communication, decision-making, leadership, and human factors) by the flight-deck crew. It was reasoned that pilot error could be reduced by managing human errors through better use of human resources on the flight deck. Originally, this was called “Cockpit” Resource Management; it was changed to “Crew” Resource Management (CRM) when it was realized that everyone on the team, the entire flight crew, may have important information to contribute. It is not surprising that the same problems that contributed to air crashes 35 years ago (i.e., failures in effective communication, decision-making, leadership, and human factors) are the four leading root causes of sentinel events in health care today [11].

Because clinicians are not pilots and patients are not aircraft, CRM concepts and tools cannot be translated directly into clinical reality. They have to be modified and adapted to each clinical practice environment. The paradigm is to reduce patient risk and enhance patient safety. Some CRM tools that can be adapted to healthcare are listed in Table 3. For example, effective leadership and followership (i.e., being an effective team member) are important aspects of both CRM and the health care environment.

Table 3.

CRM tools for clinical practice

| CRM tool |
|---|
| Leadership - Followership |
| Briefings/Debriefings |
| Situational awareness |
| Workload management |
| Team monitoring |
| Cross check |
| Fatigue awareness |
| Managing technology |
| Standardization |
| Recurrent training and competency practice |
| Error management |
| Sterile cockpit |
| Read back |
| Verify actions |

Error Management

An underlying premise of CRM is that human error is ubiquitous and inevitable, and it is critical to embrace the fact that errors will occur. CRM can be used as a set of error countermeasures with three lines of defense, termed the error management troika (Fig. 1) [13]. The first line of defense is avoidance of error. The second line of defense is trapping incipient errors, and the third line of defense is mitigating adverse consequences of committed errors. One application of this error management troika is in medication safety. The goals, or “Rights,” of medication use are the right patient, drug, time, dose, route, indication/reason, response, and documentation. So, what CRM tools or concepts can be adapted to improve medication safety?

Fig. 1.

Error management troika

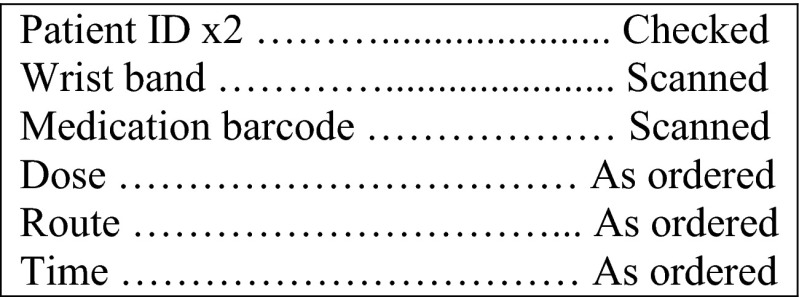

Both “checklist” and “sterile cockpit” have been used to help mitigate medication errors [14, 15]. A “Read and Verify” checklist is designed for “routine tasks” (e.g., medication administration) that are usually done from memory; the person then goes back to the checklist to see if anything was missed. An example of a “Read and Verify” checklist for administering medication appears in Fig. 2. The principle of “sterile cockpit” is a rule (14 CFR 121.542) mandated by the Federal Aviation Administration that applies to all flight operations below 10,000 ft: “No flight crewmember may engage in, nor may any pilot in command permit, any activity during a critical phase of flight which could distract any flight crewmember from the performance of his or her duties or which could interfere in any way with the proper conduct of those duties [16].” This rule also provides that flight crewmembers may not be required to perform “any duties during a critical phase of flight except those duties required for the safe operation of the aircraft.”

Medication administration is a high-frequency activity performed by nurses in every clinical setting of care and is associated with great risks to patients. Safe and effective administration of medications is a cornerstone of nursing practice. Medication administration is a complex process that requires multiple clinical judgments, professional vigilance, and critical thinking, while remaining alert to prescribing or dispensing errors. Nurses are vulnerable to distractions and interruptions that affect their working memory and their ability to focus during medication administration, which contributes to medication errors. Applying the “sterile cockpit” principle during medication rounds limits distractions and interruptions when critical tasks are being performed. Strategies that have been shown to reduce nurses’ distractions and improve focus during medication administration include visible signage in critical areas of medication rounds (such as “Do Not Disturb” signs), cordoned-off areas to signify that interruptions are not permitted within set boundaries, and the wearing of colored tabards or vests [14, 15, 17, 18].

Fig. 2.

“Read and Verify” checklist for administering medication

“Read back” and “verify actions” are two tools that can be adapted to trap incipient medication errors. For example, when a medication order is received, reading back the name, dose, and route of the medication to be administered will help confirm that the proper medication will be administered to a patient. A “read back” is particularly important when a verbal medication order is given or when medications are being ordered during a resuscitation. An example of “verify actions” is the administration of a high-risk medication (e.g., a chemotherapeutic agent), in which two people independently verify that the medication to be administered is the right medication, at the right dose, and is being administered at the right time, by the right route, for the right indication, to the right patient.
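The “verify actions” step described above can be sketched in code. This is only a minimal illustration, not a clinical tool: the `MedicationCheck` structure, the function names, and all example values are hypothetical, and the six fields simply mirror the items named in the paragraph (patient, medication, dose, route, time, indication).

```python
from dataclasses import dataclass, fields
from typing import Dict, List


@dataclass(frozen=True)
class MedicationCheck:
    """The six items named in the text, as read by one person (hypothetical structure)."""
    patient: str
    medication: str
    dose: str
    route: str
    time: str
    indication: str


def mismatches(order: MedicationCheck, reading: MedicationCheck) -> List[str]:
    """Return the names of the items on which a reading differs from the order."""
    return [
        f.name
        for f in fields(MedicationCheck)
        if getattr(order, f.name) != getattr(reading, f.name)
    ]


def verify_actions(
    order: MedicationCheck, first: MedicationCheck, second: MedicationCheck
) -> Dict[str, List[str]]:
    """Compare two independent readings against the order.

    Returns a dict keyed by verifier label listing the mismatched items;
    an empty dict means both verifiers agree with the order.
    """
    report: Dict[str, List[str]] = {}
    for label, reading in (("first", first), ("second", second)):
        diff = mismatches(order, reading)
        if diff:
            report[label] = diff
    return report


# Made-up example values, not clinical guidance.
order = MedicationCheck("patient-001", "drug-A", "10 mg", "IV", "08:00", "indication-X")
first_reading = order
second_reading = MedicationCheck("patient-001", "drug-A", "100 mg", "IV", "08:00", "indication-X")

report = verify_actions(order, first_reading, second_reading)
print(report or "both verifiers agree with the order")
```

In this made-up example the second verifier’s reading differs from the order on the dose, so the report flags that item and the error is trapped before administration. Each reading is compared against the order separately, rather than against the other reading, to reflect the independence of the two checks.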

The focus of an HRO is safe, reliable performance. The principles of HRO help people navigate toward mindful practices that encourage timely response to unexpected events. If an event does occur, then the person is prepared to work on recovery and minimize disruption from the event. In order to properly mitigate adverse consequences of committed medication errors, one has to know, anticipate, and prepare for adverse events associated with a medication, particularly high-risk medications. For example, one serious adverse event associated with antivenom therapy is acute anaphylaxis. Not knowing, not anticipating, or not preparing for acute anaphylaxis would be disastrous when antivenom is being administered to a patient. Another more common example is insulin. Not knowing that there are multiple formulations of insulin, not anticipating adverse events such as hypoglycemia and/or hypokalemia, and not preparing for management of such events could lead to permanent harm to a patient.

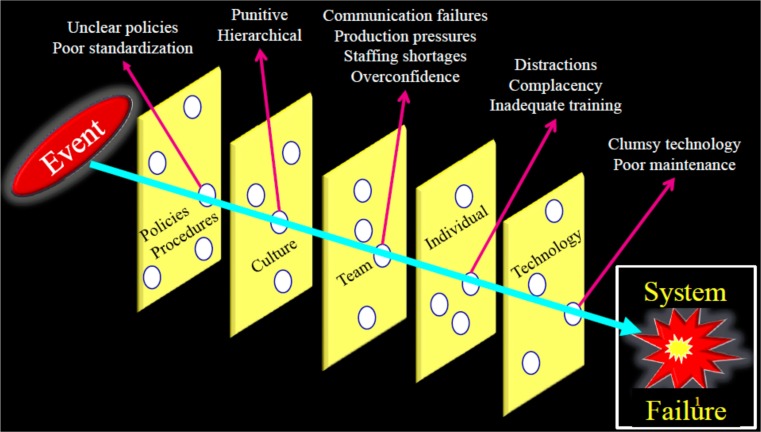

System Approach—System Reform

One distinguishing quality of HRO is how error or failure is managed. The Swiss Cheese Model is used in risk analysis and risk management of systems (Fig. 3) [19]. Each slice of Swiss cheese represents a protective barrier (e.g., policies, teamwork, and technology). These barriers help to prevent errors and help to keep patients safe. However, within each protective barrier, there are vulnerabilities (holes) that are continually opening, shutting, and shifting locations. For example, policies do not cover every contingency, distraction, or interruption that may occur when an individual is performing a critical task. Additionally, it is difficult to address different personalities with different agendas working on the same team, or a punitive culture that squelches opposing viewpoints. When there is momentary alignment of holes within the protective barriers, an event can pass through the aligned vulnerabilities and become a sentinel event, which is the result of a system failure. HRO take this high-value opportunity not just to focus on a proximal cause (usually an individual) or on a vulnerability within one protective barrier but to scrutinize all protective barriers and their respective vulnerabilities to effect system-wide reforms. This is a critical component in maintaining high reliability.

Fig. 3.

System Approach—System Reform
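The idea that an event gets through only when holes in every barrier line up can be illustrated with a small calculation. The sketch below rests on an assumption that is not part of the source: each barrier is treated as failing independently with a fixed probability, so the chance of an event passing all barriers is the product of those probabilities. Real barriers have holes that shift over time and may fail together, so this is a simplification. The function name and all numbers are hypothetical.

```python
import math
from typing import Sequence


def breach_probability(hole_probs: Sequence[float]) -> float:
    """Probability that an error passes every barrier.

    Assumes each barrier fails independently of the others, with
    hole_probs[i] the chance that barrier i fails to stop the error.
    """
    if not hole_probs:
        raise ValueError("at least one barrier is required")
    for p in hole_probs:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"probability out of range: {p}")
    return math.prod(hole_probs)


# Made-up numbers for three barriers (e.g., policies, teamwork, technology).
barriers = [0.10, 0.20, 0.05]
baseline = breach_probability(barriers)

# Halving the failure chance of one barrier halves the overall risk
# under the independence assumption.
improved = breach_probability([0.10, 0.10, 0.05])

print(f"baseline: {baseline:.4f}, after tightening one barrier: {improved:.4f}")
```

Under this assumption, every barrier contributes a factor to the overall risk, which is consistent with the text’s point that scrutinizing all barriers, rather than only the proximal cause, is what reduces the chance of a sentinel event.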

A medical toxicologist can aid in mapping out procedures for the use of high-alert/high-risk medications, determining where vulnerabilities could lie, and establishing mitigation strategies to ensure their safe use (e.g., how to enter orders in computerized provider order entry so that safety measures for look-alike/sound-alike medications are in place, and how to store such medications to minimize confusion). Some of these safety measures could be developed by drawing on the experience of medical toxicologists and their knowledge of adverse medication events.

Summary

In a series of publications on quality in healthcare, the IOM ignited public interest in the problem of healthcare safety. Medication-related adverse events are the single leading cause of injury. IOM recommended adapting proven concepts and tools from HRO to achieve highly safe operations in healthcare.

HRO are defined by their ability to successfully engage in high-risk endeavors with a low incidence of adverse events. Processes in HRO are distinctive; people function as a collective and focus on certain practices. These include a preoccupation with failure or the possibility of failure and an approach to managing errors and failures that encourages and rewards reporting them. CRM concepts and tools are part of the repertoire of HRO. They can be modified and adapted to healthcare, in particular CRM as error management. CRM can be used as a set of error countermeasures with three lines of defense, the error management troika (i.e., avoidance of error, trapping incipient errors, and mitigating adverse consequences of committed errors). An application of this error management troika is in medication safety, where “checklist” and “sterile cockpit” are used to help avoid medication errors, “read back” and “verify actions” are used to trap incipient medication errors, and “knowing, anticipating, and preparing” for adverse events associated with a medication will help mitigate adverse consequences of committed medication errors.

The Swiss Cheese Model is used in risk analysis and risk management of systems. HRO take a systematic approach when analyzing errors and failures and regard them as a reflection of system failures. HRO take these high-value opportunities to effect system-wide reforms, which is a critical component in maintaining high reliability.

Acknowledgments

Conflicts of Interest

The authors have no conflicts of interest to declare.

Contributor Information

Luke Yip, Phone: 240-855-6911, Email: medicaltoxicology@gmail.com.

Brenna Farmer, Phone: 917-710-3802, Email: brennafarmer@gmail.com.

References

- 1. Institute of Medicine. To err is human: building a safer health system. In: Kohn LT, Corrigan JM, Donaldson MS, editors. Committee on health care in America. Washington DC: National Academy Press; 2000.

- 2. Institute of Medicine. Crossing the quality chasm: a new health system for the 21st century. Washington DC: National Academy Press; 2001.

- 3. Institute of Medicine. Preventing medication errors: quality chasm series. In: Aspden P, Wolcott JA, Bootman JL, Cronenwett LR, editors. Committee on identifying and preventing medication errors. Washington DC: National Academy Press; 2006.

- 4. Roberts KH. Cultural characteristics of reliability enhancing organizations. J Manag Issues. 1993;5:165–181.

- 5. Reason J. Managing the risks of organizational accidents. Burlington, VT: Ashgate Publishing Co; 1997.

- 6. Weick KE, Sutcliffe KM, Obstfeld D. Organizing for high reliability: process of collective mindfulness. In: Sutton RS, Staw BM, editors. Research in organizational behavior. Stanford: JAI Press; 1999. pp. 81–123.

- 7. La Porte TR, Roberts K, Rochlin GI. Aircraft carrier operations at sea: The challenges of high reliability performance. No. IGS/OTP-1-88. California Univ Berkeley Inst of Governmental Studies; 1988.

- 8. Roberts KH. New challenges in organizational research: high reliability organizations. Ind Crisis Q. 1989;3:111–125.

- 9. Thomas EJ, Sexton JB, Helmreich RL. Discrepant attitudes about teamwork among critical care nurses and physicians. Crit Care Med. 2003;31:956–959. doi: 10.1097/01.CCM.0000056183.89175.76.

- 10. Makary MA, Sexton JB, Freischlag JA, et al. Operating room teamwork among physicians and nurses: teamwork in the eye of the beholder. J Am Coll Surg. 2006;202:746–752. doi: 10.1016/j.jamcollsurg.2006.01.017.

- 11. The Joint Commission. Sentinel Event Data Root Causes by Event Type 2004–2013. http://www.jointcommission.org/assets/1/18/Root_Causes_by_Event_Type_2004-2Q2013.pdf. Accessed 30 Jan 2015.

- 12. Cooper GE, White MD, Lauber JK, editors. Resource management on the flight deck. Proceedings of the NASA/Industry workshop. San Francisco, CA: NASA, June 26–28, 1979; NASA-CP-2120.

- 13. Helmreich RL, Merritt AC, Wilhelm JA. The evolution of crew resource management training in commercial aviation. Int J Aviat Psychol. 1999;9:19–32. doi: 10.1207/s15327108ijap0901_2.

- 14. Pape TM. Applying airline safety practices to medication administration. Med-Surg Nursing. 2003;12:77–93.

- 15. Fore AM, Sculli GL, Albee D, et al. Improving patient safety using the sterile cockpit principle during medication administration: a collaborative, unit-based project. J Nurs Manag. 2013;21:106–111. doi: 10.1111/j.1365-2834.2012.01410.x.

- 16. Federal Aviation Administration, DOT. § 121.542 Flight crewmember duties. http://www.gpo.gov/fdsys/pkg/CFR-2011-title14-vol3/pdf/CFR-2011-title14-vol3-sec121-542.pdf. Accessed 30 Jan 2015.

- 17. Pape TM, Guerra DM, Muzquiz M, et al. Innovative approaches to reducing nurses’ distractions during medication administration. J Contin Educ Nurs. 2005;36:108–116. doi: 10.3928/0022-0124-20050501-08.

- 18. Relihan E, O’Brien V, O’Hara S, et al. The impact of a set of interventions to reduce interruptions and distractions to nurses during medication administration. Qual Saf Health Care. 2010;19:e52.

- 19. Reason J. Human error: models and management. BMJ. 2000;320:768–770. doi: 10.1136/bmj.320.7237.768.