Abstract

We have developed a noninvasive, unobtrusive magnetic wireless tongue–computer interface, called “Tongue Drive,” to provide people with severe disabilities with flexible and effective computer access and environment control. A small permanent magnet, secured on the tongue by implantation, piercing, or tissue adhesives, is utilized as a tracer to track the tongue movements. The magnetic field variations inside and around the mouth due to the tongue movements are detected by a pair of three-axial linear magneto-inductive sensor modules mounted bilaterally on a headset near the user’s cheeks. After being wirelessly transmitted to a portable computer, the sensor output signals are processed by a differential field cancellation algorithm to eliminate external magnetic field interference, and are then translated into user control commands, which can be used to access a desktop computer, maneuver a powered wheelchair, or control other devices in the user’s environment. The system has been successfully tested on six able-bodied subjects for computer access by defining six individual commands that resemble mouse functions. Results show that the Tongue Drive system response time for 87% correctly completed commands is 0.8 s, which yields an information transfer rate of ~130 b/min.

Keywords: Assistive technologies (ATs), environment control, information transfer rate (ITR), magneto-inductive sensors, tongue–computer interfacing, tongue motion

I. Introduction

Persons severely disabled as a result of causes ranging from traumatic brain and spinal cord injuries (SCI) to amyotrophic lateral sclerosis (ALS) and stroke generally find it extremely difficult to carry out everyday tasks without continuous help [1], [2]. In the U.S. alone, acts of violence, falls, and accidents add 11 000 new cases of severe SCI every year to a population of a quarter of a million [1]. Fifty-five percent of SCI victims are between 16 and 30 years old and will need lifelong special care, which currently costs ~$4 billion each year. Assistive technologies (ATs) help individuals with severe disabilities communicate their intentions to others and effectively control their environments [3]. These technologies would not only reduce the individuals’ need for continuous help, thus easing the burden on family members, freeing a dedicated caregiver, and reducing healthcare and assisted-living costs, but may also help them become employed and lead active, independent, self-supporting, and productive lives on an equal footing with other members of society. Despite the wide variety of existing ATs for individuals with lower levels of disability, those with severe disabilities are still considered underserved and would greatly benefit from having more choices, especially ATs that can take advantage of whatever remaining capabilities they might have.

A number of ATs have been developed in the past to help individuals with severe disabilities control their environments by discerning the individual’s intention and communicating it directly to a computer, which acts as an interface between the user and his/her intended target, such as a powered wheelchair (PWC), robotic arm, telephone, or television [4]. It is not hard to imagine that once paralyzed individuals are “enabled” to use computers effectively, they will have access to all the opportunities that able-bodied individuals have through computers.

There is considerable ongoing research on a class of devices, known as brain–computer interfaces (BCIs), which directly discern users’ intentions from their brain activity, such as electroencephalograms (EEGs). EEG-based BCIs require learning and concentration, as well as considerable time for setup and removal, because the scalp must be prepared for good electrode contact and cleaned after removal. Because of the small amplitude of EEG signals, these systems are also susceptible to interference and motion artifacts. Heavy signal processing is also required to exclude signals resulting from other activities, such as talking, redirecting gaze, and muscle contractions, a problem usually referred to as the “Midas touch” [4]-[6]. To gain more accurate and broader access to neural signals, a number of intracranial BCIs (iBCIs) have also been developed, based on signals recorded from skull screws, miniature glass cones, subdural electrode arrays, and intracortical electrodes [7]-[10]. However, the highly invasive nature of these approaches and their dependence on trained technicians and bulky carts of instrumentation have so far limited their application to research trials in controlled laboratory environments [4].

It is well known that the cranial nerves, which are protected by the skull, often escape injury even in severe cases of SCI. Many of these nerves are not afflicted by neuromuscular diseases either [11], [12]. Hence, the parts of the body innervated by the cranial motor nerves, such as the eyes, jaws, facial muscles, and tongue, are good candidates for discerning users’ intentions as intermediate links to the brain. A major advantage of ATs based on these organs over iBCIs is that they can be noninvasive and can potentially benefit a large population of individuals with different levels of disability.

One category of ATs operates by tracking eye movements and eye gaze from corneal reflections and pupil position [13], [14]. Electro-oculographic (EOG) potentials have also been used to detect eye movements and generate control commands [15]. A drawback of these devices is that they all require extra eye movements, which interfere with the users’ normal visual activities. For instance, gazing at objects of interest disrupts eye-based control [10]. It should also be noted that the eyes have evolved as sensory organs, not as motor or manipulation organs. Moreover, the EOG method requires facial surface electrodes, which are unsightly and may make the user look unusual and feel uncomfortable in public.

The tongue and mouth occupy an amount of sensory and motor cortex in the human brain that rivals that of the fingers and hand. Therefore, they are inherently capable of sophisticated motor control and manipulation tasks with many degrees of freedom (DoF) [12]. Like the heart muscle, the tongue muscle has a very low rate of perceived exertion and does not fatigue easily [16]. The tongue is noninvasively accessible, and its use is not influenced by the position of the rest of the body, which can be adjusted for maximum user comfort.

These advantages have led to the development of a number of tongue–computer interfaces (TCIs), such as the Tongue-Touch-Keypad (TTK), Tongue Mouse, Tongue Point, and a recent inductor-based system [17]-[21]. These devices, however, require bulky components inside the mouth, which may interfere with the user’s speech, ingestion, and sometimes breathing. TTK and Tongue Point require contact and pressure at the tongue tip, which may cause tongue fatigue and irritation in the long run. There are also tongue- or mouth-operated joysticks, such as the Jouse2 and the Integra Mouse [22], [23]. These devices can only be used when the user is sitting, and they require a certain level of head movement to grab the mouth joystick unless the stick is held continuously inside the mouth, which could be quite uncomfortable.

Our goal has been to develop a minimally invasive, unobtrusive, easy-to-use, and reliable TCI that can substitute for some of the arm and hand functions, which are perceived as the highest priorities among individuals with severe disabilities [24]. The TCI presented in this paper, called Tongue Drive, can wirelessly detect tongue movements inside the oral cavity and translate them into a set of specific user-defined commands (a tongue alphabet) without interfering with speech, ingestion, or breathing, and without the tongue touching anything. These commands can then be used to access a computer, operate a PWC, or control other devices in the user’s environment.

II. Tongue Drive System

A. System Overview

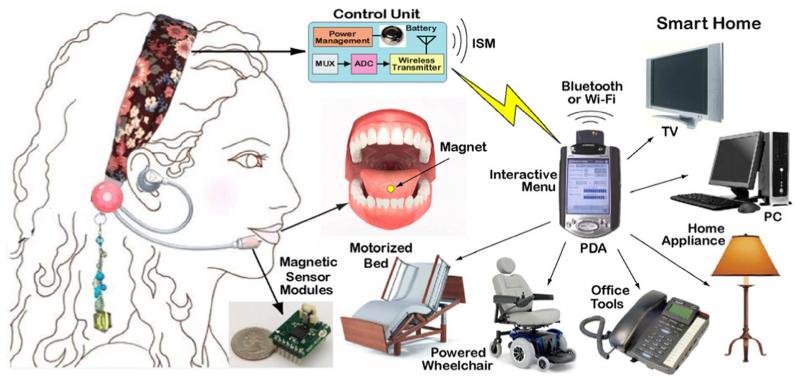

The Tongue Drive system (TDS), whose block diagram is shown in Fig. 1, consists of an array of magnetic sensors and a small permanent magnet, the size of a grain of rice, which is secured on the tongue as a tracer using tissue adhesives, tongue piercing, or implantation under the tongue mucosa through injection. The magnetic field generated by the tracer varies inside and around the mouth as a result of tongue movements. These variations are detected by an array of magnetic sensors mounted either on a headset outside the mouth, similar to a head-worn microphone, as in the external Tongue Drive system (eTDS) shown in Fig. 1, or on a dental retainer inside the mouth, similar to an orthodontic brace (iTDS) [25]-[28]. The sensor outputs are wirelessly transmitted to a smartphone or personal digital assistant (PDA), also worn by the user. A sensor signal processing (SSP) algorithm running on the PDA classifies the sensor signals and converts them into user control commands, which are then wirelessly communicated to the targeted devices in the user’s environment.

Fig. 1.

Block diagram of the eTDS.

A principal advantage of the TDS is that a few sensors and a small magnetic tracer can potentially capture a large number of tongue movements, each of which can represent a particular command. By tracking tongue movements in real time, the TDS also has the potential to provide its users with “proportional control,” which is easier, smoother, and more natural than switch-based control for complex tasks such as maneuvering a PWC in confined spaces [3], [29]. In addition, the “Midas touch” problem can be avoided by defining a specific command, i.e., a tongue movement, that switches the TDS between standby and operational modes when the user intends to eat or engage in a conversation. Moreover, the tongue gestures associated with the TDS commands can be defined such that the SSP algorithm is able to discriminate between tongue commands and voluntary or reflexive tongue movements resulting from speech, chewing, swallowing, coughing, or sneezing. Finally, TDS users should avoid placing ferromagnetic materials in their mouths; for instance, they should use silver, plastic, or wooden utensils instead of stainless steel ones.

B. Tongue Drive Hardware

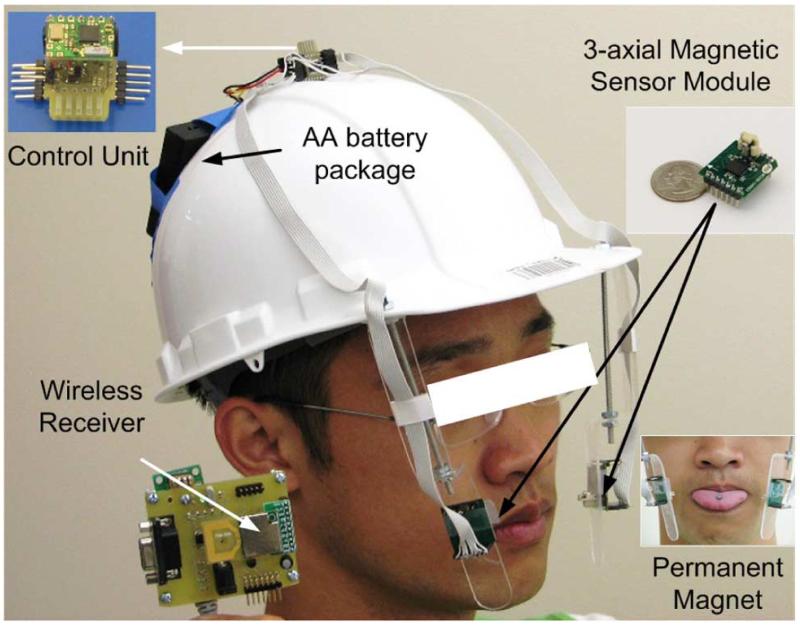

So far we have built several TDS prototypes using commercially available components [25]-[28]. The most recent eTDS prototype, shown in Fig. 2, uses a pair of three-axis magneto-inductive sensor modules (PNI, Santa Rosa, CA), mounted bilaterally on a hardhat. In magneto-inductive sensors, the alternating current (ac) impedance of a soft ferromagnetic material changes with the longitudinal component of a direct current (dc) magnetic field [30]. In the PNI sensors, this material forms the inductor of an inductance–capacitance (LC) oscillator, so the oscillation frequency varies in proportion to the field; the frequency is then measured to produce a digitized output. A small (Ø5 mm × 1.3 mm) disk-shaped rare-earth permanent magnet (RadioShack, Fort Worth, TX) serves as the tracer (see Fig. 2 inset).

Fig. 2.

eTDS prototype implemented on a hardhat for human trials. Insets clockwise from top: control unit, three-axis magnetic sensor, and permanent magnetic tracer attached to the subject’s tongue using tissue adhesive.

An ultra-low-power microcontroller (MSP430, Texas Instruments, Dallas, TX), the heart of the control unit, takes 13 samples/s from each sensor while activating only one sensor at a time to save power. The control unit continuously compares the right-module outputs with a predefined threshold to check whether the user has issued a standby/on command. This threshold is defined as the minimum sensor output when the magnetic tracer is held 2 cm from the module. If the user holds his/her tongue close to the right module (<2 cm) for more than 2 s, the TDS switches between operational and standby modes. In operational mode, all six sensors are read at 13 Hz, and their samples are packaged into one data frame and wirelessly transmitted to a PC over a 2.4-GHz ISM-band link established between two identical low-power transceivers (nRF2401A, Nordic Semiconductor, Trondheim, Norway). Some of the important technical specifications of the eTDS prototype are summarized in Table I.
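The standby/on logic above is a threshold-plus-dwell-time rule. The following is a minimal sketch of that rule, not the MSP430 firmware: it assumes the right-module reading can be reduced to one scalar that grows as the tracer approaches, and it uses timestamps rather than sample counts so that the same rule works at both the 13-Hz operational rate and the 1-Hz standby rate. The class name `StandbyToggle` and its parameters are hypothetical.

```python
class StandbyToggle:
    """Hypothetical sketch of the eTDS standby/on command.

    Assumption: `right_output` is a scalar that exceeds `threshold` whenever the
    tracer is closer than 2 cm to the right module.
    """

    def __init__(self, threshold, hold_time_s=2.0):
        self.threshold = threshold      # sensor output with the tracer held at 2 cm
        self.hold_time_s = hold_time_s  # tongue must stay close for more than 2 s
        self.operational = True
        self._above_since = None        # time the output first exceeded the threshold
        self._fired = False             # allow only one toggle per continuous hold

    def update(self, t, right_output):
        """Feed one sample taken at time t (seconds); return True if the mode toggled."""
        if right_output >= self.threshold:
            if self._above_since is None:
                self._above_since = t
            if not self._fired and t - self._above_since > self.hold_time_s:
                self.operational = not self.operational
                self._fired = True
                return True
        else:
            # Tongue moved away: re-arm for the next standby/on command.
            self._above_since = None
            self._fired = False
        return False
```

Holding the tongue near the right module for about 3 s at 13 Hz toggles the mode exactly once; the user must move the tongue away before the same gesture can toggle it back.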

TABLE I. External Tongue Drive System Specifications.

| Specification | Value |
|---|---|
| Control Unit | |
| Microcontroller | Texas Instruments MSP430F1232 |
| Sampling rate per sensor | 13 Hz (operational), 1 Hz (standby) |
| Wireless transceiver | Nordic nRF2401 @ 2.4 GHz |
| Operating voltage / current | 2.2 V / ~4 mA |
| Weight | 5 g without batteries |
| Magnetic Sensor Module | |
| Type | Magneto-inductive (PNI Corp., CA) |
| Sensor dimensions | MS2100 (X and Y): 7 × 7 × 1.5 mm³; SEN-S65 (Z): 6.3 × 2.3 × 2.2 mm³ |
| Sensor module dimensions | 25 × 23 × 13 mm³ |
| Resolution | MS2100: 0.026 μT; SEN-S65: 0.015 μT |
| Range | 1100 μT |
| Sensitivity (programmable) | 0.3–67 counts/μT |
| Weight | 3 g |
| Magnetic Tracer | |
| Source and type | RadioShack rare-earth magnet 64-1895 |
| Size (diameter × thickness) | Ø5 mm × 1.3 mm |
| Residual magnetic strength | 10 800 Gauss |
| Weight | 0.2 g |

C. External Magnetic Interference Cancellation

Because of their high sensitivity, the magneto-inductive sensors are inevitably affected by external magnetic interference (EMI), such as the Earth’s magnetic field (EMF). This results in a poor signal-to-noise ratio (SNR) at the sensor outputs, which degrades the performance of the SSP algorithm. Eliminating the EMI is therefore necessary to enhance TDS performance and reduce the probability of errors in command interpretation.

In the present TDS prototype, the outputs of each three-axial sensor module are mathematically transformed as if the module were oriented parallel to the opposite module, and the result is subtracted from the outputs of that opposite module. As a result, the common-mode components of the sensor outputs, which mainly result from the EMI, are canceled out, while the differential-mode components, which mainly result from movements of the local magnetic tracer, are retained and even magnified [28].

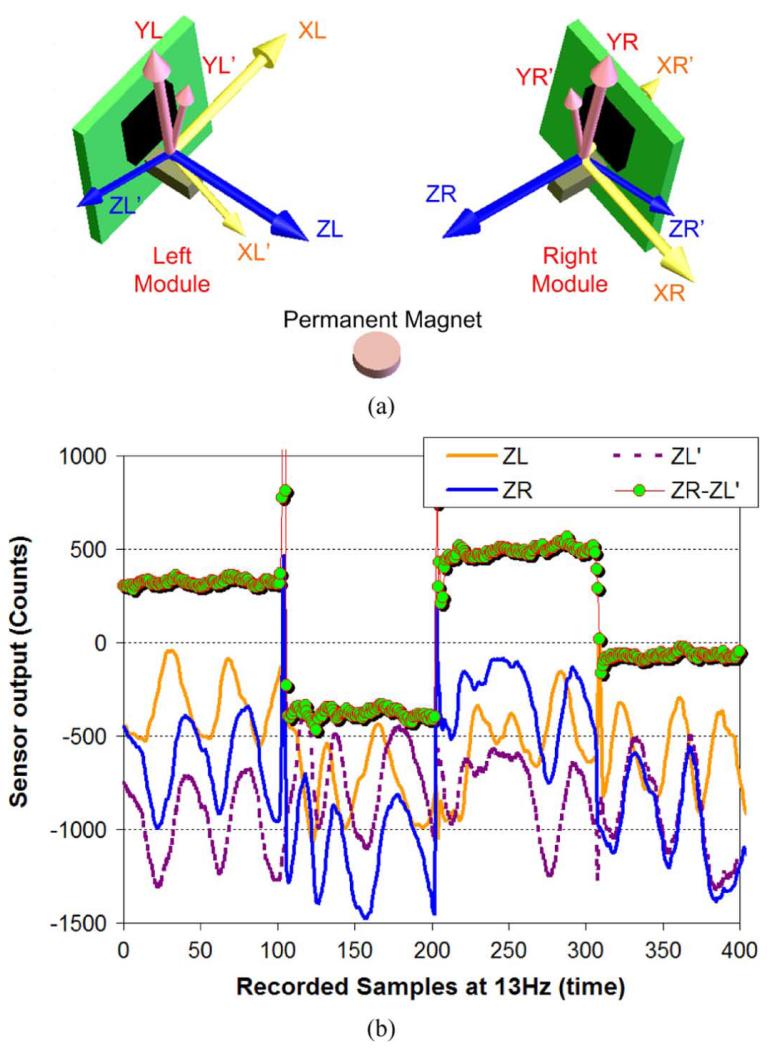

Fig. 3(a) depicts the relative position and orientation of the three-axial sensor modules and the magnetic tracer attached to the user’s tongue, where XL, YL, and ZL are the three axes of the left module, and XR, YR, and ZR are those of the right one. Since the relative position and orientation of the two modules are known, each module can be mathematically rotated, using coordinate transformation theory [31], to create a virtual module at the same location but parallel to the module on the opposite side. The linear relationship between an original module and its virtual rotated counterpart can be expressed in matrix form as

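$$\begin{bmatrix} X_L' \\ Y_L' \\ Z_L' \end{bmatrix} = \begin{bmatrix} a_X & b_X & c_X & d_X \\ a_Y & b_Y & c_Y & d_Y \\ a_Z & b_Z & c_Z & d_Z \end{bmatrix} \begin{bmatrix} X_L \\ Y_L \\ Z_L \\ 1 \end{bmatrix} \qquad (1)$$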

where a, b, c, and d are the linear coefficients, which capture the relative orientation and gain differences between the two sensor modules. These coefficients can be found using a multilinear regression algorithm [33].
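The multilinear regression step can be sketched as an ordinary least-squares fit. The sketch below assumes that calibration data consist of simultaneous left and right readings collected while only the external field varies (for example, while the user turns his/her head with the tracer absent), and that each virtual axis is an affine combination of the three left-module axes, as in (1). The helper names `fit_virtual_module` and `to_virtual` are hypothetical; `numpy.linalg.lstsq` is the standard NumPy least-squares solver.

```python
import numpy as np


def fit_virtual_module(left, right):
    """Least-squares fit of right ≈ A @ left + d from EMI-only calibration samples.

    left, right: (n_samples, 3) arrays of simultaneous module readings.
    Returns A (3 x 3; row k holds a, b, c for virtual axis k) and d (3,).
    """
    left = np.asarray(left, dtype=float)
    right = np.asarray(right, dtype=float)
    # Design matrix columns: X_L, Y_L, Z_L, 1 (the constant column yields d).
    design = np.hstack([left, np.ones((left.shape[0], 1))])
    coeffs, *_ = np.linalg.lstsq(design, right, rcond=None)  # shape (4, 3)
    A = coeffs[:3].T
    d = coeffs[3]
    return A, d


def to_virtual(left, A, d):
    """Express left-module samples in the right module's orientation (X_L', Y_L', Z_L')."""
    return np.asarray(left, dtype=float) @ A.T + d
```

Fitting the coefficients from data, rather than from the nominal geometry alone, also absorbs the gain differences between the two modules mentioned above.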

Fig. 3.

(a) Relative 3-D position and orientation of the bilateral three-axis sensor modules and the permanent magnetic tracer attached to the user’s tongue. (b) Original, transformed, and differential outputs of the Z-axis sensors when the subject issues two left mouse commands while walking in the laboratory.

Once the linear relationship between the two modules is set up, any source of EMI, which is usually far from the sensors, will produce equal outputs in each module and in its virtual replica on the opposite side. On the other hand, the outputs of the two modules resulting from movements of the strong nearby magnetic tracer will be quite different unless the magnet moves symmetrically along the sagittal plane. Therefore, if we subtract the output of each virtual sensor from that of the real sensor it is aligned with on the opposite side, the EMI components will be canceled out or significantly diminished, while the tracer components are likely to be amplified. As a result, the effects of EMI are minimized and the SNR is greatly improved.
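The cancellation argument can be checked numerically. In this minimal sketch, the calibration (A, d) is assumed to be already known; here it is an idealized 30° rotation about Z with no offset, chosen for illustration only. A far-field interference vector that both modules see identically (after rotation) vanishes from the differential output, whereas a tracer field present only at the left module survives with its full magnitude. The function name `differential_outputs` is hypothetical.

```python
import numpy as np


def differential_outputs(left, right, A, d):
    """Return right - virtual_left, i.e., ZR - ZL' generalized to all three axes.

    A, d: assumed calibration such that right ≈ A @ left + d when only
    external (far-field) sources act on both modules.
    """
    virtual_left = np.asarray(left, dtype=float) @ np.asarray(A).T + np.asarray(d)
    return np.asarray(right, dtype=float) - virtual_left


# Illustrative geometry: the left module is rotated 30 degrees about Z
# relative to the right module (an assumption, not the hardhat geometry).
theta = np.deg2rad(30.0)
A = np.array([[np.cos(theta), -np.sin(theta), 0.0],
              [np.sin(theta),  np.cos(theta), 0.0],
              [0.0,            0.0,           1.0]])
d = np.zeros(3)

emi_right = np.array([20.0, -5.0, 45.0])  # uniform field in the right frame (uT)
emi_left = A.T @ emi_right                # same field seen in the left frame
tracer_left = np.array([0.0, 0.0, 300.0])  # tracer close to the left module only

emi_only = differential_outputs(emi_left, emi_right, A, d)
with_tracer = differential_outputs(emi_left + tracer_left, emi_right, A, d)
```

`emi_only` is numerically zero, while `with_tracer` has a norm of 300 μT, which is the behavior the differential configuration relies on.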

Fig. 3(b) shows the output waveforms of the ZL and ZR sensors on the left and right modules when the user, wearing the TDS prototype, issues two “left” commands while walking in the lab. The figure also shows the transformed output, ZL′, and the differential signal, ZR – ZL′, which is much cleaner than either of the two original raw signals.

D. Sensor Signal Processing Algorithm

We developed the prototype SSP algorithm in the LabVIEW environment using MATLAB functions. It has three major components: EMI cancellation (described in Section II-C), feature extraction (FE), and command classification. For real-time operation, we consider a sliding window of three consecutive samples from each of the six individual sensors. The FE algorithm, which is based on principal component analysis (PCA), reduces the dimensions of the incoming sensor data and accelerates computations [33].
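The sliding window turns the stream of six-sensor frames into the 18-variable vectors used by the FE stage. The sketch below keeps the three most recent frames and flattens them; the sensor-major ordering (three samples of sensor 1, then sensor 2, and so on) is an assumption, since the text does not specify the order. The class name `SlidingWindow` is hypothetical.

```python
from collections import deque

WINDOW = 3      # consecutive samples per sensor (from the text)
N_SENSORS = 6   # two modules x three axes


class SlidingWindow:
    """Keep the last three six-sensor frames and flatten them into 18 values."""

    def __init__(self, window=WINDOW, n_sensors=N_SENSORS):
        self.n_sensors = n_sensors
        self.frames = deque(maxlen=window)

    def push(self, frame):
        frame = list(frame)
        if len(frame) != self.n_sensors:
            raise ValueError(f"expected {self.n_sensors} readings, got {len(frame)}")
        self.frames.append(frame)
        if len(self.frames) < self.frames.maxlen:
            return None  # not enough history yet
        # Assumed sensor-major order: s1[t-2], s1[t-1], s1[t], s2[t-2], ...
        return [f[s] for s in range(self.n_sensors) for f in self.frames]
```

At 13 Hz, a new 18-variable vector becomes available with every incoming frame once the first three frames have arrived.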

During the feature identification session, users associate their preferred tongue movements or positions with each TDS command and repeat each command 10 times at 3-s intervals, moving their tongue from the resting position to the desired position after receiving a visual cue from a graphical user interface (GUI). In each repetition, a total of 18 samples (three per sensor) are collected into an 18-variable vector, which is labeled with the executed command. Offline, the FE algorithm calculates the eigenvectors and eigenvalues of the covariance matrix of these 18-variable training vectors. The three eigenvectors (v1, v2, v3) with the largest eigenvalues are then chosen to construct the PCA feature matrix, V = [v1, v2, v3]. This is equivalent to extracting the most significant features of the sensor output waveforms for each command, and it reduces the dimension of the incoming data from 18 to 3. The labeled samples are then used to form a cluster in the 3-D feature space for each command.

During normal TDS operation, the 18-variable raw sensor vectors M0 from the three-sample sliding window are projected onto the 3-D feature space using

$$M = V^{T} M_0 \qquad (2)$$

where M is the 3 × 1 principal-component vector. These three-variable vectors are easier to classify, yet they still contain the most significant features that help discriminate each command from the others in the 3-D feature space.
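The offline PCA step and the projection in (2) can be sketched with NumPy as follows. `np.cov` with `rowvar=False` treats each row as one 18-variable training vector, and `np.linalg.eigh` returns the eigenpairs of the symmetric covariance matrix in ascending order, so the last three columns (after reordering) form V. Following (2), incoming vectors are projected without mean-centering; this is a literal reading of the equation, not a confirmed detail of the LabVIEW/MATLAB implementation. The function names are hypothetical.

```python
import numpy as np


def fit_pca_features(training_vectors, n_components=3):
    """Return V (18 x 3) whose columns are the covariance eigenvectors with the largest eigenvalues."""
    X = np.asarray(training_vectors, dtype=float)  # shape (n_repetitions, 18)
    cov = np.cov(X, rowvar=False)                  # 18 x 18 covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)         # ascending eigenvalues
    order = np.argsort(eigvals)[::-1][:n_components]
    return eigvecs[:, order]                       # columns v1, v2, v3


def project(V, m0):
    """Equation (2): M = V^T M0, mapping an 18-variable vector to 3 features."""
    return V.T @ np.asarray(m0, dtype=float)
```

With 10 repetitions of each of the six commands, `training_vectors` would hold 60 rows; the same V is then applied both to the labeled training vectors (to form the clusters) and to every incoming window.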

A K-nearest-neighbors (KNN) classifier is used within the feature space to evaluate the proximity of incoming data points to the clusters formed during the feature identification session. The KNN algorithm starts at an incoming data point and inflates a virtual sphere until it contains the K nearest training points. It then assigns the new data point to the command that has the majority of the training points inside the sphere [34]. Since we had collected 10 training data points per cluster, we chose K = 6.
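A minimal KNN sketch with K = 6 and Euclidean distance is shown below. The text does not say how ties in the majority vote are resolved; here, as an assumption, the tied label with the single nearest member wins. The function name `knn_classify` is hypothetical, and `math.dist` requires Python 3.8 or later.

```python
import math
from collections import Counter


def knn_classify(point, training_points, labels, k=6):
    """Assign `point` to the label holding the majority of its k nearest training points."""
    ranked = sorted(
        (math.dist(point, p), lab) for p, lab in zip(training_points, labels)
    )
    nearest = [lab for _, lab in ranked[:k]]  # labels inside the "sphere"
    counts = Counter(nearest)
    best = max(counts.values())
    # Tie-break (assumption): the tied label whose member is closest wins.
    for lab in nearest:
        if counts[lab] == best:
            return lab
```

Because the resting position has its own cluster, a point near the resting cluster is simply labeled as rest and generates no command.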

III. Human Trials and Measured Results

To facilitate the human trials, we emulated the computer mouse with the eTDS prototype, so that anyone familiar with operating a mouse in the Windows operating system could directly use the eTDS. We defined six individual commands in the eTDS prototype GUI: the four cardinal cursor movements (up, down, left, and right) and the single- and double-click mouse gestures. Each of these commands had a dedicated class in the SSP algorithm and a cluster in the 3-D feature space. In addition, we defined a seventh class/cluster to identify the tongue in its resting position.

A. Human Subjects

Six able-bodied human subjects, two females and four males aged 23 to 34 years, were recruited from the engineering graduate student population of North Carolina State University. We obtained the necessary approvals from the NC State institutional review board (IRB) and informed consent from each subject. One of the subjects (subject A) had used the eTDS multiple times, but not on a regular basis. Three of the subjects had used the TDS for less than 2 h in previous human trials. The remaining two subjects were novices with no prior knowledge of or experience with the TDS.

B. Experimental Procedure

A new permanent magnet was disinfected with 70% isopropyl rubbing alcohol, dried, and attached to each subject’s tongue, about 1 cm from the tip, using cyanoacrylate tissue adhesive (Cyanodent, Ellman International Inc., Oceanside, NY). The subjects then wore the eTDS prototype, as shown in Fig. 2, and were allowed up to 15 min to familiarize themselves with the eTDS and the magnetic tracer on their tongue.

1) Command Definition: Searching for Proper Tongue Positions for Different Commands

To facilitate command classification, the subjects were advised to choose tongue positions for the different commands that were as distinct from one another as possible and away from the sagittal plane, where most natural tongue movements occur. Although not a command, the first and most important tongue position was the resting position; the other six commands therefore had to be defined away from it.

During command definition, visual feedback in the form of an analog gauge was included in the GUI to help the subjects find proper tongue positions for the different commands. The gauge mapped, in real time, the distance between the current tongue position and the closest previously defined command position (lmin) onto three color-coded zones. The subjects were asked to define each new command such that the gauge stayed within the green zone, meaning that the new command was far enough from all previously defined commands.

The GUI did not confirm a new command definition unless two criteria were met. First, lmin had to be greater than a predefined threshold. Second, the variance of lmin had to remain below another predefined threshold for at least 3 s, indicating that the movement was deliberate. Once a proper position was found, the GUI automatically moved on to the next command. The command positions were then saved and practiced a few times to make sure that the subject had learned and memorized all tongue positions.
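The two acceptance criteria can be sketched as a rolling check over the last 3 s of lmin values (39 samples at 13 Hz). The thresholds are placeholders, since the text does not report their values, and measuring lmin in the 3-D feature space is an assumption. Requiring every lmin in the window to exceed the distance threshold is one conservative reading of the first criterion. The names `l_min` and `CommandAcceptor` are hypothetical.

```python
import math
from collections import deque
from statistics import pvariance


def l_min(current, defined_positions):
    """Distance from the current tongue position to the closest defined command position."""
    return min(math.dist(current, p) for p in defined_positions)


class CommandAcceptor:
    """Hypothetical check mirroring the GUI criteria for accepting a new command position."""

    def __init__(self, min_distance, max_variance, hold_s=3.0, rate_hz=13):
        self.min_distance = min_distance  # criterion 1 threshold (placeholder)
        self.max_variance = max_variance  # criterion 2 threshold (placeholder)
        self.window = deque(maxlen=round(hold_s * rate_hz))  # 39 samples at 13 Hz

    def update(self, lmin_value):
        """Add one lmin sample; return True once both criteria hold over the full window."""
        self.window.append(lmin_value)
        if len(self.window) < self.window.maxlen:
            return False  # fewer than 3 s of history
        far_enough = min(self.window) > self.min_distance
        steady = pvariance(self.window) < self.max_variance
        return far_enough and steady
```

A steady tongue position far from existing commands is accepted after exactly 3 s, whereas a wavering position fails the variance test regardless of its distance.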

2) Feature Identification

During this session, the GUI prompted the subject to execute each command by turning on its associated indicator on the screen at 3-s intervals. The subjects were asked to issue the command by moving their tongue from its resting position to the corresponding command position when the command light was on, and to return it to the resting position when the light went off. This procedure was repeated 10 times for the entire set of six commands, excluding the tongue resting position.

3) Accuracy vs. Response Time

This experiment was designed to provide a quantitative measure of TDS performance by measuring how quickly and accurately a command can be issued from the moment it is intended by the user. This time, which we refer to as the TDS response time, includes thinking about the command and its associated tongue motion, the physical tongue movement transients, sampling of the magnetic field variations, wireless transmission of the acquired samples, and the SSP computation delays. Obviously, the shorter the response time, the better. However, commands must not only be issued quickly but also be detected correctly. Therefore, we considered the percentage of correctly completed commands, CCC%, in addition to the response time, T.

A dedicated GUI was developed for this experiment that randomly selected one of the six direct commands and turned its indicator on. The subjects were asked to issue the indicated command within a specified time period T, while a visual cue was shown on the screen, by moving their tongue from its resting position in the same way that they had trained the eTDS for that particular command. The GUI also provided the subject with real-time visual feedback by changing the size of a bar associated with each command, indicating how close the tongue was to the position of that command. After 40 trials at each of T = 2, 1.5, 0.8, and 0.6 s, in order from the longest to the shortest period, the CCC% was calculated for each T.

The information transfer rate (ITR) is a widely accepted measure for evaluating and comparing the performance of different BCIs; it estimates the amount of useful information that can be transferred from the user to a computer within a certain time period. There are various definitions of the ITR. We calculated the ITR based on Wolpaw’s definition in [5]:

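$$\mathrm{ITR} = \frac{1}{T}\left[\log_2 N + P\log_2 P + (1-P)\log_2\!\left(\frac{1-P}{N-1}\right)\right] \qquad (3)$$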

where N is the number of individual commands that the system can issue, P = CCC% is the system accuracy, and T is the system response time in seconds or minutes, giving the ITR in bits per second or bits per minute, respectively. The advantage of this definition is that it takes into account the most important BCI (or, in this case, TCI) parameters: the number of commands, the accuracy, and the speed.
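Equation (3) is straightforward to evaluate. The sketch below computes the bits per selection and converts T from seconds to minutes, treating the limiting cases P = 1 (where the P log2 P and (1 − P) terms vanish) and P = 0 explicitly so that no logarithm of zero is taken. The function name `wolpaw_itr` is hypothetical.

```python
import math


def wolpaw_itr(n, p, t_seconds):
    """Wolpaw ITR in bits/min for n commands, accuracy p (0..1), and response time t_seconds."""
    bits = math.log2(n)
    if p > 0:
        bits += p * math.log2(p)
    if p < 1:
        bits += (1 - p) * math.log2((1 - p) / (n - 1))
    return bits * 60.0 / t_seconds  # bits per selection x selections per minute


itr_typical = wolpaw_itr(6, 0.87, 0.8)
```

With N = 6, P = 0.87, and T = 0.8 s, each selection carries about 1.73 bits, so `itr_typical` is about 129.4 b/min, consistent with the ~130 b/min reported in the abstract. At chance accuracy (P = 1/6), the formula gives zero.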

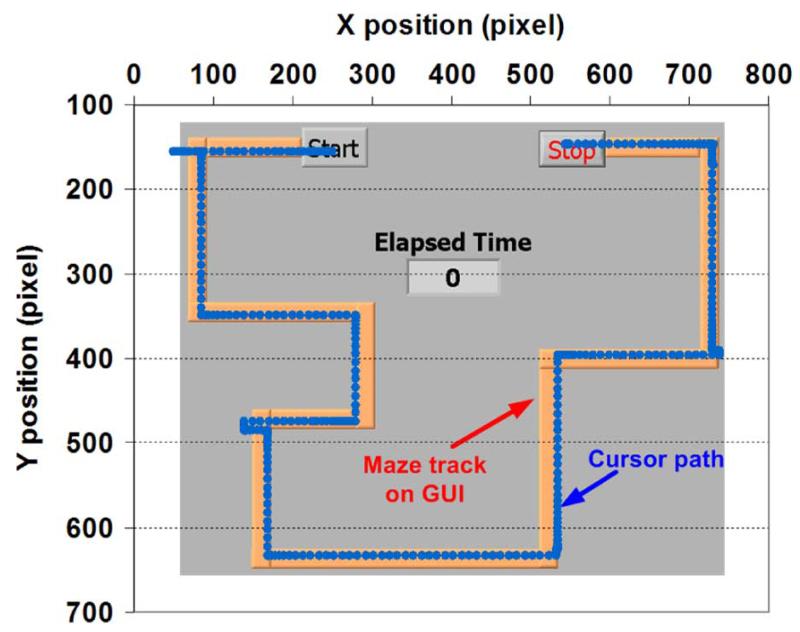

4) Maze Navigation

The purpose of this experiment was to examine eTDS performance in navigation tasks, such as controlling a PWC, in a controlled and safe environment. Subjects were required to navigate the mouse cursor through the maze shown in Fig. 4, from a starting point, marked by issuing a double-click (start command), to a stopping point, marked by a single-click (stop command), while the GUI recorded the cursor path and the elapsed time, ET. The cursor was driven by the discrete commands in an unlatched mode, which required the subjects to hold their tongue at the position defined for a specific direction to keep the cursor moving. To achieve finer control, the cursor started moving slowly and gradually accelerated, up to a certain velocity, as long as the user held the tongue in the same position. The maze was designed to force the users to utilize all eTDS commands, and the cursor could not be moved forward unless it was within the track. Every subject repeated this task four times, and the average ET was calculated.
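The unlatched, accelerating cursor behavior can be sketched as a per-classification update. The initial speed, acceleration step, and maximum speed below are hypothetical values in pixels per classified sample, since the text does not give them; the maze-track constraint is not modeled. The class name `UnlatchedCursor` is hypothetical.

```python
# Screen coordinates: y grows downward.
DIRECTIONS = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}


class UnlatchedCursor:
    """Cursor moves only while a direction command is held, speeding up to a cap."""

    def __init__(self, v0=1.0, accel=0.5, v_max=8.0):
        self.v0, self.accel, self.v_max = v0, accel, v_max  # hypothetical px/sample
        self.x = 0.0
        self.y = 0.0
        self.speed = 0.0
        self._last = None

    def step(self, command):
        """Apply one classified command and return the new cursor position."""
        if command not in DIRECTIONS:
            # Rest position or a click: unlatched mode stops the cursor.
            self._last, self.speed = None, 0.0
            return self.x, self.y
        if command != self._last:
            self.speed = self.v0  # new direction: restart slowly for fine control
        else:
            self.speed = min(self.speed + self.accel, self.v_max)
        self._last = command
        dx, dy = DIRECTIONS[command]
        self.x += dx * self.speed
        self.y += dy * self.speed
        return self.x, self.y
```

Restarting at a low speed whenever the direction changes or the tongue returns to rest gives fine positioning for short moves, while holding a command covers long straight sections quickly.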

Fig. 4.

Mouse pointer path recorded during the maze navigation experiment superimposed on the GUI track.

C. Experimental Results

In the following, we separate the test results of experienced subject A from those of the other subjects to demonstrate the effect of experience in using the eTDS.

Response Time

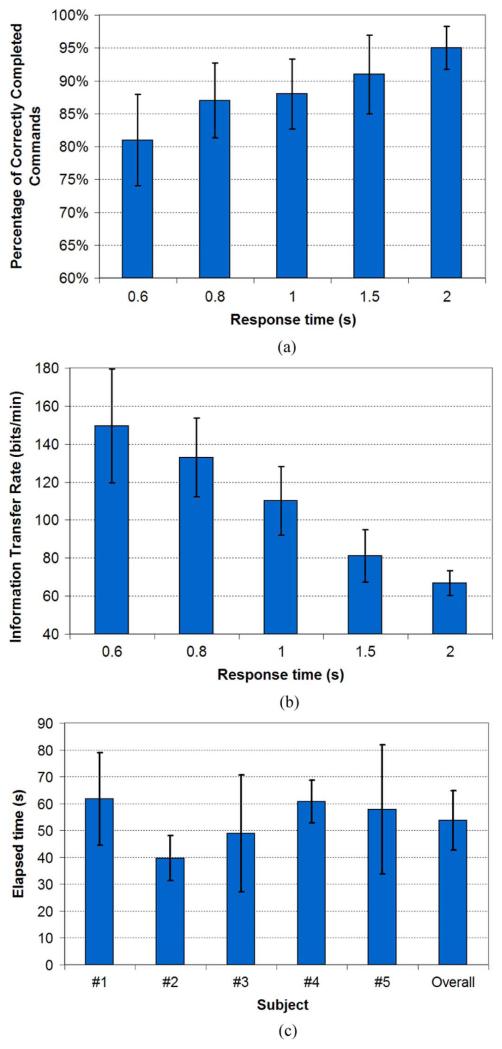

Fig. 5(a) shows accuracy versus response time for the five less-experienced subjects. On average, a TDS beginner can achieve CCC% > 80% with T ≥ 0.6 s. Fig. 5(b) shows the mean value and 95% confidence interval of the corresponding ITR for T = 0.6–2 s. The highest ITR, achieved at T = 0.6 s, results from the very short response time combined with a moderate CCC%. In practice, good performance with CCC% ≈ 87% can be obtained with T ≥ 0.8 s, yielding an ITR of ~130 b/min. Subject A, however, achieved significantly better than average performance, with CCC% = 97.5% at T = 0.8 s, which is equivalent to an ITR of 178 b/min.

Fig. 5.

(a) The percentage of correctly completed commands (CCC%) versus eTDS response time for five human subjects. (b) eTDS information transfer rate versus response time. (c) Mean values and 95% confidence interval of elapsed time for five subjects participating in the maze navigation experiment.

Navigation

The average ET with its 95% confidence interval for each less-experienced subject is shown in Fig. 5(c). The average ET over all 20 navigation experiments was 53.78 s, ~2.5 times longer than the time one of the subjects needed to navigate the mouse cursor through the maze by hand. Considering that the subjects had much more prior experience moving the mouse cursor with their hand than with their tongue, this experiment showed the potential of the eTDS for complex navigation tasks, such as controlling a PWC in a crowded environment. Once again, subject A performed much better than the less-experienced subjects, achieving an ET of 38.25 s.

IV. Discussion

Compared with our earlier TDS prototypes [25]-[27], the key improvements in the present eTDS prototype are the bilateral configuration of a pair of three-axial magneto-inductive sensor modules and the use of the stereo-differential EMI cancellation algorithm. These changes eliminated the 3-D reference compass previously used to measure the EMI and led to a more compact, lower power TDS. The higher SNR resulting from the new sensor configuration and SSP algorithm also improved command classification accuracy and produced a higher CCC% at shorter response times.

Table II compares the number of commands, response time, and calculated ITR for a few TCIs and BCIs reported in the literature [28]. The current eTDS prototype, with six direct commands that are all simultaneously accessible to the user, provides the highest ITR among these systems for subjects with less than 2 h of prior experience. Note that to calculate the ITR for the TTK in [16], we had to use the total number of keys on a virtual keyboard, N = 50, as the number of commands in (3) instead of the nine buttons available to the TTK user, because the average time to activate each TTK button during typing was not reported.

TABLE II. Comparison Between the Tongue Drive System and Other BCIs/TCIs.

Another important qualitative observation during the human trials was the enhanced robustness of the new eTDS prototype against the Midas touch problem. The new eTDS could better differentiate between tongue command movements and movements resulting from users’ voluntary activities, such as speech and swallowing, or from involuntary motions during coughing and sneezing. Since most of these are back-and-forth movements in the sagittal plane, they usually produce similar (i.e., common-mode) changes in the magnetic field around both sensor modules. Therefore, after EMI cancellation, the differential-mode sensor outputs resulting from these movements are close to zero and are classified as the tongue resting position.

Several features added to the GUI to improve eTDS usability proved helpful during the human trials. Guided by visual feedback instead of an operator, the subjects could independently define their desired tongue commands by observing an analog gauge that showed, in real time, how suitable the selected tongue position was for command classification. As a result, the duration of the training session to find proper command positions was reduced from 30 min to about 10 min.

We also added a new standby/on command that puts the system into standby mode when the user is not using the eTDS, e.g., during eating, talking, or sleeping. In standby mode, the wireless transceiver is off and the eTDS sampling rate is reduced from 13 to 1 Hz to reduce power consumption. The user can issue the same command to turn the eTDS back on.

In the current TDS prototype, all computations are performed on a desktop PC, which has more computational power than the ultra-portable computer or PDA intended for the final form of the eTDS shown in Fig. 1. Therefore, it is important to use SSP algorithms with a lighter computational load to maintain satisfactory performance and minimize delays. A larger number of commands is desirable to give TDS users more flexibility in controlling their environments. On the other hand, more commands lead to smaller differences between tongue movements, more SSP computations, and a higher probability of classification errors. The optimal number of commands will therefore depend on several factors, including the ability of TDS users to comfortably remember and consistently reproduce the “tongue commands.” Hence, we expect the optimal number of TDS commands to depend on each individual user’s abilities and preferences.

The present eTDS has not yet been used by anyone on a regular basis, so we were not able to observe the effects of frequent TDS usage on users’ performance in real-life conditions. However, a significant performance improvement was observed in a subject with more experience with the eTDS in the laboratory environment. We therefore expect the performance of TDS users to improve further over time, particularly with daily use. This should, however, be evaluated on individuals with severe disabilities, who are the intended end users of this technology.

We intend to build interfaces between the TDS and PWCs as well as other home/office appliances. In addition, it is important to determine the optimal number of tongue commands for the TDS that can be reliably remembered, reproduced, and detected. We are also working to add proportional control capability to the commands related to PWC or cursor navigation.

V. Conclusion

The Tongue Drive system is designed to enable people with severe disabilities to control their environments, access computers, and drive PWCs. The system discerns its users’ intentions by using an array of magnetic sensors to wirelessly track the movements of a permanent magnetic tracer secured on the user’s tongue; the sensors can be placed inside the mouth in the form of an orthodontic brace or outside in the form of a headset. A set of user-defined voluntary tongue movements can then be translated into different commands for computer access or environment control. The present eTDS prototype consists of a pair of three-axis magneto-inductive sensor modules, extended symmetrically from a hardhat toward the user’s cheeks, and driven by a control unit equipped with a low-power wireless link to a nearby PC. Human trials on six able-bodied subjects demonstrated that the present eTDS prototype can effectively emulate mouse cursor movements and gestures (i.e., button clicks) with six direct commands, all of which were simultaneously available to the user.

Acknowledgment

The authors would like to thank E. Rohlik and P. O’Brien from the WakeMed Rehabilitation Hospital, Raleigh, NC, and M. Jones, J. Anschutz, and R. Fierman from the Shepherd Center, Atlanta, GA, for their valuable comments.

This work was supported in part by the Christopher and Dana Reeve Foundation, in part by the National Science Foundation under Grant IIS-0803184, and in part by startup funds provided by North Carolina State University and the Georgia Institute of Technology.

Biographies

Xueliang Huo (S’07) was born in 1981. He received the B.S. and M.S. degrees in mechanical engineering (instrument science and technology) from Tsinghua University, Beijing, China, in 2002 and 2005, respectively. Currently, he is working toward the Ph.D. degree in the GT-Bionics Laboratory, Department of Electrical and Computer Engineering, Georgia Institute of Technology, Atlanta.

His research interests include low power circuit and system design for biomedical applications, brain–computer interfacing, and assistive technologies.

Jia Wang (S’07) was born in China, in 1984. She received the B.S. degree in automation and control theory from Tsinghua University, Beijing, China, in 2006. She is currently working toward the M.S. degree in the Department of Electrical and Computer Engineering, North Carolina State University, Raleigh.

Her research involves brain–computer interfacing, assistive technologies, and sensor signal processing.

Maysam Ghovanloo (S’00–M’04) was born in 1973. He received the B.S. degree in electrical engineering from the University of Tehran, Tehran, Iran, in 1994, the M.S. degree in biomedical engineering from the Amirkabir University of Technology, Tehran, Iran, in 1997, and the M.S. and Ph.D. degrees in electrical engineering from the University of Michigan, Ann Arbor, in 2003 and 2004, respectively. The Ph.D. research was on developing a wireless microsystem for neural stimulating microprobes.

From 2004 to 2007, he was an Assistant Professor in the Department of Electrical and Computer Engineering, North Carolina State University, Raleigh, where he founded the NC-Bionics Laboratory. In June 2007, he joined the faculty of the Georgia Institute of Technology, Atlanta, where he is currently an Assistant Professor and the Founding Director of the GT-Bionics Laboratory in the School of Electrical and Computer Engineering. He has more than 50 conference and journal publications. He has organized special sessions and served on technical review committees for major conferences and journals in the areas of circuits, systems, sensors, and biomedical engineering.

Dr. Ghovanloo received awards in the 40th and 41st DAC/ISSCC student design contests, in 2003 and 2004, respectively. He is a member of Tau Beta Pi and Sigma Xi.

Footnotes

Color versions of one or more of the figures in this paper are available online at http://ieeexplore.ieee.org.

Contributor Information

Xueliang Huo, GT-Bionics Laboratory, Department of Electrical and Computer Engineering, Georgia Institute of Technology, Atlanta, GA 30308 USA (mghovan@ece.gatech.edu).

Jia Wang, NC-Bionics Laboratory, Department of Electrical and Computer Engineering, North Carolina State University, Raleigh, NC 27695 USA.

Maysam Ghovanloo, GT-Bionics Laboratory, Department of Electrical and Computer Engineering, Georgia Institute of Technology, Atlanta, GA 30308 USA.

References

- [1] National Spinal Cord Injury Statistical Center. Facts and figures at a glance. 2008 Jul. Online. Available: http://www.spinalcord.uab.edu/show.asp?durki=21446.

- [2] National Institute of Neurological Disorders and Stroke (NINDS), National Institutes of Health (NIH). Spinal cord injury: Hope through research. 2008 Jul. Online. Available: http://www.ninds.nih.gov/disorders/sci/detail_sci.htm.

- [3] Cook AM, Hussey SM. Assistive Technologies: Principles and Practice. 2nd ed. Mosby; New York: 2001.

- [4] Hochberg LR, Donoghue JP. Sensors for brain computer interfaces. IEEE Eng. Med. Biol. Mag. 2006 Sep;25(5):32–38. doi: 10.1109/memb.2006.1705745.

- [5] Wolpaw JR, Birbaumer N, McFarland DJ, Pfurtscheller G, Vaughan TM. Brain-computer interfaces for communication and control. Clin. Neurophysiol. 2002;113:767–791. doi: 10.1016/s1388-2457(02)00057-3.

- [6] Moore MM. Real-world applications for brain-computer interface technology. IEEE Trans. Rehabil. Eng. 2003 Jun;11(2):162–165. doi: 10.1109/TNSRE.2003.814433.

- [7] Kennedy PR, et al. Using human extra-cortical local field potentials to control a switch. J. Neural Eng. 2004;1:72–77. doi: 10.1088/1741-2560/1/2/002.

- [8] Kennedy PR, Kirby MT, Moore MM, King B, Mallory A. Computer control using human intracortical local field potentials. IEEE Trans. Neural Syst. Rehabil. Eng. 2004 Sep;12(3):339–344. doi: 10.1109/TNSRE.2004.834629.

- [9] Leuthardt EC, Schalk G, Wolpaw JR, Ojemann JG, Moran DW. A brain-computer interface using electrocorticographic signals in humans. J. Neural Eng. 2004;1:63–71. doi: 10.1088/1741-2560/1/2/001.

- [10] Hochberg LR, et al. Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature. 2006 Jul;442:164–171. doi: 10.1038/nature04970.

- [11] Hardie RJ, Lees AJ. Neuroleptic-induced Parkinson’s syndrome: Clinical features and results of treatment with levodopa. J. Neurol., Neurosurg., Psychiatry. 1988;51:850–854. doi: 10.1136/jnnp.51.6.850.

- [12] Kandel ER, Schwartz JH, Jessell TM. Principles of Neural Science. 4th ed. McGraw-Hill; New York: 2000.

- [13] Hutchinson T, White KP, Jr., Martin WN, Reichert KC, Frey LA. Human-computer interaction using eye-gaze input. IEEE Trans. Syst., Man, Cybern. 1989 Nov-Dec;19(6):1527–1533.

- [14] Xie X, Sudhakar R, Zhuang H. Development of communication supporting device controlled by eye movements and voluntary eye blink. IEEE Trans. Syst., Man, Cybern. 1995;25:1568–1577.

- [15] Barea R, Boquete L, Mazo M, Lopez E. System for assisted mobility using eye movements based on electrooculography. IEEE Trans. Rehabil. Eng. 2002 Dec;10(4):209–218. doi: 10.1109/TNSRE.2002.806829.

- [16] Lau C, O’Leary S. Comparison of computer interface devices for persons with severe physical disabilities. Amer. J. Occupational Therapy. 1993 Nov;47(11):1022–1030. doi: 10.5014/ajot.47.11.1022.

- [17] Ghovanloo M. Tongue operated assistive technologies. Proc. IEEE 29th Eng. Med. Biol. Conf. 2007 Aug:4376–4379. doi: 10.1109/IEMBS.2007.4353307.

- [18] TongueTouch Keypad. newAbilities Systems Inc.; Santa Clara, CA. Online. Available: http://www.newabilities.com.

- [19] Nutt W, Arlanch C, Nigg S, Staufert G. Tongue-mouse for quadriplegics. J. Micromechan. Microeng. 1998;8(2):155–157.

- [20] Salem C, Zhai S. An isometric tongue pointing device. Proc. CHI. 1997;97:22–27.

- [21] Struijk LNSA. An inductive tongue computer interface for control of computers and assistive devices. IEEE Trans. Biomed. Eng. 2006 Dec;53(12):2594–2597. doi: 10.1109/TBME.2006.880871.

- [22] Jouse2. Compusult Limited; Mount Pearl, NL, Canada. Online. Available: http://www.jouse.com.

- [23] USB Integra Mouse. Tash Inc.; Roseville, MN. Online. Available: http://www.tashinc.com/catalog/ca_usb_integra_mouse.html.

- [24] Anderson KA. Targeting recovery: Priorities of the spinal cord-injured population. J. Neurotrauma. 2004;21:1371–1383. doi: 10.1089/neu.2004.21.1371.

- [25] Krishnamurthy G, Ghovanloo M. Tongue Drive: A tongue operated magnetic sensor based wireless assistive technology for people with severe disabilities. Proc. IEEE Int. Symp. Circuits Syst. 2006 May:5551–5554.

- [26] Huo X, Wang J, Ghovanloo M. A magnetic wireless tongue-computer interface. Proc. Int. IEEE EMBS Conf. Neural Eng. 2007 May:322–326.

- [27] Huo X, Wang J, Ghovanloo M. Using magneto-inductive sensors to detect tongue position in a wireless assistive technology for people with severe disabilities. Proc. IEEE Sensors Conf. 2007 Oct:732–735.

- [28] Huo X, Wang J, Ghovanloo M. A wireless tongue-computer interface using stereo differential magnetic field measurement. Proc. 29th IEEE Eng. Med. Biol. Conf. 2007 Aug:5723–5726. doi: 10.1109/IEMBS.2007.4353646.

- [29] Wang J, Huo X, Ghovanloo M. Tracking tongue movements for environment control using particle swarm optimization. Proc. IEEE Int. Symp. Circuits Syst. 2008 May:1982–1985.

- [30] Boukhenoufa A, Dolabdjian CP, Robbes D. High-sensitivity giant magneto-inductive magnetometer characterization implemented with a low-frequency magnetic noise-reduction technique. IEEE Sensors J. 2005 Oct;5(5):2594–2597.

- [31] Litvin FL, Fuentes A. Gear Geometry and Applied Theory. 2nd ed. Cambridge Univ. Press; Cambridge, U.K.: 2004. pp. 1–10.

- [32] Fox J. Applied Regression Analysis, Linear Models, and Related Methods. Sage; London, U.K.: 1997. pp. 98–103.

- [33] Cohen A. Biomedical Signal Processing. Vol. II. CRC Press; Boca Raton, FL: 1988. pp. 63–75.

- [34] Duda RO, Hart PE, Stork DG. Pattern Classification. 2nd ed. Wiley; New York: 2001. pp. 174–187.

- [35] Chen YL, et al. The new design of an infrared-controlled human-computer interface for the disabled. IEEE Trans. Rehabil. Eng. 1999 Dec;7(4):474–481. doi: 10.1109/86.808951.