Abstract

In an effort to increase the number of researchers with skills “in identifying and addressing the ethical, legal, and social implications of their research,” the National Institutes of Health (NIH) solicited training grant proposals from 1999 to 2004 and subsequently funded approved programs. The authors describe the content, format, and outcomes of one such training program that ran from 2002 to 2006 and share key lessons learned about program formats and assessment methods.

Jointly developed by the Saint Louis University Center for Health Care Ethics and the Missouri Institute of Mental Health Continuing Education department, the training program focused on mental health research and adopted a train-the-trainer model. It was offered in onsite and distance-learning formats. Key outcomes of the program included educational products (such as 70 case studies posted on the course website, a textbook, and an instructional DVD) and participation by 40 trainees, 31 of whom completed the program. Assessment involved pre- and post-testing focused on knowledge of research ethics, ethical problem-solving skills, and levels of confidence in addressing ethical issues in mental health research. The program succeeded in increasing participants’ knowledge of ethical issues and their beliefs that they could identify issues, identify problem-solving resources, and solve ethical problems. However, scores on the case-based problem-solving assessment dropped in post-testing, apparently due to diminished confidence about the right course of action in the specific dilemma presented; the implications of this finding for ethics assessment are discussed. Overall satisfaction was high and dropout rates were low, although the dropout rate was nearly 3 times higher among distance learners than among onsite participants.

In an effort to increase the number of researchers with skills “in identifying and addressing the ethical, legal, and social implications of their research,” the National Institutes of Health (NIH) solicited training grant proposals from 1999 to 2004. (For a description of aims and a list of all funded programs, see http://grants.nih.gov/training/t15.htm.) Through 2007, the NIH T15 “Short-Term Courses in Research Ethics” program supported a total of 26 different training programs. Each program focused on a specific type of research (e.g., behavioral, clinical, or genetic) or a specific population of research participant (e.g., international, minority, or vulnerable participants). This article describes the content, format, and outcomes of one such training program and shares key lessons learned about program formats and assessment methods.

The NIH supported our program from the fall of 2002 through the fall of 2006. The novel focus of this program was on training researchers whose participants are persons with mental health or substance abuse disorders. The program involved a collaborative agreement between the Center for Health Care Ethics at Saint Louis University (SLU) and the Continuing Education department at the Missouri Institute of Mental Health (MIMH). SLU was the academic home of the project director, an ethicist with training in psychology and assessment, as well as research assistants who provided organizational and research support for the project. The MIMH provided a co-director who was a mental health services consumer with a strong record of mental health services research, as well as a continuing education staff member who offered most of the technical and logistical support for the development of educational materials and the delivery of the course.

Program Description

Aims and educational objectives

The “Ethics in Mental Health Research” (EMHR) training course was jointly developed by SLU and MIMH personnel to provide mental health researchers throughout the nation with access to an effective, theoretically grounded course in research ethics tailored to their special needs. This was to be accomplished through three specific aims: 1) developing ethics course materials tailored to mental health researchers (a textbook, DVD series, case studies, and slides); 2) developing a “train the trainer” program to prepare participants to use the course materials to teach at their local institutions; and 3) assessing and evaluating the program.

The educational theory behind this program was largely derived from the well-known “four component” model of moral development developed by James Rest and colleagues, which describes the components that moral education ought to address in order to facilitate ethical behavior: moral sensitivity; moral judgment; moral motivation; and moral character1,2. This theory guided the development of our five educational objectives: 1) increasing knowledge of ethical and regulatory issues in mental health research; 2) heightening ethical sensitivity or awareness of ethical issues in behavioral health research; 3) improving moral judgment by fostering decentration3, the ability to focus on all salient aspects of a moral situation; 4) fostering moral motivation by promoting self-reflection and illustrating the values that underlie the regulations and relevant professional codes; and 5) facilitating ethical action through the above objectives by increasing investigators’ confidence and by providing them with resources that can help to determine and do what is right.

Novel attributes of the course

While the EMHR course content addressed many common issues in research ethics (such as informed consent, privacy, risks and benefits), it had several standout features. The first was a special emphasis on consumer perspectives. By including video-clips of two focus groups with mental health services consumers in the DVD series, the course integrated the voices of consumers of services who had served as participants in research. This was meant to shift the focus of research ethics from merely protecting participants from harm to empowering consumers to provide input regarding how to maximize the benefits of research participation and the respect shown toward them by researchers.

A second noteworthy dimension of the EMHR course was the emphasis on systematic case discussion and moral development, as opposed to rote knowledge of and compliance with a research regulatory system. As noted above, the course aimed to foster ethical sensitivity, moral judgment, moral motivation, and ethical action. Data suggest that certain forms of case study analysis and small group discussion can play a large role in fostering the development of these traits; thus, this became a focus of the training sessions1,2,4. The specific form of case analysis used is described below.

A third novel dimension of the course was its dissemination strategy. We utilized Internet-based video-streaming technology to combine the strengths of a traditional classroom-based program with the broad dissemination possibilities of a Web-based course. Nevertheless, because we believed that face-to-face discussion was the ideal format for systematic case analysis, we adopted a train-the-trainer program that aimed to prepare individuals to use the training program at their local institutions.

Course curriculum development and format

The EMHR course was created with input from a team of diverse faculty and advisory board members that included nationally recognized experts in mental health and related research as well as consumers and advocates. The course was pilot-tested with staff at the MIMH as course materials were finalized and prior to recruiting nationwide, in order to get feedback on the course content and to gain comfort with the streaming video technology. Table 1 details the course modules as well as corresponding textbook chapters, DVDs, expert consultants, and case discussion topics.

Table 1.

Ethics in Mental Health Research Training Course Content, Saint Louis University/Missouri Institute of Mental Health, 2005

| Modules | Textbook chapters | DVD topic and interviewee | Example cases |
|---|---|---|---|
| History and principles: Analyzing and justifying decisions, ethical framework, history, and principles (background, case studies, and consumer perspective) | | Responding to a history of abuse: James Korn, PhD, Professor, Department of Psychology, St. Louis University | |
| Cultural competence: Cultural competence (background, case studies, and consumer perspective) | | Cultural competence: Vetta Sanders-Thompson, PhD, MPH, Associate Professor of Community Health, St. Louis University School of Public Health | |
| Risks and benefits: Study design and risk benefit analysis (background, case studies, and consumer perspective) | | Study design: Joan Sieber, PhD, Professor Emeritus of Psychology, California State University, East Bay | |
| Informed consent: Elements, process, and challenges of obtaining free informed consent | | Informed consent: Gerry Koocher, PhD, Dean of Graduate School of Health Studies, Simmons College | |
| Decision-making capacity: Decision making capacity (background, case studies, and consumer perspective) | | Decision-making capacity: Phil Candilis, MD, Assistant Professor, Law and Psychiatry Program, University of Massachusetts | |
| Recruitment: Issues related to just and non-coercive recruitment | | Recruitment: Diane Scott-Jones, PhD, Professor, Department of Psychology, Boston College | |
| Privacy and confidentiality: Focus on needs and strategies for protecting identities and records | | Privacy: Dorothy Webman, DSW, Chairperson, Webman Associates | |
| Conflicts of interest: Identifying and managing a variety of conflicts of interest in behavioral health research | | Conflicts of interest: Gerry Koocher, PhD, Dean of Graduate School of Health Studies, Simmons College | |

The course was delivered in nine 2-hour sessions (held every other week) on-site at the MIMH and was broadcast to distance learners via streaming video. Each session consisted of a brief slide presentation, which addressed basic regulatory and ethical issues as well as consumer perspectives, followed by case discussions. Distance learners could call or email in questions while watching the live internet broadcast. Both informal participant feedback and evaluations from the pilot and first training course guided our revisions to the textbook and curriculum. For example, as the course developed, the instructors shortened the formal presentation, introducing core content primarily through the case discussions. The textbook made more extensive use of tables and textboxes to communicate core information. The first eight sessions covered the curriculum topics outlined in Table 1; the ninth session focused on using the materials for further teaching.

Case discussion method

Approximately 70 cases were either identified or developed for the course (www.emhr.net/cases.htm). Two to four cases were discussed at each session. Cases were designed to promote sustained critical discussion4 rather than merely to illustrate principles or best practices (as short vignettes often do)5,6. Each case presents a scenario that contains a problem and concludes by asking participants to determine and defend a definite course of action.

There is little or no evidence linking knowledge-based compliance education to improved behavior7,8,9. In contrast, there is a well-established link between improvements in moral reasoning and professionally desirable behaviors10. Too often ethics case discussion amounts to little more than unstructured conversation among a few individuals within a larger group, and there is no reason to believe that such conversations do anything to improve discussants’ knowledge, ethical decision-making skills, or ethical behavior. However, some case approaches have been demonstrated to enhance moral development and ethical problem-solving skills11.

A method of analyzing cases was presented during the first class session. The analysis method was developed by the project director (J.M. DuBois) and built upon several existing frameworks with demonstrated efficacy12,13,14. The method is described in chapter 3 of the textbook as well as in two online documents available from the EMHR instructors’ materials website (http://www.emhr.net/materials.htm). The case analysis framework involves identifying 1) stakeholders, 2) relevant facts, 3) relevant ethical and legal norms, and 4) options, and then justifying a decision. The justification may require resolving a factual dispute or a dispute involving value conflicts or conflicting ethical norms. To justify a decision when the dispute involves an ethical/value conflict, the student must demonstrate that 1) the action is effective in achieving its aim, 2) it is indeed necessary to infringe upon the competing value or norms, 3) the good achieved is proportionate to the values that are infringed upon, and 4) the action is designed to infringe as little as possible on the competing value or norm13. The purpose of this decision-making framework is not to guarantee that one right conclusion is reached but to help users rule out unacceptable options (e.g., those that unnecessarily infringe on a value or overlook a group of stakeholders) and to better articulate their ethical rationale. While this model of decision-making was formally presented, most class discussions were spontaneous rather than structured, and key elements were often systematically identified only at the conclusion of the discussion.

Course materials

Course textbook

The project director (J.M. DuBois) wrote a textbook to accompany the course. While originally proposed as a short (80-page) spiral-bound textbook, the manuscript grew threefold in response to participants’ feedback and literature reviews, and was subsequently published by an academic press15. The first three chapters cover foundational issues and the remaining seven chapters focus on applied topics. Each applied chapter begins with general ethical considerations and relevant regulatory information and concludes with at least one case study. Course participants were assigned readings from the in-progress textbook to be completed prior to each class session. Table 1 lists the relevant textbook assignments.

Instructional DVDs

An educational DVD complemented each course topic and relevant textbook chapters. Experts were asked in an interview format about important topics in the field (see Table 1 for a list of faculty and topics). Each DVD unit also included discussions of the issue from the consumer perspective through excerpts from two filmed focus groups and comments by the co-investigator (J.C.).*

Course web site with an online case compendium

A Web site was created for the course (www.emhr.net). This site includes links to articles presenting the case analysis framework, lecture slides, 70 case studies, codes of ethics, research regulations, and bibliographies. Case studies can be searched using keywords or existing headings. The case studies are divided into two categories: cases that are suitable for group discussion aimed at fostering decision-making skills, and short illustrative cases that are useful in highlighting either ethical problems or best practices. While the case compendium was intended to support the EMHR course, its full development was made possible by an “RCR Resource Development” award to the project director from the U.S. Office of Research Integrity in 2003.

Course Outcomes

Enrollment and participation

The course was held twice in 2005, once in the spring semester and once in the fall semester. There were 40 total participants: 18 on-site participants and 22 distance learners. (This does not include the 10 participants in the MIMH pilot course.) Participation was defined as having registered for the class, attended at least one class session, and completed at least one of the pre-test measures for the course (described below). Completion was defined as completing a majority of class sessions and at least one of the post-test measures. We conducted Chi-square tests for significant differences between the completion rates of distance learners and on-site learners, and between fall and spring semester learners. While no statistically significant differences were found (P value = .24 for location), this was likely due to the small sample size: only 11% of onsite participants failed to complete the course, whereas 32% of distance learners (almost 3 times as many) failed to complete it. Participation statistics are reported in Table 2.

Table 2.

Enrollment and Completion Status by Location and Course Section, Ethics in Mental Health Research Training Course, Saint Louis University/Missouri Institute of Mental Health, 2005

| Participant category (no.) | No. (%) complete | No. (%) incomplete | P value* |
|---|---|---|---|
| Location | | | 0.24 |
| On-site (18) | 16 (88.9) | 2 (11.1) | |
| Distance (22) | 15 (68.2) | 7 (31.8) | |
| Section | | | 0.92 |
| Spring course (15) | 11 (73.3) | 4 (26.7) | |
| Fall course (25) | 20 (80.0) | 5 (20.0) | |

*Chi-square test.
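
The completion counts in Table 2 can be checked with a short calculation. The sketch below is illustrative rather than the authors' analysis code: it assumes a 2×2 chi-square test with Yates' continuity correction, which the article does not state but which is consistent with the reported P values. The function name `yates_chi_square_2x2` is hypothetical.

```python
import math

def yates_chi_square_2x2(a, b, c, d):
    """Chi-square test with Yates' continuity correction (an assumption here)
    for the 2x2 table [[a, b], [c, d]]. Returns (statistic, p_value), 1 df."""
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    observed = [a, b, c, d]
    expected = [row1 * col1 / n, row1 * col2 / n,
                row2 * col1 / n, row2 * col2 / n]
    # Yates' correction shrinks each |observed - expected| by 0.5 (not below 0).
    stat = sum(max(abs(o - e) - 0.5, 0.0) ** 2 / e
               for o, e in zip(observed, expected))
    # With 1 degree of freedom, the chi-square tail probability is erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value

# Rows are (complete, incomplete) counts taken from Table 2.
_, p_location = yates_chi_square_2x2(16, 2, 15, 7)   # on-site vs. distance
_, p_section = yates_chi_square_2x2(11, 4, 20, 5)    # spring vs. fall

print(round(p_location, 2), round(p_section, 2))  # prints: 0.24 0.92

# Ratio of dropout rates, distance vs. on-site ("almost 3 times as many").
print(round((7 / 22) / (2 / 18), 2))  # prints: 2.86
```

Under these assumptions the calculation reproduces the two P values reported in Table 2; without the continuity correction, the location comparison would yield a smaller P value (roughly 0.12), still above the conventional 0.05 threshold.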

Class participants had widely differing backgrounds and included psychiatrists, psychologists, social workers, epidemiologists, IRB staff and members, mental health services consumers, consumer advocates, ethicists in academic medical centers, and research coordinators. During the final class session, participants discussed their plans to use course materials and the skills they developed. None planned on delivering the course in the same format as the original training program. However, their plans included starting training programs for psychiatric researchers, conducting orientation sessions for IRB members, hosting lunchtime educational sessions using DVD units as a springboard for discussion, incorporating slides into research employee training, and adapting the course to a semester-long class for psychology majors.

Assessment

Three separate assessment tools (each described in more detail below) were used to gauge educational outcomes. Each assessment was administered to participants prior to the first course meeting as a pre-test, and an identical assessment was administered approximately 18–20 weeks later as a post-test, after completion of the penultimate session. The assessment program was determined to be exempt by the IRB at SLU after ensuring that the confidentiality of data was adequately protected and that course participants had adequate opportunity to withhold their data from publications. No participants chose to withhold their data. Participants’ responses were not anonymous during testing so that pre- and post-tests could be paired and completion tracked; however, names were not directly connected to any responses (email addresses and numerical identifiers were recorded instead) and only de-identified data were analyzed.

Basic Knowledge Assessment Form

The Basic Knowledge Assessment Form (BKAF) consisted of 20 objective questions in multiple-choice and true/false formats. The BKAF measures knowledge of widely accepted ethical norms and regulatory rules pertaining to mental health research. Pre-test and post-test scores were compared using paired-samples t-tests and non-parametric tests for small sample size (Wilcoxon rank-sum test). Ten participants were not included in this analysis because they failed to complete a post-test. The outcomes of this test are reported in Table 3. Overall, post-test scores were an average of about 7.5 percentage points higher than pre-test scores (t-test P value < 0.01). Onsite participants improved slightly more than distance learners (7.8 vs. 6.1 percentage points), but the difference was not statistically significant.

Table 3.

Assessment Outcomes for BKAF and CASA Pre- and Post-Testing, Ethics in Mental Health Research Training Course, Saint Louis University/Missouri Institute of Mental Health, 2005*

| Type of assessment (no. participants) | Pre-test | Post-test | T-test P-value | Wilcoxon P-value |
|---|---|---|---|---|
| BKAF, percent† | 75.17 | 82.71 | <0.01 | n/a |
| Section: spring (10) | 75.00 | 84.50 | 0.02 | 0.03 |
| Section: fall (20) | 75.25 | 81.00 | 0.08 | 0.07 |
| Location: on-site (16) | 74.38 | 82.19 | 0.03 | 0.02 |
| Location: distance (14) | 76.07 | 82.14 | 0.13 | 0.10 |
| CASA, score‡ | 24.56 | 22.16 | 0.06 | n/a |
| Section: spring (6) | 23.62 | 24.12 | 0.76 | 0.75 |
| Section: fall (18) | 24.88 | 21.50 | 0.04 | 0.04 |
| Location: on-site (12) | 23.17 | 20.27 | 0.06 | 0.06 |
| Location: distance (12) | 25.96 | 24.04 | 0.37 | 0.36 |

*BKAF = Basic Knowledge Assessment Form; CASA = Case Analysis Skills Assessment.

†BKAF percent: possible range was from 0 to 100.

‡CASA score: possible range was from 0 to 66.

Case Analysis Skills Assessment

We developed the Case Analysis Skills Assessment (CASA) specifically to assess ethical problem-solving skills. The CASA required participants to read a scenario in which a group therapy researcher needed to decide whether to breach confidentiality after a female participant disclosed that she was having sex with a 16-year-old male student. While the case may seem straightforward given mandatory reporting rules, the researcher had a certificate of confidentiality. Not only had she promised strict confidentiality, but her consent form did not disclose any conditions under which confidentiality would be breached. Participants were then presented with a series of questions that were meant to elicit their ability to use the case analysis and decision justification approach taught in the course. We decided to use questions to elicit this knowledge because we believed that other approaches that simply invite participants to state a decision and explain why they made the decision may fail to elicit narratives that make evident the tacit reasoning processes that experts may use (i.e., experts are often more efficient in solving problems and thus may be very terse in responding, thereby scoring artificially low on quantitative measures).

A scoring matrix was developed by a team that included two research ethicists and two research assistants who had completed the training course, as well as additional research ethics training. The matrix awarded points for: making a clear decision and providing a rationale that invoked relevant facts and ethical principles; the identification of key stakeholders, of ethically relevant facts, of the most relevant ethical and legal norms, of options, and of the benefits of various options; and efforts to reduce the infringement of relevant values. Using the scoring matrix, 2 scorers (J.M. Dueker and E.E.A.) independently graded the written responses of each participant. If the two scores fell within 4 points of each other, the scores were averaged into a final score. Scores differing by more than 4 points were re-evaluated in a meeting between both scorers. Scores were debated and changed until the reviewer scores were within 4 points of each other. These scores were then averaged and recorded as final. Score means for pre- and post-testing were compared using a paired samples t-test and the Wilcoxon rank-sum test. Sixteen participants were not included in this analysis because two had not completed a pre- or post-test and 14 had failed to complete a post-test. The score statistics are reported in Table 3. There was no statistically significant difference between overall pre-test and post-test scores; however, overall mean scores were about 2 points lower (participants performed less well) in the post-test.
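
The two-scorer reconciliation rule described above can be summarized as a small decision function. This is a minimal sketch under stated assumptions, not the authors' procedure as implemented: the function name `final_casa_score` and the example scores are hypothetical, and only the 4-point threshold and the averaging step come from the text.

```python
from typing import Optional

TOLERANCE = 4  # maximum gap between the two scorers' totals, as stated in the text

def final_casa_score(score_a: float, score_b: float,
                     tolerance: float = TOLERANCE) -> Optional[float]:
    """Average the two scorers' totals when they fall within the tolerance.

    Returns None when the scores differ by more than the tolerance, which
    signals that the scorers must meet, debate, and revise their scores
    before this function is applied again.
    """
    if abs(score_a - score_b) <= tolerance:
        return (score_a + score_b) / 2
    return None

# Hypothetical scores, for illustration only.
print(final_casa_score(22, 26))  # within 4 points: averaged to 24.0
print(final_casa_score(20, 25))  # 5 points apart: None, so re-evaluation is needed
```

Note that this rule bounds disagreement between scorers but does not itself quantify inter-rater reliability.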

Sense of Preparedness Scale

The Sense of Preparedness Scale (SPS) consisted of four scaled-response questions designed to gauge participants’ confidence or level of preparedness to address ethical issues in mental health research. Median responses for pre- and post-testing were compared using the Wilcoxon rank-sum test and the sign test. Thirteen participants were not included in this analysis because one had not completed a pre- or post-test and 12 failed to complete a post-test. The results of this assessment are reported in Table 4. The median responses of participants improved from “agree” to “strongly agree” for two items: “Know where to find answers to ethical questions” and “Good at recognizing human participants research ethical issues.” Although many pre- and post-test responses remained the same, when the response did change it was much more often in the positive direction, as indicated by the significant sign test P-values.

Table 4.

Average Sense of Preparedness Scale Assessment Outcomes, Ethics in Mental Health Research Training Course, Saint Louis University/Missouri Institute of Mental Health, 2005

| Sense of Preparedness Scale* | Pre-test | Post-test | Wilcoxon P-value | Sign test differences | Sign test P-value |
|---|---|---|---|---|---|
| Knowledge of regulations | Agree | Agree | <0.01 | 13+ 1− 13= | <0.01 |
| Confident in ability to solve ethical problems | Agree | Agree | 0.02 | 8+ 1− 18= | 0.004 |
| Know where to find answers to ethical questions | Agree | Strongly agree | 0.02 | 10+ 2− 15= | 0.004 |
| Good at recognizing human participants research ethical issues | Agree | Strongly agree | <0.01 | 14+ 1− 12= | <0.01 |

*Median values reported for a 5-point scale of agreement (strongly agree to strongly disagree).

Course evaluation

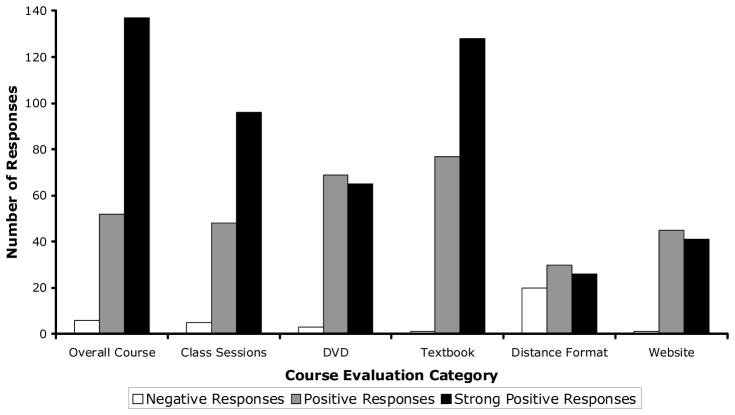

Participants also completed an evaluation at the end of the course. This form consisted of 31 scaled-response questions broken into six groups: overall course (7 items), class sessions (5 items), DVD (5 items), textbook (7 items), distance format (4 items), and website (3 items). Items were phrased positively, so in each case the response “strongly agree” indicated the most positive response. A graph of participant responses is shown in Figure 1. The median responses to all items in the overall course, class sessions, and textbook categories were “strongly agree,” except for one median response of “agree” for one item in the overall course category. A mix of “agree” and “strongly agree” median responses was given to the items in the DVD and website categories. The median responses to the distance format questions were all “agree”; however, the response “disagree” appeared in more than 25% of all responses to the questions in this category.

Figure 1.

Participants’ course evaluation responses, Ethics in Mental Health Research training course, Saint Louis University/Missouri Institute of Mental Health, 2005. Each course evaluation category consisted of 3 to 7 scaled-response statements that were all worded positively about a particular aspect of the course. Strong positive responses are responses of “strongly agree,” positive responses are responses of “agree,” and negative responses are responses of “disagree” and “strongly disagree” to these statements. Responses to these statements were grouped by category and aggregated to create this figure.

In addition to these items, an open-ended question was posed to the participants to express any other comments or concerns about the course. Nineteen participants chose to answer this question. Seven of these (37%) experienced a poor quality class video feed on the website, 5 (26%) said the distance format limited their ability to feel involved with the class, 2 (11%) said the 11:00 a.m. to 1:00 p.m. class time was inconvenient, and 2 (11%) had minor problems with the instructors and class organization. However, the vast majority (74%) commented on a very positive experience with the course. When onsite participants were asked about the possibility of offering the training in more convenient formats including online training (using QuickTime videos and online reading) or intensive 2-day formats, the group unanimously preferred the existing format.

Discussion: Lessons Learned about Training and Assessment

Our training program had several strengths that are worth noting. First, our trainees were generally very satisfied with the overall program. Many participants joined the program with rich experience in conducting and reviewing research, yet they felt they benefited from this voluntary program. Second, by incorporating mental health services consumers in the development team and in filmed discussion groups, our training program allowed mental health research participants to share their views on what is beneficent, just, and respectful in research. Third, the program produced several enduring resources that were developed by our faculty and consultants: a DVD, a textbook, and a website with case studies and other resources. Each website hit or sale of a DVD or textbook indicates potential ongoing educational impact. Finally, the course directors have already used these materials in further training programs. They conducted a 4-hour continuing education seminar at the 2006 Convention of the American Psychological Association; the project director has incorporated aspects of the training program into training plans for NIH T32 post-doctoral fellows at a local university (through a Clinical and Translational Science Award program); and the co-director has used the DVDs in training sessions with mental health services consumers who may participate in research studies. Several trainees have reported using materials within their institutions. Thus, the long-term impact of the NIH T15 grant we received is likely to be far greater than has been reported here.

Nevertheless, we learned important lessons from this experience that should be considered as future training programs are developed. First, we had fewer participants than expected despite extensive advertising efforts. For each session, we advertised via bioethics and research ethics listservs, ads in leading mental health professional periodicals (including Psychiatric News and the APA Monitor), flyers mailed to 5,000 potential participants, and emails to local training directors (e.g., psychiatric residency directors and post-doctoral training preceptors). Despite this, we averaged about 20 participants per course. On the one hand, this is not an insignificant level of participation given that the program was (a) voluntary and (b) quite time intensive. On the other hand, we would recommend that future training programs be incorporated into existing training venues, perhaps as required components. For example, our seminars could be fruitfully integrated, either in whole or in part, into ethics courses for graduate students in psychology, psychiatric residency programs, or post-doctoral research programs.

Second, we were not entirely satisfied with the distance-learning format. Though the difference was not statistically significant, nearly 3 times as many distance-learners dropped the program, and only distance-learners provided negative feedback on the course. Some of this was due to technical difficulties, but it may have also been due to the lack of face-to-face contact or the fact that our program did not offer the flexibility that many distance-learning programs offer (i.e., the live video-stream required participation at fixed times). Moreover, although distance-learners had the opportunity to interact during case discussions either through teleconferencing or emails that we checked regularly during discussions, few participants took advantage of this. Without interactivity, case discussion cannot achieve its fullest aims. While some of these concerns can be easily addressed (e.g., an online format using QuickTime videos and online readings without any interactive component could provide greater convenience and would present fewer technical challenges), the issue of interactivity is much harder to address. In our first session, we provided online chat opportunities, but no participants took advantage of them (and within a voluntary training program, their use could not be mandated).

Third, we were initially surprised by the outcomes of the CASA assessment, in which post-test scores were generally either unimproved or actually lower than pre-test scores. While the CASA is a new instrument and we do not have comparative data, we believe the initial scores were not unusually high, so it is unlikely that the failure to increase scores was due to the so-called ceiling effect. We believe that two factors were at work. First, some participants were not highly motivated to complete post-testing, for several reasons: the course was voluntary and assessment was not used to determine a “passing score”; no financial incentives were provided; and the case study was not novel, but rather the same case was used in pre- and post-testing. (We used the same case because no standardized cases exist with established difficulty levels, and our samples were too small to reliably establish the psychometric properties of the cases so as to reliably assess changes in problem-solving skills.) Second, and perhaps more importantly, the case study we presented was the most ethically problematic case in our collection. In one sense, no decision was wholly satisfactory either in terms of consequences or the ability to honor all relevant ethical and legal responsibilities. (See chapter 9 of the textbook for a detailed commentary on the case.) Perhaps as a result of the training program, participants more fully understood the competing obligations involved in the case and, accordingly, fewer participants articulated either a clear decision or a strong justification for their decision (thereby lowering their scores). Thus one plausible interpretation of CASA scores is that rather than indicating poorer problem-solving skills, they indicate a reduction in what Joan Sieber has termed “unwarranted certainty”16. This interpretation is reasonable given that assessment instruments frequently tap into multiple constructs (in this case, ethical certainty and problem-solving).

Several lessons can be learned from the CASA experience. First, it is a mistake to assume that an instrument will assess only one construct. Second, multiple case studies must be used both for the sake of novelty (motivation) and to provide participants with the opportunity to evidence skills across a variety of “ethical difficulty levels.” Third, because case-based assessment is time-consuming and requires high levels of motivation, some sort of incentive may be important, whether financial or non-financial (e.g., a grade). However, establishing validity and reliability for multiple cases will require a large number of participants and a significant financial investment because scoring cases involves qualitative data analysis and establishing reliability requires multiple scorers.

It remains true that “while widespread consensus exists on the importance of assessment for medical ethics education, there is as yet no ‘gold standard’ for measuring students’ performance”17(p. 717). Nevertheless, a potentially more promising line of assessment has been developed recently by Mumford and colleagues,18 which involves the use of case scenarios and objective item responses. In response to an ethically problematic research scenario, participants are asked to select the best 2 of 8 possible behavioral responses. While such a format cannot elicit some of the more subtle moral reasoning processes that case analysis can (at least in principle), it goes far beyond standard multiple-choice items in contextualizing assessment, eliciting problem-solving abilities, and moving beyond the naïve assumption that there is always only one right response to an ethical question. Additionally, the “pick 2” format is far easier to administer than traditional, single-answer formats and has established validity18. In assessing future training programs, we intend to build upon this framework, which presents a reasonable compromise between what is desirable in the realm of ethics assessment and what is in fact feasible.

Acknowledgments

Special thanks to the program officer, Dr. Lawrence Friedman. The authors thank Karen Bracki O’Koniewski and Angela McGuire Dunn for research and teaching assistance; Ana Iltis for assistance in developing the CASA scoring matrix; Danny Wedding for institutional support from the Missouri Institute of Mental Health; Karen Rhodes for administrative assistance; John Kretschmann for programmatic assistance; and Kelly Gregory for patiently filming and editing DVD material and providing technical support for class sessions.

The Ethics in Mental Health Research Training Program was supported by an NIH T15 training grant (T15-HL72453).

Footnotes

The DVD set, “Ethical Dialogues in Behavioral Health Research,” is commercially available; please contact the corresponding author for further information.

Contributor Information

Dr. James M. DuBois, Hubert Mäder Professor and Department Chair, Center for Health Care Ethics, Saint Louis University, St. Louis, Missouri.

Mr. Jeffrey M. Dueker, Research assistant, Center for Health Care Ethics, Saint Louis University, St. Louis, Missouri.

Dr. Emily E. Anderson, Research Associate and Education Specialist within the Institute for Ethics of the American Medical Association.

Dr. Jean Campbell, Research Associate Professor at the Missouri Institute of Mental Health in St. Louis, MO.

References

- 1. Rest JR, Narvaez D, Bebeau MJ, Thoma SJ. Postconventional moral thinking: A neo-Kohlbergian approach. Mahwah, NJ: Lawrence Erlbaum Associates, Inc; 1999.

- 2. Bebeau MJ. The Defining Issues Test and the four component model: Contributions to professional education. Journal of Moral Education. 2002;31(3):271–295. doi: 10.1080/0305724022000008115.

- 3. Gibbs JC, Basinger KS, Fuller D. Moral maturity: Measuring the development of sociomoral reflection. Hillsdale, NJ: Lawrence Erlbaum Associates; 1992.

- 4. Bebeau MJ. Moral reasoning in scientific research: Cases for teaching and assessment. Bloomington, IN: Indiana University; 1995. Available online at: www.indiana.edu/~poynter/mr-main.html. Accessed 2/20/2008.

- 5. Fisher CB. Decoding the ethics code: A practical guide for psychologists. Thousand Oaks, Calif: Sage Publications; 2003.

- 6. Koocher GP, Keith-Spiegel PC. Ethics in psychology: Professional standards and cases. 2nd ed. New York: Oxford University Press; 1998.

- 7. Steneck N. Assessing the integrity of publicly funded research. In: Steneck N, Sheetz M, editors. Investigating research integrity. Proceedings of the first ORI research conference on research integrity. Bethesda, MD: Office of Research Integrity; 2000. pp. 1–16.

- 8. Powell S, Allison M, Kalichman M. Effectiveness of a responsible conduct of research course: A preliminary study. Science and Engineering Ethics. 2007;13(2):249–264. doi: 10.1007/s11948-007-9012-y.

- 9. Macrina FL, Funk CL, Barrett K. Effectiveness of responsible conduct of research instruction: Initial findings. Journal of Research Administration. 2004;35(2):6–12.

- 10. Bebeau M, Thoma S. The impact of a dental ethics curriculum on moral reasoning. Journal of Dental Education. 1994;58(9):684–692.

- 11. Bebeau MJ, Rest JR, Yamoor C. Measuring dental students’ ethical sensitivity. Journal of Dental Education. 1985;49:225–235.

- 12. Thomasma DC, Marshall PA, Kondratowicz D. Clinical medical ethics: Cases and readings. Lanham, MD: University Press of America; 1995.

- 13. Childress JF, Faden RR, Gaare RD, Gostin LO, Kahn J, Bonnie RJ, et al. Public health ethics: Mapping the terrain. Journal of Law, Medicine and Ethics. 2002;30(2):170–178. doi: 10.1111/j.1748-720x.2002.tb00384.x.

- 14. Jennings B, Kahn J, Mastroianni A, Parker LS. Ethics and public health: Model curriculum. 2003. Available from: http://www.asph.org/document.cfm?page=723. Accessed 2/20/2008.

- 15. DuBois JM. Ethics in mental health research: Principles, guidance, and cases. New York, NY: Oxford University Press; 2008.

- 16. Sieber JE. Using our best judgment in conducting human research. Ethics and Behavior. 2004;14(4):297–304. doi: 10.1207/s15327019eb1404_1.

- 17. Goldie J, Schwarz L, McConnachie A, Jolly B, Morrison J. Can students’ reasons for choosing set answers to ethical vignettes be reliably rated? Development and testing of a method. Medical Teacher. 2004;26(8):713–718. doi: 10.1080/01421590400016399.

- 18. Mumford MD, Devenport LD, Brown RP, Connelly S, Murphy ST, Hill JH, et al. Validation of ethical decision making measures: Evidence for a new set of measures. Ethics and Behavior. 2006;16(4):319–345.