Abstract

Sign language comprehension requires visual attention to the linguistic signal and visual attention to referents in the surrounding world, whereas these processes are divided between the auditory and visual modalities for spoken language comprehension. Additionally, the age-onset of first language acquisition and the quality and quantity of linguistic input for deaf individuals are highly heterogeneous, which is rarely the case for hearing learners of spoken languages. Little is known about how these modality and developmental factors affect real-time lexical processing. In this study, we ask how these factors impact real-time recognition of American Sign Language (ASL) signs using a novel adaptation of the visual world paradigm in deaf adults who learned sign from birth (Experiment 1), and in deaf individuals who were late-learners of ASL (Experiment 2). Results revealed that although both groups of signers demonstrated rapid, incremental processing of ASL signs, only native signers demonstrated early and robust activation of sub-lexical features of signs during real-time recognition. Our findings suggest that the organization of the mental lexicon into units of both form and meaning is a product of infant language learning and not the sensory and motor modality through which the linguistic signal is sent and received.

Keywords: American Sign Language, lexical recognition, sub-lexical processing, eye tracking, age of acquisition, delayed language exposure

Introduction

Perceiving and comprehending spoken linguistic input is known to be a complex and dynamic process. During spoken word recognition, listeners interpret words by continuously evaluating information in the input stream against potential lexical candidates, activating those that share both semantic (Yee & Sedivy, 2006) and phonological (Allopenna, Magnuson, & Tanenhaus, 1998) features with the target word. These findings suggest that the mental lexicon for spoken language is organized both at the lexical and sub-lexical levels, and that linguistic input is continuously processed in real time at multiple levels. In contrast, little is known about lexical processing in sign language. Signed languages such as American Sign Language (ASL)—which are produced manually and perceived visually—are linguistically equivalent to spoken languages (Klima & Bellugi, 1979; Sandler & Lillo-Martin, 2006). Off-line experimental evidence suggests that signed languages are also organized at lexical and sub-lexical levels (Carreiras, Gutiérrez-Sigut, Baquero, & Corina, 2008; Emmorey & Corina, 1990; Klima & Bellugi, 1979; Orfanidou, Adam, McQueen, & Morgan, 2009). However, little is known about real-time lexical activation of signs, or how real-time recognition is affected by a highly delayed onset of language acquisition. In the present study, we find evidence that the mental lexicon of American Sign Language (ASL) is organized via sign sub-lexical features for deaf signers who were exposed to ASL from birth but not for deaf signers whose age-onset of first-language acquisition was delayed and whose childhood linguistic input was impoverished, suggesting that the dual architecture of words—form and meaning—arises from infant language learning.

A crucial factor in considering real-time processing of signs is the linguistic background of the language users. Except for very rare circumstances, hearing individuals are exposed to at least one language from birth and acquire it on a typical developmental scale. In contrast, the childhood linguistic experiences of deaf signers vary tremendously. Over 95% of deaf individuals are born to hearing parents (Mitchell & Karchmer, 2004) and receive their initial language exposure at a range of ages after birth. This highly atypical situation of delayed first language exposure has been associated with persistent deficits in linguistic processing, with evidence primarily coming from studies showing differential patterns of response on comprehension and production tasks in signers based on age of first language exposure (see Mayberry, 2010 for review). For example, Mayberry, Lock, and Kazmi (2002) used a sentence recall task and found that age at onset of acquisition had a significant effect on performance at all levels of linguistic structure. Similar effects have been found in studies probing grammatical processing (Emmorey, Bellugi, Frederici, & Horn, 1995; Cormier, Schembri, Vinson, & Orfanidou, 2012), and in production and comprehension of complex morphology (Newport, 1990).

Although the existence of negative effects of delayed exposure on language processing has been established, the mechanism underlying this differential performance has not been elucidated. Observed differences in performance could be attributed to the overall amount of time spent learning the language; however, this is unlikely, as such differences persist even after many years of experience using sign (Mayberry & Eichen, 1991). Alternatively, comprehension differences may reflect a fundamental difference in the organization of the mental lexicon that arises when first language exposure is delayed. Signs can be analyzed sub-lexically into discrete parameters including handshape, location, and movement (Stokoe, Casterline, & Croneberg, 1976).1 Recent studies have begun to demonstrate that late-learners show different patterns from native signers in their phonological perception of signs. For example, studies using primed lexical decision found that phonological overlap between the prime and target had a facilitative effect for signers who were exposed to ASL early, but had either an inhibitory effect (Mayberry & Witcher, 2005) or no effect (Dye & Shih, 2006) for late-learning signers. Similarly, in a sign similarity-judgment study, native signers relied primarily on phonological features to determine the similarity of two signs, while late-learners were influenced more by perceptual properties of signs (Hall, Ferreira, & Mayberry, 2012). These results suggest that late-learners of signs are not as attentive to phonology during sign perception. In contrast, other studies have shown that late-learners performing categorical perception tasks (Morford, Grieve-Smith, MacFarlane, Staley, & Waters, 2008; Best, Mathur, Miranda, & Lillo-Martin, 2010) are more likely to make multiple discriminations within a category (i.e. handshape, location), such as noticing multiple gradations of finger closure, compared to early learners, who tend to discriminate more categorically, such as noticing that the fingers are either closed or open. On sentence shadowing and recall tasks (Mayberry & Fischer, 1989), late learners are more likely to make lexical errors in which one sub-lexical parameter of the target sign is substituted for another (e.g. substituting the sign BIRD for the sign PRINT, two signs which differ only in location). These error patterns suggest that late-learners are slower to engage in deeper semantic processing of signs, or arrive at lexical semantics via a different route from native signers.

There is mounting evidence that signs are processed phonologically as well as semantically (Carreiras et al., 2008; Thompson, Vinson, Vigliocco, & Fox, 2013), and that phonological processes may operate differently for native and late-learning signers (Dye & Shih, 2006; Mayberry & Fischer, 1989). Unknown is whether sub-lexical processing occurs online, while signs are being perceived in real-time, or alternatively occurs via a post-lexical matching process after the sign is perceived. As online processing is a known correlate of vocabulary growth and other linguistic skills (Marchman & Fernald, 2008), this question has important implications for the language ability of individuals whose initial exposure to language is atypical.

We investigate these questions in the present study. In the first study, we ask if native signers show evidence of sub-lexical activation while perceiving signs dynamically in real-time interpretation. Such evidence would demonstrate both an organization and activation of the mental lexicon that is largely parallel to that of spoken language. We probed this question through a novel adaptation of the visual world paradigm (Tanenhaus, Spivey-Knowlton, Everhart, & Sedivy, 1995), in which signers’ eye-movements to a set of images are measured in response to a single ASL sign. The semantic and phonological relationship between the target and competitor images was manipulated to test when and how native signers recognize lexical and sub-lexical aspects of signs. If signers direct a greater proportion of gaze fixations to semantic competitors relative to unrelated competitors, this would suggest that semantic features of signs are activated, and if signers direct a greater proportion of fixations to phonological competitors relative to unrelated competitors, this would suggest that sub-lexical features of signs are also activated during recognition.

In a second study, we investigate the impact of delayed first language exposure on real-time sign processing. Using the same paradigm, we ask whether deaf adults who experienced delayed exposure to ASL as a first language show competitor activation patterns similar to those of the native signers. We predicted that late-learning signers would show similar rates of semantic competition as native signers, demonstrating a similar organization between groups at the lexical level, but given previous research findings regarding the sign processing of late-learners, we also predicted that they would differ from native learners in the degree to which they were impacted by phonological competition.

Experiment 1: Native signers

Methods

Participants

Eighteen deaf, native learner signers (8 female, M age = 25 years, range = 18–50 years) participated. Sixteen participants had at least one deaf parent and were exposed to ASL as their primary language from birth. The remaining two participants had hearing parents but were exposed to ASL before the age of two. Participants had a range of educational backgrounds, as follows: did not complete high school (3), high school graduate (3), some college (5), college graduate (4) and graduate degree (3).

Language measure.2

Participants completed a 142-item picture naming task, with the items consisting of all the stimulus pictures presented in the eye-tracking task. An item was scored as correct if the participant produced a sign identical to the target sign used in the eye-tracking task. This measure verified that participants shared the intended sign representation for each target and stimulus picture used in the eye-tracking task. When a participant's sign differed significantly from the intended sign (i.e. by more than one parameter), trials for which that sign served as the target were removed from analysis.

Eye-tracking materials

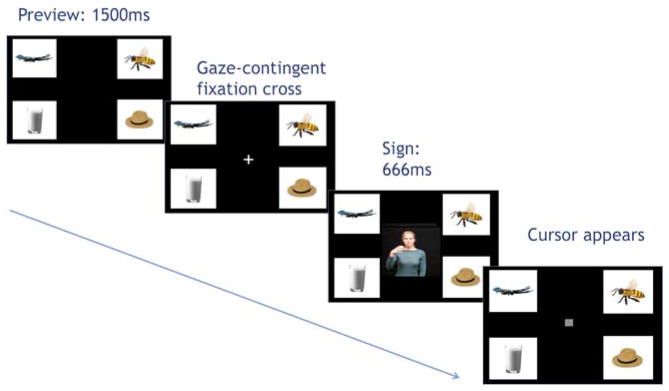

The stimulus pictures were color photo-realistic images presented on a white background square measuring 300 by 300 pixels. The ASL signs were presented on a black background square also measuring 300 by 300 pixels. The pictures and signs were presented on a 17-inch LCD display with a black background, with one picture in each quadrant of the monitor and the sign positioned in the middle of the display, equidistant from the pictures (Figure 1).

Figure 1.

Example of layout of pictures and video stimuli

Thirty-two sets of four pictures, each consisting of a target picture and three competitor pictures, served as the stimuli for the lexical recognition task. Competitor pictures were linguistically related to the target picture as follows: The Unrelated (U) Condition consisted of a target picture and three competitor pictures whose corresponding ASL signs shared no semantic or phonological properties with the target sign. The Phonological (P) Condition consisted of a target picture, a phonological competitor whose corresponding ASL sign was a phonological minimal pair with the target sign (i.e. the sign differed from the target only in handshape, location, or movement), and two unrelated competitors. The Semantic (S) Condition consisted of a target picture, a semantically related competitor, and two unrelated competitors. The Phono-Semantic (PS) Condition consisted of a target, a phonologically related competitor, a semantically related competitor, and one unrelated competitor. Each image set consisted of either all one-handed signs or all two-handed signs, with exceptions for four sets in which the phonological pairs precluded this possibility. Finally, we minimized phonological relationships between the English translations of the target and competitor items.

For the stimulus presentation, each picture set was presented twice such that each item was equally likely to appear as either a target or a competitor across versions of the stimuli sets. The pictures were further counterbalanced such that the target picture was equally likely to occur in any position. The positional relationship between the target and related competitors was balanced across trials. Finally, the order of trials was pseudo-randomized such that the first trial always fell into the Unrelated condition, and there were never more than three consecutive trials of any given condition.
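The ordering constraints described above can be illustrated with a minimal sketch. It assumes 16 trials per condition (the text reports 64 trials and four conditions, but not the per-condition count) and uses simple rejection sampling, which is not necessarily how the original trial lists were constructed. The function names are hypothetical.

```python
import random

def max_run(seq):
    """Length of the longest run of identical consecutive items."""
    if not seq:
        return 0
    longest = run = 1
    for prev, cur in zip(seq, seq[1:]):
        run = run + 1 if cur == prev else 1
        longest = max(longest, run)
    return longest

def pseudo_randomize(trials, first="U", max_consecutive=3, seed=0, max_tries=10000):
    """Shuffle until the first trial is `first` and no condition
    occurs more than `max_consecutive` times in a row."""
    rng = random.Random(seed)
    order = list(trials)
    for _ in range(max_tries):
        rng.shuffle(order)
        if order[0] == first and max_run(order) <= max_consecutive:
            return order
    raise RuntimeError("no valid order found; relax the constraints")

# Assumed design: 4 conditions x 16 trials = 64 trials
trials = [cond for cond in ("U", "P", "S", "PS") for _ in range(16)]
order = pseudo_randomize(trials)
```

Rejection sampling is convenient here because the constraints are loose enough that a valid order is found after a small number of shuffles.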

To create the stimulus ASL signs, a deaf native signer was filmed producing multiple exemplars of each target. The best exemplar of each sign was then edited to be of uniform length by removing extraneous frames at the end of the sign. In order to ensure that articulation length did not influence looking time to the sign, each sign was edited to be exactly 20 frames (666 ms) long. The onset point for each sign was defined as the first frame in which all parameters of the sign (i.e. handshape, location, and movement) were in their initial position. This meant that all transitional movement from a resting position to the initial sign position was removed to eliminate any co-articulation effects from a previous sign in a carrier phrase and any variation in transition time from a resting position to the initial sign position, such as the difference in time it takes to move the hands to the torso vs. to the face. To further control for variation among signs, the signer produced each sign with a neutral facial expression.
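The reported duration of 20 frames (666 ms) is consistent with a video frame rate of about 30 frames per second. The frame rate is not stated in the text, so it is treated as an assumption in the short conversion below.

```python
def frames_to_ms(n_frames, fps=30.0):
    """Convert a frame count to milliseconds.
    fps=30.0 is an assumption; the frame rate is not reported."""
    return n_frames * 1000.0 / fps

sign_duration_ms = frames_to_ms(20)   # about 666.7 ms
print(int(sign_duration_ms))          # prints 666
```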

Experimental task

After obtaining consent, participants were seated in front of the LCD display and eye-tracking camera. The stimuli were presented using a PC running Eyelink Experiment Builder software (SR Research). Instructions were presented in ASL on a pre-recorded video. Participants were told that they would see a set of pictures followed by an ASL sign, and that they should “click on the picture that matches the sign” with a mouse. Participants were given two practice trials before the start of the experiment. Next, a 5-point calibration and validation sequence was conducted. In addition, a single-point drift correction was performed before each trial. The experimental trials were then presented in eight blocks of eight trials, for a total of 64 trials. After each block, participants were given a break that ended when the participant clicked to proceed to the next block.

On each experimental trial, the pictures were first presented on the four quadrants of the monitor. Following a 750ms preview period, a central fixation cross appeared. When the participant fixated gaze on the cross, this triggered the onset of the video stimulus. After the ASL sign was presented, it disappeared and, following a 500ms interval, a small square cursor appeared in the center of the screen. The participants then used a mouse to drag the cursor to the picture and click on it. The pictures remained on the screen until the participant clicked on a picture, which ended the trial (Figure 2).

Figure 2.

Example of trial sequence

Eye movements were recorded using an Eyelink 2000 remote eye-tracker with remote arm configuration (SR Research) at 500 Hz. The position of the display was adjusted manually such that the display and eye-tracking camera were placed 580–620 mm from the participant’s face. Eye movements were tracked automatically using a target sticker affixed to the participant’s forehead. Fixations were recorded on each trial beginning at the initial presentation of the picture sets and continuing until the participant clicked on the selected picture. Offline, the data were binned into 10-ms intervals.
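At a 500 Hz sampling rate there is one sample every 2 ms, so each 10-ms bin holds five samples. The sketch below shows one way such binning could be done. The input format (a list of `(time_ms, aoi)` pairs) and the function name are assumptions for illustration, not the format produced by the eye-tracking software.

```python
from collections import Counter, defaultdict

def bin_samples(samples, bin_ms=10):
    """Group gaze samples into fixed-width time bins.

    samples: iterable of (time_ms, aoi) pairs, where aoi is a
             hypothetical area-of-interest label such as "sign".
    Returns {bin_start_ms: {aoi: sample_count}} ordered by bin start.
    """
    bins = defaultdict(Counter)
    for time_ms, aoi in samples:
        bin_start = int(time_ms // bin_ms) * bin_ms
        bins[bin_start][aoi] += 1
    return {start: dict(counts) for start, counts in sorted(bins.items())}

# Ten samples at 2 ms spacing (500 Hz): gaze on the sign, then the target
samples = [(t, "sign" if t < 10 else "target") for t in range(0, 20, 2)]
print(bin_samples(samples))  # {0: {'sign': 5}, 10: {'target': 5}}
```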

Overall analysis approach

The resulting eye movement data were analyzed as follows. First, accuracy on the eye-tracking task was measured and incorrect trials were removed. Time course plots were generated with the remaining trials, and the point at which looks to the target diverged from looks to all competitors was determined across conditions.3 To determine condition-specific effects, latency of the first saccade to the target was compared across conditions. Next, a representative time window was used to calculate the mean proportion of fixations to the stimulus sign, target, and competitor pictures for each condition. The analysis of fixation proportion was verified with further analyses including first target fixation duration, number of fixations to target, and number of trial fixations to all areas.

Results

Language measure

Of the 18 participants, two did not complete the picture naming task. The mean accuracy for the 16 remaining participants was 95% (range 85% to 100%).4

Eye-tracking measures

Accuracy

Accuracy (i.e. correct picture chosen) was 98.5% (range 94% to 100%). Across participants, there were 17 errors. Participants selected a phonological competitor 10 times and a semantic competitor 7 times.

Time course

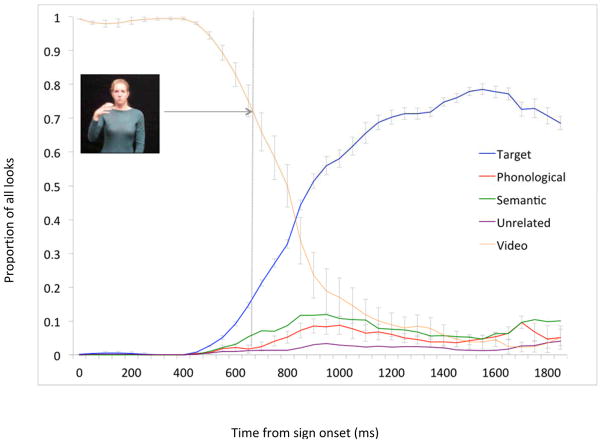

The time course of fixations towards the target and competitor images was plotted beginning at sign onset and continuing for 2000ms across all conditions (Figure 3). These plots revealed several patterns. For the first 400ms following sign onset, native signers gazed almost exclusively to the signer in the video stimulus. Beginning at 400ms (i.e. mid-sign), looks to the video began to decrease while looks to the target picture slowly increased, and by 500ms, fixations to the target diverged significantly from looks to all competitors. By about 1200ms, the peak of fixations to target was reached, and then remained at peak until approximately 1800ms, at which point target looks began to decrease steadily. From 600–1500ms, looks to the semantic and phonological competitors also increased relative to the unrelated competitors.

Figure 3.

Native signers’ time course of mean (s.e.) fixation proportions to sign, target picture, and competitor pictures 0–1800ms from sign onset. Proportions are averaged across conditions.

Saccade Latency

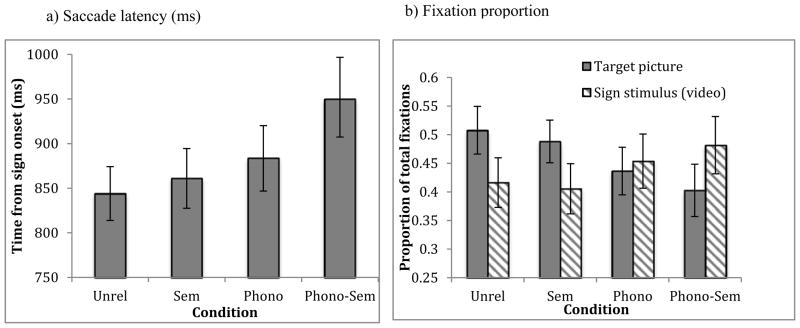

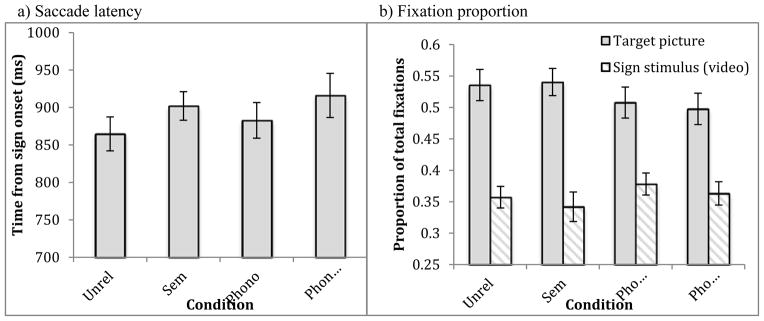

Saccade latency of looks that landed on the target picture was analyzed starting from the onset of the target sign. Participants initiated a saccade to target at 844ms in the Unrelated condition, 861ms in the Semantic condition, 884ms in the Phonological condition, and 950ms in the Phono-Semantic condition. A one-way ANOVA with condition as the independent variable yielded a significant main effect [F(3, 51) = 6.18, p < .005, η2 = .09]. Planned comparisons indicated that participants took significantly longer to launch a saccade to the target in the Phono-Semantic condition than in the other three conditions (p < .05; Figure 4a).
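As an illustration of the latency measure, the sketch below finds the first saccade launched after sign onset that lands on the target picture and returns its latency relative to sign onset. The data layout (a list of `(start_ms, landing_aoi)` pairs in trial time) and the function name are assumptions, and the example values are made up.

```python
def first_target_saccade_latency(saccades, sign_onset_ms, target="target"):
    """Latency (ms from sign onset) of the first saccade that starts
    at or after sign onset and lands on the target picture.

    saccades: list of (start_ms, landing_aoi) pairs in trial time.
    Returns None if no such saccade occurred.
    """
    for start_ms, landing_aoi in sorted(saccades):
        if start_ms >= sign_onset_ms and landing_aoi == target:
            return start_ms - sign_onset_ms
    return None

# Hypothetical trial: sign onset at 1000 ms in trial time
saccades = [(900, "sign"), (1650, "semantic"), (1844, "target"), (2500, "target")]
print(first_target_saccade_latency(saccades, sign_onset_ms=1000))  # prints 844
```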

Figure 4.

Effects of semantic and phonological competitors on a) mean (s.e.) saccade latency and b) mean (s.e.) fixation proportion in native signers.

Overall looking time

Based on the time course, we then compared mean fixation proportions across a larger time window from 600–1800 ms following sign onset. The onset point of 600 ms was chosen as this was the point at which there was a significantly higher proportion of looks to the target than to competitors across all conditions. The offset point of 1800 ms was chosen because looks to the target sharply declined after this point. In this time window, the proportion of looks to the target averaged 51% (SE = 4.2) in the Unrelated condition, 49% (3.7) in the Semantic condition, 44% (4.2) in the Phonological condition, and 40% (4.6) in the Phono-Semantic condition (Figure 4b). A repeated measures ANOVA on looking time with condition as the within-subjects factor and participants as a random variable showed a main effect of condition [F(3, 51) = 9.38, p < .001, η2 = .12]. Planned comparisons revealed that participants spent significantly more time looking to the target in the Semantic and Unrelated conditions than in the Phonological and Phono-Semantic conditions. This suggests that participants experienced more competition from the phonological competitors than from semantic or unrelated competitors. An additional analysis of looks to the video confirmed this effect. Participants looked longest at the video in the Phono-Semantic condition (48% of total looking time, SE = 5.0), followed by the Phonological condition (45%, 4.7), the Unrelated condition (42%, 4.3) and the Semantic condition (41%, 4.4). Looks to the video showed a main effect of condition [F(3, 51) = 6.99, p < .001, η2 = .10]. Planned t-tests revealed significantly more looking at the stimulus in the Phono-Semantic and Phonological conditions than in the Semantic condition (ps < .05).
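The window-based measure can be sketched as follows for a single trial, assuming gaze has already been reduced to one area-of-interest label per 10-ms bin. The input format and function name are hypothetical, and the example data are invented to show the computation rather than to reproduce any reported value.

```python
def window_proportions(bins, start_ms=600, end_ms=1800):
    """Proportion of bins spent on each area of interest in a window.

    bins: list of (bin_start_ms, aoi) pairs, one label per 10-ms bin.
    The window includes start_ms and excludes end_ms.
    """
    in_window = [aoi for t, aoi in bins if start_ms <= t < end_ms]
    if not in_window:
        return {}
    total = len(in_window)
    return {aoi: in_window.count(aoi) / total for aoi in set(in_window)}

# Invented trial: gaze on the sign until 1200 ms, then on the target
bins = [(t, "sign" if t < 1200 else "target") for t in range(0, 2000, 10)]
props = window_proportions(bins)
print(props["sign"], props["target"])  # prints 0.5 0.5
```

Per-trial proportions of this kind would then be averaged within participant and condition before being submitted to the repeated measures ANOVA.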

To determine whether competitor looking was influenced by condition, one-way repeated measures ANOVAs on looks to the related vs. unrelated competitors were conducted. As predicted, in the Unrelated condition there were no differences in looks to the competitors; participants looked at the competitor pictures an average of 1.3% of the time (range 1.0–1.9%). In the Phonological condition, participants looked at the phonological competitor 2.4% of the time and at the unrelated competitors 1.6% of the time, yet this difference was not significant. In the Semantic condition, there was an effect of competitor type [F(2, 34) = 20.77, p < .001, η2 = .27] with participants looking at the semantic competitor 4.9% of the time and at the unrelated competitors 1.2% of the time. Finally, the Phono-Semantic condition also showed an effect of competitor [F(2, 34) = 13.69, p < .001, η2 = .22]. Participants looked longer at the semantic competitor (4.6%) than at the phonological competitor (3.2%) and looked least to the unrelated competitor (1.4%).
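The degrees of freedom reported for these tests follow from the standard formula for a one-way repeated measures ANOVA with complete data: k − 1 for the effect and (k − 1)(n − 1) for the error term, where k is the number of levels and n the number of participants. The helper below is a hypothetical convenience for checking the reported values; it assumes no participants were dropped from any analysis.

```python
def rm_anova_df(n_participants, n_levels):
    """Degrees of freedom for a one-way repeated measures ANOVA
    with complete data (no missing cells)."""
    df_effect = n_levels - 1
    df_error = (n_levels - 1) * (n_participants - 1)
    return df_effect, df_error

print(rm_anova_df(18, 4))  # four conditions: prints (3, 51)
print(rm_anova_df(18, 3))  # three competitor pictures: prints (2, 34)
```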

Additional measures confirmed the effect of condition on looking behavior to the target picture in this time window. Significant differences by condition were present in the duration of the first fixation to the target picture following sign onset [F(3, 51) = 5.72, p < .005, η2 = .08], in the total number of fixations to the target picture [F(3, 51) = 3.76, p < .05], and in total fixations to all pictures [F(3, 51) = 7.90, p < .001, η2 = .11]. Participants’ looking behavior across these measures confirmed that they activated both phonological and semantic competitors (Table 1).

Table 1.

Mean (standard error) looking behavior of native ASL participants as a function of lexical competitor type.

| MEASURE (by CONDITION) | Unrelated | Semantic | Phonological | Phono-Semantic | p-level |
|---|---|---|---|---|---|
| Proportion of looking to target picture | .51 (.04) | .49 (.04) | .44 (.04) | .40 (.05) | < .0001 |
| Duration of first fixation to target picture (ms) | 562 (35.2) | 460 (27.1) | 535 (32.5) | 495 (35.0) | < .005 |
| Number of fixations to target picture | 1.5 (.09) | 1.6 (.09) | 1.4 (.08) | 1.4 (.09) | < .05 |
| Number of fixations to all pictures | 2.9 (.10) | 3.1 (.12) | 3.1 (.11) | 3.3 (.16) | < .001 |

Experiment 1 Discussion

Three important findings emerged from Experiment 1. First, native signers interpreted single signs very quickly and used minimal information to gaze towards the appropriate image. Signers did not wait until the end of the sign to shift gaze to the target, and initiated saccades to the target within 400ms following sign onset, approximately 260ms before the sign was completed. Second, phonological competition impacted saccade latency and looking time to the target; signers were slower to shift gaze and spent less time overall looking at the target in the presence of phonological competitors. Third, signers looked more to both phonological and semantic competitors than to unrelated competitors. These findings indicate that native signers process signs at both the phonological and semantic levels during real-time comprehension.

We found in Experiment 1 that native signers process signs in terms of both form and meaning in real time, showing the psychological reality of this linguistic description of ASL lexical structure as it unfolds across time. If this kind of ASL lexical processing characterizes the sign processing of all signers independent of early life experience with language, then late-learners should show evidence of phonological and semantic competition, as native signers do. However, if this group does not show sub-lexical or lexical competition, this would indicate that infant language exposure affects how lexical processing is organized in the human mind.

Experiment 2: Late-learning signers

Methods

Participants

Twenty-one individuals (12 female, M age = 31 years, range = 18–58 years) participated. Late-learning signers had diverse backgrounds with regard to the age at which they were first exposed to sign language (between age 5 and age 14) and the number of years they had been using sign language (5 to 39 years of experience); however all used ASL as their primary language. Participants had a range of educational backgrounds, as follows: did not complete high school (5), high school graduate (9), some college (4), and college graduate (3). As is typical of late-learning adult signers (Mayberry, 2010), participants in this group had a slightly lower average number of years of education than the participants in the native-signing group, due to the varied circumstances surrounding their early experiences.

Procedure

The language measure, eye-tracking materials, and experimental task were identical to those used in Experiment 1.

Results

Language measure

One participant did not complete the language task. The mean score on vocabulary production for 20 participants was 86% (range 56% to 100% accuracy).

Eye-tracking measures

Accuracy

Mean accuracy was 97% (range 89% to 100%). Of 40 errors, participants chose the phonological competitor on 23 trials, the semantic competitor on 7 trials, and an unrelated competitor on 10 trials. Incorrect trials were removed from further analysis.
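The accuracy figures in both experiments are consistent with the reported error counts if every participant completed all 64 trials. That is an assumption, since per-participant trial counts are not reported. A quick check, using a hypothetical helper:

```python
def accuracy_percent(n_participants, n_errors, trials_per_participant=64):
    """Overall accuracy in percent, assuming every participant
    completed every trial (an assumption, not a reported fact)."""
    total_trials = n_participants * trials_per_participant
    return round(100 * (1 - n_errors / total_trials), 1)

print(accuracy_percent(18, 17))  # Experiment 1: prints 98.5
print(accuracy_percent(21, 40))  # Experiment 2: prints 97.0
```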

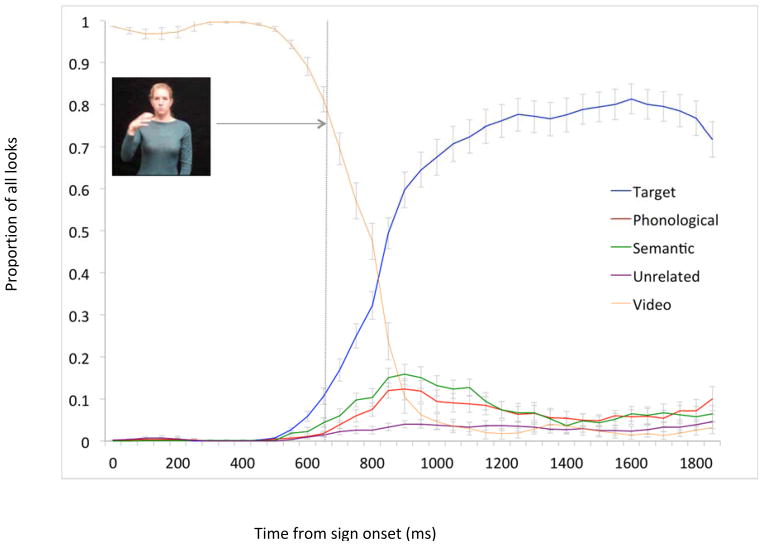

Time course

The time course of looking was plotted from 0–2000ms following sign onset (Figure 5). Participants looked almost exclusively to the video for the first 500ms, at which point looks to the video sharply decreased while looks to the target increased. At 530ms, looks to the target diverged from looks to all competitors across conditions. Following this initial delay relative to native signers, late-learners then showed a similar overall time course of looking to the video, target, and competitors as native signers.

Figure 5.

Late-learning signers’ time course of mean (s.e.) fixation proportions to sign, target picture, and competitor pictures 0–1800ms from sign onset. Proportions are averaged across conditions.

Saccade latency

Saccade latency to the target was calculated for each condition. Participants initiated saccades to the target at 863ms in the Unrelated condition, 866ms in the Semantic condition, 876ms in the Phonological condition, and 909ms in the Phono-Semantic condition (Figure 6a). A one-way repeated measures ANOVA of saccade latency by condition showed no main effect of trial condition [F(3, 60) = 1.73, p = .17]. Unlike in the native signers, the presence of phonological and semantic competitors did not affect saccade latency in this group.

Figure 6.

Effects of semantic and phonological competitors on a) mean (s.e.) saccade latency and b) mean (s.e.) fixation proportion in late-learning signers.

Overall looking time

As before, we investigated differences in looking patterns as a function of condition in a time window of 600–1800ms following target onset. Proportion of looks to the target averaged 54% (SE = 2.5) in the Unrelated condition, 54% (2.2) in the Semantic condition, 51% (2.5) in the Phonological condition, and 50% (2.5) in the Phono-Semantic condition. A one-way repeated measures ANOVA showed no significant main effect of trial condition [F(3, 60) = 1.67, p = .18]. Additional measures of looking behavior showed the same pattern, i.e. no main effect of condition, including the duration of the first fixation to the target picture [F(3,60) = 1.44, p = .24], the total number of fixations on the target picture [F(3,60) = .29, p = .93], and the total number of fixations on all pictures [F(3,60) = 1.37, p = .26]. Finally, analysis of looks to the stimulus video also showed no significant effect of condition. Participants looked to the video 36% to 38% of the time across conditions (Figure 6b). In sum, overall looking to the sign stimulus or the target picture by the late-learners showed no effects of the presence of competitors.

Despite the fact that looks to the target and the video showed no effects of condition, late-learners did show some sensitivity to competition from phonological and semantic competitors in an analysis of their looking to the competitor pictures in the 600–1800ms time window. For this analysis, we compared the proportion of fixations to each of the competitor pictures (i.e. excluding the target). In the Phonological condition, participants looked at the phonological competitor (4.4%) significantly more than at the unrelated competitors (2.3%) [F(2, 40) = 9.92, p < .001, η2 = .17]. In the Semantic condition, participants looked more to the semantic competitor (6.7%) than to the unrelated competitors (2.1%) [F(2, 40) = 24.91, p < .0001, η2 = .28]. Finally, in the Phono-Semantic condition, participants looked more to the phonological competitor (5.2%) and the semantic competitor (4.9%) than to the unrelated competitor (1.7%) [F(2, 40) = 15.38, p < .0001, η2 = .22]. In the Unrelated condition, as expected, there were no differences in looks to the competitors. Participants looked to the competitors an average of 2.4% of the time (range 2.2–2.6%). Thus late-learners did direct gaze to related competitor pictures more than to unrelated pictures, but this had no impact on their overall looking to either the target or the video across conditions.

Experiment 2 Discussion

Late-learning signers showed evidence for processing single signs in real time. Although slower than native signers, late-learning signers still initiated saccades to the target before the sign was complete. However, the pattern of performance in late-learning signers diverged from that of native signers in significant ways. Specifically, late-learners showed no effect of phonological and semantic competitors in their saccade latency or overall looking to the target. Late-learners looked just as quickly and for an equivalent amount of time to the target regardless of whether semantic and phonological competitors were present. Late-learners did show phonological competition effects in their error types and in their looks to competitor pictures. Together, these findings suggest that late-learning signers were sensitive to some relationships among signs; however, this awareness did not translate to speed of processing during on-line lexical recognition.

General Discussion

We explored how sign language is interpreted in real-time recognition and how this ability is affected by the timing of first language acquisition. Our results provide the first evidence that the mechanisms underlying real-time lexical processing in ASL among native signers are similar to those that have been observed for spoken language (Huettig, Rommers, & Meyer, 2011), in that they involve continuous activation of both lexical and sub-lexical information. This suggests that the sign language mental lexicon, like that of spoken language, is linguistically organized both semantically and phonologically. Significantly, this kind of real-time lexical processing is primarily evident when language exposure begins in infancy. Language acquisition in early life leads to organization of the mental lexicon and lexical processing in a fashion that is not evident when language is learned after early childhood.

Overall, both native and late-learning signers rapidly interpreted lexical signs on a timescale that rivals how hearing individuals interpret spoken words (Tanenhaus, Magnuson, Dahan, & Chambers, 2000). Beginning at 400–500ms following sign onset, native signers shifted gaze from the sign towards the corresponding picture. Given the presumed 200–300ms latency required to program a saccade (Haith, 1993; cf. Altmann, 2011), this suggests that signers are using information from the first 100–200ms of the sign to determine its identity. As expected, late-learning signers were slower than native signers to initiate gaze shifts, yet their initial saccades still occurred before sign offset. Our findings suggest that rapid, incremental linguistic processing is likely a modality-independent feature of word recognition and is not a unique feature of spoken language.

Like spoken words, ASL signs are characterized by a multi-layered linguistic architecture. Native signers activated both semantic and phonological features of signs during real-time processing. Moreover, the activation of phonological features appears early in the process of sign recognition, as demonstrated by increased looks to the video stimulus in the presence of phonological competitors even before participants fixated the target. As the signed lexical item unfolds in the input, native signers are clearly activating phonological, sub-lexical features, which leads to prolonged gaze to the sign, reduced gaze to the target, and increased looks to related competitors relative to unrelated ones. Our findings are consistent with recent studies demonstrating that signers activate phonological features during sign recognition (Thompson et al., 2013) and also during recognition of English words, either in print (Morford, Wilkinson, Villwock, Piñar, & Kroll, 2011) or in speech (Shook & Marian, 2012). Despite the fact that signs are perceived visually and that a greater amount of phonological information is available simultaneously, signers activate phonological components of signs in addition to semantic meaning.

In contrast to the native-signers, the late-learning signers—all of whom had many years of experience using ASL as their primary language—did not show evidence for real-time activation of sub-lexical features of sign. In the critical measure of saccade latency, which is likely to be the most indicative of online, incremental processing, late-learners showed no effects of either phonological or semantic competitors. Sensitivity to the phonological and semantic relationships among the pictures by the late-learners was evident only later in the time course of lexical recognition (i.e. after the stimulus sign had completely unfolded), and only when the measure involved either a comparison of looking time to related vs unrelated competitor pictures or a comparison of error types. These competition effects appearing late in the time course of recognition suggest that phonological features of signs may become active during post-lexical comprehension, as has been found in some behavioral tasks (Mayberry & Witcher, 2005). Nevertheless, early language deprivation appears to affect the organization of the ASL mental lexicon in a way that yields reduced early and online activation of phonological, sub-lexical features.

These findings pose important questions regarding both how and why the mental lexicon might be organized differently in individuals whose exposure to a first language is delayed. It has long been established that during infancy and early childhood, learners are optimally attuned to the dynamic patterns in their linguistic input, both in perception of the speech/sign input stream and in early productions (Goldstein & Schwade, 2008). An awareness of and sensitivity to sub-lexical units are implicit in typical acquisition and are acquired before infants produce their first words. This early and automatic analysis of the sub-units of language that occurs during typical acquisition has been proposed as a possible mechanism behind the critical or sensitive period for language acquisition. Specifically, infants have a unique capacity to detect non-native phonetic distinctions, and that capacity diminishes over the first year of life as they become attuned to their native language (Kuhl, Conboy, Padden, Nelson, & Pruitt, 2005; Werker & Tees, 1984). This capacity has also been found to occur in perception of sign phonetic units (Baker, Golinkoff, & Petitto, 2006; Palmer, Fais, Golinkoff, & Werker, 2012). In contrast, individuals acquiring a first language later in life do not go through a babbling stage (Morford & Mayberry, 2000) and instead initially acquire vocabulary items quite rapidly (Ferjan Ramirez, Lieberman, & Mayberry, 2013; Morford, 2003). These individuals must learn the sub-lexical patterns of their language simultaneously with the semantic and pragmatic functions of words. Thus, there may be a decreased awareness of phonological patterns in the lexicon and an increased focus on the semantic properties. Indeed, in the current study, the late-learners showed the greatest proportion of looks to semantic relative to unrelated competitors. The period of infancy is evidently a unique time for processing the patterns of linguistic input and establishing a mental lexicon that is organized according to these patterns.

If late-learners are less sensitive than native signers to the phonological features of language, it is important to elucidate what alternative processes may drive sign recognition in this population. Late-learners may possess a less sophisticated knowledge of the sign-symbol relationship that relies more on rote memory or on holistic perceptual features of signs. While iconicity has generally been found to have a minimal role in typical sign acquisition (Meier, Mauk, Cheek, & Moreland, 2008), more recent findings have suggested that iconicity may underlie some aspects of sign processing (Ormel, Hermans, Knoors, & Verhoeven, 2009), especially for adult learners (Baus, Carreiras, & Emmorey, 2013). Perhaps late-learners, who often rely heavily on iconic and referential gestures for communication before they are exposed to language, are more attuned to these holistic features of signs. Late-learners also acquire language at a point when their real-world knowledge is more extensive than is typically present during infant language learning, and they may rely heavily on semantic categories to map this previously acquired knowledge onto their newly acquired language. The current results provide a first step in revealing important differences in real-time recognition based on early experience; the focus now turns to the behavioral and neural mechanisms underlying these differences.

Footnotes

Some accounts of sign parameters also include palm orientation (Brentari, 1998) and facial expression (Vogler & Goldenstein, 2008).

Additional language measures were administered; however, these were not used to differentiate the participants in the current analyses.

For details on how the point of divergence was determined, see Borovsky, Elman, and Fernald (2012).

It should be noted that “errors,” in which the participant’s sign was not an exact match with the target sign for a given picture, were often due to regional variations in signers’ dialects.

Contributor Information

Amy M. Lieberman, Center for Research on Language, University of California, San Diego.

Arielle Borovsky, Department of Psychology, Florida State University.

Marla Hatrak, Department of Linguistics, University of California, San Diego.

Rachel I. Mayberry, Department of Linguistics, University of California, San Diego.

References

- Allopenna PD, Magnuson JS, Tanenhaus MK. Tracking the time course of spoken word recognition using eye movements: Evidence for continuous mapping models. Journal of Memory and Language. 1998;38(4):419–439.

- Altmann G. Language can mediate eye movement control within 100 milliseconds, regardless of whether there is anything to move the eyes to. Acta Psychologica. 2011;137(2):190–200. doi: 10.1016/j.actpsy.2010.09.009.

- Baker SA, Golinkoff RM, Petitto LA. New insights into old puzzles from infants’ categorical discrimination of soundless phonetic units. Language Learning and Development. 2006;2(3):147–162. doi: 10.1207/s15473341lld0203_1.

- Baus C, Carreiras M, Emmorey K. When does iconicity in sign language matter? Language and Cognitive Processes. 2013;28(3):261–271. doi: 10.1080/01690965.2011.620374.

- Best CT, Mathur G, Miranda KA, Lillo-Martin D. Effects of sign language experience on categorical perception of dynamic ASL pseudosigns. Attention, Perception, & Psychophysics. 2010;72(3):747–762. doi: 10.3758/APP.72.3.747.

- Borovsky A, Elman JL, Fernald A. Knowing a lot for one’s age: Vocabulary skill and not age is associated with anticipatory incremental sentence interpretation in children and adults. Journal of Experimental Child Psychology. 2012;112(4):417–436. doi: 10.1016/j.jecp.2012.01.005.

- Brentari D. A prosodic model of sign language phonology. The MIT Press; 1998.

- Carreiras M, Gutiérrez-Sigut E, Baquero S, Corina D. Lexical processing in Spanish sign language (LSE). Journal of Memory and Language. 2008;58(1):100–122.

- Cormier K, Schembri A, Vinson D, Orfanidou E. First language acquisition differs from second language acquisition in prelingually deaf signers: Evidence from sensitivity to grammaticality judgement in British Sign Language. Cognition. 2012;124(1):50–65. doi: 10.1016/j.cognition.2012.04.003.

- Dye MW, Shih S. Phonological priming in British Sign Language. In: Goldstein LM, Whalen DH, Best CT, editors. Laboratory phonology. Vol. 8. Berlin, Germany: Mouton de Gruyter; 2006.

- Emmorey K, Corina D. Lexical recognition in sign language: Effects of phonetic structure and morphology. Perceptual and Motor Skills. 1990;71(3f):1227–1252. doi: 10.2466/pms.1990.71.3f.1227.

- Emmorey K, Bellugi U, Friederici A, Horn P. Effects of age of acquisition on grammatical sensitivity: Evidence from on-line and off-line tasks. Applied Psycholinguistics. 1995;16:1–23.

- Ferjan Ramirez N, Lieberman AM, Mayberry RI. The initial stages of first-language acquisition begun in adolescence: When late looks early. Journal of Child Language. 2013;40(2):391–414. doi: 10.1017/S0305000911000535.

- Goldstein MH, Schwade JA. Social feedback to infants’ babbling facilitates rapid phonological learning. Psychological Science. 2008;19(5):515–523. doi: 10.1111/j.1467-9280.2008.02117.x.

- Hall ML, Ferreira VS, Mayberry RI. Phonological similarity judgments in ASL: Evidence for maturational constraints on phonetic perception in sign. Sign Language & Linguistics. 2012;15(1).

- Haith MM. Future-oriented processes in infancy: The case of visual expectations. Visual perception and cognition in infancy. 1993:235–264.

- Huettig F, Rommers J, Meyer AS. Using the visual world paradigm to study language processing: A review and critical evaluation. Acta Psychologica. 2011;137(2):151–171. doi: 10.1016/j.actpsy.2010.11.003.

- Klima ES, Bellugi U. The signs of language. Harvard University Press; 1979.

- Kuhl PK, Conboy BT, Padden D, Nelson T, Pruitt J. Early speech perception and later language development: Implications for the “Critical Period”. Language Learning and Development. 2005;1(3–4):237–264.

- Marchman VA, Fernald A. Speed of word recognition and vocabulary knowledge in infancy predict cognitive and language outcomes in later childhood. Developmental Science. 2008;11:F9–F16. doi: 10.1111/j.1467-7687.2008.00671.x.

- Mayberry RI. Early Language Acquisition and Adult Language Ability: What Sign Language reveals about the Critical Period for Language. In: Marschark M, Spencer P, editors. Oxford Handbook of Deaf Studies, Language, and Education. Vol. 2. 2010. pp. 281–291.

- Mayberry RI, Eichen EB. The long-lasting advantage of learning sign language in childhood: Another look at the critical period for language acquisition. Journal of Memory and Language. 1991;30(4):486–512.

- Mayberry RI, Fischer SD. Looking through phonological shape to lexical meaning: The bottleneck of non-native sign language processing. Memory & Cognition. 1989;17(6):740–754. doi: 10.3758/bf03202635.

- Mayberry RI, Lock E, Kazmi H. Linguistic ability and early language exposure. Nature. 2002;417:38. doi: 10.1038/417038a.

- Mayberry RI, Witcher P. What age of acquisition effects reveal about the nature of phonological processing. Center for Research on Language Technical Report. 2005;17:3.

- Meier RP, Mauk CE, Cheek A, Moreland CJ. The form of children’s early signs: Iconic or motoric determinants? Language Learning and Development. 2008;4(1):63–98.

- Mitchell RE, Karchmer MA. Chasing the mythical ten percent: Parental hearing status of deaf and hard of hearing students in the United States. Sign Language Studies. 2004;4(2):138–163.

- Morford JP. Grammatical development in adolescent first-language learners. Linguistics. 2003;41(4):681–721.

- Morford JP, Grieve-Smith AB, MacFarlane J, Staley J, Waters G. Effects of language experience on the perception of American Sign Language. Cognition. 2008;109(1):41–53. doi: 10.1016/j.cognition.2008.07.016.

- Morford JP, Mayberry RI. A reexamination of “Early Exposure” and its implications for language acquisition by eye. In: Chamberlain C, Morford J, Mayberry R, editors. Language Acquisition by Eye. Mahwah, NJ: Lawrence Erlbaum and Associates; 2000. pp. 111–128.

- Morford JP, Wilkinson E, Villwock A, Piñar P, Kroll JF. When deaf signers read English: Do written words activate their sign translations? Cognition. 2011;118(2):286–292. doi: 10.1016/j.cognition.2010.11.006.

- Newport EL. Maturational constraints on language learning. Cognitive Science. 1990;14(1):11–28.

- Orfanidou E, Adam R, McQueen JM, Morgan G. Making sense of nonsense in British Sign Language (BSL): The contribution of different phonological parameters to sign recognition. Memory & Cognition. 2009;37(3):302–315. doi: 10.3758/MC.37.3.302.

- Ormel E, Hermans D, Knoors H, Verhoeven L. The role of sign phonology and iconicity during sign processing: The case of deaf children. Journal of Deaf Studies and Deaf Education. 2009. doi: 10.1093/deafed/enp021.

- Palmer SB, Fais L, Golinkoff RM, Werker JF. Perceptual narrowing of linguistic sign occurs in the 1st year of life. Child Development. 2012;83(2):543–553. doi: 10.1111/j.1467-8624.2011.01715.x.

- Sandler W, Lillo-Martin D. Sign language and linguistic universals. Cambridge: Cambridge University Press; 2006.

- Shook A, Marian V. Bimodal bilinguals co-activate both languages during spoken comprehension. Cognition. 2012. doi: 10.1016/j.cognition.2012.05.014.

- Stokoe WC, Casterline DC, Croneberg CG. A dictionary of American Sign Language on linguistic principles. Silver Spring: Linstok Press; 1976.

- Tanenhaus MK, Magnuson JS, Dahan D, Chambers C. Eye movements and lexical access in spoken-language comprehension: Evaluating a linking hypothesis between fixations and linguistic processing. Journal of Psycholinguistic Research. 2000;29(6):557–580. doi: 10.1023/a:1026464108329.

- Tanenhaus MK, Spivey-Knowlton MJ, Eberhard KM, Sedivy JC. Integration of visual and linguistic information in spoken language comprehension. Science. 1995;268(5217):1632–1634. doi: 10.1126/science.7777863.

- Thompson R, Vinson D, Fox N, Vigliocco G. Is lexical access driven by temporal order or perceptual salience? Evidence from British Sign Language. In: Knauff M, Pauen M, Sebanz N, Wachsmuth I, editors. Proceedings of the 35th Annual Meeting of the Cognitive Science Society. Austin, TX: Cognitive Science Society; 2013. pp. 1450–1455.

- Vogler C, Goldenstein S. Facial movement analysis in ASL. Universal Access in the Information Society. 2008;6(4):363–374.

- Werker JF, Tees RC. Cross-language speech perception: Evidence for perceptual reorganization during the first year of life. Infant Behavior and Development. 1984;7(1):49–63.

- Yee E, Sedivy JC. Eye movements to pictures reveal transient semantic activation during spoken word recognition. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2006;32(1):1. doi: 10.1037/0278-7393.32.1.1.