Metacognition correlates with learning outcomes and student performance. In this study, the authors examined the metacognitive-regulation skills used by introductory biology students. They found that prompting students to use these skills is effective for some students, but other students need additional help with learning strategies to respond optimally.

Abstract

Strong metacognition skills are associated with learning outcomes and student performance. Metacognition includes metacognitive knowledge—our awareness of our thinking—and metacognitive regulation—how we control our thinking to facilitate learning. In this study, we targeted metacognitive regulation by guiding students through self-evaluation assignments following the first and second exams in a large introductory biology course (n = 245). We coded these assignments for evidence of three key metacognitive-regulation skills: monitoring, evaluating, and planning. We found that nearly all students were willing to take a different approach to studying but showed varying abilities to monitor, evaluate, and plan their learning strategies. Although many students were able to outline a study plan for the second exam that could effectively address issues they identified in preparing for the first exam, only half reported that they followed their plans. Our data suggest that prompting students to use metacognitive-regulation skills is effective for some students, but others need help with metacognitive knowledge to execute the learning strategies they select. Using these results, we propose a continuum of metacognitive regulation in introductory biology students. By refining this model through further study, we aim to more effectively target metacognitive development in undergraduate biology students.

INTRODUCTION

Students who reflect on their own thinking are positioned to learn more than peers who are not metacognitive. Metacognition is a critical component of education that correlates with learning outcomes (Wang et al., 1990), student performance (Young and Fry, 2008; Vukman and Licardo, 2009), and problem-solving ability (Rickey and Stacy, 2000; Sandi-Urena et al., 2011). Owing to the significant potential to impact learning, opportunities for practicing metacognition have been included in undergraduate science courses. Posing questions to prompt students to engage in metacognitive reflection is one of the most common approaches reported in the literature (Zohar and Barzilai, 2013). For example, instructors might ask their students, “Which part of this activity was most confusing for you?” Although questions like this encourage metacognition in students, it is not clear whether all undergraduates are in a position to fully benefit from this type of prompting. Optimal targeting of metacognitive skill development requires a better understanding of metacognition in undergraduate students.

Two key elements of metacognition are metacognitive knowledge and metacognitive regulation (Brown, 1978; Jacobs and Paris, 1987). Metacognitive knowledge is our awareness of our thinking. For example, students with effective metacognitive knowledge skills can differentiate between concepts they have mastered and ones they must study further. In contrast, students lacking these skills can confuse their ability to recognize vocabulary words with mastery of the material. In undergraduate biology courses, students’ perceived knowledge may not align well with their actual knowledge (Ziegler and Montplaisir, 2014), which can prevent them from spending more time learning the information (Pintrich, 2002). Metacognitive knowledge also encompasses an understanding of strategies for learning (Brown, 1987; Jacobs and Paris, 1987; Schraw and Moshman, 1995). This entails knowing what learning strategies exist, how to carry them out, and when and why they should be used.

While metacognitive knowledge includes the ability to identify what we do and do not know, metacognitive regulation involves the actions we take in order to learn (Sandi-Urena et al., 2011). Although the theoretical framework that delineates these components is well established in educational and cognitive psychology (Schraw, 1998; Bransford et al., 2000; Pintrich, 2002; Veenman et al., 2006; Zohar and Barzilai, 2013), biologists may not be as familiar with metacognitive regulation. Metacognitive regulation is how we control our thinking to facilitate our learning. For example, students with effective metacognitive-regulation skills can select appropriate learning strategies for a task and modify their approaches based on outcome. In contrast, students who plan to do “more of the same” after earning a poor grade on an exam lack these skills.

Metacognitive regulation is also a significant part of self-regulated learning (Zimmerman, 1986; Schraw et al., 2006). Self-regulated learners have the ability to: 1) understand what a task involves, 2) identify personal strengths and weaknesses related to the task, 3) create a plan for completing the task, 4) monitor how well the plan is working, and 5) evaluate and adjust the plan as needed (Ambrose, 2010). These abilities can form a cycle, and the last three processes (planning, monitoring, and evaluating) are key metacognitive-regulation skills (Jacobs and Paris, 1987; Schraw and Moshman, 1995; Schraw, 1998). The extent to which students use these skills is affected by their beliefs about learning and intelligence (Ambrose, 2010). For example, students who believe intelligence is fixed (Dweck and Leggett, 1988) are less likely to evaluate and adjust their plans for learning than students who believe intelligence can be developed over time and through effort.

Metacognition develops throughout the course of a person’s life (Alexander et al., 1995). Children first become aware of metacognition between the ages of three and five as “theory of mind” develops (Flavell, 2004). At this stage, a child begins to understand that someone else’s thoughts may be different from his or her own (Lockl and Schneider, 2006). Children use nascent metacognitive-regulation skills such as planning while playing (Whitebread et al., 2009), but they do not use these abilities for academic purposes until ages eight to ten (Veenman and Spaans, 2005). From this point, metacognitive regulation grows linearly through middle and high school (Veenman et al., 2004) and is thought to advance well into adulthood (Kuhn, 2000; Vukman, 2005). Therefore, metacognition is likely to be an area of ongoing development for young adults. While we know that awareness and control of thinking progress over time, we do not know the important stages that occur in the metacognitive development of undergraduate students (Dinsmore et al., 2008; Zohar and Barzilai, 2013).

To enhance student learning through improved metacognition, we need to characterize the key transitions that occur as undergraduates acquire metacognitive-regulation skills. As a first step toward identifying these transitions, we asked what metacognitive-regulation skills are evident in undergraduates taking an introductory biology course. We used the task of preparing for an exam as a vehicle for examining metacognition. We reasoned that students would see this as an important endeavor that would merit their reflection. Our primary interest was in the metacognitive-regulation skills students used while preparing for an exam rather than the particular study strategies they selected. To this end, students were given a self-evaluation assignment after the first exam in the course and a follow-through assignment after the second exam. We used qualitative methods to analyze assignments for student statements of monitoring, evaluating, and planning. On the basis of these data, we propose a continuum of metacognitive-regulation development in introductory biology students. We use this working model to generate hypotheses for further study and to make suggestions for instructors interested in facilitating student metacognition.

METHODS

Participants and Context

Participants were students in an introductory biology lecture and lab course at a public land-grant university with an RU/VH Carnegie Foundation classification (research university with very high research activity). BIOL107, Introductory Biology, focuses on cell biology and genetics, and is one part of a yearlong introductory series that can be taken in any order. One semester of college chemistry is the only prerequisite. The course serves ∼350–450 students each semester through one lecture section taught by a single professor and 18–22 lab sections taught by graduate teaching assistants (TAs). The majority of the students are freshmen and sophomores, but juniors and seniors also take the course. Although BIOL107 is intended for science and pre-professional majors, some nonmajors take it as a general education requirement.

All BIOL107 students were given the metacognition assignments described below, because one of the course goals is for students “to develop an approach to the study of biology that will facilitate success in future courses.” Only students who were 18 years or older, gave written informed consent, and completed both assignments were included in this study. In the Fall 2013 semester, 245 of the 346 students who completed BIOL107 met these criteria (n = 245 for this study). One student was not included because he was a minor, 18 students did not sign the consent form, and the remaining nonparticipating students did not turn in one or both of the assignments (described in the following section). Each assignment was worth five points or 0.7% of the total course grade (for a total of 10 points or 1.4%), and this may not have been enough of an incentive for students to complete both assignments. The Washington State University Institutional Review Board declared this study exempt (IRB 12702).
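The participant accounting above can be checked with a few lines of arithmetic. The sketch below uses only the counts stated in the text; the number of students who did not turn in one or both assignments is not reported directly, so the value computed here is derived and should be read as an inference, and the variable names are illustrative.

```python
# Participant accounting for Fall 2013, using only figures stated in the text.
completed_course = 346   # students who completed BIOL107
included = 245           # met all inclusion criteria (n for this study)
minors = 1               # excluded: under 18 years old
no_consent = 18          # excluded: did not sign the consent form

excluded = completed_course - included

# Derived, not reported in the text: students missing one or both assignments.
missing_assignment = excluded - minors - no_consent

# Share of course completers who were included in the study.
inclusion_rate = round(100 * included / completed_course, 1)

print(f"excluded: {excluded}")
print(f"missing one or both assignments (derived): {missing_assignment}")
print(f"share of course completers included: {inclusion_rate}%")
```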

Metacognition Assignments

After the first exam in the course, students were given a self-evaluation for exam 1 assignment (E1-SE, see Supplemental Material, Appendix 1). This two-page assignment included three short-answer and six open-ended questions/prompts written to encourage students to monitor and evaluate the strategies they used to prepare for exam 1 and to create a study plan for exam 2. As part of the assignment, students read a list of study strategies used by students who earned a grade of “A” on exams in previous semesters of the course (see Supplemental Material, Appendix 3). This list included all of the methods provided by the “A” students; more “passive” approaches were not filtered out. On E1-SE, students were asked to consider including one or more of these strategies in their study plans for exam 2. This assignment was piloted in the Spring 2013 semester and modified slightly following initial data analysis.

A second assignment was created based on preliminary data collected from Spring 2013, which indicated that nearly all students were willing to modify their study plans in some way. Following the second exam, students were given an exam 2 follow-through assignment (E2-FT, see Supplemental Material, Appendix 2) designed to determine whether they spent more time studying for exam 2 and the extent to which they followed their study plans. This one-page assignment was composed of two short-answer and three open-ended questions/prompts, and it also asked students to create a study plan for exam 3. Students had copies of their completed E1-SE assignment when answering these questions. They were given 1 wk to complete each assignment, and graduate TAs collected the assignments. Students earned full credit for completing each assignment (five points each, 10 points total); points were not awarded based on the quality of their answers. Together, E1-SE and E2-FT constituted only 1.4% of the total course grade.

Qualitative Data Analysis

E1-SE.

Students’ metacognition assignments were studied using content analysis. In essence, we used students’ words to make inferences about their levels of metacognitive regulation. First, we read all of the E1-SE assignments with metacognitive regulation in mind. Initially, we used magnitude codes (Weston et al., 2001; Saldaña, 2013) to score students’ statements about metacognitive regulation as high, medium, or low; however, it was difficult for us to distinguish among the three levels. Instead, we developed a coding system in which we rated the students’ statements as either “sufficient/provides evidence” or “insufficient/provides no evidence” (Tables 1 and 2). These ratings were assigned to each student for: 1) monitoring exam 1 strategies and 2) evaluating exam 1 strategies when planning for exam 2. We coded evaluating and planning together, because we did not specifically ask them to rate their exam 1 study plan. Instead, we asked them to select learning strategies for exam 2 (planning) using insights from their exam 1 experiences (evaluating). We also coded direct student quotes that exemplified the range of metacognitive regulation students reported using, and we coded statements that described any actions the students reported taking (Saldaña, 2013). Two authors (J.D.S. and N.C.C.) coded ∼10% of the assignments before discussing them for approximately 2 h and developing an initial codebook. Another 10% of the assignments were coded and discussed, and the codebook was revised. Once the codebook was fully developed, we coded the remaining assignments in ∼20% increments, with discussions taking place after each increment. The process was iterative, and assignments were recoded whenever the codebook was revised. The final codes for each assignment were compared, and in cases of disagreement, we discussed them until we were able to come to consensus.

Table 1.

Qualitative analysis of monitoring learning-strategy effectiveness for exam 1 (E1-SE)^a

| Monitoring code | Percentage of students | Example student response and content analysis notes |
|---|---|---|
| Sufficient evidence | 49.0 (120/245) | Quote: “I don't feel that my flashcard method worked because I focused on terms instead of concepts.” Notes: student identifies a learning strategy that was not effective and provides a specific explanation for why it was not effective. |
| Insufficient evidence | 51.0 (125/245) | Quote: “It's not necessarily study strategies didn't work for me, it's that I didn't try enough. I need to try more!” Notes: student does not identify any learning strategies that were effective or ineffective and provides an ambiguous reason for exam results. |

^a To examine monitoring of learning-strategy effectiveness, we asked students to respond to two prompts focused on the approaches that worked and did not work for exam 1 (see Results). Using content analysis, we coded students’ responses as providing sufficient or insufficient evidence of monitoring. The percentage and number of students in each category are shown (n = 245).

Table 2.

Qualitative analysis of evaluating and planning for exam 2 (E1-SE)^a

| Evaluating and planning code | Percentage of students | Example student response and content analysis notes |
|---|---|---|
| Sufficient evidence (willing to change) | 44.9 (110/245) | New strategy selected: reviewing notes after each class. Quote: “This will allow me to focus on areas where I am struggling and ask questions sooner.” Notes: student is willing to change his study plan for exam 2. He selects a new strategy and provides a reason based on his exam 1 experience. |
| Insufficient evidence (willing to change) | 53.5 (131/245) | New strategies selected: pursue free tutoring on campus and join a study group. Quote: “Anything can help if you have not yet received a hundred percent.” Notes: student is willing to change his study plan for exam 2; however, he selects two new strategies without providing any reasons based on his exam 1 experience. |
| Insufficient evidence (unwilling to change) | 1.6 (4/245) | New strategy selected: not applicable. Quote: “I got 78% with NO studying. I think I will be ok. I’ve taken tests before.” Notes: student is not willing to change her study plan for exam 2 and is not reflecting on her exam 1 experience. |

^a We posed two prompts and one question to assess whether students reflected on their exam 1 experiences and adjusted their study plans for exam 2 accordingly (see Results). We used content analysis to code student responses as providing sufficient or insufficient evidence of evaluating and planning (n = 245). A small percentage of students (1.6%) reported that they would not do anything differently for exam 2. These students are shown in the bottom category “Insufficient evidence/unwilling to change.”

In qualitative research, the researchers are the instruments, and they act as filters through which the data are analyzed (Bogdan and Biklen, 2003; Denzin and Lincoln, 2003; Yin, 2010). In this study, the researchers who coded the assignments came to the data with different perspectives: as an instructor (J.D.S.), as a graduate TA (I.J.G.), and as an undergraduate student (N.C.C.). We found that each of us could bring insights to the data. Guided and checked by a researcher with extensive training and experience in qualitative methods (X.N.N.), we completed multiple cycles of coding the data separately and then discussing the data together as described earlier. This approach allowed us to discover nuanced details that would have been overlooked if our primary goal had been to calculate interrater reliability (Bogdan and Biklen, 2003; Denzin and Lincoln, 2003, 2005).

E2-FT.

Two authors (J.D.S. and I.J.G.) used the same content analysis process described above to code E2-FT. We noted when students reported that they spent more time studying for exam 2 than exam 1. We also coded for evidence that they followed the key features of the study plans for exam 2 that they outlined in E1-SE. Student statements were coded as “yes/followed plan” or “no/did not follow plan.” Because we had no independent way to verify whether students actually spent more time or followed their plans, we gave them the benefit of the doubt when we were not certain. For example, if a student said he or she followed the plan and provided no information to the contrary, we coded the E2-FT with “yes/followed plan.”

RESULTS

To study the extent to which metacognitive-regulation skills were evident in introductory biology students, we examined two metacognition assignments related to exam preparation. As described in the Methods, the E1-SE was given after exam 1, and the E2-FT was given after exam 2. Owing to our interest in understanding metacognitive regulation, we focused on evidence of students’ monitoring, evaluating, and planning of learning strategies rather than the learning strategies themselves.

Monitoring Exam 1 Learning Strategies

We examined students’ ability to monitor the effectiveness of their learning strategies. This skill should not be confused with monitoring of conceptual understanding, which is another important metacognitive skill (see Evaluating Exam 1 Study Plan While Planning for Exam 2). After describing their approaches to studying for exam 1, students were asked to respond to two prompts: 1) “Now that I have seen the grade I earned on exam one, these are the study strategies that I feel worked well for me, and I plan on using them again for exam two” and 2) “Now that I have seen the grade I earned on exam one, these are the study strategies that I feel did not work well for me, and I don’t plan on using them again for exam two.” We found evidence of strategy monitoring in 120 of 245 assignments (49.0%; Table 1). For example, two students referred to their use of note cards. One student explained why note cards were helpful for her, while another student explained why flashcards were not effective for her.

In response to what worked: “Doing note cards made me read through the material and put it into my own words so that I could understand it.”

In response to what did not work: “I don’t feel that my flashcard method worked because I focused on terms instead of concepts.”

As another example of monitoring learning strategies, several students explained why “looking over,” “going over,” or “skimming” course materials such as the textbook, class notes, and online homework assignments were not effective strategies:

In response to what did not work: “I feel just looking at lecture slides did not help me. I felt that time would be better spent to quiz myself and see what I don’t know.”

In response to what did not work: “When I would look at notes and homework and not write anything down to study from. I need to be interactive with the material to fully grasp the concepts.”

While 120 of 245 students provided evidence that they monitored the effectiveness of individual learning strategies, the other 125 students (51.0%) listed their approaches but did not provide any explanation for why a particular strategy did or did not work for them (Table 1). Within the group that did not provide evidence of monitoring, an important theme emerged. Seventy-five students expressed that they did not need to use different learning strategies; they only needed to spend more time studying.

In response to what worked: “I feel my strategies were sound I just need to begin studying earlier.”

In response to what did not work: “I must start studying earlier! I think my strategies were good, but my time spent was poor.”

In a few cases, students reported that the strategies they used for exam 1 did not work, but they still included them in their plans for exam 2. These students seemed to know that they should try something different but did not do so for reasons that could not be discerned.

In response to what worked: “After seeing my grade, these study strategies obviously were not helpful because I didn’t do well at all.”

This student did not provide evidence of monitoring her exam 1 learning strategies. Interestingly, in the same assignment, the same student said:

In response to what didn’t work: “I plan on using all the same things but adding other strategies on top of that.”

A related theme was some students’ inability to gauge their own exam performance. Sixteen students specifically described this problem.

“I felt like I did great on (exam one) but my grade doesn’t reflect it.”

“I feel like nothing I did worked because I felt very confident before and after the exam; however, I ended up getting a 65%.”

The difference between how students predict they performed on an exam and how they actually performed is directly related to metacognition (Pieschl, 2009; Ziegler and Montplaisir, 2014). Students lacking metacognitive knowledge cannot accurately judge what they know and, more importantly, what they do not know. This inability can be a barrier to learning. For example, if students believe they have mastered concepts because they recognize terms, this may cause them to underprepare for an exam and then overestimate their performance.

In summary, we found evidence of learning-strategy monitoring from 49.0% of the students. These students could explain why an approach they took was successful for them or not. The other 51.0% of the students did not provide evidence that they were monitoring the effectiveness of individual strategies they used. In many cases, students pointed to time rather than approach as being the most important factor.

Evaluating Exam 1 Study Plan While Planning for Exam 2

We wanted to know whether students at this level could reflect on their study plans for exam 1 and adjust them based on their performance. We asked students to respond to two statements: 1) “A compiled list of study strategies used by students who earned high grades on Biology 107 exam 1 in past semesters is posted (online). After reading this document, I might try the following new study strategy for exam two” and 2) “The reason I think this may be helpful is.” Next, we asked students: “Besides what you already wrote, what else do you plan to do differently for exam two now that you have the experience of taking exam one?” Surprisingly, 241 out of 245 students (98.4%) were willing to change their initial study plan. Only four out of 245 (1.6%) reported that they would not change their plans in any way. One of these four students stated this was because she earned 100% on exam 1. While almost all students selected at least one new learning strategy, only 110 students out of 245 (44.9%) indicated that their selection was based on their exam 1 experiences (Table 2).

New strategy selected: “Write detailed answers to the study questions every week.”

In response to why this would be helpful: “It will make studying for the exam easier because I will already understand the key concepts that I might only need to briefly review later rather than ‘study.’”

New strategy selected: “Take my own detailed notes.”

In response to why this would be helpful: “I will retain the information in a way that I understand rather than copying down the notes word for word.”

Forty students recognized that a new strategy they selected could help them monitor conceptual understanding (a skill different from but related to monitoring effectiveness of learning strategies), so that they could identify concepts they had not yet mastered.

New strategy selected: self-testing

In response to why this would be helpful: “I think (this technique) will help me because just simply running over the material is not enough. I need to practice without looking at notes to see what I know and what I need to spend more time on.”

New strategy selected: review notes after each class

In response to why this would be helpful: “This will allow me to focus on areas where I am struggling and ask questions sooner.”

The remaining students, 135 out of 245 (55.1%), did not provide evidence that they were reflecting on and adjusting their plans based on exam 1 performance (Table 2). Often, these students did not seem to know why a new strategy might be effective.

New strategies listed: review notes weekly, review study questions, and take the practice test

In response to why these would be helpful: “These are all things I didn’t do and got a poor grade, if I do what people did that got an A it can only help.”

New strategies listed: pursue free tutoring on campus and join a study group

In response to why a new strategy would be helpful: “Anything can help if you have not yet received a hundred percent.”

In summary, very few students were unwilling to do something different for exam 2. We found that 44.9% of students could evaluate their study plans for exam 1 and adjust their plans for exam 2 accordingly. A theme that emerged from these students was monitoring of conceptual understanding. Among the other 53.5% of students who changed their plans for exam 2 were several students who did not describe why a new strategy might be helpful to them.
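The percentages reported in this section can be reconciled with the counts in Table 2 using simple arithmetic. The following is a minimal sketch, not part of the original analysis; the dictionary keys and the `pct` helper are illustrative names, and only counts stated in the text are used.

```python
# Reconcile the evaluating-and-planning percentages with the Table 2 counts.
N = 245  # students included in the study

# Counts per coding category, as reported in Table 2.
counts = {
    "sufficient_willing": 110,
    "insufficient_willing": 131,
    "insufficient_unwilling": 4,
}

def pct(k, n=N):
    """Return k as a percentage of n, rounded to one decimal place."""
    return round(100 * k / n, 1)

# Students willing to change their plans, regardless of evidence.
willing = counts["sufficient_willing"] + counts["insufficient_willing"]

# All students without sufficient evidence of evaluating and planning.
insufficient = counts["insufficient_willing"] + counts["insufficient_unwilling"]

for label, k in counts.items():
    print(f"{label}: {k}/{N} = {pct(k)}%")
print(f"willing to change: {willing}/{N} = {pct(willing)}%")
print(f"insufficient evidence overall: {insufficient}/{N} = {pct(insufficient)}%")
```

This makes explicit that the 55.1% of students without sufficient evidence combines the 53.5% who were willing to change with the 1.6% who were not.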

Student Realizations about Studying after Exam 1

While coding E1-SE, we found two common realizations made by students in the process of reflecting on exam 1. Both are related to the self-regulated learning skill of understanding the task. The first theme was the realization that engagement with the material is required. Thirty-three students (13.5%) recognized that success in the course would require active approaches to learning.

In response to what did not work: “Just attending class and doing the homework is not enough.”

In response to what did not work: “Only studying the content and not applying them [sic] to understand completely. Studying concepts individually and not connecting them to make sense.”

The second theme was the realization that more exposure to the material is required. More than half of the students (55.5%) saw the need to spend more time with the material, yet many still focused on retention of information.

“I need to not just cram for the exam, if I can at least grasp all the info before an exam by studying more my (study) sessions would be more a review than trying to learn everything over a weekend.”

“Your brain cannot remember information over a two day period. Spreading the time out allows for less stress and more memorization and understanding.”

Both of these realizations could further metacognitive regulation, because not only did the students recognize that their study plans were not effective, but they were also beginning to understand why they were not effective. This understanding may have helped them select strategies aimed at increasing engagement and exposure. Additionally, these realizations relate to the self-regulated learning skill of being able to assess the assignment. In this case, students were beginning to understand the nature of the exams in the course and what these assessments might require of them.

Time Spent Studying for Exam 2

Because the need to devote more time to studying was a common statement on the exam 1 self-evaluation, we asked students if they spent more time preparing for exam 2 than they did for exam 1. On the E2-FT, 183 of 245 students (74.7%) said they spent more time studying for exam 2. When asked how they were able to spend more time, students reported that they started earlier, scheduled time to study, and spread their studying out over time.

“Instead of only doing work for one class for an extended period of time I would switch between classes so that I studied each over a longer period of time.”

Some students mentioned that they were motivated to improve their grades, which allowed them to make studying a priority.

“I was able to put more time in because I made more time for studying and cut off some free time I was doing nothing with.”

Sixty-two students (25.3%) said they did not spend more time. While a few mentioned this was because they did not need to, most of these students gave other reasons. Common factors included work, illness, other classes, and factors outside of school. Some indicated that they did not make studying a priority.

In response to why more time was not spent: “Time escaped me.”

“I didn’t have much time and didn’t get around to it when I did have time.”

Following the experience of exam 1, most students were able to spend more time studying for exam 2. We were curious to know whether following a new study plan would be as achievable.

Study Plan for Exam 2

We were surprised that 241 students (98.4%) reported on E1-SE that they would change their exam 1 study plans to prepare for exam 2. On E2-FT, we asked students whether they followed their study plans for exam 2, and if so, how they were able to do so. We coded for evidence that they used the learning strategies they outlined in the E1-SE assignment. We found that 125 out of 245 students (51.0%) followed key parts of their plans. Although students were generally unable to explain the mechanism that enabled them to change, some described a commitment to studying as an important factor.

“I made myself follow my study plan. I went to the library and told myself that I could not leave until I had studied for two hours, not counting breaks in concentration. I did this every day leading up to the test starting the Friday before.”

“I actually sat down and studied, while on exam one, I never actually studied because I thought I knew the material.”

Other students described a mind-set or motivation that may have allowed them to try new learning strategies:

“I felt that by changing some of the ways I study it would help me more than just studying longer.”

“I was not happy about the first exam’s result so I was motivated to increase my grade. Thus, I studied more materials.”

The other 49.0% (120 out of 245 students) did not provide evidence that they followed their study plans for exam 2. When asked why they were not able to, some students explained that they thought they did not need to.

“Although I planned to read over the questions and do the study questions every week, instead I assumed I understood it because I could explain it but I struggled applying it.”

Some students reverted to using learning strategies for exam 2 that they previously said did not work for them on exam 1. Other students selected more active approaches to studying on E1-SE and then replaced them with more passive approaches. For example, on E1-SE, one student planned to test his understanding of the material by taking the practice test without notes and answering the study questions without notes for exam 2, but reported on E2-FT that he decided to watch online biology videos instead. Other students gave reasons why their plans were not easy to follow.

“I didn’t read as much of the textbook as I would have liked. It’s super painful to read.”

“I studied a little more but if I study too far in advance, I can’t remember the material I studied.”

Data from E2-FT suggest that while spending more time studying was doable for most students, trying a new learning strategy was harder to carry out. Most students were willing to change, but because changing how one studies is difficult, they may need more help to do so.
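The proportions reported in this section can be rechecked from the raw counts. The short sketch below is only an arithmetic check, not part of the study's analysis; the dictionary labels and the `percent` helper are hypothetical names introduced here for illustration, while the counts and the total of 245 students are taken from the text.

```python
# Counts reported in the text; 245 is the number of students in the study.
N_STUDENTS = 245

# Labels are illustrative paraphrases, not the coding categories used by the authors.
reported_counts = {
    "did not spend more time studying": 62,
    "planned to change study plan (E1-SE)": 241,
    "followed key parts of plan (E2-FT)": 125,
    "no evidence of following plan (E2-FT)": 120,
}


def percent(count: int, total: int = N_STUDENTS) -> float:
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


percentages = {label: percent(count) for label, count in reported_counts.items()}

for label, value in percentages.items():
    print(f"{label}: {value:.1f}%")
```

Run as written, this reproduces the reported values of 25.3%, 98.4%, 51.0%, and 49.0%, and the two E2-FT groups (125 and 120 students) together account for all 245 students.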

DISCUSSION

We studied metacognition in undergraduates by using exam preparation as a mechanism for investigating their monitoring, evaluating, and planning skills. We found evidence that approximately half of the students (49.0%) monitored the effectiveness of the learning strategies they used for exam 1 (Table 1). These students could identify strategies that were helpful and unhelpful, and they provided explanations for their answers. While monitoring, some students gained a better understanding of the task, which is another important self-regulated learning skill (Ambrose, 2010; Meijer et al., 2012). Students reported that more engagement with the material was required (13.5%), and greater exposure to the concepts was necessary (55.5%). These realizations are valuable, because they prime students to use metacognitive-regulation skills such as planning more effectively. The rest of the students (51.0%) were not able to monitor the effectiveness of their learning strategies and usually wrote ambiguous statements to explain why all or none of their approaches worked. This is not surprising, given that monitoring is a skill that develops later in life than evaluating and planning (Schraw, 1998), and it can be weak even in adults (Pressley and Ghatala, 1990; Alexander et al., 1995).

Although the monitoring data were interesting, what was most intriguing was the fact that nearly all of the students (98.4%) were willing to select new learning strategies as part of their exam 2 study plans on the E1-SE. As researchers who are invested in teaching, we found this openness to change encouraging. It suggests that the students were beginning to reflect on their approach to studying, which is a first step toward using metacognition to regulate learning. While analyzing student responses for evidence of evaluating and planning, we found that 53.5% of the students did not seem to know which new strategies would be appropriate, despite a willingness to change (Table 2). They selected strategies from the list provided, but they could not give a reason why alternative approaches might be helpful to them (Table 2, Evaluating and planning insufficient evidence/willing to change). Conversely, we were impressed by the percentage of students (44.9%) who could reflect on their first study plans and select strategies based on their exam 1 experiences (Table 2, Evaluating and planning sufficient evidence).

Because metacognitive regulation involves the actions we take to learn (Sandi-Urena et al., 2011), we wanted to know whether students who reflected on exam 1 and adjusted their plans for exam 2 carried out the new plans they made. As part of the E2-FT, we asked whether or not students followed their study plans. We found that only half of the students reported following the key parts of their plans that related to their exam 1 experience. When the other half was asked why they did not follow their plans, these students explained that they did not need to change and/or they did not know how to change. This fits with previous studies showing that introductory biology students do not use active-learning strategies if their courses do not require them to (Stanger-Hall, 2012) and will not use deep approaches to learning if they do not know how to (Tomanek and Montplaisir, 2004). We conclude that prompting students to use metacognitive-regulation skills is enough for some students to take action, but others need additional instruction in order to respond optimally (Zohar and Barzilai, 2013).

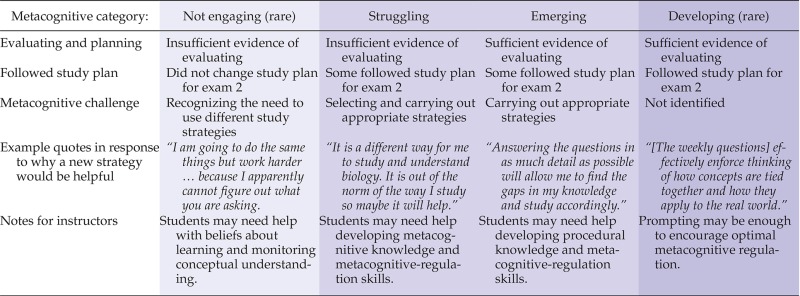

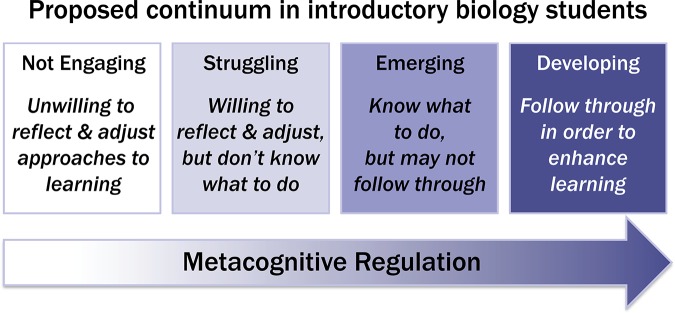

Using our data, we have outlined a working model of the potential categories of metacognitive-regulation development observed in this population of undergraduates. We suggest four possible metacognitive-regulation categories: “not engaging,” “struggling,” “emerging,” and “developing” (Table 3 and Figure 1), which we propose exist along a continuum. We then use the data to generate hypotheses, make predictions, and provide suggestions for instructors.

Table 3.

Proposed continuum of metacognitive regulation in introductory biology students

Note: Using data from the E1-SE and the E2-FT assignments, we propose a continuum of metacognitive regulation in introductory students and make suggestions for instructors on how to help students in each category.

Figure 1.

We propose four categories of metacognitive regulation in introductory biology students based on our data. Most of the students in this study were in the “struggling” through “emerging” parts of the continuum.

“Not Engaging” in Metacognitive Regulation

Very few students were unwilling to change any parts of their study plans. We placed these students in a category in the continuum that we describe as “not engaging” in metacognitive regulation (Table 3). They saw no reason to alter their approach to studying, and therefore did not select new learning strategies. Their plans for exam 2 remained the same as for exam 1. Although we did not set out to study agency and self-efficacy, these ideas appeared in the students’ assignments. Briefly, agency refers to the belief that learning is your responsibility (Baxter Magolda, 2000), while self-efficacy is the belief that you are capable of learning (Bandura, 1997; Estrada-Hollenbeck et al., 2011; Trujillo and Tanner, 2014). Both agency and self-efficacy have the potential to affect students’ metacognitive regulation. We found evidence that students in the “not engaging” category felt they were capable of learning but did not necessarily see it as their responsibility:

“I clearly could not apply the knowledge to the type of questions you asked. Rather than straight forward questions that I can confidently answer correctly, these are curveball questions that do not test my knowledge but how well I can interpret the meaning of the question.”

Hypothesis: Students “Not Engaging” in Metacognitive Regulation Lack Agency

We hypothesize that students in the “not engaging” category lack agency and do not believe they can determine their own success in a course. They may also have a dualistic view, seeing the world in “black and white,” where there is only right or wrong (Perry, 1968; Markwell and Courtney, 2006). Students in a dualism position believe that the instructor knows everything and that it is the instructor’s job to teach students the correct answers (Perry, 1968; Markwell and Courtney, 2006). If this hypothesis holds true, then we predict that lack of agency will prevent “not engaging” students from recognizing the need to use metacognitive-regulation skills. For example, if a student believes that instructors determine student performance, then he or she is unlikely to focus on monitoring, evaluating, and planning his or her strategies for studying.

Hypothesis: Students “Not Engaging” in Metacognitive Regulation Are Unable to Monitor Their Conceptual Understanding of Material

We also hypothesize that “not engaging” students struggle with the ability to accurately assess what they do and do not know. If this holds true, then we predict that inability to monitor conceptual understanding will be another barrier to metacognitive regulation. For example, if students believe that they have learned course material based on familiarity rather than true understanding, then they will not see a reason to reflect on their approaches to learning (Tobias and Everson, 2002). In addressing the “not engaging” category, we recommend that instructors include formative assessments that help students identify concepts they do not truly understand. For example, regular online quizzes with immediate feedback on incorrect answers could help students in the “not engaging” category confront their misconceptions.

“Struggling” with Metacognitive Regulation

Our data indicate that most of the students in this study fit into categories we describe as “struggling” with or “emerging” metacognitive regulation. Students in the “struggling” category (Table 3) were willing to change their study plans, but they often used noncommittal language such as “might” and “try” regarding new learning strategies. They did not choose strategies that addressed issues they reported having. For example, a student wrote on his E1-SE that he could narrow down the answers to multiple-choice questions to the two most likely options, but he did not have the depth of knowledge to select the correct one. Yet this student’s exam 2 study plan centered on reading chapter summaries, which was not likely to give him the level of understanding he needed. Students in the “struggling” category also selected passive approaches that involved “going over” and “looking over” course material. This is consistent with recent research showing that undergraduate chemistry students primarily focus on reviewing material when studying (Lopez et al., 2013). “Struggling” students seemed to have agency, but they lacked self-efficacy and were not confident in their ability to select appropriate learning strategies. Specifically, students in the “struggling” category indicated that they were willing to modify their study plan, but some reported that they did not know what to change.

“Honestly I don’t know what to do. Even starting to study a week in advance, I got a lower score then when I (didn’t study) at all. I do not know what works for me in this class.”

Hypothesis: Students “Struggling” with Metacognition Lack Metacognitive Knowledge

We hypothesize that students in the “struggling” with metacognitive-regulation category lack metacognitive knowledge. Metacognitive knowledge can be divided into declarative knowledge, procedural knowledge, and conditional knowledge (Brown, 1987; Jacobs and Paris, 1987; Schraw and Moshman, 1995). Declarative knowledge includes knowing about yourself as a learner, procedural knowledge involves knowing what learning strategies exist and how to use them, and conditional knowledge entails knowing when and why to use a learning strategy (Schraw and Moshman, 1995). In this study, we provided students with a list of study approaches used by past students who earned a grade of “A” on exams in the course. This could have helped students become aware of existing strategies, but it would not help them with procedural and conditional knowledge if they did not know how, when, and why to utilize an approach they picked from the list. If “struggling” students lack metacognitive knowledge, then we predict that they can move to the “emerging” category (see “Emerging” Metacognitive Regulation) if they are given direct instruction in learning strategies.

To help students develop metacognitive knowledge, we recommend that instructors create class activities that introduce students to learning strategies and help students understand how to execute those strategies (procedural knowledge). Strategies should be explored in the context of course material (Dignath and Büttner, 2008), so students can begin to recognize when and why to use them (conditional knowledge; Meijer et al., 2012). For example, an instructor could explain what a concept map is and what it is used for (Novak, 1990) and then model this approach using a recent course topic while explaining his or her thought processes (Schraw, 1998). This think-aloud technique gives students insights into the metacognition the instructor uses while carrying out the strategy (Kolencik and Hillwig, 2011). Following this demonstration, the instructor could ask students to generate a list of topics that are best served by a concept map and then facilitate a class discussion of student ideas. This type of approach could help students in the “struggling” category gain metacognitive knowledge, which would help them select learning strategies that better align with their studying needs.

“Emerging” Metacognitive Regulation

Other students in our study seemed to be in a later part of the continuum, and they could be characterized as possessing “emerging” metacognitive regulation (Table 3). These students recognized a need to change their study plans for exam 2, but in contrast to “struggling” students, those with “emerging” metacognitive regulation could select appropriate learning strategies. For example, a student wrote that she struggled to make connections between concepts on exam 1 because she focused on terms while studying. For exam 2, she planned to draw diagrams to help identify relationships between concepts. “Emerging” students also recognized the importance of trying to understand the material rather than just retain it. Students in the “emerging” category did not always follow their study plans but seemed to have both agency and self-efficacy.

Example of agency: “[The exam] was a little more difficult than I expected, I’m sure that this is because I wasn’t as prepared as I should/could have been.”

Example of self-efficacy in response to why she would continue using a learning strategy: “I’m able to understand the concepts more and by doing this I can sometimes find answers to my own questions about a topic or subject.”

Hypothesis: Students with “Emerging” Metacognitive Regulation Have Conditional Knowledge, but Lack Procedural Knowledge

Students in this category were willing to change their study plans and could select appropriate learning strategies but did not always carry them out. We hypothesize that “emerging” students lack one component of metacognitive knowledge: procedural knowledge of how to use approaches to learning. Whereas “struggling” students lacked both procedural and conditional knowledge, our data suggest that “emerging” students know what learning strategies exist and when and why to use them. We predict that “emerging” students can move to the “developing” category (see “Developing” Metacognitive Regulation) of metacognitive regulation if they are provided with training in procedural knowledge.

To help students with procedural knowledge, we suggest that instructors follow the three steps for metacognitive skill development outlined by Veenman et al. (2006). First, instructors should model learning strategies using relevant course topics, as recommended for “struggling” students. It is also valuable to have an experienced student model the learning strategy, because a peer’s ability to use the strategy will be closer to that of the “emerging” students (Schraw et al., 2006). This will not only help students better understand the steps involved in a learning strategy, but it will also make it more difficult for them to claim that a particular approach is not doable (Bandura, 1997). Second, instructors should be explicit about the benefits of each learning strategy, which may help students overcome the perceived difficulty involved in trying something new. Third, instructors should train students over time and provide ample opportunity for practice (Tomanek and Montplaisir, 2004), so students can fully understand how to use the learning strategies. Students will also benefit from instructor feedback as they try to acquire new metacognitive knowledge skills (Schraw et al., 2006). Further development of procedural knowledge could help “emerging” students move to the next category of metacognitive regulation.

“Developing” Metacognitive Regulation

We found a small percentage of students in a “developing” metacognitive-regulation category (Table 3). These students recognized the benefit of adjusting their study plans and could select learning strategies for exam 2 that appropriately addressed issues they had in preparing for exam 1. They reported on E2-FT that they had followed their study plans for exam 2. Interestingly, “developing” students who earned high grades on exam 1 still used their metacognitive-regulation skills to enhance their learning. For example, a student who scored 100% on exam 1 identified an ineffective strategy that he would no longer use (flashcards) and selected new strategies (creating visual representations of concepts and integrating notes from different sources) with the goal of refining his study plan. Another student who earned a high grade reported that his exam 1 study plan was effective, but he still wanted to adjust his approach based on what he learned about exams in the course.

“I will spend more time thinking about how the concepts can be applied to real-world situations; there were a lot more critical-thinking questions on the exam than simple facts.”

“Developing” students focused on studying to learn rather than to earn high grades. In one theoretical framework, this goal is associated with a mastery approach to learning and is contrasted with a performance approach focused on showing competence (Ames and Archer, 1988; Heyman and Dweck, 1992). In another framework, this goal is categorized as a deep approach to learning, with a focus on meaning rather than memorization (Biggs, 1987). While other students reported that they selected strategies because those strategies would help them memorize material so they could do well on the exam, “developing” students focused on understanding the concepts. For example, a student explained why it would be helpful to answer study questions without her notes.

“It requires that I fully understand on my own and can explain it. I will know I understand the information if I can say what it is without needing to ‘jog my memory.’”

Students in this category also made statements that demonstrated their agency and self-efficacy. Self-efficacy in undergraduate students strongly correlates with metacognition (Coutinho and Neuman, 2008). Students who believe they are capable of learning are likely to use metacognition to improve their understanding.

“Some days, I get behind and think I’ll learn it later on. I know that the concepts in this course build upon each other so I should take the time to understand main points for each topic.”

Prompts such as the questions posed in our postexam assignments can be effective in encouraging “developing” students to engage in metacognitive regulation. This fits with data indicating that a mastery approach to learning is correlated with use of metacognition (Coutinho and Neuman, 2008). We hypothesize that more “developing” students might be found in upper-division biology courses. We are currently studying students in 300- and 400-level biology courses to further document metacognition in “developing” students. We plan to use these data to train other students to emulate “developing” students’ use of metacognitive-regulation skills.
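The four categories described above can be summarized, in simplified form, as a decision rule. The sketch below is one possible reading of the category descriptions and is not the coding procedure used in this study, which relied on qualitative analysis of written responses. The `StudentResponses` fields and the `continuum_category` function are hypothetical names introduced for illustration, and the rule treats each criterion as a yes/no judgment even though, for example, “emerging” students did not always (rather than never) follow their plans.

```python
from dataclasses import dataclass


@dataclass
class StudentResponses:
    """Hypothetical yes/no summary of one student's coded E1-SE and E2-FT responses."""
    willing_to_change: bool          # selected new strategies on E1-SE
    strategies_address_issues: bool  # plan targets issues reported for exam 1
    followed_plan: bool              # reported following the plan on E2-FT


def continuum_category(s: StudentResponses) -> str:
    """Map coded responses to a continuum category (illustrative rule only)."""
    if not s.willing_to_change:
        return "not engaging"
    if not s.strategies_address_issues:
        return "struggling"
    if not s.followed_plan:
        return "emerging"
    return "developing"


# Example: willing to change and chose fitting strategies, but did not follow through.
example = StudentResponses(
    willing_to_change=True,
    strategies_address_issues=True,
    followed_plan=False,
)
print(continuum_category(example))
```

Ordering the checks from willingness to follow-through mirrors the continuum: each later category presupposes the criteria met by the earlier ones.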

Limitations of Study/Alternative Explanations

We gained several insights into the metacognitive regulation used by introductory biology students through metacognition assignments. Nevertheless, it is important to acknowledge that these are “offline assessments” occurring before or after a metacognitive event (van Hout-Wolters, 2000; Veenman et al., 2006). This type of assessment relies on a student’s ability to accurately remember and report what he or she did. For example, as noted in the Methods, we did not know whether students actually followed their plans or not. We looked at whether or not they checked “yes” when asked if they followed their plans, and we also considered whether the students provided any evidence to the contrary. In addition to the limitation of self-report, written data do not allow researchers to ask participants follow-up questions to clarify their intended meaning. To address these concerns, in our current studies, we are interviewing students about their studying, and we will use think-aloud protocols to do “online assessment” of metacognition as it is happening (Meijer et al., 2012).

We were surprised that so few students at the introductory level fit into the “not engaging” category of metacognitive regulation based on their postexam assignments. It is possible that students felt pressure to write what they thought the instructor wanted or that they may have had social desirability bias, the desire to show themselves in a favorable light (Gonyea, 2005). We tried to minimize this by having the graduate TAs collect the assignments and by giving full credit for completing the assignments, no matter what the responses. An alternative explanation is that students taking this introductory biology course were primarily science and preprofessional majors who may be more invested in doing well in the course. It would be interesting to study students in a nonmajors introductory course to see whether undergraduates’ use of metacognitive-regulation skills depends on interest in the area of study.

CONCLUSION

To begin to characterize metacognition in undergraduates, we asked what metacognitive-regulation skills are evident in students taking an introductory biology course. Using exam preparation as a vehicle for studying metacognition, we found that approximately half of the students were able to monitor, evaluate, and plan the learning strategies they used to prepare for exams. Interestingly, nearly all of the students were willing to reflect and adjust their study plans, but many did not identify appropriate learning strategies, and many did not carry out their new plans. We conclude that postexam assignments encouraged students to engage in metacognitive regulation, but many of the students needed additional help with metacognitive knowledge before they could fully benefit from the metacognition prompts in these exercises.

We have proposed a continuum of metacognitive-regulation development that represents what we saw in introductory biology students (Figure 1). We have used this model to formulate new research hypotheses and to help instructors target metacognition more effectively in the classroom. By helping students enhance their metacognitive-regulation skills, we will help improve their performance in our courses and others. Importantly, we will also help them become self-regulated learners, which will serve them well beyond their time in college.

ACKNOWLEDGMENTS

The authors thank Dr. Steve Hines for his encouragement of this project, Dr. Tessa Andrews for helpful discussions on this study, and Drs. Peggy Brickman and Paula Lemons for valuable feedback on the manuscript. This work was funded by generous start-up funds from the University of Georgia (to J.D.S.) and a Washington State University College of Veterinary Medicine Education Research Grant (to J.D.S. and X.N.N.). The initial design of this study was developed as part of the Biology Scholars Program Research Residency (J.D.S.).

REFERENCES

- Alexander JM, Carr M, Schwanenflugel PJ. Development of metacognition in gifted children: directions for future research. Dev Rev. 1995;15(1):1–37.

- Ambrose SA. How Learning Works: Seven Research-Based Principles for Smart Teaching. 1st ed. San Francisco, CA: Jossey-Bass; 2010.

- Ames C, Archer J. Achievement goals in the classroom: students’ learning strategies and motivation processes. J Educ Psychol. 1988;80:260.

- Bandura A. Self-Efficacy: The Exercise of Control. New York: Freeman; 1997.

- Baxter Magolda MB. Interpersonal maturity: integrating agency and communion. J Coll Stud Dev. 2000;41:141–156.

- Biggs JB. Student Approaches to Learning and Studying, Research. Hawthorne, Victoria: Australian Council for Education Research; 1987.

- Bogdan R, Biklen SK. Qualitative Research for Education: An Introduction to Theories and Methods. Boston, MA: Pearson; 2003.

- Bransford JD, Brown AL, Cocking RR. How People Learn: Brain, Mind, Experience, and School. Expanded ed. Washington, DC: National Academies Press; 2000.

- Brown AL. Knowing when, where, and how to remember: a problem of metacognition. In: Glaser R, editor. Advances in Instructional Psychology, vol. 1. Hillsdale, NJ: Erlbaum; 1978. pp. 77–165.

- Brown AL. Metacognition, executive control, self-regulation, and other more mysterious mechanisms. In: Kluwe FWR, editor. Metacognition, Motivation, and Understanding. Hillsdale, NJ: Erlbaum; 1987. pp. 65–116.

- Coutinho S, Neuman G. A model of metacognition, achievement goal orientation, learning style and self-efficacy. Learn Environ Res. 2008;11:131–151.

- Denzin NK, Lincoln YS. Introduction: the discipline and practice of qualitative research. In: Denzin NK, Lincoln YS, editors. The Landscape of Qualitative Research: Theories and Issues. 2nd ed. Thousand Oaks, CA: Sage; pp. 1–46.

- Denzin NK, Lincoln YS. Introduction: the discipline and practice of qualitative research. In: Denzin NK, Lincoln YS, editors. Handbook of Qualitative Research. 3rd ed. Thousand Oaks, CA: Sage; 2005. pp. 1–32.

- Dignath C, Büttner G. Components of fostering self-regulated learning among students. A meta-analysis on intervention studies at primary and secondary school level. Metacogn Learn. 2008;3:231–264.

- Dinsmore D, Alexander P, Loughlin S. Focusing the conceptual lens on metacognition, self-regulation, and self-regulated learning. Educ Psychol Rev. 2008;20:391–409.

- Dweck CS, Leggett EL. A social cognitive approach to motivation and personality. Psychol Rev. 1988;95:256–273.

- Estrada-Hollenbeck M, Woodcock A, Hernandez PR, Schultz PW. Toward a model of social influence that explains minority student integration into the scientific community. J Educ Psychol. 2011;103:206–222. doi: 10.1037/a0020743.

- Flavell JH. Theory-of-mind development: retrospect and prospect. Merrill Palmer Q. 2004;50:274–290.

- Gonyea RM. Self-reported data in institutional research: review and recommendations. New Dir Inst Res. 2005;2005(127):73–89.

- Heyman GD, Dweck CS. Achievement goals and intrinsic motivation: their relation and their role in adaptive motivation. Motiv Emotion. 1992;16:231–247.

- Jacobs JE, Paris SG. Children’s metacognition about reading: issues in definition, measurement, and instruction. Educ Psychol. 1987;22:255–278.

- Kolencik PL, Hillwig SA. Encouraging Metacognition: Supporting Learners through Metacognitive Teaching Strategies. New York: Lang; 2011.

- Kuhn D. Metacognitive development. Curr Dir Psychol Sci. 2000;9:178–181.

- Lockl K, Schneider W. Precursors of metamemory in young children: the role of theory of mind and metacognitive vocabulary. Metacogn Learn. 2006;1:15–31.

- Lopez EJ, Nandagopal K, Shavelson RJ, Szu E, Penn J. Self-regulated learning study strategies and academic performance in undergraduate organic chemistry: an investigation examining ethnically diverse students. J Res Sci Teach. 2013;50:660–676.

- Markwell J, Courtney S. Cognitive development and the complexities of the undergraduate learner in the science classroom. Biochem Mol Biol Educ. 2006;34:267–271. doi: 10.1002/bmb.2006.494034042629.

- Meijer J, Veenman MV, van Hout-Wolters B. Multi-domain, multi-method measures of metacognitive activity: what is all the fuss about metacognition. . . indeed. Res Papers Educ. 2012;27:597–627.

- Novak JD. Concept mapping: a useful tool for science education. J Res Sci Teach. 1990;27:937–949.

- Perry WG. Forms of Ethical and Intellectual Development in the College Years: A Scheme. San Francisco: Jossey-Bass; 1968.

- Pieschl S. Metacognitive calibration: an extended conceptualization and potential applications. Metacogn Learn. 2009;4:3–31.

- Pintrich PR. The role of metacognitive knowledge in learning, teaching, and assessing. Theory Pract. 2002;41:219–225.

- Pressley M, Ghatala ES. Self-regulated learning: monitoring learning from text. Educ Psychol. 1990;25:19–33.

- Rickey D, Stacy AM. The role of metacognition in learning chemistry. J Chem Educ. 2000;77:915–920.

- Saldaña J. The Coding Manual for Qualitative Researchers. 2nd ed. Los Angeles: Sage; 2013.

- Sandi-Urena S, Cooper MM, Stevens RH. Enhancement of metacognition use and awareness by means of a collaborative intervention. Int J Sci Educ. 2011;33:323–340.

- Schraw G. Promoting general metacognitive awareness. Instruct Sci. 1998;26:113–125.

- Schraw G, Crippen K, Hartley K. Promoting self-regulation in science education: metacognition as part of a broader perspective on learning. Res Sci Educ. 2006;36:111–139.

- Schraw G, Moshman D. Metacognitive theories. Educ Psychol Rev. 1995;7:351–371.

- Stanger-Hall KF. Multiple-choice exams: an obstacle for higher-level thinking in introductory science classes. CBE Life Sci Educ. 2012;11:294–306. doi: 10.1187/cbe.11-11-0100.

- Tobias S, Everson HT. Knowing What You Know and What You Don’t: Further Research on Metacognitive Knowledge Monitoring. New York: College Board; 2002.

- Tomanek D, Montplaisir L. Students’ studying and approaches to learning in introductory biology. Cell Biol Educ. 2004;3:253–262. doi: 10.1187/cbe.04-06-0041.

- Trujillo G, Tanner KD. Considering the role of affect in learning: monitoring students’ self-efficacy, sense of belonging, and science identity. CBE Life Sci Educ. 2014;13:6–15. doi: 10.1187/cbe.13-12-0241.

- van Hout-Wolters B. Assessing active self-directed learning. In: Simons RJ, van der Linden J, Duffy T, editors. New Learning. Dordrecht, Netherlands: Springer; 2000. pp. 83–99.

- Veenman MV, Spaans MA. Relation between intellectual and metacognitive skills: age and task differences. Learn Individ Differ. 2005;15:159–176.

- Veenman MV, van Hout-Wolters B, Afflerbach P. Metacognition and learning: conceptual and methodological considerations. Metacogn Learn. 2006;1:3–14.

- Veenman MV, Wilhelm P, Beishuizen JJ. The relation between intellectual and metacognitive skills from a developmental perspective. Learn Instr. 2004;14:89–109.

- Vukman KB. Developmental differences in metacognition and their connections with cognitive development in adulthood. J Adult Dev. 2005;12:211–221.

- Vukman KB, Licardo M. How cognitive, metacognitive, motivational and emotional self-regulation influence school performance in adolescence and early adulthood. Educ Stud. 2009;36:259–268.

- Wang MC, Haertel GD, Walberg HJ. What influences learning? A content analysis of review literature. J Educ Res. 1990;84:30–43.

- Weston C, Gandell T, Beauchamp J, McAlpine L, Wiseman C, Beauchamp C. Analyzing interview data: the development and evolution of a coding system. Qual Sociol. 2001;24:381–400.

- Whitebread D, Coltman P, Pasternak DP, Sangster C, Grau V, Bingham S, Almeqdad Q, Demetriou D. The development of two observational tools for assessing metacognition and self-regulated learning in young children. Metacogn Learn. 2009;4:63–85.

- Yin RK. Qualitative Research from Start to Finish. New York: Guilford; 2010.

- Young A, Fry JD. Metacognitive awareness and academic achievement in college students. J Sch Teach Learn. 2008;8(2):1–10.

- Ziegler B, Montplaisir L. Student perceived and determined knowledge of biology concepts in an upper-level biology course. CBE Life Sci Educ. 2014;13:322–330. doi: 10.1187/cbe.13-09-0175.

- Zimmerman BJ. Becoming a self-regulated learner: which are the key subprocesses? Contemp Educ Psychol. 1986;11:307–313.

- Zohar A, Barzilai S. A review of research on metacognition in science education: current and future directions. Stud Sci Educ. 2013;49:121–169.
