Abstract.

Glaucoma is a neurodegenerative disease characterized by distinctive changes in the optic nerve head and visual field. Without treatment, glaucoma can lead to permanent blindness. Therefore, monitoring glaucoma progression is important to detect uncontrolled disease and the possible need for therapy advancement. In this context, three-dimensional (3-D) spectral domain optical coherence tomography (SD-OCT) has been commonly used in the diagnosis and management of glaucoma patients. We present a new framework for the detection of glaucoma progression using 3-D SD-OCT images. In contrast to previous works that use the retinal nerve fiber layer thickness measurements provided by commercially available instruments, we consider the whole 3-D volume for change detection. To account for spatial voxel dependency, we propose the use of a Markov random field (MRF) model as a prior for the change detection map. To improve the robustness of the proposed approach, a nonlocal strategy was adopted to define the MRF energy function. To accommodate false-positive detections, we used a fuzzy logic approach to classify a 3-D SD-OCT image into a “non-progressing” or “progressing” glaucoma class. We compared the diagnostic performance of the proposed framework with existing methods of progression detection.

Keywords: glaucoma, nonlocal Markov field, change detection, fuzzy logic classifier

1. Introduction

Glaucoma is a leading cause of visual impairment and blindness worldwide.1 It is characterized by structural changes in the optic nerve head (ONH), of which neuroretinal rim thinning, retinal nerve fiber layer (RNFL) thinning, and optic nerve cupping are usually the first visible signs, and by visual field defects. Because glaucoma is painless and damages the optic nerve gradually, patients are often unaware of any visual loss until the optic nerve is severely damaged. Untreated, it can cause irreversible vision loss; hence, it is critical to detect glaucoma early and to monitor its progression in order to avoid permanent damage to the ONH.

Physicians have been able to visualize glaucomatous damage to the ONH with an ophthalmoscope for more than 150 years. However, clinical examination of the ONH remains subjective and qualitative, and it often shows high interobserver variability.2

Over the past three decades, ocular imaging technologies based on the optical properties of the optic nerve and RNFL have gained widespread use in the diagnosis and management of glaucoma patients. In particular, the Heidelberg retina tomograph (HRT) (Heidelberg Engineering, Heidelberg, Germany), a confocal scanning laser technology, has been commonly used for glaucoma diagnosis since its commercialization 20 years ago.3 Several strategies have been proposed for automated change detection using HRT images. One strategy, the proper orthogonal decomposition,4 indirectly exploits the spatial relationship among voxels by controlling the family-wise type I error rate. In Ref. 5, a Markov model was used to model inter- and intra-observation dependencies, which improved the glaucoma progression detection rate. However, because HRT imaging is limited to ONH surface topography, it cannot directly measure RNFL thickness.6

To overcome this drawback, three-dimensional (3-D) spectral domain optical coherence tomography (SD-OCT), an optical imaging technique, has become the standard of care for imaging the ONH and measuring RNFL thickness. Several methods have been proposed for glaucoma diagnosis using SD-OCT images. Most of them use peripapillary RNFL thickness measurements (i.e., measurements from circular B-scans (3.4-mm diameter, 768 A-scans) centered on the optic disk, which are automatically averaged to reduce speckle noise)7–11 or ONH measurements (e.g., rim area, minimum rim width)12–14 that are either provided by commercially available SD-OCT instruments or manually calculated by experts.13 In Ref. 15, the authors used the 3-D volume to extract the whole RNFL thickness map instead of the peripapillary RNFL thickness and proposed a variable-size superpixel segmentation to improve the discrimination between early glaucomatous and healthy eyes. In Ref. 16, the authors used the RNFL thickness map to identify and quantify glaucoma defects. Because of the high reproducibility of SD-OCT, many studies have used peripapillary RNFL measurements for glaucoma change detection.17–19 In Ref. 20, the authors showed that the instrument's built-in segmentation software is relatively robust to image quality, although noise may lower the accuracy of RNFL thickness estimation. However, detecting glaucoma progression with optical coherence tomography (OCT) remains a challenge because, when structural changes are assessed over time, it is difficult to discriminate between glaucomatous structural damage and measurement variability.

In this paper, we propose a new strategy for glaucoma progression detection using 3-D SD-OCT images. This strategy is divided into two steps:

1. Change detection step: this step detects changes between a baseline image and a follow-up image. In contrast to previous works that use the RNFL thickness measurements provided by commercially available instruments, we consider the whole 3-D volume for change detection. To the best of our knowledge, this is the first time that the whole 3-D SD-OCT volume has been considered for glaucoma progression detection. We propose a fully Bayesian framework for change detection. Bayesian methods are relatively simple and offer efficient tools to incorporate prior knowledge through the posterior probability density function (PDF). We use a Markov random field (MRF) to exploit the statistical correlation of intensities among neighboring voxels.21 In particular, we define the MRF energy function within a nonlocal framework, an approach originally proposed for image denoising.22 The nonlocal approach has since been successfully applied in several image processing applications, such as image restoration23 and image segmentation.24 Its main idea is to exploit repetitive structures in the image, which leads to a multimodel approach using only a single observation. Moreover, to obtain a noise-robust algorithm, we treat change detection as a missing data problem in which the noise hyperparameters and the change detection map are estimated jointly. Because an MRF prior is placed on the change detection map, the resulting posterior cannot be optimized directly; hence, we use a Markov chain Monte Carlo (MCMC) technique.25

2. Classification step: this step classifies an SD-OCT image as either “non-progressing” or “progressing” glaucoma using the estimated change detection map. A threshold-based classification method is generally used for this purpose to accommodate false-positive detections.4 However, the choice of threshold may affect the robustness of the classification. We show that a fuzzy set-based classifier yields better classification results than the other classifiers considered.

The remainder of this paper is organized as follows. In Sec. 2, the proposed glaucoma change detection scheme is presented. In Sec. 3, we describe the classification scheme. Then, in Sec. 4, results obtained by applying the proposed scheme to real data are presented. Specifically, we compare the diagnostic accuracy, robustness, and efficiency of the proposed approach with two existing RNFL-based progression detection approaches: the artificial neural network (ANN) classifier and the support vector machine (SVM) classifier.

2. Change Detection

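Let us consider the detection of changes in a pair of 3-D SD-OCT images. The change detection problem is formulated as a multihypothesis testing problem by denoting the “no-change,” “increase change” (i.e., ONH hypertrophy), and “decrease change” (i.e., ONH atrophy) hypotheses as $H_0$, $H_1$, and $H_2$, respectively. We denote by $I_1$ and $I_2$ two co-registered images acquired over the same eye at times $t_1$ and $t_2$, respectively ($t_1 < t_2$). In this paper, we assume that the noise is additive, white, and normally distributed, whereas the noise-free SD-OCT images follow a gamma distribution. The gamma distribution is widely used for fitting non-negative data such as synthetic aperture radar images26 and high-resolution magic angle spinning nuclear magnetic resonance images,27 because its shape hyperparameter allows it to fit spectral data that may present a background.28 The direct model for both images $I_1, I_2 \in \mathbb{R}^N$, where $N$ is the number of voxels, is then given by

$$
I_1 = X_1 + n_1, \qquad I_2 = X_2 + n_2, \tag{1}
$$

where $X_1 \sim \mathcal{G}(a_1, b_1)$ and $X_2 \sim \mathcal{G}(a_2, b_2)$ are the noise-free 3-D SD-OCT ONH images, $\mathcal{G}(a, b)$ denoting the gamma distribution with shape $a$ and rate $b$, and $n_1 \sim \mathcal{N}(0, \sigma_1^2)$ and $n_2 \sim \mathcal{N}(0, \sigma_2^2)$ are the Gaussian additive noises. Because no simple expression exists for the distribution of the difference of two gamma-distributed variables, we adopted an image-ratioing approach instead of image differencing. The ratio image yields the following direct model:

$$
r = \frac{I_2}{I_1} = \frac{X_2 + n_2}{X_1 + n_1}, \tag{2}
$$

where the division is performed voxelwise. This model is unfortunately intractable. To overcome this problem, we propose a hierarchical change detection framework, which consists of first estimating the noise-free SD-OCT images $X_1$ and $X_2$ and then applying the image ratio approach for change detection. The new direct model is then given by $r = X_2 / X_1$. Assuming that $X_1$ and $X_2$ are independent, the gamma ratio distribution is expressed as

$$
f(r \mid a_1, b_1, a_2, b_2) = \frac{\Gamma(a_1 + a_2)}{\Gamma(a_1)\,\Gamma(a_2)} \, \frac{b_1^{a_1}\, b_2^{a_2}\, r^{a_2 - 1}}{(b_1 + b_2 r)^{a_1 + a_2}}, \qquad r > 0. \tag{3}
$$

Proof.

The PDF of the ratio is given by

$$
f(r) = \int_0^{\infty} x\, f_{X_2}(r x)\, f_{X_1}(x)\, dx = \frac{b_1^{a_1}\, b_2^{a_2}\, r^{a_2 - 1}}{\Gamma(a_1)\,\Gamma(a_2)} \int_0^{\infty} x^{a_1 + a_2 - 1} e^{-(b_1 + b_2 r) x}\, dx = \frac{\Gamma(a_1 + a_2)}{\Gamma(a_1)\,\Gamma(a_2)} \, \frac{b_1^{a_1}\, b_2^{a_2}\, r^{a_2 - 1}}{(b_1 + b_2 r)^{a_1 + a_2}}, \tag{4}
$$

since the gamma PDF with shape $a_1 + a_2$ and rate $b_1 + b_2 r$ integrates to 1.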
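As a sanity check on the gamma ratio density, the following Python sketch evaluates it in closed form and compares the probability of an interval with a Monte Carlo estimate obtained from simulated gamma variables. It assumes a shape-rate parameterization (a gamma variable with shape a and rate b has density b^a x^(a-1) e^(-bx) / Γ(a)) and independent X1 and X2, so that R = X2/X1 has density Γ(a1+a2)/(Γ(a1)Γ(a2)) · b1^a1 b2^a2 r^(a2-1) / (b1 + b2 r)^(a1+a2). The function names and parameter values are illustrative only.

```python
import math
import random


def gamma_ratio_pdf(r, a1, b1, a2, b2):
    """Density of R = X2 / X1 for independent X1 ~ Gamma(a1, rate b1), X2 ~ Gamma(a2, rate b2)."""
    if r <= 0.0:
        return 0.0
    # Work in log space to avoid overflow of the gamma functions.
    log_norm = math.lgamma(a1 + a2) - math.lgamma(a1) - math.lgamma(a2)
    log_pdf = (log_norm + a1 * math.log(b1) + a2 * math.log(b2)
               + (a2 - 1.0) * math.log(r) - (a1 + a2) * math.log(b1 + b2 * r))
    return math.exp(log_pdf)


def check_interval(a1, b1, a2, b2, lo, hi, n=100_000, seed=0):
    """Return (Monte Carlo estimate, numerical integral of the closed form) of P(lo < R < hi)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        # random.gammavariate takes (shape, scale), so scale = 1 / rate.
        x1 = rng.gammavariate(a1, 1.0 / b1)
        x2 = rng.gammavariate(a2, 1.0 / b2)
        hits += lo < x2 / x1 < hi
    # Midpoint rule applied to the closed-form density.
    steps = 2000
    h = (hi - lo) / steps
    integral = h * sum(gamma_ratio_pdf(lo + (k + 0.5) * h, a1, b1, a2, b2) for k in range(steps))
    return hits / n, integral


print(check_interval(3.0, 2.0, 4.0, 1.5, 0.5, 2.0))
```

With 100,000 draws the two numbers should agree to within Monte Carlo error (a standard error of roughly 0.002). For a1 = a2 = b1 = b2 = 1, the density reduces to 1/(1 + r)^2, the ratio of two unit exponentials.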

For the sake of simplicity, we assume that and , is then given by

| (5) |

The change detection is handled through the introduction of change class assignments $c = (c_1, \ldots, c_N)$, with $c_s \in \{H_0, H_1, H_2\}$. Hence, the posterior probability distribution of the change detection map at each voxel location $s$ (i.e., of $c_s$) is given by

$$
p(c_s \mid c_{\mathcal{V}_s}, r, \Theta) = \frac{1}{Z} \exp\left[-U(c_s)\right], \tag{6}
$$

where $Z$ is the normalization constant, $\Theta$ gathers the model hyperparameters, and $U$ is the energy function of the MRF model. In the case of an isotropic Potts model-type prior,29 the corresponding posterior energy to be minimized is

$$
U(c_s) = -\log f(r_s \mid \theta_{c_s}) + \beta \sum_{t \in \mathcal{V}_s} \left[1 - \delta(c_s, c_t)\right], \tag{7}
$$

where $f(r_s \mid \theta_c)$ is the gamma ratio probability density function of the $s$'th amplitude ratio $r_s$ given the class $c$, $\theta_c$ is the set of gamma ratio distribution hyperparameters of class $c$, $\mathcal{V}_s$ is the neighborhood system of voxel $s$, $\delta$ is the Kronecker delta function, and $\beta$ is a positive parameter. Note that we opted for the 3-D eight-connectivity neighborhood system.
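The local posterior energy of an isotropic Potts prior can be sketched for a single voxel as follows. This is a minimal illustration assuming the standard form: the negative log-likelihood of the voxel's ratio under the candidate class, plus a positive parameter beta times the number of neighbors carrying a different label. The class names and likelihood values are hypothetical.

```python
import math


def potts_local_energy(label, neighbor_labels, log_likelihood, beta):
    """Local posterior energy of giving `label` to one voxel (lower is better).

    log_likelihood: dict mapping each class to log f(r_s | theta_class) at this voxel.
    neighbor_labels: current labels of the voxel's neighbors.
    beta: positive Potts interaction parameter.
    """
    # Potts term: beta times the number of disagreeing neighbors, i.e. the sum of 1 - delta.
    disagreements = sum(1 for c in neighbor_labels if c != label)
    return -log_likelihood[label] + beta * disagreements


def local_conditional(neighbor_labels, log_likelihood, beta):
    """Conditional class probabilities at one voxel, proportional to exp(-energy)."""
    energies = {k: potts_local_energy(k, neighbor_labels, log_likelihood, beta)
                for k in log_likelihood}
    lowest = min(energies.values())  # shift energies for numerical stability
    weights = {k: math.exp(-(u - lowest)) for k, u in energies.items()}
    total = sum(weights.values())
    return {k: w / total for k, w in weights.items()}


# Hypothetical likelihood values; eight neighbors all labeled "no_change".
loglik = {"no_change": math.log(0.2), "increase": math.log(0.1), "decrease": math.log(0.5)}
neighbors = ["no_change"] * 8
print(local_conditional(neighbors, loglik, beta=0.0))  # data term only
print(local_conditional(neighbors, loglik, beta=2.0))  # strong spatial smoothing
```

With beta = 0 only the likelihood matters and "decrease" receives probability 0.625; with beta = 2 the eight agreeing neighbors pull the voxel almost surely to "no_change", which is the smoothing effect the Potts prior is meant to provide.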

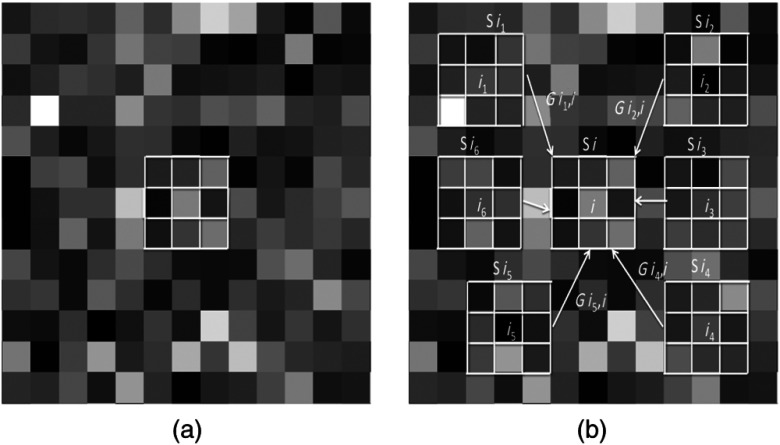

In this work, we adopt a nonlocal approach to define the energy function. The nonlocal approach takes advantage of the redundancy present in the images by extending the neighborhood system. It is based on the assumption that the neighborhood around a given voxel may be similar to the neighborhoods around other voxels in the same image. Figure 1 presents an example of the classical and the nonlocal neighborhood systems. In contrast to the classical approach, the nonlocal approach exploits the information available in other neighborhoods, called patches. The contribution of each patch depends on its similarity to the main neighborhood [Fig. 1(b)]: the more similar they are, the greater its contribution. To this end, a weighted graph linking voxels across the image is computed. In Ref. 30, the authors showed that the Euclidean distance is sufficient to reliably measure this similarity. Hence, the similarity between two voxels, based on the pairwise Euclidean distance, is expressed as follows:

| (8) |

Fig. 1.

Comparison of (a) the classical approach and (b) the nonlocal approach, in which the different neighborhoods (patches) contribute to the estimation of the voxel of interest according to their similarities.
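Because Eq. (8) did not survive extraction, its exact similarity kernel is not reproduced here. The sketch below assumes the common nonlocal-means choice: the exponential of minus the squared Euclidean distance between two patches divided by h², normalized over a search window (the extended neighborhood). The patch radius, search radius, border clamping, and bandwidth h are illustrative assumptions.

```python
import math


def extract_patch(volume, center, radius):
    """Flatten the cubic patch of half-width `radius` around `center` = (z, y, x).

    Coordinates outside the volume are clamped to the nearest border voxel.
    """
    depth, rows, cols = len(volume), len(volume[0]), len(volume[0][0])
    z0, y0, x0 = center
    values = []
    for dz in range(-radius, radius + 1):
        for dy in range(-radius, radius + 1):
            for dx in range(-radius, radius + 1):
                z = min(max(z0 + dz, 0), depth - 1)
                y = min(max(y0 + dy, 0), rows - 1)
                x = min(max(x0 + dx, 0), cols - 1)
                values.append(volume[z][y][x])
    return values


def nonlocal_weights(volume, center, search_radius, patch_radius, h):
    """Normalized similarity weights between the patch at `center` and every patch
    whose center lies in the search window around it."""
    depth, rows, cols = len(volume), len(volume[0]), len(volume[0][0])
    ref = extract_patch(volume, center, patch_radius)
    z0, y0, x0 = center
    raw = {}
    for z in range(max(z0 - search_radius, 0), min(z0 + search_radius, depth - 1) + 1):
        for y in range(max(y0 - search_radius, 0), min(y0 + search_radius, rows - 1) + 1):
            for x in range(max(x0 - search_radius, 0), min(x0 + search_radius, cols - 1) + 1):
                other = extract_patch(volume, (z, y, x), patch_radius)
                d2 = sum((a - b) ** 2 for a, b in zip(ref, other))  # squared Euclidean distance
                raw[(z, y, x)] = math.exp(-d2 / (h * h))
    total = sum(raw.values())
    return {t: w / total for t, w in raw.items()}


# Toy 5x5x5 volume with a repeating intensity pattern.
vol = [[[float((3 * z + 2 * y + x) % 4) for x in range(5)] for y in range(5)] for z in range(5)]
weights = nonlocal_weights(vol, (2, 2, 2), search_radius=2, patch_radius=1, h=2.0)
print(max(weights.values()), weights[(2, 2, 2)])
```

The reference patch always receives the largest weight (its distance to itself is zero), and any other patch that repeats the same structure receives the same weight, which is how repetitive structures contribute to the estimate.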

The new energy function, which integrates the nonlocal weights associated with the different patches around the voxel of interest located in an extended neighborhood, is expressed as follows:

| (9) |

The first part of the energy function models the prior knowledge we have on the image ratio conditioned on the change and no-change hypotheses, as well as the information available in other patches (information fusion). The second part models the spatial dependency through a second-order isotropic Potts model. This definition of the energy function therefore favors the formation of homogeneous areas, reducing the impact of speckle noise, which could otherwise affect the classification results of the SD-OCT images.31 Two hyperparameters control the relative importance of the energy terms. On one hand, the Potts parameter tunes the importance of the spatial dependency constraint; in particular, high values correspond to a strong spatial constraint. On the other hand, the second hyperparameter models the reliability of the patches and is usually assumed to take values in [0, 1]; this constraint can easily be satisfied by choosing an appropriate prior distribution for it.
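Eq. (9) is not recoverable from the source, so the following is only one plausible way to combine the two parts described above. It assumes a convex combination, weighted by a patch reliability gamma in [0, 1], of the voxel's own log-likelihood and the similarity-weighted log-likelihoods at the centers of its patches, plus the isotropic Potts term with parameter beta. The functional form and all names are assumptions for illustration, not the paper's exact energy.

```python
import math


def nonlocal_energy(label, own_loglik, patch_logliks, weights, neighbor_labels, beta, gamma):
    """Hypothetical nonlocal energy of assigning `label` to one voxel (lower is better).

    own_loglik: dict, log f(r_s | theta_k) at the voxel itself.
    patch_logliks: list of dicts, log f(r_t | theta_k) at the centers t of the other patches.
    weights: nonlocal similarity weights w(s, t), same order as patch_logliks, summing to 1.
    beta: positive Potts parameter (spatial constraint).
    gamma: patch reliability in [0, 1]; 0 ignores the patches, 1 relies on them only.
    """
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must lie in [0, 1]")
    # Information fusion: similarity-weighted log-likelihood of the label over similar patches.
    fused = sum(w * ll[label] for w, ll in zip(weights, patch_logliks))
    data_term = -((1.0 - gamma) * own_loglik[label] + gamma * fused)
    # Isotropic Potts term on the classical neighborhood.
    spatial_term = beta * sum(1 for c in neighbor_labels if c != label)
    return data_term + spatial_term


# Toy case: the voxel alone looks like a decrease, but its similar patches look unchanged.
own = {"no_change": math.log(0.3), "decrease": math.log(0.6)}
patches = [{"no_change": math.log(0.8), "decrease": math.log(0.1)}] * 2
w = [0.5, 0.5]
nbrs = ["no_change", "no_change"]
for label in own:
    print(label,
          nonlocal_energy(label, own, patches, w, nbrs, beta=0.0, gamma=0.0),
          nonlocal_energy(label, own, patches, w, nbrs, beta=0.5, gamma=0.5))
```

With gamma = 0 and beta = 0 the voxel's own likelihood makes "decrease" the lower-energy label; once the patches are trusted (gamma = 0.5) and the Potts term is active, "no_change" wins, illustrating how the fused information can override an isolated noisy observation.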

In the absence of prior knowledge, the non-negativity of the model hyperparameters is guaranteed through the use of exponential prior densities. Note that the parameters of these exponential densities are fixed empirically and do not noticeably influence the results. The hierarchical approach we propose and the priors we opted for lead to an intractable posterior distribution. Therefore, we use an MCMC procedure to estimate the model parameters and hyperparameters. The principle of MCMC methods is to generate samples drawn from the posterior densities and then to use these samples for parameter estimation. We use a Gibbs sampler based on a stationary ergodic Markov chain, which draws samples whose distribution asymptotically follows the posterior densities. The Gibbs sampler decomposes the problem of generating a sample from a high-dimensional PDF by simulating each variable separately according to its conditional PDF. Since the conditional posterior PDFs have no standard form, there is no direct simulation algorithm for them. To overcome this problem, we perform Hastings–Metropolis steps within the Gibbs sampler25 so that all convergence properties are preserved.32 Moreover, since no knowledge of the shape of the posterior is available, we opted for the random walk version of the Hastings–Metropolis algorithm, in which the proposal is the current value plus a random perturbation drawn from a distribution that does not depend on the current value and is usually centered on 0. Hence, at each iteration, a random move is proposed from the current position.
The choice of a good proposal distribution is crucial to obtain an efficient algorithm, and the literature suggests that, for low-dimensional variables, a good proposal should lead to an acceptance rate of about 0.5.25 A good proposal distribution can be obtained by using a standard Gaussian distribution with an adaptive scale technique.33 The main Gibbs sampler steps are described in Algorithm 1. We used a burn-in period of iterations followed by another 1000 iterations for convergence (). The change detection map is estimated using the maximum a posteriori (MAP) estimator: , where , and .
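The random-walk Hastings–Metropolis step with an adaptive scale can be illustrated on a single positive hyperparameter. The sketch below is a hypothetical stand-in, not the authors' code: it samples the rate of gamma-distributed data under an exponential prior (which enforces non-negativity), adapts the log step size toward a 0.5 acceptance rate during burn-in only, and then freezes it so that the retained chain is an ordinary Metropolis chain. Because this toy posterior is conjugate (gamma with shape n·shape + 1 and rate Σx + 1), the sample mean can be checked against the exact posterior mean.

```python
import math
import random


def make_log_posterior(data, shape, prior_rate=1.0):
    """Unnormalized log posterior of the rate b of Gamma(shape, rate=b) data,
    with an Exp(prior_rate) prior on b (a toy stand-in for one hyperparameter)."""
    n, total = len(data), sum(data)

    def log_post(b):
        if b <= 0.0:
            return -math.inf  # zero prior density outside the positive half-line
        return n * shape * math.log(b) - b * total - prior_rate * b

    return log_post


def adaptive_rw_metropolis(log_target, x0, n_iter, burn_in, target_acc=0.5, seed=0):
    """Random-walk Metropolis with a Gaussian proposal whose scale is adapted during burn-in."""
    rng = random.Random(seed)
    x, lp = x0, log_target(x0)
    log_scale = 0.0
    samples, n_acc = [], 0
    for i in range(1, n_iter + 1):
        # Symmetric random move centered on the current position.
        proposal = x + math.exp(log_scale) * rng.gauss(0.0, 1.0)
        lp_prop = log_target(proposal)
        # Symmetric proposal, so the acceptance ratio is the target ratio; 1 - random() lies in (0, 1].
        accepted = math.log(1.0 - rng.random()) < lp_prop - lp
        if accepted:
            x, lp = proposal, lp_prop
        if i <= burn_in:
            # Stochastic-approximation update pushing the acceptance rate toward target_acc.
            log_scale += (accepted - target_acc) / math.sqrt(i)
        else:
            samples.append(x)
            n_acc += accepted
    return samples, n_acc / (n_iter - burn_in)


data_rng = random.Random(1)
data = [data_rng.gammavariate(2.0, 1.0 / 3.0) for _ in range(200)]
log_post = make_log_posterior(data, shape=2.0)
samples, acceptance = adaptive_rw_metropolis(log_post, x0=1.0, n_iter=25_000, burn_in=5_000)
exact_mean = (len(data) * 2.0 + 1.0) / (sum(data) + 1.0)
print(sum(samples) / len(samples), exact_mean, acceptance)
```

Freezing the scale after burn-in is a deliberate design choice: adapting forever can break the Markov property of the retained samples, whereas adapting only during burn-in keeps the post-burn-in chain a valid Metropolis sampler.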

Algorithm 1.

Sampling Algorithm.

| 1. Initialization of and |

| 2. For each iteration repeat: |

| i) Sampling from |

| ii) Sampling from |

| iii) Calculating |

| iv) Creating a configuration of basing on |

| v) Calculating |

| vi) Sampling from |

| vii) Until convergence criterion is satisfied |
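A stripped-down version of the label-sampling step in Algorithm 1 is sketched below. For brevity it assumes a 2-D grid with a 4-neighborhood, two classes, Gaussian log-likelihoods as stand-ins for the gamma ratio likelihood, and fixed hyperparameters (Algorithm 1 also samples those). The change map is taken as each voxel's most frequent label after burn-in, i.e., the mode of the estimated marginal posterior; treating this as the MAP estimate is our assumption.

```python
import math
import random


def gibbs_change_map(loglik, shape, beta, n_iter, burn_in, seed=0):
    """Single-site Gibbs sampler for a Potts-regularized label field on a 2-D grid.

    loglik[(i, j)][k]: log-likelihood of class k at voxel (i, j); shape = (height, width).
    Returns the most frequent label of each voxel over the post-burn-in sweeps.
    """
    rng = random.Random(seed)
    height, width = shape
    classes = sorted(next(iter(loglik.values())))
    # Initialize each voxel at its maximum-likelihood class.
    labels = {v: max(classes, key=lambda k: loglik[v][k]) for v in loglik}
    counts = {v: dict.fromkeys(classes, 0) for v in loglik}
    for it in range(n_iter):
        for i in range(height):
            for j in range(width):
                nbrs = [labels[(i + di, j + dj)]
                        for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1))
                        if 0 <= i + di < height and 0 <= j + dj < width]
                # Local energy: data term plus Potts penalty for disagreeing neighbors.
                energies = [-loglik[(i, j)][k] + beta * sum(c != k for c in nbrs)
                            for k in classes]
                lowest = min(energies)
                weights = [math.exp(-(u - lowest)) for u in energies]
                labels[(i, j)] = rng.choices(classes, weights=weights)[0]
        if it >= burn_in:
            for v, k in labels.items():
                counts[v][k] += 1
    return {v: max(c, key=c.get) for v, c in counts.items()}


def toy_problem(size=12, sigma=0.2, seed=1):
    """Square 'decrease' region in the middle of an unchanged background, plus Gaussian noise."""
    rng = random.Random(seed)
    means = {"no_change": 1.0, "decrease": 0.5}
    truth, loglik = {}, {}
    for i in range(size):
        for j in range(size):
            k = "decrease" if 3 <= i < 9 and 3 <= j < 9 else "no_change"
            y = rng.gauss(means[k], sigma)
            truth[(i, j)] = k
            loglik[(i, j)] = {c: -((y - mu) ** 2) / (2 * sigma ** 2) for c, mu in means.items()}
    return truth, loglik


truth, loglik = toy_problem()
estimate = gibbs_change_map(loglik, (12, 12), beta=0.8, n_iter=40, burn_in=10)
accuracy = sum(estimate[v] == truth[v] for v in truth) / len(truth)
print(accuracy)
```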

3. Classification

This step classifies an image into the nonprogressor or the progressor class based on the estimated change detection map. In contrast to Ref. 4, we considered a two-layer fuzzy classifier rather than a threshold-based classifier. Fuzzy set theory is used to quantify the membership degree of a given image to each class (i.e., progressor and nonprogressor).

As in Ref. 4, we considered two features as input for the classifier: (1) feature 1, the number of changed sites, and (2) feature 2, the ratio image intensity of the changed sites. In this work, only a loss of retinal height in neighboring areas is considered a change due to glaucomatous progression, because an increase in retinal height is considered an improvement (possibly due to treatment or tissue rearrangement).

We now calculate the elementary membership degree to the nonprogressor class given each feature using an -membership function whose expression is given in Eq. (10). Note that the range defines the fuzzy region.

| (10) |

where .

| (11) |

where , , and stand for the number of changed sites and the changed site class, respectively, and are the hyperparameters of the -membership functions. The hyperparameters are estimated with a genetic algorithm34 using longitudinal SD-OCT data from a training dataset that contains 10 normal eyes, 5 nonprogressing eyes, and 10 progressing eyes. Note that the training dataset is independent of the test dataset described in Sec. 4.1.
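The first classifier layer can be illustrated with Zadeh's standard S-shaped membership function, whose fuzzy region is [a, c] and whose crossover point is b = (a + c)/2. The source does not preserve which membership family or combination rule is used, so the following is a hedged sketch: the mean ratio over the voxels labeled as decreases as the summary for feature 2, the orientation of each membership (fewer changed voxels, or a ratio closer to 1, means more nonprogressor-like), and all parameter values are assumptions.

```python
def s_function(x, a, c):
    """Zadeh's S-shaped membership function; [a, c] is the fuzzy region, b = (a + c) / 2."""
    if not a < c:
        raise ValueError("require a < c")
    b = (a + c) / 2.0
    if x <= a:
        return 0.0
    if x <= b:
        return 2.0 * ((x - a) / (c - a)) ** 2
    if x <= c:
        return 1.0 - 2.0 * ((x - c) / (c - a)) ** 2
    return 1.0


def progression_features(change_map, ratio, decrease_label="decrease"):
    """Feature 1: number of voxels labeled as a decrease (loss of retinal height only).
    Feature 2 (assumed summary): mean ratio intensity over those voxels, 1.0 if there are none."""
    changed = [v for v, label in change_map.items() if label == decrease_label]
    n_changed = len(changed)
    mean_ratio = sum(ratio[v] for v in changed) / n_changed if n_changed else 1.0
    return n_changed, mean_ratio


def nonprogressor_memberships(n_changed, mean_ratio, count_params, ratio_params):
    """Elementary nonprogressor memberships under a hypothetical orientation:
    more changed voxels -> lower membership; mean ratio closer to 1 -> higher membership."""
    mu_count = 1.0 - s_function(n_changed, *count_params)
    mu_ratio = s_function(mean_ratio, *ratio_params)
    return mu_count, mu_ratio


# Toy change map and ratio image indexed by voxel coordinates.
change_map = {(0, 0): "decrease", (0, 1): "no_change", (1, 0): "decrease"}
ratio = {(0, 0): 0.5, (0, 1): 1.0, (1, 0): 0.7}
n_changed, mean_ratio = progression_features(change_map, ratio)
print(n_changed, mean_ratio, nonprogressor_memberships(n_changed, mean_ratio, (1, 5), (0.5, 0.9)))
```

In the paper, the (a, c) pairs are fitted by a genetic algorithm on the training set; the values above are placeholders.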

The membership degree to the glaucoma class is given by

| (12) |

To decide whether a given SD-OCT image belongs to the glaucoma progressor class, another membership function is used. We opted for a trapezoidal membership function, whose expression is given by

| (13) |

where . As for the first layer, a genetic algorithm was used to estimate this quadruple. The decision to classify an image into the glaucoma class depends on the output of the function . As can be observed, the function depends on the quadruple as well as on and . If the image is assigned to the glaucoma progressor class. Note that an eye is considered a progressor if at least one of its follow-up images is classified as a progressor.
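The second layer and the eye-level rule can be sketched as follows. The trapezoidal function is the standard one (0 outside [a, d], 1 on [b, c], linear ramps in between). The image-level decision threshold of 0.5 is a hypothetical choice, since the decision condition is missing from the source, whereas the rule that an eye is a progressor if any of its follow-up images is a progressor comes from the text. The input is taken to be the glaucoma membership degree of each follow-up image.

```python
def trapezoid(x, a, b, c, d):
    """Trapezoidal membership: 0 outside [a, d], 1 on [b, c], linear ramps in between."""
    if not a <= b <= c <= d:
        raise ValueError("require a <= b <= c <= d")
    if x < a or x > d:
        return 0.0
    if x < b:
        return (x - a) / (b - a)  # rising ramp (b > a here, since a <= x < b)
    if x <= c:
        return 1.0
    return (d - x) / (d - c)  # falling ramp (d > c here, since c < x <= d)


def classify_eye(glaucoma_memberships, quad, threshold=0.5):
    """Eye-level rule from the text: progressor if at least one follow-up image is a progressor.
    The image-level threshold is a hypothetical choice."""
    return any(trapezoid(m, *quad) > threshold for m in glaucoma_memberships)


quad = (0.3, 0.5, 1.0, 1.0)  # placeholder quadruple (a, b, c, d)
print(classify_eye([0.1, 0.6], quad))  # second follow-up image is a progressor
print(classify_eye([0.1, 0.2], quad))  # no follow-up image is a progressor
```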

4. Experiments

The datasets used for validation are described in Sec. 4.1. Change detection results on the simulated and semi-simulated datasets are then presented in Sec. 4.2. Finally, classification results on the clinical datasets are presented in Sec. 4.3.

4.1. Datasets

The proposed framework was experimentally validated on simulated, semi-simulated, and clinical datasets. Our clinical datasets consist of 117 eyes of 75 participants. Table 1 presents a description of the clinical dataset. Specifically, the first clinical dataset consists of 27 eyes of 27 participants with glaucoma progression. Glaucomatous progression was defined as (1) likely progression by visual field35 or (2) progression by stereophotographic assessment of the optic disk by two observers.36 A third observer adjudicated any differences in assessment between these two observers. The second clinical dataset consists of 40 eyes of 23 healthy participants. The third clinical dataset consists of 50 eyes of 26 participants considered stable (each participant was imaged once a week for five consecutive weeks). All eligible participants were recruited from the University of California, San Diego Diagnostic Innovations in Glaucoma Study (DIGS) and had at least five good-quality visual field exams to ensure an accurate diagnosis. Note that for each exam, the baseline image and the follow-up image are automatically co-registered with built-in instrument software. Specifically, the Spectralis SD-OCT (Heidelberg Engineering GmbH, Heidelberg, Germany) features two options to enhance reproducibility: a real-time eye-tracking device (eye tracker) compensates for involuntary eye movements during scanning, and a retest function ensures that follow-up measurements are taken from the same area of the retina as the baseline examination. Several studies have demonstrated that the retest recognition and eye tracker options lead to high reproducibility and accurate, repeatable alignment of the baseline and follow-up images.36–38

Table 1.

Description of the clinical dataset used to assess the diagnostic accuracy of the proposed framework.

| Group | Number of patients | Number of eyes | Follow-up duration (median/interquartile range) |
|---|---|---|---|
| Normal group | 23 | 40 | 2.03 (1.8) years |
| Stable glaucoma group | 26 | 50 | 5 (0) weeks |
| Progressing glaucoma group | 27 | 27 | 2.4 (1.6) years |

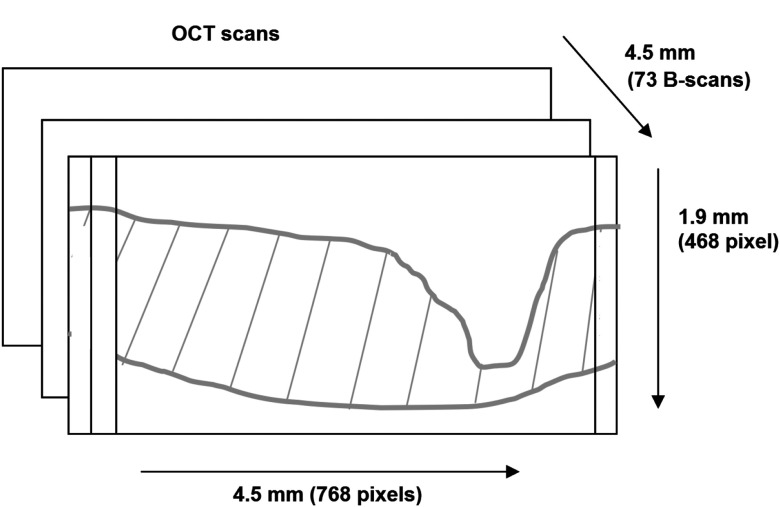

Because the performance of any change detection algorithm can be affected by the OCT instrument specifications39 (axial/lateral resolution, voxel size, etc.), a test with low measurement variability is important for detecting change during longitudinal analysis. This is particularly important in the evaluation of glaucoma progression, where visit-to-visit changes may be very small. Several studies have shown that Spectralis SD-OCT can reliably measure very small changes.37,40,41 Using the high resolution mode and a () pattern size centered on the ONH, we obtained an axial and lateral resolution of 3.87 and , respectively, where the distance between two consecutive B-scans is (cf. Fig. 2). Therefore, each 3-D OCT image consists of () voxels. All experiments were performed on a workstation with an Intel Core i7 1.6 GHz CPU and 16 GB of RAM. MATLAB® 7.14.0 (R2012a) and C++ were used for processing. The processing time is approximately 5 min per image.

Fig. 2.

Dimension of the three-dimensional (3-D) optical coherence tomography (OCT) images.

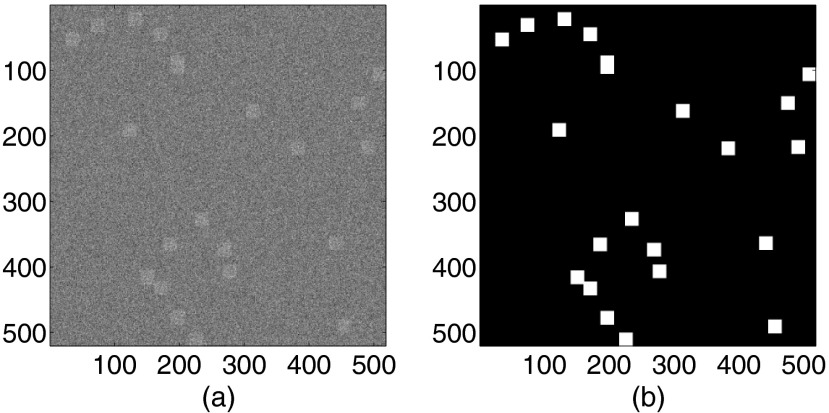

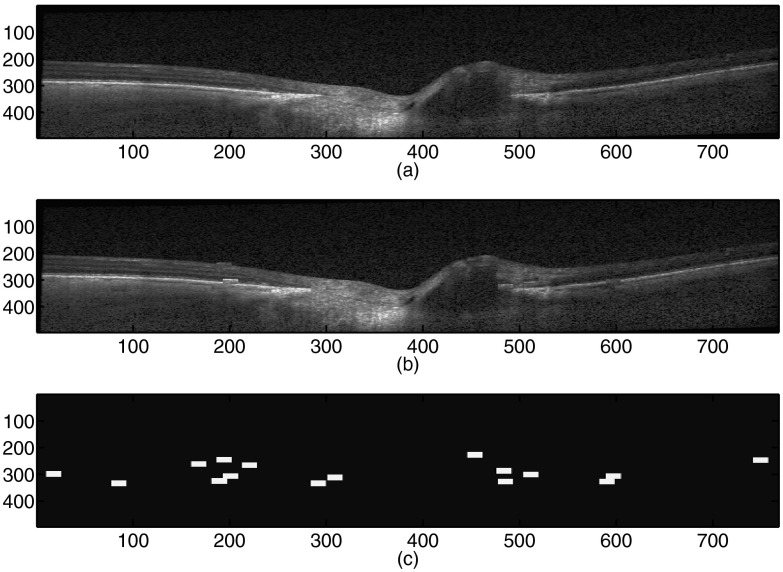

Because no ground truth for glaucomatous change in 3-D SD-OCT images is available, we generated simulated and semi-simulated datasets to assess the proposed change detection method. The simulated dataset consists of 100 images generated according to the proposed model with different values of peak signal-to-noise ratio (PSNR) (30, 25, 20, and 15 dB) (). Figure 3 presents an example of a simulated image. The semi-simulated dataset was constructed from 100 normal 3-D SD-OCT images. Changes were simulated (1) by permuting 1%, 2%, 3%, and 5% of image regions, respectively, and (2) by randomly modifying the intensities of voxel-sized regions with probabilities of 0.5%, 0.75%, 1%, and 1.25%, respectively. The intensities were randomly modified by multiplying the real intensities by 0.5, 0.75, 1.5, or 2 with a 0.25% probability. To assess the robustness of the proposed approach, additive Gaussian noise with different PSNR values (30, 25, 20, and 15 dB) was added to both the original and the semi-simulated images. Figure 4 presents an example of a semi-simulated image. Table 2 summarizes the simulated and semi-simulated datasets.
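Noise at a prescribed PSNR can be generated by inverting the usual definition PSNR = 10 log10(peak² / MSE), which gives a noise standard deviation σ = peak / 10^(PSNR/20). The sketch below applies this to a flattened image and also implements the multiplicative intensity modification together with its ground-truth mask. Taking the peak as the maximum clean intensity, and the function names, are assumptions made for illustration.

```python
import math
import random


def noise_sigma_for_psnr(peak, psnr_db):
    """Standard deviation of additive Gaussian noise giving the requested PSNR (in dB)."""
    return peak / (10.0 ** (psnr_db / 20.0))


def psnr(clean, noisy, peak):
    """Empirical PSNR in dB between a clean and a noisy flattened image."""
    mse = sum((a - b) ** 2 for a, b in zip(clean, noisy)) / len(clean)
    return 10.0 * math.log10(peak ** 2 / mse)


def add_noise(image, psnr_db, rng):
    """Add white Gaussian noise; the peak is assumed to be the maximum clean intensity."""
    sigma = noise_sigma_for_psnr(max(image), psnr_db)
    return [v + rng.gauss(0.0, sigma) for v in image]


def modify_intensities(image, prob, factors, rng):
    """Multiply each voxel by a random factor with probability `prob`.
    Returns the modified image and a boolean ground-truth change mask."""
    out, mask = [], []
    for v in image:
        if rng.random() < prob:
            out.append(v * rng.choice(factors))
            mask.append(True)
        else:
            out.append(v)
            mask.append(False)
    return out, mask


rng = random.Random(0)
img = [rng.uniform(0.0, 255.0) for _ in range(50_000)]  # stand-in for a flattened B-scan
noisy = add_noise(img, 20.0, rng)
modified, mask = modify_intensities(img, 0.01, (0.5, 0.75, 1.5, 2.0), rng)
print(psnr(img, noisy, max(img)), sum(mask) / len(mask))
```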

Fig. 3.

Simulated image: (a) the simulated image () and (b) the ground truth; the white boxes represent the changes.

Fig. 4.

Semi-simulated image by the permutation of image regions: (a) the original B-scan image, (b) the simulated B-scan image, (c) the ground truth: the white boxes represent the changes.

Table 2.

Description of the simulated and semi-simulated dataset used to evaluate the proposed nonlocal Bayesian Markovian approach for change detection.

| Dataset | Number of images |
|---|---|
| Simulated dataset | 100 |
| Semi-simulated dataset by region permutation | 100 |
| Semi-simulated dataset by intensity modification | 100 |

4.2. Change Detection Algorithm Assessment

To emphasize the benefit of the proposed change detection approach, called the nonlocal Bayesian Markovian approach, and particularly of using the nonlocal approach to define the MRF energy function, we compared it with three change detection methods:

1. The proposed method with a classic MRF neighborhood system

2. The kernel k-means algorithm6 with the RBF Gaussian kernel

3. A Bayesian threshold-based method.42

To evaluate change detection, we use the percentage of false alarms (PFA), the percentage of missed detections (PMD), and the percentage of total errors (PTE), defined by

$$
\mathrm{PFA} = \frac{FA}{N_u} \times 100, \qquad \mathrm{PMD} = \frac{MD}{N_c} \times 100, \qquad \mathrm{PTE} = \frac{FA + MD}{N_u + N_c} \times 100,
$$

where FA is the number of unchanged voxels incorrectly detected as changed, $N_u$ is the total number of unchanged voxels, MD is the number of changed voxels mistakenly detected as unchanged, and $N_c$ is the total number of changed voxels. The kappa coefficient of agreement between the ground truth and the change detection map was also calculated. The kappa statistic was originally developed by Cohen43 to measure agreement while accounting for the agreement expected to occur by chance. A detailed description of the kappa coefficient of agreement can be found in Ref. 44. Strength of agreement can be categorized according to the method proposed in Ref. 45: below 0, poor; 0 to 0.20, slight; 0.21 to 0.40, fair; 0.41 to 0.60, moderate; 0.61 to 0.80, substantial; and 0.81 to 1, almost perfect. Table 3 shows the results of change detection on the simulated and semi-simulated datasets. The nonlocal Bayesian Markovian approach tends to perform better than the kernel k-means, classic MRF, and Bayesian threshold-based methods. This suggests that the proposed nonlocal Markov prior improves the change detection results by modeling the spatial dependency of voxels as well as the redundancy present in the images through the extended neighborhood system.
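The error percentages and Cohen's kappa for a binary change map can be computed as in the following sketch. It treats the maps as flat sequences of booleans (True = changed) and assumes that both classes are present in the ground truth; the interpretation helper encodes the Landis–Koch bands. The example maps are made up.

```python
def change_detection_metrics(truth, pred):
    """PFA, PMD, PTE (in %) and Cohen's kappa for boolean change maps (True = changed)."""
    fa = sum(1 for t, p in zip(truth, pred) if not t and p)  # false alarms
    md = sum(1 for t, p in zip(truth, pred) if t and not p)  # missed detections
    n = len(truth)
    n_c = sum(1 for t in truth if t)  # changed voxels in the ground truth
    n_u = n - n_c                     # unchanged voxels in the ground truth
    pfa = 100.0 * fa / n_u
    pmd = 100.0 * md / n_c
    pte = 100.0 * (fa + md) / (n_u + n_c)
    # Cohen's kappa: observed agreement corrected for agreement expected by chance.
    p_obs = (n - fa - md) / n
    pred_c = sum(1 for p in pred if p)
    p_exp = (n_c * pred_c + n_u * (n - pred_c)) / (n * n)
    kappa = (p_obs - p_exp) / (1.0 - p_exp)
    return pfa, pmd, pte, kappa


def landis_koch(kappa):
    """Landis-Koch strength-of-agreement label for a kappa value."""
    if kappa < 0.0:
        return "poor"
    for upper, label in ((0.20, "slight"), (0.40, "fair"), (0.60, "moderate"), (0.80, "substantial")):
        if kappa <= upper:
            return label
    return "almost perfect"


# 10 changed and 90 unchanged voxels; 2 misses and 3 false alarms.
truth = [True] * 10 + [False] * 90
pred = [True] * 8 + [False] * 2 + [True] * 3 + [False] * 87
pfa, pmd, pte, kappa = change_detection_metrics(truth, pred)
print(pfa, pmd, pte, kappa, landis_koch(kappa))
```

Here PFA = 3/90 × 100 ≈ 3.33%, PMD = 2/10 × 100 = 20%, PTE = 5/100 × 100 = 5%, and kappa ≈ 0.73 (substantial agreement), showing how a small false-alarm percentage can coexist with a much larger missed-detection percentage when changed voxels are rare.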

Table 3.

Mean and standard deviation (SD) of false detection, missed detection, total errors, and the kappa coefficient resulting from the proposed nonlocal Bayesian Markovian approach, the classic Markov random field (MRF) approach, the kernel k-means method, and the threshold method on the simulated and semi-simulated datasets.

| Dataset / method | False detection | Missed detection | Total errors | Kappa |
|---|---|---|---|---|
| *Semi-simulated datasets* | | | | |
| Nonlocal Bayesian Markovian approach | **0.37 () %** | **3.12 () %** | **0.61 () %** | **0.84** |
| Classic MRF approach | 0.54 () % | 5.62 () % | 0.91 () % | 0.78 |
| Kernel k-means | 0.89 () % | 8.03 () % | 2.03 () % | 0.71 |
| Threshold | 2.14 () % | 10.52 () % | 3.45 () % | 0.64 |
| *Simulated dataset* | | | | |
| Nonlocal Bayesian Markovian approach | **0.84 () %** | **5.17 () %** | **0.97 () %** | **0.82** |
| Classic MRF approach | 1.24 () % | 7.05 () % | 1.51 () % | 0.72 |
| Kernel k-means | 1.65 () % | 8.89 () % | 2.38 () % | 0.69 |
| Threshold | 3.78 () % | 15.25 () % | 6.41 () % | 0.53 |

Note: Bold values correspond to the best values.

4.3. Classification Results

This section describes the glaucoma progression detection results obtained with the proposed scheme. To evaluate classification, we used the area under the receiver operating characteristic curve (AUROC), the sensitivity, and the specificity:

$$
\text{Sensitivity} = \frac{TP}{TP + FN}, \qquad \text{Specificity} = \frac{TN}{TN + FP},
$$

where TP is the number of true-positive identifications, FN is the number of false-negative identifications, TN is the number of true-negative identifications, and FP is the number of false-positive identifications.
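Sensitivity, specificity, and AUROC can be computed as sketched below. AUROC is obtained through its Mann–Whitney interpretation, the probability that a randomly chosen progressing eye scores higher than a randomly chosen non-progressing one (ties count as one half), which is one standard way to compute it. The labels, scores, and the 0.5 cutoff in the example are made up.

```python
def sensitivity_specificity(labels, predictions):
    """labels/predictions: booleans, True = progressing."""
    tp = sum(1 for y, p in zip(labels, predictions) if y and p)
    fn = sum(1 for y, p in zip(labels, predictions) if y and not p)
    tn = sum(1 for y, p in zip(labels, predictions) if not y and not p)
    fp = sum(1 for y, p in zip(labels, predictions) if not y and p)
    return tp / (tp + fn), tn / (tn + fp)


def auroc(labels, scores):
    """Mann-Whitney estimate of the area under the ROC curve (ties count 1/2)."""
    pos = [s for y, s in zip(labels, scores) if y]
    neg = [s for y, s in zip(labels, scores) if not y]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


labels = [True, True, True, False, False, False, False]
scores = [0.9, 0.8, 0.3, 0.7, 0.2, 0.1, 0.4]
predictions = [s >= 0.5 for s in scores]
print(sensitivity_specificity(labels, predictions), auroc(labels, scores))
```

Unlike sensitivity and specificity, which depend on the chosen cutoff, the AUROC summarizes ranking quality over all cutoffs, which is why the two kinds of measures are reported together.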

The proposed framework was experimentally validated with clinical datasets. It is important to note that we used independent training and test sets to estimate the diagnostic accuracy of the methods. Specifically, we trained our classifiers, as well as the other classifiers used for comparison, on 10 normal eyes, 5 stable glaucoma eyes, and 10 progressor eyes. To emphasize the benefit of the proposed glaucoma progression detection scheme, called the nonlocal Bayesian-fuzzy detection scheme (BFDS), we compared it with three other methods:

1. The support vector machine (SVM) classifier of the RNFL thickness (RNFL-SVM). As in Ref. 46, we used the radial basis function as the kernel. The SVM was trained by a variation of Platt’s sequential minimal optimization algorithm.47 The SVM hyperparameters were determined by a global optimization technique based on simulated annealing.48

2. The ANN classifier of the RNFL thickness (RNFL-ANN). As in Ref. 46, we used the multilayer perceptron version of the ANN.49

3. The proposed method with the classic MRF prior on the change detection map (standard BFDS).

Results are presented in Table 4. By using the whole 3-D SD-OCT volume instead of the RNFL measurements, the nonlocal BFDS method achieves high specificity in both normal and stable glaucoma eyes (94% in each group) while maintaining good sensitivity (70%). This can be explained by the fact that our approach explicitly accounts for the noise during change detection instead of relying on instrument measurements, which strongly depend on image quality. Moreover, the nonlocal approach led to better sensitivity than the classic approach, which can be attributed to its more accurate change detection. It is important to note that specificity is generally considered more important than sensitivity in glaucoma progression detection, because glaucoma is a slowly progressing disease50 and false negatives (people falsely identified as nonprogressing) can be identified at subsequent follow-up visits. Conversely, low specificity would lead to substantial follow-up costs and unnecessary treatment (e.g., surgery), and may cause considerable distress for people who are not progressing.

Table 4.

Diagnostic accuracy of the nonlocal BFDS, the standard BFDS, the RNFL-ANN, and the RNFL-SVM methods.

| Method | Progressing glaucoma group sensitivity (%) | Normal group specificity (%) | Stable group specificity (%) | AUROC |
|---|---|---|---|---|
| Nonlocal BFDS | **70** | **94** | **94** | **0.87** |
| Standard BFDS | 64 | 90 | 92 | 0.83 |
| RNFL-ANN | 69 | 71 | 78 | 0.69 |
| RNFL-SVM | 52 | 68 | 79 | 0.60 |

Note: Bold values correspond to the best values.

5. Conclusion

A new framework for glaucoma progression detection has been proposed. We particularly focused on formulating the change detection problem as a missing data problem. The task of inferring glaucomatous changes is tackled with a hierarchical MCMC algorithm which, to our knowledge, is used here for the first time in the context of glaucoma diagnosis. The proposed nonlocal approach to defining the MRF energy function increased the robustness of the change detection scheme compared with the classic method. Validation on clinical data showed that the proposed approach has high diagnostic accuracy for detecting glaucoma progression compared with the other methods tested.

Acknowledgments

The authors acknowledge the funding and support of the National Eye Institute (Grant Nos. U10EY14267, EY019869, EY021818, EY022039 and EY08208, EY11008, P30EY022589 and EY13959).

Biographies

Akram Belghith received his PhD degree in computer science from the University of Strasbourg. He is an assistant scientist at the Hamilton Glaucoma Center, University of California, San Diego, California, USA. His current research interests include machine learning, image processing, pattern recognition, and data analysis.

Christopher Bowd received his PhD degree from Washington State University, Pullman, Washington, USA. Currently, he is a research scientist at the Hamilton Glaucoma Center, University of California, San Diego, California, USA. His current work involves early detection and monitoring of glaucoma with structural imaging of the optic nerve, visual function, and electrophysiological testing using standard and machine learning classifier-based analyses.

Felipe A. Medeiros received his graduate degree and completed his residency at the University of São Paulo. He is a professor of ophthalmology and the medical director of the Hamilton Glaucoma Center, University of California, San Diego, California, USA. He is also the director of vision function research at the same institution.

Madhusudhanan Balasubramanian received his PhD degree from Louisiana State University, USA. Currently, he is an assistant professor in the Department of Electrical and Computer Engineering at the University of Memphis, Tennessee, USA. His research interests lie at the intersection of computational science and engineering, biosolid mechanics, and biofluid dynamics, with an emphasis on ocular structures and dynamics and the mechanisms of vision loss in glaucoma.

Robert N. Weinreb received his electrical engineering degree from the Massachusetts Institute of Technology, USA, and his MD degree from Harvard Medical School, Massachusetts. He is a clinician, a surgeon, an educator, and a scientist. He is a distinguished professor of ophthalmology and the chairman of the Department of Ophthalmology at the University of California, San Diego, California, USA.

Linda M. Zangwill received her MS degree from the Harvard School of Public Health and her PhD degree from Ben-Gurion University of the Negev. She is a professor of ophthalmology at the University of California, San Diego, California, USA. Her research interests include studying the relationship between structural and functional changes in the aging and glaucomatous eye, and developing computational techniques to improve glaucomatous change detection.

References

- 1. Dielemans I., et al., “Primary open-angle glaucoma, intraocular pressure, and diabetes mellitus in the general elderly population. The Rotterdam study,” Ophthalmology 103(8), 1271 (1996). 10.1016/S0161-6420(96)30511-3

- 2. Jaffe G., Caprioli J., “Optical coherence tomography to detect and manage retinal disease and glaucoma,” Am. J. Ophthalmol. 137(1), 156–169 (2004). 10.1016/S0002-9394(03)00792-X

- 3. Mistlberger A., et al., “Heidelberg retina tomography and optical coherence tomography in normal, ocular-hypertensive, and glaucomatous eyes,” Ophthalmology 106(10), 2027–2032 (1999). 10.1016/S0161-6420(99)90419-0

- 4. Balasubramanian M., et al., “Localized glaucomatous change detection within the proper orthogonal decomposition framework,” Invest. Ophthalmol. Vis. Sci. 53(7), 3615–3628 (2012). 10.1167/iovs.11-8847

- 5. Belghith A., et al., “A Bayesian framework for glaucoma progression detection using Heidelberg retina tomograph images,” Int. J. Adv. Comput. Sci. Appl. 4(9), 223–229 (2013). 10.14569/issn.2156-5570

- 6. Chen T. C., “Spectral domain optical coherence tomography in glaucoma: qualitative and quantitative analysis of the optic nerve head and retinal nerve fiber layer (an AOS thesis),” Trans. Am. Ophthalmol. Soc. 107, 254–281 (2009).

- 7. Mwanza J.-C., et al., “Ability of Cirrus HD-OCT optic nerve head parameters to discriminate normal from glaucomatous eyes,” Ophthalmology 118(2), 241–248 (2011). 10.1016/j.ophtha.2010.06.036

- 8. Cho J. W., et al., “Detection of glaucoma by spectral domain-scanning laser ophthalmoscopy/optical coherence tomography (SD-SLO/OCT) and time domain optical coherence tomography,” J. Glaucoma 20(1), 15–20 (2011). 10.1097/IJG.0b013e3181d1d332

- 9. Seong M., et al., “Macular and peripapillary retinal nerve fiber layer measurements by spectral domain optical coherence tomography in normal-tension glaucoma,” Invest. Ophthalmol. Vis. Sci. 51(3), 1446–1452 (2010). 10.1167/iovs.09-4258

- 10. Leite M. T., et al., “Comparison of the diagnostic accuracies of the Spectralis, Cirrus, and RTVue optical coherence tomography devices in glaucoma,” Ophthalmology 118(7), 1334–1339 (2011). 10.1016/j.ophtha.2010.11.029

- 11. Kotowski J., et al., “Glaucoma discrimination of segmented Cirrus spectral domain optical coherence tomography (SD-OCT) macular scans,” Br. J. Ophthalmol. 96(11), 1420–1425 (2012). 10.1136/bjophthalmol-2011-301021

- 12. Gardiner S. K., et al., “A method to estimate the amount of neuroretinal rim tissue in glaucoma: comparison with current methods for measuring rim area,” Am. J. Ophthalmol. 157(3), 540–549 (2014). 10.1016/j.ajo.2013.11.007

- 13. Chauhan B. C., et al., “Enhanced detection of open-angle glaucoma with an anatomically accurate optical coherence tomography–derived neuroretinal rim parameter,” Ophthalmology 120(3), 535–543 (2013). 10.1016/j.ophtha.2012.09.055

- 14. Patel N., Sullivan-Mee M., Harwerth R. S., “The relationship between retinal nerve fiber layer thickness and optic nerve head neuroretinal rim tissue in glaucoma,” Invest. Ophthalmol. Vis. Sci. 55(10), 6802–6816 (2014). 10.1167/iovs.14-14191

- 15. Xu J., et al., “Three-dimensional spectral-domain optical coherence tomography data analysis for glaucoma detection,” PLoS One 8(2), e55476 (2013). 10.1371/journal.pone.0055476

- 16. Shin J. W., et al., “Diffuse retinal nerve fiber layer defects identification and quantification in thickness maps,” Invest. Ophthalmol. Vis. Sci. 55(5), 3208–3218 (2014). 10.1167/iovs.13-13181

- 17. Savini G., Carbonelli M., Barboni P., “Spectral-domain optical coherence tomography for the diagnosis and follow-up of glaucoma,” Curr. Opin. Ophthalmol. 22(2), 115–123 (2011).

- 18. Strouthidis N. G., et al., “Longitudinal change detected by spectral domain optical coherence tomography in the optic nerve head and peripapillary retina in experimental glaucoma,” Invest. Ophthalmol. Vis. Sci. 52(3), 1206–1219 (2011). 10.1167/iovs.10-5599

- 19. Wessel J. M., et al., “Longitudinal analysis of progression in glaucoma using spectral-domain optical coherence tomography,” Invest. Ophthalmol. Vis. Sci. 54(5), 3613–3620 (2013). 10.1167/iovs.12-9786

- 20. Balasubramanian M., et al., “Effect of image quality on tissue thickness measurements obtained with spectral-domain optical coherence tomography,” Opt. Express 17(5), 4019 (2009). 10.1364/OE.17.004019

- 21. Li S., Markov Random Field Modeling in Computer Vision, Springer-Verlag, New York (1995).

- 22. Buades A., Coll B., Morel J.-M., “A review of image denoising algorithms, with a new one,” Multiscale Model. Simul. 4(2), 490–530 (2005). 10.1137/040616024

- 23. Sun J., Tappen M. F., “Learning non-local range Markov random field for image restoration,” in Proc. 2011 IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), pp. 2745–2752, IEEE, Colorado Springs (2011).

- 24. Caldairou B., et al., “A non-local fuzzy segmentation method: application to brain MRI,” Pattern Recognit. 44(9), 1916–1927 (2011). 10.1016/j.patcog.2010.06.006

- 25. Gilks W., Richardson S., Spiegelhalter D., Markov Chain Monte Carlo in Practice, Chapman & Hall/CRC, UK (1996).

- 26. Tison C., et al., “A new statistical model for Markovian classification of urban areas in high-resolution SAR images,” IEEE Trans. Geosci. Remote Sens. 42(10), 2046–2057 (2004). 10.1109/TGRS.2004.834630

- 27. Belghith A., et al., “A unified framework for peak detection and alignment: application to HR-MAS 2D NMR spectroscopy,” Signal Image Video Process. 7(5), 833–842 (2013). 10.1007/s11760-011-0272-2

- 28. Dobigeon N., et al., “Bayesian separation of spectral sources under non-negativity and full additivity constraints,” Signal Process. 89(12), 2657–2669 (2009). 10.1016/j.sigpro.2009.05.005

- 29. Besag J., “On the statistical analysis of dirty pictures,” J. R. Stat. Soc. Ser. B 48(3), 259–302 (1986).

- 30. Coupé P., et al., “An optimized blockwise nonlocal means denoising filter for 3-D magnetic resonance images,” IEEE Trans. Med. Imaging 27(4), 425–441 (2008). 10.1109/TMI.2007.906087

- 31. Hangai M., et al., “Ultrahigh-resolution versus speckle noise-reduction in spectral-domain optical coherence tomography,” Opt. Express 17(5), 4221–4235 (2009). 10.1364/OE.17.004221

- 32. Gelman A., Roberts G., Gilks W., “Efficient Metropolis jumping rules,” Bayesian Stat. 5, 599–608 (1996).

- 33. Gilks W. R., Roberts G. O., Sahu S. K., “Adaptive Markov chain Monte Carlo through regeneration,” J. Am. Stat. Assoc. 93(443), 1045–1054 (1998). 10.1080/01621459.1998.10473766

- 34. Goldberg D., Genetic Algorithms in Search, Optimization, and Machine Learning, Addison-Wesley, Boston, Massachusetts (1989).

- 35. Leske M. C., et al., “Early Manifest Glaucoma Trial: design and baseline data,” Ophthalmology 106(11), 2144–2153 (1999). 10.1016/S0161-6420(99)90497-9

- 36. O’Leary N., et al., “Glaucomatous progression in series of stereoscopic photographs and Heidelberg retina tomograph images,” Arch. Ophthalmol. 128(5), 560–568 (2010). 10.1001/archophthalmol.2010.52

- 37. Langenegger S. J., Funk J., Töteberg-Harms M., “Reproducibility of retinal nerve fiber layer thickness measurements using the eye tracker and the retest function of Spectralis SD-OCT in glaucomatous and healthy control eyes,” Invest. Ophthalmol. Vis. Sci. 52(6), 3338–3344 (2011). 10.1167/iovs.10-6611

- 38. Pemp B., et al., “Effectiveness of averaging strategies to reduce variance in retinal nerve fibre layer thickness measurements using spectral-domain optical coherence tomography,” Graefe’s Arch. Clin. Exp. Ophthalmol. 251(7), 1841–1848 (2013). 10.1007/s00417-013-2337-0

- 39. Kang J. U., et al., “Real-time three-dimensional Fourier-domain optical coherence tomography video image guided microsurgeries,” J. Biomed. Opt. 17(8), 081403 (2012). 10.1117/1.JBO.17.8.081403

- 40. Wu H., de Boer J. F., Chen T. C., “Reproducibility of retinal nerve fiber layer thickness measurements using spectral domain optical coherence tomography,” J. Glaucoma 20(8), 470–476 (2011). 10.1097/IJG.0b013e3181f3eb64

- 41. Serbecic N., et al., “Reproducibility of high-resolution optical coherence tomography measurements of the nerve fibre layer with the new Heidelberg Spectralis optical coherence tomography,” Br. J. Ophthalmol. (2010). 10.1136/bjo.2010.186221

- 42. Bazi Y., Bruzzone L., Melgani F., “An unsupervised approach based on the generalized Gaussian model to automatic change detection in multitemporal SAR images,” IEEE Trans. Geosci. Remote Sens. 43(4), 874–887 (2005). 10.1109/TGRS.2004.842441

- 43. Cohen J., “A coefficient of agreement for nominal scales,” Educ. Psychol. Meas. 20, 37–46 (1960). 10.1177/001316446002000104

- 44. Brennan R. L., Prediger D. J., “Coefficient kappa: some uses, misuses, and alternatives,” Educ. Psychol. Meas. 41(3), 687–699 (1981). 10.1177/001316448104100307

- 45. Landis J. R., Koch G. G., “The measurement of observer agreement for categorical data,” Biometrics 33(1), 159–174 (1977). 10.2307/2529310

- 46. Bizios D., et al., “Machine learning classifiers for glaucoma diagnosis based on classification of retinal nerve fibre layer thickness parameters measured by Stratus OCT,” Acta Ophthalmol. 88(1), 44–52 (2010). 10.1111/aos.2010.88.issue-1

- 47. Fan R., Chen P., Lin C., “Working set selection using second order information for training support vector machines,” J. Mach. Learn. Res. 6, 1889–1918 (2005).

- 48. Imbault F., Lebart K., “A stochastic optimization approach for parameter tuning of support vector machines,” in Proc. 17th Int. Conf. on Pattern Recognition (ICPR), Vol. 4, pp. 597–600, IEEE, Cambridge, UK (2004).

- 49. Gardner M., Dorling S., “Artificial neural networks (the multilayer perceptron)–a review of applications in the atmospheric sciences,” Atmos. Environ. 32(14–15), 2627–2636 (1998). 10.1016/S1352-2310(97)00447-0

- 50. Weinreb R. N., Khaw P. T., “Primary open-angle glaucoma,” The Lancet 363(9422), 1711–1720 (2004). 10.1016/S0140-6736(04)16257-0