Abstract

Background/Objective

To review, compare and synthesize current faculty development programs and components. Findings are expected to facilitate research that will increase the competency and competitiveness of less-established biomedical research faculty.

Methods

We reviewed the current literature on research faculty development programs, and report on their type, components, outcomes and limitations.

Results

Nineteen articles met inclusion criteria. There were no prospective studies; most were observational and all lacked a control group. Mentoring was the most successful program type, and guided and participatory learning the most successful enabling mechanism, in achieving stated program goals.

Conclusions

Our findings are limited by the small number of current studies, wide variation in implementation, study design, and populations, and the lack of uniform metrics. However, results suggest that future prospective, randomized studies should employ quantitative criteria, and examine individual, human factors that predict “success.”

Keywords: Research faculty development, mentoring, grants, open learning, training curriculum

Introduction

A survey of the literature reveals that most recent studies on faculty development involve clinicians and teachers, rather than biomedical researchers. This exposes a critical need: early-career biomedical investigators do not appear highly competitive in securing independent grant funding. A National Institutes of Health (NIH) report (National Institutes of Health [NIH], 2008) shows that the average age of first-time R01-equivalent recipients increased from 37 years in 1980 to 42 years in 2008. Although the NIH budget increased between 2000 and 2009, most of this funding went to experienced investigators with previous awards. In fact, the number of grants to early-career investigators relative to the total number awarded has dropped steadily since 1989, with a slight rebound in 2007. It is therefore critical that less-established and less-experienced faculty be adequately trained and prepared to compete for potentially fewer funding opportunities, and to advance biomedical research and science. To provide information and insight into effective programs, interventions and components that may increase competency and competitiveness, we examined recent peer-reviewed articles on faculty development programs for biomedical researchers. We report on their type, underlying adult learning and enabling mechanisms, respective outcomes, and limitations. We then offer recommendations for future studies based on gaps found in current research.

Methodology

Review Protocol

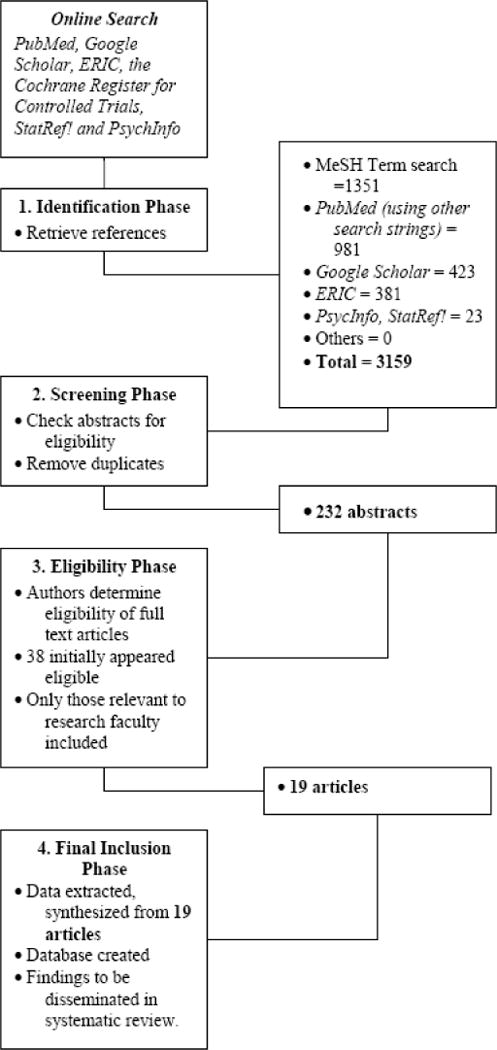

Our protocol (Figure 1, below) followed the 2009 Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) checklist (Moher, Liberati, Tetzlaff, & Altman, 2009), incorporating similar guidelines by Eden and colleagues (Eden, Levit, Berg, & Morton, 2011) and the Evidence for Policy and Practice Information and Co-ordinating Centre (EPPI-Centre) (Gough, Oliver, & Thomas, 2012).

Figure 1.

Review Search Flow.

Inclusion and Exclusion Criteria

We excluded studies that involved exclusively clinical and teaching faculty, and student training, as well as review or opinion pieces. To be included, studies must have met the following a priori criteria:

- Focused on researchers in the biomedical sciences.

- Involved biomedical research faculty development.

- Employed a model and/or intervention for implementing research faculty development.

- Published in English.

- Per accepted review standards (Creswell, 2010; Shojania et al., 2007), was published within the past 10 years (2004 – 2014).

Search Strategy and Information Sources

The Identification Phase of our review began in June 2013. A list of MeSH terms (Table 1, below) was used to search PubMed (US National Library of Medicine National Institutes of Health). We also searched PubMed, Google Scholar, StatRef!, ERIC and PsycINFO using the following keywords alone and in combination: “adult learning,” “learning models,” “biomedical research,” “research faculty,” “faculty development,” “didactic training” and “curriculum.” Keyword searches were replicated independently between August 2013 and October 2014 to verify the results of our initial search.

Table 1.

MeSH Terms used in Systematic Review Search

| Population | Faculty Development Methods | Outcomes |

|---|---|---|

| Biomedical Research Education Medical Faculty Academic Medical Centers Research Personnel Schools, Medical Health Personnel/Education | Staff Development Models Educational Benchmarking Teaching Mentors Evidence-Based Medicine Problem-Based Learning Awards and Prizes Fellowships and Scholarships Foundations Program Development Program Evaluation Certification Computer-Assisted Instruction Education, Continuing | Career Choice Authorship Research Support Financing, Organized Financing, Government Publishing Professional Autonomy Job Satisfaction Interprofessional Relations Diffusion of Innovation Leadership |

In the Screening Phase, duplicates found during the Identification Phase were eliminated. In the Eligibility Phase, inclusion and exclusion criteria were applied to non-redundant abstracts identified in the Screening Phase. From those deemed eligible, full manuscripts were examined in the Final Inclusion Phase. These were analyzed and yielded findings to be disseminated as part of our review process. We completed all phases by October 30, 2014.

Search Process

Our MeSH search yielded 1351 references. Separate searches using keywords described above, both alone and in combination, resulted in an additional 981 references and abstracts from PubMed, 423 from Google Scholar, 381 from ERIC, and 23 from StatRef! and PsycINFO databases. Of these 3159 articles found during the Identification Phase, 232 remained when duplicates were removed. In the Eligibility Phase, the authors reviewed the abstracts and if needed, the complete manuscripts. Of these, 38 initially appeared to meet our review criteria.

However, 15 were found to involve student, medical resident, nursing and general practitioner training, and were subsequently excluded, as were an opinion piece, and a non-empirical study that tested a proposed faculty development model through a self-assessment survey. Of the remainder, 18 were found in PubMed and one in ERIC. Due to the small number of studies, it was not possible to demonstrate statistical significance as to which programs or interventions lead to the most successful outcomes. However, we were able to provide a descriptive summary of the programs and identify trends.
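The Identification and Screening Phase counts reported above can be checked with simple arithmetic. The following is a minimal sketch; the dictionary keys and variable names are illustrative labels, and only the numbers come from the text.

```python
# Records retrieved per source during the Identification Phase (figures from the text).
identified = {
    "PubMed (MeSH terms)": 1351,
    "PubMed (keywords)": 981,
    "Google Scholar": 423,
    "ERIC": 381,
    "StatRef! and PsycINFO": 23,
}

total_identified = sum(identified.values())
after_deduplication = 232  # records remaining once duplicates were removed
duplicates_removed = total_identified - after_deduplication

print(f"Identified: {total_identified}")
print(f"Duplicates removed: {duplicates_removed}")
print(f"Abstracts carried forward: {after_deduplication}")
```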

Descriptive Statistics and Analysis

Seventeen of the 19 included studies originated from institutions in the United States and Puerto Rico; two were from universities in Denmark and Australia (Table 2, below). Ten of the included studies (52.6%) were conducted by universities with schools of medicine; six (31.6%) came from schools or departments of health science or biomedical research, and three (15.8%) were undertaken by foundations and medical societies.

Table 2.

Summary of Included Studies

| Institution and Reference (Author[s], Year) | Type of Program or Intervention | Program Component(s) | Learning/Facilitating Mechanism(s) | Population (n) | Stated Goal(s) Achieved (Y or N) | Program Limitations and Concerns |

|---|---|---|---|---|---|---|

| 1. American Cancer Society (Vogler, 2004) | Grant/Award | Mentoring, lectures, financial support | Didactic learning, mentoring, financial support | Clinician researchers (51) | Research competency (N) | Could not assess if training program or other factors led to “success,” e.g. those with prior research experience may be more likely to get subsequent funding |

| 2. American College of Gastroenterology (Crockett, et al., 2009) | Grant/Award | Financial support | Financial support | Biomedical researchers (341) | Publications and research career development (Y) | Participants may have received support from other sources |

| 3. Cornell University (Supino & Borer, 2007) | Research Curriculum/Coursework | Research Training | Didactic learning | Clinician researchers (500+) | Research competency (Y) | Attendance at lectures not mandatory; no pre- or post-testing |

| 4. Cornell University (Bruce, et al., 2011) | Mentoring | Mentoring, grant review, lectures | Didactic learning, mentoring, guided and participatory learning | Clinician researchers (42) | Research competency (Y) | Possible selection bias, e.g. motivated volunteer participants may be more likely to succeed |

| 5. Duke University (Armstrong, Decherney, Leppert, Rebar, & Maddox, 2009) | Degree/Certificate Program | Research Training | Didactic learning, guided and participatory learning | Clinician researchers (10) | Research competency (Y) | Participation limited due to difficulties in obtaining financial support |

| 6. Marshfield Clinic Research Foundation (Yale, et al., 2011) | Mentoring | Mentoring, manuscript and grant review, research collaboration with emeritus mentors | Mentoring, guided and participatory learning | Clinician researchers (3 physician emeriti) | Facilitate mentoring by emeritus physicians, dentists, PhDs (Y) | Study focused on self-perceived effectiveness by emeritus faculty mentors, rather than on actual effect on mentees |

| 7. Ohio University College of Osteopathic Medicine (Balaji, Knisely, & Blazyk, 2007) | Grant/Award | Financial support, grant review | Financial support for selected faculty in submitting NIH grant application | Clinician researchers (3 in 2005, 2 in 2006) | High-priority NIH review scores, NIH funding (N) | No awardees received high-priority NIH scores in 2005. Scores for those given University awards in 2006 were not available at the time the article was published |

| 8. Southern Cross University, Australia (Davis, et al., 2012) | Research Curriculum/Coursework | Research Training | Didactic learning, guided and participatory learning | Clinician researchers (7) | Junior researcher career development (N) | Participants had high workload demands, institutional restrictions |

| 9. Texas Tech University Health Science Center (Eder & Pierce Jr., 2011) | Grant/Award | Administrative and statistical support, financial support, research training | Financial support, didactic learning, guided and participatory learning | Clinician researchers (91) | Improved research infrastructure (Y) | Methodological concerns: confounding variables, effect not generalizable to other schools or campuses, productivity and “success” of participants not primary metric |

| 10. Thoracic Surgery Foundation for Research and Education (Jones, Mack, Patterson, & Cohn, 2011) | Grant/Award | Financial support | Financial support | Biomedical researchers (75) | Research competency and activity (Y) | Not all grant recipients participated in study; lack of control group; possible self-reporting bias and/or inaccuracy |

| 11. University of California-San Diego (Daley, et al., 2009) | Grant/Award | Research Training | Mentoring, didactic learning, guided and participatory learning | Clinician researchers (18) | Research competency and activity (N) | Study limited to under-represented minority faculty members |

| 12. University of Cincinnati (Bragg et al., 2011) | Grant/Award | Research Training | Didactic learning | Clinical and basic science researchers (139) | Research productivity (N) | Lack of follow up data for comparative analysis |

| 13. University of Copenhagen (Tulinius et al., 2012) | Grant/Award | Research Training | Financial support, guided and participatory learning | Biomedical researchers (95) | Research competency (N) | Participants experienced teaching and research conflicts |

| 14. University of Michigan (Gruppen, Yoder, Frye, Perkowski, & Mavis, 2011) | Research Curriculum/Coursework | Research Training | Didactic learning, guided and participatory learning | Clinician researchers (33) | Research activity (Y) | Minimum enrollment targets not always met; logistical concerns, e.g. difficulties in arranging travel |

| 15. University of Pennsylvania School of Medicine (Strom et al., 2012) | Degree/Certificate Program | Research Training | Didactic learning, mentoring, guided and participatory learning | Clinician researchers (500+) | Research competency/Research productivity (N) | Concerns included obtaining sufficient funding, retaining mentors, and recruiting trainees from highly paid specialties |

| 16. University of Pittsburgh (Santucci et al., 2008) | Mentoring | Peer mentoring | Mentoring | Health sciences researchers (5) | Research productivity (Y) | Peer mentoring seen to augment, rather than replace traditional mentoring |

| 17. University of Puerto Rico Medical Sciences Campus (Estape et al., 2011) | Research Curriculum/Coursework | Research Training | Didactic learning | Clinical and basic science researchers (N/A) | Research activity (N) | Proposed model has not been implemented or tested |

| 18. University of Virginia (Schroen, Thielen, Turrentine, Kron, & Slingluff Jr., 2012) | Grant/Award | Financial incentives for increased publications and extramural grants | Financial support | Clinician researchers (18) | Increased number of publications and grant awards (Y) | Authors raise concerns over using publications and grants as metrics in different environments and under different institutional conditions. |

| 19. Vanderbilt School of Medicine (Brown et al., 2008) | Mentoring | Mentoring, shared research projects | Mentoring, guided and participatory learning | Biomedical researchers (70) | Research activity and productivity/Research career development (Y) | Methodological challenges in assessing results: comparison groups, sample size, follow-up duration. |

The populations of the 19 included studies consisted of a) clinicians in basic science, clinical or biobehavioral research (n = 12, 63.2%), b) biomedical researchers in basic science, social or biobehavioral research (n = 4, 21.1%), c) clinical and basic science researchers (n = 2, 10.5%), and d) health science researchers (n = 1, 5.3%).

The 19 faculty development programs identified in our review (Table 3) utilized a) grants or research awards that provided financial support (as salaries or monetary awards) and/or research training (n = 9, 47.4%), b) mentoring (n = 4, 21.1%), c) research training (n = 4, 21.1%), usually in the form of structured or semi-structured coursework, counseling and oversight, and d) a degree or a certificate program (n = 2, 10.5%). Of those that provided financial support, none reported on quantifiable protected time specifically allocated for research.

Table 3.

Program Type, Frequency and Outcomes

| Type of Program | n and % of implementations | n and % that met program goals |

|---|---|---|

| Grant or Research Award | n = 9, 47.4% | n = 4, 44.4% |

| Mentoring | n = 4, 21.1% | n = 4, 100% |

| Research Training | n = 4, 21.1% | n = 2, 50% |

| Degree or Certificate | n = 2, 10.5% | n = 1, 50% |
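The frequencies and goal-attainment rates in Table 3 can be re-derived from the program type and outcome columns of Table 2. The sketch below is illustrative only: the tuple encoding and helper names are assumptions, and the data are transcribed from Table 2 (Table 3 labels the Research Curriculum/Coursework category as "Research Training").

```python
from collections import defaultdict

# (program type, stated goals achieved) for studies 1-19, transcribed from Table 2.
GRANT, MENTOR, COURSE, DEGREE = (
    "Grant/Award", "Mentoring", "Research Curriculum/Coursework", "Degree/Certificate Program",
)
STUDIES = [
    (GRANT, False), (GRANT, True), (COURSE, True), (MENTOR, True), (DEGREE, True),    # 1-5
    (MENTOR, True), (GRANT, False), (COURSE, False), (GRANT, True), (GRANT, True),    # 6-10
    (GRANT, False), (GRANT, False), (GRANT, False), (COURSE, True), (DEGREE, False),  # 11-15
    (MENTOR, True), (COURSE, False), (GRANT, True), (MENTOR, True),                   # 16-19
]

def pct(part, whole):
    """Percentage rounded to one decimal place, as reported in the tables."""
    return round(100 * part / whole, 1)

def summarize(studies):
    """Map each program type to (n, % of all programs, n meeting goals, % meeting goals)."""
    counts = defaultdict(lambda: [0, 0])
    for program_type, met in studies:
        counts[program_type][0] += 1
        counts[program_type][1] += int(met)
    total = len(studies)
    return {t: (n, pct(n, total), met, pct(met, n)) for t, (n, met) in counts.items()}

summary = summarize(STUDIES)
for program_type, (n, share, met, met_share) in summary.items():
    print(f"{program_type}: n = {n} ({share}%), met goals = {met} ({met_share}%)")
```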

Four distinct underlying learning approaches or facilitating mechanisms were identified in the 19 programs (Table 4). Most incorporated more than one mechanism, e.g. a combination of didactic learning, mentoring, and guided and participatory learning at Cornell University (Bruce et al., 2011).

Table 4.

Learning or Enabling Mechanisms, Frequency and Outcomes

| Learning or Enabling Mechanism | n and % of implementations | n and % that met program goals |

|---|---|---|

| Didactic Learning | n = 11, 57.9% | n = 5, 45.5% |

| Guided and Participatory Learning | n = 10, 52.6% | n = 6, 60% |

| Mentoring | n = 7, 36.8% | n = 4, 57.1% |

| Financial Support | n = 7, 36.8% | n = 4, 57.1% |

Didactic learning as lectures or presentations by instructors was the most common (n = 11, 57.9%). This was followed by guided and participatory learning through group, social, collaborative and experiential activities and approaches (n = 10, 52.6%), mentoring in the form of counseling, scientific and career guidance by senior faculty or others (n = 7, 36.8%), and financial support or incentives as salary or monetary awards for research productivity (n = 7, 36.8%).
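Because a program can use several mechanisms at once, the counts in Table 4 sum to more than 19. The following sketch shows one way to tally them from the Learning/Facilitating Mechanism(s) column of Table 2; the set-based encoding and names are assumptions made for illustration.

```python
D = "Didactic learning"
G = "Guided and participatory learning"
M = "Mentoring"
F = "Financial support"

# (mechanisms used, stated goals achieved) for studies 1-19, transcribed from Table 2.
STUDIES = [
    ({D, M, F}, False), ({F}, True), ({D}, True), ({D, M, G}, True), ({D, G}, True),      # 1-5
    ({M, G}, True), ({F}, False), ({D, G}, False), ({F, D, G}, True), ({F}, True),        # 6-10
    ({M, D, G}, False), ({D}, False), ({F, G}, False), ({D, G}, True), ({D, M, G}, False),  # 11-15
    ({M}, True), ({D}, False), ({F}, True), ({M, G}, True),                               # 16-19
]

def tally(studies):
    """Map each mechanism to (programs using it, programs using it that met their goals)."""
    result = {}
    for mechanism in (D, G, M, F):
        outcomes = [met for mechanisms, met in studies if mechanism in mechanisms]
        result[mechanism] = (len(outcomes), sum(outcomes))
    return result

mechanism_tally = tally(STUDIES)
multi_mechanism = sum(1 for mechanisms, _ in STUDIES if len(mechanisms) > 1)

for mechanism, (n, met) in mechanism_tally.items():
    print(f"{mechanism}: n = {n} ({100 * n / len(STUDIES):.1f}%), "
          f"met goals = {met} ({100 * met / n:.1f}%)")
print(f"Programs with more than one mechanism: {multi_mechanism}")
```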

Measuring Success

The primary metric used in this review to determine “success” was whether the study concludes that the goals or objectives of the program were met through its own criteria. Cornell University’s curriculum and coursework approach (Supino & Borer, 2007), for example, was found to have achieved its goal of improving research competency among clinician researchers through participation metrics. Conversely, studies such as that at Southern Cross University, Australia (Davis et al., 2012), were not deemed “successful” in our analysis because implementers did not explicitly indicate a) whether program goals and objectives had been met through their curriculum and coursework approach, and b) how these would have been assessed.

In terms of achieving stated goals and objectives, mentoring programs were reported successful in four out of four implementations (100%). This was followed by research curriculum/coursework (two out of four, 50%), degree/certificate programs (one out of two, 50%) and grant or research awards (four out of nine, 44.4%) (Table 3). In terms of learning or enabling mechanisms, guided and participatory learning appears to have been the most successful (six out of ten, 60%), followed by mentoring and providing financial support (both four out of seven, 57.1%), and lastly, didactic learning (five out of eleven, 45.5%) (Table 4).

It is important to note that of the 19 programs, 11 (57.9%) employed more than one learning or enabling mechanism or component. Texas Tech University’s Health Science Center grant/award program (Eder & Pierce Jr., 2011), for example, provided financial support as well as didactic and guided and participatory learning. However, out of a total of 11 “successful” programs, seven (63.6%) employed a single component, e.g. financial support or mentoring, compared to four programs (36.4%) that employed multiple mechanisms, such as combined didactic training, mentoring and financial support, to achieve their stated goals.
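The split between single-component and multi-component programs among those that met their goals can be tallied as follows. This sketch assumes the split is read from the Program Component(s) column of Table 2, counting the comma-separated entries for each program marked "Y"; that reading is an interpretation, not something the text states explicitly.

```python
# Study number -> number of listed program components, for the programs that met
# their stated goals (assumed reading of the "Program Component(s)" column of Table 2).
components = {2: 1, 3: 1, 4: 3, 5: 1, 6: 3, 9: 3, 10: 1, 14: 1, 16: 1, 18: 1, 19: 2}

single = sum(1 for count in components.values() if count == 1)
multiple = len(components) - single

def share(part, whole):
    """Percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)

print(f"Single component: {single} of {len(components)} ({share(single, len(components))}%)")
print(f"Multiple components: {multiple} of {len(components)} ({share(multiple, len(components))}%)")
```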

Discussion

We found that grant or research awards, mentoring, research training, and degree or certificate programs were commonly employed in biomedical research faculty development. Mentoring programs achieved all their stated goals and objectives. Research curriculum/coursework and degree/certificate programs followed, having met their goals 50% of the time. Grants and research awards were the least “successful” at 44.4%.

The majority of participants in faculty development programs were clinicians in basic science, or clinical or biobehavioral research. It is therefore not surprising that didactic learning, commonly employed in medical school curricula, was the most pervasive (57.9%), followed by guided and participatory learning (52.6%), and mentoring and financial support or incentives (both 36.8%). Guided and participatory learning was the most successful enabling mechanism in terms of meeting stated program goals and objectives (60%), followed by mentoring and financial support (each at 57.1%), and lastly, didactic learning (45.5%).

It is important to note that programs with multiple enabling or learning components, e.g. mentoring combined with didactic and guided and participatory learning (Daley, Broyles, Rivera, & Reznik, 2009), did not clearly demonstrate more “successful” outcomes than those that relied on a single mechanism, such as financial support (Crockett, Dellon, Bright, & Shaheen, 2009). It is equally important to emphasize, however, the limitations of using program goals and objectives in measuring the efficacy or appropriateness of the program or its components. These criteria may be subjective, post hoc and/or biased.

The Marshfield Clinic Research Foundation’s mentoring program (Yale, Jones, Wesbrook, Talsness, & Mazza, 2011), for example, was assessed using self-rated perceptions of effectiveness by emeritus faculty mentors, rather than through quantitative measures such as the number of peer-reviewed mentee publications or the number of grant applications. Such quantitative metrics may allow programs and learning mechanisms to be assessed more accurately and objectively (Roy, Roberts, & Stewart, 2006; Rust et al., 2006; Sax, Hagedorn, Arredondo, & Dicrisi, 2002).

It is also important to consider that, even for studies such as that of the American Cancer Society (Vogler, 2004), with the relatively precise goal of building research competency, the authors acknowledged that they could not determine whether the program or other factors such as having prior research experience may have affected outcomes. The core of this uncertainty is highlighted in an NIH report of its mentored career development programs (K awards) (National Institutes of Health [NIH], 2011). While these grant mechanisms appear to positively impact research productivity, their effects were found to vary based on each awardee’s prior background and training. This suggests that trainees with stronger research backgrounds and more experience may be more likely to demonstrate greater productivity and scientific competency, and/or may simply be more dedicated to, and have a genuine passion for, biomedical research. Even subtle psychological variables such as changes in the level of readiness to commit fully to a research career (Prochaska, Norcross, & DiClemente, 1995) may be better predictors of research “success.”

Limitations

The small number of studies in this review precludes any statistically based determination regarding the best faculty development program and/or mechanism(s) for biomedical researchers. Our examination considers only biomedical research faculty, and findings may not be applicable to those in disciplines such as engineering, humanities and social sciences. Similarly, although we included two international studies, results may not be generalizable to biomedical research faculty in other countries. Moreover, varied and diverse programs and approaches, study methodologies, metrics and goals are commonly employed, and we could determine “success” only for those programs with clearly stated goals, objectives or desired outcomes.
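To illustrate why so few studies cannot support a statistical ranking, the sketch below applies a two-sided Fisher exact test to the most favorable contrast in Table 3: mentoring programs (four of four met their goals) against all other program types combined (seven of fifteen). This test is not part of the review itself; the choice of comparison and the function name are assumptions made purely for illustration.

```python
from math import comb

def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2 = a + b, c + d
    col1 = a + c
    n = row1 + row2
    denom = comb(n, row1)

    def prob(k):
        # Hypergeometric probability of k "met goals" outcomes in the first row.
        return comb(col1, k) * comb(n - col1, row1 - k) / denom

    lowest = max(0, row1 - (n - col1))
    highest = min(row1, col1)
    p_observed = prob(a)
    # Sum the probabilities of every table no more likely than the observed one.
    return sum(
        p for p in (prob(k) for k in range(lowest, highest + 1))
        if p <= p_observed * (1 + 1e-9)
    )

# Rows: mentoring vs. all other program types; columns: goals met vs. not met (Table 3).
p_value = fisher_exact_two_sided(4, 0, 7, 8)
print(f"Two-sided Fisher exact p-value: {p_value:.3f}")
```

Under these assumptions the p-value stays above the conventional 0.05 threshold, even though mentoring met its goals in every implementation.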

Perhaps most importantly, included studies generally did not examine what may be significant individual, psychosocial factors. These include motivation and perseverance, personal attributes such as age, gender, inherent scientific and research skills and abilities, or the effect of factors such as university prestige or non-monetary institutional incentives, including tenure.

Conclusion and Recommendations for Future Research

In terms of achieving stated goals and objectives, mentoring, and guided and participatory learning, appear to be the most successful biomedical research faculty development program approach and enabling component, respectively. However, because of a) the limited number of relevant studies, b) the wide variation in faculty development methods and approaches, c) the diverse populations considered, e.g. clinician researchers vs. full-time PhD faculty, and d) the lack of uniform, accepted metrics through which to gauge “success,” any conclusions will be limited. These weaknesses may be overcome through prospective studies involving participants with similar backgrounds, who are randomized to either receive the intervention program or serve as controls, and whose performance is assessed through standard, objective criteria such as grants or publications.

However, even with randomized studies, predictors of research “success” at the individual level remain unclear. Qualities such as motivation and commitment are possibly highly significant, and should be assessed. Future quantitative and qualitative investigations that measure previous research experience and scientific accomplishments, and explore individual characteristics and attributes, are warranted. These may employ focus groups or interviews, from which we may be able to better determine and understand those personal factors that contribute to and predict greater research competency and competitiveness, and improved career trajectory.

Acknowledgments

S.A.T., S.B-H and T.C.F. are supported in part by NIH-NIMHD grant U54MD007598 (formerly U54RR026138) and S06GM068510. T.C.F is also supported by U54 HD41748, R24DA017298 and S21MD000103.

References

- Armstrong AY, Decherney A, Leppert P, Rebar R, Maddox YT. Keeping clinicians in clinical research: The clinical research/reproductive scientist training program. Fertility and Sterility. 2009;91(3):664–666. doi: 10.1016/j.fertnstert.2008.10.029.

- Balaji RV, Knisely C, Blazyk J. Internal Grant Competitions: A New Opportunity for Research Officers to Build Institutional Funding Portfolios. Journal of Research Administration. 2007;38(2):44–50.

- Bragg EJ, Warshaw GA, Van der Willik O, Meganathan K, Weber D, Cornwall D, Leonard AC. Paul B. Beeson career development awards in aging research and U.S. medical schools aging and geriatric medicine programs. Journal of the American Geriatrics Society. 2011;59(9):1730–1738. doi: 10.1111/j.1532-5415.2011.03546.x.

- Brown AM, Morrow JD, Limbird LE, Byrne DW, Gabbe SG, Balser JR, Brown NJ. Centralized oversight of physician-scientist faculty development at Vanderbilt: Early outcomes. Academic Medicine. 2008;83(10):969–975. doi: 10.1097/ACM.0b013e3181850950.

- Bruce ML, Bartels SJ, Lyness JM, Sirey JA, Sheline YI, Smith G. Promoting the transition to independent scientist: A national career development program. Academic Medicine. 2011;86(9):1179–1184. doi: 10.1097/ACM.0b013e3182254399.

- Creswell J. Research Design. Thousand Oaks, California: Sage Publications; 2010.

- Crockett SD, Dellon ES, Bright SD, Shaheen NJ. A 25-year analysis of the American College of Gastroenterology research grant program: Factors associated with publication and advancement in academics. American Journal of Gastroenterology. 2009;104(5):1097–1105. doi: 10.1038/ajg.2009.35.

- Daley SP, Broyles SL, Rivera LM, Reznik VM. Increasing the capacity of health sciences to address health disparities. Journal of the National Medical Association. 2009;101(9):881–885. doi: 10.1016/s0027-9684(15)31034-8.

- Davis K, Brownie S, Doran F, Evans S, Hutchinson M, Mozolic-Staunton B, Van Aken R. Action learning enhances professional development of research supervisors: An Australian health science exemplar. Nursing and Health Sciences. 2012;14(1):102–108. doi: 10.1111/j.1442-2018.2011.00660.x.

- Eden J, Levit L, Berg A, Morton S. Finding what works in health care: Standards for systematic reviews. Washington, DC: National Academies Press; 2011.

- Eder M, Pierce JR Jr. Innovations in faculty development: Study of a research assistance unit designed to assist clinician-educators with research. Southern Medical Journal. 2011;104(9):647–650. doi: 10.1097/SMJ.0b013e3182294e82.

- Estape E, Laurido LE, Shaheen M, Quarshie A, Frontera W, Mays MH, White R III. A multiinstitutional, multidisciplinary model for developing and teaching translational research in health disparities. Clinical and Translational Science. 2011;4(6):434–438. doi: 10.1111/j.1752-8062.2011.00346.x.

- Gough D, Oliver S, Thomas J. An introduction to systematic reviews. London: Sage Publications; 2012.

- Gruppen LD, Yoder E, Frye A, Perkowski LC, Mavis B. Supporting medical education research quality: The Association of American Medical Colleges’ medical education research certificate program. Academic Medicine. 2011;86(1):122–126. doi: 10.1097/ACM.0b013e3181ffaf84.

- Jones DR, Mack MJ, Patterson GA, Cohn LH. A positive return on investment: research funding by the Thoracic Surgery Foundation for Research and Education (TSFRE). The Journal of Thoracic and Cardiovascular Surgery. 2011;141(5):1103–1106. doi: 10.1016/j.jtcvs.2011.03.010.

- Moher D, Liberati A, Tetzlaff J, Altman D. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. Public Library of Science (PLoS) Medicine. 2009;6(7):e1000097. doi: 10.1371/journal.pmed.1000097.

- National Institutes of Health [NIH]. Peer-review self study 2007–2008. 2008. http://enhancing-peer-review.nih.gov/meetings/NIHPeerReviewReportFINALDRAFT.pdf [accessed 15 November 2013]

- National Institutes of Health [NIH]. Individual mentored career development programs. 2011. http://grants.nih.gov/training/K_Awards_Evaluation_FinalReport_20110901.pdf [accessed 15 November 2013]

- Roy K, Roberts M, Stewart P. Research productivity and academic lineage in clinical psychology: Who is training the faculty to do research? Journal of Clinical Psychology. 2006;62(7):22. doi: 10.1002/jclp.20271.

- Rust G, Taylor V, Herbert-Carter J, Smith Q, Earles K, Kondwani K. The Morehouse faculty development program: Evolving methods and 10-year outcomes. Family Medicine. 2006;38(1):43–49.

- Santucci AK, Lingler JH, Schmidt KL, Nolan BA, Thatcher D, Polk DE. Peer-mentored research development meeting: A model for successful peer mentoring among junior level researchers. Academic Psychiatry. 2008;32(6):493–497. doi: 10.1176/appi.ap.32.6.493.

- Sax L, Hagedorn L, Arredondo M, Dicrisi F. Faculty research productivity: Exploring the role of gender and family-related factors. Research in Higher Education. 2002;43(4):423–446. doi: 10.1023/a:1015575616285.

- Schroen AT, Thielen MJ, Turrentine FE, Kron IL, Slingluff CL Jr. Research incentive program for clinical surgical faculty associated with increases in research productivity. Journal of Thoracic and Cardiovascular Surgery. 2012;144(5):1003–1009. doi: 10.1016/j.jtcvs.2012.07.033.

- Shojania KG, Sampson M, Ansari MT, Ji J, Doucette S, Moher D. How quickly do systematic reviews go out of date? A survival analysis. Annals of Internal Medicine. 2007;147(4):224–233. doi: 10.7326/0003-4819-147-4-200708210-00179.

- Strom BL, Kelly TO, Norman SA, Farrar JT, Kimmel SE, Lautenbach E, Feldman HI. The Master of Science in clinical epidemiology degree program of the Perelman School of Medicine at the University of Pennsylvania: a model for clinical research training. Academic Medicine. 2012;87(1):74–80. doi: 10.1097/ACM.0b013e31823ab5c2.

- Supino PG, Borer JS. Teaching clinical research methodology to the academic medical community: A fifteen-year retrospective of a comprehensive curriculum. Medical Teacher. 2007;29(4):346–352. doi: 10.1080/01421590701509688.

- Tulinius C, Nielsen AB, Hansen LJ, Hermann C, Vlasova L, Dalsted R. Increasing the general level of academic capacity in general practice: Introducing mandatory research training for general practitioner trainees through a participatory research process. Quality in Primary Care. 2012;20(1):57–67.

- US National Library of Medicine National Institutes of Health. PubMed. 2014. Retrieved August 12, 2014 from http://www.ncbi.nlm.nih.gov/pubmed.

- Vogler WR. An analysis of the American Cancer Society Clinical Research Training program. Journal of Cancer Education. 2004;19(2):91–94. doi: 10.1207/s15430154jce1902_8.

- Yale S, Jones M, Wesbrook SD, Talsness S, Mazza JJ. The emeritus clinical-researcher program. Wisconsin Medical Journal. 2011;110(3):127–131.