Abstract

Differences in vocabulary that children bring with them to school can be traced back to the gestures they produce at 1;2, which, in turn, can be traced back to the gestures their parents produce at the same age (Rowe & Goldin-Meadow, 2009b). We ask here whether child gesture can be experimentally increased and, if so, whether the increases lead to increases in spoken vocabulary. Fifteen children aged 1;5 participated in an 8-week at-home intervention study (6 weekly training sessions plus follow-up 2 weeks later) in which all were exposed to object words, but only some were told to point at the named objects. Before each training session and at follow-up, children interacted naturally with caregivers to establish a baseline against which changes in communication were measured. Children who were told to gesture increased the number of gesture meanings they conveyed, not only during training but also during interactions with caregivers. These experimentally-induced increases in gesture led to larger spoken repertoires at follow-up.

Keywords: vocabulary, gesture, language development, nonverbal communication, intervention

Children increase their spoken vocabularies at very different rates during the first few years of life (Bates et al., 1994; Fenson et al., 1994). The disparities in vocabulary size that result from these early differences appear to matter, as early vocabulary is a key predictor of school success (Anderson & Freebody, 1981; Dickinson & Tabors, 2001; O’Neill, Pearce, & Pick, 2004). One predictor of children’s vocabulary development in the first few years is their early gesturing. Before they speak, most children communicate using gesture and, at these ages, advances in emerging language skills can be measured through both gestured and spoken modalities (Bates, Benigni, Bretherton, Camaioni, & Volterra, 1979). Naturalistic studies have found that children’s early gesture use relates to later word learning in infancy and through preschool (Acredolo & Goodwyn, 1988; Iverson & Goldin-Meadow, 2005; Rowe & Goldin-Meadow, 2009a). For example, the greater the diversity of meanings a child conveys in gesture at 1;2, the larger the child’s vocabulary at school entry (Rowe & Goldin-Meadow, 2009b). As another example, the gesture-speech combinations children produce early in development predict the age at which they first produce two-word utterances (Iverson & Goldin-Meadow, 2005; see also Ozcaliskan & Goldin-Meadow, 2005).

Although these data underscore the point that gesture and speech form an integrated system, they do not bear on the degree to which one component of the gesture-speech system can impact the other. Does gesture merely correlate with subsequent vocabulary development, or can it play a causal role in fostering that development? Gesture has been shown to play a causal role in older children learning to solve mathematical equivalence problems. Telling 9- and 10-year-old children to gesture either prior to (Broaders, Cook, Mitchell, & Goldin-Meadow, 2007) or during (Cook, Mitchell, & Goldin-Meadow, 2008; Goldin-Meadow, Cook, & Mitchell, 2009) a math lesson facilitates their ability to profit from that lesson. In one case (Broaders et al., 2007), children were told to move their hands while explaining their responses to a set of math problems. Children complied and gestured, but the interesting result is that the gestures they produced when told to move their hands revealed knowledge about the problem that they had not previously revealed in either speech or their solutions to the problem. Having articulated this knowledge in the manual modality, the children were then more open to input from a math lesson.

In another study in which gesture was manipulated (Goldin-Meadow et al., 2009), children were not just told to move their hands, they were shown which movements to make in relation to the problem. The movements instantiated the “grouping” strategy, a strategy that results in correct answers to the problem but one that the children did not produce prior to instruction in either gesture or speech. Children taught how to move their hands to instantiate a correct grouping strategy solved significantly more problems correctly after instruction than children taught to move their hands to instantiate a partially correct grouping strategy, and than children given no instructions at all about their hands. Importantly, children’s success after training was mediated by how well they had learned the grouping strategy (as measured by whether they produced this strategy in speech on the posttest after the lesson). The grouping strategy was not explicitly mentioned in speech by either teacher or child, nor was it mentioned in gesture by the teacher in any of the conditions. Grouping was found during instruction only in the gestures that the children were taught to produce, suggesting that their new-found math knowledge came from abstracting information from their own hands. Although we do not yet understand the precise mechanism underlying the effect that learners’ gestures have on their own learning, it seems clear that producing a hand movement whose meaning is relevant to a task primes learners to take in information that has the potential to facilitate change, in this case, positive change, on the task.

Our goal here is to explore whether gesture can play the same type of causal role for young children in the early stages of language learning. Children’s own actions have been shown to direct their attention to aspects of the environment important for acquiring particular skills (for review, see Rakison & Woodward, 2008). The gestures a child produces are, in fact, actions and, when produced in a naming context, could increase attention to both the objects named and to the names themselves. Increasing attention in this way has the potential to be useful for early word learning, particularly since object names are among the first words learned and account for a majority of children’s early spoken lexicons (Fenson et al., 1994). In addition, increasing pointing gestures in labeling contexts could emphasize the pragmatic utility of pointing for the transmission of knowledge, including transmitting names for objects (Csibra & Gergely, 2009). A child’s own gesturing thus has the potential to affect the child’s word learning.

Although there is much observational research on gesture and language development during the first two years of life, very little experimental work has been done in this domain at these early ages. The one exception is a study by Goodwyn, Acredolo and Brown (2000), which provides indirect evidence that child gesture may have causal effects on child language development. Goodwyn and colleagues (2000) trained parents to model gestures for their 11-month-old infants in everyday interactions, and to teach the children to use the gestures (training condition). They found that children in the gesture training condition performed significantly better than controls on a variety of language measures assessed through age three years. Importantly, however, the study did not address the question that is the focus of the current study––was increased child gesture the cause of the experimental effects on child language? Children’s gesture was not directly observed, and effects of increases in child gesture on child speech were not tested. Thus, it is possible that the effects that parent gesture training had on child language operated through a mechanism other than child gesture.

To determine whether gesture plays a causal role in vocabulary learning, as in the math studies with older children, we need to go beyond observing the spontaneous gestures young language-learners produce and begin to manipulate their gestures. We accomplish this goal here by showing children pictures of objects, pointing to one of the pictures while labeling the object, and then telling the child to put his or her pointing finger on the picture (e.g., “that’s a dress,” said while pointing at a picture of a dress, followed by “can you put your finger here”). We compare this experimental condition (Child & Experimenter Gesture, C&EG) to two control conditions, one in which the experimenter labels the picture while pointing at it but does not instruct the child to point (Experimenter Gesture, EG), and one in which the experimenter labels the picture and does nothing else (No Gesture, NG). Our goal was to change children’s pointing behavior in interactions with the experimenter, and then examine whether this experimental manipulation had an effect on the children’s spontaneous interactions with their caregivers. The two control conditions resemble behaviors that parents typically produce while interacting with their children and thus might not be special enough to have a ripple effect outside of the experimental context. In contrast, the experimental condition involves a somewhat unusual behavior (telling children to put their finger on a picture) that might be expected to receive notice not only from the child, but also from their caregivers. We therefore examined gesturing behavior during the naturalistic caregiver-child interactions over the course of 6 weeks for both caregiver and child.1 There is reason to believe that a manipulation carried out in the lab can have far-reaching effects outside of the lab (Smith, Jones, Landau, Gershkoff-Stowe, & Samuelson, 2002).
Smith and colleagues conducted a 9-week longitudinal study on 17-month-old children and found that children’s experiences with object-naming based on shape in the lab had dramatic effects on the number of object names the children added to their vocabularies outside of the lab. Our hypothesis is that telling children to gesture in an experimental context will have a similarly powerful effect on their use of gestures and words outside of the experimental context.

Telling children to point at pictures of objects could influence word learning in a variety of ways. First, encouraging children to point at an object while the experimenter is labeling that object could focus their attention on the particular word-object link. This focused attention might be expected to increase the likelihood that children will learn the labels for the particular objects to which they pointed, but have no effect on their word learning skill overall. Alternatively, encouraging children to point at objects could focus their attention on the referential properties of pointing gestures in particular, and all gestures in general. Attending to gesture’s referential properties might be expected to improve word learning overall, although it might have little effect on learning the particular words to which the children were trained to point. Our goal was to test this second hypothesis. Note that this hypothesis depends on the pointing gesture being referential. There is, in fact, evidence for this claim from data on young language-learners. As mentioned earlier, the gesture-speech combinations a child produces early in development predict when that child will begin to combine words into a sentence. More specifically, the age at which a child first conveys information in gesture that differs from the information conveyed in the accompanying speech (e.g., “mommy” + point at hat) predicts the age at which that child will produce her first two-word utterance (“mommy hat”). Importantly, it is not just the presence of gesture that signals the onset of two-word speech, it is the meaning of the gesture taken in relation to the meaning of the accompanying word––the age at which a child first conveys the same information in gesture and the accompanying speech (e.g., “hat” + point at hat) does not predict the onset of two-word speech (Iverson & Goldin-Meadow, 2005). 
Since most of the gestures children produce during this early stage of language-learning are pointing gestures, this finding can be taken as evidence that the pointing gesture serves a referential function for young children.

In addition to these two potential mechanisms that affect the word learner directly, telling children to point at pictures of objects could also have ripple effects that shape the learning environment, thus affecting word learning indirectly. For example, the more children gesture, the more opportunities their caregivers have to respond to those gestures with targeted input; if a child points at a bird, mother could respond by saying, “that’s a bird,” thus providing the child with timely input that might facilitate learning the word “bird” (Goldin-Meadow, Goodrich, Sauer, & Iverson, 2007; Golinkoff, 1986; Masur, 1982). As another example, an increase in child gesture could evoke an increase in caregiver gesture, which might naturally bring with it an increase in caregiver speech; that increase in caregiver speech might then have an impact on child word learning (Huttenlocher, Haight, Bryk, Seltzer, & Lyons, 1991).

In summary, observational research on the relation between gesture and language development, and experimental research on the role gesture plays in learning in other cognitive domains, give us reason to believe that gesture could play a role in facilitating the transition to spoken language. If so, telling children who are at the earliest stages of word learning to gesture ought to have an impact on the words they subsequently produce. At present, however, there is no evidence that we can alter the rate at which young children gesture. The goals of this paper are thus two-fold: (i) to determine whether we can increase children’s gesturing through experimental manipulation and, if so, (ii) to determine whether this increase has a positive effect on the number of spoken words children produce in a naturalistic setting. We predict that if we can experimentally increase child gesture, we will also increase child speech.

Method

Participants

Fifteen infants (8 males) with no known medical conditions and their primary caregivers participated in the study. Families were recruited through a university-maintained infant database. We enrolled children (mean age = 1;5; range = 1;4–1;6) who met the following criteria: (1) monolingual English speaker, (2) spoken vocabulary <50 words at the start of the study, as measured by the MacArthur-Bates Communicative Development Inventory (CDI) (Fenson et al., 1993), (3) no multi-word utterances. We chose to enroll children with a spoken vocabulary of fewer than 50 words because we wanted to study children at the earliest stages of word learning (Bates et al., 1994). We did not ask caregivers whether their child was exposed to a sign language (e.g., “baby sign”). However, we did observe 4 caregivers using signs at pretest (1 C&EG, 1 EG, 2 NG). In addition to our sample of 15 infants, there were 4 children (2 C&EG, 1 EG, 1 NG) who enrolled in the study, but were unable to maintain weekly visits and are thus not included in this study.

Although we did not recruit children based on socioeconomic status (SES), families in our sample were from a limited SES range (as determined by primary caregiver education). All children in the study were middle to high SES; primary caregivers had, at minimum, some education beyond a high school degree and, at maximum, a doctorate degree (7 with education beyond high school including a bachelor’s degree; 8 with education beyond a bachelor’s degree). Most children were Caucasian and all were non-Hispanic (11 White, 1 Black or African American, 3 mixed ethnicity).

Procedure

Children received in-home experimental training once a week for 6 weeks. Two weeks after the final training session, we observed unstructured caregiver-child interaction in an in-home follow-up session. Each training session began with a 30-minute videotaped play session between the child’s primary caregiver and the child to establish a baseline against which to measure changes in the child’s communication over time. The same primary caregiver was observed at all 6 training sessions and at follow-up. Primary caregivers included 13 mothers, 1 father, and 1 grandmother. The same procedure was used for the caregiver-child interaction at follow-up as was used for each of the training sessions.

After each caregiver-child interaction, the experimenter played with the child in a videotaped 30-minute structured play segment during which training was administered. During this time, the experimenter played with a standard set of toys that included the experimental stimuli (picture books). In addition to administering the experimental manipulation with the picture books (see below), the child and experimenter participated in free play with the standard set of toys brought by the experimenter (e.g., balls, toy animals, and toy cars). If the child needed a break at any time from the picture books, we resumed play with the free play toys or, rarely, the child took a break with a parent. When not engaging in the experimental manipulation, the experimenter kept labeling and gesture production to a minimum. In all experimental conditions, children interacted with the experimenter for 30 minutes. In some cases, the experimenter had difficulty engaging the child in the picture book activity and, in these cases, the experimenter-child interaction period lasted slightly longer than 30 minutes because many breaks were taken to play with the other toys. The same experimenter was used for all sessions and all conditions.

Children were randomly assigned to one of three conditions (five per condition), with an eye toward matching the conditions on child sex and vocabulary size: Child and Experimenter Gesture (C&EG), Experimenter Gesture (EG), and No Gesture (NG). Caregivers completed the MacArthur-Bates Communicative Development Inventory (CDI) Words and Gestures form within one week prior to our first visit. We used this CDI to enroll children in the study, assign children to conditions, and used the Spoken Vocabulary and Comprehension Vocabulary measures from the CDI as pretest measures. In addition, after completing the observations, we coded the speech and gesture children produced during the caregiver-child interaction that took place prior to the first experimental session, and used these scores as pretest measures as well.

Table 1 presents pretest measures for children in each condition, two standardized measures from the CDI completed by mothers prior to session 1 (Pretest Child Spoken Vocabulary; Pretest Child Comprehension Vocabulary) and two measures from the caregiver-child interaction at session 1 (Pretest Child Speech; Pretest Child Gesture). There were no significant differences across conditions in any pretest measures (all 2-tail p-values > .34). However, our small sample size may lead to low power, which could limit our ability to detect differences on pretest measures. As a result, we control for Pretest Child Speech in our analysis of indirect effects on child speech outcome; the Pretest Child Speech measure was taken from the same observational context from which our speech outcome measure was taken and, thus, we believe, is the most appropriate covariate. In addition, as seen in Table 1, children in our experimental condition, Child and Experimenter Gesture (C&EG), had higher caregiver-reported Spoken Vocabulary and Comprehension Vocabulary scores than children in the other two conditions, Experimenter Gesture (EG) and No Gesture (NG). Even though the difference was not significant, we thought it important to check whether taking these individual differences in caregiver-reported pretest vocabulary measures into account would affect our results. We first re-ran our analyses of indirect effects on child speech outcome including Spoken Vocabulary as a covariate. We next re-ran these analyses including Comprehension Vocabulary as a covariate. In both cases, the results were unchanged. Similarly, we re-ran analyses using Pretest Child Gesture as a covariate and results were unchanged.

Table 1.

Pretest Child Speech and Gesture Measures by Condition

| Condition | Age | Standardized Measures of Child Speech: Pretest Child Spoken Vocabulary | Standardized Measures of Child Speech: Pretest Child Comprehension Vocabulary | Child Communication during Caregiver-Child Interactions: Pretest Child Speech | Child Communication during Caregiver-Child Interactions: Pretest Child Gesture |
|---|---|---|---|---|---|
| No Gesture (NG) (n=5, 3 M) | 516.80 (SD = 31.25; 491 – 567) | 20.00 (14.30) | 123.20 (48.52) | 7.40 (6.77) | 15.60 (10.16) |
| Experimenter Gesture (EG) (n=5, 3 M) | 505 (SD = 9.75; 490 – 514) | 19.80 (14.96) | 117.00 (107.46) | 11.40 (4.72) | 9.80 (2.59) |
| Child and Experimenter Gesture (C&EG) (n=5, 2 M) | 508 (SD = 11.28; 491 – 518) | 25.60 (12.08) | 188.00 (76.86) | 9.40 (6.27) | 10.00 (6.96) |

Note. The table contains means for all pretest measures; standard deviations and ranges are provided in parentheses. The standardized measures of child speech were obtained from the MacArthur-Bates Communicative Development Inventories. Age was balanced across conditions.

Experimental Manipulation

During each training session, the child and experimenter looked at two 6-page picture books. In all conditions, the experimenter provided a spoken label for one of the three pictures on each page (the target picture). The experimenter produced the label twice, pausing between each labeling utterance (e.g. “Look at the dress. That’s a dress.”). All target labels were provided when the child was attending to the page.

In the experimental condition, Child and Experimenter Gesture (C&EG), the experimenter pointed at the target picture while providing the spoken label for the picture and then asked the child to point to/put her finger on the picture. For example: Experimenter Target Utterance 1: “Look at the dress” + EXPERIMENTER POINTS AT DRESS; Experimenter Gesture Instruction: “Can you do this?” CHILD POINTS AT DRESS; Experimenter Target Utterance 2: “That’s a dress” + EXPERIMENTER POINTS AT DRESS.

We also had two control conditions in which children were not told to gesture. In the first control condition, Experimenter Gesture (EG), the experimenter pointed at the target while providing the spoken labels, but did not ask the child to point. In the second control condition, No Gesture (NG), the experimenter provided the spoken labels, but did not point at the target and did not ask the child to point. Having two control conditions allowed us to isolate the effect of seeing vs. doing gesture. We grouped the conditions into two groups as a function of Instruction-to-Gesture: Told-to-gesture (C&EG) and Not-told-to-gesture (EG, NG). In all analyses, we predict an effect of Instruction-to-Gesture.

To increase the child’s compliance with our instructions to gesture, the experimenter provided enthusiastic positive reinforcement (e.g. “good job!”) following the child’s pointing gesture in the C&EG condition. Because children in the EG and NG conditions were not being encouraged to point, they did not receive this type of reinforcement. However, they did receive positive acknowledgement (e.g., nod or “yes”) to their responses. Further, in all conditions, the experimenter was positive and engaging during book reading to encourage the child’s participation in the task. In all conditions, if the child became distracted and wanted to stop playing with the book, the experimenter tried to re-engage the child in the task and, if that failed, a break was taken during which the experimenter and child played with an item from the standard set of toys. Sometimes the child began to look away from the book before the experimenter completed producing the label for the target word. In this case, the label was repeated. Over the 6 weeks, 720 target label utterances were planned for each condition (144 for each child). Including repeats, the number of target label utterances produced for each condition was: C&EG=738, EG=748, NG=744. The mean number of target utterances produced to each child over the course of the study did not differ between conditions (Group means: C&EG = 146.80; EG = 148.60, NG = 145.00; p > .41).

Stimuli

We designed four 6-page picture books to use in training. The target labels and books were identical across conditions. We used labels that children might encounter in their everyday lives (e.g., “dress”) because the books were meant to serve as a medium within which we could teach children to gesture in a communicative context. For all children in all conditions, two books were presented at each session and we alternated books between sessions (Books A and B were shown at sessions 1, 3, 5; Books C & D were shown at sessions 2, 4, 6). Each page of the book contained three colored line drawings (Figure 1). One picture on each page was the target, and the experimenter labeled only the target. All target words were whole-object labels (i.e., not labels for the parts of the object). None of the pictures appeared on more than one page or in more than one book. The target items were selected prior to beginning the study so that they would represent a spectrum of unknown and familiar words similar to the words young children are likely to encounter in everyday interactions. The target object labels and non-target object labels were of varying levels of difficulty––some items were object labels that most 16-month-olds knew (e.g., cat), some were known by very few 16-month-olds (e.g., giraffe). We used CDI age-norms for the proportion of 16-month-old children who comprehended each lexical item to assign a difficulty level to each lexical item (Dale & Fenson, 1996). We divided words into 3 groups from “easy” (known by many children) to “hard” (known by few children), with an equal distribution across the 3 groups. Keeping these items constant across children and conditions allowed us some control over what the children saw, heard, and did, and also allowed us to explore whether any effects we might find were due to learning these words in particular, or to learning to use gesture in general. 
Over the course of the study, children were given labels for 24 target items, each presented at 3 sessions.
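The counts reported for the design (24 target items, 72 pointing opportunities per child, 144 planned label utterances per child, 720 per condition) follow directly from the numbers given in the text. The short arithmetic sketch below makes that derivation explicit; the constant names are ours and are purely illustrative.

```python
# Design constants as stated in the text; variable names are illustrative only.
N_BOOKS = 4                 # four picture books (A-D)
PAGES_PER_BOOK = 6          # one target picture per page
N_SESSIONS = 6              # weekly training sessions
BOOKS_PER_SESSION = 2       # A+B at sessions 1, 3, 5; C+D at sessions 2, 4, 6
LABELS_PER_PRESENTATION = 2 # each target is labeled twice
CHILDREN_PER_CONDITION = 5

target_items = N_BOOKS * PAGES_PER_BOOK
presentations_per_child = N_SESSIONS * BOOKS_PER_SESSION * PAGES_PER_BOOK
sessions_per_item = presentations_per_child // target_items
planned_utterances_per_child = presentations_per_child * LABELS_PER_PRESENTATION
planned_utterances_per_condition = (
    planned_utterances_per_child * CHILDREN_PER_CONDITION
)

print(target_items, presentations_per_child, sessions_per_item,
      planned_utterances_per_child, planned_utterances_per_condition)
```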

Figure 1.

Sample Stimuli Page (target item = dress).

We did not include assessments of how well the children learned the 24 words that we explicitly taught for two reasons. First, our focus was on whether the experimental manipulation would have an impact on the children’s overall word learning skills (which we measured by assessing the number of words children used in conversation with their caregivers), not on their acquisition of these particular words. Second, we thought that including a test-session probing the 24 words might encourage children to focus on the taught words to the exclusion of others. However, we were able to look at whether children in each group used the 24 target items in their spontaneous speech with caregiver during the follow-up session. Overall, children produced too few of the target items for us to systematically investigate use of the target words. Out of the 15 children, only 5 children produced target words in their spontaneous speech at follow-up, with a maximum of two words produced by any one child. Of the 5 children who produced at least 1 target word: 1 was in the NG condition, 1 was in the EG condition, and 3 were in the C&EG condition. To investigate whether our analyses of child speech outcome were influenced by the particular words taught during the training sessions, we ran our analyses predicting speech outcome again, but this time we excluded all target words. Results were unchanged. Thus, we continue to include all children’s spontaneously produced words in our measure of child speech at follow-up.

Gesture Coding

Child Gesture in Experiment measure

We coded all pointing gestures that children produced to the target pictures in response to the experimenter’s target utterances for all 6 training sessions. The child’s gesture could occur before, during, or after either of the two experimenter target utterances for that page, but the gesture was counted only if it pointed to the target item in the book. We then calculated the total number of target item presentations to which the child responded with a point. Since the child was given 72 opportunities to produce a pointing gesture over the course of the 6 sessions, possible scores ranged from 0 to 72.

Child Gesture with Caregiver measures

Using previously developed criteria for coding communicative gesture (Rowe & Goldin-Meadow, 2009a,b; Iverson & Goldin-Meadow, 2005), we coded all of the gestures that each child produced during 20 minutes of the caregiver-child interactions at sessions 1 (pretest), 3, 5, and follow-up. Data were sometimes not available for the full 30 minutes (e.g. due to scheduling problems or video error); we therefore coded the first 20 minutes for each child.

Communicative hand movements that did not involve direct manipulation of objects (e.g., twisting a jar open) or a ritualized game (e.g., patty cake) were considered gestures (Goldin-Meadow & Mylander, 1984). Head nods and shakes were also considered gestures. Children produced three kinds of gestures: (1) deictic gestures indicate concrete objects, people, or locations, e.g., pointing to a dog to indicate a dog; (2) iconic gestures depict the attributes or actions of an object via hand or body movements, e.g., flapping arms to convey a flying bird; (3) conventional gestures have forms and meanings that are prescribed by the culture, e.g., shaking the head side-to-side to convey no.

We coded each gesture for meaning and then calculated the number of unique gesture meanings (gesture types) that each child produced at each session. For example, a point at a dog is assumed to mean dog and thus counts as a gesture type. This procedure for attributing meaning to early child gesture has been used in previous studies and results in systematic patterns (Rowe & Goldin-Meadow, 2009a,b). An independent coder blind to condition membership performed gesture coding on 10% of the videos. Percent agreement between coders was 86% for identifying gestures and 95% for assigning meanings to gestures.

Our measure of Pretest Child Gesture is the number of gesture types the child produced when interacting with the caregiver at session 1. The Child Gesture with Caregiver measure is the slope of the number of gesture types each child produced across sessions 1, 3, 5, and follow-up. A slope was calculated individually for each child by performing child-specific regressions using session (1, 3, 5, follow-up) as the predictor and gesture type as outcome. Each child’s slope was the estimated regression coefficient resulting from this analysis.
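As an illustration of the child-specific regression described above, the following minimal sketch computes an ordinary least-squares slope for a single child. It is not the code used in the study. The numeric coding of the follow-up session (8, i.e., two weeks after session 6) is an assumption on our part, since the text does not state how follow-up was coded, and the gesture-type counts are made up for the example.

```python
def gesture_type_slope(sessions, gesture_types):
    """Ordinary least-squares slope of gesture types regressed on session."""
    n = len(sessions)
    mean_x = sum(sessions) / n
    mean_y = sum(gesture_types) / n
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(sessions, gesture_types))
    sxx = sum((x - mean_x) ** 2 for x in sessions)
    return sxy / sxx


# ASSUMPTION: follow-up is coded as time point 8 (two weeks after session 6).
SESSION_CODES = [1, 3, 5, 8]

# Hypothetical counts of gesture types for one child (not data from the study).
example_gesture_types = [10, 12, 15, 20]

example_slope = gesture_type_slope(SESSION_CODES, example_gesture_types)
print(round(example_slope, 3))
```

A positive slope indicates that the child's repertoire of gesture types grew over the course of the study; the slope, rather than any single session's count, then serves as the Child Gesture with Caregiver measure.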

Speech Transcription

We transcribed child speech during the same 20 minutes of caregiver-child interaction coded for gesture. Sounds that were reliably used to refer to entities, properties, or events (e.g., ‘doggie’, ‘nice’, ‘broken’), along with onomatopoeic sounds (e.g., ‘meow’, ‘choo-choo’) and conventionalized evaluative sounds (e.g., ‘oopsie’, ‘uh-oh’), were counted as words. Morphologically inflected variants of words (e.g., run, running) were considered a single type. An independent coder blind to condition membership coded half of the videos. We established reliability for our speech transcripts on 10% of transcripts. Percent agreement between coders was calculated at the utterance level and transcribers were reliable when percent agreement reached 90%.

Our measure of Pretest Child Speech is the number of unique word types the child produced when interacting with the caregiver at session 1. The Child-Speech-with-Caregiver-at-Follow-up measure, the speech outcome measure used in our analyses, is the number of unique word types the child produced when interacting with the caregiver at the follow-up session.
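To make the word-type measure concrete, the sketch below counts unique word types in a list of transcribed tokens, collapsing inflected variants onto a single type. It is only an illustration: the lemma table, the lowercasing step, and the example tokens are hypothetical, and the actual transcription conventions are those described above.

```python
def count_word_types(tokens, lemma_of=None):
    """Count unique word types, mapping inflected variants to one type."""
    lemma_of = lemma_of or {}
    types = set()
    for token in tokens:
        word = token.lower()  # ASSUMPTION: case differences are ignored
        types.add(lemma_of.get(word, word))
    return len(types)


# Hypothetical lemma table: inflected form -> base form.
HYPOTHETICAL_LEMMAS = {"running": "run", "doggies": "doggie"}

# Hypothetical tokens from one child's transcript (not data from the study).
example_tokens = ["doggie", "run", "running", "uh-oh", "Doggie", "meow"]

example_type_count = count_word_types(example_tokens, HYPOTHETICAL_LEMMAS)
print(example_type_count)
```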

Analytic Plan

We analyze our data in two parts. First, we examine instruction effects on child gesture (our mediator) and instruction effects on child speech (our outcome). Based on our a priori predictions, we used Instruction-to-Gesture group as our experimental instruction variable. Thus, analyses of instruction effects test differences between the: (1) Told-to-gesture group (the C&EG condition), and (2) Not-told-to-gesture group (i.e., both control conditions, EG and NG). Although we focus on differences between Instruction-to-Gesture groups, we did examine whether there were differences between the two control conditions, and found no significant differences between the EG and NG conditions on any of our mediator and outcome measures (all 2-tail p-values > .21). We consider possible differences between control conditions in our discussion of future directions.

Second, we test our hypothesis that increases in child gesture (our mediator) lead to increases in child speech (our outcome). We do this by testing indirect effects of our instruction (Instruction-to-Gesture group) on child speech through child gesture. To account for variability that may be attributed to differences between control conditions, we include control group assignment as a residual contrast in our indirect effects analyses. The residual contrast was not significant in any of our models (2-tail p-values > .23).

In developing this analytic plan we paid particular attention to two aspects of the study. First, we hypothesized an indirect effect of the experimental intervention on speech outcome. Because we are investigating causal effects of child gesture on child speech, we predict that the intervention will impact child speech only to the extent that it impacts child gesture. Thus, analyses of simple effects of our intervention (comparison of group means on our outcome variable) would not adequately test our hypothesis. For instance, we could observe a total effect of intervention on speech outcome, but not know whether it was child gesture that was causing the effects on child speech. Alternatively, we could not find an effect of intervention on speech outcome, but not know whether individual differences in the potency of our intervention on child gesture masked gesture effects on child speech. Second, our sample size is small and thus may be sensitive to violations of assumptions of traditional parametric statistics. To address both of these concerns, (1) in addition to testing total effects, we test our hypothesis by using indirect effects analysis, and (2) in addition to using parametric statistics, we use permutation analyses when possible.

Statistical Analyses

We analyze our data using both parametric and nonparametric methods and present results for both. To test the significance of our effects using nonparametric methods, we use permutation analysis. For permutation analysis, we perform approximate randomization tests (Good, 2000; MacKinnon, 2008). Rather than resample all possible rearrangements of our data as in a true permutation test, we set our number of random resamples at 1000 to approximate the complete randomization distribution (Hesterberg, Moore, Monaghan, Clipson, & Epstein, 2006). For all randomization tests: (1) we randomly resampled without replacement in a manner consistent with the null hypothesis (e.g., randomly reassigning conditions); then (2) for each of the 1000 resamples, we calculated the statistic of interest (e.g., mean difference between groups); and finally (3) we used the values generated in each resample to construct the permutation distribution. The proportion of values greater than or equal to the values observed in our sample is the 1-tail p-value (Hesterberg et al., 2006). For all tests involving Instruction-to-Gesture effects, we permuted Instruction-to-Gesture by randomly assigned conditions and then collapsed the permuted conditions into Instruction-to-Gesture groups: Told-to-gesture (C&EG condition) vs. Not-told-to-gesture (EG, NG conditions). Because our predictions are directional, we present 1-tail p-values for all analyses. When statistical significance differs for 2-tail tests, 2-tail p-values are reported. Unless otherwise stated, all results that are significant at alpha .05 with a 1-tail test are also significant using a 2-tail test.
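The three-step procedure described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: the function name, the random seed, and the scores are made up, and the group sizes (five children per condition) are assumed from the sample of fifteen.

```python
import numpy as np

def randomization_test(values, conditions, treated="C&EG", n_resamples=1000, seed=0):
    """Approximate randomization test for a difference in group means.

    Condition labels are shuffled (resampling without replacement) and then
    collapsed into Told-to-gesture vs. Not-told-to-gesture groups.
    Returns (observed mean difference, 1-tail p-value).
    """
    values = np.asarray(values, dtype=float)
    conditions = np.asarray(conditions)
    rng = np.random.default_rng(seed)

    def mean_diff(labels):
        told = labels == treated
        return values[told].mean() - values[~told].mean()

    observed = mean_diff(conditions)
    # Step 1 and 2: reshuffle labels, recompute the statistic each time.
    resampled = np.array([mean_diff(rng.permutation(conditions))
                          for _ in range(n_resamples)])
    # Step 3: proportion of resampled values at least as large as observed.
    p_one_tail = float(np.mean(resampled >= observed))
    return observed, p_one_tail

# Made-up scores for 15 hypothetical children (not data from the study).
conditions = ["C&EG"] * 5 + ["EG"] * 5 + ["NG"] * 5
scores = [30, 42, 25, 51, 37, 8, 12, 15, 5, 10, 9, 14, 11, 7, 19]
observed, p_value = randomization_test(scores, conditions)
print(f"mean difference = {observed:.1f}, 1-tail p = {p_value:.3f}")
```

The p-value here is the plain proportion described in the text, so it can come out as exactly zero when no resample reaches the observed value.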

Results

We hypothesize that telling children to gesture will increase child gesture and, through child gesture, increase child speech. We present findings for (1) the total effects of our experimental manipulation (Instruction-to-Gesture) on children’s gesture and speech, and (2) the indirect effects of this experimental manipulation on child speech through child gesture. The total effects analyses in Part 1 do not include any covariates. However, we provide results from specification checks that include covariates for all Part 1 analyses. The indirect effects analyses in Part 2 include Pretest Child Speech as a covariate. As mentioned earlier, if we re-run indirect effects analyses including Comprehension Vocabulary or Pretest Child Gesture as a covariate, the results are unchanged.

Part 1. Instruction Effects on Child Gesture and Speech

Instruction Effects on Gesture

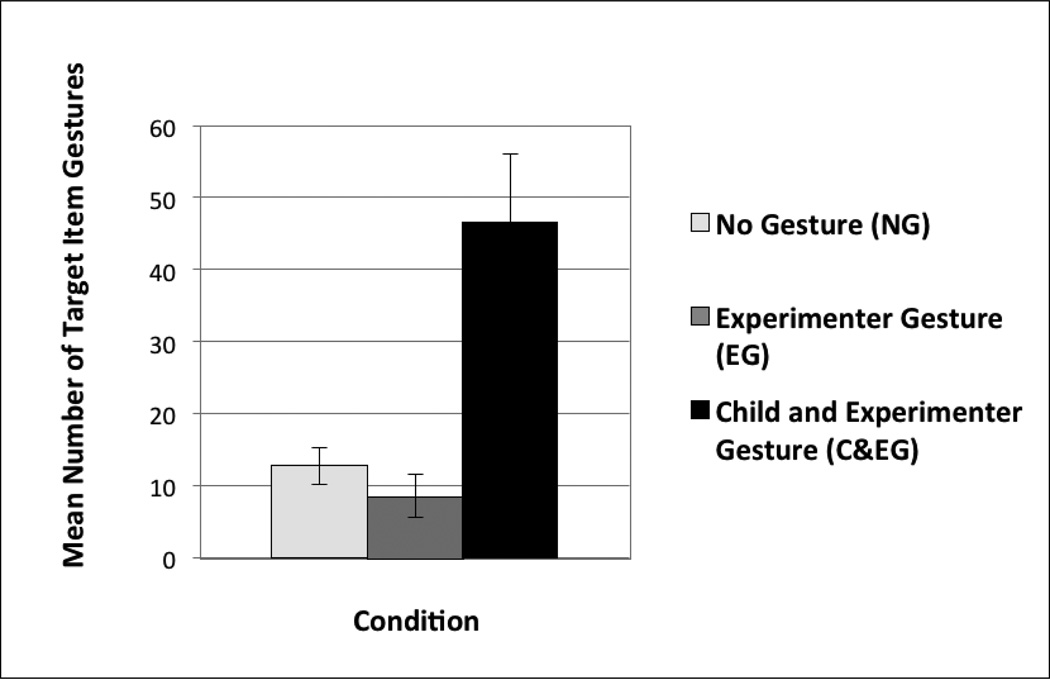

We first asked whether instructing children to gesture increased child gesture during the experiment (Figure 2). We found a significant effect of Instruction-to-Gesture on Child Gesture in Experiment, using both nonparametric [footnote 2] (Randomization test: mean difference = 35.9; p = .002) and parametric (t (13) = 5.074, p = .009) tests [footnote 3]. As predicted, children in the Told-to-gesture group (M = 46.6, SD = 21.3) produced more gestures during the experiment than children in the Not-told-to-gesture group (M = 10.7, SD = 6.3).

Figure 2.

Child Gesture with Experimenter. Mean number of gestures at targets produced by children in each condition during the 6 training sessions with the experimenter. Error bars represent standard errors of the mean.

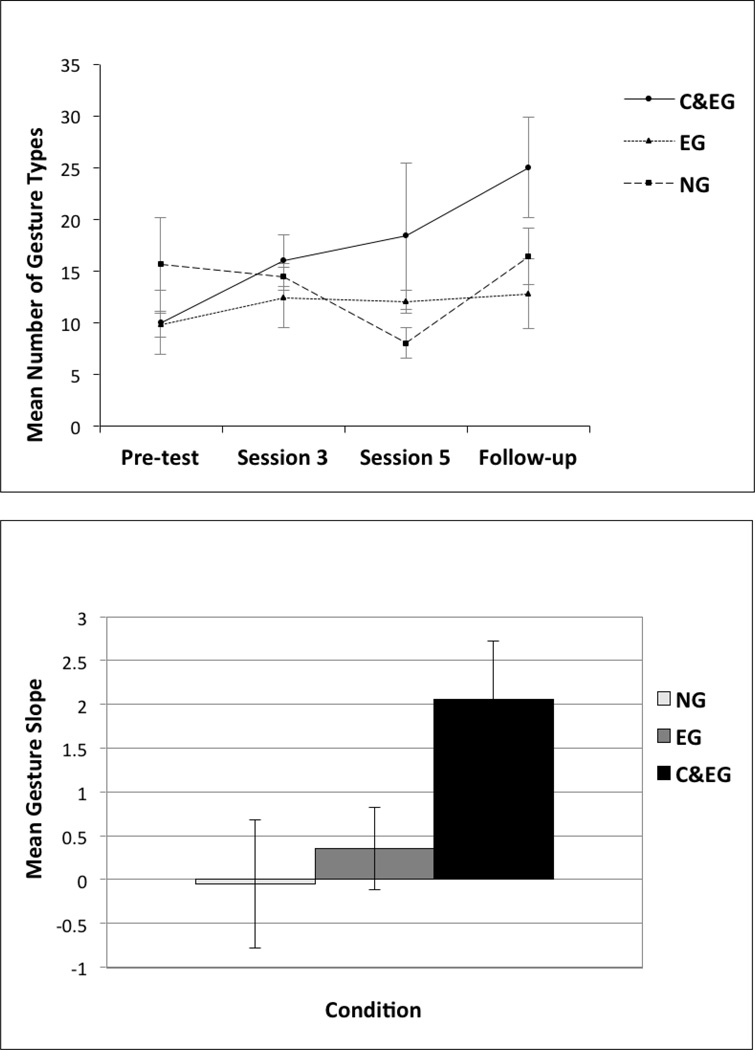

We next asked whether this effect extended beyond the experimenter-child context to interactions with the caregiver. We first counted the number of gesture types the child produced at sessions 1, 3, 5 and follow-up (Figure 3, top). We then calculated the slope of child gesture types from pretest to follow-up for each child, our Child Gesture with Caregiver measure (Figure 3, bottom), by calculating a regression line for each child using gesture types from sessions 1, 3, 5 and follow-up. There was a significant effect of Instruction-to-Gesture on Child Gesture with Caregiver, using both nonparametric (Randomization test: mean difference = 1.902, p = .015) and parametric (t (13) = 2.531, p = .013) tests [footnote 4]. As predicted, children who were Told-to-gesture (M = 2.06, SD = 1.49) had a greater increase in gesture with caregiver over the course of the study than children Not-told-to-gesture (M = 0.16, SD = 1.31).
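The per-child slope can be computed with an ordinary least-squares line, as in the sketch below. The numeric coding of the follow-up session is an assumption: it is placed at 8 because the follow-up fell two weeks after the sixth session, but the text does not state how it was coded. The counts are made up.

```python
import numpy as np

# Assumed numeric coding of the four observation points (follow-up = 8).
SESSIONS = np.array([1, 3, 5, 8], dtype=float)

def gesture_slope(type_counts, sessions=SESSIONS):
    """Least-squares slope of gesture types regressed on session."""
    counts = np.asarray(type_counts, dtype=float)
    slope, _intercept = np.polyfit(sessions, counts, deg=1)
    return float(slope)

# Made-up gesture-type counts for one hypothetical child.
child_counts = [4, 8, 12, 18]
child_slope = gesture_slope(child_counts)
print(f"slope = {child_slope:.2f} gesture types per session")
```

A different coding of the follow-up (for example 7, treating it as the next observation) would rescale every child's slope, so the choice should be fixed before comparing groups.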

Figure 3.

Child Gesture with Caregiver. Top: mean number of gesture types produced by children in each condition at sessions 1(pretest), 3, 5, follow-up. Bottom: growth in gesture types over sessions (mean slope) in each condition. Error bars represent standard errors of the mean.

To increase the stability of our gesture measure, in subsequent analyses we combine the two child gesture measures (Child Gesture in Experiment; Child Gesture with Caregiver) into one Child-Gesture-Composite score by averaging the z-score of each. Child-Gesture-Composite scores were higher for the Told-to-gesture group (M = 0.95, SD = 0.64) than for the Not-told-to-gesture group (M = −0.48, SD = 0.46). Group differences were significant using both nonparametric (Randomization test: mean difference = 1.423, p = .001) and parametric (t (13) = 4.982, p < .001) tests [footnote 5].
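A minimal sketch of the composite follows. It assumes z-scores are computed across all children using the sample standard deviation (`ddof=1`); the text does not say which standard deviation was used, and the input values are made up.

```python
import numpy as np

def zscore(x):
    """Standardize across children; sample SD (ddof=1) is an assumption."""
    x = np.asarray(x, dtype=float)
    return (x - x.mean()) / x.std(ddof=1)

def gesture_composite(gesture_in_experiment, gesture_with_caregiver):
    """Average the z-scores of the two child gesture measures."""
    return (zscore(gesture_in_experiment) + zscore(gesture_with_caregiver)) / 2.0

# Made-up values for three hypothetical children.
in_experiment = [10, 20, 30]        # gesture counts during training
with_caregiver = [0.0, 1.0, 2.0]    # slopes of gesture types over sessions
composite = gesture_composite(in_experiment, with_caregiver)
print(composite)
```

Standardizing first puts a raw count and a slope on a common scale, so neither measure dominates the average.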

As mentioned earlier, we did not find significant differences between our control conditions on our mediator and outcome measures. However, before continuing with our data analysis, we used randomization tests of differences between means to explore whether the Told-to-gesture condition differed from each of the control conditions. For Child-Gesture-Composite, scores for the Told-to-gesture condition (C&EG) significantly differed from both control conditions (EG: mean difference = 1.410; p = .002; NG: mean difference = 1.438; p < .001). Similar patterns were found for the component measures: Child Gesture in Experiment (EG: mean difference = 38; p = .001; NG: mean difference = 33.8; p < .001); Child Gesture with Caregiver (EG: mean difference = 1.7; p = .052; NG: mean difference = 2.104; p = .012). Thus, in the absence of evidence that our instruction operates differently for the two groups, we continue to follow our analytic plan of comparing groups based on Instruction-to-Gesture group. However, in Part 2 analyses, we account for any potential variability between the 2 control conditions by including condition as a residual factor.

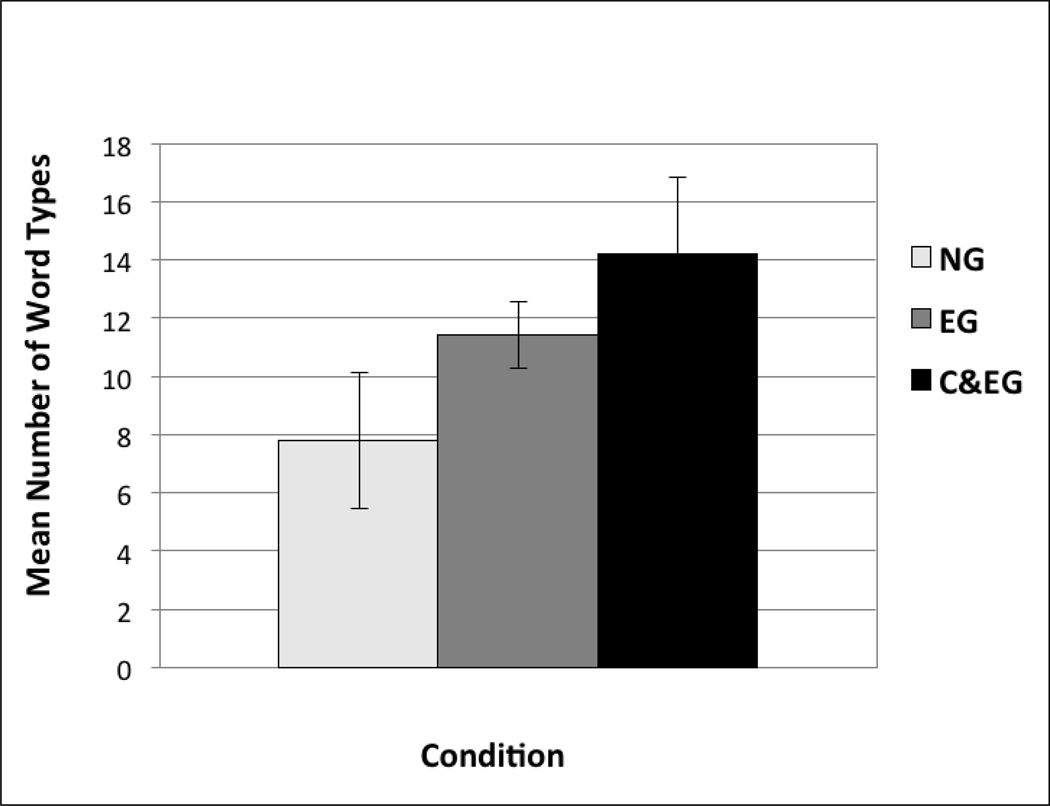

Instruction Effects on Speech

We next investigate whether Child-Speech-with-Caregiver-at-Follow-up, which took place two weeks after the final experimental session, differs for Instruction-to-Gesture groups. At follow-up, children in the Told-to-gesture group produced an average of 14.20 (SD = 5.93) word types and children in the Not-told-to-gesture group produced 9.60 (SD = 4.35) (Figure 4). These differences were marginally significant, using both nonparametric (Randomization test: mean difference = 4.6, p = .056) and parametric (t (13) = 1.717, p = .055) tests [footnote 6]. These differences were not statistically significant using a 2-tail test (Randomization test: p = .12; t-test: p = .11).

Figure 4.

Child Speech with Caregiver at Follow-up. Mean number of word types produced by children in each condition when interacting with caregiver during follow-up. Error bars represent standard errors of the mean.

Part 2. Indirect Effects on Child Speech through Child Gesture

We used Indirect Effects analysis (MacKinnon, 2008) to explore whether our experimental manipulation has an indirect effect on child speech that operates through child gesture. Our hypothesis is that our randomly assigned experimental manipulation will impact child speech only to the extent that it impacts child gesture. Thus, we predict that the effect of Instruction-to-Gesture (our treatment variable) on Child-Speech-with-Caregiver-at-Follow-up (our outcome variable) will operate through Child-Gesture-Composite (our mediator variable) [footnote 7]. As in our other analyses, we use both parametric and nonparametric tests. Nonparametric tests such as our randomization tests are useful with small sample sizes and can be used to test significance of indirect effects (MacKinnon, 2008). The Indirect Effects analysis assumes that there are no unobserved confounders correlated with both the mediator and outcome (MacKinnon, 2008). We address this assumption by controlling for potential confounders in the Indirect Effects analysis [footnote 8].

The Indirect Effects analysis we use is similar to a mediation analysis, but differs in the guidelines for evaluating the indirect effect. An indirect effects approach requires a significant path a (between treatment and mediator) and a significant path b (between mediator and outcome, controlling for treatment) but does not require a significant relation between treatment and outcome (Hayes, 2009). The estimate of the indirect effect is the product (a*b) where “a” is the unstandardized coefficient for the Treatment in the path a regression model and “b” is the unstandardized coefficient for the Mediator in the path b regression model (MacKinnon, Lockwood, & Williams, 2004; Preacher & Hayes, 2004). A significant indirect effect indicates that treatment and outcome are significantly related (albeit indirectly) and that the relation operates through the mediator.
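The two regression models behind paths a and b can be written out as follows. The symbols are our own shorthand rather than notation from the analysis itself: X is the Instruction-to-Gesture contrast, R the residual contrast, C the Pretest Child Speech covariate, M the Child-Gesture-Composite, and Y the Child-Speech-with-Caregiver-at-Follow-up measure.

```latex
\begin{align}
  M &= i_1 + a\,X + d_1 R + g_1 C + \varepsilon_1
      && \text{(path } a\text{)} \\
  Y &= i_2 + b\,M + c'\,X + d_2 R + g_2 C + \varepsilon_2
      && \text{(path } b\text{)} \\
  \text{indirect effect} &= a \cdot b
\end{align}
```

Here c' is the direct effect of treatment on the outcome after the mediator is accounted for; the approach does not require it, or the total effect, to be significant.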

We conduct our analysis in a series of three steps. In steps 1 and 2, we perform a regression of Child-Gesture-Composite on Instruction-to-Gesture (path a) and then we perform a regression of Child-Speech-with-Caregiver-at-Follow-up on Child-Gesture-Composite, controlling for Instruction-to-Gesture (path b). In steps 1 and 2 we control for Pretest Child Speech [footnote 9]. We assume there are no unobserved confounders correlated with both Child-Gesture-Composite and Child-Speech-with-Caregiver-at-Follow-up, controlling for Instruction-to-Gesture and Pretest Child Speech. In all of our regression models that include Instruction-to-Gesture as a factor, we also include a residual contrast factor. Because there were three conditions in the study, we needed two orthogonal contrasts to test our experimental effects. Our Instruction-to-Gesture factor, Told-to-gesture (C&EG) vs. Not-told-to-gesture (EG, NG), captures the effect of doing gesture vs. not doing gesture. The residual contrast, EG vs. NG, captures any differences between the two control conditions and thus tests the effect of seeing (but-not-doing) gesture vs. no gesture at all. In step 3 we calculate the product a*b from the values generated in steps 1 and 2. In all steps, we tested significance using randomization tests, but also report significance from parametric tests.

In step 1, we investigate whether Instruction-to-Gesture significantly predicts Child-Gesture-Composite (path a). Our regression model predicting Child-Gesture-Composite included Instruction-to-Gesture, Pretest Child Speech, and the residual contrast. In all regression analyses, the categorical variables were coded in the following manner: Instruction-to-Gesture (C&EG = 2; EG = −1; NG = −1); residual contrast (C&EG = 0; EG = 1; NG = −1). Consistent with our findings in Part 1, we found that Instruction-to-Gesture predicts Child-Gesture-Composite score, B = 0.475, SE = 0.093, β = 0.810, p < .001. We performed randomization tests of significance by permuting the regression model outcome variable (Child-Gesture-Composite) and performing a regression analysis that included the permuted values of the outcome and the actual values of our predictor and covariates (Anderson & Legendre, 1999; Good, 2000). This method accounts for the possibility that the predictor variables are related. We find that Instruction-to-Gesture significantly predicts Child-Gesture-Composite (p < .001) [footnote 10], and thus meets our indirect effects analysis criterion of a significant path a.
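The contrast coding and the outcome-permutation test for path a can be sketched as below. The contrast codes are the ones listed in the text; everything else (function names, seed, five children per condition, and all data values) is hypothetical and serves only to make the example runnable.

```python
import numpy as np

# Contrast codes exactly as listed in the text.
INSTRUCTION = {"C&EG": 2, "EG": -1, "NG": -1}
RESIDUAL = {"C&EG": 0, "EG": 1, "NG": -1}

def design_matrix(conditions, pretest_speech):
    """Columns: intercept, Instruction-to-Gesture, residual contrast, covariate."""
    n = len(conditions)
    x = [INSTRUCTION[c] for c in conditions]
    r = [RESIDUAL[c] for c in conditions]
    return np.column_stack([np.ones(n), x, r, pretest_speech]).astype(float)

def ols_coefs(X, y):
    """Ordinary least-squares coefficients for y regressed on the columns of X."""
    coefs, *_ = np.linalg.lstsq(X, np.asarray(y, dtype=float), rcond=None)
    return coefs

def permute_outcome_test(X, y, col=1, n_resamples=1000, seed=0):
    """1-tail p-value for coefficient `col`, shuffling only the outcome."""
    rng = np.random.default_rng(seed)
    y = np.asarray(y, dtype=float)
    observed = ols_coefs(X, y)[col]
    # Predictors and covariates keep their actual values in every resample.
    null = np.array([ols_coefs(X, rng.permutation(y))[col]
                     for _ in range(n_resamples)])
    return float(observed), float(np.mean(null >= observed))

# Synthetic values for 15 hypothetical children (not data from the study).
conditions = ["C&EG"] * 5 + ["EG"] * 5 + ["NG"] * 5
pretest_speech = [3, 5, 2, 6, 4, 4, 2, 5, 3, 6, 5, 3, 4, 6, 2]
composite = [1.2, 0.8, 0.5, 1.5, 0.9, -0.4, -0.6, -0.2, -0.7, -0.3,
             -0.5, -0.8, -0.1, -0.6, -0.7]

X = design_matrix(conditions, pretest_speech)
path_a, p_value = permute_outcome_test(X, composite)
print(f"path a = {path_a:.3f}, 1-tail p = {p_value:.3f}")
```

With equal group sizes the two contrasts are orthogonal (2·0 + (−1)·1 + (−1)·(−1) = 0), so the residual contrast absorbs any EG vs. NG difference without changing the Instruction-to-Gesture estimate.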

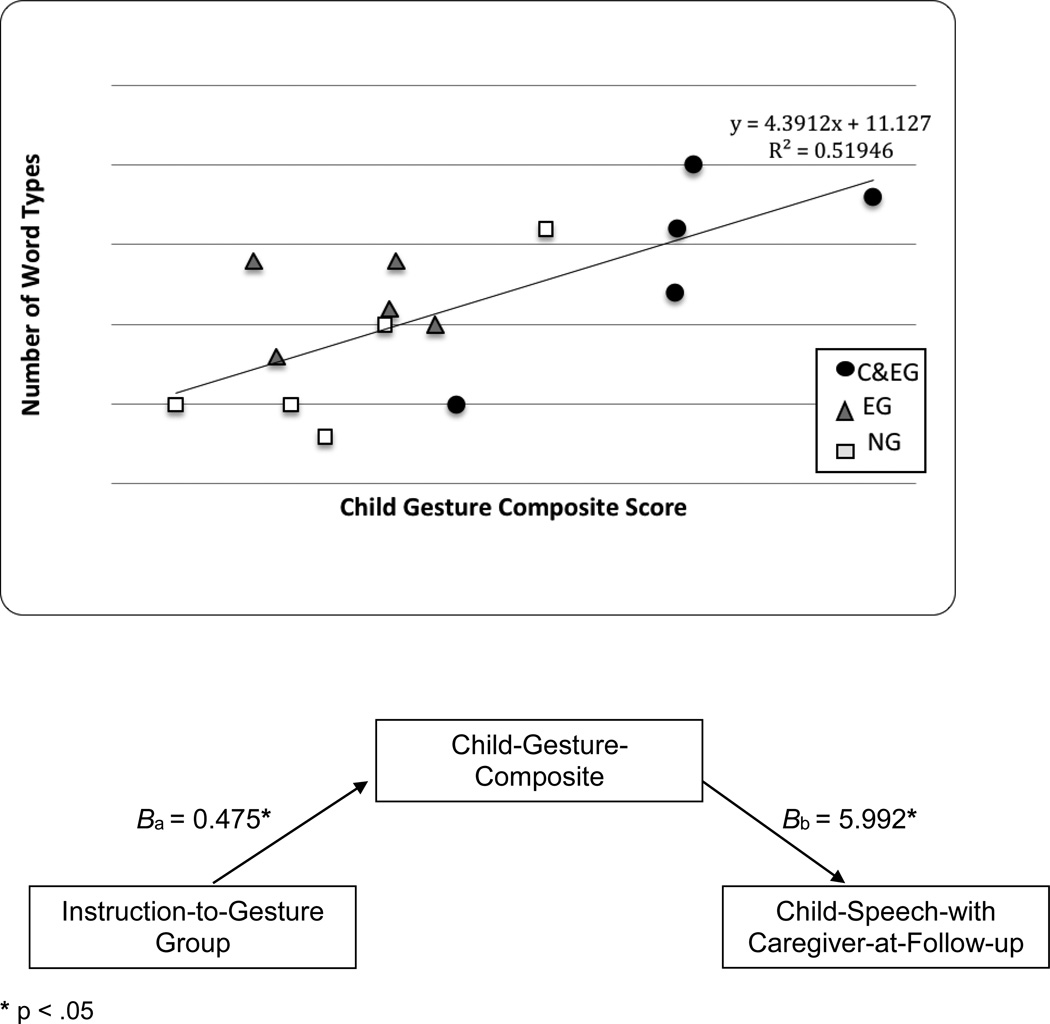

In step 2, we investigated whether Child-Gesture-Composite relates to Child-Speech-with-Caregiver-at-Follow-up, controlling for our experimental manipulation, Instruction-to-Gesture (path b). Our regression model predicting Child-Speech-with-Caregiver-at-Follow-up included the following predictors: Child-Gesture-Composite, Instruction-to-Gesture, Pretest Child Speech, and the residual contrast. Child-Gesture-Composite predicted Child-Speech-with-Caregiver-at-Follow-up, B = 5.992, SE = 2.074, β = 0.984, p = .008. We performed randomization tests of significance by randomly permuting our regression model outcome variable (Child-Speech-with-Caregiver-at-Follow-up), and then we regressed our permuted outcome variable on the actual values of our predictors. Child-Gesture-Composite was a significant predictor of Child-Speech-with-Caregiver-at-Follow-up (p = .021), thus meeting the indirect effects analysis criterion of a significant path b. Children with higher Child-Gesture-Composite scores produced more word types at follow-up than children with lower scores (Figure 5, top).

Figure 5.

Predicting Child Speech. Top: mean number of word types children in each condition produced when interacting with the caregiver during follow-up, as a function of each child’s gesture composite. Bottom: indirect effects analysis showing that the experimental manipulation (Instruction-to-Gesture) had an effect on child speech (Child-Speech-with-Caregiver-at-Follow-up) through child gesture (Child-Gesture-Composite).

In step 3, we tested our prediction that there was an indirect effect of experimental manipulation (Instruction-to-Gesture) on outcome (Child-Speech-with-Caregiver-at-Follow-up) that operates through child gesture (Child-Gesture-Composite). We estimated the indirect effect by calculating the product a*b for our sample (a*b = 2.846) and then testing significance with randomization tests. To do so, we recalculated paths a and b for each iteration and then calculated the product a*b using the coefficients generated for paths a and b in that iteration (MacKinnon, 2008). We generated the coefficients for path a and path b as follows: we permuted Child-Gesture-Composite and Child-Speech-with-Caregiver-at-Follow-up and used these permuted values in the path a and path b regression models presented earlier. We used actual values for the remaining variables in the models. We found a significant indirect effect of Instruction-to-Gesture on Child-Speech-with-Caregiver-at-Follow-up through Child-Gesture-Composite (p < .001) (Figure 5, bottom).
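As a quick arithmetic check, the product of the two reported unstandardized coefficients, 0.475 × 5.992 ≈ 2.846, agrees with the reported a*b. The randomization test of that product can be sketched as follows. This is an illustration only: it assumes the mediator and outcome are shuffled independently in each resample, which the text does not specify, and all names and data values are hypothetical.

```python
import numpy as np

def coef(predictors, y, col):
    """OLS coefficient at index `col` (0 = intercept) for y on [1, predictors]."""
    X = np.column_stack([np.ones(len(y))] + list(predictors)).astype(float)
    beta, *_ = np.linalg.lstsq(X, np.asarray(y, dtype=float), rcond=None)
    return float(beta[col])

def indirect_effect(x, m, y, covariates):
    """Product a*b from the path a and path b regressions."""
    a = coef([x, *covariates], m, col=1)     # mediator on treatment
    b = coef([m, x, *covariates], y, col=1)  # outcome on mediator, controlling treatment
    return a * b

def indirect_randomization_test(x, m, y, covariates, n_resamples=1000, seed=0):
    """Return (observed a*b, 1-tail p-value) from shuffled mediator and outcome."""
    rng = np.random.default_rng(seed)
    m = np.asarray(m, dtype=float)
    y = np.asarray(y, dtype=float)
    observed = indirect_effect(x, m, y, covariates)
    # Assumption: mediator and outcome are permuted independently each time;
    # treatment and covariates keep their actual values.
    null = np.array([
        indirect_effect(x, rng.permutation(m), rng.permutation(y), covariates)
        for _ in range(n_resamples)
    ])
    return observed, float(np.mean(null >= observed))

# Synthetic data for 15 hypothetical children (not data from the study).
x = np.array([2] * 5 + [-1] * 10, dtype=float)             # Instruction-to-Gesture
r = np.array([0] * 5 + [1] * 5 + [-1] * 5, dtype=float)    # residual contrast
c = np.array([3, 5, 2, 6, 4, 4, 2, 5, 3, 6, 5, 3, 4, 6, 2], dtype=float)
m = np.array([1.2, 0.8, 0.5, 1.5, 0.9, -0.4, -0.6, -0.2, -0.7, -0.3,
              -0.5, -0.8, -0.1, -0.6, -0.7])
noise = np.array([0.3, -0.2, 0.1, -0.4, 0.2, -0.1, 0.3, -0.3, 0.2, 0.0,
                  0.1, -0.2, 0.4, -0.1, -0.3])
y = 10 + 5 * m + noise   # outcome built to depend on the mediator

ab, p_value = indirect_randomization_test(x, m, y, [r, c])
print(f"a*b = {ab:.3f}, 1-tail p = {p_value:.3f}")

# Check on the coefficients reported in the text.
reported_product = 0.475 * 5.992
```

Recomputing both paths inside every resample matters: testing a and b separately and multiplying the p-values would not give the null distribution of the product.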

To summarize, we were able to experimentally increase the rate at which children gestured and, by doing so, increase the number of different words they produced in spontaneous interactions with their caregivers. Our data permit us to ask one further question––did the caregivers play a role in increasing child gesture? Most of the caregivers watched the experimenter as she interacted with the child and thus might have, on their own, encouraged the children to gesture, or they may have increased their own gesture in response to the child’s increase in gesture, thus reinforcing gesturing as a means of communication. We explore here whether any increases in caregiver gesture might have played a role in our effects on child gesture and child speech. If parents play a role in our effects, we would predict an effect of Instruction-to-Gesture on caregiver gesture and a relation between caregiver gesture and child gesture. However, if our effect is still operating through the child’s own gesture production, we would find that child gesture remains a significant predictor of child speech when accounting for caregiver gesture.

To explore this possibility, we coded the gestures caregivers produced during the 20 minutes of caregiver-child interactions at sessions 1, 3, 5, and follow-up using the same system used for the children, and calculated the number of gesture types per session. We first examined whether differences in caregiver gesture at pretest may be underlying our findings. There were no significant differences between conditions in mean caregiver gesture at pretest (session 1): C&EG: 20.40 (SD = 2.61); EG: 20.20 (SD = 1.79); NG: 21.20 (SD = 6.02) (ANOVA: F (2) = 0.091, p = .457; Kruskal-Wallis: K (2) = 0.270, p = .437). In addition, caregiver gesture at pretest does not relate to Child Gesture Composite (p-values for parametric tests and randomization tests > .38). Lastly, if we re-run our series of indirect effects analyses using caregiver gesture at pretest as a covariate instead of Pretest Child Speech, we obtain a similar pattern of results (all paths were significant at p < .01 using parametric and randomization analyses; the indirect effect was significant using the randomization test at p < .001).

We next calculated the slope of caregiver gesture production by performing caregiver-specific regressions using session (1, 3, 5, follow-up) as the predictor and gesture type as outcome. Each caregiver’s slope was the estimated regression coefficient resulting from this analysis. We used slope of caregiver gesture production as our measure of Caregiver Gesture. Mean Caregiver Gesture slopes were: 2.30 (SD = 1.29) C&EG; 0.05 (SD = 1.08) EG; −0.13 (SD = 1.21) NG. We found a significant effect of Instruction-to-Gesture on Caregiver Gesture in a model that also included the residual contrast and caregiver gesture at pretest as covariates (B = 0.767, SE = 0.211, p = .004; Randomization test p = .001). Caregiver Gesture also significantly related to our Child Gesture Composite measure (B = 0.387, SE = 0.104, p = .003; Randomization test p < .001). Importantly, however, the increases in caregiver gesture did not account for the increases in child speech: including Caregiver Gesture as a covariate, Child Gesture Composite still significantly related to Child Speech with Caregiver at Follow-up (B = 4.951, SE = 1.732, p = .014; Randomization test p = .011). The increases we see in caregiver gesture are nonetheless important as they suggest that we can, with little effort, engage caregivers in attempts to manipulate young children’s gestures.

Discussion

In summary we found (i) we can increase infant (age 1;5) gesture through experimental manipulation, and (ii) this increase in child gesture has a positive effect on the number of different words children use in spontaneous interactions with their caregivers. We discuss each point in turn.

Increasing Child Gesture through Experimental Manipulation

Our study adds to the literature on early child gesture by demonstrating that it is possible to experimentally manipulate typically developing child pointing in an experimental context, and that these experimental effects generalize to children’s spontaneous gesturing in naturalistic interactions with caregivers. Compared to other studies (e.g. Goodwyn et al., 2000), our experimenter-administered intervention was relatively short and involved little time commitment—the intervention lasted 6 weeks, with one 30-minute experimental session per week. The finding that gesture is malleable in children and can be manipulated by non-caregivers may have implications for the design of studies aimed at increasing child gestural communication not only in typically developing children, but also in children with developmental delays (e.g. Yoder & Warren, 2002). For both typically and atypically developing children, simply being able to increase infant gestural communication may have positive cascading consequences for learning (e.g., through the effect child gesture has on interactions with others, as discussed later). Lastly, although all children in the group that was told to gesture had larger increases in gesture than the control group children (with the exception of one child in the NG condition; Figure 5 top), there were individual differences in the potency of our intervention on child gesture. The fact that our manipulation did not have a uniform effect on child gesture (and that children in the control condition were not prevented from gesturing and thus could, and in some cases did, gesture) may account, at least in part, for the fact that the indirect effect of instruction through gesture was significant but the direct effect was only marginal. Future work is needed to investigate ways to increase the potency of our experimental manipulation.

Increases in Gesture Lead to Increases in Spoken Words

Our study provides evidence that gesture can be used to facilitate early spoken word use. We were able to experimentally increase the rate at which children gestured and, by doing so, increase the number of different words they produced in naturalistic interactions with their caregivers. Unfortunately, we did not have assessments of the children’s comprehension vocabularies at the end of the study, and thus do not know whether our manipulations affected the number of different words the children had in their repertoires, or the number of different words they used when talking to their caregivers at home. Future work is needed to determine precisely where gesture has its effects.

Why did our instruction to gesture have an impact on the number of words children produced? Multiple mechanisms may underlie our findings. First, we found that the caregivers of children who were instructed to gesture increased their own gesturing over the course of the study, either because they discovered the importance of gesture from watching the experimenter, or because they responded to increases in their child’s gestures with increases in their own. An increase in caregiver gesture might bring with it an increase in caregiver speech. Increased speech could, in turn, have an impact on child vocabulary, particularly since the amount of talk parents direct to their children has been found to affect the size of the child’s vocabulary (Huttenlocher et al., 1991).

A second possibility is that the increase in child gesture gives parents more opportunities to provide their children with targeted speech input. Parents’ translations of their children’s gestures (e.g., saying, “yes, that’s a dog,” in response to a child’s point to a dog) have been found to relate to child vocabulary (Goldin-Meadow et al., 2007; Golinkoff, 1986; Masur, 1982). The children in our study who were told to gesture increased the number of gestures they produced and thus gave their caregivers more opportunities for these translations. If caregivers respond to their children’s gestures by providing a label for the object that is pointed at, not only are they increasing the number of words they say to their children, but the words are produced at just the moment when the child is focused on the relevant object––a teachable moment.

These first two possibilities grow out of the fact that gesture is a communicative tool. Increasing the child’s use of the tool has the potential to affect the kinds of communications and interactions the child has with others, which, in turn, can shape the child’s language-learning environment. In other words, gesture affects learning indirectly through its impact on the learning environment.

The third possibility is that increasing child gesture affects learning directly by having an impact on child cognition. Experimental research shows that children’s actions can influence cognitive development (Campos et al., 2000; Campos, Bertenthal, & Kermoian, 1992; Rakison & Woodward, 2008), as can their gestures (Broaders et al., 2007; Cook et al., 2008; Goldin-Meadow et al., 2009). In our study, gesturing to the target pictures in the context of labeling may have focused children’s attention to objects in the environment, the labels accompanying them, and/or object-word relations (Clark, 1978; Goldfield & Reznick, 1990; Werner & Kaplan, 1963). Gesturing also gives children practice in referring to objects, which could encourage them to seek out information relevant to word learning. Children’s active engagement in the bidirectional labeling context when told to gesture may also enrich their understanding of the function of gesture, which could have beneficial consequences for children’s vocabulary development (Csibra & Gergely, 2009; Tomasello, Carpenter, & Liszkowski, 2007; Woodward & Guajardo, 2002; Yoon, Johnson, & Csibra, 2008).

Future Directions

As discussed earlier, we found individual differences in the magnitude of our effects on child gesture, leading us to ask whether there are other factors, in addition to our experimental instructions, that could have influenced child gesture in our experiment. For example, individual caregivers may have facilitated our intervention. In all conditions, 80% of caregivers observed the experimenter interact with the child during at least one session and thus might have, on their own, begun to employ those same behaviors in their everyday interactions with their children. Caregivers in the Told-to-gesture group did, in fact, increase their own gestures, which could serve to reinforce gesturing as a means of communication and thus enhance the impact of our manipulation. One possibility for future studies is to enlist parents, either explicitly (as was done in Goodwyn et al.’s, 2000, study) or implicitly (as may have happened in our study) as “experimenters” to administer gesture interventions. Positive relations have been observed between caregiver gesture and infant gesture (Namy, Acredolo, & Goodwyn, 2000; Rowe & Goldin-Meadow, 2009b), and future research with larger samples is needed to deepen our understanding of the role that caregivers are able to play in increasing child gesture. Considering factors other than direct instruction that could influence child gesture may be useful in thinking about different ways to translate our findings into interventions.

In addition, future studies are needed to examine potential differences between our control conditions. In our Part 1 analyses, we predicted larger increases in gesture for children who were told to gesture (C&EG condition) than for children who were not told to gesture (EG and NG control conditions). Our findings supported this prediction. However, our small sample did not permit us to fully explore differences between the two control conditions. Studies with larger samples are needed to investigate whether seeing an experimenter gesture (our EG condition) itself has an effect on learning. For example, Capone and McGregor’s (2005) study of children ages 2;3 – 2;6 suggests that seeing gesture can increase performance on a word-learning task. It is worth noting, however, that their effect may have been through child gesture since a number of children in the gesture input condition spontaneously imitated the experimenter’s gesture (Capone, 2007; see also Cook & Goldin-Meadow, 2006, who found, in older children, that experimenter gesture led to increased child gesture, which, in turn, led to improved performance on a math task).

Lastly, our findings offer a potential intervention for children with speech delays and those at risk for low vocabulary. Children’s early gesture predicts speech delay (Capone & McGregor, 2004; Sauer, Levine, & Goldin-Meadow, 2010; Thal & Tobias, 1994); our findings raise the possibility that gesture can be used as a tool to prevent or lessen those delays. Intervention could also be targeted to children from low socioeconomic (SES) families who, as a group, have smaller vocabularies than children from high SES families (Hoff, 2006). Vocabulary differences that children from high vs. low socioeconomic families bring with them to school can be traced, in part, back to how the children gestured at 1;2, which, in turn, can be traced back to how their parents gestured to their children (Rowe & Goldin-Meadow, 2009b). These findings suggest that one means for reducing disparities in vocabulary may be to manipulate early gesturing in parents and children.

Acknowledgments

The research was supported in part by a grant from the National Institute of Child Health and Human Development to Susan Goldin-Meadow. We thank A. Evenson and G. Spharim for help in coding, and S. Beilock, J. Iverson, K. Kinzler, and S. Levine for comments on earlier drafts.

Footnotes

A gesture intervention in which a gesture was modeled for the child and the child was then encouraged to use it has been shown to successfully increase gesturing outside of the training context in autistic children (Ingersoll, Lewis, & Kroman, 2007). Our study asks whether the strategy also works with typically developing children.

If we use a standard non-parametric Mann-Whitney test for our four Part 1 analyses we obtain similar results.

As specification checks, we re-ran analyses of effects of Instruction-to-Gesture on Child Gesture in Experiment, this time including covariates and obtained similar results: (1) Pretest Child Speech as a covariate (B = 11.967, SE = 2.244, β = 0.815, p < .001; Randomization test: p < .001); (2) Pretest Child Gesture as a covariate (B = 12.424, SE = 2.387, β = 0.846, p < .001; Randomization test: p < .001). For both analyses, we obtain similar results if we also include the residual contrast described in Part 2.

We re-ran analyses of effects of Instruction-to-Gesture on Child Gesture with Caregiver, this time including covariates and obtained similar results: (1) Pretest Child Speech as a covariate (B = 0.634, SE = 0.253, β = 0.575, p = .014; Randomization test: p = .011); (2) Pretest Child Gesture as a covariate (B = 0.530, SE = 0.207, β = 0.480, p = .013; Randomization test: 1-tail p = .032, 2-tail p = .068). We obtain similar results if we also include the residual contrast in each analysis.

We re-ran analyses of effects of Instruction-to-Gesture on Child Gesture Composite, this time including covariates and obtained similar results: (1) Pretest Child Speech as a covariate (B = 0.475, SE = 0.090, β = 0.810, p < .001; Randomization test: p < .001); (2) Pretest Child Gesture as a covariate (B = 0.453, SE = 0.095, β = 0.773, p < .001; Randomization test: p < .001). We obtain similar results if we also include the residual contrast in each analysis.

If we include Pretest Child Speech as a covariate in this analysis, we find an effect of Instruction-to-Gesture group on Child-Speech-with-Caregiver-at-Follow-up (B = 1.533, SE = 0.812, β = 0.430, 1-tail p = .042, 2-tail p = .083; Randomization test: 1-tail p = .062, 2-tail p = .124). If we instead include Pretest Child Gesture as a covariate, there is a marginally significant effect (B = 1.333, SE = 0.889, β = 0.374, 1-tail p = .080, 2-tail p = .160; Randomization test: 1-tail p = .083, 2-tail p = .157). Results for both analyses are similar if we also include the residual contrast.

If we use the CDI-toddler form, which was administered at follow-up, as our speech outcome measure in path b, we find similar effects. Child-Gesture-Composite is a significant predictor of speech outcome, as measured by the CDI (B = 41.555, SE = 22.521, β = 0.817, 1-tail p = .0475, 2-tail p = .095; Randomization test: p = .002). The Part 1 analysis of instruction effects is not significant (t(13) = 0.394, p = .350; Randomization test: mean difference = 9.7, p = .366).

We also performed an Instrumental Variables analysis as a complementary method of testing indirect effects. In this analysis, random assignment (in our study, Instruction-to-Gesture) serves as the instrument, an approach thought to address the issue of omitted variables that could act as confounders (Angrist & Krueger, 2001). We tested the significance of the indirect effect using a randomization test, and both the 1- and 2-tail tests were marginally significant (1-tail p = .098, 2-tail p = .099). Though not conclusive, these findings suggest a causal effect of manipulated child gesture on child speech outcome, a result confirmed in our Indirect Effects Analysis.
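The logic of an instrumental variables estimate with a randomized binary instrument can be illustrated with a short sketch. It assumes the simplest case (one instrument, one mediator, no covariates), where two-stage least squares reduces to the ratio of the group difference in the outcome to the group difference in the mediator. The function names and data are hypothetical and synthetic; the source does not state which estimator or software was used.

```python
import numpy as np

def ols(X, y):
    """Least-squares coefficients for y ~ X (X already contains the intercept column)."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta

def two_stage_least_squares(instrument, mediator, outcome):
    """Stage 1: predict the mediator from the instrument.
    Stage 2: regress the outcome on the stage-1 fitted values."""
    ones = np.ones(len(instrument))
    Z = np.column_stack([ones, instrument])
    fitted = Z @ ols(Z, mediator)
    return ols(np.column_stack([ones, fitted]), outcome)[1]

def wald_ratio(instrument, mediator, outcome):
    """Group difference in outcome divided by group difference in mediator."""
    z = instrument.astype(bool)
    return (outcome[z].mean() - outcome[~z].mean()) / (
        mediator[z].mean() - mediator[~z].mean()
    )

# Synthetic data for illustration only; these are NOT the study's values.
rng = np.random.default_rng(2)
instruction = np.array([1] * 8 + [0] * 7)                  # randomized instrument
gesture = 1.0 + 1.5 * instruction + rng.normal(size=15)    # manipulated mediator
speech = 0.8 * gesture + rng.normal(size=15)               # outcome

iv_estimate = two_stage_least_squares(instruction, gesture, speech)
wald_estimate = wald_ratio(instruction, gesture, speech)
print(f"2SLS = {iv_estimate:.3f}, Wald ratio = {wald_estimate:.3f}")
```

The two estimates coincide in this simple setting, which is why random assignment can stand in for the unobserved sources of variation in the mediator.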

As reported earlier, if we instead control for our parent-reported pretest child language measures (Pretest Child Spoken Vocabulary and Pretest Child Comprehension Vocabulary), we obtain similar results.

We also ran a randomization test using an alternative method of permuting the data that was similar to the one used in Part 1. We randomly permuted Instruction-to-Gesture group, but used the actual values for all other predictors and the outcome. Our findings are unchanged (1-tail p < .001).
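The permutation scheme described here, in which only the group labels are shuffled, might look like the sketch below when applied to an indirect effect. It assumes the test statistic is the product of path a (group to mediator) and path b (mediator to outcome, controlling for group). The source does not spell out the statistic, so that choice, the function names, and the synthetic data are all assumptions.

```python
import numpy as np

def coefficient(predictors, y, index):
    """OLS coefficient at position `index` (0 is the intercept) for y ~ predictors."""
    X = np.column_stack([np.ones(len(y)), *predictors])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta[index]

def indirect_effect(group, mediator, outcome):
    a = coefficient([group], mediator, 1)            # path a: group -> mediator
    b = coefficient([mediator, group], outcome, 1)   # path b: mediator -> outcome, given group
    return a * b

def permute_group_p(group, mediator, outcome, n_perm=2000, seed=0):
    """One-tailed p: shuffle group labels only; mediator and outcome keep their actual values."""
    rng = np.random.default_rng(seed)
    observed = indirect_effect(group, mediator, outcome)
    permuted = np.array([
        indirect_effect(rng.permutation(group), mediator, outcome)
        for _ in range(n_perm)
    ])
    p_one_tail = (np.sum(permuted >= observed) + 1) / (n_perm + 1)
    return observed, p_one_tail

# Synthetic data for illustration only; these are NOT the study's values.
rng = np.random.default_rng(3)
group = np.array([1] * 8 + [0] * 7)
gesture = 1.5 * group + rng.normal(size=15)
speech = 0.8 * gesture + rng.normal(size=15)

observed, p_one_tail = permute_group_p(group, gesture, speech)
print(f"indirect effect = {observed:.3f}, 1-tail p = {p_one_tail:.4f}")
```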

Contributor Information

Eve Sauer LeBarton, Department of Psychology, The University of Chicago.

Susan Goldin-Meadow, Department of Psychology, The University of Chicago.

Stephen Raudenbush, Department of Sociology and the College, The University of Chicago.

References

- Acredolo L, Goodwyn S. Symbolic gesturing in normal infants. Child Development. 1988;59:450–466.

- Anderson MJ, Legendre P. An empirical comparison of permutation methods for tests of partial regression coefficients in a linear model. Journal of Statistical Computation and Simulation. 1999;62:271–303.

- Anderson RC, Freebody P. Vocabulary knowledge. In: Guthrie JT, editor. Comprehension and Teaching: Research Reviews. Newark, DE: International Reading Association; 1981. pp. 77–117.

- Angrist JD, Krueger AB. Instrumental variables and the search for identification: From supply and demand to natural experiments. The Journal of Economic Perspectives. 2001;15(4):69–85.

- Bates E, Benigni L, Bretherton I, Camaioni L, Volterra V. The emergence of symbols. New York: Academic Press; 1979.

- Bates E, Marchman V, Thal D, Fenson L, Dale P, Reznick JS, Hartung J. Developmental and stylistic variation in the composition of early vocabulary. Journal of Child Language. 1994;21:85–123. doi: 10.1017/s0305000900008680.

- Broaders S, Cook SW, Mitchell Z, Goldin-Meadow S. Making children gesture brings out implicit knowledge and leads to learning. Journal of Experimental Psychology: General. 2007;136:539–550. doi: 10.1037/0096-3445.136.4.539.

- Campos J, Anderson D, Barbu-Roth M, Hubbard E, Hertenstein M, Witherington D. Travel broadens the mind. Infancy. 2000;1:149–219. doi: 10.1207/S15327078IN0102_1.

- Campos J, Bertenthal B, Kermoian R. Early experience and emotional development: The emergence of wariness of heights. Psychological Science. 1992;3:61–64.

- Capone N. Tapping toddlers’ evolving semantic representation via gesture. Journal of Speech, Language, and Hearing Research. 2007;50:732–745. doi: 10.1044/1092-4388(2007/051).

- Capone N, McGregor K. Gesture development: A review for clinical and research practices. Journal of Speech, Language, and Hearing Research. 2004;47:173–186. doi: 10.1044/1092-4388(2004/015).

- Capone N, McGregor K. The effect of semantic representation on toddlers’ word retrieval. Journal of Speech, Language, and Hearing Research. 2005;48:1468–1480. doi: 10.1044/1092-4388(2005/102).

- Clark R. The transition from action to gesture. In: Lock A, editor. Action, gesture, and symbol: the emergence of language. New York: Academic Press; 1978. pp. 231–257.

- Cook SW, Goldin-Meadow S. The role of gesture in learning: Do children use their hands to change their minds? Journal of Cognition and Development. 2006;7:211–232.

- Cook SW, Mitchell Z, Goldin-Meadow S. Gesturing makes learning last. Cognition. 2008;106:1047–1058. doi: 10.1016/j.cognition.2007.04.010.

- Csibra G, Gergely G. Natural pedagogy. Trends in Cognitive Sciences. 2009;13:148–153. doi: 10.1016/j.tics.2009.01.005.

- Dale PS, Fenson L. Lexical development norms for young children. Behavior Research Methods, Instruments, & Computers. 1996;28:125–127.

- Dickinson DK, Tabors PO. Beginning Literacy with Language: Young Children Learning at Home and School. Baltimore: Brookes Publishing; 2001.

- Fenson L, Dale PS, Reznick JS, Bates E, Thal D, Pethick S. Variability in early communicative development. Monographs of the Society for Research in Child Development. 1994;59 (5, Serial No. 242).

- Fenson L, Dale PS, Reznick JS, Thal D, Bates E, Hartung JP, Reilly JS. The MacArthur Communicative Development Inventories: User’s guide and technical manual. San Diego, CA: Singular Publishing Group; 1993.

- Goldfield BA, Reznick JS. Early lexical acquisition – Rate, content, and the vocabulary spurt. Journal of Child Language. 1990;17:171–183. doi: 10.1017/s0305000900013167.

- Goldin-Meadow S, Cook SW, Mitchell ZA. Gesturing gives children new ideas about math. Psychological Science. 2009;20:267–272. doi: 10.1111/j.1467-9280.2009.02297.x.

- Goldin-Meadow S, Goodrich W, Sauer E, Iverson J. Young children use their hands to tell their mothers what to say. Developmental Science. 2007;10:778–785. doi: 10.1111/j.1467-7687.2007.00636.x.

- Goldin-Meadow S, Mylander C. Gestural communication in deaf children: The effects and noneffects of parental input on early language development. Monographs of the Society for Research in Child Development. 1984;49 (3–4, Serial No. 207).

- Golinkoff RM. ‘I beg your pardon?’ – The preverbal negotiation of failed messages. Journal of Child Language. 1986;13:455–476. doi: 10.1017/s0305000900006826.

- Good P. Permutation Tests: A Practical Guide to Resampling Methods for Testing Hypotheses. 2nd ed. New York: Springer; 2000.

- Goodwyn SW, Acredolo LP, Brown C. Impact of symbolic gesturing on early language development. Journal of Nonverbal Behavior. 2000;24:81–103.

- Hayes A. Beyond Baron and Kenny: Statistical mediation analysis in the new millennium. Communication Monographs. 2009;76:408–420.

- Hesterberg T, Moore DS, Monaghan S, Clipson A, Epstein R. Bootstrap methods and permutation tests. In: Moore DS, McCabe GP, editors. Introduction to the practice of statistics. 2006.

- Hoff E. How social contexts support and shape language development. Developmental Review. 2006;26:55–88.

- Huttenlocher J, Haight W, Bryk A, Seltzer M, Lyons T. Early vocabulary growth: Relation to language input and gender. Developmental Psychology. 1991;27:236–248.

- Ingersoll B, Lewis E, Kroman E. Teaching the imitation and spontaneous use of descriptive gestures in young children with autism using a naturalistic behavioral intervention. Journal of Autism and Developmental Disorders. 2007;37:1446–1456. doi: 10.1007/s10803-006-0221-z.

- Iverson J, Goldin-Meadow S. Gesture paves the way for language development. Psychological Science. 2005;16:367–371. doi: 10.1111/j.0956-7976.2005.01542.x.

- MacKinnon DP. Introduction to Statistical Mediation Analysis. New York: Psychology Press; 2008.

- MacKinnon D, Lockwood C, Williams J. Confidence limits for the indirect effect: Distribution of the product and resampling methods. Multivariate Behavioral Research. 2004;39:99–128. doi: 10.1207/s15327906mbr3901_4.

- Masur E. Mothers’ responses to infants’ object-related gestures: Influences on lexical development. Journal of Child Language. 1982;9:23–30. doi: 10.1017/s0305000900003585.

- Namy LL, Acredolo L, Goodwyn S. Verbal labels and gestural routines in parental communication with young children. Journal of Nonverbal Behavior. 2000;24:63–79.

- O’Neill DK, Pearce MJ, Pick JL. Relation between early narrative and later mathematical ability. First Language. 2004;24:149–183.

- Ozcaliskan S, Goldin-Meadow S. Gesture is at the cutting edge of early language development. Cognition. 2005;96:B101–B113. doi: 10.1016/j.cognition.2005.01.001.

- Preacher KJ, Hayes AF. SPSS and SAS procedures for estimating indirect effects in simple mediation models. Behavior Research Methods, Instruments, & Computers. 2004;36:717–731. doi: 10.3758/bf03206553.

- Rakison D, Woodward A. New perspectives on the effects of action on perceptual and cognitive development. Developmental Psychology. 2008;44:1209–1213. doi: 10.1037/a0012999.

- Rowe M, Goldin-Meadow S. Early gesture selectively predicts later language learning. Developmental Science. 2009a;12:182–187. doi: 10.1111/j.1467-7687.2008.00764.x.

- Rowe ML, Goldin-Meadow S. Differences in early gesture explain SES disparities in child vocabulary size at school entry. Science. 2009b;323:951–953. doi: 10.1126/science.1167025.

- Sauer E, Levine S, Goldin-Meadow S. Early gesture predicts language delay in children with pre- or perinatal brain lesions. Child Development. 2010;81:528–539. doi: 10.1111/j.1467-8624.2009.01413.x.

- Smith LB, Jones SS, Landau B, Gershkoff-Stowe L, Samuelson L. Object name learning provides on-the-job training for attention. Psychological Science. 2002;13:13–19. doi: 10.1111/1467-9280.00403.

- Thal D, Tobias S. Relationships between language and gesture in normally developing and late-talking toddlers. Journal of Speech and Hearing Research. 1994;37:157–170. doi: 10.1044/jshr.3701.157.

- Tomasello M, Carpenter M, Liszkowski U. A new look at infant pointing. Child Development. 2007;78:705–722. doi: 10.1111/j.1467-8624.2007.01025.x.

- Werner H, Kaplan B. Symbol Formation: An Organismic-developmental Approach to Language and the Expression of Thought. NY: Wiley; 1963.

- Woodward A, Guajardo J. Infants’ understanding of the point gesture as an object-directed action. Cognitive Development. 2002;17:1061–1084.

- Yoder PJ, Warren SF. Effects of prelinguistic milieu teaching and parent responsivity education on dyads involving children with intellectual disabilities. Journal of Speech, Language, and Hearing Research. 2002;45:1158–1174. doi: 10.1044/1092-4388(2002/094).

- Yoon J, Johnson M, Csibra G. Communication-induced memory biases in preverbal infants. Proceedings of the National Academy of Sciences of the United States of America. 2008;105:13690–13695. doi: 10.1073/pnas.0804388105.