Abstract

Functional neuroimaging research on the neural basis of social evaluation has traditionally focused on face perception paradigms. Thus, little is known about the neurobiology of social evaluation processes based on auditory cues, such as voices. To investigate the top-down effects of social trait judgments on voices, hemodynamic responses of 44 healthy participants were measured during social trait (trustworthiness [TR] and attractiveness [AT]), emotional (happiness, HA), and cognitive (age, AG) voice judgments. Relative to HA and AG judgments, TR and AT judgments both engaged the bilateral inferior parietal cortex (IPC; area PGa) and the dorsomedial prefrontal cortex (dmPFC) extending into the perigenual anterior cingulate cortex. This dmPFC activation overlapped with previously reported areas specifically involved in social judgments on ‘faces.’ Moreover, social trait judgments were expected to share neural correlates with emotional HA and cognitive AG judgments. Comparison of effects pertaining to social, social–emotional, and social–cognitive appraisal processes revealed a dissociation of the left IPC into 3 functional subregions assigned to distinct cytoarchitectonic subdivisions. In total, the dmPFC is proposed to assume a central role in social attribution processes across sensory qualities. In social judgments on voices, IPC activity shifts from rostral processing of more emotional judgment facets to caudal processing of more cognitive judgment facets.

Keywords: fMRI, social cognition, social judgments, temporo-parietal junction, voice evaluation

Introduction

Presumably as adaptive guidance in social interaction, humans automatically engage in social trait estimations of other individuals from the first impression (Bourdieu 1979). In this context, 2 of the most influential and most studied social judgments are those on trustworthiness (TR; Winston et al. 2002; Engell et al. 2007; Baas et al. 2008; Todorov and Engell 2008; Todorov, Baron, et al. 2008; Said et al. 2009) and attractiveness (AT; Bray and O'Doherty 2007; Kim et al. 2007; Cloutier et al. 2008; Chatterjee et al. 2009). TR judgments govern generosity in economic trust games (van't Wout and Sanfey 2008) and the selection of cooperation partners in daily life (Cosmides and Tooby 1992). Similarly important, AT judgments influence human behavior beyond the choice of potential mating partners (Zebrowitz and Montepare 2008): Attractive individuals receive longer eye-contact from infants (Langlois et al. 1991), higher salaries (Frieze et al. 1991), and even lower penalties (Stewart 1980; Langlois et al. 2000).

Besides behavioral studies reporting high intercorrelation of both judgments (Todorov 2008; Todorov, Baron, et al. 2008; Todorov, Said, et al. 2008), neuroimaging research indicates a largely overlapping neural basis for both types of decisions on others (Bzdok et al. 2011; Bzdok, Langner, et al. 2012; Mende-Siedlecki et al. 2012). Furthermore, TR and AT have been described as “complex social judgments” (Adolphs 2003) involving neural components from multiple functional domains, including affective responses and rational reasoning. For example, trustworthy and attractive faces are consistently shown to recruit brain regions that also respond to basic emotions, such as the amygdala (Bzdok et al. 2011; Mende-Siedlecki et al. 2012). Given that social trait formation is delicately biased by momentary expressions of emotions (Knutson 1996), complex social assessment has been suggested to extend neural systems for emotional processing (Todorov 2008; Todorov, Said, et al. 2008; Oosterhof and Todorov 2009). On the other hand, TR and AT characterize identity traits, which are expected to remain relatively stable over time, prospecting for long-term interaction such as cooperation and mating. In contrast, judgments about basic emotions such as happiness (HA) target more time-variant features of other individuals (Ekman 1992), limiting their social impact to immediate reactions (Izard 2007). Therefore, cognitive systems may modulate emotional information during social judgment formation (Cunningham et al. 2004).

Emotional and cognitive subsystems may, however, not completely explain the neural basis of social trait judgments. To dissociate the emotional and cognitive components from complex social judgments, a recent functional magnetic resonance imaging (fMRI) study in our lab directly contrasted TR and AT judgments with 2 different control conditions, HA and age (AG) judgments, as examples for more emotional and cognitive judgments, respectively (Bzdok, Langner, et al. 2012). Since HA judgments have frequently been used to study the neural correlates of basic emotions (Adolphs et al. 1996; Dolan et al. 1996; Gorno-Tempini et al. 2001; Fusar-Poli et al. 2009; Morelli et al. 2012), they were employed as a control condition for emotional assessment during social trait judgments (Bzdok, Langner, et al. 2012). Conversely, AG judgments have been commonly used as cognitive control conditions against both emotional and social judgments (Habel et al. 2000; Gur et al. 2002; Karafin et al. 2004; Harris and Fiske 2007; Winston et al. 2007; Gunther Moor et al. 2010; Bos et al. 2012), assuming AG discrimination to involve relatively little emotional, but more cognitive features such as memory-related processes (Winston et al. 2007). The results of our previous fMRI study confirmed complex social judgments to share neural patterns with emotion (HA) as well as trait (AG) recognition. Intriguingly, TR and AT judgments showed specifically higher responses than both control conditions in dorsomedial prefrontal cortex (dmPFC) and inferior frontal gyrus (IFG), possibly due to enhanced integration of other- and self-related knowledge during social judgments (Bzdok, Langner, et al. 2012). 
That is, previous research on face judgments showed that, well in line with behavioral data, complex social judgments share features with both emotional and cognitive judgments, but also revealed that, on top of these commonalities, specific neuronal correlates of these complex social trait assessments can be identified. Taken together, the complexity of social trait judgments such as TR and AT has been suggested to comprise at least 3 components: Both judgments may involve 1) recognition of emotional states, 2) cognitive trait assessment, and most specifically, 3) integration of self-oriented values and goals. The correlates of social trait judgments could thus be dissociated from their inherent emotional and trait-related processes.

Notably, neuroimaging research including our previous study traditionally focused on face-derived judgments. This is somewhat surprising as voices not only provide information on the sender's affect and identity (Belin et al. 2004), but also on TR (Rockwell et al. 1997; Streeter et al. 1977) and AT (Hughes et al. 2004). Analogously to faces, voice decoding involves distinct neural pathways leading to conceptual processing of affect and identity in transmodal brain areas, such as IFG and posterior cingulate cortex (PCC), respectively (Campanella and Belin 2007; Belin et al. 2011). The roles of these pathways in complex social judgments on vocal stimuli, for example, TR and AT, are as yet very poorly understood. The present study, therefore, aimed at the dissection of the neural components of complex social judgments on voices, comparing social trait judgments (TR and AT) with emotional state (HA) and identity trait (AG) judgments on vocal stimuli. The paradigm including its 4 judgment conditions was designed in close analogy to our previous study on social face judgments (Bzdok, Langner, et al. 2012) to increase the comparability of both studies. In total, blood oxygenation level-dependent (BOLD) responses were measured in 44 healthy right-handed adults while they rated short everyday sentences recorded from 40 different individuals. Aiming at the isolation of top-down effects driven by the particular judgment task, the presented stimuli were balanced across judgment conditions. Thus, stimulus-driven BOLD effects were selectively partialed out when subtracting any pair of 2 judgment conditions.

Drawing parallels to facial judgments suggests the following hypotheses: Like social judgments on faces, social judgments on voices should share a neural basis with emotional and trait-related judgments. Candidate regions for such shared processing are the IFG and posterior superior temporal sulcus (pSTS), both associated with judgment tasks on vocal affect (Ethofer et al. 2006; Schirmer and Kotz 2006; Wildgruber et al. 2006). Less is known about the neural basis of auditory trait-related judgments, such as AG. Yet, the PCC, which has been observed to be more strongly activated during AG than during AT judgments (Winston et al. 2007), has been suggested to integrate identity features on a transmodal level (Campanella and Belin 2007). Moreover, social trait judgments on voices are expected to engage specific brain regions when compared with emotional or trait-related control tasks. These include the dmPFC, a transmodal region that has been proposed to reflect higher self-related processing during TR and AT judgments. Testing whether the effects during vocal judgments overlap with previous data on facial judgments might further indicate modality-independent underpinnings of social trait judgments.

Materials and Methods

Participants

Forty-four healthy, right-handed adults (21 females, 36.0 ± 11.2 years, range 21–60 years) without any neurological or psychiatric disorder or contraindications for fMRI participated in the study. All participants had normal or corrected-to-normal vision. Written informed consent, approved by the local ethics committee of the School of Medicine of the RWTH Aachen University, was obtained before entering the study.

Stimuli

In total, 40 sentences (2409 ± 356 ms) from 40 native German speaking individuals (20 females, 40.7 ± 14.9 years, range 20–83 years) were recorded, so that each sentence was spoken by a different voice. Sentences contained everyday statements of relatively neutral matter, such as “Entschuldigung, wissen Sie, wie spät es ist?” (“Excuse me, do you know what time it is?”) or “Hallo! Darf ich sie kurz stören?” (“Hello. May I bother you for a moment?”). Moreover, 4 additional sentences, spoken by further different individuals, were recorded to provide a demo set of voices for a training session before the fMRI paradigm. The experiment was run using the Presentation 14.2 software package (Neurobehavioral Systems, Inc., San Francisco, CA, USA). All auditory stimuli were recorded at a sampling rate of 44.1 kHz and presented via headphones.

Experimental Paradigm

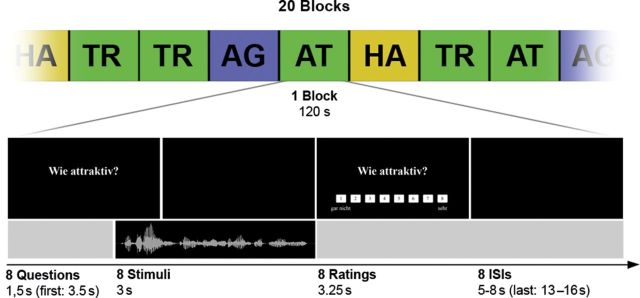

The experiment consisted of 20 blocks, with 5 blocks per condition, each block including 8 trials, resulting in 160 trials in total (Fig. 1). Importantly, “condition” refers to the judgment the subject had to make and not to different stimulus material. That is, 40 different stimuli were presented once for every judgment condition. Thus, for every condition or judgment type, the same stimulus material was used.

Figure 1.

Schematic illustration of the MRI experiment with a timeline for trials of one block. In each trial, participants were asked to rate a voice recording with respect to 1 of the 4 questions: “How trustworthy?” (TR), “How attractive?” (AT), “How happy?” (HA), and “How old?” (AG). ISI, interstimulus interval.

Every trial started with the instruction, which indicated the judgment participants had to make on the upcoming stimulus. This instruction consisted of one of the following questions (in German): “How trustworthy?,” “How attractive?,” “How happy?,” and “How old?” Within one block, participants rated stimuli regarding solely one judgment category. The instruction of the first trial of every block was displayed for 3.5 s and every subsequent trial of the block was preceded by the same question for 1.5 s. Subsequently, vocal stimuli were presented 0.5 s before the judgment question disappeared, for a maximum of 3 s. After vocal stimulus presentation, stimulus rating was prompted for 3.25 s by displaying the initial question together with an 8-point Likert scale, ranging from 1 (“not at all”) to 8 (“very”, e.g. trustworthy). Participants were instructed to judge the voices intuitively and quickly using one of the 4 long fingers of either hand. Every block was followed by a short resting baseline of 13 to 16 s, and within each block, intervals between trials were randomly jittered, varying from 5 to 8 s. Importantly, we investigated the 4 judgment conditions in separate blocks, but modeled each trial individually in an event-related manner. Whereas the block segmentation of trials reduced potential task-switching and sequence effects, the event-related analysis increased specificity by eliminating instruction frames and pauses between trials on a trial-by-trial basis.

In total, 160 trials in 20 blocks were presented to each participant. As one block of 8 trials lasted 2 min, the overall experiment lasted 40 min. Blocks were presented in a pseudorandomized order with each of the 4 judgment conditions represented in 5 blocks (40 trials). Across all blocks, all 40 stimuli were pseudorandomly presented exactly once in every judgment condition. Importantly, using identical stimulus material in all 4 conditions enabled isolation of top-down effects depending on the type of judgment, rather than the actual stimuli. Before the scan, all participants performed a short training session with a demo set of voices to ensure comprehension of the required task.
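The block structure described above can be illustrated with a minimal Python sketch. The function name `build_design`, the seed, and the plain shuffle of the block order are illustrative assumptions; the constraints of the actual pseudorandomization are not specified in the text.

```python
import random

CONDITIONS = ["TR", "AT", "HA", "AG"]
N_STIMULI = 40
BLOCKS_PER_CONDITION = 5
TRIALS_PER_BLOCK = 8


def build_design(seed=0):
    """Return 20 blocks, each a (condition, [stimulus ids]) pair."""
    rng = random.Random(seed)
    blocks = []
    for condition in CONDITIONS:
        # Every stimulus appears exactly once per judgment condition.
        stimuli = list(range(N_STIMULI))
        rng.shuffle(stimuli)
        for b in range(BLOCKS_PER_CONDITION):
            start = b * TRIALS_PER_BLOCK
            blocks.append((condition, stimuli[start:start + TRIALS_PER_BLOCK]))
    # Assumption: a plain shuffle of the block order stands in for the
    # (unspecified) pseudorandomization used in the experiment.
    rng.shuffle(blocks)
    return blocks


design = build_design()
n_trials = sum(len(stimuli) for _, stimuli in design)
print(len(design), n_trials)  # 20 160
```

Because each condition draws on the same 40 stimulus identifiers, any contrast between two conditions compares responses to identical stimulus material.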

Behavioral Data Analysis

The behavioral data obtained during the fMRI experiment were analyzed off-line using MATLAB and IBM SPSS 21.0.0. To test for significant differences in reaction times between conditions, a repeated-measures analysis of variance (ANOVA) was computed. Violations of sphericity were Greenhouse–Geisser corrected. Furthermore, correlations between stimulus ratings across different judgment conditions were tested, performing Pearson correlation analyses. All data were confirmed to be normally distributed.
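As a minimal sketch of the reaction-time analysis, the following Python code computes a one-way repeated-measures ANOVA together with the Greenhouse–Geisser epsilon. It is not the MATLAB or SPSS procedure used in the study; the function name `rm_anova` and the toy data are hypothetical.

```python
def rm_anova(data):
    """One-way repeated-measures ANOVA.

    data: one row per participant, one column per condition.
    Returns (F, df_condition, df_error, gg_epsilon); dfs are uncorrected.
    """
    n = len(data)       # participants
    k = len(data[0])    # conditions
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]

    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_cond - ss_subj

    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)

    # Sample covariance matrix of the conditions (k x k).
    cov = [[sum((row[a] - cond_means[a]) * (row[b] - cond_means[b])
                for row in data) / (n - 1)
            for b in range(k)] for a in range(k)]
    # Double-centre the covariance matrix; epsilon is the squared trace
    # divided by (k - 1) times the sum of squared entries.
    row_means = [sum(r) / k for r in cov]
    cov_mean = sum(row_means) / k
    centred = [[cov[a][b] - row_means[a] - row_means[b] + cov_mean
                for b in range(k)] for a in range(k)]
    trace = sum(centred[a][a] for a in range(k))
    sum_sq = sum(v ** 2 for r in centred for v in r)
    epsilon = trace ** 2 / ((k - 1) * sum_sq)
    return f_value, df_cond, df_error, epsilon


# Hypothetical toy data: 4 participants x 3 conditions.
toy = [[5, 6, 8], [4, 6, 7], [6, 7, 9], [5, 5, 9]]
f_value, df1, df2, eps = rm_anova(toy)
print(round(f_value, 2), round(eps * df1, 2), round(eps * df2, 2))
```

The Greenhouse–Geisser correction multiplies both degrees of freedom by epsilon before the P-value is looked up. With 4 conditions and 44 participants, the uncorrected degrees of freedom are 3 and 129.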

fMRI Image Acquisition

Imaging data were acquired on a 3-T Siemens MRI whole-body system (Siemens Medical Solutions, Erlangen, Germany) with the vendor-supplied 12-channel phased-array head coil. The BOLD signal was measured using a 2-dimensional (2D) gradient-echo echo-planar imaging (EPI) sequence with the following parameters: echo time = 30 ms; repetition time = 2200 ms; field of view = 192 × 192 mm²; within-slice pixel size = 3 × 3 mm²; flip angle = 90°. Whole-brain coverage was achieved with 36 axial slices of 3.1 mm thickness (distance factor = 15%). In total, 1088 volumes were acquired. The initial 4 of these images were dummy scans to allow for longitudinal equilibrium and were discarded before further analysis.
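As a quick consistency check, the acquisition time implied by these parameters can be computed directly. The variable names below are illustrative.

```python
TR_S = 2.2         # repetition time in seconds (2200 ms)
N_VOLUMES = 1088   # volumes acquired in total
N_DUMMY = 4        # initial dummy scans, discarded before analysis

total_minutes = N_VOLUMES * TR_S / 60
analyzed_volumes = N_VOLUMES - N_DUMMY
print(round(total_minutes, 1), analyzed_volumes)  # 39.9 1084
```

The result of roughly 40 min matches the stated duration of the experiment (20 blocks of about 2 min each).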

fMRI Image Processing

Using SPM8 (Wellcome Department of Imaging Neuroscience, London, UK; http://www.fil.ion.ucl.ac.uk/spm), the EPI images were corrected for head movement by affine registration using a 2-pass procedure, by which images were initially realigned to the first image and subsequently to the mean of the realigned images. After realignment, the mean EPI image for each subject was spatially normalized to the Montreal Neurological Institute (MNI) single-subject template (Holmes et al. 1998) using the “unified segmentation” approach (Ashburner and Friston 2005). The resulting parameters of a discrete cosine transform, which define the deformation field necessary to move the participant data into the space of the MNI tissue probability maps, were then combined with the deformation field transforming between the latter and the MNI single-subject template. The ensuing deformation was subsequently applied to the individual EPI volumes, which were thereby transformed into the MNI single-subject space and resampled at 1.5 mm isotropic voxel size. The normalized images were spatially smoothed using an 8-mm full-width at half-maximum Gaussian kernel to meet the statistical requirements for corrected inference on the general linear model and to compensate for residual macroanatomical variations. Spatial smoothing is also a necessary prerequisite for correcting the statistical inference using Gaussian random field (GRF) theory. Thresholds using the GRF theory to control corrected P-values assume that the residual field is a sufficiently smooth lattice approximation of an underlying smooth random field. Only if these requirements are met does the resel count, denoting the kernel with which an independent noise field needs to be convolved to yield the same smoothness as the residuals, become meaningful.
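To relate the smoothing kernel to the resampled voxel grid, the full-width at half-maximum (FWHM) can be converted to the standard deviation of the Gaussian via σ = FWHM / (2√(2 ln 2)). The short sketch below applies this standard relation to the 8-mm kernel and the 1.5-mm voxel size; the function name is illustrative.

```python
import math


def fwhm_to_sigma(fwhm):
    """Convert a Gaussian FWHM to its standard deviation (same units)."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


sigma_mm = fwhm_to_sigma(8.0)    # 8-mm FWHM kernel
sigma_voxels = sigma_mm / 1.5    # 1.5-mm isotropic voxels
print(f"{sigma_mm:.2f} {sigma_voxels:.2f}")  # 3.40 2.26
```

The kernel thus spans several voxels in each direction, which is what the lattice approximation of a smooth random field requires.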

fMRI Image Analysis

The fMRI data were analyzed using a general linear model as implemented in SPM8. Each experimental condition and its ratings were modeled using the stimulus onset and the time until a response was made, convolved with a canonical hemodynamic response function and its first-order temporal derivative. Low-frequency signal drifts were filtered using a cutoff period of 128 s. Parameter estimates were subsequently calculated for each voxel using weighted least squares to provide maximum-likelihood estimators based on the temporal autocorrelation of the data (Kiebel and Holmes 2003).

Besides the regressors for the experimental tasks, 2 further regressors were based on the response onset, that is, button presses with the left or right hand, to capture motor activity. These 2 motor regressors were introduced into the design to remove motor-related variance from the fMRI time series. Additionally, we included the 6 movement parameters as nuisance regressors to remove artificial motion-related signal changes. For each subject, simple main effects for each experimental condition were computed by applying appropriate baseline contrasts.
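How a task regressor of this kind is formed can be sketched in plain Python: a boxcar spanning each trial is convolved with a double-gamma hemodynamic response function and sampled once per repetition time. This is an illustration only, not the SPM8 implementation. The response shape (gamma densities with shapes 6 and 16 and an undershoot ratio of 1/6) is assumed here as the commonly cited default, and the temporal derivative and high-pass filter are omitted. All function names are hypothetical.

```python
import math


def gamma_pdf(t, shape):
    """Gamma density with unit scale; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def canonical_hrf(t):
    """Double-gamma response: early peak minus a scaled late undershoot."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def build_regressor(onsets, durations, n_scans, tr=2.2, dt=0.1):
    """Convolve a boxcar with the HRF and sample it at each scan onset."""
    n_fine = int(round(n_scans * tr / dt))
    boxcar = [0.0] * n_fine
    for onset, duration in zip(onsets, durations):
        first = int(round(onset / dt))
        last = max(int(round((onset + duration) / dt)), first + 1)
        for i in range(first, min(last, n_fine)):
            boxcar[i] = 1.0
    # HRF kernel over 32 s on the fine time grid.
    kernel = [canonical_hrf(i * dt) * dt for i in range(int(round(32.0 / dt)))]
    convolved = [0.0] * n_fine
    for i, value in enumerate(boxcar):
        if value:
            for j, h in enumerate(kernel):
                if i + j < n_fine:
                    convolved[i + j] += value * h
    return [convolved[int(round(scan * tr / dt))] for scan in range(n_scans)]


# Hypothetical single trial: stimulus at 0 s, response after 1 s.
regressor = build_regressor(onsets=[0.0], durations=[1.0], n_scans=20)
peak_scan = max(range(len(regressor)), key=lambda s: regressor[s])
print(peak_scan, round(regressor[peak_scan], 3))
```

In this sketch the predicted response peaks a few seconds after stimulus onset and then dips below baseline, which is why events are modeled with a convolved regressor rather than a raw boxcar.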

The individual first-level contrasts of interest were then fed into a second-level, random-effects ANOVA (factor: condition, blocking factor subject). In the modeling of variance components, we allowed for violations of sphericity by modeling nonindependence across images from the same subject and allowing unequal variances between conditions and subjects as implemented in SPM8. Simple main effects of each task (vs. resting baseline) as well as comparisons between experimental factors were tested by applying appropriate linear contrasts to the ANOVA parameter estimates. Composite main effects (i.e., activations, which were present in each of 2 different conditions) were tested by means of a conjunction analysis using the minimum statistic (Nichols et al. 2005). The resulting SPM(t) and SPM(F) maps were then thresholded at voxel-level P < 0.001. Secondly, based on the random field theory, statistical maps were cluster-level corrected (family-wise error (FWE) corrected for multiple comparisons, Worsley et al. 1996) at P < 0.05 (cluster-forming threshold at the voxel level: P < 0.001).
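The minimum-statistic conjunction can be sketched as follows: a voxel counts as jointly active only if its smallest t-value across all contributing contrasts exceeds the threshold. The function name, the toy t-values, and the threshold of 3.1 are hypothetical placeholders; the actual critical value depends on the degrees of freedom, and the cluster-level FWE correction is not reproduced here.

```python
def conjunction_min(t_maps, t_threshold):
    """Voxel-wise minimum over contrast t-maps and the resulting mask."""
    minimum = [min(values) for values in zip(*t_maps)]
    mask = [t > t_threshold for t in minimum]
    return minimum, mask


# Toy example: 3 contrasts, 3 voxels each.
t_maps = [
    [4.2, 1.0, 3.5],
    [3.9, 5.0, 2.0],
    [5.1, 4.4, 3.3],
]
minimum, mask = conjunction_min(t_maps, t_threshold=3.1)
print(minimum)  # [3.9, 1.0, 2.0]
print(mask)     # [True, False, False]
```

Only the first voxel survives, because the second and third fall below the threshold in at least one contrast.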

Anatomical Localization

The anatomical localizations were obtained using the SPM Anatomy Toolbox (Eickhoff et al. 2005, 2007). By means of a maximum probability map, activations were assigned to the most likely cytoarchitectonic area. These maps are based on earlier studies about cytoarchitecture, intersubject variability, and quantitatively defined borders of areas. Please note that not all areas have been cytoarchitectonically mapped, so not all activations could be assigned to a cytoarchitectonic correlate. If no observer-independent 3D delineations were available, anatomical localizations were assigned to Brodmann's areas (Brodmann 1909) to provide the highest possible level of anatomical information.

Results

Behavioral Results

A repeated-measures ANOVA indicated significant differences in reaction times between conditions (Greenhouse–Geisser corrected: F(2.27, 97.6) = 10.43; P = 3.7 × 10⁻⁵). Post hoc tests revealed significantly longer reaction times in the TR condition compared with all other conditions (TR: 1154 ± 398 ms; AT: 1051 ± 351 ms; HA: 1032 ± 367 ms; AG: 998 ± 373 ms; P < 0.001 Bonferroni corrected). Reaction times between AT, HA, and AG judgments did not differ significantly from each other (AT − HA: 19 ms, P = 1.00; AT − AG: 53 ms, P = 0.61; HA − AG: 33 ms, P = 1.00), whereas participants took significantly more time for TR judgments (TR − AT: 103 ms, P = 6.8 × 10⁻⁵; TR − HA: 122 ms, P = 2.7 × 10⁻⁴; TR − AG: 155 ms, P = 4.8 × 10⁻⁴).

Correlation analysis revealed positive correlations between ratings of TR, AT, and HA (AT − TR: r = 0.61, P = 2.7 × 10⁻⁵; TR − HA: r = 0.53, P = 4.6 × 10⁻⁴; AT − HA: r = 0.78, P < 0.1 × 10⁻⁵), whereas AG negatively correlated with AT and HA (AG − AT: r = −0.79, P < 0.1 × 10⁻⁵; AG − HA: r = −0.57, P = 1.2 × 10⁻⁴). No significant correlation was found between stimulus ratings of AG and TR (AG − TR: r = −0.10, P = 0.55). These results indicate that stimuli that were estimated to belong to older speakers tended to be rated lower in AT and HA, but not in TR judgments.

The mean number of missed responses of participants per condition did not differ significantly: On average, 0.66 responses were missed in 40 TR judgments, 0.52 in 40 AT judgments, 0.70 in 40 HA judgments, and 0.57 in 40 AG judgments. In other words, only every second participant missed about one response in each category.

To test interrater reliability, Cronbach's alphas were computed across all participants' ratings for each judgment type (α[TR] = 0.90; α[AT] = 0.89; α[HA] = 0.91; α[AG] = 0.82). Although all judgments may contain subjective features, the consistency of ratings indicates large agreement among participants in all 4 judgment conditions.
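For illustration, Cronbach's alpha can be computed from a stimulus-by-rater matrix as α = k/(k − 1) · (1 − Σ item variances / variance of the summed scores). The sketch below assumes that each participant is treated as one "item" and each stimulus as one case, which the text does not state explicitly; the toy ratings are hypothetical.

```python
def variance(values):
    """Unbiased sample variance."""
    n = len(values)
    mean = sum(values) / n
    return sum((v - mean) ** 2 for v in values) / (n - 1)


def cronbach_alpha(ratings):
    """ratings: one row per stimulus, one column per rater."""
    k = len(ratings[0])
    item_vars = [variance([row[j] for row in ratings]) for j in range(k)]
    total_var = variance([sum(row) for row in ratings])
    return k / (k - 1) * (1.0 - sum(item_vars) / total_var)


# Hypothetical ratings of 4 stimuli by 3 raters on the 8-point scale.
toy_ratings = [[2, 3, 2], [5, 5, 6], [7, 8, 7], [4, 4, 5]]
alpha = cronbach_alpha(toy_ratings)
print(round(alpha, 3))  # 0.974
```

Values close to 1 arise when raters order the stimuli similarly, even if their absolute ratings differ.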

Imaging Results

All reported fMRI results were derived from whole-brain analyses, are reported in the MNI space, and survived FWE corrected thresholding on cluster level (P < 0.05).

We implemented AG and HA judgments as 2 different control conditions to allow a more precise dissociation of the neural effects underlying explicit social trait judgments. On the one hand, brain regions involved in the judgment of basic emotions were expected to be up-regulated in metabolic activity by explicit HA judgment. On the other hand, regions stimulated by cognitive tasks, which are relatively unrelated to emotional assessment of vocal features, were meant to be specifically enhanced by explicit AG judgment.

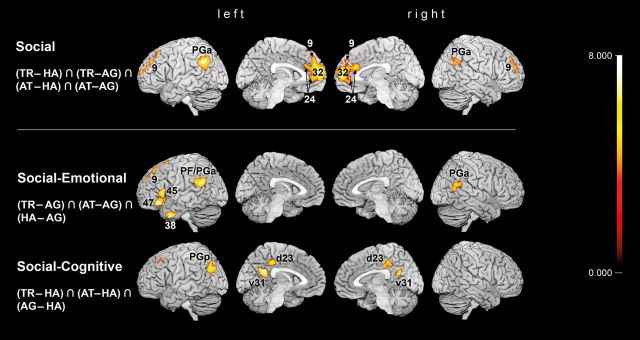

First, to delineate brain regions specifically activated during TR and AT judgments, a conjunction was computed across all contrasts comparing their hemodynamic effects (TR and AT) with those of the control conditions, that is, HA and AG judgments [(TR − HA) ∩ (AT − HA) ∩ (TR − AG) ∩ (AT − AG)]. This conjunction analysis revealed significantly higher responses of the dmPFC (BA 9), extending into the perigenual anterior cingulate cortex (BA 32, BA 24; pACC) (Vogt et al. 2003; Palomero-Gallagher et al. 2009), the left superior frontal gyrus (BA 9; SFG), and bilateral inferior parietal cortex (IPC, areas PGa bilaterally, right PGp and PFm, Caspers et al. 2008) during TR and AT compared with explicit HA or AG judgments (Table 1 and Fig. 2). In a more liberal approach, we subtracted only one of the control conditions at a time from the effects of TR and AT (see Supplementary Fig. S1). HA judgments were assumed to involve brain regions for basic emotion recognition, but less trait-related elaboration than expected in TR and AT judgments. Therefore, the conjunction [(TR − HA) ∩ (AT − HA)] allowed us to remove from the activation during social trait judgments those neural effects that relate to basic emotion recognition. In contrast, AG judgments have been suggested to involve brain regions for cognitive trait assessment, but fewer emotion-related features than social trait judgments. Hence, the conjunction [(TR − AG) ∩ (AT − AG)] aimed to dissect the neural correlates of TR and AT judgments from trait-related features. These 2 conjunctions allow a more detailed view of the common neural patterns of TR and AT judgments depending on the contrasted control condition.

Table 1.

Main effects of social judgments and shared emotional and cognitive components

| Macroanatomical location | k | Cytoarchitectonic area | x | y | z | T |
|---|---|---|---|---|---|---|
| Main effects of social judgments | | | | | | |
| [(TR − HA) ∩ (AT − HA) ∩ (TR − AG) ∩ (AT − AG)] | | | | | | |
| L dmPFC (extending into right hemisphere) | 6638 | | −4 | 58 | 9 | 7.04 |
| L inferior parietal cortex | 1603 | IPC PGa | −51 | −56 | 36 | 6.91 |
| L superior frontal gyrus | 486 | | −22 | 52 | 24 | 4.64 |
| R inferior parietal cortex | 370 | IPC PGp | 45 | −60 | 30 | 4.35 |
| | | IPC PGa | 56 | −58 | 36 | 3.80 |
| | | IPC PFm | 56 | −51 | 32 | 3.49 |
| Overlapping effects during social and emotional judgments | | | | | | |
| [(TR − AG) ∩ (AT − AG) ∩ (HA − AG)] | | | | | | |
| L inferior parietal cortex | 1030 | IPC PFm | −57 | −54 | 27 | 5.91 |
| | | IPC PFcm | −52 | −44 | 27 | 4.76 |
| L inferior frontal gyrus | 997 | | −51 | 32 | −14 | 6.26 |
| | | Area 45 | −58 | 22 | 8 | 5.42 |
| L superior frontal gyrus | 972 | | −14 | 28 | 54 | 5.59 |
| L temporal pole | 468 | | −50 | 8 | −38 | 6.11 |
| R inferior parietal cortex (extending into pSTS) | 527 | IPC PGa | 60 | −60 | 14 | 4.40 |
| | | IPC PFm | 63 | −50 | 22 | 3.84 |
| Overlapping effects during social and cognitive judgments | | | | | | |
| [(TR − HA) ∩ (AT − HA) ∩ (AG − HA)] | | | | | | |
| L posterior cingulate cortex (extending into right hemisphere) | 960 | | −8 | −51 | 22 | 5.72 |
| L inferior parietal cortex | 536 | IPC PGp | −45 | −70 | 32 | 4.62 |
| L posterior cingulate cortex (extending into right hemisphere) | 535 | | −4 | −36 | 39 | 4.49 |
| L superior frontal gyrus | 516 | | −26 | 24 | 45 | 4.75 |

Notes: MNI coordinates derived from respective cluster peaks (x, y, and z), cluster size (k) and T-scores (T). Locations of functional brain activity are assigned to the most probable brain areas as revealed by the SPM Anatomy Toolbox (Amunts et al. 1999; Eickhoff et al. 2005; Caspers et al. 2006).

TR, trustworthiness; AT, attractiveness; HA, happiness; AG, age judgments.

Figure 2.

Rendering of brain activations on single-subject MNI templates from top to bottom: Main effects of social judgments TR and AT compared with emotional (HA) and cognitive (AG) judgments; shared activation of social and emotional judgments; shared activation of social and cognitive judgments. As far as possible, clusters are assigned by the SPM Anatomy Toolbox (Amunts et al. 1999; Eickhoff et al. 2005; Caspers et al. 2006). All results are cluster-level corrected at P < 0.05 (cluster-forming threshold at voxel level: P < 0.001), minimal cluster sizes after cluster-level correction: [(TR − HA) ∩ (AT − HA) ∩ (TR − AG) ∩ (AT − AG)] = 370; [(TR − AG) ∩ (AT − AG) ∩ (HA − AG)] = 468; [(TR − HA) ∩ (AT − HA) ∩ (AG − HA)] = 516.

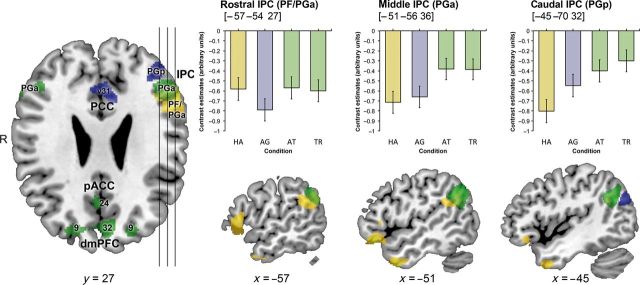

After contrasting social trait judgments with both control conditions, we tested for commonalities between social trait judgments and one control condition compared with the other. Thus, a conjunction of TR, AT, and HA relative to AG judgment effects [(TR − AG) ∩ (AT − AG) ∩ (HA − AG)] was computed to reveal brain regions that are more involved in social and emotional judgments than in relatively unaffective AG judgments. Removing the effects of AG judgments revealed a set of brain regions commonly recruited by TR, AT, and HA judgments, comprising left IFG (BA 45, BA 47), left temporal pole (BA 38; TP), left SFG (BA 9), and bilateral IPC (bilateral areas PFm, left PFcm, and right PGa), extending into pSTS in the right hemisphere. Conversely, social trait judgments were expected to share neural correlates with AG judgments, corresponding to those more cognitive aspects of these complex judgments that are less involved in emotional state evaluation. Hence, a conjunction of TR, AT, and AG judgments, contrasted with HA judgments [(TR − HA) ∩ (AT − HA) ∩ (AG − HA)], revealed a set of brain regions consisting of PCC (areas d23, v31; PCC bordering precuneus), left superior frontal sulcus, and left IPC (area PGp) (Table 1 and Fig. 2). Across the 3 conjunctions, which reveal the correlates of social trait judgments and their relations to each control condition, cytoarchitectonic assignment indicated a functional dissociation of the left IPC from rostral to caudal areas: Social trait and emotional state judgments, compared with AG judgments, elicited higher neural activation in rostral IPC (areas PFm, PFcm, and PGa), whereas caudal IPC (area PGp) was more strongly engaged during social trait and AG judgments than during HA judgments. Social trait judgments, finally, recruited central IPC area PGa more strongly than both control conditions (Fig. 4).

Figure 4.

(Left) Axial section showing functionally dissociated areas of the IPC participating in social judgments on voices; green, brain activity in social trait, relative to basic emotion and age judgments; yellow, shared activation of social trait and basic emotion judgments; blue, shared activation of social trait and age judgments. (Right) Sagittal sections through left IPC with relative BOLD changes in all 4 judgment conditions at each contrast's maximum. TR, trustworthiness; AT, attractiveness; HA, happiness judgments; AG, age judgments.

It is important to note that we aimed at the investigation of task-driven features of the judgment process, not primarily implicit, stimulus-driven components. We therefore balanced the stimuli across all 4 conditions, so that bottom-up effects are equally present in all judgment conditions and are therefore subtracted out in contrasts. Yet, interactions of top-down and bottom-up effects cannot be fully excluded, since different tasks may lead to varying regulation processes on stimulus-driven effects. Consequently, although purely stimulus-driven bottom-up effects are eliminated in our paradigm, the results may contain task-dependent interactions with stimulus information.

Discussion

This fMRI study examined the neural basis of explicit social judgment on vocal stimuli using sentences frequently occurring in everyday social interaction. Hemodynamic responses during 2 complex social trait judgments, namely TR and AT, were characterized in relation to 2 different control tasks: HA judgments as an example for emotional state assessment and AG judgment as a cognitive control condition.

This approach allowed us to specify the neuroanatomy of social trait judgments (TR and AT) and to identify those of its components that are likewise recruited during HA and AG judgments on voices.

Behavioral Data

As demonstrated for face judgments (Todorov, Said, et al. 2008; Bzdok, Langner, et al. 2012), the ratings of voices also feature high positive correlations between TR, AT, and HA judgments and negative correlations of AG with AT and HA judgments. The observation of older faces appearing less trustworthy, however, was not reproduced for judgments of voices. Robust correlations between different judgments on faces have prompted the concept that social trait judgments involve emotional (Oosterhof and Todorov 2008) and cognitive neural mechanisms (Cunningham et al. 2004). This hypothesis seems to apply to judgments on voices in a similar manner, as indicated by the behavioral results of the current study.

Stimulus Independent dmPFC in Social Trait Judgments

Comparison of the activation patterns during social trait judgments (TR and AT) with those during basic emotional (HA) and cognitive (AG) ones revealed that both social judgments elicit specific neural activity increases in the left SFG, bilateral central IPC (PGa), and dmPFC, extending into pACC. Contributions of these brain regions to social trait judgments are, therefore, unlikely to be explained by mere emotional or cognitive processes involved in HA and AG judgments, respectively.

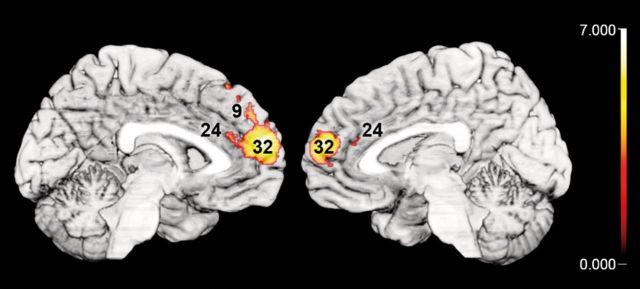

The dmPFC potentially integrates information ranging from reward value to behavioral planning on an abstract level and has been discussed in the domains of action monitoring, mental state inference, and social semantic knowledge about others and self (Amodio and Frith 2006). Among different action identification tasks, enhanced dmPFC activation was found in particular when predictions about one's own and others' goals interfered with demanding secondary tasks (Ouden et al. 2005; Spunt and Lieberman 2013). These findings indicate that the dmPFC mediates attentional resources during social reasoning. Such high-level integration of knowledge about oneself and others is likely driven by the task, independent of the sensory input. Indeed, the higher involvement of dmPFC in social trait judgments on vocal stimuli, compared with HA and AG judgments, is well in line with previous results obtained in a very similar setup but using facial stimuli (Bzdok, Langner, et al. 2012). Assuming that social trait judgments specifically recruit dmPFC on a transmodal level, we hypothesized that our present results would overlap with the previous data. To test this, we computed a conjunction between the neural correlates during social judgments on faces (earlier study) and on voices (present study). Intriguingly, this analysis revealed a topographical overlap exclusively in the dmPFC (Fig. 3).

Figure 3.

Demonstration of overlap between the main effects of social judgments (TR and AT contrasted to HA and AG judgments) on voices (this study) and on faces (Bzdok, Langner, et al. 2012). Note that congruent increases in brain activation were exclusively located in the dmPFC, as here depicted on medial views of the single-subject MNI template. All results are cluster-level corrected at P < 0.05 (cluster-forming threshold at voxel level: P < 0.001).
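The idea behind such an overlap analysis can be sketched as follows, assuming a minimum-statistic conjunction in the sense of Nichols et al. (2005): a voxel counts as overlapping only if it exceeds the threshold in both maps. The function name, the voxel values, and the threshold are hypothetical, and the sketch does not model the cluster-level correction described in the caption.

```python
def conjunction_mask(stat_a, stat_b, threshold):
    """Mark voxels where the smaller of the two statistics exceeds the threshold."""
    if len(stat_a) != len(stat_b):
        raise ValueError("both maps must cover the same voxels")
    return [min(a, b) > threshold for a, b in zip(stat_a, stat_b)]


# Hypothetical t-values for five voxels; not data from either study.
voices = [4.2, 3.5, 1.0, 5.1, 0.3]
faces = [3.9, 0.8, 4.4, 3.3, 0.1]

# Illustrative voxel-level threshold (an assumption, not the study's value).
T_THRESHOLD = 3.1

mask = conjunction_mask(voices, faces, T_THRESHOLD)
print(mask)  # [True, False, False, True, False]
```

Taking the minimum is the conservative choice: a voxel that is strongly active in only one of the two maps (the second and third voxels here) does not enter the conjunction.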

These results support the hypothesis of the dmPFC's role in top-down-driven integration of self- and other-related aspects in social cognition (Bzdok, Langner, Schilbach, Engemann, et al. 2013), which may be especially engaged in self-relevant judgments (Seitz et al. 2009). Unlike the basic emotional judgment of another's HA or the estimation of another person's AG, TR and AT judgments imply a long-term prediction of opportunities for social interaction, which may be relevant for the judging individual. Hence, more than HA and AG judgments, TR and AT judgments require relating one's own social knowledge to the perceived, potentially self-relevant characteristics of a voice.

Taken together, the observations of dmPFC engagement in TR and AT judgments on faces as well as voices emphasize its most likely transmodal role in self-relevant integration during social reasoning.

IPC in Social Trait Judgments on Voices

Besides dmPFC, social trait judgments, relative to control conditions, specifically recruited bilateral IPC. Owing to its functional involvement in a broad spectrum of neural processes such as semantics, comprehension, unconstrained cognition, memory retrieval, social cognition, and its role in attentional reallocation (Corbetta et al. 2008; Bzdok, Langner, Schilbach, Jakobs, et al. 2013; Seghier 2013), a specific role of the IPC in mediating integration of bottom-up perceptual and higher conceptual processing has been suggested (Ciaramelli et al. 2008; Seghier 2013). With regard to vocal sentence perception, imaging data on language processing robustly associated IPC activation with phonological, syntactical, and semantic assessment of vocal information (Shalom and Poeppel 2008; Binder et al. 2009; Price 2010). Additionally, lesion studies reported impairment of auditory–verbal memory in patients with left IPC disruption (Warrington and Shallice 1969; Baldo and Dronkers 2006).

Given the left-hemispheric dominance in linguistic processing, it may be tempting to explain the left-lateralized results by the use of sentence stimuli. Although stimuli were identically presented in all judgment conditions to minimize stimulus-driven effects, interactions of stimulus and task effects may involve, for example, sentence processing. However, lateralization has been shown to depend less on stimuli than on tasks (Stephan et al. 2007). A previous fMRI study pitted the influence of stimuli and tasks on hemispheric lateralization against each other, similarly using identical stimuli (written words) in different task categories, and demonstrated leftward lateralization in a linguistic task and rightward lateralization in a visuospatial task (Stephan et al. 2003). In total, assuming that lateralization is dominated by the task rather than by the stimulus, we attribute the present left-dominant lateralization to top-down social trait judgment processing on vocally presented sentences.

Indeed, social tasks such as evaluating people (Zysset et al. 2002), uncovering lies (Harada et al. 2009), detecting embarrassment and guilt (Takahashi et al. 2004), and reasoning about others' minds (Lissek et al. 2008) or moral dilemmas (Bzdok, Schilbach, et al. 2012) have frequently been reported to engage the IPC. Investigating vocal judgments, a recent fMRI study provided evidence for the IPC's role in implicit AT processing of vocal sounds (Bestelmeyer et al. 2012). Stimulus-driven effects depending on the voices’ AT were found in the left parietal, temporal, and inferior frontal cortex, but only IPC and IFG responses survived correction for low-level acoustic components of vocal AT. These results suggest that the left IPC is involved in processing highly associative vocal information beyond its discrete linguistic components. Whereas these previous results show that the left IPC responds to attractive voices ‘implicitly’, the present study further shows that the IPC (PGa) is recruited in ‘explicit’ judgments on vocal AT, extending to TR. The synopsis of present and previous findings thus converges on the possible conclusion that central parts of the IPC (PGa) link social semantic and highly preprocessed perceptual information during voice assessment.

Interestingly, IPC activity was not solely modulated by social trait judgments, but dissociable areas neighboring PGa are likewise engaged in HA or AG judgments relative to the respective other control condition: Social and emotional judgments commonly recruited rostral IPC and IFG more than AG judgments, whereas all trait-related judgments including AG shared higher neural activity than emotional judgments in caudal IPC (area PGp) and PCC (Fig. 4). The functional co-activation of rostral IPC with IFG and caudal IPC with PCC is well in line with earlier histological examinations and diffusion tensor imaging (DTI) describing alterations in IPC cytoarchitecture, receptor, and connectivity features along a rostro-caudal axis (Caspers et al. 2006, 2008, 2011, 2013). Functional and anatomical connectivity studies in humans (Rushworth et al. 2006; Petrides and Pandya 2009; Uddin et al. 2010; Caspers et al. 2011; Mars et al. 2012) and tracing studies in monkeys (Cavada and Goldman-Rakic 1989; Rozzi et al. 2006) agree on strong connectivity of the rostral IPC with the ventrolateral prefrontal cortex and of the caudal IPC with the temporal lobe, PCC, parahippocampal regions, and hippocampus. Given this pattern of IPC connectivity, our results suggest that vocal judgments involve heterogeneous subdivisions of the IPC contributing to distinct features of social trait judgments.

More globally, fMRI experiments from several domains hint at a segregation of the IPC, following a functional gradient from rostral to caudal locations. For example, semantic representations of concrete sensorimotor knowledge are identified more rostrally in the IPC than those of abstract concepts (Binder et al. 2009). In keeping with a higher level of conceptuality towards caudal IPC, linguistic studies found rostro-caudal trends from phonological to semantic discrimination (Shalom and Poeppel 2008; Sharp et al. 2010). Recent results from the domain of social cognition indicate the rostral IPC to be more engaged in judgments about the present state and biological motion, whereas the caudal IPC is more activated in future prospection and moral judgments (Andrews-Hanna et al. 2010; Bahnemann et al. 2010). Taken together, these findings point to a shifting recruitment from rostral to caudal IPC regions in tasks requiring concrete to abstract processing, respectively.

The present study extends the evidence for functional IPC heterotopy to social trait judgments on voices, relating affect-oriented functions to anterior IPC and rational trait-oriented functions to posterior IPC regions. In the following paragraphs, we offer an interpretation of how IPC subdivisions may variably contribute to the explicit categorization of voices.

Common Neural Correlates in Social Trait and Emotional State Judgments on Voices

Explicit assessment of TR, AT, and HA, relative to AG, congruently recruited a set of areas comprising the left IFG, anterior TP, SFG, and rostral IPC (assigned to PFm and PFcm) as well as the right rostral IPC (PGa and PFm), extending into the pSTS. These areas thus indicate the functional basis for emotion assessment inherently engaged during social trait judgments on vocal stimuli.

Rostrally, the IPC has extensive connections to the IFG and sensorimotor cortex, as indicated by in vivo DTI tractography in humans (Makris et al. 2005; Rushworth et al. 2006; Caspers et al. 2011), possibly explaining its functional relation to action imitation (Decety et al. 2002), judgments of biological motion (Bahnemann et al. 2010), and semantic motor-sequence knowledge (Binder et al. 2009). Such processing of motor sequences in rostral IPC and pSTS includes recognition of socially and emotionally meaningful patterns, such as intentional body movement (Saxe et al. 2004; Blake and Shiffrar 2007), facial expressions, and gaze (Haxby et al. 2000; Nummenmaa and Calder 2009) as well as prosody (speech melody) (Wildgruber et al. 2004, 2005). Specifically, emotional prosody is regarded as an essential feature to convey emotions by voice (Belin et al. 2011). To reach an explicit judgment on a voice's affect, current models of emotional prosody processing propose that the right pSTS receives preprocessed acoustic information, which is subsequently fed into the bilateral IFG (Ethofer et al. 2006; Schirmer and Kotz 2006; Wildgruber et al. 2006). Interestingly, the current study revealed higher right pSTS and left IFG activation not only in emotional state judgments, but also in social trait judgments on voices, compared with AG judgments. This observation suggests that TR and AT judgments on voices implicitly recruit neural systems associated with emotional prosody processing. Given the high correlation between social and emotional judgments in the behavioral data, it is not surprising that these judgments might be based on common features such as affective prosody appraisal.

However, explicit social and emotional judgment tasks might share more common processes than only the analysis of prosody. First, discrimination tasks of emotional connotation especially recruited a left-lateralized set of brain regions including IFG, rostral IPC, SFG, and anterior TP (Ethofer et al. 2006; Beaucousin et al. 2007), concordant with the present results. Although not explicitly requested in judgments on ‘voices,’ language may have been similarly screened for affective content in complex social and basic emotional judgments, more than in AG judgments. Second, the higher response in rostral IPC hints at enhanced attention toward the speaker's intentions during TR, AT, and HA judgments. Specifically, the right rostral IPC has been reported to encode others' intentions and to integrate them during social judgment formation (Saxe and Kanwisher 2003; Young and Saxe 2008; Saxe 2010). Correspondingly, social trait and emotional state judgments engaged the right rostral IPC more strongly than the assessment of a physical characteristic (AG).

Taken together, the present findings reveal shared neural mechanisms underlying social trait and emotional state judgments on voices, possibly interpreting relatively short vocal sequences such as prosody and connotation in their social and emotional meaning.

Common Neural Correlates in Social and Rational Trait Judgments on Voices

TR, AT, and AG, relative to HA judgments, concomitantly recruited the left caudal IPC (PGp) and 2 regions in the PCC bordering the precuneus (Margulies et al. 2009), which thus compose a potential functional basis for the categorization of relatively unaffective, trait-related features in vocal judgments.

Possibly homologous areas in monkeys are not only strongly interconnected; both caudal IPC (PGp) and PCC also share strong connectivity to hippocampal and parahippocampal regions, as indicated by axonal tracing studies (Goldman-Rakic et al. 1984; Goldman-Rakic 1988; Suzuki and Amaral 1994; Kobayashi and Amaral 2003; Kondo et al. 2005). In humans, these connections were also described functionally and structurally using resting-state functional connectivity and DTI (Uddin et al. 2010). Functional implications of PGp and PCC include highly abstract, cognitively challenging social processes such as theory of mind, future prospection, navigation, and autobiographical memory (Buckner et al. 2008; Spreng et al. 2009). Moreover, recent fMRI investigations on first impression judgment revealed that PCC activity predicts the extent of positive and negative evaluation (Schiller et al. 2009), especially when judgments are based on verbal information (Kuzmanovic et al. 2012).

Comparing the present results with the meta-analyses of Spreng et al. (2009), we observed striking proximity to 2 PCC regions consistently engaged in autobiographical memory tasks. Psychological models suggest that autobiographical memory is essential for identity schemes that are consistent over time (Wilson and Ross 2003), and that identity schemes provide a basis to find consistent analogies in one's environment (Bar 2007). Indeed, the present results indicate an up-regulation of PCC and caudal IPC (PGp) in judgments of TR, AT, and AG. Importantly, all 3 of these judgments differ from HA judgments in their consistency over time. In particular, HA, as a basic emotion, represents a transient state, while the other 3 person characteristics are regarded as enduring traits (Scherer 2005; Izard 2009).

With respect to trait judgments of voices, we consider activity increase in the caudal IPC (PGp) and PCC to reflect memory-informed judgment formation relating stable trait schemes to vocal information.

Conclusion

We investigated the neural basis underlying explicit social trait judgments on voice recordings of everyday statements. The observed convergence of brain regions recruited during social trait judgments on voices in the present study, and on faces in previous studies, indicates a central, most likely transmodal role of the dmPFC in complex social attribution processes across judgment modalities and sensory qualities. Furthermore, we characterized the functional heterogeneity of the left IPC in social evaluation tasks on voices, suggesting selective recruitment of the IPC along a rostro-caudal functional axis mediating affective processes more rostrally and cognitive trait-oriented features more caudally.

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/.

Funding

This study was supported by the Deutsche Forschungsgemeinschaft (DFG, EI 816/4-1; S.B.E. and LA 3071/3-1; S.B.E.), the National Institute of Mental Health (R01-MH074457; S.B.E.) and the Helmholtz Initiative on systems biology (The Human Brain Model; K. Z. and S.B.E.).

Notes

Conflict of Interest: None declared.

References

- Adolphs R. 2003. Cognitive neuroscience of human social behaviour. Nat Rev Neurosci. 4:165–178.

- Adolphs R, Damasio H, Tranel D, Damasio AR. 1996. Cortical systems for the recognition of emotion in facial expressions. J Neurosci. 16:7678–7687.

- Amodio DM, Frith CD. 2006. Meeting of minds: the medial frontal cortex and social cognition. Nat Rev Neurosci. 7:268–277.

- Amunts K, Schleicher A, Bürgel U, Mohlberg H, Uylings HB, Zilles K. 1999. Broca's region revisited: cytoarchitecture and intersubject variability. J Comp Neurol. 412:319–341.

- Andrews-Hanna JR, Reidler JS, Sepulcre J, Poulin R, Buckner RL. 2010. Functional-anatomic fractionation of the brain's default network. Neuron. 65:550–562.

- Ashburner J, Friston KJ. 2005. Unified segmentation. NeuroImage. 26:839–851.

- Baas D, Aleman A, Vink M, Ramsey NF, de Haan EHF, Kahn RS. 2008. Evidence of altered cortical and amygdala activation during social decision-making in schizophrenia. NeuroImage. 40:719–727.

- Bahnemann M, Dziobek I, Prehn K, Wolf I, Heekeren HR. 2010. Sociotopy in the temporoparietal cortex: common versus distinct processes. Soc Cogn Affect Neurosci. 5:48–58.

- Baldo JV, Dronkers NF. 2006. The role of inferior parietal and inferior frontal cortex in working memory. Neuropsychology. 20:529–538.

- Bar M. 2007. The proactive brain: using analogies and associations to generate predictions. Trends Cogn Sci. 11:280–289.

- Beaucousin V, Lacheret A, Turbelin M-R, Morel M, Mazoyer B, Tzourio-Mazoyer N. 2007. fMRI study of emotional speech comprehension. Cereb Cortex. 17:339–352.

- Belin P, Fecteau S, Bédard C. 2004. Thinking the voice: neural correlates of voice perception. Trends Cogn Sci. 8:129–135.

- Belin P, Bestelmeyer PEG, Latinus M, Watson R. 2011. Understanding voice perception. Br J Psychol. 102:711–725.

- Bestelmeyer PEG, Latinus M, Bruckert L, Rouger J, Crabbe F, Belin P. 2012. Implicitly perceived vocal attractiveness modulates prefrontal cortex activity. Cereb Cortex. 22:1263–1270.

- Binder JR, Desai RH, Graves WW, Conant LL. 2009. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cereb Cortex. 19:2767–2796.

- Blake R, Shiffrar M. 2007. Perception of human motion. Annu Rev Psychol. 58:47–73.

- Bos PA, Hermans EJ, Ramsey NF, van Honk J. 2012. The neural mechanisms by which testosterone acts on interpersonal trust. NeuroImage. 61:730–737.

- Bourdieu P. 1979. La distinction: critique sociale du jugement. Paris (France): Editions de Minuit.

- Bray S, O'Doherty J. 2007. Neural coding of reward-prediction error signals during classical conditioning with attractive faces. J Neurophysiol. 97:3036–3045.

- Brodmann K. 1909. Vergleichende Lokalisationslehre der Grosshirnrinde. Leipzig (Germany): Barth.

- Buckner RL, Andrews-Hanna JR, Schacter DL. 2008. The brain's default network: anatomy, function, and relevance to disease. Ann N Y Acad Sci. 1124:1–38.

- Bzdok D, Langner R, Caspers S, Kurth F, Habel U, Zilles K, Laird A, Eickhoff SB. 2011. ALE meta-analysis on facial judgments of trustworthiness and attractiveness. Brain Struct Funct. 215:209–223.

- Bzdok D, Langner R, Hoffstaedter F, Turetsky BI, Zilles K, Eickhoff SB. 2012. The modular neuroarchitecture of social judgments on faces. Cereb Cortex. 22:951–961.

- Bzdok D, Langner R, Schilbach L, Engemann DA, Laird AR, Fox PT, Eickhoff SB. 2013. Segregation of the human medial prefrontal cortex in social cognition. Front Human Neurosci. 7:232.

- Bzdok D, Langner R, Schilbach L, Jakobs O, Roski C, Caspers S, Laird AR, Fox PT, Zilles K, Eickhoff SB. 2013. Characterization of the temporo-parietal junction by combining data-driven parcellation, complementary connectivity analyses, and functional decoding. NeuroImage. 81:381–392.

- Bzdok D, Schilbach L, Vogeley K, Schneider K, Laird AR, Langner R, Eickhoff SB. 2012. Parsing the neural correlates of moral cognition: ALE meta-analysis on morality, theory of mind, and empathy. Brain Struct Funct. 217:783–796.

- Campanella S, Belin P. 2007. Integrating face and voice in person perception. Trends Cogn Sci. 11:535–543.

- Caspers S, Eickhoff SB, Geyer S, Scheperjans F, Mohlberg H, Zilles K, Amunts K. 2008. The human inferior parietal lobule in stereotaxic space. Brain Struct Funct. 212:481–495.

- Caspers S, Eickhoff SB, Rick T, Kapri von A, Kuhlen T, Huang R, Shah NJ, Zilles K. 2011. Probabilistic fibre tract analysis of cytoarchitectonically defined human inferior parietal lobule areas reveals similarities to macaques. NeuroImage. 58:362–380.

- Caspers S, Geyer S, Schleicher A, Mohlberg H, Amunts K, Zilles K. 2006. The human inferior parietal cortex: cytoarchitectonic parcellation and interindividual variability. NeuroImage. 33:430–448.

- Caspers S, Schleicher A, Bacha-Trams M, Palomero-Gallagher N, Amunts K, Zilles K. 2013. Organization of the human inferior parietal lobule based on receptor architectonics. Cereb Cortex. 23:615–628.

- Cavada C, Goldman-Rakic PS. 1989. Posterior parietal cortex in rhesus monkey: II. Evidence for segregated corticocortical networks linking sensory and limbic areas with the frontal lobe. J Comp Neurol. 287:422–445.

- Chatterjee A, Thomas A, Smith SE, Aguirre GK. 2009. The neural response to facial attractiveness. Neuropsychology. 23:135–143.

- Ciaramelli E, Grady CL, Moscovitch M. 2008. Top-down and bottom-up attention to memory: a hypothesis (AtoM) on the role of the posterior parietal cortex in memory retrieval. Neuropsychologia. 46:1828–1851.

- Cloutier J, Heatherton TF, Whalen PJ, Kelley WM. 2008. Are attractive people rewarding? Sex differences in the neural substrates of facial attractiveness. J Cogn Neurosci. 20:941–951.

- Corbetta M, Patel G, Shulman GL. 2008. The reorienting system of the human brain: from environment to theory of mind. Neuron. 58:306–324.

- Cosmides L, Tooby J. 1992. Cognitive adaptations for social exchange. London (UK): Oxford University Press.

- Cunningham WA, Johnson MK, Raye CL, Gatenby JC, Gore JC, Banaji MR. 2004. Separable neural components in the processing of black and white faces. Psychol Sci. 15:806–813.

- Decety J, Chaminade T, Grèzes J, Meltzoff AN. 2002. A PET exploration of the neural mechanisms involved in reciprocal imitation. NeuroImage. 15:265–272.

- Dolan RJ, Fletcher P, Morris J, Kapur N, Deakin JF, Frith CD. 1996. Neural activation during covert processing of positive emotional facial expressions. NeuroImage. 4:194–200.

- Eickhoff SB, Stephan KE, Mohlberg H, Grefkes C, Fink GR, Amunts K, Zilles K. 2005. A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. NeuroImage. 25:1325–1335.

- Eickhoff SB, Paus T, Caspers S, Grosbras M-H, Evans AC, Zilles K, Amunts K. 2007. Assignment of functional activations to probabilistic cytoarchitectonic areas revisited. NeuroImage. 36:511–521.

- Ekman P. 1992. An argument for basic emotions. Cogn Emotion. 6:169–200.

- Engell AD, Haxby JV, Todorov A. 2007. Implicit trustworthiness decisions: automatic coding of face properties in the human amygdala. J Cogn Neurosci. 19:1508–1519.

- Ethofer T, Anders S, Erb M, Herbert C, Wiethoff S, Kissler J, Grodd W, Wildgruber D. 2006. Cerebral pathways in processing of affective prosody: a dynamic causal modeling study. NeuroImage. 30:580–587.

- Frieze IH, Olson JE, Russell J. 1991. Attractiveness and income for men and women in management. J Appl Soc Psychol. 21:1039–1057.

- Fusar-Poli P, Placentino A, Carletti F, Landi P, Allen P, Surguladze S, Benedetti F, Abbamonte M, Gasparotti R, Barale F, et al. 2009. Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. J Psychiatry Neurosci. 34:418–432.

- Goldman-Rakic PS. 1988. Topography of cognition: parallel distributed networks in primate association cortex. Annu Rev Neurosci. 11:137–156.

- Goldman-Rakic PS, Selemon LD, Schwartz ML. 1984. Dual pathways connecting the dorsolateral prefrontal cortex with the hippocampal formation and parahippocampal cortex in the rhesus monkey. Neuroscience. 12:719–743.

- Gorno-Tempini ML, Pradelli S, Serafini M, Pagnoni G, Baraldi P, Porro C, Nicoletti R, Umità C, Nichelli P. 2001. Explicit and incidental facial expression processing: an fMRI study. NeuroImage. 14:465–473.

- Gunther Moor B, Crone EA, van der Molen MW. 2010. The heartbrake of social rejection: heart rate deceleration in response to unexpected peer rejection. Psychol Sci. 21:1326–1333.

- Gur RC, Schroeder L, Turner T, McGrath C, Chan RM, Turetsky BI, Alsop D, Maldjian J, Gur RE. 2002. Brain activation during facial emotion processing. NeuroImage. 16:651.

- Habel U, Gur RC, Mandal MK, Salloum JB, Gur RE, Schneider F. 2000. Emotional processing in schizophrenia across cultures: standardized measures of discrimination and experience. Schizophr Res. 42:57–66.

- Harada T, Itakura S, Xu F, Lee K, Nakashita S, Saito DN, Sadato N. 2009. Neural correlates of the judgment of lying: a functional magnetic resonance imaging study. Neurosci Res. 63:24–34.

- Harris LT, Fiske ST. 2007. Social groups that elicit disgust are differentially processed in mPFC. Soc Cogn Affect Neurosci. 2:45–51.

- Haxby J, Hoffman E, Gobbini M. 2000. The distributed human neural system for face perception. Trends Cogn Sci. 4:223–233.

- Holmes CJ, Hoge R, Collins L, Woods R, Toga AW, Evans AC. 1998. Enhancement of MR images using registration for signal averaging. J Comput Assist Tomogr. 22:324–333.

- Hughes SM, Dispenza F, Gallup GG Jr. 2004. Ratings of voice attractiveness predict sexual behavior and body configuration. Evol Hum Behav. 25:295–304.

- Izard CE. 2007. Basic emotions, natural kinds, emotion schemas, and a new paradigm. Perspect Psychol Sci. 2:260–280.

- Izard CE. 2009. Emotion theory and research: highlights, unanswered questions, and emerging issues. Annu Rev Psychol. 60:1–25.

- Karafin MS, Tranel D, Adolphs R. 2004. Dominance attributions following damage to the ventromedial prefrontal cortex. J Cogn Neurosci. 16:1796–1804.

- Kiebel S, Holmes AP. 2003. The general linear model. Hum Brain Funct. 2:725–760.

- Kim H, Adolphs R, O'Doherty JP, Shimojo S. 2007. Temporal isolation of neural processes underlying face preference decisions. Proc Natl Acad Sci. 104:18253–18258.

- Knutson B. 1996. Facial expressions of emotion influence interpersonal trait inferences. J Nonverbal Behav. 20:165–182.

- Kobayashi Y, Amaral DG. 2003. Macaque monkey retrosplenial cortex: II. Cortical afferents. J Comp Neurol. 466:48–79.

- Kondo H, Saleem K, Price J. 2005. Differential connections of the perirhinal and parahippocampal cortex with the orbital and medial prefrontal networks in macaque monkeys. J Comp Neurol. 493:479–509.

- Kuzmanovic B, Bente G, Cramon von DY, Schilbach L, Tittgemeyer M, Vogeley K. 2012. Imaging first impressions: distinct neural processing of verbal and nonverbal social information. NeuroImage. 60:179–188.

- Langlois JH, Kalakanis L, Rubenstein AJ, Larson A, Hallam M, Smoot M. 2000. Maxims or myths of beauty? A meta-analytic and theoretical review. Psychol Bull. 126:390–423.

- Langlois JH, Ritter JM, Roggman LA, Vaughn LS. 1991. Facial diversity and infant preferences for attractive faces. Dev Psychol. 27:79–84.

- Lissek S, Peters S, Fuchs N, Witthaus H, Nicolas V, Tegenthoff M, Juckel G, Brüne M. 2008. Cooperation and deception recruit different subsets of the theory-of-mind network. PLoS ONE. 3:e2023.

- Makris N, Kennedy DN, McInerney S, Sorensen AG, Wang R, Caviness VS, Pandya DN. 2005. Segmentation of subcomponents within the superior longitudinal fascicle in humans: a quantitative, in vivo, DT-MRI study. Cereb Cortex. 15:854–869.

- Margulies DS, Vincent JL, Kelly C, Lohmann G, Uddin LQ, Biswal BB, Villringer A, Castellanos FX, Milham MP, Petrides M. 2009. Precuneus shares intrinsic functional architecture in humans and monkeys. Proc Natl Acad Sci. 106:20069–20074.

- Mars RB, Sallet J, Schüffelgen U, Jbabdi S, Toni I, Rushworth MFS. 2012. Connectivity-based subdivisions of the human right “temporoparietal junction area”: evidence for different areas participating in different cortical networks. Cereb Cortex. 22:1894–1903.

- Mende-Siedlecki P, Said CP, Todorov A. 2012. The social evaluation of faces: a meta-analysis of functional neuroimaging studies. Soc Cogn Affect Neurosci. 8:285–299.

- Morelli SA, Rameson LT, Lieberman MD. 2012. The neural components of empathy: predicting daily prosocial behavior. Soc Cogn Affect Neurosci. doi:10.1093/scan/nss088.

- Nichols T, Brett M, Andersson J, Wager T, Poline J-B. 2005. Valid conjunction inference with the minimum statistic. NeuroImage. 25:653–660. [DOI] [PubMed] [Google Scholar]

- Nummenmaa L, Calder AJ. 2009. Neural mechanisms of social attention. Trends Cogn Sci. 13:135–143. [DOI] [PubMed] [Google Scholar]

- Oosterhof NN, Todorov A. 2008. The functional basis of face evaluation. Proc Natl Acad Sci. 105:11087–11092. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Oosterhof NN, Todorov A. 2009. Shared perceptual basis of emotional expressions and trustworthiness impressions from faces. Emotion. 9:128–133. [DOI] [PubMed] [Google Scholar]

- Ouden den H, Frith U, Frith C, Blakemore S. 2005. Thinking about intentions. NeuroImage. 28:787–796. [DOI] [PubMed] [Google Scholar]

- Palomero-Gallagher N, Vogt BA, Schleicher A, Mayberg HS, Zilles K. 2009. Receptor architecture of human cingulate cortex: evaluation of the four-region neurobiological model. Hum Brain Mapp. 30:2336–2355. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Petrides M, Pandya DN. 2009. Distinct parietal and temporal pathways to the homologues of Broca's area in the monkey. PLoS Biol. 7:e1000170.

- Price CJ. 2010. The anatomy of language: a review of 100 fMRI studies published in 2009. Ann N Y Acad Sci. 1191:62–88.

- Rockwell P, Buller DB, Burgoon JK. 1997. The voice of deceit: refining and expanding vocal cues to deception. Commun Res Rep. 14:451–459.

- Rozzi S, Calzavara R, Belmalih A, Borra E, Gregoriou GG, Matelli M, Luppino G. 2006. Cortical connections of the inferior parietal cortical convexity of the macaque monkey. Cereb Cortex. 16:1389–1417.

- Rushworth MFS, Behrens TEJ, Johansen-Berg H. 2006. Connection patterns distinguish 3 regions of human parietal cortex. Cereb Cortex. 16:1418–1430.

- Said CP, Baron SG, Todorov A. 2009. Nonlinear amygdala response to face trustworthiness: contributions of high and low spatial frequency information. J Cogn Neurosci. 21:519–528.

- Saxe R. 2010. The right temporo-parietal junction: a specific brain region for thinking about thoughts. In: Leslie A, German T, editors. Handbook of theory of mind. 1st ed. Philadelphia, PA: Psychology Press.

- Saxe R, Kanwisher N. 2003. People thinking about thinking people: the role of the temporo-parietal junction in “theory of mind”. NeuroImage. 19:1835–1842.

- Saxe R, Xiao D-K, Kovacs G, Perrett DI, Kanwisher N. 2004. A region of right posterior superior temporal sulcus responds to observed intentional actions. Neuropsychologia. 42:1435–1446.

- Scherer KR. 2005. What are emotions? And how can they be measured? Soc Sci Inf. 44:695–729.

- Schiller D, Freeman JB, Mitchell JP, Uleman JS, Phelps EA. 2009. A neural mechanism of first impressions. Nat Neurosci. 12:508–514.

- Schirmer A, Kotz SA. 2006. Beyond the right hemisphere: brain mechanisms mediating vocal emotional processing. Trends Cogn Sci. 10:24–30.

- Seghier ML. 2013. The angular gyrus: multiple functions and multiple subdivisions. Neuroscientist. 19:43–61.

- Seitz RJ, Franz M, Azari NP. 2009. Value judgments and self-control of action: the role of the medial frontal cortex. Brain Res Rev. 60:368–378.

- Shalom DB, Poeppel D. 2008. Functional anatomic models of language: assembling the pieces. Neuroscientist. 14:119–127.

- Sharp DJ, Awad M, Warren JE, Wise RJS, Vigliocco G, Scott SK. 2010. The neural response to changing semantic and perceptual complexity during language processing. Hum Brain Mapp. 31:365–377.

- Spreng RN, Mar RA, Kim ASN. 2009. The common neural basis of autobiographical memory, prospection, navigation, theory of mind, and the default mode: a quantitative meta-analysis. J Cogn Neurosci. 21:489–510.

- Spunt RP, Lieberman MD. 2013. The busy social brain: evidence for automaticity and control in the neural systems supporting social cognition and action understanding. Psychol Sci. 24:80–86.

- Stephan KE, Fink GR, Marshall JC. 2007. Mechanisms of hemispheric specialization: insights from analyses of connectivity. Neuropsychologia. 45:209–228.

- Stephan KE, Marshall JC, Friston KJ, Rowe JB, Ritzl A, Zilles K, Fink GR. 2003. Lateralized cognitive processes and lateralized task control in the human brain. Science. 301:384–386.

- Stewart JE. 1980. Defendant's attractiveness as a factor in the outcome of criminal trials: an observational study. J Appl Soc Psychol. 10:348–361.

- Streeter LA, Krauss RM, Geller V, Olson C, Apple W. 1977. Pitch changes during attempted deception. J Pers Soc Psychol. 35:345–350.

- Suzuki WA, Amaral DG. 1994. Perirhinal and parahippocampal cortices of the macaque monkey: cortical afferents. J Comp Neurol. 350:497–533.

- Takahashi H, Yahata N, Koeda M, Matsuda T, Asai K, Okubo Y. 2004. Brain activation associated with evaluative processes of guilt and embarrassment: an fMRI study. NeuroImage. 23:967–974.

- Todorov A. 2008. Evaluating faces on trustworthiness: an extension of systems for recognition of emotions signaling approach/avoidance behaviors. Ann N Y Acad Sci. 1124:208–224.

- Todorov A, Baron SG, Oosterhof NN. 2008. Evaluating face trustworthiness: a model based approach. Soc Cogn Affect Neurosci. 3:119–127.

- Todorov A, Engell AD. 2008. The role of the amygdala in implicit evaluation of emotionally neutral faces. Soc Cogn Affect Neurosci. 3:303–312.

- Todorov A, Said CP, Engell AD, Oosterhof NN. 2008. Understanding evaluation of faces on social dimensions. Trends Cogn Sci. 12:455–460.

- Uddin LQ, Supekar K, Amin H, Rykhlevskaia E, Nguyen DA, Greicius MD, Menon V. 2010. Dissociable connectivity within human angular gyrus and intraparietal sulcus: evidence from functional and structural connectivity. Cereb Cortex. 20:2636–2646.

- Van't Wout M, Sanfey AG. 2008. Friend or foe: the effect of implicit trustworthiness judgments in social decision-making. Cognition. 108:796–803.

- Vogt BA, Berger GR, Derbyshire SWG. 2003. Structural and functional dichotomy of human midcingulate cortex. Eur J Neurosci. 18:3134–3144.

- Warrington EK, Shallice T. 1969. The selective impairment of auditory verbal short-term memory. Brain. 92:885–896.

- Wildgruber D, Ackermann H, Kreifelts B, Ethofer T. 2006. Cerebral processing of linguistic and emotional prosody: fMRI studies. Prog Brain Res. 156:249–268.

- Wildgruber D, Hertrich I, Riecker A, Erb M, Anders S, Grodd W, Ackermann H. 2004. Distinct frontal regions subserve evaluation of linguistic and emotional aspects of speech intonation. Cereb Cortex. 14:1384–1389.

- Wildgruber D, Riecker A, Hertrich I, Erb M, Grodd W, Ethofer T, Ackermann H. 2005. Identification of emotional intonation evaluated by fMRI. NeuroImage. 24:1233–1241.

- Wilson A, Ross M. 2003. The identity function of autobiographical memory: time is on our side. Memory. 11:137–149.

- Winston JS, O'Doherty J, Kilner JM, Perrett DI, Dolan RJ. 2007. Brain systems for assessing facial attractiveness. Neuropsychologia. 45:195–206.

- Winston JS, Strange BA, O'Doherty J, Dolan RJ. 2002. Automatic and intentional brain responses during evaluation of trustworthiness of faces. Nat Neurosci. 5:277–283.

- Worsley KJ, Marrett S, Neelin P, Vandal AC, Friston KJ, Evans AC. 1996. A unified statistical approach for determining significant signals in images of cerebral activation. Hum Brain Mapp. 4:58–73.

- Young L, Saxe R. 2008. The neural basis of belief encoding and integration in moral judgment. NeuroImage. 40:1912–1920.

- Zebrowitz LA, Montepare JM. 2008. Social psychological face perception: why appearance matters. Soc Pers Psych Compass. 2:1497–1517.

- Zysset S, Huber O, Ferstl E, Cramon von DY. 2002. The anterior frontomedian cortex and evaluative judgment: an fMRI study. NeuroImage. 15:983–991.
