Abstract

Human activity detection within smart homes is one of the bases of unobtrusive wellness monitoring of a rapidly aging population in developed countries. Most works in this area use the concept of “activity” as the building block with which to construct applications such as healthcare monitoring or ambient assisted living. The process of identifying a specific activity encompasses the selection of the appropriate set of sensors, the correct preprocessing of the raw data they provide and the learning/reasoning using this information. If the sensors or the data processing methods are poorly chosen, the whole activity detection process may fail, and with it the whole application. Related to this, the main contributions of this review are the following: first, we propose a classification of the main activities considered in smart home scenarios targeted at older people’s independent living, as well as their characterization and formalized context representation; second, we perform a classification of sensors and data processing methods that are suitable for the detection of the aforementioned activities. Our aim is to help researchers and developers in these lower-level technical aspects that are nevertheless fundamental for the success of the complete application.

Keywords: activity monitoring, activity characterization, sensors, data processing, smart home, independent living, e-health services development

1. Introduction

Recent advances in sensing, networking and ambient intelligence technologies have resulted in a rapid emergence of smart environments. Among these, the so-called Smart Home (SH) has gained a lot of attention for the provision of enhanced quality of life within the home. The smart home concept was formalized by Lutolf [1], who primarily focused on the integration of different services within a home environment by using a common communication system. More recently, Satpathy [2] proposed a smart home concept more centered on helping the residents live independently and comfortably with the help of mechanical and digital devices. This definition is closer to our current understanding of SHs.

One of the motivations for smart home research is the significant worldwide increase of an aging population. In fact, according to the World Health Organization (WHO), the world’s elderly population (defined as people aged 60 and older) has increased drastically in the past decades and will reach about 2 billion in 2050 [3]. In Europe, the proportion of the EU27 elderly population above 65 years of age is foreseen to rise to 30% in 2060 [4].

The elderly have specific health issues that have to be considered. A significant proportion of the elderly population suffers from, or is more likely to develop, age-related conditions such as Parkinson’s disease, diabetes, cardiovascular disease, Alzheimer’s disease, other chronic diseases and limitations in physical functions. For them, SH technologies may help to enhance quality of life, prolong independent living and reduce caregivers’ time and healthcare costs in general, without losing the safety that continuous and unobtrusive monitoring provides. Thus these technologies benefit not only older adults, but also their families, caregivers and society in general. This is what is sometimes known as Ambient Assisted Living (AAL).

Research objectives in this area range from low-level data acquisition by sensors up to high-level data integration and inference of knowledge through both data-driven and knowledge-driven approaches. Many recent works are related to activity recognition as a means of extracting higher-level information. There are different types of activities, but what they all have in common is that they should be recognizable as such by a non-technician (e.g., “preparing a meal”, “taking a bath” or “watching television while sitting on the sofa”). If human activities are correctly and automatically identified, a wide range of applications and services become possible, such as detection of health emergencies, early disease detection, professional advice on routine lifestyle, health status monitoring and help in treatment prescription. Some concrete examples of applications can be found in [5] (a mobile emergency response system), [6] (a fall detection system) and [7] (which deals with monitoring activities of daily life and recommending services for an active lifestyle).

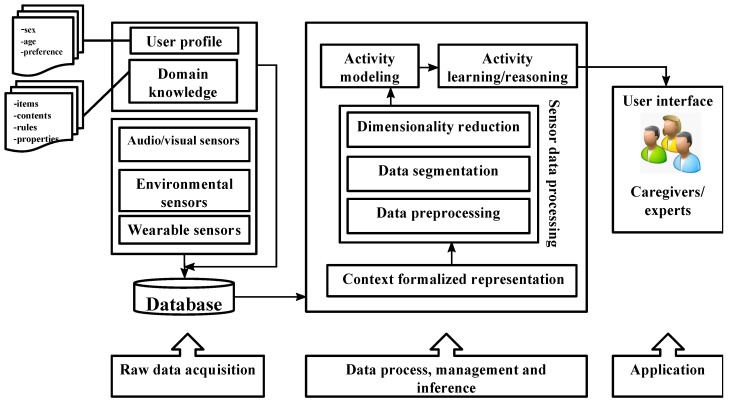

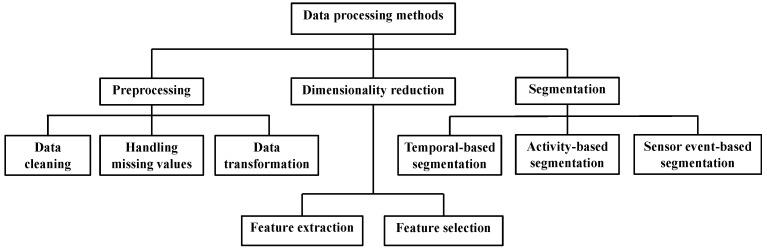

Figure 1 shows the general structure of activity-based AAL systems. Three stages are identified: raw-data acquisition, sensor data processing to obtain context information and learning/reasoning methods to identify activities and provide caregivers and experts with useful and significant information.

Figure 1. Overall architecture of activity monitoring systems in smart home environments.

It is clear that in order for activities to be detected, a set of sensors has to be deployed inside the home. Some of these sensors may be located on furniture, appliances, walls and doors, whereas others may be “worn” by the inhabitants, attached to their bodies either directly or indirectly (e.g., a smartphone that is carried in a pocket). In general, different sensors are needed simultaneously to correctly identify the different activities, and each of them may provide its measurements (i.e., the raw data) in a different format. Raw data by itself is therefore of little (or no) use for the activity detection algorithms. Preprocessing this data is essential in order to obtain useful and significant information for the application.
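As a minimal illustration of why this preprocessing is needed, the following Python sketch converts readings from two sensors, each with its own raw format, into a common event record. The raw formats, the field names, the `SENSOR_LOCATIONS` map and the `SensorEvent` type are all hypothetical assumptions made for this example; they are not taken from any of the systems reviewed here.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical deployment map: where each sensor is installed or worn.
SENSOR_LOCATIONS = {"M01": "kitchen", "ACC1": "body"}


@dataclass(frozen=True)
class SensorEvent:
    """Common record shared by all sensors after preprocessing (assumed schema)."""
    timestamp: datetime
    sensor_id: str
    location: str
    value: float


def parse_motion_line(line: str) -> SensorEvent:
    """Parse an assumed ambient motion sensor line: '<ISO time> <id> <ON|OFF>'."""
    time_text, sensor_id, state = line.split()
    return SensorEvent(
        timestamp=datetime.fromisoformat(time_text),
        sensor_id=sensor_id,
        location=SENSOR_LOCATIONS[sensor_id],
        value=1.0 if state == "ON" else 0.0,
    )


def parse_wearable_sample(sample: dict) -> SensorEvent:
    """Parse an assumed wearable sample: epoch milliseconds and acceleration in g."""
    return SensorEvent(
        timestamp=datetime.fromtimestamp(sample["t_ms"] / 1000.0, tz=timezone.utc),
        sensor_id=sample["id"],
        location=SENSOR_LOCATIONS[sample["id"]],
        value=float(sample["magnitude_g"]),
    )


# Two readings in different raw formats become one time-ordered stream.
motion = parse_motion_line("2015-01-01T08:00:05+00:00 M01 ON")
wearable = parse_wearable_sample(
    {"t_ms": 1420099200000, "id": "ACC1", "magnitude_g": 1.02}
)
events = sorted([motion, wearable], key=lambda event: event.timestamp)
```

Once every reading shares one schema and one time base, later stages such as segmentation and activity inference can treat ambient and wearable sensors uniformly.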

Whereas it is possible to find a good number of research reviews related to aspects such as sensor design, monitoring techniques or machine learning algorithms and reasoning approaches, to the best of our knowledge the underlying and fundamental issues of activity context information representation, proper sensor selection and sensor raw data processing have not yet received enough attention in the concrete context of elderly people’s needs. In addition, reviews tend to focus on describing solutions from a technical viewpoint, but not so much from the perspective of service developers and providers. We devote the whole of Section 2.1 (Recent Surveys on Smart Homes) to summarizing some of the most significant and recent research review papers in this area, in order to contextualize the principal open issues.

The main objective of this review paper is twofold: first, we propose a classification of the main activities considered in smart home scenarios targeted to the elderly’s independent living, as well as their characterization and formalized context representation; second, we advance towards a general set of guidelines that would help researchers and developers select the sensors and processing techniques best suited to the target activities to detect, focusing on older adults and indoor smart home activities. Some processing techniques (especially preprocessing and segmentation approaches) are identified and classified in detail. Finally, we present some identified research challenges and suggestions for further research.

The rest of the paper is organized as follows: Section 2 summarizes the most recent smart home projects and applications, establishing a classification into three categories that are relevant for older adults’ independent living. Also in this context, a classification of the main activities considered in smart home scenarios and their characterization and formalized context representation is proposed in Section 3. A review of the different types of sensors used to monitor these activities as well as their main utility and limitations is provided in Section 4. Section 5 is devoted to sensor data processing techniques, together with their applicability to different scenarios related to the elderly’s independent living, whereas Section 6 contains the description of some identified research challenges and possible future directions in this area. Finally, we conclude this review in Section 7.

2. Smart Home Projects and Applications

The numerous research advancements achieved in the SH area have improved access to healthcare services for the elderly. Various projects and applications have been developed throughout the world to prolong independent living for this population segment. In this section, we review the most recent surveys on SH, as well as the projects and applications tailored to the elderly’s specific requirements.

Even if this paper is not intended to dive deep into the complex matter of human factors, we cannot ignore the fact that these are increasingly gaining importance in any information and communication technologies (ICT) system. This is why we will conclude Section 2 with a brief summary on the importance that human factors have for the success and acceptability of any SH deployment.

2.1. Recent Surveys on Smart Homes

A recent survey on smart homes, written by Alam et al. [8], gives a general overview of past developments, present situations and future challenges. It reviews smart home projects according to their research objectives and three desired services (comfort, healthcare and security), and also describes the sensors, multimedia devices, communication protocols and algorithms used for these services. Another survey on ambient assisted living technologies for older adults was conducted by Rashidi and Mihailidis [9], who summarized AAL technologies and tools in terms of smart homes, assistive robotics, e-textile and wearable sensors, also exploring healthcare applications that focus on activity recognition algorithms and context modelling.

Salih et al. [10] presented a review on ambient intelligence assisted healthcare monitoring, summarizing wireless sensor network technologies, communication technologies and applications. Their research mainly focuses on ambient intelligence methods and data mining techniques used in wearable and ambient sensor monitoring within smart homes for older adults and patients with chronic diseases.

Peetoom et al. [11] conducted a systematic review of the current literature on monitoring technologies that detect activities of daily life or significant events (e.g., falls and changes in health status) for elderly people at home. Their research identifies five main types of monitoring technologies: PIR motion sensors, body-worn sensors, pressure sensors, video monitoring and sound recognition. Additionally, the functionalities and outcomes of applying these technologies to prolong independent living of elderly people are described. The results of their studies suggest positive effects, both for residents and caregivers, of using monitoring technologies.

Khusainov et al. [12] presented a study on automated methods for real-time human wellness monitoring and various algorithmic techniques on sensor data analysis to devise effective means of addressing the demands of assisted living and clinical observation. Their survey reviews three areas of sensor-based monitoring systems: sensor types, frameworks and applications; data collection, processing and analysis; and research gaps, limitations and challenges.

Our review differs from the above in two significant aspects: firstly, we focus on activities and applications useful for the elderly inside a SH, which guides the application taxonomy we use, and furthermore, we propose a formalization of the description of the activities including properties and context representation. Secondly, although our review does not include aspects such as hardware and communications, it contains a deeper discussion and classification on signal processing techniques (especially preprocessing and segmentation approaches).

Avci et al. [13] presented a survey about activity recognition for healthcare, wellbeing and sports applications by using inertial sensors. This work is arranged according to the main steps involved in the activity recognition process and the main techniques utilized in each of those. However, this survey only deals with inertial sensors. Bulling et al. [14] give a comprehensive introduction on human activity recognition, but similarly, they focus on a specific type of sensors (in this case body-worn inertial sensors).

2.2. Smart Home Projects

In this section, we review the most significant recent smart home projects that aim at enhancing assisted living for older people. These SH projects fully simulate the smart home environment, including the deployment of a wide range of sensors. The projects are presented in chronological order, to give a clearer picture of how the expectations and research issues related to SH have evolved with time. In the earliest projects presented, the main issues discussed were related to the physical and logical connectivity of devices. Projects of this kind evolved towards multidisciplinary approaches strongly focused on improving the usability of the interaction between devices and inhabitants. This form of interaction demanded technologies with a high degree of abstraction, such as those related to Artificial Intelligence, which in turn has fostered the evolution to more natural and personalized interaction approaches. In addition, these technologies introduced the capacity to manage uncertainty, a common issue in smart home solutions. In recent years, projects have no longer focused on the Smart Home as a final objective but rather as an enabling technology to achieve other purposes, such as independent living for seniors.

A summary on the widely reusable datasets collected by the different smart home projects is also presented to make choosing between them easier for researchers.

GatorTech [15] is an early smart home project carried out at the University of Florida, which integrated a set of sensors and devices to provide services such as voice recognition and inhabitants’ activity tracking. Outstanding early smart home projects also include the following: Adaptive Versatile home (MavHome) [16] from the University of Texas at Arlington, PlaceLab [17] from MIT and Intelligent System Lab (ISL) [18] from the University of Amsterdam.

The CASAS smart home project [19], developed at Washington State University in 2007, is a multi-disciplinary research project focused on creating an intelligent home environment by using unobtrusive sensors and actuators. The research areas included in CASAS are assistive technology, artificial intelligence, machine learning and activity recognition. This same team has developed in its recent research the “smart home in a box” [20], which is a lightweight smart home design that is easy to install and provides SH capabilities out of the box with no customization or training needed. These capabilities include activity recognition, which provides real time activity labelling as sensor events arrive in a stream, and activity discovery for unlabeled data by using an unsupervised learning algorithm.

SWEET-HOME [21] is a nationally supported French research project that aims to design a new smart home system based on audio technology. This project has three main goals: providing an audio-based interaction technology that lets the users have full control over their home environment, detecting distress situations and easing the social inclusion of the elderly and frail population. An interesting research direction of their smart home system is the context-aware decision process, which uses a dedicated Markov Logic Network approach to enhance the ability of coping with uncertain events inferred from real sensor data [22].

A recent smart home project is “Unobtrusive Smart Environments for Independent Living” (USEFIL), an FP7 project which started in 2011. It aims to provide advanced and affordable healthcare assistance in a smart home environment, adopting a three-layer architecture and an unobtrusive sensor network to support a Decision Support System (DSS) that provides inputs to monitoring apps through user-friendly interaction [23]. A limited set of sensors and devices, such as a wrist wearable unit, a camera, a microphone and a Kinect sensor, are used in a SH setting to identify the basic physical activities (lying, sitting, walking, standing, cycling, running, ascending and descending stairs) of elderly people [24]. A low-cost off-the-shelf system and open source platforms are developed to facilitate the generation of applications that address the gap between advanced technologies and the aging population.

Qing and Mohan proposed a Smarter and Safer Home solution at CSIRO to enhance elderly people’s quality of life [25]. To achieve this, a number of environmental sensors are placed in various locations within the smart home, acting as non-intrusive monitoring devices for identifying human behaviors. Based on this project, Zhang et al. [26] proposed a Smart Assistive Living (SAL) platform to enable elderly people to remain at home independently for as long as possible. Sensors placed in the smart home are expected to provide a continuous data stream to a server. Extracting and analyzing these data with machine learning mechanisms helps clinical experts and health caregivers perform diagnosis and decision making.

These smart home projects provide a large number of datasets, some of which are publicly available and can be used by researchers to conduct further studies. Among the publicly available datasets listed in the “Home Dataset” [27], those collected by MIT, the University of Amsterdam and Washington State University are widely used in Smart Home research. Moreover, the dataset provided by MIT [17], with more than 900 sensor inputs, including those coming from motion, switch and RFID sensors, is, to the best of our knowledge, by far the largest dataset collected from a real-world environment. In addition to data from embedded sensors, the dataset provided by [28] contains acceleration and gesture data. The benchmark dataset described in [29] is also widely used and contains data collected from a set of nine inertial sensors attached to different parts of the body. Concretely, it contains motion data for 33 fitness activities recorded from 17 volunteers.

2.3. Smart Home Applications Suited to Elderly People

There is not a single way of classifying the SH applications that may enhance older adults’ quality of life and health status. We propose here a division into three main categories and summarize some of the most significant recent work found in the literature inside each of them. These categories are: “Specific health monitoring”, “Daily activities monitoring, prediction and reminding”, and “Detection of anomalous situations”. We also note that these categories are not mutually exclusive: the same application may contain ingredients that fit into more than one of them.

2.3.1. Specific Health Monitoring

On occasion, older adults need specific monitoring of either vital signs or daily activities in order to assess whether a medical condition is being correctly controlled. Other possible aims of this application are to detect early when an illness is developing or to react quickly when a sudden change in medical parameters occurs. In the following we summarize information from some projects focused on this type of functionality and related to specific conditions such as diabetes and stroke.

Pulkkinen et al. [30] described a platform that uses different types of sensors to monitor the daily activities of elderly people with diabetes at home and to help detect whether the patients are following the recommended exercise and diet routines. Data obtained from the sensors over a long period of time can be used to distinguish the user’s life patterns, helping doctors to design treatment plans and, after that, providing the patient with automatic notifications. Chatterjee et al. [31] built an in-home activity monitoring system to detect the daily routines of elderly people with diabetes by using environmental sensors and body-worn sensors. Daily messages based on previous behaviors, a tailored health newsletter that summarizes biological parameters and blood glucose level predictions are sent to the users and caregivers to help determine the health status.

Chiang et al. [32] developed a set of wireless sensor network devices to support the physical therapy carried out by elderly stroke patients in smart home environments. Stroke patients usually have to repeat specific movements or postures during their rehabilitation, as indicated by their physiotherapists. Thus, the system described by Chiang et al. is aimed at measuring both static postures and dynamic movements, and at recording them as routines. As an example of vital signs monitoring, Sardini and Serpelloni [33] developed a t-shirt with embedded sensors to take measurements that include the heart rate, respiratory rate and body temperature of the patient. The acquired data can be wirelessly sent to caregivers and then analyzed continuously by healthcare experts to perform proper evaluation and assess sudden changes in the health status of the patient.

Apart from health monitoring systems developed in laboratory environments, some commercially available solutions also exist. Those solutions have a broader scope in healthcare management but are usually less integrated with the home. To mention a few, Genesis by Honeywell [34], TeleStation by Philips [35] and HealthBuddy by Bosch [36] fall into this category.

2.3.2. Daily Activities Monitoring, Prediction and Reminding

Patterns obtained from daily routines can be very useful for helping elderly people who suffer from any kind of cognitive decline. Reasoning over these patterns makes it possible to predict actions and to provide reminders of the things that should be done (for instance, guidance when the person does not remember the steps to follow to complete an activity). Many elderly people who live alone also suffer from other age-related diseases such as depression and diabetes, and the activities of these patients can be influenced by their diseases. For example, patients with depression may leave the house infrequently, talk less, sleep poorly and eat less, whereas patients with diabetes may drink, eat, sleep and use the toilet more frequently. Being capable of detecting these patterns can help in diagnosing these illnesses.

In the literature, different approaches to activity detection can be found: detection of activities as the main objective, reminding inhabitants of tasks, detection of changes in routines as signs of possible risks, or diagnosis of specific diseases based on activity patterns. Some significant examples of each of these approaches are discussed in the following.

Chernbumroong et al. [37] proposed a practical multi-sensor activity recognition system for monitoring daily life activities, including brushing, exercising, feeding, ironing, reading, scrubbing, walking, walking on stairs, washing, watching and wiping by using seven types of sensors attached to the body.

Lara et al. [38] proposed Centinela, a system that continuously monitors five activities (walking, running, sitting, ascending and descending) by using a single sensing device and a mobile phone. The system consists of a portable and unobtrusive data collection platform to provide real-time human activity monitoring. The mobile application they implemented is based on acceleration and physiological signals to recognize physical activities automatically.

Chaminda et al. [39] proposed a smart reminder system that reminds older adults with memory impairment of forgotten complex activities in a smart home environment. Reminders of forgotten activities are predicted according to the inhabitant’s current location, current behavior patterns and past activity patterns. Wearable sensors are used to acquire data to generate these activity patterns. A similar system is proposed in [40]. Suryadevara et al. [41] developed an intelligent home monitoring system to detect behavior changes and forecast the behavior of elderly people.

Han et al. [42] proposed a four-layer healthcare framework to predict the risk of depression and diabetes by monitoring long-term disease-related activity and generating long-term activity patterns. When the symptoms of these diseases appear in people with irregular activity patterns, the information is sent to doctors and caregivers for early detection and prevention of depression and diabetes.

2.3.3. Detection of Anomalous Situations

Anomaly detection aims to identify uncommon patterns in a dataset, that is, patterns which do not conform to the notion of normal behavior [43]. To detect them, techniques based on rule-based approaches, temporal relation discovery approaches and similarity-based approaches may be used. The identification of anomaly patterns, especially in daily activities monitoring systems, can be significantly valuable for experts to make decisions or diagnoses in emergency situations. It can also be used to provide elderly people with memory impairments with reminders or audio signals if an anomalous behavior is being detected.

Fall detection applications are an important subset of the detection of anomalous situations. Older adults are more prone to falls, and the consequences are in general more serious for them than for younger people. This is why a good number of proposals focus specifically on fall detection for the elderly, as an important issue in facilitating their independence at home.

In [44], Phua et al. proposed an Erroneous-Plan Recognition (EPR) system to detect faults in the daily activities of elderly people with dementia, by using sensors deployed in the SH to monitor daily activities. When an error is detected, timely audio or visual prompts are sent to the dementia patients to compensate for some of their diminished memory and enhance their problem-solving abilities. Similar works on anomaly detection for dementia patients can be found in [45,46,47].

Ordóñez et al. [48] developed an automated behavior analysis system for elderly people who live alone at home. It captures measurements from various sensors, detects the activities of each user, and is capable of detecting anomalous behavior that reflects changes in health status, by learning standard behavior patterns. Gjoreski et al. [49] proposed a system to monitor users’ daily activity by combining two accelerometers and an electrocardiogram (ECG) sensor. Measured acceleration data can be analyzed in conjunction with the ECG signals to detect anomalies in the user’s behavior and heart-related problems.

Regarding fall detection systems, Wu and Xue [50] developed a portable pre-impact fall detection and injury prevention system with inertial sensors. By using the inertial frame velocity profile of the body, they distinguish falls from non-fall activities as well as identify early falls by using a threshold detection algorithm. The designed pre-impact detection device is embedded into a wearable belt that can be worn by the older adult when they are performing daily activities. This system has been proved to increase the confidence of independent living for elderly people.
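The threshold idea can be illustrated with a minimal Python sketch. It assumes that a vertical velocity profile has already been estimated from the inertial sensors; the threshold value, the sampling period and the function name are hypothetical choices made for this example and are not the parameters or the algorithm reported in [50].

```python
from typing import Optional, Sequence


def first_threshold_crossing(
    vertical_velocity: Sequence[float],
    threshold: float = -1.3,      # m/s, illustrative value only
    sample_period: float = 0.01,  # s, assumes a 100 Hz sensor
) -> Optional[float]:
    """Return the time (s) of the first sample whose downward velocity
    reaches the threshold, or None if no sample does."""
    for index, velocity in enumerate(vertical_velocity):
        if velocity <= threshold:
            return index * sample_period
    return None


# Synthetic velocity profiles (m/s, negative means downward).
walking = [0.0, -0.2, 0.1, -0.3, 0.0]
falling = [0.0, -0.4, -0.9, -1.5, -2.4]

walking_alarm = first_threshold_crossing(walking)  # no crossing
falling_alarm = first_threshold_crossing(falling)  # crossing at the fourth sample
```

Reporting the first crossing time, rather than a yes/no flag, reflects the pre-impact goal: the earlier the threshold is reached before ground contact, the more time an injury prevention mechanism has to react.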

Rimminen et al. [51] also proposed a fall detection system, using a near-field imaging floor sensor and pattern recognition. The shape, size and magnitude of the patterns are used for classification, and the results show good performance on fall detection even for unclear falls (ending up sitting or on one’s knees) and a good tolerance of daily activities. Zhuang et al. [52] described a fall detection system that distinguishes the sound of falls from other noise in a smart home environment. Their system uses only a far-field microphone to identify various sounds. Each fall or noise segment is then modelled by a Gaussian Mixture Model (GMM) supervector, with the Euclidean distance used to measure the pairwise difference between audio segments. A Support Vector Machine built on a GMM supervector kernel is used to classify audio segments into falls and various types of noise.

More generally, although also usable for detecting falls, Ghasemzadeh et al. [53] introduced a physiological monitoring system which collects acceleration and muscle activity signals to assess standing balance. They use machine learning algorithms and statistical techniques to infer relevant information from the correspondence between the accelerometer and electromyogram (EMG) sensor measurements.

2.4. Human Factors

Few applications and systems are as sensitive to human factors as the ones with which this paper deals. Generally speaking, the consideration of human factors in technological systems consists in integrating, from the very early stages of the design, the specific capabilities, limitations and preferences of the human beings that are going to be involved with the application. In the case of Smart Home applications related to the elderly (or any other population group), acceptability is probably the most important factor to consider, not only for the older people themselves but also for their caregivers. Some authors have identified a friendly design which includes these human factors as an important element to improve the acceptance of the deployed solutions [54].

Design patterns are design solutions that have proven to be effective in some specific area or for a specific set of functionalities. Upon completion of this survey we have not found in the literature any design pattern oriented to encourage the acceptance of solutions for SH. However, users’ acceptance of solutions should be a prerequisite for proper implementation. In fact, according to Gaul et al. [55], the level of acceptance of technological solutions in the medical field is low, especially in the case of SH solutions oriented to the elderly. This same paper states that existing solutions are technically viable but lack a proper study of human factors, leading to acceptability problems. There are generic guidelines that establish design principles for applying human factors in sensitive areas such as telecare [56]. However, these guidelines do not provide design aids to develop solutions aligned with the factors described.

Even if general design patterns related to human factors in SH have not yet been proposed, it is fair to mention that some authors have incorporated these issues into their software architectures to a greater or lesser extent. For instance, Becker [57] performed a detailed review of the quality requirements that AAL environments must comply with, as well as their related technologies and architectural models. The conceptualization found in this paper is useful when dealing with the software architectural requirements, although it is not easily transferable to a low-level design made by developers. For their part, Vega et al. [58] identified the main challenges in the development of services based on smart environments (considered as a generalization of SH) and proposed an architecture to deal with these. The proposed architecture is based on the model-view-controller paradigm commonly used in programming. Unlike other approaches, the core functionality is fully specified in the documents exchanged between the entities of the architecture, called Document-Contracts. This architecture facilitates the development, maintenance and creation of new solutions for non-technical users because the behavior of the system is guided by the definition of these Document-Contracts in a high-level language. It can also be adapted to the specific technical conditions of each home without altering the basic functionality of activities. However, the authors of [58] do not specify any design pattern to be used in the definition of the activities.

3. Conceptualization and Formalization of Activities in Smart Home

A clear understanding of the target activities in SH and their specific characteristics is critical for proper sensor selection and system design. In addition, accurate knowledge of the activities’ context, such as location and involved objects, may enhance the effectiveness of SH systems if it is included in the activity conceptualization. In this section we first describe a taxonomy of SH activities in a manner that is meaningful for our target population (the elderly). This leads to a proposal for the formalization of activities, including their relationships with their context as well as with other activities. We then go into deeper detail on the specific techniques and languages that have been proposed in the literature for describing contextual information in a formalized manner. Organized in this way, the section presents information that grows in specificity and technical detail with each sub-section, paving the way for Section 4, which addresses the sensors capable of capturing the relevant information.

3.1. Taxonomy of Activities

In this sub-section, we propose a taxonomy applicable to activities that are related to healthcare, wellbeing and independence in SH environments, with special emphasis on the elderly population. This sub-section contains general, high-level information intended to be meaningful and understandable to personnel who are expert in interpreting the medical and care implications of specific activities (e.g., doctors) but not in the low-level technical aspects of the SH deployment.

On the one hand, Activities of Daily Living (ADLs) are defined by the medical community as “the things we normally do in daily living including any daily activity we perform for self-care such as feeding ourselves, bathing, dressing, grooming work, homemaking and leisure” [59]. In smart homes, ADL is used as an umbrella term encompassing the self-care and domestic activities or tasks that the user undertakes routinely. The capacity to perform ADLs at home without assistance from other people can be considered a reference for estimating the level of independent living of older adults [60]. Katz and Lawton define a range of ADLs [61] and Instrumental ADLs (IADLs) [62]. According to their definitions, ADLs can be subdivided into personal self-care activities, or basic ADLs (BADLs), and domestic activities, or instrumental ADLs (IADLs). BADLs (a concept commonly used in current literature related to SH, see for instance [63]) refer to the necessary self-care activities, such as bathing, dressing, eating and drinking, functional mobility, using the toilet and grooming. IADLs are activities that are not strictly necessary but let an individual live independently in a community, for instance shopping, housekeeping, managing money, preparing food, taking medication, using the telephone and using transportation.

On the other hand, ambulatory activities are related to either specific motions or postures of the person. These activities can be subdivided into stationary activities, transitional activities and dynamic activities. Stationary activities describe a posture such as sitting, standing or lying. Transitional activities refer to the change from a stationary state to a dynamic state or vice versa, such as sit-to-stand, stand-to-sit, stand-to-walk and sit-to-lie. Dynamic activities include a set of simple dynamic actions such as walking, running, cycling and jogging. Ambulatory activity monitoring is very useful for detecting the physical activity level, promoting health (e.g., doing exercise) and detecting hazardous situations such as falling. Additionally, ambulatory activities can be useful for estimating the psychological wellbeing of older adults. For example, Tartarisco et al. [64] proposed a system for prolonged stress monitoring during normal activity performance in a smart home environment. They evaluated the individual immediate stress level by analyzing both the ambulatory activity frequency and the heart rate when the individual performs these ambulatory activities.

The classification and detailed description of the activities that are significant for the elderly well-being and independent living in SH is included in Table 1.

Table 1.

Taxonomy of activities significant for the older adults in SH.

| Type | Activity | Description of How the Activity Relates to the Elderly Independent Living |
|---|---|---|
| Basic ADLs | Bathing | Performs sponge bathing, tub bathing or showering without assistance |
| | Brushing teeth | Brushes one’s teeth without assistance (including the use of toothbrush and toothpaste) |
| | Dressing | Puts on and off clothes and shoes without assistance (except for tying shoes) |
| | Using toilet | Goes to toilet, uses it, dresses and returns without assistance (may use cane or walker) |
| | Eating and drinking | Feeds oneself and drinks without assistance (including the use of cutlery) |
| | Sleeping | Sleeps on a bed in the bedroom without assistance |
| Instrumental ADLs | Preparing meals | Chooses material and food in the kitchen, prepares meals autonomously without assistance |
| | Preparing drinks | Chooses the type of drinks, prepares drinks with sugar or milk |
| | Resting | Reads a book, listens to music, operates and watches TV without assistance during leisure time |
| | Housekeeping | Keeps house clean (sweeps floor with broom, washes dishes and glasses in kitchen, etc.) and does housework (such as ironing) without assistance |
| | Using a telephone | Picks up the telephone, dials the number, has a conversation or answers a call without assistance |
| | Taking medicine | Takes the prescribed medicines appropriately and timely without assistance |
| Ambulatory Activities | Walking | Walks from one place to another, walks up or down the stairs without assistance |
| | Doing exercise | Does exercise such as running and cycling without assistance |
| | Transitional activities | Performs transitional movements (such as sit-to-stand, sit-to-lie, stand-to-sit, lie-to-sit) in and out of bed or chair without assistance |
| | Stationary activities | Sits on the sofa, stands for a period of time (may use cane or walker), lies in bed or on the sofa |

To compile the information contained in Table 1 we have taken into account the contributions of the works referenced above. This taxonomy establishes a first basis for the definition of activities, aimed at decoupling the specification of sensors and processing techniques from the high-level description of the final service. Depending on the specific goals of the application, some activities will be more relevant to detect than others. For instance, a healthcare application for elderly people may be concerned with the user’s movements and fall detection, whereas an assistance application for elderly people with cognitive decline would focus more on the sequences of activities that the user performs.

In general, in smart home applications it is critical to detect the boundaries of an activity and then identify what the concrete activity carried out is. This can be achieved with a proper characterization of the activities. As a first step towards this, we propose the details of a conceptualization of activities and their context in the following sections.

3.2. Activity Conceptualization

Once a first taxonomy has been described, the next step for the characterization of activities is related to their conceptualization. The conceptualization process allows a first characterization of the activities in terms that can be later formalized to be understandable by computers. Thus, it is a step forward towards a completely detailed and automated treatment of activities.

Since an activity is usually related to other activities, the first classification we use for the conceptualization deals with the relationships among different activities. These relationships can be mainly classified into the following categories, depending on the interactions that take place during the activity timespan:

Specialization of activities: Activities can be categorized at multiple granularity levels. In [65], the authors distinguish ADLs in the smart home from actions, in order to define human behavior at different complexity levels and durations. An action is an atomic activity that is performed by a single subject and lasts for a relatively short time. Some examples of actions are “open the door”, “turn on the light” and “go to bed”. An ADL is usually defined as a more complex behavior that is performed by either a single user or multiple users and lasts longer than an action. Furthermore, a so-called coarse-grained activity may be specialized into two or more fine-grained activities. For example, “preparing drinks” may have as its child activities “preparing hot drink” and “preparing cold drink”, whereas “preparing hot drink” can be further broken down into its child activities “preparing tea”, “preparing hot milk” and “preparing coffee”. Meditskos et al. [66] defined the specialization of activities differently. As an example, in their activity pattern, the activity “night sleep” is defined as the overall night sleep activity of a person and the activity “out of bed” is detected when the person gets out of bed. With the addition of context description and activity type interpretation, an “out of bed” activity can be further specialized as a “bed exit” activity, which refers to an “out of bed” activity that occurs during the “night sleep”.

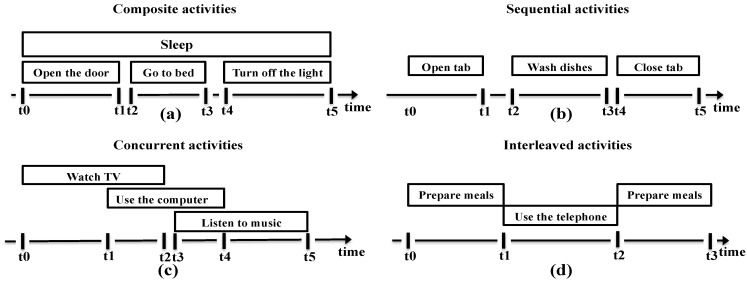

Composition of activities: Most complex ADLs are composed of an ordered succession of simpler activities. For instance, “sleeping” may consist of “opening the door”, “going to bed” and “turning off the light” (as shown in Figure 2). The ordering of the simple activities may depend on an individual’s preferences or habits, thus leading to several variants of an activity. Furthermore, activities may have time-related connections to each other that form a composite activity. In this respect we may distinguish three situations: sequential activities, concurrent activities and interleaved activities. Figure 2 shows these temporal relationships graphically.

Figure 2.

Diagrams showing different relationships between activities: (a) composite activities; (b) sequential activities; (c) concurrent activities; (d) interleaved activities.

In terms of time intervals, the so-called Allen relations [67] are commonly used to describe temporal links between activities: (1) Sequential activities are described by associating their time intervals using the before/after and meet/meetby relations. The before/after relation holds when one activity is performed either before or after another activity and the two associated time intervals have a gap between them. For example, “opening the tap” occurs before “washing dishes” and “closing the tap” occurs after “washing dishes”. In contrast, the meet/meetby relation indicates that the two associated time intervals do not have a gap between them; (2) Concurrent activities occur at the same time, thus they share the same time intervals either fully or partially. There are nine options in this case: the overlaps/overlappedby relation indicates that the two intervals share a common sub-interval and one interval starts or finishes before the other; the contains/during relation holds when the interval of a composite activity encloses the interval of one of its composing activities; the starts/startedby and finishes/finishedby relations cover the case of an activity that starts or finishes together with another activity that has a longer time interval; the equals relation indicates that two activities occur in parallel and thus their start and finish times coincide [51]. Concurrent activities take place either when one user performs two different activities simultaneously or when multiple users perform activities at the same time. For example, one user can perform “watching TV” and “using the computer” at the same time, or two users can perform “drinking tea” together; (3) Interleaved activities have time intervals that “preempt” each other: a long and complex activity has a long time interval that contains the shorter interval of another activity. The during/contains relation is also used to describe interleaved activities, but with a different meaning from the concurrent case, since here the two interleaved activities are not part of the same coarse-grained activity. For example, the user may use the telephone or drink water at intervals that occur during the activity “prepare meals”.
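As an illustration of the temporal relations just described, the following minimal Python sketch classifies the relation between two activity intervals. The `Interval` class, the function name `allen_relation` and the numeric timestamps are assumptions made for this example; only the relation names follow the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    """Time span of an activity (hypothetical representation, any numeric unit)."""
    start: float
    end: float

    def __post_init__(self):
        if not self.start < self.end:
            raise ValueError("an interval needs start < end")


def allen_relation(a: Interval, b: Interval) -> str:
    """Return the name of the Allen relation that holds from a to b."""
    # Sequential cases: the intervals do not share any sub-interval.
    if a.end < b.start:
        return "before"
    if b.end < a.start:
        return "after"
    if a.end == b.start:
        return "meet"
    if b.end == a.start:
        return "meetby"
    # Concurrent cases: the intervals share time fully or partially.
    if a.start == b.start and a.end == b.end:
        return "equals"
    if a.start == b.start:
        return "starts" if a.end < b.end else "startedby"
    if a.end == b.end:
        return "finishes" if a.start > b.start else "finishedby"
    if b.start < a.start and a.end < b.end:
        return "during"
    if a.start < b.start and b.end < a.end:
        return "contains"
    # Only partial overlap remains at this point.
    return "overlaps" if a.start < b.start else "overlappedby"


# "opening the tap" (0-5) happens before "washing dishes" (10-30).
print(allen_relation(Interval(0, 5), Interval(10, 30)))
# "prepare meals" (0-40) contains a short telephone call (10-15).
print(allen_relation(Interval(0, 40), Interval(10, 15)))
```

Note that the interval arithmetic alone cannot tell a composite activity from an interleaved one: both yield contains/during, and the distinction depends on whether the two activities belong to the same coarse-grained activity.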

Besides the relationships between activities, understanding the context information of an activity is also critical in activity monitoring. Within a smart home environment, many types of contextual information can be used to characterize an activity, such as interaction with objects, location and time. Object and location are concepts widely used to describe and distinguish activities. Object refers to a relevant participant in an activity, either a person or a physical object. For example, if the TV set is the object, it can be inferred that we are dealing with the “watching TV” activity, whereas having the broom as the object suggests the “sweeping the floor” activity.

Location is the specific place where an activity occurs; for example, “preparing meals” takes place in the kitchen and “taking a shower” occurs in the bathroom. Moreover, the location can help to discard objects that are outside the target place. Time is another key characteristic for activity description: the user may perform an activity at different times, but some activities only occur at specific times of the day. For example, the activity “preparing breakfast” usually occurs in the morning, “preparing dinner” usually occurs in the evening and “taking a shower” normally occurs once or twice a day: after waking up and/or before going to bed.

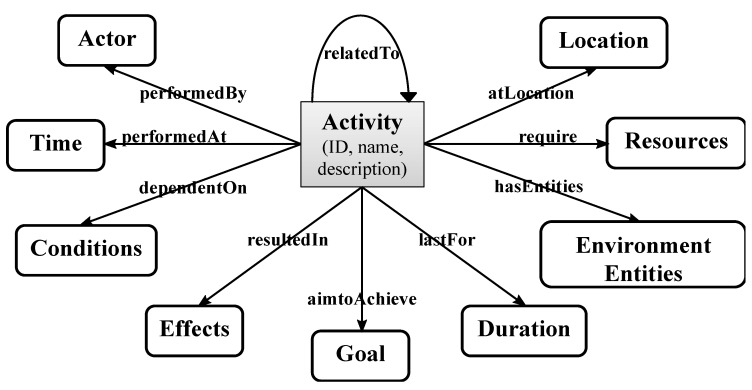

Other context information useful for activity characterization includes human posture, temperature, humidity and speed. Wongpatikaseree et al. [68] used “human posture” to help improve the accuracy of the definition of ADLs in the smart home environment. For example, most current intelligent activity monitoring systems detect “sleeping” when bed pressure sensors are triggered. However, there is considerable uncertainty in “sleeping” recognition defined this way, since the sensors may be triggered by the user while sleeping but also while sitting on the bed. As a consequence, human postures such as “standing”, “sitting” and “lying down” can help to recognize the activity more accurately. Figure 3 (based on [69] and modified by the authors of this paper) depicts the conceptual description of activity characterization in the smart home.

Figure 3.

Conceptual description of an activity characterization (based on [69]).

Apart from the activity ID, name and textual description, an activity can be described by a number of properties. A property of an activity is specified with its domain and range. This way, the activity can be related to other activities or conceptual entities. Some properties, such as time, object and location, represent the context information within which the activity takes place (note that in Figure 3 “object” is split into physical objects, which are called “Resources”, and living objects, called “Actors”). Properties like conditions and effects represent the causal and functional relationships, which are used for inference during activity-level reasoning.
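The conceptual description above can be sketched as a simple data structure. The following Python example is only illustrative: the class `Activity`, its field names and the `parent` link (used here to express specialization) are assumptions made for this sketch and are not taken from Figure 3 or from [69].

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Activity:
    """Hypothetical record mirroring the properties named in the text."""
    activity_id: str
    name: str
    description: str = ""
    # Context properties
    location: Optional[str] = None
    time_of_day: Optional[str] = None
    resources: List[str] = field(default_factory=list)  # physical objects
    actors: List[str] = field(default_factory=list)     # living participants
    # Causal and functional properties used for reasoning
    conditions: List[str] = field(default_factory=list)
    effects: List[str] = field(default_factory=list)
    # Link to the coarser-grained activity this one specializes (assumption)
    parent: Optional["Activity"] = None

    def lineage(self) -> List[str]:
        """Names from this activity up to its most coarse-grained ancestor."""
        names: List[str] = []
        node: Optional["Activity"] = self
        while node is not None:
            names.append(node.name)
            node = node.parent
        return names


drinks = Activity("A1", "preparing drinks", location="kitchen")
hot_drink = Activity("A2", "preparing hot drink", location="kitchen", parent=drinks)
tea = Activity(
    "A3",
    "preparing tea",
    location="kitchen",
    resources=["kettle", "mug", "tea bag"],
    actors=["resident"],
    conditions=["kettle is available"],
    effects=["tea is ready"],
    parent=hot_drink,
)
print(tea.lineage())
```

Keeping the specialization link on the child keeps each record self-contained, at the cost of needing a traversal to list all children of a coarse-grained activity.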

3.3. Activity Context Representation Formalization

A standard formalization of the contextual information defined in the conceptualization process is a key step towards effective designs and implementations of smart home infrastructures and services. Usually the contextual information related to an activity is not formalized in a standard way, due to the heterogeneity of sensor records from different sources. Table 2 shows an example of the differences between three datasets collected from real-world smart home environments ([70,71,72]). This heterogeneity hinders interoperability: each context representation needs its own processing approach before further reasoning and analysis can be carried out, which makes data exchange and reuse within the smart home community inefficient. A common context formalization forms the basis for context management and facilitates expressive representation, semantic sharing and interoperability of heterogeneous computational entities. In the following, we present the major formalisms for activity context representation found in current literature (summarized in Table 3). In this sub-section we follow a bottom-up approach, in the sense that we start with the most concrete and straightforward structures (key-value pairs) and end with the most powerful and complex models (ontologies).

Table 2.

Differences in the format of three datasets collected from real world smart home environments (based on information in [70,71,72]).

| References | Sensor ID Coding | Timestamp Coding | Sensor Value Representation | Other Information |
|---|---|---|---|---|
| Ye et al. [70] (based on [71]) | Name indicating the location or the object to which the sensor is attached | Date and time in a single feature | ON/OFF (activation or deactivation) | Activity information in the form of annotations (optional) |
| Cook et al. [71] | Number | Date and time in a single feature | ON/OFF (activation or deactivation) | |
| Tapia et al. [72] | Number or name (e.g., “PDA”) | Two timestamps corresponding to the activation and deactivation times. An additional feature shows the difference between them in seconds | Implicit in the activation (OFF to ON) and deactivation (ON to OFF) timestamps | Contextual information (optional): room and object type to which the sensor is attached |
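To make the consequence of this heterogeneity concrete, the following Python sketch converts two record styles into one common event format. The dictionary keys (`sensor`, `datetime`, `value`, `activated`, `deactivated`) and the sample values are hypothetical; they only mimic the two timestamp conventions summarized in Table 2 and do not reproduce the actual file formats of [70,71,72].

```python
from datetime import datetime
from typing import Dict, List, NamedTuple


class SensorEvent(NamedTuple):
    """Common target format (an assumption of this sketch)."""
    sensor_id: str
    timestamp: datetime
    state: str  # "ON" or "OFF"


def from_single_timestamp(record: Dict[str, str]) -> List[SensorEvent]:
    """One record = one ON/OFF reading with date and time in a single field."""
    ts = datetime.fromisoformat(record["datetime"])
    return [SensorEvent(str(record["sensor"]), ts, record["value"].upper())]


def from_activation_pair(record: Dict[str, str]) -> List[SensorEvent]:
    """One record = activation and deactivation times; the state is implicit."""
    sensor_id = str(record["sensor"])
    on = datetime.fromisoformat(record["activated"])
    off = datetime.fromisoformat(record["deactivated"])
    return [SensorEvent(sensor_id, on, "ON"), SensorEvent(sensor_id, off, "OFF")]


def merge(*event_lists: List[SensorEvent]) -> List[SensorEvent]:
    """Merge events from several sources into one chronological stream."""
    merged = [event for events in event_lists for event in events]
    return sorted(merged, key=lambda event: event.timestamp)


stream = merge(
    from_single_timestamp(
        {"sensor": "12", "datetime": "2015-05-14 07:30:10", "value": "on"}
    ),
    from_activation_pair(
        {
            "sensor": "PDA",
            "activated": "2015-05-14 07:30:00",
            "deactivated": "2015-05-14 07:30:45",
        }
    ),
)
for event in stream:
    print(event.sensor_id, event.timestamp.isoformat(), event.state)
```

Each source needs its own adapter, which is precisely the per-dataset effort that a shared context formalization is meant to remove.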

Table 3.

Context representation: options for its formalization.

| Type | References | Advantages | Disadvantages |
|---|---|---|---|
| Key-value modelling | Aiello et al. [73]; CC/PP [74] | Key-value pairs are easy to manage | Limited capacities in capturing sophisticated context types |
| Markup scheme modelling | McDonald et al. [75]; Gonçalves et al. [76] | Allow defining interaction patterns | Lack of design criteria, only available in a limited scale |
| Graphical modelling | Rialle et al. [77]; Henricksen et al. [78] | More comprehensive support for capturing imperfect and historical information | Flat information model, limited in supporting interoperability within context-aware systems |
| Object-oriented modelling | Zhang et al. [80] | Good performance in object related activity context representation | Limitation of interoperability |
| Logic-based modelling | Bouchard et al. [81]; Chen et al. [82] | Clear and elegant semantics in describing contextual information | Unable to represent uncertain context and inflexibility to represent user’s habits |
| Ontology-based modelling | Okeyo et al. [65]; Ye et al. [84]; Perich et al. [83]; Chen et al. [85] | Represent context in terms of heterogeneity, interoperability, and usability with user friendly interface | Require well-built knowledge engineering skills, limited ability in dealing with uncertain and changing context |

The earliest approaches to contextual information modelling found in the literature are key-value modelling and markup scheme modelling. Key-value modelling is based on the simplest data structure for describing an activity, using flexible units of sensor data representation. A list of simple key-value pairs defines the set of attributes and their corresponding values as a single record. The key-value modelling approach is frequently adopted in distributed service system architectures (e.g., MAPS [73]), in which the services are described by a list of attributes. Composite Capability/Preference Profile (CC/PP) [74] is the first W3C standard context modelling approach based on a key-value formalism. This specification uses the Resource Description Framework (RDF) language and includes elementary constraints and relationships between context types. The main strength of key-value modelling is that it provides contextual information in a format that is easy to manage. However, it is not expressive enough to capture more sophisticated context data such as relationships, dependencies, time stamping and quality of the context information. Another major limitation is its lack of support for reasoning on context uncertainty or higher context abstractions.
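A minimal Python sketch of this idea is given below. The attribute names and values are invented for illustration, and the `select` helper is an assumption of this example; it does not reproduce MAPS [73] or CC/PP [74].

```python
from typing import Dict, List

# A context record is a flat mapping from attribute names to values.
ContextRecord = Dict[str, str]

records: List[ContextRecord] = [
    {"sensor_id": "kitchen_tap", "location": "kitchen", "value": "ON"},
    {"sensor_id": "bed_pressure", "location": "bedroom", "value": "OFF"},
    {"sensor_id": "fridge_door", "location": "kitchen", "value": "OFF"},
]


def select(items: List[ContextRecord], **required: str) -> List[ContextRecord]:
    """Return the records whose attributes match every required key-value pair."""
    return [
        item
        for item in items
        if all(item.get(key) == value for key, value in required.items())
    ]


# Exact attribute matching is straightforward...
print(select(records, location="kitchen", value="ON"))
# ...but nothing in the flat records can state that the tap and the fridge
# belong to the same activity, or how reliable each reading is.
```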

Markup scheme modelling uses markup languages, including XML, to define hierarchical data structures in which the hierarchy is expressed by means of markup tags with attributes and content. For example, McDonald et al. [75] proposed homeML, an XML-based format, as a solution to the heterogeneous nature of data records, in order to support data exchange and storage within smart home environments. They have also developed the homeML suite as an online tool to support data exchange and reuse. Gonçalves et al. [76] proposed ecgAWARE, an XML-based ECG markup language that standardizes the ambulatory electrocardiogram data format to support the storage and transmission of ECG data, with the aim of telemonitoring patients’ hearts during their daily activities. Markup scheme modelling approaches make it possible to define interaction patterns and dependencies that take contextual information into account, but they suffer from scalability and interoperability problems due to the lack of common design criteria, which are only available at very limited scales.
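The following Python sketch shows what a hierarchical markup representation of a sensor event might look like. The element and attribute names (`home`, `room`, `device`, `event`) are invented for this illustration and are not the homeML [75] or ecgAWARE [76] schemas.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple


def build_event_xml(room: str, device: str, timestamp: str, value: str) -> str:
    """Serialize one sensor event as a small hierarchical XML document."""
    home = ET.Element("home")
    room_el = ET.SubElement(home, "room", name=room)
    device_el = ET.SubElement(room_el, "device", id=device)
    event_el = ET.SubElement(device_el, "event", timestamp=timestamp)
    event_el.text = value
    return ET.tostring(home, encoding="unicode")


def read_events(xml_text: str) -> List[Tuple[str, str, str, str]]:
    """Recover (room, device, timestamp, value) tuples from the document."""
    root = ET.fromstring(xml_text)
    events = []
    for room_el in root.findall("room"):
        for device_el in room_el.findall("device"):
            for event_el in device_el.findall("event"):
                events.append(
                    (
                        room_el.get("name"),
                        device_el.get("id"),
                        event_el.get("timestamp"),
                        event_el.text,
                    )
                )
    return events


document = build_event_xml("kitchen", "tap_01", "2015-05-14T07:30:00", "ON")
print(document)
print(read_events(document))
```

The nesting carries context (which room, which device) that a flat key-value record would have to repeat in every entry, but two producers only interoperate if they agree on the tag names beforehand.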

Graphical modelling relies on a diagrammatic representation of contextual information at the design phase. Unified Modelling Language (UML) and Object-Role Modelling (ORM) are commonly used graphical approaches to represent activity contextual information. Rialle et al. [77] used a UML context model to standardize the representation of telemonitoring systems and to present the components of the system. They developed two types of UML models: the static model, which describes the agents along with their structural relationships, and the dynamic model, which describes the dynamic relationships between agents. Contextual information derived from sensor resources, such as profile preferences and location information, is used to identify scenarios. UML models show advantages in capturing information about the static structure and dynamic behaviour of a system, but have limitations in providing precise semantics and supporting reasoning on human behaviours. Henricksen et al. [78] developed the Context Modelling Language (CML), based on ORM, to allow fact types to be categorized. CML provides graphical notations for different types of contextual information to support the analysis and formal specification of context requirements, the representation of interrelationships, grammar support for runtime querying and high-level context abstraction. The CML model also has extended abilities, including capturing different classifications of facts, quality metadata, conflicting assertions (such as conflicting locations from multiple sensors), dependencies among various fact types and histories of the fact types. However, the CML model defines a flat information model in which all context types are uniformly represented as atomic facts, and is therefore not appropriate for representing a hierarchical structure. Additionally, it emphasizes the development of context representations appropriate for a particular dominant application, and does not facilitate interoperability with other context-aware systems.

Object-oriented modelling uses a set of object-oriented programming principles, including abstraction, inheritance, polymorphism, composition and aggregation, to represent context information. This approach essentially makes it possible to translate between the activity name and the objects involved. For example, the activity “make coffee” is usually related to the objects “mug” and “milk”. In object-oriented models, entities have related context items as attributes or sub-objects. An entity is defined as “a person, place or objects that is considered relevant to the interaction between a user and an application, including the user and applications themselves” [79]. Zhang et al. [80] adopted a general object-oriented context model to be used in a context-aware smart home system. In their context model, the information is structured around a set of entities, each describing a physical or conceptual object such as a person or an activity. These contextual entities are linked to their attributes and to other entities by relationships. For example, a subject (e.g., Ray), which is a contextual entity, may be related to an object (e.g., BedRoom), which is a contextual entity or a datatype value, by means of a verb (e.g., locatedIn) that describes an attribute of the subject or the relationship between the subject and the object. Object-oriented models take advantage of object-oriented features to encapsulate context processing and representation behind well-defined interfaces. Their drawbacks include limited interoperability and the need for further work on the context semantics to be used by services.
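The subject-verb-object structure described above can be sketched in Python as follows. The class names and the methods `relate` and `objects_of` are assumptions made for this illustration; they are not the API of the model in [80]. Only the example names (Ray, BedRoom, locatedIn) come from the text.

```python
from typing import Dict, List, Union


class ContextEntity:
    """A physical or conceptual object such as a person, place or activity."""

    def __init__(self, name: str) -> None:
        self.name = name
        # verb -> list of objects (other entities or plain datatype values)
        self._facts: Dict[str, List[Union["ContextEntity", str]]] = {}

    def relate(self, verb: str, obj: Union["ContextEntity", str]) -> None:
        """Record the statement (self, verb, obj)."""
        self._facts.setdefault(verb, []).append(obj)

    def objects_of(self, verb: str) -> List[Union["ContextEntity", str]]:
        """Return every object linked to this entity through the given verb."""
        return list(self._facts.get(verb, []))


class Person(ContextEntity):
    """Entity subtype for residents and visitors."""


class Location(ContextEntity):
    """Entity subtype for rooms and areas of the home."""


ray = Person("Ray")
bedroom = Location("BedRoom")
ray.relate("locatedIn", bedroom)   # object is another contextual entity
ray.relate("hasPosture", "lying")  # object is a datatype value

print([place.name for place in ray.objects_of("locatedIn")])
print(ray.objects_of("hasPosture"))
```

Encapsulating the facts behind `relate` and `objects_of` hides the storage details from services, but another system can only reuse the context if it shares these class definitions, which is the interoperability limitation noted above.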

Logic-based modelling mainly focuses on adding context as logical facts and extracting contextual information by using rules. Bouchard et al. [81] proposed a logical framework to represent the contextual information gathered in a smart home using a variety of sensors. Their approach is based on description logic (DL) to overcome the problem of intra-dependencies among context items. DL is used to formalize actions, entities and variable states in smart home environments by creating a hierarchy of contextual information for the specific domain. The logical model consists of a set of conceptual and assertive objects that define the current state of the environment. When new inputs are received from hardware sensors, the agent updates the state of the environment and creates an action structure representing the changes that happened to the environment. Another logic-based approach relies on event calculus (EC), a highly developed logical theory of actions that is able to describe dynamically changing environment states in sorted first-order logic. In addition, it is capable of incorporating a temporal dimension into the description. Chen et al. [82] proposed a formalized framework using EC as the representation approach for the specification of the smart home knowledge domain. EC completes the formalization of the domain by using fluents, events and predicates. Fluents represent any property related to the state of a smart home, events represent any change in a property state and predicates identify the relationships between fluents and events for further formalisation of the domain. In their EC-based framework, they model sensor activations as events and object states as fluents. Additionally, they develop a set of high-level logic-based models to represent compound activities, such that the occurrences and effects of compound activities can be formalised in the same way as those of primitive actions. This model has clear and elegant semantics for describing contextual information and could serve as a proper way to represent facts, expressions and rules in order to perform further inference and derive new facts from existing action observations. Nevertheless, its limitations include its inability to represent uncertainty in context information and its inflexibility in representing the activity habits of multiple users.
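The relationship between events and fluents can be illustrated with a deliberately simplified Python sketch. It is not the framework of Chen et al. [82] nor a full event calculus: the event and fluent names, the `INITIATES` and `TERMINATES` tables and the function `holds_at` are assumptions made for this example, which only mimics the basic idea that a fluent holds after an initiating event until a terminating event occurs.

```python
from typing import Dict, List, Tuple

# Domain knowledge (hypothetical): which sensor event starts or ends which fluent.
INITIATES: Dict[str, str] = {"tap_on": "tap_running", "bed_pressed": "bed_occupied"}
TERMINATES: Dict[str, str] = {"tap_off": "tap_running", "bed_released": "bed_occupied"}

# A narrative is a list of (time, event) observations.
Narrative = List[Tuple[int, str]]


def holds_at(fluent: str, t: int, narrative: Narrative) -> bool:
    """True if the fluent was initiated before t and not terminated since.

    Only events that happen strictly before t are taken into account,
    so an event at time t affects the fluent from t onwards, not at t.
    """
    state = False
    for time, event in sorted(narrative):
        if time >= t:
            break
        if INITIATES.get(event) == fluent:
            state = True
        elif TERMINATES.get(event) == fluent:
            state = False
    return state


observations: Narrative = [(1, "tap_on"), (5, "tap_off"), (20, "bed_pressed")]
print(holds_at("tap_running", 3, observations))
print(holds_at("tap_running", 6, observations))
print(holds_at("bed_occupied", 25, observations))
```

The sketch also makes the limitations mentioned above visible: each fluent is either true or false, with no room for sensor uncertainty, and the initiation and termination tables are fixed rather than adapted to each user's habits.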

Ontology modelling provides a semantic representation of the contextual information of activities. It is particularly interesting for this purpose since it provides a representation of concepts in a hierarchical manner that has been explicitly agreed upon. Ontology-based markup languages provide a portable specification together with reasoning mechanisms. The core elements of the formalism are concepts, instances and relationships. The Standard Ontology for Ubiquitous and Pervasive Application (SOUPA) [83] is one of the most comprehensive ontologies providing formal and well-structured semantics for context information programming. It contains representations of generic domain knowledge (such as agents, events, space and time) in different scenarios, as well as particular concepts in narrower domains (such as home and office environments). Ye et al. [84] proposed a reusable formal ontology model to describe the intelligent environment context. The context ontology model consists of four components: object, location, sensor and activity. The structural properties of the activities are represented by the other three concepts (object, location and sensor). Additionally, they specify two types of time conditions: occurring time and duration. For example, the activity “prepare breakfast” should occur in the morning (6 am to 12 noon) and the activity “take shower” should not last more than 5 min. Chen et al. [85] proposed a formal ADL ontology model to establish links between activities and contextual information through activity-based properties. They used the Web Ontology Language (OWL) for ontological modelling and representation. OWL is a formalized markup language theoretically based on the well-developed knowledge representation formalism of DL. It is primarily designed to represent contextual information about classes of objects and the inter-relationships between them. The main feature of their ontology model is that it can model domain knowledge at two levels of abstraction: the conceptual level, in which an activity class is described by a number of properties according to generic activity knowledge; and the specific level, in which the particular way a user performs an activity can be modelled as an instance. A similar work can be found in [65]. Ontology-based representation approaches show clear advantages for sharing context knowledge among different entities by using OWL formalisms. They also work well as a solution for capturing sensor information in terms of heterogeneity, interoperability and usability with user-friendly graphic tools (e.g., Protégé [86]). However, these domain ontology models currently have limitations that must be tackled: they require solid knowledge engineering skills; they do not support time-related reasoning; they are normally computationally expensive in context reasoning; and they have limited ability to deal with the uncertain and changing context of objects.
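The two time conditions mentioned above (occurring time and duration) can be illustrated outside any ontology language with a short Python sketch. The class `TimeConditions` and its field names are assumptions made for this example and do not reproduce the model of Ye et al. [84]; the morning window is taken here as 06:00 to 12:00, which is our reading of the example in the text.

```python
from dataclasses import dataclass
from datetime import datetime, time, timedelta
from typing import Optional


@dataclass
class TimeConditions:
    """Hypothetical time constraints attached to an activity class."""
    earliest: Optional[time] = None            # occurring time: lower bound
    latest: Optional[time] = None              # occurring time: upper bound
    max_duration: Optional[timedelta] = None   # duration constraint

    def satisfied_by(self, start: datetime, end: datetime) -> bool:
        """Check one observed occurrence (assumed not to cross midnight)."""
        if self.earliest is not None and start.time() < self.earliest:
            return False
        if self.latest is not None and end.time() > self.latest:
            return False
        if self.max_duration is not None and end - start > self.max_duration:
            return False
        return True


# Conceptual level: generic knowledge about the activity classes.
prepare_breakfast = TimeConditions(earliest=time(6, 0), latest=time(12, 0))
take_shower = TimeConditions(max_duration=timedelta(minutes=5))

# Specific level: individual occurrences observed for one user.
print(prepare_breakfast.satisfied_by(
    datetime(2015, 5, 14, 7, 30), datetime(2015, 5, 14, 7, 50)))
print(take_shower.satisfied_by(
    datetime(2015, 5, 14, 8, 0), datetime(2015, 5, 14, 8, 12)))
```

A violated condition does not necessarily mean that the activity was misrecognized; depending on the application it may instead be reported as an unusual occurrence of that activity.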

4. Sensors in the Smart Home

The concept of ubiquitous sensing arises in the smart home, in which a wide variety of sensors and devices integrated in everyday objects and home infrastructure are connected by network technologies in order to gather contextual information about human activities. In terms of sensor deployment, there are two main categories of activity monitoring approaches: the audio/visual-based approach and the sensor-based approach. The audio/visual-based approach involves audio and visual sensing devices, such as microphones and cameras, to monitor the inhabitants’ movements and environmental changes. For its part, the sensor-based approach relies on sensors embedded in the smart home environment or worn by the users. Environmental sensors are used to detect human activities related to specific objects or performed in specific areas, whereas wearable sensors are used for monitoring ambulatory activities and physiological signals. In this section, we summarize the sensors commonly used for monitoring different activities in recent research and analyse their advantages and disadvantages. The main characteristics of these sensors are summarized in Table 4.

Table 4.

Sensors in the smart home: summary of main characteristics relevant to activity detection.

| Sensor | Measurement | Data Format | Advantages | Disadvantages |
|---|---|---|---|---|
| Video cameras | Human actions/environmental state | Image, video | Precise information | Privacy issues, computational expense, acceptability issues |
| Microphones | Voice detection, other sounds | Audio | Certain and rich information about sound | Implementation difficulty and high computation cost, potential acceptability issues |
| Simple binary sensors | User-object interaction detection/movements and location identification | Categorical | Low-cost, low-maintenance, easy to install and replace, less privacy-sensitive, minimal computation requirements | Provide simple and limited information for composite and multi-user activity monitoring |
| RFID | Object and user identification | Categorical | Small size and low cost | Reader collision and tag collision, limited range |
| Temperature sensors/light sensors/humidity sensors | Environmental parameters | Time series | Intuitive monitoring of environment and object | Limited information for activity monitoring |
| Wearable inertial sensors | Acceleration/orientation | Time series | Compact size, low cost, non-intrusiveness, high accuracy, unique identification of users, user’s location easily tracked | Cumbersome and uncomfortable feeling, cannot provide sufficient context information |
| Wearable vital signs sensors | Vital signs | Analog signal | Sensitive to slight changes in vital signs, more accurate in detecting emergency situations | Reliability constraints, security issues and discomfort from long-term skin attachment |

Video cameras are low-cost devices that can provide very detailed and rich context information about human actions and environmental states. A sequence of images is used directly to detect human activity within the monitored areas. Video can provide direct and clear information about the objects within the smart home, for example, the number of people present. Thus, video cameras have strong advantages for multi-user activity monitoring. However, they face difficulties including privacy issues, high computational expense and environment dependency. Microphones, for their part, can provide accurate information about users’ communications and sounds in specific locations inside the smart home. However, they suffer from implementation difficulties and from the high computational cost of the audio processing algorithms needed to distinguish different sounds, especially when there are multiple residents in the home. Microphones, although probably to a lesser extent than video cameras, can also be perceived as privacy threats, since they can potentially record private conversations. It is nevertheless worth mentioning that some studies show that the acceptability of this technology is strongly influenced by the increase in freedom that caregivers perceive while preserving the safety of their elderly loved ones [87], and by the potential usefulness of voice-driven interfaces inside the home [88].

We firmly believe that the acceptance of the technology by the users is a critical issue in smart home environments (as already stated in Section 2.4 above). For this reason, our analysis focuses mainly on sensors other than the audio/visual ones.

4.1. Environmental Sensors

Diverse sensors can be deployed in different areas of the home or attached to a range of objects to monitor activities in the smart home. Most ADLs are performed in specific locations and with specific user-object interactions. For example, cooking usually takes place in the kitchen (specific space), and telephoning involves interacting with the phone (specific interaction with an object). Thus, an activity can be recognized from user-object interactions combined with observation of the environment. For example, if sensors indicate that the stove is on and that water is being used in the kitchen, this strongly suggests that a meal is being prepared. Therefore, data from environmental sensors can constitute powerful information for observing human behaviour within the smart home. In this section, we summarize the sensors commonly embedded in the smart home for detecting ADLs and discuss their advantages and disadvantages.
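The stove-and-water example above can be illustrated with a minimal rule-based sketch. It is not taken from any of the reviewed systems: the sensor names (`stove_power`, `water_flow`), the `SensorEvent` structure and the single rule are hypothetical, and a real deployment would need to handle noise, timing windows and multiple residents.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorEvent:
    timestamp: float  # seconds since the start of the observation
    sensor: str       # hypothetical sensor name, e.g. "stove_power"
    location: str     # room in which the sensor is installed
    active: bool      # True when the sensor switches on, False when it switches off


# Hypothetical rule set: an activity is suggested when every listed sensor
# in the given location is active at the same time.
RULES = {
    "preparing_meal": ("kitchen", {"stove_power", "water_flow"}),
}


def current_state(events):
    """Replay events in time order; return {location: set of active sensors}."""
    state = {}
    for ev in sorted(events, key=lambda e: e.timestamp):
        sensors = state.setdefault(ev.location, set())
        if ev.active:
            sensors.add(ev.sensor)
        else:
            sensors.discard(ev.sensor)
    return state


def suggest_activities(state, rules=RULES):
    """Return the activities whose required sensors are all currently active."""
    return sorted(
        activity
        for activity, (location, required) in rules.items()
        if required <= state.get(location, set())
    )


events = [
    SensorEvent(0.0, "stove_power", "kitchen", True),
    SensorEvent(12.0, "water_flow", "kitchen", True),
]
suggested = suggest_activities(current_state(events))
print(suggested)
```

With both kitchen sensors active, the rule suggests `preparing_meal`; once either sensor reports an "off" event, the suggestion disappears. This reflects the point made in the text that the inference comes from combining several observations, not from any single sensor.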

Simple binary sensors, including state-change sensors, motion sensors, contact switches and pressure sensors, may be deployed on a range of objects in smart home environments for monitoring users’ movements and locations. A simple state-change sensor can be used to detect any change in the state of an object, which can subsequently be used to infer user-object interactions. For example, a state-change sensor attached to the handset of a telephone detects whether the handset has been lifted from the telephone base station. Motion sensors are used for detecting the inhabitant’s presence and location throughout the house. The infrared presence sensor is the type of motion sensor most commonly used in smart environments to detect users’ presence. Contact switches are usually installed on the doors of rooms, fridges and cabinets to detect specific interactions that the user performs with these objects. Pressure sensors can be discreetly installed on objects such as sofas, beds, chairs and floors for tracking the movements and locations of the user. For example, pressure sensors on the floor in front of the kitchen sink could help detect the meal preparation activity in combination with other sensors.

In real-world scenarios, a single sensor type normally cannot provide enough information for detecting activities, especially complex ones. Thus, multiple sensors are needed to provide more accurate information for activity monitoring. Wilson and Atkeson [89] chose four kinds of binary sensors (motion detectors, break-beam sensors, pressure sensors and contact switches), which can be triggered by gross movement, point movement, gross manipulation and point manipulation, for tracking and monitoring activities. Ordónez et al. [90] monitored seven daily activities (leaving the house, toileting, taking a shower, sleeping, eating breakfast, having dinner, and drinking) using three kinds of sensors: passive infrared sensors to detect motion in a specific area, reed switches to detect the open/close states of doors and cupboards, and float sensors to detect the toilet being flushed. These low-cost, easy-to-install and long-lived binary sensors allow unobtrusive monitoring of user-object interactions in a privacy-preserving way. In addition, they are easy to replace and the gathered data require minimal computational resources. Their main drawback is that they provide only very limited information, especially for composite and multi-user activity monitoring.

Radio-Frequency Identification (RFID) works as a combination of environmental sensor and wearable sensor technologies. It consists of a reader worn by the user and an electronic tag attached to an object. The tag responds with a unique identifier, electronically stored in its memory, when interrogated by a reader. In the smart home, both passive and active RFID tags may be used. A passive RFID tag does not contain a power source and is usually attached to an object for detecting the interaction between a user and the object. An active RFID tag contains a battery and is often carried by a user for personal identification throughout the house. Fujinami et al. [91] inserted RFID tags into slippers to track the long-term daily life activities of elderly people with dementia in a group home in Japan. Philipose et al. [92] developed a system to monitor activities in a home environment by using RFID tags attached to objects. In this system, the activity information is represented as a probabilistic sequence of the objects used. Kim et al. [93] proposed an indoor ubiquitous-healthcare system (U-healthcare) based on RFID technology to accurately locate and monitor the elderly’s whereabouts in real time. They analyse the locations in association with the time slots and the length of time the user stays in the same place. The collected data is then used to infer information such as movements and activity patterns, and to determine the elderly’s wellbeing. The obvious advantages of RFID are its small size (it can be placed out of sight) and low cost. Moreover, thanks to its unique identification, RFID is well suited for tracking the locations and interactions of multiple users in order to support activity monitoring of several people [94]. RFID also has disadvantages, such as reader collision and tag collision. Reader collision occurs when a tag is interrogated by several RFID readers simultaneously and is unable to answer them. Tag collision usually occurs when a large number of tags are read by the same RFID reader at once and the reader cannot distinguish their signals. In addition, limited reliability and stability, especially when reading through liquids and metals, is another limitation of RFID.
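The kind of analysis described for location-based monitoring, relating locations to the length of time a user stays in each place, can be sketched as follows. This is only an illustrative sketch under simple assumptions and not the method of [93]: the input is a hypothetical list of `(timestamp_seconds, location)` readings for a single user, one per detected change of location, and the function name `dwell_times` is our own.

```python
from collections import defaultdict


def dwell_times(readings, end_time):
    """Total seconds spent in each location.

    readings: (timestamp_seconds, location) tuples, one per detected change
              of location for a single user (hypothetical input format).
    end_time: timestamp at which the observation window closes.
    """
    totals = defaultdict(float)
    ordered = sorted(readings)
    # Each stay lasts until the next reading places the user somewhere else.
    for (start, location), (next_start, _) in zip(ordered, ordered[1:]):
        totals[location] += next_start - start
    # The last stay lasts until the end of the observation window.
    if ordered:
        last_start, last_location = ordered[-1]
        totals[last_location] += max(0.0, end_time - last_start)
    return dict(totals)


readings = [
    (0, "bedroom"),
    (600, "kitchen"),
    (1500, "living_room"),
    (1800, "kitchen"),
]
totals = dwell_times(readings, end_time=2400)
print(totals)
```

In this example the user spends 600 s in the bedroom, 1500 s in the kitchen (two separate stays) and 300 s in the living room. Aggregates of this kind, computed per time slot, are one possible input for inferring movement and activity patterns.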

A variety of other sensors, such as light sensors, temperature sensors, humidity sensors and power sensors, have also been deployed in smart home environments to help in the detection of activities. Light sensors measure the intensity of light in a specific location. Temperature sensors measure the temperature of an object and its surrounding environment. Humidity sensors detect the air humidity in a specific area. Power sensors detect the usage of electric devices. These sensors allow intuitive monitoring of the environment and of objects, but on their own they can provide only very limited information for activity monitoring.

4.2. Wearable Sensors

Wearable sensors are sensors that are attached to the human body, either directly or indirectly, and which usually provide a continuous flow of information. Their small size allows them to be embedded into belts, clothes, glasses, wristwatches, shoes and mobile devices, which makes them easier to wear. These sensors can be divided into inertial sensors and vital sign sensors (or biosensors). Wearable inertial sensors can give accurate descriptive features of the user’s movement and body posture. Vital signs collected from wearable biosensors, such as heart rate, blood pressure and skin temperature, are critical for monitoring the health condition of elderly people. In this part, we summarize the most commonly used wearable sensors for monitoring ambulatory activities and vital signs.

4.2.1. Inertial Sensors

Accelerometers are the most frequently used sensors for ambulatory activity monitoring. They measure acceleration along a sensitive axis and are particularly effective in monitoring activities related to body motion, such as doing exercise, walking, standing, sitting, or walking upstairs and downstairs. Data collected from a three-axis accelerometer has four attributes: time and the acceleration along the x-axis, y-axis and z-axis. These data reflect both the frequency of the movement and the tilt of the sensor, which is critical for assessing posture. Due to their small size and relatively low cost, accelerometers can be embedded into wrist bands, watches, bracelets and belts to monitor the user’s activities and wirelessly send data to mobile computing devices. The context inferred from these data can be used for long-term continuous activity monitoring and for recognizing emergency situations, such as fall detection. Recent research has experimented with placing accelerometers on different body parts in order to identify the placement that gives the best activity monitoring performance. For example, in [95], Zhu and Sheng monitored eight daily activities (sitting, standing, lying, walking, sit-to-stand, stand-to-sit, lie-to-sit, and sit-to-lie) in an indoor apartment environment, using an accelerometer worn on the right thigh of the user to collect motion data. Mannini and Rosenberger [96] used a single accelerometer placed at the wrist or ankle of the user to collect raw data for long-term monitoring. Lei and Bourke [97] placed four accelerometers on four different parts of the body (chest, left under-arm, waist and thigh) to monitor and recognize five activities (standing, sitting, lying, walking and transition). Additional recent research and experiments on different placements of accelerometers are detailed in Table 5.
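To make the relation between the four accelerometer attributes (time, x, y, z) and posture more concrete, the following minimal sketch computes the magnitude of the acceleration vector and a tilt angle for each sample. It is an illustration under stated assumptions and not a method from the cited works: readings are assumed to be expressed in units of g, the function names are our own, and the tilt estimate is only meaningful when the sensor is nearly static, so that the reading is dominated by gravity.

```python
import math


def magnitude(ax, ay, az):
    """Euclidean norm of the acceleration vector."""
    return math.sqrt(ax * ax + ay * ay + az * az)


def tilt_deg(ax, ay, az):
    """Angle in degrees between the sensor z-axis and the measured acceleration.

    Assumption: the sensor is nearly static, so the measured vector
    approximates gravity and the angle approximates the sensor tilt.
    """
    norm = magnitude(ax, ay, az)
    if norm == 0.0:
        raise ValueError("zero acceleration vector: tilt is undefined")
    # Clamp to [-1, 1] to guard against rounding errors before acos.
    cos_angle = max(-1.0, min(1.0, az / norm))
    return math.degrees(math.acos(cos_angle))


# Hypothetical samples: (time in seconds, x, y, z), accelerations in g.
samples = [
    (0.00, 0.0, 0.0, 1.0),  # z-axis aligned with gravity
    (0.02, 1.0, 0.0, 0.0),  # z-axis perpendicular to gravity
]
for t, ax, ay, az in samples:
    print(t, round(magnitude(ax, ay, az), 3), round(tilt_deg(ax, ay, az), 1))
```

For a sensor worn on the thigh or chest, a change of this angle between roughly upright and roughly horizontal is one simple cue for distinguishing postures such as standing and lying; practical systems typically combine such features with frequency-domain features and a trained classifier.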

Table 5.

Different placements of accelerometers on human body for activity monitoring.

| References | Number of Accelerometers | Placements | Activities |
|---|---|---|---|
| Gjoreski et al. [103] (2011) | 7 | Chest, left thigh, right ankle | Standing, sitting, lying, going down, standing up, sitting on the ground, on all fours |
| Jiang et al. [104] (2011) | 4 | Left forearm, right forearm, left shank and right shank | Standing straight, sitting on a chair, lying on a bed, walking, jogging, cycling, walking on an elliptical machine, running on an elliptical machine, rowing and weight lifting |
| Jennifer et al. [105] (2011) | 1 | Smartphone | Walking, jogging, upstairs, downstairs, standing, sitting |
| Zhu and Sheng [95] (2011) | 1 | Right thigh | Sitting, standing, lying, walking, sit-to-stand, stand-to-sit, lie-to-sit, sit-to-lie |
| Siirtola et al. [106] (2012) | 1 | Smartphone placed in trousers’ front pocket | Walking, cycling, sitting, standing, driving a car |
| Hemalatha and Vaidehi [107] (2013) | 1 | Chest | Standing, walking, sitting, lying, fall |
| Mannini et al. [108] (2013) | 1 | Wrist/ankle | 26 daily activities |
| Zheng et al. [96] (2013) | 1 | Wrist/hip/waist pocket | Lying, sitting, standing, walking, running, dancing, jogging, upstairs, downstairs, skipping |
| Muaaz et al. [109] (2014) | 1 | Waist, right-hand side of the hip | Walking |
| Gao et al. [97] (2014) | 4 | Chest, left under-arm, waist and thigh | Lying, sitting, standing, flat walking and up & down stairs, lie-to-stand, stand-to-lie, sit-to-stand, stand-to-sit |