Abstract

Information processing in the brain crucially depends on the topology of the neuronal connections. We investigate how the topology influences the response of a population of leaky integrate-and-fire neurons to a stimulus. We devise a method to calculate firing rates from a self-consistent system of equations taking into account the degree distribution and degree correlations in the network. We show that assortative degree correlations strongly improve the sensitivity for weak stimuli and propose that such networks possess an advantage in signal processing. We moreover find that there exists an optimum in assortativity at an intermediate level leading to a maximum in input/output mutual information.

Introduction

Revealing the neural code is one of the most ambitious goals in neuroscience. The theory of complex networks is a promising approach to this aim: high cognitive processes are treated as emergent properties of a complex connectivity of many ‘simple’ neurons. An important question is then how the network structure relates to the collective activity of the connected neurons and how this activity can be interpreted in terms of neuronal coding and processing strategies. Advanced techniques for visualization of the activity of single neurons and groups of neurons allow for identification of synaptic links between neurons, leading to the possibility of inferring statistical network characteristics. Neuronal network topology is far from being completely random [1, 2]. Among the most intriguing topological properties are small-world features [3], modularity [4] and large variations in connectivity, e.g. scale-free functional structure [5] and the presence of strongly connected and highly active hubs [6–8]. How are these network properties related to neuronal dynamics? Although the influence of network parameters on the dynamics of neuronal populations has been subject to a large number of studies [1, 9–15], the relevance of heterogeneous connectivity and higher order network statistics have been investigated only recently [12–16]. Simulations show that heterogeneous connectivity structures induce heterogeneous activity patterns [12–16]. Indeed, activity in the neocortex is highly variable: A large proportion of neurons fires at very low rates, whereas a small subset of neurons is highly active and better connected [17–20]. Variability in neuronal network structure and dynamics may be important for information processing such as the detection of weak input signals [12, 15, 20, 21]. Remarkably, some brain regions, such as the barrel cortex, can sense very weak stimuli down to a few spikes to a single neuron [22]. It has been hypothesized, that a sub-set of highly active and highly interconnected neurons may play a significant role in the encoding of sensory information in this region [20].

Here, we study the relevance of two basic network topological features, the degree distribution and degree correlations, for the ability of a network to sense and amplify small input signals. A first approach requires a simple mathematical model describing the activity of neurons. As a basic yet very relevant representation of neuronal dynamics, we here consider rate coding in a network of leaky integrate-and-fire (LIF) neurons and use a mean-field model to account for their synaptic coupling. The powerful mean-field theory rests on the assumption that neurons interact through their synaptic activity but generally fire asynchronously. The information contained in the neuronal network is encoded in their mean firing rate [23, 24]. At this level, the ability of the network to process information is shaped by the relationship of the input signal in form of an input spike frequency and the resulting firing rate of the network. The former is an independent Poissonian spike train injected synaptically into all neurons and the latter can be characterized as an output signal by averaging over the entire population or a part of the population.

This mapping of inputs to the average activity of many neurons has been described as one of the fundamental processing strategies of the brain [25]. One of the major advantages of this population coding is its robustness to failure as information is encoded across many cells. Population coding is important in many brain functions such as orientation discrimination in the primary visual cortex [26], control of eye [27] and arm movements [28]. How the brain can access and process information encoded in the collective activity and how it manipulates this activity to perform these computations efficiently is one of the main questions in computational neuroscience. We aim at this problem by calculating the input/output relationship of large LIF networks using a generalized mean-field method, which takes into account the complex network topology. Furthermore, we are interested in the ability of the network to convey information about stimuli of small amplitude. For weak stimuli, where afferent injected currents are too small to trigger significant single neuron firing, collective activity of the network strongly depends on recurrent input [16, 29], and it may be expected that the effect of recurrent network structure on signal processing is most pronounced in this regime.

To describe collective neuronal activity one often uses the population-density approach, which was successfully applied to cortical circuits of identical neurons [9, 30–32] and to networks of heterogeneous neurons [21]. In the population density approach, the spiking and interplay of many neurons, for instance in the network of a single cortical column, is captured by a probability density function for the states of statistically similar neurons [24, 31]. Here we extend this theory to include the heterogeneity of the network in terms of the degree of a given neuron, i.e., the number of synaptic connections the neuron possesses. We find that the network’s heterogeneity leads to substantial deviations from simple mean-field calculations, where one ignores the network properties. Our method is to divide the whole neuronal population in subpopulations according to the number of incoming synaptic links, or the in-degree k, of neurons. This allows us to consider networks with different levels of assortativity with respect to k (Fig 1), which is a measure of the correlations in the degree of nodes [33]. Degree correlations in neural networks may result from a number of processes including plasticity and they are interesting for a number of reasons. First, degree correlations can be considered the most basic statistical property of a complex network except for the degree distribution itself. Second, there have been large efforts devoted to understand correlations in neuronal spiking [34], but the effects of correlations in structural connectivity have been much less studied so far. Finally, statistical network properties have been considered in the context of synchronization [35], but the characterization of spiking in the unsynchronized regime has not received similar attention in the context of complex networks.

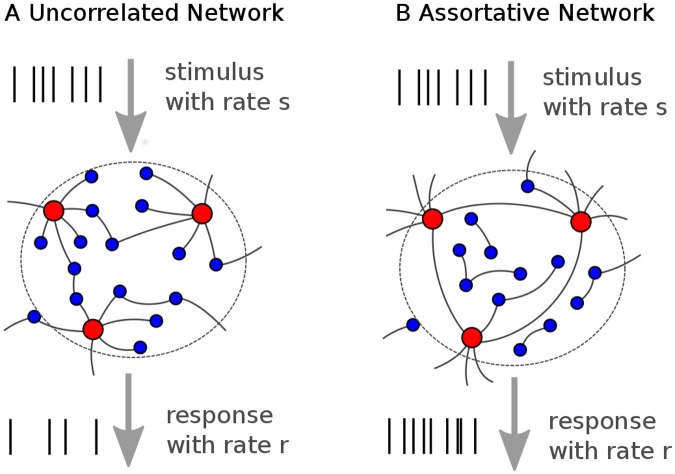

Fig 1. Schematic of the heterogeneous neuronal networks.

(A) In the uncorrelated network, highly connected neurons and poorly connected neurons are joined randomly. Here, red nodes represent an exemplary well connected subpopulation, while blue nodes represent all remaining populations with smaller in-degree k. (B) In the assortative network, neurons with similar connectivity are joined preferably. The network is stimulated by Poissonian external input spike trains with mean rate s, which are injected to each neuron. The network response r to the stimulus is quantified by the average firing rate of a randomly chosen fraction of the network (n neurons).

Using the subpopulation density approach, we calculate the firing rates for the coupled k-populations and show that the obtained values exhibit excellent agreement with results of direct numerical simulation of a network of LIF-neurons. In-degree distribution and degree correlations of the network enter the equations in form of a joint degree distribution N kk′, which is the average number of connections from a k′- to a k-neuron. To be specific in this analysis, we derive simple expressions for N kk′ for exemplary networks of various degree correlation types in the limit of large network size. We apply our method to a correlated network with broad degree distribution and show that the shape of the input/output relationship is strongly affected by different levels of assortativity. Assortative networks sustain activity at sub-threshold levels of input, where uncorrelated networks would not fire, and thus, are more sensitive to weak stimuli. Hence, assortativity may tune the input/output relationship to the distribution of inputs and noise of the system to optimize information transfer. Finally, we calculate the mutual information between sub-threshold stimuli and network response, assuming that each stimulus is equally likely. Recently, mutual information has been used in a similar way to investigate information transmission in cortical networks with balanced excitation and inhibition [36]. For our model network, an excess of assortativity leads to an increase in noise in the firing rate and an optimum of input/output mutual information is found in a range of assortativity consistent with recent estimates from neuronal cultures [37, 38]. Preliminary results of our investigations were published in abstract form in [39].

Results

Meanfield Method for Heterogeneous Correlated Networks

Deriving the Self-Consistent Equations

We begin with a mean-field method for calculating the stationary firing rate of a heterogeneous LIF network with degree correlations. Assume a network of LIF-neurons with heterogeneously distributed in-degrees (Fig 1). Each neuron is stimulated with an independent external Poisson spike train with rate s quantifying the input signal or stimulus strength. If the input strength is small, an unconnected neuron will not reach the threshold for excitation, while for sufficiently large s the neuron will fire. Following Brunel [9], we normalize s by the rate ν thr (see below) that is needed for a neuron to reach threshold in the absence of feedback. For s ≥ 1 the network is mostly driven by the external input, whereas for s < 1 the response is dominated by sustained recurrent activity. The network response r is quantified by the average firing rate of a subset n of its neurons and can be considered a feed-forward activity of the neural population or output.

We are interested in the dependence of the input/output relationship on degree correlations in the network. Since the network of neuronal connections is directed, we have to distinguish between the number of incoming and outgoing connections of a neuron, its in-degree k and out-degree j. In the following we mainly focus on networks with k = j, suggested by findings for C. elegans [40], where in- and out-degree per neuron strongly correlated. The distribution of in- and out-degrees of the neurons is denoted by P in(k) and P out(j), respectively. Furthermore, we take into account in-degree correlations. Main attention is paid to the assortative case when neurons with high in-degree preferably connect to other neurons with high in-degree. Such correlations have been found in cortical networks and in neuronal cultures [5, 37, 41].

The LIF dynamics of a neuron in the population is governed by a single state quantity. The depolarization V i(t) of neuron i follows the ordinary differential equation

| (1) |

where τ is the membrane time constant, R is the membrane resistance and and are the recurrent and external input, respectively. If the depolarization V i(t) reaches a threshold value Θ, the neuron fires an action potential and V i(t) is reset to a refractory voltage V r for a constant refractory period τ ref. Since the neuron cannot fire in this time interval, its maximum firing rate is 1/τ ref. Action potentials arriving at the i-th neuron are modeled as delta spikes contributing to the recurrent synaptic current

| (2) |

where J is the postsynaptic amplitude, and a ij is the adjacency matrix of the network. The second sum runs over all pulses l fired by neuron j at times . These arrive at neuron i after a short synaptic delay D ij.

Let us consider the dynamics of neurons in a k-population assuming that they receive uncorrelated inputs. In the limit of a large network the Poisson pulse flow can be replaced by a Gaussian process by means of the central limit theorem. Then, the input current of the i-th neuron is given by

| (3) |

with a mean μ k(t) and a fluctuating part (noise) with intensity σ k. Here ξ(t) is Gaussian white noise with zero mean and unit variance. Each k-population receives inputs from other k′-populations: μ k(t) and σ k depend on the firing rates r k′ of other populations. Using Eq (3) we rewrite Eq (1) as a Langevin equation for :

| (4) |

The voltage distribution P(V (k)) is given by the corresponding Fokker-Planck equation, and the average stationary firing rate (given by the self-consistency conditions, see materials and methods) is given by

| (5) |

where is the error function, k min, k max are the minimum and maximum degree in the network, and is the coupled transfer function. Thus, the steady-state firing rate of each k-population is coupled to the firing rates of all other k′-populations via the input mean and variance

| (6) |

| (7) |

where N kk′ is the average number of k′-neurons that synapse into a k-neuron (a joint degree distribution) and [9]. Given N kk′, all result by numerically solving Eq (5). N kk′ follows from the adjacency matrix of the network by averaging the number of links from k′-neurons to a k-neuron. In general, it is of the form

| (8) |

where f(k, k′) is the probability that an incoming link of a k-neuron originates from a k′-neuron. Normalization requires

| (9) |

Solving the Self-Consistent Equations

The approximate dynamics of the neuronal network can be described by the differential equation

| (10) |

where and Φ are the vectors of population firing rates and coupled transfer functions , and τ x is a time-constant of appropriate choice [24, 31]. Solutions of the self-consistent Eq (5) are fixed points of the above equation and can be found by numerical integration. In order to integrate Eq (10), one needs to evaluate the input mean and variance of Eqs (6 and 7), where the joint degree distribution N kk′ enters. Thus, it is necessary to first assess the network topology by means of inferring this joint degree distribution. In the following, we will derive particularly simple expressions of N kk′ = kf(k, k′) in the thermodynamic limit for networks with arbitrary degree distributions and different in-degree correlations.

First assume a maximally random network of N neurons, where in- and out-degree of each neuron are drawn independently from the in- and out-degree distributions P in(k) and P out(j), respectively. In this case, in-degree correlations are minimal and f(k, k′) is simply the fraction of links that originate from neurons with in-degree k′, where E = N⟨k⟩ = N⟨j⟩ is the total number of links in the network. The size of a k′-population corresponds to the total number of k′-neurons in the network, which is NP in(k′). A fraction P out(j′) of this population has j′ outgoing links. Hence, the number of outgoing links of this fraction is

| (11) |

We now sum up the outgoing links of all fractions of the k′-population to obtain the total number of outgoing links from all k′-neurons

| (12) |

Thus, the fraction of links that originate from neurons with in-degree k′ is

| (13) |

The input mean and variance of Eqs (6 and 7) become

| (14) |

| (15) |

where is the mean firing rate of the network

| (16) |

Thus, the self-consistent Eq (5) for the mean-firing rate of the network decouple and reduce to

| (17) |

and we can write a one-dimensional self-consistent equation for the mean firing rate of the network

| (18) |

Interestingly, Eq (18) does not depend on the out-degree distribution of the network, because effects of its heterogeneity are averaged out in the limit of large network size.

In the following we assume random networks with in-degree distribution P in(k) and equal in- and out-degree per neuron, j = k. Synaptic connections show no in-degree correlations if they are drawn at random, but now in-degree and out-degree of each neuron are positively correlated, as shown for the neuronal network of C. elegans [40]. In this case the number of outgoing links from all k′-neurons in the network is simply k′ times the number of k′-neurons

| (19) |

The fraction of links that originate from neurons with in-degree k′ is then

| (20) |

This gives for the input mean and variance of Eqs (6 and 7), respectively

| (21) |

| (22) |

Thus, in a random network with equal in- and out-degree per neuron and without in-degree correlations of the synaptic connections, the input mean and variance of each k-population are dependent on the steady-state firing rate of all the other k′-populations. Note, that in networks without in-degree correlations, the probability f(k, k′) is always independent of k.

For networks with in-degree correlations, the average number of connections between a k′-population and a k-population differs from the uncorrelated case. In networks with assortative in-degree correlations, neurons preferably connect to neurons with similar in-degree. In the extreme case, the network segregates into disconnected subnetworks of neurons with the same in-degree:

| (23) |

where δ(k, k′) is the Kronecker delta. Note, that in networks with equal in- and out-degree per neuron the number of outgoing links and incoming links is equal for each k-population. Thus, in strongly assortative networks, all k-population subnetworks can be disconnected from each other. In networks with nonequal in- and out-degree per neuron, complete segregation into decoupled k-populations is only possible if the average number of outputs of k-neurons is k. A mismatch between the average number of inputs and outputs in a k-population results in connections between different populations and in this case, the distribution f(k, k′) may depend on the in- and out-degree distribution and, potentially, on correlations between in- and out-degree per neuron.

In networks with disassortative in-degree correlations, high-degree populations are connected to populations with low degree and vice versa. The dependence of the joint degree distribution on the probability distribution of the input and out-degrees can be calculated analytically for undirected networks [42]. In contrast to the assortative network, f(k, k′) here depends on the in-degree distribution of the network. Therefore, we sample N kk′ from the adjacency matrix of the disassortative network and show that our mean-field model accurately predicts its mean firing rate.

Stimulus Response of Heterogeneous Correlated Networks

In what follows we use the k-population model to analyze the response of three representative heterogeneous networks with different degree correlations to a stimulus s. Theoretical predictions are then compared to full simulations of 105 LIF neurons. For details on the model and theory, see materials and methods. We chose a power-law in-degree distribution of P(k) = Zk −2 with normalization constant Z between a minimum degree k min = 10 and a maximum degree k max = 500, because we are interested in networks of strong heterogeneity. Power-law degree distributions have been found in recent in vitro experiments [37, 43, 44]. In-degree correlations are quantified by the Pearson correlation coefficient p, as defined in [45]

| (24) |

where e kk′ is the probability, that a randomly chosen directed link leads into a neuron of in-degree k and out of a neuron of in-degree k′. is the excess in-degree distribution of the neuron at the origin of a random link

| (25) |

The Pearson degree correlation coefficient is normalized by the variance of the excess in-degree distribution

| (26) |

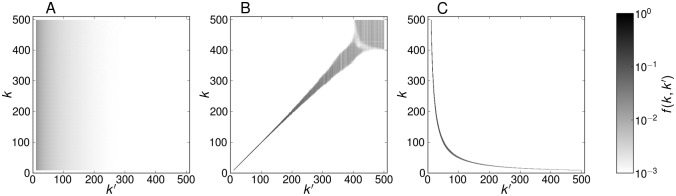

and ranges from -1 for a fully disassortative network up to 1 for a fully assortative network. Note, that Eq (24) suffices to quantify in-degree correlations if in- and out-degrees are equal per neuron. Otherwise, alternative measures may be required [41]. For full simulations, we first construct large random networks with the desired in-degree distribution using a configuration model [46] and then impose degree correlations by shuffling links according to a Metropolis algorithm [47]. For details of the network model, see materials and methods. The three representative networks have p = 0.997 (assortative), p = −0.004 (uncorrelated) and p = −0.662 (disassortative). The connection probabilities f(k, k′) sampled from the adjacency matrices of the three networks are shown in Fig 2.

Fig 2. Probability f(k, k′) for a random input of a k-neuron to originate from a k′-neuron, sampled from the adjacency matrices of simulations with N = 105.

Normalization demands ∑k′ f(k, k′) = 1. (A) Uncorrelated network, (B) assortative network, (C) disassortative network. The corresponding Pearson coefficients are p = −0.004 (uncorrelated); p = 0.997 (assortative); p = −0.662 (disassortative).

For the mean-field analysis, it is feasible to use simplified approximations for f(k, k′), so that sampling from full IF networks is not necessary. Additionally, the dimension of the self-consistent Eq (5) may be reduced for efficient computation. For the uncorrelated network, we use Eq (20), which gives

| (27) |

In the maximally assortative network, neurons with the same in-degree are connected almost exclusively, and f(k, k′) = δ(k, k′) is a sufficient approximation of the peaks in Fig 2B. The maximally disassortative network segregates into subnetworks where a population of neurons with large in-degree is recurrently connected to a population of neurons with small in-degree. Similar to the assortative network, the probability f(k, k′) of the disassortative network can be approximated by employing a Kronecker delta, using a fit of the peak positions in Fig 2C, which yields . The probability f(k, k′) is then

| (28) |

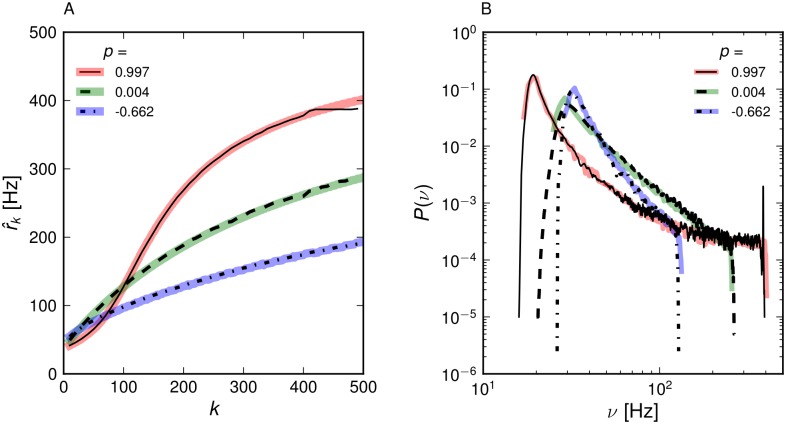

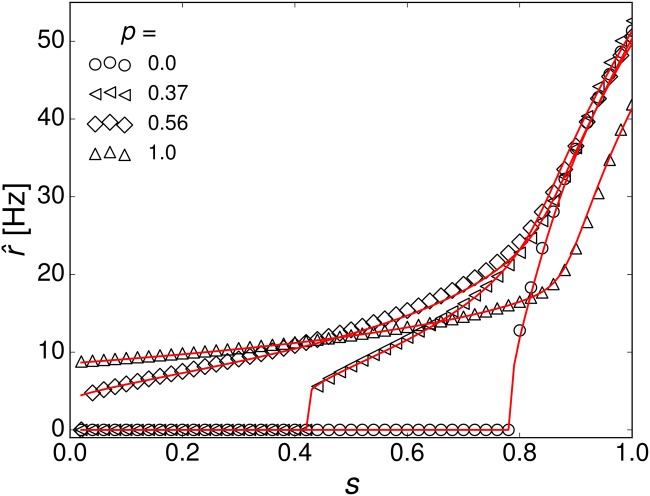

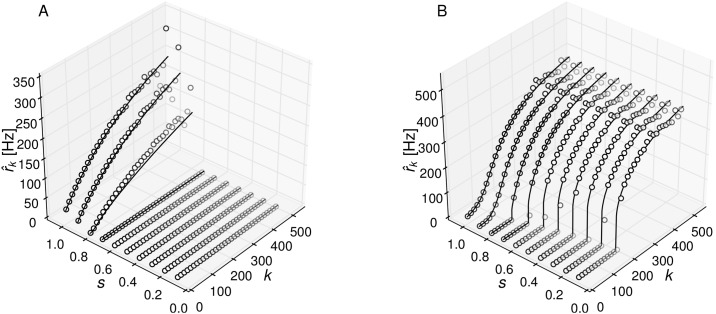

Results for the population firing rates corresponding to a stimulus s = 1.2 are shown in Fig 3A together with the mean-field predictions. Theory and simulations agree very well. In the assortative network, firing rates of high-degree populations are raised and the ones of low-degree populations are lowered compared to the uncorrelated network. Disassortative networks show the opposite effect. The distribution of single-neuron firing rates P(ν) can be estimated directly from the population means, if one assumes that the firing rates of all neurons in a given k-population are equal. Then, each firing rate occurs NP in(k) times and this distribution of firing rates can simply be binned and normalized. This estimation is close to the actual firing rate distribution in the network (Fig 3B). Assortativity broadens the distribution, whereas disassortativity narrows it. Note, that the firing rate distributions appear to be power-law tailed in disassortative and uncorrelated networks only. Let us now consider the mean firing rate as a response to a sub-threshold stimulus s < 1 for different levels of assortativity. This is plotted in Fig 4 (open symbols) together with theoretical predictions (red lines). In an uncorrelated network for small s and shows a sharp transition to sustained activity at s ≈ 0.8, whereas assortative networks are active even for small s. The qualitative explanation is as follows. The neurons with low input degree eventually stop firing when their total input current becomes low. In uncorrelated networks this leads to a cascading failure of spiking of stronger connected neurons. In assortative networks the failure of neurons with low in-degree only leads to failure of the low-degree subnetwork, whereas high-degree subnetworks sustain their recurrent activity (Fig 5). This behavior is reminiscent of findings for percolation in complex networks: At low densities of links, assortative networks remain robust under random failure [48]. It is important to mention that the network may exhibit bistability for very low firing rates, where the mean-field solutions exhibit an additional unstable branch below the stable one that is shown in our results. In practice, bistability leads to hysteresis, where network dynamics depends on previous activity. This effect is discussed extensively in [16, 49]. However, we assume the network to operate in the stable upper branch exclusively by adjusting its sustained activity to changes in the stimulus instead of switching on and off. Networks with strong recurrent activity and the assortative networks considered below will sustain their activity even when the stimulus drops to zero.

Fig 3. Stationary activity of correlated networks.

(A) Population firing rates and (B) distribution of single neuron firing rates for a network with P(k) ∼ k −2, k = [10, …, 500] for s = 1.2 from simulations (thin full and dotted lines) and theory (thick lines).

Fig 4. Mean firing rate of the uncorrelated and correlated networks in the sub-threshold regime from simulations (open symbols) and mean-field theory (straight red lines).

The joint degree distributions N kk′ for the mean-field calculations were sampled from the adjacency matrices of the constructed networks (N = 105).

Fig 5. Mean firing rate of the k-populations for decreasing sub-threshold stimulus s.

(A) Uncorrelated network, p = 0.000 and (B) strongly assortative network, p = 0.996.

Assortativity Optimizes Information Transfer of Heterogeneous Networks

Our results show that assortativity has a strong impact on the input/output relationship of the model network for small inputs. Here, we are particularly interested in the ability of the network to obtain information about stimuli in this regime and how this ability is affected by assortativity. We quantify the efficacy of this information transfer by the input-output mutual information [50]:

| (29) |

Here P(s) is the probability distribution of inputs, which we here assume as an ensemble of stationary stimuli presented to the network, P(r∣s) is the probability distribution of network responses conditioned on the stimulus strength, and P(r) is the corresponding unconditional probability. The distribution P(r) could be obtained by collecting a large number of network responses r, when the network is subjected to the distribution P(s) of stimuli over time.

The network response r is the average firing rate of n randomly chosen neurons, since only a finite fraction of neurons feeds-forward information to a different area of the nervous system for further processing. This response is noisy, because it is sampled from a heterogeneous distribution of single-neuron firing rates. For sufficiently large n the variability can be approximated by a Gaussian with mean and variance σ 2/n, where σ 2 is the variance of mean firing rates in all k-populations

| (30) |

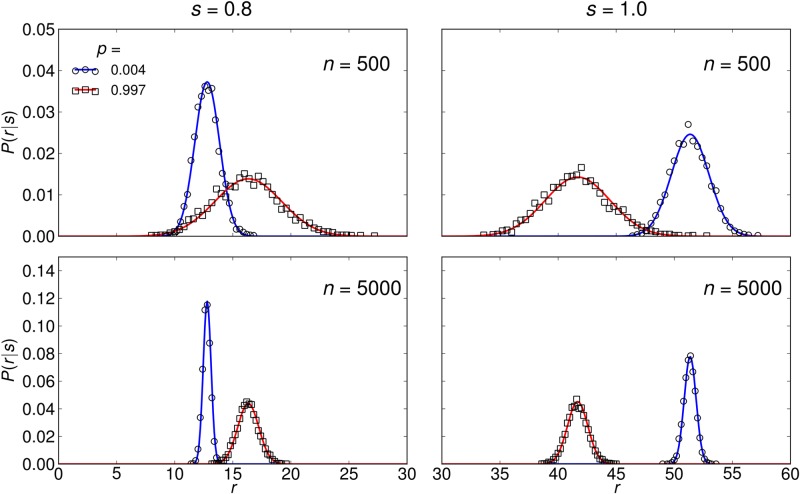

Exemplary plots of P(r∣s) from simulations are shown in Fig 6 together with the approximation of Eq (30). Since the distribution of single-neuron firing rates is broadened by assortativity (Fig 3B), the corresponding response r is more noisy in the assortative network, arguing that the assortative network is less reliable in encoding the signal. However, assortative networks respond to very weak stimuli (s < 0.8), where the uncorrelated network cannot fire, which results in increased sensitivity. Therefore, a quantitative approach is needed to draw conclusion about the signal transmission capabilities of the networks. We calculate I using a small noise approximation [51] by expanding Eq (29) as a power series in

| (31) |

where the first term (which we denote as H) approximates the response variability, or entropy, and the second term corresponds to the noise entropy H noise so that I = H − H noise. Higher order terms vanish as noise decreases. is the probability distribution of mean rates in the absence of sampling noise when the network is exposed to a distribution P(s) of stimuli

| (32) |

Fig 6. Distribution P(r∣s) of network responses to a stimulus s.

Normalized histogram of 105 averages of n random individual neuron firing rates (symbols), and Eq (30) (lines). For evaluation of Eq (30) we calculated the mean stationary network activity using the self-consistent Eq (5). The corresponding variance of the sampling noise σ(s)2/n was obtained from the approximate stationary firing rate distribution as previously discussed for Fig 3B. The distributions are broadened by assortativity, corresponding to larger noise of the response of assortative networks.

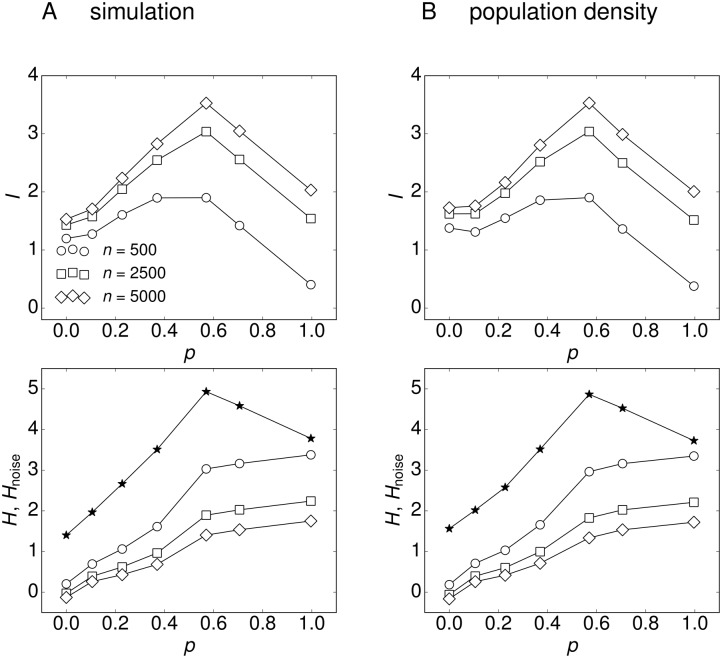

We make the simplifying assumption, that all sub-threshold stimuli are equally likely, P(s) = const, 0 < s < 1. Then, follows from the average response function , Fig 4, and the associated sampling noise. We found that I is optimized for networks with intermediate degree of assortativity, p ∼ 0.6 (Fig 7, top row). First, some amount of assortativity increases sensitivity to weak stimuli, which is related to the fact that the response curves in Fig 5 assume a more linear functional dependence. Second, in extremely assortative networks, the network response approaches an almost constant value for different stimuli and thus does not contain much information about the inputs. Additionally, the increase in noise entropy with increasing assortativity reduces I even further (Fig 7, bottom row), indicating that the network response is too noisy to reliably transmit information about the stimulus.

Fig 7. Information transfer of assortative networks.

(Top row) Mutual information I = H − H noise of the input/output relation is optimized for intermediate assortativity. (Bottom row) Response variability H (stars) and noise entropy H noise (open symbols). Network output is quantified by the average firing rate of n randomly chosen neurons. (A) Numerical simulations of networks with N = 105 neurons and (B) calculations with the population-density approach, where N kk′ is sampled from the adjacency matrices of the correlated networks.

In conclusion, the ability of the neuronal network to transmit information is characterized by the shape of the response curve and the variance σ(s) of the firing rate distribution of the neurons. These features are largely controlled by the degree distribution and degree correlations: Increasing the mean degree of the network results in stronger recurrent activity and a decreased stimulus threshold at which the network begins to fire. In addition, the variance of the degree distribution and degree correlations have a large impact on the shape of the response curve and the firing rate distribution. A larger variance of the degree distribution results in a broader firing rate distribution and increased noise in the signal transmission. Assortative degree correlations further increase the output noise, but also smooth the response curve and increase the sensitivity of the network to low stimuli due to a decreased stimulus threshold. In the following, we investigate the signal transmission capabilities of the networks with respect to changes in their degree distributions and discuss the robustness of our findings.

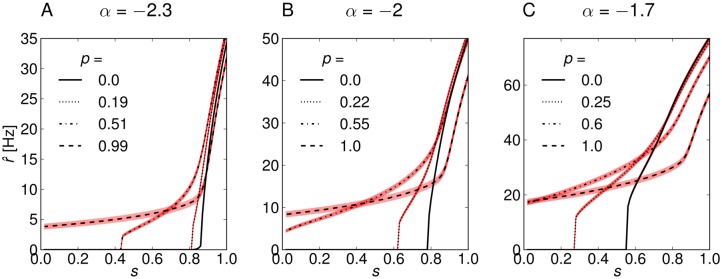

In Fig 8 we show the response curves and the associated standard deviation of the output for n = 5000 of three model networks with power-law distribution between k min = 10 and k max = 500 and increasing exponents α = (−2.3, −2, −1.7). The response curves and variances of the firing rate distributions were calculated with the mean-field approach. The joint degree distributions N kk′ were sampled from the adjacency matrices of the networks. The means ⟨k⟩ and variances of the degree distributions are shown in Table 1. The first network with large negative exponent α = −2.3 and small mean degree fires at very low rates (Fig 8A), which corresponds to a low response variability. Assortativity changes the shape of the response curve and decreases the stimulus threshold, but strongly increases the output noise. The network with small negative exponent α = −1.7 and large mean degree fires at high rates, and the stimulus threshold for the uncorrelated network is shifted to a lower value, s ≃ 0.55 (Fig 8C). Hence, this network has a large response variability even when no in-degree correlations are present. For this network, weaker levels of assortativity are sufficient to reshape the response curve and lower the stimulus threshold even further. For all three networks, the noise level is strongly increased by assortativity.

Fig 8. Response curves of three model networks with power-law in-degree distribution P in(k) ∼ k α, k = [10, … 500] and slightly different exponents α.

For each network we show the response curves for four different levels of assortativity (thin black lines). The thick light red lines indicate ±1SD of the noise of the output from n = 5000 neurons. (A) Model network with large negative exponent of α = −2.3 and small mean degree. The network responses to sub-threshold stimuli are very weak due to small recurrent activity. The uncorrelated network (p = 0) begins to fire above the stimulus threshold of s > 0.8. This threshold is reduced for increasing assortativity, and the response becomes very noisy. (B) Model network with intermediate exponent α = −2 and intermediate mean-degree. The network responses are stronger than for α = −2.3, but the stimulus threshold for the uncorrelated network is similar at s ≃ 0.8. (C) Model network with small negative exponent and large mean degree. The network fires at high rates and has a low stimulus threshold s ≃ 0.55 due to strong recurrent activity.

Table 1. Mean and variance of the in-degree distribution P in(k) ∼ k α, and k min = 10 and k max = 500.

| α | ⟨k⟩ | |

|---|---|---|

| -2.3 | 29 | 1707 |

| -2 | 38 | 3283 |

| -1.7 | 54 | 6001 |

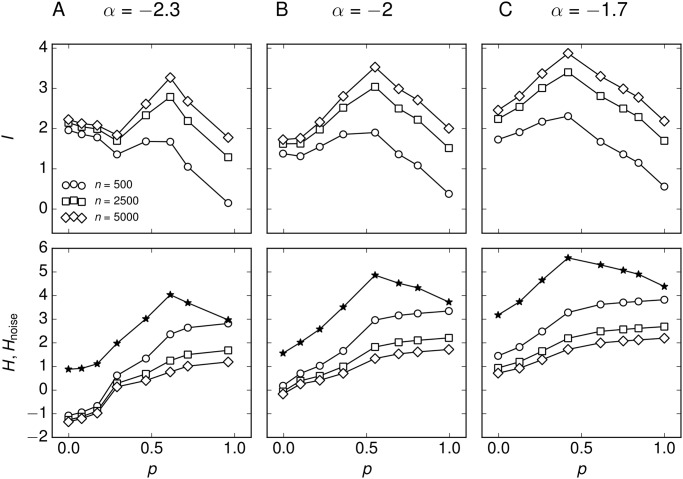

In the following we compare the three networks with respect to their input-output mutual information (Fig 9). First, the maximum value of mutual information increases slightly for increasing α (top row in Fig 9), primarily because increasing α increases the mean degree, leading to larger recurrent activity and higher firing rates of the neurons. Increased firing rates correspond to a larger response variability of the networks, e.g. the range of mean responses for α = −2.3 (0–35 Hz) is smaller than for α = −2 (0–50 Hz), Fig 8A and 8B. However, increasing α also increases the variance of the degree distribution, and hence, increases the noise of the network response to a signal. The larger response variability and noise are represented by a larger entropy H and noise entropy H noise, respectively (bottom row in Fig 9). Second, increasing α shifts the peak position of the mutual information to lower p. This indicates that less assortativity is needed for networks with larger mean-degree and variance of the degree distribution to optimize the information transfer. We conclude that there is a range of network configurations that have their signal transmission optimized by assortative degree correlations, but the optimal level of assortativity depends on the degree distribution of the network. Finally, the optimum vanishes for degree distributions with extremely small or extremely large mean and variance (data not shown). In the former case the mutual information of assortative networks is greatly reduced by exceeding noise levels, because only very few strongly connected neurons sustain firing for small inputs and the vast majority of neurons do not fire. In the latter case, most of the neurons already sustain firing for low inputs in the uncorrelated network, so that assortative degree correlations do not increase the response variability.

Fig 9. Information transfer of the correlated networks.

(Top row) Mutual information I = H − H noise of the input/output relation of the networks. (Bottom row) Entropy H (stars) and noise entropy H noise (open symbols). (A) The network with large negative exponent α = −2.3 has an optimum in information transfer for slightly higher value of p compared to the other networks. Additionally, its signal transmission capabilities are poorer which is characterized by lower values of I that result from small entropy H due to low firing rates. (B) The network with intermediate exponent α = −2.3 has its signal transmission optimized at an intermediate value of assortativity, p ≃ 0.6. (C) The network with small negative exponent α = −1.7 exhibits the most efficient signal transmission. The mutual information peaks for a relatively low value of assortativity p ≃ 0.4, which means that the uncorrelated network is already quite efficient in signal transmission.

Discussion

Recurrent connectivity is an important property of neuronal circuits, which shapes the dynamics of a complex neuronal network in combination with the external input [29]. Although inter-area connectivity plays a dominant role for cognitive functions, in many parts of the nervous system, particularly the neocortex, excitatory recurrent activity is expected to play a significant role in neuronal computations [29]. One of the most interesting functions of feedback through synaptic links within a circuit is the amplification of small input signals. The general questions we pursue are: (i) How does the sensitivity to low-amplitude signals depend on the network properties and (ii) Can optimization in the topology be achieved for a fixed number of connections in the network? As a first step in this analysis we here presented a generalized mean-field approach to calculate the firing rates of excitatory networks with different topologies of the recurrent connections. Our method is based on dividing the network into populations of neurons with equal in-degrees and solving a system of coupled self-consistent equations for all those populations. In general, the method can be applied to all types of complex neuronal networks that have a limited in- and out-degree and are large and random enough so that the central limit theorem can be applied for each population of neurons.

The structural properties we consider are captured by a single matrix, the joint degree distribution N kk′, which is the average number of connections from a k′-neuron to a k-neuron. Hence, the impact on network topology on its steady-state dynamics can be easily assessed by examining this distribution. For low external input, the network sustains firing through recurrent activity and the effect of network topology on dynamics becomes very pronounced. In this regime, assortativity increases the sensitivity of a network to very low external inputs, where uncorrelated networks or disconnected neurons would not fire. This effect is similar, but not equivalent, to the smoothing of the single neuron response curve due to increased noise, e.g. from balanced excitatory and inhibitory background activity [52]. Increased noise improves the network sensitivity to small inputs so that less assortativity would be needed to optimize information transfer. In contrast to balanced background activity, purely excitatory background activity would shift the reponse curve to lower values of s with a similar effect: less assortativity would be needed to optimize signal transmission. However, the strategy of neuronal networks to amplify low inputs could be based on a combination of sustained recurrent activity and background noise.

We quantified the information transfer between sub-threshold stimuli and network response by their mutual information, assuming that each sub-threshold stimulus is equally likely. In this case, assortativity increases the ability of our model network to transfer sub-threshold signals due to the improved stimulus-response relationship. In a more general sense, degree correlations could be used to tune the stimulus-response relationship to the specific distribution of stimuli. To the aim of enhancing sub-threshold sensitivity of the network through sustained firing, a much simpler strategy of the brain would be to raise recurrent activity by increasing the mean connectivity of the network. However, tuning the network dynamics by degree correlations has two major advantages: First, it does not requires additional axons and synapses, which optimizes wiring economy and second, the mean firing rate of the network is lower, which decreases energy consumption [53].

Our finding that assortativity increases signal transmission is consistent with recent studies, where assortativity has been found to enhance neural network memory in noisy conditions [54] and increases the information content of heterogeneous directed networks [41]. In contrast, assortativity is known to decrease synchronizability and robustness of complex networks [35, 55, 56]. This contradiction can be explained by the different conditions applied to the networks [54]: Assortativity tends to enhance network performance in bad conditions (low density of links and high noise), whereas disassortative networks perform best in good conditions (high density of links and low noise). In our model ‘bad conditions’ are imposed by low level of external inputs. For strongly heterogeneous networks, an excess of assortativity leads to a decrease of mutual information, partly because of an increase in noise in the firing rate. In this case, there exists an optimum in assortativity with respect to information transfer, which is consistent with recent estimates from neuronal cultures [37, 38].

In another related study, Vasquez, Heouweling and Tiesinga [12] investigated the sensitivity and stability of networks with correlations between in- and out-degrees per neuron. They find that disassortative in-out-degree correlations improve stability of the networks and have no impact on the network sensitivity. While the sensitivity of their network model also relies on amplification of the signal by recurrent activity, they use background noise that drives neuronal activity and is independent of the stimulus. Hence, in their model the network does not operate in the sub-threshold regime, where neurons would not spike without recurrent activity. Contrary to them, the stimulus in our model is the only source of external driving force to the network, so that sustained recurrent activity is important to activate the neurons. Moreover, they investigated correlations between in- and out-degrees of the neurons and assume no correlations between in-degrees of connected neurons, whereas in our study in- and out-degrees are positively correlated and correlations between in-degrees of connected neurons are considered. Their finding that various in-out-degree correlations have no impact on the sensitivity of the networks are thus no contradiction to our results and may even generalize them.

We examined highly simplified model networks so that we could focus on their connectivity structure. Possibly the most relevant feature we neglected in our study is inhibitory connectivity. Our model could easily be extended to include inhibitory neurons by treating them as separate populations. However, one would have to decide weather to focus on the structural properties of the excitatory sub-network [13], or to include the inhibitory neurons as a random subset of neurons in the network, without changing the statistics of the connections [15]. Examining the population dynamics of inhibitory and excitatory neurons goes beyond the scope of this paper, but the dynamics of mixed networks with degree correlations could be approached in further studies. So far, we can only speculate how our results would be affected by including inhibitory neurons in the model: The most important effect leading to an increased signal transmission of correlated networks is their improved ability to sustain firing for low inputs. In a mixed excitatory-inhibitory network (EI), assortativity in the excitatory sub-network (E) would possibly create a similar effect, because recurrent excitatory activity is increased for highly connected neurons, while inhibitory activity remains very low. In a recent study of Roxin [13], the analysis of a rate model revealed that a broad in-degree distribution of E-to-E connections in an EI network promotes oscillations because of higher firing rates of strongly connected neurons: These neurons inject large excitatory currents into the network and raise the mean recurrent activity of the whole excitatory population. Hence, we could expect that assortativity further increases the mean firing rate of the excitatory population in these networks. Another study by Pernice, Deger, Cardanobile and Rotter [15] showed that assortativity increases the firing rate of a network, where a random fraction of the neurons are inhibitory.

On a final note, while our results show that intermediate assortative connectivity correlations optimize signal transmission, it is important to stress that the neuronal activity itself is assumed to be uncorrelated. Research focusing on correlations of activity in uncorrelated networks also shows that moderate levels of correlation can be linked to advantages in information processing [36, 57, 58]. On the one hand, highly correlated activity occurs in networks with strong recurrent excitation and is associated with decreased information transfer [59]. On the other hand, uncorrelated activity in networks with weak recurrent excitation is insufficiently small to carry significant information. Consequently, optimal information transfer has been found in networks of intermediate excitability which operate at criticality [36, 60, 61]. Our results are consistent with these findings: Activity of uncorrelated networks is too low to contain information about low inputs, whereas activity in highly assortative networks is not sensitive enough to changes in the stimulus for optimal information transmission.

Materials and Methods

Fokker-Planck Description of Complex Networks of Leaky IF Neurons

We would like to describe a population of leaky integrate-and-fire (LIF) neurons that form a network with assortative (or disassortative) property. First, we need to characterize the network statistically with the aim to extract appropriate means and variances of connectivity. Generally we will classify the neurons according to their in-degree, which will be denoted by k. Thus, the network consists of neural subpopulations distinguished by k. Now, we count the number of directed links E kk′ that originate from neurons with in-degree k′ (k′-neurons) and go into neurons with in-degree k (k-neurons). We are interested in the mean number of k′-neurons that synapse into a random k-neuron

| (33) |

We will refer to N kk′ as the joint degree distribution.

k-Population Density Approach

In the following we want to express the dynamics of a network of integrate and fire neurons subject to the usual evolution equation of their membrane potential . Here the superscript k denotes the in-degree of the neuron and i loops over the neurons of the k-population. Then, the equation for the potential is

| (34) |

where τ = RC is a time constant related to an RC-circuit. When the voltage reaches a threshold θ we assume that a δ-function spike is emitted by the neuron and the voltage is reset to a value V reset for a constant refractory time τ ref.

The synaptic input current is given by

| (35) |

Here J is the efficacy of synaptic connections, j loops over the k′-neurons that synapse onto the k-neuron i (here we assume all synapses to be of equal strength) and is the arrival time of the l-th spike of the j-th k′-neuron. Below threshold, the Eq (34) can be integrated analytically:

| (36) |

where H(⋅) is the Heaviside function. We will now follow the population density approach as described by Deco, Jirsa, Robinson, Breakspear and Friston [31], but, different from them, we treat neurons as distinguishable by their in-degree. Thus, we define the k-th probability density by

| (37) |

which is the probability of a k-neuron to have a membrane potential in the interval [v k, v k + dv k]. We employ a Kramers-Moyal expansion to find the relevant Fokker-Planck equation. For this purpose we need the infinitesimal evolution of the voltage

| (38) |

Here, is the firing rate of an individual neuron averaged for the k′-population. From this equation we can determine the first two moments of depolarization

| (39) |

and

| (40) |

From this we find the Fokker-Planck equations

| (41) |

where drift and diffusion coefficients are and with the input mean and variance

| (42) |

and

| (43) |

respectively. We should note that the derivation of the system of Fokker-Planck Eq (41) is based on the assumption that each population can be described by a separate probability distribution. In general, the system of coupled Langevin Eq (4) is equivalent to a multivariate Fokker-Planck equation (see [62]). The coupled system of one-dimensional Fokker-Planck Eq (41) can be derived from the multivariate Fokker-Planck equation by separation of variables.

Self-Consistent Equations for the Stationary Problem

The Fokker-Planck equations can be written in a conservation-of-probability form:

| (44) |

with

| (45) |

At the voltage threshold, the stationary solution should vanish,

| (46) |

and the probability current J k should represent the mean firing rate, , of the population:

| (47) |

At v k → −∞ we need for the integrability of p k

| (48) |

Finally, we need to account for the neurons leaving the threshold at time t to be reinjected at the reset potential V r after a refractory time τ ref:

| (49) |

The stationary solution of Eqs (46–49) is

| (50) |

The normalization of probability mass requires

| (51) |

We solve Eqs (50 and 51) for and find

| (52) |

where is the error function and k min ≤ k ≤ k max, with k min and k max being the minimum and maximum in-degree in the network, respectively.

Note that the functions depend on the firing rates of all populations and thus couple all firing rates. A self-consistent solution of Eq (52) can be obtained by dynamically solving the coupled system of equations [31]

| (53) |

where τ x is a time-constant of appropriate choice (we used τ x = 3 ms for our simulations) [24, 31].

Network Model

We apply our k-population model to a network with power-law in-degree distribution P(k) ∼ k −γ. In real neuronal networks, the power-law dependency is always confined to a region between a minimum and maximum degree k min < k < k max, where k max cannot exceed the number of neurons of the network. Hence, we use the following degree distribution

| (54) |

with the normalization constant

| (55) |

We show exemplary results for the following set of parameters: γ = −2, k min = 10, k max = 500. Simulation parameters resemble typical values found in the cortex: τ = 20 ms, τ ref = 2 ms, V r = 10 mV, J = 0.1 mV, N = 105. The time-step of the integration is 0.01 ms and synaptic delays D ij are drawn uniformly at random between 0 ms and 6 ms [9]. Random delays are included to prevent synchronized cascading firing of the whole network, which is discussed extensively in [63]. Importantly, they do not alter the steady-state Eq (52). Firing rates of the neurons are obtained by counting their spikes in 1 s simulation.

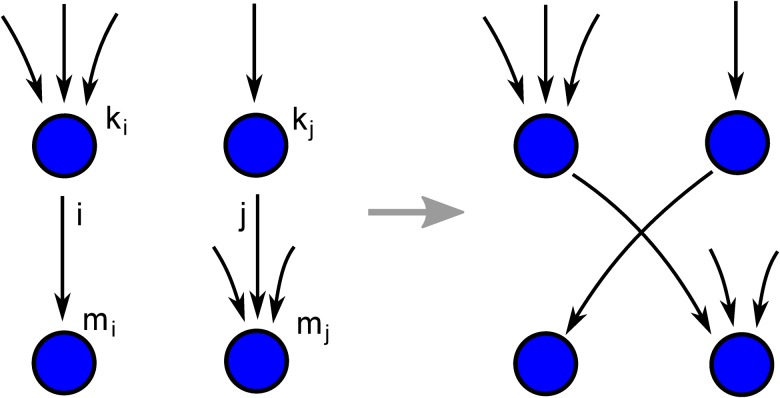

For construction of the model network we employ the configuration model of Newman, Strogatz, and Watts [46], which creates random networks with the desired in- and out-degree distribution. In short, the algorithm works as follows: Each neuron of the network is assigned a target in- and out-degree, drawn from the desired degree distribution. The target-degrees of a neuron can be regarded as a number ingoing and outgoing stubs. The algorithm successively connects randomly selected in- and out-stubs until all neurons in the network match their target-degrees with no free stubs left. Self-connections and multiple connections between the same neurons are removed. Then, degree correlations are imposed on the network by a Metropolis algorithm [47], which swaps links according to the in-degrees of the connected neurons. The algorithm randomly selects two links i, j, originating at neurons with in-degrees k i, k j and going into neurons with in-degrees m i, m j (Fig 10). The targets are swapped with probability g if the swap increases the desired in-degree correlations of the network. Respectively, the targets are swapped at random with probability 1 − g, which reduces existing degree correlations in the network. Thus, the strength of degree correlations can be adjusted by setting a value of g between 0 (uncorrelated) and 1 (maximally correlated).

Fig 10. Schematic of a link swap that increases assortative in-degree correlations in the network without changing the in- and out-degrees of the nodes.

A swap increases assortativity, if

| (56) |

A simple schematic for an link swap that increases assortative in-degree correlations is shown in Fig 10. A swap increases disassortativity, if

| (57) |

The swapping procedure is repeated until the network reaches a steady state. We found the steady-state to set in at about 109 iterations for a network of size N = 105 (Fig 11).

Fig 11. Connectivity correlations of the model network.

(A) Pearson degree correlation coefficient for the model network of size N = 105 after repeated node swapping for different probabilities g. (B) Pearson degree correlation coefficient after saturation (1010 swaps).

Acknowledgments

We thank C.S. Zhou and J. Mejias for valuable discussions.

Data Availability

All relevant data are within the paper and its Supporting Information files.

Funding Statement

CS, IS, and SR were supported by the Deutsche Forschungsgemeinschaft (IRTG 1740). AK was supported by FAPESP. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Bullmore E, Sporns O. Complex brain networks: graph theoretical analysis of structural and functional systems. Nature Reviews Neuroscience. 2009. February;10(3):186–198. [DOI] [PubMed] [Google Scholar]

- 2. Sporns O. Networks of the Brain. MIT press; 2011. [Google Scholar]

- 3. Bassett DS, Bullmore E. Small-world brain networks. The neuroscientist. 2006;12(6):512–523. 10.1177/1073858406293182 [DOI] [PubMed] [Google Scholar]

- 4. Wu K, Taki Y, Sato K, Sassa Y, Inoue K, Goto R, et al. The overlapping community structure of structural brain network in young healthy individuals. PLoS One. 2011;6(5):e19608 10.1371/journal.pone.0019608 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Eguiluz VM, Chialvo DR, Cecchi GA, Baliki M, Apkarian AV. Scale-free brain functional networks. Physical review letters. 2005;94(1):018102 10.1103/PhysRevLett.94.018102 [DOI] [PubMed] [Google Scholar]

- 6. Achard S, Salvador R, Whitcher B, Suckling J, Bullmore E. A resilient, low-frequency, small-world human brain functional network with highly connected association cortical hubs. The Journal of Neuroscience. 2006;26(1):63–72. 10.1523/JNEUROSCI.3874-05.2006 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7. Sporns O, Honey CJ, Kötter R. Identification and classification of hubs in brain networks. PloS one. 2007;2(10):e1049 10.1371/journal.pone.0001049 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. van den Heuvel MP, Sporns O. Rich-club organization of the human connectome. The Journal of neuroscience. 2011;31(44):15775–15786. 10.1523/JNEUROSCI.3539-11.2011 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Brunel N. Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. Journal of computational neuroscience. 2000;8(3):183–208. 10.1023/A:1008925309027 [DOI] [PubMed] [Google Scholar]

- 10. Benayoun M, Cowan JD, van Drongelen W, Wallace E. Avalanches in a stochastic model of spiking neurons. PLoS computational biology. 2010;6(7):e1000846 10.1371/journal.pcbi.1000846 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Voges N, Perrinet L. Complex dynamics in recurrent cortical networks based on spatially realistic connectivities. Frontiers in computational neuroscience. 2012;6 10.3389/fncom.2012.00041 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12. Vasquez JC, Houweling AR, Tiesinga P. Simultaneous stability and sensitivity in model cortical networks is achieved through anti-correlations between the in-and out-degree of connectivity. Frontiers in computational neuroscience. 2013;7 10.3389/fncom.2013.00156 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Roxin A. The role of degree distribution in shaping the dynamics in networks of sparsely connected spiking neurons. Frontiers in computational neuroscience. 2011;5 10.3389/fncom.2011.00008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Zhao L, Bryce Beverlin I, Netoff T, Nykamp DQ. Synchronization from second order network connectivity statistics. Frontiers in computational neuroscience. 2011;5 10.3389/fncom.2011.00028 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Pernice V, Deger M, Cardanobile S, Rotter S. The relevance of network micro-structure for neural dynamics. Frontiers in computational neuroscience. 2013;7 10.3389/fncom.2013.00072 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16. Cai D, Tao L, Shkarayev MS, Rangan AV, McLaughlin DW, Kovacic G. The role of fluctuations in coarse-grained descriptions of neuronal networks. Comm Math Sci. 2012;10:307–354. 10.4310/CMS.2012.v10.n1.a14 [DOI] [Google Scholar]

- 17. Margrie TW, Brecht M, Sakmann B. In vivo, low-resistance, whole-cell recordings from neurons in the anaesthetized and awake mammalian brain. Pflügers Archiv. 2002;444(4):491–498. [DOI] [PubMed] [Google Scholar]

- 18. Brecht M, Roth A, Sakmann B. Dynamic receptive fields of reconstructed pyramidal cells in layers 3 and 2 of rat somatosensory barrel cortex. The Journal of physiology. 2003;553(1):243–265. 10.1113/jphysiol.2003.044222 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19. Hromádka T, DeWeese MR, Zador AM. Sparse representation of sounds in the unanesthetized auditory cortex. PLoS biology. 2008;6(1):e16 10.1371/journal.pbio.0060016 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20. Yassin L, Benedetti BL, Jouhanneau JS, Wen JA, Poulet JF, Barth AL. An embedded subnetwork of highly active neurons in the neocortex. Neuron. 2010;68(6):1043–1050. 10.1016/j.neuron.2010.11.029 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21. Mejias J, Longtin A. Optimal heterogeneity for coding in spiking neural networks. Physical Review Letters. 2012;108(22):228102 10.1103/PhysRevLett.108.228102 [DOI] [PubMed] [Google Scholar]

- 22. Houweling AR, Doron G, Voigt BC, Herfst LJ, Brecht M. Nanostimulation: manipulation of single neuron activity by juxtacellular current injection. Journal of neurophysiology. 2010;103(3):1696–1704. 10.1152/jn.00421.2009 [DOI] [PubMed] [Google Scholar]

- 23. Wilson HR, Cowan JD. Excitatory and inhibitory interactions in localized populations of model neurons. Biophysical journal. 1972;12(1):1–24. 10.1016/S0006-3495(72)86068-5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24. Gerstner W, Kistler WM. Spiking neuron models: Single neurons, populations, plasticity. Cambridge university press; 2002. [Google Scholar]

- 25. Pouget A, Dayan P, Zemel R. Information processing with population codes. Nature Reviews Neuroscience. 2000;1(2):125–132. 10.1038/35039062 [DOI] [PubMed] [Google Scholar]

- 26. Paradiso M. A theory for the use of visual orientation information which exploits the columnar structure of striate cortex. Biological Cybernetics. 1988;58(1):35–49. 10.1007/BF00363954 [DOI] [PubMed] [Google Scholar]

- 27. Lee C, Rohrer WH, Sparks DL, et al. Population coding of saccadic eye movements by neurons in the superior colliculus. Nature. 1988;332(6162):357–360. 10.1038/332357a0 [DOI] [PubMed] [Google Scholar]

- 28. Georgopoulos AP, Schwartz AB, Kettner RE. Neuronal population coding of movement direction. Science. 1986;233(4771):1416–1419. 10.1126/science.3749885 [DOI] [PubMed] [Google Scholar]

- 29. Douglas RJ, Martin KA. Recurrent neuronal circuits in the neocortex. Current Biology. 2007;17(13):R496–R500. 10.1016/j.cub.2007.04.024 [DOI] [PubMed] [Google Scholar]

- 30. Abbott L, van Vreeswijk C. Asynchronous states in networks of pulse-coupled oscillators. Physical Review E. 1993;48(2):1483 10.1103/PhysRevE.48.1483 [DOI] [PubMed] [Google Scholar]

- 31. Deco G, Jirsa VK, Robinson PA, Breakspear M, Friston K. The dynamic brain: from spiking neurons to neural masses and cortical fields. PLoS computational biology. 2008;4(8):e1000092 10.1371/journal.pcbi.1000092 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32. Burkitt AN. A review of the integrate-and-fire neuron model: II. Inhomogeneous synaptic input and network properties. Biological cybernetics. 2006;95(2):97–112. 10.1007/s00422-006-0082-8 [DOI] [PubMed] [Google Scholar]

- 33. Newman ME. Assortative mixing in networks. Physical review letters. 2002;89(20):208701 10.1103/PhysRevLett.89.208701 [DOI] [PubMed] [Google Scholar]

- 34. Averbeck BB, Latham PE, Pouget A. Neural correlations, population coding and computation. Nature Reviews Neuroscience. 2006;7(5):358–366. 10.1038/nrn1888 [DOI] [PubMed] [Google Scholar]

- 35. Arenas A, Díaz-Guilera A, Kurths J, Moreno Y, Zhou C. Synchronization in complex networks. Physics Reports. 2008;469(3):93–153. 10.1016/j.physrep.2008.09.002 [DOI] [Google Scholar]

- 36. Shew WL, Yang H, Yu S, Roy R, Plenz D. Information capacity and transmission are maximized in balanced cortical networks with neuronal avalanches. The Journal of Neuroscience. 2011;31(1):55–63. 10.1523/JNEUROSCI.4637-10.2011 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37. de Santos-Sierra D, Sendiña-Nadal I, Leyva I, Almendral JA, Anava S, Ayali A, et al. Emergence of small-world anatomical networks in self-organizing clustered neuronal cultures. PLOS ONE. 2014;9(1):e85828 10.1371/journal.pone.0085828 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38. Teller S, Granell C, De Domenico M, Soriano J, Gómez S, Arenas A. Emergence of assortative mixing between clusters of cultured neurons. Entropy. 2013;15:5464–5474. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39. Schmeltzer C, Kihara A, Sokolov I, Rüdiger S. A k-population model to calculate the firing rate of neuronal networks with degree correlations. BMC Neuroscience. 2014;15(Suppl 1):O14 10.1186/1471-2202-15-S1-O14 [DOI] [Google Scholar]

- 40. Varshney LR, Chen BL, Paniagua E, Hall DH, Chklovskii DB. Structural properties of the Caenorhabditis elegans neuronal network. PLoS computational biology. 2011;7(2):e1001066 10.1371/journal.pcbi.1001066 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41. Piraveenan M, Prokopenko M, Zomaya A. Assortative mixing in directed biological networks. IEEE/ACM Transactions on Computational Biology and Bioinformatics (TCBB). 2012;9(1):66–78. 10.1109/TCBB.2010.80 [DOI] [PubMed] [Google Scholar]

- 42.Xulvi-Brunet R. Structural properties of scale-free networks. Humboldt-Universität zu Berlin, Mathematisch-Naturwissenschaftliche Fakultät I; 2007.

- 43. Bettencourt LMA, Stephens GJ, Ham MI, Gross GW. Functional structure of cortical neuronal networks grown in vitro. Physical Review E. 2007;75(2):021915 10.1103/PhysRevE.75.021915 [DOI] [PubMed] [Google Scholar]

- 44. Downes JH, Hammond MW, Xydas D, Spencer MC, Becerra VM, Warwick K, et al. Emergence of a Small-World Functional Network in Cultured Neurons. PLoS computational biology. 2012;8(5):e1002522 10.1371/journal.pcbi.1002522 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45. Newman ME. Mixing patterns in networks. Physical Review E. 2003;67(2):026126 10.1103/PhysRevE.67.026126 [DOI] [PubMed] [Google Scholar]

- 46. Newman ME, Strogatz SH, Watts DJ. Random graphs with arbitrary degree distributions and their applications. Physical Review E. 2001;64(2):026118 10.1103/PhysRevE.64.026118 [DOI] [PubMed] [Google Scholar]

- 47. Xulvi-Brunet R, Sokolov IM. Reshuffling scale-free networks: From random to assortative. Phys Rev E. 2004. December;70:066102 10.1103/PhysRevE.70.066102 [DOI] [PubMed] [Google Scholar]

- 48. Newman ME. Assortative mixing in networks. Physical Review Letters. 2002;89(20):208701 10.1103/PhysRevLett.89.208701 [DOI] [PubMed] [Google Scholar]

- 49. Kovačič G, Tao L, Rangan AV, Cai D. Fokker-Planck description of conductance-based integrate-and-fire neuronal networks. Phys Rev E. 2009;80:021904 10.1103/PhysRevE.80.021904 [DOI] [PubMed] [Google Scholar]

- 50. Dayan P, Abbott LF. Theoretical Neuroscience: Computational and Mathematical Modeling of Neural Systems. The MIT Press; 2005. [Google Scholar]

- 51. Tkačik G, Callan CG, Bialek W. Information flow and optimization in transcriptional regulation. Proceedings of the National Academy of Sciences. 2008;105(34):12265–12270. 10.1073/pnas.0806077105 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52. Kandel ER, Schwartz JH, Jessell TM, et al. Principles of neural science. vol. 4 McGraw-Hill New York; 2000. [Google Scholar]

- 53. Laughlin SB. Energy as a constraint on the coding and processing of sensory information. Current Opinion in Neurobiology. 2001;11(4):475–480. 10.1016/S0959-4388(00)00237-3 [DOI] [PubMed] [Google Scholar]

- 54. de Franciscis S, Johnson S, Torres JJ. Enhancing neural-network performance via assortativity. Physical Review E. 2011;83(3):036114 10.1103/PhysRevE.83.036114 [DOI] [PubMed] [Google Scholar]

- 55.Brede M, Sinha S. Assortative mixing by degree makes a network more unstable. arXiv preprint cond-mat/0507710. 2005;.

- 56. Wang B, Zhou T, Xiu Z, Kim B. Optimal synchronizability of networks. The European Physical Journal B. 2007;60(1):89–95. 10.1140/epjb/e2007-00324-y [DOI] [Google Scholar]

- 57. Pola G, Thiele A, Hoffmann K, Panzeri S. An exact method to quantify the information transmitted by different mechanisms of correlational coding. Network-Computation in Neural Systems. 2003;14(1):35–60. 10.1088/0954-898X/14/1/303 [DOI] [PubMed] [Google Scholar]

- 58. Jacobs AL, Fridman G, Douglas RM, Alam NM, Latham PE, Prusky GT, et al. Ruling out and ruling in neural codes. Proceedings of the National Academy of Sciences. 2009;106(14):5936–5941. 10.1073/pnas.0900573106 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.Rieke F, Warland D, Deruytervansteveninck R, Bialek W. Spikes: Exploring the Neural Code (Computational Neuroscience). 1999;.

- 60. Kinouchi O, Copelli M. Optimal dynamical range of excitable networks at criticality. Nature Physics. 2006;2(5):348–351. 10.1038/nphys289 [DOI] [Google Scholar]

- 61. Beggs JM. The criticality hypothesis: how local cortical networks might optimize information processing. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences. 2008;366(1864):329–343. 10.1098/rsta.2007.2092 [DOI] [PubMed] [Google Scholar]

- 62. Risken H. The Fokker-Planck Equation. Springer; 1984. [Google Scholar]

- 63. Newhall KA, Kovačič G, Kramer PR, Cai D. Cascade-induced synchrony in stochastically driven neuronal networks. Physical Review E. 2010;82(4):041903 10.1103/PhysRevE.82.041903 [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

All relevant data are within the paper and its Supporting Information files.