Abstract

We form first impressions of many traits based on very short interactions. This study examines whether typical adults judge children with high-functioning autism (HFA) to be more socially awkward than their typically developing (TD) peers based on very brief exposure to still images, audio-visual, video-only, or audio-only information. We used video and audio recordings of children with and without HFA captured during a story-retelling task. Typically developing adults were presented with 1-second and 3-second clips of these children, as well as still images, and asked to judge whether the person in the clip was socially awkward. Our findings show that participants who are naïve to diagnostic differences between the children in the clips judged children with HFA to be socially awkward at a significantly higher rate than their typically developing peers. These results remain consistent for exposures as short as 1 second to visual and/or auditory information, as well as for still images. These data suggest that typical adults use subtle non-verbal and non-linguistic cues produced by children with HFA to form rapid judgments of social awkwardness with the potential for significant repercussions in social interactions.

Keywords: autism, facial and vocal expressions, social awkwardness, thin slices, first impressions, zero-acquaintance

During any face-to-face interaction, people extract information about many traits from facial and vocal expressions. First impressions that are relevant for survival, such as how threatening a new conversation partner appears, can be formed in as little as 39 ms (Ghijsen, 2004). After only 100 ms of exposure to photographs of unfamiliar faces, participants reliably judge less survival-driven traits, such as attractiveness, likeability, trustworthiness, competence, and aggressiveness (Wearden et al., 1998). People can also make accurate judgments of more complex, socially and interpersonally relevant features from “thin slices” of behavior (Hassin et al., 2013; Whalen et al., 2013), i.e., very short segments (seconds to minutes) of dynamic video and audio (Rule and Ambady, 2009; Lindquist and Gendron, 2013; Judd et al., 2012; Rigato and Farroni, 2013; Parkinson, 2013). These rapidly formed first impressions have significant implications for our interactions with others. Evidence shows that we often do not substantially revise our first impressions after prolonged exposure (Widen, 2013), suggesting that they continue to shape whatever interaction follows. For individuals who project less than optimal social competence at first glance, this pattern can have significant negative effects.

Individuals with autism represent one such group: autism spectrum disorder (ASD) is a developmental disorder characterized by significant deficits in social interaction, which are at least partly linked to difficulty with non-verbal communication (Lord et al., 2012; McCann and Peppé, 2003). The segment of the ASD population showing the largest increase in numbers consists of individuals with high-functioning autism (HFA), who have preserved language and cognitive skills despite significantly impaired social communication abilities (CDC, 2009).

A few studies indicate that typically developing (TD) individuals perceive the nonverbal expressions of individuals with HFA as awkward, less engaging, or unusual (Heerey et al., 2003; de Marchena and Eigsti, 2010; McCann and Peppé, 2003; Yirmiya et al., 1989). So far, however, this perceived awkwardness has been documented mostly through informal observations made in the course of related studies or through expression coding performed by trained observers.

Our previous work showed that trained observers, who were blind to diagnosis but aware that half the stimuli were produced by children with HFA, rated these children as more awkward than their TD peers on a Likert-type scale (Grossman et al., 2013). Those ratings were based on video and audio recordings that were 5–10 seconds long, each containing a complete sentence or phrase, and thus provided substantial linguistic and communicative information that could influence observers' perceptions. The raters themselves were research assistants trained to work with children with HFA and therefore aware of the specific behaviors and nuances that might trigger a perception of social awkwardness related to an autism diagnosis. Importantly, these raters reported increased awkwardness even though concurrent ratings showed that the emotions portrayed by children with HFA were categorically as accurate as those of their TD peers (Grossman et al., 2013). In other words, children with HFA are capable of producing accurate, communicative emotional facial and vocal expressions, but these productions are nonetheless perceived as qualitatively awkward by trained raters who are asked to attend to the naturalness of the expression.

Very little attention has been paid to whether naïve observers, as opposed to trained research assistants, perceive the communicative facial and vocal expressions of children with HFA as quantifiably more awkward than those of their TD peers when they are not directed to look for autism-specific traits. Stagg et al. (2013) found that TD adults who were naïve to diagnosis rated children with ASD as less expressive overall. TD children (ages 10–11) rated the same cohort of children with ASD as less desirable friends based on 50-second videos of personal narratives presented without a soundtrack. These data indicate that the facial expressions of children with ASD may signal traits that are perceived as undesirable in close social interactions, even by naïve observers. The question remains how quickly this impression is formed and what type of input (auditory, visual, or both) leads TD observers to such judgments.

Considering the importance of first impressions in any social interaction, we wanted to know whether TD observers perceive increased social awkwardness in individuals with HFA from very thin slices, or short segments, of behavior. We were particularly interested in the first impressions of TD individuals who are naïve to diagnostic status, not trained on any particular rating scale, and have no familiarity with autism in general. This information is critical to our understanding of the mechanisms hindering the social integration of children with HFA. If their TD peers perceive children with HFA as socially awkward and “different” from the mainstream after very brief exposures, this could help explain the barriers to social integration experienced by these high-functioning individuals. To pursue the possible origins of these barriers further, we ask two main questions: (1) Do naïve TD adults without prior experience with autism rate children with and without HFA differently on perceived social awkwardness after very brief exposures to audio and/or visual information? (2) What type of information (facial, vocal, dynamic, or still) do naïve TD adults rely on most to make that determination? Evidence shows that dynamic cues are highly relevant to this type of determination (Dittrich et al., 1996; Balas et al., 2012) and that the facial and vocal expressions of children with HFA may differ from the TD norm, specifically in aspects of timing. Individuals with HFA show subtle differences in the synchrony of facial and vocal expressions compared to their TD peers (Grossman et al., 2010; Atkinson and Smithson, 2013; Peppé, 2009), and these differences may underlie the perceived awkwardness. We therefore predict that very brief exposures, or “thin slices,” of behavior containing dynamic features in the video and audio modalities will allow typical adults to differentiate between children with and without HFA based purely on the perception of social awkwardness.
We further predict that still images, which are devoid of dynamic contours, will not provide sufficient perceptual cues to allow for that distinction.

Methods

Stimuli

We used videotapes recorded as part of a prior study (Heerey et al., 2003) in which children with HFA and TD peers were asked to retell four brief stories (25–32 seconds each) about a young man's photo safari adventures. Each story contained positive (happiness, surprise) and negative (anger, fear) emotions. To elicit engaging retellings, we asked the children to imagine telling these stories to young children, because evidence shows that highly expressive individuals allow coders to judge their traits more accurately (Borkenau and Liebler, 1992; Friedman et al., 1980; Riggio and Friedman, 1982). Children in the stimulus elicitation study were provided with written scripts suspended below the camera lens, which ensured that all of them looked toward the camera for the duration of the retelling. This task equalized the verbal content across videos, so that vocabulary choice and grammatical structure could not provide cues to differentiate between the two groups of stimulus producers (Hassin et al., 2013). Children with HFA were confirmed to be on the autism spectrum through direct assessment with the Autism Diagnostic Observation Schedule (ADOS; Lord et al., 2000) and expert opinion. They were categorized as having HFA on the basis of documented preserved language and cognitive skills, and they were group-matched with their TD peers on IQ and receptive vocabulary.

In Grossman et al. (2013), we report Likert-type ratings of the degree of awkwardness for all videos. These ratings were made by research assistants who were trained on the rating scales but blind to the diagnosis of the children in the videos. We used those ratings to select videos for the present study, eliminating videos of children (HFA or TD) whose awkwardness scores were extreme outliers within their respective groups. We also excluded stimuli with poor video or sound quality. From the original set of videos of fourteen children with HFA and twelve TD controls, we were able to create stimuli from videos of nine children with HFA and ten TD controls, ranging in age from 9:10 to 18:10 (years:months), with a mean age of 12:7. Two of the TD children were female; all others, including all children with HFA, were male. All children were Caucasian. The matching criteria for children with and without ASD and the detailed procedure for recording the videos can be found in Grossman et al. (2013). We edited the original story-retelling videotapes into very brief clips, each starting at the onset of a phrase or sentence. All four target emotions (happiness, fear, anger, and positive surprise) were represented in the collection of clips. We did not include phrases with neutral emotion, which were typically the introductory sentences of each story. We saved each clip once as a 1-second video and once as a 3-second video. We selected these lengths because three seconds was the length of some of the shortest complete sentences or phrases, while one second was judged to be the shortest clip length that could still reasonably be perceived as containing meaningful communicative information. In contrast to studies that rate personality traits from much briefer exposures to still images, we wanted to preserve the linguistic nature of these clips by presenting a minimum of recognizable language content.
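The text does not specify which criterion defined an "extreme outlier." As a minimal sketch only, the Python below assumes Tukey's "far out" rule (mean scores more than 3 × IQR beyond the quartiles), applied separately within the HFA and TD groups; the child IDs, the scores, and the `k = 3.0` multiplier are hypothetical and not taken from the study.

```python
import statistics


def tukey_extreme_fences(scores, k=3.0):
    """Return (lower, upper) fences at k * IQR beyond the quartiles.

    k = 3.0 is Tukey's conventional cut-off for "far out" (extreme) values;
    this choice is an assumption, not the study's documented criterion.
    """
    q1, _, q3 = statistics.quantiles(scores, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def drop_extreme_outliers(mean_score_by_child, k=3.0):
    """Keep only children whose mean awkwardness rating lies within the fences.

    mean_score_by_child maps a (hypothetical) child ID to that child's mean
    Likert-type rating. Call it once per diagnostic group (HFA, TD).
    """
    lower, upper = tukey_extreme_fences(list(mean_score_by_child.values()), k)
    return {child: s for child, s in mean_score_by_child.items()
            if lower <= s <= upper}


if __name__ == "__main__":
    # Hypothetical TD group: td05 lies far above the rest and is dropped.
    td = {"td01": 2.1, "td02": 2.4, "td03": 2.2, "td04": 2.3, "td05": 6.8}
    print(sorted(drop_extreme_outliers(td)))
```

Filtering within each group, rather than across the pooled sample, mirrors the text's "within their respective groups" and avoids removing children simply because their group differs from the other.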
These stimuli were designed to answer the question of whether untrained, naïve TD adults perceive children with HFA to be more socially awkward than their TD peers, even based on minimal communicative information.

Each clip was exported in four formats: audio-visual (audio and video), video-only (video without sound), audio-only (sound without video), and still image. Still images were extracted from each original video at the 1-second mark. If that frame showed the child in a particularly odd position, such as with the mouth contorted mid-articulation, we advanced the video one or two frames and extracted that frame as the still image instead.

We established three stimulus sequences covering the four formats (audio-visual, video-only, audio-only, still images). One sequence contained only audio-visual clips and another contained only still images. The third sequence contained both video-only and audio-only clips and was shown to a single cohort, since there was no modality (voice vs. face) overlap between the two formats. In other words, participants who heard a child's audio-only clips would not be able to recognize that child when they saw the same child's video-only clips.

Each stimulus sequence contained 31 unique stimuli taken from videos of children with HFA and 31 unique stimuli taken from TD children. In each stimulus sequence there were no more than six occurrences of each child, no more than two clips of the same emotion from a given child, no repetitions of the same sentence spoken in the same emotion by a given child, and an equal number of 3-second and 1-second stimuli. Stimuli within a sequence were pseudo-randomized so that no more than two instances of the same child, the same emotion, or the same sentence occurred consecutively. We created a reverse version of each stimulus sequence to counter-balance across participants. The sequence containing audio-only and video-only stimuli presented each stimulus type in a continuous block and the starting modality (audio-only or video-only) was counterbalanced across participants. Stimulus sequences were presented using Presentation software (Neurobehavioral Systems), which allows for precisely timed presentation of video and image files, as well as collection of button press responses via a hand-held gaming device.
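The ordering constraints above can be checked mechanically. The following is a minimal Python sketch, not the procedure actually used to build the sequences: it assumes simple rejection sampling (reshuffle until no child, emotion, or sentence appears more than twice in a row) and uses a hypothetical `Stimulus` record. Because a run of identical items is the same length read forwards or backwards, the reversed counterbalancing version automatically satisfies the same constraints.

```python
import random
from typing import NamedTuple


class Stimulus(NamedTuple):
    child: str      # hypothetical child ID
    emotion: str    # happiness, surprise, anger, or fear
    sentence: int   # index of the scripted sentence
    length_s: int   # clip length in seconds (1 or 3)


def max_run(seq, key):
    """Length of the longest run of consecutive items with equal key(item)."""
    longest, run, prev = 0, 0, object()  # sentinel never equals a real key
    for item in seq:
        k = key(item)
        run = run + 1 if k == prev else 1
        longest = max(longest, run)
        prev = k
    return longest


def satisfies_constraints(seq, max_consecutive=2):
    """True if no child, emotion, or sentence occurs > max_consecutive times in a row."""
    keys = (lambda s: s.child, lambda s: s.emotion, lambda s: s.sentence)
    return all(max_run(seq, key) <= max_consecutive for key in keys)


def pseudo_randomize(stimuli, rng, max_tries=10_000):
    """Shuffle until the constraints hold; return (forward, reversed) orders."""
    order = list(stimuli)
    for _ in range(max_tries):
        rng.shuffle(order)
        if satisfies_constraints(order):
            # Reversal preserves run lengths, so the counterbalanced
            # version satisfies the same constraints.
            return list(order), order[::-1]
    raise RuntimeError("no valid order found; raise max_tries or relax constraints")


if __name__ == "__main__":
    emotions = ["happiness", "surprise", "anger", "fear"]
    stimuli = [Stimulus(f"c{c}", e, s, 1 if s % 2 else 3)
               for c in range(6) for s, e in enumerate(emotions)]
    forward, backward = pseudo_randomize(stimuli, random.Random(0))
    print(len(forward), satisfies_constraints(backward))
```

Rejection sampling is the simplest approach and works well when valid orders are common; with tighter constraints, a constructive or backtracking ordering would terminate more reliably.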

Participants

All procedures were conducted in accordance with the guidelines of the local Institutional Review Board. We recruited typical adults via word of mouth and advertisements posted on campus and compensated them with a $10 gift card. After completing the task, participants were asked about prior exposure to individuals with autism spectrum disorder (ASD). Eight participants with prior experience, such as having a family member with ASD or working at a summer camp for children with ASD, were excluded from the sample. We present data for a total of 87 participants (64 female, 23 male) ranging in age from 19 to 49 years, with a mean age of 23. Eight participants self-identified as Asian, four as Hispanic, and four as African American or Black. Thirty-one participants completed the audio-visual sequence, 29 the still-image sequence, and 27 the audio-only and video-only sequence.

Procedure

Participants were familiarized with the task but were not told anything about the diagnostic status of the children in the videos. Participants were told to look at and/or listen to each clip or image carefully and to answer whether the person they had just seen or heard appeared to be socially awkward. To illustrate the concept, we asked participants to imagine encountering the children in the videos or images only briefly in a social group situation and to give their immediate response as to whether that person was socially awkward based on that brief perception. We used this visualization scenario because it achieved inter-coder reliability greater than 85% in our previous study (Grossman et al., 2013), indicating that TD adults interpret the concept of social awkwardness in this context in fairly similar ways. We opted for a simple dichotomous choice rather than a Likert-type scale to ensure that participants made a definite decision for each stimulus.

Prior to beginning the study, participants had time to become comfortable with the gaming device where pressing a button with the right index finger indicated “yes,” and pressing another button with the left index finger indicated “no.” We collected data on the frequency with which stimuli were rated as socially awkward, based on button-press responses.

Results

We first established that there was no difference in how male and female participants rated the stimuli by conducting a one-way ANOVA on the overall frequency of “socially awkward” ratings across all versions of the task (F (1,113) = .475, p = .492). To determine whether children with HFA are more frequently perceived as awkward than their TD peers, we compared the frequency of “socially awkward” judgments for each stimulus type (audio-visual, audio-only, video-only, still images) across these two diagnostic groups. We also conducted one-sample t-tests for each stimulus type comparing the frequency of socially awkward ratings to chance-level performance of 50%. We then compared frequencies of these ratings across stimulus types to determine whether any specific stimulus type resulted in higher ratings of social awkwardness. Because one cohort of TD adults rated the audio-only and video-only sequences, we used paired t-tests to compare frequencies across those two stimulus types. We used independent-samples t-tests to compare frequencies across the other stimulus types, since those were rated by different participant groups.
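The choice between paired and independent tests follows from who rated what. As an illustrative sketch (Python standard library only; it computes test statistics but not p-values, which would require a t-distribution CDF such as the one provided by SciPy), the code below assumes Student's pooled-variance form of the independent-samples t-test, since the text does not say whether a Welch correction was used. It also includes a one-way ANOVA: with exactly two groups, as in the participant-gender check, the ANOVA F equals the square of the pooled t. The input values in any usage are hypothetical.

```python
import math
import statistics


def independent_t(a, b):
    """Student's two-sample t statistic with pooled variance; returns (t, df)."""
    n1, n2 = len(a), len(b)
    # statistics.variance uses the n - 1 (sample) denominator.
    pooled_var = ((n1 - 1) * statistics.variance(a)
                  + (n2 - 1) * statistics.variance(b)) / (n1 + n2 - 2)
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (statistics.mean(a) - statistics.mean(b)) / se, n1 + n2 - 2


def one_way_anova_f(*groups):
    """One-way ANOVA F statistic; returns (F, (df_between, df_within))."""
    pooled = [x for g in groups for x in g]
    grand_mean = statistics.mean(pooled)
    ss_between = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - statistics.mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = len(groups) - 1, len(pooled) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within), (df_between, df_within)
```

For two hypothetical cohorts with percentages `[60, 55, 70, 65]` and `[50, 45, 58, 52]`, `independent_t` gives t ≈ 2.68 with df = 6, and `one_way_anova_f` on the same data gives F ≈ 7.17 with df = (1, 6), i.e., t squared.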

Frequency

We calculated frequency of socially awkward ratings as a percentage within each diagnostic group (HFA, or TD). Since there was no relevant hypothesis regarding the response accuracy for different emotions within those two groups, we collapsed our data within condition (audio-visual, audio-only, video-only, stills) across emotions and produced summary statistics for all trials containing 3-second stimuli, all trials containing 1-second stimuli, and all still stimuli. To determine whether ratings of awkwardness were different from chance for each of the two groups of children in the stimuli, we conducted one-sample t-tests with 50% (chance) as the test value for each stimulus type and stimulus length. Results show that awkwardness ratings were made at significantly higher frequency than chance level for stimuli showing children with HFA, and significantly below chance for stimuli showing TD controls. The only exception was ratings for 1-second audio-only clips of children with HFA, which were not significantly different from chance (see Table 1). Rating frequencies were not significantly different across stimulus types (audio-visual, video-only, audio-only, still images) for 3-second and 1-second stimuli. We also compared frequencies of awkwardness ratings for children with HFA directly with frequencies of awkwardness rating for TD children within each stimulus type to determine whether children with HFA were rated significantly more frequently as awkward than their TD peers for each expressive modality.
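The frequency measure and the test against chance can be sketched as follows. This Python sketch assumes each participant's responses within one condition are stored as booleans (True for a "yes, socially awkward" button press); the function names are hypothetical, and p-values are not computed. `t_from_summary` recomputes the one-sample statistic from a mean, SD, and n, which allows a rough check of the values reported in Table 1.

```python
import math
import statistics


def awkward_percentage(responses):
    """Percent of trials answered "yes" (socially awkward); responses are booleans."""
    return 100 * sum(responses) / len(responses)


def one_sample_t(percentages, chance=50.0):
    """One-sample t statistic of per-participant percentages vs. chance; returns (t, df)."""
    n = len(percentages)
    se = statistics.stdev(percentages) / math.sqrt(n)
    return (statistics.mean(percentages) - chance) / se, n - 1


def t_from_summary(mean, sd, n, chance=50.0):
    """The same statistic computed from a reported mean, SD, and sample size."""
    return (mean - chance) / (sd / math.sqrt(n))
```

For example, the audio-visual 3-second HFA row of Table 1 (mean 65.2, SD 21, n = 31) gives t ≈ 4.03, close to the reported t (30) = 4.1; the small discrepancy is consistent with the SD being rounded to a whole number in the table.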

Table 1.

Frequency of Socially Awkward Ratings by Stimulus Type

| Stimulus Type | Diagnostic Status of Stimulus Producer | Mean % (SD) | One-sample t-test vs. chance (50%) |
|---|---|---|---|
| Audio-Visual 3-second | HFA | 65.2 (21) | t (30) = 4.1, p < .0001 |
| Audio-Visual 1-second | HFA | 64.6 (20) | t (30) = 4.0, p < .0001 |
| Video-only 3-second | HFA | 62.1 (23) | t (26) = 2.6, p = .01 |
| Video-only 1-second | HFA | 60.4 (19) | t (26) = 2.9, p = .008 |
| Audio-only 3-second | HFA | 60.2 (18) | t (26) = 2.9, p = .007 |
| Audio-only 1-second | HFA | 55.0 (21) | t (26) = 1.3, p = .223 |
| Still Images | HFA | 57.9 (18) | t (28) = 2.4, p = .022 |
| Audio-Visual 3-second | TD | 35.2 (16) | t (30) = −5.2, p < .0001 |
| Audio-Visual 1-second | TD | 33.1 (15) | t (30) = −6.5, p < .0001 |
| Video-only 3-second | TD | 30.8 (19) | t (26) = −5.3, p < .0001 |
| Video-only 1-second | TD | 27.2 (19) | t (26) = −6.1, p < .0001 |
| Audio-only 3-second | TD | 28.1 (15) | t (26) = −7.8, p < .0001 |
| Audio-only 1-second | TD | 30.5 (14) | t (26) = −7.2, p < .0001 |
| Still Images | TD | 40.9 (18) | t (28) = −2.7, p = .01 |

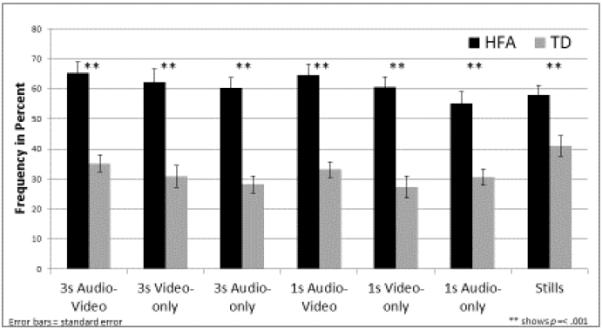

Results of paired-samples t-tests show that children with HFA were rated significantly more frequently as awkward than their TD peers for audio-visual stimuli (1-second: t (30) = 9.7, p < .0001; 3-second: t (30) = 6.9, p < .0001), video-only stimuli (1-second: t (26) = 8.1, p < .0001; 3-second: t (26) = 6.2, p < .0001), audio-only stimuli (1-second: t (26) = 5.1, p < .0001; 3-second: t (26) = 6.8, p < .0001), and still images (t (28) = 3.7, p = .001; see Figure 1).
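Because each participant rated stimuli from both diagnostic groups, the HFA-versus-TD comparison is a paired one. A minimal Python sketch, assuming one HFA percentage and one TD percentage per participant (hypothetical inputs, no p-value): the paired t-test is a one-sample t-test of the per-participant differences against zero, with df = n − 1 (e.g., 31 − 1 = 30 for the audio-visual cohort).

```python
import math
import statistics


def paired_t(hfa_pct, td_pct):
    """Paired t-test on each participant's HFA and TD awkwardness percentages.

    Equivalent to a one-sample t-test of the per-participant differences
    (HFA minus TD) against zero. Returns (t, df); p-values are not computed.
    """
    if len(hfa_pct) != len(td_pct):
        raise ValueError("each participant needs both an HFA and a TD score")
    diffs = [h - d for h, d in zip(hfa_pct, td_pct)]
    se = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return statistics.mean(diffs) / se, len(diffs) - 1
```

For example, `paired_t([65, 70, 60, 62], [35, 30, 40, 33])`, with four hypothetical participants, returns t ≈ 7.27 with df = 3. Pairing removes between-participant differences in overall willingness to answer "yes," which an independent-samples test would leave in the error term.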

Figure 1.

Frequency of socially awkward ratings per stimulus type.

Discussion

Our data clearly show that typical adults rate children with HFA as socially awkward more frequently than their TD peers based on thin slices of facial and/or vocal communicative information. The overall frequencies (between 55% and 65%) with which children with HFA are rated as socially awkward in this task are commensurate with accuracy rates reported in the literature for judging traits from brief exposures, such as intelligence (Gill et al., 2014; Bartlett et al., 2011) or sexual orientation (Dittrich et al., 1996). One of the most striking findings is that TD adults make this socially relevant judgment based on exposures as brief as one second, indicating a highly automatic decision-making process, similar to judgments of other socially relevant traits such as leadership ability (Rule and Ambady, 2009). Not all stimuli portraying children with HFA were rated as socially awkward, and several productions of TD children did receive a socially awkward rating; nevertheless, the frequency of social awkwardness ratings was significantly higher for children with HFA than for TD children in all conditions. These data indicate that the stimuli contain highly salient cues that guide rapid ratings of social awkwardness. Since verbal and grammatical content did not vary across stimuli, these cues are most likely contained in the non-verbal aspects of the communication, i.e., facial expressions and tone of voice.

The finding that still images yielded social awkwardness ratings for the HFA cohort that were significantly above chance and significantly higher than those for their TD peers contradicts our hypothesis that still images, which are devoid of dynamic information, would not provide sufficient information for participants to differentiate between children with and without HFA. There is evidence in the literature that traits such as charm (Back et al., 2010), narcissism (Naumann et al., 2009; Vazire et al., 2008), and sexual orientation (Dittrich et al., 1996) can be determined from still images. However, the images used in those studies showed the participants' whole body, thereby providing substantially more information about their traits through body posture or clothing choice. The images used in this study focused mostly on the individuals' faces and did not include the whole body.

One possible explanation for our surprising result is that there are anatomic differences in the face and head structure (dysmorphology) of individuals with HFA that can be detected quickly and effectively by TD adults in this task. Some recent studies have investigated this possibility, but the findings so far are inconclusive. Some data show an array of Minor Physical Abnormalities (MPA), including two measures of the epicanthal structure of the eye, to be characteristic of individuals with ASD when compared to typically developing age-matched individuals (Ozgen et al., 2011). However, this type of dysmorphology appears to define a subgroup of individuals with autism who have more complex medical involvement, lower IQ, and poorer outcomes (Corbett et al., 2012; Corbett et al., 2013), which does not match the population with high-functioning autism in our stimuli. Recent work using high-definition 3-D imaging has identified several facial features that are significantly more prevalent in children with ASD and their unaffected siblings, compared to TD peers (Morecraft et al., 2001). However, these features are most prevalent in unaffected siblings, making it doubtful that they would result in easily perceived social awkwardness in children with ASD.

A more compelling explanation for our surprising finding for still images may lie in the fact that the still images were extracted as screenshots from videos. Although we ensured that children were centered within the video frame at the beginning of the story-retelling task, children with HFA tended to move toward the edges of the frame by slouching, leaning, or rocking over the course of the recording session. In contrast, TD children tended to remain centered within the frame. Children with HFA were also more likely to move limbs into the frame, for example by pulling their knees up to their chin or placing their hands near their head. It is possible that the typical adult participants in this study used overall body position, rather than facial features, to determine social awkwardness in this task. One could argue that body and head position within the image frame reflect dynamic patterns captured in a still screenshot, which would support the claim that the dynamic features of social communication, rather than facial dysmorphology, are the most salient cues for the perception of social awkwardness in this population. Future studies should use still images of children with ASD that do not reveal overall body posture to test this explanation.

There is some evidence in the literature indicating that the auditory and visual domains influence each other during tasks requiring perception of personality or emotional traits (Atkinson, 2009; Clarke et al., 2005; Lopata et al., 2008). These data suggest that combining both channels of dynamic information should yield more accurate perception of targeted traits than single-channel information. Our data do not bear this out, since there is no significant difference in the frequency of awkwardness ratings among audio-only, video-only, and audio-visual stimuli. Our data do, however, support other claims in the literature that thin-slice judgment accuracy does not differ significantly across expressive channels (see Hassin et al., 2013, for a review).

Conclusion and Clinical Implications

Peppé (2009) makes a strong case for directing more research and intervention attention toward qualitative perceptual ratings of vocal and facial prosody, rather than focusing solely on detailed analyses of individual quantifiable features, such as measures of pitch and dynamic range for vocal prosody. The data presented here support the importance of further investigating qualitative aspects of vocal and facial expressions, since they have such a salient and rapid impact on how children with HFA are perceived by naïve TD observers. Overall, our data show that naïve TD adults rate children with HFA as socially awkward significantly more frequently than TD children based on very thin slices of social behavior. Since these results are based on videos of only 19 children recorded during a specific story-retelling task, we cannot generalize the findings to the entire ASD population, particularly given the inherent variability of profiles in this group (Judd et al., 2012). The findings also do not speak to the naturalness of productions during naturalistic social interactions, since our ratings were based on a scripted story-retelling task. Nevertheless, within the framework of this study, our results indicate that qualitative awkwardness in social communication is an easily identified characteristic of individuals with HFA, which may make TD individuals less inclined to interact with individuals with HFA after merely one second of exposure.

This finding may speak directly to the pervasive observations of involuntary social isolation among individuals with HFA. The rapidly formed impression of social awkwardness by TD adults could explain why TD individuals may be disinclined to fully integrate individuals with HFA into social interactions, despite their good cognitive and language skills. The question remains whether same-aged TD children, the true peers of these children with HFA, form similar judgments equally quickly and robustly. It would also be important to determine whether children with HFA are able to perceive this qualitative difference in their own productions. There is evidence that within-group participants are better at recognizing cues signaling group identity than out-of-group participants (Dittrich et al., 1996). Future work should therefore follow up on the present data by examining within-group judgments of social awkwardness for thin slices of audio and visual stimuli in both age- and diagnosis-matched cohorts.

The long-term implications of this line of research are to inform intervention into qualitative aspects of non-verbal communication production in children with HFA, while at the same time providing important evidence for the need to educate TD individuals including family members, teachers, employers, and peers, on the origins and immediacy of this perceived awkwardness to improve tolerance of communicative variations in the increasingly large group of individuals with HFA. Approaching the issue from these two sides has the potential of significantly improving social integration of this highly capable cohort.

Acknowledgements

I thank Hannah Brown and Kathryn Hasty for their assistance in stimulus creation, task administration, and data analysis. Thank you also to Daniel Kempler, Teresa Mitchell, and Helen Tager-Flusberg for their helpful comments during the preparation of this manuscript. I am grateful to the children and families who gave their time to participate in stimulus creation for this study.

Funding Acknowledgement

This work was supported by NIDCD Grant R21 DC010867-01, by NIH Intellectual and Developmental Disabilities Research Center P30 Grant HDP30HD004147, and by a grant from the Emerson College Faculty Advancement Fund.

Works Cited

- Atkinson AP. Impaired recognition of emotions from body movements is associated with elevated motion coherence thresholds in autism spectrum disorders. Neuropsychologia. 2009;47:3023–3029. doi: 10.1016/j.neuropsychologia.2009.05.019. [DOI] [PubMed] [Google Scholar]

- Atkinson AP, Smithson HE. Distinct Contributions to Facial Emotion Perception of Foveated versus Nonfoveated Facial Features. Emotion Review. 2013;5:30–35. [Google Scholar]

- Back MD, Schmukle SC, Egloff B. Why are narcissists so charming at first sight? Decoding the narcissism-popularity link at zero acquaintance. J Pers Soc Psychol. 2010;98:132–145. doi: 10.1037/a0016338. [DOI] [PubMed] [Google Scholar]

- Balas B, Kanwisher N, Saxe R. Thin-slice perception develops slowly. Journal of Experimental Child Psychology. 2012;112:257–264. doi: 10.1016/j.jecp.2012.01.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bartlett M, Littlewort G, Vural E, et al. Insights on spontaneous facial expressions from automatic expression measurement. In: Curio C, Bulthoff HH, Giese MA, editors. Dynamic faces: Insights from experiments and computation. The MIT Press; Cambridge, MA: 2011. pp. 211–238. [Google Scholar]

- Borkenau P, Liebler A. Trait inferences: Sources of validity at zero acquaintance. Journal of Personality and Social Psychology. 1992;62:645–657. [Google Scholar]

- CDC . Brain and Language. Centers for Disease Control and Prevention; United States: 2009. Prevalence of Autism Spectrum Disorders – Autism and Developmental Disabilities Monitoring Network, 14 Sites. [PubMed] [Google Scholar]

- Clarke TJ, Bradshaw MF, Field DT, et al. The perception of emotion from body movement in point-light displays of interpersonal dialogue. Perception. 2005;34:1171–1180. doi: 10.1068/p5203. [DOI] [PubMed] [Google Scholar]

- Corbett B, Schupp C, Lanni K. Comparing biobehavioral profiles across two social stress paradigms in children with and without autism spectrum disorders. Molecular Autism. 2012;3:13. doi: 10.1186/2040-2392-3-13.

- Corbett B, Swain D, Newsom C, et al. Biobehavioral profiles of arousal and social motivation in autism spectrum disorders. Journal of Child Psychology and Psychiatry. 2013. doi: 10.1111/jcpp.12184.

- de Marchena A, Eigsti I-M. Conversational gestures in autism spectrum disorders: Asynchrony but not decreased frequency. Autism Research. 2010;3:311–322. doi: 10.1002/aur.159.

- Dittrich WH, Troscianko T, Lea SE, et al. Perception of emotion from dynamic point-light displays represented in dance. Perception. 1996;25:727–738. doi: 10.1068/p250727.

- Friedman H, Riggio RE, Segall DO. Personality and the enactment of emotion. Journal of Nonverbal Behavior. 1980;5:35–48.

- Ghijsen M. Facial expression analysis for human computer interaction. IEEE Transactions on Affective Computing. 2004;2:147–161.

- Gill D, Garrod O, Jack R. Facial Movements Strategically Camouflage Involuntary Social Signals of Face Morphology. Psychological Science. 2014. doi: 10.1177/0956797614522274.

- Grossman RB, Bemis RH, Plesa Skwerer D, et al. Lexical and affective prosody in children with high-functioning autism. Journal of Speech, Language, and Hearing Research. 2010;53:778–793. doi: 10.1044/1092-4388(2009/08-0127).

- Hassin RR, Aviezer H, Bentin S. Inherently Ambiguous: Facial Expressions of Emotions, in Context. Emotion Review. 2013;5:60–65.

- Heerey EA, Keltner D, Capps LM. Making sense of self-conscious emotion: linking theory of mind and emotion in children with autism. Emotion. 2003;3:394–400. doi: 10.1037/1528-3542.3.4.394.

- Judd CM, Westfall J, Kenny DA. Treating stimuli as a random factor in social psychology: a new and comprehensive solution to a pervasive but largely ignored problem. Journal of Personality and Social Psychology. 2012;103:54–69. doi: 10.1037/a0028347.

- Lindquist KA, Gendron M. What's in a Word? Language Constructs Emotion Perception. Emotion Review. 2013;5:66–71.

- Lopata C, Volker M, Putnam S, et al. Effect of social familiarity on salivary cortisol and self-reports of social anxiety and stress in children with high functioning autism spectrum disorders. Journal of Autism and Developmental Disorders. 2008. doi: 10.1007/s10803-008-0575-5.

- Lord C, Risi S, Lambrecht L, et al. The Autism Diagnostic Observation Schedule–Generic: A standard measure of social and communication deficits associated with the spectrum of autism. Journal of Autism and Developmental Disorders. 2000;30:205–223.

- Lord C, Rutter M, DiLavore PC, et al. Autism Diagnostic Observation Schedule, Second Edition (ADOS-2). Western Psychological Services; Torrance, CA: 2012.

- McCann J, Peppé S. Prosody in autism spectrum disorders: a critical review. International Journal of Language & Communication Disorders. 2003;38:325–350. doi: 10.1080/1368282031000154204.

- Morecraft R, Louie J, Herrick J, et al. Cortical innervation of the facial nucleus in the non-human primate: a new interpretation of the effects of stroke and related subtotal brain trauma on the muscles of facial expression. Brain. 2001;124:176–208. doi: 10.1093/brain/124.1.176.

- Naumann LP, Vazire S, Rentfrow PJ, et al. Personality Judgments Based on Physical Appearance. Personality and Social Psychology Bulletin. 2009;35:1661–1671. doi: 10.1177/0146167209346309.

- Ozgen H, Hellemann G, Stellato R, et al. Morphological Features in Children with Autism Spectrum Disorders: A Matched Case–Control Study. Journal of Autism and Developmental Disorders. 2011;41:23–31. doi: 10.1007/s10803-010-1018-7.

- Parkinson B. Contextualizing Facial Activity. Emotion Review. 2013;5:97–103.

- Peppé SJE. Aspects of identifying prosodic impairment. International Journal of Speech-Language Pathology. 2009;11:332–338.

- Rigato S, Farroni T. The Role of Gaze in the Processing of Emotional Facial Expressions. Emotion Review. 2013;5:36–40.

- Riggio RE, Friedman H. The interrelationship of self-monitoring factors, personality traits, and nonverbal social skills. Journal of Nonverbal Behavior. 1982;7:33–45.

- Rule N, Ambady N. She's Got the Look: Inferences from Female Chief Executive Officers' Faces Predict their Success. Sex Roles. 2009;61:644–652.

- Stagg S, Slavny R, Hand C, et al. Does facial expressivity count? How typically developing children respond initially to children with autism. Autism. 2013. doi: 10.1177/1362361313492392.

- Vazire S, Naumann LP, Rentfrow PJ, et al. Portrait of a narcissist: Manifestations of narcissism in physical appearance. Journal of Research in Personality. 2008;42:1439–1447.

- Wearden JH, Edwards H, Fakhri M, et al. Why “sounds are judged longer than lights”: Application of a model of the internal clock in humans. The Quarterly Journal of Experimental Psychology. 1998;51B:97–120. doi: 10.1080/713932672.

- Whalen PJ, Raila H, Bennett R, et al. Neuroscience and Facial Expressions of Emotion: The Role of Amygdala-Prefrontal Interactions. Emotion Review. 2013;5:78–83.

- Widen SC. Children's Interpretation of Facial Expressions: The Long Path from Valence-Based to Specific Discrete Categories. Emotion Review. 2013;5:72–77.

- Yirmiya N, Kasari C, Sigman M, et al. Facial Expressions of Affect in Autistic, Mentally Retarded and Normal Children. Journal of Child Psychology and Psychiatry. 1989;30:725–735. doi: 10.1111/j.1469-7610.1989.tb00785.x.