Abstract

Child and adolescent patients may display mental health concerns within some contexts and not others (e.g., home vs. school). Thus, understanding the specific contexts in which patients display concerns may assist mental health professionals in tailoring treatments to patients' needs. Consequently, clinical assessments often include reports from multiple informants who vary in the contexts in which they observe patients' behavior (e.g., patients, parents, teachers). Previous meta-analyses indicate that informants' reports correlate at low-to-moderate magnitudes. However, is it valid to interpret low correspondence among reports as indicating that patients display concerns in some contexts and not others? We meta-analyzed 341 studies published between 1989 and 2014 that reported cross-informant correspondence estimates, and observed low-to-moderate correspondence (mean internalizing: r = .25; mean externalizing: r = .30; mean overall: r = .28). Informant pair, mental health domain, and measurement method moderated magnitudes of correspondence. These robust findings have informed the development of concepts for interpreting multi-informant assessments, allowing researchers to draw specific predictions about the incremental and construct validity of these assessments. In turn, we critically evaluated research on the incremental and construct validity of the multi-informant approach to clinical child and adolescent assessment. In so doing, we identify crucial gaps in knowledge for future research, and provide recommendations for “best practices” in using and interpreting multi-informant assessments in clinical work and research. This paper has important implications for developing personalized approaches to clinical assessment, with the goal of informing techniques for tailoring treatments to target the specific contexts where patients display concerns.

Keywords: Construct Validity, Incremental Validity, Informant Discrepancies, Multiple Informants, Operations Triad Model

Child and adolescent mental health patients (collectively referred to as “children” unless otherwise specified) lead complex lives. Indeed, mental health concerns arise out of an interplay among biological, psychological, and socio-cultural factors that pose risk for, or offer protection against, developing maladaptive reactions to environmental or social contexts (e.g., Cicchetti, 1984; Luthar, Cicchetti, & Becker, 2000; Sanislow et al., 2010). However, not all contexts elicit mental health concerns to the same degree (e.g., Carey, 1998; Kazdin & Kagan, 1994; Mischel & Shoda, 1995). Therefore, patients may display concerns within some contexts, such as home and school, but not others, such as within peer interactions. In fact, these contextual variations in displays of mental health concerns occur within a variety of mental health domains including social anxiety, attention and hyperactivity, and conduct problems (e.g., Bögels et al., 2010; Dirks, De Los Reyes, Briggs-Gowan, Cella, & Wakschlag, 2012; Drabick, Gadow, & Loney, 2007, 2008; Kraemer et al., 2003). Thus, identifying the specific contexts in which patients display concerns may facilitate treatment planning and boost treatment efficacy (e.g., De Los Reyes, 2013; National Institute of Mental Health [NIMH], 2008).

The most prevalent strategy for assessing contextual variations in mental health is the multi-informant assessment approach (Kraemer et al., 2003). Specifically, this approach involves taking reports from informants who share close relationships with the patients about whom they are providing reports, or at minimum spend a significant amount of time observing patients' behavior (Achenbach, 2006). By considering reports from informants who vary among each other in the specific contexts in which they observe patients' behavior (e.g., home vs. school vs. peer interactions), mental health professionals may gain an understanding as to how consistently or inconsistently patients display concerns across contexts (Dirks et al., 2012). For child patients, these informants most often include parents, teachers, and patients themselves (Hunsley & Mash, 2007). Further, trained raters might also complete reports, such as clinical interviewers and independent observers of patients' behavior on standardized clinical tasks (e.g., structured social interactions) or unstandardized home or school observations (Groth-Marnat, 2009).

Clinicians may use informants' reports to make decisions about mental health care, such as assigning diagnoses and planning treatment (e.g., Hawley & Weisz, 2003). Researchers may also use these reports to draw conclusions from empirical work on such topics as identifying treatments that successfully ameliorate mental health concerns (i.e., evidence-based treatments; Weisz, Jensen Doss, & Hawley, 2005). In both practice and research settings, collecting multiple informants' reports generates a great deal of information about patients' concerns. However, the individual reports often yield inconsistent conclusions (i.e., informant discrepancies; Achenbach, 2006; De Los Reyes & Kazdin, 2004, 2005, 2006; Goodman, De Los Reyes, & Bradshaw, 2010). For instance, a female adolescent patient in a pre-treatment assessment may be identified as experiencing “low” positive mood based on a parent or teacher report whereas the adolescent self-reports her mood as “elevated.”

Informant discrepancies often create considerable uncertainties in delivering services to patients and drawing conclusions from research (De Los Reyes, Kundey, & Wang, 2011). A key reason for these uncertainties originates from the near-exclusive focus in mental health research on whether informant discrepancies reflect measurement error or reporting biases (e.g., De Los Reyes, 2011; Richters, 1992). Consequently, what remains unclear is whether multi-informant approaches to assessment validly capture contextual variations in displays of patients' mental health concerns, and which informants ought to be included in mental health assessments. As we explain below, these decisions might involve considering data from multiple assessment literatures, chief among them research on multi-informant clinical child assessments and their (a) correspondence, (b) incremental validity, and (c) construct validity. Prior work has addressed each of these issues as they relate to clinical child assessment broadly (e.g., Achenbach, McConaughy, & Howell, 1987; Johnston & Murray, 2003; Mash & Hunsley, 2005), and within assessments in other clinical literatures (e.g., Achenbach, 2006; Campbell & Fiske, 1959; Dawes, 1999; Garb, 2003; Hunsley & Mash, 2005). However, with regard to multi-informant clinical child assessments, these literatures have advanced largely in isolation of one another.

The Present Review

The purpose of this paper is to review and synthesize research on using and interpreting multiple informants' reports in clinical child assessments. We expand the literature on this topic in five ways. First, as an update to the seminal meta-analysis on cross-informant correspondence of clinical child assessments carried out by Achenbach and colleagues (1987), we conduct a quantitative review of 341 studies of cross-informant correspondence in reports of children's mental health published over the last 25 years (1989-2014). Second, we use this updated quantitative review as a backdrop for discussing recent work on the conceptual rationale for conducting multi-informant assessments (De Los Reyes, Thomas, Goodman, & Kundey, 2013). We expand upon this conceptual work by developing specific predictions about the incremental and construct validity of the multi-informant approach. Third, we review research on the incremental validity of multi-informant assessments, or work examining whether a multi-informant approach yields reports that, relative to one another, incrementally contribute information in the prediction of relevant criterion variables (e.g., diagnostic status or treatment response; see Dawes, 1999; Garb, 2003; Hunsley & Meyer, 2003). Fourth, we summarize recent work on the construct validity of multi-informant assessments. By construct validity, we mean research examining whether it is valid to interpret patterns of convergence and divergence among multiple informants' reports as accurate reflections of contextual variations in children's mental health concerns (e.g., Borsboom, Mellenbergh, & van Heerden, 2004; Campbell & Fiske, 1959). Lastly, we synthesize work on multi-informant assessments to provide recommendations for both future research and “best practices” for using and interpreting multi-informant assessments administered in clinic settings.

Correspondence in Multi-Informant Clinical Child Assessments

One of the most normative observations in assessments of child patients is that of low correspondence between informants' reports about patients' mental health (Achenbach, 2011). Over five decades ago, Lapouse and Monk (1958) were the first to report about this phenomenon. Their primary aim was to estimate the prevalence of children's mental health concerns in an epidemiological study of 482 6- to 12-year-old children representative of the community from which they were sampled (i.e., Buffalo, New York, USA). To estimate prevalence, the researchers relied on structured clinical interviews administered to mothers about a single child from the household. In this study, the researchers also wanted to address what they termed “the problem of validity” with respect to mothers' interview responses. Thus, the researchers conducted a secondary study using a separate convenience sample of 193 children ages 8-12 and their mothers, recruited from local outpatient hospitals and pediatricians' offices. In this sample, researchers conducted structured clinical interviews with the mothers and the identified child separately and simultaneously, using two different interviewers. To assess correspondence between mother and child reports, researchers examined responses on a subset of behavioral domains assessed in the interview, including fears, nightmares, bed wetting, restlessness, and repetitive behaviors (e.g., tics, thumb sucking, skin picking). Using percent agreement to assess correspondence, the authors reported agreement between 46% (amount of food intake) and 84% (bed wetting). Broadly, the authors observed greater correspondence between reports of relatively more observable behaviors (e.g., bed wetting, stuttering, thumb sucking) than relatively less observable behaviors (e.g., fears and worries, nightmares, restlessness). 
Further, with two exceptions (bed wetting and overactivity), lack of correspondence was primarily due to children self-endorsing behaviors that the mother did not endorse about the child.

Highlighting the robust nature of cross-informant correspondence patterns, in their seminal meta-analysis of 119 studies of cross-informant correspondence in reports of children's mental health, Achenbach and colleagues (1987) reported, similar to Lapouse and Monk (1958), that correspondence levels exhibited a range from low-to-moderate in magnitude (i.e., rs ranging from .20 to .60). Importantly, Achenbach and colleagues (1987) noted that the low correlations they observed among informants' reports of children's mental health did not necessarily indicate that such reports carried poor psychometric qualities, as they also observed satisfactory test-retest reliability estimates for the informants' reports they examined. In fact, levels of correspondence systematically varied as a function of three key factors. First, pairs of informants who observed children in the same context (e.g., pairs of parents or pairs of teachers) tended to exhibit greater levels of correspondence than pairs of informants who observed children in different contexts (e.g., parent and teacher). Similarly, a second key finding was that greater levels of cross-informant correspondence occurred when informants provided reports about younger children relative to reports about older children. Younger children may be relatively more constrained than older children in the contexts in which they display mental health concerns (e.g., De Los Reyes & Kazdin, 2005; Smetana, 2008). Additionally, Achenbach and colleagues (1987) conducted their meta-analysis at a time when cross-informant correspondence studies predominantly focused on comparing adults' reports of children's concerns (e.g., parent vs. teacher; mother vs. father; see Table 2 of Achenbach et al., 1987). 
Thus, this age effect might have also reflected the idea that less variation among informants in contexts of observation pointed to greater correspondence between informants' reports, particularly for adult informants' reports of children's concerns. Third, Achenbach and colleagues (1987) observed larger correspondence levels between informants' reports of children's externalizing (e.g., aggression and hyperactivity concerns) versus internalizing (e.g., anxiety and mood) concerns. This third finding may have reflected greater correspondence between reports of directly observable concerns, relative to concerns that are internally experienced by the child and thus relatively less observable in nature.

Meta-Analysis of the Last Quarter-Century of Cross-Informant Correspondence Studies

Since the seminal work of Lapouse and Monk (1958) and Achenbach and colleagues (1987), researchers have conducted many additional cross-informant correspondence studies. As in earlier work, more recent studies find that low-to-moderate levels of cross-informant correspondence characterize such varied assessment settings and mental health domains as inpatients' depressive mood symptoms (Frank, Van Egeren, Fortier, & Chase, 2000), outpatients' anxiety symptoms (Rapee, Barrett, Dadds, & Evans, 1994), and disruptive behavior assessments taken from representative non-clinic samples (Offord et al., 1996). Further, the Lapouse and Monk (1958) finding of greater endorsements among children's self-reports relative to mothers' reports was corroborated by comparisons between mother and child behavioral checklist reports from 25 countries (Rescorla et al., 2013). Since the Achenbach et al. (1987) review, researchers have expanded the range of domains examined for cross-informant correspondence (e.g., anxiety, conduct problems, hyperactivity, mood; De Los Reyes, 2011). Further, studies conducted after the Achenbach et al. (1987) review collectively examined to a greater extent variations in cross-informant correspondence across multiple (a) measurement and scaling methods (e.g., behavioral checklists, clinical interviews, symptom rating scales), (b) patients' developmental periods, and (c) informant pairs (e.g., greater focus on children's self-reports for assessing internalizing concerns) (e.g., Achenbach, 2006; De Los Reyes & Kazdin, 2005).

In light of the continued attention to estimating cross-informant correspondence, have the findings of Achenbach and colleagues (1987) stood the test of time? To address this question, we conducted a meta-analysis of the last 25 years of cross-informant correspondence studies for assessments of children's internalizing and externalizing mental health concerns. We focused on these domains because they encompass the most commonly assessed and treated childhood concerns (i.e., anxiety, attention and hyperactivity, conduct, mood; Hunsley & Mash, 2007; Weisz et al., 2005). Further, we took two approaches to identifying studies for our review. First, we sampled studies included in meta-analyses of cross-informant correspondence published since Achenbach et al. (1987), which is a variant of the second-order meta-analytic approach (e.g., Butler, Chapman, Forman, & Beck, 2006; Cuijpers & Dekker, 2005; Lipsey & Wilson, 1993; Møller & Jennions, 2002; Peterson, 2001; Tamim, Bernard, Borokhovski, Abrami, & Schmid, 2011). Second, we searched for studies published in the years (i.e., 2000-2014) following the meta-analyses sampled in our review. Importantly, Achenbach and colleagues (1987) reviewed 119 studies published over roughly a quarter-century (i.e., 1960-1986). Similarly, as we explain below, we reviewed 341 studies published in the most recent quarter-century (i.e., 1989-2014). Thus, we were well-positioned to assess whether more recent work replicated the findings of Achenbach and colleagues (1987). To this end, we focused our review on studies that examined correspondence among parent, teacher, and child reports of children's mental health. We focused on these three informants because these are the reporters on which mental health professionals most commonly rely when administering and interpreting the outcomes of clinical child assessments (e.g., Hunsley & Mash, 2007; Kraemer et al., 2003).

Method

Literature review

We identified meta-analyses and empirical studies published since Achenbach and colleagues (1987). We conducted two searches. First, to identify relevant meta-analyses, we used Google Scholar to search all peer-reviewed scholarly work citing the Achenbach et al. (1987) review (N = 3,978 citations; search conducted March 2, 2014). We searched within these citing articles using the search terms “informant” and “quantitative review.” We augmented this search with an additional Google Scholar search of the citing articles using the terms “meta-analysis OR quantitative review OR systematic review,” and conducted this same literature search using the Web of Science search engine. Combined across searches, we identified 1,799 articles. This search yielded an initial set of four quantitative reviews (i.e., Crick et al., 1998; Duhig, Renk, Epstein, & Phares, 2000; Meyer et al., 2001; Renk & Phares, 2004), to which we applied the inclusion and exclusion criteria described below. As an additional check, we conducted Google Scholar searches using the above-listed terms of all work citing these four quantitative reviews, yielding an additional set of 858 articles. This search yielded no additional quantitative reviews.

Second, to identify empirical articles of cross-informant correspondence conducted in the years (i.e., between 2000 and 2014) following the meta-analyses included in our review, we searched via Google Scholar for all peer-reviewed scholarly work citing the Achenbach et al. (1987) review between 2000 and 2014 using the search terms “internalizing symptoms/problems/difficulties OR externalizing symptoms/problems/difficulties” (N = 1,440 citations; search conducted September 9, 2014). Additionally, we searched the reference lists of narrative reviews of cross-informant correspondence research published since 2000.

Inclusion and exclusion criteria

To be included in our quantitative review, meta-analyses and studies must have (a) focused on informants' reports of children at or under the age of 18 years; (b) examined correspondence between informants' reports of children's mental health concerns (i.e., internalizing and/or externalizing concerns); (c) examined correspondence between reports completed by pairs of parents, teachers, and/or children (i.e., mother-father, parent-child, parent-teacher, teacher-child); and (d) been published in English. In addition to these criteria, included meta-analyses must have provided a list of individual studies used to calculate metrics of cross-informant correspondence. We employed this criterion to ensure that articles examined in our review were published after 1987 (i.e., not included in the original review by Achenbach et al., 1987). Further, knowledge of individual studies within each of the reviews allowed us to identify any articles examined by more than one review and multiple articles that examined the same sample or cohort of children. This step allowed us to ensure that effects observed did not occur because reviews examined the same studies or sample(s) across studies.

Among individual empirical studies identified either via the reference lists of meta-analyses or our additional search for individual studies published between 2000 and 2014, to be included in our review studies must have provided sufficient data to code estimates of cross-informant correspondence. Specifically, we required studies to report between-informant correspondence metrics (e.g., Pearson correlations for dimensional measures or Kappa coefficients for categorical measures) on measures completed by one or more informant pairs (e.g., mother-father, parent-child, parent-teacher, teacher-child). Measures completed by these informants must have assessed the same construct at the same time point (e.g., measures about internalizing problems completed by parent and child when the child was 10 years old). We excluded studies that only reported correspondence metrics as an average or range across multiple informant pairs (e.g., correlations ranged from .15-.40 depending on informant pair), as this information did not allow us to code moderator variables. Similarly, we excluded studies that only reported correspondence metrics as an average or range across informants' reports of different mental health domains. Finally, studies needed to report cross-informant correspondence estimates for measures of mental health on the internalizing (e.g., anxiety and mood) and/or externalizing (e.g., aggression and hyperactivity) spectrum. We excluded studies focused on related constructs, namely studies on risk and protective factors of mental health (e.g., emotion regulation, parenting practices, personality traits, resiliency, self-esteem).

For meta-analyses identified in our search, employing inclusion criteria led to our excluding the Crick et al. (1998) and Renk and Phares (2004) reviews. We excluded Crick et al. (1998) because it did not list the individual studies the authors used to calculate cross-informant correspondence estimates. We excluded the Renk and Phares (2004) review because it focused on correspondence between reports on a construct (i.e., social competence) that fell outside of the spectra of internalizing and externalizing mental health concerns. Thus, we identified relevant studies via quantitative review based on two meta-analyses that met our inclusion criteria: Duhig et al. (2000) and Meyer et al. (2001). Across studies identified via these meta-analyses and our search of individual studies published between 2000 and 2014, collectively we coded effect sizes on a final sample of 1218 data points taken from 341 studies published between 1989 and 2014. A complete study list can be retrieved online at https://sites.google.com/site/caipumaryland/Home/people/director.

Data extraction and covariates coded from each study

Two doctoral graduate students served as coders for our quantitative review, and received coding training from the first and fifth authors through discussion and practice coding. After the two coders completed study coding, the fifth author served as an independent assessor on 50% of the studies coded. This independent assessment resulted in 100% inter-rater agreement on effect sizes coded (i.e., effect size magnitude and metrics), and Cohen's Kappa coefficients of 1.0 in terms of inter-rater agreement on coding of each of the covariates described below (i.e., child age, informant pair, measurement method, mental health domain).

Outcomes representing mean estimates of cross-informant correspondence were presented as Pearson r correlations, Cohen's Kappa coefficients, Cohen's d, Hedges' g, means and standard deviations of informants' reports, or odds ratios that we converted to r for the meta-analyses. In addition to mean estimates of cross-informant correspondence, we also coded for four covariates, consistent with effects observed by Achenbach and colleagues (1987): (1) mental health domain (internalizing vs. externalizing); (2) informant pair (mother-father, parent-child, parent-teacher, teacher-child); (3) child age (younger [10 years and younger] vs. older [11 years and older]); and (4) measurement method (categorical vs. dimensional). We coded studies based on measurement method because recent work indicates that, relative to categorical scaling, dimensional scaling results in measures that evidence greater estimates of reliability and validity (Markon, Chmielewski, & Miller, 2011). Thus, we would expect greater levels of cross-informant correspondence for reports taken using dimensional scales (e.g., behavioral checklists), relative to categorical scales (e.g., diagnostic interview endorsements).
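As a rough illustration of the conversion step, the sketch below shows how a standardized mean difference or an odds ratio could be placed on the r metric. The text does not state which conversion formulas were applied, so this sketch assumes the standard ones given by Borenstein et al. (2009); the function names are hypothetical.

```python
import math

def d_to_r(d, n1=None, n2=None):
    """Convert a standardized mean difference (Cohen's d or Hedges' g) to r.

    Assumed formula: r = d / sqrt(d^2 + a), with a = (n1 + n2)^2 / (n1 * n2).
    When group sizes are unknown, a defaults to 4 (equal group sizes).
    """
    if n1 is None or n2 is None:
        a = 4.0
    else:
        a = (n1 + n2) ** 2 / (n1 * n2)
    return d / math.sqrt(d * d + a)

def odds_ratio_to_d(odds_ratio):
    """Convert an odds ratio to d via the assumed logistic approximation."""
    return math.log(odds_ratio) * math.sqrt(3) / math.pi

def odds_ratio_to_r(odds_ratio, n1=None, n2=None):
    """Chain the two conversions: odds ratio -> d -> r."""
    return d_to_r(odds_ratio_to_d(odds_ratio), n1, n2)

# Illustrative values only; these are not data from the reviewed studies.
example_r_from_d = d_to_r(0.5)
example_r_from_or = odds_ratio_to_r(2.0)
```

An odds ratio of 1 and a d of 0 both map to r = 0 under these formulas, which is a quick sanity check on any implementation.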

Data-analytic strategy

As described below, we observed significant differences between cross-informant correspondence estimates for reports of internalizing concerns versus externalizing concerns (Table 1). Thus, we performed two primary meta-analyses, one for internalizing concerns and one for externalizing concerns, using published or calculated rs to estimate the precision of the mean for all included studies, using Comprehensive Meta-analysis Version 2 (Biostat, Englewood, NJ, n.d.) software. Because the studies included in the meta-analyses varied in methodology and design, we calculated a random-effects model. In addition, for some studies and samples, we observed multiple effect sizes that varied across our moderator variables (e.g., multiple effect sizes reported for different informant pairs, categorical vs. dimensional approaches). We accounted for this nesting in the data by calculating (a) effect sizes for each cohort or sample and then (b) an overall effect size. Specifically, we computed effect sizes and variances for each cohort. Next, we calculated a weighted mean for each study cohort (i.e., effect sizes drawn from the same sample). We based these weights on the inverse of the total variance associated with each of the data points. Lastly, we computed a weighted mean of the effect sizes for each of the study cohorts, which were based on both within-cohort error and between-cohort variance, to produce an overall summary effect (Borenstein, Hedges, Higgins, & Rothstein, 2009). This method allowed us to capitalize on the multiple sources of variance present both within and across studies, rather than alternative methods that would have resulted in lost sources of variance (e.g., taking a simple average of correspondence estimates for a study that included multiple informant pairs).
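The two-stage pooling described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure implemented in the software the authors used: it assumes correlations are pooled on the Fisher z scale, treats data points within a cohort as independent when forming the cohort mean, and uses the DerSimonian-Laird estimator for between-cohort variance. All function names and the example data are hypothetical.

```python
import math

def fixed_mean(effects, variances):
    """Inverse-variance weighted mean and the variance of that mean."""
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    mean = sum(w * e for w, e in zip(weights, effects)) / total
    return mean, 1.0 / total

def dl_tau2(effects, variances):
    """DerSimonian-Laird estimate of between-cohort variance (assumed estimator)."""
    if len(effects) < 2:
        return 0.0
    weights = [1.0 / v for v in variances]
    mean, _ = fixed_mean(effects, variances)
    q = sum(w * (e - mean) ** 2 for w, e in zip(weights, effects))
    c = sum(weights) - sum(w * w for w in weights) / sum(weights)
    df = len(effects) - 1
    return max(0.0, (q - df) / c) if c > 0 else 0.0

def pooled_r(cohorts):
    """Pool correlations in two stages.

    cohorts: list of cohorts, each a list of (r, n) data points.
    Returns the summary r and its 95% confidence interval.
    """
    means, variances = [], []
    for points in cohorts:
        zs = [math.atanh(r) for r, _ in points]      # Fisher z transform
        vs = [1.0 / (n - 3) for _, n in points]      # sampling variance of z
        m, v = fixed_mean(zs, vs)                    # stage 1: one mean per cohort
        means.append(m)
        variances.append(v)
    tau2 = dl_tau2(means, variances)
    # Stage 2: weights combine within-cohort error and between-cohort variance.
    overall, overall_var = fixed_mean(means, [v + tau2 for v in variances])
    half = 1.96 * math.sqrt(overall_var)
    return math.tanh(overall), (math.tanh(overall - half), math.tanh(overall + half))

# Hypothetical cohorts; the first contributes two informant pairs.
estimate, interval = pooled_r([[(0.25, 120), (0.30, 120)], [(0.45, 80)], [(0.15, 200)]])
```

Collapsing to one mean per cohort before the second stage keeps a cohort that reports many informant pairs from dominating the summary effect simply because it contributes more data points.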

Table 1. Meta-Analytic Findings Regarding Cross-Informant Correspondence in Reports of Children's Mental Health.

Informant-pair columns (P-C, P-T, T-C, M-F) report mean cross-informant correlations.

| Symptom Type | # of Data Points | Overall Effect Size (95% CI) | P-C | P-T | T-C | M-F |
|---|---|---|---|---|---|---|
| Internalizing | 697 | .25 (.24-.25) (p < .001) | .26 (n = 372) | .21 (n = 155) | .20 (n = 62) | .48 (n = 109) |
| Externalizing | 521 | .30 (.30-.31) (p < .001) | .32 (n = 198) | .28 (n = 162) | .29 (n = 53) | .58 (n = 109) |

Note. Correlations are in the Pearson r metric. P-C = Parent-Child; P-T = Parent-Teacher; T-C = Teacher-Child; M-F = Mother-Father.

Additionally, we addressed the issue of statistical stability in two ways. First, we individually excluded each study from the analysis and recalculated the pooled r and 95% confidence interval (CI). If an individual study contributed heavily to the pooled r, we would observe a change in the magnitude or significance of the pooled r. Second, given the possibility of publication bias (i.e., significant findings are more likely to be published), we calculated Orwin's Fail-safe N (Borenstein et al., 2009), which provides an index of the number of data points necessary to make the overall effect size trivial (defined here as an r of .10).
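Both stability checks can be sketched in a few lines. The example assumes the usual form of Orwin's formula, N = k × (mean effect − trivial effect) / (trivial effect − mean effect of the missing data points), and a plain weighted mean for the leave-one-out step. The function names and numbers are illustrative only and are not meant to reproduce the values reported in this paper.

```python
def orwin_failsafe_n(k, mean_effect, trivial_effect, missing_mean=0.0):
    """Number of additional data points averaging `missing_mean` that would
    pull the mean effect across k data points down to `trivial_effect`."""
    if trivial_effect == missing_mean:
        raise ValueError("trivial_effect must differ from missing_mean")
    return k * (mean_effect - trivial_effect) / (trivial_effect - missing_mean)

def leave_one_out(effects, weights):
    """Recompute the weighted mean with each data point removed in turn."""
    results = []
    for i in range(len(effects)):
        kept = [(e, w) for j, (e, w) in enumerate(zip(effects, weights)) if j != i]
        total = sum(w for _, w in kept)
        results.append(sum(e * w for e, w in kept) / total)
    return results

# Hypothetical inputs: 10 data points with a mean r of .30 and a trivial r of .10.
n_needed = orwin_failsafe_n(k=10, mean_effect=0.30, trivial_effect=0.10)
sensitivity = leave_one_out([0.2, 0.3, 0.4], [1.0, 1.0, 1.0])
```

A narrow spread in the leave-one-out results suggests that no single study drives the pooled estimate.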

Following determination of the combined effect size across studies, we considered heterogeneity or observed variance across studies. Specifically, we calculated tau, a metric that is expressed in the units of the effect size and estimates the standard deviation of the true effects across studies, and I², which provides an index of the proportion of observed variability that is attributable to heterogeneity among the data points and reflects “real” differences among studies (Borenstein et al., 2009; Higgins, Thompson, Deeks, & Altman, 2003). To explore the impact of the categorical variables or covariates on this observed variability among studies, we conducted ancillary, subgroup analyses to determine effect sizes (rs) for each level of the categorical variable (e.g., separately for studies that used categorical vs. dimensional assessment approaches or examined younger vs. older children). For these analyses, we calculated rs and associated p-values for each level of the categorical variable (Borenstein et al., 2009). We then examined the Q statistic, which is based on the weighted sum of squares for each level of the covariate and thus provides an index of dispersion (Borenstein et al., 2009), to determine whether the magnitude of correspondence differed between levels of the covariates.
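The heterogeneity indices and the subgroup comparison can be sketched as below. This is a simplified illustration: it assumes inverse-variance weights, the DerSimonian-Laird estimate of tau squared, and a fixed-effect partition of Q across subgroups, which may differ from the model the analysis software applied. The function names are hypothetical.

```python
import math

def heterogeneity(effects, variances):
    """Return Q, its degrees of freedom, tau, and I-squared (as a percentage)."""
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    mean = sum(w * e for w, e in zip(weights, effects)) / total
    q = sum(w * (e - mean) ** 2 for w, e in zip(weights, effects))
    df = len(effects) - 1
    c = total - sum(w * w for w in weights) / total
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return {"Q": q, "df": df, "tau": math.sqrt(tau2), "I2": i2}

def q_between(subgroups):
    """Weighted sum of squared deviations of subgroup means from the grand mean.

    subgroups: dict mapping a covariate level to (effects, variances).
    Returns (Q_between, degrees of freedom).
    """
    means, weights = [], []
    for effects, variances in subgroups.values():
        w = [1.0 / v for v in variances]
        weights.append(sum(w))
        means.append(sum(wi * e for wi, e in zip(w, effects)) / sum(w))
    grand = sum(W * m for W, m in zip(weights, means)) / sum(weights)
    q = sum(W * (m - grand) ** 2 for W, m in zip(weights, means))
    return q, len(means) - 1

# Hypothetical effect sizes and sampling variances for two covariate levels.
stats = heterogeneity([0.1, 0.5], [0.01, 0.01])
q_b, df_b = q_between({"level_a": ([0.1], [0.01]), "level_b": ([0.5], [0.01])})
```

Q_between would then be referred to a chi-square distribution with the returned degrees of freedom to obtain a p-value.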

Results and Discussion

Overall effect sizes

Combined across 341 studies and 1218 data points, we observed an overall cross-informant correlation of .28 (95% CI [.22, .33]; p < .001). This estimate is virtually identical to the mean cross-informant correlations reported by Achenbach and colleagues (1987). In Table 1, we report cross-informant correlations for reports of children's internalizing and externalizing concerns. Consistent with Achenbach and colleagues (1987), we observed low-to-moderate and statistically significant magnitudes of cross-informant correspondence for both domains. As seen in Table 1, we observed non-overlapping 95% CIs for cross-informant correspondence estimates for reports of children's internalizing concerns versus externalizing concerns. Overall, informant correspondence rates tended to be larger for reports of children's externalizing concerns, relative to reports of children's internalizing concerns. Thus, below we report effects of covariates on levels of cross-informant correspondence separately for reports of children's internalizing concerns and externalizing concerns.

Heterogeneity in effect sizes and stability of results

Consistent with Achenbach et al. (1987), we observed large overall variance in results for internalizing and externalizing concerns (I² = 99.25 and 99.40, respectively), suggesting heterogeneity among studies in effect sizes. That is, the percentage of total variability that is attributable to heterogeneity among the data points included in the meta-analysis is approximately 99% for reports of internalizing and externalizing concerns. Further, removal of any individual study from the analysis did not affect relations between magnitudes of cross-informant correspondence and covariates (rs with each individual study removed ranged from .27 to .28 for reports of internalizing concerns; r with each individual study removed was .36 for reports of externalizing concerns; all ps < .001). Using the respective effect sizes for these data points, defining a trivial effect size as an r of .10, and a threshold of p < .05, Orwin's Fail-safe N was 1,875 for reports of internalizing concerns and 2,292 for reports of externalizing concerns. This indicates that one would have to include in the meta-analysis over 1,800 data points of reports of children's internalizing concerns and well over 2,000 data points of reports of children's externalizing concerns with a mean r of .00 before the cumulative effects would become trivial.

Effects of covariates

To explore whether the covariates could account for some of the variability among the effect sizes for each of the data points (697 for internalizing, 521 for externalizing), we conducted ancillary analyses in which we calculated separate r and p-values for each level of the categorical covariates. Because the data points within studies and cohorts often differed in these covariates (e.g., child age, measurement method), these supplementary analyses considered the data points independently. Beyond the effects of mental health domain reported previously, we observed significant effects for two other covariates. First, pairs of mother and father informants (i.e., informants who observe the child in the same setting) yielded larger magnitudes of cross-informant correspondence, relative to all other informant pairs (i.e., parent-child, parent-teacher, teacher-child). We observed this effect for both reports of internalizing concerns (rs: .48 vs. .24; Q = 72.42, p < .001) and externalizing concerns (rs: .58 vs. .30; Q = 96.26, p < .001). Second, informants completing reports on a dimensional scale tended to yield higher magnitudes of correspondence, relative to informants completing reports on a categorical scale. We observed this effect of measurement method for both reports of internalizing concerns (rs: .29 vs. .06; Q = 38.54, p < .001) and externalizing concerns (rs: .37 vs. .06; Q = 30.86, p < .001). Further, we observed non-significant effects of child age on levels of correspondence for reports about younger children (i.e., 10 years and younger) versus older children (i.e., 11 years and older) for both reports of internalizing concerns (rs: .32 vs. .26; Q = 1.42, p = .23) and externalizing concerns (rs: .38 vs. .35; Q = 0.48, p = .49).

In sum, we made three findings consistent with previous reviews (Achenbach et al., 1987; Markon et al., 2011). First, informants' reports of relatively more observable or externalizing concerns tended to evidence greater levels of cross-informant correspondence, relative to reports of internalizing concerns. Second, informant pairs for which both informants observed the child in the same setting (i.e., mother-father) tended to yield the highest levels of correspondence, relative to all other informant pairs. Third, when informants completed reports using measures that tended to evidence greater reliability and validity estimates (i.e., dimensional), we observed greater levels of cross-informant correspondence, relative to when informants completed reports using categorical measures.

One finding from our review that was inconsistent with the Achenbach et al. (1987) meta-analysis was our null finding regarding the effects of child age on magnitudes of cross-informant correspondence. These inconsistencies may reflect changes since Achenbach et al. (1987) with regard to evidence-based assessment practices for clinical child assessments. Specifically, the Achenbach et al. (1987) review consisted of a relatively small proportion of studies comparing parent and teacher reports to children's self-reports (see Table 2 of Achenbach et al., 1987). Since 1987, a proliferation of evidence-based assessment research focused on understanding children's perspectives on their own mental health concerns, and this increased focus resulted in an increased number of options available for taking self-reports of mental health, particularly for older children and adolescents (for reviews, see Klein, Dougherty, & Olino, 2005; McMahon & Frick, 2005; Silverman & Ollendick, 2005). Consequently, we based our cross-informant correspondence estimates for reports of children's mental health concerns on a sample of studies in which over 50% compared children's self-reports to reports from other informants (Table 1). For some mental health domains and contexts (e.g., worry and anxiety displayed within peer interactions; covert delinquent behaviors displayed within peer interactions), children may be in a unique position to observe displays of these concerns, relative to parents and teachers (e.g., Comer & Kendall, 2004; McMahon & Frick, 2005). Thus, it may be that the null effects of child age on magnitudes of cross-informant correspondence that we observed in fact reflected a greater use since Achenbach et al. (1987) of self-reports to assess children's mental health concerns.

Conceptual Foundations of Multi-Informant Mental Health Assessments

Operations Triad Model

Collectively, our meta-analytic findings indicated that clinical child assessments tend to yield low-to-moderate levels of cross-informant correspondence, with higher levels of correspondence occurring when informants complete reports about mental health concerns that both informants either have relatively greater opportunities to observe (e.g., externalizing vs. internalizing) or observe within the same context (e.g., mother-father vs. parent-teacher). The main findings from the Achenbach and colleagues (1987) review, as well as the stability of these findings in research published since their review (Table 1), have greatly informed the development of theoretical principles about using and interpreting multi-informant assessment outcomes. Indeed, an underlying assumption of the multi-informant approach is that informants each carry a unique and valid perspective of the patients about whom they provide reports (De Los Reyes, 2013). Thus, to maximize the clinical value offered by the multi-informant approach, the informants selected to provide reports ought to differ in their opportunities for observing patients' mental health concerns (e.g., observations that vary across home and school contexts; Kraemer et al., 2003). Therefore, if patients contextually vary in where they display concerns, then discrepancies among informants' reports should, in part, reflect these contextual variations.

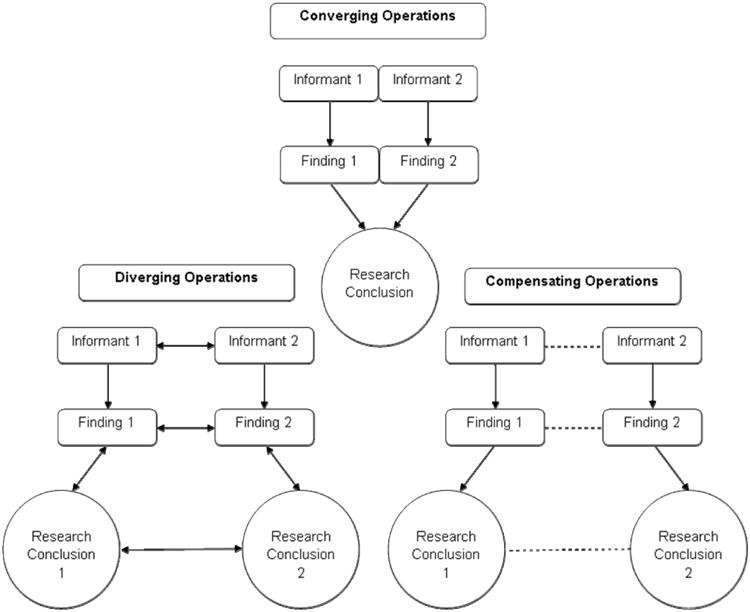

These basic assumptions underlying the multi-informant assessment approach have been elaborated upon and codified in recent theoretical work. Specifically, the idea that integrating multiple distinct pieces of information (e.g., research evidence and clinical expertise) improves clinical decision-making relative to relying on limited information (e.g., only clinical expertise) is a foundational principle of evidence-based practice (e.g., Sackett, Rosenberg, Gray, Haynes, & Richardson, 1996). Yet, much of multi-informant assessment research has focused on whether informant discrepancies reflect error or rater biases (for a review, see De Los Reyes, 2013), and this research often conflicts with the key rationale for taking a multi-informant approach (De Los Reyes, Thomas et al., 2013). To address these inconsistencies between theory and interpretations of multi-informant assessments, researchers developed the Operations Triad Model (OTM; Figure 1; De Los Reyes, Thomas et al., 2013). The OTM addresses a key issue underlying these inconsistencies, namely, that research methodology in clinical psychology has largely relied on the concept of Converging Operations to interpret research conclusions and assessment outcomes in practice. Converging Operations is a set of measurement conditions within which one draws inferences of the veracity of multiple methodologically distinct research observations based on whether the observations yielded similar conclusions (Garner, Hake, & Eriksen, 1956). Under this concept, one draws stronger inferences from studies within which observations converged on a common conclusion (e.g., multiple informants' reports all supported treatment efficacy), and in turn, one draws weaker inferences from studies within which observations diverged toward different conclusions (De Los Reyes, Thomas et al., 2013).

Figure 1.

Graphical representation of the research concepts that comprise the Operations Triad Model. The top half (Figure 1a) represents Converging Operations: a set of measurement conditions for interpreting patterns of findings based on the consistency within which findings yield similar conclusions. The bottom half denotes two circumstances within which researchers identify discrepancies across empirical findings derived from multiple informants' reports and thus discrepancies in the research conclusions drawn from these reports. On the left (Figure 1b) is a graphical representation of Diverging Operations: a set of measurement conditions for interpreting patterns of inconsistent findings based on hypotheses about variations in the behavior(s) assessed. The solid lines linking informants' reports, empirical findings derived from these reports, and conclusions based on empirical findings denote the systematic relations among these three study components. Further, the presence of dual arrowheads in the figure representing Diverging Operations conveys the idea that one ties meaning to the discrepancies among empirical findings and research conclusions and thus how one interprets informants' reports to vary as a function of variation in the behaviors being assessed. Lastly, on the right (Figure 1c) is a graphical representation of Compensating Operations: a set of measurement conditions for interpreting patterns of inconsistent findings based on methodological features of the study's measures or informants. The dashed lines denote the lack of systematic relations among informants' reports, empirical findings, and research conclusions. Originally published in De Los Reyes, Thomas, et al. (2013). © Annual Review of Clinical Psychology. Copyright 2012 Annual Reviews. All rights reserved.

To expand upon the Converging Operations concept, the OTM delineates conditions for two additional research concepts for interpreting discrepancies among informants' reports. First, the OTM includes a concept, Diverging Operations, developed for interpreting instances in which multiple informants' reports diverge from each other for meaningful reasons. An example of Diverging Operations may involve a circumstance in which a clinician expects two informants' reports about a patient's mental health concerns to meaningfully diverge because (a) the informants observe the patient's behavior within completely different contexts (e.g., home vs. school) and (b) the patient displays mental health concerns to a greater degree in one context than the other (e.g., home to a greater extent than school). Second, the OTM also includes conditions for a research concept, Compensating Operations, reflecting those circumstances in which multiple informants' reports diverge from each other because of measurement error or some other methodological process. For instance, two informants' reports may yield divergent information because they completed reports using distinct measures (e.g., different item content and scaling) and the measures may have varied in their psychometric properties (e.g., internal consistency estimates). Thus, within instances that reflect Compensating Operations, methodological features of the assessment process provide a parsimonious account for why informants' reports yielded different outcomes. In sum, the OTM promotes an evidence-based approach to testing whether meaningful data can be gleaned from interpreting the consistencies and discrepancies observed among multiple informants' reports.

Predictions about the Incremental and Construct Validity of Multi-Informant Assessments

Mental health professionals can make a strong conceptual case for taking a multi-informant approach to assessing child patients. Further, the OTM may facilitate linking the concepts underlying administering multi-informant assessments with interpreting their outcomes (Figure 1). Yet it remains unknown how to use the OTM to make specific predictions about the incremental and construct validity of multi-informant assessments. That is, when multi-informant assessments yield low correspondence among informants' reports, how should the evidence appear if the low correspondence reflects contextual variations in patients' mental health? An illustrative example of multi-informant assessment outcomes may be helpful.² For the purposes of this illustration, we focus on interpreting reports from informants who differ in the contexts in which they observe patients, specifically parents at home versus teachers at school. We also focus on assessments of a mental health domain for which patients' concerns may significantly vary across home and school contexts, namely preschoolers' disruptive behavior (for a review, see Wakschlag, Tolan, & Leventhal, 2010).

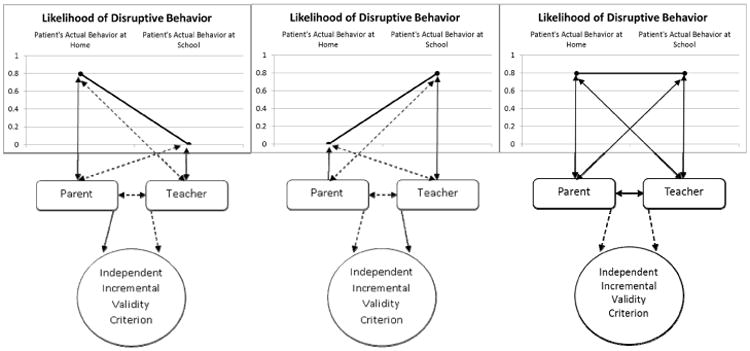

Consider a research team that assesses a sample of patients experiencing disruptive behavior by conducting a multi-informant assessment involving parent and teacher reports. In Figure 2, we present graphical depictions of the outcomes of these assessments. Figure 2 demonstrates links among parent and teacher reports of disruptive behavior and “actual” disruptive behavior as displayed by the patient within home and/or school contexts. We include this measure of “actual” disruptive behavior in Figure 2 to illustrate the construct validity of parent and teacher reports. Specifically, we graphically depict three different patients: patients who display concerns at home but not school (Figure 2a), at school but not home (Figure 2b), and across home and school (Figure 2c). For each example, arrows link informants' reports to patients' behavior. These arrows depict the strength of relations between informants' reports and patients' behavior. That is, solid-line arrows linking informants' reports to patients' behavior indicate stronger relations between reports and behavior, relative to the dashed-line arrows that indicate weaker relations between reports and behavior. Thus, Figures 2a and 2b depict examples of Diverging Operations, in that the parent and teacher reports differ because the patient displays concerns in either the home context or school context, but not both contexts. In contrast, Figure 2c depicts an example of Converging Operations, in that the parent and teacher reports corroborate each other because the patient displays concerns across home and school contexts.

Figure 2.

Graphical depiction of incremental and construct validity predictions that follow from the OTM and conceptual rationale for taking a multi-informant approach to mental health assessment.

In Figure 2, we also describe the strength of relations between parent and teacher reports and an independently administered criterion variable; an example of such a variable might be a consensus diagnostic assessment by an experienced clinical team. We include this criterion variable in Figure 2 to illustrate the incremental validity of parent and teacher reports. Here, we use the same “solid-line/dashed-line” approach as with the links between informants' reports and measures of “actual” patients' behavior that we used to illustrate construct validity. That is, in illustrating relations between parent and teacher reports and the criterion variable, solid-line (relative to dashed-line) arrows linking informants' reports to each other indicate stronger relations between reports. Further, solid-line (relative to dashed-line) arrows linking an informant's report to the validity criterion indicate stronger incremental validity for the “solid-line” informant's report, relative to the “dashed-line” informant's report. Therefore, in Figure 2a parent (and not teacher) reports incrementally predict variance in the criterion. In contrast, in Figure 2b teacher (and not parent) reports incrementally predict variance in the criterion. In Figure 2c, the overlap between parent and teacher reports results in neither report incrementally predicting variance in the criterion.
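One common way to quantify incremental validity of this kind is the gain in explained criterion variance (ΔR²) when one informant's report is added to a regression model that already contains the other informant's report. The Python sketch below computes that gain from three correlations using the standard two-predictor formula. The function names and the correlation values are hypothetical, chosen to resemble the pattern in Figure 2a rather than taken from any study.

```python
def r_squared_two_predictors(r_y1: float, r_y2: float, r_12: float) -> float:
    """R^2 for a criterion regressed on two predictors.

    r_y1, r_y2: correlations of each predictor with the criterion.
    r_12: correlation between the two predictors.
    """
    if abs(r_12) >= 1:
        raise ValueError("predictors must not be perfectly correlated")
    return (r_y1**2 + r_y2**2 - 2 * r_y1 * r_y2 * r_12) / (1 - r_12**2)


def incremental_r2(r_y_new: float, r_y_base: float, r_12: float) -> float:
    """Gain in R^2 from adding the new report to a model holding the base report."""
    return r_squared_two_predictors(r_y_base, r_y_new, r_12) - r_y_base**2


# Hypothetical values resembling Figure 2a (home-specific concerns):
# parent report relates strongly to the criterion, teacher report weakly.
r_parent, r_teacher, r_parent_teacher = 0.50, 0.10, 0.20

print("parent over teacher:", round(incremental_r2(r_parent, r_teacher, r_parent_teacher), 3))
print("teacher over parent:", round(incremental_r2(r_teacher, r_parent, r_parent_teacher), 3))
```

With these illustrative values, the parent report adds explained variance beyond the teacher report, whereas the teacher report adds essentially none beyond the parent report.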

If low correspondence among reports validly reflected contextual variations in patients' disruptive behavior, the patterns of assessment outcomes would be likely to reflect two predictions, each of which is graphically depicted in Figure 2. The first prediction is that each informant's report should “build upon the other” in terms of predicting or relating to relevant clinical metrics (e.g., consensus diagnoses, referral status, treatment response). The uniqueness of informants' reports cannot merely reflect “noise” and must reveal something important about patients' clinical presentations, prognoses, and/or responses to treatment protocols (see also Youngstrom, 2008). Thus, in Figure 2 one can see how conducting a study on the incremental validity of the multi-informant approach depends heavily on the contexts in which patients displayed concerns. Specifically, a sample of patients whose concerns were specific to the home context may result in teacher reports that did not yield incrementally valid information relative to parent reports (Figure 2a). Conversely, a sample of patients who exhibited school-specific concerns may yield parent reports that did not evidence incremental validity relative to teacher reports (Figure 2b). Thus, for multiple informants' reports to be considered incrementally valid relative to each other, patients in a sample should be heterogeneous in terms of the contexts in which they displayed concerns (i.e., a sample of Figure 2a, 2b, and 2c patients). That is, if a sample of patients exhibited individual differences in the context(s) in which they displayed concerns, then each kind of informant who provided reports about these patients (e.g., parents and teachers) would have had the opportunity to observe at least a subgroup of patients' concerns in the sample. Without an informant having the opportunity to observe patients' concerns, one cannot expect that informant to provide incrementally valid reports about such concerns.

The second prediction is that patterns of cross-informant correspondence should reflect contextual variations inherent in patients' concerns. For instance, a pattern in which parents report high levels of disruptive behavior that teachers' reports do not corroborate should indicate that patients exhibited disruptive behavior to a greater extent at home than at school (Figure 2a). Alternatively, consistently high levels of disruptive behavior reported by both parents and teachers should indicate that patients experienced concerns across contexts (Figure 2c).

Considering Constructs Other than Context When Interpreting Informants' Reports

One key issue warrants comment. Specifically, a great deal of research has focused on testing whether low versus high cross-informant correspondence reflects constructs other than contextual variations in displays of patients' concerns (for reviews, see Achenbach, 2006; De Los Reyes & Kazdin, 2005; Kraemer et al., 2003). Thus, drawing inferences about patients' concerns from multi-informant assessments ought to involve examining plausible rival hypotheses for why informants' reports may converge or diverge.

Clinical severity

First, one interpretation of the patterns of mental health concerns illustrated in Figure 2 is that consistencies and inconsistencies between informants' reports reflect individual differences in severity of patients' clinical presentations, and not necessarily contextual variations in patients' concerns. Importantly, the literature is quite equivocal as to whether cross-contextual consistency in patients' mental health concerns can be treated as a proxy for high levels of clinical severity. Indeed, prior work identified relations between informant discrepancies and context-specific displays of mental health concerns, even when accounting for participants' clinical severity (e.g., De Los Reyes, Bunnell, & Beidel, 2013; De Los Reyes, Henry, Tolan, & Wakschlag, 2009). Additionally, studies of patients from a variety of clinical domains (e.g., attention and hyperactivity, conduct problems, social anxiety) are quite inconsistent as to whether patients evidencing concerns across contexts also evidence significantly greater clinical severity or impairment levels than patients who display concerns within specific contexts (e.g., Bögels et al., 2010; Dirks et al., 2012; Drabick et al., 2007). In contrast, studies of informant discrepancies in assessments of large samples of psychiatric inpatients (Carlson & Youngstrom, 2003) and outpatients (Thuppal, Carlson, Sprafkin, & Gadow, 2002) found that patients whose concerns were endorsed by at least two informants evidenced greater levels of clinical impairment, relative to patients whose concerns were endorsed by a single informant. Overall, in informant discrepancies studies, one ought to statistically account for the clinical severity of patients' concerns when examining the relations between informants' reports and contextual variations in patients' concerns.

Informants' perspectives and rater biases

Perhaps the most frequently studied set of constructs in informant discrepancies research are those that reflect variations in informants' perspectives about patients' concerns and possible biases in informants' reports. Research on these informant characteristics has been extensively reviewed in prior work (e.g., De Los Reyes, 2013; De Los Reyes & Kazdin, 2005). Consequently, we will focus on the evidence regarding the most often-studied characteristics. For instance, informants may vary in their perspectives as to which mental health concerns in child patients warrant care (Brookman-Frazee, Haine, Gabayan, & Garland, 2008; Hawley & Weisz, 2003; Jensen-Doss & Weisz, 2008; Yeh & Weisz, 2000). In fact, informants may vary in perceiving that a child's mental health concerns distress the child and thus warrant care, even when all informants converge on endorsing that concerns exist (e.g., Phares & Compas, 1990; Phares & Danforth, 1994). Consequently, informants may vary considerably in their perspectives of patients' mental health concerns, and these discrepant perspectives may impact such aspects of mental health care as access to services and therapeutic engagement (see also Hawley & Weisz, 2003; Yeh & Weisz, 2000). Similarly, informants from different ethnic or racial backgrounds may vary in whether or which behaviors reflect mental health concerns and warrant care, although research on the relation between such backgrounds and informant discrepancies has yielded inconsistent findings (see De Los Reyes & Kazdin, 2005; Duhig et al., 2000). Further, low-to-moderate correspondence levels and directional differences in reporting (e.g., whether children self-report greater concerns than parents report about children) similarly characterize cross-informant correspondence in reports examined in over 20 countries (Rescorla et al., 2013; Rescorla et al., 2014).

Few characteristics in the informant discrepancies literature have been given more research attention than the impact of informants' mental health concerns on their reports of children's mental health. Broadly, the conceptual rationale for this work can be encapsulated in what researchers have termed the depression→distortion hypothesis: When an informant experiences low mood, this causes the informant to attend to, encode, and thus rate children's behavior with greater negative descriptors, relative to use of positive or neutral descriptors (see Richters, 1992; Youngstrom, Izard, & Ackerman, 1999). Importantly, depression→distortion effects should not be seen as mutually exclusive from the previously described characteristics. For instance, depression→distortion effects may result in depressed informants reporting greater levels of patients' mental health concerns than non-depressed informants. These effects potentially impact patients' access to care if mental health professionals encounter discrepant reports, and these discrepant reports result in uncertainties when making clinical decisions. Among the informants providing reports, parents' reports have been the most frequently studied in terms of depression→distortion effects, perhaps because a common risk factor of children's mental health concerns is a family history of such concerns (e.g., Goodman & Gotlib, 1999). Thus, within clinic samples of child patients, parents providing reports of patients' concerns may often experience a key characteristic thought to bias informants' reports.

A key concern with the depression→distortion hypothesis is that it lacks strong empirical support. As mentioned previously, many studies have tested the depression→distortion hypothesis, with some studies finding that informants experiencing depressive symptoms provide reports that indicate greater levels of children's mental health concerns, relative to reports taken from other informants (De Los Reyes & Kazdin, 2005). However, a number of studies have found no such support (e.g., Conrad & Hammen, 1989; De Los Reyes, Goodman, Kliewer, & Reid-Quiñones, 2010; De Los Reyes, Youngstrom et al., 2011a; Hawley & Weisz, 2003; Weissman et al., 1987). Further, most depression→distortion studies do not include independent ratings of children's behavior in the same situation (see also Richters, 1992), and interactions between informants (e.g., parents and teachers) and the subject of the assessment (e.g., children receiving treatment) are often context-dependent (e.g., occur exclusively in home and/or school contexts). Consequently, observing a child behaving differently with their depressed parent at home than they did with teachers at school might be a logical consequence of the nature of interactions between patients and informants.

Among those studies that have used experimental designs and constrained other possible confounding factors (e.g., context of observation), researchers have observed, at best, modest support for the depression→distortion hypothesis. For instance, Jouriles and Thompson (1993) experimentally induced negative mood states in parents before they viewed a previously recorded task involving a “cleanup” activity with their child, and had parents and independent observers rate the child's behavior during the task. The researchers observed non-significant differences between parents' and independent observers' reports of children's behavior during the task. Other studies using mood induction procedures have also failed to support the depression→distortion hypothesis (Youngstrom, Kahana, Starr, & Schwartz, 2004).

The depression→distortion hypothesis's strongest empirical support comes from a quasi-experimental study examining the relation between mothers' depressive mood symptoms and their reports of children's behavior during completion of a frustrating task (Youngstrom et al., 1999). Youngstrom and colleagues (1999) controlled for the context on which informants based their reports by comparing mothers' reports of children completing a frustrating task to independent observers' reports of children during the same task. The researchers observed statistically significant depression→distortion effects, and yet these effects only accounted for between 2% and 20% of incremental variance in mothers' reports, relative to independent observers' reports. Consequently, one would be hard-pressed to discard mothers' reports based on even the highest observed figure of incremental variance (i.e., 20%), as even this figure constitutes a minority of the variance in the mothers' report that could possibly be “afflicted” with rater bias. Overall, the lack of strong support for depression→distortion effects indicates that these effects cannot fully account for the presence of informant discrepancies.

Measurement error

A construct related to that of informants' perspectives and rater biases is measurement error. Measurement error has key implications for research on the validity of the multi-informant approach. Indeed, measurement error truncates the ability of informants' reports to predict criterion variables, thus hindering the ability of multi-informant assessments to evidence incremental and construct validity (see Dirks, Boyle, & Georgiades, 2011; Dirks et al., 2012). Thus, if measurement error explained informant discrepancies, then mental health professionals could not validly infer that discrepancies among informants' reports reflect changes in displays of patients' mental health concerns across contexts.

The psychometrics literature points to three important components of measurement error that have implications for the multi-informant assessment approach: (a) transient error, (b) random error, and (c) systematic error. First, transient error refers to characteristics of the rater that might hinder measurement reliability (Schmidt & Hunter, 1996). In many respects, the literature reviewed previously on the depression→distortion hypothesis represents a case of research on transient error in multi-informant assessment. Second, randomly distributed error variance, particularly within the individual informants' reports in a multi-informant assessment, may pose challenges to reliably assessing discrepancies among informants' reports. This is because assessments of discrepancies between informants' reports can only be as reliable as the individual informants' reports from which one assesses discrepancies (e.g., Rogosa, Brandt, & Zimowski, 1982; Rogosa & Willett, 1983). Third, with regard to systematic error, recent work indicates that multi-informant assessments may yield consistent differences between informants' reports across multiple measures (De Los Reyes, Alfano, & Beidel, 2010, 2011; De Los Reyes, Bunnell et al., 2013; De Los Reyes, Goodman et al., 2008, 2010; De Los Reyes, Youngstrom et al., 2011a, b). Yet, the presence of systematic error may result in giving consistent differences between informants' reports the appearance of meaningful differences (see also De Los Reyes, 2013).
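The second point, that a discrepancy score can be only as reliable as the reports from which it is computed, can be illustrated with the classical test theory formula for the reliability of a simple difference score. The Python sketch below assumes the two reports have equal variances, which is a simplifying assumption made here for illustration. The function name and the reliability values are hypothetical; the cross-informant correlation of .28 is the overall mean reported in this meta-analysis.

```python
def difference_score_reliability(rel_x: float, rel_y: float, r_xy: float) -> float:
    """Classical reliability of the difference X - Y, assuming equal variances.

    rel_x, rel_y: reliabilities of the two informants' reports.
    r_xy: correlation between the two reports.
    """
    if r_xy >= 1:
        raise ValueError("reports must not be perfectly correlated")
    return ((rel_x + rel_y) / 2 - r_xy) / (1 - r_xy)


# Hypothetical report reliabilities paired with a cross-informant r of .28.
for rel in (0.90, 0.75, 0.60):
    d = difference_score_reliability(rel, rel, r_xy=0.28)
    print(f"report reliability {rel:.2f} -> discrepancy reliability {d:.2f}")
```

Under these assumptions, the reliability of the discrepancy never exceeds the average reliability of the two reports when the reports are positively correlated, and it declines as the individual reports become less reliable.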

Overall, measurement error may pose challenges to validly interpreting the outcomes of multi-informant assessments as reflecting contextual variations in patients' concerns. Thus, researchers have examined components of the measurement process that either constrain levels of such error or improve interpretations of informants' reports. Two research literatures appear particularly pertinent in this regard. First, even subtle changes in measurement scaling can have profound effects on informants' reports. For example, on a positively valenced self-report measure of life success, scaling items on a “-5 to 5” range produced greater proportions of respondents rating high life success than the proportion observed when the scale ranged from “0 to 10” (for a review, see Schwarz, 1999). Along these lines, a key element of best practices in multi-informant assessment involves ensuring that assessors hold such measurement features as item content, scaling, and response labeling constant across multiple informants' reports. In this way, an assessor can decrease the likelihood that discrepancies among reports are the result of methodological artifacts of the measurement process (for a review, see De Los Reyes, Thomas et al., 2013). In fact, when researchers hold these measurement factors constant across reports, and the measures informants complete exhibit the same factor structures and similar levels of internal consistency, informants nevertheless provide reports that correspond with each other at low-to-moderate magnitudes (e.g., Achenbach & Rescorla, 2001; Baldwin & Dadds, 2007).

At the same time, holding measurement factors constant across informants' reports does not guarantee that the resulting discrepancies reflect contextual variations in patients' concerns. Indeed, informants may nonetheless react differently to the same measurement instrument, and the reasons for these different reactions may or may not reflect differences in the contexts in which informants observe patients' behavior. For instance, in recent work researchers administered assessments of parental knowledge of adolescents' whereabouts and activities (i.e., a risk factor for adolescent delinquency) to a community-based sample of parents and adolescents (De Los Reyes, Ehrlich et al., 2013). Researchers randomly assigned parents and adolescents to the order in which they completed two different assessments of parental knowledge. Within one assessment protocol, parents and adolescents received training on how to use the number of contexts in which they tend to observe behaviors indicative of parental knowledge to provide Likert-type ratings on survey reports about such knowledge (i.e., greater number of contexts → greater ratings; De Los Reyes, Ehrlich et al., 2013). Within a second protocol, parents and adolescents received instructions to complete the assessments that did not involve any training (i.e., a typical questionnaire completion protocol). Interestingly, the training received by parents and adolescents increased the differences between their reports, relative to the no-training condition. These findings suggest that parents and adolescents differ in reports about parental knowledge, in part, because they view behaviors indicative of parental knowledge in different contexts.

In contrast to the findings of De Los Reyes, Ehrlich et al. (2013), in another study clinicians with experience in assessing and treating child patients read vignettes of hypothetical children receiving a screening evaluation for conduct disorder (De Los Reyes & Marsh, 2011). The clinicians read vignettes that described contextual features in a child's life that the researchers randomly manipulated to reflect either risks for conduct disorder (e.g., associating with deviant peers) or no such risks. The vignettes also described the child as evidencing a single symptom within the fourth edition of the Diagnostic and Statistical Manual of Mental Disorders (DSM-IV) diagnostic criteria for conduct disorder (American Psychiatric Association [APA], 2000). Researchers prompted clinicians to make likelihood ratings of the children that reflected their impressions of whether the children would meet DSM-IV criteria for conduct disorder if a clinician were to administer a full diagnostic evaluation to the child. Further, the clinicians made judgments for 30 hypothetical children, in order to make likelihood judgments for all 15 DSM-IV conduct disorder symptoms rated in both high-risk and low-risk contexts. Thus, all clinicians in the sample made ratings about the same hypothetical children and conduct disorder symptoms, were exposed to the same rating instructions, and had access to the same information about the hypothetical children's environments. Overall, clinicians made greater likelihood ratings for children described in high-risk contexts versus those described in low-risk contexts. These findings suggest that clinicians tended to apply contextual information to their judgments about the children's conduct disorder symptoms. However, clinicians exhibited little correspondence in terms of the specific symptoms for which they applied contextual information. For instance, whereas high-risk contextual information might have influenced one clinician's judgments about a child's truancy, the same high-risk contextual information might have had little influence on another clinician's judgments about the child's truancy. These findings have since been replicated when examining judgments about attention-deficit/hyperactivity concerns, as well as judgments completed by laypeople (Marsh, De Los Reyes, & Wallerstein, 2014).

The findings of De Los Reyes, Ehrlich et al. (2013) and De Los Reyes and Marsh (2011) indicate that holding measurement factors constant alone does not guarantee interpretability of any one informant's report or the discrepancies between informants' reports. Thus, a second literature involves identifying and using validity criteria for assessing context-specific displays of mental health concerns. Using context-specific criterion measures, mental health professionals might improve understanding of the reasons why informants provide discrepant reports. For example, to assess cross-contextual variations in preschool children's disruptive behavior, researchers recently developed the Disruptive Behavior Diagnostic Observation Schedule (DB-DOS; Wakschlag et al., 2010). This behavioral observation measure consists of assessments of children's disruptive behavior as displayed within interactions between children and adult authority figures. The adult authority figures consist of the assessed child's parent and an unfamiliar clinical examiner. Assessors hold the nature of the interactions constant across adult-child interactions. For instance, the parent prompts the child in one interaction to help with cleaning up toys, and in a separate interaction the clinical examiner administers a similar cleanup prompt to the child. The consistency in the structure of adult-child interactions on the DB-DOS allows one to assess in an analogue sense disruptive behavior as displayed in home contexts (e.g., with parental adults) and/or non-home contexts (e.g., with non-parental adults such as teachers). Thus, the DB-DOS might serve as an independent assessment of whether patients display disruptive behavior consistently across contexts or within specific contexts. Such an assessment could serve as a criterion for assessing the validity in interpreting patterns of informants' reports (e.g., parent and teacher reports) as reflective of contextual variations in patients' concerns. 
Below, we describe a study that took exactly this approach.

Another example of a context-sensitive validity criterion comes from research on the social interactions that tend to elicit displays of children's behavioral and emotional concerns (Hartley, Zakriski, & Wright, 2011). In this work, researchers administered behavioral checklists for informants (e.g., parents and teachers) to complete about children. The informants also completed measures about how the same children tend to react (e.g., aggressive behavior) within interactions with peers (e.g., peer bosses child around) and adult authority figures (e.g., adult giving child instructions). This second measure allows researchers to examine whether convergence between informants' reports about children's behavioral and emotional concerns signals similarities in the kinds of interactions encountered across contexts (e.g., child encounters teasing by peers at home and school). Alternatively, one could examine whether divergence between informants' reports about children's concerns reflects the idea that interactions that tend to elicit children's concerns are present in one context (e.g., school) and not another (e.g., home).

The previous examples of context-sensitive validity criteria relied on behavioral observations or survey methods. One final example leverages the time- and context-sensitive properties of physiological arousal. Specifically, individuals differ in how they experience social anxiety, and these experiences can depend on the context (e.g., Bögels et al., 2010). Direct assessments of physiological arousal may distinguish social anxiety patients' experiences within and across these contexts (e.g., arousal during public speaking vs. arousal in anticipation of public speaking; De Los Reyes, Augenstein et al., 2015). These assessments have great potential as validity indicators to interpret informants' reports (De Los Reyes & Aldao, 2015). This is because technology now allows mental health professionals to assess arousal using wireless, ambulatory devices (e.g., heart rate monitors), and implement them in vivo within the laboratory as well as routine clinic settings (see also Thomas, Aldao, & De Los Reyes, 2012).

For example, in one study researchers recruited adolescents who met DSM-IV criteria for social anxiety disorder as well as adolescents who did not meet criteria for any mental disorder (Anderson & Hope, 2009). In this study, adolescents engaged in a series of laboratory social interaction tasks totaling 20 minutes (e.g., one-on-one social interaction and public speaking tasks with trained confederates), to assess adolescents' self-reported changes in arousal in reference to arousal indices of physiological habituation taken during these interactions (i.e., wireless, ambulatory heart rate monitors). Regardless of diagnostic status, adolescents experienced physiological habituation to the social interactions (i.e., sharp increase in heart rate at beginning of task, followed by gradual decrease in heart rate over course of task). Yet, adolescents' self-reports varied as a function of diagnostic status. Specifically, adolescents experiencing social anxiety disorder were more likely than adolescents not meeting criteria for any mental disorder to self-report stable and high levels of arousal from pre-to-post tasks. This observation is consistent with key components of exposure-based therapies for social anxiety. Indeed, these therapeutic approaches often involve training patients to subjectively perceive decreases in physiological arousal within and across social situations that at the outset of therapy patients find anxiety provoking (e.g., Beidel et al., 2007). Overall, these three examples illustrate how mental health professionals might use independent behavioral, survey, and/or physiological assessments to use and interpret informants' clinical reports of patients' concerns.

False-positive and false-negative reports

A set of constructs related to measurement error but with different implications for the multi-informant approach involves concepts drawn from judgment and decision-making research, namely signal detection theory (SDT; for a review, see Swets, Dawes, & Monahan, 2000). Specifically, let us assume that the parent and teacher reports illustrated in Figures 2a and 2b reflected error-free representations of children's behavior, and the parents and teachers systematically varied in terms of exclusive access to observations of children's behavior as displayed at home and school, respectively. If so, then parents and not teachers would endorse every instance of disruptive behavior displayed at home and not school, whereas teachers and not parents would endorse every instance of disruptive behavior displayed at school and not home. Stated another way, both parents and teachers would evidence perfect rates of positive endorsements of “true cases” of disruptive behavior as displayed within their own context of observation. Parents and teachers would also refrain from endorsing disruptive behavior that only occurred outside of their observational context.

Within SDT, researchers refer to true cases of endorsement as true positives and true cases of non-endorsement as true negatives (see also Swets, 1992). However, one should not expect any measure (e.g., an informant's report) to provide an error-free representation of the construct being assessed. Thus, SDT delineates concepts for representing incorrect positive endorsements for non-cases (i.e., false positives) and incorrect non-endorsements for true cases (i.e., false negatives). For instance, in a case in which a parent endorsed disruptive behavior that a teacher did not endorse, if in reality the patient did not display any disruptive behavior in any context, the parent report would reflect a false positive. Conversely, both parent and teacher might fail to endorse disruptive behavior in a case in which the patient actually did display disruptive behavior in both contexts; in this case both reports would reflect false negatives.

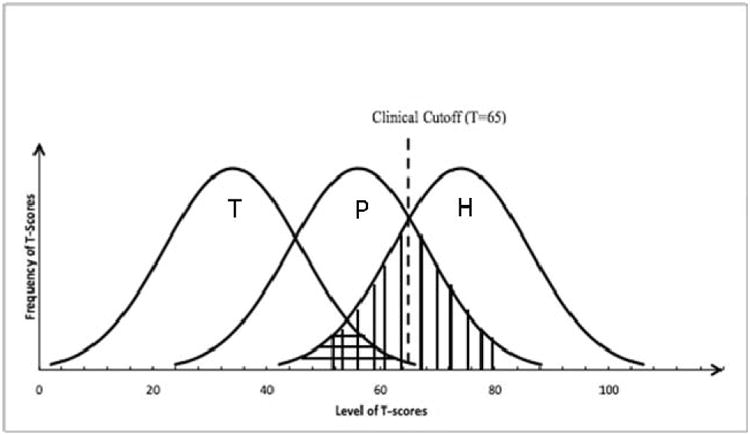

As in addressing measurement error, one might address false positive and false negative outcomes with an independent, context-sensitive assessment of patients' concerns. Figure 3 provides an illustration of this approach. For example, consider a hypothetical sample of children in which teachers and parents completed standardized behavioral checklists of children's disruptive behavior, and researchers also administered an independent observation of children's disruptive behavior in the home context. In Figure 3, the left-most distribution of scores relates to teacher reports, the center distribution to parent reports, and the right-most distribution to the independent home observation. The x-axis denotes a standardized score (i.e., T-score) derived from each assessment to represent children's disruptive behavior. Further, we included a demarcation at the T-score “65” to denote the clinical cutoff at which researchers would identify cases of disruptive behavior. The y-axis denotes the frequency for scores at a given T-score level. Given standardization of the disruptive behavior scores, all three assessment modalities in the example follow a normal distribution.

Figure 3.

Graphical depiction of T-score distributions of teacher reports (left, labeled “T”), parent reports (center, labeled “P”), and independent home-based assessments of disruptive behavior (right, labeled “H”), in a hypothetical sample of young children.
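As a brief illustration of the T-score metric and clinical cutoff described for Figure 3, the sketch below converts raw checklist scores to T-scores (mean of 50, standard deviation of 10) and flags scores at the cutoff of 65. The raw scores, the normative mean and standard deviation, and the function names are hypothetical. Treating scores exactly at the cutoff as cases is likewise an assumption, not a detail specified in the example.

```python
CUTOFF_T = 65  # clinical cutoff used in the Figure 3 example


def to_t_scores(raw_scores, norm_mean, norm_sd):
    """Convert raw scores to T-scores (mean 50, SD 10).

    norm_mean and norm_sd are hypothetical normative values for the checklist.
    """
    return [50 + 10 * (x - norm_mean) / norm_sd for x in raw_scores]


def flag_cases(t_scores, cutoff=CUTOFF_T):
    """Flag scores at or above the cutoff (assumption: 'at' counts as a case)."""
    return [t >= cutoff for t in t_scores]


# Hypothetical raw disruptive behavior scores for five children.
raw = [4, 10, 16, 19, 22]
t_scores = to_t_scores(raw, norm_mean=10, norm_sd=6)
cases = flag_cases(t_scores)

for r, t, c in zip(raw, t_scores, cases):
    print(f"raw={r:>2}  T={t:.0f}  case={c}")
```

Under these made-up normative values, a raw score must fall at least 1.5 standard deviations above the normative mean to reach the cutoff.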

In Figure 3, the independent assessment occurred in the home context. Thus, one would expect greater overlap between identified cases of disruptive behavior concerns using the independent assessment and identified cases using parent report (i.e., relative to teacher report). Accordingly, using the independent assessment as a reference point, researchers could examine whether patients identified via the independent assessment were more likely to be those patients positively endorsed by parents relative to teachers. Stated another way, the independent assessment allows the research team to identify the proportions of parent and teacher reports evidencing true positives and negatives, as well as false positives and negatives. With these proportions, one could estimate how sensitive (i.e., proportion of true cases that the report correctly endorsed; true positives relative to the sum of true positives and false negatives) and specific (i.e., proportion of non-cases that the report correctly did not endorse; true negatives relative to the sum of true negatives and false positives) the parent reports were in detecting home-specific observations of patients' concerns, relative to an informant who observed patients in a context other than home (see also Youngstrom, 2013). One could also structure an assessment similar to that described in Figure 3 to gauge the context-sensitivity of teachers' reports (i.e., independent school observation). Thus, one might incorporate methods from SDT with the OTM to improve use and interpretation of multi-informant assessments.
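To make the logic of this comparison concrete, the following sketch scores parent and teacher reports against an independent home observation, using the conventional SDT definitions of sensitivity (true positives divided by true positives plus false negatives) and specificity (true negatives divided by true negatives plus false positives). All T-scores are fabricated for illustration, the function names are hypothetical, and treating the home observation as the reference standard is an assumption carried over from the Figure 3 example.

```python
CUTOFF_T = 65  # clinical cutoff from the Figure 3 example


def confusion_counts(informant_flags, criterion_flags):
    """Count true/false positives and negatives against the criterion."""
    tp = fp = tn = fn = 0
    for reported, actual in zip(informant_flags, criterion_flags):
        if reported and actual:
            tp += 1
        elif reported and not actual:
            fp += 1
        elif not reported and actual:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def sensitivity_specificity(informant_flags, criterion_flags):
    """Return (sensitivity, specificity); NaN if a denominator is zero."""
    tp, fp, tn, fn = confusion_counts(informant_flags, criterion_flags)
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


# Hypothetical T-scores for ten children (not real data).
home_obs_t = [70, 68, 66, 72, 50, 48, 55, 60, 45, 52]  # independent home observation
parent_t = [69, 66, 60, 71, 52, 50, 66, 58, 44, 50]
teacher_t = [55, 67, 50, 58, 49, 66, 54, 67, 47, 51]

criterion = [t >= CUTOFF_T for t in home_obs_t]
parent_sens, parent_spec = sensitivity_specificity(
    [t >= CUTOFF_T for t in parent_t], criterion
)
teacher_sens, teacher_spec = sensitivity_specificity(
    [t >= CUTOFF_T for t in teacher_t], criterion
)

print(f"parent:  sensitivity={parent_sens:.2f}, specificity={parent_spec:.2f}")
print(f"teacher: sensitivity={teacher_sens:.2f}, specificity={teacher_spec:.2f}")
```

In this fabricated sample, parent reports detect home-observed cases more often than teacher reports do, which is the pattern one would expect if parent reports were sensitive to home-specific displays of disruptive behavior. The same structure could be applied with an independent school observation as the criterion to gauge teachers' reports.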

Informants' cognitive abilities and social desirability

Lastly, how might one interpret the unique qualities of informants' reports, beyond that they reflect contextual variations in patients' concerns, levels of clinical severity, and/or rater biases? That is, discrepancies between adult informants such as parents and teachers might originate from patients' concerns varying across contexts. However, informants' reports may also vary in light of the relationship that the patient shares with the informant and the context in which this relationship develops. For instance, a parent may provide a report based on extensive experiences with the patient, and although these experiences may develop over the course of several years, they may not be perceived within the context of normative childhood behavior (De Los Reyes et al., 2009). Conversely, a teacher's relationship with the patient may develop within the context of a single academic year and a large classroom of other students. That is, a teacher's report may reflect a relatively limited time range of experiences with a patient. However, the teacher may have the opportunity to calibrate their report based on normative classroom behavior (see also Drabick et al., 2007).