Abstract

The Workload and Time Management Survey of Central Cancer Registries was conducted in 2011 to assess the amount of time spent on work activities usually performed by cancer registrars. A survey including 39 multi-item questions, together with a work activities data collection log, was sent by email to the central cancer registry (CCR) manager in each of the 50 states and the District of Columbia. Twenty-four central cancer registries (47%) responded to the survey. Results indicate that registries faced reductions in budgeted staffing between 2008 and 2009. The number of source records and total cases were important indicators of workload. Four core activities (abstracting at the registry, visual editing, case consolidation, and resolving EDIT reports) accounted for about half of registry workload. We estimate that an average of 12.4 full-time equivalents (FTEs) is required to perform all cancer registration activities tracked by the survey; however, estimates vary widely by registry size. These findings may be useful for registries as a benchmark for their own workload and time-management data and to develop staffing guidelines.

Keywords: caseload, central registry staffing, staffing survey, time management, workload

Introduction

As of 2009, more than 12.5 million Americans were living with cancer.1 To help track the incidence of malignant and other neoplastic disease, hospital and state-based cancer registries collect and report statistical data to state and federal cancer agencies. As of 2010, there were an estimated 1,500 hospital and 51 central cancer registries in the United States.2 These registries employ over 7,500 specially trained cancer registrars and other staff who collect, manage, and analyze data on persons diagnosed with cancer. The work of cancer registries is critical to informing national and state policy on cancer treatment, cancer research, cancer screening, and cancer preventive services. Despite the invaluable work of registrars in hospital-based and central cancer registries, little is known about their workload, staffing levels, and staffing challenges.

Cancer registrars are expected to be experts in cancer patient data management. Their primary responsibility is to “provide timely, accurate, and complete data” on all cancer diagnoses and patients in the United States.3 In 2006, the National Cancer Registrars Association (NCRA) formed a recruitment and retention task force that commissioned a study of cancer registrars to learn more about the profession, characteristics of registrars, educational pathways and certification, and registrar concerns about their work and workload. That study found that recruiting and keeping qualified cancer registry staff was a major concern of registry managers and directors.4 Although most cancer registrars expressed a strong commitment to their field, many said that they felt overwhelmed by the demands of the job and undervalued given the amount of work they do and its importance to cancer surveillance. A key theme from the focus groups and key informant interviews was the absence of staffing standards and guidelines across registries. Participants stated that workload standards would help them advocate for adequate staffing as well as assist them with staffing plans.5

The purpose of the 2011 Workload and Time Management Survey of Central Cancer Registries was to describe the environment in which registrars work, current issues that may make performing central cancer registry work more challenging, and the relationship between workload and staffing within the registry. The University of California, San Francisco (UCSF) conducted the study with funding from the Centers for Disease Control and Prevention (CDC). Findings from this study provide national data so that central cancer registries may have a benchmark for comparison to their own cancer registry data to make decisions about staff size and configuration. In addition, these findings provide central cancer registry administrators with the data needed to advocate, plan, and budget for their cancer registries.

Methods

With input from the funding organizations, researchers formed a 26-member technical advisory committee (TAC) composed of cancer registry experts with extensive experience in hospital and central cancer registries. The study team developed the survey tool and data collection instruments and submitted them to the US Office of Management and Budget (OMB) for approval. The survey instrument included 39 multi-item questions covering the following subject areas:

Facility and registry characteristics

Caseload size and composition

Staffing and administration

Reporting

Registry procedures

Data management and automation

Registrar activities and workload

Respondent opinions and concerns

OMB approval (control no. 0920-0706) was received on December 5, 2012. An email was sent to each of the 51 CCR managers and included an invitation to the survey, a glossary of words and terms used in the survey, the Work Activities Journal, and instructions for completing the journal. The Work Activities Journal included 20 work activities identified by the researchers and the TAC as the most important components of registrar workload in central cancer registries. Activities in the Work Activities Journal were divided into 3 categories: weekly, monthly, and yearly activities. For frequently performed activities, staff were asked to record the amount of time spent performing those activities each day for 1 week. For less frequently performed activities, staff were asked to estimate the amount of time required to perform those activities on a monthly or annual basis. The manager or director then totaled the times reported for the entire staff and entered the totals for the registry as a whole in the online survey.
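The journal procedure described above (daily time entries for weekly activities, totaled per staff member and then summed by the manager for the whole registry) can be sketched as follows. This is a hypothetical illustration only, assuming each cell is recorded as an "hh:mm" string and a blank cell means no time was spent; the function names and sample entries are invented and are not part of the survey instrument.

```python
def parse_hhmm(entry: str) -> int:
    """Convert one 'hh:mm' journal cell to minutes; a blank cell counts as 0."""
    entry = entry.strip()
    if not entry:
        return 0
    hours, minutes = entry.split(":")
    return int(hours) * 60 + int(minutes)


def format_hhmm(total_minutes: int) -> str:
    """Format minutes as 'hh:mm'; hours may exceed 24 for weekly totals."""
    hours, minutes = divmod(total_minutes, 60)
    return f"{hours}:{minutes:02d}"


def registry_weekly_totals(journals: list[dict[str, list[str]]]) -> dict[str, str]:
    """Sum each activity over Days 1-5 per staff member, then across all staff."""
    totals: dict[str, int] = {}
    for journal in journals:  # one journal per staff member
        for activity, daily_entries in journal.items():
            minutes = sum(parse_hhmm(cell) for cell in daily_entries)
            totals[activity] = totals.get(activity, 0) + minutes
    return {activity: format_hhmm(m) for activity, m in totals.items()}


# Hypothetical journals for two staff members (illustrative values, not survey data).
staff_a = {"Visual editing": ["2:30", "1:45", "", "3:00", "0:15"]}
staff_b = {
    "Visual editing": ["1:00", "", "2:00", "", "1:30"],
    "Resolving EDIT reports": ["0:30", "0:30", "", "", "1:00"],
}

print(registry_weekly_totals([staff_a, staff_b]))
# {'Visual editing': '12:00', 'Resolving EDIT reports': '2:00'}
```

Working in whole minutes avoids rounding drift when many "hh:mm" cells are added, and the result is converted back to "hh:mm" only for reporting.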

After multiple email and telephone reminders, 24 central cancer registries responded to the survey, for a response rate of 47% (Appendix 1). One of these registries was involved in an experimental source record study at the time of data collection and received over 1 million source records as part of that study, so its data were excluded from all analyses. Of the remaining 23 registries, 18 responded to all questions and 5 responded to some, but not all, questions. Respondents were divided into 4 groups (quartiles) of nearly equal size based on their annual number of source records. Data for the 23 registries were analyzed using the statistical package Stata. The small number of respondents does not allow for correlation or inferential analyses.
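One way to form 4 groups of nearly equal size from 23 registries is sketched below. The paper does not state the exact grouping rule; this sketch assumes registries are sorted by annual source records and that any remainder goes to the lower groups, which reproduces the 6, 6, 6, 5 group sizes shown in Table 1. The function name and the sample counts are hypothetical.

```python
def split_into_groups(values: list[float], n_groups: int = 4) -> list[list[float]]:
    """Sort values ascending and split them into n_groups of nearly equal size.

    When len(values) is not divisible by n_groups, the lower groups each get
    one extra member, so 23 registries split into groups of 6, 6, 6, and 5.
    """
    ordered = sorted(values)
    base, extra = divmod(len(ordered), n_groups)
    groups: list[list[float]] = []
    start = 0
    for i in range(n_groups):
        size = base + (1 if i < extra else 0)
        groups.append(ordered[start:start + size])
        start += size
    return groups


# Hypothetical annual source-record counts for 23 registries.
source_records = [1_000 * (i + 1) for i in range(23)]
groups = split_into_groups(source_records)
print([len(g) for g in groups])  # [6, 6, 6, 5]
```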

Results

Central cancer registries were asked a number of questions about where they are housed, which organizations they report to, and the reference year for the registry. These factors may be related to workload because some states may require additional data reporting. Reference year is important because older registries are likely to follow more cases than newer registries. Nearly 80% of survey respondents reported that their central cancer registry was housed in a state health department. The remaining 20% reported that their central cancer registries were located either in universities or operated through a consultant relationship with an entity in the state. The average age of the registries, based on reference year, was 15.2 years, with a minimum of 10 years and a maximum of 17 years. The 3 responding registries that report to the National Cancer Institute’s (NCI) Surveillance, Epidemiology, and End Results (SEER) program had an older mean age; 1 SEER registry reported a reference year dating back 38 years. Of the 24 respondents, 23 reported to the CDC’s National Program of Cancer Registries (NPCR), including 2 that reported to both CDC-NPCR and NCI-SEER, and 1 reported exclusively to NCI-SEER.

Respondents’ Concerns about Staffing, Training, and Resources

Staffing, staff training, and technology are fundamental to a central registry’s ability to perform its work. Survey respondents were asked several questions about hiring and retention, adequate staffing, meeting regulatory requirements, staff development, and other concerns. Concerns were rated on a 6-point scale, with 1 indicating no concern and 6 indicating extreme concern. Registries were asked whether hiring and retention were a concern for them. Over 65% of all registries reported that compensating staff well enough to retain them, finding qualified staff, and funding an additional position were a “strong” or “extreme” concern. About 58% of registries said that staffing another FTE registrar was a “strong” or “extreme” need. Several questions asked about regulatory requirements and whether registries needed more training/development to meet those requirements. Few registries reported needing to improve software training or medical or coding training, or needing help to meet specific state, NPCR, or SEER requirements. However, over 54% of registries reported that education/training for collaborative staging was a “strong” or “extreme” need for staff.

Number of Source Records and Caseload

The survey sought to quantify caseload, staffing, and time spent on various cancer registration activities. The total number of source records, the origin of those records, and the number of unique cases resulting from them may all affect registry workload. Registries in the survey reported receiving, on average, 72,211 source records per year, ranging from 4,263 to 290,974 records. Table 1 displays the average and range of source records for each quartile of respondents. Survey respondents were also asked about the origin of source records and the means used to transmit them to the registry. On average, over 67% of source records came from hospitals, nearly 10% came from pathology reports, and another 10% came from other sources. Nearly two thirds of all records were sent to the registries via a secure Web site. Some registries (6.1%) reported traveling to the site to abstract records.

Table 1.

Average Source Records by Quartile of Respondents

| Respondents | No. of responses | Mean | Median | Min | Max |
|---|---|---|---|---|---|
| All registries | 23 | 72,211 | 56,023 | 4,263 | 290,974 |
| Quartile 1 | 6 | 7,997 | 8,135.5 | 4,263 | 11,745 |
| Quartile 2 | 6 | 34,567 | 31,690.5 | 17,956 | 56,023 |
| Quartile 3 | 6 | 83,100 | 82,881.0 | 63,155 | 56,023 |
| Quartile 4 | 5 | 181,373 | 142,045.0 | 101,708 | 290,974 |
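As a consistency check on Table 1, the overall mean can be recovered as the size-weighted average of the quartile means. The sketch below uses only the group sizes and means from the table; because those means are themselves rounded, agreement is expected only to within rounding. (Medians cannot be combined this way.)

```python
# (number of registries, mean annual source records) for each quartile in Table 1
quartiles = [(6, 7_997), (6, 34_567), (6, 83_100), (5, 181_373)]

total_registries = sum(n for n, _ in quartiles)
pooled_mean = sum(n * mean for n, mean in quartiles) / total_registries

print(total_registries, round(pooled_mean))  # 23 72211
```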

Source records may be combined to create a single unique case that may or may not be reportable. Workload may increase when a large number of source records must be reviewed to produce a single reportable case. Table 2 displays the average number and range of reportable and non-reportable cases reported. Registries reported having, on average, 34,103 unique reportable cases and 2,796 unique non-reportable cases, with an average of 1.9 source records per case.
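Because Table 2 summarizes source records per case across registries (mean, median, range), the 1.9 figure appears to be an average of per-registry ratios, which can differ from total source records divided by total cases. The sketch below illustrates the difference with invented numbers for three hypothetical registries; it assumes the denominator is all unique cases (reportable plus non-reportable), which the paper does not state explicitly.

```python
# Hypothetical registries: (source records, reportable cases, non-reportable cases).
registries = [
    (8_000, 4_000, 500),
    (30_000, 20_000, 1_000),
    (120_000, 40_000, 2_000),
]

# Per-registry ratio: source records / all unique cases (assumed denominator).
ratios = [src / (rep + nonrep) for src, rep, nonrep in registries]
mean_of_ratios = sum(ratios) / len(ratios)

# Pooled ratio: total source records / total unique cases across registries.
pooled_ratio = sum(s for s, _, _ in registries) / sum(r + n for _, r, n in registries)

print([round(r, 2) for r in ratios], round(mean_of_ratios, 2), round(pooled_ratio, 2))
# [1.78, 1.43, 2.86] 2.02 2.34
```

The mean of ratios gives each registry equal weight, while the pooled ratio is dominated by the largest registries; with widely varying registry sizes, the two summaries can diverge noticeably.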

Table 2.

Number of Reportable and Non-Reportable Cases

| Measure | No. of responses | Mean | Median | Min | Max |
|---|---|---|---|---|---|
| Unique and reportable cases | 20 | 34,103 | 21,307 | 3,407 | 12,734 |
| Unique and non-reportable cases | 20 | 2,796 | 224 | 0 | 33,215 |
| Source records per case | 20 | 1.9 | 1.5 | 0.8 | 7.1 |

Note: One registry was excluded because it was involved in an experimental source record study and received more than 1 million source records as part of that study. Three registries that did not report both reportable and non-reportable cases were excluded.

Trends in Staffing Budgets

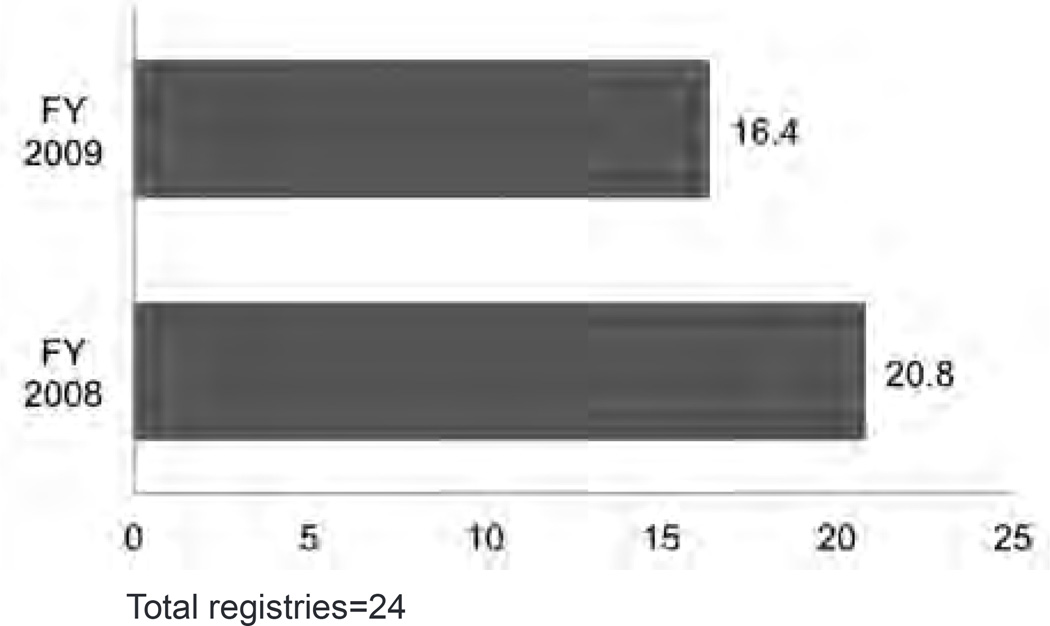

A common theme in respondents’ concerns and needs was the level of staffing available to perform central registry functions. Survey respondents reported a decline in budgeted staffing between 2008 and 2009, from an average of 20.8 budgeted FTEs in 2008 to 16.4 FTEs in 2009 (Figure 1). The reduction in budgeted staffing is also reflected in filled and vacant positions: on average, registries reported 15.8 filled and 1 vacant FTE in 2008, and 15.2 filled and 1.5 vacant FTEs in 2009.

Figure 1.

Average Budgeted Staff: Comparison of 2008 and 2009

Total registries=24
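The staffing changes reported above can be expressed as a percentage change and a vacancy rate, as in the sketch below. It uses only the average FTE values given in the text and assumes vacancy rate means vacant FTEs divided by filled plus vacant FTEs. Because it works from averages rather than per-registry data, the resulting rates are approximate summaries, not averages of each registry’s own vacancy rate.

```python
# Average FTEs reported in the text for 2008 and 2009.
budgeted = {2008: 20.8, 2009: 16.4}
filled = {2008: 15.8, 2009: 15.2}
vacant = {2008: 1.0, 2009: 1.5}

pct_change_budgeted = 100 * (budgeted[2009] - budgeted[2008]) / budgeted[2008]
change_filled = filled[2009] - filled[2008]

# Vacancy rate, assumed here to be vacant / (filled + vacant), in percent.
vacancy_rate = {y: 100 * vacant[y] / (filled[y] + vacant[y]) for y in (2008, 2009)}

print(f"Budgeted FTEs: {pct_change_budgeted:.1f}% change")  # -21.2% change
print(f"Filled FTEs: {change_filled:+.1f}")  # -0.6
print({y: f"{r:.1f}%" for y, r in vacancy_rate.items()})  # {2008: '6.0%', 2009: '9.0%'}
```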

Hours Worked in Specific Activities

Registrars were asked to collect data and report on weekly, monthly, or yearly hours spent by the registry on specific activities. Ideally, these activities would capture the majority of the cancer registration work. The statistics reported below reflect 17 registries that responded to all questions and did not report unusual circumstances that would influence their hours worked. Respondents were asked how many hours they spent performing the following cancer registration activities:

Case finding (manual and electronic)

Abstracting (at hospitals and at the central registry)

Follow-up (active and passive)

Visual editing

Case consolidation (manual and electronic)

Resolving EDIT reports

Resolving quality issues

Audits (casefinding, re-abstracting)

Database management

Staff training (central registry, reporting facility)

Travel (operations, conferences)

Death clearance (matching, follow-back)

Findings from this survey identified 4 activities that required the most staff time in respondent registries. Those included the following:

Abstracting at registry

Visual editing

Case consolidation

Resolving EDIT reports

These 4 core activities account for approximately half of all the workload at central cancer registries.
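The “approximately half” figure can be checked against Table 3 by adding the mean weekly hours of the 4 core activities, counting manual and electronic case consolidation together, and dividing by the mean total. This is a rough ratio of means across the 17 registries, not the average of each registry’s own share.

```python
# Mean weekly hours from Table 3 (17 registries).
weekly_means = {
    "Abstracting, at central registry": 69.3,
    "Visual editing": 55.4,
    "Case consolidation, manual": 29.0,
    "Case consolidation, electronic": 59.1,
    "Resolving EDIT reports": 31.3,
}
total_weekly_mean = 435.4  # "Total activities" row of Table 3

core_hours = sum(weekly_means.values())
share = core_hours / total_weekly_mean
print(f"{core_hours:.1f} of {total_weekly_mean} hours per week = {share:.0%}")
# 244.1 of 435.4 hours per week = 56%
```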

The Work Activities Journal is found in Appendix 2.

Table 3 details the weekly hours spent on each workload activity. Because of the small number of respondents, data could not be analyzed by registry size. The wide range between the minimum and maximum hours reported is likely due to the range in size of registry caseloads. On average, the 17 registries included reported spending the most hours on abstracting at the central registry (69.3 hours per week), electronic case consolidation (59.1 hours per week), visual editing (55.4 hours per week), resolving EDIT reports (31.3 hours per week), and resolving quality issues (29.7 hours per week). On average, the fewest hours were spent on passive follow-up (6.4 hours per week), travel for conferences/education (6.4 hours per week), death clearance matching (4 hours per week), travel for operations (3.8 hours per week), and active follow-up (0.9 hours per week). Each registry spent, on average, 435.4 total hours per week performing the activities included in this survey.

Table 3.

Weekly Hours Spent in Workload Activities

| Activity | Mean hours | Median hours | Min hours | Max hours |
|---|---|---|---|---|
| Case finding, manual | 18.1 | 6.0 | 0.0 | 151.0 |
| Case finding, electronic | 18.7 | 1.0 | 0.0 | 109.5 |
| Abstracting, at hospital | 13.1 | 0.0 | 0.0 | 149.0 |
| Abstracting, at central registry | 69.3 | 53.0 | 0.0 | 225.5 |
| Follow-up, active | 0.9 | 0.0 | 0.0 | 10.0 |
| Follow-up, passive | 6.4 | 2.0 | 0.0 | 34.0 |
| Visual editing | 55.4 | 30.0 | 0.0 | 307.8 |
| Case consolidation, manual | 29.0 | 15.0 | 0.0 | 134.0 |
| Case consolidation, electronic | 59.1 | 15.0 | 0.0 | 239.0 |
| Resolving edit report | 31.3 | 10.0 | 0.0 | 102.5 |
| Resolving quality issues | 29.7 | 10.0 | 0.0 | 207.5 |
| Audits, case finding | 23.7 | 20.9 | 0.0 | 88.9 |
| Audit, re-abstracting | 8.1 | 5.3 | 0.0 | 27.0 |
| Database management | 24.3 | 18.6 | 0.0 | 83.7 |
| Training, registry staff | 14.5 | 7.6 | 0.9 | 76.9 |
| Training, reporting facility staff | 10.8 | 5.1 | 0.1 | 59.4 |
| Travel, operations | 3.8 | 1.5 | 0.0 | 20.0 |
| Travel, conferences | 6.4 | 3.3 | 0.2 | 20.0 |
| Death clearance, matching | 4.0 | 2.7 | 0.1 | 16.1 |
| Death clearance, follow-back | 8.9 | 6.2 | 0.8 | 44.7 |
| Total activities | 435.4 | 321.7 | 64.4 | 1304.7 |

Estimating Staffing from Hours Spent on Cancer Registration Activities

The estimated number of FTEs was calculated by dividing the annual hours worked (see Table 4) by 1,820 hours per year, which corresponds to a 35-hour work week over 52 weeks. This calculation suggests that, to perform the cancer registration activities listed, a hypothetical registry with the mean workload would need at least 12.4 FTEs. The median calculated FTE was 9.2. However, the wide range of estimated FTEs (1.8–37.3) suggests that the needs of many registries, particularly those with very large source record workloads, may differ substantially from the mean.
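The FTE calculation above can be reproduced with a few lines of code. This sketch applies the stated divisor of 1,820 hours (35 hours × 52 weeks) to the total annual hours in Table 4; the constant and function names are illustrative.

```python
HOURS_PER_WEEK = 35
WEEKS_PER_YEAR = 52
HOURS_PER_FTE_YEAR = HOURS_PER_WEEK * WEEKS_PER_YEAR  # 1,820 hours


def estimated_fte(annual_hours: float) -> float:
    """Convert annual hours spent on the tracked activities into FTEs."""
    return annual_hours / HOURS_PER_FTE_YEAR


# Total annual hours from Table 4: mean, median, min, max.
for label, hours in [("mean", 22_618.8), ("median", 16_710.2),
                     ("min", 3_342.0), ("max", 67_809.0)]:
    print(f"{label}: {estimated_fte(hours):.1f} FTE")
# mean: 12.4 FTE, median: 9.2 FTE, min: 1.8 FTE, max: 37.3 FTE (one per line)
```

Equivalently, dividing the mean weekly total from Table 3 by a 35-hour week gives 435.4 / 35 ≈ 12.4 FTEs.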

Table 4.

Annual Hours Spent in Workload Activities and Calculated FTE

| Activity | Mean hours | Median hours | Min hours | Max hours |
|---|---|---|---|---|
| Case finding, manual | 939.1 | 312.0 | 0.0 | 7,852.0 |
| Case finding, electronic | 972.7 | 52.0 | 0.0 | 5,694.0 |
| Abstracting, at hospital | 679.1 | 0.0 | 0.0 | 7,748.0 |
| Abstracting, at central registry | 3,604.8 | 2,756.0 | 0.0 | 11,726.0 |
| Follow-up, active | 45.9 | 0.0 | 0.0 | 520.0 |
| Follow-up, passive | 331.9 | 104.0 | 0.0 | 1,768.0 |
| Visual editing | 2,881.4 | 1,560.0 | 0.0 | 16,003.0 |
| Case consolidation, manual | 1,505.7 | 780.0 | 0.0 | 6,968.0 |
| Case consolidation, electronic | 3,071.1 | 780.0 | 0.0 | 12,428.0 |
| Resolving edit report | 1,625.8 | 520.0 | 0.0 | 5,330.0 |
| Resolving quality issues | 1,546.6 | 520.0 | 0.0 | 10,790.0 |
| Audits, case finding | 1,222.8 | 1,080.0 | 0.0 | 4,587.0 |
| Audit, re-abstracting | 418.6 | 276.0 | 0.0 | 1,392.0 |
| Database management | 1,252.1 | 960.0 | 0.0 | 4,320.0 |
| Training, registry staff | 753.4 | 396.0 | 48.0 | 3,997.5 |
| Training, reporting facility staff | 563.4 | 266.5 | 3.0 | 3,090.0 |
| Travel, operations | 200.2 | 80.0 | 0.0 | 1,040.0 |
| Travel, conferences | 332.3 | 170.0 | 8.0 | 1,042.0 |
| Death clearance, matching | 209.3 | 140.0 | 7.0 | 835.0 |
| Death clearance, follow-back | 462.8 | 320.0 | 40.0 | 2,325.0 |
| Total activities | 22,618.8 | 16,710.2 | 3,342.0 | 67,809.0 |

| Measure | Mean FTE | Median FTE | Min FTE | Max FTE |
|---|---|---|---|---|
| Estimated FTE (total activities/1,820) | 12.4 | 9.2 | 1.8 | 37.3 |

FTE=full-time equivalent.

Discussion

This survey of central cancer registries was an important first step at the national level to describe and collect information on registry staffing and workload. These results provide some important new information that can be used by registries to compare their staffing and workload with other registries. The survey also provides a framework for assessing workload and staffing needs that could be replicated by states over time and may be useful for staff training or in the distribution of workload within a registry.

A key finding from this study is that workload standards are in place in some registries, although we do not have information on when those standards were established or how they compare across registries. About 20% of respondents reported that they have workload standards for all positions. Central cancer registries may want to develop a resource for sharing best practices in workload and staffing across registries.

This study also highlighted important changes in budgeted staffing, filled positions, and vacancies. Between 2008 and 2009, filled positions in central cancer registries decreased and vacancies increased. This may be due to state budget cuts as well as to recruiting difficulties highlighted in other studies.2,4 Registries reported an average of 0.6 fewer filled positions in 2009 than in 2008 and an average vacancy of 1.5 FTEs in 2009. These changes may affect productivity and the ability to meet state and national reporting requirements. Registries may use these workload data as evidence to support the need for specific positions, such as additional certified tumor registrars. Using similar data collection tools, registries could study workload over time as operational changes occur, such as the introduction of additional reporting requirements, and use those data to identify needed changes in staffing.

This study highlighted that both the number of source records reviewed and the number of cases are measures that can indicate workload. The relationship between the number of source records and the number of reportable and non-reportable cases varied greatly among registries. The number of cases ranged from about 3,400 to 160,500, with a mean of nearly 37,000 cases, the vast majority of which were reportable. The number of source records varied even more, ranging from about 4,300 to over 290,000, with a mean of about 72,000. If a registry reviews a greater than average number of source records to form a single case, as with the registry that reviewed over 1 million source records, its staffing needs would be greater than the number of cases alone would indicate.

Limitations

The survey has several limitations, some of which were also noted by respondents. In open-ended comments at the end of the survey, several respondents noted that the list of work activities on the data collection tool was not comprehensive enough to capture all the work they do, or not detailed enough for them to determine which tasks fell under which questions. Therefore, the hours reported in this study may underestimate, and represent a lower bound of, the actual hours registrars spend performing their work.

Another limitation is that not every registry reported hours for all activities listed. In addition, the total FTEs reported may be overestimated if senior-level staff who do not perform any or most of the activities listed in the Work Activities Journal were counted in the FTE total. Central registry staff who perform non-cancer registration functions may also have been erroneously included in the FTE count, although their hours and type of work are not captured in the activities journal. This limitation may lead to an underestimate of workload and staffing estimates relative to caseload. Future workload studies should further delineate and track the time spent on cancer registration and non-cancer registration activities.

This first-time survey of staffing and workload in central cancer registries provides descriptive information about these registries as well as baseline information about workload and staffing. Respondents expressed concern about adequate staffing and the need to find qualified cancer registry staff to hire. These concerns are consistent with those expressed in the 2011 hospital registry workload study and the 2006 NCRA workforce study of cancer registrars.2,4,5 These workload and staffing findings give registries an opportunity to compare their own operations and assess how they may differ. These findings may help central cancer registries begin to build the evidence for the staffing needed to meet cancer data reporting objectives and requirements.

Acknowledgments

Linda Mulvihill is a Public Health Advisor for the Centers for Disease Control and Prevention. The findings and conclusions in this publication are those of the author(s) and do not necessarily represent the official position of the Centers for Disease Control and Prevention.

Appendix 1

List of State Registries Responding to the Survey

Alaska Cancer Registry

Arizona Cancer Registry

Arkansas Cancer Registry

Illinois State Cancer Registry

Kansas Cancer Registry

Louisiana Tumor Registry

Massachusetts Cancer Registry

Minnesota Cancer Surveillance System

Montana Central Tumor Registry

Nebraska Cancer Registry

New Hampshire Cancer Registry

New Jersey State Cancer Registry

New Mexico Cancer Registry

New York State Central Cancer Registry

North Carolina Central Cancer Registry

North Dakota Statewide Cancer Registry

Ohio Cancer Incidence Surveillance System

Oklahoma Central Cancer Registry

Pennsylvania Cancer Registry

South Dakota Cancer Registry

Tennessee Cancer Registry

Texas Cancer Registry

Vermont Cancer Registry

West Virginia Cancer Registry

Appendix 2

Work Activities Journal

NCRA/CDC-NPCR Workload & Time Management Survey of Central Cancer Registries Work Activities Journal (OMB No. 0920-0706)

Weekly Activities

| Job activities | Day 1 (hh:mm) | Day 2 (hh:mm) | Day 3 (hh:mm) | Day 4 (hh:mm) | Day 5 (hh:mm) | Weekly total (hh:mm) |
|---|---|---|---|---|---|---|
| Casefinding: | | | | | | |
| Manual | | | | | | |
| Electronic | | | | | | |
| Abstracting: | | | | | | |
| Abstracting at hospital/facility | | | | | | |
| Abstracting at central registry | | | | | | |
| Follow Up: | | | | | | |
| Active follow-up | | | | | | |
| Passive follow-up | | | | | | |
| Quality Assurance: | | | | | | |
| Visual editing | | | | | | |
| Manual case consolidation | | | | | | |
| Electronic case consolidation | | | | | | |
| Resolving EDIT reports | | | | | | |
| Resolving other quality control issues | | | | | | |

Monthly Activities

| Job activities | Time (hh:mm) |
|---|---|
| Audits: | |
| Casefinding audits | |
| Re-abstracting audits | |
| Database Management: | |
| Database management | |

Yearly Activities

| Job activities | Time (hh:mm) |
|---|---|
| Training/Development: | |
| Central registry staff | |
| Reporting facility staff | |
| Travel: | |
| For registry operations (eg, facility site visits for technical assistance, one-on-one training, software support, etc) | |
| For education/workshops/conferences | |
| Death Clearance: | |
| Death clearance matching | |
| Death clearance follow back | |

References

1. Howlader N, Noone AM, Krapcho M, et al., editors. SEER Cancer Statistics Review, 1975–2009 (Vintage 2009 Populations). Bethesda, MD: National Cancer Institute; 2012. Available at: http://seer.cancer.gov/csr/1975_2009_pops09.

2. Chapman SA, Lindler V. NCRA Workload and Staffing Study: Guidelines for Hospital Cancer Registry Programs. Full Report. University of California, San Francisco, Center for the Health Professions; January 2011.

3. National Cancer Registrars Association. Cancer Registrar FAQ. Available at: http://www.ncra-usa.org/i4a/pages/index.cfm?pageid=3301. Accessed January 21, 2012.

4. Chapman SA, Lindler V, McClory V, Nielsen C, Dyer W. Frontline Workers in Cancer Data Management: Workforce Analysis Study of the Cancer Registry Field; Final Report. University of California, Center for the Health Professions and Department of Social and Behavioral Sciences, School of Nursing; June 2006.

5. Mulvihill L, Chapman S, Lindler V. Frontline Workers in Cancer Data Management: Workforce Analysis Study of the Cancer Registry Field. J Registry Manage. 2006;33(3):89–90.