Abstract

Visual attention has long been known to be drawn to stimuli that are physically salient or congruent with task-specific goals. Several recent studies have shown that attention is also captured by stimuli that are neither salient nor task-relevant, but that are rendered in a color that has previously been associated with reward. We investigated whether another feature dimension—orientation—can be associated with reward via learning and thereby elicit value-driven attentional capture. In a training phase, participants received a monetary reward for identifying the color of Gabor patches exhibiting one of two target orientations. A subsequent test phase in which no reward was delivered required participants to search for Gabor patches exhibiting one of two spatial frequencies (orientation was now irrelevant to the task). Previously rewarded orientations robustly captured attention. We conclude that reward learning can imbue features other than color—in this case, specific orientations—with persistent value.

Keywords: attentional capture, reward learning, orientation, Gabor patch

The perception of visual scenes often entails the selection of some stimuli (and not others) by attention. Attention can be deployed voluntarily in accordance with ongoing goals, or it can be captured involuntarily. The ability to deploy visual attention selectively is critical for effective behavior: attention increases the speed and accuracy with which organisms identify stimuli and subsequently take appropriate action (e.g., Posner, 1980), and focused attention can reduce distraction by irrelevant stimuli, thereby reducing the likelihood of erroneous or slowed responses (Yantis & Johnston, 1990). Under some circumstances, however, attention is captured involuntarily. Two forms of attentional capture have been well documented: capture by stimuli that are physically salient (e.g., Theeuwes, 1992, 2010; Yantis & Jonides, 1984), and capture by stimuli that are congruent with current search goals (e.g., Folk, Remington, & Johnston, 1992; Folk & Remington, 1998).

Recently we reported that attention can also be captured by stimuli that are neither physically salient nor goal-relevant, but instead possess features that have previously been associated with reward, even when that reward is no longer available (Anderson, Laurent, & Yantis, 2011a, 2011b, 2012; Anderson & Yantis, 2012, 2013; see Anderson, 2013, for a review). This phenomenon, termed value-driven attentional capture, is robust enough to persist across experimental contexts (e.g., from a visual search task to a flanker task; see Anderson et al., 2012) and over several months with no further training (see Anderson & Yantis, 2013). Such findings argue that learned value plays a distinct role in the guidance of attention.

These prior demonstrations of value-driven attentional capture have typically used color as the critical feature that is associated with reward and that comes to capture attention. For example, in Anderson et al. (2011b), red and green targets were each associated with different amounts of reward during a training phase; in a subsequent task requiring search for particular shapes, formerly rewarded but currently irrelevant colors captured attention. In the present study we sought to determine whether perceptual features other than color can be imbued with value and thereby capture attention. Color might be especially susceptible to being associated with rewarding outcomes because, like intensity, it is represented at the earliest stages of the visual system. Extending value-driven attentional capture to features other than color would suggest a general capacity for associating reward with arbitrary features, with the reward-associated features then guiding the deployment of attention.

Attentional biases for reward-associated stimulus features other than color have been reported in two prior studies. In Theeuwes and Belopolsky (2012), each of two oriented bars was associated with a different amount of reward during training; these oriented bars then served as physically salient shape-singleton distractors during an unrewarded test phase. Oculomotor capture was greater for the oriented bar previously associated with comparatively high reward, mirroring effects for reward-associated colors observed in a covert attention task (Anderson et al., 2011a). In one of the first studies of value-driven attention, Della Libera and Chelazzi (2009) employed a design in which different complex shapes were associated with high reward when presented as either a target or a distractor. Subsequent competition between a target shape and a superimposed, previously reward-associated distractor shape was modulated by reward history. Specifically, the distractor competed for attention more robustly when it had previously been associated with high reward as a target, and less robustly when it had previously been associated with high reward as a distractor.

Although both of these elegant studies document an influence of prior reward on attention to features other than color, it remains unclear whether such features, once associated with reward, can specifically influence the guidance of attention. The effects of learned value reported by Della Libera and Chelazzi (2009) reflect biases in the ability of attention to resolve spatiotemporal competition; in fact, the same reward-associated shapes did not involuntarily capture attention when presented as distractors in an orienting task (Della Libera & Chelazzi, 2009, Experiment 2). In Theeuwes and Belopolsky (2012), factors other than the learned value of a specific orientation would be expected to guide attention to the distractors, owing to their relative physical salience and status as shape singletons. Therefore, in the present study, we paired specific Gabor patch orientations with high and low reward during training and assessed whether those orientations would capture attention in a subsequent test phase when presented as an irrelevant and unrewarded distractor feature. Importantly, in our design the distractor was not physically salient: its specific orientation was all that differentiated it from other nontargets. Attentional capture by such reward-associated distractors would therefore be uniquely attributable to attention having selected the distractor because its orientation had previously been paired with reward.

All previous studies of value-driven attentional capture by nonsalient stimuli have associated value with stimulus color during the training phase and required a discrimination of stimulus shape (e.g., diamonds vs. circles) during the test phase. Shape is resolved at higher levels of the ventral visual stream (represented explicitly in area V4; Pasupathy & Connor, 2002) than color (represented explicitly in V1; Johnson, Hawken, & Shapley, 2008). One possibility, therefore, is that value-driven attentional capture occurs only when reward-associated features are represented earlier in the visual processing stream than task-relevant features, as was the case in our prior experiments (e.g., Anderson et al., 2011a, 2011b, 2012; Anderson & Yantis, 2012, 2013).

The present experiment offers an opportunity to test this idea. The task-relevant dimension at test (spatial frequency) and the feature imbued with value during training (orientation) are represented at similar early levels of the visual system (Victor et al., 1994; Mazer et al., 2002). This experiment will therefore allow us to determine whether value-driven attentional capture is observed even when the target and distractor features are both represented at similar stages of visual processing.

The present experiment included a training phase and a test phase. In the training phase, one of the two target orientations (the high-value orientation) was usually followed by high reward and the other was usually followed by low reward. Participants then completed a test phase in which they searched for a target Gabor patch with a unique spatial frequency and once again reported its color; the orientation of the Gabor patches was a heterogeneous, nonsalient, task-irrelevant feature. The results revealed that formerly rewarded orientations captured attention in the test phase, even though participants were searching for spatial frequency singletons. A control experiment ruled out the possibility that physical properties of the reward-associated stimuli, rather than their learned value, drove this capture.

Both visual working memory (VWM) capacity and resistance to attentional capture partly reflect the ability to exert control over information processing (e.g., Vogel, McCollough, & Machizawa, 2005). Consistent with this, attentional capture by previously rewarded colors has been shown to correlate inversely with VWM capacity as measured by a color change detection task (Anderson et al., 2011b, 2013a; Anderson & Yantis, 2012). To test whether this relationship extends to attentional capture by previously rewarded orientations, participants also performed the same change detection task used in these prior studies.

Method

Participants

Twenty participants were recruited from the Johns Hopkins University community. All were screened for normal or corrected-to-normal visual acuity and color vision. For the rewarded portion of the task, participants earned performance-based monetary compensation ranging from $17.00 to $24.00 (mean = $21.60); for the remainder of the experiment, they were paid a flat rate of $10 per hour. Twenty new participants were recruited for a control experiment and received course credit as compensation.

Apparatus and Tasks

Participants viewed stimuli in a dimly lit room from a distance of 61 cm on an Asus VE247 LCD monitor with a resolution of 1920 × 1080 pixels and a refresh rate of 75 Hz. Stimuli were generated on a Mac Mini running custom Matlab software written with the Psychophysics Toolbox (Brainard, 1997).
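Converting the visual-angle sizes used throughout the Method into pixels requires the monitor's physical width, which is not reported here. The sketch below assumes a hypothetical visible width of 52 cm (roughly that of a 24-inch-class 16:9 panel); the measured value should be substituted in practice.

```python
import math

# Viewing geometry from the Method section.
VIEWING_DISTANCE_CM = 61.0
H_RES_PX = 1920

# ASSUMPTION: the visible screen width is not reported; 52 cm is a
# hypothetical value for a 24-inch-class 16:9 monitor.
SCREEN_WIDTH_CM = 52.0


def pixels_per_degree(distance_cm: float = VIEWING_DISTANCE_CM,
                      width_cm: float = SCREEN_WIDTH_CM,
                      h_res_px: int = H_RES_PX) -> float:
    """Pixels subtended by 1 degree of visual angle at the screen centre."""
    px_per_cm = h_res_px / width_cm
    cm_per_degree = 2 * distance_cm * math.tan(math.radians(0.5))
    return px_per_cm * cm_per_degree


ppd = pixels_per_degree()
gabor_size_px = 2.3 * ppd       # Gabor patch extent (2.3 deg)
ring_radius_px = 5.0 * ppd      # radius of the search-array circle (5 deg)
period_px_8cpd = ppd / 8        # pixels per grating cycle at 8 cycles/deg
print(f"{ppd:.1f} px/deg, Gabor {gabor_size_px:.0f} px, "
      f"8-cpd period {period_px_8cpd:.1f} px")
```

Under this assumed width, one degree spans roughly 39 pixels, so a 2.3° patch is about 90 pixels wide and an 8 cycles/deg grating has a period of about 5 pixels, comfortably above the 2-pixel sampling limit.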

Visual Working Memory (VWM) Task

Immediately prior to the main experiment we collected a measure of VWM capacity using a custom Matlab/Psychophysics Toolbox implementation of a color change detection task (Fukuda & Vogel, 2009). On each of 120 trials, a fixation cross appeared in the center of the screen for 500 ms. A randomly generated memory array of four, six, or eight small non-overlapping squares, each a different color, was then presented on the screen for 100 ms. Each square subtended approximately 0.5° of visual angle. After a 900 ms blank period, a probe screen was displayed showing a single colored square in the same position that a square had previously occupied in the memory array. Participants made an unspeeded key press to indicate whether the square on the probe screen had the same color as the corresponding square from the memory array. To respond in the affirmative, participants pressed the ‘z’ key; otherwise participants pressed the ‘m’ key. No feedback was provided. Visual working memory capacity was computed from the responses as described by Fukuda and Vogel (2009).
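The text defers to Fukuda and Vogel (2009) for the capacity formula. A common estimator for single-probe change detection, and the one assumed in this sketch, is Cowan's K = N × (H − FA), where N is the set size, H the hit rate on change trials, and FA the false-alarm rate on no-change trials. Averaging K across the three set sizes is likewise an assumption, and the function names are hypothetical.

```python
from statistics import mean


def cowan_k(set_size: int, hit_rate: float, false_alarm_rate: float) -> float:
    """Cowan's K for single-probe change detection: K = N * (H - FA)."""
    return set_size * (hit_rate - false_alarm_rate)


def capacity_from_trials(trials):
    """Average Cowan's K over set sizes.

    trials: iterable of (set_size, changed, said_different) tuples, one per trial.
    ASSUMPTION: K is computed separately per set size and then averaged.
    Each set size needs at least one change and one no-change trial.
    """
    by_size = {}
    for set_size, changed, said_different in trials:
        c = by_size.setdefault(set_size, {"hits": 0, "change": 0, "fas": 0, "same": 0})
        if changed:
            c["change"] += 1
            c["hits"] += said_different      # True counts as 1
        else:
            c["same"] += 1
            c["fas"] += said_different
    ks = [cowan_k(n, c["hits"] / c["change"], c["fas"] / c["same"])
          for n, c in by_size.items()]
    return mean(ks)
```

For example, a hit rate of .80 and a false-alarm rate of .20 at set size 4 give K = 4 × 0.6 = 2.4 items.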

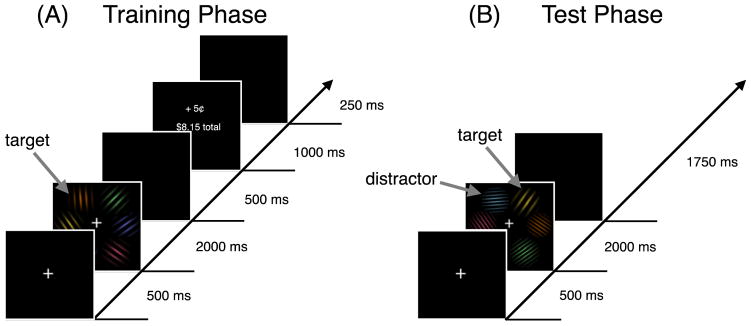

Training Phase

As shown in Figure 1A, each training phase trial began with a fixation display, followed by a search array, and ended with a feedback display. All displays had a black background. The fixation display consisted of a central white cross (0.5° × 0.5° of visual angle). The search array consisted of the fixation cross and six Gabor patches (approximately 2.3° × 2.3° of visual angle) equally spaced along an imaginary circle with a radius of 5°. Each of the six Gabor patches was rendered in a different color (red, orange, yellow, green, cyan, or indigo). Each display included a single target stimulus: on half of the trials the target had a near-vertical orientation (356° or 4°), and on the other half it had a near-horizontal orientation (86° or 94°). These tilt offsets were introduced to avoid the preferential preattentive selection known to occur for cardinal (non-oblique) orientations (Meigen et al., 1994); this encouraged attention to the specific orientation of the target, thereby maximizing the featural specificity of the reward learning. The direction of the target's tilt offset was randomly selected on each trial. The orientations of the remaining five nontarget stimuli were drawn without replacement from the set of 35°, 45°, 55°, 125°, 135°, and 145°. Each Gabor patch had a spatial frequency of either 4 or 8 cycles per degree, randomly selected. The feedback display informed participants of the amount of reward earned on the current trial (or that no reward was earned if the response was incorrect) and the total amount of reward they had earned to that point.
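The display rules above can be sketched as a plain trial specification (no rendering). The function and field names are hypothetical; we also assume that colors are randomly assigned to locations and that the first location sits at an arbitrary 0° angle on the circle, since neither detail is specified.

```python
import math
import random

COLORS = ["red", "orange", "yellow", "green", "cyan", "indigo"]
NONTARGET_ORIENTATIONS = [35, 45, 55, 125, 135, 145]   # degrees
TARGET_ORIENTATIONS = {"vertical": (356, 4), "horizontal": (86, 94)}
SPATIAL_FREQUENCIES = (4, 8)                           # cycles per degree
RING_RADIUS_DEG = 5.0


def training_display(target_axis: str, rng: random.Random) -> list[dict]:
    """Return six hypothetical stimulus specs; exactly one is the target.

    target_axis: "vertical" or "horizontal" (balanced across trials by the caller).
    """
    colors = rng.sample(COLORS, k=6)                          # one colour per patch
    nontarget_oris = rng.sample(NONTARGET_ORIENTATIONS, k=5)  # without replacement
    target_pos = rng.randrange(6)
    stimuli = []
    for pos in range(6):
        angle = math.radians(60 * pos)  # equally spaced; 0 deg start is an assumption
        is_target = pos == target_pos
        stimuli.append({
            "x_deg": RING_RADIUS_DEG * math.cos(angle),
            "y_deg": RING_RADIUS_DEG * math.sin(angle),
            "color": colors[pos],
            # Target tilt direction chosen at random; nontargets pop a unique value.
            "orientation": (rng.choice(TARGET_ORIENTATIONS[target_axis])
                            if is_target else nontarget_oris.pop()),
            "sf_cpd": rng.choice(SPATIAL_FREQUENCIES),
            "is_target": is_target,
        })
    return stimuli
```

Drawing the five nontarget orientations with `random.Random.sample` guarantees that no orientation repeats within a display, which matches "without replacement".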

Figure 1.

Sequence of trial events in the training phase (A) and test phase (B). In the training phase, the target was a near-vertically or near-horizontally oriented Gabor patch. In the test phase, the target was a low spatial frequency Gabor patch among high spatial frequency Gabor patches or a high spatial frequency Gabor patch among low spatial frequency Gabor patches.

Test Phase

As shown in Figure 1B, each test phase trial began with a fixation display, followed by a search array. The search array was similar to that in the training phase with two important differences. First, the target was now a spatial frequency singleton, either 8 cycles per degree (when presented among 4-cycles-per-degree nontarget stimuli) or 4 cycles per degree (when presented among 8-cycles-per-degree nontarget stimuli). Second, on half of the trials one of the nontargets was a distractor: on one-quarter of the trials the distractor was oriented near-horizontally; on another quarter of the trials the distractor was oriented near-vertically. All of the other stimuli, including the target, had orientations drawn without replacement from the set of 35°, 45°, 55°, 125°, 135°, and 145°.

Control Experiment

Participants in the control experiment performed only the test phase without the prior training phase.

Design and Procedure

The experimenter provided participants with written and oral instructions at the beginning of the experiment. The training phase was split across two sessions held on separate days no more than two days apart, with 576 trials in the first session and 240 trials in the second. The test phase (480 trials) followed the training trials in the second session. Participants received the total compensation they had earned at the end of the second session.

Training Phase

In the training phase, each trial began with a fixation display presented for 500 ms, followed by the onset of the array of Gabor patches. The display remained visible for 2000 ms during which participants were to respond. The screen was then blank for 500 ms and was followed by the feedback display for 1000 ms. The next trial began after a 250 ms blank screen.
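Reading the 2000 ms search display as fixed rather than response-terminated, every training trial has the same duration. The event list below simply totals the intervals given above (the event labels are ours) and, because test trials are stated to last as long as training trials, applies the same duration to the test phase; practice trials and rest breaks are excluded.

```python
# Training-phase trial events and their durations in ms (from the text).
TRAINING_TRIAL = [
    ("fixation", 500),
    ("search array (response window)", 2000),
    ("blank", 500),
    ("feedback", 1000),
    ("inter-trial blank", 250),
]

trial_ms = sum(duration for _, duration in TRAINING_TRIAL)
for label, n_trials in [("day 1 training", 576), ("day 2 training", 240),
                        ("test phase", 480)]:
    minutes = n_trials * trial_ms / 60_000
    print(f"{label}: {n_trials} trials x {trial_ms} ms = {minutes:.1f} min")
```

Each trial lasts 4250 ms, giving about 40.8, 17.0, and 34.0 minutes of trial time for the three blocks, respectively.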

Participants were instructed to perform a two-alternative forced choice task in which they reported the color of the near-horizontal or near-vertical Gabor patch in the display. If the patch was of a “cool” color (i.e., green, cyan, or indigo) participants were instructed to press the “z” key on the keyboard using their left index finger. If the patch was of a “warm” color (i.e., red, orange, or yellow) participants were instructed to press the “m” key using their right index finger.

Following each correct response in the training phase, a feedback screen indicated the monetary reward earned on that trial and the total reward obtained so far. For half of the participants, the near-vertical orientations were high-value targets and the near-horizontal orientations were low-value targets; this assignment was reversed for the remaining participants. Correct responses to high-value targets were rewarded with 5 cents on 80% of trials and 1 cent on 20%; correct responses to low-value targets were rewarded with 1 cent on 80% of trials and 5 cents on 20%. After an incorrect response, the word “Incorrect” appeared above the running total. If no response was made within the time limit, the words “Too slow” appeared.
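The response mapping and probabilistic reward schedule can be sketched as a single scoring function. The function names and the feedback string format are hypothetical, and a missing key stands for a response outside the 2000 ms window. The expected payoff of a correct response is 0.8 × 5 + 0.2 × 1 = 4.2 cents for high-value targets and 0.8 × 1 + 0.2 × 5 = 1.8 cents for low-value targets.

```python
import random

WARM = {"red", "orange", "yellow"}   # "m" key, right index finger
COOL = {"green", "cyan", "indigo"}   # "z" key, left index finger

# (probability, cents) pairs for a correct response.
REWARD_SCHEDULE = {
    "high": [(0.8, 5), (0.2, 1)],
    "low": [(0.8, 1), (0.2, 5)],
}


def correct_key(target_color: str) -> str:
    if target_color in WARM:
        return "m"
    if target_color in COOL:
        return "z"
    raise ValueError(f"unknown colour: {target_color}")


def score_trial(target_color, target_value, key, rng):
    """Return (feedback_text, cents_earned) for one training trial.

    key is None when no response was made within the response window.
    """
    if key is None:
        return "Too slow", 0
    if key != correct_key(target_color):
        return "Incorrect", 0
    probs, amounts = zip(*REWARD_SCHEDULE[target_value])
    cents = rng.choices(amounts, weights=probs, k=1)[0]
    return f"+{cents} cents", cents


def expected_cents(target_value: str) -> float:
    """Expected reward (cents) for a correct response to this target type."""
    return sum(p * c for p, c in REWARD_SCHEDULE[target_value])
```

As a rough check, with half of the 816 training trials involving each target type (an average of 3.0 cents per correct response) and about 88% overall training accuracy, the expected payout is roughly $21 to $22, close to the mean payment reported under Participants.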

To ensure that the instructions were understood and to encourage participants to ask questions, the experimenter remained present while participants completed 20 unrewarded practice trials before the training phase trials on the first day. Practice trials allowed an additional 2000 ms for responding. Participants were given a rest break every 100 trials.

Test Phase

In the test phase, the trial sequence was the same except that no feedback was displayed unless participants had been too slow to respond, in which case the words “Too slow” appeared for 1000 ms. Throughout the experiment, the displays were fully randomized and counterbalanced for the location and identity of targets and distractors. Participants reported the color of the target following the same procedure, and total trial duration matched that of the training phase.

Data Analysis

Response time analyses were restricted to correct responses falling within three standard deviations of the mean for each condition for each participant.
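The trimming rule can be written as a small function. It is a sketch under two assumptions the text leaves open: the mean and standard deviation are computed from correct trials only, within each participant × condition cell, and the sample (n − 1) standard deviation is used. The dictionary keys are hypothetical.

```python
from collections import defaultdict
from statistics import mean, stdev


def trim_rts(trials, n_sd: float = 3.0):
    """Keep correct trials within n_sd SDs of their participant x condition mean.

    trials: iterable of dicts with keys "subject", "condition", "rt", "correct".
    Returns the retained trials as a list of the same dicts.
    """
    cells = defaultdict(list)
    for t in trials:
        if t["correct"]:                       # errors are excluded first
            cells[(t["subject"], t["condition"])].append(t)

    kept = []
    for cell_trials in cells.values():
        rts = [t["rt"] for t in cell_trials]
        if len(rts) < 2:                       # SD undefined; keep the lone trial
            kept.extend(cell_trials)
            continue
        m, sd = mean(rts), stdev(rts)
        kept.extend(t for t in cell_trials if abs(t["rt"] - m) <= n_sd * sd)
    return kept
```

Note that with very few trials per cell a 3-SD criterion can rarely exclude anything, because the largest attainable z score in a sample of n is (n − 1)/√n.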

Results

Training Phase

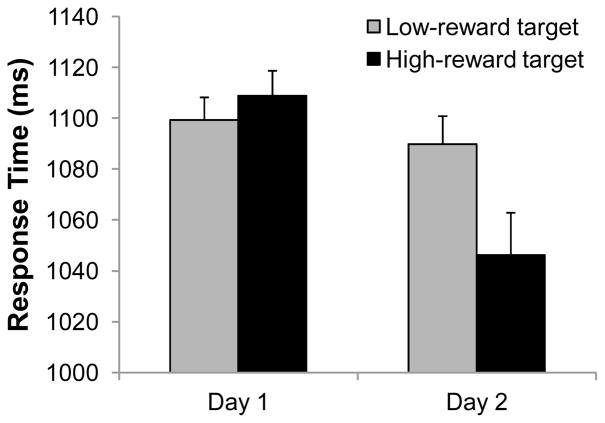

An analysis of variance (ANOVA) on mean RT in the training phase with target value (high vs. low) and day (day 1 vs. day 2) as factors revealed a main effect of day [F(1,19) = 4.41, p = .049] and a significant value × day interaction [F(1,19) = 5.32, p = .033]; the main effect of target value was not significant [F(1,19) = 1.95, p = .179] (see Figure 2). Although RT was similar for high- and low-value targets on day 1 [t(19) = −1.25, p = .227], participants were marginally faster to report the high-value target on day 2 [t(19) = 1.94, p = .067]. The same ANOVA on accuracy revealed only a main effect of day [F(1,19) = 14.19, p = .001] (other Fs < 1). Mean accuracy during the training phase was 87.1% on day 1 and 91.3% on day 2.
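In a 2 × 2 within-subjects design every effect has a single numerator degree of freedom, so each F(1, n − 1) equals the squared one-sample t statistic of a per-participant contrast score. For example, the interaction F(1,19) = 5.32 corresponds to |t(19)| ≈ 2.31 on each participant's difference of differences. The sketch below illustrates this identity; the nested-list layout is our assumption, not the authors' analysis code.

```python
from statistics import mean, variance


def rm_anova_2x2(data):
    """Within-subjects 2 x 2 ANOVA computed from contrast scores.

    data: list of per-participant 2 x 2 tables, data[i][a][b], where
    a indexes target value (0 = high, 1 = low) and b indexes day (0, 1).
    Returns {effect: (F, df_error)}; each F has 1 numerator df.
    """
    n = len(data)
    contrasts = {
        "value": [(y[0][0] + y[0][1]) / 2 - (y[1][0] + y[1][1]) / 2 for y in data],
        "day": [(y[0][0] + y[1][0]) / 2 - (y[0][1] + y[1][1]) / 2 for y in data],
        "value x day": [(y[0][0] - y[1][0]) - (y[0][1] - y[1][1]) for y in data],
    }
    # F = t^2 = n * mean(c)^2 / var(c), with the sample variance of c.
    return {name: (n * mean(c) ** 2 / variance(c), n - 1)
            for name, c in contrasts.items()}
```

Because scaling a contrast changes its mean and standard deviation proportionally, the halving in the main-effect contrasts does not affect F.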

Figure 2.

RT during the Training Phase for Day 1 and Day 2. Error bars are within-subject standard errors of the mean.
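The figure captions do not say how the within-subject standard errors were computed. One widely used method, assumed in this sketch, is Cousineau's normalization with Morey's correction: subtract each participant's mean, add back the grand mean, compute the per-condition SE, and scale it by √(J/(J − 1)) for J conditions.

```python
import numpy as np


def within_subject_se(data) -> np.ndarray:
    """Cousineau-Morey within-subject SE for each condition (an assumed method).

    data: array-like of shape (n_participants, n_conditions) of condition means.
    """
    data = np.asarray(data, dtype=float)
    n, j = data.shape
    # Remove between-participant variability while preserving the grand mean.
    normalized = data - data.mean(axis=1, keepdims=True) + data.mean()
    se = normalized.std(axis=0, ddof=1) / np.sqrt(n)
    # Morey's correction for the bias introduced by normalization.
    return se * np.sqrt(j / (j - 1))
```

If participants differ only by a constant offset, these error bars shrink to zero, which is the intended behavior: they reflect only condition-related variability within participants.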

In the training phase, task-specific goals and reward value favored the same stimuli (i.e., the targets). Of primary interest were the results from the test phase, in which the learned value of particular orientations competed with task-specific goals for attentional selection, allowing for assessment of value-driven attentional capture.

Test Phase

In the test phase, participants produced faster correct responses when the target was low in spatial frequency than when it was high in spatial frequency [mean difference = 32 ms, t(19) = 2.13, p = .047]. This replicates a well-known effect of spatial frequency and contrast on RT (Lupp, Hauske, & Wolf, 1976; Vassilev & Mitov, 1976; Breitmeyer, 1975).

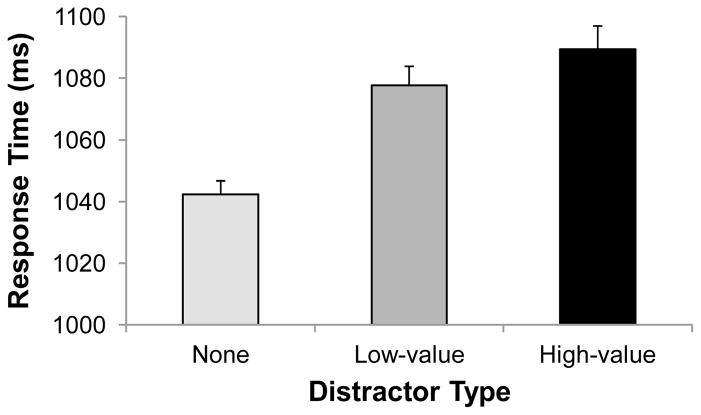

RT during the test phase did not differ significantly for near-horizontal vs. near-vertical distractors [mean difference = 23 ms, t(19) = 1.85, p = .081]. We therefore collapsed across distractor orientation and focused our analyses on the effects of reward on attentional capture by the distractors. RT differed significantly across the three distractor conditions [see Figure 3, F(2,38) = 10.02, p < .001]. Relative to trials without distractors, the presence of a formerly high-value distractor slowed participants by 47 ms and the presence of a formerly low-value distractor slowed them by 35 ms [ts > 4.3, ps < .001]; the difference in RT between the high- and low-value distractor conditions was not significant [t(19) = 0.89, p = .383]. Accuracy also differed across the three distractor conditions [F(2,38) = 3.39, p = .044]: participants were less accurate when a high-value distractor was present (89.7%) than on low-value distractor (91.3%) and distractor-absent (91.5%) trials.
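The three-level distractor effect, F(2,38), is the output of a one-way repeated-measures ANOVA on per-participant condition means. A minimal sketch of that computation follows; it applies no sphericity correction, since the text does not say whether one was used.

```python
import numpy as np


def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA without sphericity correction.

    data: array-like of shape (n_participants, k_conditions), e.g. mean RT
    for distractor-absent, low-value and high-value conditions.
    Returns (F, df_conditions, df_error).
    """
    y = np.asarray(data, dtype=float)
    n, k = y.shape
    grand = y.mean()
    ss_cond = n * ((y.mean(axis=0) - grand) ** 2).sum()
    ss_subj = k * ((y.mean(axis=1) - grand) ** 2).sum()
    ss_total = ((y - grand) ** 2).sum()
    ss_error = ss_total - ss_cond - ss_subj   # condition x participant residual
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f = (ss_cond / df_cond) / (ss_error / df_error)
    return f, df_cond, df_error
```

With n = 20 participants and k = 3 conditions the degrees of freedom are (2, 38), matching those reported above.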

Figure 3.

Response time (RT) from the test phase for correct responses to displays containing no distractor, a formerly low-value distractor, and a formerly high-value distractor. Error bars are within-subject standard errors of the mean.

There was a marginally significant correlation between the magnitude of attentional capture by the high-value orientation at test (high-value distractor minus distractor-absent conditions) and the effect of reward value on RT during training (low-value minus high-value target conditions) [Pearson’s r = 0.412, p = .071]. Participants who afforded higher attentional priority to high-value targets during training also tended to be more distracted by these stimuli at test. We found no significant correlation between VWM capacity (M = 2.58, SD = 1.07) and overall accuracy [Pearson’s r = −0.20, p = .40], suggesting that individuals with low capacity did not generally perform poorly at the task. Nor was there a correlation between capacity and the magnitude of RT slowing caused by the presence of a high-value distractor [Pearson’s r = 0.01, p = .96], even though intersubject variability was 40% greater than in previous studies in which a significant correlation was observed (Anderson et al., 2011b).

Control Experiment

Although both reward-associated orientations captured attention in the main experiment, the difference in capture between high- and low-value orientations was not statistically significant. It therefore remained possible that the physical characteristics of these stimuli, rather than their learned value, were responsible for their ability to capture attention. To rule out such non-value-related influences, we ran a control experiment in which we examined the effect of the same oriented stimuli without any prior training.

Participants in the control experiment were about as accurate as those in the main experiment (mean accuracy = 90.9%). Because near-vertical and near-horizontal orientations had not been associated with reward, we expected, and found, no effect on RT of the presence of a near-vertical or near-horizontal distractor [F(2,38) = 2.32, p = .112]. Mean RTs on trials with near-horizontal distractors, near-vertical distractors, and no distractors were 1018 ms, 994 ms, and 996 ms, respectively.

We directly compared RT in the presence of near-vertical and near-horizontal distractors (compared to distractor absent trials) for the main reward experiment and the control experiment. The slowing in RT caused by the distractors was greater when they were previously associated with reward [Welch two-sample t-test: t(29.56) = 3.06, p = .005]. This analysis confirms that attentional capture in the reward experiment’s test phase was driven by learned value and not simply by the physical salience of near-vertical and near-horizontal orientations.
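The fractional degrees of freedom, t(29.56), come from the Welch-Satterthwaite approximation used when the variances of two independent groups are not assumed equal. In this sketch each input would be a list of per-participant distractor costs (distractor-present minus distractor-absent RT) for one experiment; the p value again requires a t-distribution CDF and is omitted.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's unequal-variance t-test for independent samples: returns (t, df)."""
    va, vb = variance(a) / len(a), variance(b) / len(b)   # squared standard errors
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    # Welch-Satterthwaite degrees of freedom.
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df
```

With two groups of 20, the Welch df must fall between 19 and 38; the reported value of 29.56 indicates that the two experiments' distractor costs differed somewhat in variance.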

Discussion

Attention is captured by stimuli whose color has been paired with reward (e.g., Anderson et al., 2011b). Here we examined whether another visual feature, orientation, could similarly be imbued with value through learning so that it would capture attention. We associated different Gabor-patch orientations with different amounts of reward in a training phase and assessed whether those orientations would capture attention when they were irrelevant and unrewarded in a test phase. We found that formerly rewarded orientations reliably captured attention after they were no longer rewarded or task-relevant. Moreover, this capture occurred even though the task-relevant feature (spatial frequency) and the reward-associated feature (orientation) are both represented at similarly early stages of visual processing.

In the present study, although attentional capture by high-value distractors was numerically larger than attentional capture by low-value distractors, this difference was not statistically reliable. This general pattern has been observed in several prior studies using nonsalient but previously reward-associated distractors (Anderson et al., 2011b, 2013a, 2013b, in press; Anderson & Yantis, 2012) and suggests that value-driven attentional biases are sensitive to the presence of a valuable stimulus without necessarily scaling to its precise value. Prior research has repeatedly shown that search history, independent of reward feedback, cannot explain value-driven attentional capture (Anderson et al., 2011a, 2011b, 2012; Failing & Theeuwes, 2014; Qi, Zeng, Ding, & Li, 2013; Wang, Yu, & Zhou, 2013). A control experiment ruled out physical characteristics of the oriented distractors as an explanation for the attentional capture that we observed by these stimuli following reward training.

Response time was slower on distractor-absent trials in the present study than in our prior reports of value-driven attentional capture (>1000 ms vs ~650–800 ms; Anderson et al., 2011a, 2011b, 2013b, in press; Anderson & Yantis, 2012). This raises the possibility that participants engaged in serial search in the present study, and that the observed slowing of RT reflects disengagement costs occurring post-selection (Posner, Walker, Friedrich, & Rafal, 1984). However, we think it is unlikely that participants employed a serial search strategy in this task. The target was a feature singleton and should attract attention without the need for serial search (e.g., Theeuwes, 1992, 2010). In addition, serial search was discouraged by the fact that the target was defined by its singleton status, which can only be determined relative to other stimuli. One important difference between the present study and these prior studies that could account for slower RTs is that the feature-to-response mapping in the present study was 3-to-1 (warm vs cool colors) whereas in the prior studies it was always 1-to-1. This change was implemented to increase the heterogeneity of the stimuli used in the present study and thus minimize any physical salience attributable to near-vertical and near-horizontal orientations. Such an increase in the decision-making burden of the task might have slowed overall RT.

No relationship was observed between attentional capture by formerly rewarded orientations and VWM capacity for colors. This contrasts with prior studies demonstrating a reliable negative correlation between VWM capacity and value-driven attentional capture by color distractors (Anderson et al., 2011b, 2013a; Anderson & Yantis, 2012), which was interpreted to reflect a shared reliance on the ability to exert control over information processing. Although the reasons for this discrepancy are unclear, one possibility is that the relationship between VWM capacity and attentional capture is specific to the ability to exert control over information processing within a particular feature dimension (see, e.g., Alvarez & Cavanagh, 2008; Becker, Miller, & Liu, 2013). That is, the capacity of VWM for colors may be a better predictor of the ability to ignore color distractors than of the ability to ignore distractors defined in other feature dimensions, such as orientation. It is perhaps noteworthy that other previous studies relating VWM capacity to attentional capture (in this case, contingent attentional capture) have also been entirely in the domain of color (Fukuda & Vogel, 2009, 2011).

An interesting question concerns the nature of the learning mechanism underlying the observed value-driven attentional capture. One possibility is that the mechanism is similar to that involved in eliciting rewarded actions (i.e., instrumental conditioning), but for a non-motor “action”—a shift of covert visual attention. Covert visual attention shifts may be thought of as particularly “low-cost” actions (both in terms of metabolic and response time cost) that are likely to lead to future rewards if informed by prior reward history (see Laurent, 2008). In this sense, they serve a function that is similar to their motoric counterparts. Another possibility is that when stimuli are learned to serve as a predictive cue for reward, they acquire the incentive properties of the reward itself through classical/Pavlovian conditioning (Berridge & Robinson, 1998) and thereby gain a higher priority for selection. It has been suggested that both classical and instrumental conditioning can influence attention, depending on how rewards are perceived (Chelazzi, Perlato, Santandrea, & Della Libera, 2013); either or both of these mechanisms could contribute to value-driven attentional capture.

Our findings demonstrate two basic principles of value-based attentional priority. First, value-based attentional priority can be assigned to different features of a visual stimulus, suggesting a broad influence of reward history on attention that spans multiple stages of visual processing. Second, value-based attentional priority can robustly compete with goal-directed attentional selection even when the reward-associated feature is not represented earlier in the visual processing stream, indicating that value-driven attentional capture does not depend on the reward-associated feature having a privileged status. The present study furthers our understanding of the scope of reward’s influence on attention, which has broad implications for theories linking reward to the attention system (e.g., Anderson et al., 2011a, 2011b; Della Libera & Chelazzi, 2006, 2009; Hickey, Chelazzi, & Theeuwes, 2010; Raymond & O’Brien, 2009).

Acknowledgments

We are grateful to Emma Wampler for technical assistance. This work was funded by NIH grant R01-DA013165 to Steven Yantis and fellowship F31-DA033754 to Brian A. Anderson.

Footnotes

Author Contributions

P.A.L., B.A.A., and S.Y. conceived the experiment. All authors contributed to the design of the experiment. P.A.L. and M.G.H. collected the data. P.A.L. and B.A.A. analyzed the data. All authors contributed to the writing and editing of the manuscript.

References

- Alvarez GA, Cavanagh P. Visual short-term memory operates more efficiently on boundary features than on surface features. Perception and Psychophysics. 2008;70:346–364. doi: 10.3758/pp.70.2.346. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Anderson BA. A value-driven mechanism of attentional selection. Journal of Vision. 2013;13(3):7, 1–16. doi: 10.1167/13.3.7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Anderson BA, Faulkner ML, Rilee JJ, Yantis S, Marvel CL. Attentional bias for non-drug reward is magnified in addiction. Experimental and Clinical Psychopharmacology. 2013a;21:499–506. doi: 10.1037/a0034575. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Anderson BA, Laurent PA, Yantis S. Learned value magnifies salience-based attentional capture. PLoS ONE. 2011a;6:e27926. doi: 10.1371/journal.pone.0027926. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Anderson BA, Laurent PA, Yantis S. Value-driven attentional capture. Proceedings of the National Academy of Sciences USA. 2011b;108:10367–10371. doi: 10.1073/pnas.1104047108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Anderson BA, Laurent PA, Yantis S. Generalization of value-based attentional priority. Visual Cognition. 2012;20:647–658. doi: 10.1080/13506285.2012.679711. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Anderson BA, Laurent PA, Yantis S. Reward predictions bias attentional selection. Frontiers in Human Neuroscience. 2013b;7:262. doi: 10.3389/fnhum.2013.00262. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Anderson BA, Leal SL, Hall MG, Yassa MA, Yantis S. The attribution of value-based attentional priority in individuals with depressive symptoms. Cognitive, Affective, and Behavioral Neuroscience. doi: 10.3758/s13415-014-0301-z. in press. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Anderson BA, Yantis S. Value-driven attentional and oculomotor capture during goal-directed, unconstrained viewing. Attention, Perception, and Psychophysics. 2012;74:1644–1653. doi: 10.3758/s13414-012-0348-2.

- Anderson BA, Yantis S. Persistence of value-driven attentional capture. Journal of Experimental Psychology: Human Perception and Performance. 2013;39:6–9. doi: 10.1037/a0030860.

- Becker MW, Miller JR, Liu T. A severe capacity limit in the consolidation of orientation information into visual short-term memory. Attention, Perception, and Psychophysics. 2013;75:415–425. doi: 10.3758/s13414-012-0410-0.

- Berridge KC, Robinson TE. What is the role of dopamine in reward: Hedonic impact, reward learning, or incentive salience? Brain Research Reviews. 1998;28:309–369. doi: 10.1016/s0165-0173(98)00019-8.

- Brainard DH. The psychophysics toolbox. Spatial Vision. 1997;10:433–436.

- Breitmeyer BG. Simple reaction time as a measure of the temporal properties of sustained and transient channels. Vision Research. 1975;15:1411–1412. doi: 10.1016/0042-6989(75)90200-x.

- Chelazzi L, Perlato A, Santandrea E, Della Libera C. Rewards teach visual selective attention. Vision Research. 2013;85:58–72. doi: 10.1016/j.visres.2012.12.005.

- Della Libera C, Chelazzi L. Visual selective attention and the effects of monetary reward. Psychological Science. 2006;17:222–227. doi: 10.1111/j.1467-9280.2006.01689.x.

- Della Libera C, Chelazzi L. Learning to attend and to ignore is a matter of gains and losses. Psychological Science. 2009;20:778–784. doi: 10.1111/j.1467-9280.2009.02360.x.

- Failing MF, Theeuwes J. Exogenous visual orienting by reward. Journal of Vision. 2014;14(5):6, 1–9. doi: 10.1167/14.5.6.

- Folk CL, Remington R. Selectivity in distraction by irrelevant featural singletons: Evidence for two forms of attentional capture. Journal of Experimental Psychology: Human Perception and Performance. 1998;24:847–858. doi: 10.1037//0096-1523.24.3.847.

- Folk CL, Remington RW, Johnston JC. Involuntary covert orienting is contingent on attentional control settings. Journal of Experimental Psychology: Human Perception and Performance. 1992;18:1030–1044.

- Fukuda K, Vogel EK. Human variation in overriding attentional capture. Journal of Neuroscience. 2009;29:8726–8733. doi: 10.1523/JNEUROSCI.2145-09.2009.

- Fukuda K, Vogel EK. Individual differences in recovery time from attentional capture. Psychological Science. 2011;22:361–368. doi: 10.1177/0956797611398493.

- Hickey C, Chelazzi L, Theeuwes J. Reward changes salience in human vision via the anterior cingulate. Journal of Neuroscience. 2010;30:11096–11103. doi: 10.1523/JNEUROSCI.1026-10.2010.

- Johnson EN, Hawken MJ, Shapley R. The orientation selectivity of color-responsive neurons in macaque V1. Journal of Neuroscience. 2008;28:8096–8106. doi: 10.1523/JNEUROSCI.1404-08.2008.

- Laurent PA. The emergence of saliency and novelty responses from reinforcement learning principles. Neural Networks. 2008;21:1493–1499. doi: 10.1016/j.neunet.2008.09.004.

- Lupp U, Hauske G, Wolf W. Perceptual latencies to sinusoidal gratings. Vision Research. 1976;16:969–972. doi: 10.1016/0042-6989(76)90228-5.

- Mazer JA, Vinje WE, McDermott J, Schiller PH, Gallant JL. Spatial frequency and orientation tuning dynamics in area V1. Proceedings of the National Academy of Sciences USA. 2002;99:1645–1650. doi: 10.1073/pnas.022638499.

- Meigen T, Lagreze WD, Bach M. Asymmetries in preattentive line detection. Vision Research. 1994;34:3103–3109. doi: 10.1016/0042-6989(94)90076-0.

- Pasupathy A, Connor CE. Population coding of shape in area V4. Nature Neuroscience. 2002;5:1332–1338. doi: 10.1038/nn972.

- Posner MI. Orienting of attention. Quarterly Journal of Experimental Psychology. 1980;32:3–25. doi: 10.1080/00335558008248231.

- Posner MI, Walker JA, Friedrich FJ, Rafal RD. Effects of parietal injury on covert orienting of attention. Journal of Neuroscience. 1984;4:1863–1874. doi: 10.1523/JNEUROSCI.04-07-01863.1984.

- Qi S, Zeng Q, Ding C, Li H. Neural correlates of reward-driven attentional capture in visual search. Brain Research. 2013;1532:32–43. doi: 10.1016/j.brainres.2013.07.044.

- Raymond JE, O’Brien JL. Selective visual attention and motivation: The consequences of value learning in an attentional blink task. Psychological Science. 2009;20:981–988. doi: 10.1111/j.1467-9280.2009.02391.x.

- Theeuwes J. Perceptual selectivity for color and form. Perception and Psychophysics. 1992;51:599–606. doi: 10.3758/bf03211656.

- Theeuwes J. Top-down and bottom-up control of visual selection. Acta Psychologica. 2010;135:77–99. doi: 10.1016/j.actpsy.2010.02.006.

- Theeuwes J, Belopolsky AV. Reward grabs the eye: Oculomotor capture by rewarding stimuli. Vision Research. 2012;74:80–85. doi: 10.1016/j.visres.2012.07.024.

- Vassilev A, Mitov D. Perception time and spatial frequency. Vision Research. 1976;16:89–92. doi: 10.1016/0042-6989(76)90081-x.

- Victor JD, Purpura K, Katz E, Mao B. Population encoding of spatial frequency, orientation, and color in macaque V1. Journal of Neurophysiology. 1994;72:2151–2166. doi: 10.1152/jn.1994.72.5.2151.

- Vogel EK, McCollough AW, Machizawa MG. Neural measures reveal individual differences in controlling access to working memory. Nature. 2005;438:500–503. doi: 10.1038/nature04171.

- Wang L, Yu H, Zhou X. Interaction between value and perceptual salience in value-driven attentional capture. Journal of Vision. 2013;13(3):5, 1–13. doi: 10.1167/13.3.5.

- Yantis S, Johnston JC. On the locus of visual selection: Evidence from focused attention tasks. Journal of Experimental Psychology: Human Perception and Performance. 1990;16:135–149. doi: 10.1037//0096-1523.16.1.135.

- Yantis S, Jonides J. Abrupt visual onsets and selective attention: Evidence from visual search. Journal of Experimental Psychology: Human Perception and Performance. 1984;10:601–621. doi: 10.1037//0096-1523.10.5.601.