Abstract

Facial expressions frequently involve multiple individual facial actions. How do facial actions combine to create emotionally meaningful expressions? Infants produce positive and negative facial expressions at a range of intensities. It may be that a given facial action can index the intensity of both positive (smiles) and negative (cry-face) expressions. Objective, automated measurements of facial action intensity were paired with continuous ratings of emotional valence to investigate this possibility. Degree of eye constriction (the Duchenne marker) and mouth opening were each uniquely associated with smile intensity and, independently, with cry-face intensity. Additionally, degree of eye constriction and mouth opening were each unique predictors of emotion valence ratings. Eye constriction and mouth opening index the intensity of both positive and negative infant facial expressions, suggesting parsimony in the early communication of emotion.

Keywords: Emotional Expression, Facial Action, Infancy, Valence, Intensity, Joy, Distress

What is the logic by which facial actions are combined to communicate emotional meaning? Infant facial expressions communicate a range of negative and positive emotion from subtle displeasure to distress and from mild amusement to extreme joy (Camras, 1992; Messinger, Fogel, & Dickson, 2001). Infants have historically provided a basis for understanding the origins of facial expression meaning (Camras, 1992; Darwin, 1877; Izard, 1997). We propose that a given facial action, such as the Duchenne marker, can index the emotional intensity of both positive and negative infant expressions. This proposal has roots in several theories of emotion and facial expression.

Core affect theory emphasizes the primacy of positive and negative emotional valence—a focus of the current report—but does not propose facial actions that index this dimension of emotion (Feldman-Barrett & Russell, 1998). Componential models of emotion posit that facial actions have an invariant meaning in multiple expressive configurations (Ortony & Turner, 1990; Scherer & Ellgring, 2007; Smith, 1989). These models have not, however, suggested that facial actions can index the intensity of both positive and negative affect. Discrete emotion theorists emphasize the role of eye constriction—the Duchenne marker—in indexing the positive intensity of smiles (Ekman, Davidson, & Friesen, 1990). These theorists have also noted the presence of eye constriction in negative expressions (Ekman, Friesen, & Hager, 2002; Izard, 1982), but have not spoken to the possibility that eye constriction can index the intensity of both types of expressions. We synthesize the logic of these theoretical models in our investigation of facial actions involved in prototypic infant positive and negative emotional expressions.

Smiles are the prototypical expression of positive emotion in infancy. Eye constriction—with associated raising of the cheeks—has a well-established role in indexing the joyfulness of adult (Ekman, et al., 1990) and infant (Fox & Davidson, 1988) Duchenne smiles. Recent research suggests that during infant smiles, mouth opening is associated with eye constriction, and that both are indices of positive emotion (Fogel, Hsu, Shapiro, Nelson-Goens, & Secrist, 2006; Messinger, Mahoor, Chow, & Cohn, 2009).

Infants do not reliably produce discrete negative emotion expressions in specific eliciting contexts. Instead, the cry-face—combining elements of anger and distress—is the prototypical infant expression of negative emotion (Camras, Oster, Campos, Miyake, & Bradshaw, 1992; Oster, 2003; Oster, Hegley, & Nagel, 1992). Cry-faces can involve a set of actions—including brow lowering, tight eyelid closing, and upper lip raising—not involved in smiles. Nevertheless, ratings of photographs suggest that cry-faces involving greater mouth opening and stronger eye constriction with associated cheek raising are perceived as more affectively negative than cry-faces with lower levels of mouth opening and eye constriction (Bolzani-Dinehart, et al., 2005; Messinger, 2002; Oster, 2003).

Despite recent research, little is known about the dynamics of infant smiles and cry-faces, and their association with emotional valence. Smile dynamics—continuous changes in the intensity of facial actions—and perceived emotional valence have been investigated only in a series of brief video clips (Messinger, Cassel, Acosta, Ambadar, & Cohn, 2008) and in the face-to-face interactions of two pilot infants (Messinger, et al., 2009). More strikingly, there have been no detailed investigations of the dynamics of infant negative expressions. One obstacle to such research has been a lack of efficient methods for measuring the intensity of facial actions and their perceived emotional intensity (Messinger, et al., in press). The current study addresses this difficulty using innovative measurement approaches.

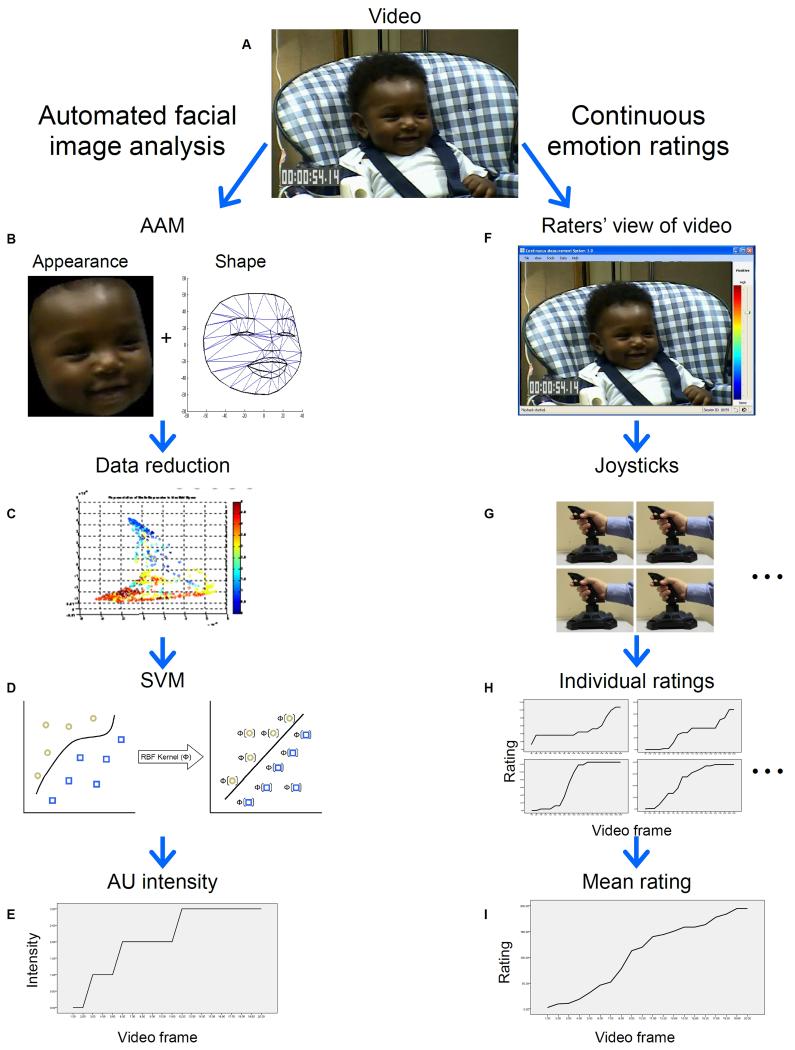

We employed objective (automated) measurements of the intensity of infant facial actions as they occurred dynamically in time. These objective measurements were complemented with continuous ratings of the perceived emotional intensity of the infants’ facial expressions (see Figure 2). This approach was used to test the hypothesis that eye constriction and mouth opening index the positive emotional intensity of smiles and the negative emotional intensity of cry-faces (see Figure 1).

Figure 2.

The measurement approach. (A) Video is recorded at 30 fps. (B) Appearance and shape features of the face are distinguished and tracked using an Active Appearance and Shape Model (AAM). (C) Nonlinear mapping is used to reduce the appearance and shape features to a set of 12 data points per video frame. (D) A separate support vector machine (SVM) classifies the occurrence and intensity of each FACS Action Unit (AU). (E) This yields information on the presence and intensity of AUs 6, 12, and 20 in each video frame. (F) Raters view video in real time. (G) Raters use joysticks to continuously rate the infant’s affective valence. (H) Individual ratings (I) are combined to produce a mean rating of affective valence for each video frame.

Figure 1.

Varying intensities of smiles and cry-faces with co-occurring eye constriction and mouth opening.

Method

Infants and procedure

Twelve six-month-olds and their parents (11 mothers, 1 father) were video-recorded in the Face-to-Face/Still-Face (FFSF) procedure (Adamson & Frick, 2003). The FFSF was used to elicit a range of negative and positive infant emotional expressions. It involved three minutes of naturalistic play with the parent, two minutes in which the parent became impassive and did not respond to the infant (an age-appropriate stressor), and three minutes of renewed play. The infants (M = 6.20 months, SD = 0.43) were 66.7% male and ethnically diverse (16.7% African American, 16.7% Asian American, 33.3% Hispanic American, and 33.3% European American).

Manual coding

The metric of facial measurement was intensity coding of Action Units (AUs) of the anatomically based Facial Action Coding System (FACS) (Ekman, et al., 2002). AUs were coded by FACS-certified coders trained in BabyFACS (Oster, 2003). Smiles were indexed by the action of zygomaticus major (AU12), cry-faces by the action of risorius (AU20), and eye constriction by the action of orbicularis oculi, pars orbitalis (AU6). These AUs were coded as 0 (absent), 1, 2, 3, 4, or 5, corresponding to FACS intensities A to E (trace to maximal; Ekman, et al., 2002). Mouth opening was indexed using a combination of the actions of depressor labii (AU25), masseter (AU26), and the pterygoids (AU27). This produced a scale running from 0 (mouth closed) to 1 (lips parted), which then captured the cumulative intensity of jaw dropping (2–6, AU26) and mouth stretching (7–11, AU27) based on the FACS A to E intensity metric (Messinger, et al., 2009). The purpose of this manual coding was to train and test the automated measurement system.
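The 0 to 11 mouth-opening scale described above can be expressed as a small scoring function. This is a minimal sketch, not the coding procedure used in the study: the names `facs_intensity` and `mouth_opening_score` are hypothetical, and the function assumes that when several mouth actions are coded in the same frame, the most advanced one (AU27 over AU26 over AU25) determines the score.

```python
# Hypothetical sketch: FACS intensity letters A-E mapped to 1-5, and a
# cumulative 0-11 mouth-opening score built from AU25, AU26, and AU27.
FACS_LETTER_TO_INT = {"A": 1, "B": 2, "C": 3, "D": 4, "E": 5}


def facs_intensity(letter):
    """Map a FACS intensity letter (or None when the AU is absent) to 0-5."""
    if letter is None:
        return 0
    return FACS_LETTER_TO_INT[letter.upper()]


def mouth_opening_score(au25, au26, au27):
    """Combine lip parting (AU25, bool) with jaw drop (AU26) and mouth
    stretch (AU27) intensities (each 0-5) into a single 0-11 scale.

    Assumption: the most advanced action present determines the score,
    so AU27 outranks AU26, which outranks AU25.
    """
    for name, value in (("AU26", au26), ("AU27", au27)):
        if not 0 <= value <= 5:
            raise ValueError(f"{name} intensity must be 0-5, got {value}")
    if au27 > 0:
        return 6 + au27   # mouth stretch occupies 7-11
    if au26 > 0:
        return 1 + au26   # jaw drop occupies 2-6
    if au25:
        return 1          # lips parted only
    return 0              # mouth closed
```

For example, a frame coded as lips parted with a C-level jaw drop and no mouth stretch scores `mouth_opening_score(True, facs_intensity("C"), 0)`, which is 4.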

Automated face modeling

Automated measurement begins with active appearance and shape modeling (see Figure 2). Active appearance and shape models (AAMs) tracked the face over contiguous video frames and were trained on 2.75% of these frames. AAMs separately model shape and appearance features of the face (Baker, Matthews, & Schneider, 2004). Shape features of the face were represented as 66 (x,y) coordinates joined in a triangulated mesh. This constituted a shape model, which was normalized to control for rigid head motion. Appearance was represented as the grayscale values (from white to black) of each pixel in the normalized shape model. The large number of shape and appearance features was subjected to nonlinear data reduction to produce a set of 29 variables per video frame that were used in facial action measurement (Belkin & Niyogi, 2003).
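The nonlinear reduction step cites Belkin and Niyogi's Laplacian eigenmaps. The sketch below applies that technique to a generic frames-by-features matrix, assuming a symmetric k-nearest-neighbour graph with heat-kernel weights. The neighbourhood size, kernel width, and function name are placeholders, not the settings used in the study.

```python
import numpy as np
from scipy.linalg import eigh
from scipy.spatial.distance import cdist


def laplacian_eigenmaps(X, n_components=2, n_neighbors=10, sigma=1.0):
    """Embed the rows of X (frames x features) in n_components dimensions.

    Sketch of Laplacian eigenmaps: build a k-nearest-neighbour graph,
    weight edges with a heat kernel, and keep the eigenvectors with the
    smallest nonzero eigenvalues of L y = lambda D y.
    """
    n = X.shape[0]
    sq_dists = cdist(X, X, "sqeuclidean")
    # Column 0 of each sorted row is the point itself (distance 0), so skip it.
    nn = np.argsort(sq_dists, axis=1)[:, 1:n_neighbors + 1]
    mask = np.zeros((n, n), dtype=bool)
    mask[np.repeat(np.arange(n), n_neighbors), nn.ravel()] = True
    mask |= mask.T  # symmetrize: i~j if either is among the other's neighbours
    # Heat-kernel edge weights on graph edges, zero elsewhere.
    W = np.where(mask, np.exp(-sq_dists / (2.0 * sigma ** 2)), 0.0)
    D = np.diag(W.sum(axis=1))
    L = D - W  # unnormalized graph Laplacian
    # Generalized symmetric eigenproblem; eigenvalues come back ascending.
    _, vecs = eigh(L, D)
    # Drop the trivial constant eigenvector associated with eigenvalue 0.
    return vecs[:, 1:n_components + 1]
```

Applied to data like those described here, `n_components` would be set to the number of reduced variables per frame. The dense graph is kept for readability; long videos would call for a sparse graph and a sparse eigensolver.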

Automated facial action overview

Separate support vector machine (SVM) classifiers were used to measure smiles (AU12), cry-faces (AU20), and eye constriction (AU6) (Mahoor, Messinger, Cadavid, & Cohn, 2009). For each video frame, the designated SVM classifier indicated whether the AU in question was present and, if present, its intensity level. To make this assignment, a one-against-one classification strategy was used, in which each intensity level was pitted against each of the others (Chang & Lin, 2001; Mahoor, et al., 2009). We constrained the SVM classifiers to use only features from those areas of the face anatomically relevant to the AU being measured (Ekman, et al., 2002; Oster, 2003).¹
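A one-against-one multiclass SVM restricted to a subset of features might look like the following scikit-learn sketch. scikit-learn's `SVC` is built on LIBSVM (Chang & Lin, 2001) and trains one binary classifier per pair of classes. The RBF kernel, default hyperparameters, helper names, and the column indices in `EYE_REGION_FEATURES` are assumptions for illustration, not the study's settings.

```python
import numpy as np
from sklearn.svm import SVC

# Hypothetical column indices standing in for features drawn from the
# eye/cheek region, i.e. the anatomical restriction for an AU6 classifier.
EYE_REGION_FEATURES = [0, 1, 2, 3, 4]


def train_intensity_classifier(X, y, feature_idx):
    """Fit a multiclass SVM on selected feature columns.

    y holds intensity labels from 0 (absent) to 5. SVC trains one binary
    SVM per pair of classes (one-against-one); decision_function_shape="ovo"
    exposes those pairwise scores instead of aggregating them.
    """
    clf = SVC(kernel="rbf", decision_function_shape="ovo")
    clf.fit(X[:, feature_idx], y)
    return clf


def predict_intensity(clf, X, feature_idx):
    """Predict an intensity label (0-5) for each frame (row of X)."""
    return clf.predict(X[:, feature_idx])
```

With six intensity classes, a one-against-one scheme trains 6 × 5 / 2 = 15 pairwise classifiers, and each frame receives the class that wins the most pairwise votes.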

Automated facial action measurement

The SVM classifiers were trained on manual FACS coding using a leave-one-out cross-validation procedure. Models were trained on data from 11 of the infants in the sample; measurements were then produced and reliability ascertained on the remaining infant. This was done sequentially for all infants in the sample. Mouth opening was measured directly as the mean vertical distance between three pairs of points on the upper and lower lips using the shape features of the AAM (Messinger, et al., 2009). Intraclass correlations indicated high inter-system concordance (reliability) between automated measurements and manual coding of smiles (.83), cry-faces (.87), eye constriction (.82), and mouth opening (.83). The automated measurements of facial actions were used in all data analyses.
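The cross-validation, reliability, and mouth-opening steps in this paragraph can be sketched as follows. Assumptions: infants are identified by an integer NumPy array `infant_ids`; reliability is computed as ICC(3,1) (two-way mixed, consistency, single measures), since the ICC form is not stated here; and the upper/lower lip landmark pairs passed to `mouth_opening_distance` are hypothetical indices into the 66-point shape model. All function names are illustrative.

```python
import numpy as np
from sklearn.model_selection import LeaveOneGroupOut


def leave_one_infant_out(X, y, infant_ids):
    """Yield (held_out_infant, train_idx, test_idx), one fold per infant."""
    for train_idx, test_idx in LeaveOneGroupOut().split(X, y, groups=infant_ids):
        yield infant_ids[test_idx[0]], train_idx, test_idx


def icc_3_1(a, b):
    """ICC(3,1) between two paired series (e.g., automated vs. manual)."""
    Y = np.column_stack([a, b]).astype(float)
    n, k = Y.shape
    grand = Y.mean()
    ss_rows = k * ((Y.mean(axis=1) - grand) ** 2).sum()  # between frames
    ss_cols = n * ((Y.mean(axis=0) - grand) ** 2).sum()  # between systems
    ss_err = ((Y - grand) ** 2).sum() - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err)


def mouth_opening_distance(landmarks, lip_pairs):
    """Mean vertical distance between paired upper- and lower-lip points.

    landmarks: (66, 2) array of normalized (x, y) shape points.
    lip_pairs: hypothetical (upper_index, lower_index) pairs.
    """
    upper = landmarks[[u for u, _ in lip_pairs], 1]
    lower = landmarks[[lo for _, lo in lip_pairs], 1]
    return float(np.mean(np.abs(lower - upper)))
```

Because ICC(3,1) measures consistency, a constant offset between automated and manual values does not lower it; an absolute-agreement form would.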

Student raters and perceived emotional valence

The continuous ratings of naive observers were used to measure the intensity of perceived emotional valence (Ruef & Levenson, 2007). Separate samples of 42 and 36 undergraduates rated positive (“joy, happiness, and pleasure”) and negative emotional intensity (“anger, sadness, and distress”), respectively. The raters had a mean age of 19.6 years and were 52.6% female; they were African American (6.4%), Asian (2.6%), Hispanic (32.1%), White (51.3%), and bi-racial/other (7.7%). Using a joystick, they continuously rated emotional intensity on a color scale while viewing video of each infant in real time (Messinger, et al., 2009). The positive and negative emotion rating scales ranged from none (−500) to high (+500). Mean positive emotion ratings and mean negative emotion ratings were calculated over raters for each frame of video. Mean ratings of positive and negative emotion were highly associated (mean r = −.87), motivating the creation of a combined measure of perceived emotional valence (the absolute value of the mean of the positive ratings and sign-reversed negative ratings). Cross-correlations of the valence ratings and automated measurements indicated an average rating lag of about 1 second (see Messinger et al., 2009), which we corrected for in statistical analyses.
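The combined valence measure and the lag correction can be written in a few lines of NumPy. This sketch assumes that ratings are already averaged over raters per frame and that the lag is chosen as the shift (ratings trailing video) that maximizes the Pearson correlation with an automated measurement. The function names are hypothetical. At 30 frames per second, a lag of about 1 second corresponds to about 30 frames.

```python
import numpy as np


def combined_valence(pos_mean, neg_mean):
    """Absolute value of the mean of positive and sign-reversed negative
    ratings, per frame (0 = neutral, 500 = maximal on a -500..+500 scale)."""
    pos_mean = np.asarray(pos_mean, dtype=float)
    neg_mean = np.asarray(neg_mean, dtype=float)
    return np.abs((pos_mean + (-neg_mean)) / 2.0)


def estimate_lag(signal, ratings, max_lag):
    """Return the lag in frames (0..max_lag), with ratings trailing the
    signal, at which the two series are most strongly correlated."""
    signal = np.asarray(signal, dtype=float)
    ratings = np.asarray(ratings, dtype=float)
    best_lag, best_r = 0, -np.inf
    for lag in range(max_lag + 1):
        r = np.corrcoef(signal[:len(signal) - lag], ratings[lag:])[0, 1]
        if r > best_r:
            best_lag, best_r = lag, r
    return best_lag


def align(signal, ratings, lag):
    """Shift ratings back by `lag` frames so element i of each series
    refers to the same video frame."""
    return signal[:len(signal) - lag], ratings[lag:]
```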

Results

Overview

We used correlations to examine the association of eye constriction and mouth opening with smiles and with cry-faces. There were instances in which neither smiles nor cry-faces occurred (both AUs had zero values). These instances were randomly divided between the smile and cry-face data sets to maintain the independence of correlations involving smiles and correlations involving cry-faces. Next we used regression analyses to determine the role of eye constriction and mouth opening in predicting ratings of emotional valence. To ascertain the predictive role of eye constriction and mouth opening—beyond that of smiles and cry-faces—we calculated a variable that combined the intensity measurements of smiles and cry-faces. This combined cry-face/smile variable—the absolute value of the difference between the intensity of smiles and cry-faces—ranged from 0 (neutral) to 5 (most intense smile or cry-face). In all analyses, we computed correlations, partial correlations, and regression coefficients within infants and used t-tests of the mean parameters to determine significance (see Figure 3).
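The within-infant strategy described in this overview (random assignment of neutral frames, per-infant partial correlations, then a one-sample t-test across infants) is sketched below with NumPy and SciPy. Assumptions: partial correlations are computed by correlating OLS residuals, a frame is never coded as both a smile and a cry-face, and all function names are hypothetical.

```python
import numpy as np
from scipy import stats


def split_neutral_frames(smile, cry, rng):
    """Boolean masks for the smile and cry-face data sets.

    Frames with any smile go to the smile set and frames with any cry-face
    go to the cry-face set; frames where both are zero are assigned to one
    set at random. Assumes smiles and cry-faces never co-occur in a frame.
    """
    smile, cry = np.asarray(smile), np.asarray(cry)
    neutral = (smile == 0) & (cry == 0)
    coin = rng.random(len(smile)) < 0.5
    return (smile > 0) | (neutral & coin), (cry > 0) | (neutral & ~coin)


def partial_corr(x, y, z):
    """Correlation of x and y after regressing each on z (with intercept)."""
    x, y, z = (np.asarray(v, dtype=float) for v in (x, y, z))
    Z = np.column_stack([np.ones(len(z)), z])
    rx = x - Z @ np.linalg.lstsq(Z, x, rcond=None)[0]
    ry = y - Z @ np.linalg.lstsq(Z, y, rcond=None)[0]
    return np.corrcoef(rx, ry)[0, 1]


def mean_and_ttest(per_infant_values):
    """Mean of per-infant statistics and a two-tailed one-sample t-test
    of those statistics against zero."""
    res = stats.ttest_1samp(per_infant_values, popmean=0.0)
    return float(np.mean(per_infant_values)), res.statistic, res.pvalue
```

In use, `partial_corr` would be run once per infant (for example, smile intensity with eye constriction, controlling for mouth opening), and the resulting coefficients passed to `mean_and_ttest`.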

Figure 3.

(A) Overall (r) and partial correlations (rp) between the intensity of smiles, eye constriction, and mouth opening; and between the intensity of cry-faces, eye constriction, and mouth opening. Frames of video in which neither smiles nor cry-faces occurred (zero values) were randomly divided between the smile and cry-face correlation sets to maintain independence. (B) R², r, and rp from regressing affective valence ratings on the intensity of smile/cry-faces, eye constriction, and mouth opening. All statistics represent mean values across infants. The p values reflect two-tailed, one-sample t-tests of those values: * p < .05, ** p < .01, *** p < .001, **** p < .0001.

Smiles

Eye constriction intensity and degree of mouth opening were independently associated with smile intensity. Associations between these facial actions were strong. Mean correlations of smile intensity with eye constriction and mouth opening were .55 and .43, respectively (see Figure 3A).

Cry-faces

In separate analyses, eye constriction and mouth opening were independently associated with cry-face intensity. Associations between these facial actions were moderate to strong. Mean correlations of cry-face intensity with eye constriction and mouth opening were .48 and .29, respectively (see Figure 3A). The analyses of smiles and of cry-faces indicated that eye constriction and mouth opening each exhibited unique associations with these facial indices of positive and negative emotion.

Predicting emotional valence

The combined cry-face/smile variable, eye constriction, and mouth opening each uniquely predicted continuous ratings of emotional valence. Effects were moderate to very strong, with a mean adjusted R² of .41. These regression analyses indicate that eye constriction and mouth opening were independent predictors of positive and negative emotional valence (see Figure 3B).
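The regression pairs the combined cry-face/smile variable (the absolute difference between smile and cry-face intensity) with eye constriction and mouth opening as predictors of rated valence. A minimal per-infant OLS sketch with adjusted R², averaged across infants, is below. It assumes the predictors are entered simultaneously with an intercept; the helper names are hypothetical.

```python
import numpy as np


def smile_cry_intensity(smile, cry):
    """Combined variable: |smile - cry-face| intensity, 0 (neutral) to 5."""
    return np.abs(np.asarray(smile) - np.asarray(cry))


def adjusted_r2(y, X):
    """Adjusted R^2 from an OLS fit of y on the columns of X plus an intercept."""
    y = np.asarray(y, dtype=float)
    Xd = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(Xd, y, rcond=None)
    resid = y - Xd @ beta
    r2 = 1.0 - (resid @ resid) / ((y - y.mean()) ** 2).sum()
    n, p = Xd.shape[0], Xd.shape[1] - 1  # p counts predictors, not the intercept
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)


def mean_adjusted_r2(per_infant):
    """per_infant: iterable of (valence, predictors) pairs, one per infant."""
    return float(np.mean([adjusted_r2(y, X) for y, X in per_infant]))
```

For a single infant, `X` would stack `smile_cry_intensity(smile, cry)`, eye constriction, and mouth opening as three columns, with the lag-corrected valence ratings as `y`.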

Discussion

Since Darwin, researchers have attempted to understand how individual facial actions are combined to communicate emotional meaning (Camras, 1992; Darwin, 1877). The dearth of precise measurements of facial expressions in naturalistic conditions has made this task difficult. We addressed this problem by combining objective measurements of infant facial actions with continuous ratings of their emotional valence. We found that eye constriction and mouth opening index the positive emotional intensity of smiles and the negative emotional intensity of cry-faces. Below, we discuss this finding with respect to previous results and then explore its theoretical implications.

One strand of research categorically distinguishes between smiles that do and do not involve eye constriction in adults (Ekman, et al., 1990) and infants (Fox & Davidson, 1988). Smiles involving eye constriction (Duchenne smiles) are thought to uniquely index joy (Duchenne, 1990/1862; Ekman, 1994; Ekman, et al., 1990). The current results do not support this categorical distinction. Instead, objective measurements of intensity suggest that eye constriction, together with mouth opening, rises and falls with the strength of smiling. Together these actions predicted the intensity of positive emotion in dynamically occurring expressions. These results extend previous work using more limited samples and measurement approaches (Fogel, et al., 2006; Messinger & Fogel, 2007; Messinger, et al., 2009; Oster, 2003). They suggest that the intensities of multiple actions involved in early smiling index continuous changes in positive emotion.

Paralleling the smile results, degree of eye constriction and mouth opening were covarying indices of the intensity of negative emotion during infant cry-faces. These results stem from objective measurement and continuous ratings of naturalistic facial expressions. They extend previous findings involving ratings of static expressions (Bolzani-Dinehart, et al., 2005; Messinger, 2002; Oster, 2003). The results indicate that the intensity of lateral lip stretching, eye constriction, and mouth opening are linked indices of negative affect.

The same facial actions, eye constriction and mouth opening, were associated with both the intensity of infant positive emotion and the intensity of infant negative emotion. Moreover, the intensities of eye constriction and mouth opening were associated with the intensities of smiles and cry-faces. This suggests that a primary function of eye constriction and mouth opening was to accentuate the emotional intensity of these expressions. We discuss the implications of this finding for discrete emotion theory and componential emotion theory below.

Discrete emotion theorists emphasize the role of eye constriction—the Duchenne marker—in indexing the positive intensity of smiles (Ekman, et al., 1990). In coding manuals and guides, theorists (Ekman, et al., 2002; Izard, 1982) have noted the presence of variants of eye constriction in negative as well as in positive expressions. Nevertheless, to the degree that one emphasizes the discrete character of positive and negative emotion expressions, the current pattern of results is not easily explained (Ekman, 1992; Izard, 1997). In a rigidly discrete account, affect programs responsible for producing smiles and cry-faces are distinct and separate. Similarities between the expressions—including the common role of eye constriction and mouth opening—would be coincidental.

Componential emotion theories do suggest potential commonalities between facial expressions (Ortony & Turner, 1990; Scherer & Ellgring, 2007; Smith, 1989). The current results may reflect links between facial actions and specific appraisals. Mouth opening, for example, could conceivably index a surprise component in both smiles and cry-faces. Eye constriction might reflect protective wincing, perhaps a reaction to the intensity of the interactive conditions involved in both smiles and cry-faces (Fridlund, 1994). We speculate that eye constriction communicates a focus on internal state in both positive and negative emotional contexts. Mouth opening, we believe, communicates an aroused, excited state, be it positive or negative; in some situations, mouth opening could also enable affective vocalizing. It should be acknowledged, however, that the results do not favor any one of these interpretations over another. They simply indicate that certain facial actions have a general function of indexing and communicating both positive and negative affective intensity. This possibility is consonant with, and appears to extend, current formulations of componential emotion theory.

Infant smiles and cry-faces differ in many respects. Each, in fact, appears to be perceived as part of an emotional gestalt that dictates the perceived meaning of associated eye constriction and mouth opening (see Figure 1). In both smiles and cry-faces, however, eye constriction and mouth opening can index emotional intensity in a continuous but valence-independent fashion. Overall, then, human infants appear to use a parsimonious display system in which specific facial actions index the emotional intensity of both positive and negative facial expressions. Ekman has proposed the existence of families of related emotions (e.g., a family of joyful emotions) whose differences in intensity are expressed by related facial expressions (Ekman, 1993). In this integrative account, the infant smiles observed here may reflect a family of positive emotions related to joy, happiness, and pleasure; the cry-faces may reflect a family of infant negative emotions related to anger, sadness, and distress (Camras, 1992).

The current findings reflect associations between continuous ratings of emotional valence and automated measurements of facial action using the full FACS intensity metric in the well-characterized FFSF protocol. This focus on the production of infant facial expressions addresses the need for observational data on the occurrence of relatively unconstrained facial expressions in emotionally meaningful situations. There is evidence in the literature that smiling with eye constriction (Duchenne smiling), as well as open-mouth smiling, indexes intense positive emotion among older children and adults, although less information is available on negative emotion expression (Cheyne, 1976; Ekman, et al., 1990; Fogel, et al., 2006; Fox & Davidson, 1988; Gervais & Wilson, 2005; Hess, Blairy, & Kleck, 1997; Johnson, Waugh, & Fredrickson, 2010; Keltner & Bonanno, 1997; Matsumoto, 1989; Oveis, Gruber, Keltner, Stamper, & Boyce, 2009; Schneider & Uzner, 1992). Intriguingly, adult actors, like the infants observed here, use eye constriction and mouth opening in portrayals of both strong positive (joy/happiness) and strong negative (despair) emotion (Scherer & Ellgring, 2007). We do not claim, however, that either eye constriction or mouth opening indexes the intensity of all emotion expressions throughout the lifespan; counter-examples abound. Instead, findings from infancy suggest early regularities in both negative and positive expressions whose relevance to adult expression awaits further investigation.

Acknowledgments

This research was the subject of a Master’s Thesis by Whitney I. Mattson. The research was supported by grants from NIH (R01HD047417 and R01MH051435), NSF (INT-0808767 and 1052736), and Autism Speaks.

Footnotes

¹ SVM classifiers map the input (face) data into a high-dimensional feature space in which the output categories (AU intensity classes) are separated by maximum-margin boundaries (Cortes & Vapnik, 1995).

Contributor Information

Daniel S. Messinger, Departments of Psychology, Pediatrics, and Electrical and Computer Engineering, University of Miami

Whitney I. Mattson, Department of Psychology, University of Miami

Mohammad H. Mahoor, Department of Electrical and Computer Engineering, University of Denver

Jeffrey F. Cohn, Department of Psychology, University of Pittsburgh, Robotics Institute, Carnegie Mellon University

References

- Adamson LB, Frick JE. The still-face: A history of a shared experimental paradigm. Infancy. 2003;4(4):451–474.

- Baker S, Matthews I, Schneider J. Automatic construction of active appearance models as an image coding problem. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2004;26(10):1380–1384. doi: 10.1109/TPAMI.2004.77.

- Belkin M, Niyogi P. Laplacian Eigenmaps for dimensionality reduction and data representation. Neural Computation. 2003;15(6):1373–1396.

- Bolzani-Dinehart L, Messinger DS, Acosta S, Cassel T, Ambadar Z, Cohn J. Adult perceptions of positive and negative infant emotional expressions. Infancy. 2005;8(3):279–303.

- Camras LA. Expressive development and basic emotion. Cognition and Emotion. 1992;6(3/4):269–283.

- Camras LA, Oster H, Campos JJ, Miyake K, Bradshaw D. Japanese and American infants’ responses to arm restraint. Developmental Psychology. 1992;28(4):578–583.

- Chang CC, Lin CJ. LIBSVM: a library for support vector machines. 2001. Retrieved 4/7/2008 from http://www.csie.ntu.edu.tw/~cjlin/libsvm.

- Cheyne JA. Development of forms and functions of smiling in preschoolers. Child Development. 1976;47(3):820–823. doi: 10.2307/1128200.

- Cortes C, Vapnik V. Support-vector networks. Machine Learning. 1995;20(3):273–297. doi: 10.1007/bf00994018.

- Darwin C. A biographical sketch of an infant. Mind. 1877;2(7):285–294.

- Duchenne GB. The mechanism of human facial expression. Cuthbertson RA, translator. Cambridge University Press; New York: 1990/1862.

- Ekman P. An argument for basic emotions. Cognition and Emotion. 1992;6(3/4):169–200.

- Ekman P. Facial expression and emotion. American Psychologist. 1993;48(4):384–392. doi: 10.1037/0003-066x.48.4.384.

- Ekman P. All emotions are basic. In: Ekman P, Davidson RJ, editors. The nature of emotion: Fundamental questions. Oxford University Press; New York: 1994. pp. 15–19.

- Ekman P, Davidson RJ, Friesen W. The Duchenne smile: Emotional expression and brain physiology II. Journal of Personality and Social Psychology. 1990;58(2):342–353.

- Ekman P, Friesen WV, Hager JC. Facial Action Coding System Investigator’s Guide. A Human Face; Salt Lake City, UT: 2002.

- Feldman-Barrett L, Russell JA. Independence and bipolarity in the structure of current affect. Journal of Personality and Social Psychology. 1998;74(4):967–984.

- Fogel A, Hsu H-C, Shapiro AF, Nelson-Goens GC, Secrist C. Effects of normal and perturbed social play on the duration and amplitude of different types of infant smiles. Developmental Psychology. 2006;42(3):459–473. doi: 10.1037/0012-1649.42.3.459.

- Fox N, Davidson RJ. Patterns of brain electrical activity during facial signs of emotion in 10 month old infants. Developmental Psychology. 1988;24(2):230–236.

- Fridlund AJ. Human facial expression: An evolutionary view. Academic Press; New York: 1994.

- Gervais M, Wilson DS. The evolution and functions of laughter and humor: A synthetic approach. The Quarterly Review of Biology. 2005;80:395–430. doi: 10.1086/498281.

- Hess U, Blairy S, Kleck RE. The intensity of emotional facial expressions and decoding accuracy. Journal of Nonverbal Behavior. 1997;21(4):241–257. doi: 10.1023/a:1024952730333.

- Izard CE. A system for identifying affect expressions by holistic judgements (AFFEX). University of Delaware; Newark, Delaware: 1982.

- Izard CE. Emotions and facial expressions: A perspective from Differential Emotions Theory. In: Russell JA, Fernández-Dols JM, editors. The psychology of facial expression. Cambridge University Press; New York: 1997. pp. 57–77.

- Johnson KJ, Waugh CE, Fredrickson BL. Smile to see the forest: Facially expressed positive emotions broaden cognition. Cognition and Emotion. 2010;24(2):299–321. doi: 10.1080/02699930903384667.

- Keltner D, Bonanno GA. A study of laughter and dissociation: Distinct correlates of laughter and smiling during bereavement. Journal of Personality and Social Psychology. 1997;73(4):687–702. doi: 10.1037/0022-3514.73.4.687.

- Mahoor MH, Messinger DS, Cadavid S, Cohn JF. A framework for automated measurement of the intensity of non-posed facial Action Units. Paper presented at the IEEE Workshop on CVPR for Human Communicative Behavior Analysis; Miami Beach, FL. 2009.

- Matsumoto D. Face, culture, and judgments of anger and fear: Do the eyes have it? Journal of Nonverbal Behavior. 1989;13(3):171–188. doi: 10.1007/bf00987048.

- Messinger D. Positive and negative: Infant facial expressions and emotions. Current Directions in Psychological Science. 2002;11(1):1–6.

- Messinger D, Cassel T, Acosta S, Ambadar Z, Cohn J. Infant smiling dynamics and perceived positive emotion. Journal of Nonverbal Behavior. 2008;32:133–155. doi: 10.1007/s10919-008-0048-8.

- Messinger D, Fogel A. The interactive development of social smiling. In: Kail R, editor. Advances in Child Development and Behavior. Vol. 35. Elsevier; Oxford: 2007. pp. 328–366.

- Messinger D, Fogel A, Dickson K. All smiles are positive, but some smiles are more positive than others. Developmental Psychology. 2001;37(5):642–653.

- Messinger D, Mahoor M, Chow S, Cohn JF. Automated measurement of facial expression in infant-mother interaction: A pilot study. Infancy. 2009;14:285–305. doi: 10.1080/15250000902839963.

- Messinger D, Mahoor M, Chow S, Haltigan JD, Cadavid S, Cohn JF. Early emotional communication: Novel approaches to interaction. In: Gratch J, Marsella S, editors. Social emotions in nature and artifact: Emotions in human and human-computer interaction. Vol. 14. Oxford University Press; in press.

- Ortony A, Turner TJ. What’s basic about basic emotions? Psychological Review. 1990;97(3):315–331. doi: 10.1037/0033-295x.97.3.315.

- Oster H. Emotion in the infant’s face: Insights from the study of infants with facial anomalies. Annals of the New York Academy of Sciences. 2003;1000:197–204. doi: 10.1196/annals.1280.024.

- Oster H, Hegley D, Nagel L. Adult judgments and fine-grained analysis of infant facial expressions: Testing the validity of a priori coding formulas. Developmental Psychology. 1992;28(6):1115–1131.

- Oveis C, Gruber J, Keltner D, Stamper JL, Boyce WT. Smile intensity and warm touch as thin slices of child and family affective style. Emotion. 2009;9(4):544–548. doi: 10.1037/a0016300.

- Ruef A, Levenson R. Studying the time course of affective episodes using the affect rating dial. In: Coan JA, Allen JJB, editors. The Handbook of Emotion Elicitation and Assessment. 2007.

- Scherer KR, Ellgring H. Are facial expressions of emotion produced by categorical affect programs or dynamically driven by appraisal? Emotion. 2007;7(1):113–130. doi: 10.1037/1528-3542.7.1.113.

- Schneider K, Uzner L. Preschoolers’ attention and emotion in an achievement and an effect game: A longitudinal study. Cognition and Emotion. 1992;6(1):37–63.

- Smith CA. Dimensions of appraisal and physiological response in emotion. Journal of Personality and Social Psychology. 1989;56(3):339–353. doi: 10.1037/0022-3514.56.3.339.