Abstract

Dynamics and function of neuronal networks are determined by their synaptic connectivity. Current experimental methods to analyze synaptic network structure on the cellular level, however, cover only small fractions of functional neuronal circuits, typically without a simultaneous record of neuronal spiking activity. Here we present a method for the reconstruction of large recurrent neuronal networks from thousands of parallel spike train recordings. We employ maximum likelihood estimation of a generalized linear model of the spiking activity in continuous time. For this model the point process likelihood is concave, such that a global optimum of the parameters can be obtained by gradient ascent. Previous methods, including those of the same class, did not allow recurrent networks of that order of magnitude to be reconstructed due to prohibitive computational cost and numerical instabilities. We describe a minimal model that is optimized for large networks and an efficient scheme for its parallelized numerical optimization on generic computing clusters. For a simulated balanced random network of 1000 neurons, synaptic connectivity is recovered with a misclassification error rate of less than 1 % under ideal conditions. We show that the error rate remains low in a series of example cases under progressively less ideal conditions. Finally, we successfully reconstruct the connectivity of a hidden synfire chain that is embedded in a random network, which requires clustering of the network connectivity to reveal the synfire groups. Our results demonstrate how synaptic connectivity could potentially be inferred from large-scale parallel spike train recordings.

Keywords: Spike trains, Network topology, Connectome identification, Inverse problem, Synaptic connectivity, Connectivity inference, Generalized linear model, Maximum likelihood estimation, Penalized likelihood, Sparsity, Point process

Introduction

The synaptic organization of neuronal networks is key to understanding the dynamics of brain circuits, and, eventually, to link them to higher level cognitive functions. A large body of work aims to address this challenge by developing experimental techniques which enable the reconstruction of the connections between neurons on the basis of anatomical or physiological evidence. Anatomically, synaptic connections may be identified using optical imaging or electron microscopy (Briggman et al. 2011; Bock et al. 2011), while physiological approaches rely on simultaneous recordings of individual neurons and the mutual influence of the spikes of one neuron on the membrane potential of the other (Perin et al. 2011; Boucsein et al. 2011). Substantial progress has been made in recent decades to increase the size of networks accessible by experimental methods, including the new promising macroscale and mesoscale connectivity mapping techniques (Chung et al. 2013; Oh et al. 2014). However, on the microscale of individual neurons, the practical limitations of these techniques mean that reliable reconstruction is currently only possible for neural circuits of up to dozens of cells.

Alternatively, the connectivity of neuronal networks can be inferred from parallel recordings of their spiking activity. Potentially, this enables the recovery of the connections in circuits of hundreds and thousands of cells. Recent technical achievements in conducting large-scale parallel recordings of neuronal dynamics, such as multi-electrode array technology for in vivo implantation (Hatsopoulos and Donoghue 2009; Ghane-Motlagh and Sawan 2013), micro-electrode dishes for recording the in vitro activity of acute brain slices and dissociated cell cultures (Nam and Wheeler 2011; Spira and Hai 2013), and optical imaging techniques (Grewe and Helmchen 2009; Lütcke et al. 2013; Ahrens et al. 2013), make this path even more compelling.

The main difficulty in the analysis of parallel recordings, though, lies in the interpretation of the results (Gerstein and Perkel 1969; Aertsen et al. 1989). On one hand, simple reduced models of network interactions are often unable to resolve ambiguous scenarios: a classic example of such ambiguity is a group of neurons that receives common input versus a mutually connected group of cells, which cannot be distinguished using pairwise cross-correlation analysis (Stevenson et al. 2008). On the other hand, obtaining reliable fits of complex large-scale models to the data presents both a methodological and computational challenge in itself (Chen et al. 2011; Song et al. 2013). At the same time, there are often considerable difficulties in directly relating the reconstructed connectivity matrices to measurable experimental quantities or model parameters. The resulting sets of connections are then regarded as “functional” or “effective” connectivity, terms lacking strict and universally accepted definitions, and not necessarily matching real anatomical connectivity, but still hoped to provide useful insights with respect to the interaction of the network elements (Horwitz 2003).

The desire to strike the balance between explanatory power, and analytical as well as numerical tractability, has fueled an ever growing interest in methods that go beyond simple linear regression analysis, but still remain highly efficient. Previous works show that generalized linear models (GLM) (McCullagh and Nelder 1989) of network spiking activity can indeed be efficiently estimated from experimental data (Truccolo et al. 2005; Okatan et al. 2005; Pillow et al. 2008; Stevenson et al. 2009; Gerwinn et al. 2010) (dealing with recordings of up to 20, 33, 27, 75+108 and 7 neurons respectively), and make it possible to recover the actual synaptic connectivity of small neuronal circuits (N=3) (Gerhard et al. 2013). Scaling these approaches directly up to substantially larger networks of thousands of units, however, seemed not to be feasible due to the vast computational resources such a reconstruction would require.

In this work, we present a method to reconstruct the parameters of large-scale recurrent neuronal network models of N≥1000 elements, based on parameter estimation of a stochastic point process GLM using only observations of the spiking activity of the neurons. Provided with the knowledge of the probability p(X|𝜃) of a specific stochastic model yielding the observations X given the parameters 𝜃, we maximize the likelihood function L(𝜃)=p(X|𝜃) in order to identify a set of parameters 𝜃 resulting in an optimal agreement of the selected model with the observations X. This is a widespread technique known as maximum likelihood estimation (MLE) (Paninski 2004). If the underlying model is sufficiently detailed and is indeed appropriate to describe the observations, then not only can the parameters 𝜃 be related to the actual measurable features of the neuronal network that generated the data, but they also define a dynamic model of the neuronal network activity (also called a generative model). Such a model can be used to derive testable predictions, or conduct virtual experiments (simulations), which might otherwise have been impossible or impractical.

Due to the large number of parameters necessary to describe a network of N≥1000 neurons, the optimization of the likelihood L(𝜃) can only be performed efficiently for some of the possible GLMs of neuronal networks. In Section 2, we describe our optimized model, including a particular choice of nonlinearity and interaction kernels, which enables us to obtain closed forms and recurrence formulae which go beyond more general techniques previously reported in the literature. We additionally supply details about the numerical methods employed. In Section 3, we demonstrate the proposed technique on simulations of random balanced neuronal networks, and present reconstructions of the connectivity matrix consisting of 10⁶ possible synapses in sparsely connected recurrent networks of N=1000 spiking neurons. Finally, we apply our method to a structured network. We recover a synfire chain embedded in a balanced network from recordings of spiking activity, in which no activations of the synfire chain were present, and demonstrate that the inferred model of this network supports the transmission of synfire activity when stimulated.

In the present study we focus on reconstructions of networks for which all spiking activity can be recorded. Whereas in experimental settings undersampling is to be expected – and we performed a basic assessment of how it would affect our reconstructions, see Appendix C – a thorough investigation of the consequences of undersampling for the classification performance of our techniques is out of scope. Similarly, when presenting these techniques we are initially concerned with activity which we can assume to be a sample of a multi-dimensional point process with constant parameters (i.e. neuronal excitability and synaptic interactions). In Section 4 we examine these limitations and propose how they could be relaxed in future studies.

Methods

This section provides detailed information on the method of network reconstruction we employ, including original amendments and adaptations. In Section 2.1 we introduce the likelihood of our network model to reproduce a given dataset of neuronal spike trains. This likelihood is the quantity which is subject to optimization. The specific formulation of the likelihood relies on a model of the spiking activity of the neurons, which is introduced in Section 2.2. To evaluate the likelihood and its gradient under that model efficiently, recursive formulae and closed form expressions are derived in Section 2.3. The subsequent sections describe how we handle synaptic transmission delays (Section 2.4) and how, in some cases, we employ regularization of the optimization problem (Section 2.5). Finally, Section 2.6 gives further details regarding the practical aspects of our highly parallelized implementation of the method.

Point process likelihood of generalized linear models

A statistical model that describes the activity of a network of N neurons can be defined as an expression for the conditional probability $p(S|x,\theta)$ of observing an N-dimensional spike train (spike raster) $S$ for a given input signal $x$, which may include external stimulation and/or previous activity of the network itself. Given all the inputs of a neuron, we assume that its probability of spiking is independent of the other neurons (conditional independence). This allows us to factorize $p(S|x,\theta)=\prod_{i=1}^{N}p_i(S_i|x,\theta_i)$, where $p_i(S_i|x,\theta_i)$ is the probability that the i-th neuron, within the recording time $[T_0,T_1]$, produces a spike train $S_i$ conditioned on the input $x$. Therefore, in what follows we focus on the probability of a single neuron.

The activity of the individual nerve cells can be characterized by a stochastic GLM that postulates that two consecutive operations are performed by the neuron on its input. First, the dimensionality of the observable signal is reduced by means of a linear transformation $K_i$. This transformation models synaptic and dendritic filtering, input summation and leaky integration in the soma. The result is a one-dimensional quantity that is analogous to the membrane potential of a point neuron model. Second, this transformed one-dimensional signal is fed into a nonlinear probabilistic spiking mechanism, which works by sampling from an inhomogeneous Poisson process with an instantaneous rate (conditional intensity function) given by $\lambda_i(t)=f_i\big((K_i x)(t)\big)$. Here, $f_i(\cdot)$ is a function that captures the nonlinear properties of the neuron. Both the linear filter $K_i$ and the nonlinearity $f_i$ are specified by $\theta_i$, a set of parameters that describes the characteristics of the i-th neuron. The schematic of this model is shown in Fig. 1.

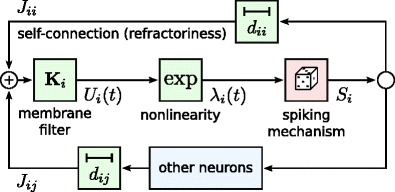

Fig. 1.

Schematic of the point process generalized linear model (PP GLM) of a recurrent spiking neuronal network. In this model, the spike trains from the neurons in the network, after incurring transmission delays $d_{ij}$, pass through a linear filtering stage $K_i$. The resulting (pseudo) membrane potential $U_i(t)$ is fed into a nonlinear link function $f_i(\cdot)$, which transforms it into the conditional intensity function $\lambda_i(t)$. The latter drives the probabilistic spiking mechanism that generates an output spike train $S_i$ for the i-th neuron. Note that this spike train is then also fed back as an input to the neuron itself via a “self-connection” in order to model its refractory, post-spike properties.

Based on these definitions, we may now introduce the natural logarithm of the likelihood L(𝜃|S) and expand it as

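$$\log L(\theta|S)=\log p(S|x,\theta)=\sum_{i=1}^{N}\log p_i(S_i|x,\theta_i)=\sum_{i=1}^{N}\log L_i(\theta_i|S), \tag{1}$$

where the observation (previously called X) is the spike raster $S$. In the last step of Eq. (1) we have introduced the single neuron log-likelihood $\log L_i(\theta_i|S)=\log p_i(S_i|x,\theta_i)$.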

Let us now compute the probability that an inhomogeneous Poisson process with intensity $\lambda_i(t)$ produces the spike train $S_i=\{t_{i,k}\}$, $1\le k\le q_i$, where $T_0\le t_{i,k}\le T_1$ and $q_i$ is the number of spikes of the i-th neuron. This probability is (Brillinger 1988)

$$p_i(S_i|x,\theta_i)=\left[\prod_{k=1}^{q_i}\exp\!\left(-\int_{t_{i,k-1}}^{t_{i,k}}\lambda_i(t)\,dt\right)\lambda_i(t_{i,k})\right]\exp\!\left(-\int_{t_{i,q_i}}^{T_1}\lambda_i(t)\,dt\right),$$

with $t_{i,0}=T_0$. Here, for each spike time $t_{i,k}$, we multiply the (survival) probability of not producing a spike in $(t_{i,k-1},t_{i,k})$ by the intensity $\lambda_i(t_{i,k})$ at $t_{i,k}$. Finally, we factor in the probability of not producing a spike in the recording time $(t_{i,q_i},T_1]$, which remains after the last spike. The function $p_i(S_i|x,\theta_i)$ is known as the point process likelihood (Snyder and Miller 1991).

Taking the logarithm yields the log-likelihood function

$$\log L_i(\theta_i|S)=\sum_{k=1}^{q_i}\log\lambda_i(t_{i,k})-\int_{T_0}^{T_1}\lambda_i(t)\,dt, \tag{2}$$

where the sum runs over all spikes $1\le k\le q_i$ of the i-th neuron. The first term of this expression rewards high intensity at times $t_{i,k}$ when the spikes of the i-th neuron have been emitted, and the second term penalizes high intensity when no spikes have been observed. Different numbers of spikes $q_i$ render the absolute values of $\log L_i$ difficult to compare among different neurons, but play no role when maximizing $\log L_i$ with respect to $\theta_i$.
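As an illustration of Eq. (2), the following minimal Python sketch evaluates the point process log-likelihood of a single spike train for an arbitrary intensity function. The function name, the midpoint-rule integration and the example spike times are assumptions made for this illustration; they are not part of the method described in this paper, which relies on closed-form expressions instead.

```python
import math

def point_process_log_likelihood(spike_times, intensity, t_start, t_end, n_steps=10_000):
    """Eq. (2): sum of log-intensities at the spikes minus the integrated intensity.

    The integral is approximated with the midpoint rule (an assumption made
    for illustration only; it is not the scheme used in the paper).
    """
    spike_term = sum(math.log(intensity(t)) for t in spike_times)
    dt = (t_end - t_start) / n_steps
    integral = dt * sum(intensity(t_start + (n + 0.5) * dt) for n in range(n_steps))
    return spike_term - integral

# Hypothetical data: three spikes in one second, scored under two constant rates.
spikes = [0.10, 0.35, 0.80]
ll_matching = point_process_log_likelihood(spikes, lambda t: 3.0, 0.0, 1.0)
ll_too_high = point_process_log_likelihood(spikes, lambda t: 30.0, 0.0, 1.0)
```

For a constant intensity the expression reduces to the spike count times the log-rate minus the rate times the duration, so the rate that matches the observed spike count scores higher than the tenfold larger one.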

Conditional intensity model for a recurrent neural network

In order to investigate the recurrent aspects of the dynamics of the system, we define the observable input signal for each neuron as the history of spikes recorded in the network up to a given point in time, including the spikes of the i-th neuron itself (which are used to model the refractory properties of the neuron). It is possible to include external inputs in this formulation; however, we have not pursued this option in the current work. Below follows a detailed discussion of the different components of the model as presented in Fig. 1.

For simplicity, we assume that the effect of each incoming spike can be modeled as an instantaneous current injection. The spike train $S_j$ of the j-th neuron as a function of time is expressed as $s_j(t)=\sum_k\delta(t-t_{j,k})$, where $t_{j,k}$ is the k-th spike of the j-th neuron. Each spike then elicits an exponential post-synaptic response in the neuron, due to the filtering properties of the membrane, $h_i(t)=\frac{1}{\tau_i}e^{-t/\tau_i}H(t)$, where $t$ is the time since spike arrival, $\tau_i$ is the membrane time constant of the neuron, and $H(x)=\{1 \text{ if } x\ge 0, \text{ else } 0\}$ is the Heaviside function, which ensures the causal relationship between the stimulation and the response. Note that while the propagation of spikes is assumed to happen instantaneously in the formulation above, the incorporation of delays will be discussed in detail later in Section 2.4.

We may now define the linear dimensionality-reducing transformation as

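$$U_i(t)=J_{i0}+\sum_{j=1}^{N}J_{ij}\,(h_i*s_j)(t), \tag{3}$$

where ∗ denotes the convolution operation,

$$(h_i*s_j)(t)=\int_{-\infty}^{\infty}h_i(t-t')\,s_j(t')\,dt'=\sum_k h_i(t-t_{j,k}).$$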

The baseline potential J i0 will be used later to set a base level of activity of the unit in the absence of inputs. Differentiation of Eq. (3) yields the first-order ordinary differential equation of the leaky integrator

$$\tau_i\frac{dU_i(t)}{dt}=-U_i(t)+J_{i0}+\sum_{j=1}^{N}J_{ij}\,s_j(t). \tag{4}$$

Hence $U_i(t)$ can be interpreted as the membrane potential of the i-th neuron, while $J$ is the synaptic connectivity matrix and each of its elements $J_{ij}$ denotes the strength (synaptic weight) of the connection from the j-th to the i-th neuron. Due to its simplicity, Eq. (4) leads to highly efficient algorithms (discussed in Sections 2.3 and 2.6) to evaluate the membrane potential and the conditional intensity function of the neurons, beyond previously reported more general parallelization techniques (Chu et al. 2006). The membrane potential and the intensity are, in turn, needed to compute the values of the likelihood function and its gradient.

In Eqs. (3) and (4), positive and negative values of J ij correspond to excitatory and inhibitory connections respectively, and zero values denote the lack of a connection between two cells. Note that as formulated, this model does not ensure compliance with Dale’s law (according to which each neuron can form synapses of only one type). However, we will show that this is an essentially negligible source of errors in the reconstructions presented below.

Further, we choose a specific type of the nonlinearity $f_i(\cdot)$, such that

$$\lambda_i(t)=f_i\big(U_i(t)\big)=\exp\!\left(\frac{U_i(t)}{\delta_u}\right). \tag{5}$$

In this expression, the scalar $\delta_u>0$ can be considered as the inverse “gain” of the nonlinearity. In the derivations that follow we will assume $\delta_u=1$ in order to simplify the expressions without loss of generality, as different gains can be accommodated by rescaling the synaptic weights $J_{ij}$ and the baseline potential $J_{i0}$ accordingly. In the absence of input spikes, $U_i=J_{i0}$, which leads to the base rate

$$c_i=f_i(J_{i0})=\exp(J_{i0}). \tag{6}$$

It is worth mentioning that the base rate can effectively constitute a “sink” for spiking activity that cannot be explained by the recurrent network dynamics, such as external stimulation that has not been included in the present model, or missing inputs from unobserved neurons due to incomplete observations of the network (undersampling).

The model as formulated above is similar to the widely used cascade LNP model (Simoncelli et al. 2004), but in addition to the activity of the other cells in the ensemble, it also incorporates the spiking history of the neuron itself through its self-connection J ii. An intuitive biological interpretation of this class of models, also known as the spike-response model with escape noise, in relation to the conventional integrate-and-fire model is given in Brillinger (1988) and Gerstner et al. (2014). Here, in contrast to the approaches taken in previous studies (Song et al. 2013; Citi et al. 2014; Ramirez and Paninski 2014), we drastically simplify both the conditional intensity model for a single neuron and the interaction kernels. This makes the numerics in our method amenable to a highly efficient implementation as discussed in Section 2.6.

Given that $f_i(\cdot)$ is both a convex and log-concave function of $U_i$, and the space of possible $\{K_i\}$ is convex, it can be shown that the log-likelihood function of such problems is concave and does not have any non-global local extrema (Paninski 2004). Thus the log-likelihood function of the model as formulated above is concave in $\theta_i$ (note, however, that $\tau_i$ is not included in $\theta_i$; the recovery of the time constants will be addressed separately). A proof of the concavity of $\log L_i$ for our specific choice of kernels and link function is given in Appendix A. Since the sum of concave functions is again concave, the full log-likelihood $\log L$ is concave as well. Consequently, there exists a unique set of parameters $\theta$ that characterize the network model that is most likely to exhibit a given recorded activity. These parameters $\theta$ can be efficiently identified via gradient ascent based nonlinear optimization methods applied to $\log L$. Moreover, due to the separability of (1), in order to recover $\theta=\{\theta_i\}$, one can maximize the individual log-likelihood functions $\log L_i$ for each recorded unit, instead of maximizing the complete log-likelihood function $\log L$.
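To make the role of concavity concrete, consider the simplest special case of the model: a neuron without any inputs, for which the intensity is constant, $\lambda_i=\exp(J_{i0})$, and Eq. (2) reduces to $\log L_i=q_iJ_{i0}-\exp(J_{i0})(T_1-T_0)$. The sketch below runs plain gradient ascent with a fixed step size on this one-parameter function. The function name, the step size and the spike count are assumptions chosen for illustration; a practical implementation would use a more sophisticated optimizer.

```python
import math

def fit_baseline(n_spikes, duration, step=0.01, n_iter=200):
    """Gradient ascent on log L(J0) = n_spikes * J0 - exp(J0) * duration.

    This is the baseline-only special case (no synaptic inputs); the fixed
    step size is an illustrative assumption, not a recommended setting.
    """
    j0 = 0.0
    for _ in range(n_iter):
        gradient = n_spikes - math.exp(j0) * duration  # d(log L)/d(J0)
        j0 += step * gradient
    return j0

# Hypothetical recording: 50 spikes in 10 s.
j0_hat = fit_baseline(n_spikes=50, duration=10.0)
base_rate_hat = math.exp(j0_hat)  # estimated base rate in spikes per second
```

Because the function is concave, the ascent converges to the single maximum, at which the estimated base rate equals the empirical rate of 5 spikes per second.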

Since the experimental techniques to obtain simultaneous recordings of thousands of units are becoming increasingly accessible, in this work we are targeting N≥1000. However, even if the number of variables is reduced from the $\mathcal{O}(N^2)$ required for the complete log-likelihood function to the $\mathcal{O}(N)$ required for the log-likelihood function of an individual neuron, this is still a high-dimensional convex optimization problem. It can only be solved in practice using gradient based methods, for which the analytical closed form expressions for the log-likelihood function and its gradient are both available, and amenable to efficient evaluation. In the following we derive these expressions for the postulated model.

Closed form expressions

Let us consider the log-likelihood for an individual neuron; recall that the variable part of Eq. (2) consists of two terms:

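$$\log L_i(\theta_i|S)=\underbrace{\sum_{k=1}^{q_i}\log\lambda_i(t_{i,k})}_{\ell_i}-\underbrace{\int_{T_0}^{T_1}\lambda_i(t)\,dt}_{\Lambda_i}. \tag{7}$$

Observe that given a closed form for $U_i(t)$, computing $\ell_i$ is a matter of a simple algebraic substitution, while the efficiency of computing $\Lambda_i$ depends on whether it is possible to find this primitive analytically.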

Recurrence formula for the membrane potential

By design, our particular choice of $K_i$ (exponential post-synaptic potential plus baseline potential) allows us to obtain the required closed form for $U_i(t)$ because it obeys the leaky integrator dynamics (4). The solution of Eq. (4) from $t_k$ to $t$ in the absence of input spikes $s_j(t)$ is $U_i(t)=J_{i0}+\big(U_i(t_k)-J_{i0}\big)e^{-(t-t_k)/\tau_i}$. This expression is valid at any time $t$ between two consecutive observed spikes $t_k,t_{k+1}\in S$, where $S=\{t_k\}$ is the (ordered) set of all recorded spikes of the network. At the borders of each of those intervals, the value of $U_i(t_{k+1})$ is increased by the contribution of the corresponding incoming spike:

$$U_i(t_{k+1})=J_{i0}+\big(U_i(t_k)-J_{i0}\big)e^{-(t_{k+1}-t_k)/\tau_i}+\frac{J_{ij}}{\tau_i}, \tag{8}$$

where the index $j$ refers to the neuron that emitted a spike at time $t_{k+1}$; if spikes from multiple neurons $j_1,j_2,j_3,\ldots$ arrive at time $t_{k+1}$, the contributions have to be added. We will refer to Eq. (8) as the key recurrence formula in the following.

The formula (8) for U i(t k+1) makes it possible to find the value of the membrane potential of the neuron at the spike time t k+1 given the previous value at time t k by computing only one exponential function. It is substantially more efficient in terms of computation than naively summing up the contributions from all spikes that happened at t<t k for each point in time t k. In particular, for kernels with infinite memory like the exponential kernels h i(t) employed here, the recurrence formula (8) is crucial to avoid an explosion of the computational costs when evaluating the log-likelihood on large datasets in continuous time.
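The difference between the two ways of evaluating the membrane potential can be sketched in a few lines of Python. The code below assumes an exponential kernel normalized by $1/\tau_i$ (so that each spike causes a jump of $J_{ij}/\tau_i$), a potential that rests at $J_{i0}$ before the first spike, and made-up spike times and weights; all function names are hypothetical.

```python
import math

def potential_naive(t, events, weights, j0, tau):
    """Direct sum over all spikes up to time t; the cost grows with the history."""
    u = j0
    for t_spike, j in events:
        if t_spike <= t:
            u += weights[j] / tau * math.exp(-(t - t_spike) / tau)
    return u

def potential_recurrence(events, weights, j0, tau):
    """Potential right after each spike, using one exponential per spike."""
    values = []
    u, t_prev = j0, None
    for t_spike, j in events:  # events must be sorted by time
        if t_prev is not None:
            # decay towards the baseline since the previous spike
            u = j0 + (u - j0) * math.exp(-(t_spike - t_prev) / tau)
        u += weights[j] / tau  # jump caused by the incoming spike
        values.append(u)
        t_prev = t_spike
    return values

# Hypothetical input: (spike time in s, index of the presynaptic neuron).
events = [(0.010, 0), (0.015, 1), (0.040, 0), (0.041, 2)]
weights = [0.02, -0.04, 0.01]
j0, tau = -1.0, 0.02

u_recurrence = potential_recurrence(events, weights, j0, tau)
u_naive = [potential_naive(t, events, weights, j0, tau) for t, _ in events]
```

Both routines return the same values up to rounding, but the recurrence needs a number of exponentials that is linear in the number of spikes, whereas the direct summation is quadratic.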

Evaluating the likelihood

Taking these considerations into account, the integral over the duration of the recording in Eq. (7) can be broken down into a sum of integrals from t k to t k+1:

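$$\Lambda_i=\int_{T_0}^{T_1}\lambda_i(t)\,dt=\sum_{k=0}^{q}\int_{t_k}^{t_{k+1}}\exp\!\Big(J_{i0}+\big(U_i(t_k)-J_{i0}\big)e^{-(t-t_k)/\tau_i}\Big)\,dt, \tag{9}$$

where $q=\sum_i q_i$ is the total number of recorded spikes, $t_k\in S$ are the spike times, and $t_0=T_0$ and $t_{q+1}=T_1$ are the start and end of the recording. The integral contained here has a known closed form, so

$$\Lambda_i=\tau_i\,e^{J_{i0}}\sum_{k=0}^{q}\Big[\mathrm{Ei}\big(U_i(t_k)-J_{i0}\big)-\mathrm{Ei}\Big(\big(U_i(t_k)-J_{i0}\big)e^{-(t_{k+1}-t_k)/\tau_i}\Big)\Big], \tag{10}$$

where $\mathrm{Ei}(x)$ is a special function (exponential integral) defined as $\mathrm{Ei}(x)=-\int_{-x}^{\infty}\frac{e^{-t}}{t}\,dt$ for real nonzero values of $x$ (understood as a Cauchy principal value for $x>0$). For a proof of the equivalence of Eqs. (9) and (10) see Appendix B; the numerical computation of this function is discussed below in Section 2.6.1. The summands of Eq. (10) are independent, and therefore the evaluation of $\Lambda_i$ lends itself to trivial parallelization.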
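The closed form of a single inter-spike integral can be checked numerically. The sketch below compares the exponential-integral expression, $\tau_i e^{J_{i0}}\,[\mathrm{Ei}(a)-\mathrm{Ei}(a\,e^{-\Delta t/\tau_i})]$ with $a=U_i(t_k)-J_{i0}$, against a midpoint-rule quadrature of the intensity. The power-series evaluation of Ei used here is an assumption for illustration only and is suitable just for moderate arguments; it is not the rational approximation discussed in Section 2.6.1. All names and parameter values are hypothetical.

```python
import math

EULER_GAMMA = 0.5772156649015329

def expi_series(x, terms=200):
    """Ei(x) = gamma + ln|x| + sum_{n>=1} x^n / (n * n!), for moderate nonzero x."""
    total, term = 0.0, 1.0
    for n in range(1, terms + 1):
        term *= x / n       # term now holds x^n / n!
        total += term / n
    return EULER_GAMMA + math.log(abs(x)) + total

def interval_integral_closed(u_k, j0, tau, dt):
    """Integral of exp(U(t)) over one interval in which U relaxes towards j0."""
    a = u_k - j0
    if a == 0.0:            # potential already at baseline: constant intensity
        return math.exp(j0) * dt
    return tau * math.exp(j0) * (expi_series(a) - expi_series(a * math.exp(-dt / tau)))

def interval_integral_numeric(u_k, j0, tau, dt, n=20_000):
    """Midpoint-rule reference value for the same integral."""
    h = dt / n
    return h * sum(
        math.exp(j0 + (u_k - j0) * math.exp(-((m + 0.5) * h) / tau)) for m in range(n)
    )

# Hypothetical values: one depolarized and one hyperpolarized starting potential.
closed_pos = interval_integral_closed(0.5, -1.0, 0.02, 0.03)
numeric_pos = interval_integral_numeric(0.5, -1.0, 0.02, 0.03)
closed_neg = interval_integral_closed(-2.0, -1.0, 0.02, 0.03)
numeric_neg = interval_integral_numeric(-2.0, -1.0, 0.02, 0.03)
```

The two evaluations should agree to within the quadrature error for both signs of $a$, since the argument of Ei does not change sign within an interval. The special case $a=0$ has to be handled separately, because Ei is singular at zero.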

Evaluating the gradient

It now remains to find an efficient way to compute the gradient of the log-likelihood function. The performance at this point is likewise important, or even more so for large N, since $\log L_i$ has $N+1$ partial derivatives that all need to be evaluated at each step of the optimization. The parameters of $\log L_i$ are $\theta_i=\{J_{i0},J_{i1},\ldots,J_{iN}\}$. For convenience, let us first introduce the terms

$$\nu_{ij}(t)=\begin{cases}1, & j=0,\\ (h_i*s_j)(t), & 1\le j\le N,\end{cases} \tag{11}$$

which, for j≥1, can be interpreted as the putative response of the i-th neuron to the input spikes from the j-th neuron, that is going to be scaled by J ij, cf. (3). The derivatives of (7) with respect to J ij can then be expressed as

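$$\frac{\partial\log L_i}{\partial J_{ij}}=\sum_{k=1}^{q_i}\nu_{ij}(t_{i,k})-\int_{T_0}^{T_1}\nu_{ij}(t)\,\lambda_i(t)\,dt\equiv\partial_{J_{ij}}\ell_i-\partial_{J_{ij}}\Lambda_i. \tag{12}$$

Here, $q_i$ is the number of spikes of the i-th neuron, and $\{t_{i,k}\}=S_i$ are the points in time when the i-th neuron emitted a spike. For $j=0$, Eq. (12) becomes $\partial\log L_i/\partial J_{i0}=q_i-\int_{T_0}^{T_1}\lambda_i(t)\,dt$. This means that at a maximum of $\log L_i$, the baseline potential $J_{i0}$ (and so the base rate $c_i$, Eq. (6)) is set such that the number of spikes $q_i$ equals the expected total number of spikes of the GLM, $\int_{T_0}^{T_1}\lambda_i(t)\,dt$. Further, in order to evaluate (12) for the cases when $j\ge 1$, we have defined the symbols $\partial_{J_{ij}}\ell_i$ and $\partial_{J_{ij}}\Lambda_i$ analogous to Eq. (7).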

The values $\nu_{ij}(t_{i,k})$ for $j\ge 1$ can be obtained using a recurrence formula just like for the membrane potential $U_i(t_k)$, Eq. (8); in fact, $\nu_{ij}(t)=\partial U_i(t)/\partial J_{ij}$, cf. (11). Hence, $\nu_{ij}(t)$ obeys leaky integrator dynamics like $U_i(t)$, which can be obtained by differentiating Eq. (4) by $J_{ij}$ and reinserting Eq. (11). Accordingly, $\nu_{ij}(t)$ decays exponentially in between the spikes $t_{j,k}$ and $t_{j,k+1}$ of the j-th neuron, and we find the recurrence formula

$$\nu_{ij}(t_{j,k+1})=\nu_{ij}(t_{j,k})\,e^{-(t_{j,k+1}-t_{j,k})/\tau_i}+\frac{1}{\tau_i}. \tag{13}$$

Individual values within these intervals can be computed in parallel independently from each other. Summing up all $\nu_{ij}(t_{i,k})$ then yields $\partial_{J_{ij}}\ell_i$.

It is also important to mention that $\nu_{ij}(t)$, Eq. (11), and, consequently, $\partial_{J_{ij}}\ell_i$ in Eq. (12) do not depend on the parameters $\theta_i$ and therefore need only be computed once at the beginning of the optimization. However, even though we can use the formula $U_i(t)=\sum_{j=0}^{N}J_{ij}\,\nu_{ij}(t)$, for large N it is more expensive to compute $U_i(t)$ by summing up weighted contributions of $\nu_{ij}(t)$ than by using Eq. (8) as explained above.

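Making use of the recurrence formulae for $U_i(t)$, Eq. (8), and $\nu_{ij}(t)$, Eq. (13), the closed form of $\partial_{J_{ij}}\Lambda_i$ in Eq. (12) for $j\ge 1$ can be expressed as follows:

$$\partial_{J_{ij}}\Lambda_i=\tau_i\sum_{k=0}^{q}\frac{\nu_{ij}(t_k)}{U_i(t_k)-J_{i0}}\Big[\exp\big(U_i(t_k)\big)-\exp\Big(J_{i0}+\big(U_i(t_k)-J_{i0}\big)e^{-(t_{k+1}-t_k)/\tau_i}\Big)\Big], \tag{14}$$

where, as in Eq. (10), $q=\sum_i q_i$ is the total number of recorded spikes, $t_k\in S$ are the spike times, and $t_0=T_0$ and $t_{q+1}=T_1$ are the start and end of the recording. Unlike $\partial_{J_{ij}}\ell_i$, this expression needs to be re-evaluated at every optimization step, but as with Eq. (10), the elements of the sum are independent from each other and can therefore also be efficiently parallelized.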
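A single summand of this closed form can be verified against numerical quadrature. Between two consecutive spikes both $\nu_{ij}(t)$ and $U_i(t)-J_{i0}$ decay with the same factor $e^{-(t-t_k)/\tau_i}$, so the integral of $\nu_{ij}(t)\lambda_i(t)$ over the interval evaluates to $\tau_i\,\nu_{ij}(t_k)\,[\lambda_i(t_k)-\lambda_i(t_{k+1}^-)]/[U_i(t_k)-J_{i0}]$, where $\lambda_i(t_{k+1}^-)$ is the intensity just before the next spike arrives. The Python sketch below checks this identity; function names and parameter values are hypothetical.

```python
import math

def grad_interval_closed(nu_k, u_k, j0, tau, dt):
    """Closed form of the integral of nu(t) * exp(U(t)) over one inter-spike interval."""
    a = u_k - j0
    decay = math.exp(-dt / tau)
    if a == 0.0:  # constant intensity exp(j0); only nu decays
        return tau * nu_k * math.exp(j0) * (1.0 - decay)
    lam_start = math.exp(u_k)            # intensity right after the spike at t_k
    lam_end = math.exp(j0 + a * decay)   # intensity just before the next spike
    return tau * nu_k * (lam_start - lam_end) / a

def grad_interval_numeric(nu_k, u_k, j0, tau, dt, n=20_000):
    """Midpoint-rule reference value for the same integral."""
    h = dt / n
    total = 0.0
    for m in range(n):
        decay = math.exp(-((m + 0.5) * h) / tau)
        total += nu_k * decay * math.exp(j0 + (u_k - j0) * decay)
    return h * total

# Hypothetical state right after a spike at t_k.
closed = grad_interval_closed(nu_k=30.0, u_k=0.5, j0=-1.0, tau=0.02, dt=0.03)
numeric = grad_interval_numeric(nu_k=30.0, u_k=0.5, j0=-1.0, tau=0.02, dt=0.03)
```

In contrast to the integral of the intensity itself, this term requires only exponentials and no exponential integral, provided that the case $U_i(t_k)=J_{i0}$ is treated separately.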

Handling transmission delays

In the discussion above, the communication of spikes between the neurons was implicitly assumed to happen instantaneously. Of course, in reality spikes incur transmission delays, which strongly affect the dynamics of the network.

Fortunately, the effects of combined synaptic and axonal delays can be easily incorporated into the described model: thanks to the separability property, we can optimize the parameters for each neuron independently, and feed every optimization for different neurons with its own modified dataset, containing the incoming spike times from other neurons arriving as the target neuron actually received them.

Therefore, given an effective delay matrix D, it is only necessary to shift each spike train S j in the recorded raster S by the corresponding delay at the beginning of the optimization for the i-th neuron, such that the membrane potential of this neuron is affected at the point in time when the incoming spikes from the j-th neuron have reached their target, and not immediately as they were fired (and recorded):

$$\hat{S}_j=\{t_{j,k}+d_{ij}\},\qquad 1\le j\le N. \tag{15}$$

The transformation above has to be applied with one exception: the elements of the sum in $\ell_i$ (and, accordingly, $\partial_{J_{ij}}\ell_i$) have to be evaluated at time points $S_i$ when the i-th neuron actually produced a spike, and not at time points $\hat{S}_i$, when this spike has reached the neuron through the “self-connection” and provoked a depression of its membrane potential, which models the refractory properties of the neuron.

In other words, in order to correctly evaluate the expressions Eqs. (7) and (12) while taking into account transmission delays, one must compute the values of $U_i(t)$ and $\nu_{ij}(t)$ using the modified raster $\hat{S}$, but at time points $S_i$ of the original raster $S$, and substitute these values in the elements of the sums $\ell_i$ and $\partial_{J_{ij}}\ell_i$ respectively, instead of summing up the elements taken at times $\hat{S}_i$. In the following, we omit the “hats” for notational convenience.
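The bookkeeping described above can be sketched as follows. The data layout (a dictionary mapping neuron indices to sorted spike times, and a nested list `delays[i][j]` holding the delay from neuron j to neuron i) and the function name are assumptions made for this illustration.

```python
def delayed_inputs(raster, delays, target):
    """Arrival events (time, source) at the target neuron, sorted by arrival time.

    Every spike train, including the target's own one (self-connection),
    is shifted by the delay of the corresponding connection.
    """
    events = [
        (t + delays[target][j], j)
        for j, times in raster.items()
        for t in times
    ]
    events.sort()
    return events

# Hypothetical raster and delay matrix, all times in milliseconds.
raster = {0: [10.0, 50.0], 1: [20.0]}
delays = [[2.0, 1.0],
          [1.5, 2.0]]

inputs_for_0 = delayed_inputs(raster, delays, target=0)  # drives U_0(t) and nu_0j(t)
evaluation_times_for_0 = raster[0]  # spike terms use the ORIGINAL spike times
```

The shifted events determine the time course of the membrane potential of the target neuron, whereas the sums over its own spikes are still taken at the unshifted times in `evaluation_times_for_0`.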

Regularization of the model

Substantial improvements in the quality of the network reconstruction can be achieved if the model presented above is subjected to standard regularization techniques. These techniques enhance the accuracy of the inference procedure by integrating additional prior knowledge about the system into the optimization process (Meinshausen and Bühlmann 2006; Ravikumar et al. 2010). For instance, we can impose box constraints on reasonable values of the synaptic connection matrix J ij or base rates c i, and complement this with a choice of more sophisticated methods, such as ℓ 1 or ℓ 2 regularization, exploiting assumed sparsity or smoothness of the expected result, respectively (Chen et al. 2011).

In particular, ℓ 1 regularization (Tibshirani 1996) has a straightforward Bayesian interpretation in our setting: by penalizing the log-likelihood function (2) with the sum of the absolute values of the synaptic weights J ij, we impose a sparsity-inducing Laplace prior on the sought-for solution, thereby performing a maximum a posteriori (MAP) estimation. Here the strength of the penalty α reflects the firmness of our belief in the sparseness of the network connectivity:

$$\log\tilde{L}_i(\theta_i|S)=\log L_i(\theta_i|S)-\alpha\sum_{j=1}^{N}|J_{ij}|. \tag{16}$$

Possible overfitting due to an inadequate choice of the regularization parameter α can be prevented by separating the dataset into two parts to cross-validate the recovered synaptic weights, and, in the case that the available data is too scarce, more elaborate techniques such as K-fold cross-validation and other cross-validation types (Kohavi 1995) can be employed.
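A minimal Python sketch of the ℓ 1 penalty is given below. It assumes that the parameter vector stores the baseline potential at index 0 and that only the synaptic weights are penalized. The choice of zero as the subgradient at a weight of exactly zero is one common convention and an assumption here; the text above does not prescribe how the non-smooth penalty is treated by the optimizer. The function names are hypothetical.

```python
def l1_penalized_objective(log_likelihood, weights, alpha):
    """Log-likelihood minus alpha times the sum of absolute synaptic weights.

    weights[0] is the baseline potential and is left unpenalized (assumption).
    """
    return log_likelihood - alpha * sum(abs(w) for w in weights[1:])

def l1_penalized_gradient(gradient, weights, alpha):
    """(Sub)gradient of the penalized objective; uses sign(0) = 0 by convention."""
    def sign(w):
        return (w > 0) - (w < 0)
    return [gradient[0]] + [
        g - alpha * sign(w) for g, w in zip(gradient[1:], weights[1:])
    ]

# Hypothetical values for one neuron with three presynaptic partners.
weights = [-1.0, 0.5, -0.25, 0.0]
objective = l1_penalized_objective(-10.0, weights, alpha=2.0)
gradient = l1_penalized_gradient([1.0, 2.0, 3.0, 4.0], weights, alpha=2.0)
```

The penalty pulls every nonzero weight towards zero with constant strength α, which is what drives weak, unsupported connections to vanish in the estimate.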

Practical implementation

The mathematical components described above make it possible to reproduce our estimation procedure. However, we found that without employing additional numerical methods, a naive implementation would be far too slow for practical use. In the following we outline the techniques that helped us to boost the optimization speed by many orders of magnitude, bringing the computational requirements to perform estimations of the connectivity for networks of N≥1000 neurons into a practical range for plausible amounts of experimental data.

Efficient evaluation

From the computational perspective, a program that performs the parameter estimation would typically consist of a nonlinear optimization routine, which is provided with callback procedures that are repeatedly called in order to evaluate the objective function (2) and its gradient (12) for any given set of parameters. Hence, the guiding principle to achieve the best performance is to carefully consider the trade-offs between CPU time and memory consumption, and to cache as many values for these callbacks as feasible.

As the values of $U_i(t)$ for $S=\{t_k\}$ (all spikes of the network) are needed in order to evaluate both the log-likelihood function and its gradient, it makes sense to pre-compute these values at the beginning of the optimization step. Additionally, as previously noted, the values of $\nu_{ij}(t)$ do not depend on the parameters $\theta$, and therefore both $\nu_{ij}(t)$ and $\partial_{J_{ij}}\ell_i$ can be pre-computed during the first optimization step, and re-used in all subsequent steps. Likewise, it is important to consider the costs of calculating transcendental functions; whereas they might seem negligible at first sight, the time taken to compute some 10¹⁰ exponentials every step is considerable. Therefore, pre-computing the values of all sub-expressions that do not depend on the parameters, and, in particular, $e^{-(t_{k+1}-t_k)/\tau_i}$, is another possibility to save large amounts of CPU time.

In any case, we recommend using iterative profiling in order to select the relevant optimization targets to add each next level of caching, since, as a general rule, the more caches there are, the more complicated and error-prone it is to keep them consistent and up to date with respect to the changes in parameters. Additionally, this avoids the situations when a sizeable amount of work is invested only to gain minor improvements in speed, due to runtime actually being dominated by different code paths than anticipated.

We observed that the optimization algorithms are (unsurprisingly) sensitive to the precision of the evaluation of the objective function and its gradient, and especially to the consistency between the two. Therefore we rejected using numerical approximations to the gradient, such as values computed using the central differences formula, and employed analytically derived expressions instead. We have also found that better precision of the objective function leads to faster convergence. This particularly concerns the accurate approximation of the exponential integral in Eq. (10). In general, finding an efficient method to evaluate Ei(x), which is a crucial part of Eq. (10), poses a significant computational challenge. However, high-quality rational approximations exist in the literature (Cody and Thacher 1969), which make evaluating Ei(x) as fast as evaluating low-order polynomials. In our implementation, we rely on the approximations devised by John Maddock using a custom Remez code, which are part of the Boost C++ library. These approximations are not only highly accurate, but also the fastest that are available to us.

Parallelization and distribution

As the sweeping growth of the clock speeds in the last couple of decades seems to have saturated, the focus is increasingly shifting towards increasing parallelism, and nowadays multicore CPUs are a de facto standard, rather than rare marvels. Therefore, suitability for parallelization is becoming a critical feature to discriminate the algorithms that are appropriate for large-scale data analysis. In this section we discuss the parallelization strategies applicable to the model described above.

Owing to the separability of the problem, the highest-level approach to parallelizing the optimization is to launch several estimations for different neurons in parallel. This results in perfect scaling for N t≤N, where N t is the number of simultaneously executed hardware threads. This option is attractive because it is relatively simple to implement; however, its practical applicability is limited by the amount of memory available per thread, which quickly becomes a bottleneck for larger networks and larger amounts of data.

A slightly lower-level method is to identify independent elements in the formulae that need to be evaluated at every step of the optimization, and divide this work among several threads within one running process. The summands of , , and as defined in Eqs. (7), (10), (12) and (14) are all amenable to that kind of processing. This approach makes it possible to utilize all usable threads from within one process, but its scalability is limited by both the amount of available memory on a single compute node (as above), and the serial part of the computations, which cannot be parallelized. In our model, the serial part is mainly the calculation of the membrane potential U i(t) (Eq. 8) and the membrane responses ν ij(t), because each value in the recurrence formulae depends on the previous one. The membrane responses ν ij(t) are less of a problem, since they can be pre-computed at the beginning of the optimization as explained above, if one is willing to trade memory consumption for performance. Alternatively, ν ij(t) can be computed in parallel, which can be faster than fetching the results from memory for a very high number of threads and low memory bandwidth.

We have also explored the possibility of distributing the estimation across several compute nodes, which is not only necessary in order to utilize larger numbers of threads than available on one node, but also allows the computation to make use of the additional memory when the problem gets too large to fit into one machine’s RAM. The most straightforward distribution scheme is to designate one process (rank) to perform serial computations required for every optimization step, broadcast the results and parameters to other ranks, have them do their share of the computations, and, finally, collect the results. The biggest advantage of this scheme lies in its ease of implementation: the communication pattern is very clear, and the code can largely remain unchanged except for the need of a few additional functions to distribute and collect the data.

In our implementation, we performed the calculation of the membrane potential U i(t), the log-likelihood function and on Rank 0, and evenly divided the work to compute , j≥1, among all other ranks. This system scales (almost) linearly up to the point when the amount of time needed to perform the computations on Rank 0 exceeds the amount of time it takes to compute the gradient distributed to all other ranks. Since it takes several orders of magnitude more time to calculate than , we have found that for N=1000 we can easily distribute each single task up to N r=10…20 ranks.

For production estimations, we combined all three approaches outlined above. The highest level of parallelization was left up to the batch system: for each estimation, we generated and submitted the job scripts for every neuron and let the scheduler optimally backfill the queue. The code was run with N t=8…16, depending on the amount of hardware threads available per processor, and N r=10…20, depending on the amount of available memory per processor and the requirements of the particular estimations. For estimations of size N=1000, this hybrid approach allowed us to scale almost linearly up to cores.

In this context, it becomes clear why not only the convexity, but also the separability property of the optimization problem discussed in Section 2.2 is crucial to our model. In a typical estimation, as described in Section 3, a 1 hour recording of N=1000 neurons spiking at ∼5 s⁻¹ would contain ∼10⁷ spikes, so the intermediary data to be held in RAM during the optimization would need around 10¹⁴=10×10⁷×(10³)² bytes or 100 TB of storage capacity. This calculation assumes that the main contribution comes from the pre-computed matrix of ν ij(t) vectors of length 10⁷ stored as doubles and disregards all other factors. From our experience, for some N r×N t=10⁵ threads at ∼2 GHz the optimization would take on the order of 30 minutes of walltime to converge after about a hundred iterations.
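The order-of-magnitude estimate above can be retraced with a few lines of arithmetic. This is a minimal sketch under the same assumption as in the text, namely that the cached ν ij(t) values dominate the memory footprint; the variable names are hypothetical, and the per-neuron figure simply divides the total by N to illustrate the effect of separability.

```python
rate_hz = 5.0          # approximate firing rate per neuron (s^-1)
duration_s = 3600.0    # 1 hour recording
n_neurons = 1000       # network size N
bytes_per_double = 8   # IEEE 754 double precision (rounded up to 10 in the text)

# Total number of spikes recorded from the whole network.
n_spikes = rate_hz * duration_s * n_neurons

# One cached response value per (i, j) pair and per spike time.
full_cache_bytes = bytes_per_double * n_spikes * n_neurons ** 2

# With a separable objective, one neuron's estimation only needs the
# responses to its N presynaptic partners (an assumption of this sketch).
per_neuron_bytes = bytes_per_double * n_spikes * n_neurons

full_cache_tb = full_cache_bytes / 1e12
per_neuron_gb = per_neuron_bytes / 1e9
```

With these inputs the full cache comes out at roughly 10¹⁴ bytes, in line with the estimate in the text, while a single per-neuron problem is smaller by a factor of N.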

Currently, these requirements can be barely satisfied by booking a complete supercomputer such as JUROPA,2 and any substantial increase in the number of units, or in the amount of data to be processed will put the problem beyond our reach. However, while the number of parameters of the complete log-likelihood function in our formulation is , thanks to the above-mentioned separability property, the number of parameters of is linear in the number of units, . Not only does this present major practical advantages such as easier scheduling of smaller jobs, but it also makes it possible to solve larger problems at all by proportionally trading the execution time for the amount of resources allocated to the optimization process.

Technical realization

Our model was implemented in Python, an increasingly popular language in the field of computational neuroscience. It relies upon the NumPy and SciPy scientific libraries3 for essential data structures and algorithms. We used Cython4 to bind to the OpenMP-parallelized computational kernels, which we extracted and rewrote in C++ for performance reasons, and to access the mathematical functions from the Boost C++ library. The distribution was implemented using the Python bindings to MPI, mpi4py.5

The optimization was performed via the NLopt6 package by Steven G. Johnson using the low-storage Broyden-Fletcher-Goldfarb-Shanno method (Liu and Nocedal 1989) with support for bound constraints (Byrd et al. 1995) implemented by Ladislav Luksan (L-BFGS-B). We chose to use BFGS instead of the nonlinear conjugate gradient (CG) algorithm, because the former approximates the inverse Hessian matrix of the problem and uses it to steer the search in the parameter space. This results in improved convergence at the cost of higher overhead per iteration. Since in our case the computation of the objective function is substantially more expensive than this overhead, the trade-off is worthwhile.

As a stopping condition, we used a criterion based on the fractional tolerance of the objective function value. The optimization was terminated if , where is the decrease in the function value from one iteration to next, reached the threshold of . The value of was selected close to the machine epsilon for the double precision floating point type, as requesting even lower tolerance would not yield a more accurate solution; the typical choice was .

It is worth noting that in the case of ℓ 1 regularized optimizations, all gradient-based algorithms we tried were strongly affected by the non-smoothness at zero introduced by the regularization term in Eq. (16). A thorough review of the existing approaches to address this issue is presented by Schmidt et al. (2009); we opted for implementing a smooth 𝜖–ℓ 1 approximation, originally suggested by Lee et al. (2006):

| 17 |

The derivatives of with respect to J ij (Eq. 12) have to be adjusted by addition of respectively. We found that this approximation works well in practice for sufficiently small values of 𝜖<10⁻⁷ and enables us to use the L-BFGS-B algorithm without modifications. Additionally, we imposed bound constraints on the model parameters as discussed in Section 2.5; typical constraint ranges were |J ij|<50 mV for synaptic weights and for base rates. The recordings were truncated to the first and last recorded spikes, T 0=t 1 and T 1=t q, where q is the total number of recorded spikes.
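To illustrate how such a smoothing removes the kink at zero, the following minimal sketch assumes the widely used form √(x²+𝜖) for the smooth approximation of |x|. Whether Eq. (17) has exactly this form and scaling is an assumption here, and all function names are hypothetical. How the penalty enters the objective (added or subtracted) depends on the sign convention of Eq. (16), which the sketch does not fix.

```python
import math


def smooth_abs(x, eps=1e-8):
    """Smooth surrogate for |x|; differentiable everywhere, including x = 0."""
    return math.sqrt(x * x + eps)


def smooth_abs_grad(x, eps=1e-8):
    """Derivative of smooth_abs; tends to sign(x) for |x| >> sqrt(eps)."""
    return x / math.sqrt(x * x + eps)


def smooth_l1_penalty(weights, alpha, eps=1e-8):
    """Penalty term alpha * sum_j smooth_abs(J_j) for one neuron's weights."""
    return alpha * sum(smooth_abs(w, eps) for w in weights)


def smooth_l1_penalty_grad(weights, alpha, eps=1e-8):
    """Per-weight contributions of the penalty to the gradient."""
    return [alpha * smooth_abs_grad(w, eps) for w in weights]


# Example with hypothetical weights (in mV) and the alpha used later in the text.
example_weights = [-5.0, 0.0, 1.0]
penalty = smooth_l1_penalty(example_weights, alpha=10.0)
penalty_grad = smooth_l1_penalty_grad(example_weights, alpha=10.0)
```

Away from zero the surrogate and its derivative are practically indistinguishable from |x| and sign(x), while at zero the derivative is exactly zero instead of being undefined, which is what allows a generic quasi-Newton method to be used unchanged.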

Results

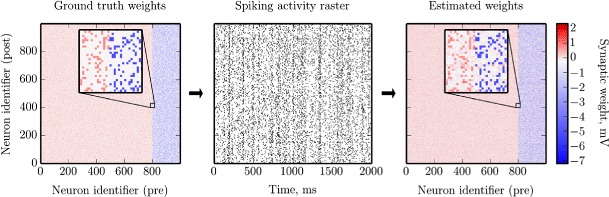

We quantified the effectiveness of our suggested method by performing a series of experiments as illustrated in Fig. 2. In these experiments we simulated neuronal networks with known (ground truth) connectivity, and reconstructed the synaptic weight matrix along with the model parameters of these networks on the basis of the recorded spike times. In this way, estimation results could be readily compared to the original connectivity matrix and model parameters. All simulations presented in this section were carried out with the NEural Simulation Tool (NEST) (Gewaltig and Diesmann 2007) and reconstructions were performed using the CPU implementation of the MLE optimizer as described in Section 2. Although the connectivity is sparse in all experiments considered below, we generally use MLE optimization here; only in Section 3.3, which describes the most difficult of the experiments, we also use regularization in order to demonstrate that our computational framework can handle regularized optimization.

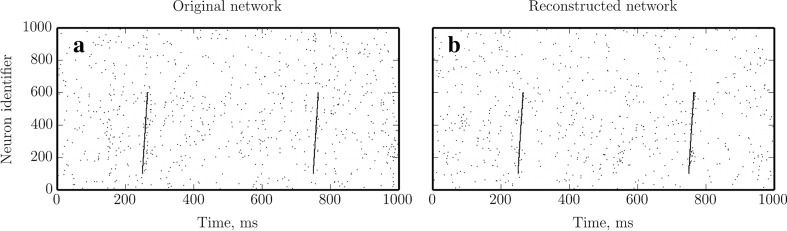

Fig. 2.

Schematic representation of the method validation setting. A test neural network is set up using a known ground truth connectivity matrix (left panel) and its dynamics is numerically simulated. The emerging spiking activity of the neurons (middle panel) is recorded and fed into the reconstruction procedure. The resulting connectivity matrix (right panel) is then compared to the original one to assess the performance of the proposed technique. Insets show a zoom-in of the connectivity matrix, as indicated.

In the following subsections, we present the benchmarks of the proposed technique against simulations of a widely used model of a random balanced network (Brunel 2000) and investigate the effect of choosing different neuron and synapse models, first with homogeneous and then with randomly distributed parameters. We then show a successful reconstruction of a specific, non-random network, a “synfire chain” embedded in a balanced random network, only from “background” network activity (where the chain was not stimulated). Finally, by stimulating the synfire chain in a simulation of the estimated model, and comparing the resulting dynamics to the output of the original network, we highlight the generative aspect of GLM network models. The abbreviations used in the following sections are summarized in Table 1.

Table 1.

Glossary of abbreviations

| Abbreviation | Meaning |
|---|---|
| EM | expectation-maximization |
| GLM | generalized linear model |
| GMM | Gaussian mixture model |
| KDE | kernel density estimation |
| LIF | leaky integrate-and-fire |
| MER | misclassification error rate |
| MLE | maximum likelihood estimation |
| PDF | probability density function |

Random balanced network of GLM neurons

As an initial testbed for our method, we selected a random balanced neural network of excitatory and inhibitory neurons in the asynchronous irregular (AI) spiking regime (Brunel 2000). Random networks do not have any particular structural features that can be exploited by the optimizer in order to improve the quality of the reconstruction, and hence in this sense they represent a “worst-case” type of input that is particularly useful for benchmarking purposes. Such networks are commonly studied using the leaky integrate-and-fire (LIF) neuron model. However, in order to be able to interpret the follow-up experiments, we first chose to assess the performance of our estimation method under idealized conditions, in which the simulated and estimated neuron and synapse models coincide: the GLM neuron model as described in Section 2 and simple synapses with exponential post-synaptic potentials.

As discussed in Section 2.2, given several conditions that our GLM satisfies, there is a unique maximum likelihood parameter set for the estimated network model (Paninski 2004). In the limit of an infinite amount of spike data used for model estimation and arbitrarily precise calculations, our method is thus bound to recover the true parameters of the simulated model. Hence, testing the method under idealized conditions, but for finite datasets, allows us to distinguish errors that are purely due to the limited length of the observations and restricted machine precision, from those due to a mismatch between the dynamics of the neuron and synapse models used to generate the data, and the dynamics of the models used to reconstruct the network.

The test network consisted of N=1000 GLM neurons with an 80 % : 20 % proportion of excitatory to inhibitory neurons (“pp_psc_delta” model in NEST nomenclature, with a base rate c=5 s⁻¹, membrane time constant of τ=20 ms and a resting potential of V r=0 mV). The nonlinearity gain of the neurons was set to δ u=4 mV as in Jolivet et al. (2006), which defines the scaling and units of a single post-synaptic potential via Eq. (5) (δ u=1 as assumed previously in Section 2 for the sake of convenience would make it unitless). Each connection was realized independently with a connection probability of 𝜖=0.2 (Erdős-Rényi p-graph). The neurons were connected by synapses with exponential post-synaptic potentials with a peak amplitude of J e=1 mV for excitatory and J i=−5 mV for inhibitory synapses, and a transmission delay of d=1.5 ms. A strong inhibitory self-connection with J s=−25 mV and a transmission delay of d s=Δt was used to model post-spike effects. The simulation progressed in time steps of Δt=0.1 ms (resolution) and the simulation time was T=1 hour. The average firing rate of the neurons was ν=4.2 s⁻¹. The recorded spike trains were fed to the estimation method, assuming known values of the time constant τ, the transmission delays d and the delay of the self-connection d s. The method produced estimates of the synaptic weight matrix J ij and the base rates {c i} for all neurons. The original and reconstructed synaptic weight matrices for this experiment are presented in Fig. 2. Throughout this text we refer to {J ij} (1≤i,j≤N, i≠j) as the weight matrix; the self-connections {J ii} (1≤i≤N) and the baseline potentials {J i0} (1≤i≤N) are treated separately.

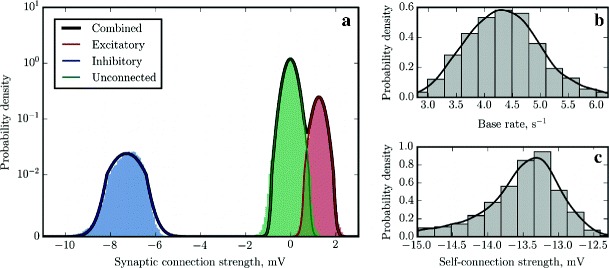

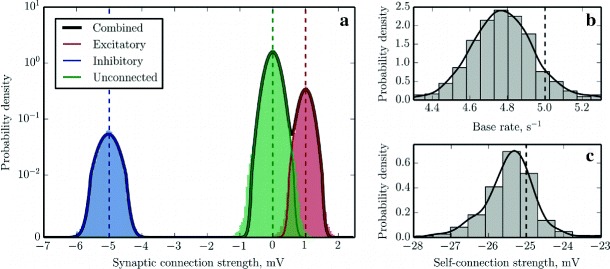

In order to evaluate the quality of the reconstruction, we analyzed the resulting distributions of recovered synaptic weights and base rates, as shown in Fig. 3. The probability density function (PDF) of the original distribution of synaptic weights can be described as a sum of three δ-functions (for excitatory, inhibitory and null connections respectively). In the reconstructed distribution, the peaks are broader due to the finite duration of the recording and limited machine precision, to the extent that for realistic values of parameters, there is a degree of overlap between the components of the distributions that represent excitatory and null connections. We noted that the amplitude of the noise that causes the broadening decreases approximately in inverse proportion to the square root of the duration of the recording (data not shown); however, we selected T=1 hour as a reasonable standard amount of input data to mimic conditions where the duration of the recording is limited due to experimental and computational constraints.

This overlap makes it difficult to identify weak excitatory connections unambiguously, and therefore an advanced approach to classification was needed to obtain optimal network reconstruction. To this end, we fitted a Gaussian mixture model (GMM) with a fixed number of components (n=3) to the reconstructed synaptic weights, assuming that synaptic connections, in general, can be either excitatory or inhibitory, or absent. We used an expectation-maximization (EM) algorithm to obtain a maximum likelihood estimate (MLE) of the GMM parameters (mixing weights, means and variances of the individual components), and classified the synaptic weights accordingly. The fitting and classification were performed using a Python implementation of GMM (sklearn.mixture.GMM) provided by the scikit-learn toolkit (Pedregosa et al. 2011). In order to reconstruct the PDFs of the base rates and self-connections, we used both the histogram function from the NumPy library and the Gaussian kernel density estimation (KDE) code from the SciPy library.
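The fit-and-classify procedure can be illustrated with a small hand-written EM loop for a one-dimensional mixture of three Gaussians. This is a minimal sketch and not the scikit-learn implementation used for the results: the function names, the initial guesses, the synthetic weights and the noise level of 0.2 mV are all assumptions chosen for illustration.

```python
import math
import random


def gaussian_pdf(x, mean, var):
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def fit_gmm_1d(data, init_means, init_var=0.1, n_iter=50, var_floor=1e-6):
    """EM for a 1-D Gaussian mixture; returns mixing weights, means, variances."""
    k = len(init_means)
    means = list(init_means)
    variances = [init_var] * k
    mix = [1.0 / k] * k
    for _ in range(n_iter):
        # E-step: responsibility of each component for each data point.
        resp = []
        for x in data:
            p = [mix[c] * gaussian_pdf(x, means[c], variances[c]) for c in range(k)]
            total = sum(p) or 1e-300
            resp.append([pc / total for pc in p])
        # M-step: re-estimate the parameters from the weighted data.
        for c in range(k):
            nc = max(sum(r[c] for r in resp), 1e-12)
            means[c] = sum(r[c] * x for r, x in zip(resp, data)) / nc
            spread = sum(r[c] * (x - means[c]) ** 2 for r, x in zip(resp, data)) / nc
            variances[c] = max(spread, var_floor)
            mix[c] = nc / len(data)
    return mix, means, variances


def classify(x, mix, means, variances):
    """Index of the component with the highest posterior probability."""
    scores = [m * gaussian_pdf(x, mu, v) for m, mu, v in zip(mix, means, variances)]
    return scores.index(max(scores))


# Synthetic "reconstructed weights": inhibitory (-5 mV), null (0 mV) and
# excitatory (1 mV) connections, blurred by hypothetical estimation noise.
rng = random.Random(1)
data = []
for _ in range(2000):
    u = rng.random()
    mean = -5.0 if u < 0.04 else (0.0 if u < 0.84 else 1.0)
    data.append(rng.gauss(mean, 0.2))

mix, means, variances = fit_gmm_1d(data, init_means=[-4.0, 0.2, 0.8])
labels = [classify(x, mix, means, variances) for x in data]
```

Here component 0 plays the role of inhibitory, component 1 of null and component 2 of excitatory connections. The classification boundary between two components lies where their weighted densities are equal, so it shifts towards the less populated component, unlike a simple midpoint threshold.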

The results are illustrated in Fig. 3, which shows that the means of the distributions were almost perfectly reconstructed and that GMM is indeed an appropriate model for this PDF. The recovered base rates and self-connection weights are also in approximate agreement with the ground truth values. The detailed classification performance breakdown is presented in Table 2, showing that the classification of synaptic connections is nearly optimal for this dataset (assuming that the cost of making a “false positive” error is equal to the cost of the “false negative” error) and the number of misclassified connections is less than 1 %.

Table 2.

Breakdown of classification errors for the GLM random network

| Connection type | Errors | FP | FN | ND |
|---|---|---|---|---|
| Excitatory | 7 300 | 62 % | 38 % | 15 % |
| Inhibitory | 0 | | | |
| Unconnected | 7 300 | 38 % | 62 % | — |
| Total errors | 0.73 % | | | |

The column “Errors” shows the absolute number of incorrectly classified connections belonging to each class. The columns “FP” and “FN” show the percentage of false positives and false negatives of this number, respectively. The column “ND” is the percentage of misclassified connections in violation of Dale’s law (i.e. an inhibitory neuron is assigned an outgoing excitatory connection, or vice versa), which was not enforced for this reconstruction. The last row shows the percentage of erroneously classified connections of the total number of possible connections (N²=10⁶ for N=1000)

Random balanced network of LIF neurons

Having established the baseline performance in ideal conditions, we designed our next experiment to gauge the influence of mismatch between the neuron and synapse models used to generate the data and those used to reconstruct the network. To this end, we generated data with the commonly used, more complex and realistic LIF neuron model with α-shaped post-synaptic currents (PSCs). We then carried out the reconstruction as before assuming our simplified GLM neuron model and synapses with exponential post-synaptic potentials. Another important point is that whereas in the previous experiment we assumed that the membrane time constant τ and transmission delays between the neurons d are known in advance, this is certainly not the case in the laboratory setting, and hence a principled way of estimating these parameters is required in order to analyze real physiological data.

To generate the test data, we wired a network similar to the one described in the previous section, but using a LIF instead of a GLM neuron model. As before, we used N=1000 neurons with an 80 % : 20 % ratio of excitatory to inhibitory cells, a connection probability of 𝜖=0.2 (each connection was realized independently), a transmission delay of d=1.5 ms and a simulation resolution of Δt=0.1 ms. Synaptic weights were set to , with J e=1 mV and J i=−5 mV. The latter (J e and J i) were again interpreted as peak PSP amplitudes, where w=w(τ m,τ s,C) was the scaling factor (specific to the post-synaptic neuron) selected such that an incoming spike passing through a connection with the synaptic weight of w would evoke a PSP with the maximum amplitude of 1 mV. The parameters of the LIF model (“iaf_psc_alpha” in NEST nomenclature) were chosen as follows: membrane capacitance C=250 pF, membrane time constant τ m=20 ms, synaptic time constant τ s=0.5 ms, refractory time t r=2 ms, firing threshold 𝜃=20 mV, resting potential V r=0 mV and reset to V r after each spike. This time, in addition to the synaptic input from other simulated neurons, each neuron received independent Poisson process excitatory inputs at a rate of and inhibitory inputs at . These external inputs represent the influence of neurons that are not part of the simulation, and are necessary to achieve asynchronous and irregular activity as in cortical networks (Brunel 2000). The simulation time was set to T=2 hours and the data was cut into training and validation parts of T t=T v=1 hour as explained below. The average neuron firing rate was ν=4.2 s⁻¹, and so matched the average neuron firing rate of the network of the GLM neurons presented above.
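The scaling factor w can be made concrete with a short calculation. The following minimal sketch assumes that the α-shaped PSC is normalized such that a weight of 1 pA produces a peak current of 1 pA at t=τ s, and that sub-threshold integration is purely passive; both the normalization and the function names are assumptions of this illustration, and the peak is located by a simple grid search rather than in closed form.

```python
import math


def unit_psp(t, tau_m, tau_s, c_m):
    """PSP in mV at time t (ms) for an alpha-shaped PSC with a 1 pA peak.

    tau_m and tau_s are in ms, c_m is in pF; requires tau_m != tau_s.
    """
    a = 1.0 / tau_s - 1.0 / tau_m
    k = math.e / (tau_s * c_m)
    return k * (math.exp(-t / tau_m) - math.exp(-t / tau_s) * (1.0 + a * t)) / a ** 2


def psp_scaling_factor(tau_m, tau_s, c_m, dt=0.001, t_max=20.0):
    """Weight in pA for which the evoked PSP peaks at 1 mV."""
    n_steps = int(t_max / dt)
    peak = max(unit_psp(i * dt, tau_m, tau_s, c_m) for i in range(n_steps + 1))
    return 1.0 / peak


# Parameters of the homogeneous LIF network described in the text.
w = psp_scaling_factor(tau_m=20.0, tau_s=0.5, c_m=250.0)

# Check: a current of w pA peak amplitude evokes a PSP that peaks at 1 mV.
peak_with_w = max(w * unit_psp(i * 0.001, 20.0, 0.5, 250.0) for i in range(20001))
```

Because w depends on τ m and τ s of the post-synaptic neuron, the same PSP amplitude in mV corresponds to different current amplitudes in pA once these time constants differ between neurons.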

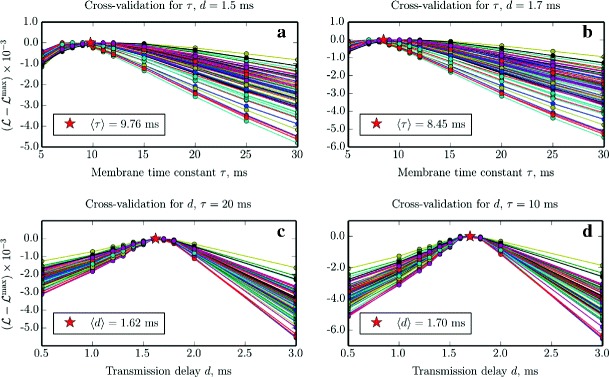

In order to recover the GLM parameters τ and d for this experiment, we applied a cross-validation procedure. It is important to note that we are not expecting to obtain exactly τ=τ m=20 ms and d=1.5 ms due to the mismatch between the LIF model with α-shaped PSCs and the GLM with exponential PSPs. Instead, we want to recover the parameters τ and d for which the GLM produces dynamics most similar to the recorded spike trains from the LIF model. We split the available data into a training and a validation dataset, and performed reconstructions for a subset of N s=75 neurons on the training dataset varying one parameter, while keeping the other one fixed. The resulting parameter estimates 𝜃 i were then used to calculate the log-likelihood function on the validation dataset. Two different datasets (training and validation) were used in order to ensure that the chosen values of the parameters generalize, and are not specific to the training sample. The validation curves are shown in Fig. 4a, c (the curves for the training dataset look identical); note that they all have an easily identifiable maximum. Subsequently, we averaged the locations of the maxima for all trials and performed another cross-validation run (Fig. 4b, d) for updated values of the parameters. Repeating this procedure of alternately fixing one parameter and performing cross-validation for the other would lead us to a local extremum in the (τ,d) parameter space. However, we opted to stop after only a few iterations because the procedure is computationally expensive, and in order to assess whether a sub-optimal choice of τ=10 ms and d=1.7 ms would lead to acceptable estimation results.
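The alternating scan described above can be sketched as a coordinate-wise grid search. This is a minimal illustration only: the score function below is a hypothetical concave stand-in for the validation log-likelihood of a single neuron, its peak location is arbitrary, and the averaging of peak locations over the N s neurons is omitted.

```python
def alternating_scan(score, tau_grid, d_grid, tau0, d0, n_rounds=3):
    """Alternately scan one parameter on a grid while the other is held fixed."""
    tau, d = tau0, d0
    for _ in range(n_rounds):
        # Scan tau for the current d, then d for the updated tau.
        tau = max(tau_grid, key=lambda value: score(value, d))
        d = max(d_grid, key=lambda value: score(tau, value))
    return tau, d


def toy_validation_score(tau, d):
    """Hypothetical stand-in for a validation log-likelihood, peak at (12, 1.6)."""
    x = tau - 12.0
    y = d - 1.6
    return -(x ** 2) / 25.0 - (y ** 2) / 0.04 - 0.1 * x * y


tau_grid = [5.0 + i for i in range(21)]                 # 5, 6, ..., 25 ms
d_grid = [round(1.0 + 0.1 * i, 1) for i in range(11)]   # 1.0, 1.1, ..., 2.0 ms

best_tau, best_d = alternating_scan(
    toy_validation_score, tau_grid, d_grid, tau0=20.0, d0=1.5
)
```

In a real application every call to the score function involves a full estimation on the training data followed by an evaluation on the validation data, which is why the number of rounds is kept small.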

Fig. 4.

Cross-validation for the membrane time constant τ and transmission delay d. Log-likelihood computed on the validation dataset, using parameters estimated from the training dataset for different values of parameters τ and d. For each trial, has been rescaled according to . The red star marks the average of the horizontal location of the peaks of all curves in the plot. Each panel shows N s=75 parameter scans for N e=60 excitatory and N i=15 inhibitory neurons, randomly selected from the complete recording of N=1000 neurons. a, b Cross-validation for τ using fixed values of d=1.5 ms (standard deviation σ=1.14 ms) and d=1.7 ms (σ=1.02 ms). c, d Cross-validation for d using fixed values of τ=20 ms (σ=0.04ms) and τ=10 ms (σ=0.01 ms)

After determining τ=10 ms and d=1.7 ms through the cross-validation procedure, we used these values to estimate the connectivity and base rates. The results of the connectivity reconstruction on the training dataset were processed in the same way as in the previous subsection and are presented in Fig. 5, with further details on the classification of synaptic connections in Table 3. We find that the reconstruction quality as defined by classification into the groups of excitatory, inhibitory and null connections closely matches the performance on the ideal dataset analyzed in the previous section, despite the mismatch in models and the suboptimal choice of τ and d. Note that in this experiment, the recovered values of synaptic weights in mV cannot be compared directly to the ones that were used in the simulation which produced the data due to the differences between GLM and LIF models, unlike in the first experiment described in Section 3.1. However, this does not matter for the purposes of classification.

Fig. 5.

Reconstruction of a random balanced network of LIF neurons with α-shaped PSCs. The reconstruction was performed for τ=10 ms, d=1.7 ms (obtained through cross-validation) and d s=0.1 ms. a GMM fit for the PDF of the reconstructed synaptic weight matrix (black solid curve) and individual components (colored solid lines); colored bars under the curves show the distributions of the reconstructed synaptic weights classified using the ground truth synaptic connectivity matrix as in Fig. 3, approximated as histograms of n=200 bins. The scale of the vertical axis is logarithmic, except for the first decade, which is in linear scale. b, c Histograms and Gaussian KDEs approximating the PDFs of the base rates and self-connection weights

Table 3.

Breakdown of classification errors for the LIF random network with α-shaped PSCs

| Connection type | Errors | FP | FN | ND |
|---|---|---|---|---|
| Excitatory | 7 020 | 58 % | 42 % | 10 % |
| Inhibitory | 2 | 100 % | 0 % | 100 % |
| Unconnected | 7 022 | 42 % | 58 % | — |
| Total errors | 0.70 % | | | |

The meaning of the abbreviations is the same as in Table 2

Random balanced network with distributed parameters

To make the reconstruction task more challenging and to create a more realistic benchmark for our method, we amended the network described in the previous subsection to have different parameters J e, J i, d, τ m and τ s for every neuron and synaptic connection, sampled from uniform distributions around each respective mean value (Table 4), which are the same as in the previous experiment. However, instead of trying to recover the individual values of τ i for each neuron and d i for every connection, we decided to investigate whether it would still be possible to make a useful reconstruction assuming identical “mean” values of τ for all neurons and d for all connections. An additional motivation for this choice is that cross-validation is a computationally expensive procedure: whereas an individual estimation might converge in a matter of minutes, the amount of resources needed to scan a multidimensional parameter grid grows quickly and becomes unmanageable. Therefore, we performed cross-validation on a subset of neurons as described in the previous subsection, and settled for τ=10 ms and d=1.7 ms again (data not shown).

Table 4.

Distribution of parameter values of a random balanced network of LIF neurons

| Parameter | Symbol | Range | Spread |
|---|---|---|---|
| Excitatory weight | J e | 0.8…1.2 mV | ±20 % |
| Inhibitory weight | J i | −4…−6 mV | ±20 % |
| Transmission delay | d | 1…2 ms | ±33 % |
| Membrane time constant | τ m | 15…25 ms | ±25 % |
| Synaptic time constant | τ s | 0.3…0.7 ms | ±40 % |

All parameters except for d were sampled from continuous uniform distributions limited by the values in the table. The transmission delay d was sampled from a discrete distribution with the step equal to the simulation resolution Δt=0.1 ms. Note that the synaptic scaling factor w depends on τ m and τ s of the post-synaptic neuron, and, therefore, the distributions for and (“ground truth weights”) were not uniform

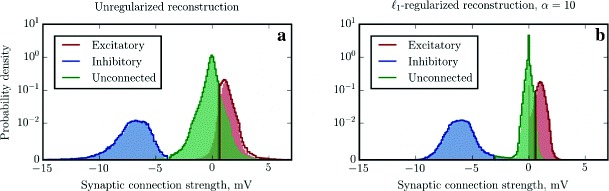

The estimation results for this dataset are shown in Fig. 6 and Table 5 (left panel and left part of the table respectively). The PDFs of the reconstructed synaptic weights were approximated using Gaussian KDE. Obviously, the individual components of the PDF were distorted, because instead of using optimal values for τ i and d i, we used rather arbitrarily chosen fixed values for all neurons and connections. However, more importantly, as the components of the original PDF of synaptic weights were broad distributions rather than δ-functions, the resulting recovered distribution components are strongly non-Gaussian. Therefore, in this case the EM procedure for GMM fails to converge to reasonable means and variances, and is no longer a viable choice to perform the classification of connections.

Fig. 6.

Unregularized and ℓ 1-regularized reconstruction of a random balanced network of LIF neurons with α-shaped PSCs and distributed parameters. The colored solid step lines show PDFs approximated with histograms of n=200 bins of the reconstructed synaptic weights corresponding to the classification via k-means clustering. Vertical lines demarcating the boundaries between distributions designate the points that are equidistant from the identified centroids. The colored bars under the curves represent PDFs estimated by histograms of n=200 bins, classified using the ground truth connectivity matrix, as in Figs. 3 and 5

Table 5.

Breakdown of the classification errors for the unregularized and ℓ 1-regularized (α=10) connectivity estimations of a LIF random network with distributed parameters

| | Unregularized | | | | Regularized, α=10 | | | |
|---|---|---|---|---|---|---|---|---|
| Connection type | Errors | FP | FN | ND | Errors | FP | FN | ND |
| Excitatory | 57 827 | 84 % | 16 % | 17 % | 33 706 | 16 % | 84 % | 3 % |
| Inhibitory | 2 226 | 95 % | 5 % | 79 % | 2 673 | 0 % | 100 % | 0 % |
| Unconnected | 59 749 | 16 % | 84 % | — | 36 379 | 85 % | 15 % | — |
| Total errors | 5.99 % | | | | 3.64 % | | | |

The categories of errors are the same as in Table 2

However, instead of engaging in more elaborate statistical modeling to overcome this difficulty, we can take a step back and resort to an unsupervised learning technique called k-means clustering (which is actually a simplification of GMM). This method forgoes the probabilistic assignment of data points to components, and instead assumes that each point belongs to one (and only one) cluster, namely the one whose centroid is closest in terms of Euclidean distance. This simplification leads to sub-optimal classification when the underlying distributions violate these constraints, but the resulting algorithm is fast and robust.
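For one-dimensional data such as synaptic weights, k-means reduces to a few lines. The following minimal sketch uses hypothetical weights and initial centroids, and the function names are likewise assumptions; it is meant to show the hard nearest-centroid assignment and the resulting midpoint boundaries, not the implementation used for the results.

```python
def assign(x, centroids):
    """Index of the centroid closest to x (hard assignment)."""
    return min(range(len(centroids)), key=lambda c: abs(x - centroids[c]))


def kmeans_1d(data, init_centroids, n_iter=100):
    """Lloyd's algorithm in one dimension; stops when the centroids are stable."""
    centroids = list(init_centroids)
    for _ in range(n_iter):
        clusters = [[] for _ in centroids]
        for x in data:
            clusters[assign(x, centroids)].append(x)
        # An empty cluster keeps its previous centroid.
        updated = [
            sum(members) / len(members) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
        if updated == centroids:
            break
        centroids = updated
    return centroids


def decision_boundaries(centroids):
    """Points equidistant from neighbouring centroids."""
    ordered = sorted(centroids)
    return [(a + b) / 2.0 for a, b in zip(ordered, ordered[1:])]


# Hypothetical reconstructed weights in mV: inhibitory, null and excitatory.
weights = [-5.2, -4.8, -5.0, -0.1, 0.0, 0.1, 0.05, -0.05, 0.9, 1.0, 1.1]
centroids = kmeans_1d(weights, init_centroids=[-4.0, 0.2, 0.8])
boundaries = decision_boundaries(centroids)
labels = [assign(x, centroids) for x in weights]
```

In contrast to a GMM, the boundaries depend only on the centroid positions and ignore both the width and the relative size of the clusters, which is the source of the sub-optimality mentioned above.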

The Voronoi diagrams for k-means classification are represented in Fig. 6 as solid lines: the colors show which of the three centroids is closest, in blue, green and red for inhibitory, null and excitatory connections, respectively. By comparing the solid curves and the envelope of the colored bars it can be seen that in this case there is a significant overlap between the components contributed by null connections and excitatory connections. Therefore, even the most advanced classification strategies will lead to a substantially higher number of classification errors than in the previous experiments. The classification data using k-means is given in Table 5 (left part).

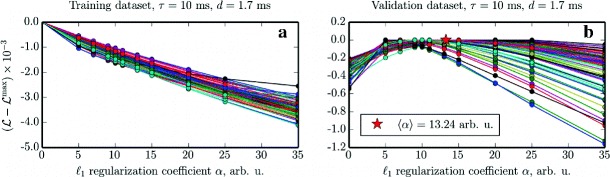

Nevertheless, the situation can still be considerably improved: here, we exploited the sparsity of the synaptic connection matrix by regularizing the GLM estimation with an ℓ 1 penalty term as explained in Section 2.5. Imposing such a prior on the estimation causes shrinking of the distribution of null connections (Tibshirani 1996) and thus enables better separation between the components. However, the choice of the penalty scaling constant α is arbitrary and so we again availed ourselves of a cross-validation procedure to determine the optimal value for our dataset.

The results of the reconstruction for a subset of the recorded neurons with different values of α on the training dataset are shown in the left panel of Fig. 7. The right panel depicts the subsequent evaluation of the log-likelihood function on the validation dataset. It is important to note that, for optimal results, this procedure should generally be performed for all neurons, and an individual regularization coefficient should be selected for each of the cells. Instead, in order to save computational resources, we only performed it for a subpopulation of neurons and subsequently selected the same value of α=10 for all cells, which is slightly lower than the average, to prevent excessive connection pruning in neurons with small optimal α.

Fig. 7.

Cross-validation for the ℓ 1 regularization coefficient α for fixed values of τ=10 ms and d=1.7 ms. Each panel shows N s=75 parameter scans for N e=60 excitatory and N i=15 inhibitory neurons randomly selected from the complete recording of N=1000 neurons. a The values of the rescaled log-likelihood as a function of the ℓ 1 regularization coefficient α computed on the training dataset. b Log-likelihood as a function of α computed on the validation dataset using the parameters estimated from the training dataset. The red star marks the average of the horizontal location of the peaks of all curves in the plot

We performed a full ℓ 1-regularized GLM estimation using α=10, still fixing the parameters to τ=10 ms and d=1.7 ms, the results of which are presented in Fig. 6, right panel and Table 5, right part. The plot shows that the contribution by null connections indeed shrank significantly, and thus the number of classification errors was almost halved. At the same time, for some neurons α=10 turned out to be too strong a regularization factor, and thus the estimator, in an overzealous attempt to find a sparse solution, set to zero some of the weaker excitatory and inhibitory synapse weights. This can be seen as a secondary peak of the red distribution at the origin. A secondary peak of the blue distribution is also present, but scarcely visible due to scale.

Synfire chain embedded in a random balanced network

Construction of the network model

In this experiment, we turned to structured networks in order to highlight the generative aspects of the proposed GLM model and demonstrate a potential approach to the interpretation of the recovered connectivity. One specific structure of interest, prominent in the context of cortical networks, is called a “synfire chain” (Abeles 1982). The synfire chain, consisting of consecutively linked and synchronously activated groups of neurons, is a thoroughly studied model of signal propagation in the cortex (Diesmann et al. 1999; Goedeke and Diesmann 2008).

We built a simulation of a random balanced network with an embedded synfire chain, simulated the dynamics of this network and recorded its spiking activity, which we then used as input data for the MLE procedure to infer the parameters of our GLM (no regularization was applied in this experiment, unlike in the last case presented in Section 3.3). However, as would be the case with experimental recordings, we did not assume that we knew the “right” ordering of the neuron identifiers. We therefore subjected the recovered connectivity to a clustering process in order to reveal the trace of the synfire chain in the connection matrix. After identifying the synfire chain in the network, we performed a simulation where we stimulated the discovered first “link” of the chain in the original and reconstructed networks, and observed identical dynamics in both cases.

Similarly to the previous experiments, we first constructed a random balanced network of LIF neurons (N=1000) with an 80 % : 20 % proportion of excitatory to inhibitory cells. This time, we used the “iaf_psc_delta_canon” model in NEST nomenclature; this model differs from the standard “iaf_psc_delta” and “iaf_psc_alpha” LIF neurons in that the points in time when it emits spikes are not tied to the grid defined by the simulation resolution, but rather are recorded precisely as they occur (Morrison et al. 2007; Hanuschkin et al. 2010). Correspondingly, for the external inputs, we employed the continuous time version of the Poisson generator “poisson_generator_ps”. Since this network model works in continuous time and does not require discretization or binning of the spike data, we wanted to examine the implications of feeding the precise spike times to the MLE of the GLM, as opposed to data binned to the Δt=0.1 ms simulation resolution as in the previous experiments. The model parameters were fixed to τ m=20 ms, τ r=2 ms, 𝜃=20 mV, and V r=0 mV. Each neuron was set to receive a fixed number of incoming connections (M e=80 excitatory and M i=20 inhibitory), where the pre-synaptic neurons were randomly selected (without replacement) from the excitatory and inhibitory populations respectively (implemented as the “RandomConvergentConnect” function in NEST). Synaptic weights were set to J e=0.9 mV for excitatory and J i=−4.5 mV for inhibitory connections, with a transmission delay of d=1.5 ms. Additional independent Poisson process excitatory inputs were supplied at and inhibitory inputs at .
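The fixed in-degree wiring of the background network can be sketched in plain NumPy as follows. This is an illustration of the connection rule only, not the NEST code used for the simulations; the function name and the matrix convention `W[post, pre]` are assumptions made for this example, and self-connections are not excluded here.

```python
import numpy as np

def build_background(n_exc=800, n_inh=200, m_exc=80, m_inh=20,
                     j_exc=0.9, j_inh=-4.5, seed=0):
    """Return W[post, pre] with a fixed number of inputs per neuron.

    Presynaptic partners are drawn without replacement, separately from the
    excitatory (indices 0..n_exc-1) and inhibitory (remaining) populations.
    """
    rng = np.random.default_rng(seed)
    n = n_exc + n_inh
    exc_ids = np.arange(n_exc)
    inh_ids = np.arange(n_exc, n)
    W = np.zeros((n, n))
    for post in range(n):
        W[post, rng.choice(exc_ids, size=m_exc, replace=False)] = j_exc
        W[post, rng.choice(inh_ids, size=m_inh, replace=False)] = j_inh
    return W

W = build_background()
print("inputs per neuron:", np.count_nonzero(W, axis=1)[:5])
```

With this rule every row of the matrix contains exactly M e + M i non-zero entries, whereas the number of outgoing connections per neuron (non-zero entries per column) varies randomly.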

On top of this “background” network, we selected N l=10 groups (links) of neurons each ( excitatory and inhibitory cells) and connected all excitatory neurons of every group to each of the neurons in the next group with excitatory synapses (transmission delay d=1.5 ms). Inhibitory neurons in a link of the chain do not have specific connections to the next link in the chain (Hayon et al. 2004). No neuron in the network was part of more than one group of the synfire chain. This way, we created a “hidden” embedded synfire chain, which receives inputs from the background random network and likewise projects outgoing connections to the background network. When the first group of this structure is stimulated in a coordinated fashion, the chain reliably propagates the excitation from one group to the next until it reaches the last one, and terminates. In the absence of such coordinated stimulation, the synfire chain did not activate, and only “background” activity was observed.

Identification of the synfire chain by connectivity clustering

The complete network was simulated for T=2 hours of biological time and exhibited an average firing rate of ν=1.4 s−1. The synfire chain was not stimulated during the simulation, so the spike train recordings contained no instances of propagating synfire activity. The neuron identifiers were randomly shuffled and the resulting spike raster was fed into the MLE reconstruction procedure.

We reasoned that one of the most generic differentiators between the neurons that belong to various groups (inhibitory neurons and excitatory neurons that are, or are not, part of the synfire chain) is the relative strength of their synapses (both incoming and outgoing connections can be considered). Therefore, we can apply a clustering algorithm to the recovered connectivity matrix to discern between several classes of neurons. However, most algorithms (such as k-means or GMM, employed in the previous sections) require the desired number of clusters to be set explicitly, either through prior knowledge, or by applying statistical or information theory methods to the data to obtain an estimate.

To circumvent this problem, we used an unsupervised learning technique known as hierarchical clustering. It amounts to iteratively repeating the procedure of looking at the discovered clusters (which, in the first step, each contain a single element), determining the ones that are most similar according to a chosen metric, and merging them into an agglomerate cluster; the process continues until a single cluster remains. The results are visualized by constructing a so-called “dendrogram”, which shows the discovered hierarchy of clusters as a tree structure. Therefore, it is not necessary to specify the number of clusters in advance, but rather the most appropriate set of clusters can be selected by analyzing the dendrogram after performing the clustering. This approach is well suited to an exploratory setting, where one might wish to appreciate the entirety of possible groupings in a compact graphical form and then choose the one that best highlights the particular aspect of interest of the data.

We applied hierarchical clustering to the connectivity matrix using Ward’s minimum variance method (Ward 1963) as a criterion for choosing the pair of clusters to merge at each step. Ward’s minimum variance criterion minimizes the total within-cluster variance and enables the grouping of items into sets such that they are maximally similar to each other according to some definition of similarity, which is usually expressed in the form of a “dissimilarity matrix”. We used the SciPy hierarchical clustering package (scipy.cluster.hierarchy) to obtain the linkage and visualize the results.
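A minimal sketch of this step with `scipy.cluster.hierarchy` is given below. It operates on a small toy matrix rather than on the reconstructed connectivity: the network contains excitatory neurons only, and the number of links, the link size, the number of background neurons and the connection probability are hypothetical values chosen to keep the example fast. Each neuron is described by its vector of outgoing weights, i.e. one column of the matrix.

```python
import numpy as np
from scipy.cluster.hierarchy import linkage, fcluster, leaves_list

def toy_chain_matrix(n_links=4, link_size=20, n_background=120,
                     p=0.05, weight=0.9, seed=0):
    """Toy W[post, pre] with an embedded chain and shuffled neuron identifiers.

    Returns the matrix and the true group of every neuron
    (0..n_links-1 for chain links, n_links for background neurons).
    """
    rng = np.random.default_rng(seed)
    n = n_links * link_size + n_background
    W = weight * (rng.random((n, n)) < p)          # sparse random background
    group = np.full(n, n_links)
    for k in range(n_links):
        group[k * link_size:(k + 1) * link_size] = k
    for k in range(n_links - 1):
        pre = np.flatnonzero(group == k)
        post = np.flatnonzero(group == k + 1)
        W[np.ix_(post, pre)] = weight              # link k projects to all of link k+1
    perm = rng.permutation(n)                      # hide the original ordering
    return W[np.ix_(perm, perm)], group[perm]

n_links = 4
W, group = toy_chain_matrix(n_links=n_links)

# Observations are columns of W (outgoing weights); Ward's criterion on
# Euclidean distances decides which pair of clusters is merged at each step.
Z = linkage(W.T, method="ward")

# The last link has no distinctive outgoing weights, so n_links - 1 link
# clusters plus one residual cluster are expected.
labels = fcluster(Z, t=n_links, criterion="maxclust")

# Reorder rows and columns by the dendrogram leaves to expose the blocks.
order = leaves_list(Z)
W_sorted = W[np.ix_(order, order)]
print("cluster sizes:", np.bincount(labels)[1:])
```

The number of clusters passed to `fcluster` is chosen here from knowledge of the toy construction; in an exploratory setting it would be read off the dendrogram instead.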

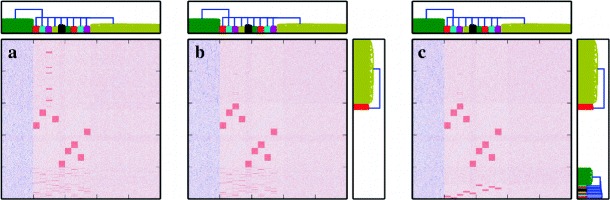

Initially, we grouped the neurons by using the outgoing synaptic weights as the measure of dissimilarity, as shown for the MLE-reconstructed connectivity in Fig. 8a. This clustering enabled us to tell excitatory and inhibitory neurons apart (smaller blueish group on the left, and larger reddish group on the right of the matrix). Additionally, in this figure, we can see eight big red squares, which represent the links of the synfire chain. In total, nine squares should be visible in the connectivity matrix for N l=10 links; one is missing because the outgoing connections of the last link are not statistically different from those of the background neurons.

Fig. 8.

Clustering of synaptic weights uncovers the synfire chain in the reconstructed connectivity matrix. a Connectivity is first clustered by the outgoing connections (columns), while trying to achieve minimal variability inside each group. The dendrogram at the top of the panel shows the hierarchy of the clusters with the relevant groups highlighted in different colors. The green cluster on the left is formed by the inhibitory neurons. The yellow cluster at the right consists of excitatory neurons that are not part of the synfire chain and so do not have strong outgoing connections. The clusters in the middle correspond to the links of the synfire chain, which coalesce as red squares in the matrix. b Clustering by incoming connections (rows) inside the yellow cluster of neurons reveals the last link of the chain. c Clustering by incoming connections inside the green cluster helps to identify the inhibitory neurons that are part of the synfire chain (thin red rectangles)

The square missing from Fig. 8a is the last link of the chain, which by construction cannot be detected via clustering by the outgoing connections. Therefore, we subjected the neurons that are part of the big yellow cluster (excitatory neurons, which have not been previously identified as taking part in any of the synfire chain links) to additional clustering by incoming connections. This operation reveals the formerly concealed last link of the chain (Fig. 8b). Finally, we applied the same procedure to the inhibitory neurons in the big green cluster. This reveals the inhibitory neurons that are part of the synfire chain. These neurons receive connections from the previous link in the chain but do not send outgoing projections to the next links, and so they are also impossible to detect by clustering only by outgoing connections. This step completes the clustering procedure and we arrive at the final result as shown in Fig. 8c.
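The two-stage procedure can be sketched on a toy matrix as follows. As a simplification, the example contains excitatory neurons only, so it covers the recovery of the last link but not the inhibitory step; all sizes and the connection probability are hypothetical. The first stage clusters by outgoing weights (columns); the second stage clusters only the residual group by incoming weights (rows).

```python
import numpy as np
from scipy.cluster.hierarchy import linkage, fcluster

# Hypothetical toy network: 4 links of 20 neurons plus 120 background neurons.
rng = np.random.default_rng(0)
n_links, link_size, n_background = 4, 20, 120
n = n_links * link_size + n_background
group = np.repeat(np.arange(n_links + 1), [link_size] * n_links + [n_background])
W = 0.9 * (rng.random((n, n)) < 0.05)              # W[post, pre]
for k in range(n_links - 1):
    post = np.flatnonzero(group == k + 1)
    pre = np.flatnonzero(group == k)
    W[np.ix_(post, pre)] = 0.9                     # all-to-all between consecutive links
perm = rng.permutation(n)                          # shuffle neuron identifiers
W, group = W[np.ix_(perm, perm)], group[perm]

# Stage 1: cluster by outgoing weights. The largest cluster holds everything
# without distinctive outputs, i.e. background neurons and the last link.
out_labels = fcluster(linkage(W.T, method="ward"), t=n_links, criterion="maxclust")
residual = np.flatnonzero(out_labels == np.bincount(out_labels).argmax())

# Stage 2: inside the residual cluster, cluster by incoming weights. The last
# link stands out through the strong input it receives from the previous link.
in_labels = fcluster(linkage(W[residual, :], method="ward"), t=2, criterion="maxclust")
smaller = 1 if np.sum(in_labels == 1) < np.sum(in_labels == 2) else 2
last_link = residual[in_labels == smaller]
print("recovered last link size:", last_link.size)
```

Restricting the second stage to the residual cluster keeps the already identified links out of the comparison, so the strong input from the preceding link is the dominant difference between the remaining rows.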

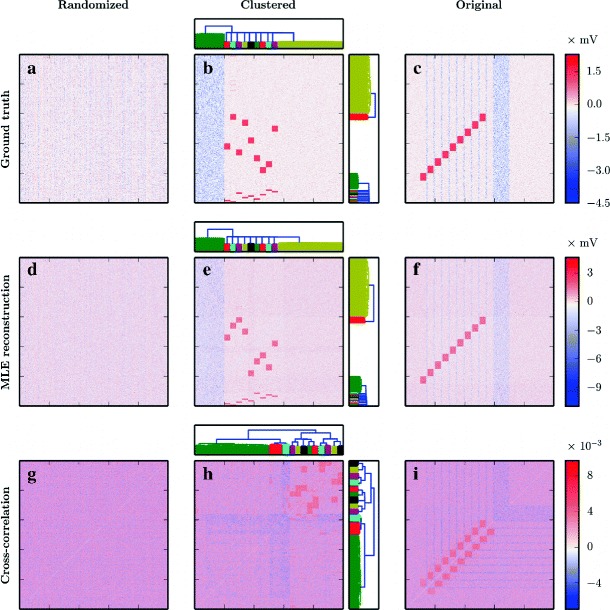

In Fig. 9, the clustered matrices (middle column) are contrasted with the matrices in randomized (left column) and original ordering (right column), i.e. the initial indexing of neurons that we used to define the neuron groups of the synfire chain network. An identical clustering procedure was applied to the ground truth connectivity matrix (Fig. 9a–c) and the one obtained by MLE from the recorded spike trains (Fig. 9d–f). Note that, as explained at the end of Section 3.2, the reconstructed values of the synaptic weights in the second row cannot be directly compared to the original synaptic strengths.

Fig. 9.

Identification of an embedded synfire chain by clustering connectivity estimated from “background” spiking activity. The grouping by rows delineates the panels produced on the basis of the ground truth connectivity, connections estimated using the GLM model, and lagged cross-correlation data. The grouping by columns lays out the panels presenting the connectivity matrices where the order of the neuron identifiers has been randomized, recovered by clustering and defined by the sequence in which the neurons were originally wired up. a, d, g Ground truth and MLE reconstructed synaptic weights, as well as lagged cross-correlation coefficient matrices for randomized neuron identifier order. c, f The red rectangles correspond to the connections from one chain link to the next. Thin blue bands identify inhibitory neurons that belong to the synfire chain. The wide blue band corresponds to the inhibitory neurons that are not part of the chain. b, e The interpretation of the bigger red rectangles and the wide blue band is the same as above, except that all inhibitory neurons are now grouped together. The thin red rectangles at the bottom correspond to the groups of inhibitory neurons in the synfire chain receiving incoming connections from all excitatory neurons in the previous link. The clustering process that produced the reordering and the dendrograms is illustrated in steps in Fig. 8. g, h, i The lagged cross-correlation matrix is symmetric by construction. Therefore, the dendrograms at the top and on the right of panel h are identical (unlike b, e). Diagonal entries (all 1) were excluded here. h, i The red rectangles correspond to groups of neurons that exhibit positively correlated firing activity, while inhibitory neurons display negative correlation, marked by the blue bands

The synfire chain is not apparent in the connectivity matrix in randomized ordering, neither for the ground truth matrix (Fig. 9a) nor for the MLE-estimated connectivity (Fig. 9d). However, clustering neurons by the similarity of incoming and outgoing connection weights reveals the synfire chain substructure (Fig. 9b, e) of both excitatory and inhibitory neurons. This shows that our clustering procedure successfully recovers the group structure of the synfire chain network. Note that in the original ordering (Fig. 9f), the reconstructed matrix also resembles the ground truth matrix to a great extent (Fig. 9c), as expected from our previous reconstruction experiments.

Comparison to correlation-based connectivity estimation