Abstract

The present study recorded event-related potentials using a rapid serial visual presentation (RSVP) paradigm to explore the time course of emotional picture processing. Participants completed a dual-target task as quickly and accurately as possible, in which they judged the gender of the person depicted (task 1) and the valence (positive, neutral, or negative) of a given picture (task 2). The amplitudes of the P2 component were larger for emotional than for neutral pictures, reflecting brain processes that distinguish emotional from non-emotional stimuli. Furthermore, positive, neutral, and negative pictures elicited late positive potentials with different amplitudes, implying that the brain discriminates among the three emotion categories. Additionally, the time course of emotional picture processing was consistent with the latter two stages of a three-stage model derived from studies on emotional facial expression processing and emotional adjective processing. These results indicate that, in the three-stage model of emotion processing, the middle and late stages are more universal and stable, and thus occur at similar time points across different stimuli (faces, words, or scenes).

Keywords: emotional pictures, event-related potentials, rapid serial visual presentation, P2, late positive potential

Introduction

Rapid responses to emotionally arousing stimuli, especially potentially biologically relevant stimuli such as snakes, tigers, or pictures of accidents, and particularly when attentional resources are limited, are believed to be evolutionarily significant for humans (Schupp et al., 2006; Vuilleumier and Pourtois, 2007). Understanding the temporal characteristics of rapid emotion processing can shed light on how emotions are recognized quickly enough to allow appropriate responses. Many studies have explored the time course of emotion processing, using different stimuli, such as emotional words, facial expressions, and sounds, to induce different emotions. Given its high temporal resolution, the event-related potential (ERP) technique has been widely adopted in these studies.

Recently, researchers have begun to investigate the timing of emotional facial expression processing. For example, in an ERP study by Utama et al. (2009) that used emotional facial images as stimuli and required participants to identify the type of emotion and rate the emotional intensity of each image, the P1 component was significantly correlated with correct recognition of the facial images, while the N170 was significantly correlated with the intensity ratings. The authors therefore concluded that facial emotion processing comprises two stages: (1) rapid recognition of facial emotions, which occurs as early as 100 ms after image onset, and (2) detailed processing, such as intensity assessment, which occurs around 170 ms post-stimulus.

Although the above-mentioned two-stage model revealed the time course of emotional facial processing to some extent, it seems too coarse to clarify the temporal characteristics of processing after the first 100 ms following image onset. To address this problem, Luo et al. (2010) used the rapid serial visual presentation (RSVP) paradigm with emotional facial pictures from the Chinese Facial Affective Picture System (CFAPS) as stimuli and proposed a three-stage model of facial expression processing. In this model, the brain distinguishes negative facial expressions from positive and neutral facial expressions in the first stage, which explains why the posterior P1 and anterior N1 amplitudes elicited by fearful faces are larger than those elicited by neutral and happy faces. In the second stage, the brain distinguishes emotional from non-emotional facial expressions, which explains why the N170 and vertex positive potential (VPP) amplitudes elicited by fearful and happy faces are larger than those elicited by neutral faces. In the third stage, the brain classifies the different types of facial expressions, which explains why the P3 and N3 amplitudes elicited by fearful, happy, and neutral faces differ from one another. This three-stage model may help us understand the time course of emotional facial expression processing. Below, we introduce several representative ERP components involved in emotion processing.

Two representative ERP components, P1 and N1, are central to the early processing of emotional facial expressions. Both components are indicators of early processing (Brown et al., 2012) and represent comparatively automatic mechanisms of selective attention (Dennis et al., 2009). P1 is a positive-going potential that peaks around 80–130 ms after stimulus onset and is presumed to index early visual processing (Jessen and Grossmann, 2014). It reaches its maximal amplitude over occipital areas during emotional word and facial expression processing (Van Hooff et al., 2008; Cunningham et al., 2012). Moreover, P1 is related to selective attention to emotional stimuli; i.e., the P1 amplitudes elicited by attended stimuli were higher than those elicited by unattended stimuli (Dennis et al., 2009). Additionally, negative emotional pictures and words elicited larger P1 amplitudes than did positive ones (Bernat et al., 2001; Smith et al., 2003; Delplanque et al., 2004). N1, a negative-going potential that appears shortly after P1, is sensitive to the characteristics of facial expressions; for example, Eimer and Holmes (2002) found that fearful faces induced a shorter N1 latency than did neutral faces.

Another important component is P2. P2 is an attention-related component with a typical peak latency of about 200–250 ms (Ferreira-Santos et al., 2012), which reflects the detection of visual features during the perceptual stage of processing (Luck and Hillyard, 1994; Carretié and Iglesias, 1995). Moreover, P2 is regarded as indexing some aspects of the stimulus categorization process (García-Larrea et al., 1992). Previous ERP studies on the relationship between stimulus valence and P2 amplitude present conflicting results. On the one hand, using both emotional pictures (Carretié et al., 2004) and emotional words (Herbert et al., 2006; Kanske and Kotz, 2007) as stimuli, researchers found that emotional stimuli elicited significantly larger P2 amplitudes than did neutral stimuli. On the other hand, Yuan et al. (2007) reported that the P2 amplitudes elicited by emotional and neutral stimuli did not differ significantly. At the same time, some researchers found that the P2 amplitudes elicited by negative stimuli were significantly greater than those elicited by positive stimuli (Carretié et al., 2001; Delplanque et al., 2004; Huang and Luo, 2006; Wang and Bastiaansen, 2014), while others reached the opposite conclusion (Schapkin et al., 1999). Thus, the exact role of the P2 component in emotion processing remains unclear.

The late positive potential (LPP, also known as the late positive component, LPC) is a slow positive component elicited by both emotional (positive and negative) and neutral stimuli, and can be used as an indicator of sustained attention to a motivationally significant stimulus (Jaworska et al., 2011; Weinberg and Hajcak, 2011; Leite et al., 2012; Gable and Adams, 2013; Novosel et al., 2014). Numerous studies (Dennis and Hajcak, 2009; Hajcak et al., 2009, 2013; Kessel et al., 2013; Smith et al., 2013; Yuan et al., 2014) have shown that LPP amplitude is sensitive to stimulus valence, i.e., emotional stimuli (positive and negative) elicit larger LPP amplitudes than do neutral stimuli. However, researchers have not yet agreed on whether positive and negative stimuli differentially affect LPP amplitude. Using emotional pictures from the International Affective Picture System (IAPS) as stimuli and controlling for the arousal dimension of the pictures, Schupp et al. (2004) and Codispoti et al. (2006) found that the LPP amplitudes elicited by positively and negatively valenced pictures did not differ significantly in healthy subjects. In contrast, Huang and Luo (2009) and Weinberg and Hajcak (2010) demonstrated that unpleasant stimuli elicited larger LPP amplitudes than did pleasant stimuli. Hence, more research is needed to resolve these inconsistencies.

Besides conveying emotional information through facial expressions, humans convey emotional information through writing and speech, which has led researchers to explore the time course of emotional word processing. The findings obtained with emotional words were quite similar to those obtained with faces, although some differences were also observed. Nevertheless, our later studies indicate that, in general, the three-stage model still holds. For example, in one of our recent studies (Zhang et al., 2014), we used emotional Chinese adjectives (selected from the Chinese Affective Word System, CAWS) and the same paradigm (RSVP) as in our previous study (Luo et al., 2010). We found that the processing of emotional adjectives seemed to occur in the same three stages as the processing of emotional facial expressions. Specifically, in the first stage, the brain distinguished negative adjectives from non-negative adjectives. Next, the brain distinguished emotional from non-emotional adjectives. Lastly, negative, neutral, and positive adjectives were separated. Soon after, in a separate study, we investigated the time course of emotional noun processing and found that the processing of emotional nouns (from the CAWS) also occurred in three stages (Yi et al., 2015). However, we did not find the negativity bias for emotional nouns that is usually found for emotional facial expressions and adjectives; i.e., the brain did not distinguish negative nouns from positive and neutral nouns in the first stage. The remaining two stages of emotional noun processing resembled those of emotional adjective processing: the brain distinguished emotional from non-emotional nouns in the middle stage and classified the three types of nouns (negative, positive, and neutral) in the last stage.
These major findings for the time courses of emotional facial expression and word processing are summarized in Table 1.

Table 1.

Summary of findings.

| Stimuli/ERP/Time | First stage | Second stage | Third stage |
|---|---|---|---|
| Facial expression | P1, N1: R > L; neg > neu, pos (ns) | N170: R > L; VPP: M > R, L; neg, pos (ns) > neu | N300: R > M, L; P300: M, R > L; neg > pos > neu |
| Emotional adjective | P1: L, R > M; left: neg > neu, pos (ns) | N170, EPN: L: neg, pos (ns) > neu; R: neg, pos vs. neu (ns) | LPC: M > R, L vs. R, M (ns); pos > neg > neu |
| Emotional noun | P1: pos, neu vs. neg (ns); pos vs. neu (ns) | N170: L > R; L: pos, neg > neu; R: pos, neg vs. neu (ns) | LPC: M > R, L vs. R, M (ns); neg > neu > pos |

Comparisons of the time courses of emotional facial expression and word processing based on representative ERP components. neg, negative; neu, neutral; pos, positive; ns, no significant difference; R, L, and M, right hemisphere, left hemisphere, and midline.

The existence of three temporal processing stages for emotional facial expression and word processing is readily demonstrated. However, there are also processing differences between the two stimulus types. First, negative expressions and adjectives elicited larger right (Luo et al., 2010) and left (Zhang et al., 2014) N1 amplitudes, respectively, than did positive and neutral expressions and adjectives; however, the N1 amplitudes elicited by negative, neutral, and positive nouns did not differ significantly (Yi et al., 2015). Second, although the N170 effect was found for all three stimulus types, the right hemisphere appears to be superior for processing emotional facial expressions, whereas the left hemisphere appears to be superior for processing words (both emotional adjectives and nouns). These discrepancies may be explained by the different types of stimuli (facial expressions versus words) adopted in these experiments. Indeed, the processing differences between these two common emotion-inducing materials [words and pictures (facial expressions)] have been widely discussed in recent years. First, in terms of form, words transmit affective information on a symbolic level, while facial expressions convey emotional information through biological cues (Zhang et al., 2014). The latter route is more direct, which may contribute to the processing differences between the two stimulus types. Next, some researchers (Seifert, 1997; Azizian et al., 2006) reported a processing advantage for pictures over words, i.e., pictures have quicker and simpler access to associations. Since words convey symbolic information, it is reasonable to suspect that word processing requires extra translation at a surface level before the semantic system is accessed. Additionally, different brain regions are activated when processing pictures and words.
A positron emission tomography (PET) experiment (Grady et al., 1998) showed greater activity in the bilateral visual pathway and medial temporal cortices during picture processing. In contrast, strong activation was observed in prefrontal and temporoparietal regions during word processing.

As mentioned above, the processing of both emotional facial expressions and words has been shown to occur in three different stages; however, what is the time course of processing emotional pictures that do not depict faces (e.g., scenes)? Considering the potential similarities between processing emotional facial expressions and pictures (for example, both types of stimuli can transmit emotional information more intuitively than words), we postulated that the processing of emotional pictures would also show distinct stages. The present study, adopting the RSVP paradigm and using both neutral faces and scenes as stimuli, aimed to explore the temporal characteristics of emotional picture processing under conditions of limited attentional resources. It is reasonable to speculate that the processing of emotional pictures will show temporal characteristics similar to those of facial expression processing.

Early ERP components are sensitive to non-emotional perceptual features of the stimuli (De Cesarei and Codispoti, 2006; Olofsson et al., 2008). In the earliest processing stages, physical properties of a stimulus such as its color (Cano et al., 2009) and complexity (the latter producing a very early effect, i.e., 150 ms after stimulus onset; Olofsson et al., 2008) have been found to influence affective waveforms. Given these factors, early-stage processing of emotional pictures is unlikely to reflect the emotional information conveyed by the pictures in isolation; therefore, even if a P1 main effect appeared, it would not be possible to determine whether it was caused by physical attributes or by emotion. For this reason, the early processing of emotional pictures was not analyzed in the present study. We hypothesized that participants would distinguish emotional from non-emotional pictures in the middle stage of emotional picture processing and would distinguish among the different valences in the late stage.

Materials and Methods

Subjects

Eighteen healthy undergraduates (nine men) from Chongqing University of Arts and Sciences were tested. All participants were right-handed, reported normal or corrected-to-normal visual acuity, and had no history of mental illness or brain disease. Participants received a small payment for participation. The study was approved by the Ethics Committee of Chongqing University of Arts and Sciences, and all participants provided written informed consent.

Stimuli

Materials consisted of four neutral face pictures, 18 emotional pictures, and 12 scrambled pictures (SPs), described in detail below. Four grayscale photographs of four different identities (two female) showing neutral expressions were selected from the CFAPS, and eighteen emotional pictures conveying positive, neutral, or negative emotions (six neutral, six happy, and six fearful pictures) were selected from the Chinese Affective Picture System (CAPS). The emotional pictures did not depict faces; they included landscapes, animals, and everyday-life scenes. SPs, made by randomly swapping small parts (18 × 18 pixels) of the same neutral pictures, were used as distractor stimuli. The scrambled images had the same rectangular shape, size, luminance, and spatial frequency as the face pictures and emotional pictures and served as masks. The visual angle was 5.6 × 4.2°. To control for the influence of arousal on the processing of emotional pictures, the arousal of all images was rated on a 9-point scale before the formal experiment. The arousal of the emotional pictures (M ± SD, positive: 5.61 ± 0.43; negative: 5.97 ± 0.59) was higher than that of the neutral pictures (M ± SD, 3.76 ± 0.36), F(2,15) = 8.40, p < 0.001, η2 = 0.836, and did not differ between positive and negative pictures (p = 0.607). The three picture categories also differed significantly in valence, F(2,15) = 434.49, p < 0.001, η2 = 0.983 (M ± SD, positive: 7.61 ± 0.15; neutral: 4.81 ± 0.23; negative: 2.53 ± 0.44).

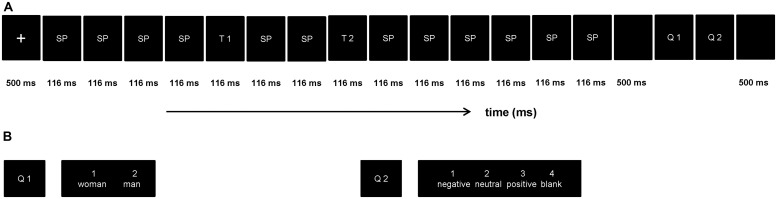

Procedure

To restrict attentional resources, the procedure of the present ERP study was based on the RSVP paradigm. The experiment comprised four blocks of 120 trials each, for a total of 480 trials. As shown in Figure 1, each trial consisted of a stream of fourteen pictures, including two target stimuli, presented with a stimulus-onset asynchrony (SOA) of 116 ms and no blank inter-stimulus interval (ISI). T1 appeared randomly and equiprobably at the fifth, sixth, or seventh position; it was followed by two distractor stimuli, then by T2 (T1–T2 SOA = 348 ms), and then by the remaining distractors in succession. All stimuli were presented at the center of the screen. The first target (T1) was one of the four face pictures (two female), and participants judged the gender of the person shown, pressing Key "1" for a woman and Key "2" for a man. The second target (T2) was one of the 18 emotional pictures, and participants judged its valence, pressing Key "1" if T2 was negative, Key "2" if neutral, Key "3" if positive, and Key "4" if T2 was blank. Each question disappeared as soon as participants pressed a response key, and there was no time limit for responding. All participants responded to both questions with their right hand. Stimulus presentation was controlled by E-Prime 1.1 software (Psychology Software Tools Inc., Pittsburgh, PA, USA).
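The stream timing described above can be sketched in Python. This is a minimal illustration, not the E-Prime script used in the study: the function and constant names are hypothetical, and it assumes 14 items per stream with every non-target position filled by a scrambled-picture distractor (the Figure 1 caption lists 10 scrambled pictures, so the exact distractor count is an assumption). Because there is no blank ISI, each onset is simply the serial position times the 116-ms SOA (roughly seven frames at the 60-Hz refresh rate), so placing T2 three positions after T1 yields the 348-ms T1–T2 SOA.

```python
import random

SOA_MS = 116              # onset-to-onset interval between consecutive pictures
N_ITEMS = 14              # pictures per RSVP stream (assumed, see lead-in)
T1_POSITIONS = (5, 6, 7)  # 1-indexed serial positions allowed for T1
T2_LAG = 3                # T2 follows T1 after two distractors


def build_trial(rng: random.Random) -> list[tuple[int, str, int]]:
    """Return (position, label, onset_ms) for one hypothetical RSVP stream."""
    t1_pos = rng.choice(T1_POSITIONS)  # equiprobable choice among 5, 6, 7
    t2_pos = t1_pos + T2_LAG
    stream = []
    for pos in range(1, N_ITEMS + 1):
        if pos == t1_pos:
            label = "T1"  # face picture: gender judgment
        elif pos == t2_pos:
            label = "T2"  # emotional picture: valence judgment
        else:
            label = "SP"  # scrambled-picture distractor
        # No blank ISI, so each onset is simply (position - 1) * SOA.
        stream.append((pos, label, (pos - 1) * SOA_MS))
    return stream


if __name__ == "__main__":
    stream = build_trial(random.Random(1))
    onsets = {label: onset for _, label, onset in stream if label != "SP"}
    print(stream)
    print("T1-T2 SOA (ms):", onsets["T2"] - onsets["T1"])
    # Gap between T1 offset and T2 onset, filled by the two distractors.
    print("Distractor interval (ms):", onsets["T2"] - onsets["T1"] - SOA_MS)
```

Whichever T1 position is drawn, the last two lines print 348 and 232 ms, the T1–T2 SOA and the distractor-filled interval between the targets.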

FIGURE 1.

The rapid serial visual presentation (RSVP) paradigm used in this experiment. (A) Each trial contained 10 scrambled pictures (SP), two target stimuli (T1 and T2), and two questions (Q1 and Q2). T1 appeared randomly and equiprobably at the fifth, sixth, or seventh position; T1 and T2 were separated by an interval of 232 ms. (B) Example of the two questions in each trial.

This study was conducted in a sound-attenuated room; participants sat in front of a 17″ computer screen (refresh rate 60 Hz) at a distance of 90 cm. To ensure that participants fully understood the procedure, they completed 20 practice trials before the formal experiment. Before each trial, a white fixation point was presented at the center of the screen for 500 ms. After each trial, participants were asked to complete both task 1 and task 2 as accurately as possible; they were told to respond to task 2 as accurately as possible after first responding accurately to task 1. The two questions appeared serially, in a fixed order, at the end of each trial. The next trial began after a 500-ms interval during which the screen remained black and blank. No feedback was provided. To avoid fatigue, all participants took a mandatory 2-min rest after each block.

Data Recording and Analysis

Brain electrical activity was recorded from 64 scalp sites using tin electrodes mounted on an elastic cap (Brain Products, Munich, Germany), referenced to the left mastoid. Horizontal electrooculograms (EOGs) were recorded from two electrodes at the outer canthi of the eyes, and vertical EOGs were recorded from tin electrodes placed 1 cm above and below the right eye. All interelectrode impedances were kept below 5 kΩ. The EOG and electroencephalogram (EEG) were recorded with a bandpass of 0.01–100 Hz, sampled at 500 Hz, and re-referenced offline to the global average (Bentin et al., 1996). The EEG data were corrected for eye movements using the method proposed by Gratton et al. (1983), as implemented in the Brain Vision Analysis software (Version 2.0; Brain Products, Gilching, Germany). Horizontal and vertical EOGs were used to detect eye movement artifacts. Trials with EOG artifacts (mean EOG voltage exceeding ±80 μV) and other artifacts (peak-to-peak deflection exceeding ±80 μV) were excluded from averaging. The averages were then digitally low-pass filtered at 30 Hz (24 dB/octave).
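The offline steps above can be illustrated with a minimal NumPy sketch of average re-referencing, epoching with a 200-ms pre-stimulus baseline (the 1200-ms epoch used for the ERPs), and threshold-based rejection. It is not the Brain Vision pipeline: the Gratton ocular correction and the 30-Hz digital filter are omitted, the function names are hypothetical, and the ±80 μV criterion is applied here, as an assumption, to the absolute baseline-corrected amplitude on any channel.

```python
import numpy as np

FS = 500                     # sampling rate (Hz)
PRE_MS, POST_MS = 200, 1000  # -200 to 1000 ms epoch (1200 ms in total)
THRESHOLD_UV = 80.0          # rejection threshold in microvolts (assumed rule)


def average_reference(data: np.ndarray) -> np.ndarray:
    """Re-reference (channels x samples) data to the mean of all channels."""
    return data - data.mean(axis=0, keepdims=True)


def epoch_and_reject(data: np.ndarray, onsets: list[int]) -> np.ndarray:
    """Cut baseline-corrected epochs around stimulus onsets (sample indices).

    Epochs that run past the recording, or in which any channel exceeds
    +/-THRESHOLD_UV after baseline correction, are dropped.
    Returns an array of shape (n_kept, n_channels, n_samples).
    """
    pre = PRE_MS * FS // 1000    # 100 samples of baseline
    post = POST_MS * FS // 1000  # 500 samples after onset
    kept = []
    for onset in onsets:
        if onset - pre < 0 or onset + post > data.shape[1]:
            continue  # epoch would extend beyond the recording
        epoch = data[:, onset - pre:onset + post]
        # Subtract each channel's mean over the 200-ms pre-stimulus window.
        epoch = epoch - epoch[:, :pre].mean(axis=1, keepdims=True)
        if np.abs(epoch).max() > THRESHOLD_UV:
            continue  # treat as artifact-contaminated
        kept.append(epoch)
    return np.array(kept).reshape(len(kept), data.shape[0], pre + post)
```

Applying `average_reference` to the continuous data before `epoch_and_reject` mirrors the order described in the text; surviving epochs would then be averaged per condition.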

The averaged ERP epoch was 1200 ms long, including a 200-ms pre-stimulus baseline. Trials were accepted only if participants responded correctly to both T1 and T2. EEG epochs for positive, neutral, and negative pictures were averaged separately for each condition. On the basis of the hypothesis and the topographical distribution of the grand-averaged ERP activity, P2 and LPP were chosen for statistical analysis in the present study. The P2 component (150–280 ms) was analyzed at nine electrode sites (Fz, F3, F4, FCz, FC3, FC4, Cz, C3, C4). For each of these sites, the peak latency was detected on the grand average across the three conditions, yielding nine latencies, which were then applied to every participant even if a particular participant did not show a peak at that latency. Twenty-one sites (Fz, F3, F4, FCz, FC3, FC4, Cz, C3, C4, CPz, CP3, CP4, Pz, P3, P4, POz, PO3, PO4, Oz, O1, O2) were chosen for the analysis of the LPP (400–500 ms). Baseline-to-peak amplitude was computed for the P2 component, and mean amplitude was computed for the LPP. The amplitudes and latencies of each component were analyzed with two-way repeated-measures analyses of variance (ANOVAs) with the factors emotion type (three levels: positive, neutral, and negative) and electrode site; p-values were corrected with the Greenhouse-Geisser correction.
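The P2 and LPP measurement rules described above can be sketched as follows. This is a minimal NumPy illustration under stated assumptions: condition-averaged ERPs are stored as a participants × conditions × electrodes × samples array in μV, sampled at 500 Hz from -200 to 1000 ms and already baseline-corrected, and the function names are hypothetical. One P2 latency per electrode is found on the grand average across participants and conditions within 150–280 ms and then applied to every participant, while the LPP is the mean amplitude over 400–500 ms.

```python
import numpy as np

FS = 500      # sampling rate (Hz)
PRE_MS = 200  # epoch starts 200 ms before stimulus onset


def ms_to_index(t_ms: float) -> int:
    """Sample index of a post-stimulus time in a -200..1000 ms epoch."""
    return int(round((t_ms + PRE_MS) * FS / 1000))


def p2_amplitudes(erps: np.ndarray, window=(150, 280)):
    """Baseline-to-peak P2 at a common latency per electrode.

    erps: participants x conditions x electrodes x samples (µV, baseline-corrected).
    Returns (peak sample index per electrode, amplitudes[p, c, e]).
    """
    start, stop = ms_to_index(window[0]), ms_to_index(window[1]) + 1
    grand = erps.mean(axis=(0, 1))  # grand average: electrodes x samples
    peak_idx = start + grand[:, start:stop].argmax(axis=1)
    # Read every participant/condition at the electrode's common latency,
    # even if that participant shows no local peak there.
    electrodes = np.arange(erps.shape[2])
    amps = erps[:, :, electrodes, peak_idx]
    return peak_idx, amps


def lpp_mean_amplitude(erps: np.ndarray, window=(400, 500)) -> np.ndarray:
    """Mean amplitude in the LPP window: participants x conditions x electrodes."""
    start, stop = ms_to_index(window[0]), ms_to_index(window[1]) + 1
    return erps[..., start:stop].mean(axis=-1)
```

Because the epochs are baseline-corrected, the value at the peak sample is already the baseline-to-peak amplitude. The resulting participant × condition × electrode arrays are the inputs to the repeated-measures ANOVAs.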

Results

Behavior Results

An ANOVA on accuracy revealed a significant main effect of emotion type, F(2,34) = 5.40, p = 0.018, η2 = 0.241. Pairwise comparisons showed that accuracy for negative pictures (97.06 ± 2.86%) was higher than for positive pictures (91.89 ± 7.05%) and neutral pictures (89.56 ± 11.55%), and that accuracy did not differ significantly between positive and neutral pictures (p > 0.05).
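Reported η2 values can be cross-checked against their F statistics, assuming they are partial eta squared (the usual effect size in repeated-measures ANOVA output). Since F = (SS_effect/df_effect)/(SS_error/df_error), partial eta squared equals F × df_effect/(F × df_effect + df_error). The helper below is a hypothetical illustration, not a function from any statistics package. Because the Greenhouse-Geisser correction scales both degrees of freedom by the same ε, it leaves this ratio unchanged, so the uncorrected df can be used.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared implied by an F statistic and its degrees of freedom.

    eta_p^2 = SS_effect / (SS_effect + SS_error) = F*df1 / (F*df1 + df2)
    """
    effect = f_value * df_effect
    return effect / (effect + df_error)


# Accuracy: main effect of emotion type, F(2,34) = 5.40
print(round(partial_eta_squared(5.40, 2, 34), 3))  # prints 0.241
```

The same formula gives 0.435 for F(2,34) = 13.10 and 0.155 for F(8,136) = 3.13.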

ERP Data Analysis

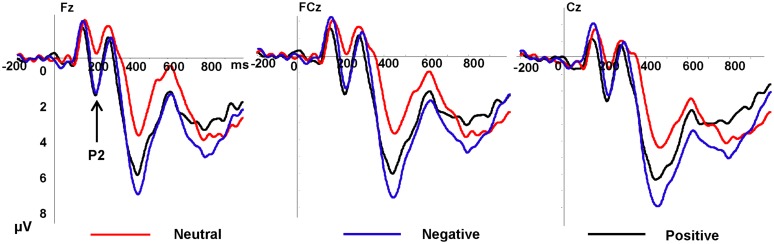

P2

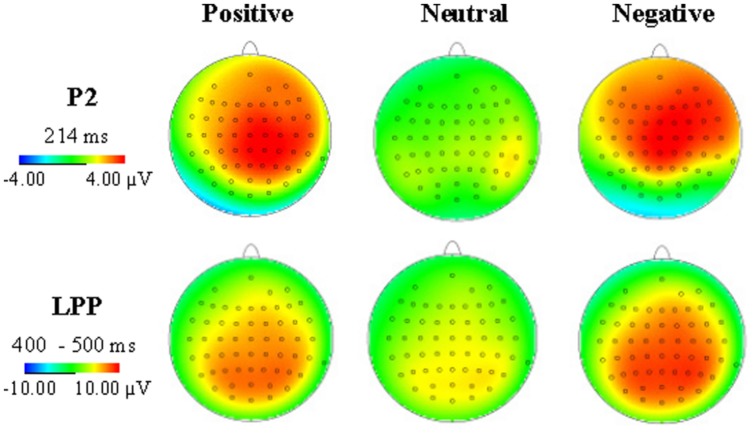

As shown in Figure 2, P2 amplitudes showed significant main effects of emotion type, F(2,34) = 8.83, p = 0.003, η2 = 0.525, and electrode, F(8,136) = 3.13, p = 0.026, η2 = 0.155; the interaction between emotion type and electrode was not significant. Positive pictures (3.29 μV, p = 0.001) and negative pictures (3.02 μV, p = 0.001) elicited larger amplitudes than neutral pictures (1.27 μV), and the amplitudes elicited by positive and negative pictures did not differ significantly (p = 0.562). P2 amplitudes were larger at frontal electrodes than at the other sites. For P2 latency, neither the main effects nor the interaction were significant.

FIGURE 2.

Grand average event-related potentials (ERPs) of P2 components for negative (purple lines), neutral (red lines), and positive (black lines) conditions recorded at Fz, FCz, Cz.

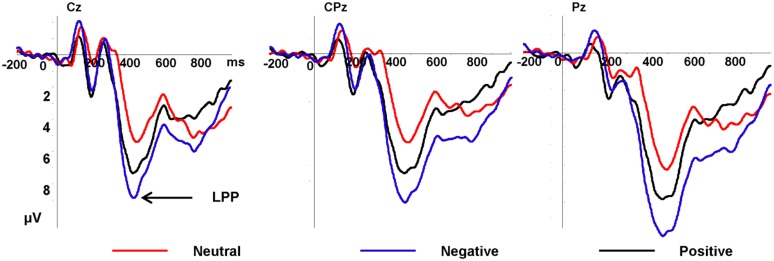

Late Positive Potential

As shown in Figure 3, LPP amplitudes showed significant main effects of emotion type and electrode [F(2,34) = 13.10, p < 0.001, η2 = 0.435; F(20,340) = 4.93, p = 0.01]; the interaction between emotion type and electrode was not significant. Negative pictures (7.69 μV) elicited larger amplitudes than positive pictures (6.34 μV, p = 0.015) and neutral pictures (4.37 μV, p < 0.001), and the LPP amplitudes elicited by positive pictures were larger than those elicited by neutral pictures (p = 0.015). LPP amplitudes were largest at Pz (7.29 μV; see Figure 4).

FIGURE 3.

Grand average ERPs of late positive potential (LPP) components for negative (purple lines), neutral (red lines), and positive (black lines) conditions recorded at Cz, CPz, Pz.

FIGURE 4.

Average ERP topographies of the P2 and LPP components across three emotional conditions.


Discussion

In this study, the behavioral data showed that participants' accuracy for negative pictures was higher than their accuracy for positive and neutral pictures, which did not differ from each other. This is in line with the notion that negative stimuli are prioritized in emotion processing given their strong biological significance. Furthermore, the ERP results showed that positive and negative pictures, which did not differ from each other, elicited larger P2 amplitudes than did neutral pictures. Additionally, the LPP amplitudes elicited by negative pictures were larger than those elicited by non-negative pictures, while positive pictures elicited larger LPP amplitudes than did neutral ones.

In the RSVP paradigm, a series of stimuli, such as pictures, letters, words, or digits, is presented in rapid succession at the same location. Usually, the stimuli are presented at a rate of 6–20 items per second, and participants are instructed to respond only to one or more target stimuli that differ from the other (distractor) stimuli in the series in color, brightness, or other features (Broadbent and Broadbent, 1987; Raymond et al., 1992; Isaak et al., 1999). Many studies (Broadbent and Broadbent, 1987; Weichselgartner and Sperling, 1987; Raymond et al., 1992; Isaak et al., 1999) have indicated that this paradigm is useful for exploring the temporal characteristics of attentive processes. Additionally, previous studies (Chun, 1997; Kessler et al., 2005; Shapiro et al., 2006) revealed that attentional resources and task difficulty are affected by two factors: the inter-stimulus interval (the attentional blink appears when the inter-stimulus interval ranges from 200 to 500 ms) and the number of tasks (participants perform better on a single task than on a dual task, a phenomenon referred to as the attentional blink), especially in the middle and late stages of emotion processing (for details, please refer to our previous work). The three-stage model of emotional facial expression and word processing proposed in our previous studies (Luo et al., 2010; Zhang et al., 2014; Yi et al., 2015) was derived under conditions of limited attentional resources; that is, processing follows the three-stage model only when emotional facial expressions and words are processed within the attentional blink time window. The present study aimed to test whether the processing of emotional pictures other than faces is also fully or partly in line with the three-stage model; therefore, the test needed to be conducted under conditions in which participants lacked attentional resources.
Based on the above considerations, the present study adopted the dual-task experimental procedure based on the RSVP paradigm.

Using this paradigm, we found that emotional pictures elicited larger P2 amplitudes over frontal sites than did neutral pictures. Since P2 is generally viewed as indexing aspects of stimulus categorization (García-Larrea et al., 1992), this suggests that the P2 component mainly differentiated emotional from non-emotional stimuli. Larger P2 amplitudes for emotional than for neutral pictures have also been reported by other researchers (Peng et al., 2012; Wu et al., 2013). In addition, for P2 latency, neither the main effect of emotion nor its interaction with electrode was significant in the present study, consistent with the results of Yuan et al. (2007). By contrast, Carretié et al. (2001) reported shorter P2 latencies in response to negative than to positive pictures. However, there are several differences between their study and the present study. First, a different response method was used: oral report (Carretié et al., 2001) vs. key press (present study). Second, different stimulus presentation times were used (longer in Carretié et al., 2001), and the longer presentation times may have absorbed more attentional resources. The largest P2 amplitudes in the present study were found at frontal sites, at approximately the same position as the maximal VPP amplitudes in our previous work. Additionally, the interaction between electrode and emotion was not significant. In our previous study (Luo et al., 2010), we found that ERPs distinguished emotional (fearful and happy) from neutral facial expressions, but did not distinguish fearful from happy expressions, during the second stage of emotional facial expression processing, as indicated by larger anterior VPP amplitudes in response to fearful and happy faces than in response to neutral faces.
Because the VPP effect is largely specific to facial expression processing, we did not observe a VPP effect in the present study; instead, we observed a P2 effect, which temporally corresponds to the VPP effect.

In our previous studies (Luo et al., 2010; Zhang et al., 2013a,b, 2014), we found that the brain could differentiate among three types of emotional stimuli during the third stage of emotion processing. In the present study, as expected, the main effect of emotion on LPP amplitude was significant: LPP amplitudes were larger for negatively valenced pictures than for positively valenced and neutral pictures, and positively valenced pictures elicited larger LPP amplitudes than did neutral pictures, which is consistent with our previous results. Since both the P3 and the LPP (or LPC) belong to the P3 family (González-Villar et al., 2014; Grzybowski et al., 2014), it is reasonable to compare these two components directly. Although researchers (Foti and Hajcak, 2008; Hajcak et al., 2010; Lang and Bradley, 2010; Yen et al., 2010; Zhang et al., 2012b) agree that emotional stimuli elicit larger LPP (P3) amplitudes than do neutral stimuli, they do not agree on the relationship between LPP amplitude and the valence of emotional stimuli. Some researchers (Ito et al., 1998; Huang and Luo, 2006; Frühholz et al., 2009) found that the LPP amplitudes elicited by negative stimuli were larger than those elicited by positive stimuli, which agrees with our results. However, in contrast to the present study, Solomon et al. (2012) found that girls displayed larger LPP amplitudes to neutral pictures than to positive pictures. This difference may reflect developing cognitive and affective factors related to emotion processing, since Solomon et al. enrolled children (between 5 and 6 years old) as participants, whereas all participants in the present study were college students. Additionally, using emotional pictures selected from the IAPS as stimuli, Brown et al. (2013) found that the LPP amplitudes elicited by low-arousal unpleasant, neutral, and low-arousal pleasant pictures did not differ significantly from each other, which is in line with our results to some degree. However, the LPP amplitudes elicited by high-arousal unpleasant pictures were larger than those elicited by high-arousal pleasant and neutral pictures (the negativity bias). These differences can be interpreted in terms of different arousal levels, since the LPP is sensitive to the arousal level of emotional stimuli (McConnell and Shore, 2011; Zhang et al., 2012a). The main effect of electrode was significant, with LPP amplitudes largest at Pz, which is supported by many previous studies (Amrhein et al., 2004; Schupp et al., 2004; Schutter et al., 2004; Smith et al., 2013).

As for the three-stage model, a preliminary analysis of threat during the early stage of processing can help individuals escape quickly from danger and therefore has strong adaptive significance (Vuilleumier, 2005; Corbetta et al., 2008; Bertini et al., 2013); this analysis, however, although fast, is also very coarse. Emotion processing is dynamic: in the three-stage model of emotional facial expression processing, the first stage distinguishes negative stimuli from the others, while the second stage distinguishes positive stimuli from neutral stimuli. Taken together, the first two stages would thus seem sufficient to identify all three emotion categories, which raises the question of whether the third stage is necessary. Our previous experimental results (Luo et al., 2010; Zhang et al., 2014; Yi et al., 2015) suggested that a finer-grained processing of the three emotions occurs in the third stage; hence, the third stage complements the first and second stages. Therefore, we believe that the three-stage model of facial emotional expression processing is more appropriate than the two-stage model (Utama et al., 2009).

Because arousal level affects the processing of visual stimuli (McConnell and Shore, 2011; Zhang et al., 2012a; Prehn et al., 2014), we originally intended to control for arousal when selecting the experimental stimuli. Unfortunately, it proved almost impossible to fully match the arousal levels of neutral and emotional (positive and negative) pictures in our picture system. Nevertheless, the lower arousal of the neutral pictures relative to the positive and negative pictures may reflect higher ecological validity: when the emotional pictures were evaluated with valence on the horizontal axis and arousal on the vertical axis, the resulting scatter plot formed a slight U-shape, with pictures near the neutral midpoint of the valence axis tending to have the lowest arousal. Consequently, the larger P2 amplitudes elicited by emotional than by neutral pictures in the present study might be due to differences in valence, differences in arousal, or an interaction between the two; given the particular nature of emotional scenes, we were unable to determine the exact cause. The interaction between valence and arousal is an interesting question that we hope to investigate in future studies.

The present experiment differed from our previous work (Luo et al., 2010) only in the experimental stimuli (emotional scene pictures here vs. emotional face pictures there); all other details remained the same (please refer to Luo et al., 2010). The results of the present study suggest that the brain processes emotional pictures in temporally distinct stages. We found that the middle and late stages of emotional scene processing correspond to the middle and late stages of the three-stage model of facial expression processing. Because of the inherent limitation imposed by the use of different types of stimuli, we could not compare early-stage emotion processing across studies: the details and information in the emotional pictures varied, and these differences (i.e., physical complexity) could not be controlled in the present study, so they might have affected the early processing stage. Collectively, the present study confirmed that the latter two stages of the three-stage model of facial expression processing also occur when non-face images are processed under limited attentional resources, thus providing new evidence in support of the hypothesis of distinct temporal processing stages for emotional visual stimuli. Moreover, the results indicate that the time course of emotion processing is similar even when the emotions are conveyed by different types of stimuli.

Conclusion

In the present study, we explored the time course of emotional picture processing using an RSVP paradigm that limited participants’ attentional resources. The results demonstrated that emotional picture processing showed distinct stages (indexed by the P2 and LPP) similar to those observed during the processing of emotional faces. This suggests that in the late perceptual and cognitive processing stages, the time courses for processing the same emotions are similar regardless of whether the emotions are conveyed by facial expressions, words, or pictures (scenes).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The work was supported by the National Natural Science Foundation of China (Grant Nos. 31170984, 31371033, 31300869, 81471376); the National Basic Research Program of China (2014CB744600, 2011CB711000); the Foundation of the National Key Laboratory of Human Factors Engineering (HF2012-K-03); the Fundamental Research Funds for the Central Universities (2012CXQT01); and the Natural Science Foundation of Jiangsu Province (BK20130415).

References

- Amrhein C., Mühlberger A., Pauli P., Wiedemann G. (2004). Modulation of event-related brain potentials during affective picture processing: a complement to startle reflex and skin conductance response? Int. J. Psychophysiol. 54 231–240. 10.1016/j.ijpsycho.2004.05.009

- Azizian A., Watson T. D., Parvaz M. A., Squires N. K. (2006). Time course of processes underlying picture and word evaluation: an event-related potential approach. Brain Topogr. 18 213–222. 10.1007/s10548-006-0270-9

- Bentin S., Allison T., Puce A., Perez E., McCarthy G. (1996). Electrophysiological studies of face perception in humans. J. Cogn. Neurosci. 8 551–565. 10.1162/jocn.1996.8.6.551

- Bernat E., Bunce S., Shevrin H. (2001). Event-related brain potentials differentiate positive and negative mood adjectives during both supraliminal and subliminal visual processing. Int. J. Psychophysiol. 42 11–34. 10.1016/S0167-8760(01)00133-7

- Bertini C., Cecere R., Làdavas E. (2013). I am blind, but I “see” fear. Cortex 49 985–993. 10.1016/j.cortex.2012.02.006

- Broadbent D. E., Broadbent M. H. (1987). From detection to identification: response to multiple targets in rapid serial visual presentation. Percept. Psychophys. 42 105–113. 10.3758/BF03210498

- Brown K. W., Goodman R. J., Inzlicht M. (2013). Dispositional mindfulness and the attenuation of neural responses to emotional stimuli. Soc. Cogn. Affect. Neurosci. 8 93–99. 10.1093/scan/nss004

- Brown S. B., Van Steenbergen H., Band G. P., De Rover M., Nieuwenhuis S. (2012). Functional significance of the emotion-related late positive potential. Front. Hum. Neurosci. 6:33 10.3389/fnhum.2012.00033

- Cano M. E., Class Q. A., Polich J. (2009). Affective valence, stimulus attributes, and P300: color vs. black/white and normal vs. scrambled images. Int. J. Psychophysiol. 71 17–24. 10.1016/j.ijpsycho.2008.07.016

- Carretié L., Hinojosa J. A., Martín-Loeches M., Mercado F., Tapia M. (2004). Automatic attention to emotional stimuli: neural correlates. Hum. Brain Mapp. 22 290–299. 10.1002/hbm.20037

- Carretié L., Iglesias J. (1995). An ERP study on the specificity of facial expression processing. Int. J. Psychophysiol. 19 183–192. 10.1016/0167-8760(95)00004-C

- Carretié L., Mercado F., Tapia M., Hinojosa J. A. (2001). Emotion, attention, and the ‘negativity bias’, studied through event-related potentials. Int. J. Psychophysiol. 41 75–85. 10.1016/S0167-8760(00)00195-1

- Chun M. M. (1997). Temporal binding errors are redistributed by the attentional blink. Percept. Psychophys. 59 1191–1199. 10.3758/BF03214207

- Codispoti M., Ferrari V., Bradley M. M. (2006). Repetitive picture processing: autonomic and cortical correlates. Brain Res. 1068 213–220. 10.1016/j.brainres.2005.11.009

- Corbetta M., Patel G., Shulman G. L. (2008). The reorienting system of the human brain: from environment to theory of mind. Neuron 58 306–324. 10.1016/j.neuron.2008.04.017

- Cunningham W. A., Van Bavel J. J., Arbuckle N. L., Packer D. J., Waggoner A. S. (2012). Rapid social perception is flexible: approach and avoidance motivational states shape P100 responses to other-race faces. Front. Hum. Neurosci. 6:140 10.3389/fnhum.2012.00140

- De Cesarei A., Codispoti M. (2006). When does size not matter? Effects of stimulus size on affective modulation. Psychophysiology 43 207–215. 10.1111/j.1469-8986.2006.00392.x

- Delplanque S., Lavoie M. E., Hot P., Silvert L., Sequeira H. (2004). Modulation of cognitive processing by emotional valence studied through event-related potentials in humans. Neurosci. Lett. 356 1–4. 10.1016/j.neulet.2003.10.014

- Dennis T. A., Hajcak G. (2009). The late positive potential: a neurophysiological marker for emotion regulation in children. J. Child Psychol. Psychiatry 50 1373–1383. 10.1111/j.1469-7610.2009.02168.x

- Dennis T. A., Malone M. M., Chen C.-C. (2009). Emotional face processing and emotion regulation in children: an ERP study. Dev. Neuropsychol. 34 85–102. 10.1080/87565640802564887

- Eimer M., Holmes A. (2002). An ERP study on the time course of emotional face processing. Neuroreport 13 427–431. 10.1097/00001756-200203250-00013

- Ferreira-Santos F., Silveira C., Almeida P., Palha A., Barbosa F., Marques-Teixeira J. (2012). The auditory P200 is both increased and reduced in schizophrenia? A meta-analytic dissociation of the effect for standard and target stimuli in the oddball task. Clin. Neurophysiol. 123 1300–1308. 10.1016/j.clinph.2011.11.036

- Foti D., Hajcak G. (2008). Deconstructing reappraisal: descriptions preceding arousing pictures modulate the subsequent neural response. J. Cogn. Neurosci. 20 977–988. 10.1162/jocn.2008.20066

- Frühholz S., Fehr T., Herrmann M. (2009). Early and late temporo-spatial effects of contextual interference during perception of facial affect. Int. J. Psychophysiol. 74 1–13. 10.1016/j.ijpsycho.2009.05.010

- Gable P. A., Adams D. L. (2013). Nonaffective motivation modulates the sustained LPP (1,000-2,000 ms). Psychophysiology 50 1251–1254. 10.1111/psyp.12135

- García-Larrea L., Lukaszewicz A.-C., Mauguière F. (1992). Revisiting the oddball paradigm. Non-target vs neutral stimuli and the evaluation of ERP attentional effects. Neuropsychologia 30 723–741. 10.1016/0028-3932(92)90042-K

- González-Villar A. J., Triñanes Y., Zurrón M., Carrillo-De-La-Peña M. T. (2014). Brain processing of task-relevant and task-irrelevant emotional words: an ERP study. Cogn. Affect. Behav. Neurosci. 14 939–950. 10.3758/s13415-013-0247-6

- Grady C. L., Mcintosh A. R., Rajah M. N., Craik F. I. (1998). Neural correlates of the episodic encoding of pictures and words. Proc. Natl. Acad. Sci. U.S.A. 95 2703–2708. 10.1073/pnas.95.5.2703

- Gratton G., Coles M. G., Donchin E. (1983). A new method for off-line removal of ocular artifact. Electroencephalogr. Clin. Neurophysiol. 55 468–484. 10.1016/0013-4694(83)90135-9

- Grzybowski S. J., Wyczesany M., Kaiser J. (2014). The influence of context on the processing of emotional and neutral adjectives-An ERP study. Biol. Psychol. 99 137–149. 10.1016/j.biopsycho.2014.01.002

- Hajcak G., Dunning J. P., Foti D. (2009). Motivated and controlled attention to emotion: time-course of the late positive potential. Clin. Neurophysiol. 120 505–510. 10.1016/j.clinph.2008.11.028

- Hajcak G., Macnamara A., Foti D., Ferri J., Keil A. (2013). The dynamic allocation of attention to emotion: simultaneous and independent evidence from the late positive potential and steady state visual evoked potentials. Biol. Psychol. 92 447–455. 10.1016/j.biopsycho.2011.11.012

- Hajcak G., Macnamara A., Olvet D. M. (2010). Event-related potentials, emotion, and emotion regulation: an integrative review. Dev. Neuropsychol. 35 129–155. 10.1080/87565640903526504

- Herbert C., Kissler J., Junghöfer M., Peyk P., Rockstroh B. (2006). Processing of emotional adjectives: evidence from startle EMG and ERPs. Psychophysiology 43 197–206. 10.1111/j.1469-8986.2006.00385.x

- Huang Y.-X., Luo Y.-J. (2006). Temporal course of emotional negativity bias: an ERP study. Neurosci. Lett. 398 91–96. 10.1016/j.neulet.2005.12.074

- Huang Y.-X., Luo Y.-J. (2009). Can negative stimuli always have the processing superiority? Acta Psychol. Sin. 41 822–831. 10.3724/sp.j.1041.2009.00822

- Isaak M. I., Shapiro K. L., Martin J. (1999). The attentional blink reflects retrieval competition among multiple rapid serial visual presentation items: tests of an interference model. J. Exp. Psychol. Hum. Percept. Perform. 25 1774–1792. 10.1037/0096-1523.25.6.1774

- Ito T. A., Larsen J. T., Smith N. K., Cacioppo J. T. (1998). Negative information weighs more heavily on the brain: the negativity bias in evaluative categorizations. J. Pers. Soc. Psychol. 75 887–900. 10.1037//0022-3514.75.4.887

- Jaworska N., Thompson A., Shah D., Fisher D., Ilivitsky V., Knott V. (2011). Acute tryptophan depletion effects on the vertex and late positive potentials to emotional faces in individuals with a family history of depression. Neuropsychobiology 65 28–40. 10.1159/000328992

- Jessen S., Grossmann T. (2014). Unconscious discrimination of social cues from eye whites in infants. Proc. Natl. Acad. Sci. U.S.A. 111 16208–16213. 10.1073/pnas.1411333111

- Kanske P., Kotz S. A. (2007). Concreteness in emotional words: ERP evidence from a hemifield study. Brain Res. 1148 138–148. 10.1016/j.brainres.2007.02.044

- Kessel E. M., Huselid R. F., Decicco J. M., Dennis T. A. (2013). Neurophysiological processing of emotion and parenting interact to predict inhibited behavior: an affective-motivational framework. Front. Hum. Neurosci. 7:326 10.3389/fnhum.2013.00326

- Kessler K., Schmitz F., Gross J., Hommel B., Shapiro K., Schnitzler A. (2005). Target consolidation under high temporal processing demands as revealed by MEG. Neuroimage 26 1030–1041. 10.1016/j.neuroimage.2005.02.020

- Lang P. J., Bradley M. M. (2010). Emotion and the motivational brain. Biol. Psychol. 84 437–450. 10.1016/j.biopsycho.2009.10.007

- Leite J., Carvalho S., Galdo-Alvarez S., Alves J., Sampaio A., Gonçalves F. (2012). Affective picture modulation: Valence, arousal, attention allocation and motivational significance. Int. J. Psychophysiol. 83 375–381. 10.1016/j.ijpsycho.2011.12.005

- Luck S. J., Hillyard S. A. (1994). Electrophysiological correlates of feature analysis during visual search. Psychophysiology 31 291–308. 10.1111/j.1469-8986.1994.tb02218.x

- Luo W., Feng W., He W., Wang N., Luo Y. (2010). Three stages of facial expression processing: ERP study with rapid serial visual presentation. Neuroimage 49 1857–1867. 10.1016/j.neuroimage.2009.09.018

- McConnell M. M., Shore D. I. (2011). Upbeat and happy: arousal as an important factor in studying attention. Cogn. Emot. 25 1184–1195. 10.1080/02699931.2010.524396

- Novosel A., Lackner N., Unterrainer H.-F., Dunitz-Scheer M., Scheer P. J. Z., Wallner-Liebmann S. J., et al. (2014). Motivational processing of food cues in anorexia nervosa: a pilot study. Eat. Weight. Disord. 19 169–175. 10.1007/s40519-014-0114-7

- Olofsson J. K., Nordin S., Sequeira H., Polich J. (2008). Affective picture processing: an integrative review of ERP findings. Biol. Psychol. 77 247–265. 10.1016/j.biopsycho.2007.11.006

- Peng J., Qu C., Gu R., Luo Y.-J. (2012). Description-based reappraisal regulate the emotion induced by erotic and neutral images in a Chinese population. Front. Hum. Neurosci. 6:355 10.3389/fnhum.2012.00355

- Prehn K., Korn C. W., Bajbouj M., Klann-Delius G., Menninghaus W., Jacobs A. M., et al. (2014). The neural correlates of emotion alignment in social interaction. Soc. Cogn. Affect. Neurosci. 3 435–443. 10.1093/scan/nsu066

- Raymond J. E., Shapiro K. L., Arnell K. M. (1992). Temporary suppression of visual processing in an RSVP task: an attentional blink? J. Exp. Psychol. Hum. Percept. Perform. 18 849–860. 10.1037/0096-1523.18.3.849

- Schapkin S., Gusev A., Kuhl J. (1999). Categorization of unilaterally presented emotional words: an ERP analysis. Acta Neurobiol. Exp. 60 17–28.

- Schupp H. T., Flaisch T., Stockburger J., Junghöfer M. (2006). Emotion and attention: event-related brain potential studies. Prog. Brain Res. 156 31–51. 10.1016/S0079-6123(06)56002-9

- Schupp H. T., Öhman A., Junghöfer M., Weike A. I., Stockburger J., Hamm A. O. (2004). The facilitated processing of threatening faces: an ERP analysis. Emotion 4 189–200. 10.1037/1528-3542.4.2.189

- Schutter D. J., De Haan E. H., Van Honk J. (2004). Functionally dissociated aspects in anterior and posterior electrocortical processing of facial threat. Int. J. Psychophysiol. 53 29–36. 10.1016/j.ijpsycho.2004.01.003

- Seifert L. S. (1997). Activating representations in permanent memory: different benefits for pictures and words. J. Exp. Psychol. Learn. Mem. Cogn. 23 1106. 10.1037//0278-7393.23.5.1106

- Shapiro K., Schmitz F., Martens S., Hommel B., Schnitzler A. (2006). Resource sharing in the attentional blink. Neuroreport 17 163–166. 10.1097/01.wnr.0000195670.37892.1a

- Smith E., Weinberg A., Moran T., Hajcak G. (2013). Electrocortical responses to NIMSTIM facial expressions of emotion. Int. J. Psychophysiol. 88 17–25. 10.1016/j.ijpsycho.2012.12.004

- Smith N. K., Cacioppo J. T., Larsen J. T., Chartrand T. L. (2003). May I have your attention, please: electrocortical responses to positive and negative stimuli. Neuropsychologia 41 171–183. 10.1016/S0028-3932(02)00147-1

- Solomon B., Decicco J. M., Dennis T. A. (2012). Emotional picture processing in children: an ERP study. Dev. Cogn. Neurosci. 2 110–119. 10.1016/j.dcn.2011.04.002

- Utama N. P., Takemoto A., Koike Y., Nakamura K. (2009). Phased processing of facial emotion: an ERP study. Neurosci. Res. 64 30–40. 10.1016/j.neures.2009.01.009

- Van Hooff J. C., Dietz K. C., Sharma D., Bowman H. (2008). Neural correlates of intrusion of emotion words in a modified Stroop task. Int. J. Psychophysiol. 67 23–34. 10.1016/j.ijpsycho.2007.09.002

- Vuilleumier P. (2005). How brains beware: neural mechanisms of emotional attention. Trends Cogn. Sci. 9 585–594. 10.1016/j.tics.2005.10.011

- Vuilleumier P., Pourtois G. (2007). Distributed and interactive brain mechanisms during emotion face perception: evidence from functional neuroimaging. Neuropsychologia 45 174–194. 10.1016/j.neuropsychologia.2006.06.003

- Wang L., Bastiaansen M. (2014). Oscillatory brain dynamics associated with the automatic processing of emotion in words. Brain Lang. 137 120–129. 10.1016/j.bandl.2014.07.011

- Weichselgartner E., Sperling G. (1987). Dynamics of automatic and controlled visual attention. Science 238 778–780. 10.1126/science.3672124

- Weinberg A., Hajcak G. (2010). Beyond good and evil: the time-course of neural activity elicited by specific picture content. Emotion 10 767 10.1037/a0020242

- Weinberg A., Hajcak G. (2011). The late positive potential predicts subsequent interference with target processing. J. Cogn. Neurosci. 23 2994–3007. 10.1162/jocn.2011.21630

- Wu H., Tang P., Huang X., Hu X., Luo Y. (2013). Differentiating electrophysiological response to decrease and increase negative emotion regulation. Chin. Sci. Bull. 58 1543–1550. 10.1007/s11434-013-5746-x

- Yen N.-S., Chen K.-H., Liu E. H. (2010). Emotional modulation of the late positive potential (LPP) generalizes to Chinese individuals. Int. J. Psychophysiol. 75 319–325. 10.1016/j.ijpsycho.2009.12.014

- Yi S., He W., Zhan L., Qi Z., Zhu C., Luo W., et al. (2015). Emotional noun processing: an ERP study with rapid serial visual presentation. PLoS ONE 10:e0118924 10.1371/journal.pone.0118924

- Yuan J., Chen J., Yang J., Ju E., Norman G. J., Ding N. (2014). Negative mood state enhances the susceptibility to unpleasant events: neural correlates from a music-primed emotion classification task. PLoS ONE 9:e89844 10.1371/journal.pone.0089844

- Yuan J., Zhang Q., Chen A., Li H., Wang Q., Zhuang Z., et al. (2007). Are we sensitive to valence differences in emotionally negative stimuli? Electrophysiological evidence from an ERP study. Neuropsychologia 45 2764–2771. 10.1016/j.neuropsychologia.2007.04.018

- Zhang D., He W., Wang T., Luo W., Zhu X., Gu R., et al. (2014). Three stages of emotional word processing: an ERP study with rapid serial visual presentation. Soc. Cogn. Affect. Neurosci. 9 1897–1903. 10.1093/scan/nst188

- Zhang D., Luo W., Luo Y. (2013a). Single-trial ERP analysis reveals facial expression category in a three-stage scheme. Brain Res. 1512 78–88. 10.1016/j.brainres.2013.03.044

- Zhang D., Luo W., Luo Y. (2013b). Single-trial ERP evidence for the three-stage scheme of facial expression processing. Sci. China Life Sci. 56 835–847. 10.1007/s11427-013-4527-8

- Zhang Q., Kong L., Jiang Y. (2012a). The interaction of arousal and valence in affective priming: behavioral and electrophysiological evidence. Brain Res. 1474 60–72. 10.1016/j.brainres.2012.07.023

- Zhang W., Lu J., Fang H., Pan X., Zhang J., Wang D. (2012b). Late positive potentials in affective picture processing during adolescence. Neurosci. Lett. 510 88–92. 10.1016/j.neulet.2012.01.008