Abstract

Background

Although reviews of simulation-based medical education have found debriefing to be the most important element in providing effective learning, there are only a few examples in the literature to help guide a debriefer. The diamond debriefing method is based on the technique of description, analysis and application, along with aspects of the advocacy-inquiry approach and of debriefing with good judgement. It is specifically designed to allow an exploration of the non-technical aspects of a simulated scenario.

Context

The debrief diamond, a structured visual reminder of the debrief process, was developed through teaching simulation debriefing to hundreds of faculty members over several years. The diamond shape visually represents the idealised process of a debrief: opening out a facilitated discussion about the scenario, before bringing the learning back into sharp focus with specific learning points.

Innovation

The Diamond is a two-sided prompt sheet: the first contains the scaffolding, with a series of specifically constructed questions for each phase of the debrief; the second lays out the theory behind the questions and the process.

Implication

The Diamond encourages a standardised approach to high-quality debriefing on non-technical skills. Feedback from learners and from debriefing faculty members has indicated that the Diamond is useful and valuable as a debriefing tool, benefiting both participants and faculty members. It can be used by junior and senior faculty members debriefing in pairs, allowing the junior faculty member to conduct the description phase, while the more experienced faculty member leads the later and more challenging phases. The Diamond gives an easy but pedagogically sound structure to follow and specific prompts to use in the moment.

Introduction

High-fidelity simulation uses life-size manikins in actual or recreated clinical environments to provide a clinical training experience without posing any risk to real patients. It can be used for all types of health care professional at any stage, pre- or post-qualification. Although it is used for many types of training, it is ideally suited for the teaching of non-technical skills such as teamworking, prioritising and leadership, and it provides a unique opportunity for inter-professional education.1

Simulation-based medical education reviews consistently find debriefing to be the most important element in providing effective learning.2,3 A commonly used definition of debriefing is a ‘facilitated or guided reflection in the cycle of experiential learning’ that occurs after a learning event.4 Despite the recognised importance of debriefing, there are only a few examples in the literature to help guide a debriefer.5–7 Leading experts in the field have called for work to ‘define explicit models of debriefing’.8 In response to this, the authors set out to develop a clear and simple visual aid to debriefing of clinical events, be they simulated or real.

The debriefing method upon which the Diamond is based has at its core the technique of description, analysis and application,5 along with aspects of the advocacy-inquiry approach and of debriefing with good judgement.6

Context

The debrief diamond was developed through the work of the authors at the simulation centre of a large academic health sciences centre and hospital system in the UK. The Diamond was developed over time, drawing on the authors' own debriefing episodes and on our work training over 500 novices, both on courses and in practice, by ‘debriefing the debrief’. These experiences suggested that a structured visual reminder would benefit faculty members and participants.

We observed that faculty members often started a debrief confidently, but could find it difficult to structure a discussion around non-technical skills. They frequently allowed technical skills to dominate the discussion, used closed questions and reverted to didactic instructional approaches or traditional feedback tools, such as Pendleton's rules.9

We developed an initial debriefing aid for new simulation faculty that listed specific questions, prompts, and reminders used in the description, analysis, and application debriefing model. This was integrated into our faculty member debriefing courses and used during all of our simulation courses. We observed an increase in the quality of facilitation and a decrease in didactic teaching. Candidates talked more and shared more clinical stories that illustrated non-technical skills (NTS); however, facilitators were still rarely able to develop specific, personalised learning points for learners to take away.

Recognising these issues, we believed the debrief sheet needed further evolution. This was when two ideas intersected:

- Integrating a cognitive scaffold of question prompts separated by clearly signposted transitions between phases.

- Using the diamond shape to visually represent the idealised process of a debrief: opening out a facilitated discussion about the scenario, before bringing the learning back into sharp focus with specific learning points.

Innovation

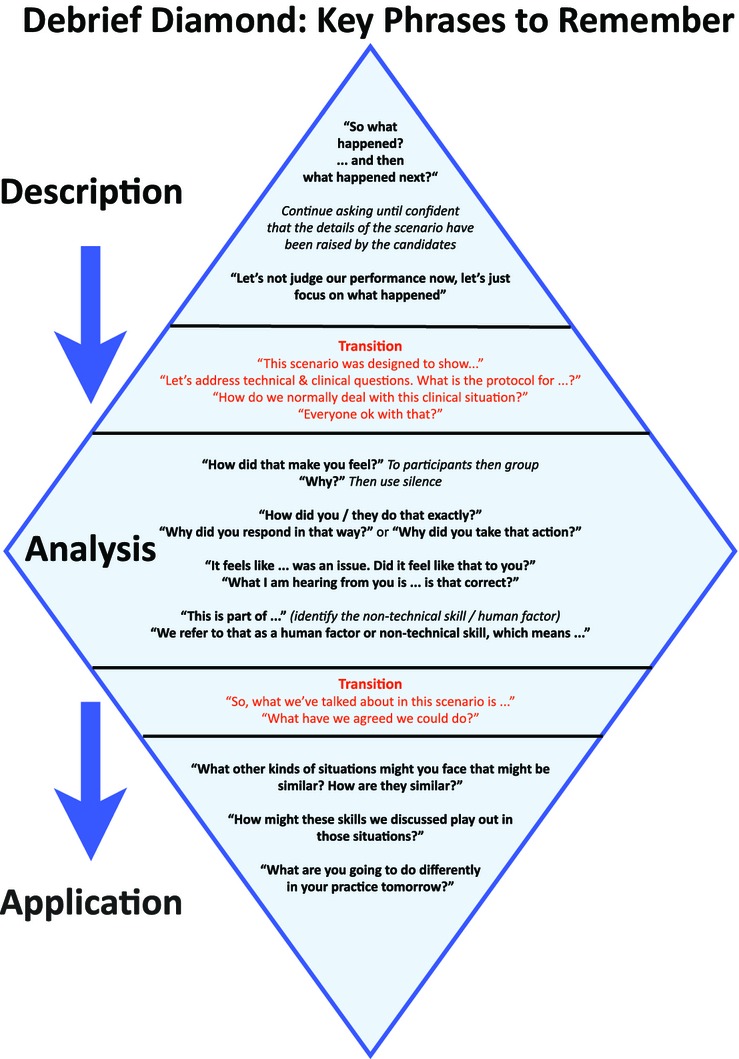

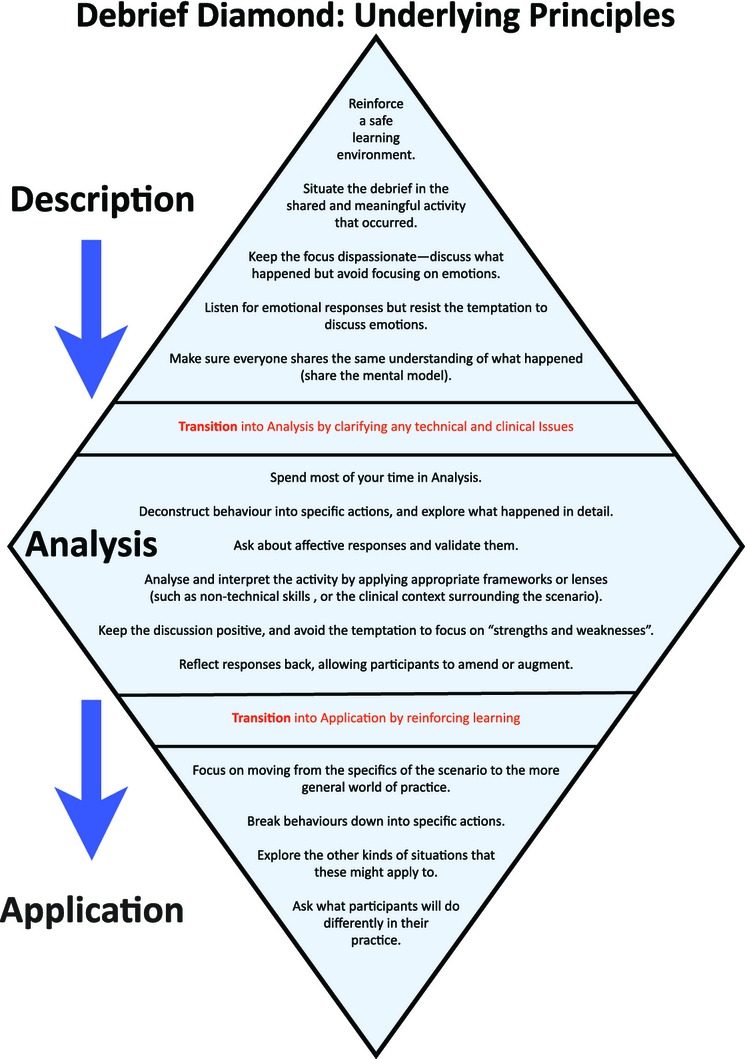

The Diamond was developed as a double-sided page (see Figures 1 and 2). The first side contains the scaffold, with a series of specifically constructed questions for each phase of the description, analysis and application debrief. The second side lays out the theory behind the questions and the process, enabling the debriefing faculty member to quickly remind themselves of the learning environment that they are trying to create, and how this can be achieved.

Figure 1. The first side of the Diamond contains the scaffold, with a series of specifically constructed questions for each phase of the description, analysis and application debrief.

Figure 2. The second side of the Diamond lays out the theory behind the questions and the debriefing process.

Although the question prompts may seem didactic and inflexible, this is purposeful, and suits the aim of a cognitive scaffold. It enables new faculty members to practise their debriefing skills, initially with close adherence to the prompts. When the faculty member is more experienced, the model can act as a guide rather than a script. Faculty members experienced in debriefing have found that retaining the specific components, such as transitions (e.g. ‘this scenario was designed to show…’), serves to signpost the process for both learners and faculty members, and thus improves the quality of the debrief.

Description

The description process involves taking the group through an ‘agreed description’ of the scenario that has just finished. This should be performed action-by-action, restricting the discussion to facts and avoiding emotion. The facilitator should start the debrief with a simple non-judgmental phrase, and then direct the conversation to those candidates not involved in the scenario to engage them in the process. This allows the scenario participants to rest and to reflect on their colleagues’ recollections of the events, before giving their own accounts.

We argue that it is vital that the facilitator acknowledges comments about the perceived quality of the performance, but redirects away from performance evaluation at this stage; the focus should remain on creating a shared understanding of what actually occurred in the scenario. This ensures that scenario participants do not feel under attack, and that a safe learning environment is maintained.

Interestingly, we do not use a venting ‘How do you feel?’ question initially, as suggested by Rudolph et al.6 We have not found this necessary, and postulate that this may be cultural, in that the model was developed in a UK rather than in a US setting.

At the end of the descriptive phase, the facilitators can clarify any outstanding clinical issues or technical questions. The Diamond offers faculty members the prompt ‘This scenario was designed to show…the recommended management of which is…’ This phrase allows the faculty members to clarify the intentions of running the scenario, but accepts the limitations and emergent nature of simulation as a learning setting. Summarising the clinical management reinforces appropriate clinical knowledge, skills, protocol adherence or behaviour, and addresses potential misconceptions without specifically focusing on the performance of participants.6 It also lessens the opportunity for collusion, and draws a line under the clinical issues to prevent them from dominating the analysis phase.

Analysis

The analysis phase starts with an open question, such as ‘how did you feel?’, directed to the scenario participants. It is important that faculty members allow enough time for the candidates to compose their answer, even if a few moments of silence seem uncomfortable. It may be necessary to follow up the response with ‘why?’, or similar prompts, which can be asked multiple times until underlying feelings and motivations are revealed. This cycle can be reflected back to the group to compare and contrast perceptions and feelings, and to explore the nature of any potential dissonance expressed.

The analysis phase is where the facilitator structures the debrief around non-technical skills. Our faculty training recommends that only one skill is explored in each debrief, to avoid cognitive overload for the learner. We encourage facilitators to focus on the skill that the learners (not the faculty members) feel was most relevant within the scenario. Faculty members can then construct a framework within which this skill can be examined and developed, using as a basis the shared and agreed experience of the scenario and the clinical experience of all participants.

Once these are aired, the facilitator should illustrate positive (and, we argue, only very carefully, and with extreme caution, negative) examples of the non-technical skill that is to be the focus. Guiding the conversation, the faculty member can help to break this skill or behaviour down into specific actions that participants can use in their clinical environments. This is a facilitative process, during which the faculty member reflects and summarises the suggestions of the group, reframing them in non-technical language, as appropriate.

The facilitator next moves through the transition with the phrase ‘So what we have talked about in this scenario is… What have we agreed that we could do?’ This reinforces the learning about the NTS, ensuring a greater likelihood of remembering the detail in clinical practice settings.

Application

This phase encourages participants to consider how they may apply the knowledge in their own clinical practice. This aspect can be the most challenging for faculty members, as the learning needs to be drawn to a conclusion in a very focused way, without the introduction of alternative suggestions. Faculty members should ask for specific summary points from the participants who made particular suggestions about non-technical skills and behaviours during the analysis phase. It is important to allow one or two participants to contextualise this skill within their own working environment. This emphasis on applying the new skills to their own environments finishes up the debrief in a focused, yet personalised, way.

Implications

Based on experiences in our centre, we argue that debriefing facilitators need both specific techniques and a clear structure to optimise learning during a debrief.10 We have developed the Diamond to address this need. Currently there is considerable variation between the perceived ideal role of the debrief facilitator and what is actually executed during real debriefing sessions.7 We argue that a tool such as the Diamond could help address this gap.

Further research is currently in progress to define the extent to which this model does indeed assist faculty members with the delivery of the post-simulation debrief, and to what extent it enhances the learning of participants. This includes research validating the use of the Diamond in other settings, a more rigorous design-based inquiry exploring how the intentions of the design are being reflected in actual debriefs, and in-depth interaction and conversational analysis of video recordings of Diamond-based debriefs, which will demonstrate the extent to which such debriefs show clear evidence of learning and engagement with the simulation experience.

The feedback received from debriefs of over 6000 learners in our centre, and from other allied centres, shows that the Diamond encourages a standardised approach to high-quality debriefing across courses and institutions, benefiting both participants and faculty members. It facilitates debriefing in pairs, as the transition phases are a perfect point to switch faculty member; it also allows junior faculty members to conduct the relatively unproblematic description phase while more experienced faculty members lead the later and more challenging phases.

As a cognitive scaffold for novice facilitators, we suggest that the Diamond gives an easy and pedagogically sound structure to follow, with specific prompts to use in the moment.

References

- 1. Robertson J, Bandali K. Bridging the gap: Enhancing interprofessional education using simulation. J Interprof Care. 2008;22:499–508. doi: 10.1080/13561820802303656.

- 2. Issenberg SB, McGaghie WC, Petrusa ER, Lee Gordon D, Scalese RJ. Features and uses of high-fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teach. 2005;27:10–28. doi: 10.1080/01421590500046924.

- 3. McGaghie WC, Issenberg SB, Petrusa ER, Scalese RJ. A critical review of simulation-based medical education research: 2003–2009. Med Educ. 2010;44:50–63. doi: 10.1111/j.1365-2923.2009.03547.x.

- 4. Fanning RM, Gaba DM. The Role of Debriefing in Simulation-Based Learning. Simul Healthc. 2007;2:115–125. doi: 10.1097/SIH.0b013e3180315539.

- 5. Steinwachs B. How to Facilitate a Debriefing. Simulation Gaming. 1992;23:186–195.

- 6. Rudolph JW, Simon R, Dufresne RL, Raemer DB. There's No Such Thing as ‘Nonjudgmental’ Debriefing: A Theory and Method for Debriefing with Good Judgment. Simul Healthc. 2006;1:49–55. doi: 10.1097/01266021-200600110-00006.

- 7. Dieckmann P, Molin Friis S, Lippert A, Østergaard D. The art and science of debriefing in simulation: Ideal and practice. Med Teach. 2009;31:e287–e294. doi: 10.1080/01421590902866218.

- 8. Raemer D, Anderson M, Cheng A, Fanning R, Nadkarni V, Savoldelli G. Research regarding debriefing as part of the learning process. Simul Healthc. 2011;6:S52–S57. doi: 10.1097/SIH.0b013e31822724d0.

- 9. Pendleton D, Schofield T, Tate P, Havelock P. The consultation: an approach to learning and teaching. Oxford: Oxford University Press; 1984.

- 10. Dismukes RK, Gaba DM, Howard SK. So Many Roads: Facilitated Debriefing in Healthcare. Simul Healthc. 2006;1:23–25. doi: 10.1097/01266021-200600110-00001.