Abstract

Zipf’s law is a fundamental paradigm in the statistics of written and spoken natural language as well as in other communication systems. We raise the question of the elementary units for which Zipf’s law should hold in the most natural way, studying its validity for plain word forms and for the corresponding lemma forms. We analyze several long literary texts comprising four languages, with different levels of morphological complexity. In all cases Zipf’s law is fulfilled, in the sense that a power-law distribution of word or lemma frequencies is valid for several orders of magnitude. We investigate the extent to which the word-lemma transformation preserves two parameters of Zipf’s law: the exponent and the low-frequency cut-off. We are not able to demonstrate a strict invariance of the tail, as for a few texts both exponents deviate significantly, but we conclude that the exponents are very similar, despite the remarkable transformation that going from words to lemmas represents, considerably affecting all ranges of frequencies. In contrast, the low-frequency cut-offs are less stable, tending to increase substantially after the transformation.

Introduction

Zipf’s law for word frequencies is one of the best known statistical regularities of language [1, 2]. In its most popular formulation, the law states that the frequency n of the r-th most frequent word of a text follows

| (1) |

where α is a constant and ∝ the symbol of proportionality. However, Eq (1) is not the only possible approach for modeling word frequencies in texts. One could also look at the number of different words with a given frequency in a text. In that case, the probability f(n) that a word has frequency n is given by

| (2) |

where γ is a constant. The real values of f(n) and n(r) contain the information about the frequency of the words in a text, but f(n) does it in a compressed fashion (given only the values of f(n) such that f(n) > 0, n(r) is retrieved for any value of r). In the first version of the law, r, the so-called rank of a word, acts as the random variable, and in the second version the random variable is the frequency of a word, n. In both cases, α and γ are the exponents, related by [2]

| (3) |

Usually, α is close to 1 and then γ is close to 2.

The relevance of Zipf’s law for human language [3–5], as well as for other species’ communication systems [6–8], has been the topic of a long debate. To some researchers, Zipf’s law for frequencies is an inevitable consequence of the fact that words are made of letters (or phonemes): Zipf’s law is obtained no matter if you are a human or another creature with a capacity to press keys sequentially [3, 4] or to concatenate units to build words in a more abstract sense [6]. This opinion is challenged by empirical values of α that are not covered by simple versions of random typing [9], the dependence of α upon language complexity during language ontogeny [10], and also by the large differences between the statistics defined on ranks (e.g., the mean rank) and the random typing experiments with parameters for which a good fit was claimed or expected [5].

If the law is not inevitable, understanding the conditions under which it emerges or varies is crucial. Alterations in the shape and parameters of the law have been reported in child language [10, 11], schizophrenic speech [11, 12], aphasia [13, 14], and large multiauthor texts [15–17]. Despite intense research on Zipf’s law in quantitative linguistics and complex systems science, little attention has been paid to the elementary units for which Zipf’s law should hold. Zipf’s law has been investigated in letters [18] and also in blocks of symbols (e.g., words or letters) [19]. Here a very important issue that has not received enough attention since the seminal work of Zipf is investigated in depth: the effect of considering word forms vs. lemmas in the presence, scope and parameters of the law (a lemma is, roughly speaking, the stem form of a word; see below for a more precise definition). Research on this problem is lacking as the overwhelming majority of empirical research has focused on word forms for simplicity (e.g., [10, 16, 17, 20–22, 24]).

Thus, here we address a very relevant research question: does the distribution of word frequencies differ from that of lemmas? This opens two subquestions:

Does Zipf’s law still hold in lemmas?

Does the exponent of the law for word forms differ from that of lemmas?

It is remarkable that Zipf himself addressed this problem at a very preliminary level (Fig. 3.5 in Ref. [1]), and it has not been until much more recently that several researchers have revisited it. Baroni compared the distribution of ranks in a lemmatized version of the British National Corpus against the non-lemmatized counterpart and concluded, based upon a qualitative analysis, that both show essentially the same pattern [25]. Reference [26] studied one English text (Ulysses, by James Joyce) and one Polish text; for the former, the word and lemma rank-frequency relations were practically undistinguishable, but for the Polish text some differences were found: the exponent α slightly increased (from 0.99 to 1.03) when going from words to lemmas and a second power-law regime seemed to appear for the highest ranks, with exponent α about 1.5. Bentz et al. [27], for a translation of the Book of Genesis into English, pointed to a connection between morphology and rank-frequency relations, provided by an increase in the exponent α (from 1.22 to 1.29) when the book was lemmatized and Mandelbrot’s generalization of Zipf’s law was used in a maximum likelihood fit of n(r). Finally, Hatzigeorgiu et al. [28] analyzed the Hellenic National Corpus and found that the exponent α decreased when taking the 1000 most frequent units (from α = 0.978 for the 1000 most frequent word forms to α = 0.870 for the 1000 most frequent lemmas). This decrease is hard to compare with the increases reported in Refs. [26, 27] and the results presented in this article because it is restricted to the most frequent units.

Our study will provide a larger scale analysis, with 10 rather long single-author texts (among them some of the longest novels in the history of literature) in 4 different languages, using state-of-the-art tools in computational linguistics and power-law fitting. The languages we study cover a fair range in the word-lemma ratio, from a morphologically poor language such as English to a highly inflectional language such us Finnish, with Spanish and French being in between. In a previous study with a subset of these texts, some of us investigated the dependence of word and lemma frequency distributions on text length [29], but no direct quantitative comparison was performed between the results for word and lemmas. It will be shown here that the range of validity of Zipf’s law [Eq (2)] decreases when using lemmas; however, we will show that, while the exponents obtained with word forms and lemmas do not follow the same distribution, they maintain a very close and simple relationship, suggesting some robust underlying mechanism.

We will study the robustness of Zipf’s law concerning lemmatization from the perspective of type frequencies instead of ranks. Ranks have the disadvantage of leading to a histogram or spectrum that is monotonically decreasing by definition. This can hide differences between real texts and random typing experiments [30]. The representation in terms of the distribution of frequencies f(n) has been used successfully to show the robustness of Zipf’s exponents as texts size increases: Although the shape of the distribution apparently changes as text length increases, a simple rescaling allows one to unravel a mold for f(n) that is practically independent from text length [29]. In this article we investigate the extent to which f(n) is invariant upon lemmatization. We restrict our analysis to single-author texts, more concretely literary texts. This is because of the alterations in the shape and parameters of the distribution of word frequencies known to appear in large multi-author corpora [15–17].

Definitions

Let us consider, in general, a sequence composed of symbols that can be repeated. We are studying texts composed by words, but the framework is equally valid for a DNA segment constituted by codons [31], a musical piece consisting of notes [32], etc. Each particular occurrence of a symbol is called a token, whereas the symbol itself is referred to as a type [20]. The total number of tokens gives the sequence length, L (the text length in our case), whereas the total number of types is the size of the observed vocabulary, V, with V ≤ L.

In fact, although a sequence may be perfectly defined, its division into symbols is, up to a certain point, arbitrary. For instance, texts can be divided into letters, morphemes, etc., but most studies in quantitative linguistics have considered the basic unit to be the word. This is a linguistic notion that can be operationalized in many languages by delimiting sets of letters separated by spaces or punctuation marks. Nevertheless, the symbols that constitute themselves a sequence can be non-univocally related to some other entities of interest, as it happens with the relationship between a word and its lemma. A lemma is defined as a linguistic form that stands for or represents a whole inflectional morphological paradigm, such as the plural and singular forms of nouns or the different tensed forms of a verb. Lemmas are typically used as headwords in dictionaries. For example, for a word type, houses, the corresponding lemma type is house. Nevertheless, this correspondence is not always so clear [33], such that lemmatization is by no means a straightforward transformation.

Using different texts, we will check the validity of Zipf’s law for lemmas, and we will compare the statistics of word forms to the statistics of lemmas. To gather statistics for lemmas, we will replace each word in the text by its associated lemma, and will consider the text as composed by lemmas. To see the effect of this transformation, consider for instance the word houses in Ulysses. The number of tokens for the word type houses is 26, because houses occurs 26 times in the book. However, the number of tokens for the corresponding lemma, house, is 198, because the lemma house (in all its nominal and verbal forms, house, houses, housed…) occurs 198 times. The relationship between the statistics of words and lemmas, and in particular the question of whether lemmas follow Zipf’s law or not [1], is not a trivial issue [33].

In order to investigate the validity of Zipf’s law in a text we count the frequency n of all (word or lemma) types and fit the tail of the distribution of frequencies (starting at some point n = a) to a power law, i.e.,

with γ > 1, C the normalization constant, and disregarding values of n below a. The version of Zipf’s law that we adopt has two parameters: the exponent γ and the low-frequency cut-off a. We consider that Zipf’s law is valid if a power law holds starting at a and reaching at least two decades up to the maximum frequency (the frequency of the most common type). With these assumptions, we are adhering to the view of Zipf’s law as an asymptotic property of a random variable [34, 35].

To fit this definition of the law we use a two-step procedure that first fits the value of γ for a fixed a and next evaluates the goodness of the power-law fit from a onwards; this is repeated for different a-values until the most satisfactory fit is found. The resulting exponent is reported as γ ± σ, where σ is the standard deviation of γ. Our procedure is similar in spirit to the one by Clauset et al. [23], but it can be shown to have a better performance for continuous random variables [36–38]. Indeed, Clauset et al.’s requirement for power-law acceptance seems to be very strict, having been found to reject the power-law hypothesis even for power-law simulated data [37]. Details of the procedure we use are explained in Ref. [39]; this is basically the adaptation of the method of Ref. [38] to the discrete case. The Materials and Methods Section provides a summary.

Results

We analyze a total of 10 novels comprising four languages: English, Spanish, French, and Finnish, see Table 1. In order to gather enough statistics, we include some of the longest novels ever written, to our knowledge. For the statistical analysis of lemmas, we first perform an automatic process of lemmatization using state of the art computational tools. The steps comprise tokenization, morphological analysis, and morphological disambiguation, in such a way that, at the end, each word token is assigned a lemma. See Materials and Methods for further details.

Table 1. Characteristics of the books analyzed.

The length of each book L is measured in millions of tokens.

| Title | Author | Language | Year | L |

|---|---|---|---|---|

| Clarissa 1 | Samuel Richardson | English | 1748 | 0.976 |

| Moby-Dick 2 | Herman Melville | English | 1851 | 0.215 |

| Ulysses | James Joyce | English | 1918 | 0.269 |

| Don Quijote 3 | Miguel de Cervantes | Spanish | 1605 | 0.381 |

| La Regenta | L. Alas “Clarín” | Spanish | 1884 | 0.308 |

| Artamène 4 | Scudéry siblings 9 | French | 1649 | 2.088 |

| Le Vicomte de Bragelonne 5 | A. Dumas (father) | French | 1847 | 0.699 |

| Seitsemän veljestä 6 | Aleksis Kivi | Finnish | 1870 | 0.081 |

| Kevät ja takatalvi 7 | Juhani Aho | Finnish | 1906 | 0.114 |

| Vanhempieni romaani 8 | Arvid Järnefelt | Finnish | 1928 | 0.136 |

1Clarissa: Or the History of a Young Lady.

2Moby-Dick; or, The Whale.

3El ingenioso hidalgo don Quijote de la Mancha (1605)—The Ingenious Gentleman Don Quixote of La Mancha (title in English); including second part: El ingenioso caballero don Quijote de la Mancha (1615).

4Artamène ou le Grand Cyrus—Artamène, or Cyrus the Great.

5Le Vicomte de Bragelonne ou Dix ans plus tard—The Vicomte of Bragelonne: Ten Years Later.

6Seven Brothers.

7Spring and the Untimely Return of Winter.

8The Story of my Parents.

9Madeleine and Georges de Scudéry.

Zipf’s law holds for both word forms and lemmas

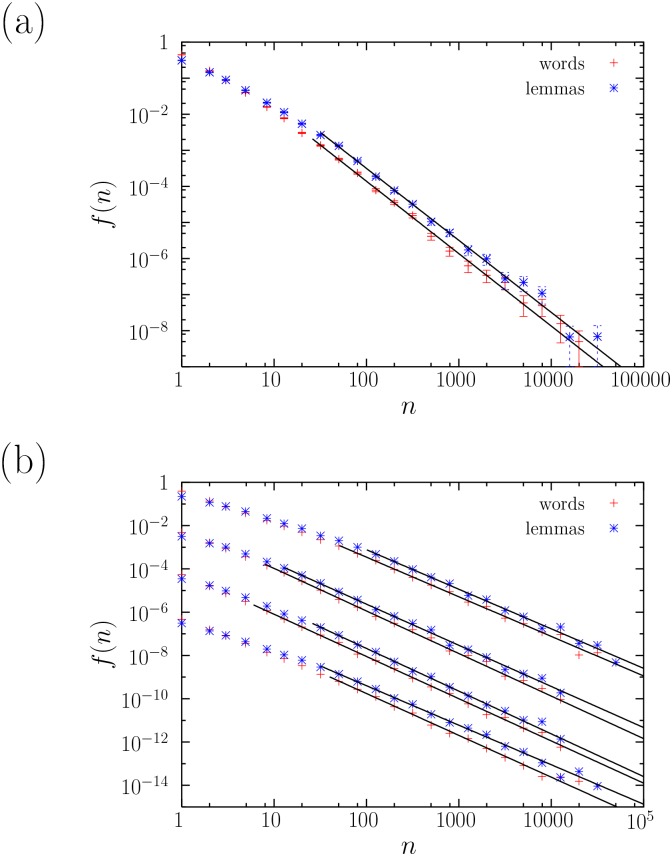

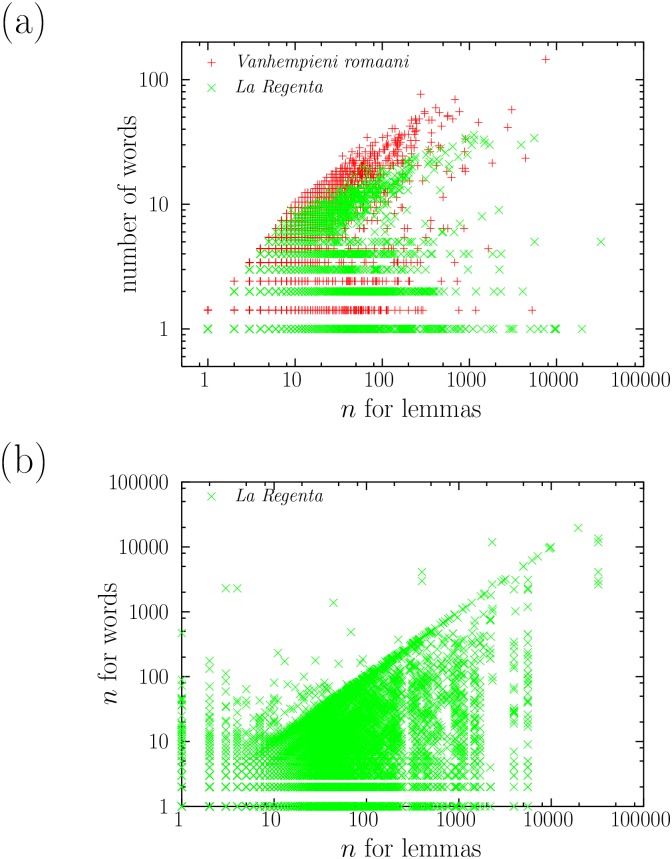

Fig 1(a) compares the results before and after lemmatization for the book La Regenta (in Spanish). The frequency distributions f(n) for words and for lemmas are certainly different, with higher frequencies being less likely for words than for lemmas, an effect that is almost totally compensated by hapax legomena (types of frequency equal to one), where words have more weight than lemmas. This is not unexpected, as the lemmatization process leads to less types (lower V), which must have higher frequencies, on average (the mean frequency is ⟨n⟩ = L/V). The reduction of vocabulary for lemmas (for a fixed text length) has a similar effect to that of increasing text length; in other words, we are more likely to see the effects of the exhaustion of vocabulary (if this happens) using lemmas rather than words. The difference in the counts of frequencies results in a tendency of f(n) for lemmas to bend downwards as the frequency decreases towards the smallest values (i.e., the largest ranks) in comparison with the f(n) of words; this in agreement with Ref. [26]. Besides, one has to take into account that lemmatization errors are more likely for low frequencies, and then the frequency distribution in that domain can be more strongly affected by such errors. In any case, our main interest is for high frequencies, for which the quantitative behavior shows a power-law tail for both words and lemmas. This extends for almost three orders of magnitude, with exponents γ very close to 2, implying the fulfillment of Zipf’s law (see Table 2).

Fig 1.

(a) Probability mass functions f(n) of the absolute frequencies n of words and lemmas in La Regenta, together with their fits. (b) The same, from top to bottom, for Clarissa, Moby-Dick, Ulysses (all three in English), and Don Quijote (in Spanish). The distributions are multiplied by factors 1, 10−2, 10−4 and 10−6 for a clearer visualization.

Table 2. Power-law fitting results for words and lemmas, denoted respectively by subindices w and l.

V is the number of types (vocabulary size), n m is the maximum frequency of the distribution, N a is the number of types in the power-law tail, i.e., with n ≥ a, a is the minimum value for which the power-law fit holds, and γ and σ are the power-law exponent and its standard deviation, respectively. 2σ d, the double of the standard deviation σ d is also given. σ d is the standard deviation of γ l−γ w assuming independence, which is . The last column provides ℓ1, the number of lemmas associated to only one word form. Notice that the lemma exponent is very close to the one found in Ref. [29] for the tail of a double power-law fitting, except for Moby-Dick and Ulysses.

| Title | V w | n mw | N aw | a w | γ w ± σ w | V l | n ml | N al | a l | γ l ± σ l | 2σ d | ℓ1 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Clarissa | 20492 | 38632 | 1514 | 51 | 1.83±0.02 | 9041 | 41679 | 838 | 101 | 1.83±0.03 | 0.07 | 5750 |

| Moby-Dick | 18516 | 14438 | 2658 | 8 | 1.97±0.02 | 9141 | 14438 | 1548 | 13 | 1.90±0.02 | 0.06 | 6157 |

| Ulysses | 29450 | 14934 | 4377 | 6 | 1.95±0.01 | 12469 | 14934 | 1024 | 26 | 1.97±0.03 | 0.07 | 8670 |

| Don Quijote | 21180 | 20704 | 939 | 40 | 1.93±0.03 | 7432 | 31521 | 936 | 32 | 1.83±0.03 | 0.08 | 3812 |

| La Regenta | 21871 | 19596 | 1196 | 26 | 2.01±0.03 | 9900 | 32300 | 993 | 32 | 2.00±0.03 | 0.08 | 5308 |

| Artamène | 25161 | 88490 | 936 | 200 | 1.86±0.03 | 5008 | 119016 | 641 | 200 | 1.79±0.03 | 0.08 | 2178 |

| Bragelonne | 25775 | 26848 | 3173 | 16 | 1.84±0.02 | 10744 | 45577 | 1382 | 40 | 1.84±0.02 | 0.06 | 5391 |

| Seitsemän | 22035 | 4247 | 22035 | 1 | 2.13±0.01 | 7658 | 4247 | 474 | 26 | 2.13±0.05 | 0.10 | 4246 |

| Kevät ja | 25071 | 5042 | 8660 | 2 | 2.05±0.01 | 8898 | 6886 | 699 | 20 | 1.96±0.04 | 0.07 | 5060 |

| Vanhempieni | 35931 | 5254 | 6523 | 3 | 2.09±0.01 | 13510 | 7526 | 571 | 32 | 2.05±0.04 | 0.09 | 7837 |

The rest of books analyzed show a similar qualitative behavior, as shown for 4 of them in Fig 1(b). In all cases Zipf’s law holds, both for words and for lemmas. The power-law tail exponents γ range from 1.83 to 2.13, see Table 2, covering from 2 and a half to 3 and a half orders of magnitude of the type frequency (except for lemmas in Seitsemän veljestä, with roughly only 2 orders of magnitude). For the second power-law regime reported in Ref. [26] for the high-rank domain of lemmas (i.e., low lemma frequencies), we only find it for the smallest frequencies (i.e., between n = 1 and a maximum n) in two Finnish novels, Kevät ja takatalvi and Vanhempieni romaani with exponents γ = 1.715 and 1.77±0.008, respectively. These values of γ yield α = 1.40 and 1.30 (recall Eq (3)), which one can compare to the value obtained in Ref. [26] for a Polish novel (1.52). However, for the rest of distributions of lemma frequency, a discrete power law starting in n = 1 is rejected no matter the value of the maximum n considered. This is not incompatible with the results of Ref. [29], as a different fit and a different testing procedure was used there. Note that the Finnish novels yield the poorest statistics (as their text lengths are the smallest), so this second power-law regime seems to be significant only for short enough texts.

The consistency of the exponents between word forms and lemmas

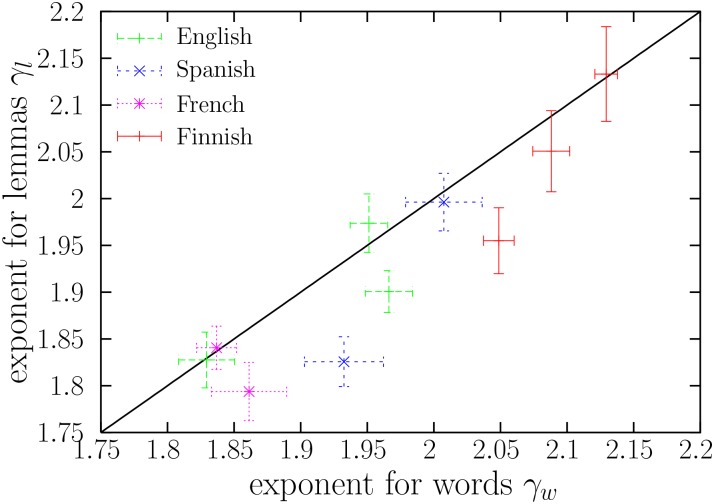

In order to proceed with the comparison between the exponents of the frequency distributions of words (w) and lemmas (l), let us denote them as γ w and γ l, respectively. Those values are compared in Fig 2. Coming back to the example of La Regenta, it is remarkable that the two exponents do not show a noticeable difference (as it is apparent in Fig 1(a)), with values γ w = 2.01 ± 0.03 and γ l = 2.00 ± 0.03. Out of the remaining 9 texts, 4 of them give pairs of word-lemma exponents with a difference of 0.02 or smaller. This is within the error bars of the exponents, represented by the standard deviations σ w and σ l of the maximum likelihood estimations of the exponents; more precisely, ∣γ w − γ l∣ < σ l, as can be seen in Table 2. For the other 5 texts, the two exponents are always in the range of overlap of two standard deviations, i.e., ∣γ w − γ l∣ < 2(σ w + σ l).

Fig 2. γ l (the exponent of the frequency distribution of lemmas) versus γ w (the exponent of the frequency distribution of word forms).

As a guide to the eye, the line γ l = γ w is also shown (solid line). Error bars indicate one standard deviation.

However, we should be cautious in drawing conclusions from these data. If, for a fixed book, γ w and γ l were independent variables, the standard deviation of their difference would be , according to elementary probability theory ([40], Chapter 3); however, independence cannot be ensured and we have , where cov(γ w, γ l) is the covariance of both variables, for a fixed book (this covariance is different from the covariance implicit in the Pearson correlation introduced below, which refers to all texts). Although the maximum likelihood method provides an estimation for the standard deviations of the exponents (for a fixed text) [23, 38], we cannot compute the covariance of the word and lemma exponents (for the total size of each text), and therefore we do not know the uncertainty in the difference between them. This is is due to the fact that we only have one sample for each book to calculate γ w and γ l. If we could assume independence, we would obtain that already three books yield results outside the 95% confidence interval of the exponent difference (given by 2σ d), see Table 2. This could be modified somewhat by the Bonferroni and Šidák corrections for multiple testing [41, 42]. Nevertheless, we expect a non-zero covariance between γ w and γ l, as the samples representing words and lemmas have some overlap (for instance, some word tokens remain the same after lemmatization), and therefore the standard deviation σ d should be smaller than in the independent case, which leads to larger significant differences than what the independence assumption yields. Conversely, the standard deviations σ w and σ l of the maximum likelihood exponents are obtained assuming that a w and a l are fixed parameters, but they are not, and then the total uncertainties of the exponents are expected to be larger than the reported standard deviations; nevertheless, this is difficult to quantify. Thus, the standard deviations we provide for the exponents have to be interpreted as some indication of their uncertainty but not as the full uncertainty, which could be larger. We conclude that we cannot establish an absolute invariance of the value of the Zipf exponent under lemmatization.

Instead of comparing the word and lemma exponents book by book, using the uncertainty for each exponent, we can also deal with the whole ensemble of exponents, ignoring the individual uncertainties. We consider first a Student’s t–test for paired samples to analyze the differences between pairs of exponents. This test, although valid for dependent normally distributed data (and the estimations of the exponents are normally distributed), assumes that the standard deviations σ w and σ l are the same for all books, which is not the case, see Table 2. So, as a first approximation we apply the test and interpret its results with care. The t–statistics gives t = 2.466 (p-value = 0.036), leading to the rejection of the hypothesis that there are no significant differences between the exponents. These results do not look like very surprising upon visual inspection of Fig 2: Most points (γ w, γ l) lie below the diagonal, suggesting a tendency for γ l to have a lower value than γ w. But we can go one step further with this test and consider the existence of one outlier, removing from the data the book with the largest difference between their exponents. In this case one needs to avoid introducing any bias in the calculation of the p-value. For this purpose, we simulate the t–Student distribution by summing rescaled normal variables in the usual way (see Materials and Methods), and remove (in the same way as for empirical data) the largest value of the variables. This yields t = 2.053 and p = 0.075, which suggests that the values of the exponents are not significantly different, except for one outlier. However, as we have mentioned, this test cannot be conclusive and other tests are necessary.

We realize that γ w and γ l are clearly dependent variables (when considering all books). Their Pearson correlation, a measure of linear correlation, is ρ = 0.913 (the sample size is 𝓝 = 10 and p = 0.0003 is the p-value of a two-sided test with null hypothesis ρ = 0). Note that this correlation is different to the one given above by cov(γ w, γ l), which referred to a fixed book. Given this, we formulate three hypotheses about the relationship between the exponents. The first hypothesis is that γ w and γ l are identically distributed for a given text (but not necessarily for different texts, different authors, or different languages). The second hypothesis is that γ w is centered around γ l, i.e., the conditional expectation of γ w given γ l is E[γ w∣γ l] = γ l. This means that a reasonable prediction on the value of γ w can be attained from the knowledge of the value of γ l. The third hypothesis is the symmetric of the second, namely that γ l is centered around γ w, i.e., the conditional expectation of γ l given γ w is E[γ l∣γ w] = γ w. The second and third hypotheses are supported by the strong Pearson correlation between γ w and γ l, but these two hypotheses are not equivalent [43].

We define and as the average values of γ w and γ l, respectively, in our sample of ten literary texts. The first hypothesis means that given a certain text, γ w and γ l are interchangeable. If γ w and γ l are identically distributed for a certain text, then the absolute value of the difference between the means should not differ significantly from analogous values obtained by chance, i.e., flipping a fair coin to decide if γ w and γ l remain the same or are swapped within a book. As there are ten literary texts, there are 210 possible configurations. Thus, one can compute numerically the p-value as the proportion of these configurations where equals or exceeds the original value. This coin-flipping test is in the same spirit as Fisher’s permutational test ([44], pp. 407–416), with the difference that we perform the permutations of the values of the exponents only inside every text. The application of this test reveals that , which is a significantly large difference (with a p-value = 0.04). Therefore, we conclude that the first hypothesis does not stand, and therefore γ w and γ l are not identically distributed within books. This seems consistent with the fact that most points (γ w, γ l) lay below the diagonal, see Fig 2. However, the elimination of one outlier (the text with the largest difference) leads to p = 0.08, which makes the difference non-significant for the remaining texts.

The second hypothesis is equivalent to E[γ w/γ l∣γ l] = 1 and therefore this hypothesis is indeed that the ratio γ w/γ l is mean independent of γ l (the definition of mean independence in this case is E[γ w/γ l∣γ l] = constant = E[γ w/γ l], ([43], pp. 67)). Similarly, the third hypothesis is equivalent to E[γ l/γ w∣γ w] = 1 and therefore this hypothesis is indeed that γ l/γ w is mean independent of γ w. Mean independence can be rejected by means of a correlation test as mean independence needs uncorrelation (see Ref. [45], pp. 60 or Ref. [43], pp. 67). A significant correlation between γ w/γ l and γ l would reject the second hypothesis while a significant correlation between γ l/γ w and γ w would reject the third hypothesis. Table 3 indicates that neither the Pearson nor the Spearman correlations are significant (see Materials and Methods), and therefore these correlation tests are not able to reject the second and the third hypotheses. Further support for the second and third hypotheses comes from linear regression. The second hypothesis states that E[γ w∣γ l] = c 1 γ l+c 2 with c 1 = 1 and c 2 = 0 while the third hypothesis states that E[γ l∣γ w] = c 3 γ w+c 4 with c 3 = 1 and c 4 = 0. Consistently, a standard linear regression and subsequent statistical tests indicate that c 1, c 3 ≈ 1 and c 2, c 4 ≈ 0 cannot be rejected (Table 4).

Table 3. Analysis of the association between random variables using Pearson and Spearman correlations as statistics.

ρ is the value of the correlation statistic and p is the p-value of a two-sided test with null hypothesis ρ = 0, calculated through permutations of one of the variables (the results can be different if p is calculated from a t–test). The sample size is 𝓝 = 10 in all cases. Only the Spearman correlation between a w and a l/a w is significantly different from zero.

| Association | Correlation test | ρ | p |

|---|---|---|---|

| γ w/γ l and γ l | Pearson correlation test | −0.378 | 0.28 |

| Spearman correlation test | −0.418 | 0.23 | |

| γ l/γ w and γ w | Pearson correlation test | −0.034 | 0.92 |

| Spearman correlation test | −0.091 | 0.81 | |

| a w/a l and a l | Pearson correlation test | 0.420 | 0.24 |

| Spearman correlation test | 0.393 | 0.26 | |

| a l/a w and a w | Pearson correlation test | −0.373 | 0.11 |

| Spearman correlation test | −0.867 | 0.002 |

Table 4. The fit of a linear model for the relationship between exponents (γ w and γ l) and the relationship between cut-offs (a w and a l).

c 1 and c 3 stand for slopes and c 2 and c 4 stand for intercepts. The error bars correspond to one standard deviation. A Student’s t-test is applied to investigate if the slopes are significantly different from one and if the intercepts are significantly different from zero. The resulting p-values indicate that in all cases the slopes are compatible with being equal to one. The intercepts are compatible with zero for the exponents, but seem to be incompatible for the cut-offs.

| Linear model | Parameters | Student’s t | p |

|---|---|---|---|

| E[γ w∣γ l] = c 1 γ l + c 2 | c 1 = 0.855 ± 0.135 | −1.074 | 0.314 |

| c 2 = 0.315 ± 0.261 | 1.208 | 0.261 | |

| E[γ l∣γ w] = c 3 γ w + c 4 | c 3 = 0.975 ± 0.154 | −0.161 | 0.876 |

| c 4 = 0.013 ± 0.303 | 0.044 | 0.966 | |

| E[a w∣a l] = c 1 a l + c 2 | c 1 = 1.012 ± 0.103 | 0.115 | 0.911 |

| c 2 = −17.523±7.798 | −2.247 | 0.055 | |

| E[a l∣a w] = c 3 a w + c 4 | c 3 = 0.912 ± 0.093 | −0.945 | 0.372 |

| c 4 = 20.009 ± 6.272 | 3.190 | 0.013 |

In any case, to perform our analysis we have not taken into account that the number of datapoints (V) and the power-law fitting ranges are different for words and lemmas, a fact that can increase the difference between the values of the exponents (due to the fact that the detection of deviations from power-law behavior depends on the number of datapoints available). In general, the fitting ranges are larger for words than for lemmas, due to the bending of the lemma distributions, see below. Another source of variation to take into account for the difference between the exponents is, as we have mentioned, that the lemmatization process is not exact, which can lead to type assignment errors and even to some words not being associated to any lemma (see the Materials and Methods Section for details).

Although, after the elimination of one outlier, we are not able to detect differences between the exponents, there seems to be a tendency for the lemma exponent to be a bit smaller than the word exponent, as can be seen in Fig 2. This can be an artifact of the fitting procedure, which can yield fitting ranges that include a piece of the bending-downwards part of the distribution in the case of lemmas. The only way to avoid this would be either to have infinite data, or not to find the fitting range automatically, or to use a fitting distribution that parametrizes also the bending. As we are mostly interested in the power-law regime, we have not considered these modifications to the fits.

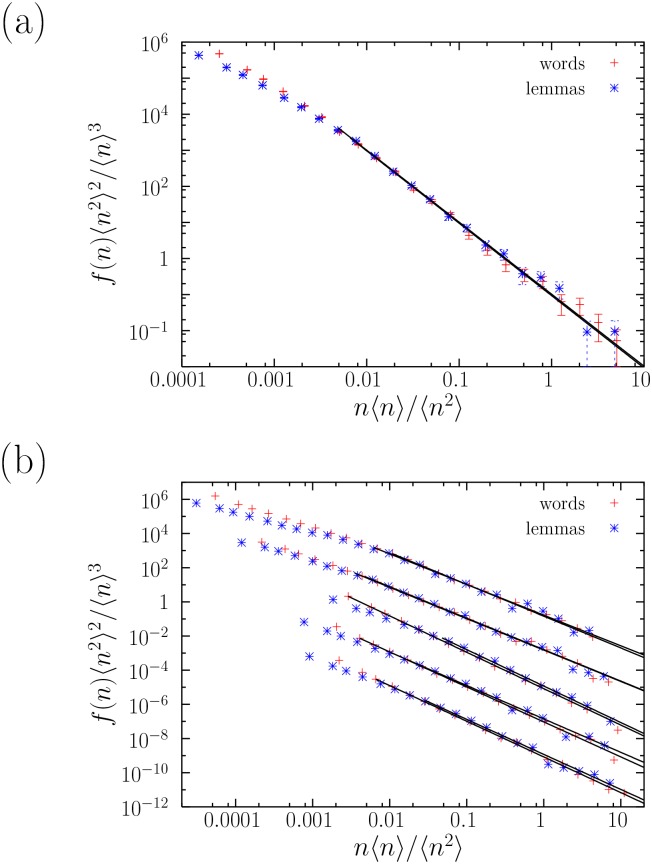

A rescaling of the axes as in Refs. [36, 46] can lead to additional support for our results (see also Ref. [29]). Fig 3(a) shows the rescaling for La Regenta. Each axis is multiplied by a constant factor, in the form

which translates into a simple shift of the curves in a double-logarithmic plot, not affecting the shape of the distribution and therefore keeping the possible power-law dependence. The collapse of the tails of the two curves into a single one is then an alternative visual indication of the stability of the exponents. The results for the 5 texts that were not shown before are now displayed in Fig 3(b). These findings suggest that, in general, Zipf’s law fulfills a kind of invariance under lemmatization, at least approximately, although there can be exceptions for some texts.

Fig 3.

(a) Probability mass functions f(n) of the absolute frequencies n of words and lemmas in La Regenta, together with their fits, under rescaling of both axis. The collapse of the tails indicates the compatibility of both power-law exponents. (b) The same for, from top to bottom, Artamène, Bragelonne (both in French), Seitsemän v., Kevät ja t., and Vanhempieni r. (all three in Finnish). The rescaled distributions are multiplied in addition by factors 1, 10−2, etc., for a clearer visualization.

Finally, in order to test the influence of the stream of consciousness part of Ulysses on the results, we have repeated the fits removing that part of the text. This yields a new text that is about 9% shorter, but more homogeneous. The Zipf exponents turn out to be γ w = 1.98±0.01 for n ≥ 6 and γ l = 2.02±0.04 for n ≥ 32, slightly higher than for the complete text. Nevertheless, the new γ w and γ l still are compatible between them (in the sense explained above for individual texts), and therefore our conclusions do not change regarding the similarity between word and lemma exponents. If we pay attention to the removed part, despite its peculiarity, the stream of consciousness prose still fulfills Zipf’s law, but with smaller exponents, γ w = 1.865±0.02 for n ≥ 2 and γ l = 1.82±0.03 for n ≥ 3. Both exponents are also compatible between them.

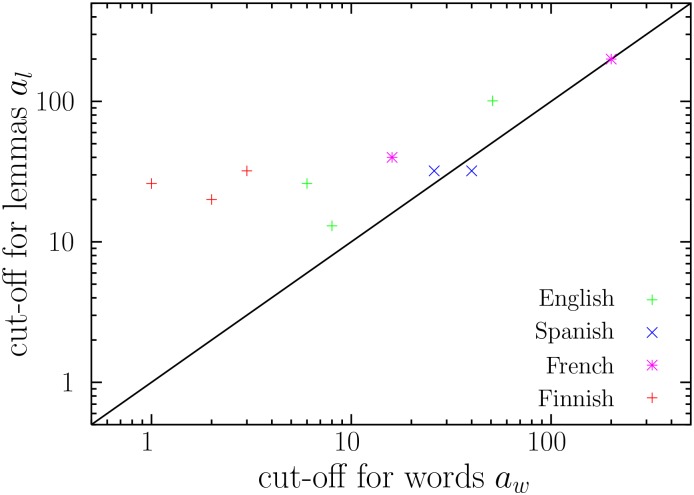

The consistency of the lower cut-offs of frequency for word forms and lemmas

As we have done with the exponent γ, we define a w and a l as the lower cut-off of the power-law fit for the frequency distributions of words and of lemmas, respectively. Those values are compared in Fig 4. When all texts are considered, a Student t–test for paired samples yields the rejection of the hypothesis that there is no significant difference in the values of a w and a l, even if the presence of one possible outlier is taken into account (t = −3.091 and p = 0.015). In fact, a w and a l are not independent, as their Pearson correlation is ρ = 0.961 (𝓝 = 10 and p = 0.0014 for the null hypothesis ρ = 0, calculated through permutations of one of the variables). These results are not very surprising upon inspection of Fig 4: Most points (a w, a l) lay above the diagonal, suggesting a tendency for a l to exceed a w.

Fig 4. The lower cut-off for the frequency distribution of lemmas (a l) versus the lower cut-off for the frequency distribution of word forms (a w).

The line a l = a w is also shown (solid line).

Like we did for the exponents, we formulate three hypotheses about the relationship between the low-frequency cut-offs. The first hypothesis is that a w and a l are identically distributed for a given text. The second hypothesis is that the expectation of a w given a l is E[a w∣a l] = a l, while the third hypothesis is that the expectation of a l given a w is E[a l∣a w] = a w. The second and third hypotheses are supported by the strong Pearson correlation between a w and a l just mentioned. We define and as the mean value of a w and a l, respectively, in our sample of ten texts. The coin flipping test reveals that is significantly high (p-value = 0.01). Therefore, the first hypothesis does not stand, not even after the exclusion of one outlier (which leads to p = 0.03).

The second hypothesis is indeed that a w/a l is mean independent of a l while the third hypothesis is that a l/a w is mean independent of a w. Table 3 indicates that neither a Pearson nor a Spearman correlation test are able to reject the second hypothesis. In contrast, a Pearson correlation test fails to reject the third hypothesis but the Spearman correlation test does reject it. This should not be interpreted as an contradiction between Pearson and Spearman tests but as an indication that the relationship between a l and a w is non-linear, as suggested by Fig 4. As a typical correlation test is conservative because it only checks a necessary condition for mean dependence [47], a further test is required. The second hypothesis states that E[a w∣a l] = c 1 a l + c 2 with c 1 = 1 and c 2 = 0 while the third hypothesis states that E[a l∣a w] = c 3 a w + c 4 with c 3 = 1 and c 4 = 0. A standard linear regression indicates that c 1, c 3 ≈ 1 but c 2 ≈ 0 is in the limit of rejection, whereas c 4 ≈ 0 fails (Table 4). Therefore, this suggests that the cut-offs do not follow hypothesis 3. Note that the significance of the values c 2 < 0 and c 4 > 0 implies that, in general, a l is significantly larger than a w. This is consistent with Fig 4.

Discussion

We have shown that Zipf’s law is fulfilled in long literary texts for several orders of magnitude in word and lemma frequency. The exponent of lemmas and the exponent of word forms are positively correlated. Similarly, the low-frequency cut-offs of lemmas and that of word forms are positively correlated. However, the exponent is more stable than the cut-off under the lemmatization transformation. While the exponent of lemmas is apparently centered around that of word forms and vice versa, the equivalent relationships are not supported for the cut-offs. However, we cannot exclude the possibility that the exponents of lemmas are indeed not centered around those of word forms. Some suspicious evidence comes from Fig 2, where it can clearly be seen that γ l ≤ γ w in most cases. The tendency to satisfy this inequality is supported by the slight increase of the exponent α when moving from words to lemmas that has been reported in previous research [26, 27] and that we have reviewed in the Introduction. Although Refs. [26, 27] employed methods that differ substantially from ours, Eq (3) allows one to interpret, with some approximation, the increase from α w to α l of Refs. [26, 27] as the drop from γ w to γ l we have found in most cases. The apparent stability of the exponent of Zipf’s law could be a type II error caused by the current size of our sample of long single-author texts. Furthermore, the apparently constant relationship between γ l/γ w and γ w (or between γ w/γ l and γ l) may hide a non-monotonic dependence, which the correlation tests above are blind to (our correlation tests are biased towards the detection of monotonic dependences). In spite of these limitations, one conclusion is clear: Exponents are more stable than cut-offs.

The similarity between the exponents of words and lemmas would be trivial if the lemmatization process affected only a few words, or if these words were those with the smallest values of the frequency (where the two distributions are more different). However, Fig 5(a) displays the number of words that corresponds to each lemma for La Regenta and for Vanhempieni romaani (in Finnish), showing that the effect of lemmatization is rather important [33]. Lemmatization affects all frequency scales, and, in some cases, almost 50 words are assigned to the same lemma in Spanish (verb paradigms), and more than 100 in Finnish (lemma olla). All texts in Spanish, French, and Finnish yield very similar plots; texts in English lead to flatter plots, because lemmatization is not such a big transformation there due to the morphological characteristics of English. Fig 5(b) shows the same effect in a different way, depicting the frequency of each word as a function of the frequency of its corresponding lemma. The presence of data above the diagonal is due to the fact that some words can be associated to more than one lemma, and then the sum of the frequencies of the words corresponding to one lemma is not the frequency of the lemma; this is the case in English of the word found, which can correspond to two lemmas, (to) found or (to) find.

Fig 5.

(a) Number of words per lemma as a function of lemma absolute frequency n l in Vanhempieni romaani (in Finnish) and in La Regenta. The figures for the former have been slightly shifted up for clarity sake. (b) Frequency of words n w as a function of the frequency of their lemmas n l in La Regenta.

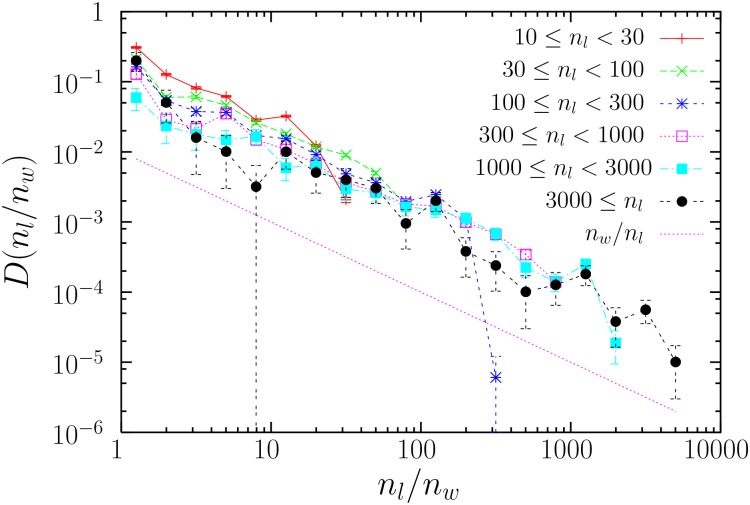

Finally, a complementary view is provided in Fig 6, which shows the distribution of the ratio of frequencies n l/n w for the words that correspond to a given lemma (the subindices refer to lemmas and words, respectively). In all cases this ratio is broadly distributed, resembling a power law, although the statistics is too poor to draw more solid conclusions. As an indication, we plot in the figure a power law with exponent around 1, which is a good visual guide for texts in Spanish and French. In Finnish, the distribution becomes broader, being closer to a power law with exponent 0.5, whereas in English the decay is faster, around an exponent 1.5 (not shown). In any case, the relation between the frequency of words and the frequency of their lemmas seems to lack a characteristic scale. The simplest case in which there is only one word per lemma (and then their frequencies are the same, n l/n w = 1) is quantified in the last column of Table 2.

Fig 6. Probability density D(n l/n w) of the frequency ratio for lemmas and words, n l/n w, in La Regenta.

Values of n l smaller than n w are disregarded, as they arise from words associated to more than one lemma. Bending for the largest n l/n w is expected as the maximum of the ratio is given by n l, which is not constant for each distribution but has a variation of half an order of magnitude (see plot legend).

A challenge for future research is to illuminate the approximated invariance in the word-lemma transformation. A simplistic approach is offered by MacArthur’s broken-stick model for species abundances [48]. Assume that each lemma, with frequency n, only “breaks” into two different words, with frequencies in the text given by m and n − m. If m is distributed uniformly between 0 and n, and the distribution of lemma frequencies is a power law, then, the distribution of word frequencies m turns out to be also a power law, with the same exponent (see the supplementary information of Ref. [49]). However, there is a long way from this oversimplification to reality. We have learned in Fig 5(a) that the number of words a lemma can yield varies a lot, from a few words for nominal lemmas to many words for verb lemmas in Spanish or French. More realistic models from an evolutionary perspective certainly appear as avenues for future work.

Conclusions

We have studied the robustness of Zipf’s law under lemmatization for single-author written texts. For this purpose it is crucial to unambiguously determine the power-law exponent γ of the frequency distribution of types, and the range of validity of Zipf’s law, given by the low-frequency cut-off a, both for unlemmatized texts (consisting of word forms) and for lemmatized texts (transformed into sequences of lemmas). We find that word and lemma distributions are somewhat different, but the exponents of Zipf’s law in both cases remain close to each other, for most of the texts, especially when compared to cut-offs. Nevertheless, the set of values of γ suggests a slight bias for the exponents of lemmas to decrease with respect to that of words. In contrast to the exponents, the cut-offs we find are not stable at all under the lemmatization transformation, but are significantly increased, which in turn implies a decrease in the range of validity of Zipf’s law. Random breaking of lemmas into words might explain the relative stability of the power-law distribution under the lemma-word transformation, but cannot account for the wider validity of Zipf’s law for words.

As Zipf’s law is a paradigm that goes beyond linguistics, having been found in the distribution of number of city inhabitants [50] or in the size of companies [51] (among many other systems in which “tokens” merge to constitute “types” [23]), our results could have a much broader applicability. In many of these cases, the aggregation of tokens to form types can be done in different ways, or types can be merged themselves to constitute “supertypes”, in a coarse-grained process akin both to lemmatization and to a transformation of the renormalization group [52]. This is what was attempted in Refs. [53, 54], where the spatial extent of elementary patches was added to define what was called there a natural city. Extrapolating our results, we could expect that Zipf’s exponent for city areas would not be very much affected by this process; in that case, the changes in Zipf’s α exponent found in Ref. [53] indicate that further study is necessary to elucidate whether the differences arise from the data (and so are due to differences in the underlying phenomenon) or from the data manipulation, e.g. the fitting method. In general, investigating the commonalities and differences between different systems displaying Zipf’s law is an area that should be actively addressed in the near future.

Materials and Methods

Corpus selection

First, we selected languages we have some command of (for data and error analysis purposes) and there are freely available lemmatization tools for [55, 56]. The exception is Finnish, which we included because it is a morphologically rich language that could shed light on the impact of lemmatization processes in Zipf’s law. We were interested in finding very long texts by single authors, and with that purpose we searched for the longest literary texts ever written. Of those novels published by mainstreaming publishers, Artamène is ranked as the longest, in any language, and Clarissa as the longest in English [57]. Don Quijote, consistently considered the best literary piece ever written in Spanish, is also of considerable length. The list was completed based on the availability of an electronic version of the novels in the Project Gutenberg [58]. Note that Artamène was not found in the Gutenberg Project but in a different source [59]. We were not able to find novels in Finnish of comparable length to those in the other languages and in this case they are much shorter, see Table 1.

Lemmatization

To carry out the comparison between word forms and lemmas, texts must be lemmatized. A manual lemmatization would have exceeded the possibilities of this project, so we employed natural language processing tools: FreeLing [55] for Spanish and English, TreeTagger [56] for French, and Connexor’s tools [60] for Finnish.

The tools carry out the following steps:

Tokenization: Segmentation of the texts into sentences and sentences into words, symbols, and punctuation marks (tokens).

Morphological analysis: Assignment of one or more lemmas and morphological information (a part-of-speech tag) to each token. For instance, houses in English can correspond to the plural form of the noun house or the third person singular, present tense form of the verb to house. At this stage, both are assigned whenever the word form houses is encountered.

Morphological disambiguation: An automatic tagger assigns the single most probable lemma and tag to each word form, depending on the context. For instance, in The houses were expensive the tagger would assign the nominal lemma and tag to houses, while in She usually houses him, the verb lemma and tag would be preferred. We note that as in both cases the lemma is the same, both occurrences would count in the statistics of the house lemma.

As all these steps are automatic, some errors are introduced at each step. However, the accuracy of the tools is quite high (e.g., around 95–97% at the token level for morphological disambiguation), such that a quantitative analysis based on the results of the automatic process can be carried out. Also note that step 2 is based on a pre-existing dictionary (of words, not of lemmas, also called a lexicon): only the words that are in the dictionary are assigned a reliable set of morphological tags and lemmas. Although most tools use heuristics to assign tag and/or lemma information to words that are not in the dictionary, the results shown in this paper are obtained by counting only tokens of lemmas for which the corresponding word types are found in the dictionary, so as to minimize the amount of error introduced by the automatic processing. This comes at the expense of losing some data. However, the dictionaries have quite a good coverage of the vocabulary, particularly at the token level, but also at the type level (see Table 5). The exceptions are Ulysses, because of the stream of consciousness prose, which uses many non-standard word forms, and Artamène, because 17th century French contains many word forms that a dictionary of modern French does not include.

Table 5. Coverage of the vocabulary by the dictionary in each language, both at the word-type and at the token level.

The average for all texts is also included. Remember that we distinguish between a word type (corresponding to its orthographic form) and its tokens (actual occurrences in text).

| Title | Tokens | Types |

|---|---|---|

| Clarissa | 96.9% | 68.0% |

| Moby-Dick | 94.7% | 70.8% |

| Ulysses | 90.4% | 58.6% |

| Don Quijote | 97.0% | 81.3% |

| La Regenta | 97.9% | 89.5% |

| Artamène | 83.6% | 43.6% |

| Bragelonne | 97.5% | 89.8% |

| Seitsemän v. | 95.4% | 89.8% |

| Kevät ja t. | 98.3% | 96.2% |

| Vanhempieni r. | 98.5% | 96.5% |

| average | 95.0% | 78.4% |

Note that the tools we have used do not only provide lemmatization, but also morphological analysis. That means that words are associated with a lemma (houses: house) and a morphological tag (houses: NNS, for common noun in plural form, or VBZ, for verb in present tense, third person singular). Tags express the main part of speech (POS; for houses, in this case, noun vs. verb) plus additional morphological information such as number, gender, tense, etc. That means that instead of reducing our vocabulary tokens to their lemmas, we could have chosen to reduce them to their lemma plus tag information (lemma-tag, house-NNS vs. house-VBZ), or to their lemma plus POS information (lemma-POS: house-N vs. house-V). Table 6 shows that, from all these reductions, pure lemmatization (houses: house) is the most aggressive one, while still being linguistically motivated, as it reduces the size of vocabulary V a factor which is between 2 (for Moby-Dick) and 5 (for Artamène). Therefore, in this paper we focus on comparing word tokens with lemmas. A further reduction in the lemmatization transformation is provided by our requirement, explained in the previous paragraph, that the corresponding word is included in the dictionary of the lemmatization software. If this restriction is eliminated, the results are very similar, as the restriction mainly operates at the smallest frequencies (let us say, n ≤ 10 or 20), whereas the power law fit takes place for larger frequencies (see Table 2). Alternatively to lemmatization, there is a different transformation that, instead of aggregating words into lemma-POS or lemmas, segregates words into what we may call word-lemma-tag. Table 6 shows that this transformation is not very significant, in terms of changes in the size of the vocabulary.

Table 6. Size of vocabulary V (i.e., number of types) when texts are decomposed in different sorts of types, being these: word-lemma-tag (w-l-t), plain words, lemma-POS (l-pos), lemma-POS of words in the dictionary (l-pos dic), lemmas, and lemmas of words in the dictionary (lemma dic).

The latter provide the most radical transformation, as it yields the largest reduction in resulting vocabulary.

| w-l-t | word | l-pos | l-pos dic | lemma | lemma dic | |

|---|---|---|---|---|---|---|

| Clarissa | 23624 | 20492 | 17058 | 10315 | 15356 | 9041 |

| Moby-Dick | 20777 | 18516 | 15774 | 10426 | 14226 | 9141 |

| Ulysses | 32952 | 29450 | 26412 | 14136 | 24089 | 12469 |

| Don Quijote | 23359 | 21180 | 11872 | 7906 | 11128 | 7432 |

| La Regenta | 24053 | 21871 | 12509 | 10500 | 11768 | 9900 |

| Artamène | 31574 | 25161 | 7605 | 5349 | 7177 | 5008 |

| Bragelonne | 28803 | 25775 | 12994 | 11342 | 12127 | 10744 |

| Seitsemän | 22851 | 22035 | 9749 | 7788 | 9607 | 7658 |

| Kevät ja | 26087 | 25071 | 9897 | 9054 | 9733 | 8898 |

| Vanhempieni | 37247 | 35931 | 14751 | 13678 | 14566 | 13510 |

Statistical procedures

We now explain the different statistical tools used in the paper. We begin with the procedure to find parameter values that describe the distributions of frequencies, that is, the power-law exponent γ and the low-frequency cut-off a. As we have already mentioned, the method we adopt is based on the one by Clauset et al. [23], but it incorporates important modifications that have been shown to yield a better performance in the continuous case [36, 37]. The algorithm we use is the one described in Ref. [39].

The key issue when fitting power laws is to determine the optimum value a of the variable for which the power-law fit holds. The method starts by selecting arbitrary values of a, and for each value of a the maximum likelihood estimation of the exponent is obtained. In the discrete case one has to maximize the likelihood function numerically, where the normalization factor is obtained from the Hurwitz zeta function. The goodness of the fit needs to be evaluated independently. For this, the method uses the Kolmogorov-Smirnov test, and the p-value of the fit is obtained from Monte Carlo simulations of the fitted distribution. The simulated data need to undergo the same procedure as the original empirical data in order to avoid biases in the fit (which would lead to inflated p-values). In this way, for each value of a we obtain a fit and a quantification of the goodness of the fit given by its p-value. The chosen value of a is the smallest one (which gives the largest power-law range), provided that its p-value is large enough. This has an associated estimated maximum likelihood exponent, which is the final result for exponent. Its standard deviation (for the quantification of its uncertainty) is obtained, for fixed a, from the standard deviation of the values obtained in the Monte Carlo simulations.

The complete algorithm is implemented here with the following specifications. The minimum frequency a is sampled with a resolution of 10 points per order of magnitude, in geometric progression to yield a constant separation of a–values in logarithmic scale. The procedure is simple: A given value for a is obtained by multiplying its previous value by , with the initial value of a being 1, and in this sense the relative error in a can be considered to be of the order of ; the values of a produced that are not integers are rounded to the next integer a posteriori to become true parameters. The goodness of fit is evaluated with 1000 Monte Carlo simulations; and a p-value is considered to be large enough if it exceeds 0.20.

Now we review the methods used to investigate the similarity between words and lemmas from the perspective of the parameters of the frequency distribution. Student’s t–test for paired samples makes use of the differences between the values of the parameters of each text (either exponents or cut-offs, word minus lemma) and rescales the mean of the differences by dividing it by the (unbiased) standard deviation of the differences and by multiplying by (with 𝓝 the number of data, 10 books in our case). This yields the t statistic, which, if the differences are normally distributed with the same standard deviation and zero mean, follows a t–Student distribution with 𝓝 − 1 degrees of freedom. Simulations of 𝓝 independent normally distributed variables with zero mean and the same standard deviation mimic the distribution of the differences under the null hypothesis and lead to the t–Student distribution, which allows the calculation of the p–value. This simulation method allows for the systematic treatment of outliers, as mentioned in the main text (if one outlier is removed, then, obviously, 𝓝 = 9 in the calculation of the value of t).

Correlations between parameters are calculated using either the Pearson correlation coefficient or the Spearman correlation coefficient. While the Pearson coefficient is a measure of the strength of the linear association, the Spearman correlation coefficient is able to detect non-linear dependences [44, 61]. The former is defined as the covariance divided by the product of the standard deviations; the latter is defined in the same way but replacing the values of each variable by their ranks (one, two, etc.); both are represented by ρ. In order to test the null hypothesis ρ = 0 we perform a reshuffling of one of the variables and calculate the resulting ρ. The p-value is just the fraction of values of ρ for the reshuffled data with absolute value larger or equal than the absolute value of ρ for the original data (a two-sided test).

We could have also used a correlation ratio test [47], a test based on the correlation ratio, another correlation statistic [62]. That test provides a way of testing for mean independence that is a priori more powerful than a standard correlation test (a Pearson correlation test is a conservative test of mean dependence [47]). However, our dataset exhibits a high diversity of values (Table 2), which is known to lead to type II errors with that statistic [47].

Acknowledgments

We thank the Connexor Oy company for tagging the Finnish texts, R. D. Malmgren and P. Puig for discussions about statistical testing, G. Altmann for his advice from a quantitative linguistics perspective, L. Devroye for facilitating a copy of his valuable book for free, and A. Deluca and F. Font-Clos for feedback on the fitting of word and lemma frequency distributions.

Data Availability

All relevant data are available via Figshare (http://dx.doi.org/10.6084/m9.figshare.1430465).

Funding Statement

This work was supported by FIS2009-09508 from the former Spanish Ministerio de Ciencia e Innovación (www.micinn.es, AC); FIS2012-31324 from the Spanish Ministerio de Economia y Competitividad (www.mineco.gob.es, AC); 2009SGR-164, 2014SGR-1307 from the Agència de Gestió d’Ajuts Universitaris i de Recerca (www.gencat.cat/agaur, AC); Beatriu de Pinós grants 2010 BP-A 00070 and 2010 BP-A2 00015 from Agència de Gestió d’Ajuts Universitaris i de Recerca (www.gencat.cat/agaur of the Generalitat de Catalunya, GB); BASMATI, TIN2011-27479-C04-03 and OpenMT-2, TIN2009-14675-C03 from the former Spanish Ministerio de Ciencia e Innovación (www.micinn.es, RFiC); and grant Iniciacio i reincorporacio a la recerca from Universitat Politecnica de Catalunya (www.upc.edu, RFiC). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Zipf GK (1972) Human Behaviour and the Principle of Least Effort An Introduction to Human Ecology. New York: Hafner reprint; 1st edition: Cambridge, MA: Addison-Wesley, 1949. [Google Scholar]

- 2. Zanette D (2014) Statistical Patterns in Written Language. ArXiv 1412: 3336. [Google Scholar]

- 3. Miller GA (1968) Introduction In: The Psycho-Biology of Language: an Introduction to Dynamic Psychology, Cambridge, MA, USA: MIT Press; pp. v–x. The book is authored by G. K. Zipf. [Google Scholar]

- 4. Li W (1992) Random texts exhibit Zipf’s-law-like word frequency distribution. IEEE T Inform Theory 38: 1842–1845. 10.1109/18.165464 [DOI] [Google Scholar]

- 5. Ferrer-i-Cancho R, Elvevåg B (2010) Random texts do not exhibit the real Zipf’s-law-like rank distribution. PLoS ONE 5: e9411 10.1371/journal.pone.0009411 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Suzuki R, Tyack PL, Buck J (2005) The use of Zipf’s law in animal communication analysis. Anim Behav 69: 9–17. 10.1016/j.anbehav.2004.08.004 [DOI] [Google Scholar]

- 7. McCowan B, Doyle LR, Jenkins JM, Hanser SF (2005) The appropriate use of Zipf’s law in animal communication studies. Anim Behav 69: F1–F7. 10.1016/j.anbehav.2004.09.002 [DOI] [Google Scholar]

- 8. Ferrer-i-Cancho R, McCowan B (2012) The span of dependencies in dolphin whistle sequences. J Stat Mech: P06002. [Google Scholar]

- 9. Ferrer i Cancho R, Servedio V (2005) Can simple models explain Zipf’s law for all exponents? Glottom 11: 1–8. [Google Scholar]

- 10. Baixeries J, Elvevåg B, Ferrer-i-Cancho R (2013) The evolution of the exponent of Zipf’s law in language ontogeny. PLoS ONE 8: e53227 10.1371/journal.pone.0053227 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Piotrowski RG, Pashkovskii VE, Piotrowski VR (1995) Psychiatric linguistics and automatic text processing. Autom Doc Math Ling 28: 28–35. [Google Scholar]

- 12. Piotrowski RG, Spivak DL (2007) Linguistic disorders and pathologies: synergetic aspects In: Grzybek P, Köhler R, editors, Exact methods in the study of language and text. To honor Gabriel Altmann, Berlin: Gruyter; pp. 545–554. [Google Scholar]

- 13.Van Egmond M (2011) Word finding difficulties in aphasia and their effect on Zipf’s law. Master’s thesis, Faculty of Humanities, the Netherlands.

- 14. Hernández-Fernández A, Diéguez-Vide F (2013) La ley de Zipf y la detección de la evolución verbal en la enfermedad de Alzheimer. Anuario de Psicología 43: 67–82. [Google Scholar]

- 15. Ferrer i Cancho R, Solé RV (2001) Two regimes in the frequency of words and the origin of complex lexicons: Zipf’s law revisited. J Quant Linguist 8: 165–173. 10.1076/jqul.8.3.165.4101 [DOI] [Google Scholar]

- 16. Petersen AM, Tenenbaum J, Havlin S, Stanley HE, Perc M (2012) Languages cool as they expand: Allometric scaling and the decreasing need for new words. Sci Rep 2: 943 10.1038/srep00943 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17. Gerlach M, Altmann EG (2013) Stochastic model for the vocabulary growth in natural languages. Phys Rev X 3: 021006. [Google Scholar]

- 18. Naranan S, Balasubrahmanyan VK (1993) Information theoretic model for frequency distribution of words and speech sounds (phonemes) in language. J Sci Ind Res 52: 728–738. [Google Scholar]

- 19. Egghe L (2000) General study of the distribution of n-tuples of letters or words based on the distributions of the single letters or words. Math Comput Model 31: 35–41. 10.1016/S0895-7177(00)00058-3 [DOI] [Google Scholar]

- 20. Baayen H (2001) Word Frequency Distributions. Kluwer, Dordrecht. [Google Scholar]

- 21. Jayaram BD, Vidya MN (2008) Zipf’s law for Indian languages. J Quant Linguist 15: 293–317. 10.1080/09296170802326640 [DOI] [Google Scholar]

- 22. Tuzzi A IIP, Altmann G (2009) Zipf’s law in Italian texts. J Quant Linguist 16: 354–367. 10.1080/09296170903211519 [DOI] [Google Scholar]

- 23. Clauset A, Shalizi CR, Newman MEJ (2009) Power-law distributions in empirical data. SIAM Rev 51: 661–703. 10.1137/070710111 [DOI] [Google Scholar]

- 24. Li W, Miramontes P, Cocho G (2010) Fitting ranked linguistic data with two-parameter functions. Entropy 12: 1743–1764. 10.3390/e12071743 [DOI] [Google Scholar]

- 25. Baroni M (2009) Distributions in text In: Lüdeling A, Kytö M, editors, Corpus linguistics: An international handbook, Volume 2 Mouton de Gruyter, Berlin, pp. 803–821. [Google Scholar]

- 26. Kwapień J, Drozdz S (2012) Physical approach to complex systems. Phys Rep 515: 115–226. 10.1016/j.physrep.2012.01.007 [DOI] [Google Scholar]

- 27. Bentz C, Kiela D, Hill F, Buttery P (2014) Zipf’s law and the grammar of languages: A quantitative study of old and modern English parallel texts. Corpus Ling Ling Theory 10: 175–211. [Google Scholar]

- 28. Hatzigeorgiu N, Mikros G, Carayannis G (2001) Word length, word frequencies and Zipf’s law in the Greek language. J Quant Linguist 8: 175–185. 10.1076/jqul.8.3.175.4096 [DOI] [Google Scholar]

- 29. Font-Clos F, Boleda G, Corral A (2013) A scaling law beyond Zipf’s law and its relation with Heaps’ law. New J Phys 15: 093033 10.1088/1367-2630/15/9/093033 [DOI] [Google Scholar]

- 30. Ferrer-i-Cancho R, Gavaldà R (2009) The frequency spectrum of finite samples from the intermittent silence process. J Am Assoc Inf Sci Technol 60: 837–843. 10.1002/asi.21033 [DOI] [Google Scholar]

- 31. Mantegna RN, Buldyrev SV, Goldberger AL, Havlin S, Peng CK, Simons M, et al. (1995) Systematic analysis of coding and noncoding DNA sequences using methods of statistical linguistics. Phys Rev E 52: 2939–2950. 10.1103/PhysRevE.52.2939 [DOI] [PubMed] [Google Scholar]

- 32. Serrà J, Corral A, Boguñá M, Haro M, Arcos JL (2012) Measuring the evolution of contemporary western popular music. Sci Rep 2: 521 10.1038/srep00521 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33. Popescu II, Altmann G (2006) Some aspects of word frequencies. Glottom 13: 23–46. [Google Scholar]

- 34. Conrad B, Mitzenmacher M (2004) Power laws for monkeys typing randomly: the case of unequal probabilities. IEEE T Inform Theory 50: 1403–1414. 10.1109/TIT.2004.830752 [DOI] [Google Scholar]

- 35. Stumpf MPH, Porter MA (2012) Critical truths about power laws. Science 335: 665–666. 10.1126/science.1216142 [DOI] [PubMed] [Google Scholar]

- 36. Peters O, Deluca A, Corral A, Neelin JD, Holloway CE (2010) Universality of rain event size distributions. J Stat Mech P11030 10.1088/1742-5468/2010/11/P11030 [DOI] [Google Scholar]

- 37. Corral A, Font F, Camacho J (2011) Non-characteristic half-lives in radioactive decay. Phys Rev E 83: 066103 10.1103/PhysRevE.83.066103 [DOI] [PubMed] [Google Scholar]

- 38. Deluca A, Corral A (2013) Fitting and goodness-of-fit test of non-truncated and truncated power-law distributions. Acta Geophys 61: 1351–1394. 10.2478/s11600-013-0154-9 [DOI] [Google Scholar]

- 39. Corral A, Deluca A, Ferrer-i-Cancho R (2012) A practical recipe to fit discrete power-law distributions. ArXiv 1209: 1270. [Google Scholar]

- 40. Taylor JR (1997) An introduction to error analysis The study of uncertainty in phyisical measurements. Sausalito, California: University Science Books. [Google Scholar]

- 41. Bland JM, Altman DG (1995) Multiple significance tests: the Bonferroni method. Brit Med J 310: 170–170. 10.1136/bmj.310.6973.170 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42. Abdi H (2007) Bonferroni and Šidák corrections for multiple comparisons In: Salkind NJ, editor, Encyclopedia of Measurement and Statistics. Sage, Thousand Oaks, pp. 103–107. [Google Scholar]

- 43. Poirier DJ (1995) Intermediate Statistics and Econometrics: A Comparative Approach. Cambridge: MIT Press. [Google Scholar]

- 44. Conover WJ (1999) Practical nonparametric statistics. New York: Wiley; 3rd edition. [Google Scholar]

- 45. Kolmogorov AN (1956) Foundations of the Theory of Probability. New York: Chelsea Publising Company, 2nd edition. [Google Scholar]

- 46. Corral A (2015) Scaling in the timing of extreme events. Chaos Soliton Fract 74: 99–112. 10.1016/j.chaos.2015.01.011 [DOI] [Google Scholar]

- 47. Ferrer-i-Cancho R, Hernández-Fernández A, Baixeries J, Dębowski Ł, Mačutek J (2014) When is Menzerath-Altmann law mathematically trivial? A new approach. Stat Appl Genet Mol Biol 13: 633–644. [DOI] [PubMed] [Google Scholar]

- 48. MacArthur RH (1957) On the relative abundance of bird species. Proc Natl Ac Sci USA 43: 293–295. 10.1073/pnas.43.3.293 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49. Corral A, Ossó A, Llebot JE (2010) Scaling of tropical-cyclone dissipation. Nature Phys 6: 693–696. 10.1038/nphys1725 [DOI] [Google Scholar]

- 50. Malevergne Y, Pisarenko V, Sornette D (2011) Testing the Pareto against the lognormal distributions with the uniformly most powerful unbiased test applied to the distribution of cities. Phys Rev E 83: 036111 10.1103/PhysRevE.83.036111 [DOI] [PubMed] [Google Scholar]

- 51. Axtell RL (2001) Zipf distribution of U.S. firm sizes. Science 293: 1818–1820. 10.1126/science.1062081 [DOI] [PubMed] [Google Scholar]

- 52. Corral A (2005) Renormalization-group transformations and correlations of seismicity. Phys Rev Lett 95: 028501 10.1103/PhysRevLett.95.028501 [DOI] [PubMed] [Google Scholar]

- 53. Jiang B, Jia T (2011) Zipf’s law for all the natural cities in the United States: a geospatial perspective. Int J Geograp Inform Sci 25(8): 1260–1281. 10.1080/13658816.2010.510801 [DOI] [Google Scholar]

- 54.Jiang B, Yin J, Liu Q (2014) Zipf’s law for all the natural cities around the world. Int J Geogr Inf Sci: in press.

- 55.FreeLing. http://nlp.lsi.upc.edu/freeling.

- 56.Schmid H (1994) Probabilistic part-of-speech tagging using decision trees. In: Proceedings of International Conference on New Methods in Language Processing. Citeseer, Manchester, volume 12, pp. 44–49.

- 57. http://en.wikipedia.org/wiki/List_of_longest_novels.

- 58.Project Gutenberg. http://www.gutenberg.org.

- 59.Artamène ou le Grand Cyrus. http://www.artamene.org.

- 60.Connexor. http://www.connexor.eu.

- 61. Zou K, Tuncali K, Silverman SG (2003) Correlation and simpler linear regression. Radiology 227: 617–628. 10.1148/radiol.2273011499 [DOI] [PubMed] [Google Scholar]

- 62. Kruskal WH (1958) Ordinal measures of association. J Am Statist Assoc 53: 814–861. 10.1080/01621459.1958.10501481 [DOI] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

All relevant data are available via Figshare (http://dx.doi.org/10.6084/m9.figshare.1430465).