Significance

When left and right eyes disagree about what is being viewed, the brain resolves the disagreement by compromise: Visual awareness alternates between the two eyes’ views over time. Called “binocular rivalry,” these alternations in awareness are widely thought to reveal the usually implicit inferential nature of visual processing. In this study, we found that the perceptual dynamics defining rivalry are influenced by abstract, relational properties between visual and auditory sequences comprising musical melodies. However, this bisensory interaction impacts only the amount of time a visual sequence dominates awareness, with audiovisual interaction being powerless to hasten a visual sequence’s emergence from invisibility into awareness. These findings situate the inferential processing putatively transpiring during rivalry prior to extraction of abstract, semantic information.

Keywords: music, binocular rivalry, multisensory interaction, visual awareness, audio-visual congruence

Abstract

Predictive influences of auditory information on resolution of visual competition were investigated using music, whose visual symbolic notation is familiar only to those with musical training. Results from two experiments using different experimental paradigms revealed that melodic congruence between what is seen and what is heard impacts perceptual dynamics during binocular rivalry. This bisensory interaction was observed only when the musical score was perceptually dominant, not when it was suppressed from awareness, and it was observed only in people who could read music. Results from two ancillary experiments showed that this effect of congruence cannot be explained by differential patterns of eye movements or by differential response sluggishness associated with congruent score/melody combinations. Taken together, these results demonstrate robust audiovisual interaction based on high-level, symbolic representations and its predictive influence on perceptual dynamics during binocular rivalry.

Vision can be confusing, particularly when the retinal images inaugurating vision are ambiguous or underspecified with respect to their origins (1, 2). In some situations, confusion can be resolved by reliance on contextual information (3), prior experience (4), expectations (5), and/or implicit knowledge about structural regularities in our visual environment (6). In other situations, however, confusion resists resolution, in which case one can directly experience the dynamical nature of competition as conflicting perceptual interpretations vie for dominance (7). Among the visual phenomena exhibiting this behavior, none is more compelling than binocular rivalry (8).

When the two eyes view dissimilar monocular stimuli, stable binocular single vision gives way to interocular competition, characterized by unpredictable fluctuations in visual awareness of the rival stimuli (9, 10). Rivalry offers a way to study the inferential nature of perception when the brain is confronted with conflicting sensory evidence (11, 12). Within this context, recent studies imply that rivalry dynamics are governed by influences related to the likelihood of competing perceptual interpretations, such as motor actions (13), affective connotation (14–16), familiarity (17), and concomitant sensory input from other modalities (18–20). Here we examined the limits of susceptibility of rivalry to predictive influences by asking whether binocular rivalry dynamics depend on multisensory congruence between abstract representations familiar to individuals with expertise in that domain of abstraction. The domain we selected was music, for three reasons: (i) musical notation is symbolic; (ii) only people versed in music can decipher that notation; and (iii) melodic structure can be experienced via sight or sound.

Results

Experiment 1: Extended Tracking of Binocular Rivalry.

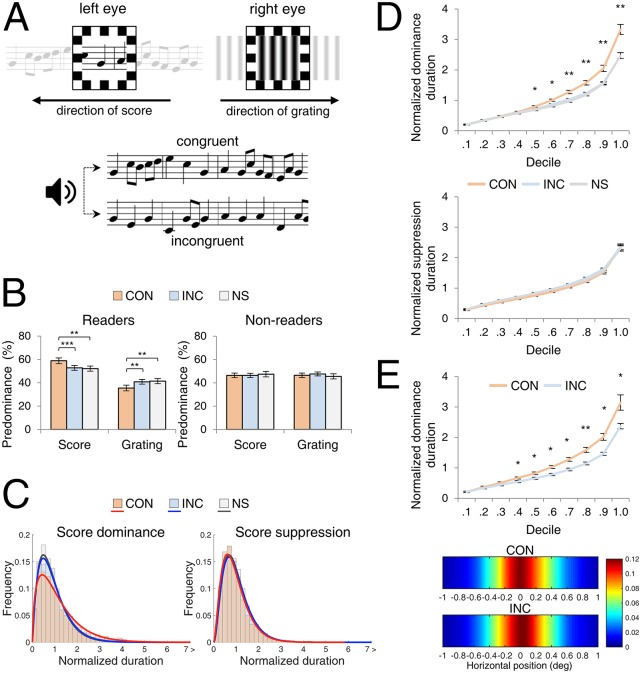

Experiment 1 was implemented as a conventional binocular rivalry task in which participants pressed buttons to track alternating periods of dominance and suppression between dissimilar monocular displays viewed by the two eyes (Fig. 1A): One eye saw a musical score scrolling through a viewing window, and the other eye saw a vertical, drifting grating. Participants tracked alternations in perception during 30-s trials administered in one of three audiovisual (AV) conditions: (i) listening to a melody that was congruent (CON) with the score, (ii) listening to a melody that was incongruent (INC), or (iii) listening to no sound (NS). The task itself required no familiarity with musical notation, allowing us to test people who can read music and those who cannot.

Fig. 1.

Experiment 1. (A) Schematics of rival stimuli and AV conditions. (Upper) One eye viewed a single-note musical score scrolling right to left, and the other eye viewed a vertical grating scrolling left to right. (Lower) In some trials, the participant heard an auditory melody that either matched (CON) or did not match (INC) the visual score; in other trials (not illustrated) no melody was heard (NS). (B) Predominance plotted for readers and nonreaders; pairs of predominance values for a given AV condition do not necessarily sum to 100%, because total trial duration included brief periods of incomplete dominance (i.e., mixtures, which comprised 5.6, 6.4, and 6.5% of the total viewing time for CON, INC, and NS conditions, respectively, values that are not significantly different when examined using ANOVA). Predominance values computed with mixtures excluded produce exactly the same pattern of results. **P < 0.01, ***P < 0.001. Error bars denote SEM. (C) Frequency histograms showing the relative proportion of normalized dominance durations for score and for grating (i.e., score suppression) in readers in CON trials (orange), INC trials (blue), and NS trials (gray). (D) Decile plots for normalized score dominance durations and for normalized score suppression durations. *P < 0.05, **P < 0.01, FDR corrected. Error bars represent SEM. (E) Eye movement measurement in control experiment 1. (Upper) Decile plot of normalized score dominance durations for CON and INC conditions. *P < 0.05, **P < 0.01, FDR corrected. (Lower) Heat-maps showing horizontal gaze stability for CON and INC trials. The key indicates the mapping between color and incidence of fixation, with warm colors denoting greater incidence of fixation on the given spatial location of the rival target area.

Our initial analyses focused on the relative predominance of the pair of rival stimuli (i.e., percentages of time the score or the grating was exclusively dominant). Because left eye and right eye predominance were equivalent, we pooled results across eyes for all statistical analyses of conditions and groups.
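As a minimal sketch of how predominance can be computed from a tracking record, the following assumes each trial is stored as a list of labeled intervals. The labels, the tuple layout, and the numbers are hypothetical illustrations, not the authors' data format or data.

```python
def predominance(intervals, trial_duration):
    """Return the percentage of trial time spent in each perceptual state.

    intervals: list of (state, start_s, end_s) tuples, where state is
    'score', 'grating', or 'mixed' (hypothetical labels).
    """
    totals = {"score": 0.0, "grating": 0.0, "mixed": 0.0}
    for state, start, end in intervals:
        totals[state] += end - start
    return {state: 100.0 * t / trial_duration for state, t in totals.items()}


# Illustrative 30-s trial (made-up numbers, not data from the study).
trial = [
    ("score", 0.0, 12.0),
    ("mixed", 12.0, 13.5),
    ("grating", 13.5, 24.0),
    ("score", 24.0, 30.0),
]
result = predominance(trial, trial_duration=30.0)
```

Because mixed periods are tallied separately, the score and grating percentages need not sum to 100%.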

As summarized in Fig. 1B, musical scores in rivalry enjoyed significantly greater predominance than did the grating among people able to read music [t (22) = 3.71, P < 0.01; Cohen’s effect size index d = 1.534], whereas score and grating predominance were not different for nonreaders [t (10) = 0.05, P = 0.956, d = 0.033]. We next tailored our statistical analyses to examine predominance in more detail only in those persons who could read music. Paired t tests between the three AV conditions revealed that the predominance of scores was significantly greater in CON trials than in INC trials [t (22) = 3.87, P < 0.001, d = 0.562] or in NS trials [t (22) = 0.78, P < 0.01, d = 0.602].

For our purpose, it is essential to know whether these significant differences in score predominance for the AV conditions arose from lengthened individual dominance durations of the scores and/or from abbreviated individual suppression durations of the scores (i.e., dominance durations of the grating); either of those possibilities could promote the observed enhancement in predominance of musical scores. To compare the durations of dominance and suppression for these conditions, we first needed to take into account the substantial individual differences in overall durations of dominance existing among our participants: The average dominance durations spanned a nearly threefold range (1.28–3.52 s), consistent with previous results (21, 22). To remove that source of variance from our analyses of dominance durations, we applied a widely used normalization procedure (23) whereby the individual dominance durations of scores for each participant were divided by that participant’s average dominance duration of scores and the individual suppression durations of scores were divided by that participant’s average suppression duration of scores. Frequency histograms of those normalized duration values (Fig. 1C) conform closely to the signature distribution characteristic of rivalry durations (24). Statistical analyses of these normalized durations yielded the same pattern of results as seen with predominance and, for that matter, with the actual dominance durations. Specifically, for the readers, normalized durations for scores seen in CON trials were significantly longer than those measured in INC trials [t (22) = 4.04, P < 0.001, d = 1.454] and those measured in NS trials [t (22) = 3.96, P < 0.001, d = 1.524]. Normalized score-suppressed durations did not differ significantly in these pairwise comparisons. We also analyzed normalized durations for the three AV conditions within the nonreader group and found no statistically significant differences in either score-dominant or score-suppressed durations. The means and SEs of the raw and the normalized dominance and suppression durations for readers and nonreaders in each of the three AV conditions are provided in Table S1.
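The normalization step can be sketched as follows. This is an illustration under one assumption that the text does not spell out: each participant's average for a given rival state is taken over all of that participant's durations pooled across the three AV conditions. The function name and the numbers are hypothetical.

```python
from statistics import mean


def normalize_durations(durations_by_condition):
    """Normalize one participant's durations for one rival state.

    durations_by_condition maps an AV condition label ('CON', 'INC', 'NS')
    to a list of durations in seconds. Every duration is divided by the
    participant's mean duration pooled over the three conditions
    (the pooling is an assumption; see the lead-in).
    """
    pooled = [d for ds in durations_by_condition.values() for d in ds]
    grand_mean = mean(pooled)
    return {
        cond: [d / grand_mean for d in ds]
        for cond, ds in durations_by_condition.items()
    }


# Made-up score-dominance durations (s) for one hypothetical participant.
score_dominance = {
    "CON": [3.0, 3.5],
    "INC": [2.0, 2.5],
    "NS": [2.0, 2.0],
}
normalized = normalize_durations(score_dominance)
condition_means = {cond: mean(vals) for cond, vals in normalized.items()}
```

Under this pooling, a participant's normalized durations average to 1 across conditions, so condition means above or below 1 indicate relatively long or short durations for that participant.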

Table S1.

Summary data for experiment 1: mean score dominance and score suppression durations for CON, INC, and NS conditions measured in readers and in nonreaders in experiment 1

| Group | Measure | Score dominant CON (SEM) | Score dominant INC (SEM) | Score dominant NS (SEM) | Score suppressed CON (SEM) | Score suppressed INC (SEM) | Score suppressed NS (SEM) |
|---|---|---|---|---|---|---|---|
| Readers (n = 23) | Dominance duration (s) | 2.815 (0.196) | 2.284 (0.141) | 2.273 (0.184) | 1.818 (0.153) | 1.931 (0.158) | 1.873 (0.139) |
| | Normalized dominance duration | 1.162 (0.040) | 0.947 (0.018) | 0.926 (0.022) | 0.962 (0.024) | 1.027 (0.014) | 1.009 (0.017) |
| Nonreaders (n = 11) | Dominance duration (s) | 1.926 (0.229) | 1.944 (0.224) | 2.012 (0.281) | 1.961 (0.192) | 2.052 (0.234) | 1.922 (0.187) |
| | Normalized dominance duration | 0.989 (0.011) | 1.004 (0.019) | 1.013 (0.025) | 0.998 (0.012) | 1.029 (0.016) | 0.979 (0.015) |

We next performed a more fine-grained analysis (25) of the normalized dominance and suppression durations measured in individual trials, the aim being to examine when in time those various AV conditions begin to differ from one another for the music readers. First, we sorted the individual normalized dominance durations and the individual normalized suppression durations measured during CON, INC, and NS trials from the shortest to the longest, separately for each participant. Then for each of those six conditions (two rivalry states × three AV conditions) we divided the ordered sets of durations into deciles, i.e., 10 groups of durations, each with approximately equal numbers of ordered duration values. For each decile group we calculated the mean rival state durations and then averaged those individual decile means over observers to create the curves shown in Fig. 1D. Paired comparison t tests were performed on each of the CON/INC decile pairs, with P values corrected using the false-discovery rate (FDR) procedure applicable when performing multiple comparisons (26). The significant influence of CON did not emerge until dominance durations were equal to or longer than the dominance duration associated with the fifth decile. This finding makes sense, because congruence between a score and a melody requires experiencing multiple consecutive notes, a point we discuss further later. The CON and INC durations for the score-suppressed durations did not differ at any decile level.
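The decile step and the multiple-comparison correction can be sketched as below. Two points are assumptions rather than details given in the text: the rule used to split the ordered durations into 10 groups, and the use of the Benjamini-Hochberg step-up variant of FDR control. Function names are hypothetical.

```python
def decile_means(durations):
    """Sort durations and return the mean of each of 10 roughly equal groups."""
    ordered = sorted(durations)
    n = len(ordered)
    if n < 10:
        raise ValueError("need at least 10 durations to form deciles")
    means = []
    for k in range(10):
        lo = (k * n) // 10
        hi = ((k + 1) * n) // 10
        group = ordered[lo:hi]
        means.append(sum(group) / len(group))
    return means


def fdr_bh(p_values, alpha=0.05):
    """Benjamini-Hochberg step-up: return a reject flag for each p value."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k (1-based) with p_(k) <= (k / m) * alpha.
    cutoff = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * alpha:
            cutoff = rank
    # Reject every hypothesis whose rank is at or below the cutoff.
    reject = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= cutoff:
            reject[idx] = True
    return reject


# Made-up inputs for illustration only.
example_deciles = decile_means(list(range(1, 21)))
example_p = [0.6, 0.4, 0.3, 0.2, 0.04, 0.03, 0.015, 0.01, 0.004, 0.001]
example_reject = fdr_bh(example_p)
```

In an analysis like the one described, `decile_means` would be applied per participant and condition, and the 10 p values from the paired CON/INC comparisons would then be passed to the correction together.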

Eye Movement Hypothesis.

Both rival targets used in experiment 1 comprised contours moving in opposite directions, stimulus conditions that could have produced tracking eye movements (27), especially because we did not provide a fixation point (Methods). Could the differences in dominance durations measured in readers during CON and INC trials stem from differences in the pattern of eye movements evoked during those trials? That notion seemed unlikely, because the conditions of visual stimulation were identical for CON and for INC, but, to be sure, we replicated the conditions of experiment 1 in 20 new participants, all of whom were accomplished music readers, this time measuring eye movements throughout the course of each trial (see Methods and SI Results for details).

Considering first the psychophysical tracking results (Fig. 1E), dominance durations of the score were significantly longer in CON trials than in INC trials for all but the briefest decile categories, replicating the results from experiment 1.

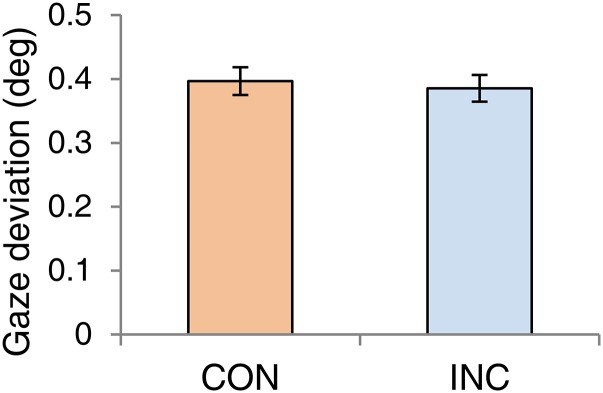

Turning to the eye movement measures, those records disclose episodes of optokinetic reflex (OKR) movements whose direction correlated with the direction of motion of the rival target reported as dominant, consistent with others’ reports (28, 29). However, there also were periods devoid of OKR, in which the eyes were relatively stationary or executed small saccades during a rivalry state, making it impossible to predict reliably which stimulus was dominant (SI Results and Fig. S1). For our purpose, the mixed success of OKR in predicting the dominant stimulus was not a problem, because what we needed to know was whether the variability of horizontal eye position differed between CON trials and INC trials. In fact, we observed no discernible difference in the variability of eye positions in the two conditions (Fig. 1E and Fig. S2).

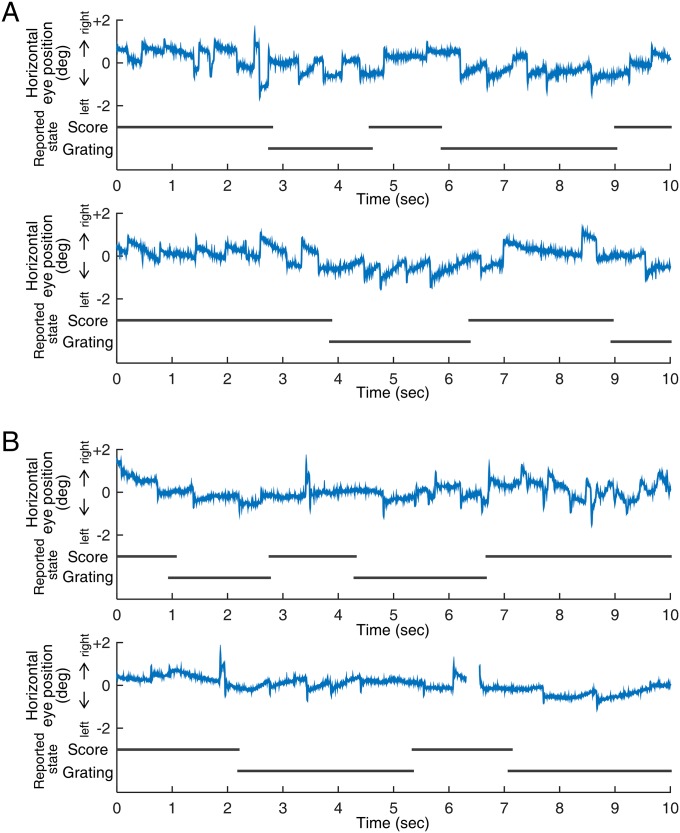

Fig. S1.

OKR movements over several state transitions in a representative observer showing examples reflecting perceptual dominance (A) and examples devoid of OKR (B). Each 10-s record shown here is a part of a complete, ∼30-s tracking period in different trials. In all panels, the y axis plots horizontal eye position, with positive values denoting locations to the right of the center of the display and negative numbers denoting locations to the left of center.

Fig. S2.

Mean gaze deviations measured in CON and INC trials.

Experiment 2: Discrete Trial Binocular Rivalry.

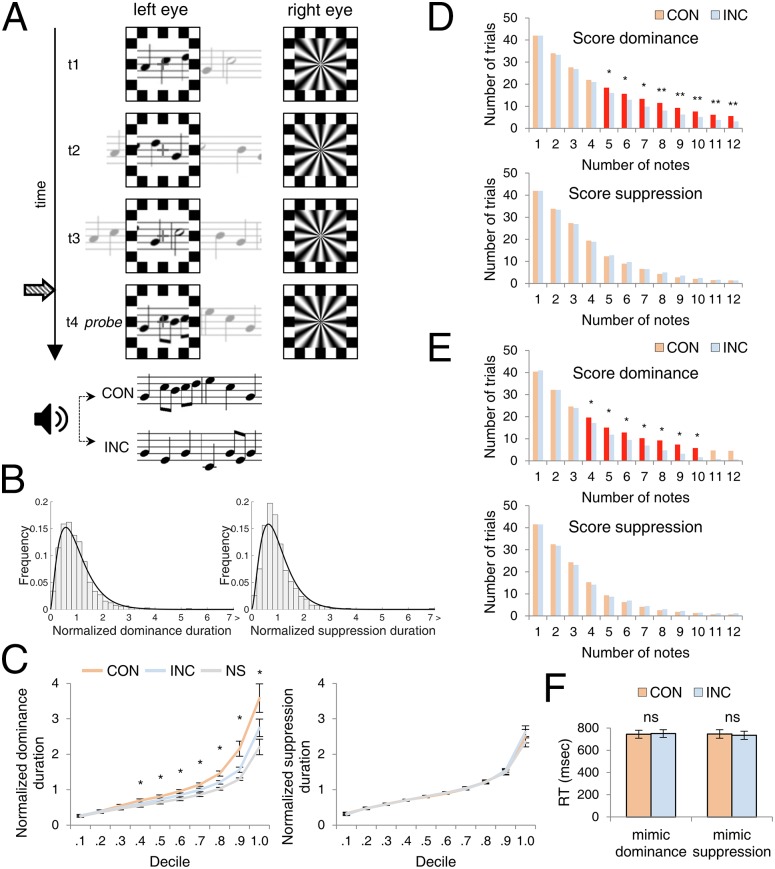

Congruence between auditory melody and visual musical score affected dominance durations for the score but had no significant influence on suppression durations for the score. Evidently, hearing music has no impact on the speed of recovery from suppression of a musical score, regardless of whether music and score are CON or INC. This conclusion contrasts with other findings showing that contextual congruence (30, 31) and experience-based familiarity (32) can influence how quickly a visual stimulus emerges from interocular suppression to achieve dominance. Hence, we wanted to examine more carefully the impact of INC and CON during phases of score suppression using a discrete trial probe technique that is arguably more sensitive than rivalry tracking when it comes to detecting weak, subliminal influences during rivalry (33, 34). This probe technique involves introducing CON or INC auditory melodies unpredictably when the musical score is dominant or suppressed (Fig. 2A).

Fig. 2.

Experiment 2. (A) Schematics of rival stimuli (t1, t2, and t3 denote time) and AV conditions. One eye viewed a musical score drifting leftward while the other eye viewed a counterphase flickering radial grating. Following an unpredictable number of state changes (signified by participant’s button presses), the initially random score smoothly morphed into a melodic probe score (signified by the time label t4). Concurrently with probe presentation, the participant began hearing an auditory melody that either matched (CON) or did not match (INC) the probe score. In other trials, probe presentations were unaccompanied by auditory melody (NS). (B) Frequency histograms of the relative proportion of the normalized score dominance durations and score suppression durations in both readers and nonreaders, collapsed over AV conditions. (C) Decile plots for the normalized score dominance durations and normalized score suppression durations. *P < 0.05, FDR corrected. Error bars denote SEM. (D) Frequency histograms of trials in experiment 2 categorized by the number of notes presented visually and auditorily for score dominance and for score suppression conditions in readers. Bolded bars and symbols denote categories in which CON and INC were significantly different in terms of incidence of number of trials. *P < 0.05, **P < 0.01 FDR corrected. (E) Results in same format as D, obtained from a new group of participants. *P < 0.05, FDR corrected. (F) Reaction times (RTs) to probe scores presented in rivalry mimic trials in which the probe score followed appearance of the nonsense melody score (mimicking score dominance) or followed the appearance of the radial grating (mimicking score suppressed). Response times did not differ between the two AV conditions (ns, not significant). Error bars indicate SEM.

Each trial began with dichoptic presentation of a nonmelodic score and a flickering radial grating, without accompanying sound. Readers and nonreaders of music reported rivalry state by pressing one of two assigned keys on the keyboard. In score-dominance trials, the nonmelodic score smoothly and rapidly morphed into one of seven possible melodic probe scores, with the change happening at the onset of the second dominance phase of the nonmelodic score. In suppression trials, the transition from nonmelodic to probe score occurred at the onset of the second suppression phase of the nonmelodic score; because this transition occurred smoothly, participants were unaware of the change at the time of its occurrence. Trials in which transitions occurred at the onset of dominance or at the onset of suppression of the nonmelodic score were randomly intermixed, so observers could not anticipate when a transition would happen. Simultaneously with the transition onset, participants began hearing the melody corresponding to the newly introduced probe score (CON), a melody different from the newly introduced probe score (INC), or no melody at all (NS). A trial ended when the participant released the key signifying a change in perceptual dominance following introduction of the probe score. Thus each trial generated one phase duration, which could be either a dominance duration for the probe score or a suppression duration for the probe score.

Fig. 2B presents frequency histograms for all normalized dominance and suppression durations measured in the probe phase of each trial. Rival state durations measured using single trial probes, as an aggregate, conform to the characteristic gamma-like distribution typical of durations measured using extended rivalry tracking (24). Fig. 2C shows decile plots for CON, INC, and NS conditions, for probes presented during dominance and during suppression for readers. As found in experiment 1, CON trials produced significantly longer dominance durations than did INC trials for decile categories at or beyond the median value, again confirming that CON boosts dominance of the musical score. Of primary interest for our purposes, suppression durations of the probe scores were not significantly different within any of the decile groups. As in experiment 1, nonreaders did not exhibit significant differences across the three AV conditions.

Having again failed to find an effect of congruency on suppression durations, we wondered whether some other factor might prevent us from detecting a weak but genuine effect of score congruence on suppression durations. As noted earlier, congruence of auditory melody and visual score can be discerned only when there are multiple successive tones and visual notes available to be matched. Perhaps, we reasoned, a genuine effect of congruence during suppression is being obscured by inclusion of very short state durations that cannot possibly contribute to a congruence effect. To examine that possibility, we reanalyzed the dominance and suppression durations for CON and INC conditions based on the number of notes actually presented in each trial. The pair of histograms in Fig. 2D plots the number of trials in which at least n notes were visually and auditorily presented before a state transition. Plotted in this way, the data can be construed as the outcome of a series of Bernoulli trials, with the resulting geometric distribution plotted as a function of duration, except that the unit of time in Fig. 2D is the number of notes presented. The relevant comparison is between the CON and INC trials. Looking first at the dominance trials (i.e., probe score and accompanying sound presented when the probe score was dominant), the differences between CON and INC dominance durations are statistically significant for trials in which anywhere from 5 to 12 musical notes had been seen and heard; before that, CON and INC trials were statistically indiscriminable in duration. Evidently the realization of the congruence or lack of congruence between auditory and visual melodic structure emerges only after some critical amount of time, on the order of a couple of seconds. In other words, CON trials tended to last longer when observers had the opportunity to hear and to see five or more notes. Looking next at the suppression trials (i.e., probe score and accompanying sound presented immediately following suppression of the initial musical score), one sees an equivalence of CON and INC trials at all note values. So, considering only trials in which readers experienced multiple musical notes does not reveal a subtle reduction in suppression durations associated with melodic congruence.
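The "at least n notes" tally behind histograms of this kind can be sketched as follows. The function name and the per-trial note counts are hypothetical and serve only to show the counting rule, not the study's data.

```python
def trials_with_at_least(notes_per_trial, max_notes):
    """Count trials in which at least n notes were presented, for n = 1..max_notes."""
    return [
        sum(1 for k in notes_per_trial if k >= n)
        for n in range(1, max_notes + 1)
    ]


# Made-up numbers of notes presented before the state transition in each trial.
con_notes = [2, 3, 5, 7, 8, 12]
inc_notes = [1, 2, 3, 4, 5, 6]

con_counts = trials_with_at_least(con_notes, max_notes=6)
inc_counts = trials_with_at_least(inc_notes, max_notes=6)
```

Each list is non-increasing in n, so a condition whose states tend to last longer shows a slower decline across note counts.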

Finally, we tried excluding state durations that were too brief to ensure the presentation of at least two visual and two auditory notes; those minimum durations were defined individually for each of the seven musical sequences used in the experiment. This exclusion rule was imposed on the entire set of actual rival state durations, both dominance and suppression, following the introduction of the auditory melody, regardless of whether they were CON or INC trials. Excluded trials averaged 20% of the total across all participants. The removal of very brief durations had no impact on the robust difference between CON and INC dominance durations and, moreover, did not uncover a weak suppression effect. Summary values of those state durations, both unpruned and pruned, are presented in Table S2.

Table S2.

Summary data for experiment 2: mean dominance and suppression durations for probe scores presented in CON, INC, and NS conditions for readers and for nonreaders

| Group | Measure | Score dominant CON (SEM) | Score dominant INC (SEM) | Score dominant NS (SEM) | Score suppressed CON (SEM) | Score suppressed INC (SEM) | Score suppressed NS (SEM) |
|---|---|---|---|---|---|---|---|
| Readers* (n = 15) | Dominance duration (s) | 3.322 (0.455) | 2.693 (0.367) | 2.143 (0.232) | 2.121 (0.240) | 2.137 (0.230) | 2.040 (0.214) |
| | Normalized dominance duration | 1.192 (0.039) | 0.976 (0.025) | 0.832 (0.038) | 1.000 (0.031) | 1.014 (0.018) | 0.987 (0.038) |
| Nonreaders* (n = 15) | Dominance duration (s) | 2.295 (0.355) | 2.162 (0.313) | 2.022 (0.228) | 2.645 (0.260) | 2.413 (0.219) | 2.555 (0.205) |
| | Normalized dominance duration | 1.043 (0.024) | 0.996 (0.020) | 0.962 (0.029) | 1.037 (0.032) | 0.944 (0.017) | 1.018 (0.037) |
| Readers† (n = 15) | Dominance duration (s) | 3.692 (0.480) | 3.062 (0.359) | 2.444 (0.211) | 2.336 (0.243) | 2.450 (0.234) | 2.251 (0.201) |
| | Normalized dominance duration | 1.166 (0.047) | 0.999 (0.029) | 0.837 (0.041) | 0.986 (0.023) | 1.043 (0.021) | 0.973 (0.033) |
| Nonreaders† (n = 15) | Dominance duration (s) | 2.516 (0.333) | 2.427 (0.295) | 2.196 (0.208) | 2.826 (0.266) | 2.710 (0.277) | 2.737 (0.206) |
| | Normalized dominance duration | 1.041 (0.016) | 1.016 (0.018) | 0.941 (0.021) | 1.020 (0.029) | 0.972 (0.020) | 1.004 (0.035) |

*Contains means and SEs computed from all durations.

†Excludes durations too brief to ensure presentation of at least two visual and two auditory notes; those minimum durations were defined individually for each of the seven musical sequences used in the experiment.

Sluggish Response Hypothesis.

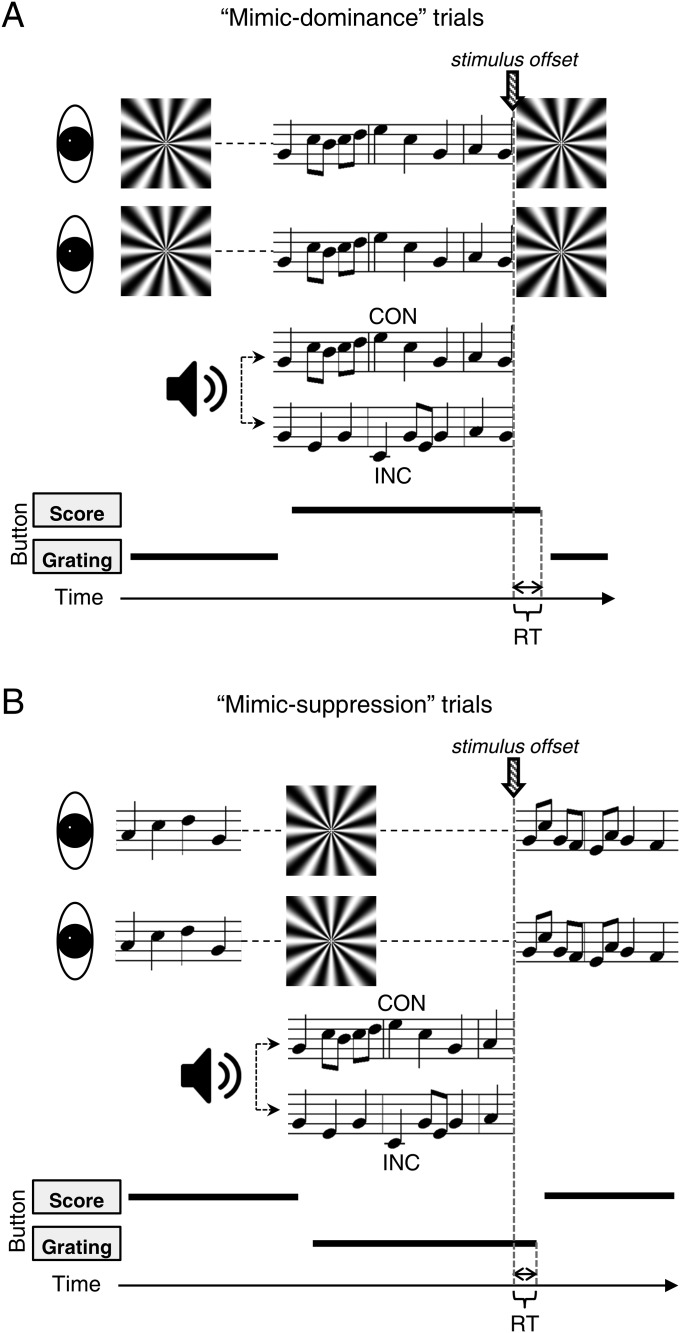

Given the failure to find any trace of auditory modulation of suppression durations, we felt compelled to consider whether the effect of congruence on dominance durations might, in fact, be attributable to response sluggishness and not to prolonged perceptual dominance. According to this hypothesis, readers are slower to recognize and respond to unpredictable transitions from score to grating in CON trials than in INC trials, an effect that would not be seen in trials involving the transition from grating to score. Perhaps, for example, congruent audiovisual stimulation is more engaging, leading to slower button releases and thus to seemingly longer dominance durations. To evaluate this hypothesis, we tested 13 music readers (11 new to the task) using exactly the same discrete trial procedure used in experiment 2. The results for these CON and INC trials replicated the pattern found in the original group of participants (Fig. 2E). Using those measured dominance and suppression durations, we then created rivalry mimic trials for each participant that entailed actually presenting the score and the grating alternately to the separate eyes in a sequence matching that reported by that participant during the actual rivalry procedure (SI Methods and Fig. S3). As before, participants tracked the alternating appearance of the two rival stimuli by pressing one of two buttons. Means and SDs of the response latencies associated with the final transition of a sequence were computed for CON and INC trials associated with the ersatz dominance condition (real transition from score to grating) and the ersatz suppression condition (real transition from grating to score). For both the mimicked dominance and the mimicked suppression trials, reaction times in the CON and INC conditions were indistinguishable (Fig. 2F), implying that reaction times per se are not responsible for the difference in dominance durations between the two conditions measured during genuine rivalry.

Fig. S3.

Schematics of stimuli and AV conditions in the mimic rivalry portion of control experiment 2. (A) Structure of the mimic-dominance trials. (B) Structure of the mimic-suppression trials.

Discussion

Melodic congruence between what is seen and what is heard impacts perceptual dynamics during binocular rivalry in people who can read music. However, this interaction between seeing and hearing was not observed when the musical score was suppressed from awareness, and under no conditions was it seen in people without expertise in music. This effect of congruence in music readers cannot be attributed to differential patterns of eye movements or to response sluggishness associated with CON score/melody combinations.

Our results add to the body of evidence showing that visual awareness during interocular competition is influenced by nonvisual information conveyed by other sensory modalities (35–41). Our results go further, however, by demonstrating robust AV interaction based on high-level, symbolic representations, i.e., musical notation and melodic structure. It is not surprising that this robust interaction is enjoyed only by individuals with expertise acquired through musical training, in contrast, for example, to the performance enhancement exhibited by virtually all people when listening to speech sounds while looking at lip movements (35, 39).

Is it far-fetched to interpret the current findings as resulting partly from preexisting perceptual characteristics of individuals trained in music? It has been suggested that musicians exhibit distinct auditory (42, 43), verbal (44), and broad cognitive (45) abilities and/or systems. In particular, musical training, whether singing or playing an instrument, requires developing perception–action coordination, which may promote unusually strong connections between perception and action systems in the brain (46, 47). Storage and retrieval of musical melodies may be subserved by auditory-motor pathways including the premotor area (48). In this vein, our results could arise in part from feedback from implicit motor activity evoked in musicians while viewing musical notes (49). From other work we know that what one is doing can impact dominance in binocular rivalry (13) and that actions relevant to what is perceived influence perceptual dynamics during bistable perception (50); perhaps this influence is at play in our situation.

The multisensory interaction demonstrated in our study almost certainly does not arise from sensory-neural convergence of auditory and visual signals, i.e., the kind of convergence that has been well-established in single-cell sensory neurophysiology (51–53) and in human brain imaging (54). Instead, the interactions implicated in our study more likely entail information combination of the sort discussed in the context of cue combination across modalities (55, 56). In those situations the process of combination is construed as a form of probabilistic inference with dynamic weightings of different sources of information being governed by their reliability and likelihood. To interpret our results in that framework, however, would mean that likelihood, as signified by CON vs. INC, can be established only during dominance phases of rivalry, not during suppression.

Alternatively, one could imagine that congruent melodic sound and visual score more strongly engage the attention of a musically trained individual, resulting in a strengthened neural representation for that combination (9). After all, attention can modulate the visual awareness of a stimulus engaged in binocular competition (57–59), and this modulation could be a mediating factor in our study. A weakness of this hypothesis, however, is the equally plausible expectation that incongruence should attract attention and therefore should more strongly boost dominance of mismatched melody/score combinations. Indeed, in other situations incongruity does indeed promote dominance of a rival stimulus (60).

The AV congruence effect discovered in our work may arise from neural events within high-level, amodal brain areas such as the posterior portion of the superior temporal sulcus (61) and the prefrontal cortex (62). These brain regions also are implicated in music learning (63). Activity in these high-level regions, in turn, might have feedback influence on early visual cortex (64). Complex auditory information and even imagery can be decoded from activity in the primary visual cortex in the absence of retinal input (65). Hence, an interplay between the high-level multisensory and the early visual areas could form part of the neural network promoting visual predominance of musical notation accompanied by congruent melodic sound.

Nonetheless, we are left with an unanswered question: Why does hearing a melody have no impact on suppression durations associated with viewing a musical score, regardless of whether melody and score are congruent or incongruent? After all, in other circumstances suppression durations of a visual stimulus are impacted by the properties of that stimulus, including its physical characteristics (9), its configural properties (30, 66), its affective connotation (67, 68), its lexical familiarity (32), and its social connotation (69). However, in all these instances the features defining the suppressed stimulus are plausibly being registered and remain available for analysis (70), albeit with reduced fidelity or signal strength (34). There is no reason why this should not be true for the visual features (contour size, orientation, and contrast polarity) comprising notes contained in the musical scores used as a rival stimulus in our experiments. However, melody is embodied in the unfolding temporal structure of successive notes; in this sense, melody is an emergent property of a sequence of notes. Abstracting the lexical semantics of music is comparable in processing complexity to the abstraction of meaning from a sequence of words comprising a sentence. Evidently, without conscious access to the relational patterns of notes portrayed visually in a score, the emergent property of melody cannot be realized and therefore cannot be combined with auditory melody during suppression phases of the score; bisensory melodic integration is limited to periods of dominance of the score. During suppression, in other words, the visually defined semantic content within a musical score is literally out of sight and out of mind.

Methods

General Methods.

Participants.

Different portions of this study were conducted at Korea University, at Seoul National University, and at Vanderbilt University. All volunteer participants had normal or corrected-to-normal visual acuity and good stereopsis; participants were not screened based on eye dominance because rival target/eye configuration was counterbalanced over trials. Each person gave written informed consent on forms approved by the Institutional Review Board (IRB) of Korea University (1040548-KU-IRB-13-149-A-2) or Vanderbilt University (IRB 040915).

Apparatus and stimuli.

Stimuli were created using Matlab in concert with the Psychophysics Toolbox-3 (71, 72). At Korea University and Seoul National University, visual stimuli were presented on a 19-inch CRT monitor (1,024 × 768 resolution, 60-Hz frame rate, 53 cm viewing distance at Korea University, 60 cm at Seoul National University), and at Vanderbilt University, visual stimuli were presented on a 20-inch CRT monitor (1,024 × 768 resolution, 100-Hz frame rate, 80.5 cm viewing distance). In all setups, participants viewed the monitor through a mirror stereoscope with the head stabilized by a head/chin-rest. Sound was delivered over headphones.

Experiment 1.

Participants.

Twenty-three music readers [15 females, age 22.3 ± 2.5 (SD) y] and 11 nonreaders [seven females, age 26.1 ± 9.1 (SD) y] participated in the experiment, some at Korea University and others at Vanderbilt University.

Apparatus and stimuli.

Dichoptically viewed rival targets were generated on the two halves of the video monitor, so that one eye viewed one of seven possible musical scores and the other eye viewed a 2.1 cycle per degree, 60%-contrast, vertical, drifting sinusoidal grating. Auditory stimuli comprised seven melodies, each corresponding to one of the seven musical scores. Scores (dark gray notes against a white background, ∼60% contrast) were excerpted from vocal scores (monophonic), with notes edited in size, note density, and rhythm as needed to ensure visual clarity. The visual scores and the auditory sound files corresponding exactly to those scores were generated with the music notation software Sibelius (Avid Technology, Inc.). In each trial one of the seven scores and a vertical grating were foveally viewed within a pair of dichoptic windows framed by a checkerboard texture that served to promote stable binocular alignment (Fig. 1A). The inner and outer borders of the windows subtended a visual angle of 1.9° × 1.9° and 2.66° × 2.66°, respectively. The score scrolled from right to left within its window at ∼1.2°/s, and the grating drifted within its window at approximately the same speed in the opposite direction.
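The stimulus dimensions above are given in degrees of visual angle, so reproducing them on a particular display requires a conversion to pixels. The following is a minimal sketch of that conversion, assuming a flat screen viewed head-on and ignoring the stereoscope's mirror path. The resolution, frame rate, and viewing distance are taken from the text; the visible screen width (`SCREEN_WIDTH_CM`) is not reported and is a placeholder assumption, as are all function and variable names.

```python
import math

def deg_to_cm(angle_deg, viewing_distance_cm):
    """Physical extent subtended by a visual angle centered on the line of sight."""
    return 2.0 * viewing_distance_cm * math.tan(math.radians(angle_deg) / 2.0)

def deg_to_px(angle_deg, viewing_distance_cm, screen_width_cm, screen_width_px):
    """Convert a visual angle to pixels for a flat screen viewed head-on."""
    cm = deg_to_cm(angle_deg, viewing_distance_cm)
    return cm * screen_width_px / screen_width_cm

# Values reported in the text (Korea University setup).
VIEWING_DISTANCE_CM = 53.0
SCREEN_WIDTH_PX = 1024
FRAME_RATE_HZ = 60.0
# ASSUMPTION: the visible width of the 19-inch CRT is not reported; placeholder value.
SCREEN_WIDTH_CM = 36.0

def px(angle_deg):
    """Shorthand for the conversion under the setup constants above."""
    return deg_to_px(angle_deg, VIEWING_DISTANCE_CM, SCREEN_WIDTH_CM, SCREEN_WIDTH_PX)

inner_window_px = px(1.9)    # inner border of the dichoptic window
outer_window_px = px(2.66)   # outer border of the dichoptic window
px_per_deg = px(1.0)

# Scroll step per video frame for a nominal speed of 1.2 deg/s.
scroll_px_per_frame = 1.2 * px_per_deg / FRAME_RATE_HZ
# Spatial period of a 2.1 cycle-per-degree grating, in pixels.
grating_period_px = px_per_deg / 2.1
```

With a different monitor or viewing distance (for example, the 80.5 cm setup), only the constants change; the geometry is the same.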

Design and procedure.

While dichoptically viewing the rival stimuli, participants used keys to track successive periods of exclusive dominance of the score and of the grating, pressing both keys when mixtures of the score and the grating were experienced. Trial durations varied from 30 to 42 s depending on which of the seven musical sequences was used in that trial, and each trial comprised one of three possible AV conditions of equal proportion. In CON trials, participants heard the auditory melody synchronized with the streaming visual score; in INC trials they heard an auditory melody different from the visual score (drawn from the pool of six remaining melodies); and in NS trials no melody was heard. Each visual score was repeated six times and presented an equal number of times to each eye, yielding a total of 42 trials per observer. For each trial we discarded the last rivalry state duration if the observer was pressing a key at the time the trial ended, to avoid including truncated durations in the data analysis. A fixation mark was not included, but all individuals were instructed to maintain their gaze within the display window throughout the trial. They were told to expect musical sound to be heard in some trials but not others. Before formal data collection, participants were given practice tracking rivalry between score and grating in the absence of sound.
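One way to satisfy the constraints described above (seven scores, three AV conditions in equal proportion, each score shown six times and equally often to each eye, 42 trials in total) is to cross score × condition × eye fully. The sketch below assumes that crossing, which the text does not state explicitly; the function names, dictionary keys, and the uniform random choice of the incongruent melody are illustrative assumptions. A second helper illustrates the rule of discarding a final duration truncated by the end of the trial.

```python
import random

N_SCORES = 7
AV_CONDITIONS = ("CON", "INC", "NS")
EYES = ("left", "right")

def build_trials(rng):
    """Return a shuffled list of 42 trial descriptions (7 scores x 3 conditions x 2 eyes)."""
    trials = []
    for score in range(N_SCORES):
        for condition in AV_CONDITIONS:
            for score_eye in EYES:
                if condition == "CON":
                    melody = score  # melody matches the visible score
                elif condition == "INC":
                    # ASSUMPTION: drawn uniformly from the six remaining melodies
                    melody = rng.choice([m for m in range(N_SCORES) if m != score])
                else:
                    melody = None  # NS: no sound
                trials.append({"score": score, "condition": condition,
                               "score_eye": score_eye, "melody": melody})
    rng.shuffle(trials)
    return trials

def drop_truncated_final(durations, key_down_at_end):
    """Discard the last duration if a key was still held when the trial ended."""
    if key_down_at_end and durations:
        return list(durations[:-1])
    return list(durations)

trials = build_trials(random.Random(0))
```

Passing a seeded `random.Random` instance keeps the trial order reproducible for a given participant.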

Control Experiment 1: Eye Movements.

Participants.

Fourteen volunteers were tested at Korea University, and six were tested at Seoul National University [17 females, age 21.8 ± 2.1 (SD) y]. Given the purpose of this experiment (see text), we tested only people who could read music. Some of those individuals were tested during the course of a related study comparing rivalry dynamics for melody/score combinations in musicians with absolute pitch and in musicians without absolute pitch.

Apparatus, stimuli, and procedure.

Apparatus, stimuli, and procedure were identical to those in experiment 1, with the addition of recording eye movement throughout each trial. Those records were made using a video eye-tracking system that sampled the left eye’s position (1,000 Hz) using the centroid mode [EyeLink 1000, SR Research, controlled by the Eyelink toolbox for Matlab (73)]. Initial calibration and two subsequent recalibrations during the test session were performed using the conventional five-point calibration routine that forms part of the Eyelink software package. Participants viewed the screen through a mirror stereoscope with their heads stabilized by a head/chin-rest. As before, there was no fixation point, but participants were instructed to look at the display window throughout the trial. For the analysis of eye-movement data, we excluded periods of eye blinks as well as records measured between 100 ms before and 100 ms after each eye blink (those periods comprised 10% of the total trial duration). A total of 42 trials were broken into blocks of 14 trials each, and during a block of trials observers were not allowed to remove their heads from the head/chin-rest. After each block, observers were given a short rest period followed by eye tracker recalibration before beginning the next block.
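The blink-exclusion rule described above (dropping blink samples plus a 100-ms margin on either side) can be sketched as a per-sample mask. This is an illustrative sketch, not the analysis code used in the study: it assumes the eye record has already been reduced to one boolean per sample indicating whether the tracker flagged a blink, and the function name and parameters are hypothetical.

```python
def blink_exclusion_mask(is_blink, sample_rate_hz=1000, pad_ms=100):
    """Return a list of booleans, True where a sample should be excluded.

    is_blink: one boolean per sample (True = sample falls within a blink).
    Samples within pad_ms before or after any blink sample are also excluded.
    """
    pad = int(round(pad_ms * sample_rate_hz / 1000.0))
    n = len(is_blink)
    exclude = [False] * n
    for i, blink in enumerate(is_blink):
        if blink:
            lo = max(0, i - pad)
            hi = min(n, i + pad + 1)
            for j in range(lo, hi):
                exclude[j] = True
    return exclude

def excluded_fraction(mask):
    """Proportion of samples removed by the mask (0.0 for an empty record)."""
    return sum(mask) / len(mask) if mask else 0.0
```

`excluded_fraction` gives the share of the record that is dropped, the quantity the text reports as a percentage of total trial duration.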

Experiment 2.

Participants.

Fifteen music readers [10 females, age 22.1 ± 3.2 (SD) y] and 15 nonreaders [seven females, age 26.2 ± 9.8 (SD) y] who had not participated in experiment 1 were recruited at Vanderbilt University. In this experiment we validated participants’ self-reported ability/inability to read music by giving each individual a music quiz consisting of multiple-choice questions, each with four possible answer choices that involved identification of a specific, visually presented musical note.

Apparatus and stimuli.

Stimuli and apparatus were identical to those used in experiment 1 except that (i) the rival grating was a 100% contrast, 5-Hz counterphase flickering radial grating (Fig. 2A), selected because it had no tendency to induce lateral eye movements; (ii) each trial started with one eye viewing a nonmelodic musical score consisting of a quasi-random sequence of notes that transitioned into one of seven actual musical scores (the probe score) after several rivalry alternations (Fig. 2A); (iii) a fixation point was presented in the center of the window displaying the musical score, a location coinciding with the point within the other window where the radial grating came to a point; and (iv) the notes of the musical scores were convolved with a Gaussian filter (σ = 0.08° visual angle) to reduce the sharpness of their edges without compromising legibility.
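The Gaussian smoothing of the notes can be illustrated with a separable filter whose σ is first converted from degrees to pixels. The sketch below is a plain-Python illustration under stated assumptions, not the Matlab code used for the experiments: the pixels-per-degree value is a hypothetical placeholder (it depends on the monitor geometry), the kernel is truncated at 3σ, and image borders are handled by replicating edge pixels.

```python
import math

def gaussian_kernel_1d(sigma_px, truncate=3.0):
    """Normalized 1-D Gaussian kernel, truncated at `truncate` standard deviations."""
    radius = max(1, int(math.ceil(truncate * sigma_px)))
    weights = [math.exp(-0.5 * (x / sigma_px) ** 2) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur_rows(image, kernel):
    """Filter every row with the (symmetric) kernel, replicating edge pixels."""
    r = len(kernel) // 2
    out = []
    for row in image:
        n = len(row)
        new_row = []
        for x in range(n):
            acc = 0.0
            for k, w in enumerate(kernel):
                xi = min(max(x + k - r, 0), n - 1)
                acc += w * row[xi]
            new_row.append(acc)
        out.append(new_row)
    return out

def transpose(image):
    return [list(col) for col in zip(*image)]

def gaussian_blur(image, sigma_px):
    """Separable 2-D Gaussian blur: filter rows, then columns."""
    kernel = gaussian_kernel_1d(sigma_px)
    horizontal = blur_rows(image, kernel)
    return transpose(blur_rows(transpose(horizontal), kernel))

# ASSUMPTION: 40 px/deg is a placeholder, not a value reported in the text.
PX_PER_DEG = 40.0
SIGMA_PX = 0.08 * PX_PER_DEG  # sigma of 0.08 deg expressed in pixels

# Toy 8 x 8 image with a sharp vertical edge (0 = dark note, 1 = white background).
toy_image = [[0.0] * 4 + [1.0] * 4 for _ in range(8)]
smoothed = gaussian_blur(toy_image, SIGMA_PX)
```

Because the Gaussian is separable, two 1-D passes give the same result as a full 2-D convolution at much lower cost.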

Each participant completed three blocks comprising 84 discrete rivalry trials. At the beginning of a trial, the nonmelodic score and the flickering radial grating were presented dichoptically without accompanying sound. Each rival target was presented an equal number of times to the two eyes. Equal numbers of the three AV conditions were intermixed randomly within a block of 42 trials, and each block was repeated three times. A trial ended as soon as the participant indicated a change in perceptual dominance following introduction of the probe score; thus each trial generated one phase duration, which could be either a dominance duration for the probe score or a suppression duration for the probe score (i.e., those trials in which dominance of the radial grating was being reported). The end of each trial was followed by an enforced 1-s interval before the observer could tap a key to initiate the next trial.

Control Experiment 2: Response Sluggishness.

Participants.

Thirteen Korea University students [nine females, age 23.5 ± 2.2 (SD) y] participated in this control experiment, including two who also had participated in experiment 1. We purposefully tested only music readers, because this experiment focused on the congruence effect found in readers only.

Apparatus and stimuli.

Stimuli and apparatus were identical to those in experiment 2, with the addition of yoked rivalry trials in which rivalry alternations were mimicked by physically alternating between the radial grating and the score (SI Methods and Fig. S3).

SI Results

We examined the eye-movement records to learn whether reliable OKR movements were associated with viewing the rival stimuli that moved in opposite directions in the two eyes. For this analysis we focused on the horizontal component of the eye records, knowing that the directions of motion in the musical score and the drifting grating were leftward and rightward, respectively. We created eye-position plots for extended periods of rivalry tracking that spanned multiple state changes, expecting that OKR, if present, would be reflected in complementary positional changes over time coinciding with the dominant stimulus. Visual inspection of eye-movement records for individual episodes of score dominance and grating dominance did reveal some obvious periods of smooth pursuit eye movements followed by rapid return saccades, i.e., hallmark signatures of OKR. When those kinds of records were seen, the slope of the smooth pursuit component tended to reflect the direction of motion of the reported dominant stimulus (Fig. S1A). At the same time, there were instances of dominance periods in which the eyes exhibited relatively stable periods of gaze fixation punctuated by rapid shifts in fixation, with no hint of OKR (Fig. S1B). Overall, the eye-movement data did not predict with high reliability which stimulus was dominant. This result is not particularly surprising, because our rival stimuli were small compared with those used in previous studies, and the scrolling score did not portray perfectly smooth motion because of the variability in the timing and positions of successive notes. In addition, the incidence of OKR did not predict which AV condition, CON vs. INC, the observer was experiencing in a given trial. Our main purpose for measuring eye movements was to learn whether the differences in dominance durations between CON and INC were related to differences in variability in deviations of eye position for those two conditions. The summary heat maps in Fig. 1E in the main text imply that those two conditions were highly similar, and statistical comparison of horizontal eye deviations revealed no significant differences between CON and INC conditions [t (19) = 0.85, P = 0.405] (Fig. S2). These results were based on 20 participants, 17 of whom were musicians, including persons with absolute pitch participating in a larger study of musical score and melody.
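The reported comparison has 19 degrees of freedom for 20 participants, which is what a within-participant (paired) t test would give; the text does not name the test, so this is an inference. Under that assumption, the statistic can be sketched as follows. The function name is hypothetical, the inputs would be one summary value of horizontal eye deviation per participant and condition, and no values from the study are reproduced here.

```python
import math

def paired_t(condition_a, condition_b):
    """Paired t statistic and degrees of freedom for two equal-length samples.

    Each position in the two lists is assumed to hold one participant's
    summary value for the two conditions (e.g., CON and INC).
    """
    if len(condition_a) != len(condition_b) or len(condition_a) < 2:
        raise ValueError("need two samples of equal length with at least 2 pairs")
    diffs = [a - b for a, b in zip(condition_a, condition_b)]
    n = len(diffs)
    mean_diff = sum(diffs) / n
    var_diff = sum((d - mean_diff) ** 2 for d in diffs) / (n - 1)  # sample variance
    se = math.sqrt(var_diff / n)
    if se == 0.0:
        raise ValueError("differences have zero variance; t is undefined")
    return mean_diff / se, n - 1
```

Converting the statistic to a P value requires the t distribution's cumulative function, which the Python standard library does not provide; a statistics package would be needed for that step.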

SI Methods

This experiment consisted of two steps: (i) administration of exactly the same discrete trial, binocular rivalry procedure, used in experiment 2, and (ii) a mimic-rivalry condition in which a musical score and a flickering radial grating were presented in physical alternation following the temporal sequence measured during rivalry in the same participant. Fig. S3 shows the stimulus conditions and the trial structure of the mimic-rivalry condition. At the beginning of each mimic-rivalry trial, a nonmelodic score and the flickering radial grating were presented in physical alternation (i.e., left eye only followed by right eye only) without any accompanying sound. Over trials each stimulus was presented an equal number of times to each eye. Participants reported the currently visible stimulus by pressing one of two assigned keys on the keyboard. In trials mimicking a transition from score suppressed to score dominant (42 randomly ordered trials per block), one of seven possible probe scores appeared at that point in time when, according to the genuine rivalry tracking record, the score would have emerged from suppression into dominance (Fig. S3A). In trials mimicking a transition from score dominant to score suppressed (42 trials per block), the grating appeared at the point in time when, based on the genuine rivalry tracking record, the grating would have emerged from suppression into dominance (Fig. S3B). Mimic-dominance and mimic-suppression trials were intermixed randomly; therefore, observers were not able to anticipate exactly when a transition would happen. Simultaneously with the onset of the transition of the mimic-dominance condition, participants began to hear the melody corresponding to the newly introduced probe score (CON), a melody different from the newly introduced probe score (INC), or no melody at all (NS). At the onset of the transition of the mimic-suppression condition, the flickering radial grating was accompanied by an auditory melody (pseudo-CON or pseudo-INC, meaning there is no objectively defined CON or INC) or by NS. Equal numbers of these three AV conditions were intermixed randomly within a block of 42 trials, and each block was repeated three times. A trial ended as soon as the probe changed to the other stimulus (score to radial grating in the mimic-dominant condition and radial grating to score in the mimic-suppression condition). Participants’ reaction times were defined as the difference in time between the probe button release and the probe offset.
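The yoking logic described above, in which physical alternations replay the phase durations recorded from the same participant during genuine rivalry, can be sketched as follows. The record format (a list of stimulus/duration pairs) and all function names are assumptions made for illustration; only the reaction-time definition (button release minus probe offset) comes from the text.

```python
def mimic_schedule(genuine_phases):
    """Turn a genuine-rivalry record into a physical-alternation schedule.

    genuine_phases: list of (stimulus, duration_s) pairs in the order reported
    during genuine rivalry (ASSUMED record format).
    Returns a list of (stimulus, onset_s, offset_s) tuples.
    """
    schedule = []
    t = 0.0
    for stimulus, duration in genuine_phases:
        schedule.append((stimulus, t, t + duration))
        t += duration
    return schedule

def reaction_time(release_time_s, probe_offset_s):
    """Reaction time: button release time minus physical probe offset time."""
    return release_time_s - probe_offset_s

# Illustrative record with made-up durations (not data from the study).
record = [("score", 2.0), ("grating", 1.5), ("score", 3.0)]
schedule = mimic_schedule(record)
probe_stimulus, probe_onset, probe_offset = schedule[-1]
rt = reaction_time(probe_offset + 0.4, probe_offset)
```

Because the probe's offset is a physical event in the mimic condition, the reaction time measured this way reflects response latency alone, which is what the control is designed to isolate.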

Acknowledgments

We thank Gordon Logan and Isabel Gauthier for helpful comments on this project. This work was supported by Grants NRF-2013R1A1A1010923 and NRF-2013R1A2A2A03017022 from the Basic Science Research Program through the National Research Foundation of Korea (NRF) [funded by the Ministry of Science, Information and Communications Technologies (ICT) and Future Planning] and by National Institutes of Health Core Grant P30-EY008126.

Footnotes

The authors declare no conflict of interest.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1509529112/-/DCSupplemental.

References

- 1. Gregory RL. Perceptions as hypotheses. Philos Trans R Soc Lond B Biol Sci. 1980;290(1038):181–197. doi: 10.1098/rstb.1980.0090.

- 2. Knill DC, Richards W. Perception as Bayesian Inference. Cambridge Univ Press; Cambridge, UK: 1996.

- 3. Albright TD, Stoner GR. Contextual influences on visual processing. Annu Rev Neurosci. 2002;25:339–379. doi: 10.1146/annurev.neuro.25.112701.142900.

- 4. Bar M. Visual objects in context. Nat Rev Neurosci. 2004;5(8):617–629. doi: 10.1038/nrn1476.

- 5. Sterzer P, Frith C, Petrovic P. Believing is seeing: Expectations alter visual awareness. Curr Biol. 2008;18(16):R697–R698. doi: 10.1016/j.cub.2008.06.021.

- 6. Marr D. A theory for cerebral neocortex. Proc R Soc Lond B Biol Sci. 1970;176(1043):161–234. doi: 10.1098/rspb.1970.0040.

- 7. Blake R, Logothetis N. Visual competition. Nat Rev Neurosci. 2002;3(1):13–21. doi: 10.1038/nrn701.

- 8. Kim C-Y, Blake R. Psychophysical magic: Rendering the visible ‘invisible’. Trends Cogn Sci. 2005;9(8):381–388. doi: 10.1016/j.tics.2005.06.012.

- 9. Levelt WJM. On Binocular Rivalry. Royal Van Gorcum; Assen, The Netherlands: 1965.

- 10. Fox R, Herrmann J. Stochastic properties of binocular rivalry alternations. Percept Psychophys. 1967;2(9):432–436.

- 11. Hohwy J, Roepstorff A, Friston K. Predictive coding explains binocular rivalry: An epistemological review. Cognition. 2008;108(3):687–701. doi: 10.1016/j.cognition.2008.05.010.

- 12. Leopold DA, Logothetis NK. Multistable phenomena: Changing views in perception. Trends Cogn Sci. 1999;3(7):254–264. doi: 10.1016/s1364-6613(99)01332-7.

- 13. Maruya K, Yang E, Blake R. Voluntary action influences visual competition. Psychol Sci. 2007;18(12):1090–1098. doi: 10.1111/j.1467-9280.2007.02030.x.

- 14. Alpers GW, Pauli P. Emotional pictures predominate in binocular rivalry. Cogn Emot. 2006;20(5):596–607.

- 15. Yoon KL, Hong SW, Joormann J, Kang P. Perception of facial expressions of emotion during binocular rivalry. Emotion. 2009;9(2):172–182. doi: 10.1037/a0014714.

- 16. Wilbertz G, van Slooten J, Sterzer P. Reinforcement of perceptual inference: Reward and punishment alter conscious visual perception during binocular rivalry. Front Psychol. 2014;5:1377. doi: 10.3389/fpsyg.2014.01377.

- 17. Yu K, Blake R. Do recognizable figures enjoy an advantage in binocular rivalry? J Exp Psychol Hum Percept Perform. 1992;18(4):1158–1173. doi: 10.1037//0096-1523.18.4.1158.

- 18. Conrad V, Bartels A, Kleiner M, Noppeney U. Audiovisual interactions in binocular rivalry. J Vis. 2010;10(10):1–15. doi: 10.1167/10.10.27.

- 19. Lunghi C, Alais D. Touch interacts with vision during binocular rivalry with a tight orientation tuning. PLoS ONE. 2013;8(3):e58754. doi: 10.1371/journal.pone.0058754.

- 20. Zhou W, Jiang Y, He S, Chen D. Olfaction modulates visual perception in binocular rivalry. Curr Biol. 2010;20(15):1356–1358. doi: 10.1016/j.cub.2010.05.059.

- 21. Carter OL, Pettigrew JD. A common oscillator for perceptual rivalries? Perception. 2003;32(3):295–305. doi: 10.1068/p3472.

- 22. Hancock S, Gareze L, Findlay JM, Andrews TJ. Temporal patterns of saccadic eye movements predict individual variation in alternation rate during binocular rivalry. Iperception. 2012;3(1):88–96. doi: 10.1068/i0486.

- 23. Logothetis NK, Leopold DA, Sheinberg DL. What is rivalling during binocular rivalry? Nature. 1996;380(6575):621–624. doi: 10.1038/380621a0.

- 24. Brascamp JW, van Ee R, Pestman WR, van den Berg AV. Distributions of alternation rates in various forms of bistable perception. J Vis. 2005;5(4):287–298. doi: 10.1167/5.4.1.

- 25. Ratcliff R. Group reaction time distributions and an analysis of distribution statistics. Psychol Bull. 1979;86(3):446–461.

- 26. Benjamini Y, Hochberg Y. Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society. Series B (Methodological). 1995;57(1):289–300.

- 27. Valsecchi M, Gegenfurtner KR, Schütz AC. Saccadic and smooth-pursuit eye movements during reading of drifting texts. J Vis. 2013;13(10):1–20. doi: 10.1167/13.10.8.

- 28. Fox R, Todd S, Bettinger LA. Optokinetic nystagmus as an objective indicator of binocular rivalry. Vision Res. 1975;15(7):849–853. doi: 10.1016/0042-6989(75)90265-5.

- 29. Hayashi R, Tanifuji M. Which image is in awareness during binocular rivalry? Reading perceptual status from eye movements. J Vis. 2012;12(3):5, 1–11.

- 30. Wang L, Weng X, He S. Perceptual grouping without awareness: Superiority of Kanizsa triangle in breaking interocular suppression. PLoS ONE. 2012;7(6):e40106. doi: 10.1371/journal.pone.0040106.

- 31. Mudrik L, Breska A, Lamy D, Deouell LY. Integration without awareness: Expanding the limits of unconscious processing. Psychol Sci. 2011;22(6):764–770. doi: 10.1177/0956797611408736.

- 32. Jiang Y, Costello P, He S. Processing of invisible stimuli: Advantage of upright faces and recognizable words in overcoming interocular suppression. Psychol Sci. 2007;18(4):349–355. doi: 10.1111/j.1467-9280.2007.01902.x.

- 33. Lin Z, He S. Seeing the invisible: The scope and limits of unconscious processing in binocular rivalry. Prog Neurobiol. 2009;87(4):195–211. doi: 10.1016/j.pneurobio.2008.09.002.

- 34. Ling S, Blake R. Suppression during binocular rivalry broadens orientation tuning. Psychol Sci. 2009;20(11):1348–1355. doi: 10.1111/j.1467-9280.2009.02446.x.

- 35. Alsius A, Munhall KG. Detection of audiovisual speech correspondences without visual awareness. Psychol Sci. 2013;24(4):423–431. doi: 10.1177/0956797612457378.

- 36. Chen Y-C, Yeh S-L, Spence C. Crossmodal constraints on human perceptual awareness: Auditory semantic modulation of binocular rivalry. Front Psychol. 2011;2:212. doi: 10.3389/fpsyg.2011.00212.

- 37. Kang MS, Blake R. Perceptual synergy between seeing and hearing revealed during binocular rivalry. Psichologija. 2005;32:7–15.

- 38. Lunghi C, Morrone MC, Alais D. Auditory and tactile signals combine to influence vision during binocular rivalry. J Neurosci. 2014;34(3):784–792. doi: 10.1523/JNEUROSCI.2732-13.2014.

- 39. Plass J, Guzman-Martinez E, Ortega L, Grabowecky M, Suzuki S. Lip reading without awareness. Psychol Sci. 2014;25(9):1835–1837. doi: 10.1177/0956797614542132.

- 40. van Ee R, van Boxtel JJ, Parker AL, Alais D. Multisensory congruency as a mechanism for attentional control over perceptual selection. J Neurosci. 2009;29(37):11641–11649. doi: 10.1523/JNEUROSCI.0873-09.2009.

- 41. Lunghi C, Binda P, Morrone MC. Touch disambiguates rivalrous perception at early stages of visual analysis. Curr Biol. 2010;20(4):R143–R144. doi: 10.1016/j.cub.2009.12.015.

- 42. Hyde KL, et al. Musical training shapes structural brain development. J Neurosci. 2009;29(10):3019–3025. doi: 10.1523/JNEUROSCI.5118-08.2009.

- 43. Schlaug G, et al. Training-induced neuroplasticity in young children. Ann N Y Acad Sci. 2009;1169(1):205–208. doi: 10.1111/j.1749-6632.2009.04842.x.

- 44. Franklin MS, et al. The effects of musical training on verbal memory. Psychol Music. 2008;36(3):353–365.

- 45. Jacoby N, Ahissar M. What does it take to show that a cognitive training procedure is useful? A critical evaluation. Prog Brain Res. 2013;207:121–140. doi: 10.1016/B978-0-444-63327-9.00004-7.

- 46. Novembre G, Keller PE. A conceptual review on action-perception coupling in the musicians’ brain: What is it good for? Front Hum Neurosci. 2014;8:603. doi: 10.3389/fnhum.2014.00603.

- 47. Zatorre RJ, Chen JL, Penhune VB. When the brain plays music: Auditory-motor interactions in music perception and production. Nat Rev Neurosci. 2007;8(7):547–558. doi: 10.1038/nrn2152.

- 48. Rauschecker JP. Is there a tape recorder in your head? How the brain stores and retrieves musical melodies. Front Syst Neurosci. 2014;8:149. doi: 10.3389/fnsys.2014.00149.

- 49. Reichenbach A, Franklin DW, Zatka-Haas P, Diedrichsen J. A dedicated binding mechanism for the visual control of movement. Curr Biol. 2014;24(7):780–785. doi: 10.1016/j.cub.2014.02.030.

- 50. Beets IAM, et al. Online action-to-perception transfer: Only percept-dependent action affects perception. Vision Res. 2010;50(24):2633–2641. doi: 10.1016/j.visres.2010.10.004.

- 51. Meredith MA, Stein BE. Interactions among converging sensory inputs in the superior colliculus. Science. 1983;221(4608):389–391. doi: 10.1126/science.6867718.

- 52. Maier JX, Neuhoff JG, Logothetis NK, Ghazanfar AA. Multisensory integration of looming signals by rhesus monkeys. Neuron. 2004;43(2):177–181. doi: 10.1016/j.neuron.2004.06.027.

- 53. Ghazanfar AA, Maier JX, Hoffman KL, Logothetis NK. Multisensory integration of dynamic faces and voices in rhesus monkey auditory cortex. J Neurosci. 2005;25(20):5004–5012. doi: 10.1523/JNEUROSCI.0799-05.2005.

- 54. Calvert GA, Campbell R, Brammer MJ. Evidence from functional magnetic resonance imaging of crossmodal binding in the human heteromodal cortex. Curr Biol. 2000;10(11):649–657. doi: 10.1016/s0960-9822(00)00513-3.

- 55. Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415(6870):429–433. doi: 10.1038/415429a.

- 56. Alais D, Burr D. The ventriloquist effect results from near-optimal bimodal integration. Curr Biol. 2004;14(3):257–262. doi: 10.1016/j.cub.2004.01.029.

- 57. Dieter KC, Tadin D. Understanding attentional modulation of binocular rivalry: A framework based on biased competition. Front Hum Neurosci. 2011;5(155):1–12.

- 58. Paffen CLE, Alais D. Attentional modulation of binocular rivalry. Front Hum Neurosci. 2011;5:105. doi: 10.3389/fnhum.2011.00105.

- 59. Zhang P, Jiang Y, He S. Voluntary attention modulates processing of eye-specific visual information. Psychol Sci. 2012;23(3):254–260. doi: 10.1177/0956797611424289.

- 60. Mudrik L, Deouell LY, Lamy D. Scene congruency biases binocular rivalry. Conscious Cogn. 2011;20(3):756–767. doi: 10.1016/j.concog.2011.01.001.

- 61. Naumer MJ, et al. Investigating human audio-visual object perception with a combination of hypothesis-generating and hypothesis-testing fMRI analysis tools. Exp Brain Res. 2011;213(2-3):309–320. doi: 10.1007/s00221-011-2669-0.

- 62. Diehl MM, Romanski LM. Responses of prefrontal multisensory neurons to mismatching faces and vocalizations. J Neurosci. 2014;34(34):11233–11243. doi: 10.1523/JNEUROSCI.5168-13.2014.

- 63. Zimmerman E, Lahav A. The multisensory brain and its ability to learn music. Ann N Y Acad Sci. 2012;1252(1):179–184. doi: 10.1111/j.1749-6632.2012.06455.x.

- 64. Driver J, Noesselt T. Multisensory interplay reveals crossmodal influences on ‘sensory-specific’ brain regions, neural responses, and judgments. Neuron. 2008;57(1):11–23. doi: 10.1016/j.neuron.2007.12.013.

- 65. Vetter P, Smith FW, Muckli L. Decoding sound and imagery content in early visual cortex. Curr Biol. 2014;24(11):1256–1262. doi: 10.1016/j.cub.2014.04.020.

- 66. Zhou G, Zhang L, Liu J, Yang J, Qu Z. Specificity of face processing without awareness. Conscious Cogn. 2010;19(1):408–412. doi: 10.1016/j.concog.2009.12.009.

- 67. Jiang Y, Costello P, Fang F, Huang M, He S. A gender- and sexual orientation-dependent spatial attentional effect of invisible images. Proc Natl Acad Sci USA. 2006;103(45):17048–17052. doi: 10.1073/pnas.0605678103.

- 68. Yang E, Zald DH, Blake R. Fearful expressions gain preferential access to awareness during continuous flash suppression. Emotion. 2007;7(4):882–886. doi: 10.1037/1528-3542.7.4.882.

- 69. Stein T, Senju A, Peelen MV, Sterzer P. Eye contact facilitates awareness of faces during interocular suppression. Cognition. 2011;119(2):307–311. doi: 10.1016/j.cognition.2011.01.008.

- 70. Blake R, Tadin D, Sobel KV, Raissian TA, Chong SC. Strength of early visual adaptation depends on visual awareness. Proc Natl Acad Sci USA. 2006;103(12):4783–4788. doi: 10.1073/pnas.0509634103.

- 71. Brainard DH. The psychophysics toolbox. Spat Vis. 1997;10(4):433–436.

- 72. Pelli DG. The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spat Vis. 1997;10(4):437–442.

- 73. Cornelissen FW, Peters EM, Palmer J. The Eyelink Toolbox: Eye tracking with MATLAB and the Psychophysics Toolbox. Behav Res Methods Instrum Comput. 2002;34(4):613–617. doi: 10.3758/bf03195489.