Abstract

Radiology report errors occur for many reasons including the use of pre-filled report templates, wrong-word substitution, nonsensical phrases, and missing words. Reports may also contain clinical errors that are not specific to speech recognition, including wrong laterality and gender-specific discrepancies. Our goal was to create a custom algorithm to detect potential gender and laterality mismatch errors and to notify the interpreting radiologists for rapid correction. A JavaScript algorithm was devised to flag gender and laterality mismatch errors by searching the text of the report for keywords and comparing them to parameters within the study’s HL7 metadata (i.e., procedure type, patient sex). The error detection algorithm was applied retrospectively to 82,353 reports generated during the 4 months before its implementation and then prospectively to 309,304 reports generated during the 15 months after implementation. Flagged reports were reviewed individually by two radiologists for a true gender or laterality error and to determine if the errors were ultimately corrected. After implementing our error notification system, there was a significant decrease in the rate of flagged reports (pre, 198/82,353 [0.24 %]; post, 628/309,304 [0.20 %]; P = 0.04) and in the rate of reports containing confirmed gender or laterality errors (pre, 116/82,353 [0.14 %]; post, 285/309,304 [0.09 %]; P < 0.0001). The proportion of confirmed errors that were ultimately corrected improved dramatically after implementing the notification system (pre, 17/116 [15 %]; post, 239/285 [84 %]; P < 0.0001). We developed a successful automated tool for detecting and notifying radiologists of potential gender and laterality errors, allowing for rapid report correction and reducing the overall rate of report errors.

Keywords: Quality control, Radiology reporting, Software design, Health Level 7 (HL7), Laterality, Gender mismatch, Patient safety

Introduction

Speech recognition (SR) software has been adopted by many radiology departments in place of conventional transcription despite early mixed reactions [1–4]. The advantages of SR compared to conventional transcription include faster report turnaround time, with suggested improvements in radiologist productivity and cost savings [5–7]. Despite these advantages, a higher error rate has been described in reports generated with SR when compared to conventional transcription [8–12].

Common dictation errors related to SR-generated reports include, but are not limited to, wrong-word substitution, nonsensical phrases, and errors of omission [13–16]. Reports may also contain clinical errors that are not specific to the SR software. These types of report errors include wrong laterality and gender-specific discrepancies. Laterality errors (e.g., the dictation erroneously mentions the right lower extremity in a report for a procedure involving the left) can have significant impact on patient care, particularly if the patient requires surgical intervention [1, 17, 18]. The radiology report ideally should be free of such errors. Gender errors (e.g., the report mentions a prostate gland in a female patient) may result from the post-hysterectomy appearance of the female pelvis, accidental inclusion of pre-filled report template text, or transcription error in voice recognition dictation systems (e.g., “venous” is transcribed as “penis”). Although these errors less commonly have a direct impact on patient care, they nevertheless produce unnecessary confusion, reduce the credibility of radiologists, and should be avoided.

The frequency and impact of laterality errors in radiology reports have been previously described [1, 12, 13, 17, 18]. Landau et al. developed a system to reduce laterality errors in which radiologists have the opportunity to re-review the report prior to signature in a separate window that displays terms of laterality highlighted in separate colors [19]. Luetmer [1] and Sangwaiya [13] have also reported on laterality error rates in radiology reports. Additionally, it has been shown in related fields that simply increasing awareness of a quality problem is enough to induce positive change without any other intervention [20].

We developed a system that detects multiple types of errors, increases awareness of these errors within our department, gives opportunity for correction via near real-time e-mail and pager notification, and is seamlessly integrated into our existing workflow. The purposes of our study were to describe our departmental automated report error detection and notification system, determine the frequency and spectrum of laterality and gender report errors found by this system, analyze the frequency of these dictation errors before and after the introduction of our system, and finally determine whether this quality improvement intervention resulted in a more correct and complete final clinical record.

Materials and Methods

Study design and execution were exempted from prospective review by our Institutional Review Board. All data acquired through ongoing quality improvement within our department for the purpose of report error detection are maintained on an encrypted, secured, HIPAA compliant departmental server and database.

We receive a real-time Health Level 7 (HL7) message feed from our radiology information system (RIS) (Siemens) on our encrypted, secured, HIPAA compliant departmental server. A channel on our Mirth Connect HL7 engine (Mirth Corporation) filters HL7 Observation Result (ORU) radiology report messages and checks for report errors using custom heuristics-based JavaScript algorithms (executed within Mirth Connect using the JavaScript engine embedded in the Oracle Java Runtime Environment) that combine simple word matching with regular expression matching (Addendum 1). The associated trainee and attending radiologists are identified by parsing the HL7 fields that carry the radiologist name and unique doctor ID. Potential report errors trigger execution of a custom Bash (GNU) script, which notifies the involved radiologists via e-mail and text page. Metadata associated with these errors are stored in a MySQL (Oracle) database, allowing for review as needed. The error notification system does not require the dictating radiologist to open additional programs or windows or to add any steps to their workflow. Every preliminary or finalized report in the department is screened for potential errors.
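The field extraction step described above can be illustrated with a minimal sketch. It is written as plain JavaScript for Node.js rather than as Mirth Connect channel code, and it is not the authors' implementation. The function names and the sample message are hypothetical. The sketch assumes the HL7 v2 default delimiters and the standard field positions (PID-8 for administrative sex, OBR-4 for the procedure, OBX-5 for report text); a given site may populate other fields.

```javascript
// Minimal HL7 v2 field extraction (illustrative sketch only).
// Assumes "|" as field separator and one segment per line.
function parseSegments(message) {
  return message
    .split(/\r\n|\r|\n/)
    .filter((line) => line.length > 0)
    .map((line) => line.split("|"));
}

// Return the first segment with the given three-letter name, or null.
function firstSegment(segments, name) {
  return segments.find((fields) => fields[0] === name) || null;
}

function extractScreeningInputs(message) {
  const segments = parseSegments(message);
  const msh = firstSegment(segments, "MSH");
  const pid = firstSegment(segments, "PID");
  const obr = firstSegment(segments, "OBR");
  const obr4 = obr ? obr[4] || "" : "";
  return {
    // MSH-1 is the field separator itself, so MSH-9 is at index 8 after the split.
    messageType: msh ? (msh[8] || "").split("^")[0] : "",
    // PID-8 (administrative sex) is at index 8 because index 0 holds "PID".
    sex: pid ? pid[8] || "" : "",
    // OBR-4: prefer the text component (after "^"), else the whole field.
    procedure: obr4.split("^")[1] || obr4,
    // Concatenate all OBX-5 values to form the report text.
    reportText: segments
      .filter((fields) => fields[0] === "OBX")
      .map((fields) => fields[5] || "")
      .join("\n"),
  };
}

// Entirely synthetic message for illustration.
const sampleOru = [
  "MSH|^~\\&|RIS|HOSP|MIRTH|RAD|201212042025||ORU^R01|MSG0001|P|2.3",
  "PID|1||0000000||DOE^JANE||19800101|F",
  "OBR|1||ACC0001|CTAP^CT ABDOMEN PELVIS WO CONTRAST",
  "OBX|1|TX|||FINDINGS: No hydronephrosis.",
  "OBX|2|TX|||IMPRESSION: No renal calculus.",
].join("\r");

const inputs = extractScreeningInputs(sampleOru);
console.log(inputs);
```

Only ORU messages would be passed on to the screening functions; other message types can be dropped by checking `messageType`.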

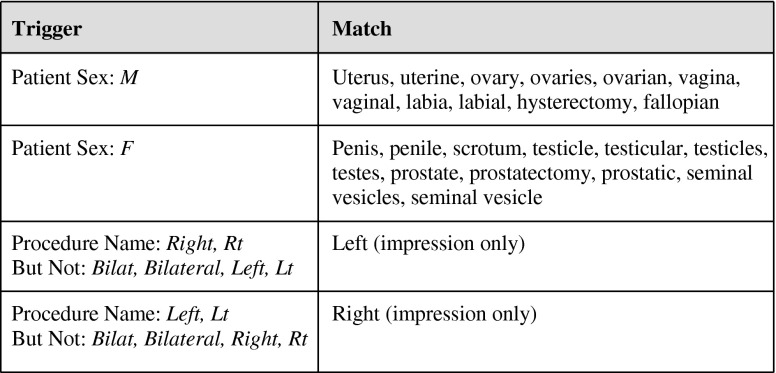

For gender errors, we screened for a list of words that should, with perhaps rare exception, never be in a report for a particular gender. For example, if a report for a male patient included words such as, but not limited to, “uterus,” “ovary,” or “adnexa” or any associated variants such as “ovaries,” it would be flagged as a potential error. Similarly, a report for a female patient should not include words such as “prostate,” “penis,” or “scrotum.” We identify gender based on the gender established at registration and included in a specific HL7 field within our ORU report messages (Fig. 1).

Fig. 1.

Laterality errors

An example of a report flagged for a potential gender error and later confirmed as a true positive:

GENDER: FEMALE

PROCEDURE: CT ABDOMEN PELVIS WO CONTRAST

DATE OF EXAM: Dec 4 2012 8:25 PM

CLINICAL HISTORY: 32-year-old female with sudden onset left upper quadrant pain radiating to the left lower quadrant. Evaluate for left renal calculus.

TECHNIQUE: XXX

FINDINGS:

No hydronephrosis. No renal or ureteral calculi are seen. No abdominal or pelvic free fluid or lymphadenopathy. Aorta is normal in caliber. Stomach contains small amount of high density material. No dilated loops of small bowel. Colon is not dilated. No free intraperitoneal air or focal fluid collection. Urinary bladder, PROSTATE GLAND, and SEMINAL VESICLES are normal. No suspicious osseous lesions.

IMPRESSION: XXX
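The gender screen described above can be sketched as a whole-word, case-insensitive keyword search. This is a minimal illustration, not the authors' code: the term lists contain only the words named in this article, whereas the deployed lists were longer, and the function names are hypothetical.

```javascript
// Hypothetical term lists keyed by the first letter of the registered sex.
// Only terms mentioned in the article are included here.
const TERMS_UNEXPECTED_BY_SEX = {
  M: ["uterus", "ovary", "ovaries", "adnexa"],
  F: ["prostate", "penis", "scrotum", "seminal vesicles"],
};

// Escape characters that have special meaning in a regular expression.
function escapeRegExp(text) {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Return the listed terms that appear as whole words in the report text.
function findGenderMismatchTerms(sex, reportText) {
  const key = String(sex).trim().toUpperCase().charAt(0);
  const terms = TERMS_UNEXPECTED_BY_SEX[key] || [];
  return terms.filter((term) => {
    // Allow any whitespace between the words of a multi-word term.
    const body = escapeRegExp(term).replace(/ /g, "\\s+");
    return new RegExp("\\b" + body + "\\b", "i").test(reportText);
  });
}

const findings =
  "Urinary bladder, PROSTATE GLAND, and SEMINAL VESICLES are normal. " +
  "No suspicious osseous lesions.";
const genderHits = findGenderMismatchTerms("FEMALE", findings);
console.log(genderHits);
```

Whole-word matching keeps a term such as "prostate" from firing on "prostatectomy", although a production list would need to decide how such variants should be handled.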

For laterality errors, we only screened reports that were specific to a side of the body (including diagnostic mammograms and ultrasounds). For example, a radiograph of the right hand would be screened while radiographs of the bilateral hands would not. Additionally, we only screened the impression section of the report for the contralateral side because as we developed the system, we found this algorithm struck an appropriate balance of sensitivity and specificity and avoided “notification fatigue” with too many false-positive notifications. For example, if the impression section of a report for a right lower extremity ultrasound included the word “left,” it would be flagged, but if the word “left” were included in the findings section, it would not be flagged. We found that at our institution, the contralateral side was frequently included in the findings of many side-specific reports, which resulted in increased false-positive notifications if the entire report body was screened for the contralateral side. In the lower extremity ultrasound example, our technologists frequently image the contralateral common femoral vein which would be described within the body of the report but not in the impression (Fig. 1). An example of a report flagged for a potential laterality error and later confirmed as a true positive:

GENDER: XXX

PROCEDURE: FOOT 3 V, LEFT

DATE OF EXAM: Dec 20 2012 3:55 PM

CLINICAL HISTORY: Close fracture of the metatarsal.

COMPARISON: XXX

TECHNIQUE: Weight-bearing AP, oblique, and lateral views of the left foot.

FINDINGS: Mild resorption of callus around the nondisplaced fracture through the proximal LEFT fifth metatarsal. Otherwise, no significant change in the appearance of the fracture. No additional fractures are noted. Visualized joints are well-maintained.

IMPRESSION: Slight resorption callus around the RIGHT fifth metatarsal fracture which is otherwise unchanged in appearance.

Further details of our error-detection algorithms can be seen in Addendum 1.
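The laterality rule described above (screen only side-specific procedures, and search only the impression for the contralateral side) can be sketched as follows. This is a simplified illustration under stated assumptions rather than the authors' algorithm: it assumes the side appears as the word "left" or "right" in the procedure description and that the impression begins at an "IMPRESSION:" heading. The function names are hypothetical.

```javascript
// Determine the side named in the procedure description, or null if the
// study is bilateral, names both sides, or names no side.
function procedureSide(procedure) {
  if (/\bbilateral\b/i.test(procedure)) return null;
  const hasLeft = /\bleft\b/i.test(procedure);
  const hasRight = /\bright\b/i.test(procedure);
  if (hasLeft === hasRight) return null;
  return hasLeft ? "left" : "right";
}

// Return the text that follows the "IMPRESSION:" heading, or "" if absent.
function extractImpression(reportText) {
  const match = /\bIMPRESSION\s*:/i.exec(reportText);
  return match ? reportText.slice(match.index + match[0].length) : "";
}

// Flag the report if the impression mentions the contralateral side.
function checkLaterality(procedure, reportText) {
  const side = procedureSide(procedure);
  if (side === null) return null; // not side-specific, so not screened
  const other = side === "left" ? "right" : "left";
  const pattern = new RegExp("\\b" + other + "\\b", "gi");
  const hits = extractImpression(reportText).match(pattern) || [];
  if (hits.length === 0) return null;
  return { procedureSide: side, impressionSide: other, count: hits.length };
}

const footReport =
  "FINDINGS: Mild resorption of callus around the nondisplaced fracture " +
  "through the proximal LEFT fifth metatarsal.\n" +
  "IMPRESSION: Slight resorption callus around the RIGHT fifth metatarsal " +
  "fracture which is otherwise unchanged in appearance.";
const lateralityFlag = checkLaterality("FOOT 3 V, LEFT", footReport);
console.log(lateralityFlag);
```

Restricting the search to the impression is what keeps a contralateral mention in the findings (for example, the contralateral common femoral vein on a lower extremity ultrasound) from generating a notification.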

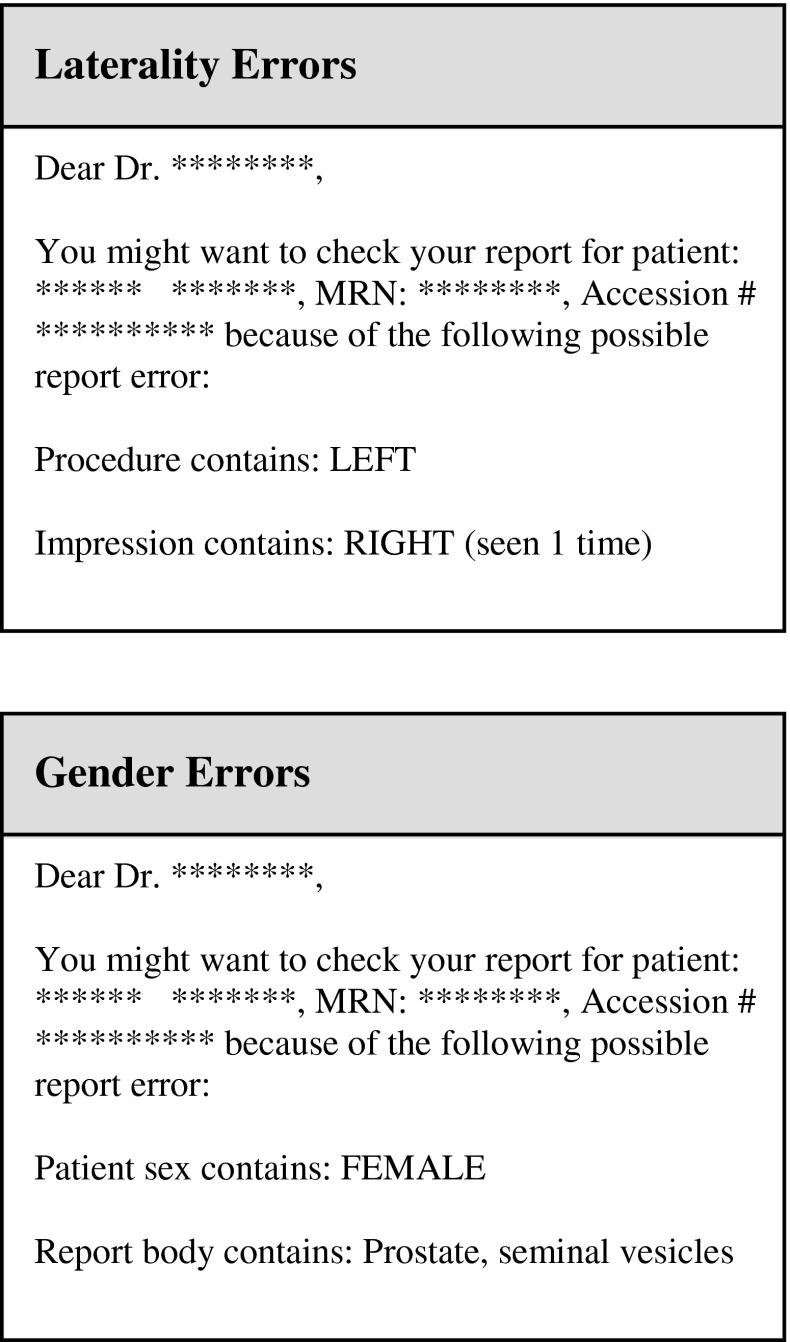

When a potential dictation error was flagged, an immediate e-mail and page notification were automatically sent to the appropriate dictating radiologist(s) (Fig. 2).

Fig. 2.

Gender errors

Example laterality error notification:

Dear Dr. *****,

You might want to check your report for patient: **** **** MRN: #######,

Accession: ####### because of the following possible report error.

Procedure contains: Left

Impression contains Right (seen 1 time)

Example gender error notification:

Dear Dr. *****,

You might want to check your report for patient: **** **** MRN: #######,

Accession: ####### because of the following possible report error.

Patient sex: Female

Report body contains: prostate, seminal vesicles
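As an illustration of how notification text like the examples above could be assembled from a flagged report, the following sketch builds the message body. It is hypothetical: the article states that delivery was handled by a Bash script, which is not reproduced here, and the object shapes and function names are assumptions made for this example.

```javascript
// Capitalize the first letter and lowercase the rest ("FEMALE" -> "Female").
function capitalize(word) {
  return word.charAt(0).toUpperCase() + word.slice(1).toLowerCase();
}

// Build the notification body for one flagged report.
function formatNotification(report, flag) {
  const header = [
    "Dear Dr. " + report.radiologist + ",",
    "You might want to check your report for patient: " +
      report.patient + " MRN: " + report.mrn + ",",
    "Accession: " + report.accession +
      " because of the following possible report error.",
  ];
  let detail;
  if (flag.type === "laterality") {
    const times = flag.count === 1 ? "time" : "times";
    detail = [
      "Procedure contains: " + capitalize(flag.procedureSide),
      "Impression contains " + capitalize(flag.impressionSide) +
        " (seen " + flag.count + " " + times + ")",
    ];
  } else if (flag.type === "gender") {
    detail = [
      "Patient sex: " + capitalize(flag.sex),
      "Report body contains: " + flag.terms.join(", "),
    ];
  } else {
    throw new Error("Unknown flag type: " + flag.type);
  }
  return header.concat(detail).join("\n\n");
}

// Placeholder identifiers only; no real patient data.
const reportInfo = { radiologist: "*****", patient: "**** ****", mrn: "#######", accession: "#######" };
const lateralityMessage = formatNotification(reportInfo, {
  type: "laterality", procedureSide: "left", impressionSide: "right", count: 1,
});
const genderMessage = formatNotification(reportInfo, {
  type: "gender", sex: "FEMALE", terms: ["prostate", "seminal vesicles"],
});
console.log(lateralityMessage + "\n\n---\n\n" + genderMessage);
```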

The radiology department was educated about the error notification system through a department-wide e-mail and a demonstration at our monthly departmental quality and safety meeting.

A baseline error detection rate was established by retrospectively applying our error detection algorithms to reports generated during the 4 months prior to initiation of the notification system (Apr 1, 2012–Jul 31, 2012). After initiation of the notification system, data were prospectively collected for 15 months (Aug 1, 2012–Oct 31, 2013). Outside films that contained administrative reports were flagged and excluded. One senior radiology resident and one attending radiologist with 4 years of experience manually reviewed all radiology reports that were flagged by the error notification system. Each flagged report was categorized as either a true-positive or false-positive gender or laterality error depending on whether an actual reporting error was judged to be present. Reports having true errors were then followed to see if they were ultimately corrected or addended. Radiology reports not flagged by the error notification system were not concurrently reviewed for gender or laterality errors due to the high volume of cases at our busy institution (approximately 132,000 preliminary and 260,000 final reports generated between Apr 1, 2012 and Oct 31, 2013). This limitation means that true-negative and false-negative rates are not established, and thus, derivative statistics such as sensitivity or specificity cannot be calculated.

Voice Recognition Software

The use of PowerScribe (Nuance, Burlington, MA) voice recognition software began at our institution in 2005; the current version at the time of analysis was PowerScribe 4.8.0.4.1. All users were formally trained by our informatics staff and had dictated with the software for a minimum of 9 months.

Statistical Analysis

All statistical analyses were performed using the Pearson chi-squared test of association. P values of 0.05 or less were considered significant.
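For readers who wish to check the comparisons, the following sketch computes a Pearson chi-squared statistic for a 2 × 2 table from counts reported in this article. It is an illustration, not the authors' analysis code. It assumes no continuity correction was applied (the article does not say), and it obtains the one-degree-of-freedom P value from a standard polynomial approximation of the complementary error function, so very small P values are only approximate.

```javascript
// Pearson chi-squared statistic for the 2 x 2 table
//   [eventsA, totalA - eventsA]
//   [eventsB, totalB - eventsB]
// Assumption: no Yates continuity correction.
function chiSquared2x2(eventsA, totalA, eventsB, totalB) {
  const a = eventsA;
  const b = totalA - eventsA;
  const c = eventsB;
  const d = totalB - eventsB;
  const n = a + b + c + d;
  const diff = a * d - b * c;
  return (n * diff * diff) / ((a + b) * (c + d) * (a + c) * (b + d));
}

// Complementary error function for x >= 0 (Abramowitz and Stegun 7.1.26;
// absolute error about 1.5e-7).
function erfc(x) {
  const t = 1 / (1 + 0.3275911 * x);
  const poly =
    t * (0.254829592 +
    t * (-0.284496736 +
    t * (1.421413741 +
    t * (-1.453152027 +
    t * 1.061405429))));
  return poly * Math.exp(-x * x);
}

// Upper-tail P value of a chi-squared statistic with 1 degree of freedom.
function pValueDf1(chi2) {
  return erfc(Math.sqrt(chi2 / 2));
}

// Flagged reports: 198/82,353 (pre) versus 628/309,304 (post).
const chi2Flagged = chiSquared2x2(198, 82353, 628, 309304);
const pFlagged = pValueDf1(chi2Flagged);

// Confirmed errors ultimately corrected: 17/116 (pre) versus 239/285 (post).
const chi2Corrected = chiSquared2x2(17, 116, 239, 285);

console.log(chi2Flagged.toFixed(2), pFlagged.toFixed(3), chi2Corrected.toFixed(1));
```

Under these assumptions, the flagged-report comparison gives a statistic of roughly 4.3 on one degree of freedom, which is consistent with the reported P = 0.04.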

Results

A total of 82,353 preliminary and final radiology reports were generated by the department during the 4-month period (Apr 1, 2012–Jul 31, 2012) before the initiation of the error notification system. On retrospective application of the error detection system to these reports, 198 of the 82,353 reports [0.24 %] were flagged as potential errors. One hundred sixteen of the 82,353 reports [0.14 %] were confirmed on manual inspection to have laterality or gender mismatch errors, of which 37 % (43/116) were laterality errors and 63 % (73/116) were gender errors (Table 1).

Table 1.

Radiology reports

| Reports | Cumulative (19 months) | Pre-intervention (4 months) | Post-intervention (15 months) | P value (pre/post) |
|---|---|---|---|---|
| Total number of reports generated | 391,657 | 82,353 | 309,304 | |
| Number of reports flagged by error detection algorithm | 826 (0.21 %) | 198 (0.24 %) | 628 (0.20 %) | 0.04 |
| Laterality | 547 (0.14 %) | 124 (0.15 %) | 423 (0.14 %) | |
| Gender mismatch | 279 (0.07 %) | 74 (0.09 %) | 205 (0.06 %) | |
| Flagged reports that are positive for errors (true positives) | 401 (0.10 %) | 116 (0.14 %) | 285 (0.09 %) | <0.0001 |
| Laterality | 141 (0.04 %) | 43 (0.05 %) | 98 (0.03 %) | |
| Gender mismatch | 260 (0.07 %) | 73 (0.09 %) | 187 (0.07 %) | |
| Confirmed errors that were ultimately corrected | 64 % (256/401) | 15 % (17/116) | 84 % (239/285) | <0.0001 |
| Laterality error precision | 26 % (141/547) | 35 % (43/124) | 23 % (98/423) | |
| Gender error precision | 93 % (260/279) | 99 % (73/74) | 91 % (187/205) | |

After the initiation of the notification system, a 15-month post-intervention period (Aug 1, 2012–Oct 31, 2013) was analyzed. During that period, the department generated a total of 309,304 preliminary or final radiology reports, all of which were screened by the error detector. Of those reports, 628 [0.20 %] were flagged as potential errors, a 16.7 % reduction in the rate of flagged reports relative to baseline (P = 0.04). Two hundred eighty-five of the 309,304 reports [0.09 %] were confirmed to have laterality or gender errors, a 35.7 % reduction in the rate of confirmed errors after initiation of the notification system (P < 0.0001). Thirty-four percent (98/285) of these confirmed errors were laterality errors, and 66 % (187/285) were gender errors (Table 1). No flagged report contained both a laterality error and a gender error.

Over the evaluated 19 months (4 months pre-intervention and 15 months post-intervention), the precisions of the laterality and gender error detection algorithms were 26 % (141/547) and 93 % (260/279), respectively (Table 1). The cumulative detected laterality and gender mismatch error rates were 0.04 % (141/391,657) and 0.07 % (260/391,657), respectively (Table 2). Our laterality error rate is much higher than previously published data by Sangwaiya (0.00008 %) [13] and Landau (0.0007 %) [19] and more in line with the rate described by Luetmer (0.055 %) [1]. The differences compared to Sangwaiya and Landau are likely attributable to differences in study design and inclusion criteria. Landau et al. evaluated their departmental laterality error rates via radiologist self-reporting after proofing reports with highlighted terms of laterality in a separate window. This required the dictating radiologist to detect their own errors and proactively report an error when found. Sangwaiya et al. limited their evaluation to reports that already had an addendum correcting the laterality error. Luetmer et al. retrospectively searched their RIS for reports that had any change attributed to laterality, which most closely matches our inclusion criteria but uses a different methodology. Our study is unique in that we established a baseline error rate for both laterality and gender errors, prospectively evaluated changes in these rates after intervention with an automatic notification system, and then manually scrutinized flagged reports to confirm or deny the presence of a true error. At the time of our study, we were not aware of any published reports of gender mismatch error rates.
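The precision and detected error rate figures quoted above follow directly from the cumulative counts in Table 1. A short worked sketch, with hypothetical variable and function names, makes the arithmetic explicit: precision is the number of confirmed errors divided by the number of flagged reports, and the detected error rate is the number of confirmed errors divided by all reports screened.

```javascript
// Cumulative (19-month) counts taken from Table 1.
const totalReports = 391657;
const cumulative = {
  laterality: { flagged: 547, confirmed: 141 },
  gender: { flagged: 279, confirmed: 260 },
};

// Precision = confirmed true positives / all flagged reports.
function precisionPercent(counts) {
  return Math.round((100 * counts.confirmed) / counts.flagged);
}

// Detected error rate = confirmed errors / all reports screened.
function detectedRatePercent(counts, total) {
  return ((100 * counts.confirmed) / total).toFixed(2);
}

const summary = {
  lateralityPrecision: precisionPercent(cumulative.laterality),
  genderPrecision: precisionPercent(cumulative.gender),
  lateralityRate: detectedRatePercent(cumulative.laterality, totalReports),
  genderRate: detectedRatePercent(cumulative.gender, totalReports),
};
console.log(summary);
```

Because unflagged reports were not reviewed, these rates count only errors the algorithm detected, and sensitivity cannot be derived from the same counts.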

Table 2.

Laterality and gender mismatch error rates

| Reports | Cumulative (19 months) | Pre-intervention (4 months) | Post-intervention (15 months) |
|---|---|---|---|
| Flagged reports with true errors | 401 | 116 | 285 |
| Total reports with laterality error | 141 | 43 | 98 |
| Radiographs | 36 % (51/141) | 30 % (13/43) | 39 % (38/98) |
| Women’s imagingᵃ | 29 % (41/141) | 24 % (11/43) | 31 % (30/98) |
| USᵇ | 16 % (22/141) | 12 % (5/43) | 17 % (17/98) |
| MRI | 9 % (12/141) | 12 % (5/43) | 8 % (7/98) |
| CT | 6 % (9/141) | 7 % (3/43) | 6 % (6/98) |
| Other (NM and IR) | 4 % (6/141) | 14 % (6/43) | 0 % (0/98) |
| Total reports with gender error | 260 | 73 | 187 |
| Radiographs | 18 % (47/260) | 10 % (7/73) | 21 % (40/187) |
| Women’s imagingᵃ | 1 % (2/260) | 1 % (1/73) | 1 % (1/187) |
| USᵇ | 10 % (25/260) | 10 % (7/73) | 8 % (15/187) |
| MRI | 6 % (17/260) | 14 % (10/73) | 5 % (10/187) |
| CT | 43 % (112/260) | 44 % (32/73) | 43 % (80/187) |
| Other (NM and IR) | 22 % (57/260) | 21 % (16/73) | 22 % (41/187) |

ᵃ Women’s imaging includes screening and diagnostic mammograms, diagnostic breast ultrasound, and breast-related interventions (ultrasound and stereotactic)

ᵇ Excludes women’s imaging-related ultrasounds

Next, we analyzed those reports that had confirmed errors (116 pre-intervention and 285 post-intervention) to see if they were ultimately corrected. As expected, the number of ultimately corrected reports was significantly higher in the post-intervention subset (239/285 reports [84 %]) as compared to the pre-intervention subset (17/116 reports [15 %]), P < 0.0001, as the dictating radiologist(s) received a text page and e-mail regarding potential errors after the implementation of the error notification system (Fig. 2 and Table 1).

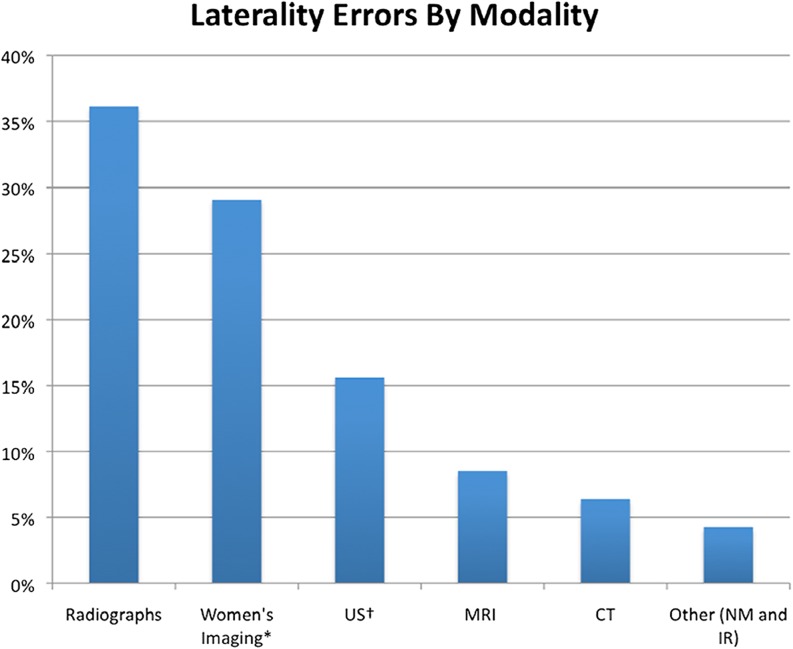

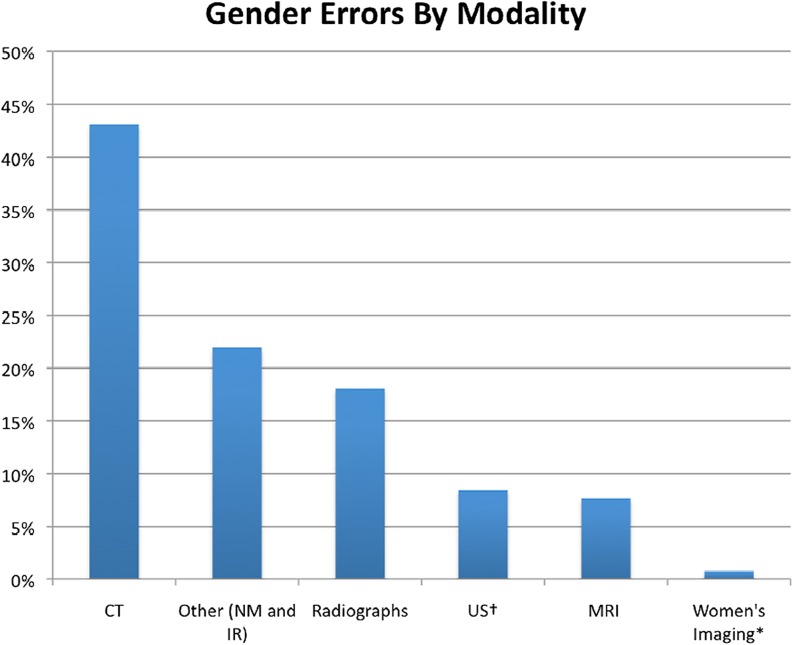

We also analyzed the breakdown of types of errors by modality. Radiographs and women’s imaging procedures (mammograms, breast ultrasound, and/or biopsy) were the top two modalities resulting in laterality errors, accounting for 36 % and 29 %, respectively (Fig. 3 and Table 2). Cross-sectional CT reports generated the most gender errors, accounting for 43 %. Nuclear medicine, interventional radiology, and radiograph reports also generated substantial gender errors, accounting for 22 % (combined nuclear medicine and interventional) and 18 %, respectively (Fig. 4 and Table 2). Interestingly, two gender errors were generated by women’s imaging reports, which was unexpected; both were simply the result of voice recognition errors. Overall, more reports were flagged in female patients (476/826 [58 %]) than in male patients (350/826 [42 %]) for unclear reasons.

Fig. 3.

Laterality errors by modality

Fig. 4.

Gender errors by modality

Discussion

It is important to increase awareness among radiologists that errors in radiology reports inevitably occur, particularly after the introduction of SR, and that errors may impact patient management and radiologist credibility. Our study not only documents report error rates at our institution but also details an automated notification system functioning as a quality improvement intervention that significantly decreased report error rates, significantly improved correction of report errors and thus the accuracy of the clinical record, and thereby positively impacted patient management. The fact that potential error detection and confirmed error rates decreased significantly after initiation of the notification system suggests the heightened awareness of these errors causes radiologists to more carefully avoid these errors, similar to those findings by Lumish et al. [20]. In fact, several radiologists have told us anecdotally that they are much more careful to avoid gender and laterality errors on particular procedures which can be problematic after having received several notifications. Our study also confirmed that our feedback via notifications significantly improves the ultimate correction of errors after the fact, which is another important and clinically significant outcome of our system.

Limitations of our study include underestimation of radiology dictation errors. The notification system is not currently designed to detect wrong-word substitution, nonsensical phrases, incorrect punctuation, or errors of omission, to name a few. Our notification system only flags laterality and gender errors, with specific descriptors as described in Fig. 1 and Addendum 1. The system is designed to flag laterality mismatch errors if the side (i.e., right or left) mentioned within the impression is discordant with the HL7 header data. Therefore, laterality errors within the body of the report, and reports for studies without a laterality designation in the procedure HL7 data (e.g., bilateral knee radiographs, portable chest radiographs), will not be detected by the error notification system. These errors, not to mention the breadth of other unforeseen report errors, will be underestimated. Improved algorithms using artificial intelligence or natural language processing are future considerations that may allow us to detect these errors.

There is a relatively high false-positive detection rate in laterality errors, as many report impressions will mention the contralateral side (e.g., a lower extremity CT that also includes portions of the contralateral extremity in the field of view). Through our attempts to balance the sensitivity of our system against potential “pager fatigue,” we have almost certainly missed some laterality errors as a result of decreasing our sensitivity. On the other hand, some radiologists may have still felt that false-positive notifications were too frequent and may have ignored some or all notifications, thus missing an opportunity to correct true-positive report errors. Any system attempting to detect errors such as these must strike a balance that satisfies the potentially unique needs of the department.

Radiology reports not flagged by the notification system were not concurrently reviewed for missed errors (false negatives) due to the sheer volume of reports at our institution. Our team did not have the resources to review a total of 391,657 reports manually to determine if a gender or laterality error was missed by the error notification system. Therefore, true-negative (TN) and false-negative (FN) rates were not obtained, limiting our ability to completely characterize our algorithm.

Finally, our notification system only detected errors after reports were signed. For trainees, this worked well as they still had an opportunity to correct their preliminary report before it became finalized, but for the attending radiologists, this could only be corrected via an addendum. The slight delay in notification (a few minutes) along with the hurdles of addending a report may have decreased the rate at which the report is ultimately corrected. While this delay is short, it does pose the possibility of the signed report being accessed by the referring physician(s) and possibly the patient, leaving a small window of possible medical error. Ideally, our notification system could be incorporated directly into our SR system so the error detection, and correction, could occur during report dictation and editing before the report is finalized. Incorporation into our current version of SR is something we are actively exploring as a recent major upgrade has made this possible.

Given the standardization of health-related messaging via HL7, an automatic notification system could be implemented at other institutions where custom alert notifications could be created to address the specific needs and deficiencies of each radiology department. At our institution, we have expanded the notification alerts to also include improper ICD-9 codes, the lack of fluoroscopy time documentation, and certain template errors specific to our institution. While many components of this system could be used at other institutions, some customization is still required to adjust for site-specific HL7 messages, study descriptions, and desired sensitivity and specificity of potential error detection. This makes it difficult to provide an easily deployable package for distribution, but the authors have included a more detailed description of the error detection algorithms in Addendum 1 and would be happy to help other interested institutions and can be contacted for this purpose.

Conclusion

We developed an automated quality improvement tool that improves radiology report quality by rapidly notifying radiologists of potential laterality and gender mismatch dictation errors. This system successfully detects report errors, reduces the overall rate of errors, facilitates rapid report correction, and improves the final clinical record.

Footnotes

Presentation

Awarded RSNA Trainee Research Prize for presentation at the 2013 RSNA annual meeting in the informatics section.

References

1. Luetmer MT, Hunt CH, McDonald RJ, Bartholmai BJ, Kallmes DF. Laterality errors in radiology reports generated with and without voice recognition software: frequency and clinical significance. J Am Coll Radiol. 2013;10(7):538–43. doi: 10.1016/j.jacr.2013.02.017.
2. Pezzullo JA, Tung GA, Rogg JM, Davis LM, Brody JM, Mayo-Smith WW. Voice recognition dictation: radiologist as transcriptionist. J Digit Imaging. 2008;21(4):384–9. doi: 10.1007/s10278-007-9039-2.
3. Kauppinen T, Koivikko MP, Ahovuo J. Improvement of report workflow and productivity using speech recognition: a follow-up study. J Digit Imaging. 2008;21(4):378–82. doi: 10.1007/s10278-008-9143-y.
4. Chang CA, Strahan R, Jolley D. Non-clinical errors using voice recognition dictation software for radiology reports: a retrospective audit. J Digit Imaging. 2011;24(4):724–8. doi: 10.1007/s10278-010-9344-z.
5. Gale B, Safriel Y, Lukban A, Kalowitz J, Fleischer J, Gordon D. Radiology report production times, voice recognition vs. transcription. Radiol Manage. 2001;23(2):18–22.
6. Sferrella SM. Success with voice recognition. Radiol Manage. 2003;25(3):42–49.
7. Marquez LO, Stewart H. Improving medical imaging report turnaround times: the role of technology. Radiol Manage. 2005;27(2):26–31.
8. Rana DS, Hurst G, Shepstone L, Pilling J, Cockburn J, Crawford M. Voice recognition for radiology reporting: is it good enough? Clin Radiol. 2005;60(11):1205–12.
9. Basma S, Lord B, Jacks LM, Rizk M, Scaranelo AM. Error rates in breast imaging reports: comparison of automatic speech recognition and dictation transcription. AJR Am J Roentgenol. 2011;197(4):923–7. doi: 10.2214/AJR.11.6691.
10. McGurk S, Brauer K, Macfarlane TV, Duncan KA. The effect of voice recognition software on comparative error rates in radiology reports. Br J Radiol. 2008;81(970):767–70. doi: 10.1259/bjr/20698753.
11. Strahan RH, Schneider-Kolsky ME. Voice recognition versus transcriptionist: error rates and productivity in MRI reporting. J Med Imaging Radiat Oncol. 2010;54(5):411–4. doi: 10.1111/j.1754-9485.2010.02193.x.
12. Quint LE, Quint DJ, Myles JD. Frequency and spectrum of errors in final radiology reports generated with automatic speech recognition technology. J Am Coll Radiol. 2008;5(12):1196–9. doi: 10.1016/j.jacr.2008.07.005.
13. Sangwaiya MJ, Saini S, Blake MA, Dreyer KJ, Kalra MK. Errare humanum est: frequency of laterality errors in radiology reports. AJR Am J Roentgenol. 2009;192(5):W239–44. doi: 10.2214/AJR.08.1778.
14. Hawkins CM, Hall S, Hardin J, Salisbury S, Towbin AJ. Prepopulated radiology report templates: a prospective analysis of error rate and turnaround time. J Digit Imaging. 2012;25(4):504–11. doi: 10.1007/s10278-012-9455-9.
15. Hawkins CM, Hall S, Zhang B, Towbin AJ. Creation and implementation of department-wide structured reports: an analysis of the impact on error rate in radiology reports. J Digit Imaging. 2014;27(5):581–7. doi: 10.1007/s10278-014-9699-7.
16. Larson DB, Towbin AJ, Pryor RM, Donnelly LF. Improving consistency in radiology reporting through the use of department-wide standardized reporting. Radiology. 2013;267(1):240–50. doi: 10.1148/radiol.12121502.
17. Kwaan MR, Studdert DM, Zinner MJ, Gawande AA. Incidence, patterns, and prevention of wrong-site surgery. Arch Surg. 2006;141(4):353–7. doi: 10.1001/archsurg.141.4.353.
18. Seiden SC, Barach P. Wrong-side/wrong-site, wrong-procedure, and wrong-patient adverse events: are they preventable? Arch Surg. 2006;141(9):931–9. doi: 10.1001/archsurg.141.9.931.
19. Landau E, Hirschorn D, Koutras I, Malek A, Demissie S. Preventing errors in laterality. J Digit Imaging. 2014 Oct 2.
20. Lumish HS, Sidhu MS, Kallianos K, Brady TJ, Hoffmann U, Ghoshhajra BB. Reporting scan time reduces cardiac MR examination duration. J Am Coll Radiol. 2014;11(4):425–8. doi: 10.1016/j.jacr.2013.05.037.