Abstract

Objectives: The EuroScan International Network is a global network of publicly funded early awareness and alert (EAA) systems for health technologies. We describe the EuroScan member agency systems and methods, and highlight the potential for increased collaboration.

Methods: EuroScan members completed postal questionnaires in December 2011. These were supplemented by telephone interviews in 2012 to elicit additional information and to check the equivalence of responses. Information was updated between March and May 2013.

Results: Fifteen of the seventeen member agencies responded. The principal purposes of the agencies are to inform decisions on coverage or reimbursement of health services and to inform decisions on undertaking secondary research. The main users of information are national governments; health professionals; health services purchasers, commissioners, and decision makers; and healthcare providers. Most EuroScan agencies are small, with almost half having fewer than two whole time equivalent staff. Ten agencies use both active and passive identification approaches, and four use only active approaches. Most start identification in the experimental or investigational stages of the technology life cycle. All agencies assessed technologies at some point between the “investigational” and the “established, but under diffusion” stages. Barriers to collaboration revolve around different system aims, purposes, and requirements; a lack of staff, finance, or opportunity; language differences; and restrictions on dissemination.

Conclusions: Although many barriers to collaboration were identified, the majority of agencies supported increased collaboration, either involving the whole EuroScan Network or between individual agencies. Despite differences in their detailed identification processes, members thought that identification was the most feasible phase in which to develop additional collaboration.

Keywords: Early awareness and alert systems, Horizon scanning, Healthcare technology

Early awareness and alert (EAA) systems are increasingly considered an essential component of health technology assessment (HTA) and of decision making about the evaluation, adoption, and diffusion of emerging and new health technologies. Indeed, with growing discussion of the benefits of early dialogue with industry, EAA systems remain at the forefront of methodological developments in this area. The International Information Network on New and Emerging Health Technologies (the EuroScan International Network) is a global network of publicly funded, not-for-profit EAA systems for health technologies (www.euroscan.org.uk). In 2012, the network updated its aims and goals, which now include sharing skills and experiences, continuing to develop methodological approaches to EAA activities, and exchanging information about new and emerging health technologies on an ongoing basis. This exchange is facilitated by a web-based database that currently holds just over 2,500 entries. Almost 90 percent of the information in the database is publicly accessible; the remainder contains commercially or politically sensitive information.

A key activity for the network is the development and dissemination of methods, covering both the setup and organization of individual EAA systems and the detailed processes used within them for identification, filtration and prioritization, assessment, and dissemination. Information on these methods can be found in the EuroScan methods toolkit (1). Previous comparisons of EAA systems and methods have looked at the systems as a whole (2–6), reported on and summarized key methods (7;8), or assessed specific aspects of the processes used, for example, the identification, filtration, and/or prioritization of new and emerging health technologies (9–12).

The comparative analysis of EuroScan International Network agencies in 2008–09 found that fourteen of the twenty agencies that responded (70 percent) were set up to inform coverage or reimbursement decisions and decisions about secondary research, ten (50 percent) informed decisions about capital or recurrent spending, and seven (35 percent) informed decisions about primary research (5). Detailed questions on methods found that fifteen agencies (75 percent) either relied on or supplemented their identification with calls for, and acceptance of, suggestions from individuals or organizations outside their agency; eleven agencies (55 percent) used primary sources available on the Internet for identification; and two agencies (10 percent) obtained information directly from commercial developers (2). Eighteen agencies (90 percent) had a formal filtration process, implemented by in-house staff in twelve agencies (60 percent), by a scientific board in five (25 percent), by the policy-making users in three (15 percent), and by individual experts in two (10 percent). Eighteen agencies (90 percent) reported using prioritization criteria covering patient numbers and burden of disease; clinical benefit; economic impact; availability of evidence; current diagnostic, therapeutic, or other management options; safety; and social, ethical, and legal aspects. Agencies produced a range of outputs, and the depth of assessment appeared to relate to the time frame of reporting: agencies investigating technologies 2 to 3 years before launch were less likely to include evidence of clinical benefit in their reports than agencies investigating technologies nearer to product launch.

A systematic review of methods used for healthcare horizon scanning, undertaken by the Agency for Healthcare Research and Quality (AHRQ) as part of a review of its horizon scanning project, found twenty-three formal horizon scanning programs, most of which are or have been members of the EuroScan International Network, as well as several activities carried out in the United States by both governmental and nongovernmental agencies (8). Some of these activities were commissioned by AHRQ from contractors such as the ECRI Institute; others related to licensing agencies, such as the US Food and Drug Administration, and to decision makers for healthcare funders, such as Medicare and Medicaid. Although the authors identified a gap in the evidence on detailed methods, they reported that the non-EuroScan projects had very different goals, target technologies, and time horizons, and used a variety of methods to identify and assess individual technologies.

Although Wild and Langer's overview of horizon scanning systems mentioned non-EuroScan horizon scanning activity by organizations such as the ECRI Institute and Hayes Incorporated, it included details only from the thirteen agencies that were then EuroScan members (6). Their review concluded that EuroScan members had a shared understanding of the activity and used common terminology. The systems differed in size, resources, operational level, mandate, customers, and organizational embedding, and these differences led to variation in the methods used for the identification, filtration and prioritization, assessment, and dissemination of health technologies.

Within the EuroScan International Network, members were aware of similarities in purpose, identification sources, and methods between agencies and, because of constrained health and research budgets, were conscious of the need to reduce duplication where practicable, while maintaining each agency's own service and delivery aims and needs. The potential to improve the quality of EAA outputs through closer collaboration was also an important consideration.

OBJECTIVES

We aimed to update the comparative analysis undertaken in 2008–09, to describe the current EAA systems of EuroScan International Network member agencies, to identify similarities and differences, and to highlight any opportunities for increased collaboration between member agencies or within the Network as a whole.

METHODS

In December 2011, EuroScan International Network member agencies were asked to complete a questionnaire about their EAA system structure and funding; its purpose and coverage; the methods used for identification, filtration, assessment, and reporting; their use of clinical and scientific experts; and means of dissemination. We also asked about existing collaborations between members and/or with other nonnetwork organizations and about any real and perceived barriers to collaboration.

Following the initial survey in December 2011, a semi-structured telephone interview was held with each participant to clarify individual answers, to check their understanding of the terminology used by and between members, and to elicit additional opinions on how collaboration could be promoted or assisted. The interviews were carried out between February and May 2012, by Skype or conventional telephone, using predetermined questions to guide the conversation. The material was transcribed (http://scribie.com), and the interviewer (Rosimary Terezinha de Almeida) reviewed the transcripts to verify their accuracy and consistency. The results were incorporated into each agency's questionnaire information.

All information was checked by members, who were given a final opportunity to update the information they had provided between March and May 2013.

RESULTS

Of the seventeen EuroScan International Network members in January 2013, fifteen supplied sufficient information for inclusion in the study, in the initial questionnaire (December 2011), the interview (February to May 2012), and/or the final update (March to May 2013). For a list of respondents and their agency abbreviations, please refer to the acknowledgements section.

Of the fifteen agencies, twelve were European and three were from outside Europe (Australia, Canada, and Israel). Five were hosted by or were part of an HTA agency; six were hosted by organizations involved in health policy or decision making, or in supporting health policy, for example, health or other government ministries; two by organizations involved in health research; and one each by a hospital system and a university. Eleven systems were funded solely by the national health system, department of health, or health services; one solely by a regional health system; two by national and regional departments or health services; and one by the host HTA agency. Staffing levels for EAA activities varied from 0.15 to 21.5 whole time equivalents (Table 1).

Table 1.

Number of Staff, Types of Technologies Covered, and Use of Experts by 15 Responding Agencies

| | Number of agencies |
|---|---|
| Number of staff in EAA system | |
| < 1 whole time equivalent staff | 4 |
| 1 to < 2 whole time equivalent staff | 3 |
| 2 to < 5 whole time equivalent staff | 5 |
| 5 to < 10 whole time equivalent staff | 2 |
| ≥ 10 whole time equivalent staff | 1 |
| Types of technologies | |
| Devices (includes imaging equipment) | 13 |
| Diagnostics (includes diagnostic and prognostic markers) | 13 |
| Interventional procedures | 12 |
| Pharmaceuticals (includes vaccines) | 10 |
| Programs, e.g., screening or vaccination | 10 |
| Healthcare settings, e.g., organizational changes, professional boundaries | 8 |
| Others | 0 |
| Stage of EAA activity supported by clinical and scientific experts | |
| Topic identification | 12 |
| Topic filtration | 6 |
| Topic prioritisation | 11 |
| Early assessment | 10 |
| Report writing | 10 |
| Peer review | 12 |
| Other: information provision; follow-up of emerging technologies | 2 |
| Experts not used | 0 |

Purpose, Customers, and Coverage

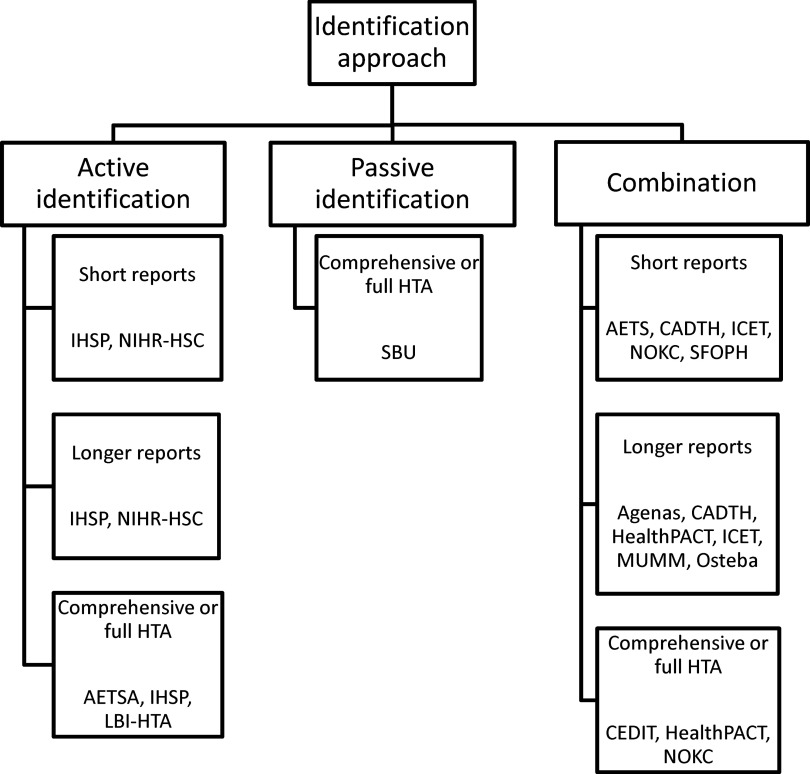

Figure 1 shows the main reported purposes of the EAA systems. Over three quarters of the agencies considered their main purpose to be informing decisions on coverage or reimbursement of health services and informing decisions on undertaking secondary research, for example, health technology assessments and systematic reviews. Many of the other purposes related to decision making about the availability and adoption of new health technologies, or to informing primary research, that is, the collection of original data, for instance, in clinical trials. Other purposes included identifying technologies with legal or ethical concerns, informing decisions about the development of clinical guidance, developing forecasting models for drug utilization and expenditure, and collaborating with international organizations involved in drug evaluation.

Figure 1.

Main purpose of EuroScan International Network member EAA systems.

Most agencies had more than one principal intended user of their information. Thirteen agencies informed national government health departments and ministers; twelve informed healthcare professionals; eleven informed health service purchasers, commissioners, or other decision makers; eleven informed healthcare providers, for example, hospitals; seven informed regional, state, or provincial governments; six informed national research and development programs; four informed insurance or reimbursement organizations; two informed consumers of health services; and two informed other users, including an ombudsman, a national cancer care guideline program, a national council for quality and prioritization in health care, and producers of evaluations and guidance.

Four agencies covered all types of technologies (drugs, devices, diagnostics, interventional procedures, programs, and healthcare settings), and two covered all types except healthcare settings. Table 1 shows the coverage of individual technology types. In terms of clinical areas, fourteen EAA systems covered all disease areas; the remaining system considered only oncology drugs.

Identification, Filtration, and Prioritization

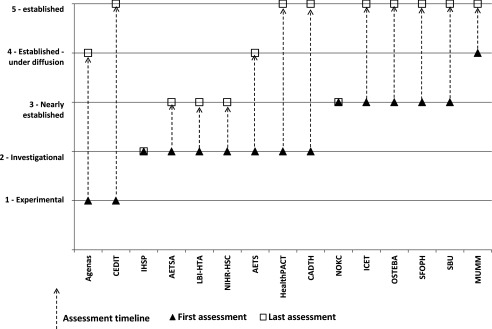

To examine the potential for increased collaboration, we created a variable from member responses that categorized the principal method of identifying new and emerging health technologies as active, passive, or a combination: (i) Active: identification through an active search of several sources of technology news and literature, or through direct contact with industry. (ii) Passive: topics suggested or requested by experts, government, patients or the public, or a board of advisers, and/or industry applications or notifications. (iii) Combination: the use of both active and passive methods.
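The three-way categorization above can be written as a simple decision rule. The following is a minimal sketch, assuming each agency's responses can be reduced to a set of source labels; the label names, the `Approach` enum, and the `classify_identification` function are hypothetical illustrations, not part of the survey instrument.

```python
from __future__ import annotations

from enum import Enum

# Hypothetical source labels for illustration only; the survey's actual
# response options are not reproduced here.
ACTIVE_SOURCES = {"technology_news", "literature", "industry_contact"}
PASSIVE_SOURCES = {
    "expert_suggestion",
    "government_request",
    "patient_or_public",
    "advisory_board",
    "industry_notification",
}


class Approach(Enum):
    ACTIVE = "active"
    PASSIVE = "passive"
    COMBINATION = "combination"


def classify_identification(sources: set[str]) -> Approach:
    """Categorize an agency's reported identification sources."""
    uses_active = bool(sources & ACTIVE_SOURCES)    # any active search source?
    uses_passive = bool(sources & PASSIVE_SOURCES)  # any suggestion/notification route?
    if uses_active and uses_passive:
        return Approach.COMBINATION
    if uses_active:
        return Approach.ACTIVE
    if uses_passive:
        return Approach.PASSIVE
    raise ValueError("no recognized identification source reported")


print(classify_identification({"literature", "expert_suggestion"}).value)  # combination
```

Note that industry appears on both sides, as in the definitions: direct contact initiated by the agency counts as active, whereas industry applications or notifications count as passive.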

Ten agencies used both active and passive identification methods, four used only active methods (AETSA, IHSP, LBI-HTA, NIHR-HSC), and one used only passive methods (SBU). Nine agencies began their identification process when technologies were in the “experimental” phase (phase I and II or equivalent), four when technologies were in the “investigational” phase (phase III or equivalent), and two when technologies were “nearly established” (some use outside clinical trials, but not widely available). Because agencies identified topics across several development stages, identification included the “experimental” phase in nine agencies, the “investigational” phase in thirteen, the “nearly established” phase in thirteen, the “established under diffusion” phase (used outside clinical trials, but not fully diffused) in ten, and the “established” phase (full market use, but some controversial issues remain) in eight.
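Because each agency identifies topics over several stages, the per-stage figures above are tallies of overlapping ranges rather than a partition of the fifteen agencies. The sketch below shows that tallying, assuming (as an illustration only) that each agency's activity can be summarized by a first and last stage on the ordered life-cycle scale and that it covers every stage in between. The `stage_coverage` function and the three example agencies are hypothetical and are not the survey data.

```python
from __future__ import annotations

from collections import Counter

# Ordered technology life-cycle stages, as named in the survey.
STAGES = [
    "experimental",
    "investigational",
    "nearly established",
    "established under diffusion",
    "established",
]


def stage_coverage(spans: list[tuple[str, str]]) -> dict[str, int]:
    """Count agencies active at each stage from (first, last) stage pairs.

    Assumes an agency covers every stage from its first to its last, inclusive.
    """
    counts: Counter[str] = Counter()
    for first, last in spans:
        start, end = STAGES.index(first), STAGES.index(last)
        if start > end:
            raise ValueError(f"{first!r} comes after {last!r}")
        counts.update(STAGES[start:end + 1])  # one count per covered stage
    return {stage: counts[stage] for stage in STAGES}


# Hypothetical example with three agencies (not the survey's data).
example = [
    ("experimental", "nearly established"),
    ("investigational", "established"),
    ("nearly established", "nearly established"),
]
print(stage_coverage(example))
# {'experimental': 1, 'investigational': 2, 'nearly established': 3,
#  'established under diffusion': 1, 'established': 1}
```

Applied to the survey's own start stages, this view also explains why the “experimental” count (nine) equals the number of agencies starting there: no agency can reach an earlier stage than its first.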

Thirteen agencies carried out filtration and/or prioritization in-house: entirely in-house in four agencies, in-house with experts in five, in-house with a scientific board in two, in-house with both experts and a scientific board in one, and in-house with customers and commissioners in one. One further agency used only a scientific board for both filtration and prioritization.

The filtration and prioritization criteria used within the EAA systems had common themes around (i) the patient group size and burden of disease; (ii) the anticipated clinical benefits; (iii) the presence of innovation and current alternatives; (iv) the potential costs and organizational impact; (v) the likely diffusion, timing, and regulation; (vi) any potential ethical, social, and other impacts; and (vii) individual technology relevance and potential interest to policy makers and health systems.

Assessment, Outputs, and Dissemination

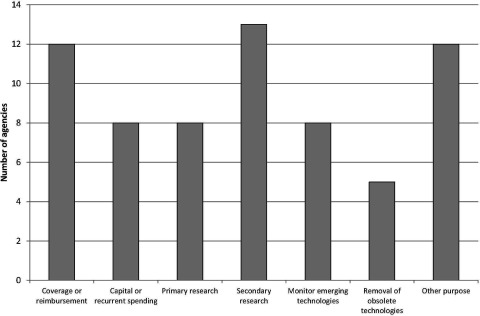

Two agencies assessed technologies in the “experimental” phase, nine in the “investigational” phase, thirteen when the technologies were “nearly established”, ten in the “established under diffusion” stage, and eight when the technologies were “established” (Figure 2). Five agencies produced their outputs only in their first (non-English) language, three only in English, and seven in both their first language and English.

Figure 2.

Stage in technology lifecycle for assessment, showing the first and last stages for the production of assessments.

Seven agencies produced short reports of one to four pages, eight produced longer reports of four to ten pages, four produced comprehensive reports of over ten pages, and four produced other types of reports, such as in-depth reviews of emerging technologies across a patient pathway. Six agencies produced an estimated one to ten reports per year, three produced between eleven and twenty, three between twenty-one and fifty, and two over fifty. Seven agencies updated their reports, two did so occasionally, and five did not update them.

Eleven agencies included an assessment of a technology's potential for significant health service impact in their main EAA-related output, thirteen included an assessment of safety and efficacy, eleven of clinical effectiveness, and seven of cost-effectiveness, at least in some instances. Other areas covered in the main outputs were costs and other clinical, patient, and financial impacts (five agencies); social, ethical, and legal impact (four agencies); regulatory status and stage of diffusion (three agencies); innovation and place in therapy (three agencies); and burden of disease, uncertainties, and trial quality (one agency each).

Twelve agencies published their reports openly on their agency website, seven in another “open” way (for example, a widely distributed newsletter or a peer-reviewed journal), and ten mentioned the EuroScan database as a means of dissemination. Three agencies published only internally or to direct customers/users, and six to health professionals.

Use of Experts

All fifteen agencies used experts at some point in their processes (Table 1). Six agencies paid their experts and nine did not. Those that paid differed in what they paid for: one paid travel expenses only, three paid a fee plus travel expenses, and two paid only a fee for assessment, peer review, or report writing.

Collaboration

Eleven agencies uploaded information about the new or emerging health technologies they had identified or assessed to the EuroScan International Network web-based database. Among the agencies that used the database, the proportion of their 2012 EAA output recorded in it varied from 5 percent to 100 percent. Seven agencies reported more specific collaboration with other EAA agencies both inside and outside the EuroScan International Network. This was for identification (four agencies: Osteba, AETS, CADTH, and SFOPH), prioritization (two agencies: IHSP and SFOPH), assessment (four agencies: IHSP, LBI-HTA, SBU, and SFOPH), dissemination (one agency: SBU), and education (one agency: SBU).

Agencies that reported no collaboration gave the main reasons as:

- Lack of financial or human resources (four agencies)
- Lack of opportunity (three agencies)
- Processes and methods that are specific to our agency (three agencies)
- Requirement of customers that may be different to that of other agencies (one agency)
- Language barrier (one agency)
- The main focus of work probably differs from that of the other members (one agency)

A classification of the agencies by identification approach and format of principal output is shown in Figure 3.

Figure 3.

Classification of EuroScan International Network member agencies by identification approach and format of principal output.

DISCUSSION

Summary of Key Results

We found that the principal purposes of the EuroScan International Network member EAA systems are to inform decisions on coverage or reimbursement of health services and to inform decisions on undertaking secondary research. As expected, given these purposes, the main users of information from the EAA systems were national governments and ministries; health professionals; health services purchasers, commissioners, or other decision makers; and healthcare providers. Most of the EuroScan agencies were small, with almost half having fewer than two whole time equivalent staff at the time of this survey.

All but one agency used active methods of identification, and all but two started identification in the experimental or investigational stages of the technology life cycle. We found that many agencies continue to identify technologies when they are “established”, challenging the current boundaries of EAA activity. This is, however, consistent with the finding that many EAA systems were an integral part of an HTA agency or an organization carrying out HTA activities. In these cases, the EAA system may not function as a separate entity with specific outputs confined to information about technologies before they have diffused.

Filtration and prioritization criteria were as anticipated and similar between agencies. A key feature of the EAA systems was the selection of the most significant health technologies so that health service decision makers, HTA agencies, and others can target their resources toward further research, evaluation, and decision making. Experts participated in most system activities except filtration, where only six agencies used them. Clinical and scientific experts can provide essential insight into the potential significance of new and emerging technologies and their place in patient pathways and health services.

We report variable use of the EuroScan International Network web-based database as a means of collaboration and dissemination: some agencies did not use the database to share information, and several of those that did use it added only a small percentage of their final outputs. Barriers to current and future collaboration revolved around different system aims, purposes, and requirements; a lack of staff or finance; a lack of opportunity; language differences; and restrictions on dissemination, such as required system confidentiality. Some of these barriers may be insurmountable, especially in the financial situation in which many health systems now find themselves.

In summary, the systems had common purposes, overlapping time frames for identification, and similar filtration criteria, but variable commitment to sharing and collaboration.

Strengths and Limitations

Although not all EuroScan International Network members took part in the study, the Network is the largest network of government-related EAA systems for health technologies, and these results reflect the global spread of its member agencies. It is unlikely that substantially different experiences and methods have been missed, although some details of how methods are applied in the nonresponding systems may differ. The interviews in 2012 were resource intensive, but they were a good way to clarify some of the answers given in the initial questionnaires and, for agencies that had not responded to the initial questionnaire, a means of gathering information. The study covered both the strategic context and the organization of EAA systems and provides a good starting point for agencies and organizations that wish to establish new EAA systems or review existing ones.

Comparison with other Studies

The results presented here do not differ substantially from those of the 2008–09 survey (4;7). This is not unexpected, because EuroScan membership has not changed significantly in the last 5 years. We do, however, report more detail on the time frames for the principal EAA activities of early identification and assessment. This information can help members understand each other's systems and may indicate areas that are ripe for increased collaboration.

Conclusions and Policy Implications

Although many barriers to collaboration were identified, the majority of agencies were supportive, in principle, of considering increased collaboration, either across the whole EuroScan International Network or between individual member agencies. Many agencies, however, reported that they were not in a stable position and/or that their work was not fully supported by policy makers or funders in their own countries. This has led directly to fluctuating levels of available personnel, a significant obstacle to increased collaboration, which requires time, understanding, energy, continuity, and finance.

Despite differences in the detailed identification processes, in discussions following the survey members recognized identification as the most feasible phase in which to develop formal collaboration. Indeed, from the viewpoint of reducing duplication, identification is the most obvious area where closer collaboration could bring benefits. However, full use of the principal means of information exchange, the EuroScan web-based database, remains variable and is reported as difficult for many agencies. Some of the reported constraints could be overcome with increased support, and any increase in information exchange would benefit all members and their associated health systems. One aspect of closer collaboration not mentioned by any member in the survey is trust. If truly collaborative identification of emerging technologies were piloted between countries, with different agencies responsible for specific identification sources, then no agency would be in complete control of the supply of information it needs to perform its own role or fulfil its contract. This would create uncertainty about the ability to deliver timely and comprehensive information, and may be an important reason why a cross-national collaborative identification program has not progressed.

EAA systems are well placed to support early dialogue between manufacturers, evaluators and health services, by identifying technologies and their developers before evidence has been generated. Given their role and place in decision making, continuing investment in these systems is important.

CONFLICTS OF INTEREST

The authors do not report any conflicts of interest.

References

- 1. Gutierrez-Ibarluzea I, Simpson S, Benguria-Arrate G. Early awareness and alert systems: An overview of EuroScan methods. Int J Technol Assess Health Care. 2012;28:301–307.

- 2. EuroScan International Network. A toolkit for the identification and assessment of new and emerging health technologies. October 2014. http://euroscan.org.uk/methods.

- 3. Murphy K, Packer C, Stevens A, Simpson S. Effective early warning systems for new and emerging health technologies: Developing an evaluation framework and an assessment of current systems. Int J Technol Assess Health Care. 2007;23:324–330.

- 4. Simpson S, Packer C. A comparative analysis of early awareness and alert systems. Proceedings of the 6th Annual Meeting of Health Technology Assessment International (HTAi), Singapore, 2009. Ann Acad Med Singapore. 2009;38(Suppl 6): Abstract P4.2;S71.

- 5. Simpson S, on behalf of the EuroScan collaboration. A comparative analysis of early awareness and alert systems. Poster presented at the 6th Annual Meeting of Health Technology Assessment International (HTAi), Singapore, 2009.

- 6. Wild C, Langer T. Emerging health technologies: Informing and supporting health policy early. Health Policy. 2008;87:160–171.

- 7. Simpson S, Hiller J, Gutierrez-Ibarluzea I, et al. A toolkit for the identification and assessment of new and emerging health technologies. 2009. Birmingham: EuroScan; ISBN: 0704427257 / 9780704427259.

- 8. Sun F, Schoelles K. A systematic review of methods for health care technology horizon scanning (Prepared by ECRI Institute under Contract No. 290-2010-00006-C.) AHRQ Publication No. 13-EHC104-EF. Rockville, MD: Agency for Healthcare Research and Quality; August 2013. http://effectivehealthcare.ahrq.gov/ehc/products/393/1679/Horizon-Scanning-Methods-Report_130826.pdf (accessed December 4, 2013).

- 9. Douw K, Vondeling H, Eskildsen D, Simpson S. Use of the internet in scanning the horizon for new and emerging health technologies: A survey of agencies involved in horizon scanning. J Med Internet Res. 2003;5:e6.

- 10. Douw K, Vondeling H. Selection of new health technologies for assessment aimed at informing decision making: A survey among horizon scanning systems. Int J Technol Assess Health Care. 2006;22:177–183.

- 11. Douw KKP. Horizon scanning of new health technologies: Some issues in identification and selection for assessment [PhD thesis]. Institute of Public Health, University of Southern Denmark; 2008.

- 12. Ibargoyen-Roteta N, Gutierrez-Ibarluzea I, Benguria-Arrate G, Galnares-Cordero L, Asua J. Differences in the identification process for new and emerging health technologies: Analysis of the EuroScan database. Int J Technol Assess Health Care. 2009;25:367–373.