Abstract

Background

The Accreditation Council for Graduate Medical Education has begun to evaluate teaching institutions' learning environments with Clinical Learning Environment Review visits, including trainee involvement in institutions' patient safety and quality improvement efforts.

Objective

We sought to address the dearth of metrics that assess trainee patient safety perceptions of the clinical environment.

Methods

Using the Hospital Survey on Patient Safety Culture (HSOPSC), we measured resident and fellow perceptions of patient safety culture in 50 graduate medical education programs at 10 hospitals within an integrated health system. As institution-specific physician scores were not available, resident and fellow scores on the HSOPSC were compared with national data from 29 162 practicing providers at 543 hospitals.

Results

Of the 1337 residents and fellows surveyed, 955 (71.4%) responded. Compared with national practicing providers, trainees had lower perceptions of patient safety culture in 6 of 12 domains, including teamwork within units, organizational learning, management support for patient safety, overall perceptions of patient safety, feedback and communication about error, and communication openness. Higher perceptions were observed for manager/supervisor actions promoting patient safety and for staffing. Perceptions equaled national norms in 4 domains. Perceptions of patient safety culture did not improve with advancing postgraduate year.

Conclusions

Trainees in a large integrated health system have variable perceptions of patient safety culture, as compared with national norms for some practicing providers. Administration of the HSOPSC was feasible and acceptable to trainees, and may be used to track perceptions over time.

Editor's Note: The online version of this article contains a table describing the surveyed residency and fellowship programs, questions addressed in each patient safety domain, and the survey instrument used in the study.

Introduction

In the United States, 25 000 new physicians enter graduate medical education each year in a variety of teaching hospitals. Training in a hospital with better outcomes is associated with significantly better outcomes observed in practice 20 years later.1 In an effort to promote quality care, the Accreditation Council for Graduate Medical Education Next Accreditation System2 includes an enhanced focus on resident and fellow involvement in patient safety and quality improvement.

Currently, cross-sectional analyses of trainees' perceptions of the clinical learning environment are lacking. We undertook a cross-sectional analysis of patient safety culture (PSC) at a large sponsoring institution. We hypothesized that trainees would have lower perceptions of PSC than practicing physicians. By measuring PSC, training programs can establish metrics of trainee perception and adopt measures to better integrate trainees into the infrastructure of sponsoring institutions.

Methods

Setting and Participants

The Agency for Healthcare Research and Quality (AHRQ) Hospital Survey on Patient Safety Culture (HSOPSC) measures patient safety perceptions in 12 domains and incorporates 2 outcome measures.3 The survey was administered anonymously to trainees in 50 residency and fellowship programs at 10 hospitals within our integrated health system (provided as online supplemental material). To ensure confidentiality, we excluded 49 programs with fewer than 4 trainees. The survey was administered electronically using SurveyMonkey in May and June of 2013. Trainees were sent an e-mail 3 times during the study period and were asked to complete the survey only once. Completion of the survey was encouraged by program directors and program coordinators.

The HSOPSC utilizes 3 to 4 questions in each of 12 patient safety domains (provided as online supplemental material). Questions are agreement questions (responses ranging from “Strongly disagree” to “Strongly agree”) or frequency questions (“Never” to “Always”) using a 5-point Likert scale. The survey also uses 2 single-item outcome measures about the number of events reported (defined as errors of any type, regardless of whether they result in patient harm) and the overall patient safety grade (“Excellent” to “Failing”). Previous analyses have shown that all 12 dimensions had acceptable levels of internal consistency, but lack association with patient outcomes.4,5 To create publicly accessible benchmark data, the survey was administered to 108 621 health care workers from 382 hospitals across the United States between October 2004 and July 2006.6 The AHRQ publishes annual national comparison data.7
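To illustrate how 5-point Likert responses can be turned into a domain score, the following minimal sketch assumes a percent-positive convention: for each item, the share of answered responses in the 2 most favorable categories, averaged across the items of a domain. The coding of responses as 1 to 5, the treatment of negatively worded items, the function names, and the example data are all assumptions made for illustration; the published AHRQ scoring guidelines remain the authoritative description.

```python
from statistics import mean

# Assumed coding: 1 = "Strongly disagree"/"Never" ... 5 = "Strongly agree"/"Always".
POSITIVE_CODES = {4, 5}
NEGATIVE_CODES = {1, 2}


def percent_positive(responses, negatively_worded=False):
    """Percentage (0-100) of answered responses that are favorable for one item.

    Missing answers are represented as None and are ignored.
    For a negatively worded item, disagreement counts as favorable.
    """
    answered = [r for r in responses if r is not None]
    if not answered:
        raise ValueError("item has no answered responses")
    favorable = NEGATIVE_CODES if negatively_worded else POSITIVE_CODES
    return 100.0 * sum(r in favorable for r in answered) / len(answered)


def domain_score(items):
    """Average the item-level percent-positive values within one domain."""
    return mean(percent_positive(resp, neg) for resp, neg in items)


# Hypothetical domain with 3 items; the third item is negatively worded.
example_domain = [
    ([5, 4, 3, 2, 4], False),
    ([4, 4, None, 5, 1], False),
    ([1, 2, 2, 4, 3], True),
]
score = domain_score(example_domain)
print(f"Domain score: {score:.1f}% positive")
```

Averaging item-level percentages, rather than pooling all responses, gives each question equal weight even when some questions have more missing answers than others.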

The HSOPSC has been previously tested on and adapted for residents and fellows.8 The adaptations included adding a definition of event reporting. The word staff was replaced throughout the survey with the phrase resident/fellow, the words hospital work area and unit were changed to hospital, and agency/temporary staff was clarified to mean moonlighters or cross-covering physicians. The term manager was changed to program director. Since trainees may work at several hospitals, we defined their “unit” as the hospital in which they spent the majority of their time. Finally, we added a demographics section and asked whether trainees had education on patient safety and quality improvement in their programs. Otherwise, we maintained the question format, question order, and response options of the HSOPSC (provided as online supplemental material).

This study was declared exempt by the University of Pittsburgh Institutional Review Board.

Analysis

We compared residents' and fellows' PSC perceptions to national practitioners representing a mix of practicing physicians, resident physicians, physician assistants, and nurse practitioners from 543 hospitals and 29 162 respondents. At the time of our study, 2013 national comparisons were not available, and comparisons were made to 2012 responses.

Guidelines for calculating HSOPSC domain scores are published.9 When comparing trainees to national practitioners, the AHRQ holds that a PSC score that is 5 percentage points greater than the national average signifies better PSC. Similarly, a PSC score that is 5 percentage points less than the national average signifies worse PSC.6 We also compared domain scores by postgraduate year level through a t test. For all statistical analyses, we used Microsoft Excel version 2010 for Windows and Minitab 16.
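The 5-percentage-point comparison rule described above can be sketched as follows. This is an illustration only: the function name, the category labels, and the example scores are hypothetical and are not study data, and a difference of exactly 5 points is treated as meaningful, in line with the "5% or greater" convention.

```python
THRESHOLD = 5.0  # percentage points, per the comparison rule described in the text


def compare_to_benchmark(trainee_score, national_score, threshold=THRESHOLD):
    """Classify a trainee domain score relative to the national average.

    Returns "higher" or "lower" when the absolute difference is at least
    `threshold` percentage points, and "similar" otherwise.
    """
    diff = trainee_score - national_score
    if diff >= threshold:
        return "higher"
    if diff <= -threshold:
        return "lower"
    return "similar"


# Hypothetical domain scores (percent positive): (trainee, national).
example_scores = {
    "Domain A": (80, 74),
    "Domain B": (60, 70),
    "Domain C": (72, 75),
}

classification = {
    domain: compare_to_benchmark(trainee, national)
    for domain, (trainee, national) in example_scores.items()
}
for domain, label in classification.items():
    print(f"{domain}: {label}")
```

A fixed threshold of this kind is a descriptive benchmark rather than a statistical test, so it does not account for sample size or variability within either group.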

Results

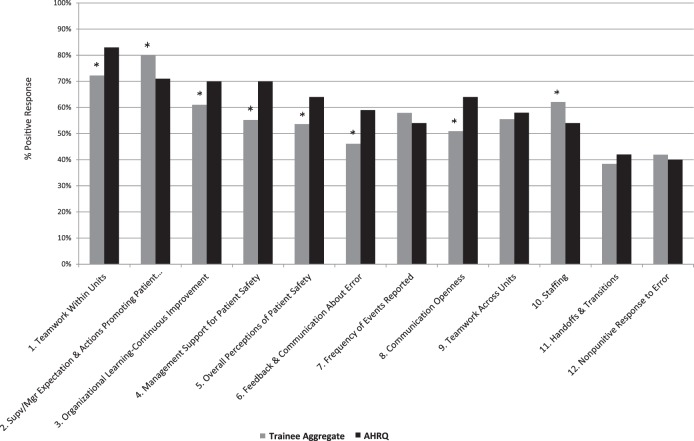

The survey was administered to 1337 residents and fellows in 50 training programs at 10 hospitals, with a response rate of 71.4% (955 of 1337). A comparison of resident and fellow PSC scores to national practitioners is shown in figure 1. In 2 domains, residents and fellows had higher PSC scores than the national practitioner sample. Trainees gave higher scores for (1) supervisor/manager expectations and actions promoting patient safety, and (2) staffing. Residents and fellows had lower PSC scores in 6 domains compared with the national data: (1) teamwork within units, (2) organizational learning–continuous learning, (3) management support for patient safety, (4) overall perceptions of patient safety, (5) feedback and communication about error, and (6) communication openness. PSC scores equaled national data in the 4 remaining domains. Perceptions of PSC did not change with advancing postgraduate year level.

FIGURE 1.

Comparison of 12 Patient Safety Culture Domains, Trainee Aggregate Compared to AHRQ

Abbreviations: AHRQ, Agency for Healthcare Research and Quality; Supv, supervisor; Mgr, manager. Note: All responses from 955 trainees at 10 hospitals were aggregated and compared with AHRQ national data representing 29 162 respondents from 543 hospitals, who defined their titles as practicing physician, resident physician, physician assistant, or nurse practitioner. The AHRQ considers a 5% absolute difference in each patient safety culture domain score to be clinically significant. Asterisks identify domains with a 5% or greater absolute difference.

The HSOPSC incorporates 2 outcome measures. Trainees gave their training programs an overall patient safety grade of “Very good” (mean score 1.96; SD = 0.76), and their hospitals a slightly lower overall patient safety grade of “Very good” (mean score 2.17; SD = 0.75; P < .001). Fifty-eight percent (554 of 955) of respondents stated that they had not reported a patient safety event in the preceding 12 months, and the majority (84.2%, 804 of 955) had participated in educational activities on patient safety. For each training program we generated individual reports that highlighted strengths and weaknesses (figure 2).

FIGURE 2.

Individual Training Program's Patient Safety Culture Survey Results

Abbreviations: UPMC, University of Pittsburgh Medical Center; CI, Continuous Improvement. Note: Such reports are used by program directors to lead discussions with trainees and faculty members.

Discussion

We observed some variable perceptions of PSC in trainees compared with a national sample of practicing providers, with trainees having lower overall perceptions of PSC. We believe there are multiple explanations, including trainee day-to-day patient care responsibilities that may be disconnected from hospital administration efforts to implement patient safety measures. As new providers, trainees lack knowledge of how hospital practice has evolved over time, and trainees may not appreciate systemic changes as promoting patient safety when these are not clearly labeled as patient safety initiatives. Finally, trainee perceptions of PSC have not been widely published, and it is challenging to conclude whether lower perceptions are a phenomenon of being a trainee or a reflection of institutional shortcomings.

Regardless of the explanation of lower PSC, trainee perceptions are highly valuable. In our institution, we generated program-specific PSC reports to debrief each training program on its individual strengths and weaknesses. Survey results are used by program directors to engage trainees, faculty, and leadership in discussions about trainee perceptions of priority patient safety problems. Based on survey results, our hospitals are now inviting trainees to root-cause analyses and hospital committees in which errors are discussed.

Our study has limitations that warrant comment. The national AHRQ data are compiled from a mix of practicing physicians, trainees, physician assistants, and nurse practitioners, and the percentage of responding trainees is not reported. We reported lower PSC scores in our trainees compared with national data and speculate this is due to the circumstances of being a learner. We hypothesized that lower scores reflect that trainees are still accumulating knowledge and experience. However, without local comparative data we cannot exclude the possibility that our institution has worse PSC. Beginning in 2014, we are surveying trainees and staff biyearly to perform direct comparisons. A second limitation reflects controversy regarding correlation of the HSOPSC survey with patient outcomes. One multicenter study showed that higher PSC scores were associated with lower readmission rates for heart failure and myocardial infarction.10 However, other work suggests no relationship between PSC and outcomes.11 A final limitation relates to survey question interpretation. The HSOPSC has not been used extensively with trainees, and trainees may interpret survey questions differently than practicing physicians.

Conclusion

Trainees gave their training programs “Very good” scores for patient safety, but had lower perceptions of PSC compared with national practitioners. Measuring PSC provides a useful baseline measurement and facilitates targeted initiatives that improve trainee integration into the patient safety and quality improvement infrastructure of their institutions. In addition, measuring PSC provides metrics by which training programs can track their progress to understand if educational and operational changes affect perceptions.

Acknowledgments

The authors would like to thank Karen S. Navarra, Cindy A. Liberi, and Nicole Pantelas for their assistance with survey design and collection. They would also like to thank W. Dennis Zerega for his thoughtful review of an earlier version of the manuscript.

Footnotes

Gregory M. Bump, MD, is Associate Professor of Medicine, University of Pittsburgh School of Medicine and University of Pittsburgh Medical Center; Jaclyn Calabria, MSc, is Administrative Fellow, University of Pittsburgh Medical Center; Gabriella Gosman, MD, is Associate Professor, Department of Obstetrics, Gynecology, and Reproductive Sciences, University of Pittsburgh School of Medicine and University of Pittsburgh Medical Center; Catherine Eckart, MBA, is Associate Designated Institutional Officer and Executive Director, Graduate Medical Education, Stony Brook Medicine; David G. Metro, MD, is Professor of Anesthesiology, University of Pittsburgh School of Medicine and University of Pittsburgh Medical Center; Harish Jasti, MD, is Associate Professor of Medicine, Icahn School of Medicine at Mount Sinai; Julie B. McCausland, MD, is Assistant Professor, Department of Emergency Medicine, University of Pittsburgh School of Medicine and University of Pittsburgh Medical Center; Jason N. Itri, MD, PhD, is Assistant Professor, Department of Radiology, University of Cincinnati Medical Center; Rita M. Patel, MD, is Professor of Anesthesiology and Associate Dean for Graduate Medical Education, University of Pittsburgh School of Medicine, and Designated Institutional Official, University of Pittsburgh Medical Center; and Andrew Buchert, MD, is Assistant Professor of Pediatrics, University of Pittsburgh School of Medicine and University of Pittsburgh Medical Center.

Funding: The authors report no external funding source for this study.

Conflict of interest: The authors declare they have no competing interests.

References

- 1. Asch DA, Nicholson S, Srinivas S, Herrin J, Epstein AJ. Evaluating obstetrical residency programs using patient outcomes. JAMA. 2009;302(12):1277–1283. doi: 10.1001/jama.2009.1356.

- 2. Nasca TJ, Philibert I, Brigham T, Flynn TC. The next GME accreditation system—rationale and benefits. N Engl J Med. 2012;366(11):1051–1056. doi: 10.1056/NEJMsr1200117.

- 3. Sorra J, Nieva V. Hospital Survey on Patient Safety Culture. Rockville, MD: US Department of Health and Human Services, Agency for Healthcare Research and Quality; 2004. AHRQ Publication No. 04-0041. http://www.ahrq.gov/professionals/quality-patient-safety/patientsafetyculture/hospital/userguide/hospcult.pdf. Accessed November 24, 2014.

- 4. Sorra JS, Dyer N. Multilevel psychometric properties of the AHRQ hospital survey on patient safety culture. BMC Health Serv Res. 2010;10(199):1–13. doi: 10.1186/1472-6963-10-199.

- 5. Colla JB, Bracken AC, Kinney LM, Weeks WB. Measuring patient safety climate: a review of surveys. Qual Saf Health Care. 2005;14(5):364–366. doi: 10.1136/qshc.2005.014217.

- 6. Sorra J, Nieva V, Famolaro T, Dyer N. Hospital Survey on Patient Safety Culture: 2007 Comparative Database Report. Rockville, MD: US Department of Health and Human Services, Agency for Healthcare Research and Quality; 2007. AHRQ Publication No. 07-0025. http://archive.ahrq.gov/professionals/quality-patient-safety/patientsafetyculture/hospital/2007/hospsurveydb1.pdf. Accessed November 24, 2014.

- 7. Agency for Healthcare Research and Quality. Hospital Survey on Patient Safety Culture. Chapter 6. Comparing your results. http://www.ahrq.gov/professionals/quality-patient-safety/patientsafetyculture/hospital/2012/hosp12ch6.html. Accessed November 20, 2014.

- 8. Jasti H, Sheth H, Verrico M, Perera S, Bump G, Simak D, et al. Assessing patient safety culture of internal medicine house staff in an academic teaching hospital. J Grad Med Educ. 2009;1(1):139–145. doi: 10.4300/01.01.0023.

- 9. Agency for Healthcare Research and Quality. User's guide: Hospital Survey on Patient Safety Culture. http://www.ahrq.gov/professionals/quality-patient-safety/patientsafetyculture/hospital/userguide/index.html. Accessed November 20, 2014.

- 10. Hansen LO, Williams MV, Singer SJ. Perceptions of hospital safety climate and incidence of readmission. Health Serv Res. 2011;46(2):596–616. doi: 10.1111/j.1475-6773.2010.01204.x.

- 11. Rosen AK, Singer S, Zhao S, Shokeen P, Meterko M, Gaba D. Hospital safety climate and safety outcomes: is there a relationship in the VA? Med Care Res Rev. 2010;67(5):590–608. doi: 10.1177/1077558709356703.
