1. Introduction

Priorities for greater accountability, using strong scientific evidence to demonstrate what works, have raised the bar for what evaluations should yield in an era of limited resources (Schweigert, 2006). Training is the most important and frequently used human resource development activity and organizations devote a substantial percentage of their budgets to it (Lee-Kelley & Blackman, 2012; Owens, 2006). According to the American Society for Training and Development (ASTD), organizations in the United States spent approximately $156.2 billion on employee learning and development activities in 2011 (Miller, 2012).

Evaluating the effectiveness of training has become critical, but evaluation is not widely practiced by organizations. Reasons for not evaluating training activities include difficulties in identifying and measuring outcomes and a lack of expertise in evaluation techniques (Karim, Huda, & Khan, 2012; Twitchell, Holton, & Trott, 2000). When evaluations are conducted, they often have a limited focus on participant satisfaction and future intention. Brinkerhoff (2006a) commented on this issue, stating that the use of rigorous evaluation methods is, for most organizations, difficult, time intensive, impractical, and costly.

We agree with the premise that rather than the training itself being the object of evaluation, the focus should be on how well organizations use training (Nanda, 2009; Brinkerhoff, 2003) and maximize outcomes. Among the Top 100 U.S. companies, approximately 92% measure training effectiveness through Kirkpatrick’s four-level evaluation (Kirkpatrick & Kirkpatrick, 2006) and 67% measure through return on investment (Wu, 2007). However, Rowden (2001) has noted an increase in the use of alternative approaches to measure the success of training programs. One of the approaches he identified was the Success Case Method (SCM) (Brinkerhoff, 2006b; 2003).

SCM is a process to evaluate the effects of, and factors that are associated with, the effective application of new skills by focusing on characteristics of the most successful cases. This approach provides information about what worked, what did not, what results were achieved, and what can be done to get better results in the future. Brinkerhoff (2003) identifies five steps to be followed in planning and conducting a success case study: (1) focus and planning of a SCM study; (2) development of an impact model that defines the expected results of the intervention; (3) identification of cases as best (i.e., success cases) or worst (i.e., non-success cases) using survey methods; (4) documentation of success and non-success cases using semi-structured interview techniques; and (5) communication of SCM findings, conclusions, and recommendations to clients and interested stakeholders.

The SCM is used to determine in a fast and simple way which parts of an initiative work well enough to be left alone, which parts need revision, and which should be abandoned. The method seeks to understand why things worked and why they did not (Brinkerhoff, 2003). SCM, as an evaluation approach, has been widely adopted by major corporations with great success (Brinkerhoff, 2006b). Benefits identified by companies using SCM include leveraging evaluation results to increase training effectiveness and the ability to make a convincing business argument to senior management for investing in training (Brinkerhoff, 2008). Although the SCM has been designed for and used predominantly in profit-based settings (Brinkerhoff, 2003), it also has been used in food security projects, in a non-profit foundation, and in educational settings (Coryn et al., 2009; Pine, 2006). Moreover, the approach, with the addition of a time-series design, was used in a social service context to evaluate a program aimed at reducing homelessness and unemployment (Coryn et al., 2009). Benefits identified for adding a time-series design element to traditional SCM include the ability to identify growth and decay and the reasons for them, the ability to identify long-term program effects and for whom and why those effects are, or are not, sustained, and the ability to provide useful feedback to the program at various points during the evaluation (Coryn et al., 2009).

SCM has been used in health care settings to measure the value of training and to report that value back to the organization (Preston, 2010). In addition, Olson and colleagues (2011) used the approach to improve continuing medical education activities that contributed to significant changes in tobacco cessation practice in nine outpatient care practices.

The purpose of this article is to describe the use of SCM to advance public health goals. Specifically, we used SCM to determine the usefulness of two trainings sponsored by the Office of Community Research and Engagement (OCRE) of the Puerto Rico Clinical and Translational Research Consortium (PRCTRC). The PRCTRC was established to address the most prevalent health problems in Puerto Rico by advancing the translation of knowledge from academic to community settings as a means of reducing health disparities. OCRE acts as a vehicle for putting the mission of the Consortium into action through capacity-building activities geared toward establishing stable community-academic partnerships. Efforts toward building this capacity included sponsoring training workshops that targeted academic researchers and members of community-based organizations.

2. Methods

2.1. The trainings

During the summer of 2011, OCRE sponsored two intensive workshops: "The Atlas.ti Program and its Applications in the Analysis of Qualitative Data" and "Intervention Mapping Approach to Planning Health Promotion Programs". The first was a three-day workshop that targeted academic researchers. Academic researchers interested in participating in the training were asked to provide information about their research interests and whether they were conducting or planning to conduct a research study that included qualitative data. Participants were selected based on three considerations: (1) whether they were part of one of the Consortium partner institutions, (2) whether they were conducting research on a health topic of interest to the Consortium, and (3) whether they were conducting or planning to conduct a research study that included qualitative data. The workshop content objectives were to: (a) develop a complete understanding of the Atlas.ti program and its features, (b) learn how to use Atlas.ti in a wide variety of qualitative projects, (c) become acquainted with the steps for analyzing data from a research project, and (d) operate all functions of the software (individual coaching and practice). At the end of the training, each participant received a free one-year license for the Atlas.ti program to ensure that access to the software would not be a barrier to its continued use.

The second training was on Intervention Mapping, a week-long course that targeted researchers and members of community-based organizations. Researchers interested in participating were asked to provide information about their research interests, and were selected with the expectation that they would conduct a research study on one of the health topics of interest to the Consortium. Members of community-based organizations were selected if they worked with one of the health topics of interest to the Consortium. Intervention Mapping is a valuable evidence-based tool for planning and developing health promotion programs (Bartholomew et al., 2011). At the end of the training, participants received a copy of the book Planning Health Promotion Programs: An Intervention Mapping Approach, 3rd edition, so that they would have a ready reference to guide them in current and future projects.

Participant satisfaction with the trainings, their content, presenter effectiveness, and increases in knowledge were evaluated using survey instruments developed by the Tracking and Evaluation (TEK) core of the PRCTRC. Formative evaluation of the Atlas.ti workshop included a survey administered at the end of the workshop, along with a pre- and post-test designed to measure increases in knowledge and skills. The Intervention Mapping formative evaluation included a self-administered questionnaire that assessed the content value of the course and participants’ satisfaction at the end of each day of the training.

IRB approval (A1250111) was obtained for the evaluation of the trainings and the follow-up of participants.

2.2. Success Case Method

2.2.1 Step 1: Focusing and Planning the Success Case Study

During OCRE’s weekly meetings prior to the trainings, the staff designed and planned the study, taking into consideration the purpose and stakeholders of the study, grant deadlines, and resources (Table 1).

Table 1.

Success Case Method Design

| The Program | The SCM Intent | The Design |
|---|---|---|
| A university consortium that has invested in two trainings, targeting researchers and members of community-based organizations. | Complement the self-assessment and satisfaction evaluation to determine the usefulness of the trainings and the characteristics of the success cases in order to gain an understanding of its effectiveness, identify areas that would improve the training, and develop more efficient recruitment strategies for future training initiatives. | |

2.2.2 Step 2: Creating an Impact Model that Defines Success

Brinkerhoff (2003) defines the impact model as a description of what success would look like if the training is working. For this application, the development of the impact model required us to think ‘outside the box’ of corporate priorities and to conceptualize what success would mean from a public health and translational research perspective. Our decisions were guided by two mandates with which our training initiative had to be aligned. The first was that federal government agencies have prioritized assessing the effectiveness of, and demonstrating accountability for, the initiatives they support. The second relates to the fact that OCRE serves as a vehicle of the PRCTRC to advance its mission. Through these and other training initiatives, OCRE expects to build sustainable translational research capacity that can potentially help eliminate health disparities in Puerto Rico communities.

In a review of evaluation reports and literature, Schweigert (2006) identified three different meanings of effectiveness used for assessing community initiatives: (a) increased understanding of the dynamics of communities and interventions, (b) accountability, and (c) demonstrated causal linkages. Although these meanings overlap considerably, we chose to focus predominantly on the first meaning of effectiveness when developing success criteria for the Atlas.ti workshop. Two criteria were established: (1) use of Atlas.ti for research purposes to increase understanding of the dynamics of communities and interventions through analysis of existing qualitative data or development of new studies, and (2) use of Atlas.ti and/or the qualitative data analysis skills gained through the training a minimum of two times (not for practice) during the assessment period. To be considered a success case, the trainee had to meet both conditions.

The approach for Intervention Mapping was similar, since this tool already takes into consideration the different meanings of effectiveness used for assessing community initiatives described by Schweigert (2006). More specifically, Intervention Mapping is an evidence-based tool that describes the process of planning and developing health promotion programs in six steps: (a) needs assessment, (b) matrices for program objectives, (c) selection of theory-based intervention methods and strategies for change, (d) production of program components, (e) planning for adoption, implementation, and sustainability, and (f) evaluation (Bartholomew et al., 2011). Based on the interests of all parties and using Schweigert’s meanings of effectiveness as a reference, we developed success criteria for the Intervention Mapping course. These were (1) using the tool, or some aspects of it, in the development or planning of a health program or intervention; and (2) using the tool in the participant’s institution or main work setting. To be considered a success case, the trainee had to meet both conditions. The second criterion was established because Intervention Mapping was a week-long training workshop that required institutions to release participants from normal duties to attend. Given this investment, it was expected that participants would apply the training in their work.
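
The two sets of success criteria can be read as simple conjunctive rules. The following is a minimal sketch of that logic only, not the instrument used in the study; the record types and field names are hypothetical and were introduced here for illustration.

```python
from dataclasses import dataclass


@dataclass
class AtlasTiFollowUp:
    """Hypothetical record of one Atlas.ti trainee's follow-up answers."""
    used_for_research: bool  # criterion 1: used on existing data or a new study
    non_practice_uses: int   # criterion 2: uses that were not merely practice


@dataclass
class InterventionMappingFollowUp:
    """Hypothetical record of one Intervention Mapping trainee's answers."""
    used_in_program_planning: bool  # criterion 1: used in planning a program
    used_in_work_setting: bool      # criterion 2: used at the main work setting


def is_atlas_ti_success(record: AtlasTiFollowUp) -> bool:
    # Both conditions must hold; practice-only use never counts toward the minimum.
    return record.used_for_research and record.non_practice_uses >= 2


def is_im_success(record: InterventionMappingFollowUp) -> bool:
    # Both conditions must hold.
    return record.used_in_program_planning and record.used_in_work_setting
```

Under this reading, a trainee who used Atlas.ti only to practice is a non-success case even if the software was opened many times, which matches how practice-only users are reported separately in the results.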

2.2.3 Step 3: Differentiating Success and Non-success Cases

The third step of SCM identifies potential success cases in which trainees have been successful in applying knowledge and skills developed during their training. Three months after each workshop, participants were contacted and asked to complete a short survey (8 items) designed to assess whether the training had been beneficial to them and whether they were using the Atlas.ti program or Intervention Mapping methods in their work. Participants responding that they had not yet used the training were asked about the barriers they faced and whether they needed any help or support from OCRE staff to better incorporate what they had learned from the workshop.

2.2.4 Step 4: Documenting Success Cases

At six months post-training, we conducted follow-up interviews with each participant to confirm and document the characteristics of success and non-success cases. Given that both sessions were targeted trainings, the number of participants was limited. Thus, we departed from typical Success Case Methodology, which focuses on interviewing participants at the extremes of the success continuum, and instead interviewed all participants.

Follow-up interviews took approximately 20 minutes. Participants clarified exactly which parts of the training they had used and for what purposes, when they had used them, and what specific outcomes were accomplished. The follow-up was also conducted to identify changes, specifically whether any of the previously identified non-success cases had started using the software, the reasons for this change, and how the tool was helping them achieve their objectives.

2.2.5 Step 5: Communicating Findings

A report that included conclusions and recommendations was developed and completed by the Tracking and Evaluation core of the PRCTRC, and the results were shared with PRCTRC stakeholders. The report included recommendations for recruitment strategies intended to increase the impact of future trainings. The study was also presented at local and international health-related conferences.

3. Results

3.1.1 Atlas.ti Program and its Applications

Formative evaluation revealed nearly uniform high satisfaction with the training, accompanied by clear increases in knowledge on pre- and post-test measurements. According to the Evaluation Report of the workshop (TEK, 2011), about 94% (n=14) of the participants strongly agreed or agreed that the training improved their research skills in qualitative data analysis, was relevant, was useful, and fulfilled their expectations. At pre-test, none of the participants identified themselves as having specific or expert knowledge of the software and how to use it, while at post-test 93.4% (n=14) indicated such a level of knowledge. Additionally, the evaluation report established that 87.0% (n=13) of the participants indicated at the end of the workshop that they had gained specific knowledge about the applicability of the Atlas.ti program to the qualitative data analyses of their research projects.

A total of 14 participants were successfully contacted by OCRE staff for a three-month follow-up; at the six-month follow-up, all but one of the participants (response rate: 93.8%) were contacted. A majority (80.0%, n=12) were affiliated with one of the Consortium partner institutions and were female. About half were faculty and half were graduate students or research staff.

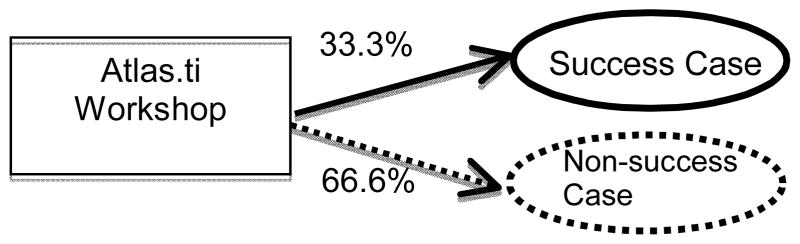

During the three-month follow-up, five participants (33%) reported having used Atlas.ti training content according to the established success criteria (Figure 1). Moreover, two additional participants reported using the program to practice the skills acquired during training. Helpful elements of the training identified by participants included instructor expertise and the educational materials provided during the training. At the six-month follow-up, none of the non-success cases had started using the software.

Figure 1.

Success and Non-Success Case Rates

An important factor identified among success cases was that participants had existing data on which to use the software and apply the training. Atlas.ti software was used to organize and categorize focus-group data, clinical interviews, and other in-depth interviews. Among the five success cases identified at follow-up, four reported using the program on several occasions and all reported the use of the educational materials provided during the training.

One of the most important and valuable findings identified among the success cases was that two had trained members of their research staff to assist them in the data analysis process and three had completed the data analysis phase of their research studies and were planning to publish their results.

Example case

Research assistant

The participant indicated that she used the software to organize and categorize data previously gathered from two focus groups with HIV-positive patients from a Department of Health STD clinic. The purpose of the focus groups was to explore participants' knowledge and perceptions of the human papillomavirus and the diseases and symptoms it may cause. Right after the training, the participant started to use the software and had used it more than ten times, about two days a week, approximately four hours each time. For this participant, important aspects of the training that helped her use the software were its content and the educational materials offered. These were used often as a reference, especially in the coding process. The participant also mentioned as helpful that the training was a hands-on session, allowing her to practice with the focus group data. Although the participant mentioned having no difficulties using the software, she noted that, in comparison with quantitative analysis software she had previously used, Atlas.ti was not a “user friendly tool”. Moreover, the participant emphasized that without the training she would not have been able to use the software and that she and her team planned to publish the findings in a peer-reviewed journal.

Among the non-success cases, a frequently reported barrier to using the software was delays in implementing the study protocol, including delays with IRB approval and with the data collection process. Other reasons for not using the software included not using qualitative data in their research project, the end of funding for their research project, and lack of time. Only one participant expressed no plans to use Atlas.ti in the near future, because they had changed jobs when funding for the research project ended. All of the other participants stated that they planned to use the software in the near future.

3.1.2 Intervention Mapping and its Applications

Formative evaluation revealed high overall satisfaction with each day of the Intervention Mapping training among participants. According to the Evaluation Report of the workshop (TEK, 2011), at least 90% of the participants classified the course as very valuable or valuable for providing important concepts in terms of planning model(s), health education theories, the ecological model used in health promotion, education and health promotion theories, and needs assessment for program development. Meanwhile, all participants reported that the course provided them with a very valuable or valuable experience on important concepts for specifying determinants with theory and evidence and for developing behavior change matrices. Moreover, at least 94% of the participants considered Steps 3 and 4 of the Intervention Mapping model very valuable or valuable: (1) selection of methods and strategies and the design and organization of programs, and (2) scope and sequence of program(s). Finally, participants reported a very valuable or valuable experience related to Steps 5 and 6 of the Intervention Mapping model: (1) plans for adoption and implementation of the program(s) and (2) development of program evaluation.

During the three- and six-month follow-ups, all but one of the participants (19/20) were successfully contacted by OCRE staff (response rate: 95.0%). A majority of participants (73.7%, n=14) were affiliated with the Consortium partner institutions (Table 3).

Table 3.

Percentage distribution of Intervention Mapping trainee characteristics

| Characteristic | N | % |
|---|---|---|
| Gender | | |
| Female | 16 | 84.2 |
| Male | 3 | 15.8 |
| Academic Institution Affiliation | | |
| Consortium partner institutions | 14 | 73.7 |
| Community Based Organization | 3 | 15.8 |
| Other academic institutions | 2 | 10.5 |
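
As a quick arithmetic check, the percentages in Table 3 can be recomputed from the reported counts. The sketch below assumes only the counts shown in the table; the function name `percentage_distribution` is introduced here for illustration.

```python
def percentage_distribution(counts: dict[str, int]) -> dict[str, float]:
    """Convert category counts to percentages rounded to one decimal place."""
    total = sum(counts.values())
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


# Counts as reported in Table 3 (19 contacted participants).
gender = percentage_distribution({"Female": 16, "Male": 3})
affiliation = percentage_distribution({
    "Consortium partner institutions": 14,
    "Community Based Organization": 3,
    "Other academic institutions": 2,
})

print(gender)
print(affiliation)
```

Both groupings sum to the 19 participants contacted at follow-up, so each set of percentages is computed over the same denominator.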

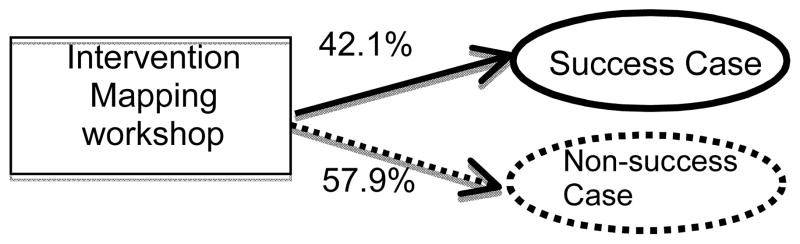

At the three-month follow-up, we determined that six participants had used the Intervention Mapping approach according to the established success criteria. Moreover, during the six-month follow-up, two new participants were identified as success cases (Figure 2). Helpful elements of the course identified by participants included instructor expertise, educational materials, and the facilities: the training was conducted at a local hotel, allowing participants to be in an environment away from their normal work setting where they could focus on the learning process.

Figure 2.

Success and Non-Success Case Rates

Similar to the results for the Atlas.ti training, participants who were already engaged in the design or revision of an intervention at the time they attended the Intervention Mapping training represented success cases. Among the most important and valuable findings was that 63% (5/8) of the success cases implemented or adapted Intervention Mapping approaches in the process of writing a grant. Additionally, two of the success cases had incorporated Intervention Mapping as a theoretical framework for an existing research project.

All success cases had used the book Planning Health Promotion Programs: An Intervention Mapping Approach provided during training. They had also shared the book and principles of the model with research team members.

Example case

Evaluator/Research Associate

Within the past month, the participant had incorporated the model into a proposal seeking external funding. The participant identified instructor expertise, the training content, and the educational materials as important aspects of the training. He added that he often used the training materials as a reference, especially the examples of the change objectives and matrix. The participant also stated that the model fulfilled his expectations and fit perfectly with his previous knowledge about program planning and development. The model was used for the development of an HIV prevention intervention for adolescents aged 14 to 18 years. The participant reported that he had shared the model with his colleagues and that it was well accepted in his institution. Although the participant mentioned having no difficulties using the model, he reported logistical barriers: applying the model was time consuming, and ample time was needed for extended practice.

The main reason given by participants who did not meet our criteria for success cases was the lack of opportunities in their work to apply skills learned during the training. Other non-success case participants reported barriers specifically related to: (1) gaps in understanding the information offered during the training (n=1), (2) the time required to use the model (n=2), (3) lack of funding (n=2), and (4) a lack of fit between Intervention Mapping and their current research effort (n=1).

4. Discussion

In summary, most trained participants were successfully contacted during follow-up to explore their experience with their recently acquired knowledge and resources surrounding the use of Atlas.ti software and the application of Intervention Mapping. A total of 16 of those contacted had used the data analysis tool and/or model either for practice or in their institution or main work setting. Of the 34 participants contacted, 38% (13/34) met the success criteria. The common thread seen among most of them was having an existing qualitative data set (Atlas.ti) or an intervention in the planning and developmental stages (Intervention Mapping). All participants used the program and model as expected and were mostly satisfied with both. Furthermore, some participants reported passing on what they had learned to their staff and colleagues. Although SCM has been most widely used to estimate successes and failures in meeting the goals of for-profit organizations, our findings suggest that SCM is a useful and valuable tool for evaluating public health initiatives. Brinkerhoff's SCM was developed to assess the impact of training activities on organizational goals using case study and storytelling among selected success and non-success groups.

4.1 Limitations and Recommendations

Despite our best efforts to screen potential participants to ensure that we would have groups with a high probability of using the skills acquired during the training, it is clear that the level of readiness among some participants did not match our expectations (we had seats available for the trainings). For the Atlas.ti training, a weakness identified in our recruitment criteria was that we accepted applicants who only had plans to conduct research that included qualitative aspects. This weakness was identified because one of the reasons most often mentioned for not using the software was delays with the research timeline. In the future, it might be advisable to document that potential participants have an existing qualitative data set that can be used to apply the acquired knowledge.

For the Intervention Mapping course, a weakness identified in our recruitment criteria was that the members of community-based organizations were not subject to a rigorous selection process, the only selection criterion being that they work in a health area of interest to the Consortium. Another weakness identified was that our selection criteria for researchers did not require that they be working, or planning to work, on the development of a health intervention. These weaknesses were identified from the difficulties or barriers that participants reported in relation to not having the opportunity to use or practice acquired skills. For future trainings of this type, we plan to use a strategy that includes site visits to potential participants prior to their enrollment.

4.2 Lessons Learned

Our SCM evaluation was complemented by standard formative assessments, such as participant satisfaction, value of the content, presenter effectiveness, and increases in knowledge. If we had considered a formative approach to be sufficient for our evaluation, we would have judged both trainings as overwhelmingly successful. A majority of participants reported being satisfied with the training and its content, and exhibited increased knowledge as measured by self-perception or pre- and post-test assessment (data not shown). We also would have concluded that our recruitment strategy was sound and effective.

In addition to short-term increases in participants’ knowledge, an important role of training evaluation is to determine whether there has been an enhancement of participant skills and a potential to continuously improve performance over time. Thus, assessing training effectiveness is a significant benefit for organizations (Tsang-Kai, 2010). Our three-month follow-up survey focused on use of the training and/or materials as an indicator of success. This enabled us to distinguish participants who had shown increases in knowledge and had a favorable assessment of the training at its completion from those who carried this forward and applied their acquired knowledge and skills in a meaningful context in their work. In this respect, the follow-up could be considered a summative approach.

Follow-up at three months yielded disappointing levels of success from a standard summative evaluation standpoint, with only about one third of participants in both trainings meeting our established success case criteria. If we had stopped our evaluation at this stage, we would have concluded that the training had been only marginally successful. Specifically, although overall satisfaction was high and participants had gained knowledge during the training, these alone were insufficient to ensure that the new knowledge and skills would be used after the training was completed. The follow-up also revealed a possible weakness in our recruitment strategy, which we originally thought was strict. In the future, it might be advisable to document that potential participants have an existing qualitative data set (Atlas.ti) or an intervention in the planning and developmental stages (Intervention Mapping) on which to apply their training.

Although follow-up at six months did not yield different results, except for Intervention Mapping, valuable information was still obtained. For example, additional time does not appear to improve outcomes for non-success cases. The fact that most of the non-success cases had still not applied the training between three and six months supports a highly targeted recruitment strategy to ensure that resources are being used effectively.

SCM (Brinkerhoff, 2003) can be grounded in Diffusion of Innovations theory (Rogers, 2003), in that the evaluation focuses on the most successful cases in a sample. Rogers conceptualizes these as innovators, who are the first persons to adopt an innovation (2.5%), and early adopters, who are social leaders in their field looking to adopt and use new technologies and ideas (13.5%). Combined success cases for both trainings were roughly 38% (13/34). As mentioned previously, a traditional summative evaluation would consider this disappointing. However, SCM would deem this highly successful. The proportion of success cases was more than double the rate predicted by Rogers for innovators and early adopters combined (16%).
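
The comparison with Rogers' adopter categories is simple arithmetic and can be reproduced as follows. This is a sketch under stated assumptions: the per-training denominators (15 for Atlas.ti, 19 for Intervention Mapping) are inferred from the reported percentages and the combined 13/34 figure, and all variable names are introduced here for illustration.

```python
# Rogers' adopter-category shares, as cited in the text (percent of a population).
ROGERS_INNOVATORS_PCT = 2.5
ROGERS_EARLY_ADOPTERS_PCT = 13.5


def rate_pct(successes: int, contacted: int) -> float:
    """Success cases as a percentage of participants contacted."""
    return 100 * successes / contacted


# (success cases, participants contacted); denominators are inferred, see lead-in.
atlas_ti = (5, 15)
intervention_mapping = (8, 19)  # six at three months plus two more at six months

combined_successes = atlas_ti[0] + intervention_mapping[0]
combined_contacted = atlas_ti[1] + intervention_mapping[1]
combined_rate = rate_pct(combined_successes, combined_contacted)

benchmark = ROGERS_INNOVATORS_PCT + ROGERS_EARLY_ADOPTERS_PCT
ratio = combined_rate / benchmark

print(f"Combined: {combined_successes}/{combined_contacted} = {combined_rate:.1f}%")
print(f"Rogers benchmark: {benchmark:.1f}%, ratio: {ratio:.2f}")
```

The ratio exceeds 2, which is the basis for describing the observed proportion as more than double the combined innovator and early-adopter share.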

Our use of SCM enabled us to gather important information about how a training initiative was being used, in what context, how the training had been leveraged to build additional skills, and what outcomes had been achieved. One of the most important findings relates to the degree to which the training is passed on to additional individuals at the participant’s institution, known as reach. A number of success cases reported having shared their new knowledge (Intervention Mapping and/or qualitative data analysis principles, including use of the Atlas.ti software program) with research staff. The scope of our evaluation did not permit follow-up with these individuals. However, a simple count indicates that the reported reach had an impact beyond our expectations for the training initiative and in the near future will likely match, if not exceed, the total original training sample (data not shown).

Based upon the results obtained for success cases using the training to assist in the development of manuscripts for publication and grant proposals, and upon the reach of the training, we conclude that our training initiative was very successful. It is particularly important to emphasize that had we not used SCM, we probably would have concluded that this initiative was not very successful and not worth the investment of resources. Moreover, by using SCM, we have a much better understanding of the pathways that success cases took to enhance their own productivity.

For training initiatives, success often is measured in terms of simple numbers. Were participants satisfied? Did participants gain knowledge and to what degree? Did participants use knowledge and skills gained? Although these and other evaluation questions certainly are relevant, the strength of SCM is that it focuses on impact. It asks the important question: Can the impact of a small number of success cases justify the expenditure of resources on a training initiative even when success in the overall sample is low? In our case, the answer to this question is yes. Moreover, the Diffusion of Innovations theory (Rogers, 2003) suggests that reach from our success cases will continue to expand, as more people at the institutions of success cases take interest in their productivity and what has worked for them.

A second major strength of SCM is that it is neither a time-intensive nor a costly evaluation strategy. By focusing on success cases (innovators and early adopters in a Diffusion of Innovations model), the evaluator significantly reduces the sample for which in-depth data must be obtained.

The major weakness of SCM is that it is a case study and therefore the results cannot be generalized. However, for evaluating health promotion and training initiatives, this approach does provide information necessary for decision-making related to the educational program, a critical factor in program evaluation. As a result, generalizability may not be as important as impact and reach. A second weakness is that SCM, by design, does not produce a representative picture of the study sample. However, for gaining a clear idea of what is working and what is not, particularly in pilot work before a large-scale initiative is implemented, the utility of SCM is unmatched. We conclude that health promotion and public health training programs would benefit greatly from incorporating the Success Case Method into their evaluation strategies.

Table 2. Percentage distribution of Atlas.ti trainee characteristics

| Characteristic | N | % |
|---|---|---|
| Gender | | |
| Female | 11 | 73.3 |
| Male | 4 | 26.7 |
| Academic Affiliation | | |
| Consortium partner institutions | 12 | 80.0 |
| Other academic institutions | 3 | 20.0 |
| Current Position | | |
| Students | 4 | 26.7 |
| Research Staff | 3 | 20.0 |
| Professor | 5 | 33.3 |
| Investigator/Researcher | 3 | 20.0 |

Highlights.

The successful implementation of the Success Case Method’s steps for public health trainings.

The necessity of redefining training success (not in monetary terms) for the public health arena.

The Success Case Method is a valuable evaluative method in the public health arena.

Acknowledgments

This publication was made possible by grants from the National Center for Research Resources (U54 RR 026139-01A1), the National Institute on Minority Health and Health Disparities (8U54 MD 007587/2U54MD007587), and the National Cancer Institute (U54CA096297/U54CA096300) from the National Institutes of Health. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Special thanks to the training facilitators and to all training participants for their collaboration in the follow-up interviews.

References

- Bartholomew LK, Parcel GS, Kok G, Gottlieb NH, Fernandez ME. Planning Health Promotion Programs: An Intervention Mapping Approach. 3rd ed. San Francisco, CA: Jossey-Bass; 2011.

- Brinkerhoff RO. The Success Case Method: Find Out Quickly What’s Working and What’s Not. 1st ed. San Francisco, CA: Berrett-Koehler; 2003.

- Brinkerhoff RO. Telling Training’s Story: Evaluation Made Simple, Credible, and Effective. 1st ed. San Francisco, CA: Berrett-Koehler; 2006a.

- Brinkerhoff RO. Increasing impact of training investments: an evaluation strategy for building organizational learning capability. Industrial and Commercial Training. 2006b;38:302–307.

- Brinkerhoff RO, Mooney TP. Section VI: Measuring and Evaluating Impact. Chapter 30: Level 3 Evaluation. In: Biech E, editor. ASTD Handbook for Workplace Learning Professionals. Alexandria, VA: American Society for Training and Development; 2008. pp. 523–538.

- Coryn CLS, Schröter DC, Hanssen CE. Adding a Time-Series Design Element to the Success Case Method to Improve Methodological Rigor: An Application for Non-profit Program Evaluation. American Journal of Evaluation. 2009;30:80–92.

- Karim MR, Huda KN, Khan RS. Significance of Training and Post Training Evaluation for Employee Effectiveness: An Empirical Study on Sainsbury’s Supermarket Ltd, UK. International Journal of Business and Management. 2012;7(18):141–148.

- Kirkpatrick DL, Kirkpatrick JD. Evaluating training programs. 3rd ed. San Francisco, CA: Berrett-Koehler; 2006.

- Lee-Kelley L, Blackman D. Project training evaluation: Reshaping boundary objects and assumptions. International Journal of Project Management. 2012;30(1):73–82.

- Miller L. 2012 ASTD State of the Industry Report: Organizations Continue to Invest in Workplace Learning. T+D. 2012;66(11):42–48.

- Nanda V. An innovative method and tool for role-specific quality-training evaluation. Total Quality Management. 2009;20(10):1029–1039.

- Noe R. Employee training and development. 2nd ed. New York: McGraw-Hill; 2002.

- Olson CA, Shershneva MB, Horowitz-Brownstein M. Peering Inside the Clock: Using Success Case Method to Determine How and Why Practice-Based Educational Interventions Succeed. Journal of Continuing Education in the Health Professions. 2011;31:S50–S59. doi: 10.1002/chp.20148.

- Owens LT Jr. One More Reason Not to Cut Your Training Budget: The Relationship Between Training and Organizational Outcomes. Public Personnel Management. 2006;35(2):163–172.

- Pine CK. Developing Beginning Teachers’ Professional Capabilities in the Workplace: An Investigation of One California County’s Induction Program Using the Success Case Method. UMI Dissertation Publishing; 2006.

- Preston K. Leadership perception of results and return on investment training evaluations. UMI Dissertation Publishing; 2010.

- Rogers EM. Diffusion of Innovations. 5th ed. New York: Free Press; 2003.

- Rowden R. Exploring methods to evaluate the return from training. American Business Review. 2001;19:6–12.

- Schweigert FJ. The Meaning of Effectiveness in Assessing Community Initiatives. American Journal of Evaluation. 2006;27:416–436.

- Scriven M. Evaluation thesaurus. 4th ed. Newbury Park, CA: Sage; 1991.

- Tsang-Kai H. An Empirical study of the training evaluation decision-making model to measure training outcomes. Social Behavior & Personality: An International Journal. 2010;38:87–101.

- Twitchell S, Holton EF, Trott JW Jr. Technical Training Evaluation Practices in the United States. Performance Improvement Quarterly. 2000;31:84–109.

- Wu E. A Balanced Scorecard for the People Development Function. Organization Development Journal. 2002;25:113–117.