Abstract

INTRODUCTION

In 2012, the Veterans Health Administration (VHA) implemented guidelines seeking to reduce PSA-based screening for prostate cancer in men aged 75 years and older.

OBJECTIVES

To reduce the use of inappropriate PSA-based prostate cancer screening among men aged 75 and over.

SETTING

The Veterans Affairs Greater Los Angeles Healthcare System (VA GLA)

PROGRAM DESCRIPTION

We developed a highly specific computerized clinical decision support (CCDS) alert to remind providers, at the moment of PSA screening order entry, of the current guidelines and institutional policy. We implemented the tool in a prospective interrupted time series study design over 15 months, and compared the trends in monthly PSA screening rate at baseline to the CCDS on and off periods of the intervention.

RESULTS

A total of 30,150 men were at risk, or eligible, for screening, and 2,001 men were screened. The mean monthly screening rate was 8.3 % during the 15-month baseline period and 4.6 % during the 15-month intervention period. The screening rate declined by 38 % during the baseline period and by 60 % and 30 %, respectively, during the two periods when the CCDS tool was turned on. The screening rate ratios for the baseline and the two periods when the CCDS tool was on were 0.97, 0.78, and 0.90, respectively, with a significant difference between baseline and the first CCDS-on period (p < 0.0001), and a trend toward a difference between baseline and the second CCDS-on period (p = 0.056).

CONCLUSION

Implementation of a highly specific CCDS tool alone significantly reduced inappropriate PSA screening in men aged 75 years and older in a reproducible fashion. With this simple intervention, evidence-based guidelines were brought to bear at the point of care, precisely for the patients and providers for whom they were most helpful, resulting in more appropriate use of medical resources.

KEY WORDS: electronic health records, physician decision support, cancer screening, applied informatics, implementation research, quality improvement

INTRODUCTION

Recommendations regarding PSA-based prostate cancer screening have changed significantly over the past ten years. Appropriate patient selection is critical, given the increased evidence of the 10- to 20-year indolent nature of the majority of prostate cancers and in light of an improved quantitative understanding of competing mortality risks.1 In 2008, the U.S. Preventive Services Task Force (USPSTF) issued a grade D recommendation against PSA-based screening in men over the age of 74 years.2 At that time, guidance provided by the National Comprehensive Cancer Network (NCCN) and the American Urological Association (AUA) agreed with the principle that screening was not helpful in men with a life expectancy of less than ten years,3,4 and both organizations more recently updated their guidelines to recommend against screening men older than 75 (NCCN) and 70 (AUA) years, or men whose life expectancy is less than 10–15 years.5,6

Despite these recommendations, studies from the last decade have demonstrated high rates of screening among older men. A comparison of self-reported screening rates among a national cohort of men aged 75 years and older from 2005 and 2010 showed no change in screening practices, and revealed an overall screening rate of over 40 % in this elderly age group.7 A report from the Veterans Health Administration (VHA) in 2003 found that PSA screening was performed for 56 % of men aged 70 years and older and 36 % of men aged 85 and older.8 A recent national survey of urologists and radiation oncologists found that 43 % still recommend screening in men 75–79 years of age. Longitudinal follow-up of elderly men screened in 2003 at the VHA confirmed that many suffered significant burdens from treatments for which benefits conferred were debatable.9

In 2012, VHA leadership asked local facilities to address inappropriate overuse of preventive care and identified PSA screening in men aged 75 and older as a potential area for improvement.10 In response, we created a computerized clinical decision support (CCDS) tool to remind providers of current recommendations against PSA-based prostate cancer screening for men in this age group. The tool, a “reminder order check,” or “pop-up” alert, was a new decision support option available within the VHA’s Computerized Patient Record System (CPRS), and one that allowed the use of complex patient-specific logic to prompt an alert at the time that a provider ordered a PSA test. We hypothesized that implementation of a highly specific point-of-care CCDS tool would result in a reduction in inappropriate PSA screening in the elderly.

METHODS

Context

The study setting included all outpatient clinics in the Veterans Affairs Greater Los Angeles Healthcare System (VA GLA), a large urban academic VHA medical facility that includes a tertiary medical center associated with hospital-based primary care clinics, two ambulatory care centers, and eight community-based outpatient clinics (CBOCs), with over 80,000 patients seen annually. As part of the VHA system, the VA GLA receives revenue based on a global budget, has a mature EHR system that has been in use for more than 15 years and interfaces with standardized regional and national databases, and has a strong culture of performance measurement and accountability.11–13 Clinicians see numerous clinical alerts or reminders, and their performance is assessed in part on their response to a subset of these alerts. Report cards are used in some clinical areas, and performance is tied to modest financial incentives at the leadership and individual clinician levels. Team-based care is emphasized. Finally, multiple health services researchers are embedded within the clinical staff at the GLA.14

Just prior to the initial study period, the USPSTF released its controversial and potentially confounding (from the standpoint of this study) recommendation against routine PSA screening for all men, not just those aged 75 and older.15

Computerized Clinical Decision Support Tool

Our project team comprised two internal medicine generalists (CG, PS), the Chief of Informatics (CG), an RN Clinical Applications Coordinator (LO), a staff urologist and informaticist (JS), and the Chief of Urology (CB). In addition, key primary care leaders reviewed the project and contributed to the wording of alerts.

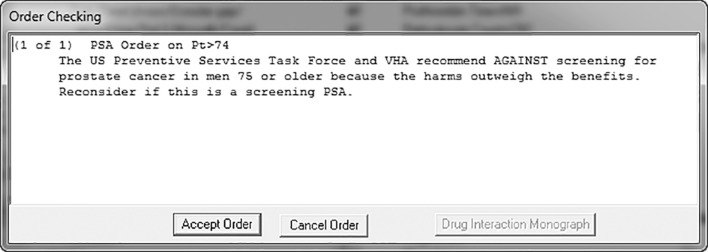

We developed a pop-up as a means to alert providers ordering a screening PSA blood test in a patient 75 years of age or older (Table 1). We defined a screening PSA as any PSA test ordered on a male patient aged 75 or older, excluding those with any of the following: a) a diagnosis of existing malignant or benign prostate disease, including a diagnosis of “elevated prostate-specific antigen”, b) use of either an enhancer or suppressor of testosterone, or c) a PSA level of 3.5 ng/ml or greater (3.5 ng/ml was the average cutoff level of two large randomized controlled trials on prostate cancer screening16,17) on either of their two most recent PSA tests. When triggered, a brief interruptive educational message was shown on the ordering screen: “The US Preventive Services Task Force and VHA recommend AGAINST screening for prostate cancer in men 75 or older because the harms outweigh the benefits. Reconsider if this is a screening PSA.” (Fig. 1).

Table 1.

Criteria Used in the CCDS Tool to Define Screening PSA in Men Aged 75 Years and Older

| Criterion type | Criteria |
|---|---|
| CCDS trigger | PSA order |
| Exclusion criteria (any one of the listed factors) | Age <75 years |
| | A history of any of the following CPT codes: 85153, J1950, J9217, J9219, J9202, 55840, 55842, 55845, 55810, 55812, 55815, 55866, 55873, 55840, 55842, 55845, 55873, 55875, 84152, 84154, 55700, 55801, 55821, 55831, 52450, 52601, 52612, 52614, 52620, 52630, 52640, 52700, 52648, 52500 |
| | A history of any of the following ICD-9 diagnosis codes: 185, 233.4, 236.5, 239.5, 602.3, 60.5, 60.62, 222.2, 600.00, 600.01, 600.10, 600.11, 600.20, 600.21, 600.90, 600.91, 601.0, 601.1, 601.2, 601.3, 601.4, 601.8, 601.9, 602.8, 602.9, 790.93, 60.21, 60.29, 60.3, 60.4, 60.94, 60.95, 60.96, 60.97, 60.0, 60.1, 60.11, 60.12, 60.15, 60.18, 60.19, 60.61, 60.69, 60.92, 60.93, 60.99, 790.93 |
| | Any history of a prescription for any of the following drugs: leuprolide, goserelin, bicalutamide, flutamide, nilutamide, degarelix, abarelix |
| | A history of receiving testosterone supplementation within the last 12 months |
| | A history of a PSA level > 3.5 ng/ml on either of the prior two PSA screenings |

Figure 1.

Screenshot of CCDS triggered by ordering a screening PSA for a man aged 75 years or older.

The pop-up gave the provider the option of continuing with or canceling the order, but did not require a justification for either action. No providers were exempted from receiving this reminder. The alert logic was validated by chart review using a convenience sample of 40 cases in which a PSA test was ordered for men aged 75 and older. Of the 20 cases in which the alert would have been triggered, one was misclassified as a screening PSA because a pre-existing diagnosis of prostate cancer had not been electronically coded. Of the 20 cases in which the alert would not have been triggered, all patients were verified to have met at least one of our exclusion criteria. In all 40 cases, the ordered PSA test was performed. We estimated the impact of some of the alert criteria on the number of alert triggers, and found that within a 6-month window, the inclusion of men with benign diagnoses would have nearly doubled the number of alerts, and that the use of age alone as the only exclusion criterion would have led to a nearly eightfold increase in alerts. We used the taxonomy outlined by Wright et al. to describe the decision support content.18
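The criteria in Table 1 amount to a short chain of exclusion checks. The following Python sketch illustrates that logic only; it is not the CPRS reminder order check itself. The `Patient` record, its field names, and the function name are hypothetical, and the code sets are abbreviated to a few entries from Table 1.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Abbreviated, illustrative subsets of the Table 1 lists (not the full sets).
EXCLUDING_CPT = {"55700", "55840", "55866", "52601"}
EXCLUDING_ICD9 = {"185", "600.00", "601.0", "790.93"}
EXCLUDING_DRUGS = {"leuprolide", "goserelin", "bicalutamide", "flutamide",
                   "nilutamide", "degarelix", "abarelix"}
PSA_CUTOFF_NG_ML = 3.5


@dataclass
class Patient:
    """Hypothetical patient record; all field names are assumptions."""
    age: int
    cpt_history: Set[str] = field(default_factory=set)
    icd9_history: Set[str] = field(default_factory=set)
    drug_history: Set[str] = field(default_factory=set)
    testosterone_last_12_months: bool = False
    psa_results_ng_ml: List[float] = field(default_factory=list)  # oldest first


def alert_fires(p: Patient) -> bool:
    """Return True if a PSA order for this patient counts as a screening PSA."""
    if p.age < 75:
        return False
    if p.cpt_history & EXCLUDING_CPT:
        return False
    if p.icd9_history & EXCLUDING_ICD9:
        return False
    if p.drug_history & EXCLUDING_DRUGS:
        return False
    if p.testosterone_last_12_months:
        return False
    # Follows the Methods text ("3.5 ng/ml or greater"); Table 1 lists "> 3.5".
    if any(v >= PSA_CUTOFF_NG_ML for v in p.psa_results_ng_ml[-2:]):
        return False
    return True
```

Ordering the checks from cheapest (age) to most data-intensive is a design choice of this sketch; any single exclusion suppresses the alert, which is what favors specificity over sensitivity.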

Implementation

The wording of the alert was not pilot-tested, nor were comments solicited from providers beyond the process described above. The alert was activated June 1, 2012, without specific educational or help desk support. Help desk staff reported receiving no questions about the alert. While many clinical alerts are used for audit and feedback and are subject to internal financial incentives, this instrument was not. No changes were made during the study to alert wording, logic, implementation plan, or study design. This project was not funded. We referred to the Template for Intervention Description and Replication to describe the intervention.19

Data Analysis

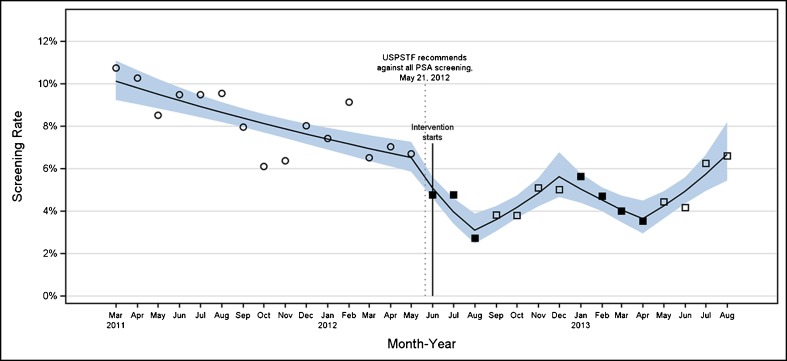

We used a prospective interrupted time series study design, turning the reminder on from June through August 2012 and from January through April 2013. The off periods were September through December 2012 and May through August 2013. As a baseline, we selected the preceding 15-month period, from March 2011 through May 2012. We used a narrow (i.e., monthly) time interval to better attribute changes in PSA screening rates to the pop-up.

To calculate the unadjusted screening rate for each month in the study period, we used the pop-up definition of screening PSA to define the eligible at-risk patients for the denominator, and the count of PSA orders in unique patients who had a visit to any primary care clinic for the numerator.

We also fit a Poisson regression with five linear splines, one for each study period. From the resulting model, we estimated the log screening rate for each month and then calculated the screening rate ratio (SRR; the estimated month-to-month multiplicative change in screening rate, where 1 indicates no change) and 95 % CI for each period by exponentiating linear combinations of the spline coefficients, and tested whether each within-period SRR differed significantly from one. Equivalence of SRRs between study periods was tested with linear contrasts of the spline coefficients. We plotted the observed and estimated rates for each month and the 95 % confidence band around the estimated rates (Fig. 2).
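The period definitions and the unadjusted monthly rate described above can be sketched as follows. This is a minimal illustration under stated assumptions: the period labels and the function names (`period_for`, `months_in`, `screening_rate`) are hypothetical, and only the calendar boundaries come from the text.

```python
from datetime import date

# Study periods as (label, first month, last month), taken from the text.
PERIODS = [
    ("baseline", date(2011, 3, 1), date(2012, 5, 1)),
    ("on-1", date(2012, 6, 1), date(2012, 8, 1)),
    ("off-1", date(2012, 9, 1), date(2012, 12, 1)),
    ("on-2", date(2013, 1, 1), date(2013, 4, 1)),
    ("off-2", date(2013, 5, 1), date(2013, 8, 1)),
]


def period_for(month: date) -> str:
    """Return the study period containing the given month (the day is ignored)."""
    key = (month.year, month.month)
    for label, first, last in PERIODS:
        if (first.year, first.month) <= key <= (last.year, last.month):
            return label
    raise ValueError(f"{month:%b %Y} is outside the 30-month analysis window")


def months_in(label: str) -> int:
    """Number of calendar months in a named period."""
    for name, first, last in PERIODS:
        if name == label:
            return (last.year - first.year) * 12 + (last.month - first.month) + 1
    raise KeyError(label)


def screening_rate(psa_orders: int, eligible: int) -> float:
    """Unadjusted monthly screening rate in percent (numerator / denominator)."""
    if eligible <= 0:
        raise ValueError("eligible must be positive")
    return 100.0 * psa_orders / eligible
```

With these boundaries, `months_in("baseline")` is 15 and the four on/off periods together also span 15 months, matching the 30-month analysis window.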

Figure 2.

Monthly PSA-based prostate cancer screening rate trend during the baseline and interrupted on/off study periods. Baseline screening rates are represented by open circles. Screening rates for months when the CCDS tool was on are represented by solid black squares, and screening rates for months when the CCDS tool was turned off are represented by open black squares. The solid line is the predicted screening rate generated by our model. The blue band is the 95 % confidence interval around this predicted rate. The confounding secular event is marked with a dotted vertical line and the study starting point with a solid vertical line.

We performed sensitivity analyses of some specifications of the pop-up logic, including the exclusion of PSA orders in patients with benign prostatic diseases and the impact of a PSA cutoff of 3.5. To facilitate external comparisons to annually reported screening rates, we compared monthly screening rates to the annual screening rate for calendar-year 2011.

This study was granted exempt status by the VA GLA institutional review board. Data were obtained through a series of Veterans Health Information Systems and Technology Architecture (VistA) extracts.

RESULTS

During the 30-month analysis period, 30,150 men aged 75 years and older were “at risk” or eligible for screening, meaning they had a visit and did not meet any of our alert exclusion criteria. Of these, PSA tests were ordered for 2,001 (Table 2). The unadjusted mean monthly screening rate during the 15-month baseline period was 8.3 %, and during the 15-month intervention period, was 4.6 %. The annual screening rate during calendar-year 2011 was 27.4 %, as compared with the average monthly rate of 9.2 % during the same time frame, which would be expected in a patient population that often makes more than one annual visit to primary care. Turning the CCDS reminder on corresponded with sharply decreasing screening rates, which dropped from 6.7 % to 2.7 % during the first on period and 5.0 % to 3.5 % during the second on period. Turning the alert off corresponded with similarly sharp increases in screening rates, from 2.7 % to 5.0 % in the first off period and then from 3.5 % to 6.6 % in the second off period (see also Fig. 2). The reproducibility of this effect suggests a screening rate reduction as a result of the CCDS tool that was above and beyond the already decreasing baseline trend.
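The absolute and relative changes for each on/off period follow directly from the start and end rates quoted above. The sketch below reproduces that arithmetic; the function and variable names are hypothetical. Because it starts from rates rounded to one decimal place, the relative changes can differ by about a percentage point from the values in Table 2, which were presumably computed from unrounded rates.

```python
from typing import Dict, Tuple


def period_change(start_rate: float, end_rate: float) -> Tuple[float, float]:
    """Absolute change (percentage points) and relative change (%) between two rates."""
    absolute = end_rate - start_rate
    relative = 100.0 * absolute / start_rate
    return absolute, relative


# Start and end screening rates (%) quoted in the text for each period.
transitions: Dict[str, Tuple[float, float]] = {
    "on-1": (6.7, 2.7),
    "off-1": (2.7, 5.0),
    "on-2": (5.0, 3.5),
    "off-2": (3.5, 6.6),
}

changes = {name: period_change(a, b) for name, (a, b) in transitions.items()}
for name, (absolute, relative) in changes.items():
    print(f"{name}: {absolute:+.1f} points ({relative:+.1f} %)")
```

For the first on period, this gives a drop of 4.0 percentage points, or about 59.7 % relative to the starting rate of 6.7 %.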

Table 2.

Monthly Screening Rate (SR), Change in Screening Rate Per Period, Screening Rate Ratio (SRR) Per (Within) Period (1 = No Change), and p-value for Test of Non-equivalence Between Period SRRs

| Period | Month | Monthly SR (%) | Period SR change Δ (%) | Within-period SRR (95 % CI) | Period SRR vs. baseline SRR |
|---|---|---|---|---|---|
| Baseline | Mar 2011 | 10.8 | −4.1 (−37.6) | 0.97* (0.96–0.98) | |
| | Apr 2011 | 10.3 | | | |
| | May 2011 | 8.5 | | | |
| | Jun 2011 | 9.5 | | | |
| | Jul 2011 | 9.5 | | | |
| | Aug 2011 | 9.5 | | | |
| | Sep 2011 | 8.0 | | | |
| | Oct 2011 | 6.1 | | | |
| | Nov 2011 | 6.4 | | | |
| | Dec 2011 | 8.0 | | | |
| | Jan 2012 | 7.4 | | | |
| | Feb 2012 | 9.1 | | | |
| | Mar 2012 | 6.5 | | | |
| | Apr 2012 | 7.0 | | | |
| | May 2012 | 6.7 | | | |
| Reminder on (1) | Jun 2012 | 4.8 | −4.0 (−59.7) | 0.78* (0.72–0.85) | p < 0.0001 |
| | Jul 2012 | 4.3 | | | |
| | Aug 2012 | 2.7 | | | |
| Reminder off (1) | Sep 2012 | 3.8 | 2.3 (84.3) | 1.16* (1.07–1.26) | p < 0.0001 |
| | Oct 2012 | 3.8 | | | |
| | Nov 2012 | 5.1 | | | |
| | Dec 2012 | 5.0 | | | |
| Reminder on (2) | Jan 2013 | 5.6 | −1.5 (−29.8) | 0.90* (0.83–0.97) | p = 0.056 |
| | Feb 2013 | 4.7 | | | |
| | Mar 2013 | 4.0 | | | |
| | Apr 2013 | 3.5 | | | |
| Reminder off (2) | May 2013 | 4.4 | 3.1 (87.4) | 1.16* (1.07–1.27) | p < 0.0001 |
| | Jun 2013 | 4.2 | | | |
| | Jul 2013 | 6.2 | | | |
| | Aug 2013 | 6.6 | | | |

*indicates significant within-period change in screening rate

Within each study period, there was a significant change in estimated monthly screening rates, as evidenced by the SRRs from the regression analysis, which were all significantly different from 1 (p < 0.0001 for all SRRs) (Table 2, Fig. 2). Looking between periods, the decline in screening rate was significantly steeper in the reminder-on (1) period than at baseline (SRR 0.78 vs. 0.97, p < 0.0001); a trend was seen toward a steeper decline in the reminder-on (2) period compared with baseline (SRR 0.90 vs. 0.97, p = 0.056). Both reminder-off periods showed significant and similar increases in screening rate relative to the baseline trend (SRR 1.16 and 1.16 vs. 0.97, respectively; both p < 0.0001) as well as relative to the preceding reminder-on periods (all p < 0.0001).
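To give a sense of what a within-period SRR measures, the sketch below estimates a month-to-month rate ratio for the baseline period from the monthly rates in Table 2. It is a simplified stand-in, not the study's model: it uses an ordinary least-squares fit of log rate on month index rather than the Poisson spline regression, and it ignores the monthly denominators and the other four periods. The function name is hypothetical.

```python
import math

# Baseline monthly screening rates (%) from Table 2, Mar 2011 through May 2012.
baseline_rates = [10.8, 10.3, 8.5, 9.5, 9.5, 9.5, 8.0, 6.1,
                  6.4, 8.0, 7.4, 9.1, 6.5, 7.0, 6.7]


def monthly_rate_ratio(rates):
    """Exponentiated least-squares slope of log(rate) against month index."""
    n = len(rates)
    xs = list(range(n))
    ys = [math.log(r) for r in rates]
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    return math.exp(sxy / sxx)


baseline_ratio = monthly_rate_ratio(baseline_rates)
print(round(baseline_ratio, 2))  # 0.97
```

A ratio of about 0.97 corresponds to a decline of roughly 3 % per month, which compounds over the 14 month-to-month steps of the baseline period to a decline in the mid-30 % range, in line with the change reported for that period.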

To evaluate the impact of excluding PSA tests performed in men with benign prostate diseases, we performed sensitivity analysis of the unadjusted monthly screening rate in 2011 using a definition of screening PSA that did not exclude prior diagnoses of benign prostate disease. This showed an expected increase in both at-risk and screened men of 42.9 % and 39.7 %, respectively, with an estimated monthly addition of an average of 70.5 men for whom receiving PSA screening would be classified as inappropriate. The effect on the screening rate, however, was small, with an absolute difference of 0.6 % and an average percentage difference of 12.2 %.

The May 21, 2012 release of USPSTF recommendations against all PSA screening occurred 10 days prior to the initiation of our intervention, and was accompanied by widespread media coverage.15 The rate of decline during the reminder-on (1) period was significantly greater than that of the reminder-on (2) period (p < 0.01), suggesting an additive effect from this event.

DISCUSSION

We demonstrated a significant reduction in PSA-based screening for prostate cancer in men aged 75 years and older through the use of a highly specific and targeted CCDS pop-up alert. While we identified a secular trend of decreasing PSA screening, our study demonstrated significant reductions in PSA screening among men aged 75 and older that were most likely attributable to the pop-up, as underscored by the significant increase in screening rates during both off periods despite ongoing media coverage of the USPSTF’s controversial recommendation to discontinue all PSA screening.

At the core of the current debate regarding the benefits and harms of PSA-based prostate cancer screening is evidence derived from an era in which PSA screening was applied relatively indiscriminately to men over the age of 50, and in which treatment was applied indiscriminately to those diagnosed with prostate cancer.16,17 Both the detection and management of prostate cancer have since been refined, and one area of consensus is in the avoidance of screening among men aged 75 and older. While screening may benefit some men in this age group, avoidance is considered the default, and instruments like ours can be part of efforts—as exemplified by the Choosing Wisely campaign—to encourage appropriate use of health care resources.5,6,20,21

This study supports prior work demonstrating the capacity of health information technology to improve processes of care and of CCDS to change provider behavior.22–24 Our study found a median benefit of 2.1 % to clinical process-of-care measures, similar to the rate of 3.8 % found in a 2009 Cochrane Review on the effect of CCDS on process of care. In the same Cochrane Review, only 4 of the 32 included studies looked at CCDS tools as a way to reduce unwanted behavior.24 Our study adds to the small number of studies in this area, and appears to confirm the finding that CCDS instruments are equally effective at increasing and decreasing behaviors. It also demonstrated a significant change in provider behavior, despite a relatively small gap in quality.

One problem with CCDS tools and other alerts is alert fatigue. A recent review found that providers overrode alerts 49–96 % of the time.25 In one study, VHA clinicians rated only 11 % of reminders as clinically relevant, and in another study, a VA researcher found that 80 % of drug interaction warnings were ignored.26,27 Studies of CCDS tools that allow for more complex data input have shown better performance of alerts.28,29 One human factors analysis of medication alerts identified 15 ways to improve alerts, with the reduction of redundant alerts and the inclusion of adequate information to facilitate physician decision-making as two of the most important.30 The CCDS instrument that we developed used complex trigger criteria to favor specificity over sensitivity in an attempt to limit false triggers and to avoid contributing to alert fatigue. We believe that the combination of a highly specifiable pop-up architecture such as ours and the electronic availability of a wide range of structured clinical data (including inpatient and outpatient diagnosis and procedure codes, laboratory, pharmacy, demographics, and problem list data) allowed our alert to be more targeted than most previously reported CCDS tools, which may have contributed to its success.28,29,31–33 Formal development of the alert language through focus groups and solicited feedback may have further improved its performance.

While this study was not designed to evaluate provider learning, we assumed that providers would learn from the alert, and thus an alert would not need to be triggered for every patient in order to change provider behavior. However, the complete rebound in monthly screening rates observed during each off period suggests that provider learning during the study period was limited. This may reflect the narrow implementation strategy or the rotation of resident physician staff in some clinics, although only a minority of the clinics were resident-staffed. Additional investigation of the barriers to and facilitators of provider learning could direct further CCDS tool and implementation refinement.34,35

To determine the clinical importance of the intervention that was employed, we estimated the expected number of screenings that might be prevented with continuous use of this tool. We calculated the difference between the mean number of men screened during the final months of the on and off periods (41), and annualized it to arrive at an estimate of 492 screenings prevented over a period of one year. In the VHA in 2003, biopsies were performed on 21 % of men over the age of 75 with a PSA greater than 4.0, and 11 % of all men 75 and older with a PSA above 4.0 were treated. While these rates are likely greater than those in current practice, if we apply them to our population, we estimate that use of the CCDS tool in GLA would prevent up to 103 biopsies and the possible over-treatment of 54 men each year.9 These estimates are relatively small, but easy to achieve. Moreover, the relative ease of scaling this targeted and simple intervention across the VHA would be expected to multiply these favorable effects.
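The estimate above is simple arithmetic, reproduced here as a short sketch. The constant names are hypothetical; the values come from the text. Applying the 2003 biopsy and treatment fractions to every prevented screening, rather than only to men with a PSA above 4.0, makes these upper bounds, consistent with the "up to" wording.

```python
MONTHLY_DIFFERENCE = 41    # mean difference in men screened per month, on vs. off
BIOPSY_FRACTION = 0.21     # 2003 VHA biopsy rate quoted in the text
TREATMENT_FRACTION = 0.11  # 2003 VHA treatment rate quoted in the text

screenings_prevented = MONTHLY_DIFFERENCE * 12
biopsies_prevented = round(screenings_prevented * BIOPSY_FRACTION)
treatments_prevented = round(screenings_prevented * TREATMENT_FRACTION)

print(screenings_prevented, biopsies_prevented, treatments_prevented)  # 492 103 54
```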

LIMITATIONS

External validity may be limited outside the setting of large integrated healthcare systems with mature EHRs and a strong quality improvement culture. We measured the correlation of PSA lab ordering with the on–off status of the CCDS tool, but did not directly measure whether PSA orders were canceled as a result of CCDS use. Furthermore, we measured screening rates by PSA lab orders and not by resultant lab values, possibly introducing some measurement error, although no such discrepancy was seen in the alert logic validation. Moreover, orders are a better reflection of provider intent, and this was the target of the intervention. The specificity of the CCDS alert logic may have underestimated the screening rate. We did not measure practice-, provider-, or patient-level factors (the collection of which was beyond the scope of the project), increasing the chance of a type I error.

CONCLUSION

The implementation of a highly specific CCDS tool alone was shown to significantly reduce inappropriate PSA screening in men aged 75 years and older in a reproducible fashion. Through this simple intervention, evidence-based guidelines were brought to bear at the point of care for precisely the patients and providers for whom they were most helpful, resulting in a more appropriate use of medical resources. As electronic health record systems are becoming more sophisticated, the opportunity to develop more specific “personalized” CCDS alerts is growing, and is worth considering in many clinical situations.

Acknowledgments

Contributors

The UCLA IDRE (Institute for Digital Research and Education) Statistical Consulting Group contributed to the statistical analysis.

Prior Presentations

American Urological Association Annual Meeting, 2013. Poster

ASCO (American Society of Clinical Oncology) Quality Symposium, 2013. Poster

Conflict of Interest

The authors declare that they have no conflicts of interest.

References

- 1. Daskivich T, et al. Severity of comorbidity and non-prostate cancer mortality in men with early-stage prostate cancer. Arch Intern Med. 2010;170(15):1396–1397. doi: 10.1001/archinternmed.2010.251.

- 2. USPSTF (US Preventive Services Task Force). Screening for prostate cancer: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2008;149(3):185–191. doi: 10.7326/0003-4819-149-3-200808050-00008.

- 3. Greene KL, et al. Prostate Specific Antigen Best Practice Statement: 2009 Update. J Urol. 2009;182(5):2232–2241. doi: 10.1016/j.juro.2009.07.093.

- 4. National Comprehensive Cancer Network. National Comprehensive Cancer Network Clinical Guidelines in Oncology: Prostate Cancer Early Detection V1.2012. 2012 [cited 2015 February 9th]; Available from: http://www.nccn.org/about/permissions/request_form.asp.

- 5. National Comprehensive Cancer Network. NCCN Clinical Practice Guidelines in Oncology: Prostate Cancer Early Detection V1.2014. 2014 [cited 2015 February 9th]; Available from: http://www.nccn.org/professionals/physician_gls/pdf/prostate_detection.pdf.

- 6. American Urological Association. Early Detection of Prostate Cancer Guideline. 2013 [cited 2015 February 9th]; Available from: http://www.auanet.org/education/guidelines/prostate-cancer-detection.cfm.

- 7. Prasad SM, et al. 2008 US Preventive Services Task Force recommendations and prostate cancer screening rates. JAMA. 2012;307(16):1692–1694. doi: 10.1001/jama.2012.534.

- 8. Walter LC, et al. PSA screening among elderly men with limited life expectancies. JAMA. 2006;296(19):2336–2342. doi: 10.1001/jama.296.19.2336.

- 9. Walter LC, et al. Five-year downstream outcomes following prostate-specific antigen screening in older men. JAMA Intern Med. 2013;173(10):866–873. doi: 10.1001/jamainternmed.2013.323.

- 10. VHA Office of Healthcare Transformation. VHA T21 Implementation Guidance: FY2012. 2012: VHA Intranet.

- 11. McGlynn EA, et al. The quality of health care delivered to adults in the United States. N Engl J Med. 2003;348(26):2635–2645. doi: 10.1056/NEJMsa022615.

- 12. Ryoo JJ, Malin JL. Reconsidering the Veterans Health Administration: A Model and a Moment for Publicly Funded Health Care Delivery. Ann Intern Med. 2011;154(11):772–773. doi: 10.7326/0003-4819-154-11-201106070-00010.

- 13. Rubenstein LV, et al. A Patient-Centered Primary Care Practice Approach Using Evidence-Based Quality Improvement: Rationale, Methods, and Early Assessment of Implementation. J Gen Intern Med. 2014;29:S589–S597. doi: 10.1007/s11606-013-2703-y.

- 14. Yano EM, et al. Patient Aligned Care Teams (PACT): VA’s Journey to Implement Patient-Centered Medical Homes. J Gen Intern Med. 2014;29:S547–S549. doi: 10.1007/s11606-014-2835-8.

- 15. USPSTF (US Preventive Services Task Force). Screening for Prostate Cancer. May 2012 [cited 2015 February 9th]; Available from: http://www.uspreventiveservicestaskforce.org/Page/Topic/recommendation-summary/prostate-cancer-screening?ds=1&s=prostate.

- 16. Andriole GL, et al. Mortality results from a randomized prostate-cancer screening trial. N Engl J Med. 2009;360(13):1310–1319. doi: 10.1056/NEJMoa0810696.

- 17. Schröder FH, et al. Screening and prostate-cancer mortality in a randomized European study. N Engl J Med. 2009;360(13):1320–1328. doi: 10.1056/NEJMoa0810084.

- 18. Wright A, et al. A Description and Functional Taxonomy of Rule-based Decision Support Content at a Large Integrated Delivery Network. J Am Med Inform Assoc. 2007;14(4):489–496. doi: 10.1197/jamia.M2364.

- 19. Hoffmann TC, et al. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ. 2014;348:g1687. doi: 10.1136/bmj.g1687.

- 20. Brook RH. The role of physicians in controlling medical care costs and reducing waste. JAMA. 2011;306(6):650–651. doi: 10.1001/jama.2011.1136.

- 21. Cassel CK, Guest JA. Choosing wisely: helping physicians and patients make smart decisions about their care. JAMA. 2012;307(17):1801–1802. doi: 10.1001/jama.2012.476.

- 22. Goldzweig CL, et al. Costs and benefits of health information technology: new trends from the literature. Health Aff (Millwood). 2009;28(2):w282–w293. doi: 10.1377/hlthaff.28.2.w282.

- 23. Buntin MB, et al. The benefits of health information technology: a review of the recent literature shows predominantly positive results. Health Aff (Millwood). 2011;30(3):464–471. doi: 10.1377/hlthaff.2011.0178.

- 24. Shojania KG, et al. The effects of on-screen, point of care computer reminders on processes and outcomes of care. Cochrane Database Syst Rev. 2009;3. doi: 10.1002/14651858.CD001096.pub2.

- 25. van der Sijs H, et al. Overriding of drug safety alerts in computerized physician order entry. J Am Med Inform Assoc. 2006;13(2):138–147. doi: 10.1197/jamia.M1809.

- 26. Kesselheim AS, et al. Clinical decision support systems could be modified to reduce 'alert fatigue' while still minimizing the risk of litigation. Health Aff (Millwood). 2011;30(12):2310–2317. doi: 10.1377/hlthaff.2010.1111.

- 27. Spina JR, et al. Clinical relevance of automated drug alerts from the perspective of medical providers. Am J Med Qual. 2005;20(1):7–14. doi: 10.1177/1062860604273777.

- 28. Eppenga WL, et al. Comparison of a basic and an advanced pharmacotherapy-related clinical decision support system in a hospital care setting in the Netherlands. J Am Med Inform Assoc. 2012;19(1):66–71. doi: 10.1136/amiajnl-2011-000360.

- 29. Woods AD, et al. Clinical decision support for atypical orders: detection and warning of atypical medication orders submitted to a computerized provider order entry system. J Am Med Inform Assoc. 2013;21:569–573. doi: 10.1136/amiajnl-2013-002008.

- 30. Russ AL, et al. A human factors investigation of medication alerts: barriers to prescriber decision-making and clinical workflow. AMIA Annu Symp Proc. 2009;2009:548–552.

- 31. Harinstein LM, et al. Use of an abnormal laboratory value-drug combination alert to detect drug-induced thrombocytopenia in critically ill patients. J Crit Care. 2012;27(3):242–249. doi: 10.1016/j.jcrc.2012.02.014.

- 32. Shah NR, et al. Improving acceptance of computerized prescribing alerts in ambulatory care. J Am Med Inform Assoc. 2006;13(1):5–11. doi: 10.1197/jamia.M1868.

- 33. Starmer JM, Talbert DA, Miller RA. Experience using a programmable rules engine to implement a complex medical protocol during order entry. Proc AMIA Symp. 2000:829–832.

- 34. Brouwers MC, et al. The landscape of knowledge translation interventions in cancer control: what do we know and where to next? A review of systematic reviews. Implement Sci. 2011;6:130. doi: 10.1186/1748-5908-6-130.

- 35. Rothschild JM, et al. Assessment of education and computerized decision support interventions for improving transfusion practice. Transfusion. 2007;47(2):228–239. doi: 10.1111/j.1537-2995.2007.01093.x.