Abstract

Cervical auscultation is the recording of sounds and vibrations generated at the throat during swallowing. While traditionally performed by a trained clinician with a stethoscope, much work has gone into developing more sensitive and clinically useful methods to characterize the data obtained with this technique. The eventual goal of the field is to improve the effectiveness of screening algorithms designed to predict the risk that swallowing disorders pose to individual patients’ health and safety. This paper provides an overview of these signal processing techniques and summarizes recent advances made with digital transducers in hopes of organizing the highly varied research on cervical auscultation. It investigates where on the body these transducers are placed in order to record a signal as well as the collection of analog and digital filtering techniques used to further improve the signal quality. It also presents the wide array of methods and features used to characterize these signals, ranging from simply counting the number of swallows that occur over a period of time to calculating various descriptive features in the time, frequency, and phase space domains. Finally, this paper presents the algorithms that have been used to classify these data into ‘normal’ and ‘abnormal’ categories. Both linear and non-linear techniques are presented in this regard.

Index Terms: cervical auscultation, swallowing sounds, swallowing accelerometry signals, signal processing

I. Introduction

DYSPHAGIA is a term used to describe swallowing impairments [1]. It is a common comorbid disorder, and an estimated ten million Americans are diagnosed with dysphagia every year [2]. Nervous disorders, particularly stroke, are some of the most common causes of dysphagia as they can directly affect the strength and coordination of muscle activity [2], [3]. If these disorders affect the cranial nerves of a patient, which control the muscles involved during swallowing activity among other things, then the patient’s airway may not be sufficiently protected during a swallow [2], [4]. On its own, dysphagia is a minor to moderate inconvenience that can cause discomfort when eating. However, it greatly increases the risk of aspiration, where swallowed material enters the airway, which can lead to much more serious health complications including pneumonia, malnutrition, dehydration, and even death [3], [5].

Assessment of swallowing function typically begins with screening, where a test is administered to determine if a patient likely has a swallowing disorder and requires a more detailed examination. Several methods, including a water swallow screen [6], pulse oximetry [7], and electromyography [8], have been proposed over the last two decades as less invasive methods to determine the presence of aspiration during swallowing. However, with the exception of the water swallow screen, which has exhibited an acceptable predictive value for aspiration in some studies, these methods have exhibited unacceptable sensitivity and specificity and are not yet proven to be reliable tests. Another method, cervical auscultation, has received a significant amount of attention in recent years. Many researchers have attempted to implement this method to screen for dysphagia, as it is just as non-invasive and inexpensive to use as these other proposed methods. However, research on the subject has been somewhat disorganized and uncoordinated. In this manuscript we hope to introduce the idea of using cervical auscultation to screen for swallowing disorders and to present the current efforts of the field in an organized manner.

II. Swallowing Physiology

Physiologically, swallowing is divided into three separate phases. The first stage, the oral phase, consists entirely of voluntary activity [9]. It begins when the mouth is opened to allow material to enter the oral cavity and ends when the tongue is pressed against the hard palate and the bolus is propelled in the posterior direction [9]. The pharyngeal phase, whose activity is involuntary but may be initiated consciously, is the second stage of deglutition [9]. It begins once the bolus has passed the palatoglossal arch and entered the oropharynx and ends as the bolus reaches the upper esophageal sphincter [10]. This phase involves temporarily sealing all unwanted bolus pathways including the nasopharynx by the soft palate, the oral cavity by the tongue, and the larynx by the epiglottis [10]. Because of these valves, all actions such as breathing, coughing, and mastication are inhibited during the pharyngeal phase [11]. The oropharynx and laryngopharynx, larynx, and hyoid structures are all then pulled in the superior and anterior directions so as to accept the bolus and to further seal the larynx and nasopharynx [10]. Peristalsis of the oropharynx and laryngopharynx muscles then moves the bolus down towards the upper esophageal sphincter [10]. The third stage is the esophageal phase and is completely involuntary [9]. As the bolus is travelling through the pharynx, the upper esophageal sphincter relaxes to allow the bolus to enter the esophagus [9]. Peristalsis of the muscles surrounding the pharynx and esophagus pushes the bolus downward until it passes through the lower esophageal sphincter and into the stomach [11]. The pharynx, larynx, and hyoid all relax and return to their initial positions after the bolus has passed into the esophagus [11]. The tongue, vocal cords, epiglottis, and soft palate likewise return to their resting positions and non-swallowing activities can resume [11].

In simplest terms, dysphagia occurs when this process does not work as intended. As stated previously, the greatest concern for the patient’s health is the risk of aspiration. Should the airway not be sufficiently protected during a swallow, for example because the epiglottis closes incompletely or too slowly, the swallowed material could enter the larynx and cause complications for the patient. The goal of the various non-invasive screening techniques is to identify when such an abnormal event occurs without the direct aid of videofluoroscopy. Specifically for cervical auscultation, it is proposed that the events which cause an abnormal swallow will also change the acoustic and vibratory pattern of the swallow. Given the large number of muscles and structures involved in a swallow, differentiating normal swallowing sounds and vibrations from all possible abnormal situations is a task that may be feasible but has not yet been accomplished.

III. Cervical Auscultation

In the simplest terms, auscultation is the observation of the internal workings of the human body by use of sounds. Cervical auscultation, then, applies this concept to the neck and throat in order to monitor swallowing. The stethoscope is the most commonly referenced device in the field since it has been used for centuries and is still the most widely used in the clinical setting. Microphones have become more common of late, however, as more research is put towards analysing the subtler characteristics of recorded auscultation data. Several groups have attempted to correlate these sounds with physiologic events typically only observable with imaging methods such as videofluoroscopy. Additionally, research in this area has been spurred by trends to optimize and add electronic automation to some medical diagnostic techniques. A microphone can, in theory, record the same signal as a stethoscope, but the former device more commonly has a flat frequency response while the latter is designed to match the non-linear human auditory system [12]. Though both methods produce data that can be digitized and analysed, microphones are more often capable of recording a wider range of unfiltered signals. Cervical auscultation has also, at times, been used to refer to swallowing accelerometry [13]–[15]. Although accelerometers detect vibrations caused by the spatial displacement of physical objects rather than sounds produced by the propagating pressure waves generated by moving objects, the two signals provide complementary information. This is because these two types of signals are generated by the same phenomena during swallowing: movements of oropharyngeal structures and the flow of swallowed material through the aerodigestive tract. Because they are so closely related, it is logical to include swallowing accelerometry in this field of study.

It is important to note that cervical auscultation has not been clearly defined in an official capacity, and that the line between swallowing accelerometry and swallowing sounds is rather blurred. Several well-cited experiments, in fact, explicitly refer to swallowing accelerometry signals as acoustic signals [16]–[19]. Despite their physical similarities, research has demonstrated that swallowing accelerometry and sounds are not interchangeable [16], [20]–[22]. For this paper, “cervical auscultation” will collectively refer to the recording of both types of signals (acoustic and vibratory) on or near the neck during swallowing, while specific signal types will be indicated for clarity.

Cervical auscultation has been a key research topic for a few reasons. First, its accuracy in detecting physiological events is still up for debate. To date, few studies have shown the ability of a human observer to reliably interpret the observed pharyngeal sounds produced during swallowing and correlate them to actual physiologic events observed with accepted imaging techniques. Second, little research has been published indicating whether non-human, objective methods of analysing these signals might increase the accuracy and objectivity of cervical auscultation. In the clinical setting, the Speech-Language Pathologist evaluating a patient’s swallowing function may use a stethoscope to listen for “notable clicks and pops” while a patient swallows and base some of their impressions on that information [13]. By observing these sounds and linking them to their causative physiological events, we can gain a better understanding of whether auscultation offers increased screening or diagnostic value for swallowing disorders. Furthermore, success of this technology would also open the door to increasing the objectivity of interpretations of acoustic and vibratory data emanating from the aerodigestive tract during swallowing. This could reduce the subjectivity that is arguably inherent in all clinical, human-interpreted diagnostic procedures. Third, the process of screening for swallowing-related aspiration, or foreign matter in the airway, has become ubiquitous in hospitals and other health care facilities. Because of the negative impact of aspiration in stroke, neurodegenerative diseases, and many other conditions, these screening procedures have been universally recognized as essential [23]–[26]. Significant reductions of health care associated pneumonia rates have been demonstrated in facilities instituting formal screening protocols [25].
Additionally, using more objective screening tactics provides the potential to greatly reduce public health cost and human morbidity and mortality associated with swallowing disorders. Since cervical auscultation is extremely accessible, inexpensive, and easy to implement in the screening process, it has the potential to add needed objective data that could increase screening accuracy and early detection of risk. This outcome could provide significant savings to the health care industry if widely adopted by increasing the precision of dysphagia screening and by identifying at-risk patients before complications arise.

This paper aims to provide an overview of signal analysis techniques used in cervical auscultation research. It begins by explaining the methods of transduction, what devices are used and where they are located during recording, before detailing what techniques are used to condition and filter those signals. It then continues on to explain how researchers have attempted to characterize cervical auscultation signals and how they have attempted to use that information to differentiate abnormal from healthy swallows. As the field is still in its early stages this paper will hopefully serve as a reference guide for what has been accomplished while demonstrating the shortcomings of previous work. From that foundation, this paper should be able to provide guidance on future areas of research in the field.

IV. Transducer Selection

As expected, the stethoscope was one of the earliest used transducers in this field and is still regularly utilized by researchers and clinicians [13], [14], [27], [28]. In general, stethoscopes have been used in studies that have compared the accuracy of subjective judgements made during clinical, non-instrumented evaluations of swallowing function to the actual physiological and kinematic events recorded using imaging technology. These studies have sought to determine whether auscultation sounds are correlated to the physiology of the patient’s swallowing events. The studies done by Borr, et al., Zenner, et al., and Marrara, et al. are prime examples. In these studies, clinical experts were asked to determine if several features of patients’ swallows were normal or abnormal based on a non-concurrent comparison of videofluoroscopic imaging data to observations made at the bedside using a stethoscope [14], [27], [29]. The feature assessments of the two methodologies were then compared in order to relate the findings of the stethoscope evaluation to an accepted standard [14], [27], [29]. In general, these studies found a high rate of agreement between the bedside evaluation and videofluoroscopic screening [14], [27], [29]. However, these studies suffered from design flaws, including the comparison of auscultation and imaging data that were not recorded concurrently during swallowing and the use of judges who were not blinded to the results of the auscultation evaluation when interpreting the imaging data. While the sensitivity and specificity of the stethoscope evaluation in these studies were not high enough to support a stand-alone screening procedure, and despite the design flaws, all of the studies agreed that it is a beneficial supplement to the videofluoroscopic evaluation [14], [27], [29].

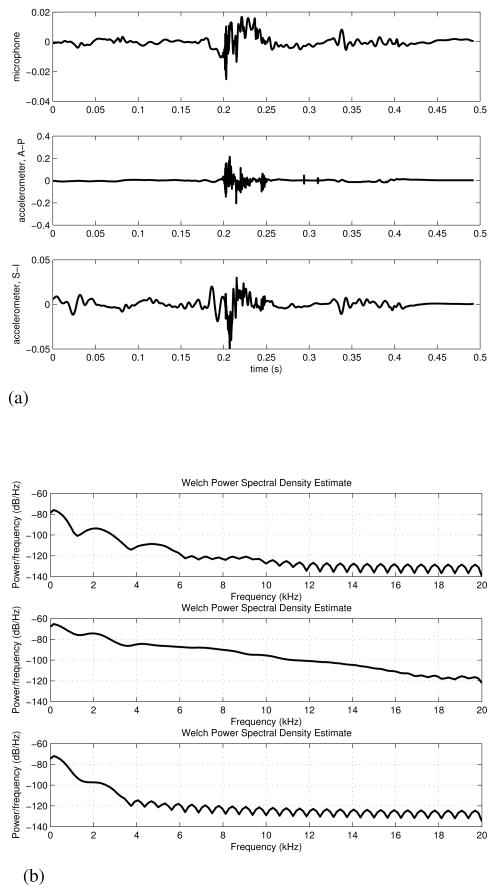

Utilizing additional hardware, such as microphones and accelerometers, has become more common in swallowing research in recent years. These devices are not particularly common in the clinical setting, but they offer clear advantages in the analysis of cervical auscultation compared to clinical data observed with stethoscopes. In particular, being able to digitize and record the signal opens many possibilities for processing and analysis of signal characteristics that are not available with a subjective, stethoscope-based analysis. Figure 1 provides a visual example of the signals obtained in this manner from a single participant and demonstrates how the signal can noticeably vary between transducers. However, little has been done to elucidate differences between acoustic and vibratory signals in defining swallowing related events. The signal/transducer combination used in a given project is often chosen without systematic justification. Several studies have not systematically evaluated the variations of their instrumentation and falsely assume that swallowing vibrations and sounds are equivalent [16], [17], [19], [30], [31]. Four papers have been published in the last two decades that have investigated the differences between accelerometers and microphones in swallowing applications. The earliest, an often cited work by Takahashi, et al., found that, of the models tested, their accelerometer had a flatter frequency response than their chosen microphone and so was superior in a signal analysis application [16]. Cichero and Murdoch later repeated this first experiment, but instead investigated the signal to noise ratio of the devices [20]. Their results were the exact opposite of Takahashi, et al.’s original work and concluded that a microphone produced a superior signal [20]. Only very recently were the final two studies published that attempted to address this contradiction. Rather than trying to judge the superior device, Dudik, et al. and Reynolds, et al. investigated a multitude of signal features and simply described how the devices differed when simultaneously recording swallowing events [21], [22]. The question of which transducer is superior was left unanswered, as it seems to depend entirely on what signal processing and analysis techniques are used by the researcher as well as what signals are being analysed.

Fig. 1.

A single swallow simultaneously recorded with both a microphone and dual axis accelerometer [21]. One can see obvious differences between sound and vibratory signals as well as different vibration directions.

(a) Time domain recording. Top figure: Microphone, Middle figure: Anterior-Posterior direction of the accelerometer, Bottom figure: Superior-Inferior direction of the accelerometer. This figure has been previously published by BioMed Central in [21].

(b) Corresponding power spectral density estimates of (1a)

V. Transducer Location

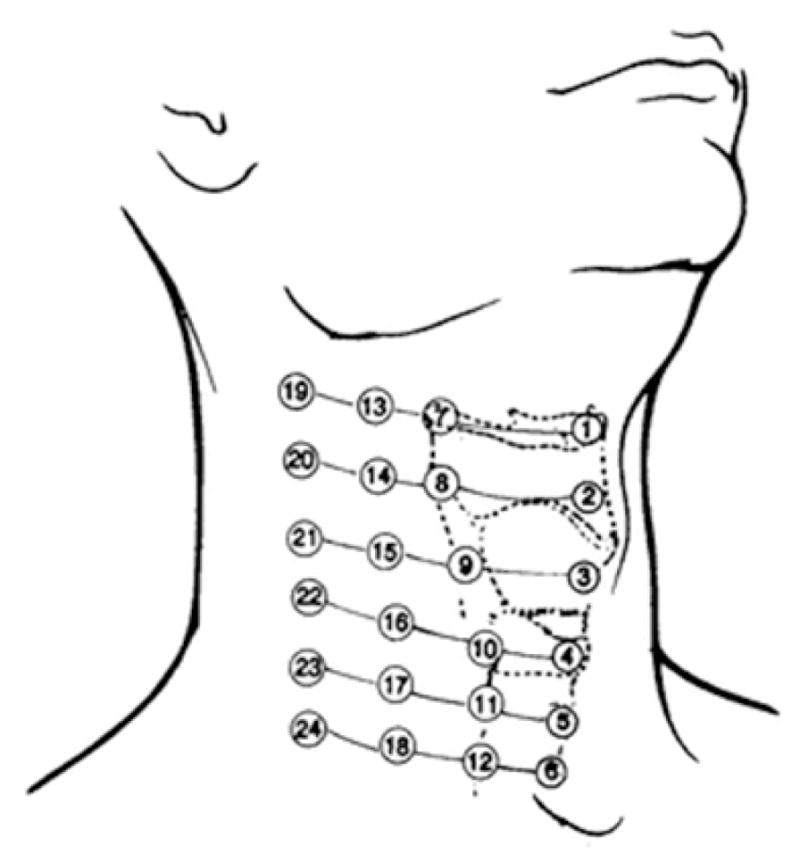

There has also been some debate as to the best location to place the microphone or accelerometer when recording swallowing information. Only a few studies have focused their recordings on transducer placements superior to the thyroid cartilage. Experiments done by Selley, et al. as well as Klahn, et al., Perlman, et al., Pinnington, et al., Roubeau, et al., and Smith, et al. made use of the “Exeter Dysphagia Assessment Technique”, which records data from the vicinity of the jawline and hyoid bone [32]–[37], whereas Passler, et al., Shirazi, et al., Sazonov, et al., and Firmin, et al. gathered data from an even higher location with several bone-conduction microphones mounted in the ear canal [38]–[41]. Studies that record data from a location inferior to the thyroid cartilage are far more plentiful. Some experiments have placed their transducer at the suprasternal notch [31], [42]–[44], but the majority of researchers record from the skin overlying the cricoid cartilage or immediate area [17], [20], [21], [27], [40], [41], [45]–[52]. The cricoid cartilage is an intuitive and logical recording location given that the anatomical structures present at this level are active during swallowing. Takahashi, et al.’s work, which systematically calculated the maximum signal to noise ratio of a swallowing accelerometry signal at various locations on a patient’s neck, once again was one of the first to provide an authoritative answer [16]. Their results, which have been cited quite often since their publication and are summarized in Figure 2, suggested that the signal with the greatest peak signal-to-noise ratio could be recorded by a transducer placed either directly on, immediately inferior to, or immediately lateral to the cricoid cartilage [16].

Fig. 2.

Recording locations originally tested in [16]. Location 3 is directly over the thyroid cartilage, location 4 is directly over the cricoid cartilage, and location 6 is near the suprasternal notch. Locations 4, 5, 10, and 11 were declared to be ideal. The figure has been reproduced from the original work [16] with kind permission of Springer Science+Business Media.

VI. Signal Conditioning

Once the signals are recorded, it then becomes a question of what to do with the data. Table I summarizes the general categories of signal conditioning that have been applied to cervical auscultation signals as well as which studies use each technique. In general, doing more than the most basic signal processing has been rare in the field. Many studies, in fact, utilize the raw transducer signal to draw their conclusions and many used the human ear in the analysis without any digital or mathematical analysis of the signal waveform’s characteristics [14]–[17], [19], [20], [22], [27], [32]–[37], [40], [48], [50], [52], [63], [76]–[79], [91]–[112]. Those studies that have conditioned the signal between acquisition and analysis generally only applied a bandpass filter in order to eliminate sources of noise at either end of the frequency spectrum [13], [28], [38], [39], [43]–[47], [49], [51], [53]–[62], [65], [68]–[73], [84]–[89]. Once again Takahashi, et al.’s work [16], which was later supported by Youmans, et al. [17], is cited often because their study characterized the frequency range of swallowing accelerometry signals. They found that the vast majority of the signal’s energy is found at or below 3.5 kHz [16], [17] and so many later studies place the upper cutoff of their filter at or near that point. Unfortunately, these studies have been unable to determine the lower bound on useful frequency components, resulting in much more variability in the placement of the lower cutoff. While some place it as low as 0.1 Hz in order to maintain a “pure” signal [21], [49], [51], [65], [66], [83], [86], [90], others place it as high as 30 Hz or more in order to eliminate motion artifacts and other low frequency noise [39], [42], [44], [60]–[62], [69].
Since similar bandlimits have yet to be identified for swallowing sounds, studies which use a microphone simply limit the recorded signal to either the human audible range [21], [32], [33], [37], [40], [46], [48], [67], [76]–[78], [86], [89], [95], [97]–[100], [102], [103], [110], [112]–[116] or the range of common stethoscopes used in bedside assessments [13], [22], [28], [39], [56], [69], [73], [88], [91], [92]. This bare minimum of signal processing has traditionally been chosen for one of two reasons. First, studies that make extensive use of subjective human judgements, whether to determine the start and end times of individual swallows or to match physiological events with the time domain signal, do not want to filter out important frequency components and alter the signal. Instead, they attempt to provide a signal that is identical to what these experts would observe when performing a bedside evaluation in a clinical environment [19], [22], [28], [46], [48], [84], [91], [92], [106], [110]. Second, many of these studies look at only the most basic of signal features, such as the sound composites representing the number of swallows over time, the timing of swallowing phases, or simply the onset/offset of a swallowing event [14]–[17], [20], [27], [32]–[34], [36]–[40], [50], [52], [55]–[57], [68]–[70], [93], [95], [100]–[105], [107], [108]. These features can be suitably estimated from noisy data, so applying complex filtering techniques is deemed unnecessary.
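As an illustration of the basic bandpass conditioning described above, the following minimal Python sketch cascades a first-order high-pass and a first-order low-pass stage. The 30 Hz and 3.5 kHz cutoffs are taken from the ranges quoted in the text. The 10 kHz sampling rate, the first-order RC design, and all function names are assumptions made for this example and are not the hardware or filter order of any cited study.

```python
import math

def high_pass(x, cutoff_hz, fs_hz):
    """First-order RC high-pass filter (discrete-time approximation)."""
    if not x:
        return []
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / fs_hz
    alpha = rc / (rc + dt)
    y = [x[0]]
    for n in range(1, len(x)):
        y.append(alpha * (y[-1] + x[n] - x[n - 1]))
    return y

def low_pass(x, cutoff_hz, fs_hz):
    """First-order RC low-pass filter (discrete-time approximation)."""
    if not x:
        return []
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / fs_hz
    alpha = dt / (rc + dt)
    y = [alpha * x[0]]
    for n in range(1, len(x)):
        y.append(y[-1] + alpha * (x[n] - y[-1]))
    return y

def band_pass(x, low_hz, high_hz, fs_hz):
    """Cascade the two stages: remove drift first, then high-frequency noise."""
    return low_pass(high_pass(x, low_hz, fs_hz), high_hz, fs_hz)

def rms(x):
    """Root-mean-square magnitude of a sequence."""
    return math.sqrt(sum(v * v for v in x) / len(x))

FS = 10_000  # assumed sampling rate in Hz (must exceed twice the upper cutoff)

def tone(freq_hz, seconds):
    """Unit-amplitude test sinusoid sampled at FS."""
    return [math.sin(2.0 * math.pi * freq_hz * n / FS)
            for n in range(int(seconds * FS))]

in_band = tone(100.0, 1.0)   # inside the 30 Hz to 3.5 kHz passband
drift = tone(1.0, 2.0)       # stands in for a slow motion artifact

gain_in_band = rms(band_pass(in_band, 30.0, 3500.0, FS)) / rms(in_band)
gain_drift = rms(band_pass(drift, 30.0, 3500.0, FS)) / rms(drift)
print(f"gain at 100 Hz: {gain_in_band:.2f}, gain at 1 Hz: {gain_drift:.3f}")
```

In this sketch the in-band tone passes almost unchanged while the 1 Hz component is strongly attenuated. A practical system would normally use a higher-order filter to obtain a steeper roll-off.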

TABLE I.

Summary of Filtering and Pre-Processing Techniques

| Years | Filtering: Wavelet Denoising | Filtering: Analog Bandpass | Filtering: Auto-Regressive | Filtering: Specific Source Elimination | Segmentation: Thresholding | Segmentation: Neural Networks | Segmentation: Windowed Variances |
|---|---|---|---|---|---|---|---|
| 1990–1995 | | [13], [45], [53], [54] | | | | | |
| 1996–2000 | | [55]–[59] | | | | | |
| 2001–2005 | | [43], [60]–[62] | | | | [60] | [43], [61], [63], [64] |
| 2006–2010 | [30], [47], [65]–[67] | [28], [46], [47], [49], [65], [68]–[73] | [65], [66] | [74], [75] | [65], [71], [76]–[79] | [71], [76] | [80]–[83] |
| 2011–2013 | [21], [51], [84]–[87] | [38], [39], [44], [51], [84]–[86], [88], [89] | [21], [85]–[87] | [21], [51], [85]–[87], [90] | [88] | | [21], [85], [86] |

It is important to note that not all filtering of the swallowing signal is intentional. Ideally, the transducer used would have a flat frequency response and would not change the amplitude of a recorded signal based on the signal’s frequency. This is not always the case, however, and each specific transducer model has a unique frequency response curve. Depending on the researcher’s available funds, personal preference, or a legitimate design choice, they may utilize a model that amplifies some frequency components more than others. The way a transducer is used can also alter the signal. For example, if a directional (pressure-gradient) microphone is placed too close to the source of the sound, the “proximity effect” causes the lower frequency components of the signal to be amplified. It is possible to use filters to compensate for these imperfect frequency responses, but not all researchers do, and so care must be taken when analysing the presented results.

Although only a few research groups have applied more advanced signal processing techniques they have approached the problem from multiple directions. First, several researchers have applied wavelet denoising techniques to swallowing signals with some success [21], [30], [47], [51], [65]–[67], [84]–[87]. Wavelet denoising involves performing the wavelet decomposition of a time domain signal and then eliminating any detail components with a magnitude below a specific threshold; the assumption being that these components consist predominantly of noise data [117]. The denoised time domain signal can then be reconstructed from the remaining wavelet components [117]. Unlike common bandpass filtering techniques, which reduce noise only in specific frequency ranges, wavelet denoising is able to reduce the impact of both white and colored noise on a given signal [117]. In an application with a less than ideal signal-to-noise ratio and a wide bandwidth this method of filtering has clear advantages.
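The three steps just described (decompose, discard small detail coefficients, reconstruct) can be sketched in a few lines of Python. This is a minimal illustration only: the Haar wavelet, the hard threshold value, the number of decomposition levels, and the synthetic test signal are all assumptions chosen for simplicity, and the cited studies may use other wavelet families and threshold rules.

```python
import math
import random

def haar_decompose(signal, levels):
    """Multi-level Haar DWT. Returns (approximation, [detail_level_1, ...])."""
    if len(signal) % (2 ** levels) != 0:
        raise ValueError("signal length must be divisible by 2**levels")
    s = math.sqrt(2.0)
    approx = list(signal)
    details = []
    for _ in range(levels):
        pairs = range(0, len(approx), 2)
        detail = [(approx[i] - approx[i + 1]) / s for i in pairs]
        approx = [(approx[i] + approx[i + 1]) / s for i in pairs]
        details.append(detail)
    return approx, details

def haar_reconstruct(approx, details):
    """Invert haar_decompose, starting from the coarsest level."""
    s = math.sqrt(2.0)
    current = list(approx)
    for detail in reversed(details):
        finer = []
        for a, d in zip(current, detail):
            finer.append((a + d) / s)
            finer.append((a - d) / s)
        current = finer
    return current

def wavelet_denoise(signal, levels, threshold):
    """Zero every detail coefficient whose magnitude is below the threshold."""
    approx, details = haar_decompose(signal, levels)
    kept = [[d if abs(d) >= threshold else 0.0 for d in level]
            for level in details]
    return haar_reconstruct(approx, kept)

def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

# Synthetic example: a blocky "clean" signal plus white Gaussian noise.
random.seed(0)
clean = [1.0 if (n // 64) % 2 else 0.0 for n in range(256)]
noisy = [c + random.gauss(0.0, 0.1) for c in clean]
denoised = wavelet_denoise(noisy, levels=4, threshold=0.3)

print("MSE before:", mse(noisy, clean))
print("MSE after: ", mse(denoised, clean))
```

The threshold here is set by hand to roughly three times the assumed noise standard deviation. In practice it is usually estimated from the data.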

In addition to general denoising, there have also been attempts to remove specific unwanted signals found in cervical auscultation data. Head motion during swallowing is one such example, as it can cause unwanted biasing of an accelerometer’s output. Sejdić, et al. have demonstrated a spline-based method of eliminating this unwanted signal. A signal can be written in terms of splines as

| (1) |

where $c(k)$ is an $\ell_2$ sequence of real numbers and $b^{p}(k)$ is the $p$th order indirect spline filter, also known as a B-spline. This filter is defined as

| (2) |

where u is a step function and m is a time scaling factor. It was found that, in order to minimize the mean square error of the noise approximation, c(k) must be equal to (3) [90].

| (3) |

In less mathematical terms, this process fits a low frequency (< 2 Hz) trend to the time domain signal that results from head motion during recording [21], [51], [74], [85]–[87], [90]. It then subtracts that low frequency trend from the time domain recording in order to eliminate the effects of head motion without affecting the higher frequency signal [21], [51], [74], [85]–[87], [90]. The same group also investigated ways to eliminate vocalizations from swallowing accelerometry signals [51], [75], [85]. By employing the Robust Algorithm for Pitch Tracking (RAPT), which calculates the normalized correlation coefficient function of a signal and marks the local maxima as vocalizations [118], they were able to remove this source of noise from their analysis and greatly improved their ability to detect the onset and offset of individual swallows [75]. Finally, these researchers have also experimented with techniques to remove noise created by the recording device itself. By recording the transducer’s output in the absence of any input and fitting an autoregressive model to the data, they were able to generate an infinite impulse response filter to remove that device noise and improve their signal quality [21], [65], [66], [85]–[87]. As an example, Figure 3 compares a raw transducer signal with one that has undergone all of the conditioning techniques included in [21], [86]. The improvement in the signal-to-noise ratio is visually apparent and should demonstrate how later analysis techniques can benefit from various forms of signal conditioning.
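The idea of estimating a slow trend and subtracting it from the recording can be illustrated with a much simpler stand-in than the spline fit described above. The sketch below uses a centred moving average as the trend estimator. This is explicitly not the spline method of the cited work. The 1 kHz sampling rate, the 0.5 Hz drift, the 50 Hz component standing in for swallowing vibration, the window length, and the function names are all assumptions made for the example.

```python
import math

def moving_average_trend(signal, half_width):
    """Estimate a slow trend with a centred moving average.

    Windows are truncated at the ends of the record, so the estimate is
    less reliable within half_width samples of either edge.
    """
    n = len(signal)
    trend = []
    for i in range(n):
        lo = max(0, i - half_width)
        hi = min(n, i + half_width + 1)
        window = signal[lo:hi]
        trend.append(sum(window) / len(window))
    return trend

def remove_trend(signal, half_width):
    """Subtract the estimated low-frequency trend from the signal."""
    trend = moving_average_trend(signal, half_width)
    return [s - t for s, t in zip(signal, trend)]

FS = 1000          # assumed sampling rate in Hz
N = 2 * FS         # two seconds of data
HALF_WIDTH = 50    # 101-sample window, about 0.1 s

# Slow drift (0.5 Hz, below the 2 Hz bound mentioned in the text)
drift = [math.sin(2.0 * math.pi * 0.5 * n / FS) for n in range(N)]
# Faster component standing in for swallowing vibrations
fast = [0.2 * math.sin(2.0 * math.pi * 50.0 * n / FS) for n in range(N)]
raw = [d + f for d, f in zip(drift, fast)]

detrended = remove_trend(raw, HALF_WIDTH)

interior = slice(HALF_WIDTH, N - HALF_WIDTH)
error = max(abs(d - f) for d, f in zip(detrended[interior], fast[interior]))
print("largest interior deviation from the fast component:", error)
```

Away from the edges of the record, the detrended signal closely follows the fast component while the slow drift is largely removed. A spline fit achieves the same goal with better control over the smoothness of the estimated trend.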

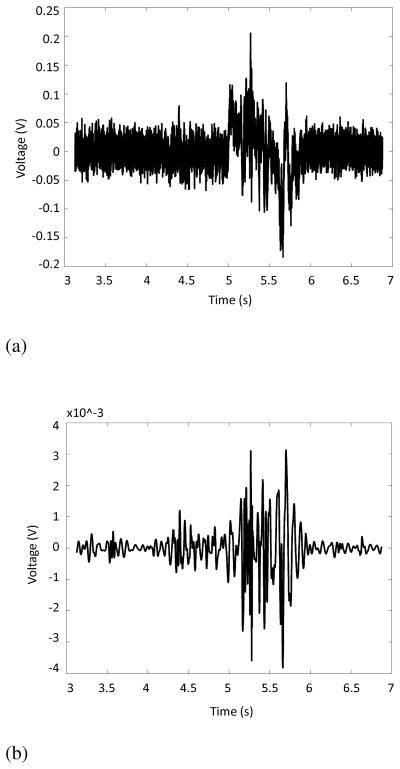

Fig. 3.

Effects of applying signal processing techniques to swallowing accelerometry. Note the increased signal to noise ratio and elimination of low frequency artifacts.

(a) Raw output from the accelerometer recording system detailed in [21]. One saliva swallow is presented.

(b) Accelerometer recording in 3a after undergoing conditioning procedures detailed in [21].

Finally, there have been attempts to find a method of automatically and accurately determining the endpoints of a swallowing event within a generic signal. The simplest method, thresholding the time domain signal based on its magnitude or other simple statistic [65], [71], [76]–[79], [88], is of course the most crude but has clear advantages if one is willing to accept a reduction in precision. On the other end of the spectrum, several researchers, including Lee, et al., Das, et al., and Makeyev, et al., formed neural networks and trained them to segment swallowing signals from background noise and artifacts [60], [71], [76]. The time domain signal is windowed and the network classifies each segment as belonging to a swallow or some other designation based on the values of the chosen parameters of that window [60], [71], [76]. The parameters weighed by the networks vary greatly and have included time domain features such as root-mean-squared magnitude and number of zero crossings, frequency domain features such as average power, or time-frequency features such as the energy in specific wavelet frequency bands [60], [71], [76]. The remaining methods researched take into account the non-stationarity of swallowing signals and observe the changes in the signal’s variance over time. Lazareck, et al. and Ramanna, et al. divided the swallowing signal into a number of equal length sections and then calculated, as shown in equation (4), the waveform fractal dimension of each [43], [61], [63].

| (4) |

Here, L is the length of a given window, a is the step size, and d is the diameter of the waveform [43], [61], [63]. Swallowing was assumed to occur during the periods of high signal variance, and therefore a large waveform fractal dimension value, so a threshold was set to determine the onset and offset of each swallow [43], [61], [63]. Moussavi, et al. and Aboofazeli, et al. also used this approach on multiple occasions. However, instead of thresholding the waveform fractal dimension, they used this feature to create a hidden Markov model of swallowing, and the model’s transitions between states were found to correspond to the transitions between the oral, pharyngeal, and esophageal stages of swallowing [64], [80]–[82]. Meanwhile, Sejdić, et al. used a different method of determining a signal’s variance over time. They utilized fuzzy means clustering in combination with the time-dependent variance of the signal in order to determine periods when a swallow occurred [21], [83], [85], [86]. Described in (5)–(7), their algorithm separates the signal into “swallowing” and “non-swallowing” clusters, indicated by the memberships $u_{jk}$, based on the prototype $v_j$ and the inner product of the prototype with the signal variance, $\sigma$ [83]. After initial guesses are provided, the values of $v_j$ and $u_{jk}$ are repeatedly updated until the change in the location of the cluster centres is sufficiently small [83]. In clearer terminology, their algorithm divides the signal into many short periods and calculates the variance of each segment. Based on that value, the algorithm then groups together the segments with similarly large variances and labels them as belonging to swallowing events. The inverse occurs with segments of low variance.
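The windowed fractal-dimension thresholding described above can be sketched as follows. The sketch assumes the commonly used Katz form, WFD = log(L/a) / log(d/a), with L taken as the total curve length within a window, a as the mean step between successive samples, and d as the largest distance from the first sample to any other sample. Whether this matches equation (4) term for term is an assumption, as are the unit sample spacing, the window length, the threshold, and the function names.

```python
import math

def waveform_fractal_dimension(window):
    """Katz-style waveform fractal dimension of one window (assumed form).

    Samples are treated as planar points (index, amplitude) with unit
    spacing. Very short or degenerate windows can give unstable values.
    """
    if len(window) < 3:
        raise ValueError("window must contain at least 3 samples")
    points = list(enumerate(window))
    steps = [math.dist(points[i], points[i + 1])
             for i in range(len(points) - 1)]
    total_length = sum(steps)                    # L
    mean_step = total_length / len(steps)        # a
    diameter = max(math.dist(points[0], p) for p in points[1:])  # d
    denominator = math.log(diameter / mean_step)
    if denominator == 0.0:
        return math.inf
    return math.log(total_length / mean_step) / denominator

def segment_by_threshold(signal, window_len, threshold):
    """Return (onset, offset) sample indices of above-threshold runs."""
    flags = []
    for start in range(0, len(signal) - window_len + 1, window_len):
        wfd = waveform_fractal_dimension(signal[start:start + window_len])
        flags.append(wfd > threshold)
    events, onset = [], None
    for k, active in enumerate(flags):
        if active and onset is None:
            onset = k * window_len
        elif not active and onset is not None:
            events.append((onset, k * window_len))
            onset = None
    if onset is not None:
        events.append((onset, len(flags) * window_len))
    return events

# Synthetic record: silence with one oscillatory burst in samples 400-599.
signal = [0.0] * 1000
for n in range(400, 600):
    signal[n] = 5.0 * math.sin(2.0 * math.pi * n / 10.0)

events = segment_by_threshold(signal, window_len=100, threshold=1.1)
print(events)
```

Because the points are formed from sample index and amplitude, the result depends on how the amplitude axis is scaled relative to the time axis. Any practical use would need to fix that scaling and tune the threshold on real recordings.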

| (5) |

| (6) |

| (7) |
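The plain-language description above (compute the variance of short segments, then group segments into high-variance and low-variance clusters) can be sketched with a textbook two-cluster fuzzy c-means applied to windowed variance. This is a generic formulation and not necessarily the exact algorithm of (5)–(7). The fuzzifier value of 2, the initialization at the smallest and largest variance, the window length, and the synthetic signal are assumptions made for the example.

```python
import math

def windowed_variance(signal, window_len):
    """Variance of consecutive, non-overlapping windows."""
    out = []
    for start in range(0, len(signal) - window_len + 1, window_len):
        w = signal[start:start + window_len]
        mean = sum(w) / len(w)
        out.append(sum((v - mean) ** 2 for v in w) / len(w))
    return out

def fuzzy_two_means(values, fuzzifier=2.0, tol=1e-9, max_iter=200):
    """Two-cluster fuzzy c-means on scalar data.

    Returns (centers, memberships), where memberships[k][j] is the degree
    to which values[k] belongs to cluster j (0 = low, 1 = high).
    """
    centers = [min(values), max(values)]
    if centers[0] == centers[1]:
        raise ValueError("need at least two distinct values")
    exponent = 2.0 / (fuzzifier - 1.0)
    memberships = []
    for _ in range(max_iter):
        memberships = []
        for x in values:
            dists = [abs(x - c) for c in centers]
            if 0.0 in dists:
                # A point sitting exactly on a centre belongs fully to it.
                row = [1.0 if d == 0.0 else 0.0 for d in dists]
            else:
                row = [1.0 / sum((dists[j] / dists[i]) ** exponent
                                 for i in range(2))
                       for j in range(2)]
            memberships.append(row)
        new_centers = []
        for j in range(2):
            weights = [row[j] ** fuzzifier for row in memberships]
            new_centers.append(
                sum(w * x for w, x in zip(weights, values)) / sum(weights))
        shift = max(abs(a - b) for a, b in zip(centers, new_centers))
        centers = new_centers
        if shift < tol:
            break
    return centers, memberships

# Synthetic record: faint ripple everywhere, strong burst in samples 400-599.
signal = [0.01 * math.sin(2.0 * math.pi * n / 10.0) for n in range(1000)]
for n in range(400, 600):
    signal[n] = math.sin(2.0 * math.pi * n / 10.0)

variances = windowed_variance(signal, window_len=100)
centers, memberships = fuzzy_two_means(variances)
is_swallow = [row[1] > 0.5 for row in memberships]
print(is_swallow)
```

Each window is labelled as part of a candidate swallow when its membership in the high-variance cluster exceeds 0.5. Onset and offset times then follow from the first and last window of each labelled run.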

To summarize, researchers that utilize cervical auscultation can apply conditioning techniques to both filter and segment the recorded data. While simple bandpass filtering is the most common technique, there have still been many examples of wavelet denoising applied to these signals. There have also been a few more specific filtering strategies including using autoregressive modelling to remove device noise or spline modelling to remove low frequency artefacts. Likewise, simple thresholding of the time-domain signal is the most commonly applied segmentation technique. Those that use more computationally intensive methods characterize the signal’s time-dependent variance before applying thresholding or fuzzy means clustering strategies to the data. The specific instances of these techniques can be found in Table I.

VII. Signal Analysis

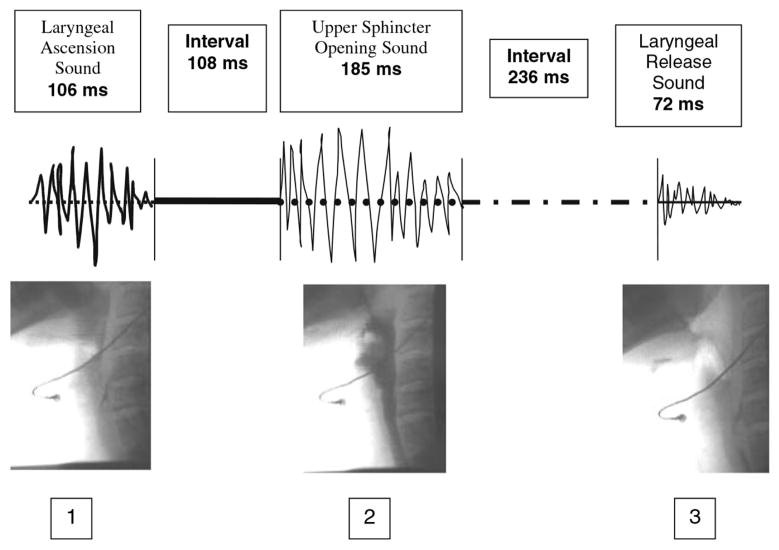

Similar to the signal processing divisions, there are a few common approaches to objectively analyze cervical auscultation signals. Table II lists the general feature categories explored in past research as well as which studies analyzed each. The first analysis method is the simplest and extracts the most basic features and conclusions from the time domain recordings. For example, researchers have matched certain visual landmarks of a raw cervical auscultation signal with some specific physiological events [19], [37], [48], [69], [103], determined the onset and duration of a swallow [34], [68], [69], [94], [95], [97], [101], [110], [113], [114], or simply counted the number of swallows that occurred over a certain period [40], [95], [102], [104], [107], [111]. The second analysis method also uses simple features of the signal, but relies on the subjective opinion of a trained expert listening to the acoustic signal rather than the researcher’s visual analysis of the signal waveform. Again, features such as the duration of the swallow [27], [47] or the relation between physiological events and key landmarks of the swallowing signal [13], [14], [28], [58], [106] can be analysed, but with the authority of an expert clinician and a corresponding videofluoroscopy feed supporting the conclusions. It is important to note, however, that these landmarks are not distinct waveforms directly linked to the underlying physiology, but rather are weak correlations such as the duration of certain acoustic “bursts” in the swallowing signal [27] or a correlation between swallowing vibration magnitude and laryngeal elevation [58]. Figure 4 demonstrates this interaction between videofluoroscopic recordings and physiological changes in the patient. This technique has also been used to investigate the sensitivity of a bedside dysphagia screen when compared to standard techniques such as videofluoroscopy [91], [92].

TABLE II.

Summary of Feature Extraction Techniques

| | Simple Time Domain | Statistical Time Domain | Information-Theoretic | Frequency Domain | Time-Frequency Domain | Phase Space |
|---|---|---|---|---|---|---|
| 1990–1995 | [14], [16], [45], [53], [109], [111] | | | [13], [35], [53], [54], [106] | | |
| 1996–2000 | | | [59] | | | |
| 2001–2005 | [17], [18], [43], [60], [61], [63] | [15], [43], [61], [63], [93], [119], [120] | [18], [63] | [17], [18], [43], [60], [61], [63] | | [62], [121] |
| 2006–2010 | [31], [46], [47], [49], [65], [67], [70], [122] | [49], [65], [122], [123] | [31], [49], [65], [122] | [31], [46], [49], [79], [98], [101], [114], [122] | [30], [49], [65], [77], [79], [98], [115], [122] | [42], [73] |
| 2011–2013 | [84], [86] | [51], [84], [86] | [21], [51], [86] | [39], [44], [51], [84], [86], [94], [96], [99], [112] | [21], [39], [51], [84], [86], [87], [89], [99] | |

Fig. 4.

Diagram depicting links between cervical auscultation and the underlying physiology that is used by some clinicians in bedside analysis [48]. The figure has been reproduced from the original work [48] with kind permission of Springer Science+Business Media.

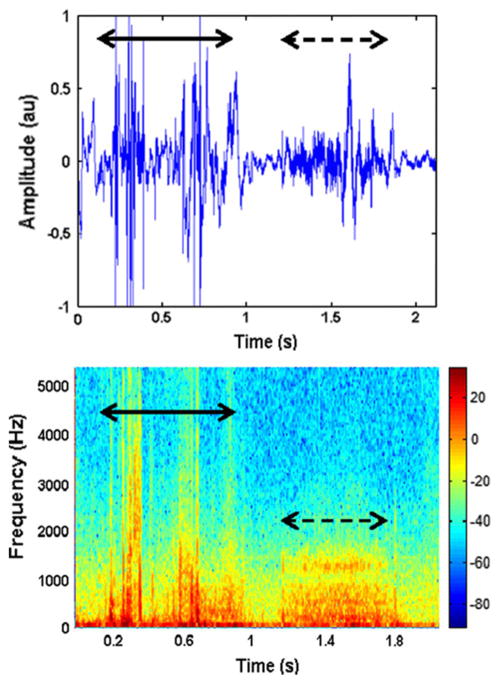

The third method of analysis involves characterizing the swallowing signal through a number of calculated statistical features. The exact features chosen are somewhat subjective and vary greatly with the researcher’s background and the question under investigation, since no one has yet determined the “key features” of a swallow. In the time domain, it is common to investigate the overall duration of the swallow [17], [31], [43], [45], [46], [49], [61], [63], [65], [86], [111], [122], the timing of the different phases of deglutition such as the duration of a pharyngeal delay [14], [63], [109], the magnitude of the recorded signal [16], [18], [43], [45], [47], [51], [53], [60], [61], [63], [65], [67], [70], [84], [109], and the statistical moments of the signal such as variance or kurtosis [15], [43], [49], [51], [61], [65], [84], [86], [93], [119], [122]. Many experiments also looked at various frequency domain features of the signal, such as the peak frequency, average power, or other moments, by either visual inspection of the spectrogram or via the fast Fourier transform [13], [17], [18], [31], [35], [39], [43], [44], [46], [49], [51], [53], [54], [60], [61], [63], [79], [84], [86], [94], [96], [101], [106], [112], [114], [122]. Figure 5 shows both a swallowing and a breath sound as time domain and spectrogram representations. It demonstrates how swallowing sounds are constructed of many different underlying features and that swallowing sounds can be differentiated from other auscultation signals.
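As a rough illustration of the statistical time domain and frequency domain features listed above, the sketch below computes the peak magnitude, variance, skewness, and kurtosis of a segment, along with its peak frequency and spectral centroid. It is not taken from any of the cited studies. The function names are hypothetical, the spectrum comes from a direct discrete Fourier transform rather than a fast Fourier transform so that only the standard library is needed, and the spectral centroid is used here as one example of a spectral moment.

```python
import cmath
import math


def time_features(x):
    """Peak magnitude and the second to fourth statistical moments of a segment."""
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x) / n
    skew = sum((v - mean) ** 3 for v in x) / n / var ** 1.5
    kurt = sum((v - mean) ** 4 for v in x) / n / var ** 2
    return {"peak_magnitude": max(abs(v) for v in x),
            "variance": var, "skewness": skew, "kurtosis": kurt}


def power_spectrum(x, fs):
    """One-sided power spectrum from a direct DFT (O(n^2), fine for short segments)."""
    n = len(x)
    freqs, power = [], []
    for k in range(n // 2 + 1):
        xk = sum(v * cmath.exp(-2j * math.pi * k * i / n) for i, v in enumerate(x))
        freqs.append(k * fs / n)
        power.append(abs(xk) ** 2)
    return freqs, power


def frequency_features(x, fs):
    """Peak frequency and spectral centroid (power-weighted mean frequency)."""
    freqs, power = power_spectrum(x, fs)
    peak = freqs[power.index(max(power))]
    centroid = sum(f * p for f, p in zip(freqs, power)) / sum(power)
    return {"peak_frequency": peak, "spectral_centroid": centroid}


# Example: 0.2 s of a 100 Hz sinusoid sampled at 1 kHz.
fs = 1000.0
segment = [math.sin(2 * math.pi * 100.0 * i / fs) for i in range(200)]
print(time_features(segment))
print(frequency_features(segment, fs))
```

For a real swallowing segment the same functions would be applied to the samples between the detected onset and offset. In practice an FFT routine would replace the direct DFT for longer recordings.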

Fig. 5.

The A) time domain and B) spectrogram representation of a swallowing sound (solid line) and subsequent breath (dashed line) [44]. Note the wide selection of frequency components that form the swallowing signal as well as how it compares to a non-swallowing signal. The figure has been reproduced from the original work [44] with kind permission of Springer Science+Business Media.

However, researchers have also investigated less commonly analyzed attributes with regards to cervical auscultation. Some have calculated the stationarity and normality of swallowing signals [51], [63], [65], [84], [119], [120], [123], while others have explored various measures of complexity. For example, the Lempel-Ziv complexity, shown in equation (8), estimates the randomness of a given signal and is a function of the signal length n and the number of unique sequences in the signal k [21], [49], [51], [65], [86], [122].

| (8) |
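A minimal sketch of a Lempel-Ziv complexity estimate is given below. It makes several assumptions: the signal is first reduced to a binary symbol sequence by thresholding at its median, the number of unique sequences k is counted with the usual exhaustive left-to-right parsing, and the count is normalized as k·log_b(n)/n with b equal to the number of quantization levels. That normalization is a commonly used form and may differ from the exact expression the cited studies used. All function names are hypothetical.

```python
import math
from statistics import median


def binarize(x):
    """Quantize a signal to a two-symbol string by thresholding at its median."""
    thr = median(x)
    return "".join("1" if v > thr else "0" for v in x)


def lz_phrase_count(s):
    """Number of distinct phrases k found by left-to-right Lempel-Ziv parsing.

    Each new phrase is the shortest substring starting at the current
    position that has not yet appeared in the preceding text.
    """
    i, k, n = 0, 0, len(s)
    while i < n:
        length = 1
        # Grow the candidate phrase while it can be copied from earlier text.
        while i + length <= n and s[i:i + length] in s[:i + length - 1]:
            length += 1
        k += 1
        i += length
    return k


def normalized_lz(s, levels=2):
    """Assumed normalization: k * log_levels(n) / n."""
    n = len(s)
    return lz_phrase_count(s) * math.log(n, levels) / n


# The textbook sequence 0001101001000101 parses as 0|001|10|100|1000|101.
print(lz_phrase_count("0001101001000101"))
print(normalized_lz("0001101001000101"))
```

A highly regular sequence yields few phrases and a low value, while an irregular sequence yields many phrases and a value closer to one.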

Alternatively, the Shannon entropy, which is used as an estimate of the uncertainty of a random variable, can also be used to characterize a signal’s complexity and has been applied to cervical auscultation research [21], [49], [51], [65], [86], [122]. Shown in equation (9), Shannon entropy is a function of the probability mass function ρ of the given signal L.

| (9) |
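The entropy calculation can be illustrated with a short sketch. Here the probability mass function ρ is estimated from a histogram of the signal amplitudes and the entropy is reported in bits. The number of bins, the base-2 logarithm, and the function name are assumptions made for the example rather than details confirmed by the cited studies.

```python
import math


def shannon_entropy(x, bins=16):
    """Shannon entropy (in bits) of the amplitude histogram of a signal."""
    lo, hi = min(x), max(x)
    if hi == lo:
        return 0.0  # a constant signal carries no uncertainty
    counts = [0] * bins
    for v in x:
        # Map the amplitude to a bin index; the maximum falls in the last bin.
        idx = min(int((v - lo) / (hi - lo) * bins), bins - 1)
        counts[idx] += 1
    n = len(x)
    return -sum(c / n * math.log2(c / n) for c in counts if c)


# Sixteen equally likely amplitude levels give log2(16) = 4 bits.
print(shannon_entropy(list(range(16)), bins=16))
```

The estimate depends on the bin count, so values are only comparable between signals processed with the same quantization.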

The waveform fractal dimension, explained in equation (4), has also been used to estimate a signal’s complexity with some success [18], [31], [59], [63]. Once again, the chosen measure of complexity varies with the researcher as no one feature has been proven to be the most correct.

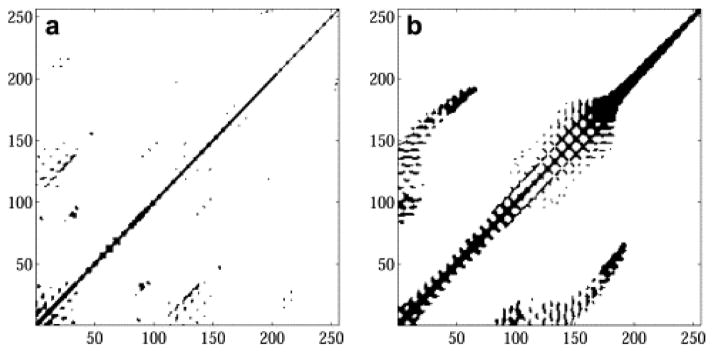

Lastly, various studies have utilized transforms other than the standard Fourier transform. For example, a few studies have investigated mel-scale Fourier transforms as opposed to more traditional frequency representations [98], [99]. Using this method, the Fourier transform of the signal is calculated through the typical means. However, the log of the resulting data is then mapped onto a mel scale, a perceptual pitch scale that is roughly linear at low frequencies and logarithmic at higher frequencies, rather than onto the raw frequency in Hertz, so as to better relate the data to human perception [98], [99]. There has also been some work done with wavelets in the time-frequency domain, where the signal is decomposed into a sum of scaled and amplified pulses [21], [30], [39], [49], [51], [65], [77], [84], [86], [87], [89], [98], [99], [115], [122]. Similar to the Fourier transform, the signals are characterized by the amplitude of these components and the relative amount of energy in specific frequency bands can be calculated [30], [49], [122]. The Hermite projection method, which decomposes the signal into Hermite polynomials, shares clear similarities to the wavelet transform method, but does not have the same popularity in this field [79]. In addition, swallowing signals have been investigated using a phase space transformation [42], [62], [73], [121]. By applying the method of delays, it is possible to map the time domain swallowing signal onto a multi-dimensional phase portrait and generate a recurrence plot as shown in Figure 6 [42], [62], [73], [121]. This plot can be used to analyze the trends and periodic nature of a given signal as it tracks every time the phase portrait overlaps itself. Much like Figure 5, it shows that swallowing signals consist of distinct features and can be distinguished from other auscultation signals even in this new domain.
As with the other analysis methods it is then possible to extract a number of features to characterize the signal, such as the percent of the recurrence plot occupied by recurrence points, the percent of points that form lines parallel to the identity line, and the Shannon entropy, given by equation (9), of the length of those parallel lines [42], [73], [121]. These studies have also calculated the correlation dimension of the system, which estimates the minimum number of variables needed to model a process, by the following equation:

| (10) |

where C(r) is the number of pairs of points in the phase space that are no more than r distance apart [42], [73], [121]. The Lyapunov exponents, which characterize the convergence or divergence of trajectories in phase space, have also been investigated [62]. These features can be found by solving for λ in (11), which gives the distance between points in phase space as a function of the Lyapunov exponent (λ), the sampling period (δ), the current point (k), and the distance between an origin point and its nearest neighbour (d0).

Fig. 6.

Typical recurrence plot for A) swallowing accelerometry signals recorded from the suprasternal notch with an identical plot of B) breath sounds for comparison. The figure has been reprinted with permission from the original Elsevier source [42].

| (11) |
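The phase space features described above can be sketched in a few lines. The example below embeds a signal with the method of delays, builds a recurrence matrix, and computes the recurrence rate (the fraction of the plot occupied by recurrence points) along with the pair count C(r). The correlation dimension is approximated here by the slope of log C(r) against log r between two radii. That two-point slope is an assumption made for illustration and is not the estimator used in [42], [73], [121]. The function names, the embedding dimension, the delay, and the radii are likewise hypothetical choices.

```python
import math


def delay_embed(x, dim, tau):
    """Method of delays: map a scalar series onto points in dim-dimensional space."""
    n = len(x) - (dim - 1) * tau
    return [tuple(x[i + j * tau] for j in range(dim)) for i in range(n)]


def recurrence_matrix(points, r):
    """R[i][j] = 1 when points i and j are no more than r apart."""
    return [[1 if math.dist(p, q) <= r else 0 for q in points] for p in points]


def recurrence_rate(rec):
    """Fraction of the recurrence plot occupied by recurrence points."""
    n = len(rec)
    return sum(map(sum, rec)) / (n * n)


def correlation_sum(points, r):
    """C(r): number of distinct pairs of points no more than r apart."""
    n = len(points)
    return sum(1 for i in range(n) for j in range(i + 1, n)
               if math.dist(points[i], points[j]) <= r)


def correlation_dimension(points, r_small, r_large):
    """Two-point slope of log C(r) versus log r (both counts must be non-zero)."""
    c1, c2 = correlation_sum(points, r_small), correlation_sum(points, r_large)
    return (math.log(c2) - math.log(c1)) / (math.log(r_large) - math.log(r_small))


# A sinusoid embedded with a quarter-period delay traces a circle in phase space.
x = [math.sin(2 * math.pi * i / 200) for i in range(250)]
points = delay_embed(x, dim=2, tau=50)
print(recurrence_rate(recurrence_matrix(points, r=0.1)))
print(correlation_dimension(points, r_small=0.1, r_large=0.4))
```

For this periodic test signal the points lie on a one-dimensional closed curve, so the slope estimate comes out close to one. Swallowing recordings would require the embedding dimension and delay to be selected from the data.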

The ultimate goal of research with cervical auscultation is to identify specific characteristics of both healthy and dysphagic swallows and to be able to differentiate between them. In order to do that, we must first determine what signal characteristics are important. Though work began with only the simplest and most obvious time domain features, researchers have since greatly expanded their attempts to find those most important characteristics. Table II demonstrates the field’s pursuit of this goal. Despite this multitude of data, the research is still in the early stages and there is not yet a consensus on what features warrant further investigation, let alone what features properly characterize a swallow.

VIII. Automated Classification of Abnormal Swallowing

There has been noticeably less work invested towards automatically differentiating normal and abnormal swallowing activity compared to the multitude of analysis and conditioning techniques developed. Table III organizes the existing research on this topic. This should not be taken as a sign of its lack of importance, however, as adding this objective assessment to current screening methods could ensure that a patient’s condition is identified before it becomes a medical issue. Several screening methods have been developed and published, producing relatively good combinations of sensitivity and negative predictive value in predicting aspiration without the use of auscultation or other instruments [23]–[26], [124]–[126]. However, adding an objective dimension to the somewhat subjective judgements produced in the traditional non-instrumented dysphagia screening still offers potential improvements.

TABLE III.

Summary of Feature Classification Methods

As demonstrated, the techniques used to process and analyze specific swallowing-related signals are not well defined. No one group has conclusively determined the acoustic and vibratory signal correlates of a ‘normal’ swallow. Furthermore, it is only in the last decade that researchers have investigated dysphagia by way of cervical auscultation in any systematic fashion. Many researchers have relied on the testimony of clinical experts and then have drawn conclusions about their chosen analysis techniques rather than objectively comparing features to a known standard. One objective method of automatically identifying data in this field involves linear classifiers. The first subcategory of linear classifiers includes those that estimate the conditional density function of the classes. In summary, a linear classifier uses a training set of data that has already been classified as containing safe or unsafe swallows by a clinical expert in order to estimate the values of various statistical features, as described in the previous section, of the given class. New data is then categorized based on the probability of each feature belonging to each potential class. The exact method of calculating these probabilities varies and researchers have applied both linear discriminant analysis [31], [51], [61], [84], [87] and Bayesian classifier techniques [85], [89], [115] to cervical auscultation data. The second type of linear classifier is the discriminative model, which includes the support vector machine [51], [98], [99], k-nearest neighbours classification [84], and fuzzy means classification [44], [96], [111]. These methods attempt to identify clusters of features and use them to separate a given data set into distinct classes. For example, fuzzy means classification attempts to minimize the following cost function

| (12) |

in order to maximize class separation. Here, Xj are the features of the given data point, k is the number of clusters, m is the fuzziness index, p(Xj) is the degree to which the data point belongs to a cluster, and Vi are the cluster centres. Data points with known labels are assigned to each class in order to minimize the number of data points that are classified incorrectly. The class boundaries are then defined and used to classify new, unlabelled data points. Other discriminant analysis techniques have different cost functions, but operate on similar concepts.
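To make the roles of these symbols concrete, the sketch below evaluates a fuzzy means cost for a small set of feature vectors and then labels a new point by its nearest cluster centre. It assumes the standard fuzzy c-means objective, J = Σ_j Σ_i p_i(X_j)^m ‖X_j − V_i‖², which is consistent with the symbols defined above but may differ in detail from (12). The function names, the example data, and the nearest-centre rule for new points are illustrative assumptions.

```python
import math


def fuzzy_cost(points, centres, memberships, m=2.0):
    """Assumed fuzzy c-means objective.

    memberships[i][j] is the degree p_i(X_j) to which point j belongs to
    cluster i; each squared distance to a centre is weighted by that
    membership raised to the fuzziness index m.
    """
    return sum(memberships[i][j] ** m * math.dist(x, v) ** 2
               for i, v in enumerate(centres)
               for j, x in enumerate(points))


def nearest_centre(point, centres):
    """Label a new, unlabelled point with the index of its closest centre."""
    return min(range(len(centres)), key=lambda i: math.dist(point, centres[i]))


# Two feature vectors, each sitting exactly on its own cluster centre.
points = [(0.0, 0.0), (2.0, 0.0)]
centres = [(0.0, 0.0), (2.0, 0.0)]
good = [[1.0, 0.0], [0.0, 1.0]]   # each point assigned to its own cluster
bad = [[0.0, 1.0], [1.0, 0.0]]    # assignments swapped
print(fuzzy_cost(points, centres, good), fuzzy_cost(points, centres, bad))
print(nearest_centre((1.8, 0.1), centres))
```

The correct assignment gives a lower cost than the swapped one, which is the property the minimization exploits when it searches for centres and memberships.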

Finally, the chief non-linear method of classification used with cervical auscultation is the artificial neural network. Similar to the linear techniques, a number of features are calculated from the data. However, rather than minimizing a cost function or estimating probabilities manually, these features are fed into a web of “neurons” which weighs the inputs and sorts the signal into a class. The relationships between the inputs and outputs of each node are determined through iterative techniques using a training set of data of known classification, while the number and arrangement of nodes are determined by the researcher. Several researchers have applied this method to cervical auscultation signals with varying levels of success [53], [60], [77], [84], [109], [121], [123].

In summary, the classification of normal and abnormal swallows with cervical auscultation is a very new area of research. The few groups that have investigated the issue to any significant extent have focused on linear classification techniques such as linear discriminant analysis or k-means clustering. However, a few researchers have applied non-linear neural networking techniques to these signals and have shown their potential for this field. Table III categorizes the various contributions made in this regard.

IX. Conclusion and Future Work

As outlined, this paper summarized the signal processing techniques used in cervical auscultation research. It provided a brief summary of how the signals in question are obtained and then described the many different ways those signals are filtered, characterized, and analysed. Though much research in the past only used the most basic methods, such as applying a bandpass filter to the data and then looking at simple time domain features, there have been a number of advancements in the field. Recently, more complex algorithms, such as wavelet denoising or cluster-based segmentation, have been used to condition these signals and improve analysis. Furthermore, researchers have investigated alternative signal features in the time domain as well as the frequency, time-frequency, and phase space domains in an attempt to better characterize normal and abnormal swallows. Lastly, several different methods of linear and non-linear classification have been applied to swallowing signals in order to differentiate these two conditions. However, a consensus on what features are most important has not been reached and progress towards developing classification methods has slowed as a result. Nonetheless, the addition of objective methods of data analysis has enhanced the diagnostic process in many other domains and it remains an attractive goal in the screening of dysphagia. Given that patients admitted to a hospital with a stroke have a three-fold increase in the likelihood of dying of pneumonia [127], efforts to more accurately and quickly identify the risk of dysphagia-related pneumonia deserve ongoing research investment.

There are a number of different avenues that this field could investigate in the future. First, and perhaps most obvious, would be to create a valid and definitive list of clinically important swallowing features and methods to differentiate between their characteristics in healthy and pathological states. While many features have been investigated, their abilities to differentiate normal and abnormal swallows have not always been made clear. Likewise, there is also much room for improvement of the classification schemes used for cervical auscultation. Rather than using exclusively two-class classifiers, it may be viable and useful to investigate methods that utilize a greater number of classes. In addition to the standard “normal” and “abnormal” classes, it may be possible to distinguish the signals caused by non-swallowing events that co-occur with swallowing in the aerodigestive tract, such as breathing, vocalizations, and other sources of noise. By identifying these artifacts and other unwanted events it may be easier to differentiate normal and abnormal swallows. It would also be prudent to investigate non-linear classifiers in greater detail should the assumption of a linear separator prove too inaccurate. Furthermore, past research has preferred to analyze the signal of an entire swallow with only some attempts to link specific physiological events with various signal characteristics. Designing filtering techniques to isolate signal components caused by the upper esophageal sphincter opening, the airway closing, or hyoid and laryngeal displacement, for example, could be of great benefit in the treatment of biomechanical swallowing impairments. Not only would it be possible to detect some abnormal deglutitive events, but it would also be possible to describe the abnormalities. Finally, many past studies have focused on recording and analyzing frequency components within the range of human hearing, often attempting to mimic the stethoscope bedside assessment.
Microphones and accelerometers, however, do not have the same frequency sensitivities as the human ear. In the future it could be beneficial to concentrate research on the low end of the frequency spectrum (1–50 Hz) to determine if the components that lie outside the human audible range provide any useful information about swallowing activity. While this is not an exhaustive list, it does demonstrate several key areas in the field that should be addressed in the future.

Acknowledgments

Research reported in this publication was supported by the Eunice Kennedy Shriver National Institute Of Child Health & Human Development of the National Institutes of Health under Award Number R01HD074819.

Biographies

Joshua M. Dudik received his bachelor’s degree in biomedical engineering from Case Western Reserve University, OH, in 2011 and his master’s degree in bioengineering from the University of Pittsburgh, PA, in 2013. He is currently working towards a PhD in electrical engineering at the University of Pittsburgh, PA. His current work is focused on cervical auscultation and the use of signal processing techniques to assess the swallowing performance of impaired individuals.

James L. Coyle received his Ph.D. in Rehabilitation Science from the University of Pittsburgh in 2008 with a focus in neuroscience. He is currently Associate Professor of Communication Sciences and Disorders at the University of Pittsburgh School of Health and Rehabilitation Sciences (SHRS) and Adjunct Associate Professor of Speech and Hearing Sciences at the Ohio State University. He is Board Certified by the American Board of Swallowing and Swallowing Disorders and maintains an active clinical practice in the Department of Otolaryngology, Head and Neck Surgery and the Speech Language Pathology Service of the University of Pittsburgh Medical Center. He is a Fellow of the American Speech Language and Hearing Association.

Ervin Sejdić (S’00–M’08) received the B.E.Sc. and Ph.D. degrees in electrical engineering from the University of Western Ontario, London, ON, Canada, in 2002 and 2008, respectively.

He was a Postdoctoral Fellow at Holland Bloorview Kids Rehabilitation Hospital/University of Toronto and a Research Fellow in Medicine at Beth Israel Deaconess Medical Center/Harvard Medical School. He is currently an Assistant Professor at the Department of Electrical and Computer Engineering (Swanson School of Engineering), the Department of Bioengineering (Swanson School of Engineering), the Department of Biomedical Informatics (School of Medicine) and the Intelligent Systems Program (Kenneth P. Dietrich School of Arts and Sciences) at the University of Pittsburgh, PA. His research interests include biomedical and theoretical signal processing, swallowing difficulties, gait and balance, assistive technologies, rehabilitation engineering, anticipatory medical devices, and advanced information systems in medicine.

Footnotes

Reference Search Strategy

The works cited in this review were found by use of aggregate research databases including PubMed (MEDLINE), SpringerLink, GoogleScholar, and IEEE Xplore. Keyword searches were conducted through these databases with search terms such as ‘cervical auscultation’, ‘swallowing sounds’, ‘digital swallowing analysis’, ‘dysphagia’, ‘dysphagia classification’, ‘accelerometry’, and ‘swallowing difficulties’ depending on the database’s content and the desired subject. Preference for citation was given to articles that utilized a data recording or analysis method on real data rather than an article by the same author which proposed a method in a theoretical context. Likewise, articles which repeated past experiments in order to confirm previous findings or provided similar information with a new participant population were not included unless the data recording or analysis techniques contained notable alterations.

The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Contributor Information

Joshua M. Dudik, Email: jmd151@pitt.edu, Department of Electrical and Computer Engineering, Swanson School of Engineering, University of Pittsburgh, Pittsburgh, PA, USA

James L. Coyle, Email: jcoyle@pitt.edu, Department of Communication Science and Disorders, School of Health and Rehabilitation Sciences, University of Pittsburgh, Pittsburgh, PA, USA

Ervin Sejdić, Email: esejdic@ieee.org, Department of Electrical and Computer Engineering, Swanson School of Engineering, University of Pittsburgh, Pittsburgh, PA, USA.

References

- 1. Spieker M. Evaluating dysphagia. American Family Physician. 2000 Jun;61(12):3639–3648.

- 2. Castrogiovanni A. Communication facts: Special population: Dysphagia. American Speech-Language-Hearing Association; Jan 5, 2008. [Feb. 20, 2013]. [Online]. Available: http://www.asha.org/research/reports/dysphagia.htm.

- 3. Smithard D, O’Neill P, Park C, Morris J, Wyatt R, England R, Martin D. Complications and outcome after acute stroke. Does dysphagia matter? Stroke. 1996 Jul;27(7):1200–1204. doi: 10.1161/01.str.27.7.1200.

- 4. Cook I, Kahrilas P. American gastroenterological association technical review on management of oropharyngeal dysphagia. American Gastroenterological Association, Tech Rep. 1999 Jan;116(2) doi: 10.1016/s0016-5085(99)70144-7.

- 5. Leslie P, Drinnan M, Zammit-Maempel I, Coyle J, Ford G, Wilson J. Cervical auscultation synchronized with images from endoscopy swallow evaluations. Dysphagia. 2007 Oct;22(4):290–298. doi: 10.1007/s00455-007-9084-5.

- 6. Bours G, Speyer R, Lemmens J, Limburg M, de Wit R. Bedside screening tests vs. videofluoroscopy or fiberoptic endoscopic evaluation of swallowing to detect dysphagia in patients with neurological disorders: Systematic review. Journal of Advanced Nursing. 2009;65(3):477–493. doi: 10.1111/j.1365-2648.2008.04915.x.

- 7. Sherman B, Nisenboum J, Jesberger B, Morrow C, Jesberger J. Assessment of dysphagia with the use of pulse oximetry. Dysphagia. 1999 Jun;14(3):152–156. doi: 10.1007/PL00009597.

- 8. Ertekin C, Aydogdu I, Yuceyar N, Tarlaci S, Kiylioglu N, Pehlican M, Celebi G. Electrodiagnostic methods for neurogenic dysphagia. Electroencephalography and Clinical Neurophysiology/Electromyography and Motor Control. 1998 Aug;109(4):331–340. doi: 10.1016/s0924-980x(98)00027-7.

- 9. Malandraki G, Sutton B, Perlman A, Karampinos D, Conway C. Neural activation of swallowing and swallowing-related tasks in healthy young adults: An attempt to separate the components of deglutition. Human Brain Mapping. 2009 Oct;30(10):3209–3226. doi: 10.1002/hbm.20743.

- 10. Bosma J. Deglutition: Pharyngeal stage. Physiological Reviews. 1957 Jul;37(3):275–300. doi: 10.1152/physrev.1957.37.3.275.

- 11. Goyal R, Mashimo H. Physiology of oral, pharyngeal, and esophageal motility. GI Motility Online. 2006 May;1(1):1–5.

- 12. Full Revision of the International Standards for Equal-Loudness Level Contours. International Standards Organization Std; Oct, 2003. p. 226.

- 13. Hamlet S, Penney D, Formolo J. Stethoscope acoustics and cervical auscultation of swallowing. Dysphagia. 1994 Jan;9(1):63–68. doi: 10.1007/BF00262761.

- 14. Zenner P, Losinski D, Mills R. Using cervical auscultation in the clinical dysphagia examination in long-term care. Dysphagia. 1995 Jan;10(1):27–31. doi: 10.1007/BF00261276.

- 15. Reynolds E, Vice F, Bosma J, Gewolb I. Cervical accelerometry in preterm infants. Developmental Medicine & Child Neurology. 2002 Sep;44(9):587–592. doi: 10.1017/s0012162201002626.

- 16. Takahashi K, Groher M, Michi K. Methodology for detecting swallowing sounds. Dysphagia. 1994 Jan;9(1):54–62. doi: 10.1007/BF00262760.

- 17. Youmans S, Stierwalt J. An acoustic profile of normal swallowing. Dysphagia. 2005 Sep;20(3):195–209. doi: 10.1007/s00455-005-0013-1.

- 18. Aboofazeli M, Moussavi Z. Automated classification of swallowing and breath sounds. The 26th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; San Francisco, CA. September 1–5 2004; pp. 3816–3819.

- 19. Hamlet S, Nelson R, Patterson R. Interpreting the sounds of swallowing: Fluid flow through the cricopharyngeus. Annals of Otology, Rhinology, and Laryngology. 1990 Sep;99(9):749–752. doi: 10.1177/000348949009900916.

- 20. Cichero J, Murdoch B. Detection of swallowing sounds: Methodology revisited. Dysphagia. 2002 Jan;17(1):40–49. doi: 10.1007/s00455-001-0100-x.

- 21. Dudik JM, Jestrović I, Luan B, Coyle JL, Sejdić E. A comparative analysis of swallowing accelerometry and sounds during saliva swallows. Biomedical Engineering Online. 2015 Jan;14(3):1–15. doi: 10.1186/1475-925X-14-3.

- 22. Reynolds E, Vice F, Gewolb I. Variability of swallow-associated sounds in adults and infants. Dysphagia. 2009 Mar;24(1):13–19. doi: 10.1007/s00455-008-9160-5.

- 23. Martino R, Silver F, Teasell R, Bayley M, Nicholson G, Streiner D, Diamant N. The toronto bedside swallowing screening test (torbsst): Development and validation of a dysphagia screening tool for patients with stroke. Stroke. 2009 Feb;40(2):555–561. doi: 10.1161/STROKEAHA.107.510370.

- 24. Logemann J, Veis S, Colangelo L. A screening procedure for oropharyngeal dysphagia. Dysphagia. 1999 Jan;14(1):44–51. doi: 10.1007/PL00009583.

- 25. Hinchey J, Shephard T, Furie K, Smith D, Wang D, Tonn S, Investigators SPIN. Formal dysphagia screening protocols prevent pneumonia. Stroke. 2005 Sep;36(9):1972–1976. doi: 10.1161/01.STR.0000177529.86868.8d.

- 26. Hey C, Lange B, Eberle S, Zaretsky Y, Sader R, Stöver T, Wagenblast J. Water swallow screening test for patients after surgery for head and neck cancer: Early identification of dysphagia, aspiration, and limitations of oral intake. Anticancer Research. 2013 Sep;33(9):4017–4027.

- 27. Borr C, Hielscher-Fastabend M, Lucking A. Reliability and validity of cervical auscultation. Dysphagia. 2007 Jul;22(3):225–234. doi: 10.1007/s00455-007-9078-3.

- 28. Leslie P, Drinnan M, Zammit-Maempel I, Coyle J, Ford G, Wilson J. Cervical auscultation synchronized with images from endoscopy swallow evaluations. Dysphagia. 2007 Oct;22(4):290–298. doi: 10.1007/s00455-007-9084-5.

- 29. Marrara J, Duca A, Dantas R, Trawitzki L, Cardozo R, Pereira J. Swallowing in children with neurologic disorders: Clinical and videofluoroscopic evaluations. Pro-Fono Revista de Atualizacao Cientifica. 2008 Dec;20(4):231–237. doi: 10.1590/s0104-56872008000400005.

- 30. Aboofazeli M, Moussavi Z. Automated extraction of swallowing sounds using a wavelet-based filter. The 28th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; New York, NY. August 30 – September 3 2006; pp. 5607–5610.

- 31.Yadollahi A, Moussavi Z. Feature selection for swallowing sounds classification. The 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Lyon, FR. August 22–26 2007; pp. 3172–3175. [DOI] [PubMed] [Google Scholar]

- 32.Selley W, Flack F, Ellis R, Brooks W. The exeter dysphagia assessment technique. Dysphagia. 1990 Dec;4(4):227–235. doi: 10.1007/BF02407270. [DOI] [PubMed] [Google Scholar]

- 33.Selley W, Ellis R, Flack F, Bayliss C, Pearce V. The synchronization of respiration and swallow sounds with videofluoroscopy during swallowing. Dysphagia. 1994 Sep;9(3):162–167. doi: 10.1007/BF00341260. [DOI] [PubMed] [Google Scholar]

- 34.Klahn M, Perlman A. Temporal and durational patterns associating respiration and swallowing. Dysphagia. 1999 Sep;14(3):131–138. doi: 10.1007/PL00009594. [DOI] [PubMed] [Google Scholar]

- 35.Smith D, Hamlet S, Jones L. Acoustic technique for determining timing of velopharyngeal closure in swallowing. Dysphagia. 1990 Jul;5(3):142–146. doi: 10.1007/BF02412637. [DOI] [PubMed] [Google Scholar]

- 36.Pinnington L, Muhiddin K, Ellis R, Playford E. Non-invasive assessment of swallowing and respiration in parkinson’s disease. Journal of Neurology. 2000 May;247(10):773–777. doi: 10.1007/s004150070091. [DOI] [PubMed] [Google Scholar]

- 37.Roubeau B, Moriniere S, Perie S, Martineau A, Falieres J, Guily JLS. Use of reaction time in the temporal analysis of normal swallowing. Dysphagia. 2008 Jun;23(2):102–109. doi: 10.1007/s00455-007-9099-y. [DOI] [PubMed] [Google Scholar]

- 38.Passler S, Fischer W. Acoustical method for objective food intake monitoring using a wearable sensor system. The 5th International Conference on Pervasive Computing Technologies for Healthcare; Dublin, IE. May 23–26 2011; pp. 266–269. [Google Scholar]

- 39.Sarraf-Shirazi S, Baril J, Moussavi Z. Characteristics of the swallowing sounds recorded in the ear, nose and on trachea. Medical and Biological Engineering and Computing. 2012 Aug;50(8):885–890. doi: 10.1007/s11517-012-0938-0. [DOI] [PubMed] [Google Scholar]

- 40.Sazonov E, Schuckers S, Lopez-Meyer P, Makeyev O, Sazonova N, Melanson E, Neuman M. Non-invasive monitoring of chewing and swallowing for objective quantification of ingestive behavior. Physiological Measurement. 2008 May;29(5):525–541. doi: 10.1088/0967-3334/29/5/001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Firmin H, Reilly S, Fourcin A. Non-invasive monitoring of reflexive swallowing. Speech Hearing and Language. 1997 January;10(1):171–184. [Google Scholar]

- 42.Aboofazeli M, Moussavi Z. Comparison of recurrence plot features of swallowing and breath sounds. Chaos, Solitons & Fractals. 2008 Jul;37(2):454–464. [Google Scholar]

- 43.Lazareck L, Moussavi Z. Swallowing sound characteristics in healthy and dysphagic individuals. The 26th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; San Francisco, CA. September 1–5 2004; pp. 3820–3823. [DOI] [PubMed] [Google Scholar]

- 44.Sarraf-Shirazi S, Buchel C, Daun R, Lenton L, Moussavi Z. Detection of swallows with silent aspiration using swallowing and breath sound analysis. Medical and Biological Engineering and Computing. 2012 Dec;50(12):1261–1268. doi: 10.1007/s11517-012-0958-9. [DOI] [PubMed] [Google Scholar]

- 45.Takahashi K, Groher M, Michi K. Symmetry and reproducibility of swallowing sounds. Dysphagia. 1994 Sep;9(3):168–173. doi: 10.1007/BF00341261. [DOI] [PubMed] [Google Scholar]

- 46.Santamato A, Panza F, Solfrizzi V, Russo A, Frisardi V, Megna M, Ranieri M, Fiore P. Acoustic analysis of swallowing sounds: A new technique for assessing dysphagia. Journal of Rehabilitation Medicine. 2009 Mar;41(8):639–645. doi: 10.2340/16501977-0384. [DOI] [PubMed] [Google Scholar]

- 47.Zoratto D, Chau T, Steele CM. Hyolaryngeal excursion as the physiological source of accelerometry signals during swallowing. Physiological Measurement. 2010 May;31(6):843–855. doi: 10.1088/0967-3334/31/6/008. [DOI] [PubMed] [Google Scholar]

- 48.Morinière S, Boiron M, Alison D, Makris P, Beutter P. Origin of the sound components during pharyngeal swallowing in normal subjects. Dysphagia. 2008 Sep;23(3):267–273. doi: 10.1007/s00455-007-9134-z. [DOI] [PubMed] [Google Scholar]

- 49.Sejdić E, Komisar V, Steele CM, Chau T. Baseline characteristics of dual-axis cervical accelerometry signals. Annals of Biomedical Engineering. 2010 Mar;38(3):1048–1059. doi: 10.1007/s10439-009-9874-z. [DOI] [PubMed] [Google Scholar]

- 50.Reddy N, Thomas R, Canilang E, Casterline J. Toward classification of dysphagic patients using biomechanical measurements. Journal of Rehabilitation Research and Development. 1994 Nov;31(4):335–344. [PubMed] [Google Scholar]

- 51.Mérey C, Kushki A, Sejdić E, Berall G, Chau T. Quantitative classification of pediatric swallowing through accelerometry. Journal of NeuroEngineering and Rehabilitation. 2012 Jun;9(34):1–8. [Google Scholar]

- 52.Reddy N, Canilang E, Grotz R, Rane M, Casterline J, Costarella B. Biomechanical quantification for assessment and diagnosis of dysphagia. Engineering in Medicine and Biology Magazine. 1988 Sep;7(3):16–20. doi: 10.1109/51.7929. [DOI] [PubMed] [Google Scholar]

- 53.Prabhu D, Reddy N, Canilang E. Neural networks for recognition of acceleration patterns during swallowing and coughing. The 16th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Baltimore, MD. November 3–6 1994; pp. 1105–1106. [Google Scholar]

- 54.Gupta V, Prabhu D, Reddy N, Canilang E. Spectral analysis of acceleration signals during swallowing and coughing. The 16th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Baltimore, MD. November 3–6 1994; pp. 1292–1293. [Google Scholar]

- 55.Pehlivan M, Yuceyar N, Ertekin C, Celebi G, Ertas M, Kalayci T, Aydogdu I. An electronic device measuring the frequency of spontaneous swallowing: Digital phagometer. Dysphagia. 1996 Nov;11(4):259–264. doi: 10.1007/BF00265212. [DOI] [PubMed] [Google Scholar]

- 56.Nakamura T, Yamamoto Y, Tsugawa H. Measurement system for swallowing based on impedance pharyngography and swallowing sound. The 17th IEEE Instrumentation and Measurement Technology Conference; Baltimore, MD. May 1–4 2000; pp. 191–194. [Google Scholar]

- 57.Preiksaitis H, Mills C. Coordination of breathing and swallowing: Effects of bolus consistency and presentation in normal adults. Journal of Applied Physiology. 1996 Oct;81(4):1707–1714. doi: 10.1152/jappl.1996.81.4.1707. [DOI] [PubMed] [Google Scholar]

- 58.Reddy N, Katakam A, Gupta V, Unnikrishnan R, Narayanan J, Canilang E. Measurements of acceleration during videofluorographic evaluation of dysphagic patients. Medical Engineering & Physics. 2000 Jul;22(6):405–412. doi: 10.1016/s1350-4533(00)00047-3. [DOI] [PubMed] [Google Scholar]

- 59.Joshi A, Reddy N. Fractal analysis of acceleration signals due to swallowing. The 21st Annual Conference of the Biomedical Engineering Society Engineering in Medicine and Biology; Atlanta, GA. October 13–16 1999; p. 912. [Google Scholar]

- 60.Das A, Reddy N, Narayanan J. Hybrid fuzzy logic committee neural networks for recognition of swallow acceleration signals. Computer Methods and Programs in Biomedicine. 2001 Feb;64(2):87–99. doi: 10.1016/s0169-2607(00)00099-7. [DOI] [PubMed] [Google Scholar]

- 61.Lazareck L, Moussavi Z. Classification of normal and dysphagic swallow by acoustical means. IEEE Transactions on Biomedical Engineering. 2004 Dec;51(12):2103–2112. doi: 10.1109/TBME.2004.836504. [DOI] [PubMed] [Google Scholar]

- 62.Aboofazeli M, Moussavi Z. Analysis of normal swallowing sounds using nonlinear dynamic metric tools. The 26th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; San Francisco, CA. September 1–5 2004; pp. 3812–3815. [DOI] [PubMed] [Google Scholar]

- 63.Lazareck L, Ramanna S. In: Classification of Swallowing Sound Signals: A Rough Set Approach, ser. Lecture Notes in Computer Science. Tsumoto S, Slowinski R, Komorowski J, Grzymala-Busse J, editors. Vol. 3066 Springer; Berlin, Heidelberg: 2004. [Google Scholar]

- 64.Moussavi Z. Assessment of swallowing sounds’ stages with hidden Markov model. The 27th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Shanghai, CN. January 17–18 2005; pp. 6989–6992. [DOI] [PubMed] [Google Scholar]

- 65.Lee J, Steele C, Chau T. Time and time-frequency characterization of dual-axis swallowing accelerometry signals. Physiological Measurement. 2008 Aug;29(9):1105–1120. doi: 10.1088/0967-3334/29/9/008. [DOI] [PubMed] [Google Scholar]

- 66.Sejdić E, Steele CM, Chau T. A procedure for denoising of dual-axis swallowing accelerometry signals. Physiological Measurement. 2010 Jan;31(1):N1–N9. doi: 10.1088/0967-3334/31/1/N01. [DOI] [PubMed] [Google Scholar]

- 67.Spadotto A, Papa J, Gatto A, Cola P, Pereira J, Guido R, Schelp A, Maciel C, Montagnoli A. Denoising swallowing sound to improve the evaluator’s qualitative analysis. Computers & Electrical Engineering. 2008 Mar;34(34):148–153. [Google Scholar]

- 68.Kohyama K, Sawada H, Nonaka M, Kobori C, Hayakawa F, Sasaki T. Textural evaluation of rice cake by chewing and swallowing measurements on human subjects. Bioscience, Biotechnology, and Biochemistry. 2007 Feb;71(2):358–365. doi: 10.1271/bbb.60276. [DOI] [PubMed] [Google Scholar]

- 69.Eylgor S, Perlman A, He X. Effects of age, gender, bolus volume and viscosity on acoustic signals of normal swallowing. Journal of Physical and Medical Rehabilitation. 2007 Jan;53(1):94–99. [Google Scholar]

- 70.Greco C, Nunes L, Melo P. Instrumentation for bedside analysis of swallowing disorders. The 32nd Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Buenos Aires, AR. August 31 – September 4 2010; pp. 923–926. [DOI] [PubMed] [Google Scholar]

- 71.Lee J, Steele C, Chau T. Swallow segmentation with artificial neural networks and multi-sensor fusion. Medical Engineering & Physics. 2009 Nov;31(9):1049–1055. doi: 10.1016/j.medengphy.2009.07.001. [DOI] [PubMed] [Google Scholar]

- 72.Damouras S, Sejdić E, Steele C, Chau T. An online swallow detection algorithm based on the quadratic variation of dual-axis accelerometry. IEEE Transactions on Signal Processing. 2010 Jun;58(6):3352–3359. [Google Scholar]

- 73.Guo Y, Cui D, Lu Q, Wang S, Jiao Q. Chaotic feature of water swallowing sound signals revealed by correlation dimension. 2nd International Conference on Information Science and Engineering; Hangzhou, CN. December 4–6 2010; pp. 171–173. [Google Scholar]

- 74.Sejdić E, Steele C, Chau T. Understanding the statistical persistence of dual-axis swallowing accelerometry signals. Computers in Biology and Medicine. 2010 Nov;40(11):839–844. doi: 10.1016/j.compbiomed.2010.09.002. [DOI] [PubMed] [Google Scholar]

- 75.Sejdić E, Falk T, Steele C, Chau T. Vocalization removal for improved automatic segmentation of dual-axis swallowing accelerometry signals. Medical Engineering & Physics. 2010 Jul;32(6):668–672. doi: 10.1016/j.medengphy.2010.04.008. [DOI] [PubMed] [Google Scholar]

- 76.Makeyev O, Schuckers S, Lopez-Meyer P, Melanson E, Neuman M. Limited receptive area neural classifier for recognition of swallowing sounds using continuous wavelet transform. The 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Lyon, FR. August 22–26 2007; pp. 3128–3131. [DOI] [PubMed] [Google Scholar]

- 77.Makeyev O, Sazonov E, Schuckers S, Lopez-Meyer P, Baidyk T, Melanson E, Neuman M. In: Recognition of Swallowing Sounds Using Time-Frequency Decomposition and Limited Receptive Area Neural Classifier. 1 Allen T, Ellis R, Petridis M, editors. Vol. 15. Springer; London: 2009. [Google Scholar]

- 78.Makeyev O, Schuckers S, Lopez-Meyer P, Melanson E, Neuman M. Limited receptive area neural classifier for recognition of swallowing sounds using short-time Fourier transform. The 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Lyon, FR. August 22–26 2007; pp. 1417.1–1417.6. [DOI] [PubMed] [Google Scholar]

- 79.Orovic I, Stankovic S, Chau T, Steele C, Sejdic E. Time-frequency analysis and Hermite projection method applied to swallowing accelerometry signals. Journal on Advances in Signal Processing. 2010 Mar;2010(1):1–7. [Google Scholar]

- 80.Aboofazeli M, Moussavi Z. Analysis of temporal pattern of swallowing mechanism. The 28th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; New York, NY. August 30 – September 3 2006; pp. 5591–5594. [DOI] [PubMed] [Google Scholar]

- 81.Aboofazeli M, Moussavi Z. Analysis of swallowing sounds using hidden Markov models. Medical and Biological Engineering and Computing. 2008 Apr;46(4):307–314. doi: 10.1007/s11517-007-0285-8. [DOI] [PubMed] [Google Scholar]

- 82.Aboofazeli M, Moussavi Z. Swallowing sound detection using hidden Markov modeling of recurrence plot features. Chaos, Solitons & Fractals. 2009 Jan;39(2):778–783. [Google Scholar]

- 83.Sejdić E, Steele CM, Chau T. Segmentation of dual-axis swallowing accelerometry signals in healthy subjects with analysis of anthropometric effects on duration of swallowing activities. IEEE Transactions on Biomedical Engineering. 2009 Apr;56(4):1090–1097. doi: 10.1109/TBME.2008.2010504. [DOI] [PubMed] [Google Scholar]

- 84.Lee J, Steele C, Chau T. Classification of healthy and abnormal swallows based on accelerometry and nasal airflow signals. Artificial Intelligence in Medicine. 2011 May;52(1):17–25. doi: 10.1016/j.artmed.2011.03.002. [DOI] [PubMed] [Google Scholar]

- 85.Nikjoo M, Steele C, Sejdić E, Chau T. Automatic discrimination between safe and unsafe swallowing using a reputation-based classifier. Biomedical Engineering Online. 2011 Nov;10(100):1–17. doi: 10.1186/1475-925X-10-100. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 86.Jestrović I, Dudik J, Luan B, Coyle J, Sejdić E. The effects of increased fluid viscosity on swallowing sounds in healthy adults. Biomedical Engineering Online. 2013 Sep;12(90):1–17. doi: 10.1186/1475-925X-12-90. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 87.Steele C, Sejdić E, Chau T. Noninvasive detection of thin-liquid aspiration using dual-axis swallowing accelerometry. Dysphagia. 2013 Mar;28(1):105–112. doi: 10.1007/s00455-012-9418-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 88.Fontana J, Melo P, Sazonov E. Swallowing detection by sonic and subsonic frequencies: A comparison. The 33rd Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Boston, MA. August 30 – September 3 2011; pp. 6890–6893. [DOI] [PubMed] [Google Scholar]

- 89.Sejdić E, Steele C, Chau T. Classification of penetration–aspiration versus healthy swallows using dual-axis swallowing accelerometry signals in dysphagic subjects. IEEE Transactions on Biomedical Engineering. 2013 Jul;60(7):1859–1866. doi: 10.1109/TBME.2013.2243730. [DOI] [PubMed] [Google Scholar]

- 90.Sejdić E, Steele CM, Chau T. A method for removal of low frequency components associated with head movements from dual-axis swallowing accelerometry signals. PLoS ONE. 2012 Mar;7(3):1–8. doi: 10.1371/journal.pone.0033464. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 91.Leslie P, Drinnan M, Finn P, Ford G, Wilson J. Reliability and validity of cervical auscultation: A controlled comparison using videofluoroscopy. Dysphagia. 2004 Nov;19(4):231–240. [PubMed] [Google Scholar]

- 92.Stroud A, Lawrie B, Wiles C. Inter- and intra-rater reliability of cervical auscultation to detect aspiration in patients with dysphagia. Clinical Rehabilitation. 2002 Jun;16(6):640–645. doi: 10.1191/0269215502cr533oa. [DOI] [PubMed] [Google Scholar]

- 93.Reynolds E, Vice F, Gewolb I. Cervical accelerometry in preterm infants with and without bronchopulmonary dysplasia. Developmental Medicine & Child Neurology. 2003 Jul;45(7):442–446. doi: 10.1017/s0012162203000835. [DOI] [PubMed] [Google Scholar]