Abstract

Objectives

To investigate researchers’ perceptions about the factors that influenced the policy and practice impacts (or lack of impact) of one of their own funded intervention research studies.

Design

Mixed method, cross-sectional study.

Setting

Intervention research conducted in Australia and funded by Australia's National Health and Medical Research Council between 2003 and 2007.

Participants

The chief investigators from 50 funded intervention research studies were interviewed to determine if their study had achieved policy and practice impacts, how and why these impacts had (or had not) occurred and the approach to dissemination they had employed.

Results

We found that statistically significant intervention effects and publication of results influenced whether there were policy and practice impacts, along with factors related to the nature of the intervention itself, the researchers’ experience and connections, their dissemination and translation efforts, and the postresearch context.

Conclusions

This study indicates that sophisticated approaches to intervention development, dissemination actions and translational efforts are actually widespread among experienced researchers, and can achieve policy and practice impacts. However, it was the links between the intervention results, further dissemination actions by researchers and a variety of postresearch contextual factors that ultimately determined whether a study had policy and practice impacts. Given the complicated interplay between the various factors, there appears to be no simple formula for determining which intervention studies should be funded in order to achieve optimal policy and practice impacts.

Keywords: Health policy, Research Impact, Translational Research, Knowledge Transfer, Intervention Research

Strengths and limitations of this study

We interviewed researchers about whether and how their own specific intervention research study had achieved policy and practice impacts, thus producing an empirical analysis, not a general analysis of potential influences.

We used a mixed methods approach to identify factors associated with impact. Detailed qualitative analyses were conducted for interview data on researchers’ perspectives, and quantitative analyses were conducted for specific variables that were verified through a bibliometric analysis of publications, and through independent panel assessment of policy and practice impacts. Mixed methods provide a more comprehensive analysis of factors than either method alone.

Our findings identify both a range of influences and the links between these variables.

In terms of limitations, the process and timing for assessment of impacts may have limited our capacity to definitively distinguish studies with and without policy and practice impacts, and thus the role and interactions of different influences at different times.

Introduction

There are increasing expectations that health research will have public benefits.1–4 Consequently, there is growing interest in tracking the impacts of health research, and in the processes and factors that facilitate impact.5 6 At the current time there is no agreed systemic approach for measuring the broader impacts of health research, although there are some examples where research impact assessment systems have been introduced by governments and funding bodies, such as the Research Excellence Framework and ResearchFish in the UK.7–9 In Australia, there is no commonly used system for collecting postresearch impact data; however, research utilisation is an area receiving greater attention from the research community and funders.10 11

Research impact models usually describe a sequence of impacts starting with immediate research outputs (eg, scholarly publications), moving to translational outputs (eg, implementation protocols) and further ‘real world’ impacts which occur beyond the research setting, including policy or practice impacts and long-term population health outcomes.5 12–15 Models with sequential stages assume that an impact or output at one stage may lead to increasingly concrete and widespread impacts over time.14 15

A number of factors operating as a complex, interacting system are thought to influence the utilisation of research along this pathway. These include the nature of the evidence and intervention, characteristics of the researchers and end users, the context in which change is to be implemented, and the dissemination actions that are taken.1 16 17 Within each of these domains, specific variables have been identified as potentially influential. For example, interactions between researchers and end users influence research utilisation;1 18–23 and researchers’ perceptions of their role may in turn influence whether or not they actively engage with end users.24 Interventions that are simple to implement, affordable and/or compatible with existing policies and delivery infrastructure may be more likely to be adopted in practice.18 25–27 The contribution of research may also depend on whether evidence becomes available at a time when the topic is a policy priority.1 18 Finally, the contribution of a study would be expected to depend on the quality of the study, and on whether the finding was of real world significance and consistent with existing evidence.18 21 22 Thus, there is likely to be high variability in whether or not any given research study exerts influence, given local circumstances and the relative timing of events. However, much of the available evidence is based on researchers’ and policymakers’ general perceptions about factors that influence the use of evidence in policy-making,1 21 22 and there are few studies that have empirically examined in detail the factors that influence impact or different levels of impact for a particular study or set of studies.18 28–30 Furthermore, there are only a small number of studies that focus partly or wholly on intervention research;18 28 30–33 yet, this type of research has the most immediate relevance to policy and practice.34–36

This paper reports on a study investigating researchers’ perceptions about the factors that influenced the policy and practice impacts (or lack of impact) of their own intervention research study, funded by Australia's leading health and medical research funding agency, the Australian National Health and Medical Research Council (NHMRC) between 2003 and 2007. We chose to focus on impacts beyond the research setting, but limited our scope to impacts on policy and practice, rather than examining outcomes in terms of improvements in service delivery or benefits to patients and the public.15 Specifically, we investigated:

To what extent do researchers undertake dissemination actions to facilitate policy and practice impacts, and what approaches do they apply?

What factors do researchers believe influenced whether or not their own study had policy or practice impacts?

What were the main factors that differentiated cases that did and did not achieve policy and practice impacts?

Sample and data sources

Our sample and methodology are described in more detail in a related publication and summarised here.15 The sample comprised all National Health and Medical Research Council (NHMRC) studies funded between 2003 and 2007 that fitted our definition of health intervention research (any form of trial or evaluation of a service, programme or strategy aimed at disease, injury or mental health prevention, health promotion or psychotherapeutic intervention conducted with general or special populations, or in clinical or institutional settings), and where data analysis had been completed by the time of our data collection in 2013.15 Clinical trials of potentially prescribable drugs, vaccines and diagnostics were excluded because of the very different trajectories that such therapeutic goods are required to navigate before being authorised for use by Australia's Therapeutic Goods Administration. Seventy grants from a list of NHMRC grants for the time period met these inclusion criteria.

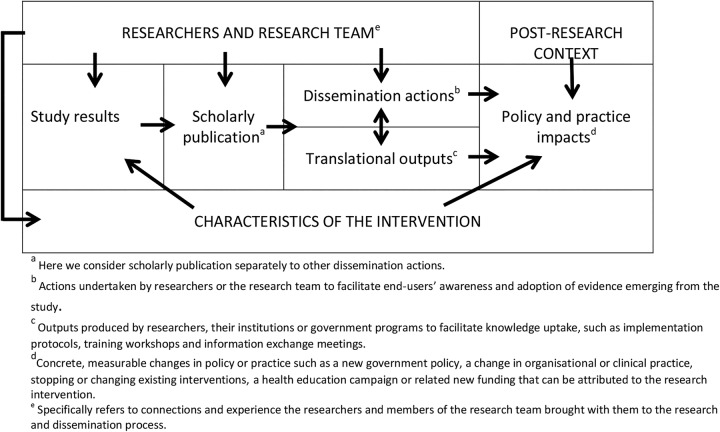

Two online surveys were administered to the named chief investigators of grants; in the first instance to confirm eligibility and, in the second instance, to elicit further information about their study and its impacts.15 Based on responses to these surveys, semistructured interviews were conducted. Interviews sought to obtain consistent information about any potential real world impacts of the study and to explore the researchers’ perceptions of what had helped or hindered the uptake of their intervention. All interviewees were asked open-ended questions related to each major interview topic (figure 1). Given the diversity of cases and wide array of potential variables, the researchers were not asked questions about specific variables (eg, use of media to disseminate their findings), but rather were encouraged to tell the primary story about the actions they had taken and how any of the impacts had occurred.

Figure 1.

Topics covered during interviews.

Investigators for 50 grants (71% response rate) completed the interviews, and these form the basis of data reported here. Data were collected in 2012 and 2013. Grant funding start and completion dates for individual grants varied: a large proportion (20/50, 40%) of the studies in our sample did not start until 2007, the last year in our sample period; the grant funding for most (44/50, 88%) had ended by 2009; and the funding period for all of the studies had concluded by 2011. The sample comprised a mix of treatment and management (n=20), early intervention/screening (n=12) and primary prevention/health promotion interventions (n=18) implemented in clinical and community settings. Topics reflected a wide variety of health disciplines, including medicine, psychiatry, psychology, dietetics, dentistry, physiotherapy, speech pathology, nursing and public health.15 The NHMRC grants comprise investigator-initiated research and are assessed based on: scientific quality; significance, in terms of potential contribution to knowledge and importance of the health issue addressed; and the research team quality and capacity. No observable differences in terms of topic areas or type of study intervention were noted for studies in which the chief investigator did or did not respond to the invitation to participate.

Additional processes were undertaken to verify any impacts claimed by the researchers and to obtain objective data related to publications and study findings. To determine if the studies had impacts, interview data for each case were reviewed by two authors and classified as having at least one, or no, policy and practice impacts. The reported impacts were corroborated, where possible, by internet searching using Google. Studies classified as having impacts were then reviewed by an expert panel to verify the impacts claimed by the chief investigators. Data about related publications were collected from the chief investigators and literature searches. Publications were reviewed to identify those that reported on intervention effects. Those that did were assessed to identify whether any statistically significant changes to principal outcomes proposed in the original research application were reported. Contentious cases were checked by other authors through a panel process. Where no publications on intervention effects were available, we relied solely on the findings reported by the researchers in their interviews to determine if the study had produced a statistically significant intervention effect. A summary of the outcomes of these additional processes is provided in box 1.

Box 1. Summary of case attributes (n=50).

34 (68%) had published study results in a peer-reviewed publication

28 (56%) had study interventions that produced a statistically significant intervention effect

17 (34%) had specific policy and practice impacts (such as clinical practice changes; organisational or service changes; commercial products or services; policy changes) that had already occurred and could be corroborated

Qualitative analyses of factors influencing impact

Using NVivo, each of the interview transcripts was coded against the factors identified from the literature as influencing research impacts on policy and practice, as well as those emerging from our data. A summary of the coding structure is provided in table 1. Coding was conducted and cross-checked by two authors. Also within NVivo, each case (intervention study) was assigned codes for the following attributes: (1) whether or not the intervention results were published, (2) whether or not there were statistically significant intervention effects on primary outcomes, and (3) whether or not there were post-study impacts.15

Table 1.

Coded themes and factors potentially associated with policy and practice impacts

| Theme | Factors |
|---|---|
| Study results | Statistically significant intervention effects; Fit with current evidence; Research methodological quality |
| Intervention characteristics | Part of an extended programme of research; Novel intervention or first study of its kind; Designed for ‘real world’ implementation; Research embedded in practice; Simple intervention or complex intervention, setting or environment |
| Scholarly publication | Peer-review publications; Peer-review publications of intervention results |
| Researcher and research team | Experience in relation to translational activities; Perceived role in relation to translation activities; Multidisciplinary research team; End users part of research team; Consultation and engagement with end users prior to/during research |
| Dissemination actions (beyond scholarly publication) | Oral presentations at conferences, workshops; Engagement with stakeholders and end users in dissemination of findings; Communication with policymakers; Media |
| Translational outputs | Availability of intervention resources/materials/protocols and/or training and/or inclusion in guidelines/recommendations; Commercial opportunities |
| Post-research implementation context | Availability of resources; Community need, interest, acceptance of intervention; Fit within policy context; Compatibility with professional attitudes and practices; Presence of a delivery mechanism; Fit with existing structures, systems, services, programmes; Political acceptability; Good timing, windows of opportunity |

The data coded in NVivo were exported to Excel in order to generate a spreadsheet which included case attributes, as well as the presence of coded themes. We used this data summary to sort the sample by case attributes, in order to identify apparent differences in characteristics of intervention studies with and without impacts, and groups of studies with similar attributes. We further explored the observed patterns by conducting detailed analyses on the similarities and differences of groups of cases, and indepth analyses of individual cases. We also examined counter examples, where cases had similar characteristics to others but did not proceed in the same way. This top-level approach, combined with fine-grained analysis of patterns, groups, and individual cases, was conducted against all coded factors and attributes, and considered against each interviewee's primary ‘story’ or account of why the intervention did or did not have particular types of impacts.

Quantitative analyses of factors influencing impact

Quantitative analyses were conducted where objective information was available for case attributes (publication of results and intervention effects). Fisher's exact test (two-tailed) was used to test the association between these case attributes and whether the study did or did not have policy and practice impacts.
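The test described above can be sketched in a few lines of Python. This is an illustrative implementation only, not the software used in the study: the function name `fisher_exact_two_sided` is hypothetical, and the two-sided p value is assumed to be the sum of the probabilities of all tables that are no more likely than the observed one. Statistical packages differ in how they define the two-sided p value (for example, some double the one-sided tail), so the output need not match the reported figures exactly. The counts are those reported in the Results.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Assumption: the p value sums the hypergeometric probabilities of every
    table with the same margins that is no more likely than the observed one.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    denom = comb(row1 + row2, col1)

    def prob(k: int) -> float:
        # Probability that the top-left cell equals k with margins fixed.
        return comb(row1, k) * comb(row2, col1 - k) / denom

    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    observed = prob(a)
    # A small relative tolerance guards against floating-point ties.
    return sum(
        p
        for p in (prob(k) for k in range(lowest, highest + 1))
        if p <= observed * (1 + 1e-9)
    )


# Rows: significant vs non-significant effects; columns: impact vs no impact.
p_effects = fisher_exact_two_sided(14, 14, 3, 19)
# Rows: results published vs not published; columns: impact vs no impact.
p_published = fisher_exact_two_sided(16, 18, 1, 15)

print(f"intervention effects vs impact: p = {p_effects:.3f}")
print(f"publication vs impact: p = {p_published:.3f}")
```

Both associations fall below the conventional 0.05 threshold under this definition, in line with the conclusions drawn in the text.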

This project had approval from the University of Sydney Human Research Ethics Committee (15003). All project informants were assured that their projects would be de-identified in our reports because of anticipated sensitivities about publication output, failed interventions or lack of real world impact.

Results

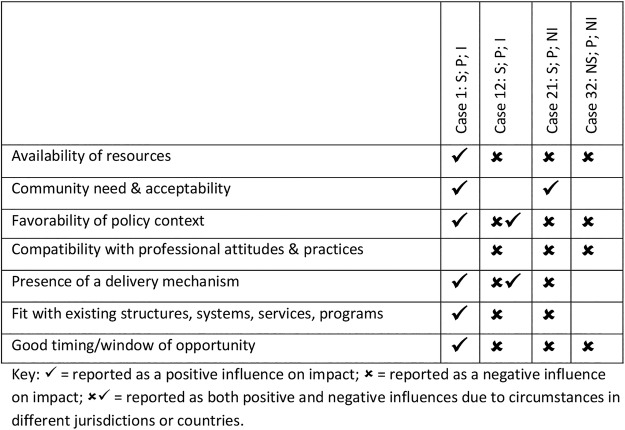

Results are presented for each category identified in table 1. The relationships between the categories in table 1 that were identified in our analysis are represented in figure 2 and discussed in the text. To illustrate themes and patterns, we have included examples and quotes for specific cases. Cases have been numbered and attributes identified as follows: statistically significant intervention effects (S); non-significant intervention effects (NS); results published (P); no results published (NP); policy and practice impacts (I); no policy and practice impacts (NI).
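For readers who want to tabulate the quoted cases themselves, the labelling scheme can be parsed mechanically. The sketch below is illustrative only: the `Case` record and `parse_case_label` helper are hypothetical names, and the four labels are taken from quotes in the text rather than from the full sample of 50 cases.

```python
from collections import Counter
from typing import NamedTuple


class Case(NamedTuple):
    case_id: int
    significant: bool  # S = True, NS = False
    published: bool    # P = True, NP = False
    impact: bool       # I = True, NI = False


def parse_case_label(label: str) -> Case:
    """Parse a label such as '35: NS; P; NI' into a Case record."""
    case_id, _, attributes = label.partition(":")
    effect, publication, impact = (part.strip() for part in attributes.split(";"))
    if effect not in {"S", "NS"} or publication not in {"P", "NP"} or impact not in {"I", "NI"}:
        raise ValueError(f"unrecognised case label: {label!r}")
    return Case(int(case_id), effect == "S", publication == "P", impact == "I")


# Labels quoted in the Results text; a handful of cases, not the full sample.
labels = ["35: NS; P; NI", "15: NS; P; I", "4: S; P; I", "20: S; NP; NI"]
cases = [parse_case_label(label) for label in labels]

# Cross-tabulate statistical significance against policy and practice impact.
tally = Counter((case.significant, case.impact) for case in cases)
for (significant, impact), count in sorted(tally.items()):
    print(f"significant={significant}, impact={impact}: {count}")
```

The same tally, applied to all 50 cases, would yield the kind of 2×2 table that the quantitative analyses rely on.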

Figure 2.

Relationship between factors that influenced policy and practice impacts.

Intervention effects

A greater proportion of studies with statistically significant intervention effects had policy and practice impacts (14/28, 50%) than studies where no statistically significant intervention effect was demonstrated (3/22, 14%; p=0.015).

Whether or not interventions with non-significant effects should have policy and practice impacts was commonly discussed among researchers who had findings of this nature. Some researchers with non-significant intervention effects considered it appropriate that their study did not have impacts on policy and practice. For example, one commented: But in terms of changing policy and practice, I don't see that it should be informing that until you have a positive outcome (35: NS; P; NI). Some others felt their study did have implications for policy and practice, most commonly because the findings suggested that an intervention that was already in use, or recommended in the guidelines, was ineffective and therefore there was a case for withdrawing the intervention or changing the existing guidelines. All three of the studies with non-significant findings that had impact were of this nature. For example, in one case, the study findings led to the non-government organisation that developed the intervention withdrawing the intervention (15: NS; P; I). In each of the other two cases, the study indicated that, to be effective, the intervention needed to be modified in some way. In one case, the results indicated that the intervention needed to be varied for different target groups, and the UK guidelines were changed accordingly (16: NS; P; I). However, there were also studies with similar implications that did not have policy and practice impacts. A researcher of one such study commented: I don't think researchers or policymakers are well schooled in decommissioning I suppose is the sort of word I'm looking for … It's a lot easier to get new things that work or are seen to work (32: NS; P; NI).

How study findings fitted with the available body of evidence also influenced whether studies had an impact. Studies with significant intervention effects were more likely to have impacts if the study findings were supported by other evidence. For example, one researcher from the impact group commented: Some of them have actually formally tested it also by trial, and provided exactly the same results … They really only paid notice as the evidence got more and more overwhelming, and the cost-effectiveness and so on became more important (4: S; P; I). Studies with statistically non-significant effects were less likely to have impacts if the evidence across existing studies was mixed or unclear, or if there were two schools of thought, as this made it harder to argue that an existing intervention should be decommissioned or modified.

Other studies have suggested that the methodological quality of the research may have an influence on impact.18 21 In our study, there were a small number of studies where the researchers considered that methodological problems (eg, recruiting a sufficient sample) limited the opportunity to generate significant intervention effects or reduced the certainty of their findings (eg, inability to include randomisation); therefore, these studies did not have policy and practice impacts. For example: We didn't have the statistical power to demonstrate that what we applied in the intervention group actually made a difference (31: NS; P; NI); or We initiated it as a randomized trial but I think what we ultimately ended up doing was perhaps all that we could ever have done which was a parallel cohort study (20: S; NP; NI). However, there were researchers from the impact and no impact groups who described their studies as methodologically robust, suggesting that in this sample research quality on its own was not sufficient to influence impact. For example: I think that the general view of the study was that methodologically it was reasonably robust. So the null result is probably true (28: NS; P; NI); and Well, when the study was published, I mean it was actually the largest study of its type and the one that is considered to be the most definitive (22: S; P; NI).

Scholarly publications

At the time of our data collection, two-thirds (34/50; 68%) of researchers had published their results on primary intervention outcomes in a peer-reviewed journal. Studies where the results had been published in peer-reviewed journals were more likely to have impacts. Close to half (16/34; 47%) of the studies whose results had been published had impacts, whereas only one study (1/16; 6%) with no published results had impacts (p=0.008). The association between publication of results and impact was statistically significant for those studies with statistically significant intervention effects (13/20 with results published had impacts compared to 1/8 studies with no results published, p=0.03); however, this was not the case for studies with non-significant intervention effects (3/14 studies with results published had impacts but none of the studies with no published results had impacts, p=0.27). This adds weight to the importance of publication of findings, as it appears that it is not just the results themselves, but the fact that these have been published that has an influence on impact.

The majority of researchers who had not published their findings planned to do so; some were still writing their publication, while others had submitted an article that had been rejected or was still awaiting approval. Difficulty getting findings published was a theme discussed by researchers with both significant and non-significant findings who had not yet had their results published. Our analyses do not suggest that researchers with significant intervention effects were any more likely to have published their results than researchers with non-significant intervention effects (71% of studies with significant effects had published results compared to 64% of studies with non-significant effects, p=0.58). Researchers of studies with non-significant effects or mixed effects were more likely to cite lack of enthusiasm or difficulties in knowing how to present their results as reasons why publication of their findings had been delayed. For example: I think it was quite successful. But negative results are obviously a lot less exciting to write up than positive ones (42: NS; NP; NI); and But we haven't actually published it yet and that was partly probably because of the unexpected finding that took longer than anticipated to write up (40: NS; NP; NI).

Dissemination actions

Researchers reported engaging in a variety of dissemination activities beyond scholarly publication as described in table 2.

Table 2.

Dissemination actions reported by researchers

| Commonly reported | Less commonly reported |

|---|---|

|

|

Researchers’ perceptions of the implications of their findings determined their approach to dissemination and the extent of their dissemination activities. For example, some researchers reported that their non-significant effects meant they did not have anything to ‘sell’, and therefore limited their dissemination activities to traditional academic activities, such as publishing and attending conferences, rather than seeking out opportunities to engage with end users and decision-makers beyond those directly involved in their study. For example, one researcher commented: In terms of government we haven't had direct conversations with government. Again because we don't have a product we can tell them about that is good (29: NS; P; NI).

There were also a few examples where researchers with significant effects had limited their dissemination efforts due to their perceptions about the implications of the findings: for example, where the findings supported existing guidelines and recommendations, the researcher felt there was no need for change (24: S; P; NI), or where the researcher felt that the clinical significance of the relatively new research findings should be confirmed, through further research, before recommendations based on the findings could be made (22: S; P; NI).

Researchers who felt their findings had implications for policy and practice were more likely to use active dissemination strategies and, in particular, to try to engage with policymakers or decision-makers. None of the researchers who had limited their dissemination activities were from the impact group; however, researchers from both the impact and no impact groups had used active dissemination strategies and attempted to engage with decision-makers where they felt their findings warranted such action, suggesting that these activities on their own were not associated with any impact.

However, the length of time researchers had been engaged in active dissemination activities did appear to have an influence on impact. Some researchers reported being engaged in dissemination over long time frames, and they tended to be those involved in studies with policy and practice impacts. Over this time, some had continued their advocacy efforts and in some cases had completed further research in the area, which strengthened the case for uptake of the intervention. For example, one researcher in the impact group described being involved in dissemination activities around the study in question and a body of related research for almost a decade, and during that time had taught 31 courses internationally and 70 nationally, written a text for practitioners, spoken at many international conferences, successfully advocated for a free consumer booklet to be produced and for the intervention to be taught in the undergraduate curriculum nationally, as well as completing further related research (7: S; P; I). On the other hand, there were examples among the no impact group in which the researchers reported only recently publishing their findings and only just beginning to engage with end users and decision-makers. One such researcher commented: The only dissemination activities were conference presentations, book chapters about comorbidity and treatment of comorbidity and the journal article. But as you can see it's only just been published in April (25: S; P; NI).

It was common for researchers to describe making multiple attempts to engage with end users and decision-makers, and to seek alternative avenues of engagement if they were not initially successful. There were examples in the impact group where an intervention was funded for wide-scale implementation only after the researchers had made several attempts to engage with decision-makers over an extended time period. Researchers from both groups described ways in which they planned to continue their dissemination efforts and many felt that these efforts might lead to impacts, or greater impacts, over time.

Translational outputs

For interventions where a group of health professionals needed to be trained in the use of an intervention protocol, researchers frequently discussed how they had packaged their intervention materials in the form of books, protocols, treatment manuals, information packages, training materials for professionals and consumer education materials to facilitate the adoption of the intervention by practitioners. Some researchers also spoke about their role in developing, and in some cases delivering, training programmes and courses at an undergraduate or postgraduate level. These ‘translational outputs’ had been developed by the researchers or others prior to, during or following the study period, or were currently being developed. For some other types of interventions (eg, supplements and safety products), researchers discussed how they had facilitated, or could facilitate, the uptake of their intervention by influencing market mechanisms.

Publicly available translational outputs did appear to facilitate adoption. While researchers from both impact and no impact groups reported producing translational materials, researchers from the impact group were more likely to report that the translational resources related to their intervention were publicly available and that a mechanism to support their distribution to practitioners was in place. For example, in one case, the researcher had developed postgraduate training modules and then partnered with a professional association to deliver the training on a user pays basis (11: S; P; I), and in another case, the researcher had partnered with a non-government organisation to develop consumer resources which were then distributed at a cost to end users (2: S; P; I). There were also a limited number of cases where commercial dissemination of specific measurement tools or products had occurred; one case of significant commercialisation which allowed substantial practice impacts to flow had been supported by the university commercialisation unit and formed part of an existing set of related intervention resources and an accredited training programme (1: S; P; I).

While the importance of developing highly professional and comprehensive translational outputs in order to facilitate impact was commonly discussed, not all of the researchers who mentioned this issue felt that it was their role to produce such resources. Some expressed concerns about their ability to update materials over time, support practitioners and monitor the fidelity of intervention delivery. The lack of an appropriate funding source for such work was also seen as a major barrier for widespread translation; nevertheless, many had produced translational materials and/or developed training courses either by utilising their NHMRC funds for this purpose or through the opportunistic use of other resources.

Intervention characteristics

A common theme reported by researchers was that their study formed part of an extended programme of research conducted by the researcher themselves or other research groups; and having an extended programme of research appeared to be more frequent among those cases that had subsequent impact on policy and practice. This theme was related to how the research fitted within the available evidence. Research supported by other evidence, rather than a single study alone, was more likely to have impacts. In addition, an extended programme of research usually meant that the researcher had been engaged in dissemination activities for longer periods of time. For example, one researcher commented: So, there are studies that are being conducted in Europe. Particularly, there's a Belgian group who have taken it up quite a lot. There is another group in the UK. There are assorted studies that have come out of the USA … it was when other people started talking about it other than me, that was probably the most important part (7: S; P; I).

On the other hand, researchers with interventions in the no impact category were more likely than those in the impact category to mention that their research was innovative or the first of its kind in some way, usually noting that the intervention was modified or adapted from a previously researched intervention, to suit a new target group or setting. The results of innovative or adapted research were less likely to be supported by evidence from other studies, making it more difficult for researchers to argue a case for change, based on a single study alone. For example, one researcher commented: But I think it's hard to judge the value of one study - if you're breaking ground in an area … So I think it's not just about a single piece of work, it's about the accumulation of different people's experiences at trialing this kind of intervention in different settings (31: NS; P; NI).

Many of the interventions in our study had characteristics that other literature suggests would make them more easily adopted into policy or practice.26 27 For example, these were implemented in real-world practice settings, such as outpatient clinics, and/or designed to fit into existing delivery systems, such as Aboriginal health services. However, these characteristics were found in studies that had impacts as well as those that did not, and did not appear to directly influence whether or not the studies had impacts. For example, a researcher from the no impact group commented: So we designed it so that if it was effective it would just be able to slot into the existing system. We designed it very carefully (32: NS; P; NI).

There were a few cases where researchers suggested that the simplicity of the intervention was one of the factors which influenced its impact; for example, one researcher commented: The other fact is well it's quite a straightforward intervention. It's a one-off relatively cheap intervention which if put in place would make it a cost-effective one (9: S; P; I). However, there were too few cases of this nature to draw any conclusions about whether simple interventions were more likely to have impacts. In fact, in many cases, with and without impact, researchers commented on the complexities of implementation related to the setting, target group or multilayered aspect of the intervention itself.

Researchers and research team

Researchers commonly discussed their professional background, personal connections, networks, experience and orientation towards translational activities, as well as that of their research team. For example, it was common for researchers from the impact and no impact groups to describe themselves as having joint roles as practitioners and researchers. In addition, researchers from both groups described how they had used their professional and personal networks to engage with end users, before, during and after the research period. They described how their connections with various stakeholder groups, including policymakers, professional bodies and consumer groups, and professional experience helped to develop relevant research questions, and provided links and credibility to support dissemination of findings to other practitioners and end user groups. While engagement with end users did not appear to distinguish between impact and no impact groups in our study, the researchers’ experience in translational activities and attitude to these tasks did. Researchers from the impact group were more likely to suggest that they or members of their research team had an extensive track record in translational activities and were considered to be international experts in their field. This finding was related to the researchers also being involved in extended lines of research enquiry related to the intervention in question. For example, one researcher from the impact group commented: I have an international profile and I lead a number of international groups, so I was able to make sure as we worked together around the table with the international groups that they were familiar with this sort of work and they saw its importance (4: S; P; I). In addition, researchers of studies that had impact more commonly saw that it was their role to engage in dissemination activities compared to those from the no impact group. One interviewee from the no impact group commented: I don't see my role as a scientist to be a lobbyist I'm not going to go and make special appointments to draw people's attention to my research (33: NS; P; NI).

Postresearch context

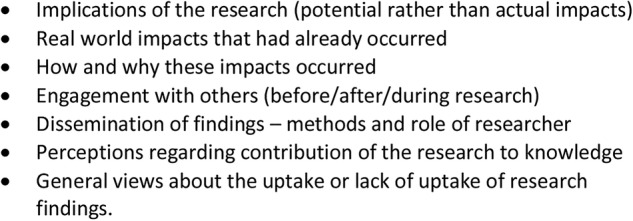

Overall, the researchers described a complicated interplay between postresearch contextual factors and the other factors described above, with each interviewee telling a unique story about these relationships. To illustrate this point, figure 3 presents information from the NVivo analysis showing the factors that were influential for individual studies with different attributes as follows: cases 1 and 12, both with significant results, published and demonstrated post-study impacts; case 21 with significant published results but no impact; and case 32 with non-significant published results and no impact. In some cases, researchers described concurrent but opposing experiences within the same study, for example, if they were working towards translating their findings in multiple jurisdictions or countries (eg, case 12; figure 3) thus highlighting how variable these contextual relationships can be. Most of the postresearch contextual factors we coded (box 1) appeared to influence impact, but the number and combination of factors that were influential for individual cases were highly variable.

Figure 3.

Illustration of contextual factors described in selected cases.

Some patterns related to the postresearch context did emerge, however, when we compared cases with similar attributes (statistically significant results; published results; and had produced translational resources) that did and did not have impacts. It was more common for the interventions in the no impact group to require an entirely new service or treatment to be delivered, involving reorientation of existing services, new funding mechanisms and changes to professional attitudes and roles rather than being an adjunct or replacement for an existing service or treatment that was already being delivered; whereas the opposite was true for interventions in the impact group. There were a few cases in the impact group which did involve implementing an entirely new service. In these cases, the population group tended to be a high-priority group; the policy climate was, therefore, favourable and in some cases dedicated funding for new service delivery was available as part of policy commitments.

Discussion

The findings from our study of intervention research identified a number of major influences on whether studies funded through NHMRC project grants had subsequent policy and practice impacts. Following completion of the study itself, researchers engaged in actions to facilitate utilisation of their findings in ‘real world’, non-research contexts. Numerous factors related to the intervention itself, the researcher, some of their dissemination and translation efforts and the postresearch context exerted variable and contingent influences on whether a study produced policy and practice impacts. However, statistically significant intervention effects and publication of these results were the most important influences; these variables influenced what researchers did next and presumably also influenced end users (figure 2). The general pathway as depicted in figure 2, starting with study results, through scholarly publication, dissemination activities, translational outputs and policy and practice impacts was consistent; however, the details relating to the timing or importance of each action varied for each case. In general, the pathway was initially influenced by researchers’ perceptions about the implications of their findings in the context of existing evidence, and later by the postresearch policy and practice context. The simplified linear pathway depicted in figure 2 pertains to single research studies; albeit in some cases researchers were building on the ‘pathways’ from previous intervention studies, and in other cases they described that they had started new lines of research or ‘pathways’ as a result of their study findings. Such connected and more complex pathways are consistent with the feedback loops described in other conceptual models of research impact, where each research study contributes to the general knowledge pool and inputs for future research.14 13 Our key findings and their implications are highlighted in table 3.

Table 3.

Key findings and implications

| Key findings | Implications |
|---|---|
| Studies with statistically significant intervention effects are more likely to have impacts | So that health systems do not continue to fund interventions found to be ineffective, it is important that the findings of policy relevant negative studies are given equal consideration to those of positive studies; and mechanisms for discontinuing ineffective intervention studies are available |
| If findings are consistent with, or add to existing evidence, the study is more likely to have policy and practice impacts | Individual studies are less likely to provide sufficient evidence for policy or practice change. Replication and evidence synthesis is needed. Funding bodies should support research that replicates and advances the evidence base for existing interventions. However, this should not come at the expense of funding innovative studies that may lead to the development of new solutions |
| Peer-review journal publication on intervention effects appears to be necessary, but not sufficient, to produce policy and practice impacts | Publication of intervention results in peer-reviewed journals should be specifically identified when considering the track record of researchers or research teams in grant assessment processes. Academic achievement systems should include translational outputs, and specifically identify peer-reviewed publication of intervention results |
| Study findings and researchers’ perceptions of their implications determine the extent to which researchers engage in ‘active’ dissemination strategies | Grant application processes should include a requirement for researchers to discuss the potential implications of their research and outline an explicit translation strategy. However, there should also be funding for research that does not aim to achieve immediate or direct impacts on policy and practice |
| The accumulation and interaction of active dissemination efforts over time influences whether a study has policy and practice impacts | Funding bodies should include systems for funding programmes of research that support existing lines of research enquiry initiated by individual researchers or research teams, as well as their ongoing dissemination efforts |
| Studies are more likely to have impact if any translational outputs that are produced are readily accessible to the target audience and available through a stable delivery mechanism | Funding bodies should include systems for dissemination trials, which include preparation of translational outputs, and support academic-policy partnerships |
| A diverse range of postresearch contextual factors are influential; these are not static or predictable | Researchers should be required to demonstrate in grant applications that they understand the policy and practice context in which their research will be implemented, as well as outline the strategies they will employ in order to keep abreast of new developments or changes in this context. As part of the grant application, researchers should outline their translation and dissemination plans, and include reference to key end user groups and relevant policy or practice factors |
| Studies are more likely to have impacts when the researchers involved are experienced and engage with these contextual factors as part of the dissemination process | As well as academic achievements, grant assessment processes should emphasise experience and track record in translational activities, and the extent of the research team's networks and connections outside of the research sector. At the same time, systems to develop expertise in knowledge translation strategies should be introduced |

While statistically significant intervention effects do not necessarily indicate meaningful or important results, these do provide a consistent and objective indicator, and thus exert influence on how the results are perceived by end users (among others). The availability of effectiveness data has been found by others to be associated with impact.21 Wooding et al28 found that negative findings or null results were associated with lower academic and wider impacts. They hypothesised that this may be due to journals being reluctant to publish negative findings, the researchers being reluctant to submit negative findings for publication, and that it was possibly harder to realise impacts for research that failed to prove something (as opposed to research that proved something failed).37

We found that interventions with non-significant findings can have policy and practice impacts, where an intervention that is already in use was shown to be ineffective. However, in most other cases, non-significant findings simply meant that a possible intervention strategy did not prove itself to be appropriate or effective, and there was a need for modification, or an alternative approach, and the findings, therefore, were unlikely to have direct or immediate implications for policy and practice. Owing to the small number of cases with negative effects that did have policy and practice impacts in our study, it was not possible to determine if there were any specific barriers to decommissioning existing programmes. Further research to examine the barriers to impact for studies with non-significant findings may be needed, particularly as it is important that health systems do not continue to fund interventions that are found to be ineffective.38 We did not find that non-significant intervention effects influenced researchers’ attempts to publish or their success in publishing their findings.

Peer-review journal publication of results appears to be a necessary, but not sufficient, factor in producing policy and practice impacts. The survey of Australian researchers by Haynes et al24 showed that researchers are well aware of this as a key influence. Other studies have had mixed findings in terms of whether publications are associated with wider impacts.28–30 However, it is important to note that our study considered the publication of the intervention effects on primary outcomes specifically, rather than all publications related to that study, and the association between publication of intervention results and policy and practice impacts specifically, rather than the broader social or economic benefits of research.

Researchers’ perceptions about the implications of their findings determined the extent to which they engaged in active dissemination strategies. This is relevant because if researchers are unaware of the policy and practice context, they may underestimate the potential implications of their research and therefore miss or not seek out opportunities to actively disseminate their findings.

Researchers who deemed it appropriate often proceeded to develop translational outputs. These were attempts by the researchers or groups they were collaborating with to translate the key messages of the research into a language and product suitable for a specific target audience. Such strategies have been shown to be effective for increasing research use.34 In those cases with impact in our study, a mechanism for distributing or delivering these products to the target audience was available or had been established. The implication is that there is a gap in funding sources for preparing high-quality implementation guides and related resources, and mechanisms for their distribution and maintenance; whether this should come from research funding or from policy agencies is arguable.34 Funding for dissemination trials, which include preparation of translational outputs, support for academic-policy partnerships, and developing research knowledge exchange infrastructure, is likely to be of potential value in advancing such work.34 39

Producing findings consistent with existing evidence and/or building a body of work regarding an intervention seemed to increase the likelihood of having policy and practice impacts. This fits with other studies and perspectives, indicating that multiple sources of evidence form an optimal basis for policy and practice change; it is how a single study fits within a body of evidence that matters.34 In addition, researchers who had been involved in a field of research over an extended period of time may have had greater opportunities to establish networks to disseminate their findings, engage with decision-makers, collaborate with other researchers and develop a level of recognition of their expertise, all of which may have contributed to the likelihood that their studies had impacts compared to other studies.

Other studies have suggested that engagement and interaction between researchers and stakeholders, as well as strategic thinking about this, were associated with achieving translation with wider impacts.1 21 22 28 33 Many of the researchers in this sample were experienced in research translation and dissemination; and demonstrated qualities described as ‘strategic thinking’28 and ‘purposeful bridging relationships’ within the translation.24 In this regard, they actively used their links, spanning academic, clinical and policy networks, to disseminate findings when they believed the evidence warranted such action; albeit there were variations in experience, perceived skills, and persistence in terms of the extent or duration of professional advocacy. In some cases, the researchers pursued translational opportunities tenaciously. However, strategies of this nature were not in themselves associated with impact in our study; rather, it is likely that it was the accumulation of efforts over time, and the interaction between these efforts and a variety of contextual factors, that ultimately determined whether a study had policy and practice impacts. The importance of timing and of a confluence of events in determining whether a study does or does not have impacts has been noted elsewhere.1 18

Our study did not highlight any specific aspects of the postresearch policy and practice context as essential to achieve policy and practice impacts; however, a diverse range of such factors were identified. Perhaps what is most apparent is that those experienced researchers who actively link with end users become familiar with contextual factors, and can then anticipate and manage these as part of a dissemination process. That is, such contextual factors are not taken as static factors with predictable effects, but as a part of the expected dynamics of engaging with policymakers and translation.1 However, some researchers in this sample did not have direct knowledge of the policy context and could only speculate on such factors.

Our analysis identified a number of shared and common practices reported by researchers that were not found to be associated with impact in our study, but have been associated with impact in the literature. For example, most of these researchers were concerned to build evidence for interventions that could potentially be adopted in real-world practice, and therefore designed their interventions so that they were more likely to be scalable.26 27 In addition, most attempted to engage with a range of end users before, during and, if the study intervention provided sufficient evidence, after their research to facilitate adoption of their findings.1 18–22 It is possible that the homogeneity of these factors within this set of studies did not enable associations with impact to be revealed. The NHMRC selection process may also have contributed to the homogeneity of the sample and our contrary findings in terms of methodological quality.18 21 The NHMRC application process is highly competitive and selectively rewards research rigour, quality and investigator track record. In addition, as this sample comprised the outcomes of investigator-initiated research, we did not examine the relationship between coproduced research and research impact, which has been reported elsewhere.30 However, our findings do show some of the current practices among those involved in funded health intervention research in Australia and what researchers believed to be important for achieving policy and practice impacts.

Our study had a number of strengths. We used a whole of population sampling approach that allowed us to examine the differences between intervention studies with and without impacts, and those that did and did not have statistically significant intervention effects. We used qualitative and quantitative analyses to identify factors associated with impact, which provided a more comprehensive analysis of factors than either method alone. This included detailed qualitative analyses of interview data on researchers’ perspectives, and quantitative analyses conducted for specific variables that were verified through bibliometric analysis of publications. In addition, the expert panel process we employed meant that the impacts claimed by researchers were subjected to a high degree of scrutiny and that judgements were consistent across studies.15

In terms of limitations, there were inconsistencies in the extent of information obtained about some factors within each major thematic category due to the open-ended interview methodology we employed. However, this method did allow the unique story of the circumstances of each study to emerge. The time frame for assessment of impacts may have limited our capacity to authoritatively distinguish studies with and without policy and practice impacts; longer lead times may be required for some cases. In addition, the time point at which data collection occurred in comparison to study completion varied among the studies in our sample. This meant that some studies had more time for impacts to occur than others.15 Our study covered the contribution of a single study to policy and practice, rather than that of a programme of research; however, in some cases there was overlap between the study in question and other related research the chief investigator had conducted. As noted in the literature, there are many complications in identifying the impact of a specific study.28 Our results were based on researcher self-report and we recognise the potential for conflict of interest and over-reporting of impact by the principal investigator.30 However, the independent expert panel assessment process we used offers some validity to the range of impacts claimed.15 It is also possible that the researchers were not aware of the postresearch impacts of their research or may not have recalled key information, leading to the under-reporting of impacts. In addition, there were some researchers who did not respond to our request for interview (20 of 70), and these researchers and the outcomes of their research may have differed in meaningful ways from the researchers who did agree to participate. We also recognise that policy and practice impacts are not the only important outcomes of research. Other outcomes, such as contributions to developing researcher capacity or to knowledge about intervention implementation and the target group or setting, were not examined in this study but are also important.

This analysis illustrates the dissemination and translation practices adopted by Australian health intervention researchers, and how the application of these practices in a logical sequence and with a conducive set of contextual factors can influence policy and practice impacts. Given the complicated interplay between the various factors associated with impact, there is no simple formula for determining which individual intervention studies should be funded to achieve optimal policy and practice impacts. However, research use over time is likely to be enhanced by funding research that replicates and advances the evidence base for existing interventions, or supports the existing lines of research enquiry initiated by individual researchers or research teams, and their ongoing dissemination efforts. Such strategies should not come at the expense of innovative research that is unlikely to achieve immediate or direct impacts on policy and practice, but may help begin new lines of research enquiry.

Acknowledgments

The authors would like to thank the study participants for their time and contribution to this research.

Footnotes

Contributors: The study was co-conceived by all of the authors except RN. All of the authors contributed to the study design. GC and JS completed data collection. The analysis was led by LK and RN. All of the other authors participated in the analysis and interpretation of the data. RN and LK led on drafting the manuscript. All of the other authors contributed to drafting the manuscript. All of the authors have read and approved the final manuscript.

Funding: This work was supported by funding from the National Health and Medical Research Council of Australia (grant number 1024291).

Competing interests: None declared.

Ethics approval: This project had approval from the University of Sydney Human Research Ethics Committee (15003).

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: No additional data are available.

References

- 1. Mitton C, Adair CE, McKenzie E et al. Knowledge transfer and exchange: review and synthesis of the literature. Milbank Q 2007;85:729–68. doi:10.1111/j.1468-0009.2007.00506.x

- 2. Bornmann L. Measuring the societal impact of research. EMBO Rep 2012;13:673–6. doi:10.1038/embor.2012.99

- 3. Martin BR. The Research Excellence Framework and the ‘impact agenda’: are we creating a Frankenstein monster? Res Evaluat 2011;20:247–54. doi:10.3152/095820211X13118583635693

- 4. Frodeman R, Holbrook JB, Bourexis PS et al. Broader Impacts 2.0: seeing—and Seizing—the opportunity. BioScience 2013;63:153–4.

- 5. Banzi R, Moja L, Pistotti V et al. Conceptual frameworks and empirical approaches used to assess the impact of health research: an overview of reviews. Health Res Policy Syst 2011;9:26. doi:10.1186/1478-4505-9-26

- 6. Donovan C. State of the art in assessing research impact: introduction to a special issue. Res Evaluat 2011;20:175–9. doi:10.3152/095820211X13118583635918

- 7. Milat AJ, Bauman AE, Redman S. A narrative review of research impact assessment models and methods. Health Res Policy Syst 2015;13:18. doi:10.1186/s12961-015-0003-1

- 8. Hanney SR, Gonzalez-Block MA. Health research improves healthcare: now we have the evidence and the chance to help the WHO spread such benefits globally. Health Res Policy Syst 2015;13:12. doi:10.1186/s12961-015-0006-y

- 9. Hanney SR, Castle-Clarke S, Grant J et al. How long does biomedical research take? Studying the time taken between biomedical and health research and its translation into products, policy, and practice. Health Res Policy Syst 2015;13:1. doi:10.1186/1478-4505-13-1

- 10. Extrapolated returns on investment in NHMRC medical research (2012). Canberra: Australian Society for Medical Research.

- 11. Centre for Epidemiology and Evidence (2011). Promoting the generation and effective use of population health research in NSW. A Strategy for NSW Health 2011–2015. North Sydney: NSW Ministry of Health.

- 12. Kuruvilla S, Mays N, Walt G. Describing the impact of health services and policy research. J Health Serv Res Policy 2007;12(Suppl 1):9. doi:10.1258/135581907780318374

- 13. Buxton M, Hanney S. How can payback from health services research be assessed? J Health Serv Res Policy 1996;1:35–43.

- 14. Graham KER, Chorzempa HL, Valentine PA et al. Evaluating health research impact: development and implementation of the Alberta Innovates—Health Solutions impact framework. Res Evaluat 2012;21:354–67. doi:10.1093/reseval/rvs027

- 15. Cohen G, Schroeder J, Newson R et al. Does health intervention research have real world policy and practice impacts: testing a new impact assessment tool. Health Res Policy Syst 2015;13:3. doi:10.1186/1478-4505-13-3

- 16. Nutley S, Walter I, Davies H. From knowing to doing. Evaluation 2003;9:125–48. doi:10.1177/1356389003009002002

- 17. Hennink M, Stephenson R. Using research to inform health policy: barriers and strategies in developing countries. J Health Commun 2005;10:163–80. doi:10.1080/10810730590915128

- 18. Milat AJ, Laws R, King L et al. Policy and practice impacts of applied research: a case study analysis of the New South Wales Health Promotion Demonstration Research Grants Scheme 2000–2006. Health Res Policy Syst 2013;11:5. doi:10.1186/1478-4505-11-5

- 19. Walter I, Davies H, Nutley S. Increasing research impact through partnerships: evidence from outside health care. J Health Serv Res Policy 2003;8(Suppl 2):58–61. doi:10.1258/135581903322405180

- 20. Campbell DM, Redman S, Jorm L et al. Increasing the use of evidence in health policy: practice and views of policy makers and researchers. Aust New Zealand Health Policy 2009;6:21. doi:10.1186/1743-8462-6-21

- 21. Innvaer S, Vist G, Trommald M et al. Health policy-makers’ perceptions of their use of evidence: a systematic review. J Health Serv Res Policy 2002;7:239–44. doi:10.1258/135581902320432778

- 22. Oliver K, Innvar S, Lorenc T et al. A systematic review of barriers to and facilitators of the use of evidence by policymakers. BMC Health Serv Res 2014;14:2. doi:10.1186/1472-6963-14-2

- 23. Ross S, Lavis J, Rodriguez C et al. Partnership experiences: involving decision-makers in the research process. J Health Serv Res Policy 2003;8(Suppl 2):26–34. doi:10.1258/135581903322405144

- 24. Haynes AS, Derrick GE, Chapman S et al. From “our world” to the “real world”: exploring the views and behaviour of policy-influential Australian public health researchers. Soc Sci Med 2011;72:1047–55. doi:10.1016/j.socscimed.2011.02.004

- 25. Milat AJ, King L, Bauman AE et al. The concept of scalability: increasing the scale and potential adoption of health promotion interventions into policy and practice. Health Promot Int 2013;28:285–98. doi:10.1093/heapro/dar097

- 26. Milat AJ, King L, Newson R et al. Increasing the scale and adoption of population health interventions: experiences and perspectives of policy makers, practitioners, and researchers. Health Res Policy Syst 2014;12:18. doi:10.1186/1478-4505-12-18

- 27. Yamey G. Scaling up global health interventions: a proposed framework for success. PLoS Med 2011;8:e1001049. doi:10.1371/journal.pmed.1001049

- 28. Wooding S, Hanney SR, Pollitt A et al. Understanding factors associated with the translation of cardiovascular research: a multinational case study approach. Implement Sci 2014;9:47. doi:10.1186/1748-5908-9-47

- 29. Hanney S, Davies A, Buxton M. Assessing benefits from health research projects: can we use questionnaires instead of case studies? Res Evaluat 1999;8:189–99. doi:10.3152/147154499781777469

- 30. Hanney S, Buxton M, Green C et al. An assessment of the impact of the NHS Health Technology Assessment Programme. Health Technol Assess 2007;11:iii–iv, ix–xi, 1–180. doi:10.3310/hta11530

- 31. Donovan C, Butler L, Butt AJ et al. Evaluation of the impact of national breast cancer foundation-funded research. Med J Aust 2014;200:214–18. doi:10.5694/mja13.10798

- 32. Oortwijn WJ, Hanney SR, Ligtvoet A et al. Assessing the impact of health technology assessment in The Netherlands. Int J Technol Assess Health Care 2008;24:259–69. doi:10.1017/S0266462308080355

- 33. Solans-Domenech M, Adam P, Guillamon I et al. Impact of clinical and health services research projects on decision-making: a qualitative study. Health Res Policy Syst 2013;11:15. doi:10.1186/1478-4505-11-15

- 34. Grimshaw JM, Eccles MP, Lavis JN et al. Knowledge translation of research findings. Implement Sci 2012;7: 1748–5908.

- 35. Hawe P, Potvin L. What is population health intervention research? Can J Public Health 2009;100(Suppl 1):8–14.

- 36. Milat AJ, Bauman AE, Redman S et al. Public health research outputs from efficacy to dissemination: a bibliometric analysis. BMC Public Health 2011;11:934. doi:10.1186/1471-2458-11-934

- 37. Wooding S, Hanney S, Pollitt A et al. Project retrosight. Understanding the returns from cardiovascular and stroke research: policy report. Cambridge, UK: RAND Europe MG-1079-RS, 2011.

- 38. Prasad V, Cifu A, Ioannidis JP. Reversals of established medical practices: evidence to abandon ship. JAMA 2012;307:37–8. doi:10.1001/jama.2011.1960

- 39. Bauman A, Nutbeam D. Evaluation in a Nutshell. 2nd edn. Sydney: McGraw-Hill, 2013.