Abstract

Background

Nonresponse bias assessment is an important and underutilized tool in survey research to assess potential bias due to incomplete participation. This study illustrates a nonresponse bias sensitivity assessment using a survey on perceived barriers to care for children with orofacial clefts in North Carolina.

Methods

Children born in North Carolina between 2001 and 2004 with an orofacial cleft were eligible for inclusion. Vital statistics data, including maternal and child characteristics, were available on all eligible subjects. Missing ‘responses’ from nonparticipants were imputed using assumptions based on the distribution of responses, survey method (mail or phone), and participant maternal demographics.

Results

Overall, 245 of 475 subjects (51.6%) responded to either a mail or phone survey. Cost as a barrier to care was reported by 25.0% of participants who answered the question. When stratified by survey type, 28.3% of mail respondents and 17.2% of phone respondents reported cost as a barrier. Under various assumptions, the bias-adjusted estimated prevalence of cost as a barrier to care ranged from 16.1% to 30.0%. Maternal age, education, race, and marital status at time of birth were not associated with subjects reporting cost as a barrier.

Conclusion

As survey response rates continue to decline, assessing the potential impact of nonresponse bias has become increasingly important. Birth defects research is particularly conducive to nonresponse bias analysis, especially when birth defect registries and birth certificate records are used. Future birth defect studies that use population-based surveillance data and have incomplete participation could benefit from this type of nonresponse bias assessment.

Keywords: nonresponse bias, response rates, orofacial clefts, barriers to care

Introduction

Nonresponse bias occurs when there is a systematic difference between respondents and nonparticipants in a survey or questionnaire. While reporting response rates has become common practice for demonstrating the quality of the data collected, the response rate is a flawed measure that does not address the potential effect of nonresponse bias (Fowler et al., 2002; Groves, 2006; Johnson and Wislar, 2012; Davern, 2013; Halbesleben and Whitman, 2013). The assumption that a high response rate indicates a high-quality study or, conversely, that a low response rate implies nonresponse bias is incorrect: even with a high response rate, respondents are not necessarily representative of the larger population. Rather, nonresponse bias is a function of both the response rate and the mean difference between respondents and nonparticipants:

$$\bar{X}_{\text{Respondents}} = \bar{X}_{\text{total}} + P_{NR}\left(\bar{X}_{\text{Respondents}} - \bar{X}_{\text{Nonparticipants}}\right)$$

where $\bar{X}_{\text{Respondents}}$ is the mean value of the outcome of interest among respondents, $\bar{X}_{\text{total}}$ is the true mean value for the entire population, $P_{NR}$ is the proportion of nonresponse (i.e., 1 − response rate), and $\bar{X}_{\text{Nonparticipants}}$ is the mean value of the outcome among nonparticipants (Groves, 2006; Halbesleben and Whitman, 2013). The latter term of the equation, $P_{NR}(\bar{X}_{\text{Respondents}} - \bar{X}_{\text{Nonparticipants}})$, represents the bias introduced by nonresponse. While the mean outcome value for nonparticipants can never truly be known (if it were, they would be respondents), there are multiple strategies to estimate the potential impact of nonresponse bias. Halbesleben and Whitman (2013) recently outlined and compared the strengths and limitations of multiple nonresponse bias assessment methods.
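As a quick check on the decomposition, the short Python sketch below computes the bias term and the population mean for purely hypothetical values (half of the sample not responding, 25% of respondents and 15% of nonparticipants having the outcome). The function names and inputs are illustrative assumptions, not study data.

```python
def nonresponse_bias(p_nr: float, mean_resp: float, mean_nonpart: float) -> float:
    """Bias of the respondent mean: P_NR * (mean_resp - mean_nonpart)."""
    return p_nr * (mean_resp - mean_nonpart)


def population_mean(p_nr: float, mean_resp: float, mean_nonpart: float) -> float:
    """True mean as a mixture: (1 - P_NR) * respondents + P_NR * nonparticipants."""
    return (1 - p_nr) * mean_resp + p_nr * mean_nonpart


# Hypothetical inputs, chosen only for illustration.
p_nr, mean_resp, mean_nonpart = 0.5, 0.25, 0.15
bias = nonresponse_bias(p_nr, mean_resp, mean_nonpart)
total = population_mean(p_nr, mean_resp, mean_nonpart)
print(f"bias = {bias:.3f}, total = {total:.3f}")  # bias = 0.050, total = 0.200

# Rearranging the mixture gives the identity: respondent mean = total + bias.
assert abs(mean_resp - (total + bias)) < 1e-12
```

The response rate alone fixes only $P_{NR}$: with the same 50% nonresponse, the bias is zero whenever the two group means coincide, which is why a response rate by itself says little about bias.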

As survey response rates continue to decline in all areas of research, including birth defects, nonresponse bias assessment is becoming increasingly critical to show the potential impact of the missing data (Groves and Peytcheva, 2008; Davern, 2013). This study illustrates a nonresponse bias sensitivity assessment using a survey on the prevalence of perceived barriers to care for children with orofacial clefts (OFCs) in North Carolina (Cassell et al., 2012, 2013, 2014).

Materials and Methods

SURVEY OF BARRIERS TO CARE AMONG CHILDREN WITH OROFACIAL CLEFTS IN NORTH CAROLINA

Between May and October 2006, questionnaires were mailed in English and Spanish to the parents of eligible children with OFCs in North Carolina to examine barriers to care. Children born between January 1, 2001, and December 31, 2004, in North Carolina, diagnosed with an OFC during their first year of life, and captured by the North Carolina Birth Defects Monitoring Program (NCBDMP) were eligible for inclusion. The NCBDMP is an active, state-wide, population-based birth defects registry that identifies infants with birth defects from all nonmilitary North Carolina hospitals, and links health services use data, vital statistics, and hospital discharge information (NBDPN, 2011). Potential subjects were excluded from the survey if they lived outside of North Carolina, if the eligible child died, or if the eligible child was adopted. Vital statistics data, which included maternal and child demographic information at time of birth, were available for all eligible subjects.

To contact eligible subjects, the questionnaire was initially mailed to the maternal residential address listed on the child’s birth certificate. If no response was received after 1 month, publicly accessible national and state search databases, including public voter registration data, public health department data, and other commercially available proprietary databases, were used to identify other potential residential addresses, and surveys were mailed to each potential residential address. After an additional 2 to 3 months without a response, nonrespondents were traced again using the same databases, contacted by telephone, and asked to complete the survey with a trained phone interviewer (English or Spanish). Survey response method (mail or phone) was recorded for each participant.

The survey included both open- and closed-ended questions that addressed perceived barriers to care, satisfaction with care, and health services use. Closed-ended questions on perceived barriers and satisfaction were scored on a five-point Likert scale: never, almost never, sometimes, often, and almost always. For analysis purposes, these responses were then dichotomized into never/almost never (“never”) and sometimes/often/almost always (“ever”) a problem. ‘Not applicable’ and blank responses were treated as missing.
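The recoding rule above can be written as a small lookup. This is a minimal Python sketch; the function name is hypothetical, responses are assumed to arrive as text labels (the survey's data format is not described), and treating any unrecognized text as missing is an assumption beyond the stated rule for ‘not applicable’ and blank answers.

```python
from typing import Optional

# Grouping of the five-point scale described in the text.
NEVER = {"never", "almost never"}
EVER = {"sometimes", "often", "almost always"}


def dichotomize(response: Optional[str]) -> Optional[str]:
    """Collapse a Likert response to 'never' or 'ever'; return None for missing.

    'Not applicable' and blank answers are missing, as in the study. Treating any
    other unrecognized text as missing is an assumption of this sketch.
    """
    if response is None:
        return None
    key = response.strip().lower()
    if key in NEVER:
        return "never"
    if key in EVER:
        return "ever"
    return None


answers = ["Never", "often", "Not applicable", "", "Almost always", None]
print([dichotomize(a) for a in answers])
# ['never', 'ever', None, None, 'ever', None]
```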

For the purposes of illustration, only 1 of the 35 barriers to care addressed in the survey was chosen for this analysis. Specifically, a nonresponse bias sensitivity assessment is reported below for the dichotomized (never/ever) responses to the following question: “How often was the cost of primary cleft or craniofacial care a problem in the past 12 months when trying to get primary cleft or craniofacial care for your child?” (cost as a perceived barrier to care). Cost as a perceived barrier was selected because it is one of the most commonly reported barriers and its prevalence varied across multiple maternal characteristics (Cassell et al., 2014). For a complete nonresponse bias assessment, all outcome variables of interest should be analyzed.

NONRESPONSE BIAS SENSITIVITY ASSESSMENT

Demographic distributions of maternal (age, education at time of birth, race, ethnicity, and marital status at time of birth) and child (sex, age, OFC diagnosis, presence of other birth defects, preterm birth, and low birth weight) characteristics obtained from the North Carolina vital records for phone respondents, mail respondents, and nonparticipants were compared. The prevalence of cost as a perceived barrier to care was compared across different strata of demographic characteristics and between mail and phone respondents.

Nonparticipant “responses” were then imputed by randomly generating a number between 0 and 1 for each nonparticipant, then applying a missing data assumption to determine whether each nonparticipant would have responded “never” or “ever” to cost being a perceived barrier to care. For example, if the missing data assumption was that 50% of nonparticipants would report cost as a perceived barrier to care, all individuals with generated numbers ≤0.5 would be imputed as reporting cost as a barrier to care (i.e., “ever”), and individuals with generated numbers >0.5 would be imputed as not reporting the barrier (“never”). Nonparticipant ‘responses’ were then included with survey respondents to calculate the overall prevalence of cost as a perceived barrier to care among subjects under the suggested assumption. This process was repeated 10,000 times to obtain a distribution of bias-adjusted estimates.
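The imputation loop can be sketched in a few lines of Python. This is an illustration only (the study used SAS 9.3), and the function name and seed are hypothetical. It assumes, as our reading of the procedure, that the pooled denominator is the respondents who answered the cost question plus all nonparticipants; the counts (49 of 196 answering respondents, 230 nonparticipants) are taken from the Results.

```python
import random
import statistics
from typing import List


def simulate_adjusted_prevalence(n_yes: int, n_answered: int, n_nonparticipants: int,
                                 p_assumed: float, n_sims: int = 10_000,
                                 seed: int = 2006) -> List[float]:
    """Monte Carlo bias-adjusted prevalence under one missing data assumption.

    In each iteration, every nonparticipant receives a uniform draw in [0, 1);
    a draw <= p_assumed is imputed as 'ever' (cost was a barrier), otherwise 'never'.
    Imputed 'ever' counts are pooled with the observed respondents.
    """
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    estimates = []
    for _ in range(n_sims):
        imputed_yes = sum(rng.random() <= p_assumed for _ in range(n_nonparticipants))
        estimates.append((n_yes + imputed_yes) / (n_answered + n_nonparticipants))
    return estimates


# Counts from the Results: 49 of the 196 respondents who answered the cost item
# reported it as a barrier; there were 230 nonparticipants. p_assumed = 0.5 mirrors
# the worked example in the text.
est = simulate_adjusted_prevalence(49, 196, 230, p_assumed=0.5)
q1, _, q3 = statistics.quantiles(est, n=4)
print(f"mean {statistics.mean(est):.3f}, median {statistics.median(est):.3f}, "
      f"IQR {q1:.3f}-{q3:.3f}, range {min(est):.3f}-{max(est):.3f}")
# The mean should be close to the expectation (49 + 0.5 * 230) / 426, about 0.385.
```

The summary statistics printed here (mean, median, interquartile range, minimum, maximum) are the same kinds of summaries the Results report for each assumption.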

Nonparticipant responses were imputed using multiple missing data assumption strategies, which address nonresponse bias through follow-up analysis and sample/population comparison (Glynn et al., 1993; Groves, 2006; Andridge and Little, 2011; Halbesleben and Whitman, 2013). Briefly, in a follow-up analysis, nonparticipants are recontacted using a different survey mode; the secondary respondents (in this example, phone respondents) are then compared with the initial survey respondents and used as a proxy for nonparticipants to estimate potential nonresponse bias. In a sample/population comparison, some information is available on all eligible subjects, and nonparticipants are compared directly with respondents using the available data.

Using the available data and the methods described above, different assumptions were made regarding the nature of the missing data (Little and Rubin, 2002). First, it was assumed that there was no difference, or bias, between respondents (both mail and phone) and nonparticipants (i.e., that data are missing completely at random), which would yield a bias-adjusted estimate identical to the observed estimate. Second, the proportion of nonparticipants reporting cost as a barrier to care was assumed to be the same as the proportion among all respondents when stratified by maternal age, education at time of birth, race, and marital status at time of birth, respectively, meaning that participation is associated with maternal characteristics but not with the outcome of interest (missing at random). This analysis was conducted by stratifying the results by each of these covariates and then applying a different assumption to each stratum when imputing. For example, the assumption applied to nonparticipants ≤30 years old would differ from that applied to nonparticipants >30 years old. Third, the proportion of nonparticipants reporting cost as a barrier to care was assumed to be the same as the proportion of phone respondents who reported cost as a barrier to care. Fourth, the proportion of nonparticipants reporting cost as a barrier to care was assumed to be the same as the proportion of phone respondents when stratified by maternal age, education at time of birth, race, and marital status at time of birth, respectively. Finally, nonparticipants were assumed to be either twice as likely or half as likely as phone respondents to report cost as a perceived barrier to care, meaning that participation is related to the outcome of interest (not missing at random).
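Because each imputed nonparticipant is an independent draw with its stratum's assumed probability, the simulation mean converges to a closed-form expectation. The Python sketch below computes that expectation for several of the assumptions above, using nonparticipant counts from Table 1 and respondent prevalences from Table 2 and the Results. The function name is hypothetical, only the education and marital-status stratifications are shown, and the pooled denominator (196 answering respondents plus 230 nonparticipants) is our assumption about how the estimates were formed. Under that assumption, the all-respondent, education, marital-status, phone, and phone-by-education scenarios round to the 25.0%, 23.1%, 23.4%, 20.8%, and 21.1% reported in the Results, and the half/twice scenarios land within about 0.1 percentage point of the reported 16.1% and 30.0%.

```python
from typing import List, Tuple

# (number of nonparticipants in stratum, assumed probability of reporting cost as a barrier)
Stratum = Tuple[int, float]


def expected_adjusted_prevalence(n_yes: int, n_answered: int, strata: List[Stratum]) -> float:
    """Expected value of the simulated bias-adjusted prevalence.

    Each nonparticipant contributes its stratum's assumed probability, so the expectation
    is (observed 'ever' + sum of n_s * p_s) / (answered respondents + nonparticipants).
    """
    n_nonparticipants = sum(n for n, _ in strata)
    expected_yes = n_yes + sum(n * p for n, p in strata)
    return expected_yes / (n_answered + n_nonparticipants)


N_YES, N_ANSWERED, N_NP = 49, 196, 230
P_ALL, P_PHONE = 49 / 196, 10 / 58   # all respondents; phone respondents

scenarios = {
    # 1. Missing completely at random: nonparticipants resemble all respondents.
    "all respondents": [(N_NP, P_ALL)],
    # 2. Missing at random: stratum counts from Table 1, stratum prevalences from Table 2.
    "all respondents, by education": [(65 + 92, 0.171), (39 + 34, 0.307)],
    "all respondents, by marital status": [(131, 0.285), (99, 0.133)],
    # 3. Follow-up analysis: nonparticipants resemble phone respondents.
    "phone respondents": [(N_NP, P_PHONE)],
    # 4. Phone respondents, stratified by maternal education.
    "phone respondents, by education": [(65 + 92, 0.185), (39 + 34, 0.161)],
    # 5. Not missing at random: twice / half as likely as phone respondents.
    "twice phone respondents": [(N_NP, 2 * P_PHONE)],
    "half phone respondents": [(N_NP, 0.5 * P_PHONE)],
}

for name, strata in scenarios.items():
    pct = 100 * expected_adjusted_prevalence(N_YES, N_ANSWERED, strata)
    print(f"{name:36s} {pct:.1f}%")
```

A closed-form expectation is a useful check on simulation code; the simulation remains necessary for the spread (interquartile range, minimum, maximum) of the bias-adjusted estimates.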

Data were analyzed and simulations conducted using SAS 9.3 (SAS Institute Inc., Cary, NC). Institutional Review Board (IRB) approvals for the North Carolina barriers to care survey were received from the North Carolina Division of Public Health IRB and the University of North Carolina at Chapel Hill Public Health and Nursing IRB.

Results

Initially, 475 eligible subjects were identified through the NCBDMP, and 160 (33.7%) responded to the mail survey. Of the remaining 315 subjects, 115 (36.5%) could be traced to a valid phone number; the remaining 200 could not be located and were considered lost to follow-up. Among those contacted by telephone, 85 (73.9%) completed the phone survey. Overall, 245 (51.6%) individuals participated in the study.
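The participation flow is simple arithmetic on the counts above. A short Python check makes the derived quantities explicit, including that the number who could not be located is 315 − 115 = 200, and that nonparticipants (200 lost to follow-up plus 30 traced subjects who did not complete the phone survey) total 230, the N used for nonparticipants in Table 1.

```python
# Counts reported in the Results.
eligible = 475
mail_respondents = 160
not_mail = eligible - mail_respondents          # did not return the mail survey
traced_by_phone = 115                           # traced to a valid phone number
lost_to_follow_up = not_mail - traced_by_phone  # could not be located
phone_respondents = 85
participants = mail_respondents + phone_respondents
nonparticipants = eligible - participants       # lost to follow-up + phone nonresponse

print(f"mail response rate:       {mail_respondents / eligible:.1%}")         # 33.7%
print(f"traced, of mail nonresp.: {traced_by_phone / not_mail:.1%}")          # 36.5%
print(f"phone completion:         {phone_respondents / traced_by_phone:.1%}")  # 73.9%
print(f"overall response rate:    {participants / eligible:.1%}")             # 51.6%
print(f"lost to follow-up: {lost_to_follow_up}, nonparticipants: {nonparticipants}")  # 200, 230
```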

Compared with the initial mail respondents, both follow-up phone respondents and nonparticipants were more likely to be younger, less educated at the time of birth, and non-white (Table 1). Nonparticipants were also more likely to be Hispanic and unmarried at the time of birth compared with mail respondents. Phone respondents were more likely than mail respondents to have a child with cleft palate only. No other significant differences in child characteristics were observed between phone respondents or nonparticipants when compared with mail respondents. When compared with follow-up phone respondents, nonparticipants were more likely to have only an elementary or some high school education at the time of birth and to be Hispanic (Table 1). Differences in the age distribution of the child were also observed.

TABLE 1.

Distribution of Maternal and Child Characteristics of Mail Respondents Compared to Phone Respondents and Nonparticipants in North Carolina Barriers to Care Survey, 2006

| Characteristic | Mail respondents (N = 160), N (%) | Phone respondents (N = 85), N (%) | p-valueᵃ (phone vs. mail) | Nonparticipants (N = 230), N (%) | p-valueᵃ (nonparticipants vs. mail) | p-valueᵇ (nonparticipants vs. phone) |
|---|---|---|---|---|---|---|
| Maternal characteristics | | | | | | |
| Ageᶜ | | | | | | |
| ≤ 25 years old | 12 (7.5) | 14 (16.5) | 0.05 | 39 (17.0) | **0.006** | 1.0 |
| 26–30 years old | 39 (24.4) | 27 (31.8) | 0.23 | 76 (33.0) | 0.07 | 0.89 |
| 31–35 years old | 50 (31.3) | 22 (25.9) | 0.46 | 66 (28.7) | 0.65 | 0.67 |
| > 35 years old | 59 (36.9) | 22 (25.9) | 0.09 | 49 (21.3) | **<0.001** | 0.44 |
| Education, at time of child’s birth | | | | | | |
| Elementary and some high school | 22 (13.8) | 14 (16.5) | 0.57 | 65 (28.3) | **<0.001** | **0.04** |
| High school graduate | 35 (21.9) | 36 (42.4) | **0.001** | 92 (40.0) | **<0.001** | 0.70 |
| Some college | 44 (27.5) | 20 (23.5) | 0.54 | 39 (17.0) | **0.02** | 0.20 |
| College graduate | 59 (36.9) | 15 (17.7) | **0.002** | 34 (14.8) | **<0.001** | 0.60 |
| Race | | | | | | |
| White | 145 (90.6) | 68 (80.0) | **0.03** | 185 (80.4) | **0.007** | 1.0 |
| Non-white/Otherᵈ | 15 (9.4) | 17 (20.0) | - | 45 (19.6) | - | - |
| Ethnicity | | | | | | |
| Hispanic/Latino | 5 (3.1) | 5 (5.9) | 0.32 | 46 (20.0) | **<0.001** | **0.002** |
| Non-Hispanic/Latino | 155 (96.9) | 80 (94.1) | - | 184 (80.0) | - | - |
| Marital status, at time of child’s birth | | | | | | |
| Married | 127 (79.4) | 59 (69.4) | 0.09 | 131 (57.0) | **<0.001** | 0.05 |
| Not married | 33 (20.6) | 26 (30.6) | - | 99 (43.0) | - | - |
| Child characteristics | | | | | | |
| Sex | | | | | | |
| Female | 66 (41.3) | 38 (44.7) | 0.68 | 100 (43.5) | 0.68 | 0.90 |
| Male | 94 (58.8) | 47 (55.3) | - | 130 (56.5) | - | - |
| Ageᶜ | | | | | | |
| 3 years old | 35 (21.9) | 25 (29.4) | 0.21 | 41 (17.8) | 0.36 | **0.03** |
| 4 years old | 48 (30.0) | 20 (23.5) | 0.30 | 57 (24.8) | 0.30 | 0.88 |
| 5 years old | 44 (27.5) | 14 (16.5) | 0.06 | 66 (28.7) | 0.82 | **0.03** |
| 6 years old | 22 (13.8) | 18 (21.2) | 0.15 | 50 (21.7) | 0.05 | 1.0 |
| 7 years old | 11 (6.9) | 8 (9.4) | 0.46 | 16 (7.0) | 0.99 | 0.48 |
| Cleft type | | | | | | |
| Cleft lip only | 34 (21.3) | 13 (15.3) | 0.31 | 47 (20.4) | 0.90 | 0.34 |
| Cleft palate only | 50 (31.3) | 39 (45.9) | **0.03** | 88 (38.3) | 0.16 | 0.25 |
| Cleft lip with cleft palate | 76 (47.5) | 33 (38.8) | 0.22 | 95 (41.3) | 0.25 | 0.80 |
| Presence of other birth defects | | | | | | |
| Yes | 66 (41.3) | 34 (40.0) | 0.89 | 102 (44.4) | 0.60 | 0.52 |
| No | 94 (58.8) | 51 (60.0) | - | 128 (55.7) | - | - |
| Preterm birth (<37 weeks) | | | | | | |
| Yes | 34 (21.3) | 12 (14.1) | 0.23 | 41 (17.8) | 0.43 | 0.50 |
| No | 126 (78.8) | 73 (85.9) | - | 189 (82.2) | - | - |
| Low birth weight (<2500 grams)ᵉ | | | | | | |
| Yes | 31 (19.4) | 11 (12.9) | 0.22 | 37 (16.1) | 0.42 | 0.60 |
| No | 129 (80.6) | 74 (87.1) | - | 192 (83.5) | - | - |

n = 475; p < 0.05 was used to indicate statistical significance; significant p-values are in bold.

ᵃ Phone respondents and nonparticipants were compared with mail respondents using Fisher’s exact tests.

ᵇ Nonparticipants were compared with phone respondents using Fisher’s exact tests.

ᶜ Age was calculated using the end of study period date (04/30/2008) and date of birth recorded on the birth certificate for both mother and child.

ᵈ ‘Other’ race included Black, American Indian, Chinese, and Other Asian.

ᵉ One nonparticipant child was missing birth weight information on the birth certificate.
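The comparisons in Table 1 used Fisher’s exact tests, run in SAS. The Python sketch below is an illustration, not the authors’ code: it implements the two-sided test with the common definition (sum the hypergeometric probabilities of all tables with the same margins that are no more probable than the observed one). The function name is hypothetical, and we have not checked that it reproduces every p-value in the table; SciPy users would normally call `scipy.stats.fisher_exact` instead. For the Hispanic/Latino comparison of mail respondents with nonparticipants it gives a p-value well below 0.001, consistent with the table.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    With all margins fixed, the top-left cell follows a hypergeometric
    distribution. The two-sided p-value sums the probabilities of every
    attainable table that is no more likely than the observed one.
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    total = comb(n, col1)

    def prob(x: int) -> float:
        # P(top-left cell = x) given the fixed margins
        return comb(row1, x) * comb(n - row1, col1 - x) / total

    p_obs = prob(a)
    lo, hi = max(0, col1 - (n - row1)), min(row1, col1)
    # A tiny relative tolerance keeps tables tied with the observed one despite rounding.
    p = sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-7))
    return min(1.0, p)


# Hispanic/Latino ethnicity, mail respondents vs. nonparticipants (counts from Table 1):
# rows = group, columns = (Hispanic/Latino, non-Hispanic/Latino)
p = fisher_exact_two_sided(5, 155, 46, 184)
print(f"p = {p:.2g}")
```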

Among all survey respondents, 196 (80.0%) answered the question concerning cost as a barrier to care, and 49 (25.0%) of these reported cost as a barrier. Reporting cost as a barrier to care was associated with maternal education at time of birth (p = 0.03) and marital status at time of birth (p = 0.05). Of the 138 mail respondents who answered the question, 39 (28.3%) reported cost as “ever” being a barrier; of the 58 follow-up phone respondents who answered the question, 10 (17.2%) reported cost as a barrier. Twenty-two mail respondents (13.8%) and 27 phone respondents (31.8%) reported “not applicable” or left the question blank. Differences in the prevalence of reporting cost as a barrier to care within maternal and child characteristics were observed between mail and phone respondents (Table 2).

TABLE 2.

Prevalence of Cost as “Ever” Being a Perceived Barrier to Care among Respondents across Maternal and Child Characteristics in the North Carolina Barriers to Care Survey, 2006

| Characteristic | All respondents, N = 49/196 (25.0%), N (%) | Mail respondents, N = 39/138 (28.3%), N (%) | Phone respondents, N = 10/58 (17.2%), N (%) |
|---|---|---|---|
| Maternal characteristicsᵃ | | | |
| Ageᵇ | | | |
| ≤30 years old | 16 (21.6) | 12 (25.5) | 4 (14.8) |
| >30 years old | 33 (27.1) | 27 (29.7) | 6 (19.4) |
| Education, at time of child’s birth | | | |
| High school graduate or less | 14 (17.1) | 9 (17.7) | 5 (18.5) |
| At least some college | 35 (30.7) | 30 (34.5) | 5 (16.1) |
| Race | | | |
| White | 45 (26.8) | 38 (30.2) | 7 (16.7) |
| Non-White/Otherᶜ | 4 (14.3) | 1 (8.3) | 3 (18.8) |
| Marital status, at time of child’s birth | | | |
| Married | 43 (28.5) | 36 (33.3) | 7 (16.3) |
| Not married | 6 (13.3) | 3 (10.0) | 3 (20.0) |
| Child characteristics | | | |
| Sex | | | |
| Female | 25 (29.1) | 20 (34.5) | 5 (17.9) |
| Male | 24 (21.8) | 19 (23.8) | 5 (16.7) |
| Ageᵇ | | | |
| ≤4 years old | 28 (26.4) | 23 (31.1) | 5 (15.6) |
| >4 years old | 21 (23.3) | 16 (25.0) | 5 (19.2) |
| Cleft type | | | |
| Cleft lip only | 10 (31.3) | 10 (41.7) | 0 (0) |
| Cleft palate only | 14 (21.9) | 11 (25.6) | 3 (14.3) |
| Cleft lip with cleft palate | 25 (25.0) | 18 (25.4) | 7 (24.1) |
| Presence of other birth defects | | | |
| Yes | 19 (23.2) | 17 (28.3) | 2 (9.1) |
| No | 30 (26.3) | 22 (28.2) | 8 (22.2) |
| Preterm birth (<37 weeks) | | | |
| Yes | 8 (20.5) | 7 (23.3) | 1 (11.1) |
| No | 41 (26.1) | 32 (29.6) | 9 (18.4) |
| Low birth weight (<2500 grams) | | | |
| Yes | 5 (15.6) | 4 (15.4) | 1 (16.7) |
| No | 44 (26.8) | 35 (31.3) | 9 (17.3) |

ᵃ Due to low frequencies among respondents, ethnicity (Hispanic/non-Hispanic) could not be analyzed.

ᵇ Age was calculated using the end of study period date (04/30/2008) and date of birth recorded on the birth certificate for both mother and child.

ᶜ ‘Other’ included Black, American Indian, Chinese, and Other Asian.

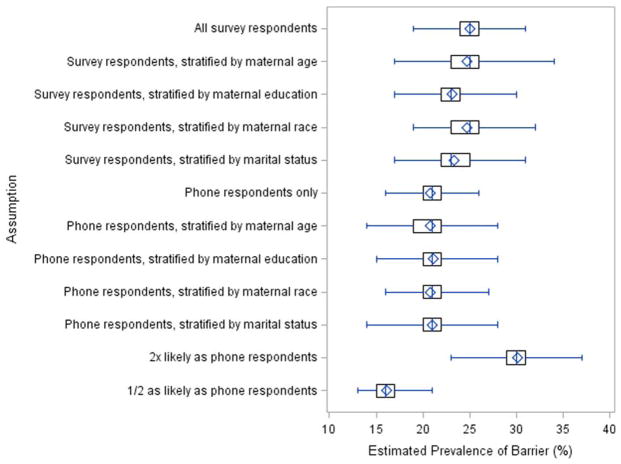

The mean, median, interquartile range, minimum, and maximum of the bias-adjusted prevalences for each assumption are shown in Figure 1. As expected, the mean bias-adjusted prevalence was identical to the observed prevalence (25.0%) when nonparticipants were assumed to have the same distribution of responses as all survey respondents (mail and phone). When the overall reporting distribution was stratified by maternal age, education at time of birth, race, and marital status at time of birth, the estimated mean prevalences corrected for nonresponse were slightly lower (24.7%, 23.1%, 24.7%, and 23.4%, respectively) than the observed overall estimate of 25.0%. When nonparticipant responses were simulated using only phone respondents, the estimated prevalence of cost as a perceived barrier was lower, 20.8%. Similarly, when phone respondents were stratified by maternal age, education at time of birth, race, and marital status at time of birth, the estimated mean prevalences were 20.8%, 21.1%, 20.8%, and 21.0%, respectively. Finally, when nonparticipants were assumed to be twice as likely or half as likely as phone respondents to report cost as a barrier to care, the estimated mean prevalences were 30.0% and 16.1%, respectively.

FIGURE 1.

Estimated prevalence of barrier (%).

Discussion

In general, participation in this survey appeared to be associated with maternal characteristics (age, education at time of birth, race, ethnicity, and marital status at time of birth), but not child characteristics. However, under our simulation scenarios, the selected, documented maternal characteristics had minimal impact on bias-adjusted prevalence estimates of cost as a barrier to care. The difference between respondent types (mail vs. phone) appeared more influential. Nonparticipants were more similar in documented characteristics to follow-up phone respondents than to initial mail respondents. When the underlying prevalence of cost as a perceived barrier to care among nonparticipants was assumed to equal that of phone respondents, as in a follow-up analysis, the estimated bias-adjusted prevalence was approximately 21%, compared with the observed 25% of respondents reporting cost as a perceived barrier to care.

In an analysis in which nonparticipants were assumed to be between half as likely and twice as likely as phone respondents to report cost as a barrier to care, the estimated percentage for whom cost was a perceived barrier ranged from 16% to 30%. Under our simulation scenarios, the maximal absolute difference from the observed estimate was approximately 9 percentage points (16.1% vs. 25.0%), a relative difference of 36%. Overall, the results obtained on cost as a perceived barrier to care do not appear to be substantially biased by nonresponse, even though the survey response rate was relatively low (51.6%); however, no similar studies are available for comparison. That being said, while this assessment provides estimates of the potential magnitude of nonresponse bias, the “true” prevalence of cost as a barrier to care cannot be determined.
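The absolute and relative differences quoted above follow directly from the observed 25.0% and the half- and twice-as-likely scenario means (16.1% and 30.0%) reported in the Results. A few lines of Python make the arithmetic explicit; the variable names are illustrative.

```python
observed = 25.0  # observed prevalence among respondents, %
adjusted = {"half as likely": 16.1, "twice as likely": 30.0}  # bias-adjusted means, %

abs_diff = {k: abs(v - observed) for k, v in adjusted.items()}  # percentage points
rel_diff = {k: d / observed for k, d in abs_diff.items()}       # relative to observed

worst = max(abs_diff, key=abs_diff.get)
print(f"{worst}: {abs_diff[worst]:.1f} percentage points, {rel_diff[worst]:.0%} relative")
# half as likely: 8.9 percentage points, 36% relative
```

Reporting both measures matters: an absolute shift of about 9 percentage points is a relative change of roughly a third for an outcome with a 25% prevalence.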

The strengths of this sensitivity analysis approach to nonresponse bias assessment are its flexibility, its ability to use a reference group (phone respondents) to estimate nonparticipant results, and the availability of demographic information on nonparticipants for stratification purposes. Because phone respondents did not respond to the initial mail survey, and would have been nonparticipants without the follow-up phone survey, they are more likely to be representative of the nonparticipants. Even without the availability of demographic information on nonparticipants, this type of analysis can still be conducted using a more general approach (i.e., estimating bias-adjusted prevalences without stratifying across maternal characteristics). Perhaps most importantly, it requires researchers to critically consider and attempt to quantify the underlying mechanisms of potential bias in participation.

That being said, this type of assessment depends on making reasonable assumptions about the underlying prevalence of the dependent variable among nonparticipants, and on the information available for all eligible subjects being associated with the outcome(s) of interest. Furthermore, if participation and perceiving cost as a barrier to care are both associated with a variable not included in the available outside data, such as type of health insurance, then bias-adjusted estimates that account for that variable cannot be calculated using these methods. Follow-up respondents are also assumed to be representative of nonparticipants in this example; however, follow-up respondents are still respondents and may be an inappropriate proxy. Additionally, because of the small number of telephone respondents and the proportion of them who did not answer the question at hand, the results presented here should be interpreted with caution. The biggest limitation inherent in all nonresponse bias assessments is that, while they attempt to estimate the missing responses from nonparticipants, these responses cannot actually be measured. Regardless of these limitations, it is still of critical importance to attempt to estimate the potential impact of nonresponse bias on study results.

The novel nonresponse bias assessment strategy used in this study combines multiple methods of nonresponse bias assessment and can provide a more reliable picture of study implications than any one method alone. Record linkages between birth defect registries and vital records, used to identify eligible subjects, facilitate nonresponse bias analyses because they provide researchers with information about nonparticipants; nevertheless, assessments like the one conducted in this study have rarely been done. We recommend that this type of assessment be routinely conducted in birth defect studies that use population-based surveillance data and have incomplete participation. Even if information on nonparticipants is limited or unavailable, nonresponse bias assessments can, and should, still be conducted.

It is critical to consider nonresponse bias during the study design phase, and not just after analysis, so that necessary information, like the method of survey response or response wave, is collected and recorded. If there is evidence of nonresponse bias, analytical techniques (such as imputation or weighting) exist to estimate and correct for its impact, although they should be applied with caution, because additional, stronger assumptions must be made when performing these adjustments (Groves, 2006; Johnson et al., 2006; Hoggatt et al., 2009). When these methods are used, we strongly advise that all assumptions be explicitly described for readers. That being said, nonresponse bias is just one potential source of bias in studies involving survey data, and the methods presented here may not directly address bias introduced through other avenues or in combination with nonresponse bias (such as selection bias or recall bias). Generally speaking, researchers need to be thoughtful about the potential impact of nonresponse bias, and of all bias, in their studies. The sensitivity analysis methods presented here show the utility and ease of conducting a nonresponse bias sensitivity assessment and demonstrate how birth defects research could benefit from nonresponse bias assessments.

Acknowledgments

Supported, in part, by a cooperative agreement from the Centers for Disease Control and Prevention (5U01DD000488).

The authors thank Owen Devine for his input and assistance on statistical methodology and simulations, and Suzanne Gilboa for her support of this project. The findings and conclusions in this report are those of the authors and do not necessarily represent the official position of the Centers for Disease Control and Prevention.

Footnotes

The authors have no conflicts of interest to declare.

References

- Andridge RR, Little RJA. Proxy pattern-mixture analysis for survey nonresponse. J Off Stat. 2011;27:153–180.

- Cassell CH, Krohmer A, Mendez DD, et al. Factors associated with distance and time traveled to cleft and craniofacial care. Birth Defects Res A Clin Mol Teratol. 2013;97:685–695. doi: 10.1002/bdra.23173.

- Cassell CH, Mendez DD, Strauss RP. Maternal perspectives: qualitative responses about perceived barriers to care among orofacial clefts in North Carolina. Cleft Palate Craniofac J. 2012;49:262–269. doi: 10.1597/09-235.

- Cassell CH, Strassle P, Mendez DD, et al. Barriers to care for children with orofacial clefts in North Carolina. Birth Defects Res A Clin Mol Teratol. 2014;100:837–847. doi: 10.1002/bdra.23303.

- Davern M. Nonresponse rates are a problematic indicator of nonresponse bias in survey research. Health Serv Res. 2013;48:905–912. doi: 10.1111/1475-6773.12070.

- Fowler FJ, Gallagher PM, Stringfellow VL, et al. Using telephone interviews to reduce nonresponse bias to mail surveys of health plan members. Med Care. 2002;40:190–200. doi: 10.1097/00005650-200203000-00003.

- Glynn RJ, Laird NM, Rubin DB. Multiple imputation in mixture models for nonignorable nonresponse with follow-ups. J Am Stat Assoc. 1993;88:984–993.

- Groves RM. Nonresponse rates and nonresponse bias in household surveys. Public Opin Q. 2006;70:646–675.

- Groves RM, Peytcheva E. The impact of nonresponse rates on nonresponse bias: a meta-analysis. Public Opin Q. 2008;72:167–189.

- Halbesleben JR, Whitman M. Evaluating survey quality in health services research: a decision framework for assessing nonresponse bias. Health Serv Res. 2013;48:913–930. doi: 10.1111/1475-6773.12002.

- Hoggatt KJ, Greenland S, Ritz BR. Adjustment for response bias via two-phase analysis: an application. Epidemiology. 2009;20:872–879. doi: 10.1097/EDE.0b013e3181b2ff66.

- Johnson TP, Cho YI, Campbell RT, Holbrook AL. Using community-level correlates to evaluate nonresponse effects in a telephone survey. Public Opin Q. 2006;70:704–719.

- Johnson TP, Wislar JS. Response rates and nonresponse errors in surveys. JAMA. 2012;307:1805–1806. doi: 10.1001/jama.2012.3532.

- Little RJA, Rubin DB. Statistical analysis with missing data. 2nd ed. New York: John Wiley & Sons, Inc; 2002.

- National Birth Defects Prevention Network (NBDPN). State birth defects surveillance program directory. Birth Defects Res A Clin Mol Teratol. 2011;91:1028–1049.