Abstract

Some listeners with hearing loss show poor speech recognition scores in spite of using amplification that optimizes audibility. Beyond audibility, studies have suggested that suprathreshold abilities such as spectral and temporal processing may explain differences in amplified speech recognition scores. A variety of different methods has been used to measure spectral processing. However, the relationship between spectral processing and speech recognition is still inconclusive. This study evaluated the relationship between spectral processing and speech recognition in listeners with normal hearing and with hearing loss. Narrowband spectral resolution was assessed using auditory filter bandwidths estimated from simultaneous notched-noise masking. Broadband spectral processing was measured using the spectral ripple discrimination (SRD) task and the spectral ripple depth detection (SMD) task. Three different measures were used to assess unamplified and amplified speech recognition in quiet and noise. Stepwise multiple linear regression revealed that SMD at 2.0 cycles per octave (cpo) significantly predicted speech scores for amplified and unamplified speech in quiet and noise. Commonality analyses revealed that SMD at 2.0 cpo combined with SRD and equivalent rectangular bandwidth measures to explain most of the variance captured by the regression model. Results suggest that SMD and SRD may be promising clinical tools for diagnostic evaluation and predicting amplification outcomes.

I. INTRODUCTION

Speech must be audible for listeners to understand it. For listeners with sensorineural hearing loss, improvement in audibility alone does not always alleviate their speech understanding problems. Beyond audibility, several investigators have suggested that psychophysical factors such as temporal and spectral processing might explain why some listeners need more than improved audibility to alleviate their speech understanding problems (e.g., Philips et al., 2000; Davies-Venn and Souza, 2014).

Spectral resolution is defined as a listener's ability to decompose and use the frequency information in a complex signal such as speech. The process of filtering incoming speech into component frequencies occurs at the level of the basilar membrane by a series of overlapping bandpass filters commonly referred to as auditory filters (Moore, 2007). The compressive non-linearity of outer hair cells is closely related to the “sharpness” of the tuning of auditory filters. Narrowly tuned auditory filters are associated with good spectral resolution, and broadly tuned auditory filters are associated with poor spectral resolution (Moore, 2007). Spectral integration is defined as a listener's ability to combine information from a broad range of auditory filters. Spectral integration is essential for resolving changes in overall spectral shape across multiple auditory filters. Spectral resolution and spectral integration provide salient cues that are important for speech understanding (Bor et al., 2008; Leek and Summers, 1996; Summers and Leek, 1994; Swanepoel et al., 2012). A more general term, spectral processing, is often used to discuss measures of both narrowband and broadband spectral resolution as well as spectral integration and will be used from here on out.

The relationship between spectral processing and speech recognition is still inconclusive. Some studies showed a significant role of spectral processing in speech understanding (e.g., Thibodeau and Van Tasell, 1987; Henry et al., 2005; Bernstein et al., 2013; Sheft et al., 2012) while others did not (e.g., Dubno and Schaefer, 1992). The failure to find a significant relationship between auditory filter bandwidth (i.e., narrowband spectral processing) and speech recognition could be explained by the mismatch between frequency selectivity within a localized region of the cochlea and speech perception across a broad range of frequencies. Consider the study of Thibodeau and Van Tasell (1987), which measured discrimination for a synthetic nonsense syllable (/di/ versus /gi/). The two stimuli were similar in all aspects except for a difference in spectral transitions from 1800 to 2500 Hz. Their results showed a strong correlation between auditory filter bandwidth and syllable discrimination. Results from Thibodeau and Van Tasell (1987) suggest that significant relationships exist for speech recognition in quiet, when the bandwidth and spectral locus of the speech recognition task approximate that of the spectral processing measure.

Following from this rationale, recent studies have used spectrally modulated noise (i.e., rippled noise) detection tasks to evaluate the relationship between spectral processing and speech recognition. The relationship between rippled noise perception and speech recognition is significant for both speech in quiet and speech in noise (Henry et al., 2005; Bernstein et al., 2013; Sheft et al., 2012). Henry et al. reported a significant correlation between listeners' spectral-ripple discrimination (SRD) and speech recognition. Bernstein et al. used a spectro-temporal modulation detection task that involved measuring spectral-ripple depth detection (SMD) at various modulation rates. They reported that SMD at 2.0 cycles per octave (cpo) combined with an amplitude modulation rate of 4 Hz significantly predicted speech intelligibility. Sheft et al. reported a significant correlation between listeners' phase-shift detection abilities using rippled noise and their signal-to-noise ratio (SNR) scores for the Quick Speech in Noise (QuickSIN) test by Killion et al. (2004).

Interestingly, the studies with opposing findings also differ in the method used to quantify spectral processing. Classic studies used psychophysical tuning curves: notched-noise with probe signals at specific frequencies in various masking paradigms to estimate the auditory filter bandwidth of individual listeners (e.g., Patterson and Nimmo-Smith, 1980; Green et al., 1987). Auditory filter bandwidths provide an estimate of frequency tuning within a localized region of the basilar membrane and are therefore a measure of narrowband spectral processing. In addition to the classic assessment of narrowband spectral processing using auditory filter bandwidths, investigators have developed other methods to assess spectral processing spanning a broad range of frequencies (e.g., four octaves). These broadband measures include detection of spectrally modulated noise (Bernstein and Green, 1987; Litvak et al., 2007) and detection of phase-inverted or phase-shifted rippled noise (Supin et al., 1994; Sheft et al., 2012; Nechaev and Supin, 2013). Rippled noise, a broadband noise with sinusoidal amplitude variations on a linear or logarithmic frequency axis, is a non-linguistic stimulus that mimics the complex spectral variations of natural sounds and allows assessment of listeners' broadband spectral processing. Rippled noise is characterized by spectral modulation density, depth, and phase. These three metrics have been separately used to assess listeners' ability to detect changes across a broad range of spectral frequencies.
These tasks include the ripple-phase-inversion detection task (e.g., Aronoff and Landsberger, 2013; Drennan et al., 2014; Henry et al., 2005; Sabin et al., 2013; Supin et al., 1994, 1999; Won et al., 2007), the ripple-phase-shift detection task (Sheft et al., 2012; Nechaev and Supin, 2013), and the ripple modulation depth detection task (Bernstein et al., 2013; Bernstein and Green, 1987; Litvak et al., 2007; Sabin et al., 2012a,b; Summers and Leek, 1994; Zhang et al., 2013). In general, these rippled noise tasks measure spectral processing across a broad range of frequencies and thus cannot be assumed to represent individual auditory filter bandwidths at localized regions of the basilar membrane. In essence, broadband spectral processing determined with rippled noise evaluates spectral processing both within individual channels and across multiple auditory channels.

Fundamental differences between the narrowband and broadband measures of spectral processing raise a major unanswered question: whether differences in the methods used to quantify spectral processing account for the discrepancies in the reported relationship between spectral processing and speech recognition. There is a need to understand similarities and differences between these tasks, especially how thresholds for each task relate to speech recognition. The mechanisms responsible for ripple depth detection are still not well understood but have been postulated to involve a spectral-contrast mechanism at ripple densities below 10 cpo; this may be driven by across-channel spectral integration (Anderson et al., 2012). Listeners' performance on the ripple-phase discrimination task may be influenced by mechanisms related to auditory filter tip sharpness and across-channel spectral integration (Supin et al., 1994; Won et al., 2011). Unfortunately, most of the previous studies were completed on listeners with normal hearing or cochlear implants. There are unanswered questions regarding these mechanisms, especially for listeners with impaired acoustic hearing.

To date, the relationship among auditory filter bandwidths, ripple-phase discrimination, and ripple-depth detection has not been assessed in the same listener cohort. In addition, the role of these measures in predicting speech recognition scores and amplification outcomes in the same listener cohort is also not well understood. A comparison of the different measures will provide useful insights into how these tasks are related to each other as well as their predictive strength for various aspects of speech recognition. The current study evaluated the relationship between spectral processing and speech recognition in listeners with normal hearing and hearing loss. Specifically, we investigated whether fundamental differences between narrowband and broadband spectral processing may account for the strength of each measure's relationship with speech recognition. We also wanted to determine which of the broadband measures would best predict speech recognition scores in quiet and noise. We hypothesized that the broadband spectral measures would have a stronger relationship with all measures of speech recognition compared to the narrowband spectral measures.

II. EXPERIMENT 1: SPEECH RECOGNITION

A. Rationale

This experiment measured listeners' speech recognition performance using three different tasks. NU-6 word recognition was used to assess unamplified speech recognition in quiet. A vowel-consonant-vowel /vCv/ nonsense syllable test was used to assess recognition of linearly amplified speech in quiet. The QuickSIN was used to assess speech-in-noise performance.

B. Methods

1. Subjects

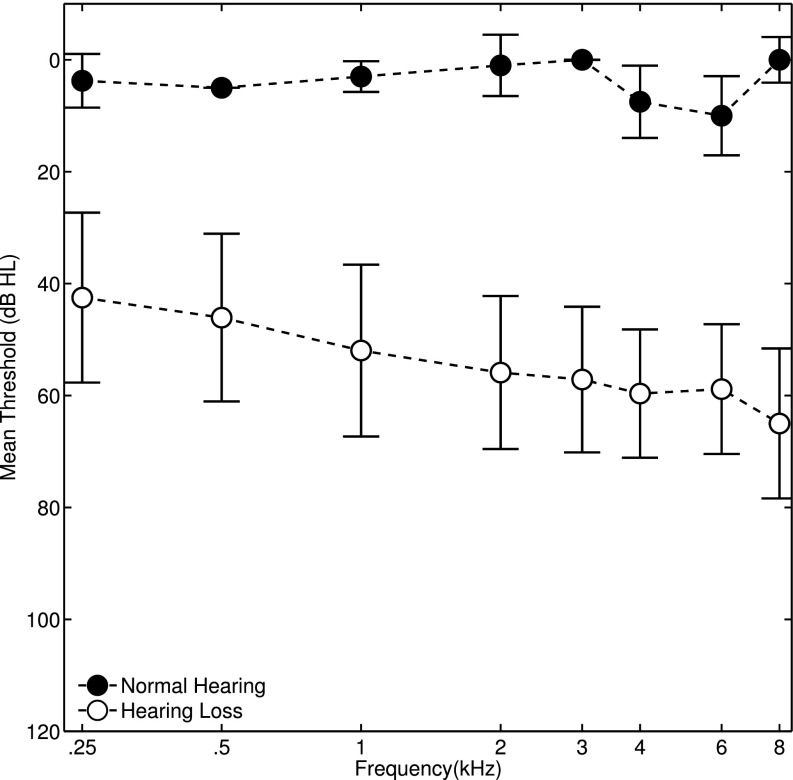

Twenty-eight listeners with sensorineural hearing loss (age in years: M = 65, SD = 16.87; range, 21–89) and five listeners with normal hearing (age: M = 25, SD = 3.35; range, 23–31) took part in this experiment. The mean audiograms for both groups are shown in Fig. 1. Listeners with hearing loss had a mild to severe hearing loss, which was defined by their pure tone average thresholds, in dB hearing level (HL) at octave frequencies from 0.5 to 2 kHz using a criterion of 25–85 dB (PTA in dB HL: M = 50.46, SD = 2.52). The following criteria were used to rule out the presence of a conductive component to listeners' sensorineural hearing loss: the absence of an air-bone gap greater than 10 dB HL from 500 to 4000 Hz, and normal tympanograms (Jerger, 1970). Listeners with normal hearing had thresholds lower than 20 dB HL (PTA in dB HL: M = 3, SD = 1.33). All participants spoke English as their first or primary language. They were all in good general health and reported no significant history of otologic or neurologic disorders. They all had good organic or corrected vision and were able to read all displays on the computer monitor. Listeners with hearing loss were tested at the University of Washington. Listeners with normal hearing were tested at the University of Minnesota. All testing was conducted in accordance with local institutional review board approval. The experimental setup was identical at both locations except for the earphones used. All test stimuli were presented using Sennheiser 280 Pro earphones for listeners with hearing loss and TDH-49 earphones for the listeners with normal hearing. The frequency response of both earphones was approximately flat within the region of interest (i.e., 250–6000 Hz).

FIG. 1.

(Color online) Mean pure-tone thresholds as a function of frequency for listeners with normal hearing and listeners with hearing loss. The filled symbols represent listeners with normal hearing. The open symbols represent listeners with hearing loss. Frequency (kHz) is shown on the x axis, and hearing thresholds (dB HL) are shown on the y axis.

2. Audiologic testing

All audiologic testing was conducted in a double-walled sound-isolating booth using a GSI audiometer. All participants were administered standard audiometric testing, including tympanometry, pure tone air, and bone conduction thresholds. Loudness discomfort levels (LDL) were measured on listeners with hearing loss using warble tones at the octave and inter-octave frequencies from 250 to 8000 Hz. Instructions recommended by Hawkins et al. (1987) were used to determine the LDL values. The measured LDL values were used to ensure that presentation levels of the test stimuli did not exceed each listener's threshold of discomfort.

3. Unamplified speech recognition

Unaided word recognition scores (WRS) were measured at a 30 dB sensation level (SL) above pure-tone average thresholds using a 25-word list from the Northwestern University Auditory Test No. 6 (NU-6) recording by Wilson et al. (1990). Speech recognition performance in noise was evaluated using the QuickSIN test by Killion et al. (2004). Two lists of the QuickSIN test were administered at 70 dB HL for the listeners with normal hearing and mild-to-moderate hearing loss. QuickSIN lists were administered at most comfortable loudness level (MCL) for the listeners with more severe hearing loss. QuickSIN scores, defined as SNR loss (the lowest SNR at which listeners could recognize 50% of the words correctly), were determined using the protocol recommended by the test developers.

4. Amplified speech recognition

a. Stimuli.

The test stimuli were a subset of 16 randomly ordered vowel-consonant-vowel /vCv/ nonsense syllable tokens (i.e., stops and fricatives). The test stimuli consisted of four repetitions of the 16 /vCv/ nonsense syllables. Recordings were made from two male and two female speakers for a total of 64 unique syllables, digitally recorded at a 44.1 kHz sampling rate (Turner et al., 1995). The 16 consonants used were orthographically displayed on a computer monitor as follows: aBa, aDa, aGa, aPa, aTa, aKa, aFa, aVa, atha, aTHa, aSa, aSHa, aCHa, aZHa, aNa, aMa. All test stimuli were processed by applying a custom prescribed National Acoustic Laboratories-Revised (NAL-R) frequency-gain response to each /vCv/ syllable via a locally developed MATLAB program (Byrne and Dillon, 1986). For listeners with normal hearing, the frequency response was matched to that of the amplified conditions, but the presentation level of the speech signal was maintained at 70 dB sound pressure level (SPL) without adding any gain.

b. Procedure.

A within-subjects repeated-measures design was used to evaluate consonant recognition. All listeners were trained to select the stimulus heard from a display of orthographically represented nonsense syllable tokens. All testing was conducted in a sound-isolating booth. Each subject completed two practice blocks prior to actual data collection. Correct-answer visual feedback was provided during the practice blocks. For data collection, 256 randomly ordered vowel-consonant-vowel syllable tokens (i.e., four repetitions of 64 syllables) were presented at 70 dB SPL for each block. Scores (i.e., LNR) were computed by averaging listeners' scores from four blocks. To stabilize the variance, scores were transformed to rationalized arcsine units (RAU) (Sherbecoe and Studebaker, 2004). All statistical analyses were conducted using the RAU transformed speech scores.
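As a point of reference for the RAU transformation, the sketch below uses the commonly cited rationalized arcsine formula, in which a score of X correct out of N items is mapped to (146/π)·θ − 23 with θ = arcsin√(X/(N+1)) + arcsin√((X+1)/(N+1)). It is assumed, not confirmed by the text, that this is the form applied here; the function name `rau` is illustrative.

```python
import math


def rau(correct: int, total: int) -> float:
    """Rationalized arcsine units for `correct` items out of `total`.

    Assumes the commonly cited rationalized arcsine formula; the study's
    exact implementation is not described in the text.
    """
    if total <= 0 or not 0 <= correct <= total:
        raise ValueError("need 0 <= correct <= total and total > 0")
    theta = (math.asin(math.sqrt(correct / (total + 1)))
             + math.asin(math.sqrt((correct + 1) / (total + 1))))
    return (146.0 / math.pi) * theta - 23.0
```

For a 256-token block, a score of 128 correct maps to approximately 50 RAU, while scores near 0% or 100% map slightly outside the 0 to 100 range, which is what spreads out the compressed variance at the extremes.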

C. Analysis and results

NU-6 word recognition scores were used to represent a clinical, audible but unamplified (i.e., no frequency shaping), assessment of speech perception in quiet. Listeners with normal hearing had higher NU-6 scores (M = 94.40, SD = 2.99) compared to listeners with hearing loss (M = 74.57, SD = 3.45). The QuickSIN scores served as a clinical assessment of speech perception in noise. Listeners with normal hearing had thresholds at lower signal-to-noise ratios (M = 0.30, SD = 0.54) compared to listeners with hearing loss (M = 8.45, SD = 0.97). For the linearly amplified nonsense syllables (LNR), listeners with normal hearing had higher speech recognition scores (M = 97.89, SD = 2.63) and less variance compared to listeners with hearing loss (M = 76.06, SD = 13.34). Independent-samples t-tests were used to confirm that listeners with normal hearing performed significantly better than listeners with hearing loss for unamplified speech in quiet (i.e., WRS) [t (31) = 4.337, P < 0.001]; amplified speech in quiet (i.e., LNR) [t (31) = 5.255, P < 0.001];1 and speech in noise (i.e., QuickSIN) [t (31) = −7.380, P < 0.001]. In summary, listeners with normal hearing had better scores compared to listeners with hearing loss on all three measures of speech recognition. As expected, listeners with normal hearing also had less within-group variance compared to listeners with hearing loss.
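The group comparisons above report 31 degrees of freedom, which matches a pooled-variance independent-samples t-test on groups of 5 and 28 listeners (5 + 28 − 2 = 31). A minimal sketch of that statistic follows; the function name `pooled_t` is illustrative, and whether the authors used this exact variant for every comparison is an assumption.

```python
import math
from statistics import mean, variance


def pooled_t(group_a, group_b):
    """Student's t statistic and degrees of freedom, assuming equal variances."""
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    # Pooled variance: sample variances weighted by their degrees of freedom.
    pooled_var = ((n_a - 1) * variance(group_a)
                  + (n_b - 1) * variance(group_b)) / df
    std_err = math.sqrt(pooled_var * (1.0 / n_a + 1.0 / n_b))
    return (mean(group_a) - mean(group_b)) / std_err, df
```

The sign of t depends only on the order of the two groups, which is why the QuickSIN comparison (where lower values are better) carries a negative sign while the two percent-correct measures are positive.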

III. EXPERIMENT 2: SPECTRAL-RIPPLE DEPTH DETECTION (SMD)

A. Rationale

This experiment measured broadband spectral processing using the SMD task. This task measures the smallest spectral modulation depth at which a spectrally modulated “rippled” noise signal could be distinguished from an unmodulated signal at four spectral modulation frequencies (i.e., ripple densities of 0.25, 0.5, 1.0, and 2.0 cpo).

B. Methods

1. Subjects

The same participants from experiment 1 took part in this experiment.

2. Stimuli

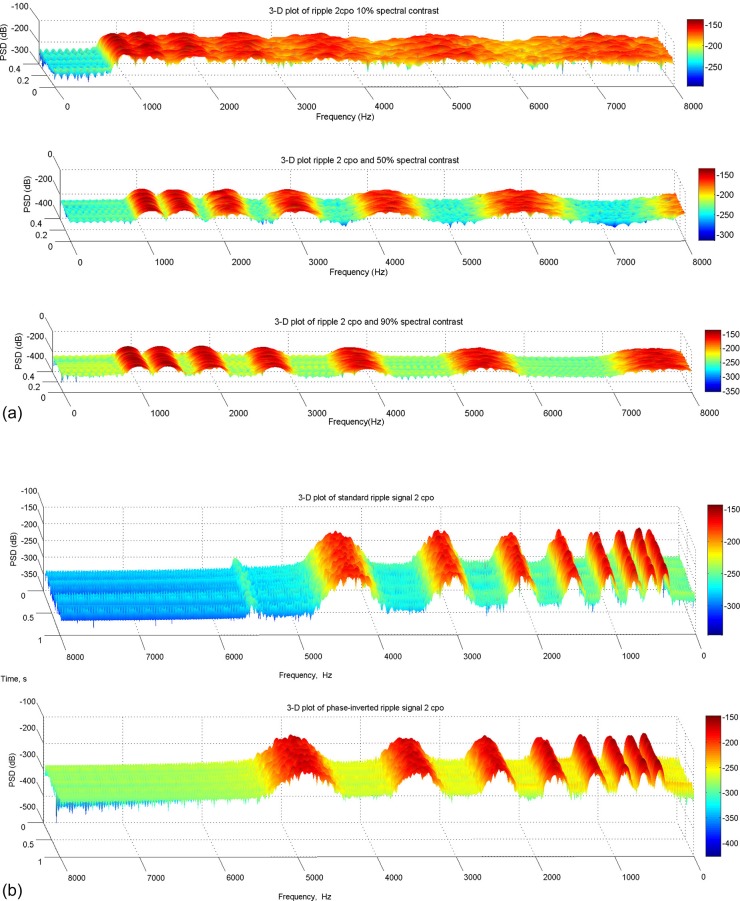

The task used in this experiment is similar to the spectral-ripple depth detection task used by Litvak et al. (2007). All stimuli were generated using MATLAB software. The rippled noise was a four-octave-wide broadband noise with sinusoidal amplitude variations on a log frequency axis from 350 to 5600 Hz. The stimulus duration was 360 ms and included onset and offset 20-ms cos² window ramps designed to limit spectral splatter and potential confounding cues from sharp onset and offset. To create the flat spectrum of the standard stimulus, the spectral contrast (i.e., peak to valley) was set to 0 dB. An illustration of the test stimulus is shown in Fig. 2(a).
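To make the stimulus description concrete, the following sketch computes the spectral envelope of such a ripple, assuming the level in dB varies sinusoidally with log2 frequency and that the stated spectral contrast is the peak-to-valley difference. The function name and the choice of a dB-domain sinusoid are assumptions for illustration, not details taken from the authors' stimulus code.

```python
import math

F_LOW_HZ = 350.0    # lower edge of the noise band (from the text)
F_HIGH_HZ = 5600.0  # upper edge, four octaves above F_LOW_HZ


def ripple_level_db(freq_hz, density_cpo, contrast_db, phase_rad=0.0):
    """Envelope level (dB re: the flat spectrum) at one frequency.

    `contrast_db` is the peak-to-valley contrast, so the sinusoid has an
    amplitude of contrast_db / 2. A contrast of 0 dB gives the flat standard.
    """
    if not F_LOW_HZ <= freq_hz <= F_HIGH_HZ:
        raise ValueError("frequency outside the noise band")
    octaves = math.log2(freq_hz / F_LOW_HZ)
    return (contrast_db / 2.0) * math.sin(
        2.0 * math.pi * density_cpo * octaves + phase_rad)
```

At 2.0 cpo the four-octave band holds eight ripple cycles, compared with a single cycle at 0.25 cpo.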

FIG. 2.

(Color online) (a) Illustration of the spectral ripple stimuli used in the modulation detection task. Spectral contrast is illustrated in the different panels. The SMD task measures the smallest spectral contrast that listeners can detect. (b) Illustration of the spectral ripple-phase-inversion task. For example, at 4000 Hz (top panel) the frequency positions of the log-spaced spectral peaks are inverted in the top compared to the bottom panel. The same change in spectral peaks can be seen at 3000 and 2000 Hz when the standard ripple signal (top panel) is compared with the phase-inverted signal (bottom panel).

3. Procedure

Listeners were instructed to select the stimulus that sounded different from the standard stimulus. This experiment used a cued three-interval, two-alternative forced-choice paradigm. For each trial, the first interval contained the standard (reference) stimulus. Either the second or third interval contained the rippled noise signal. Listeners were instructed to select, using a keyboard and mouse, the interval that sounded different from the standard (reference) stimulus of the first interval. Cueing helps listeners compare intervals on tasks when they can hear the difference but cannot decipher which interval is the standard versus the test signal (Eddins and Bero, 2007). The spectral modulation depth (dB) of the test signal was varied adaptively using a two-down, one-up tracking procedure (Levitt, 1971). With this tracking procedure, the modulation depth of the test signal decreased after two consecutive correct responses and increased after an incorrect response. A 2-dB step size was used for the first three reversals, and a 0.5-dB step size was used for the six remaining reversals. SMD thresholds were determined using the average of the last six reversals. The difficulty of the task increases as ripple density increases. In other words, the more densely spaced the spectral peaks, the harder it is for listeners to distinguish between the flat and the spectrally modulated noise.
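The tracking rule described above can be sketched as follows. This is an illustrative implementation under stated assumptions, not the authors' code: the starting depth, the `respond` callback, and the trial cap are hypothetical, while the step sizes, reversal counts, and averaging rule follow the text.

```python
def two_down_one_up(respond, start_db=20.0, big_step=2.0, small_step=0.5,
                    n_big=3, n_total=9, n_average=6, max_trials=1000):
    """Run one adaptive track; return (threshold_db, reversal_depths).

    `respond(depth_db)` returns True for a correct response (hypothetical
    callback standing in for the listener).
    """
    depth = start_db
    correct_in_row = 0
    last_direction = 0  # -1 after a decrease, +1 after an increase
    reversals = []
    for _ in range(max_trials):
        if respond(depth):
            correct_in_row += 1
            if correct_in_row < 2:
                continue            # need two in a row before stepping down
            direction = -1
        else:
            direction = +1          # one error steps the depth back up
        correct_in_row = 0
        if last_direction and direction != last_direction:
            reversals.append(depth)
            if len(reversals) == n_total:
                return sum(reversals[-n_average:]) / n_average, reversals
        last_direction = direction
        step = big_step if len(reversals) < n_big else small_step
        depth = max(0.0, depth + direction * step)
    raise RuntimeError("track did not reach the required number of reversals")
```

With an idealized listener who is correct whenever the depth is at least 5 dB, the track oscillates between 4.5 and 5.0 dB once the small step size takes over. Real listeners respond probabilistically, and the two-down, one-up rule then converges on the depth yielding about 70.7% correct (Levitt, 1971).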

Mean thresholds were computed by averaging measured thresholds from three repetitions of the task at 0.25, 0.5, 1.0, and 2.0 cpo. The test stimuli were presented at 10 dB SL (re: PTA in dB SPL) for all listeners with hearing loss and 55 dB SPL for listeners with normal hearing. The intensity of the standard and test signals was randomly roved from interval to interval using a ±3 dB range and a 0.5 dB step size. The roving technique by Green et al. (1983) was used to discourage listeners from using local intensity cues to detect the test signal. To reduce possible constant intensity and spectral pitch cues, the starting phase of the test signal was varied across presentations.

C. Analysis and results

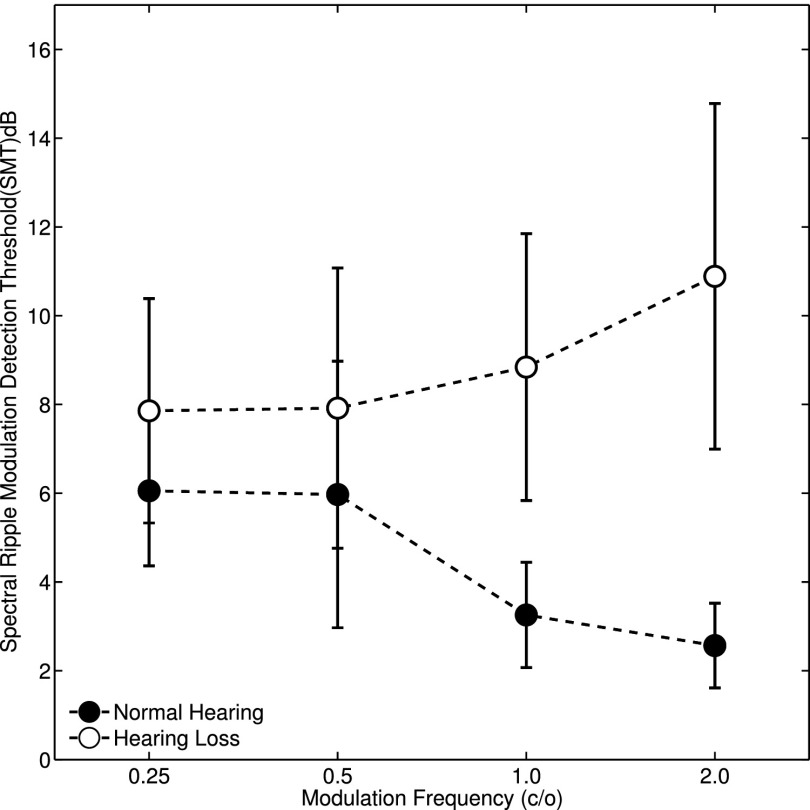

Spectral modulation transfer functions for listeners with normal hearing and hearing loss are shown in Fig. 3. In general, listeners with normal hearing had lower thresholds (i.e., better SMD) compared to listeners with hearing loss, especially at 1.0 and 2.0 cpo. A mixed-model one-way analysis of variance (ANOVA) was used to assess whether thresholds were significantly different across the four ripple densities for listeners with normal hearing compared to listeners with hearing loss. The main effect of SMD was not significant [F(1, 1.76) = 1.712, p = 0.193]. The interaction between SMD and listener group was significant [F(1, 1.76) = 17.91, p < 0.001].2 Post hoc independent-samples t-tests revealed that the significant interaction between listener group and ripple density was influenced by the trend toward lower SMD thresholds with increase in ripple density. Listeners with normal hearing had lower SMD thresholds, at ripple densities of 1.0 and 2.0 cpo, compared to listeners with hearing loss. Bonferroni-corrected paired-sample comparisons confirmed the trend shown in Fig. 3. Only SMD thresholds at 1.0 [t (31) = −3.457, P = 0.002] and 2.0 [t (28.721) = −10.019, P < 0.001] were significantly different between listeners with normal hearing compared to listeners with hearing loss.

FIG. 3.

Spectral ripple modulation transfer function (SMTF) for listeners with normal hearing and hearing loss. The filled symbols represent listeners with normal hearing. The open symbols represent listeners with hearing loss. The error bars indicate ±1 SD about the mean threshold for each listener group. SMD thresholds are shown on the y axis, and ripple density (cpo) is shown on the x axis. Minimal difference is shown in threshold (i.e., SMD) and variance between listeners with normal hearing and hearing loss at low spectral ripple densities (i.e., 0.25 and 0.5 cpo). At higher ripple densities (i.e., 1.0 and 2.0 cpo), listeners with normal hearing had better SMD thresholds and also less variability compared to listeners with hearing loss.

To assess the clinical viability of the SMD task in predicting auditory abilities, we determined the relationship between this measure and each listener's pure tone average thresholds at 500, 1000, and 2000 Hz. Instead of using absolute thresholds at a single frequency, pure tone average thresholds were used as an audiometric assessment of hearing status. Pearson two-tailed correlation revealed that only SMD at 1.0 and 2.0 cpo significantly correlated with PTA. Pearson correlation analysis was also used to evaluate the relationship between SMD and the three speech measures. For unamplified speech in quiet (WRS), SMD at 1.0 and 2.0 cpo approached significance at a Bonferroni alpha value of 0.002. For amplified speech in quiet, SMD at 1.0 and 2.0 cpo were significant at a Bonferroni alpha value of 0.002. For speech in noise (QuickSIN), SMD at 1.0 and 2.0 cpo were significant at a Bonferroni alpha value of 0.002. All correlation results are shown in Table I.
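The two quantities used in this analysis, the Pearson coefficient and the Bonferroni-adjusted alpha, can be sketched as below. The number of comparisons is not stated in the text; 28 (the seven predictors by four outcome columns of Table I) is one reading that is consistent with the reported alpha of 0.002 when starting from a family-wise alpha of 0.05, and it should be treated as an assumption.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    return s_xy / math.sqrt(s_xx * s_yy)


def bonferroni_alpha(family_alpha, n_tests):
    """Per-comparison alpha that keeps the family-wise error at family_alpha."""
    return family_alpha / n_tests
```

Under that assumption, `bonferroni_alpha(0.05, 28)` is about 0.0018, which rounds to the 0.002 criterion quoted above.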

TABLE I.

Correlation coefficients and P values (parentheses) for each of the test conditions. PTA, pure tone average; WRS, word recognition scores; LNR, amplified speech recognition scores; QuickSIN, quick speech in noise test; SMD, spectral modulation detection; SRD, spectral ripple-phase discrimination; ERB, equivalent rectangular bandwidth from auditory filter measures.

| | PTA | WRS | LNR | QuickSIN |
|---|---|---|---|---|
| SMD 0.25 | −0.075 (0.677) | −0.083 (0.645) | −0.263 (0.139) | 0.090 (0.619) |
| SMD 0.5 | 0.155 (0.389) | −0.337 (0.055) | −0.424 (0.014) | 0.297 (0.093) |
| SMD 1.0 | 0.553 (0.001)a | −0.504 (0.003) | −0.729 (0.000)a | 0.561 (0.001)a |
| SMD 2.0 | 0.737 (0.000)a | −0.498 (0.003) | −0.741 (0.000)a | 0.596 (0.000)a |
| SRD | −0.677 (0.000)a | 0.377 (0.058) | 0.724 (0.000)a | −0.497 (0.010) |
| ERB 500 | 0.330 (0.080)b | −0.315 (0.096) | −0.473 (0.010) | 0.277 (0.146) |
| ERB 2000 | 0.455 (0.013)b | −0.265 (0.164) | −0.598 (0.001)a | 0.362 (0.054) |

a Statistical significance at a Bonferroni-adjusted alpha value of 0.002.

b Correlation between ERB and auditory thresholds at 500 and 2000 Hz.

IV. EXPERIMENT 3: SPECTRAL-RIPPLE DISCRIMINATION (SRD)

A. Rationale

This experiment measured broadband spectral processing using the SRD task. This task measures the highest spectral modulation frequency at which listeners could detect an inversion of the phase of a rippled noise, using a fixed spectral modulation depth of 100%.

B. Methods

1. Subjects

Twenty-one listeners with sensorineural hearing loss (age: M = 66, SD = 23.36; range, 21–89) and five listeners with normal hearing (age: M = 25, SD = 2.63) participated in this experiment. These listeners also completed all the other experiments. However, seven of the listeners with hearing loss who completed all the other experiments were unavailable for testing on the SRD task.

2. Stimuli

The task used in this experiment is similar to the test used by Won et al. (2007) on listeners with cochlear implants. To create the 500-ms rippled noise, 200 log-spaced pure tones from 100 to 5000 Hz with random starting phases were summed and shaped with a full-wave rectified sinusoidal function. Fourteen different ripple densities were used in the procedure tracking on ripple density. Beginning at a value of 0.125 cpo, densities were separated by a factor of 1.414, corresponding to 0.125, 0.176, 0.250, 0.354, 0.500, 0.707, 1.000, 1.414, 2.000, 2.828, 4.000, 5.657, 8.000, and 11.314 cpo. Logarithmic scaling of densities gives a closer representation of the non-linear frequency analysis performed by the peripheral auditory system.
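The density series can be reproduced with a short sketch, assuming the stated factor of 1.414 stands for √2 (so that every second step doubles the density). The function name is illustrative.

```python
import math


def ripple_densities(start_cpo=0.125, count=14):
    """Ripple densities in cycles per octave, spaced by a factor of sqrt(2)."""
    return [start_cpo * math.sqrt(2.0) ** k for k in range(count)]
```

The series spans 6.5 octaves of ripple density, from 0.125 to about 11.314 cpo. The second value evaluates to about 0.1768 cpo, so the listed 0.176 appears to be truncated rather than rounded.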

The starting phase of the standard stimuli was generated by multiplying 2π with a randomly selected fraction between 0 and 1. The phase-inverted ripple stimuli were generated by adding radians to the starting phase of the standard rippled stimuli but maintaining the same bandwidth, ripple amplitude (i.e., peak-to-valley ratio) and ripple density in cpo. An illustration of the test stimulus is shown in Fig. 2(b).

3. Procedure

Listeners were instructed to select the stimulus that sounded different (i.e., the phase-inverted stimulus) from an on-screen display using a three-interval, three-alternative forced choice paradigm. All stimuli were presented at 10 dB above the PTA in dB SPL for listeners with hearing loss and 55 dB SPL for listeners with normal hearing. Threshold was determined at 71% correct, using a two-down one-up procedure (Levitt, 1971). A threshold run was terminated after 13 reversals. Each listener completed nine randomized threshold runs. SRD was determined by averaging the ripple density for the last eight reversals. Listeners' SRD thresholds indicate the highest ripple density (i.e., geometric mean) at which they could detect a complete inversion of the phase of the rippled noise. To reduce possible loudness cues, the signal was roved over an 8 dB range using 1 dB steps. To reduce possible spectral pitch cues, the starting phase of the ripple was randomly varied across presentations.

C. Analysis and results

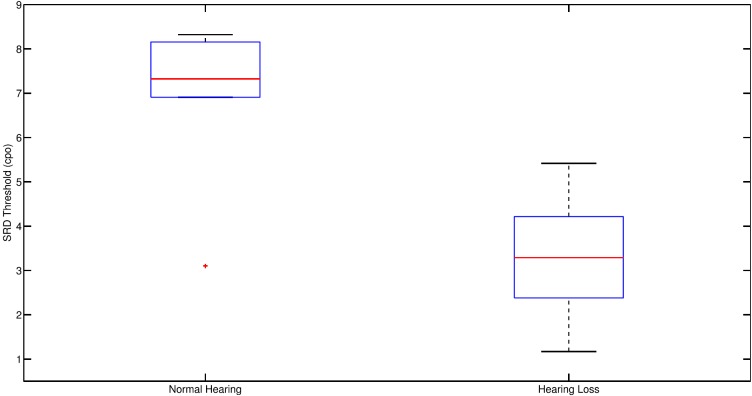

Mean SRD thresholds for listeners with normal hearing and hearing loss are shown in Fig. 4. Listeners with normal hearing had better resolution (i.e., better SRD) than listeners with hearing loss. Listeners with normal hearing could detect the phase inversion at higher ripple densities compared to the listeners with hearing loss. An independent-samples t-test confirmed that SRD thresholds were significantly higher for listeners with normal hearing compared to listeners with hearing loss [t (24) = 4.973, P < 0.001]. Pearson correlation was used to assess the relationship between SRD and PTA as well as speech scores. SRD significantly correlated with PTA and amplified speech recognition scores. All correlation results are shown in Table I.

FIG. 4.

(Color online) Boxplot of spectral ripple-phase discrimination threshold (SRD) for listeners with normal hearing and hearing loss. Listeners with normal hearing had better ripple density discrimination thresholds (i.e., better SRD) compared to listeners with hearing loss. The + symbols represent outliers. Outliers were defined as data values greater than 2 times the interquartile range.

V. EXPERIMENT 4: NOTCHED-NOISE MASKING

A. Rationale

This experiment evaluated narrowband spectral resolution using notched-noise masking to estimate auditory filter bandwidths at 500 and 2000 Hz. Auditory filters were measured on listeners with normal hearing and hearing loss using a modified version of the notched-noise procedure as described by Stone et al. (1992).

B. Methods

1. Subjects

Participants were the same listeners who completed experiments 1 and 2.

2. Stimuli

A 360-ms pure-tone probe signal ($f_c$ = 500 and 2000 Hz) was digitally generated using a sampling rate of 48.8 kHz and 25-ms cosine ramped rise and fall times. To create the masker, a digital noise was generated using a sampling rate of 48.8 kHz. The masker was a 460-ms noise with two noise bands that were positioned either symmetrically or asymmetrically about the probe to create various spectral notch widths. The notch was created by positioning a band of noise with sharp edges (i.e., 115 dB/octave filter slopes) on each side of the probe frequency. The normalized frequency of the lower notch width was defined as $(f_c - f_l)/f_c$, and the upper notch width was defined as $(f_u - f_c)/f_c$; $f_l$ represents the edge of the lower noise band and $f_u$ represents the edge of the upper noise band. $f_c$ represents the frequency of the probe signal. The lower and upper edges of the noise were set at ±0.8 multiplied by the probe signal frequency. Six notch width configurations were used. Four of the notch edges were positioned at symmetrical normalized frequency units of 0, 0.1, 0.2, and 0.4. Two of the notch edges were positioned at asymmetrical normalized frequency units of [0.2, 0.4] and [0.4, 0.2] for the lower and upper noise bands, respectively.
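The six notch configurations can be turned into noise-band edges in Hz with the sketch below. It assumes that a normalized notch width is the distance between the probe and the nearer edge of a noise band divided by the probe frequency, and that the ±0.8 figure gives the outer edges of the two bands; both readings, and all names in the code, are assumptions for illustration.

```python
# (lower, upper) normalized notch widths for the six conditions in the text.
NOTCH_CONDITIONS = [(0.0, 0.0), (0.1, 0.1), (0.2, 0.2), (0.4, 0.4),
                    (0.2, 0.4), (0.4, 0.2)]
OUTER_EDGE = 0.8  # assumed normalized position of the outer band edges


def noise_bands_hz(probe_hz, lower_width, upper_width):
    """Return ((low_start, low_end), (high_start, high_end)) in Hz."""
    lower_band = (probe_hz * (1.0 - OUTER_EDGE), probe_hz * (1.0 - lower_width))
    upper_band = (probe_hz * (1.0 + upper_width), probe_hz * (1.0 + OUTER_EDGE))
    return lower_band, upper_band
```

For the 2000-Hz probe, the asymmetric condition (0.2, 0.4) places the inner band edges near 1600 and 2800 Hz, and the zero-width condition closes the notch so that both bands meet at the probe frequency.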

3. Procedure

Listeners were instructed to detect a 360-ms probe using a two-interval, two-alternative forced-choice procedure. Each listener was trained using a practice block with symmetric and wide notch widths. Correct-answer feedback was provided during the practice and testing sessions. Masked thresholds were obtained using a fixed probe, which was presented at 10 dB SL. The masker level was varied adaptively to estimate the 71% correct threshold level (Levitt, 1971): the intensity of the masking noise was increased after two consecutive correct responses and decreased after a single incorrect response. The step size was 7.5 dB for the first four reversals and reduced to 2 dB for the remaining 10 reversals. Testing included randomized presentations of two blocks of 14 reversals for each of the six notch widths. The mean of the last six reversals was used to calculate the masked threshold for each notch condition.

The shape of the auditory filter was obtained by creating a function relating masked threshold with notch width. The function relating masked threshold to notch widths provides an indication of an individual's ability to detect a probe signal as progressively less noise leaks through the skirts of the auditory filter. The data were fit using the rounded-exponential (roex) model by Patterson et al. (1982), and auditory filter bandwidths were determined in equivalent rectangular bandwidths (ERB) using the Polyfit program by Rosen and Baker (1994).
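For readers unfamiliar with the roex family, the link between the fitted slope parameter and the ERB can be illustrated as follows. The text does not state which roex variant was fit, so this sketch assumes the simplest single-parameter form, W(g) = (1 + pg)exp(−pg), where g is the deviation from the probe frequency normalized by fc; the function names are hypothetical and the code is not the Polyfit program. For this form each side of the filter contributes an area of 2/p, so a symmetric filter has ERB = 4fc/p.

```python
import math


def roex_weight(g, p):
    """roex(p) filter weight at normalized deviation g = |f - fc| / fc."""
    return (1.0 + p * g) * math.exp(-p * g)


def erb_hz(fc, p_lower, p_upper=None):
    """Closed-form ERB in Hz; each filter side has area 2/p in units of fc."""
    if p_upper is None:
        p_upper = p_lower        # symmetric filter
    return fc * (2.0 / p_lower + 2.0 / p_upper)


def erb_numeric(fc, p_lower, p_upper, g_max=2.0, n=20000):
    """Midpoint-rule check of the closed form (tails beyond g_max ignored)."""
    dg = g_max / n
    area = 0.0
    for i in range(n):
        g = (i + 0.5) * dg
        area += (roex_weight(g, p_lower) + roex_weight(g, p_upper)) * dg
    return fc * area


if __name__ == "__main__":
    # Hypothetical slope values chosen only for illustration.
    print(erb_hz(2000.0, 25.0))             # symmetric filter
    print(erb_numeric(2000.0, 25.0, 25.0))  # numerical check of the same filter
    print(erb_hz(2000.0, 15.0, 30.0))       # shallower lower skirt
```

Smaller values of p correspond to shallower filter skirts and therefore to wider ERBs, which is the direction of change expected with cochlear hearing loss.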

C. Analysis and results

All ERBs were transformed to z-scores, which normalized the proportion and allowed equal error bounds for all filter bandwidths (Thornton and Raffin, 1978). The z-transformed ERBs were subjected to Tukey's HSD test for outliers. Auditory filter bandwidths (ZERB) for two listeners at 500 Hz and three listeners at 2000 Hz were identified as outliers and thus excluded from all further statistical analyses.

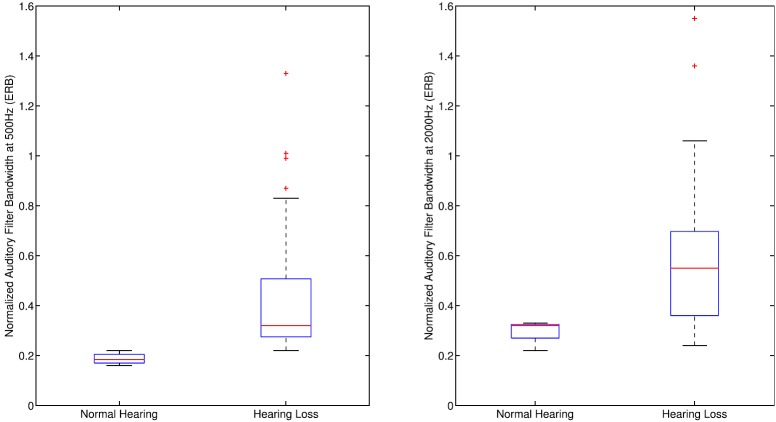

Auditory filter bandwidths (ERB) are shown in Fig. 5 for listeners with normal hearing and listeners with hearing loss. In spite of some overlap in filter bandwidth across the two listener groups, listeners with normal hearing had narrower auditory filter bandwidths compared to some of the listeners with hearing loss. An independent-samples t-test confirmed that listeners with hearing loss had significantly wider ERBs compared to listeners with normal hearing at 500 Hz [t(25.675) = −4.610, P < 0.001]. At 2000 Hz, the ERBs were not significantly different between the two listener groups [t(27) = −1.868, P = 0.073].
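The fractional degrees of freedom reported at 500 Hz are what an unequal-variance (Welch) t-test produces, which is consistent with the footnote on Levene's test. A minimal sketch of that calculation is given below; the data are invented for illustration and are not the study's measurements, and `welch_t` is a hypothetical helper name.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Return (t, df) for an unequal-variance two-sample t-test."""
    va = variance(a) / len(a)    # squared standard error of group a
    vb = variance(b) / len(b)    # squared standard error of group b
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    # Welch-Satterthwaite approximation to the degrees of freedom
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


if __name__ == "__main__":
    # Hypothetical z-scored ERBs, for illustration only.
    normal_hearing = [-1.0, -0.8, -0.9, -1.1, -0.7]
    hearing_loss = [0.2, 1.5, -0.3, 2.0, 0.9, 1.1]
    t, df = welch_t(normal_hearing, hearing_loss)
    print(f"t({df:.3f}) = {t:.3f}")
```

When the two groups have equal size and equal variance, the degrees of freedom reduce to the familiar n1 + n2 − 2, as in the 2000-Hz comparison; otherwise they fall below that value and are generally not whole numbers.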

FIG. 5.

(Color online) Boxplots of normalized auditory filter bandwidths (ERB) for listeners with normal hearing and hearing loss at 500 Hz (left panel) and at 2000 Hz (right panel). The + symbols represent outliers. Outliers were defined as data values greater than 2 times the interquartile range.

Pearson correlation analyses were used to evaluate the relationship between hearing threshold and ERB at 500 and 2000 Hz. Filter bandwidths significantly increased with thresholds at 500 and 2000 Hz. Pearson correlation was also used to evaluate the relationship between ERB and speech scores. Across all listeners, ERB at 500 Hz significantly correlated with the linear amplified speech scores in quiet. At 2000 Hz, the correlation between ERB and speech scores was also significant for the linear amplified speech scores in quiet. All correlation coefficient results are shown in Table I.

VI. GENERAL ANALYSIS AND RESULTS

A. Which measure best predicts speech recognition performance?

This study evaluated the predictive role of various spectral measures on three different measures of speech recognition in quiet and noise. All spectral measures from experiments 2–4 were subjected to stepwise multiple linear regression. The independent variables were the spectral measures (i.e., ERB at 500 and 2000 Hz, SMD at 0.25, 0.5, 1.0, and 2.0 cpo, and SRD). A separate regression model was evaluated with each of the three measures of speech recognition (i.e., WRS, LNR, and QuickSIN) from experiment 1 as the dependent variable. The regression model for WRS indicated that SMD at 2.0 cpo explained 23% of the variance [R² = 0.23, F(1, 24) = 8.98, P = 0.006]. The regression model for LNR scores indicated that SMD at 2.0 cpo explained 63% of the variance [R² = 0.63, F(1, 24) = 41.72, P < 0.001]. The regression model for QuickSIN scores indicated that SMD at 2.0 cpo explained 27% of the variance [R² = 0.27, F(1, 24) = 10.10, P = 0.004]. In summary, the regression models showed that SMD at 2.0 cpo significantly predicted WRS scores (β = −0.53, P < 0.01), LNR scores (β = −0.80, P < 0.001), and QuickSIN scores (β = 0.55, P < 0.01). However, the predictive strength of SMD at 2.0 cpo must be interpreted with caution because of the strong influence of collinearity: SMD at 2.0 cpo correlated with all the other measures of spectral resolution. Commonality analysis was used to assess the unique contribution of each spectral measure to the overall variance explained by each regression model. This analysis decomposes the correlation coefficient in multiple regression into the percent that each independent variable contributes to the overall variance as well as the proportion of variance explained by the common effects among the independent variables (Nathans et al., 2012; Nimon, 2010). Results indicate that SRD and ERB at 2000 Hz were notable contributors to the overall variance explained by each regression model.
The unique and common variance from the commonality analyses are shown in Tables II, III, and IV.
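The logic of commonality analysis is easiest to see with two predictors, where the explained variance splits into two unique components and one shared component. The sketch below works from correlation coefficients only; the values are invented for illustration, the function names are hypothetical, and the seven-predictor analysis reported here involves many more shared components than this two-predictor case.

```python
def r2_two_predictors(r_y1, r_y2, r_12):
    """R-squared for y regressed on two predictors, from correlations.

    r_y1, r_y2: correlations of each predictor with the outcome.
    r_12: correlation between the two predictors (must not be +/-1).
    """
    return (r_y1 ** 2 + r_y2 ** 2 - 2.0 * r_y1 * r_y2 * r_12) / (1.0 - r_12 ** 2)


def commonality_two(r_y1, r_y2, r_12):
    """Split R-squared into unique and common parts for two predictors."""
    r2_full = r2_two_predictors(r_y1, r_y2, r_12)
    unique_1 = r2_full - r_y2 ** 2              # what predictor 1 adds over 2
    unique_2 = r2_full - r_y1 ** 2              # what predictor 2 adds over 1
    common = r_y1 ** 2 + r_y2 ** 2 - r2_full    # variance shared by both
    return {"r2": r2_full, "unique_1": unique_1,
            "unique_2": unique_2, "common": common}


if __name__ == "__main__":
    # Hypothetical correlations, not values from this study.
    parts = commonality_two(r_y1=-0.6, r_y2=-0.5, r_12=0.7)
    for name, value in parts.items():
        print(f"{name}: {value:.3f}")
```

The three parts always sum to the full-model R². A predictor can therefore be selected by stepwise regression while having a small unique component, if most of what it explains is shared with correlated predictors. Common components can also be negative when one predictor acts as a suppressor.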

TABLE II.

Summary of commonality analyses. All listeners' word recognition score (WRS) was the dependent variable, and the seven measures of spectral resolution were the independent predictor variables.

| Spectral measure | Unique | Common |
|---|---|---|
| SMD 0.25 | 0.018 | −0.011 |
| SMD 0.5 | 0.001 | 0.113 |
| SMD 1.0 | 0.012 | 0.242 |
| SMD 2.0 | 0.023 | 0.225 |
| SRD | 0.053 | 0.089 |
| ERB 500 | 0.029 | 0.071 |
| ERB 2000 | 0.228 | 0.019 |

TABLE III.

Summary of commonality analyses. All listeners' score for linear amplified speech was the dependent variable, and the seven measures of spectral resolution were the independent predictor variables.

| Spectral measure | Unique | Common |
|---|---|---|
| SMD 0.25 | 0.009 | 0.055 |
| SMD 0.5 | 0.040 | 0.131 |
| SMD 1.0 | 0.069 | 0.397 |
| SMD 2.0 | 0.001 | 0.463 |
| SRD | 0.252 | 0.152 |
| ERB 500 | 0.013 | 0.190 |
| ERB 2000 | 0.136 | 0.116 |

TABLE IV.

Summary of commonality analyses. All listeners' QuickSIN SNR loss score was the dependent variable and the seven measures of spectral resolution were the independent predictor variables.

| Spectral measure | Unique | Common |
|---|---|---|
| SMD 0.25 | 0.003 | 0.005 |
| SMD 0.5 | 0.044 | 0.045 |
| SMD 1.0 | 0.049 | 0.266 |
| SMD 2.0 | 0.000 | 0.355 |
| SRD | 0.074 | 0.174 |
| ERB 500 | −0.002 | 0.078 |
| ERB 2000 | 0.226 | 0.089 |

B. What are the relationships between the different measures of spectral processing?

One aim of this study was to evaluate whether the different spectral measures were governed by the same underlying spectral processing mechanism. Pearson product-moment correlations were used to explore the relationships among the seven different measures of spectral processing for listeners with normal hearing and hearing loss. Several of these correlations were statistically significant, indicating a strong relationship between these tasks. SMD at 2.0 cpo significantly correlated with all SMD, ERB, and SRD measures; this suggests that similar mechanisms (i.e., spectral processing) may govern thresholds for these tasks. There were significant correlations between ERB at 500 Hz and SMD at 0.5, 1.0, and 2.0 cpo, suggesting that localized regions with best resolution influence detection thresholds for broadband measures of spectral processing. The relationship between these spectral measures was then subjected to a first-order partial correlation analysis to explore the relationship between SMD at 0.25, 0.5, 1.0, and 2.0 cpo and SRD and ERB at 500 and 2000 Hz while controlling for the effects of pure-tone average (PTA) thresholds. SMD at 2.0 cpo significantly correlated with all the other measures. This finding indicates that a relationship between the spectral measures exists above and beyond PTA. All correlation coefficients are shown in Table V.
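A first-order partial correlation removes the linear influence of a single control variable (here, PTA) from the correlation between two measures. It can be computed directly from the three pairwise Pearson coefficients, as in the sketch below; the input values are hypothetical and `partial_corr` is an illustrative name, not a function from the software used in the study.

```python
import math


def partial_corr(r_xy, r_xz, r_yz):
    """First-order partial correlation of x and y, controlling for z."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1.0 - r_xz ** 2) * (1.0 - r_yz ** 2))


if __name__ == "__main__":
    # Hypothetical coefficients: x and y are two spectral measures, z is PTA.
    print(partial_corr(r_xy=0.65, r_xz=0.50, r_yz=0.40))
    # If x and y are related only through z, the partial correlation vanishes.
    print(partial_corr(r_xy=0.42, r_xz=0.60, r_yz=0.70))
```

A partial correlation that remains sizable after this adjustment is what supports the statement that the spectral measures are related above and beyond audiometric thresholds.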

TABLE V.

Pearson correlation coefficients and alpha values (in parentheses) for assessment of the relationship between the different broadband spectral measures and narrowband spectral measures. SMD, spectral modulation detection; SRD, spectral ripple-phase discrimination; and ERB, equivalent rectangular bandwidths.

| | SRD | ERB (500 Hz) | ERB (2000 Hz) |
|---|---|---|---|
| SMD 0.25 | −0.432 (0.027) | 0.441 (0.017) | −0.019 (0.922) |
| SMD 0.5 | −0.510 (0.008) | −0.644 (0.000)a | 0.019 (0.923) |
| SMD 1.0 | −0.743 (0.000)a | 0.685 (0.000)a | 0.245 (0.199) |
| SMD 2.0 | −0.761 (0.000)a | 0.654 (0.000)a | 0.520 (0.004)a |
| SRD | | −0.538 (0.008) | −0.339 (0.114) |

a: Significant at the Bonferroni-adjusted alpha value of 0.004.

VII. GENERAL DISCUSSION

This study evaluated the relationship between auditory filter bandwidths, spectral ripple-phase discrimination, and spectral ripple-depth detection in listeners with normal hearing and hearing loss. Second, the role of these spectral measures in predicting speech recognition scores and amplification outcomes in the same listeners was also assessed. SMD at 2.0 cpo significantly predicted speech scores for unamplified and amplified speech in quiet and for speech in noise. Results from commonality analyses showed higher values for the unique variance accounted for by SRD and ERB at 2000 Hz, suggesting that these measures contributed to the overall variance accounted for by SMD in the regression model.

The finding that SMD at 2.0 cpo was a significant predictor of speech recognition performance is consistent with previous reports. Bernstein et al. (2013) evaluated the relationship between speech recognition and ripple perception in listeners with normal hearing and impaired acoustic hearing using a four-octave-wide noise with spectral modulations at 0.5, 1.0, 2.0, and 4.0 cpo and temporal modulations at 4, 12, and 32 Hz. Spectro-temporal modulation (STM) sensitivity at 2.0 cpo and 4 Hz was the only significant predictor of speech intelligibility. Mehraei et al. (2014) assessed how well STM predicted speech recognition in noise using narrowband (i.e., 1-octave-wide) noise carriers combined with different spectral and temporal modulation rates. They reported that a modified version of the speech intelligibility index (SII) model, which incorporated a combination of STM for 1000 Hz, 2 cpo, and 4 Hz and for 4000 Hz, 4 cpo, and 4 Hz, accounted for 72% of the variance in scores. In contrast, the significant correlation between SMD at 2.0 cpo and speech scores for listeners with impaired acoustic hearing differs from reports for listeners with cochlear implants, where SMD at 0.25 and 0.5 cpo significantly correlates with speech scores (Anderson et al., 2012; Saoji et al., 2009). These contrasting findings require further evaluation.

With regard to the role of SRD in predicting speech scores, Henry et al. (2005) also reported a significant correlation between SRD and speech recognition in quiet, using similar /vCv/ stimuli. The significant correlation between SMD and clinical measures such as WRS and QuickSIN is promising and may indicate potential clinical viability for the SMD task, especially at ripple densities of 1.0 and 2.0 cpo. Results from Zhang et al. (2013) also support the clinical viability of the SMD task. They reported that SMD at 1.0 cpo was the best predictor of how much additional improvement listeners would receive from combining acoustic and electric hearing (i.e., bimodal benefit). SMD was much better at predicting bimodal benefit compared to audiometric thresholds or clinical measures of speech recognition in the acoustically aided ear. Published ripple data for listeners with impaired acoustic hearing are scant. We are only able to compare our findings with results from Henry et al. (2005) (SRD: range, 0.5–5.5) and Zhang et al. (2013) (SMD at 1.0 cpo: range, 9–18 dB). Despite slight differences in task design and stimulus parameters between studies, the threshold ranges reported in our study for SMD (2.53–15.40) and SRD (1.17–8.32) are comfortably within the ranges reported in these previous studies for listeners with impaired acoustic hearing.

A. Is there something special about SMD at 2.0 cpo?

In the present study, SMD at 2.0 cpo was the only significant predictor of all amplified and unamplified (but audible) speech in quiet and noise. Interestingly, the few studies that evaluated ripple perception at different densities have also reported a notable and often statistically significant improvement in SMD from 1.0 to 2.0 cpo. Despite differences in ripple parameters such as bandwidth and peak-to-valley ratio (i.e., spectral modulation depth), a few other investigators have reported optimal SMD thresholds at either 2.0 or 3.0 cpo. Other investigators such as Bernstein and Green (1987) and Summers and Leek (1994) used slightly different ripple parameters from those of the current study, but they also reported a similar trend in the spectral modulation transfer functions. Eddins and Bero (2007) evaluated SMD with 12 different configurations of bandwidth (octaves) and frequency (200–12 800 Hz). They reported that irrespective of the bandwidth (1, 2, 3, and 6 octaves) condition, the three listeners with normal hearing had best SMD at either 2.0 or 3.0 cpo. The spectral modulation transfer function from their results (i.e., Fig. 2) also showed a trend toward a steep improvement in SMD from 1.5 to 2.0 cpo with a further decline in SMD at ripple densities beyond 3.0 cpo. This trend of an improvement in SMD within 1.33–3.0 cpo followed by a decline at higher ripple densities persisted and was unaffected by randomizing versus fixing the starting phase as well as roving the intensity levels across trials. Their report further supports the claim that optimal spectral processing is unaffected by or not related to phase or level cues (Eddins and Bero, 2007). Most recently, Anderson et al. (2012) reported spectral modulation transfer functions on three listeners with normal hearing, and their results also showed an improvement in SMD between 1.5 and 2.0 cpo with best spectral processing at 3.0 cpo followed by a decline in SMD with increase in ripple density. 
Lateral inhibition may explain a portion of this consistent finding of optimal spectral processing at 2.0 and 3.0 cpo, but the presence of this trend in some listeners with hearing loss suggests that inhibition may not be the only mechanism responsible for this phenomenon. Further studies are needed to understand factors that influence the functional role of inhibition and other mechanisms in the region of best spectral processing for the spectral-ripple depth detection task.

B. Do the same mechanisms account for SMD and SRD?

This study also evaluated the relationship between spectral ripple-phase discrimination and spectral ripple modulation detection in listeners with hearing loss and listeners with normal hearing. Across all listeners, SRD significantly correlated with all four SMD measures. These results suggest that both tasks measure similar aspects of spectral processing in this cohort of listeners with normal and impaired acoustic hearing. Put another way, listeners may be using the same skill sets, or may be limited by the same mechanism (which could be acoustic, perceptual, or task-related), for both the spectral-ripple detection and discrimination tasks.

Our current findings suggest that overall broadband ripple perception may be governed by either low-frequency regions or regions of best spectral processing. Coincidentally, our listeners had narrower filter bandwidths in the lower frequencies compared to higher frequencies, and this makes it difficult to tease apart the two contributing factors. Mehraei et al. (2014) evaluated the effect of carrier frequency on STM and its relationship to hearing status and speech recognition performance. They used an octave-wide noise with different carrier frequencies of 500, 1000, 2000, and 4000 Hz. STM detection was determined for various combinations of spectral modulations at 0.5, 1.0, 2.0, and 4.0 cpo and temporal modulations at 4, 12, and 32 Hz. Significant differences between listeners with normal hearing and hearing loss were seen for temporal modulations of 4 and 12 Hz combined with spectral modulation of 2.0 cpo using a 1000 Hz carrier frequency. Their results showed a similar trend using a 500 Hz carrier, but the difference between the two listener groups did not reach statistical significance.

Anderson et al. (2011) evaluated the relationship between narrowband and broadband measures of SMD in listeners with cochlear implants. Their results for electrically aided listeners suggest that the region of best resolution correlated with overall broadband ripple perception. Their results are in agreement with our current findings that the low-frequency region with narrower filter bandwidths best correlated with SMD thresholds. Won et al. (2014) reported that global spectral processing rather than octave-wide spectral processing was a good predictor of vowel recognition performance. On the other hand, Anderson et al. (2012) directly assessed the relationship between these different measures of spectral processing in listeners with cochlear implants. For their listeners with cochlear implants, the localized basilar membrane region of best spectral processing best predicted overall thresholds for the rippled noise task. However, this may not entirely explain our current findings because of the logarithmic scale used for the rippled noise stimuli. Put together, these findings suggest that the perception of rippled noise may be governed by the integrity of individual auditory filters as well as across-channel spectral processing and possibly the ability to use temporal fine structure information. Mehraei et al. (2014) evaluated STM for different octave-wide noise band carriers with different spectral and temporal modulation rates. They reported significant differences between listeners with normal hearing and hearing loss for a carrier frequency of 1000 Hz combined with spectral modulation of 2 cpo and temporal modulations of 4 and 12 Hz. Furthermore, Bernstein et al. (2013) showed that STM sensitivity was best predicted by temporal fine structure (TFS) processing at 500 Hz. Additional comparisons with frequency modulation (FM) detection thresholds from Summers et al. (2013) showed that FM sensitivity at 500 Hz significantly predicted STM sensitivity.
Put together, these studies present a compelling argument for the role of TFS ability, from phase-locking information provided by auditory nerve fibers, in improving listeners' ability to detect slow-moving spectral peaks at low modulation rates. Despite some differences between the stimuli, our findings are similar to those reported by Summers et al. (2013). These findings suggest that listeners' STM detection ability, and perhaps spectral modulation detection ability, is influenced by a combination of within- and across-channel spectral processing and TFS processing. These findings contrast with those for listeners with cochlear implants. Anderson et al. (2012) examined the relationship between SMD and SRD performance in the same listeners with cochlear implants. They suggested that different mechanisms may be driving listeners' detection due to discrepancies between the tasks. Further studies are needed to explore the exact mechanisms that drive the similarities and potential differences between these two tasks for listeners with acoustic compared to electric impaired hearing.

VIII. CONCLUSIONS

Our findings indicate a strong correlation between all the spectral measures, suggesting that spectral ripple detection and discrimination thresholds may be driven by similar mechanisms of spectral processing. Auditory filter bandwidths at 500 Hz significantly correlated with SMD measures, suggesting that localized regions of optimal narrowband spectral processing may have a great influence on detection thresholds for broadband measures of spectral processing. Second, SMD at 2.0 cpo significantly predicted all speech measures. High values for the unique variance explained by other measures such as SRD and ERB at 2000 Hz suggest that the overall variance explained by each regression model was influenced by collinearity between the measures.

For the spectral ripple modulation depth detection task (SMD), significant differences between listeners with normal hearing and hearing loss were only present at 1.0 and 2.0 cpo. In other words, the ripple modulation detection task was sensitive to differences between the listeners with normal hearing and hearing loss only at mid-densities. SMD at 1.0 and 2.0 cpo also significantly correlated with speech scores in quiet and noise.

For the spectral ripple density discrimination task, the maximum ripple density at which listeners with hearing loss were able to discriminate the phase-inverted ripple signal was lower compared to listeners with normal hearing. In other words, SRD was sensitive to differences in hearing status. SRD significantly correlated with amplified speech recognition in quiet.

Auditory filter bandwidth significantly correlated with pure-tone thresholds at 2000 Hz. Auditory filter bandwidth also significantly correlated with amplified speech recognition scores (i.e., LNR). The correlation between auditory filter bandwidths and any of the clinical measures of speech recognition (i.e., WRS and QuickSIN) was not significant.

SMD at 2.0 cpo significantly predicted all measures of speech perception. Commonality analyses revealed that a combination of SMD at 2.0 cpo, SRD and ERB at 2000 Hz accounted for most of the variance covered by the regression model. The significant correlation between these spectral measures and clinical measures such as NU-6 WRS and QuickSIN is promising and may indicate potential clinical viability for the spectral ripple modulation depth detection task. Time efficient measures of the ripple task (Drennan et al., 2014; Gifford et al., 2014; Molis et al., 2014) may present a clinically valid tool that can be used for diagnostic evaluations and predicting amplification outcomes for listeners with hearing loss.

SRD significantly correlated with all four SMD measures. For listeners with normal hearing and acoustic impaired hearing, similar spectral processing mechanisms may be driving performance on both tasks. In addition, auditory filter bandwidths at 500 Hz significantly correlated with all SMD and SRD measures; this suggests that localized frequency regions of best resolution may contribute greatly to overall broadband ripple perception. Further research is needed to determine the effect of localized regions of spectral processing on overall spectral shape perception for listeners with acoustic impaired hearing.

ACKNOWLEDGMENTS

This research was supported by grants from the National Institute on Deafness and Other Communication Disorders [NIDCD Grant Nos. F31 DC010127 (E.D.V.), R01 DC008306 (P.N.), and R01 DC006014 (P.S.)]. The authors would like to thank the editor, three anonymous reviewers, Heather Kreft, and Andrew Oxenham for providing helpful comments on a previous version of this manuscript. We are grateful to all the study participants for their time and dedicated efforts.

A portion of this work was presented in “Evaluating the relationship between three different measures of spectral resolution,” at the American Auditory Society Research Meeting, Scottsdale, AZ, March, 2011.

Footnotes

Unequal variance was assumed for all Student t-tests when Levene's test for equality of variance was significant.

For all conditions where the assumption of sphericity was violated, Greenhouse-Geisser degrees of freedom are reported.

References

- 52. Anderson, E. S. , Nelson, D. A. , Kreft, H. , Nelson, P. B. , and Oxenham, A. J. (2011). “ Comparing spatial tuning curves, spectral ripple resolution, and speech perception in cochlear implant users,” J. Acoust. Soc. Am. 130, 364–375. 10.1121/1.3589255 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 1. Anderson, E. S. , Oxenham, A. J. , Nelson, P. B. , and Nelson, D. A. (2012). “ Assessing the role of spectral and intensity cues in spectral ripple detection and discrimination in cochlear-implant users,” J. Acoust. Soc. Am. 132, 3925–3934. 10.1121/1.4763999 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Aronoff, J. M. , and Landsberger, D. M. (2013). “ The development of a modified spectral ripple test,” J. Acoust. Soc. Am. 134, EL217–EL222. 10.1121/1.4813802 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3. Bernstein, J. G. W. , Mehraei, G. , Shamma, S. , Gallun, F. , Theodoroff, S. M. , and Leek, M. R. (2013). “ Spectrotemporal modulation sensitivity as a predictor of speech intelligibility for hearing-impaired listeners,” J. Am. Acad. Audiol. 24, 293–306. 10.3766/jaaa.24.4.5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4. Bernstein, L. R. , and Green, D. M. (1987). “ The profile-analysis bandwidth,” J. Acoust. Soc. Am. 81, 1888–1895. 10.1121/1.394753 [DOI] [Google Scholar]

- 5. Bor, S. , Souza, P. , and Wright, R. (2008). “ Multichannel compression: Effects of reduced spectral contrast on vowel identification,” J. Speech Lang. Hear. Res. 51, 1315–1327. 10.1044/1092-4388(2008/07-0009) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Byrne, D. , and Dillon, H. (1986). “ The National Acoustic Laboratories' (NAL) new procedure for selecting the gain and frequency response of a hearing aid,” Ear Hear. 7, 257. 10.1097/00003446-198608000-00007 [DOI] [PubMed] [Google Scholar]

- 7. Davies-Venn, E. , and Souza, P. (2014). “ The role of spectral resolution, working memory, and audibility in explaining variance in susceptibility to temporal envelope distortion,” J. Am. Acad. Aud. 25(6), 592–604. 10.3766/jaaa.25.6.9 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Drennan, W. R. , Anderson, E. S. , Won, J. H. , and Rubinstein, J. T. (2014). “ Validation of a clinical assessment of spectral ripple resolution for cochlear implant users,” Ear Hear. 35, E92–E98. 10.1097/AUD.0000000000000009 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Dubno, J. R. , and Schaefer, A. B. (1992). “ Comparison of frequency selectivity and consonant recognition among hearing-impaired and masked normal-hearing listeners,” J. Acoust. Soc. Am. 91, 2110–2121. 10.1121/1.403697 [DOI] [PubMed] [Google Scholar]

- 10. Eddins, D. A. , and Bero, E. M. (2007). “ Spectral modulation detection as a function of modulation frequency, carrier bandwidth, and carrier frequency region,” J. Acoust. Soc. Am. 121, 363–372. 10.1121/1.2382347 [DOI] [PubMed] [Google Scholar]

- 11. Gifford, R. H. , Hedley-Williams, A. , and Spahr, A. J. (2014). “ Clinical assessment of spectral modulation detection for adult cochlear implant recipients: A non-language based measure of performance outcomes,” Int. J. Aud. 53, 159–164. 10.3109/14992027.2013.851800 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12. Green, D. M. , Kidd, G., Jr. , and Picardi, M. C. (1983). “ Successive versus simultaneous comparison in auditory intensity discrimination,” J. Acoust. Soc. Am. 73, 639–643. 10.1121/1.389009 [DOI] [PubMed] [Google Scholar]

- 14. Green, D. M. , Onsan, Z. A. , and Forrest, T. G. (1987). “ Frequency effects in profile analysis and detecting complex spectral changes,” J. Acoust. Soc. Am. 81, 692–699. 10.1121/1.394837 [DOI] [PubMed] [Google Scholar]

- 15. Hawkins, D. B. , Walden, B. E. , Montgomery, A. , and Prosek, R. A. (1987). “ Description and validation of an LDL procedure designed to select SSPLSO,” Ear Hear. 8, 162–169. 10.1097/00003446-198706000-00006 [DOI] [PubMed] [Google Scholar]

- 16. Henry, B. A. , Turner, C. W. , and Behrens, A. (2005). “ Spectral peak resolution and speech recognition in quiet: Normal hearing, hearing impaired, and cochlear implant listeners,” J. Acoust. Soc. Am. 118, 1111–1121. 10.1121/1.1944567 [DOI] [PubMed] [Google Scholar]

- 17. Jerger, J. (1970). “ Clinical experience with impedance audiometry,” Arch. Otolaryngol. 92, 311–324. 10.1001/archotol.1970.04310040005002 [DOI] [PubMed] [Google Scholar]

- 18. Killion, M. C. , Niquette, P. A. , Gudmundsen, G. I. , Revit, L. J. , and Banerjee, S. (2004). “ Development of a quick speech-in-noise test for measuring signal-to-noise ratio loss in normal-hearing and hearing-impaired listeners,” J. Acoust. Soc. Am. 116, 2395–2405. 10.1121/1.1784440 [DOI] [PubMed] [Google Scholar]

- 19. Leek, M. R. , and Summers, V. (1996). “ Reduced frequency selectivity and the preservation of spectral contrast in noise,” J. Acoust. Soc. Am. 100, 1796–1806. 10.1121/1.415999 [DOI] [PubMed] [Google Scholar]

- 20. Levitt, H. (1971). “ Transformed up-down methods in psychoacoustics,” J. Acoust. Soc. Am. 49, 467–477. 10.1121/1.1912375 [DOI] [PubMed] [Google Scholar]

- 21. Litvak, L. M. , Spahr, A. J. , Saoji, A. A. , and Fridman, G. Y. (2007). “ Relationship between perception of spectral ripple and speech recognition in cochlear implant and vocoder listeners,” J. Acoust. Soc. Am. 122, 982–991. 10.1121/1.2749413 [DOI] [PubMed] [Google Scholar]

- 22. Mehraei, G. , Gallun, F. J. , Leek, M. R. , and Bernstein, J. G. W. (2014). “ Spectrotemporal modulation sensitivity for hearing-impaired listeners: Dependence on carrier center frequency and the relationship to speech intelligibility,” J. Acoust. Soc. Am 136, 301–316. 10.1121/1.4881918 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23. Molis, M. R. , Gilbert, R. , and Gallun, F. J. (2014). “ Establishing a clinical measure of spectral-ripple discrimination,” J. Acoust. Soc. Am. 135, 2165–2165. 10.1121/1.4877033 [DOI] [Google Scholar]

- 24. Moore, B. C. (2007). Cochlear Hearing Loss: Physiological, Psychological and Technical Issues ( Wiley and Sons, Sussex, UK: ), pp. 1–266. [Google Scholar]

- 25. Nathans, L. L. , Oswald, F. L. , and Nimon, K. (2012). “ Interpreting multiple linear regression: A guidebook of variable importance,” Practical Assess. Res. Eval. 17, 1–19. [Google Scholar]

- 26. Nechaev, D. I. , and Supin, A. Y. (2013). “ Hearing sensitivity to shifts of rippled-spectrum patterns,” J. Acoust. Soc. Am. 134, 2913–2922. 10.1121/1.4820789 [DOI] [PubMed] [Google Scholar]

- 27. Nimon, K. (2010). “ Regression commonality analysis: Demonstration of an SPSS solution,” Mult. Linear Regress. Viewpoints 36, 10–17. [Google Scholar]

- 28. Patterson, R. D. , and Nimmo-Smith, I. (1980). “ Off-frequency listening and auditory-filter asymmetry,” J. Acoust. Soc. Am. 67, 229–245. 10.1121/1.383732 [DOI] [PubMed] [Google Scholar]

- 29. Patterson, R. D. , Nimmo-Smith, I. , Weber, D. L. , and Milroy, R. (1982). “ The deterioration of hearing with age: Frequency selectivity, the critical ratio, the audiogram, and speech threshold,” J. Acoust. Soc. Am. 72, 1788–1803. 10.1121/1.388652 [DOI] [PubMed] [Google Scholar]

- 30. Phillips, S. L. , Gordon-Salant, S. , Fitzgibbons, P. J. , and Yeni-Komshian, G. (2000). “ Frequency and temporal resolution in elderly listeners with good and poor word recognition,” J. Speech. Lang. Hear. Res. 43, 217–228. 10.1044/jslhr.4301.217 [DOI] [PubMed] [Google Scholar]

- 31. Rosen, S. , and Baker, R. J. (1994). “ Characterizing auditory filter nonlinearity,” Hear. Res. 73, 231–243. 10.1016/0378-5955(94)90239-9 [DOI] [PubMed] [Google Scholar]

- 32. Sabin, A. T. , Clark, C. A. , Eddins, D. A. , and Wright, B. A. (2013). “ Different patterns of perceptual learning on spectral modulation detection between older hearing-impaired and younger normal-hearing adults,” J. Assoc. Res. Otolaryngol. 14,283–294. 10.1007/s10162-012-0363-y [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33. Sabin, A. T. , Eddins, D. A. , and Wright, B. A. (2012a). “ Perceptual learning evidence for tuning to spectrotemporal modulation in the human auditory system,” J. Neurosci. 32, 6542–6549. 10.1523/JNEUROSCI.5732-11.2012 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34. Sabin, A. T. , Eddins, D. A. , and Wright, B. A. (2012b). “ Perceptual learning of auditory spectral modulation detection,” Exp. Brain Res. 218, 567–577. 10.1007/s00221-012-3049-0 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35. Saoji, A. A. , Litvak, L. M. , and Hughes, M. L. (2009). “ Excitation patterns of simultaneous and sequential dual-electrode stimulation in cochlear implant recipients,” Ear Hear. 30, 559–567. 10.1097/AUD.0b013e3181ab2b6f [DOI] [PubMed] [Google Scholar]

- 36. Sheft, S. , Risley, R. , and Shafiro, V. (2012). “ Clinical measures of static and dynamic spectral pattern discrimination in relationship to speech perception,” in Speech Perception and Auditory Disorders, edited by Dau T., Jepsen M. L., Poulsen T., and Dalsgaard J. ( The Danavox Jubilee Foundation, Ballerup, Denmark: ), pp. 481–488. [Google Scholar]

- 37. Sherbecoe, R. L., and Studebaker, G. A. (2004). “Supplementary formulas and tables for calculating and interconverting speech recognition scores in transformed arcsine units,” Int. J. Aud. 43, 442–448. 10.1080/14992020400050056

- 38. Stone, M. A., Glasberg, B. R., and Moore, B. C. J. (1992). “Simplified measurement of auditory filter shapes using the notched-noise method,” Br. J. Audiol. 26, 329–334. 10.3109/03005369209076655

- 39. Summers, V., and Leek, M. R. (1994). “The internal representation of spectral contrast in hearing-impaired listeners,” J. Acoust. Soc. Am. 95, 3518–3528. 10.1121/1.409969

- 40. Summers, V., Makashay, M. J., Theodoroff, S. M., and Leek, M. R. (2013). “Suprathreshold auditory processing and speech perception in noise: Hearing-impaired and normal-hearing listeners,” J. Am. Acad. Audiol. 24, 274–292. 10.3766/jaaa.24.4.4

- 41. Supin, A., Popov, V. V., Milekhina, O. N., and Tarakanov, M. B. (1999). “Ripple depth and density resolution of rippled noise,” J. Acoust. Soc. Am. 106, 2800–2804. 10.1121/1.428105

- 42. Supin, A. Y., Popov, V. V., Milekhina, O. N., and Tarakanov, M. B. (1994). “Frequency resolving power measured by rippled noise,” Hear. Res. 78, 31–40. 10.1016/0378-5955(94)90041-8

- 43. Swanepoel, R., Oosthuizen, D. J., and Hanekom, J. J. (2012). “The relative importance of spectral cues for vowel recognition in severe noise,” J. Acoust. Soc. Am. 132, 2652–2662. 10.1121/1.4751543

- 44. Thibodeau, L. M., and Van Tasell, D. J. (1987). “Tone detection and synthetic speech discrimination in band-reject noise by hearing-impaired listeners,” J. Acoust. Soc. Am. 82, 864–873. 10.1121/1.395285

- 45. Thornton, A. R., and Raffin, M. J. (1978). “Speech-discrimination scores modeled as a binomial variable,” J. Speech. Lang. Hear. Res. 21, 507–518. 10.1044/jshr.2103.507

- 46. Turner, C., Souza, P., and Forget, L. (1995). “Use of temporal envelope cues in speech recognition by normal and hearing-impaired listeners,” J. Acoust. Soc. Am. 97, 2568–2576. 10.1121/1.411911

- 47. Wilson, R. H., Zizz, C. A., Shanks, J. E., and Causey, G. D. (1990). “Normative data in quiet, broadband noise, and competing message for Northwestern University Auditory Test No. 6 by a female speaker,” J. Speech. Hear. Dis. 55, 771–778. 10.1044/jshd.5504.771

- 48. Won, J. H., Clinard, C. G., Kwon, S., Dasika, V. K., Nie, K., Drennan, W. R., and Rubinstein, J. T. (2011). “Relationship between behavioral and physiological spectral-ripple discrimination,” J. Assoc. Res. Otolaryngol. 12, 375–393. 10.1007/s10162-011-0257-4

- 49. Won, J. H., Drennan, W. R., and Rubinstein, J. T. (2007). “Spectral-ripple resolution correlates with speech reception in noise in cochlear implant users,” J. Assoc. Res. Otolaryngol. 8, 384–392. 10.1007/s10162-007-0085-8

- 50. Won, J. H., Humphrey, E. L., Yeager, K. R., Martinez, A. A., Robinson, C. H., Mills, K. E., Johnstone, P. M., Moon, I. J., and Woo, J. (2014). “Relationship among the physiologic channel interactions, spectral-ripple discrimination, and vowel identification in cochlear implant users,” J. Acoust. Soc. Am. 136, 2714–2725. 10.1121/1.4895702

- 51. Zhang, T., Spahr, A. J., Dorman, M. F., and Saoji, A. (2013). “Relationship between auditory function of non-implanted ears and bimodal benefit,” Ear Hear. 34, 133–141. 10.1097/AUD.0b013e31826709af