Significance

Which features of music are universal and which are culture-specific? Why? These questions are important for understanding why humans make music but have rarely been scientifically tested. We used musical classification techniques and statistical tools to analyze a global set of 304 music recordings, finding no absolute universals but dozens of statistical universals. These include not only commonly cited features related to pitch and rhythm but also domains such as social context and interrelationships between musical features. We speculate that group coordination is the common aspect unifying the cross-cultural structural regularities of human music, with implications for the study of music evolution.

Keywords: ethnomusicology, cross-cultural universals, group coordination, evolution, cultural phylogenetics

Abstract

Music has been called “the universal language of mankind.” Although contemporary theories of music evolution often invoke various musical universals, the existence of such universals has been disputed for decades and has never been empirically demonstrated. Here we combine a music-classification scheme with statistical analyses, including phylogenetic comparative methods, to examine a well-sampled global set of 304 music recordings. Our analyses reveal no absolute universals but strong support for many statistical universals that are consistent across all nine geographic regions sampled. These universals include 18 musical features that are common individually as well as a network of 10 features that are commonly associated with one another. They span not only features related to pitch and rhythm that are often cited as putative universals but also rarely cited domains including performance style and social context. These cross-cultural structural regularities of human music may relate to roles in facilitating group coordination and cohesion, as exemplified by the universal tendency to sing, play percussion instruments, and dance to simple, repetitive music in groups. Our findings highlight the need for scientists studying music evolution to expand the range of musical cultures and musical features under consideration. The statistical universals we identified represent important candidates for future investigation.

Humans are relatively homogenous genetically but are spectacularly diverse culturally (1). Nevertheless, a few key cross-cultural universals, including music, are present in all or nearly all cultures and are often considered to be candidates for being uniquely human biological adaptations (2–4). Although music has been called “the universal language of mankind” (5), there is considerable debate as to whether any specific musical features are actually universal, and even whether there is a universally valid definition of music itself (6, 7). Supporters have proposed lists of up to 70 candidate musical universals (e.g., refs. 8–12), but skeptics (e.g., refs. 13–15) have gone so far as to claim that “the only universal aspect of music is that most people make it” (14). These debates are important because the demonstration of musical universals could point to constraints that limit cross-cultural variability, as well as provide insights into the evolutionary origins and functions of music (16). However, despite decades of theoretical debate, there has been little attempt to empirically verify the actual global distribution of supposedly universal aspects of music.

It is important to note that the concept of a cross-cultural universal does not necessarily imply an all-or-none phenomenon without exceptions. Classic typologies from anthropology and linguistics distinguish between absolute universals that occur without exception and statistical universals that occur with exceptions but significantly above chance (2, 17, 18). They also distinguish between universal features that concern the presence or absence of particular individual features and universal relationships that concern the conditional associations between multiple features (SI Discussion). In language, for instance, the use of verbs is a feature of most languages (i.e., a universal feature), and languages in which verbs appear before objects also tend to have prepositions (i.e., a universal relationship; ref. 17). Analogous proposals have been made for music, such as the use of an isochronous (equally timed) beat as a universal feature, and the association between the use of an isochronous beat and group performance as a universal relationship, such that music without an isochronous beat is thought to be more likely to be performed solo (12).
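The typology above can be made concrete with a small sketch. It classifies a feature as an absolute or statistical universal using a one-sided exact binomial test, and measures a universal relationship as a difference in conditional frequencies. The 50% chance baseline, the 0.05 threshold, the function names, and the toy records are assumptions made for illustration. They are not the study's data or its actual test, and the sketch ignores the historical nonindependence between cultures that the phylogenetic methods are meant to address.

```python
from math import comb

def binomial_upper_tail(k, n, p=0.5):
    """P(X >= k) for X ~ Binomial(n, p), i.e., a one-sided exact test."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

def classify_feature(present, total, alpha=0.05):
    """Label a feature from its presence count (assumed 50% chance baseline)."""
    if present == total:
        return "absolute universal"          # no exceptions at all
    if binomial_upper_tail(present, total) < alpha:
        return "statistical universal"       # exceptions, but above chance
    return "not universal"

def conditional_frequency(records, condition, outcome, value=True):
    """Share of records showing `outcome` among those where `condition` equals `value`."""
    subset = [r for r in records if r[condition] is value]
    if not subset:
        return None
    return sum(r[outcome] for r in subset) / len(subset)

# Hypothetical toy records: is group performance more common with a beat?
toy = (
    [{"beat": True, "group": True}] * 8
    + [{"beat": True, "group": False}] * 2
    + [{"beat": False, "group": True}] * 1
    + [{"beat": False, "group": False}] * 3
)
with_beat = conditional_frequency(toy, "beat", "group")
without_beat = conditional_frequency(toy, "beat", "group", value=False)
print(classify_feature(90, 100), with_beat, without_beat)
```

A universal relationship in this sense shows up as a clear gap between the two conditional frequencies, whereas a universal feature concerns only the marginal frequency of a single character.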

A number of empirical studies (e.g., refs. 19–25) have revealed cross-cultural musical similarities between particular Western cultures and particular non-Western ones, but none of them has quantitatively tested any candidate musical universals on a global scale. Alan Lomax’s pioneering Cantometrics Project (26) presented a global analysis of music centered on a taxonomy of song style, but unfortunately these data could not be used to systematically test most candidate universals because the Cantometric classification scheme ignored many musical features of interest (e.g., scales) precisely because they were assumed to be too common to be useful. Furthermore, Lomax did not include nonvocal instrumental music in his analyses, and his dataset has not been made publicly available. In the decades since Cantometrics, musicologists, anthropologists, and psychologists have all tended to avoid global comparative studies of music, focusing instead on finer-grained studies of one or several specific societies. Obstacles to a global study of music, beyond sociological factors such as the rise of postmodernism, include the absence of global samples, cross-culturally applicable classification schemes, and appropriate statistical techniques (27).

Another limitation of previous cross-cultural studies is that they have sometimes assumed that the music of a given culture can be represented by a single style. However, our previous work has demonstrated that musical variation within a culture can be even greater than variation between cultures (28). Empirically evaluating candidate musical universals thus requires a sample of real musical examples, rather than generalizations about dominant musical styles. For example, although much South Asian and Western music uses an isochronous beat, there are many examples within these cultures that do not (e.g., North Indian alap and opera recitative). Here we synthesize existing schemes to develop a methodology for classifying specific musical features and apply it to a global sample of music recordings. This enables us to provide empirical support for a number of statistical universals that have been proposed in the literature, as well as to identify a number of previously unidentified universals. We are also able to reject some proposals that do not in fact seem to be universal.

SI Discussion

Terminological Issues.

We have adopted the terms “universal features” and “universal relationships” to refer to the two main categories of universals because these seem more intuitive than common alternatives. Other terms used for universal features include “unrestricted universals,” “presence/absence universals,” “non-implicational universals,” and “unconditional universals,” and other terms for universal relationships include “implicational universals,” “relational universals,” and “conditional universals” (2, 17, 18).

Possible Mechanisms Underlying Musical Universals.

This section provides additional details and references regarding possible mechanisms explaining the statistical universals listed in the main text. The descriptions are cross-referenced to the lists in the main text using bold text and numbers in the text below, with “UF” indicating a universal feature and “UR” indicating a universal relationship. Here we have grouped these explanations into three broad—and not necessarily mutually exclusive—categories: (i) vocal-motor, (ii) cognitive, and (iii) social/cultural.

Vocal-motor.

The following universals seem primarily related to motor constraints, in the sense that they represent the most energetically efficient ways of producing sound. Several relate to (nonvocal) instrument use, which is largely limited to human music, whereas the rest seem to be largely shared with speech and/or learned animal song.

Instruments.

Although the voice is the most universal instrument, the human capacity for tool use allows us to construct (nonvocal) instruments (UF 14) to create louder, wider-ranging, more accurate, and more complex music than the voice alone (9). Because percussion instruments [UR 6; including both membranophones (UR 7) and idiophones (UR 8)] are generally constructed to be struck forcefully to produce loud, but unpitched sounds, they tend not to be performed solo but in groups (UR 1) accompanying pitched melodies produced by the voice, chordophones, and/or aerophones.

Different instruments are subject to different production constraints. Apart from vocal features such as word use that are not applicable to nonvocal instruments, all of the statistical universals we identified were consistent for both vocal and instrumental subsamples. In some cases, particularly aerophones (e.g., flutes) that are sounded by breathing, the same mechanistic constraints on vocalization in songs are likely to be the causes of their universality in instrumental music. In other cases, more detailed analysis will be needed to determine whether the universals are due to similar mechanistic constraints operating on instrumental production (e.g., difficulties in rapid finger movements) or to the adoption of song-like melodies by instruments.

Singing.

Birds and most other nonhuman animals rarely use tools, and thus instrumental music, with the exception of great ape bimanual drumming (11, 47), seems limited to humans. Even in humans, however, the ability to acquire instruments other than the voice is limited by cost, environmental resource availability, and the types of instruments historically available, whereas the voice (UF 13) is inborn.

Several universals seem to stem from mechanisms of vocal control that are shared with animal song. By comparing bird songs with European and Chinese folk melody notations, Tierney et al. (22) found several parallels, concluding that the ubiquity of small intervals (UF 5) was due to constraints on the muscles controlling vocal fold tension, whereas descending/arched contours (UF 4) were due to decreasing subglottal air pressure. Similarly, we propose that short phrases (UF 12) are related to cross-species constraints on lung capacity that require periodic pauses for breaths or minibreaths to replenish air supply in human song, speech, and birdsong. We also propose that the universal use of chest voice (i.e., modal register; UF 15) is related to the fact that using the entire glottis makes sound production in this register more efficient than in the low vocal fry or high falsetto registers. Modal register is also predominant in human speech and animal song, and parallels in human and bird low-frequency production mechanisms suggest that the same mechanisms constrain vocal register in humans and birds despite substantial differences in their anatomical morphology.

The relationship found between syllabic singing (UR 10) and few durational values (UR 4) also seems likely to stem from vocal-motor constraints, because the more frequent enunciation of consonants involved in syllabic singing makes it more difficult to perform rapid ornamentation compared with melismatic singing, which extends a single vowel over many notes. We are not aware of any research on syllabic vs. melismatic singing in nonhuman animals, but we predict that the relationship between syllabic singing and few durational values would also apply to any animals that used melisma.

Cognitive.

The following statistical universals seem to be primarily related not to motor constraints on production efficiency, but to cognitive factors. These factors largely relate to the abilities of pitch-matching and rhythmic entrainment that enable synchronization of multiple individuals in coordinated group performance at the proximate level and whose combination is largely unique to human music (11, 12, 46).

Rhythm.

The use of an isochronous beat (UF 6) increases coordination by allowing multiple performers to entrain their rhythms to a single beat (46). An identical mechanism applies for the association between beat (UR 2) and dance (UR 9), because musicians rarely perform solely to accompany their own solo dancing. This association is further enhanced by the fact that both the auditory system and the vestibular system that controls head-motion perception share the same perceptual organ (the inner ear) and have been shown to directly influence one another (73). Subdivision of the beat into a metrical hierarchy (UF 7) and the use of few durational values (UF 11/UR 4), repetitive motivic patterns (UF 10/UR 3), and phrase repetition (UR 5) all help to create simple and predictable rhythms that enhance coordination and memorability (74).

The use of two- and three-beat subdivisions (UF 8) in general can also be seen as an extension of this general tendency for simple, predictable rhythms compared with more complex divisions not divisible by 2 or 3. The predominance of two-beat subdivisions (UF 9) over three-beat subdivisions may also reflect greater danceability owing to human bipedalism.

Pitch.

The use of discrete pitches (UF 1) helps to coordinate multiple performers to sing the same note or appropriate harmonic intervals (12), as well as helping to create memorable melodies that are easy to teach. This is made even easier by restricting the pool to seven or fewer scale degrees per octave (UF 3), which provides the further advantage of allowing for intervals and chords with higher “harmonicity” (similarity to the harmonic series; ref. 44). The use of nonequidistant scales (UF 2) may not necessarily improve pitch matching but does allow for the creation of more distinctive melodies that are easier to teach and learn, as well as the creation of tonal hierarchies and thus the ability to assign different types of musical meaning to different pitches (75).

We note that resonance of vibrating bodies to create pitched sounds is a general acoustic property shared with speech and animal song, but the use of pitched sounds in speech and much bird song tends to vary in frequency without using fixed scales made up of discrete pitches (11). However, controlled comparisons of bird “scales” and human scales remain limited, and existing evidence is equivocal as to whether pitches in bird songs are analogous to human scales (75, 76).

Social/cultural.

Some statistical universals we identified do not seem to be particularly constrained—either motorically or cognitively—by production mechanisms. Instead, they seem to be shaped more by the ways in which music is expressed socially in its cultural context.

Semantic meaning.

As anyone who has forgotten the words in the middle of a song knows, it is easier to sing or hum a melody using meaningless vocables than to memorize lyrics containing words (UF 16). The universality of word use despite this may be due to the increased communicative power acquired by the addition of language’s referential semantic meaning to the nonreferential communicative power of music (77). This increased communicative power may also contribute to the universality of the voice (UF 13) despite the greater acoustic efficiency of nonvocal instruments described above. Conversely, adding musical accompaniment can enhance the emotional meaning of the text in poetry, religious texts, and so forth, and make them easier to remember and transmit across generations. Probably for this reason, most religious texts were transmitted in a music-like form before the invention of writing.

Communal reward.

Furthermore, as anyone who has tried to organize a group of musicians or dancers knows, it is much harder to coordinate such groups than to perform solo, but it is also much more enjoyable and rewarding to make music in groups (UF 17/UR 1) and for such group music to be associated with dance (UR 9). Dunbar (78) has proposed that this feeling of reward from group music and dance is mediated by endorphin production, and that music forms a crucial transitional step in the social evolution of human language from ape grooming and chimpanzee laughter. Performing in groups also allows the performers to communicate with one another as well as with the audience, and this communication is enhanced by adding layers of meaning through the use of song texts, dance gesture, dramatic acting, costumes, ritual objects, and other paramusical accompaniment.

Sex restrictions.

As with the songs of other vocal learners, such as songbirds, whales, and pinniped seals (11), human music seems to be performed predominantly by males (UF 18), consistent with the proposals of Darwin and others that human music, like birdsong, evolved primarily through sexual selection (52, 53). However, unlike birdsong, which is controlled by sexually dimorphic brain nuclei (79), there are no known biological constraints on female music making. Instead, female performance regularly equals or surpasses male performance following the lifting of ubiquitous patriarchal restrictions on female performance, suggesting that patriarchy is the primary (although not necessarily the only) factor contributing to the universality of male performance (11, 54, 56).

The Global Music Dataset

To enable a global statistical analysis of candidate musical universals, we recently developed a manual, acoustic classification scheme called CantoCore that is modeled after Cantometrics but that allows us to code the presence or absence of many candidate universals not codable in the original scheme (29). Based on the most comprehensive existing list of proposed musical universals by Brown and Jordania (12), we were able to combine CantoCore, Cantometrics, and the Hornbostel–Sachs instrument classification scheme (30) to operationalize the presence or absence of 32 musical features (SI Materials).

These features include a number of candidates that are rarely discussed in the music evolution literature. Thus, in addition to features from the domains of pitch (e.g., discrete pitches) and rhythm (e.g., isochronous beats) that are commonly cited as putative universals by scientists studying music evolution, our scheme also includes features from the domains of form (e.g., phrase repetition), instrumentation (e.g., percussion), performance style (e.g., syllabic singing), and social context (e.g., performer sex). Some of these features are nested within others by definition. For example, the contrast between syllabic vs. melismatic (nonsyllabic) singing is only relevant for vocal music and must be coded as NA (not applicable) for purely instrumental music.

We systematically coded these features for each of the 304 recordings contained in the Garland Encyclopedia of World Music (ref. 31; Fig. 1). These recordings represent the most authoritative and diverse global music collection available, emphasizing on-site field recordings of traditional, indigenous genres (both vocal and instrumental), but also including a variety of examples of contemporary, nonindigenous, and/or studio recordings, chosen with the aim of emphasizing the diversity of the world’s music. Although the size of the sample is relatively small compared with the vast array of music that humans have produced, it has a broad geographic coverage and is more stylistically diverse than larger samples that have focused only on Western classical and popular music (e.g., refs. 32 and 33) or only on traditional folk songs in the case of cross-cultural samples (e.g., refs. 22 and 26). The geographic and stylistic diversity of this sample make it ideal for testing candidate musical universals. In particular, the encyclopedic nature of this collection, with its intention of capturing the great diversity of musical forms worldwide, should tend to make this sample overrepresent the real level of global diversity, and thus make it a particularly stringent sample for testing cross-cultural statistical regularities.

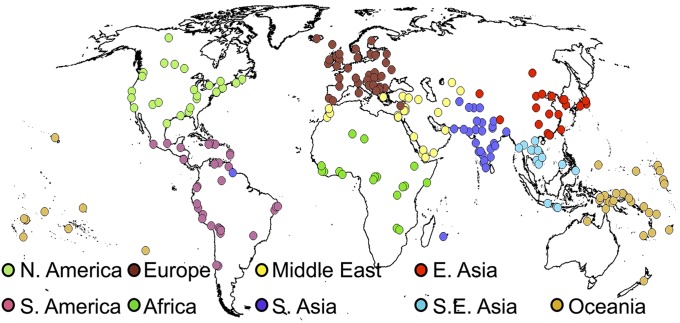

Fig. 1.

The 304 recordings from the Garland Encyclopedia of World Music show a widespread geographic distribution. They are grouped into nine regions specified a priori by the Encyclopedia’s editors, as color-coded in the legend at bottom: North America (n = 33 recordings), Central/South America (39), Europe (40), Africa (21), the Middle East (35), South Asia (34), East Asia (34), Southeast Asia (14), and Oceania (54).
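As a quick consistency check, the regional counts in the caption can be summed and compared with the stated total of 304 recordings. The counts below are copied from the caption; the variable names are arbitrary.

```python
# Recording counts per region, copied from the Fig. 1 caption.
region_counts = {
    "North America": 33,
    "Central/South America": 39,
    "Europe": 40,
    "Africa": 21,
    "Middle East": 35,
    "South Asia": 34,
    "East Asia": 34,
    "Southeast Asia": 14,
    "Oceania": 54,
}

total = sum(region_counts.values())
shares = {region: n / total for region, n in region_counts.items()}

# Print regions from largest to smallest share of the sample.
for region, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{region:<22} {share:6.1%}")
print("total recordings:", total)
```

The uneven regional shares are one reason a result is more convincing when it holds within each region rather than only in the pooled sample.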

Here we use phylogenetic comparative methods used by evolutionary biologists (34) that have been increasingly applied to cultural systems (e.g., refs. 1 and 35–41; SI Methods). These methods enable us to control for the fact that different cultures have different degrees of historical relatedness and thus cannot be treated as independent (a phenomenon known in anthropology as “Galton’s problem”; ref. 35). We followed the practice of other studies of cultural evolution by using language phylogenies (ref. 42; Figs. S1 and S2) to control for historical relationships in nonlinguistic domains (e.g., refs. 1 and 35–40). Our aim is not to attempt to reconstruct the evolutionary history and ancestral states of global music making, but simply to control for an important potential source of nonindependence in our sample using well-established techniques.

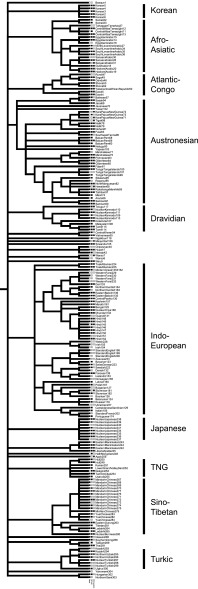

Fig. S1.

Historical relationships, based on Glottolog classifications of the languages spoken by the performers, for the 201 recordings in the indigenous subsample used for the phylogenetic analyses (i.e., excluding 103 recordings showing evidence of horizontal transmission). In this figure, the branch lengths are arbitrary (i.e., they do not represent information about degree of evolutionary change). The tree reveals important sources of statistical nonindependence in the sample that need to be controlled for in our assessment of statistical universals. As an example, the joint distribution of isochronous beat, group performance, and use of percussion instruments is mapped onto the tree. Black squares represent presence of each feature, white squares represent absence, and no square represents a coding of NA.

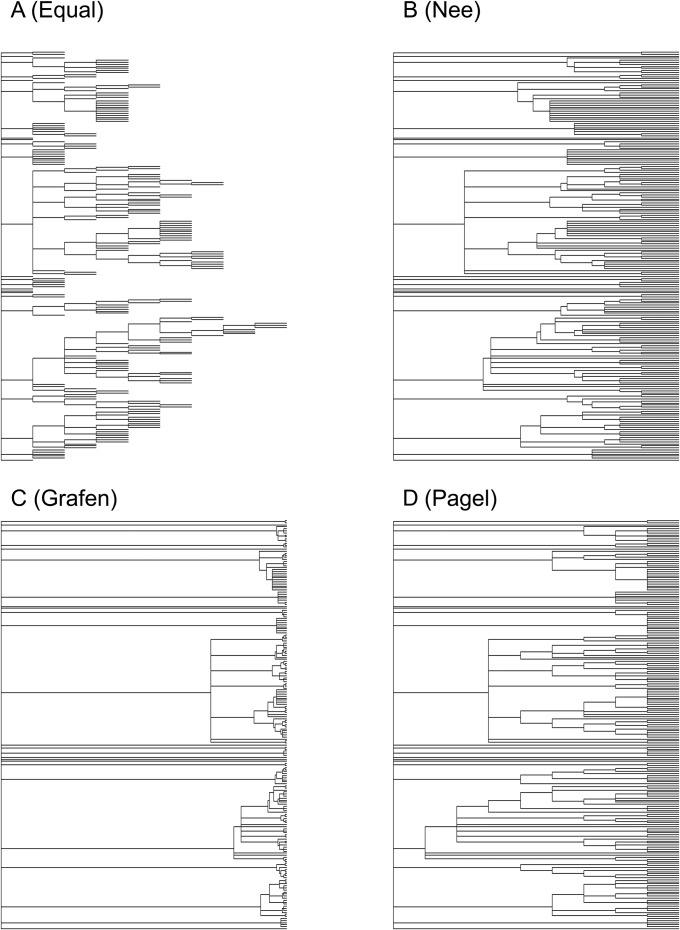

Fig. S2.

Phylogeny of languages relating to the indigenous subsample of recordings under the differing assumptions of (A) equal branch lengths, (B) Nee’s, (C) Grafen’s and (D) Pagel’s methods of assigning branch lengths.

We also performed a large number of additional analyses to assess the effects of different modeling and sampling assumptions. These included analyzing groupings based on musical/geographic regions (rather than language affiliations), comparing the full sample of all recordings against a subsample containing only indigenous music, and altering the branch lengths of the phylogenetic trees (Materials and Methods and SI Methods).

SI Materials

Music Classification Scheme.

We operationalized a list of 32 binary classification characters that could be used to empirically test many of the 70 candidate musical universals listed by Brown and Jordania (12). To do this, we took three preexisting classification schemes—CantoCore (29), Cantometrics (26, 60), and the Hornbostel–Sachs instrumental classification scheme (30)—and reorganized their character-states to make them binary (presence/absence). We also added five characters that were not included in these schemes but that were important for testing Brown and Jordania’s predictions. In some cases, it was also necessary to include an NA state. For example, the presence/absence of word use is, by definition, dependent on the presence/absence of voice use and thus must be coded “NA” for purely instrumental recordings.
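A minimal sketch of how such a dependent character might be coded, using Python's `None` to stand for NA. The function names are hypothetical. Excluding NA codings from the denominator when computing a frequency is an assumption of this sketch, not a procedure stated in the text; only the rule that word use is NA when no voice is present comes from the description above.

```python
from typing import Iterable, Optional

def code_word_use(voice_present: bool, words_present: bool) -> Optional[int]:
    """Return 1/0 for presence/absence of words, or None (NA) when there is no voice."""
    if not voice_present:
        return None  # purely instrumental recording: character is not applicable
    return 1 if words_present else 0

def frequency(codes: Iterable[Optional[int]]) -> Optional[float]:
    """Proportion of 1s among applicable (non-NA) codings; None if none are applicable."""
    applicable = [c for c in codes if c is not None]
    if not applicable:
        return None
    return sum(applicable) / len(applicable)

# Invented example: two songs with words, one vocable-only song, one instrumental.
codes = [
    code_word_use(True, True),
    code_word_use(True, True),
    code_word_use(True, False),
    code_word_use(False, False),
]
print(codes, frequency(codes))
```

Keeping NA distinct from 0 matters here: treating instrumental recordings as "words absent" would understate how often vocal music uses words.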

CantoCore and Cantometrics were designed primarily for vocal music, which is in many ways easier to compare cross-culturally than instrumental music is (26, 29). However, most of their characters can be applied to instrumental music after making appropriate allowances for differences in instrumental production (e.g., breathing can be used to determine phrase boundaries in vocal and aerophone music, but not necessarily in chordophone music; ref. 29). To make the classifications as comparable as possible, we focused primarily on the vocal part of vocal recordings, whereas for instrumental recordings we treated melodic-instrumental parts as we would vocal melodies. For instrumental recordings without a melodic part (e.g., only unpitched percussion), we treated all instruments as we would treat vocal parts.

CantoCore and Cantometrics originally allowed for multicoding (i.e., coding the presence of multiple character-states for a single character). To transform the original multistate characters into binary presence/absence characters, we treated multicoding of both presence and absence (e.g., a recording with both metric and a-metric sections) as presence.
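The collapsing rule can be sketched for one character, isochronous beat, whose original states are listed under character 1 below. The function name and the set-based input format are assumptions for illustration; only the state labels and the rule that mixed presence/absence counts as presence come from the text.

```python
from typing import Iterable

# Original CantoCore Line 1 states, grouped by their new binary coding.
ABSENT_STATES = {"a-metric"}
PRESENT_STATES = {"iso-metric", "hetero-metric", "poly-metric"}

def isochronous_beat(coded_states: Iterable[str]) -> int:
    """Collapse one or more original states (multicoding) into 0/1."""
    states = set(coded_states)
    unknown = states - ABSENT_STATES - PRESENT_STATES
    if not states or unknown:
        raise ValueError(f"unrecognized or missing states: {sorted(unknown)}")
    # Any present-type state wins, so presence plus absence is coded as presence.
    return 1 if states & PRESENT_STATES else 0

print(isochronous_beat({"a-metric"}))                # a-metric only
print(isochronous_beat({"a-metric", "iso-metric"}))  # metric and a-metric sections
```

Characters with an NA state would need an extra rule for recordings multicoded with both applicable and not-applicable states, which the description above does not specify.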

Almost all classification features included are acoustic features that must be coded manually by ear by a trained rater. Both P.E.S. and E.S. underwent the standard training procedure (29) involving Lomax’s training tapes (60) before coding all data. However, some features could be usefully supplemented by nonacoustic information from the Garland Encyclopedia of World Music (31) and accompanying liner notes, as well as from the editors and the providers of the recordings. Where possible, we corrected/supplemented our acoustic classifications with this information for the final dataset.

CantoCore (structural features; ref. 29).

- 1) Isochronous beat (CantoCore Line 1)
  - 0) Absent (formerly “a-metric”)
  - 1) Present (formerly divided into “iso-metric”, “hetero-metric” and “poly-metric”)
- 2) Metrical hierarchy (CantoCore Line 1)
  - 0) Absent (formerly “hetero-metric”)
  - 1) Present (formerly “iso-metric”)
  - NA) Not applicable (formerly divided into “a-metric” and “poly-metric”)
- 3) Two- or three-beat subdivisions (CantoCore Line 2)
  - 0) Absent (formerly “complex”)
  - 1) Present (formerly divided into “duple” and “triple”)
  - NA) Not applicable (formerly “NA: a-/hetero-/poly-metric”)
- 4) Two-beat subdivisions (CantoCore Line 2)
  - 0) Absent (formerly “triple”)
  - 1) Present (formerly “duple”)
  - NA) Not applicable (formerly divided into “NA” and “complex”)
- 5) Motivic patterns (CantoCore Line 6)
  - 0) Absent [formerly “non-motivic” (<20% of notes constructed from a recurring rhythmic pattern)]
  - 1) Present [formerly divided into “moderately motivic” (20–50%) and “highly motivic” (>50%)]
- 6) Few durational values (<5; CantoCore Line 7)
  - 0) Absent [formerly “high durational variability (>4)”]
  - 1) Present [formerly divided into “moderate durational variability (3–4)” and “low durational variability” (<3)]
- 7) Discrete pitches (CantoCore Line 8)
  - 0) Absent (formerly “indeterminate a-tonal”)
  - 1) Present (formerly divided into “discrete a-tonal”, “hetero-tonal”, “poly-tonal” and “iso-tonal”)
- 8) ≤7 scale degrees (CantoCore Line 10)
  - 0) Absent (>7 pitch classes) (NB: This distinction was not part of the original scheme, which was originally divided into <4, 4–5, and >5 pitch classes.)
  - 1) Present (≤7 pitch classes)
  - NA) Not applicable (formerly “NA: a-/hetero-/poly-tonal”)
- 9) Pentatonic (five-note) scale (CantoCore Line 10)
  - 0) Absent (greater than or fewer than five pitch classes) (NB: This distinction was not part of the original scheme, which was originally divided into <4, 4–5, and >5 pitch classes.)
  - 1) Present (exactly five pitch classes)
  - NA) Not applicable (formerly “NA: a-/hetero-/poly-tonal”)
- 10) Small intervals (<750 cents; CantoCore Line 12)
  - 0) Absent [formerly “large intervals” (>750 cents)]
  - 1) Present [formerly divided into “small intervals” (<350 cents) and “medium intervals” (350–750 cents)]
- 11) Descending/arched melodic contour (CantoCore Line 14)
  - 0) Absent (formerly divided into “ascending” and “U-shaped”)
  - 1) Present (formerly divided into “descending” and “arched”)
  - NA) Not applicable (formerly divided into “horizontal” and “undulating”)
- 12) Syllabic singing (CantoCore Line 15)
  - 0) Absent [formerly divided into “moderately melismatic” (three to five consecutive notes without articulating a new syllable) and “strongly melismatic” (more than five consecutive notes without articulating a new syllable)]
  - 1) Present [formerly “syllabic” (≤2 consecutive notes without articulating a new syllable)]
  - NA) Not applicable (instrumental recording without vocals) (NB: “NA” was not part of the original scheme, which was designed primarily for vocal music.)
- 13) Vocable (nonlexical syllable) use (CantoCore Line 16)
  - 0) Absent [formerly “few vocables” (<20% of syllables contain only vowels and/or semivowels)]
  - 1) Present [formerly divided into “some vocables” (20–50% of syllables contain only vowels and/or semivowels) and “many vocables” (>50% of syllables contain only vowels and/or semivowels)]
  - NA) Not applicable (instrumental recording without vocals) (NB: “NA” was not part of the original scheme, which was designed primarily for vocal music.)
- 14) Word use (CantoCore Line 16)
  - 0) Absent [formerly “many vocables” (>50% of syllables contain only vowels and/or semivowels)]
  - 1) Present [formerly divided into “few vocables” (<20% of syllables contain only vowels and/or semivowels) and “some vocables” (20–50% of syllables contain only vowels and/or semivowels)]
  - NA) Not applicable (instrumental recording without vocals) (NB: “NA” was not part of the original scheme, which was designed primarily for vocal music.)
- 15) Dissonant homophony (CantoCore Line 19)
  - 0) Absent [formerly “smooth (consonant)”]
  - 1) Present [formerly “rough (dissonant)”]
  - NA) Not applicable (formerly “NA: one part or poly-/hetero-rhythmic”)
- 16) Phrase repetition (CantoCore Line 21)
  - 0) Absent [formerly “non-repetitive” (no repeating phrases, or more than eight different phrases before a repeat)]
  - 1) Present [formerly divided into “moderately repetitive” (three to eight different phrases before a repeat) and “repetitive” (one to two different phrases before a repeat)]
- 17) Short phrases (<9 s; CantoCore Line 22)
  - 0) Absent [formerly “long phrases” (>9 s)]
  - 1) Present [formerly divided into “short phrases” (<5 s) and “medium-length phrases” (5–9 s)]
- 18) Group performance (CantoCore Line 24)
  - 0) Absent (formerly “solo”)
  - 1) Present (formerly divided into “mixed,” “alternating,” and “group”)

N.B. Because CantoCore (and Cantometrics) were designed primarily for vocal music, this character originally only coded the presence/absence of singers. However, for the current study, we used the same criteria to code the presence/absence of both singers and instrumentalists. Acoustic codings were supplemented with nonacoustic information from the liner notes to correct cases where the acoustic information was ambiguous (e.g., a solo voice accompanied by a solo instrument could be a group of two performers or a single soloist accompanying him or herself).

Cantometrics (performance features; ref. 60).

NB: We used the updated 1976 scheme (60) rather than the original 1968 scheme (26).

- 19) Vocal embellishment (Cantometrics Lines 23, 28, 30, and 31)
  - 0) Absent (all of the following former classifications: “slight”/ “little or no” embellishment, “some”/“little or no” glissando, “little or no” tremolo, and “little or no” glottal shake)
  - 1) Present (any of the following former classifications: “extreme”/ “much”/ “medium” embellishment, “maximal”/ “prominent” glissando, “much”/ “some” tremolo, and “much”/ “some” glottal shake)
  - NA) Not applicable (instrumental recording without vocals) (NB: “NA” was not part of the original scheme, which was designed primarily for vocal music.)

N.B. This rather broad definition of vocal embellishment based on Brown and Jordania’s (12) original proposal did not meet even our first universality criterion of global significance. A more conservative definition of embellishment using only one or several of these features would therefore also have failed this criterion.

- 20) Loud volume (Cantometrics Line 25)
  - 0) Absent (formerly divided into “very soft,” “soft,” and “mid-volume”)
  - 1) Present (formerly divided into “very loud” and “loud”)

- 21) High register (Cantometrics Line 32)
  - 0) Absent (formerly divided into “very low,” “low,” and “mid”)
  - 1) Present (formerly divided into “very high” and “high”)

- 22) Modal register (chest voice; Cantometrics Line 32)
  - 0) Absent (formerly divided into “very low” and “very high”)
  - 1) Present (formerly divided into “low,” “mid,” and “high”)
  - NA) Not applicable (instrumental recording without vocals) (N.B. “NA” was not part of the original scheme, which was designed primarily for vocal music.)

Hornbostel–Sachs (instrumental features; ref. 30).

- 23) Idiophone use (H–S type I)
  - 0) Absent (nonidiophone instruments only)
  - 1) Present (self-sounding instruments, e.g., gongs)
  - NA) Not applicable [no nonvocal instruments present; see 27 (Instrument use)]

N.B. Hornbostel and Sachs did not originally include the human body as a musical instrument, but here we include nonvocal body sounds (e.g., hand clapping or stomping) as idiophones and use a separate category for the voice [see 26 (Voice use)].

- 24) Membranophone use (H–S type II)
  - 0) Absent (nonmembranophone instruments only)
  - 1) Present (membrane-sounding instruments, e.g., drums)
  - NA) Not applicable [no nonvocal instruments present; see 27 (Instrument use)]

- 25) Aerophone use (H–S type IV)
  - 0) Absent (nonaerophone instruments only)
  - 1) Present (air-sounding instruments, e.g., flutes)
  - NA) Not applicable [no nonvocal instruments present; see 27 (Instrument use)]

N.B. Hornbostel and Sachs’ other instrumental category, “chordophone” (string instruments, e.g., lutes), was not included because it was not predicted as a universal by Brown and Jordania (12).

New characters.

- 26) Voice use
  - 0) Absent [nonvocal instruments only (including nonvocal body parts, such as hand clapping)]
  - 1) Present (use of human voice, with or without nonvocal accompaniment)

- 27) (Nonvocal) instrument use
  - 0) Absent (voice only)
  - 1) Present [use of nonvocal instruments (including nonvocal body parts, such as hand clapping), with or without vocal accompaniment]

- 28) Percussion use
  - 0) Absent (nonpercussion instruments and/or voice only)
  - 1) Present [use of idiophones (23) and/or membranophones (24)]
  - NA) Not applicable [no nonvocal instruments present; see 27 (Instrument use)]

- 29) Dance accompaniment
  - 0) Absent (vocal and/or instrumental performance only)
  - 1) Present (dancers accompany vocal and/or instrumental performance)

N.B. This character relies entirely on nonacoustic information from the Garland Encyclopedia of World Music (31) and accompanying liner notes. Thus, it may underestimate the actual worldwide prevalence of dance—which in the current analysis only accompanied 88 out of the 304 recordings—because there may well have been some recordings that were accompanied by dancing that were not noted in the Encyclopedia. However, this underestimation should serve to mask any associations between dance and other features. We thus predict that the associations we found with dance should be robust in future studies that include more information about dance (e.g., through video analysis).

- 30) Male performers
  - 0) Absent (female vocalists/instrumentalists only)
  - 1) Present (male vocalists/instrumentalists only)
  - NA) Not applicable (mixed-sex group of vocalists/instrumentalists, or instrumentalists of unknown sex)

N.B. For vocal recordings, only the sex of the vocalist was coded, as the sexually dimorphic human voice allows for acoustic sex discrimination, which is not possible for most instrumental recordings. For instrumental recordings, we relied on nonacoustic information from the Garland Encyclopedia of World Music (31) and accompanying liner notes. In cases where this did not allow for unambiguous sex identification, we contacted the relevant recording providers and/or editors. This allowed us to identify the sex for all but four instrumental recordings out of the 304 recordings (Dataset S1, recording numbers 50, 149, 167, and 180). Note that, although male fieldworkers were somewhat more likely than female fieldworkers to record male-only performances, the trend we identified toward male-only performance was consistent regardless of the sex of the recording fieldworker (Dataset S1).

- 31) Sex segregation
  - 0) Absent (mixed-sex group of vocalists/instrumentalists)
  - 1) Present (single-sex group of vocalists/instrumentalists only)
  - NA) Not applicable (solo vocalist/instrumentalist)

N.B. Solo/group and male/female classifications were based on the definitions in characters 18 and 30, respectively.

- 32) Nonequidistant scale
  - 0) Absent (equal intervals between all pitch classes; e.g., the scale C, Eb, F# has intervals of 300 cents between each pair of pitch classes. Likewise, the scale C, D, E, F#, G#, A# has intervals of 200 cents between each pair of pitch classes)
  - 1) Present (not all intervals between pitch classes are equal; e.g., the scale C, Eb, F has an interval of 300 cents between C–Eb, but an interval of 200 cents between Eb–F. Likewise, the scale C, D, E, F, G, A has intervals of 200 cents between each pair of pitch classes except E–F, which has an interval of 100 cents)
  - NA) Not applicable [any recordings formerly classified as “NA: a-/hetero-/poly-tonal” (see 8: ≤7 scale degrees), or any that are isotonal but have only one or two pitch classes, and hence do not have multiple intervals between pairs of pitch classes to compare]
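As an illustration of how character 32 could be operationalized, the following minimal sketch codes a scale from its pitch classes expressed in cents above the lowest pitch class. The function name, the cents representation, and the tolerance `tol` are assumptions made for this example; the codings in this study were made manually, and the a-/hetero-/poly-tonal NA cases require a listener's judgment that this sketch does not attempt.

```python
from typing import Optional, Sequence


def code_nonequidistant(pitch_classes_cents: Sequence[float],
                        tol: float = 1e-9) -> Optional[int]:
    """Return 1 (present), 0 (absent), or None (NA) for character 32.

    Hypothetical helper: compares the intervals between adjacent pitch
    classes only, which is how the worked examples in the definition read.
    """
    pcs = sorted(set(pitch_classes_cents))
    if len(pcs) < 3:
        # One or two pitch classes: fewer than two intervals to compare.
        return None
    steps = [hi - lo for lo, hi in zip(pcs, pcs[1:])]
    return 0 if max(steps) - min(steps) <= tol else 1


# Scales from the definition, written as cents above C.
print(code_nonequidistant([0, 300, 600]))                   # C, Eb, F#
print(code_nonequidistant([0, 200, 400, 600, 800, 1000]))   # C, D, E, F#, G#, A#
print(code_nonequidistant([0, 300, 500]))                   # C, Eb, F
print(code_nonequidistant([0, 200, 400, 500, 700, 900]))    # C, D, E, F, G, A
print(code_nonequidistant([0, 700]))                        # only two pitch classes
```

The first two scales are coded 0, the next two are coded 1, and the last is NA (`None`). In practice a nonzero tolerance would be needed, because pitches in field recordings rarely fall on exact multiples of 100 cents.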

Data Coding and Reliability.

Of the 305 recordings from the Garland Encyclopedia of World Music (31), we excluded one recording containing only frog sounds to restrict the sample to human music (in consultation with the editor, Terry Miller, who selected the recordings for this volume). P.E.S. classified the remaining 304 recordings manually and supplemented and corrected these classifications with metadata from the Encyclopedia and by contacting recording fieldworkers and other experts.

To assess interrater reliability, E.S. independently coded a randomly selected subset of 30 recordings before being informed of the hypotheses. Mean percent agreement and Cohen’s Kappa values were calculated as in ref. 29 but using the binary codings operationalized here, rather than the multistate characters of the original classification schemes. Data are based on acoustic codings by P.E.S. and E.S. before applying any corrections based on nonacoustic metadata from the Garland Encyclopedia of World Music (31). Mean agreement for all 32 characters was 81%, and mean Kappa was 0.45 (Table S3).

Table S3.

Interrater reliability for the 32 features

| Feature | % agreement | Kappa |

| Aerophone use | 96.7 | 0.905 |

| Instrument use | 96.7 | 0.870 |

| Voice use | 93.3 | 0.850 |

| Discrete pitches | 93.3 | 0.714 |

| Word use | 93.3 | 0.441 |

| Chest voice | 93.3 | NA |

| Metrical hierarchy | 90.0 | 0.603 |

| Dissonant homophony | 90.0 | 0.571 |

| Pentatonic scale | 90.0 | 0.500 |

| ≤7 scale degrees | 90.0 | −0.043 |

| Nonequidistant scale | 90.0 | NA |

| Sex segregation | 86.7 | 0.767 |

| Group performance | 86.7 | 0.426 |

| Isochronous beat | 86.7 | 0.259 |

| Syllabic singing | 83.3 | 0.578 |

| Two- or three-beat subdivisions | 83.3 | NA |

| Loud volume | 80.0 | 0.585 |

| Percussion use | 80.0 | 0.532 |

| Vocal embellishment | 80.0 | 0.508 |

| Short phrases | 80.0 | 0.260 |

| Two-beat subdivisions | 76.7 | 0.452 |

| Membranophone use | 76.7 | 0.386 |

| Motivic patterns | 73.3 | 0.172 |

| Idiophone use | 70.0 | 0.363 |

| High register | 66.7 | 0.282 |

| Small intervals | 66.7 | 0.189 |

| Descending/arched contour | 66.7 | NA |

| Phrase repetition | 63.3 | 0.127 |

| Vocable use | 63.3 | 0.086 |

| Few durational values | 60.0 | −0.017 |

| Male performers | 56.7 | 0.649 |

| Dance accompaniment | NA | NA |

| Average | 80.8 | 0.445 |

The data are based on codings of 30 randomly selected recordings by P.E.S. and E.S. (SI Materials).
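The two statistics in Table S3 can be sketched for a single binary character as follows. The two coding vectors are invented for illustration, and treating NA as an ordinary category is an assumption of this sketch rather than a documented detail of the study.

```python
from typing import Hashable, Optional, Sequence


def percent_agreement(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Percentage of recordings that both raters coded identically."""
    return 100.0 * sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> Optional[float]:
    """Cohen's kappa: observed agreement corrected for chance agreement.

    Returns None when chance agreement equals 1 (both raters used a single,
    identical category throughout), because kappa is then undefined.
    """
    n = len(a)
    p_observed = sum(x == y for x, y in zip(a, b)) / n
    categories = set(a) | set(b)
    p_chance = sum((list(a).count(c) / n) * (list(b).count(c) / n)
                   for c in categories)
    if p_chance == 1.0:
        return None
    return (p_observed - p_chance) / (1.0 - p_chance)


# Invented codings of 10 recordings (1 = present, 0 = absent).
rater_1 = [1, 1, 1, 0, 0, 1, 0, 1, 1, 0]
rater_2 = [1, 1, 0, 0, 0, 1, 1, 1, 1, 0]

print(percent_agreement(rater_1, rater_2))        # 80.0
print(round(cohens_kappa(rater_1, rater_2), 3))   # 0.583
print(cohens_kappa([1, 1, 1], [1, 1, 1]))         # None
```

Because kappa discounts agreement expected by chance, a character that is present in nearly every recording can show high percent agreement together with a kappa near zero.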

SI Methods

Two sets of analyses were performed to establish whether different features and relationships represented statistical universals: (i) global analyses, which use phylogenetic comparative methods to control for historical relationships brought about by cultural descent from a common ancestor, and (ii) consistency of patterns across musical/geographic regions.

Global Analyses.

The sample of musical recordings used in this analysis cannot be considered statistically independent, because some recordings come from closely related cultural groups and some groups are represented by multiple recordings. To correct for the increased possibility of artificially inflated frequencies and spurious associations between features that such nonindependence can produce, we performed a series of phylogenetic comparative analyses.

To limit the potential impact of horizontal transmission (i.e., cross-cultural borrowing), which is not easily modeled using current methods for discrete characters (59), we used the detailed historical information in the Encyclopedia to exclude 103 recordings for which there was evidence of horizontal transmission (e.g., Christian hymns in Polynesia; Islamic music in sub-Saharan Africa; Euro-, Afro-, and Asian-American genres; Western-style choral arrangements from China and India, etc.). Removing these recordings from the full sample of 304 recordings resulted in a subsample of 201 indigenous recordings that was used for the phylogenetic comparative analyses.

Because the musical sample was so diverse, these 201 indigenous recordings represented 25 different language families or linguistic isolates, ranging from 1 to 54 recordings per language family (median: 3). The phylogenetic comparative methods used require large samples to provide sufficient statistical power, an issue compounded by the lack of variance in the sample owing to the near-universal nature of many of the features (61). It was thus not possible to follow the approach of analyzing each language family independently. Instead, we constructed a global tree by joining the language families at their roots. However, there is no agreed-upon method for doing this, because most linguists think there is not enough evidence to be able to say how language families are related to one another. We therefore performed a large number of confirmatory analyses to assess the effects of different modeling and sampling assumptions on the analyses, including altering the branch lengths that join the language families together within the phylogenetic tree (see below and Fig. S2).

Our primary analyses were restricted to the 201 recordings that were determined through accompanying documentation in the Encyclopedia to be relatively free from horizontal transmission, to limit non-tree-like processes that could not be modeled by the phylogenetic analysis. For completeness, we also ran the analyses on the full sample to assess whether inclusion of these nonindigenous recordings would alter our findings (Table S4).

Table S4.

Pearson correlation coefficients from correlations between parameter estimates from the alternative phylogenetic comparative analyses

| A) Pairwise relationships | Equal | Grafen | Nee | Pagel |

| Equal | | *0.969* | *0.976* | *0.983* |

| Grafen | 0.935 | | *0.979* | *0.970* |

| Nee | 0.961 | 0.969 | | *0.978* |

| Pagel | 0.964 | 0.958 | 0.978 | |

| B) Long-term frequency | | | | |

| Equal | | *0.990* | *0.986* | *0.993* |

| Grafen | 0.990 | | *0.996* | *0.998* |

| Nee | 0.971 | 0.993 | | *0.994* |

| Pagel | 0.990 | 0.996 | 0.990 | |

| C) Phylogenetic mean | | | | |

| Equal | | *0.995* | *0.991* | *0.991* |

| Grafen | 0.996 | | *0.999* | *0.996* |

| Nee | 0.986 | 0.998 | | *0.998* |

| Pagel | 0.990 | 0.997 | 0.998 | |

| D) Full vs. indigenous | | | | |

| Long-term frequency | 0.989 | 0.988 | 0.975 | 0.991 |

| Phylogenetic mean | 0.987 | 0.990 | 0.992 | 0.990 |

| Conditional | 0.918 | 0.926 | 0.928 | 0.921 |

(A–C) Comparison of results using different branch-length assumptions for (A) pairwise relationship LR values, (B) long-term frequency estimates, and (C) phylogenetically weighted mean frequency estimates. Upper triangles in italics in A–C represent results using the full sample (n = 304; i.e., including 103 recordings with evidence of horizontal transmission) and the lower triangles represent results using the indigenous subsample (n = 201). (D) Comparison of results using full sample vs. indigenous sample. See SI Methods for full details and Fig. S2 for visualizations of branch lengths under the assumptions of equal branch length and the transformation methods of Grafen, Nee, and Pagel.

Language phylogeny.

Following other studies of cultural evolution (e.g., refs. 1 and 35–41), we used a phylogenetic tree based on language as a proxy for the historical relationships among the cultures from which these recordings came. However, the majority of previous analyses in cultural phylogenetics have been within certain well-studied language families and have used linguistic data to construct phylogenetic trees using cutting-edge phylogenetic inference techniques adapted from evolutionary biology. Unfortunately, such methods of tree construction cannot yet be reliably applied to linguistic data on the global scale we require owing to the rate at which lexical data seem to evolve (although see, e.g., ref. 62 for innovative studies that have explored the possibility of extending the construction of linguistic phylogenies beyond language families). Here we constructed our global phylogenetic tree using information from classification schemes used by linguists [we note that a similar approach to using these classification schemes was taken by Dediu and Levinson (63) in examining the stability of linguistic structural features]. Ideally, in the future we would like to have information about global linguistic and cultural relationships in the form of phylogenies and/or networks derived in the same rigorous, quantitative ways as is done within language families. However, the use of language classifications combined with regional consistency analyses based on geographic/musical classifications is suitable for our current purposes, because we are not attempting a detailed reconstruction of changes in music over time, nor are we trying to estimate the form of music in the last common ancestral population. Instead, we are using the information from these historical relationships to assess whether there are any obvious sources of bias in the sampling of our data that might lead us to erroneous conclusions, and to control for such biases if they exist.

The topology of the phylogenetic tree used in this study was constructed by hand in the program Mesquite (64) using the classificatory scheme from Glottolog (42), a comprehensive online resource that catalogs information about the world’s languages. Relationships between recordings from speakers of the same language were represented as polytomies, as were other deeper relationships that could not be resolved in a bifurcating manner (i.e., several members of the same family). Because deeper relationships between language families are controversial and as there is no accepted global language phylogeny, we also represented relationships between language families as polytomies (as linguists do implicitly). This assumption of polytomies is obviously somewhat of a simplification. It may be possible to resolve these polytomies using intuitions based on genetics, historical information, or other sources, but these would involve additional assumptions. In preliminary analyses, we created phylogenies based on the classificatory schemes in the World Atlas of Language Structures (65) and the Ethnologue (66), which make slightly different decisions about how some languages or language groups are related to one another. These analyses produced broadly similar results, identifying the same statistical universals, suggesting that our results are robust to such disagreements about the phylogenetic relationships between the cultures in our sample.

These phylogenetic comparative analyses require information about the branch lengths of the phylogenetic tree. These branch lengths represent the evolutionary distance between taxa. When such trees are constructed from a previous analysis of linguistic data, then the branch lengths are often represented in units of linguistic change or time (if suitable calibration points are known, e.g., ref. 67). In comparative analyses, the branch lengths relate to the rate of trait evolution and are important because they inform the parameters of the evolutionary model that is being examined (57). Because our global language tree had to be constructed by hand, we lack information about what these branch lengths should be. We therefore made all branch lengths equal by assigning them a value of 1. It should be noted that this decision means that we are implicitly assuming that the rate of evolution is punctuated in such a way that trait evolution is related to the points at which lineages diverge or “speciate.” Interestingly, previous analyses using phylogenetic trees constructed from Indo-European, Austronesian, and Bantu linguistic data indicate that languages (or at least lexical items) tend to evolve in this punctuated manner (68), indicating that this assumption may not be entirely unreasonable for our musical data. It should also be noted that in terms of time a world language tree is probably at least an order of magnitude older than the time depth of known language families. However, our assumption of equal branch length means that the branch lengths joining language families to the root are generally shorter than the longest total branch length within families.

To assess the effect of this branch length assumption on our results, we created three new phylogenetic trees (Fig. S2) by transforming the branch lengths of our original equal-branch-length tree using three techniques that were developed for dealing with situations where branch lengths are unknown. (Such situations are becoming rarer in evolutionary biology owing to the widespread availability of molecular data.) All three methods constrain the tips to be contemporaneous (i.e., creating ultrametric trees) and were implemented in the PDAP:PDTREE module for Mesquite (64). Grafen’s method (69) sets each node at a height of one less than the number of tip units that descend from that node (i.e., if five songs descend from a particular node, then its height will be 4). A related method is Nee’s technique (reported in ref. 70) that sets node heights as the log of the number of tip units that descend from a node. Finally, Pagel’s method (71) sets the internode distances to a minimum of 1 (in PDAP, polytomies are dealt with by placing them at the minimum possible height). The patterns of branch lengths created by these differing techniques can be seen in Fig. S2. Interestingly the Grafen tree has relatively long branch lengths joining the root of the tree to the language families, which (in this respect at least) is in line with our expectation that the root of a world language tree would be relatively much older than that of the individual language families. This does not necessarily make it a better assumption than the other branch-length transformations, and we note that the other branch-length assumptions implicitly assume that changes at this earlier stage of cultural history are much less important in explaining current diversity than more recent cultural evolution, which may be equally valid depending on the rates of cultural evolution of particular traits. 
We should stress that our intention here is not to decide on which branch length assumption is best, but rather to simply assess what effect altering these branch lengths has on our ability to draw inferences from our comparative analyses. Overall, based on these confirmatory analyses and the regional consistency check, there does not seem to be a strong reason to suspect that our use of a global phylogeny is introducing any bias into the analyses that has led us to erroneous conclusions.
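The node-height rules described above for Grafen's and Nee's transformations can be sketched on a toy tree. Everything here is illustrative: the nested-list tree encoding, the function names, and the five-recording tree are assumptions, the choice of natural logarithm for Nee's rule is a guess (the base is not stated above), and the sketch does not reproduce the PDAP:PDTREE implementation or Pagel's method.

```python
import math
from typing import Callable, List, Optional, Tuple, Union

# A tip is a string; an internal node is a list of subtrees (polytomies allowed).
Tree = Union[str, List["Tree"]]
Row = Tuple[Tuple[str, ...], float, Optional[float]]


def tips(node: Tree) -> List[str]:
    """Names of all tips (recordings) that descend from a node."""
    if isinstance(node, str):
        return [node]
    return [name for child in node for name in tips(child)]


def grafen_height(n_tips: int) -> float:
    """Grafen: node height is one less than the number of descendant tips."""
    return float(n_tips - 1)


def nee_height(n_tips: int) -> float:
    """Nee: node height is the log of the number of descendant tips."""
    return math.log(n_tips)


def node_rows(node: Tree, height_fn: Callable[[int], float],
              parent_height: Optional[float] = None) -> List[Row]:
    """List (tips below node, node height, branch length to parent) per node."""
    below = tuple(tips(node))
    height = height_fn(len(below))
    length = None if parent_height is None else parent_height - height
    rows: List[Row] = [(below, height, length)]
    if not isinstance(node, str):
        for child in node:
            rows.extend(node_rows(child, height_fn, height))
    return rows


# Hypothetical tree: two language families joined at the root.
toy_tree: Tree = [["a1", "a2", "a3"], ["b1", "b2"]]

for label, fn in (("Grafen", grafen_height), ("Nee", nee_height)):
    print(label)
    for below, height, length in node_rows(toy_tree, fn):
        print("  ", below, round(height, 3),
              None if length is None else round(length, 3))
```

Under both rules every tip has height 0, so the resulting trees are ultrametric. With Grafen's rule the five-tip root sits at height 4, matching the example in the text, and the branches joining the two families to the root (lengths 2 and 3) are longer than the branches within the families.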

Universal features.

Two models were used to calculate the frequency of different musical features while taking into account the information provided by the phylogenetic relationships between recordings. The first model infers the rate at which these traits are gained or lost and uses this to calculate their expected long-term frequency. An assumption of this model is that NA values represent “unknown” values. However, in most cases NAs represent nested characters where absence in a higher level by definition implies absence at a lower level. For example, absence of an isochronous beat by definition implies absence of two- or three-beat subdivisions, and thus music without an isochronous beat is coded as NA for two- or three-beat subdivision. To guard against the risk that this assumption might produce spurious results, we also ran the same analyses using a second model that excludes NA values when assessing the proportion of samples in which a trait is absent (0) or present (1) by calculating a phylogenetically weighted mean of each variable. However, this method in turn has the drawback that it assumes that the traits are evolving via Brownian motion, an assumption that is violated by the use of data that are categorically distributed. Although the two analyses have very different assumptions, they produced highly concordant results (see below and Table S5).

Table S5.

Comparison of results using long-term frequency and phylogenetically weighted mean models for phylogenetic comparative analysis of candidate features

| | Long-term frequency | | Phylogenetically weighted mean |

| Feature | Long-term frequency | Likelihood ratio | Alpha |

| Two- or three-beat subdivisions | 0.992 | 2.512 | 0.995 |

| Nonequidistant scale | 0.981 | 4.711 | 0.927 |

| ≤7 scale degrees | 0.964 | 7.026 | 0.995 |

| Chest voice | 0.929 | 5.097 | 0.976 |

| Discrete pitches | 0.904 | 0.360 | 0.990 |

| Motivic patterns | 0.902 | 22.58 | 0.836 |

| Descending/arched contour | 0.894 | 10.133 | 0.960 |

| Word use | 0.865 | 16.142 | 0.636 |

| Small intervals | 0.861 | 11.895 | 0.854 |

| Isochronous beat | 0.850 | 11.659 | 0.764 |

| Two-beat subdivisions | 0.838 | 6.545 | 0.805 |

| Short phrases | 0.811 | 19.297 | 0.827 |

| Instrument use | 0.790 | 12.836 | 0.796 |

| Male performers | 0.785 | 21.728 | 0.852 |

| Metrical hierarchy | 0.775 | 6.926 | 0.675 |

| Sex segregation | 0.773 | 4.077 | 0.803 |

| Phrase repetition | 0.765 | 14.813 | 0.676 |

| Group performance | 0.741 | 5.747 | 0.699 |

| Voice use | 0.726 | 23.538 | 0.838 |

| Few durational values | 0.716 | 20.748 | 0.747 |

| Percussion use | 0.707 | 3.890 | 0.616 |

| Vocal embellishment | 0.605 | 2.067 | 0.680 |

| Syllabic singing | 0.509 | 0.009 | 0.459 |

| Vocable use | 0.421 | 2.013 | 0.559 |

| Loud volume | 0.394 | 6.052 | 0.441 |

| Membranophone use | 0.394 | 1.956 | 0.204 |

| High register | 0.351 | 8.612 | 0.419 |

| Idiophone use | 0.327 | 4.261 | 0.480 |

| Dance accompaniment | 0.325 | 6.446 | 0.215 |

| Dissonant homophony | 0.308 | 1.408 | 0.186 |

| Aerophone use | 0.260 | 18.043 | 0.407 |

| Pentatonic scale | 0.198 | 12.937 | 0.202 |

Bold values indicate values that were significantly greater than 0.5 (LR > 3.84; SI Methods).

As we stress in the main text, our goal here is not to reconstruct the evolutionary history of these traits or to infer the most likely features of music in a common ancestral culture. Rather, we are simply using these techniques as a means of controlling for some of the underlying historical connections between these musical cultures so as to provide a more balanced estimate of the frequency of certain traits in our contemporary sample.

Long-term frequency.

The evolution of a binary trait (i.e., a trait taking states 0 or 1) can be modeled as a continuous-time Markov process (58), with the parameters of the model being instantaneous rates of change (q) for both gain (q01) and loss (q10). Under this method, the trait of interest is mapped onto the tips of the phylogenetic tree and the rate parameters are inferred using maximum likelihood, based on the distribution of the traits and the branch lengths of the tree. By comparing the relative inferred values of gain and loss, we can assess what the expected frequency of 0s and 1s would be if the evolutionary process had been running for a long time with a large number of songs (i.e., such that any idiosyncratic evolutionary event did not substantially bias the frequency). The formula to calculate this frequency is

$$\pi_1 = \frac{q_{01}}{q_{01} + q_{10}}, \qquad \pi_0 = 1 - \pi_1 = \frac{q_{10}}{q_{01} + q_{10}},$$

where π1 and π0 are the expected long-term frequencies of states 1 (present) and 0 (absent), respectively.

It should be noted that a simpler evolutionary model in which the rates of gain and loss are equal (q01 = q10) may explain the data as well as the two-rate model does. Under this model, the long-term expected frequency of 0s and 1s will also be equal. Whether a single or two-rate model explains the data better can be assessed using likelihood ratio tests. The ability to significantly detect support for the extra parameter of the two-rate model requires sufficient statistical power (and these results may therefore be conservative). In Table S5, we report all of the expected frequencies based on the inferred rates of change under the two-rate model and note where the analyses were unable to reject the one-rate model.
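To make the relationship between the two rates and the expected long-term frequency concrete, the sketch below evaluates the stationary frequency of a two-state continuous-time Markov process and shows how the probability of being in state 1 approaches it over time. The rate values are invented for illustration and are not estimates from this study; the function names are likewise hypothetical.

```python
import math


def long_term_frequency(q01: float, q10: float) -> float:
    """Expected long-term frequency of state 1 given gain and loss rates."""
    return q01 / (q01 + q10)


def prob_state1(t: float, q01: float, q10: float, start_state: int) -> float:
    """Probability of being in state 1 after time t, starting in start_state.

    Standard closed form for a two-state process: the starting state is
    forgotten exponentially fast at rate (q01 + q10).
    """
    pi1 = long_term_frequency(q01, q10)
    start = 1.0 if start_state == 1 else 0.0
    return pi1 + (start - pi1) * math.exp(-(q01 + q10) * t)


# Invented rates: gain is nine times faster than loss.
print(long_term_frequency(0.9, 0.1))

# One-rate model (q01 = q10): both states are expected equally often.
print(long_term_frequency(0.3, 0.3))

# The starting state matters less and less as time goes on.
for t in (0.0, 1.0, 5.0, 50.0):
    print(t, round(prob_state1(t, 0.9, 0.1, start_state=0), 4))
```

Only the ratio of gain to loss determines the long-term frequency; the absolute size of the rates controls how quickly that frequency is approached.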

All analyses were run using BayesTraits. The likelihood ratio statistic (LR) for these analyses was calculated as

$$\mathrm{LR} = 2\left[\ln L(\text{two-rate model}) - \ln L(\text{one-rate model})\right]$$

with degrees of freedom equal to the difference in number of parameters between the two models (i.e., 1). This gave a critical threshold value of 3.84 for assessing significance at P < 0.05.
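A minimal sketch of this test follows, assuming the LR is twice the difference in log-likelihood between the two-rate and one-rate models and is referred to a χ² distribution with 1 degree of freedom. The log-likelihood values are invented for illustration and the function names are hypothetical.

```python
import math


def likelihood_ratio(lnl_simple: float, lnl_complex: float) -> float:
    """LR statistic: twice the log-likelihood gain of the more complex model."""
    return 2.0 * (lnl_complex - lnl_simple)


def chi2_sf_df1(x: float) -> float:
    """Upper-tail probability of a chi-square variable with 1 df.

    Uses the identity P(Z**2 > x) = erfc(sqrt(x / 2)) for a standard normal Z.
    """
    return math.erfc(math.sqrt(x / 2.0))


# The threshold quoted in the text corresponds to P of about 0.05.
print(round(chi2_sf_df1(3.84), 3))  # 0.05

# Invented log-likelihoods for a one-rate and a two-rate model.
lr = likelihood_ratio(lnl_simple=-120.0, lnl_complex=-115.0)
print(lr)                      # 10.0
print(lr > 3.84)               # True: the one-rate model is rejected
print(chi2_sf_df1(lr) < 0.05)  # True
```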

Among all our results, two features gave a counterintuitive outcome: for two- or three-beat subdivisions and for discrete pitches, the long-term frequency model using equal branch length was unable to reject the one-rate model at P < 0.05 despite the fact that the frequency estimates were extremely high (0.992 and 0.904, respectively). Curiously, the analysis was able to reject the one-rate model for two-beat subdivision alone, even though two-beat subdivision is by definition nested within two- or three-beat subdivisions (see SI Materials for precise operationalization). We believe that this is an artifact of the treatment of NA values as “unknown” mentioned above, because two- or three-beat subdivision is the character with the highest ratio of NA to absent values (89:1; 89 recordings were coded as NA because they were not isometric, whereas only 1 recording was coded as absent, meaning that it was isometric but the meter was not subdivided into multiples of two or three beats). Furthermore, five out of the six alternative analyses using the three different branch-length transformations were able to reject the one-rate model, and the phylogenetically weighted mean trait model described below returned even higher estimates for these features of 0.995 and 0.990, respectively (Table S5). Therefore, we decided to include discrete pitches and two- or three-beat subdivisions in our list of statistical universals.

Phylogenetically weighted mean.

The evolution of a continuous trait can be modeled using a phylogenetic generalized least squares (PGLS) approach. Under PGLS, traits are assumed to be evolving under Brownian motion, and the variance of the trait (which can be interpreted as its rate of evolution) can be calculated using information from the phylogenetic tree and its branch lengths. This information allows for the calculation of the most likely value of the trait at the root of the phylogenetic tree, which can also be interpreted as the mean of the data taking into account the phylogenetic relationships (57). Indeed, it is this latter interpretation that we are concerned with here, rather than wishing to say anything definitive about music in a common ancestral population. For a binary variable that takes values of 0 and 1, the proportion of 1s can be found by calculating the mean of the variable. Therefore, for our purposes, although we are dealing with discrete binary traits (therefore clearly violating the assumption that this trait is evolving via Brownian motion), this approach of using PGLS can be thought of as giving us the proportion of 1s (features present) for each variable in the data while controlling for the nonindependent nature of our samples [see also Felsenstein (72) for a discussion about how discrete characters can be modeled using continuous trait evolution under the threshold model].

Analyses were conducted using the constant-variance random walk option for continuous traits in the program BayesTraits under maximum likelihood estimation. The alpha parameter gives the phylogenetically weighted mean.
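The idea of a phylogenetically weighted mean can be illustrated with a small textbook calculation: under Brownian motion, the estimate at the root is obtained by pooling subtrees with inverse-variance weights, which gives the same value as the generalized least squares mean. This is a sketch under stated assumptions (hypothetical tuple-based tree encoding, strictly positive branch lengths, invented toy data) and does not reproduce BayesTraits' own estimation.

```python
from typing import List, Tuple, Union

# A tip is (value, branch_length); an internal node is (children, branch_length).
Node = Tuple[Union[float, list], float]


def _summarize(node: Node) -> Tuple[float, float]:
    """Return (estimate, effective branch length) for the subtree at `node`."""
    payload, length = node
    if not isinstance(payload, list):
        return float(payload), length
    estimate, variance = _pool(payload)
    # Uncertainty about the node's own value lengthens its branch.
    return estimate, length + variance


def _pool(children: List[Node]) -> Tuple[float, float]:
    """Inverse-variance weighted mean of child subtrees and its variance."""
    summaries = [_summarize(child) for child in children]
    weights = [1.0 / v for _, v in summaries]
    total = sum(weights)
    estimate = sum(w * x for w, (x, _) in zip(weights, summaries)) / total
    return estimate, 1.0 / total


def phylo_weighted_mean(root_children: List[Node]) -> float:
    """Root estimate under Brownian motion for a binary-coded trait."""
    return _pool(root_children)[0]


# Toy data: three closely related recordings all have the feature (1),
# and one distantly related recording lacks it (0).
clade = ([(1, 1.0), (1, 1.0), (1, 1.0)], 1.0)
lone_tip = (0, 2.0)

raw_mean = (1 + 1 + 1 + 0) / 4
print(raw_mean)                                          # 0.75
print(round(phylo_weighted_mean([clade, lone_tip]), 3))  # 0.6
```

The three related recordings share part of their history, so together they count for less than three independent observations, and the weighted mean (0.6) falls below the raw proportion (0.75). On a star tree with equal branch lengths the two values coincide.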

Comparison between the long-term frequency and phylogenetically weighted mean methods.

Although the two methods have very different underlying assumptions, the results produced were highly concordant (Table S5). There is a very high correlation (r = 0.928) between the alpha parameter from the PGLS analysis and the long-term frequency based on the parameter estimates of the Markov model. Furthermore, for the 21 globally significant features, both models gave frequency estimates of over 0.6, well over our threshold criterion of 0.5. There were only two features, syllabic singing and vocable use, for which the two methods produced substantially different results (i.e., the long-term frequency estimates of 0.509 and 0.421 are on the opposite sides of the threshold criterion of 0.5 compared with their respective PGLS estimates of 0.459 and 0.559). This had no effect on our final results because both syllabic singing and vocable use failed our regional consistency criterion. Thus, our results were robust to the different trait model assumptions.
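The comparison can be retraced from the values printed in Table S5. The sketch below computes a Pearson correlation between the two sets of estimates and lists the features whose estimates fall on opposite sides of 0.5. The numbers are transcribed from the table as printed (three decimals), so the correlation obtained this way may differ slightly from the reported value; the variable and function names are illustrative only.

```python
import math

# (feature, long-term frequency, alpha) transcribed from Table S5.
TABLE_S5 = [
    ("Two- or three-beat subdivisions", 0.992, 0.995),
    ("Nonequidistant scale", 0.981, 0.927),
    ("<=7 scale degrees", 0.964, 0.995),
    ("Chest voice", 0.929, 0.976),
    ("Discrete pitches", 0.904, 0.990),
    ("Motivic patterns", 0.902, 0.836),
    ("Descending/arched contour", 0.894, 0.960),
    ("Word use", 0.865, 0.636),
    ("Small intervals", 0.861, 0.854),
    ("Isochronous beat", 0.850, 0.764),
    ("Two-beat subdivisions", 0.838, 0.805),
    ("Short phrases", 0.811, 0.827),
    ("Instrument use", 0.790, 0.796),
    ("Male performers", 0.785, 0.852),
    ("Metrical hierarchy", 0.775, 0.675),
    ("Sex segregation", 0.773, 0.803),
    ("Phrase repetition", 0.765, 0.676),
    ("Group performance", 0.741, 0.699),
    ("Voice use", 0.726, 0.838),
    ("Few durational values", 0.716, 0.747),
    ("Percussion use", 0.707, 0.616),
    ("Vocal embellishment", 0.605, 0.680),
    ("Syllabic singing", 0.509, 0.459),
    ("Vocable use", 0.421, 0.559),
    ("Loud volume", 0.394, 0.441),
    ("Membranophone use", 0.394, 0.204),
    ("High register", 0.351, 0.419),
    ("Idiophone use", 0.327, 0.480),
    ("Dance accompaniment", 0.325, 0.215),
    ("Dissonant homophony", 0.308, 0.186),
    ("Aerophone use", 0.260, 0.407),
    ("Pentatonic scale", 0.198, 0.202),
]


def pearson(xs, ys):
    """Pearson product-moment correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


ltf = [row[1] for row in TABLE_S5]
alpha = [row[2] for row in TABLE_S5]
r = pearson(ltf, alpha)
print(round(r, 3))

# Features whose two estimates straddle the 0.5 threshold.
discordant = [name for name, f, a in TABLE_S5 if (f - 0.5) * (a - 0.5) < 0]
print(discordant)  # ['Syllabic singing', 'Vocable use']
```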

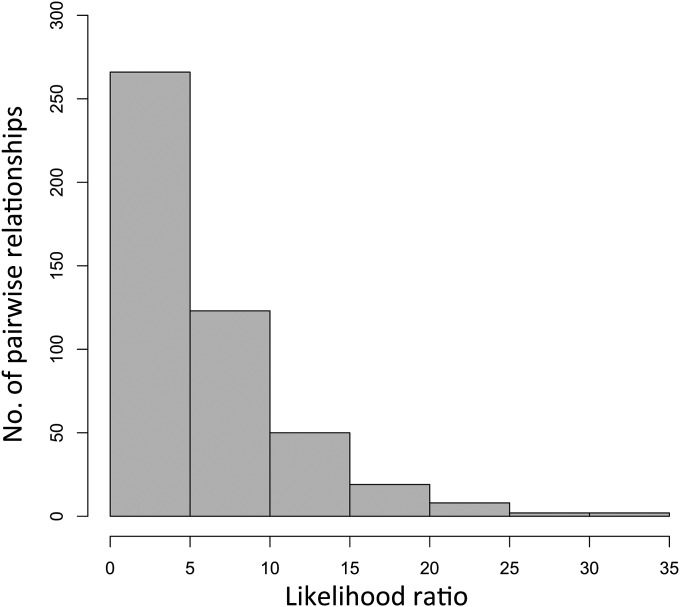

Universal relationships.

Pagel’s coevolutionary method (58) models the evolution of two binary traits over a phylogenetic tree and uses information about the distribution of the traits at the tips of the tree and the branch lengths of the tree to estimate rates of evolutionary change (and is an extension of the continuous-time Markov model for a single trait described above). Under this method, a model in which two binary traits evolve independently is statistically compared with a dependent (or coevolutionary) model in which the state of one trait influences the rate at which the other trait changes state. Again the LR is used to determine whether the dependent model fits the data significantly better than the independent model, and is generally taken to approximate a χ² distribution with degrees of freedom (df) equal to the difference in number of parameters between the two models. Here df = 4, and therefore an LR value greater than 9.49 is the threshold for assessing statistical significance at P < 0.05 (Table S1). The calculation of the LR statistic for the coevolutionary analyses is given below:

$$\mathrm{LR} = 2\left[\ln L(\text{dependent model}) - \ln L(\text{independent model})\right]$$
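The parameter counts behind the four degrees of freedom can be made explicit with a small sketch of the two rate structures. It assumes the usual formulation in which the two traits form four combined states and only one trait may change at a time; the state ordering, function names, and rate values are hypothetical, and no likelihoods are computed here.

```python
import math
from typing import Dict, List, Tuple

State = Tuple[int, int]  # (state of trait A, state of trait B)
STATES: List[State] = [(0, 0), (0, 1), (1, 0), (1, 1)]


def single_changes() -> List[Tuple[State, State]]:
    """All ordered pairs of combined states that differ in exactly one trait."""
    return [(s, t) for s in STATES for t in STATES
            if sum(x != y for x, y in zip(s, t)) == 1]


def independent_rates(a01: float, a10: float,
                      b01: float, b10: float) -> Dict[Tuple[State, State], float]:
    """Independent model: each trait's rates ignore the other trait's state."""
    rates = {}
    for s, t in single_changes():
        if s[0] != t[0]:                      # trait A changes
            rates[(s, t)] = a01 if t[0] == 1 else a10
        else:                                 # trait B changes
            rates[(s, t)] = b01 if t[1] == 1 else b10
    return rates


def rate_matrix(rates: Dict[Tuple[State, State], float]) -> List[List[float]]:
    """4 x 4 rate matrix; double changes stay at 0 and rows sum to 0."""
    q = [[0.0] * 4 for _ in range(4)]
    for (s, t), rate in rates.items():
        q[STATES.index(s)][STATES.index(t)] = rate
    for i in range(4):
        q[i][i] = -sum(q[i])
    return q


def chi2_sf_df4(x: float) -> float:
    """Upper-tail probability of a chi-square variable with 4 df (closed form)."""
    return math.exp(-x / 2.0) * (1.0 + x / 2.0)


n_dependent = len(single_changes())                                   # 8 free rates
n_independent = len(set(independent_rates(0.1, 0.2, 0.3, 0.4).values()))  # 4 free rates
print(n_dependent - n_independent)   # 4 degrees of freedom
print(round(chi2_sf_df4(9.49), 3))   # 0.05
```

In the dependent model each of the eight single-trait transitions receives its own rate, whereas the independent model ties them together in pairs, which is where the difference of four parameters comes from.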

Table S1.

LR values from the phylogenetic comparative analysis of the pairwise relationships among the 32 features

| Feature | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | 23 | 24 | 25 | 26 | 27 | 28 | 29 | 30 | 31 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1) Isochronous beat |
| 2) Metrical hierarchy | na |
| 3) Two- or three-beat subdivisions | na | na |
| 4) Two-beat subdivisions | na | na | na |
| 5) Motivic patterns | 39 | 8 | 1 | 2 |
| 6) Few durational values (<5) | 28 | 7 | 3 | 2 | 24 |
| 7) Discrete pitches | 10 | 2 | 1 | 3 | 1 | 2 |
| 8) ≤7 scale degrees | 4 | 8 | 0 | 2 | 3 | 4 | na |
| 9) Pentatonic (five-note) scale | 7 | 8 | 1 | 4 | 11 | 8 | na | 5 |
| 10) Small intervals (<750 cents) | 5 | 8 | 0 | 3 | 13 | 2 | 5 | 3 | 3 |
| 11) Descending/arched contour | 2 | 7 | 0 | 2 | 1 | 1 | 1 | 1 | 4 | 6 |
| 12) Syllabic singing | 17 | 7 | 7 | 3 | 15 | 21 | 11 | 8 | 10 | 7 | 9 |
| 13) Vocable (nonlexical syllable) use | 1 | 7 | 2 | 1 | 2 | 3 | 2 | 2 | 7 | 2 | 1 | 15 |
| 14) Word use | 3 | 9 | 1 | 3 | 7 | 4 | 8 | 2 | 3 | 3 | 5 | 16 | na |
| 15) Dissonant homophony | 2 | 6 | 1 | 2 | 5 | 1 | 2 | 1 | 5 | 1 | 2 | 6 | 6 | 3 |
| 16) Phrase repetition | 7 | 11 | 5 | 7 | 34 | 16 | 5 | 3 | 5 | 8 | 1 | 19 | 5 | 2 | 4 |
| 17) Short phrases (<9 s) | 6 | 19 | 0 | 3 | 8 | 3 | 7 | 2 | 4 | 3 | 1 | 6 | 2 | 3 | 2 | 11 |
| 18) Group performance | 24 | 16 | 2 | 6 | 4 | 3 | 4 | 1 | 12 | 6 | 2 | 3 | 9 | 7 | 4 | 3 | 3 |
| 19) Vocal embellishment | 15 | 3 | 3 | 5 | 11 | 12 | 4 | 3 | 10 | 5 | 4 | na | 3 | 6 | 5 | 11 | 5 | 10 |
| 20) Loud volume | 3 | 5 | 2 | 2 | 4 | 3 | 4 | 7 | 2 | 5 | 3 | 5 | 6 | 12 | 4 | 8 | 4 | 8 | 5 |
| 21) High register | 4 | 6 | 1 | 5 | 2 | 3 | 8 | 5 | 4 | 4 | 1 | 10 | 8 | 7 | 5 | 6 | 4 | 3 | 4 | 10 |
| 22) Modal register (chest voice) | 6 | 7 | 1 | 2 | 2 | 3 | 8 | 4 | 1 | 2 | 1 | 3 | 11 | 15 | 3 | 2 | 2 | 9 | 5 | 3 | 13 |
| 23) Idiophone use | 4 | 1 | 4 | 5 | 10 | 7 | 3 | 2 | 11 | 2 | 6 | 13 | 12 | 9 | 7 | 15 | 5 | 15 | 20 | 3 | 5 | 5 |
| 24) Membranophone use | 9 | 20 | 4 | 2 | 10 | 5 | 4 | 4 | 8 | 5 | 0 | 14 | 3 | 6 | 2 | 15 | 8 | 24 | 18 | 8 | 10 | 5 | 3 |
| 25) Aerophone use | 6 | 9 | 3 | 8 | 4 | 8 | 4 | 4 | 9 | 13 | 3 | 12 | 4 | 9 | 3 | 4 | 2 | 13 | 11 | 2 | 5 | 5 | 14 | 2 |
| 26) Voice use | 9 | 4 | 2 | 2 | 4 | 3 | 6 | 2 | 7 | 3 | 8 | na | na | na | 2 | 2 | 3 | 7 | na | 21 | 10 | na | 4 | 6 | 8 |
| 27) (Nonvocal) instrument use | 16 | 25 | 1 | 2 | 2 | 5 | 4 | 3 | 6 | 2 | 4 | 12 | 3 | 7 | 1 | 4 | 4 | 12 | 4 | 5 | 4 | 7 | na | na | na | na |
| 28) Percussion use | 11 | 10 | 3 | 1 | 8 | 4 | 7 | 4 | 6 | 11 | 5 | 14 | 12 | 5 | 3 | 11 | 4 | 34 | 13 | 6 | 4 | 4 | na | na | 7 | 8 | na |
| 29) Dance accompaniment | 20 | 9 | 2 | 5 | 11 | 14 | 11 | 2 | 6 | 3 | 3 | 11 | 12 | 14 | 17 | 15 | 3 | 12 | 10 | 22 | 2 | 1 | 5 | 1 | 6 | 2 | 17 | 4 |
| 30) Male performers | 3 | 6 | 3 | 1 | 3 | 1 | 4 | 4 | 2 | 1 | 7 | 2 | 12 | 12 | 1 | 1 | 3 | 3 | 2 | 3 | 1 | 8 | 4 | 4 | 1 | 13 | 2 | 5 | 3 |
| 31) Sex segregation | 3 | 8 | 3 | 4 | 8 | 7 | 10 | 3 | 7 | 8 | 2 | 16 | 5 | 4 | 2 | 15 | 3 | na | 16 | 7 | 3 | 6 | 14 | 5 | 4 | 5 | 6 | 3 | 15 | na |
| 32) Nonequidistant scale | 2 | 4 | 1 | 2 | 1 | 3 | na | 1 | 5 | 1 | 1 | 4 | 2 | 3 | 2 | 3 | 1 | 4 | 6 | 1 | 2 | 0 | 4 | 4 | 1 | 2 | 2 | 6 | 6 | 2 | 4 |

Relationships implied by definition are indicated as “na.” Regular text indicates positive relationships, and italics indicate negative relationships. Significant relationships (LR > 9.49) are highlighted in bold. Relationships whose direction was consistent across all nine regions are underlined. LR values are rounded to the nearest whole number.

Confirmatory analyses.

Both types of feature analyses and the relationship analyses were performed using the phylogenies under the four different branch lengths, using both the full sample of recordings and the indigenous subsample. Table S4 indicates that parameter inferences for all features and relationships under the different branch length assumptions are highly correlated with one another in both the full and the indigenous samples (r ranges from 0.935 to 0.999). This indicates that our results are robust to specific assumptions about the branch lengths of the phylogenetic tree. Furthermore, the correlations between analyses performed on the full sample vs. the indigenous sample are also very high (r ranges from 0.918 to 0.992). Taken together with the correlation of 0.928 between the phylogenetic mean and long-term frequency analyses reported above, these correlations had a mean of 0.979. Importantly, none of these alternative analyses had any effect on our final lists of statistical universals, with one exception: The relationship between idiophone use and group performance fell just below the threshold for significance (LR < 9.49) in two of the eight alternative analyses (the Grafen and Nee trees for the indigenous subsample gave LR values of 8.19 and 8.36, respectively). However, this has no effect on our general conclusions regarding the inclusion of percussion instruments in the network of universal relationships, because both the group-percussion and group-membranophone relationships were significant across all alternative analyses.

Regional Consistency.

For the regional comparisons (Figs. 2 and 3), we only considered the direction of the trend, not its statistical significance, because the smaller sample sizes (n = 14–54) and the stringent criterion that a given candidate must display the same trend across all nine regions without exception would have resulted in an unacceptably high type II error rate. We used both indigenous and nonindigenous recordings from each region because the goal was to account for the various types of musical/regional influences not accounted for by the linguistic phylogeny—including non-tree-like processes—and because the indigenous subsamples would have resulted in unacceptably small sample sizes for some regions (subsample sizes: Africa = 15 recordings, South America = 4, North America = 3, Southeast Asia = 10, Southern Asia = 30, Middle East = 28, Eastern Asia = 33, Europe = 33, and Oceania = 45).

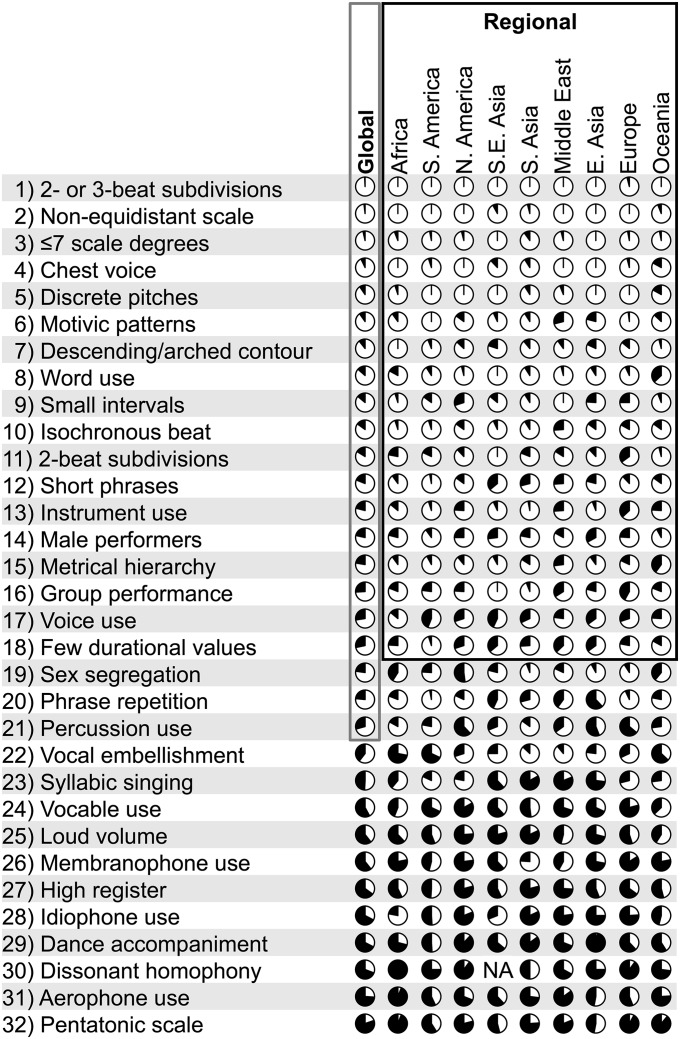

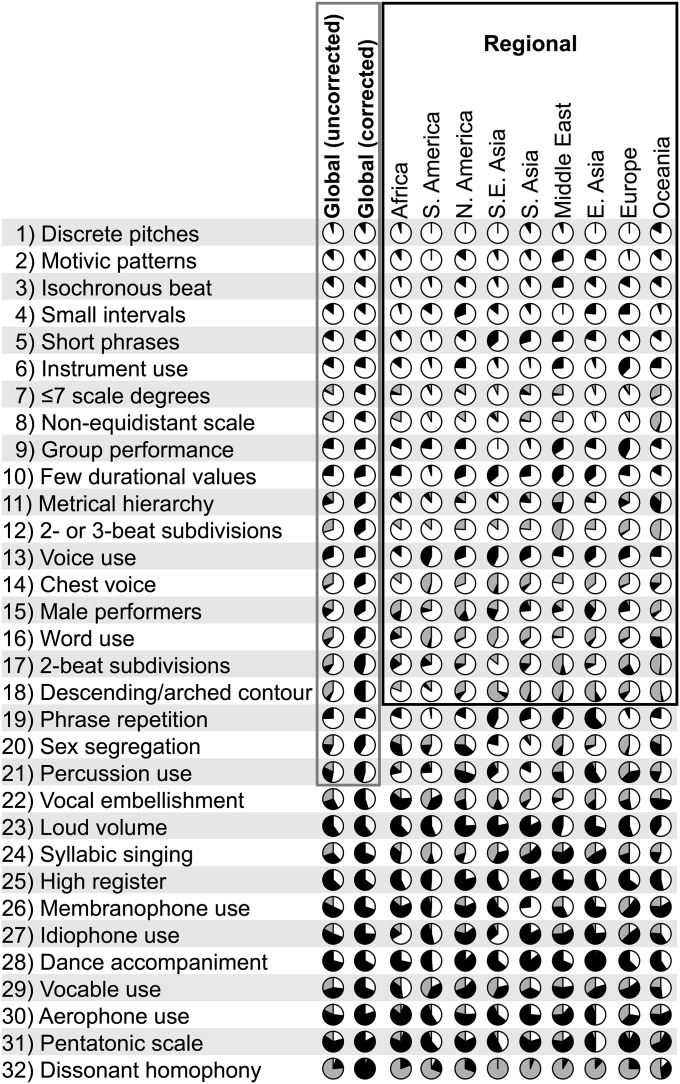

Fig. 2.

Pie charts for the 32 musical features organized according to their frequencies. White slices indicate presence, and black slices indicate absence. [NA (not applicable) codings do not contribute to these analyses; see Fig. S3 for an alternate visualization that includes NAs.] Features whose phylogenetically controlled global frequencies were significantly greater than 0.5 are highlighted using a gray box, and features with frequencies greater than 0.5 in each of the nine regions are highlighted using a black box. Note that vocal embellishment and syllabic singing have global frequencies whose values are slightly greater than 0.5, but not significantly so (SI Methods). The pie chart for dissonant homophony in Southeast Asia is not included because all 14 recordings from this region were coded as NA.

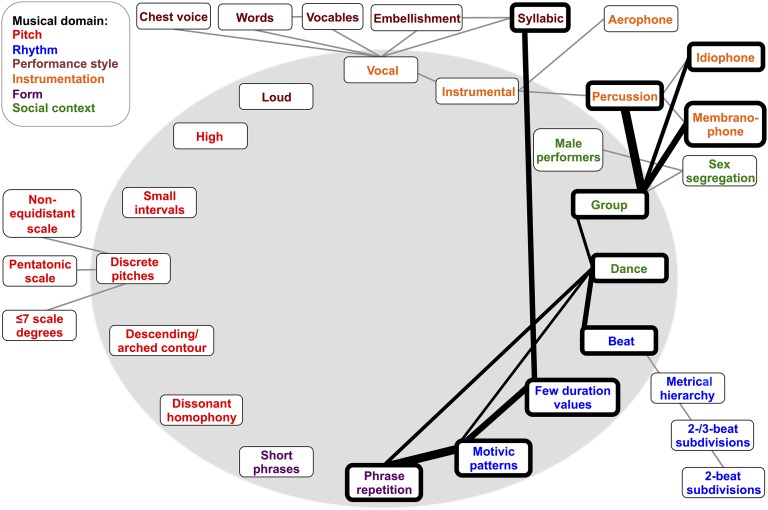

Fig. 3.

Universal relationships between the 32 musical features. Features are color-coded by musical domain (see legend at top left). The 16 nonnested features are distributed around the shaded oval, and the remaining 16 nested features outside the oval require one of the former features by definition. Dependent relationships implied by definition are highlighted using gray lines and were excluded from the analyses. Black lines indicate universal relationships that were significant after controlling for phylogenetic relationships and whose directions were consistent across all nine regions, with the width of each line proportional to the strength of the LR statistic from the phylogenetic coevolutionary analysis (see Table S1 for all pairwise LR values). Ten features (highlighted using bold boxes) formed a single interconnected network joined by one or more universal relationships.

Universal features.

Frequencies of the 32 candidate features (Fig. 2) were calculated for each region, with the criterion of presence outnumbering absence for each of the nine regions.
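The regional criterion for features can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: codings are represented as 1 (present), 0 (absent), or `None` (NA), NA codings are simply ignored, and the region names and values are hypothetical toy data. How a region with only NA codings should be treated is also an assumption here (it fails the criterion in this sketch).

```python
from typing import Dict, List, Optional

Coding = Optional[int]  # 1 = present, 0 = absent, None = NA (ignored)


def presence_outnumbers_absence(codings: List[Coding]) -> bool:
    """True if 'present' codings strictly outnumber 'absent' codings."""
    present = sum(1 for c in codings if c == 1)
    absent = sum(1 for c in codings if c == 0)
    # Assumption: a region with no non-NA codings (0 vs. 0) does not pass.
    return present > absent


def regionally_consistent(by_region: Dict[str, List[Coding]]) -> bool:
    """True if presence outnumbers absence in every region without exception."""
    return all(presence_outnumbers_absence(c) for c in by_region.values())


# Hypothetical toy codings for one feature in three made-up regions.
toy_feature = {
    "Region A": [1, 1, 0, None, 1],
    "Region B": [1, 0, 1, 1],
    "Region C": [1, 1, None, 0],
}
print(regionally_consistent(toy_feature))
```

Because the criterion requires every region to pass, a single region in which absence equals or exceeds presence is enough to exclude a feature.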

Universal relationships.

The directions of pairwise relationships were calculated using Pearson’s Φ coefficient, with the criterion of the coefficient having the same sign (all positive or all negative) for each of the nine regions. Φ is an association coefficient directly related to the χ² statistic, but it can take values from −1 to 1. It is also directly related to Pearson’s r correlation coefficient but is appropriate for cases like ours in which the underlying data are from a dichotomous (i.e., presence/absence) nominal variable, rather than a continuous variable.
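A minimal sketch of the Φ calculation and the same-sign criterion is given below. It assumes each region is summarized as a 2 × 2 contingency table (a = both features present, b = first only, c = second only, d = neither) and uses the standard formula Φ = (ad − bc) / √((a+b)(c+d)(a+c)(b+d)), with χ² = nΦ². The function names and the counts are hypothetical and are not data from the study.

```python
import math
from typing import Dict, Tuple

# (a, b, c, d): a = both present, b = first only, c = second only, d = neither
Table = Tuple[int, int, int, int]


def phi_coefficient(a: int, b: int, c: int, d: int) -> float:
    """Pearson's phi for a 2x2 table; NaN if any marginal total is zero."""
    denom = math.sqrt((a + b) * (c + d) * (a + c) * (b + d))
    if denom == 0:
        return float("nan")
    return (a * d - b * c) / denom


def chi_square_from_phi(phi: float, n: int) -> float:
    """Chi-square statistic implied by phi for a table with n observations."""
    return n * phi ** 2


def same_sign_everywhere(tables: Dict[str, Table]) -> bool:
    """True if phi is strictly positive in all regions or strictly negative in all."""
    phis = [phi_coefficient(*t) for t in tables.values()]
    # NaN compares as False, so an undefined phi fails the criterion.
    return all(p > 0 for p in phis) or all(p < 0 for p in phis)


# Hypothetical counts for one feature pair in three made-up regions.
toy_tables = {
    "Region A": (10, 2, 3, 5),
    "Region B": (8, 4, 2, 6),
    "Region C": (12, 3, 4, 9),
}
for region, table in toy_tables.items():
    phi = phi_coefficient(*table)
    print(region, round(phi, 3), round(chi_square_from_phi(phi, sum(table)), 3))
print(same_sign_everywhere(toy_tables))
```

Only the sign of Φ enters the regional criterion; the magnitude is printed here purely to show the link to χ².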

Results and Discussion

Universal Features.

Fig. 2 and Fig. S3 show the frequencies of the 32 candidate features. Twenty-one features had phylogenetically controlled global frequencies significantly greater than 0.5 (Fig. 2, gray box). Eight features that were specifically predicted to be universal failed to meet this threshold: vocal embellishment, syllabic singing, vocables, membranophones, idiophones, aerophones, dissonant homophony, and pentatonic scales. Many of these features do display widespread distributions and surprising cross-cultural similarities when they do occur [e.g., pentatonic scales throughout the world tend to show surprisingly similar interval structures (43, 44)], but they are not universal in the sense of characterizing the bulk of the world’s music. Three features (high register, loud volume, and dance) were specifically predicted only as being universally related to other features and not as universal features themselves (12), and thus their failure to meet this criterion is less surprising.

Fig. S3.

Alternate version of Fig. 2 that also visualizes the frequency of NA codings using gray slices. Because of complications involved in modeling NA values (SI Methods), NA values were treated as 0s for the phylogenetically corrected column. For comparison, the global distribution of presence, absence, and NA values without correcting for phylogeny is also shown. Note that features 15–18 have one or more regions where presence does not outnumber absence and NA codings combined, but presence still outnumbers absence in all regions. Presence also still outnumbers both absence and NA codings combined on a global level.

Three features were predominant on a global scale but were not consistently in the majority within each region. Sex segregation and phrase repetition still displayed consistent trends across all but one region, whereas percussion use was more variable, with the majority of recordings in North America, Europe, and Eastern Asia all lacking percussion instruments. The remaining 18 universal features that passed both criteria are shown using a black box in Fig. 2 and summarized below:

Pitch: Music tends to use discrete pitches (1) to form nonequidistant scales (2) containing seven or fewer scale degrees per octave (3). Music also tends to use descending or arched melodic contours (4) composed of small intervals (5) of less than 750 cents (i.e., a perfect fifth or smaller).

Rhythm: Music tends to use an isochronous beat (6) organized according to metrical hierarchies (7) based on multiples of two or three beats (8)—especially multiples of two beats (9). This beat tends to be used to construct motivic patterns (10) based on fewer than five durational values (11).

Form: Music tends to consist of short phrases (12) less than 9 s long.

Instrumentation: Music tends to use both the voice (13) and (nonvocal) instruments (14), often together in the form of accompanied vocal song.

Performance style: Music tends to use the chest voice (i.e., modal register) (15) to sing words (16), rather than vocables (nonlexical syllables).

Social context: Music tends to be performed predominantly in groups (17) and by males (18). The bias toward male performance is true of singing, but even more so of instrumental performance.
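To make the 750-cent threshold in the pitch summary above concrete, the sketch below converts frequency ratios to cents using the standard definition, cents = 1200 × log₂(f₂/f₁). The function name and the choice of just-intonation ratios (3:2 for the perfect fifth, 8:5 for the minor sixth) are illustrative assumptions; the source does not specify how intervals were measured.

```python
import math


def interval_in_cents(f_low: float, f_high: float) -> float:
    """Size of the interval between two frequencies, in cents."""
    return 1200.0 * math.log2(f_high / f_low)


SMALL_INTERVAL_LIMIT = 750.0  # threshold quoted in the text

# Illustrative just-intonation ratios (assumed, not taken from the study).
examples = {
    "perfect fifth (3:2)": (2.0, 3.0),
    "minor sixth (8:5)": (5.0, 8.0),
    "octave (2:1)": (1.0, 2.0),
}
for name, (low, high) in examples.items():
    cents = interval_in_cents(low, high)
    print(f"{name}: {cents:.1f} cents, small = {cents < SMALL_INTERVAL_LIMIT}")
```

On these assumptions, a perfect fifth (about 702 cents) falls below the limit and a minor sixth (about 814 cents) falls above it, consistent with the gloss "a perfect fifth or smaller."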