Abstract

Methods of fitting the diffusion model were examined with a focus on what the model can tell us about individual differences. Diffusion model parameters were obtained from the fits to data from two experiments, and consistency of parameter values, individual differences, and practice effects were examined using different numbers of observations from each subject. Two issues were examined: first, how large differences between groups must be for the groups to be distinguished, and second, how large a difference is needed to identify individual subjects who have a deficit relative to a control group. The parameter values from the experiments provided ranges that were used in a simulation study to examine recovery of individual differences. This study used several diffusion model fitting programs, fitting methods, and published packages. In a second simulation study, 64 sets of simulated data from each of 48 sets of parameter values (spanning the range of typical values obtained from fits to data) were fit with the different methods, and biases and standard deviations in recovered model parameters were compared across methods. Finally, in a third simulation study, a comparison between a standard chi-square method and a hierarchical Bayesian method was performed. The results from these studies can be used as a starting point for selecting fitting methods and as a basis for understanding the strengths and weaknesses of using diffusion model analyses to examine individual differences in clinical, neuropsychological, and educational testing.

Research using simple two-choice tasks to examine cognition and decision making has had a long history in psychology. The most successful current models of decision making are sequential sampling models, which assume that decisions are based on the accumulation of evidence from a stimulus, with moment-to-moment fluctuations in the evidence that are largely responsible for errors and for the spread in the distributions of response times (RTs). During the accumulation process, the amount of evidence needed to choose between alternatives is determined by response criteria, one criterion for each of the choices. The time taken to make a decision and which alternative is chosen are jointly determined by the rate at which evidence accumulates (drift rate) and the settings of the response criteria or boundaries. This article will focus on one of the more popular of these models, the diffusion model (Ratcliff, 1978; Ratcliff & McKoon, 2008).

The aim of these models is to provide an understanding of the basic cognitive processes that underlie simple decision making. One of the key advantages of the models is that they separate the settings of the criteria (which determine speed-accuracy tradeoffs) from the quality of the evidence obtained from a stimulus and also from the duration of other processes. This provides the basis for another key advantage: the ability to examine differences in these aspects of processing in different subject populations. For example, studies have examined reversible changes in normal individuals such as sleep-deprived individuals (Ratcliff & Van Dongen, 2009) and hypoglycemic individuals (Geddes et al., 2010), as well as decision making in children (Ratcliff, Love, et al., 2012). The model allows examination of decision making in various clinical populations such as aphasic individuals (Ratcliff, Perea, et al., 2004), adults and children with ADHD (Mulder et al., 2010; Karalunas & Huang-Pollock, 2013), individuals with dyslexia (Zeguers et al., 2011), and individuals with anxiety or depression (White et al., 2009, 2010a, 2010b). The model has also been used to examine decision processes in neurophysiology with single-cell recording methods (Ratcliff, Cherian, & Segraves, 2003; Gold & Shadlen, 2007), EEG signals (Ratcliff, Philiastides, & Sajda, 2009), and fMRI methods (Mulder, van Maanen, & Forstmann, in press).

Some applications of the diffusion model have produced new interpretations of differences between subject groups. In the domain of aging, in many tasks there is little difference between older adults and young adults in accuracy but a large difference in RT, with older adults slower than young adults. This suggests a deficit when using RT measures but no deficit when using accuracy measures. Application of the diffusion model showed that there were small or negligible differences in drift rates between older and young adults, which explains the lack of differences in accuracy. Increases in boundary settings and nondecision time (the duration of processes other than the decision process) with age explain the differences in RTs. There is also the opposite dissociation, with IQ related to drift rate but not to boundary setting or nondecision time. Such patterns of results and new interpretations provide strong support for this approach to understanding simple decision making (McKoon & Ratcliff, 2012, 2013; Ratcliff, Thapar, & McKoon, 2001; 2003; 2004; 2010; 2011; Schmiedek et al., 2007; Spaniol, Madden, & Voss, 2006).

In a number of these latter studies examining aging, individual differences across subjects in model parameters show strong external validity by correlating with, for example, IQ. This illustrates a third potential advantage of the models and that is to provide individual difference measures and perhaps even the possibility of determining whether an individual is different from a group (i.e., has a deficit relative to a comparison group).

One large area of potential application of the model is clinical research, educational research, and neuropsychological testing. The highest bar is that the model might contribute to diagnoses of cognitive impairments in individuals. The aim would be to compare a possibly impaired individual to a matched group of normal individuals. In the model, this means that normal ranges of drift rates, criteria, and nondecision times must be estimated and the standard deviations (SD's) across individuals calculated in order to determine whether and how far an individual is outside the normal range.

This model-based approach contrasts with standard neuropsychological and educational testing approaches. In these, often performance is measured on several different tasks, often with relatively few trials on each task, and then the results are averaged into a single indicator of some ability, such as working memory or speed of processing. From the point of view of cognitive modeling, multi-task tests represent a compendium of disparate processes that may share some common features. If processing components differ across the tasks, they might be averaged out. This neuropsychological testing approach is advantageous for many uses, especially when deficits are large, but in other situations, it may be more important to understand deficits in the components of processing with a model-based approach.

Neuropsychological and educational testing approaches often measure speed of processing and label it a basic “ability”. Given that it deals with the speed with which cognitive processes proceed one would expect that modern modeling of decision making and RT would be used or evaluated in this approach. However, it is hard to find neuropsychological or educational tests that come from cognitive modeling research more recent than the 1980's. For example, a recent edited book, “Information processing speed in clinical populations” (DeLuca & Kalmar, 2008) makes no reference to modeling RTs and a review article of intelligence and speed of processing (Sheppard, 2008) likewise makes no reference to modeling approaches. Given this disconnect between theory and applications, models that deal with speed and accuracy in rapid decision making are a natural domain to explore.

In this article we examine a number of practical issues that arise in fitting the diffusion model to data.

Using data from two experiments, we examine effects of numbers of observations and practice effects on both the mean values of model parameters and the variability in them across subjects. This is done by dividing the data into subgroups and fitting the model to each. This analysis provides the basis to determine how large differences in model parameters have to be in order to determine whether a single individual falls outside the normal range.

Simulated data were generated from the diffusion model with ranges of parameters similar to those from the experiments to examine recovery of individual differences in model parameters. The different fitting methods were applied to them and the correlations between the recovered parameters and the ones used to generate the simulated data were compared.

64 sets of simulated data were generated from each of 48 sets of parameters representing the two designs with several different sets of numbers of observations. The different fitting methods were applied and means and SD's in recovered parameter values were obtained. These showed whether there were systematic biases in recovered parameter values and how variable estimates were from the different methods.

A hierarchical Bayesian fitting application was evaluated and compared with a standard method.

It is important to examine practice effects and we did this using the experimental data (this also provides a modest test-retest evaluation of model parameters). If performance changes radically over the first tens or few hundreds of trials, and the changes are not consistent across individuals, then the data from two choice tasks with model analyses may be of limited use when only a small amount of testing time is available as occurs with neuropsychological testing batteries. The experiments presented below used a homogeneous group of undergraduates (with some possibly not particularly motivated) and so provide results for undergraduates as well as a demonstration of how to conduct such studies with other populations.

It is important to distinguish between different sources of variability relevant to applications of the diffusion model. Variability across subjects in the parameters of the model from the fits to each subject's data (SD's) can be used to determine if a parameter value for an individual is significantly different from the values for the group. Standard errors across subjects can be used to determine whether a parameter for one group differs significantly from the parameter for another group. These standard errors represent the variability in the group means and they can be made smaller by increasing the number of subjects in the group.
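The distinction between the across-subject SD (used to judge one individual against a group) and the standard error of the mean (used to compare group means) can be illustrated with a short sketch. The drift-rate values, the function names, and the use of a simple z score are assumptions made for illustration; they are not values or procedures taken from the experiments.

```python
import math
import statistics

def group_summary(values):
    """Return the mean, across-subject SD, and standard error of the mean."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)        # sample SD (n - 1 denominator)
    se = sd / math.sqrt(len(values))     # shrinks as the number of subjects grows
    return mean, sd, se

def individual_z(value, group_values):
    """Distance of one individual's parameter from the group mean, in SD units."""
    mean, sd, _ = group_summary(group_values)
    return (value - mean) / sd

# Hypothetical drift rates for a control group of eight subjects
control = [0.30, 0.25, 0.35, 0.28, 0.32, 0.27, 0.33, 0.30]
mean, sd, se = group_summary(control)

# Hypothetical individual being compared with the control group
z = individual_z(0.15, control)
print(f"mean={mean:.3f} SD={sd:.3f} SE={se:.3f} z={z:.2f}")
```

Adding subjects reduces the standard error but leaves the across-subject SD essentially unchanged, which is why detecting a single deviant individual is harder than detecting a group difference.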

There is also sampling variability in the model parameters: given the number of observations, how close are the recovered parameters to the parameter values that generated the data? For example, if the diffusion model was used to generate simulated data, the parameters recovered from the simulated data would be more variable with 100 simulated observations than with 1000. In typical applications of the diffusion model, this source of variability is 3 to 5 times smaller than the variability due to differences among individuals for 45 min. of data collection. Because individual differences produce larger variability in model parameters than sampling variability, significant correlations can be obtained with variables such as IQ, even when there are relatively small numbers of observations. However, when trying to detect whether an individual falls outside the normal range of the model parameters, both high power and low variability in parameter estimates are needed.

For all such applications of the model just discussed we need to understand how large are SD's in individual differences across subjects as well as SD's in model parameters as a function of number of observations in the sample. Also, SD's differ as a function of the values of the parameters, for example, a small boundary separation has smaller SD than a large boundary separation.

Following the experimental study, we tested a number of methods for obtaining parameter estimates from two-choice data. The first three were our locally-developed programs: two chi-square methods using binned data and a maximum likelihood method (MLH) (Ratcliff & Tuerlinckx, 2002). The other five were from recently developed diffusion-model fitting packages, DMAT, with and without correction for contaminant RTs using the mixed default method (Vandekerckhove & Tuerlinckx, 2007, 2008), fast-dm (Voss & Voss, 2007, 2008), the non-hierarchical HDDM (Wiecki, Sofer, & Frank, 2013), and EZ (Wagenmakers, van der Maas, & Grasman, 2007). In addition, we tested a hierarchical model from the HDDM package with a more limited set of simulated data.

For Simulation Study 1, the aim was to determine how well each method performed in recovering individual differences, that is, providing the correct ordering of parameters across individuals. A method might produce estimates that are biased away from the true values, those from which the simulated data were generated, but if the ordering is correct, then it can be used as a measure of individual differences. For examining differences among individuals and the relationship of such differences to clinical or educational tests, a more accurate order would be more important than accurate recovery of true values.

We used the best-fitting parameter values from fits to the two experiments to generate simulated data using the designs from the two experiments. For each experiment, different studies used different numbers of observations and each study was performed with and without contaminants (assumed to be random delays added to the decision time, see later). For each parameter, its value for each simulated data set was drawn from a normal distribution with the mean and SD from the parameter values from the fits to Experiment 1 or Experiment 2. Each combination of parameters produced a data set for each simulated subject.
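A minimal sketch of this sampling step is given below. The means and SDs are placeholder numbers, not the values obtained from Experiments 1 and 2, and the rule of redrawing non-positive boundary separations and nondecision times is an assumption of the sketch rather than something stated in the text.

```python
import random

# Hypothetical (mean, SD) pairs across subjects; placeholders, not fitted values
PARAM_DIST = {
    "a": (0.12, 0.02),     # boundary separation
    "Ter": (0.45, 0.05),   # nondecision time (s)
    "v": (0.25, 0.08),     # drift rate
}
MUST_BE_POSITIVE = {"a", "Ter"}   # assumption: redraw if these come out <= 0

def draw_subject(rng, dist=PARAM_DIST):
    """Draw one simulated subject's parameters from independent normals."""
    subject = {}
    for name, (mean, sd) in dist.items():
        value = rng.gauss(mean, sd)
        while name in MUST_BE_POSITIVE and value <= 0:
            value = rng.gauss(mean, sd)
        subject[name] = value
    return subject

rng = random.Random(1)                                   # fixed seed for reproducibility
subjects = [draw_subject(rng) for _ in range(64)]        # one parameter set per subject
```

Each dictionary in `subjects` would then be passed to a data generator to produce that simulated subject's RTs and choices.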

For Simulation Study 2, the aim was to determine how well each method recovered the true values of the parameters. The fitting methods were evaluated by how much their estimates were biased away from the true values and by the variability in these estimates. The combinations of parameter values were chosen to be representative of what is typically observed in real experiments, 16 such combinations for the numerosity design and 32 for the lexical decision design. For both simulation studies, data were simulated for 64 subjects, each with and without outlier RTs, for numbers of observations ranging from 40 to 1000 per condition.

For Simulation Study 3, we tested the nine-quantile chi-square against a hierarchical Bayesian fitting method. Hierarchical methods have been demonstrated to be superior to standard methods when there are low numbers of observations per subject and this study examined the degree to which this is true with the existing package.

The results of the simulation studies are intended to provide methodological guidelines: which methods of fitting the diffusion model provide the correct ordering of the model's parameters and therefore can be used to determine individual differences for correlational analyses; which methods provide values that are not biased away from the true values and therefore can be used to test differences between groups of individuals; and how many subjects and how many observations per subject are needed to produce sufficiently useful estimates of parameters. If the design of an experiment is substantially different from the numerosity and lexical decision designs, in terms of number of conditions, numbers of observations, accuracy or RT levels, and so on, then the studies here can show how to perform the evaluation needed. Recommendations are presented at the end of the General Discussion section.

The Diffusion Model

In the diffusion model, two-choice decisions are made when information accumulated from a starting point, z, reaches one of the two response criteria, or boundaries, a and 0 (see Figure 1). The drift rate of the accumulation process, v, is determined by the quality of the information extracted from the stimulus in perceptual tasks and by the degree to which a test item matches memory in memory and lexical decision tasks. It is usually assumed that the value of v cannot change during the accumulation of information (see Ratcliff, 2002, p. 286, for an example of mimicking between drift rate ramping on and a constant drift rate). The mean time taken by nondecision processes is labeled Ter. In separating drift rates, criteria, and nondecision times, the model decomposes accuracy and RTs for correct and error responses into individual components of processing. It explains how the components determine all aspects of data, including mean RTs for correct and error responses, the shapes and locations of RT distributions, the relative speeds of correct and error responses, and the probabilities with which the two choices are made.

Figure 1.

An illustration of the diffusion model and the logic of fitting the model to data. The top panel shows the diffusion model with sample paths and correct and error RT distributions. The middle panel shows the components that contribute to the nondecision time. The bottom panel shows a schematic of how RT and accuracy data are mapped into diffusion model parameters. Only the main parameters that have been used in examining individual differences are shown, but all the other model parameters are also estimated.

The model includes three sources of across-trial variability: variability in drift rate, variability in the starting point of the accumulation process (which is equivalent to variability in the criteria settings for low to moderate values), and variability in the time taken by nondecision processes. Variability in drift rate expresses the idea that the evidence subjects obtain from nominally equivalent stimuli differs in quality from trial to trial. Variability in starting point expresses the idea that subjects cannot hold their criteria constant from one trial to the next, and variability in nondecision components from one trial to the next expresses the idea that processes such as encoding do not take exactly the same time across trials. Across-trial variability in drift rate is normally distributed with SD η, across-trial variability in starting point is uniformly distributed with range sz, and across-trial variability in the nondecision component is uniformly distributed with range st. (Ratcliff, 1978, 2013, showed that the precise forms of these distributions are not critical.)
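How the three sources of across-trial variability enter a single trial can be sketched with a simple random-walk approximation. This is an illustrative simulation only, not the generator used in the studies: the Euler step size, the within-trial SD s = 0.1 (a common scaling convention), the parameter values, and the labels "upper" and "lower" are all assumptions.

```python
import math
import random

def simulate_trial(rng, a, z, v, ter, eta=0.0, sz=0.0, st=0.0, s=0.1, dt=0.001):
    """Simulate one trial; return (response, RT in seconds)."""
    # Across-trial variability: normal drift, uniform start point and nondecision time
    drift = rng.gauss(v, eta) if eta > 0 else v
    x = z + rng.uniform(-sz / 2, sz / 2)
    nondecision = ter + rng.uniform(-st / 2, st / 2)

    # Within-trial accumulation: Euler approximation to the diffusion process
    t = 0.0
    step_sd = s * math.sqrt(dt)
    while 0.0 < x < a:
        x += drift * dt + rng.gauss(0.0, step_sd)
        t += dt
    response = "upper" if x >= a else "lower"
    return response, t + nondecision

rng = random.Random(7)
trials = [simulate_trial(rng, a=0.12, z=0.06, v=0.3, ter=0.4,
                         eta=0.1, sz=0.02, st=0.1) for _ in range(500)]
p_upper = sum(resp == "upper" for resp, _ in trials) / len(trials)
```

A finite step size slightly distorts boundary crossing times, so a sketch like this is suitable for building intuition rather than for generating data for parameter-recovery studies.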

Subjects sometimes make responses that are spurious in that they do not reflect the processes of interest but instead are due to, for example, distraction, lack of attention, or concern for lunch. We describe the assumptions for such contaminant responses later. Subjects also sometimes make fast guesses with short RTs (e.g., between 0 and 200 ms). Experimentally these can be minimized by adding a delay (1-2 sec.) between the response and the next test item with a message saying “too fast” if the response is shorter than say 200 ms. The HDDM package for fitting the model, discussed below, assumes a contaminant distribution that has a minimum value of zero and this can accommodate a small proportion of fast guesses. Other methods for eliminating them are excluding all responses below some cutoff value (e.g. 200 ms) or excluding all responses that occur before accuracy begins to rise above chance (this latter method is formally implemented in the DMAT package discussed below). Another way of looking for fast guesses, or any kinds of guesses, is to include in the design of the experiment, a condition with high accuracy. The proportion of errors in this condition would be an upper limit on the proportion of guesses.

When the model is fit to data, all of its parameters are estimated simultaneously for all the conditions in an experiment. The model is tightly constrained in several ways. The most powerful is the requirement that the model fit the right-skewed shape of RT distributions (Ratcliff, 1978, 2002; Ratcliff & McKoon, 2008; Ratcliff et al., 1999). Another is the requirement that differences in the data between conditions that vary in difficulty must be captured by changes in only one parameter of the model, drift rate. Boundary settings and nondecision time do not change as a function of difficulty; doing so would require that subjects know the level of difficulty before the start of information accumulation.

The assumption that drift rates change little as a function of boundary settings has been verified in experiments to date. An exception is a recent study by Starns, Ratcliff, and McKoon (2012; see also Rae et al., 2014) who found that if subjects are given extreme speed instructions (to respond in a recognition memory task in under 600 ms), then drift rates are lower than with accuracy instructions. This is likely because subjects limit stimulus or memory processing in order to respond quickly; something that they do not otherwise do.

There have been hints in the literature that nondecision time can change as a function of either changes in difficulty or changes between boundary settings. In a few experiments, fits of the model have been modestly better with small differences in nondecision time between speed and accuracy instructions (e.g., Ratcliff & Smith, 2004, p348; Ratcliff, 2006); however, in most other experiments, the difference has been relatively small (Ratcliff & McKoon, 2008, p895).

One of the most important functions of the diffusion model is that it maps RT and accuracy onto common underlying components of processing, drift rates, boundary settings, and nondecision time, which allows direct comparisons between tasks and conditions that might show the effects of independent variables in different ways. For example, a study by McKoon and Ratcliff (2012) examined associations between the two words of pairs that were studied for recognition memory. Memory was tested in two ways: either subjects were asked whether two words had appeared in the same pair at study or they were asked whether a single word had been studied. In the latter case, a single word was preceded in the test list by the other word of the same pair (“primed”) or by some other studied word (“unprimed”). For priming, the effects of independent variables showed up mainly in RTs whereas for pair recognition, they showed up mainly in accuracy. McKoon and Ratcliff found that age, IQ, and the semantic relatedness of the words of a pair all affected drift rates in the same ways for the two tasks, from which they concluded that priming and pair recognition depend on the same associative information in memory. This conclusion would not have been possible without the model.

Another important function of the model is that it allows direct comparisons between groups of subjects. For example, older adults are generally slower than younger adults and show larger differences among conditions. For pair recognition, for example, older adults' RTs for same-pair tests might be 1200 ms and for different-pair tests, 1400. For young adults, RTs might be 1000 ms and 1100. Ratcliff et al. (2011) found that the differences in performance between older and younger subjects were due to differences in all three components of the model: drift rates, boundary settings, and nondecision times. This contrasts with item recognition in which older adults (60-74 year olds) show little difference in drift rates compared with young adults.

Methods for Fitting the Diffusion Model to Data

The methods used most commonly have been the maximum likelihood method (MLH) (Ratcliff & Tuerlinckx, 2002) and three binned methods: the chi-square (Ratcliff & Tuerlinckx, 2002) method, the multinomial likelihood ratio chi-square (G2 method), and the quantile maximum likelihood method (Heathcote, Brown, & Mewhort, 2002). The latter two methods are nearly identical, so we do not discuss the quantile maximum likelihood method further.

In all of these methods, from some RT (either a single RT for the MLH method or a quantile for a binned method) and the model parameters, the predicted probability density is computed. The expression for the cumulative density is (with ξ drift rate and ζ starting point):

The expression for the response proportion for the choice is

These equations must be integrated over the distributions of drift rate, starting point, and nondecision time (τ):

where

and

To obtain the probability density from the cumulative density, F(ti), at a time, ti, the value of F(ti) and the value for that time plus an increment, F(ti+dt), are computed, where dt is small (e.g., .00001 ms). Then, using f(t)=(F(t+dt)-F(t))/dt, the predicted probability density at ti is obtained.
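For reference, the standard series expressions on which these steps rely can be sketched as follows. The notation follows the text (ξ drift rate, ζ starting point, τ nondecision time, η, sz, and st the across-trial variability parameters). The within-trial scaling parameter s (conventionally 0.1), the choice of the boundary at 0 as the one being described, and the exact layout are assumptions of this sketch rather than a transcription of the article's numbered equations.

```latex
\begin{align*}
% Cumulative first-passage-time distribution at the boundary at 0
G(t,\xi,a,\zeta) &= P(\xi,a,\zeta)
  - \frac{\pi s^{2}}{a^{2}}\, e^{-\xi\zeta/s^{2}}
    \sum_{k=1}^{\infty}
    \frac{2k \sin\!\left(\frac{k\pi\zeta}{a}\right)
          \exp\!\left[-\tfrac{1}{2}\left(\frac{\xi^{2}}{s^{2}}
          + \frac{\pi^{2}k^{2}s^{2}}{a^{2}}\right)t\right]}
         {\frac{\xi^{2}}{s^{2}} + \frac{\pi^{2}k^{2}s^{2}}{a^{2}}} \\[6pt]
% Probability of terminating at the boundary at 0 (limit 1 - \zeta/a as \xi \to 0)
P(\xi,a,\zeta) &= \frac{e^{-2\xi a/s^{2}} - e^{-2\xi\zeta/s^{2}}}
                       {e^{-2\xi a/s^{2}} - 1} \\[6pt]
% Averaging over drift rate, starting point, and nondecision time
F(t) &= \int_{T_{er}-s_t/2}^{T_{er}+s_t/2}
        \int_{z-s_z/2}^{z+s_z/2}
        \int_{-\infty}^{\infty}
        G(t-\tau,\xi,a,\zeta)\, N(\xi)\, U(\zeta)\, \frac{1}{s_t}\;
        d\xi\, d\zeta\, d\tau \\[6pt]
N(\xi) &= \frac{1}{\eta\sqrt{2\pi}}
          \exp\!\left[-\frac{(\xi-v)^{2}}{2\eta^{2}}\right],
\qquad
U(\zeta) = \frac{1}{s_z}
\end{align*}
```

The corresponding expressions for the other boundary follow by replacing ζ with a − ζ and ξ with −ξ.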

For the MLH method, the predicted probability density (f(ti)) for each RT (ti) for each correct and error response is computed and the product over all densities for all the RTs ti is the likelihood (L=Πf(ti)). To obtain the maximum likelihood parameter estimates, the value of the likelihood is maximized by adjusting parameter values using a function minimization routine. However, because products of densities can become very large or very small, numerical problems occur and so it is standard instead to maximize the log likelihood, i.e., the sum of the logs of the densities (summing logs of the values is the same as the log of the product of the values, log(ab)=log(a)+log(b)). Summing the logs of the predicted probability densities for all the RTs gives the log likelihood and minimizing minus the log likelihood produces the same parameter values as maximizing the likelihood.
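The numerical problem with raw products, and the log-likelihood remedy, can be seen in a short sketch. The density values below are made up for illustration; in a real fit they would come from the model's predicted density at each observed RT.

```python
import math

def neg_log_likelihood(densities):
    """Minus the sum of log densities: the quantity passed to a minimizer."""
    return -sum(math.log(f) for f in densities)

# Hypothetical predicted densities for 200 RTs
densities = [1e-3] * 200

product = math.prod(densities)       # the raw likelihood underflows to 0.0
nll = neg_log_likelihood(densities)  # the log form stays finite and well scaled

print(product, nll)
```

Because the logarithm is monotonic, the parameter values that minimize `nll` are the same ones that would maximize the likelihood if it could be computed exactly.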

Minus log likelihood can be minimized using a variety of software routines and we use the robust SIMPLEX routine (Nelder & Mead, 1965). This routine takes starting values for each parameter, calculates the value of the function to be minimized, then changes the values of the parameters (usually one at a time) to reduce minus log likelihood. This process is repeated until either the parameters do not change from one iteration to the next by more than some small amount or the value to be minimized does not change by more than some small amount.

For the chi-square method, the chi-square value is minimized using the SIMPLEX minimization routine, typically with RTs divided into either 5 or 9 quantiles. For 5 quantiles, the data entered into the minimization routine for each experimental condition are the .1, .3, .5, .7, and .9 quantile RTs for correct and error responses and the corresponding accuracy values. For 9 quantiles, the .1, .2, .3, …, and .9 quantiles are used. The quantile RTs and parameter values of the model are used to generate the predicted cumulative probabilities of a response by that quantile RT. Subtracting the cumulative probabilities for each successive quantile from the next higher quantile gives the proportion of responses between adjacent quantiles. For the chi-square computation, these are the expected values, to be compared to the observed proportions of responses between the quantiles (i.e., for the 5-quantile method, the proportions between 0, .1, .3, .5, .7, .9, and 1.0, which are .1, .2, .2, .2, .2, and .1, and for the 9-quantile method, the proportions between quantiles and outside them, which are all .1). These proportions are multiplied by the number of observations to give the expected frequencies and summing over (Observed − Expected)²/Expected for all conditions gives a single chi-square value to be minimized. The SIMPLEX routine then adjusts parameter values to minimize the chi-square value.
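The 5-quantile computation for one response type in one condition can be sketched as follows. The function names and numbers are hypothetical, and scaling the observed bin proportions by the observed proportion of that response is an assumption of the sketch. The predicted cumulative probabilities would normally come from evaluating the model at the observed quantile RTs.

```python
# Observed proportions between the .1, .3, .5, .7, .9 quantiles (and outside them)
QUANTILE_BINS_5 = [0.1, 0.2, 0.2, 0.2, 0.2, 0.1]

def bin_proportions(pred_cum, p_resp_pred):
    """Differences of predicted cumulative probabilities at the quantile RTs."""
    edges = [0.0] + list(pred_cum) + [p_resp_pred]
    return [hi - lo for lo, hi in zip(edges, edges[1:])]

def chi_square_condition(n_obs, p_resp_obs, pred_cum, p_resp_pred):
    """Chi-square contribution of one response type in one condition."""
    observed = [n_obs * p_resp_obs * q for q in QUANTILE_BINS_5]
    expected = [n_obs * p for p in bin_proportions(pred_cum, p_resp_pred)]
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

# Hypothetical case 1: predictions match the data exactly
perfect = chi_square_condition(200, 0.9, [0.09, 0.27, 0.45, 0.63, 0.81], 0.9)

# Hypothetical case 2: predictions miss the data
misfit = chi_square_condition(200, 0.9, [0.05, 0.25, 0.45, 0.65, 0.80], 0.88)
```

In a full fit, contributions like these would be summed over correct and error responses and over all conditions, and the sum handed to the minimization routine.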

For the G2 method, G2 = 2 Σ N p_i ln(p_i/π_i), where p_i is the observed proportion of responses in bin i, π_i is the predicted proportion, and N is the number of observations. This statistic is equal to twice the difference between the maximum possible log likelihood and the log likelihood predicted by the model (because ln(p/π)=ln(p)-ln(π)). Every time we have used this method (with several hundred observations per subject) and compared results to the chi-square method, we have found almost identical parameter estimates. This is because the chi-square approximates the multinomial likelihood statistic (see Jeffreys, 1961, p. 197); both are distributed as a chi-square random variable. We fit all the data for each subject in Experiments 1 and 2 and found that the correlations between the two methods for boundary separation, nondecision time, and drift rates were greater than .986 for Experiment 1 and greater than .958 for Experiment 2. For the across-trial variability parameters, the correlations were greater than .930 for Experiment 1 and greater than .855 for Experiment 2. These correlations show that for the relatively large numbers of observations in Experiments 1 and 2, the estimates are equivalent. When we reduced the numbers of observations, for example to 40, there were some failures such that the two methods did not produce the same values, but we have not pursued this further.
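The closeness of the two statistics when predicted and observed proportions are similar can be checked with a small sketch. The proportions and the number of observations are invented for illustration, and the function names are hypothetical.

```python
import math

def g_square(n_obs, observed_props, predicted_props):
    """G2 = 2 * sum N * p_i * ln(p_i / pi_i); empty observed bins contribute 0."""
    return 2.0 * sum(n_obs * p * math.log(p / pi)
                     for p, pi in zip(observed_props, predicted_props) if p > 0)

def pearson_chi_square(n_obs, observed_props, predicted_props):
    """Sum of (Observed - Expected)^2 / Expected in frequency units."""
    return sum(n_obs * (p - pi) ** 2 / pi
               for p, pi in zip(observed_props, predicted_props))

# Hypothetical observed and predicted bin proportions for one response type
observed = [0.10, 0.20, 0.20, 0.20, 0.20, 0.10]
predicted = [0.11, 0.19, 0.21, 0.19, 0.20, 0.10]

g2 = g_square(500, observed, predicted)
chi2 = pearson_chi_square(500, observed, predicted)
print(g2, chi2)
```

With small misfits the two values are nearly the same; they diverge as expected frequencies in some bins become small, which is consistent with the occasional disagreements noted above for 40 observations.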

We only applied the chi-square method to the data from Experiments 1 and 2 but we applied all the methods to the simulation studies. We assumed that every observed RT distribution has contaminants and their probability is po, the same probability for all experimental conditions for a subject. The contaminants are assumed to come from a uniform distribution with a range determined by the maximum and the minimum RTs in each experimental condition so that the model fit is a mixture of contaminants and responses from the diffusion process. In generating simulated data from this mixture, contaminants are assumed to involve a delay in processing (but not random guessing). Thus the contaminant assumption in generating simulated data is not the same as in fitting the data. However, the assumption of a uniform distribution of contaminants in all conditions gave successful recovery of the parameters of the diffusion process and the proportion of contaminants (Ratcliff & Tuerlinckx, 2002). In the General Discussion, we examine contaminants further.
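The mixture assumption used in fitting can be written compactly. In this sketch `diffusion_density` stands in for the model's predicted density and is a hypothetical placeholder, as are the numerical values; only the mixture structure (probability po of a uniform contaminant over the observed RT range) is taken from the text.

```python
def mixture_density(t, diffusion_density, p_o, t_min, t_max):
    """Density of an RT under the diffusion-plus-uniform-contaminant mixture."""
    # Contaminants: uniform between the minimum and maximum RT in the condition
    uniform = 1.0 / (t_max - t_min) if t_min <= t <= t_max else 0.0
    return (1.0 - p_o) * diffusion_density(t) + p_o * uniform

# Hypothetical flat stand-in for the diffusion density, for illustration only
def flat_density(t):
    return 2.0

value = mixture_density(0.5, flat_density, p_o=0.1, t_min=0.2, t_max=1.2)
```

Because po is a single parameter shared by all conditions for a subject, it adds only one free parameter to the fit.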

Fitting the model with the chi-square and G2 methods is much faster in terms of computer time than the MLH method, especially for large numbers of observations. For example, for Experiments 1 and 2, for each experimental condition, the 5-quantile chi-square required five evaluations of the diffusion model cumulative density for correct responses and five evaluations for error responses, no matter how many observations there were per condition. For the MLH method, the density function must be evaluated for each RT, which means hundreds or thousands of evaluations for each condition (with two evaluations of the cumulative distribution function to produce the density function for each RT). For the studies below, we used the chi-square method because it is what we have been using and because the results would be similar if we used, for example, G2 (see above). As a check, we have fit exact predictions for the accuracy values and quantile RTs with the chi-square method and the model parameters used to generate the predictions are recovered to within 1%. (Note that our home-grown fitting programs were handed off to the second author, who implemented batch scripts for fitting with one version to avoid the possibility of tuning the programs to a specific data set.)

In addition to the chi-square and MLH methods, we tested four diffusion-model fitting packages that are available in the public domain: DMAT (Vandekerckhove & Tuerlinckx, 2007, 2008), fast-dm (Voss & Voss, 2007, 2008), HDDM (Wiecki, Sofer, & Frank, 2013), and EZ (Wagenmakers et al., 2007). The DMAT, fast-dm, and HDDM packages can all fit all the conditions of an experiment and both correct and error responses simultaneously, but the EZ method fits only one condition at a time and only correct RTs or only error RTs. For each method, we used the most straightforward default method and options. The aim was to reproduce what a user might employ in fitting. The simulated data used in the studies here will be available as a supplement.

The data input to the DMAT package are the RTs and choice probabilities for each quantile of the data, where the number of quantiles is defined by the user (values of the bin limits can also be specified by the user). The values of the model parameters that best generate the quantile data are determined by minimizing a chi-square or G2 statistic. The package also allows the user to choose to implement a mixture model for slow contaminants and an exponentially weighted moving average method to eliminate fast outliers (Vandekerckhove & Tuerlinckx, 2007). In the applications below, DMAT was applied both with (using the “Mixed Model” option) and without contaminant correction.

Note that DMAT was designed to be used with data with large numbers of observations per condition (hundreds). It was not tuned for smaller numbers and will likely not produce meaningful estimates when the number of observations per condition is in the tens. It also provides warning messages when there may be problems with the fit. Often in published applications, these are ignored. We operated like a typical user and report what the package produced, ignoring the warning messages, but we report the number of warnings for one of the studies.

Fast-dm uses a Kolmogorov-Smirnov (KS) statistic in which the whole cumulative RT distributions for correct and error responses are generated and then compared with the cumulative distributions for the data. The model parameters are adjusted until the deviation between the two is minimized. Fast-dm, instead of using the expressions in Equations 1-5, solves the partial differential equation (the Fokker-Planck backward equation, Ratcliff, 1978; Ratcliff & Smith, 2004) numerically, which is very fast. It might be possible to use numerical solutions like this for other packages or for the chi-square or MLH methods, but we have not investigated this (but see discussions by Diederich and Busemeyer, 2003, and Smith, 2000). The fast-dm method is robust to contaminants, as demonstrated later.
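As a rough illustration of the kind of statistic involved, the sketch below computes a generic one-sample KS distance: the largest vertical gap between the empirical cumulative distribution of a set of RTs and a model cumulative distribution. It is not fast-dm's implementation. The function name `ks_distance` is hypothetical and the shifted-exponential `placeholder_cdf` merely stands in for a cumulative distribution obtained from the diffusion model.

```python
import math


def ks_distance(rts, model_cdf):
    """Largest vertical gap between the empirical CDF of rts and model_cdf."""
    xs = sorted(rts)
    n = len(xs)
    d = 0.0
    for i, t in enumerate(xs):
        f = model_cdf(t)
        # The empirical CDF jumps from i/n to (i+1)/n at t; check both sides.
        d = max(d, abs(f - i / n), abs((i + 1) / n - f))
    return d


def placeholder_cdf(t, t0=0.3, tau=0.2):
    """Shifted exponential CDF used only as a stand-in for a model CDF."""
    return 1.0 - math.exp(-(t - t0) / tau) if t > t0 else 0.0


# Artificial RTs placed at evenly spaced quantiles of the placeholder model.
n = 100
rts = [0.3 - 0.2 * math.log(1.0 - (i + 0.5) / n) for i in range(n)]

d_match = ks_distance(rts, placeholder_cdf)                           # model matches data
d_mismatch = ks_distance(rts, lambda t: placeholder_cdf(t, tau=0.4))  # wrong scale
```

A fitting routine built on such a statistic would adjust the model parameters to make the distance as small as possible.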

HDDM uses a Bayesian method that essentially combines a likelihood function with prior distributions over parameters. The prior distributions for boundary separation and nondecision time are Gamma distributed, those for drift rate and starting point are normally distributed, those for across-trial variability in drift rate and nondecision time are half normals, and the prior for across-trial variability in starting point is beta distributed (see Wiecki et al., 2013). We used these informative priors in our fits. We used the package to fit the data from each subject individually in the same way as for the other methods. The default settings in HDDM were used, including a 20 sample burn-in and 1800 samples for the estimation. The proportion of contaminants was estimated and not fixed in the model.

HDDM can also fit a hierarchical model in which model parameters are assumed to be drawn from distributions and the parameters of those distributions are estimated along with the parameters for each individual subject. This means that extreme values of parameters that might be produced because of noise in the data are constrained to be less extreme through the distributions over the group of subjects. For the first two simulation studies, we examined separate fits to individual subjects. We also compared parameters recovered from the hierarchical method with those recovered from the chi-square method. As for individual fits, we used a 20 sample burn-in and 1800 samples for the estimation.

The EZ method (Wagenmakers et al., 2007) is based on a restricted diffusion model; there is no across-trial variability in any of the model parameters, the starting point is set midway between the two boundaries, there is no allowance for contaminant responses, and as mentioned above, it can be applied only to correct RTs or only to error RTs, for only one condition of an experiment at a time. Without across-trial variability, it cannot account for relations between correct and error RTs. With the starting point midway between the boundaries, it produces biased parameter estimates if the true starting point is not midway. It also predicts that RTs for correct and error responses will be the same, something that is known to be incorrect for the vast majority of experiments. Without allowance for contaminants, it produces quite biased estimates of parameter values (unless there are no contaminants in the data; see Ratcliff, 2008).

Wagenmakers et al. (2007) derived expressions to relate the mean RT for a condition, the variance in RT, and the accuracy for that condition to boundary settings, nondecision time, and drift rate for that condition. Essentially, this transforms three statistics of data into three model parameters. This means that the model cannot be falsified on the basis of accuracy, mean RT, or variance in RT. The model does make predictions about RT distributions, the same predictions as the standard model, and so it is easily possible to evaluate how well EZ fits RT distributions. In our applications of the EZ method, we fit only correct responses. For error responses, the RT means and the variance in them are much more variable (because there are few observations), making parameter estimates less reliable than for correct responses.
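The transformation from three statistics to three parameters can be sketched as follows. The code reflects our understanding of the closed-form EZ expressions and is not the EZ software itself; the function name `ez_diffusion` is hypothetical, the scaling parameter s = 0.1 is an assumption chosen to be consistent with the scale of the parameter values reported in this article, and the input values are illustrative rather than taken from our data.

```python
import math


def ez_diffusion(p_correct, rt_variance, rt_mean, s=0.1):
    """Return (v, a, ter) from accuracy, RT variance (s^2), and mean RT (s)."""
    if p_correct in (0.0, 0.5, 1.0):
        # The expressions are undefined at these values without a correction.
        raise ValueError("accuracy of 0, .5, or 1 needs an edge correction first")
    logit = math.log(p_correct / (1.0 - p_correct))
    x = logit * (logit * p_correct ** 2 - logit * p_correct
                 + p_correct - 0.5) / rt_variance
    v = math.copysign(s * x ** 0.25, p_correct - 0.5)   # drift rate
    a = s ** 2 * logit / v                              # boundary separation
    y = -v * a / s ** 2
    mean_decision_time = (a / (2.0 * v)) * (1.0 - math.exp(y)) / (1.0 + math.exp(y))
    ter = rt_mean - mean_decision_time                  # nondecision time
    return v, a, ter


# Illustrative inputs: accuracy .802, RT variance .112 s^2, mean RT .723 s.
v, a, ter = ez_diffusion(0.802, 0.112, 0.723)
# v is close to 0.100, a to 0.140, and ter to 0.300.
```

Because the three outputs are computed directly from the three inputs, any combination of accuracy, mean RT, and RT variance (other than the excluded edge cases) maps onto some set of parameter values, which is why those statistics cannot falsify the model.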

van Ravenzwaaij and Oberauer (2009) compared the EZ method to the fast-dm and DMAT methods by generating simulated data and using these methods to fit the model to the simulated data. They found that EZ and DMAT were better at recovering parameter values and that EZ was the preferred method when the goal was to recover individual differences in parameter values. We extend their results by comparing these three methods with the other methods described above, by explicitly introducing contaminants into simulated data, and by including conditions in which the starting point is not equidistant from the boundaries (the EZ method requires that it be equidistant).

When faced with a very low number of observations, either because existing data sets are being used or because it is not possible to collect many observations per subject, it might be tempting to simply pool the observations across subjects (e.g., Menz et al., 2012) if group differences, not individual differences, are the focus. This is an incorrect way of grouping data. This is easy to see: if there are only two subjects with distributions that are well separated, then the combination will be bimodal. A better way of combining subjects is to compute quantiles of each subject's distribution and then average them across subjects (Ratcliff, 1979). An even better way would be to use hierarchical modeling using the HDDM package.
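The difference between pooling and quantile averaging can be illustrated with a small sketch using two artificial subjects whose RT distributions are well separated. The function names, the shifted-exponential RTs, and the linear-interpolation quantile rule are assumptions made for the illustration; they are not the procedures used in the fits reported here.

```python
import random

PROBS = (0.1, 0.3, 0.5, 0.7, 0.9)


def quantile_rts(rts, probs=PROBS):
    """Empirical quantiles by linear interpolation between order statistics."""
    xs = sorted(rts)
    out = []
    for p in probs:
        pos = p * (len(xs) - 1)
        lo = int(pos)
        hi = min(lo + 1, len(xs) - 1)
        out.append(xs[lo] + (pos - lo) * (xs[hi] - xs[lo]))
    return out


def average_quantiles(subjects, probs=PROBS):
    """Compute quantiles separately for each subject, then average them."""
    per_subject = [quantile_rts(rts, probs) for rts in subjects]
    return [sum(col) / len(col) for col in zip(*per_subject)]


# Two artificial subjects with the same distribution shape, 500 ms apart.
rng = random.Random(1)
fast = [0.4 + rng.expovariate(10.0) for _ in range(500)]
slow = [0.9 + rng.expovariate(10.0) for _ in range(500)]

pooled = quantile_rts(fast + slow)          # pooling raw RTs (bimodal mixture)
averaged = average_quantiles([fast, slow])  # averaging quantiles over subjects

pooled_spread = pooled[-1] - pooled[0]        # .9 minus .1 quantile
averaged_spread = averaged[-1] - averaged[0]
```

In this example the spread of the pooled distribution reflects the gap between the two subjects, whereas the averaged quantiles keep a spread close to that of each individual subject.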

Refinements of the Chi-square Fitting Method

Over the last several years, we have added refinements to the chi-square method. When there are fewer data points than the number of quantiles, then a median split is used to form two bins, each with probability .5. However, if the median is outside the range of the .3 to .7 quantiles for correct responses, then we use a single value of chi-square, $(O-E)^2/E$, where O is the observed number of observations and E is the expected value from the model, for that condition and add this to the sum of the rest of the values for all the conditions and quantiles. This is because when there are low numbers of errors in conditions with high accuracy, some of the error RTs can be spurious and (we assume) not from the decision process used in performing the task.

Sometimes, accommodating very slow error responses with low numbers of observations leads to estimates of across-trial variability in drift rate that are much larger than they should be, and along with this, drift rates can be several times larger than they should be. This problem can be limited by placing upper and lower bounds on across-trial variability in drift rate (e.g., 0.3 and 0.08). The bounds might be determined by examining the ranges of the variability parameter values from similar experiments with larger numbers of observations. In fits for the simulation studies below with low numbers of observations, the value of across-trial variability in drift rate was often estimated to be at the upper or lower bound for our programs that implemented these limits.

Experiments 1 and 2

The numerosity task for Experiment 1 and the lexical decision task for Experiment 2 were chosen because they are representative of commonly used experimental designs for practical applications. Numerosity discrimination has been used in examining numerosity abilities for a variety of populations and lexical decision has been used in studies of aphasia and Alzheimer's disease. The design of the numerosity experiment is the same as that frequently used with perceptual tasks including brightness, letter, motion, number, and length discrimination (Ratcliff, 2014; Ratcliff & Rouder, 1998; Ratcliff et al., 2001, 2003; Thapar, Ratcliff, & McKoon, 2003; Smith & Ratcliff, 2009; Smith, Ratcliff, & Wolfgang, 2004). The design of the lexical decision experiment is the same as that used with many memory tasks (e.g., Ratcliff, Gomez, & McKoon, 2004; Ratcliff, Thapar, Gomez, & McKoon, 2004; Ratcliff, Thapar & McKoon, 2004, 2010).

In Experiment 1, on each trial, a 10×10 array was filled with spaces, and between 31 and 70 asterisks were placed in the array in random positions. Subjects decided whether the number of asterisks was large (greater than 50) or small (less than 51). This range of the number of asterisks produces a range of levels of difficulty from very easy to very difficult.

In Experiment 2, on each trial, a string of letters was presented and subjects decided whether it was a word or not. There were three levels of difficulty for the words: words that occur in English with high frequency, low frequency, or very low frequency. The difficulty of the nonwords was not varied.

For both experiments, we examined practice effects by grouping the trials into earlier versus later blocks. We also examined the number of trials that are needed to estimate the diffusion model's parameters with reasonably small standard errors (SE's). Ideally, the estimated parameters for early trials would be consistent with those for later trials and the estimates from relatively small numbers of trials would be consistent with those from larger numbers. If so, the model could be used for the limited test durations that occur in applied domains.

In the numerosity discrimination experiment, subjects started the task immediately after the instructions with no prior practice. In the lexical decision task, subjects were given 30 practice trials before starting the real experiment. This means that we can look at practice effects from the beginning of testing in the numerosity discrimination task, and after a very modest amount of practice in the lexical decision task.

Method

Undergraduates at Ohio State University participated in the experiments for course credit, 63 in Experiment 1 and 61 in Experiment 2, each for one 45 min. session (data are archival and were collected in 2005). For both experiments, stimuli were displayed on a PC screen and responses were collected from the keyboard.

Numerosity discrimination

On each trial, the asterisks were placed in random positions in the 10×10 array. Subjects were asked to press the “/” key if the number of displayed asterisks was larger than 50 and the “z” key if the number was smaller than 51 and they were asked to respond as quickly and accurately as possible. If a response was correct, the word “correct” was presented for 500 ms, the screen was cleared, and the next array of asterisks was presented after 400 ms. If a response was incorrect, the word “error” was displayed for 500 ms, the screen was cleared, and the next array of asterisks was presented 400 ms later. If a response was shorter than 280 ms, the words “TOO FAST” were presented for 500 ms after a correct response or after the error message for an incorrect response. There were 30 blocks of 40 trials each with all the numbers of asterisks between 31 and 70 presented once in each block.

Lexical Decision

Words were selected from a pool of 800 words with Kucera-Francis frequencies higher than 78, a pool of 800 words with frequencies of 4 and 5, and a pool of 741 words with frequencies of 0 or 1 (Ratcliff, Gomez, & McKoon, 2004). There was a pool of 2341 nonwords, all pronounceable in English. There were 70 blocks of trials with each block containing 30 letter strings: 5 high frequency words, 5 low frequency words, 5 very low frequency words, and 15 nonwords. Subjects were asked to respond quickly and accurately, pressing the “/” key if a letter string was a word and the “z” key if it was not. Correct responses were followed by a 150 ms blank screen and then the next test item. Incorrect responses were followed by “ERROR” for 750 ms, a blank screen for 150 ms, and then the next test item.

Results for Experiment 1

Responses shorter than 250 ms and longer than 3500 ms were excluded from the analyses (less than 0.8% of the data). The data for numbers of asterisks less than 51 were collapsed with numbers greater than 50 and they were then grouped into two conditions, easy (numbers 31-40 and 61-70) and difficult (41-50 and 51-60). Averaging over all the trials of the experiment, accuracy for the easy condition was .89 (for individual subjects, the highest accuracy was .98 and the lowest .69), mean RT for correct responses was 627 ms, and mean RT for errors was 687 ms. For the difficult condition, accuracy was .68 (for individual subjects, the highest accuracy was .78 and the lowest .57), mean RT for correct responses was 664 ms, and mean RT for errors was 721 ms.

To examine practice effects and the number of trials needed for the diffusion model's parameters to have small SD's, eight groups of data were constructed: trials 1-80, trials 81-160, trials 161-240, trials 1-160, trials 161-320, trials 1-320, trials 321-640, and trials 1-1200. We fit the model to the data with the chi-square method with 9 quantiles. The model fit well, as we detail after presenting results for this experiment and for Experiment 2.

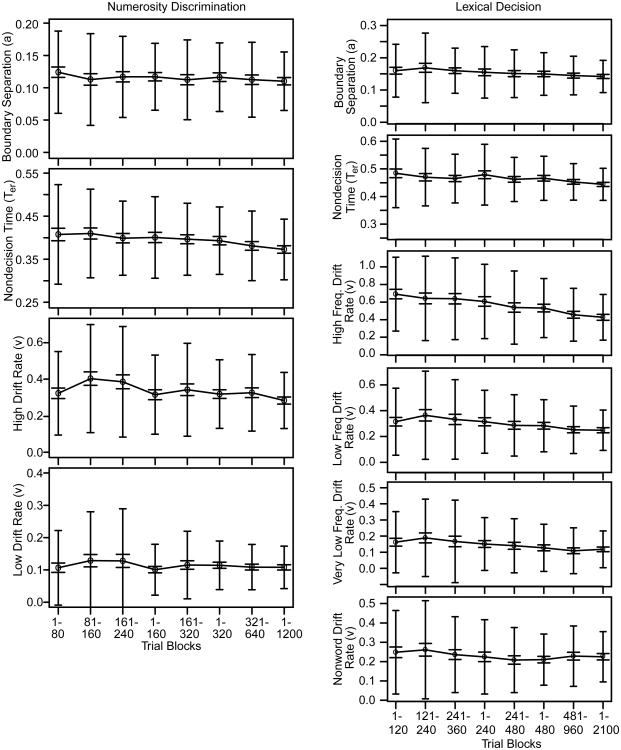

For each of the eight groups of data, Figure 2 plots the mean values over subjects of the parameters that best fit the accuracy and RT data. The means are shown for nondecision time, boundary separation, and two drift rates, one for the easy condition and one for the difficult condition. The wider error bars represent 2 SD's from the mean and the narrower ones, 2 SE's. The means and SD's are also shown in Table 1.

Figure 2.

Plots of the mean values of model parameters across subjects along with the SD's and SE's across subjects for several divisions of the data from Experiments 1 and 2 (the numerosity discrimination and lexical decision tasks respectively). The larger error bars are 2 SD confidence intervals in model parameters and the smaller error bars are 2 SE confidence intervals.

Table 1. Mean Parameter Values and SD's Across Subjects for the Numerosity Discrimination Experiment.

| Statistic | Trial group | a | Ter | η | sz | p0 | st | vE | vD | χ2 |
|---|---|---|---|---|---|---|---|---|---|---|
| Mean | 1-80 | 0.126 | 0.409 | 0.171 | 0.050 | 0.010 | 0.161 | 0.321 | 0.106 | 25.1 |
| | 81-160 | 0.114 | 0.411 | 0.157 | 0.051 | 0.006 | 0.158 | 0.401 | 0.128 | 22.2 |
| | 161-240 | 0.118 | 0.400 | 0.138 | 0.051 | 0.009 | 0.172 | 0.383 | 0.127 | 24.5 |
| | 1-160 | 0.119 | 0.402 | 0.162 | 0.050 | 0.007 | 0.161 | 0.314 | 0.100 | 29.6 |
| | 161-320 | 0.114 | 0.398 | 0.145 | 0.051 | 0.009 | 0.171 | 0.340 | 0.115 | 33.0 |
| | 1-320 | 0.118 | 0.395 | 0.155 | 0.053 | 0.008 | 0.182 | 0.317 | 0.114 | 34.1 |
| | 321-640 | 0.114 | 0.382 | 0.145 | 0.063 | 0.008 | 0.174 | 0.324 | 0.108 | 34.6 |
| | 1-1200 | 0.112 | 0.374 | 0.126 | 0.064 | 0.007 | 0.180 | 0.282 | 0.107 | 50.4 |
| SD | 1-80 | 0.032 | 0.058 | 0.076 | 0.036 | 0.023 | 0.087 | 0.113 | 0.058 | 10.5 |
| | 81-160 | 0.036 | 0.052 | 0.073 | 0.037 | 0.015 | 0.091 | 0.147 | 0.076 | 10.6 |
| | 161-240 | 0.032 | 0.043 | 0.070 | 0.043 | 0.018 | 0.080 | 0.150 | 0.081 | 11.4 |
| | 1-160 | 0.026 | 0.047 | 0.075 | 0.036 | 0.019 | 0.078 | 0.107 | 0.039 | 11.0 |
| | 161-320 | 0.031 | 0.042 | 0.078 | 0.034 | 0.021 | 0.076 | 0.126 | 0.052 | 15.0 |
| | 1-320 | 0.027 | 0.039 | 0.067 | 0.031 | 0.018 | 0.064 | 0.093 | 0.038 | 13.1 |
| | 321-640 | 0.029 | 0.041 | 0.061 | 0.027 | 0.015 | 0.058 | 0.104 | 0.035 | 12.0 |
| | 1-1200 | 0.023 | 0.035 | 0.047 | 0.021 | 0.011 | 0.049 | 0.076 | 0.033 | 20.7 |
| Mean | Simulation parameters | 0.11 | 0.375 | 0.13 | 0.06 | | 0.16 | 0.30 | 0.10 | |
| SD | | 0.03 | 0.05 | 0.08 | 0.01 | | 0.10 | 0.10 | 0.05 | |
| Mean | Hierarchical simulation parameters: two subject groups | 0.20 | 0.53 | 0.13 | 0.06 | | 0.16 | 0.10 | 0.00 | |
| SD | | 0.02 | 0.03 | 0.08 | 0.01 | | 0.10 | 0.05 | 0.025 | |

Note. a = boundary separation, z = starting point, Ter = nondecision component of response time, η = standard deviation in drift across trials, sz = range of the distribution of starting point (z), p0 = proportion of contaminants, st = range of the distribution of nondecision times, vE and vD are the drift rates for the easy and difficult conditions respectively, and χ2 is the chi-square goodness of fit measure. For the data, the number of degrees of freedom for the fits was 30 and the critical value of chi-square at the .05 level was 43.8. In the simulations, the drift rates were correlated. The same random number was used to generate both drift rates with half the value added to the lower drift rate. The following were lower limits on parameter values: a, 0.07; η, 0.02; Ter, 0.25; st, 0.04; there was also an upper limit on sz of 0.9a. Separate sets of simulations were conducted with p0 of 0 or 0.04.

When the values of the parameters estimated from the first 80 trials (the 1-80 group) were compared to the values estimated from all 1200 trials (the 1-1200 group), there were modest differences. The estimated values from all the trials were only slightly lower than for the early trials for boundary separation, nondecision time, and drift rate for the easy condition. For boundary separation, nondecision time, and drift rates, the SD's and SE's were smaller by one half to two thirds for all the trials than for 80 trials. Results were similar for the 1-160 group and the 1-320 group but with smaller differences. In general, for this subject population (undergraduates) and this task, there is little difference in the parameter values estimated from the first few trials and those estimated from the whole session.

Figure 2 and Table 1 also show further divisions of the data that allow examination of practice effects over the first few blocks of trials. There were small declines in nondecision time and boundary separation from the first block to later blocks. Drift rates for the easy condition were higher for the 81-160 and 161-240 groups than for the 1-80 and 1-160 groups respectively, but these differences were probably spurious because with lower numbers of observations, there are very few errors and this leads to inflated drift rate estimates. As the amount of data increases, the number of errors increases and so drift rate estimates decrease because the larger numbers of errors allow them to be estimated with more accuracy. The overall decrease in SE's across the groups also reflects increasingly larger numbers of errors. These same trends occur for the difficult condition but with smaller differences.

The SD's across subjects in the estimated parameter values (the larger error bars in Figure 2) allow examination of power for detecting differences between an individual and our population of subjects. College students are likely to provide the best performance of any population of subjects because they are likely to have the shortest nondecision times and highest drift rates (and perhaps the narrowest boundary settings, although these vary with task and with instructions that emphasize speed over accuracy or accuracy over speed). To identify an individual as different from our student population, his or her value for any of the parameters would have to lie at least one SD outside the 2 SD confidence interval for the students (e.g., Cumming & Finch, 2005). One SD outside a 2 SD confidence interval gives about 6% false negatives and 6% false positives. One SD outside 2 SD's for nondecision time is about 500 ms and one SD outside 2 SD for boundary separation is about 0.18. Values of boundary separation and nondecision time larger than these values are often found with older adults which means it is possible to detect age differences through the model parameters. However, it is unlikely that subjects with deficits could be distinguished from the students on the basis of drift rates. This is because the bottom of the two SD range extends to zero or almost to zero and 1 SD lower than this is below zero (i.e., even a drift rate of zero representing chance performance would not be far enough outside the confidence intervals).
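The cutoffs in this paragraph are simple arithmetic on the values in Table 1, as the following sketch shows. The function name `deficit_cutoff` is hypothetical; the means and SD's are copied from Table 1 (the 1-1200 group for boundary separation and nondecision time, and the 1-80 group for the drift rates).

```python
def deficit_cutoff(mean, sd, n_sd=3.0):
    """Value one SD beyond the 2 SD limit: mean + 3 SD (use n_sd=-3 for below)."""
    return mean + n_sd * sd


# Upper cutoffs from the fits to all 1200 trials (Table 1).
a_cutoff = deficit_cutoff(0.112, 0.023)      # boundary separation, about 0.18
ter_cutoff = deficit_cutoff(0.374, 0.035)    # nondecision time, about 0.48 s

# Lower cutoffs for drift rates from the first 80 trials (Table 1).
v_easy_cutoff = deficit_cutoff(0.321, 0.113, n_sd=-3.0)
v_difficult_cutoff = deficit_cutoff(0.106, 0.058, n_sd=-3.0)
# Both drift-rate cutoffs are negative, so even a drift rate of zero
# (chance performance) would not fall outside them.
```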

In contrast, the model parameters can be used to detect differences between different subject groups. Because standard errors decrease and hence power increases with the number of observations (subjects), even quite small differences can be discriminated. For example, 2 SE's in drift rate in Figure 2 for the easy condition with 1200 observations are about 0.02. This means differences as small as 0.03 could be detected for the population, number of observations, and conditions in Experiment 1 (the SE's can be found from the SD's in Tables 1 and 2 by dividing the SD by the square root of the number of subjects). Thus, there is power to detect even small differences in parameter values between the different groups.
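The conversion from SD to SE can be checked with a short calculation. The function name `standard_error` is hypothetical; the SD of 0.076 is the value for the easy-condition drift rate in the 1-1200 group of Table 1, and 63 is the number of subjects in Experiment 1.

```python
import math


def standard_error(sd, n_subjects):
    """Standard error of the mean across subjects."""
    return sd / math.sqrt(n_subjects)


se_easy = standard_error(0.076, 63)
two_se_easy = 2.0 * se_easy   # roughly 0.02, as read from Figure 2
```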

Table 2. Mean Parameter Values and SD's Across Subjects for the Lexical Decision Experiment.

| Statistic | Trial group | a | Ter | η | sz | p0 | st | z | vH | vL | vV | vN | χ2 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Mean | 1-120 | 0.153 | 0.476 | 0.133 | 0.038 | 0.001 | 0.171 | 0.081 | 0.660 | 0.271 | 0.159 | -0.240 | 43.0 |
| | 121-240 | 0.162 | 0.462 | 0.150 | 0.042 | 0.002 | 0.175 | 0.085 | 0.626 | 0.323 | 0.186 | -0.259 | 41.2 |
| | 241-360 | 0.153 | 0.457 | 0.122 | 0.062 | 0.003 | 0.153 | 0.081 | 0.618 | 0.290 | 0.165 | -0.233 | 36.7 |
| | 1-240 | 0.148 | 0.471 | 0.139 | 0.040 | 0.003 | 0.182 | 0.075 | 0.581 | 0.272 | 0.147 | -0.219 | 63.3 |
| | 241-480 | 0.144 | 0.454 | 0.125 | 0.051 | 0.002 | 0.173 | 0.083 | 0.516 | 0.244 | 0.135 | -0.204 | 61.0 |
| | 1-480 | 0.143 | 0.458 | 0.132 | 0.045 | 0.001 | 0.193 | 0.083 | 0.502 | 0.237 | 0.122 | -0.201 | 82.3 |
| | 481-960 | 0.138 | 0.445 | 0.144 | 0.054 | 0.002 | 0.167 | 0.076 | 0.431 | 0.206 | 0.104 | -0.221 | 83.2 |
| | 1-2100 | 0.135 | 0.436 | 0.123 | 0.066 | 0.002 | 0.178 | 0.080 | 0.399 | 0.203 | 0.114 | -0.216 | 158 |
| SD | 1-120 | 0.041 | 0.062 | 0.052 | 0.037 | 0.003 | 0.078 | 0.028 | 0.185 | 0.113 | 0.087 | 0.092 | 20.7 |
| | 121-240 | 0.054 | 0.052 | 0.057 | 0.039 | 0.006 | 0.084 | 0.040 | 0.176 | 0.150 | 0.114 | 0.100 | 18.2 |
| | 241-360 | 0.035 | 0.044 | 0.045 | 0.039 | 0.009 | 0.084 | 0.039 | 0.176 | 0.136 | 0.122 | 0.068 | 14.0 |
| | 1-240 | 0.040 | 0.055 | 0.055 | 0.036 | 0.011 | 0.070 | 0.028 | 0.175 | 0.101 | 0.077 | 0.073 | 20.4 |
| | 241-480 | 0.037 | 0.040 | 0.048 | 0.040 | 0.005 | 0.071 | 0.027 | 0.164 | 0.099 | 0.080 | 0.055 | 24.1 |
| | 1-480 | 0.033 | 0.040 | 0.047 | 0.033 | 0.001 | 0.059 | 0.028 | 0.141 | 0.087 | 0.070 | 0.047 | 26.1 |
| | 481-960 | 0.030 | 0.033 | 0.049 | 0.030 | 0.006 | 0.062 | 0.024 | 0.106 | 0.078 | 0.069 | 0.052 | 25.0 |
| | 1-2100 | 0.025 | 0.029 | 0.036 | 0.025 | 0.003 | 0.049 | 0.023 | 0.096 | 0.063 | 0.052 | 0.041 | 51.5 |
| Mean | Simulation parameters | 0.14 | 0.44 | 0.115 | 0.04 | | 0.17 | 0.080 | 0.38 | 0.19 | 0.11 | -0.20 | |
| SD | | 0.04 | 0.04 | 0.06 | 0.02 | | 0.06 | 0.002 | 0.06 | 0.06 | 0.06 | 0.06 | |

Note. a = boundary separation, z = starting point, Ter = nondecision component of response time, η = standard deviation in drift across trials, sz = range of the distribution of starting point (z), p0 = proportion of contaminants, st = range of the distribution of nondecision times, vH, vL, vV, and vN are the drift rates for high, low, and very low frequency words and for nonwords respectively, and χ2 is the chi-square goodness of fit measure. The number of degrees of freedom for the fits to data was 65 and the critical value of chi-square at the .05 level was 84.8. In the simulations, the drift rates were not correlated. The following were lower limits on parameter values: a, 0.07; η, 0.02; Ter, 0.25; st, 0.04; there was also an upper limit on sz of 1.8 times the distance from the starting point to the nearest boundary. Separate sets of simulations were conducted with p0 of 0 or 0.04. Each drift rate had the same random number added to it so if a subject had a high drift rate in one condition they had a high drift rate in the other conditions.

Results for Experiment 2

RTs shorter than 300 ms and longer than 4000 ms were excluded (about 2.7% of the data) from data analyses.

For the data for all the trials, for the high-, low-, and very-low frequency words and nonwords, accuracy was .95, .84, .72, and .89 respectively, mean RTs for correct responses were 609, 714, 767, and 730 ms respectively, and mean RTs for errors were 600, 735, 777, and 792 ms respectively. The maximum and minimum values of accuracy across subjects were 1.0 and .88, .96 and .57, .88 and .45, and .98 and .79 for the high-, low-, and very-low frequency words and nonwords respectively.

To examine practice effects and the number of trials needed for the diffusion model's parameters to have small SD's, eight groups of data were constructed: trials 1-120, 121-240, 241-360, 1-240, 241-480, 1-480, 481-960, and 1-2100. Figure 2 shows the means across subjects of the best-fitting parameter values. The wider error bars represent 2 SD's from the mean and the narrower ones, 2 SE's. The means and SD's are also given in Table 2.

Compared to Experiment 1, there is more variability in the estimates of the parameters and this is true even though there were more observations. There are two reasons for this: a larger range of individual differences and more variability because the starting point was estimated from the data; it was not fixed at z=a/2 as it was for Experiment 1.

There were only modest differences between the estimates for the first 120 trials and the estimates from all 2100 trials, mirroring the results from Experiment 1. The estimates from all the trials were only slightly lower than for the early trials for boundary separation and nondecision time, and the estimates were a little higher for drift rates for the low and very low frequency words and nonwords. The estimate for high frequency words was considerably higher due to no error responses for many of the subjects in the first few trials in that condition (leading to spuriously higher estimates). For all six parameters, the SD's and SE's were smaller with all the trials by up to half the value. The pattern of results was similar for all the parameters for the 1-240 group and the 1-480 group, but with reduced differences and smaller SD's and SE's relative to the 1-120 group.

The trends in model parameters as a function of practice (Figure 2 and Table 2) are similar to those from Experiment 1 but with slightly larger differences. Results show that there were small declines in nondecision time and boundary separation from the first block to later blocks. Drift rates for the high-frequency condition for the 1-120, 121-240, and 241-360 groups are higher than the drift rate for all the data. Also, the drift rates for the low-frequency condition for the 121-240 and 241-360 groups are higher than the drift rate for all the data. For each of these conditions, 2 SE error bars do not overlap with those for all the data. This is because there are relatively few errors (and zero for some subjects) in these conditions. For example, for a group with 120 observations, 60 are from word categories and of these, 20 are from each of the three frequency classes, and so with a 5% error rate, there will often be zero errors. As discussed earlier, this leads to inflated estimates of drift rates. Two SE error bars for nondecision times and boundary separation for the 1-120, 121-240, and 241-360 groups do not overlap with the 2 SE error bars for all the data (trials 1-2100). But generally, the practice effects are relatively small (especially compared to individual differences).

Following the discussion for Experiment 1, the results of this experiment show that an individual subject could be identified as having a deficit relative to our undergraduate subjects if boundary separation was 1 SD above the 2 SD confidence limit, which, for parameters from fits to all the data, is 0.21. For nondecision time, the value would be 530 ms. Two SD's below the means for drift rate were near zero for all conditions except for high frequency words. Therefore, differences between an individual and the undergraduate group could not be detected unless his or her drift rates were near zero (and there was a relatively large number of trials).

In contrast to detecting differences between an individual and the undergraduates, the SE's on the model parameters have enough power to detect even quite small differences between groups of subjects just as in Experiment 1.

Last blocks of trials

We also examined parameters from fits of the model to the last three blocks of trials, blocks of 80 trials for the numerosity experiment and 120 trials for the lexical decision experiment. This was done as a check to see if there were practice or fatigue effects at the end of the sessions. Boundary separation and nondecision times were a little larger than those for the fit to all the data (by less than .005 and 20 ms respectively) and drift rates were similar to the fits for the first three trial groups in Tables 1 and 2 (i.e., a little larger than those for fits to all the data). These results show that there are no dramatic differences between model parameters estimated from the last few blocks of trials and the first few blocks of trials.

The results of these two experiments are consistent with other studies that have used the diffusion model to examine practice effects. Petrov, Van Horn, and Ratcliff (2011), Ratcliff, Thapar, and McKoon (2006), and Dutilh, Vandekerckhove, Tuerlinckx, and Wagenmakers (2009) all found that boundary separation becomes smaller and nondecision time shorter with increasing amounts of practice but that there is little change in drift rates. These changes are largest between sessions.

Across-trial variability parameters

For both experiments, the across-trial variability parameters were relatively poorly estimated (Ratcliff & Tuerlinckx, 2002). The means of across-trial variability in drift rate, starting point, and nondecision time minus 2 SD's either include zero or are close to it. In fitting the model to the data for Experiments 1 and 2, we constrained the across-trial variability in drift rate to be in the range that has been observed in other applications of the model to similar experiments. If it were not constrained in this way, one SD in across-trial variability in drift rate would be 2/3 the drift rate. Generally, differences in the across-trial variability parameters between individuals or between populations cannot be determined without quite large numbers of observations.

Goodness of fit

Tables 1 and 2 show chi-square goodness-of-fit values for Experiments 1 and 2 using the chi-square method with 9 bins per distribution. Chi-square goodness of fit values are often used to assess how well diffusion models (and other two-choice models) fit data. Because the bins are determined by the data, that is, by the values of the RT quantiles, the statistic we calculate is not, strictly speaking, a chi-square. However, the statistic approaches a chi-square asymptotically (e.g., Jeffreys, 1961), and when the standard chi-square is compared to the chi-square based on quantiles, little difference is found between them (Fific, Little, & Nosofsky, 2010).

In fitting the model to data, a fitting method tries to satisfy two constraints. First, it adjusts the model parameters so that, within each condition, the predicted proportions (probability mass) in the bins between quantiles match the proportions between the quantile RTs in the data. Second, it adjusts the parameters so that the predicted proportions of correct responses and of error responses match those in the data.

For each of our data sets, using 5 quantile RTs, there were 12 bins (6 for correct responses and 6 for errors) in each experimental condition and the total probability mass in each condition summed to 1.0, reducing the number of degrees of freedom to 11. For a total of k experimental conditions and a model with m parameters, the number of degrees of freedom in the fit was therefore df = k(12 - 1) - m. For Experiment 1, the number of degrees of freedom was 14 and for Experiment 2, the number was 33. With 9 quantiles, there are 20 bins with 19 degrees of freedom per condition. Thus, for Experiment 1, the number of degrees of freedom was 30 and for Experiment 2, the number was 65.
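The degrees-of-freedom arithmetic above can be checked with a short sketch. The function name is hypothetical, and the values of k and m used here (k = 2, m = 8 for Experiment 1; k = 4, m = 11 for Experiment 2) are assumptions inferred from the reported degrees of freedom rather than values stated in this section.

```python
def chi_square_df(n_quantiles: int, n_conditions: int, n_params: int) -> int:
    """Degrees of freedom for the quantile-based chi-square fit."""
    # Each response type (correct, error) contributes n_quantiles + 1 bins.
    bins_per_condition = 2 * (n_quantiles + 1)
    # Probability mass sums to 1.0 within a condition, removing one df.
    return n_conditions * (bins_per_condition - 1) - n_params


# Assumed (inferred) k and m; see the note above.
designs = {"Experiment 1": (2, 8), "Experiment 2": (4, 11)}

for label, (k, m) in designs.items():
    df_5 = chi_square_df(5, k, m)  # 5 quantiles: 12 bins per condition
    df_9 = chi_square_df(9, k, m)  # 9 quantiles: 20 bins per condition
    print(label, df_5, df_9)
```

With these assumed values the function returns 14 and 30 for Experiment 1 and 33 and 65 for Experiment 2, matching the numbers in the text.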

Ratcliff, Thapar, Gomez, and McKoon (2004) examined the effect of moving a .1 probability mass from one quantile bin (so the .2 probability mass became .1) to another adjacent quantile bin (so the .2 probability mass became .3). They found that for N=100 the increment to the chi-square was 13.3 (over half the critical value of 22.4) and for N=1000 the increment was 133 (more than five times the critical value). These increments mean that even relatively small systematic misses in the proportions are accompanied by large increases in the chi-square, especially as the number of observations increases.
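As a rough check on these increments, the sketch below computes the contribution of two adjacent bins to the chi-square. It assumes the standard form N Σ (p_obs − p_pred)² / p_pred and assumes that the shifted masses (.1 and .3) are the predicted proportions while the data have .2 in each bin. That reading is an interpretation, not something stated in the text, but it yields values close to the reported ones. The function name is hypothetical.

```python
def chi_square_increment(n_obs, observed, predicted):
    """Chi-square contribution of a set of bins, given proportions."""
    return n_obs * sum((o - p) ** 2 / p for o, p in zip(observed, predicted))


observed = [0.2, 0.2]   # proportions in two adjacent quantile bins of the data
predicted = [0.1, 0.3]  # assumed predictions after shifting .1 of mass

for n_obs in (100, 1000):
    print(n_obs, round(chi_square_increment(n_obs, observed, predicted), 1))
```

Because the increment is proportional to N, the same miss in proportions costs ten times as much chi-square with 1000 observations as with 100.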

The mean values of the chi-square for the lowest numbers of observations for the two experiments (the first three lines in Tables 1 and 2) are smaller than the mean of the corresponding chi-square distribution. If the statistic followed a chi-square distribution, its mean would equal the number of degrees of freedom. This means that the model is overfitting the data, i.e., the fits are accommodating random variation in the data that arises from the small numbers of observations. For all the data from Experiments 1 and 2, the mean values of the chi-square are 50 and 158 respectively, with critical values of 44 and 85. These represent a better estimate of how well the model is fitting the data because, with a thousand observations or more as in these fits, the random variation that occurs with small numbers of observations is minimized. For many data sets from a number of experiments, we have found that with numbers of observations per subject like the ones in the experiments here, the mean values of chi-square over subjects are typically between the critical value and twice the critical value. Thus, the quality of the fits for Experiments 1 and 2 is about the same as for previous experiments (e.g., Ratcliff, Thapar, & McKoon, 2003, 2004, 2010, 2011).

Power analyses for Experiments 1 and 2

Table 3 shows simple power analysis calculations using the means of the parameter values estimated from the data. We used SD's rounded up or down based on those from Tables 1 and 2, values that would correspond to a moderate number of observations, around several hundred. We assumed normal distributions of the populations (the distributions of parameter values across individuals are usually reasonably symmetric, e.g., Ratcliff et al., 2010).

Table 3. Power analyses showing the value of the parameter needed to detect a score outside the normal range 90% and 95% of the time.

| Task | Parameter | Parameter value | SD in parameter value | Parameter for 90% correct | Parameter for 95% correct | SD in parameter value (1.5 × column 4) | Parameter for 90% correct (larger SD) | Parameter for 95% correct (larger SD) |
|---|---|---|---|---|---|---|---|---|
| Numerosity | a | 0.110 | 0.030 | 0.187 | 0.208 | 0.045 | 0.225 | 0.258 |
| | Ter | 0.400 | 0.045 | 0.515 | 0.548 | 0.068 | 0.573 | 0.622 |
| | vD | 0.110 | 0.035 | 0.020 | 0 | 0.053 | 0 | 0 |
| Lexical decision | a | 0.150 | 0.040 | 0.253 | 0.282 | 0.060 | 0.304 | 0.347 |
| | Ter | 0.450 | 0.040 | 0.553 | 0.582 | 0.060 | 0.604 | 0.647 |
| | vH | 0.460 | 0.122 | 0.147 | 0.058 | 0.183 | 0 | 0 |
| | vN | 0.200 | 0.050 | 0.072 | 0.036 | 0.075 | 0.008 | 0 |

Note. Parameter refers to the population parameter value, SD is the standard deviation in the population parameter value. a is boundary separation, Ter is the nondecision component of response time, vD is the drift rate for the difficult condition in numerosity, and vH and vN are the drift rates for high-frequency words and for nonwords respectively. vL and vV (drift rates for low and very low frequency words) were not included because there was no value greater than 0 for either 90% or 95% correct detection.

To perform a power analysis, we needed an alternative hypothesis. We assumed another population (e.g., one in which the subjects had deficits) and estimated the value of the mean needed to obtain 90% and 95% correct classification of individuals. For this population, boundary separation was assumed to be larger, nondecision time was assumed to be longer, and drift rates were assumed to be lower. In each case, the SD in the model parameters for this population was assumed to be the same as for the non-deficit population (i.e., the undergraduates in our experiments).

Given the means for the non-deficit population and the SD's for both, we found means for the deficit population so that a score selected from either distribution would be classified correctly 90% of the time and another set of means that would produce a 95% correct classification. Results are shown in Table 3 columns 5 and 6. We also did the same analyses with SD's for both groups 1.5 times larger than those in column 4 of Table 3 and these are shown in columns 7 and 8.
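One way to sketch this calculation is shown below. It assumes two normal distributions with equal SD's and a classification criterion midway between the two means, so that the proportion correct is Φ(d / 2σ) and the required separation is d = 2·z_p·σ. The midpoint criterion, the clamping of negative values to 0, and the function name are assumptions made for illustration. With the means and SD's from Table 3, this reading gives, for example, 0.187 for boundary separation and 0.515 for nondecision time in numerosity at 90%.

```python
from statistics import NormalDist


def deficit_mean(control_mean, sd, p_correct, direction):
    """Mean of the deficit population giving p_correct classification.

    direction is +1 for parameters assumed larger in the deficit group
    (boundary separation, nondecision time) and -1 for drift rates.
    """
    z = NormalDist().inv_cdf(p_correct)
    shifted = control_mean + direction * 2.0 * z * sd
    # Assumption: values below zero are reported as 0, as in Table 3.
    return max(shifted, 0.0)


# Numerosity rows of Table 3: (parameter, mean, SD, direction).
rows = [("a", 0.110, 0.030, +1), ("Ter", 0.400, 0.045, +1), ("vD", 0.110, 0.035, -1)]

for name, mean, sd, direction in rows:
    for scale in (1.0, 1.5):  # SD's from column 4, then 1.5 times larger
        m90 = deficit_mean(mean, scale * sd, 0.90, direction)
        m95 = deficit_mean(mean, scale * sd, 0.95, direction)
        print(name, scale, round(m90, 3), round(m95, 3))
```

Because the required separation grows linearly with the SD, multiplying the SD's by 1.5 multiplies the distance between the two population means by 1.5 as well.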

Results showed that for boundary separation and nondecision time, the differences between the values for the deficit population and the undergraduate population for 90 and 95% classification accuracy were large, but only as large as differences that have been found in previous studies with older adults. This means that these parameters are in the range that might be useful for classifying individuals. For example, for 90% correct classification with the smaller of the two SD's, the means for nondecision time and boundary separation are about the same as those for older adults (Ratcliff et al., 2001, 2004, 2010).

However, for drift rates, the classification would be much more difficult. Few of the conditions had drift rates that would separate the population with deficits from the undergraduate population. For the easy condition in numerosity and the low- and very-low frequency word conditions in lexical decision, the values of the drift rates to provide correct classification were negative, and so these conditions are not shown in Table 3. For drift rates for the difficult condition in the numerosity design and high-frequency words and nonwords in the lexical decision design, the drift rates to achieve 90% correct classification were low enough that performance would be near, but not quite at chance (except for high frequency words in lexical decision).

But for 95% correct classification and for the larger values of the SD in drift rates across subjects, performance in a condition would have to be near chance for a deficit to be detected. This suggests that the range of individual differences in drift rates in these tasks is so large that individuals with a deficit would have a large overlap with the normal range. This analysis is based on each parameter separately and shows that drift rates in single conditions are not likely to be useful for detecting deficits in these tasks and designs. However, it may be possible to use multivariate methods to improve classification with combinations of several parameters (e.g., drift rates, boundary separation, and nondecision time) and, if subjects were tested on multiple tasks, combinations of measures across tasks might also improve classification.

Correlations Among Parameters

If estimates of the model's parameters from small numbers of observations correlate positively with estimates from large numbers, then small numbers can still be used to examine individual differences such as whether model parameters are correlated with measures such as IQ, reading measures, depression scores, etc.

The correlations in Table 4 show the consistency of parameter estimates across the various groupings of trials and numbers of observations for Experiments 1 and 2. For each parameter, the table shows the correlations between that parameter as estimated for the different groups of trials and that parameter as estimated from all the data.

Table 4. Correlations between model parameters for fits to small numbers of trials with model parameters from fits to all the trials.

| Task | Trial block | a | Ter | v1 | v2 | v3 | v4 |
|---|---|---|---|---|---|---|---|
| Numerosity | 1-80 | 0.278 | 0.675 | 0.247 | 0.292 | | |
| | 81-160 | 0.735 | 0.578 | 0.304 | 0.304 | | |
| | 161-240 | 0.687 | 0.532 | 0.260 | 0.306 | | |
| | 1-160 | 0.617 | 0.718 | 0.377 | 0.404 | | |
| | 161-320 | 0.773 | 0.628 | 0.546 | 0.438 | | |
| | 1-320 | 0.733 | 0.783 | 0.538 | 0.467 | | |
| | 321-640 | 0.877 | 0.852 | 0.725 | 0.543 | | |
| Lexical decision | 1-120 | 0.704 | 0.515 | 0.357 | 0.526 | 0.474 | 0.467 |
| | 121-240 | 0.715 | 0.487 | 0.347 | 0.568 | 0.476 | 0.497 |
| | 241-360 | 0.809 | 0.670 | 0.359 | 0.584 | 0.654 | 0.447 |
| | 1-240 | 0.795 | 0.597 | 0.424 | 0.625 | 0.611 | 0.438 |
| | 241-480 | 0.871 | 0.760 | 0.363 | 0.609 | 0.731 | 0.394 |
| | 1-480 | 0.860 | 0.777 | 0.525 | 0.711 | 0.730 | 0.485 |
| | 481-960 | 0.889 | 0.802 | 0.408 | 0.722 | 0.844 | 0.473 |

Note: v1=vE and v2=vD for numerosity (vE and vD are the drift rates for the easy and difficult conditions respectively), and v1=vH, v2=vL, v3=vV, and v4=vN for lexical decision (vH, vL, vV, and vN are the drift rates for high, low, and very low frequency words and for nonwords respectively). a is boundary separation and Ter is nondecision component of response time.

As would be expected, the correlations increase as the number of trials increases. For boundary separation and nondecision time, the correlations are strong even with smaller numbers of trials, mostly above .5 for both tasks (except the 1-80 group for numerosity). The correlations for drift rates are lower, ranging from around .25 for smaller numbers of observations to over .5 for larger groups for Experiment 1 and from around .35 for smaller numbers of observations to over .7 for the larger groups for Experiment 2. There was one unexpected result: in Experiment 2, correlations for drift rates for nonwords are below those for low- and very-low-frequency words even though there were more observations for the nonwords. We have no explanation for this.

The conclusion from these correlations is that consistency in parameter values is good from small to large numbers of observations for boundary separation and nondecision time but not for drift rates. Thus, if the aim is to examine differences among individuals or populations, this can be done for nondecision time and boundary separation with smaller numbers of observations, but more observations would be needed for drift rates.
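Each entry in Table 4 is a correlation across subjects between a parameter estimated from a subset of trials and the same parameter estimated from all the trials. A minimal sketch of that computation follows. The product-moment (Pearson) form is an assumption, and the estimates in the example are made-up values used only to exercise the function, not data from Experiments 1 or 2.

```python
from math import sqrt


def pearson_r(x, y):
    """Product-moment correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical boundary-separation estimates for five subjects.
a_from_block = [0.09, 0.12, 0.10, 0.15, 0.11]    # fit to one block of trials
a_from_all = [0.10, 0.11, 0.105, 0.14, 0.12]     # fit to all trials

print(round(pearson_r(a_from_block, a_from_all), 3))
```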

Summary for Experiments 1 and 2

The data from Experiments 1 and 2 show that there is enough power in the numbers of observations provided by a 20- or 25-min. session of around 200-300 trials to give estimates of boundary separation and nondecision time that are sufficiently precise to detect differences between populations of subjects and to study individual differences. However, something different would be needed for drift rates, for example, a different task with a smaller range of drift rates, or multivariate methods that combine several model parameters.

Simulation Study 1

For this study, we generated simulated data for 64 subjects and evaluated how well each of the eight methods for fitting the diffusion model reproduced the ordering of the values of the parameters across subjects. Simulated data were generated using the random walk approximation (Tuerlinckx, Maris, Ratcliff, & De Boeck, 2001). If the fitted values are strongly correlated with the generating values, then the fitted values can be used to investigate correlations between parameters of the model and subject variables such as age or IQ.
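For readers unfamiliar with the random walk approximation, the sketch below simulates single trials with a simple symmetric-step random walk. It is a generic illustration, not the code used in this study: it omits across-trial variability in drift rate, starting point, and nondecision time, and it assumes within-trial noise s = 0.1, a time step of 1 ms, and a starting point midway between the boundaries. The function name and these settings are assumptions; the values of a, Ter, and v are the numerosity means from Table 3.

```python
import math
import random


def simulate_trial(v, a, z, ter, rng, s=0.1, h=0.001):
    """Simulate one trial; returns (response, rt) with response 1 = upper boundary."""
    step = s * math.sqrt(h)                     # size of each step in evidence
    p_up = 0.5 * (1.0 + v * math.sqrt(h) / s)   # probability of a step upward
    x, t = z, 0.0
    while 0.0 < x < a:
        x += step if rng.random() < p_up else -step
        t += h
    return (1 if x >= a else 0), ter + t


rng = random.Random(2024)
a, ter, v = 0.110, 0.400, 0.110  # numerosity means (difficult condition) from Table 3
trials = [simulate_trial(v, a, a / 2.0, ter, rng) for _ in range(500)]

accuracy = sum(resp for resp, _ in trials) / len(trials)
mean_rt = sum(rt for _, rt in trials) / len(trials)
print(round(accuracy, 3), round(mean_rt, 3))
```

Smaller time steps give a closer approximation to the continuous diffusion process at the cost of longer simulation times.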

We simulated the data using the values of the diffusion model's parameters that best fit the data from Experiments 1 and 2. For Experiment 1, numerosity, the simulations were performed with 40, 100, and 1000 observations for each condition (easy and difficult). For Experiment 2, lexical decision, the simulations were performed either with 20 observations for each of the word conditions (high-, low-, and very-low-frequency) and 60 for the nonwords, or 200 for each of the word conditions and 600 for nonwords.

The values of the drift rates for the conditions were perfectly correlated. For the numerosity design, a random number was added to the easy condition drift rate and half the same random number was added to the smaller drift rate for each subject. For the lexical decision design, the same random number was added to each drift rate.
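The construction of perfectly correlated drift rates can be sketched as follows. The normal distribution for the random number, its SD, and the mean drift rate for the easy condition are hypothetical choices for illustration; only the mean for the difficult condition (0.110) is taken from Table 3.

```python
import random


def numerosity_drifts(v_easy, v_difficult, sd, rng):
    """One subject's drift rates: r added to easy, r / 2 added to difficult."""
    r = rng.gauss(0.0, sd)
    return v_easy + r, v_difficult + r / 2.0


def lexical_drifts(base_drifts, sd, rng):
    """One subject's drift rates: the same r added to every condition."""
    r = rng.gauss(0.0, sd)
    return [v + r for v in base_drifts]


rng = random.Random(7)
V_EASY, V_DIFFICULT, SD = 0.30, 0.110, 0.05  # V_EASY and SD are hypothetical

subjects = [numerosity_drifts(V_EASY, V_DIFFICULT, SD, rng) for _ in range(64)]
for v_e, v_d in subjects[:3]:
    print(round(v_e, 3), round(v_d, 3))
```

Because each subject's drift rates are all shifted by a multiple of the same random number, the drift rates are linear functions of one another across subjects, which is what makes the correlation perfect.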

For each of the numbers of observations, data were simulated for the 64 subjects, once with 4% contaminant RTs and once without contaminants. For each subject, the value of each parameter was drawn randomly from a normal distribution for which the mean and SD across subjects (bottom of Tables 1 and 2) were rounded versions of those from Experiments 1 and 2. The contaminant RTs were obtained by adding a delay randomly selected from a uniform distribution with range 2000 ms (see Ratcliff & Tuerlinckx, 2002).
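The contaminant manipulation can be sketched as below. This sketch assumes that each trial independently has a .04 probability of being a contaminant and that the delay is uniform between 0 and 2000 ms. Both are interpretations of the description above, and the function name is hypothetical. RTs are in seconds.

```python
import random


def add_contaminants(rts, rng, proportion=0.04, max_delay=2.0):
    """Return a copy of rts with a random delay added to a subset of trials."""
    return [
        rt + rng.uniform(0.0, max_delay) if rng.random() < proportion else rt
        for rt in rts
    ]


rng = random.Random(11)
clean_rts = [0.5] * 1000  # placeholder RTs; in practice these come from the simulation
contaminated_rts = add_contaminants(clean_rts, rng)

n_changed = sum(1 for c, d in zip(clean_rts, contaminated_rts) if d != c)
print(n_changed, round(max(contaminated_rts), 3))
```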

For the model to be fit successfully, it is best to have at least one condition with enough errors to provide a modest estimate of the RT distribution for errors. For numerosity, the drift rate for the difficult condition was low enough that, even for the simulations with the lowest number of observations, there were enough errors (usually more than 6) to constrain fitting the model. Similarly, for lexical decision, the drift rates were low enough for the low- and very-low-frequency words to provide enough errors to constrain the model fitting.

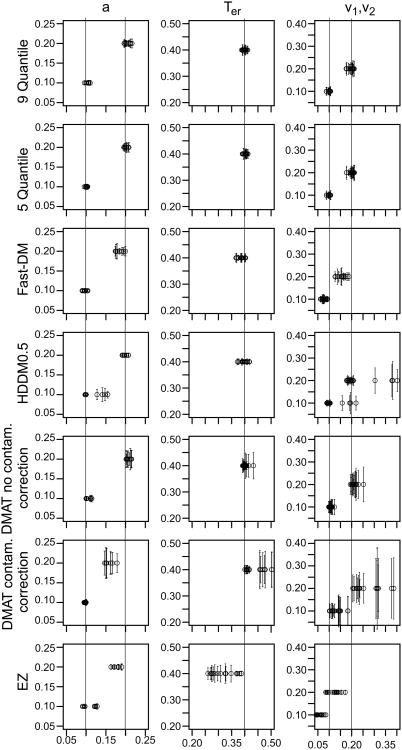

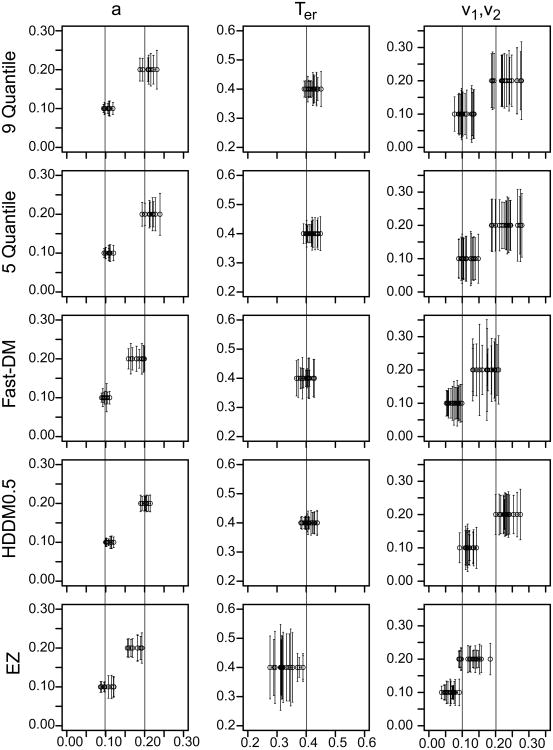

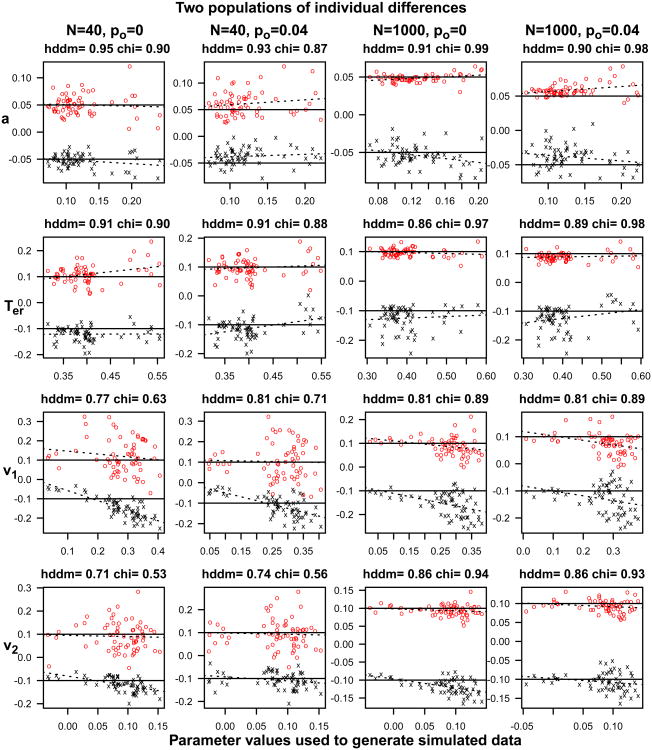

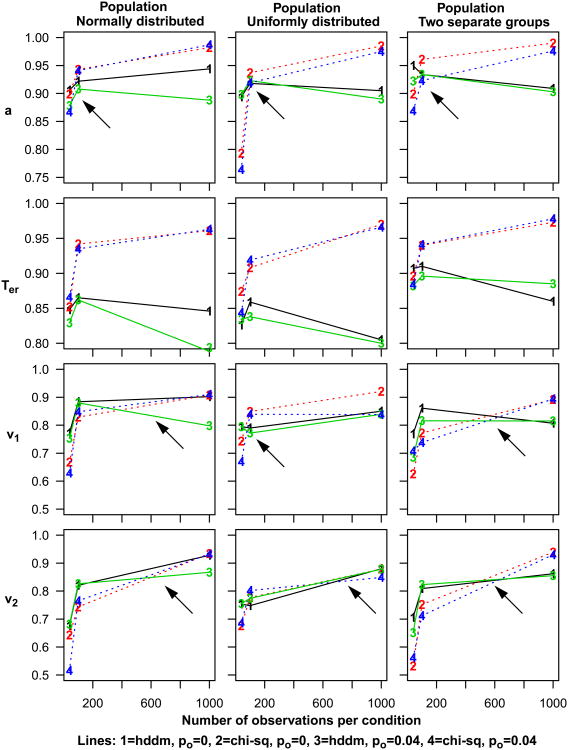

For some of the 64 simulated subjects, the combinations of parameter values were not like those typically observed in practice. For example, large across-trial variability in nondecision time occurred with small nondecision time. However, we did not try to assess the plausibility of the various combinations; instead we let them vary independently to provide a wide range of combinations.