Abstract

Objective

To conduct a systematic review of automatic notification methods and consider evidence-based recommendations for best practices in improving the timeliness and accuracy of critical value reporting.

Results

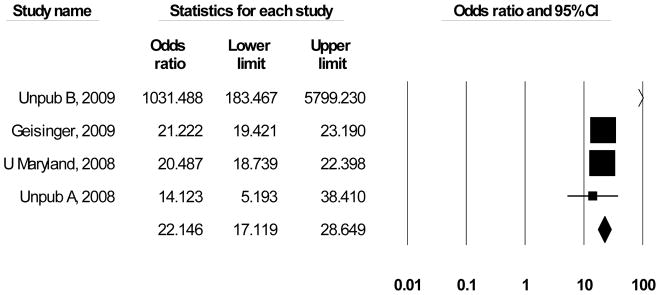

196 bibliographic records were identified, with nine meeting review inclusion criteria. Four studies examined automated notification systems and five assessed call center performance. Average improvement from implementing automated notification systems is d = 0.42 (95% CI = 0.2 – 0.62) while the average odds ratio for call centers is OR = 22.1 (95% CI = 17.1 – 28.6).

Conclusions

The evidence, though suggestive, is not sufficient to make a recommendation for or against using automated notification systems as a best practice to improve the timeliness and accuracy of critical value reporting in an in-patient care setting. Call centers, however, are effective in improving the timeliness and accuracy of critical value reporting in an in-patient care setting, and are recommended as an “evidence-based best practice.”

Keywords: Clinical laboratory information systems, critical care methods, hospital laboratory organization and administration, medical laboratory personnel organization and administration

1.0 Background

It has been more than 40 years since Lundberg [1] articulated the importance of defining and communicating a laboratory test result that identifies a treatable life-threatening condition. Critical value reporting is now a part of the accreditation standards for the Joint Commission [2] and the College of American Pathologists [3], [4]; noted as a National Patient Safety Goal (Joint Commission, Section 5.8.7 [5]); a key element in the World Health Organization’s World Alliance for Patient Safety [6]; codified in the International Organization for Standardization (ISO EN 15189) [7]; and required by the Clinical Laboratory Improvement Amendments (CLIA) regulations [8, 9].

The attention directed towards improvements for critical value notification is driven by the assumption that timely reporting will lead to timely clinical interventions and corresponding secondary prevention of co-morbidities and more effective treatment outcomes. Despite the number of entities interested in improving critical value reporting, evidence has been lacking concerning which practices are effective at achieving these improvements.

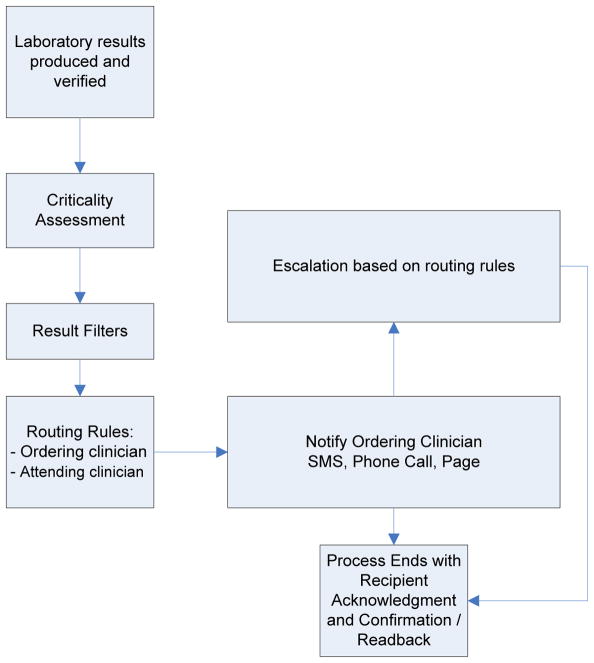

Implementing an effective critical value reporting system is concomitantly complex (Figure 1). A series of inter-dependent decisions and processes must be considered: What is a critical value? How quickly do the verified results need to be reported? Who is responsible for initiating the notification and what skills and knowledge sets do they require? What communication channels are to be used (e.g., phone call, SMS text messaging, electronic health record [EHR] alert, pager) to assure an accurate report is directed to the right person? How is “read-back” verification documented with the specific medium chosen? What is the chain of responsibility in receipt of the alert (attending physician, the responsible physician or the responding clinician)? What is the allowable response time before an escalation is triggered and if escalation is triggered, what form should it take? How are these inter-dependencies addressed for alerts to occur within as well as across organizational boundaries?

Figure 1. General Process Model in Critical Value Reporting

1.1 Quality Gap: Manual Notification of Critical Values

The standard notification mode in most healthcare facilities includes a manual process of contacting clinicians, connecting them to the laboratory, and conveying critical results verbally. When contact is not successfully completed, escalation procedures are followed, based on routing rules and procedures relevant to the indications for testing, the clinician who ordered the test, attending clinicians, and finally, supervising clinicians. This is often a time-consuming practice that diverts the laboratorian’s attention from other laboratory work, frequently results in the handoff of information to an intermediary, and creates opportunities for transfer errors and reporting delays. Alternative mechanisms that have been instituted to replace the standard laboratory phone contact efforts include the use of automated notification systems and call centers (also known as “customer service centers”).

1.2 Practice Descriptions

Automated notification systems are automated alerting systems or computerized reminders using mobile phones [10], pagers [11] [12], email or other personal electronic devices [13] to alert clinicians of critical value laboratory test results. Upon receipt of an automated notification, the responsible or ordering physician, appointed nurse, or resident acknowledges the critical value and confirms receipt of the alert. If the alert is not acknowledged within a specified timeframe, these systems typically revert to a manual notification system. Automated notification and alerting functions are increasingly frequent features of integrated health information exchange systems [13].

Call centers are centralized units responsible for communicating critical value laboratory test results by telephone to the responsible caregiver. Twenty percent of medical centers reported using centralized call centers to communicate laboratory critical values [14].

2.0 Methods

This evidence review followed the CDC’s Laboratory Medicine Best Practices Initiative’s (LMBP) “A-6 Cycle” systematic review methods for evaluating quality improvement practices [15]. This approach is derived from previously validated methods, and is designed to produce transparent systematic review results of practice effectiveness to support evidence-based best practice recommendations. The LMBP review topic selection criteria require the existence of: (1) a measurable quality gap; (2) outcome measure(s) of broad stakeholder interest addressing at least one of the Institute of Medicine healthcare quality aims: safe, effective, patient-centered, timely, efficient and equitable [16]; and (3) quality improvement practices available for implementation. A review team, including a review coordinator and staff specifically trained to apply the LMBP methods, conducts the systematic review. The review strategy and assessment of studies are guided by a multi-disciplinary expert panel including individuals selected for their diverse perspectives and relevant expertise in the topic area, laboratory management, and evidence review methods. The process begins with an initial screening of all bibliographic search results and ends with a full-text review, abstraction and evaluation of each eligible study using the LMBP methods. To reduce subjectivity and the potential for bias, all screening, abstraction and evaluation is conducted by at least two independent reviewers and all differences are resolved through consensus.

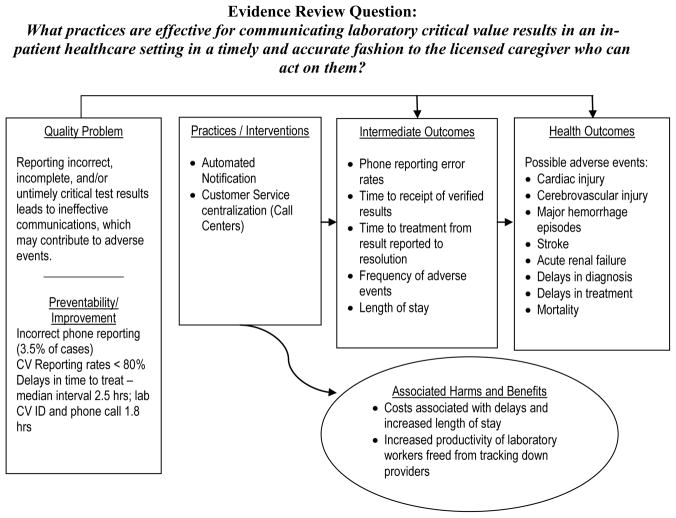

Figure 2. LMBP Quality Improvement Analytic Framework: Critical Values Reporting and Communication

The question answered by this evidence review is: What practices are effective for communicating laboratory critical value results in an inpatient healthcare setting in a timely and accurate fashion to the licensed caregiver who can act on them? This review question is addressed in the context of an analytic framework for the quality issue of timely and accurate reporting of critical values (Figure 2). The relevant PICO elements are:

- Population: all patients in healthcare settings with laboratory results that include a critical value
- Intervention(s): automated notification systems and call centers for communicating critical values
- Comparison practice/intervention(s): manual critical values notification systems
- Outcomes: timeliness and accuracy of reporting or receipt of critical values information, or timeliness of treatment based on critical values information.

The literature search strategy was developed with the assistance of a medical librarian and included a systematic search in September 2011 of three electronic databases (PubMed, Embase and CINAHL) for English language articles from 1995 to 2011. The search contained the following Medical Subject Headings: cellular phone; clinical laboratory information system; computers, handheld; critical care; and hospital communication systems as well as these keywords: alerting system; automated alerting system; call center; critical value; and notification process. The search strategy also included hand searching of bibliographies from relevant information sources, consultation with and references from experts in the field and the solicitation of unpublished quality improvement studies resulting in direct submissions to the Laboratory Medicine Best Practices Initiative. The screening, abstraction and evaluation of individual studies was conducted by at least two independent reviewers.

To assist with the judgments of impact and consistency across studies, results are standardized to a common metric using meta-analytic techniques whenever possible, and plotted on a common graph. A grand mean estimate of the result of the practice is calculated using inverse variance weights and random-effects models, and is a valuable tool for estimating precision and assessing the consistency and patterns of results across studies [17]. The key criteria for including studies in the meta-analyses are sufficient data to calculate an effect size, a good or fair study quality rating (estimating the extent to which each study yields an unbiased estimate of the result of the practice), and use of an outcome that is similar enough to the other studies being summarized. When outcomes are similar and the study’s effect size is attributable to the intervention or practice, then the grand mean estimate and its confidence interval are likely a more accurate representation of the results of a practice than that obtained from individual studies [18]. Occasionally, studies will meet these criteria, but are sufficiently different in implementation or population to be excluded from the meta-analysis. By convention, all meta-analysis results are presented in tabular forest plots and are generated using Comprehensive Meta-analysis software (Statistical Solutions, v. 2.2.064).
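The inverse-variance, random-effects pooling described above can be illustrated with a minimal Python sketch. It is not the Comprehensive Meta-analysis implementation: the DerSimonian-Laird estimator of between-study variance is assumed here as one common choice, the function names are hypothetical, and the standard errors are backed out of the two automated-notification confidence intervals reported in Section 3.1 rather than taken from raw data.

```python
import math

def se_from_ci(lower, upper, z=1.96):
    """Back out a standard error from a reported 95% confidence interval."""
    return (upper - lower) / (2 * z)

def random_effects_mean(effects, std_errors, z=1.96):
    """Grand mean with 95% CI using inverse-variance weights.

    Assumes the DerSimonian-Laird estimate of between-study variance (tau^2).
    """
    variances = [se ** 2 for se in std_errors]
    w = [1.0 / v for v in variances]  # fixed-effect inverse-variance weights
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    # Cochran's Q: weighted squared deviations from the fixed-effect mean.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    # Random-effects weights add tau^2 to each study's sampling variance.
    w_re = [1.0 / (v + tau2) for v in variances]
    mean = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    return mean, mean - z * se, mean + z * se, q, tau2

# Cohen's d and 95% CIs as reported for Kuperman et al. [11] and Park et al. [10].
d_values = [0.434, 0.414]
std_errors = [se_from_ci(0.148, 0.720), se_from_ci(0.143, 0.685)]

mean, lo, hi, q, tau2 = random_effects_mean(d_values, std_errors)
print(f"grand mean d = {mean:.2f} (95% CI {lo:.2f} to {hi:.2f})")
print(f"Q = {q:.3f}, tau^2 = {tau2:.3f}")
```

With these two inputs Q falls below its degrees of freedom, so the estimated between-study variance is zero and the random-effects result coincides with the fixed-effect one, at approximately d = 0.42 (0.23 to 0.62).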

3.0 Evidence review synthesis and results

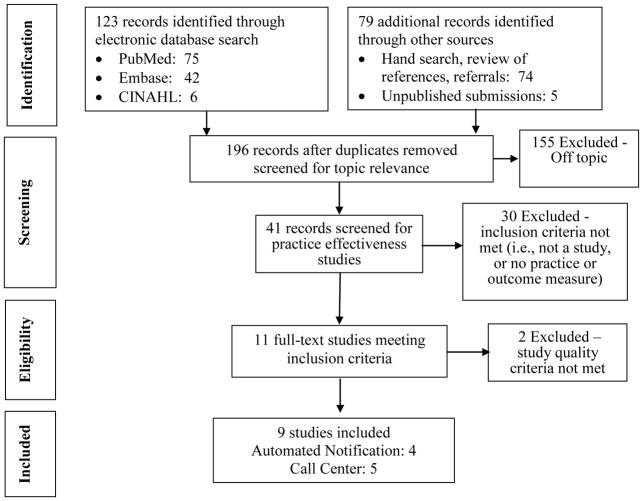

These search procedures yielded 123 separate bibliographic records that were screened for eligibility to contribute evidence on critical value communication. An additional 79 records were identified through hand searching and unpublished submissions (Figure 3). An annotated bibliography for these studies is provided in Appendix C.

Figure 3. Systematic Review Flow Diagram

The full text review and evaluation of the 11 eligible studies resulted in excluding 2 studies for poor study quality. Four studies provided valid estimates of the impact of automated notification and five provided valid estimates on call centers for improving the communication of critical values. Appendix D provides the summary tables for abstracted and standardized information and study quality ratings for each eligible study reviewed.

3.1 Evidence of Automated Notification Practice Effectiveness

Of the five studies identified that reported results about the change in communication of critical values after implementing an automated notification system, one [19] was excluded due to multiple sources of bias in how the reported estimate was measured prior to and following implementation of the practice. Each of the four remaining studies examined the effectiveness of automated notification systems using slightly different outcome measures, and each reported a substantial reduction in the time to communicate critical values. Table 1 provides the summary effectiveness data for the practice of automated notification.

Etchells et al. [12] reported the results of a computer system-driven paging system for communicating 165 critical values for 108 patients. After implementation of the automated notification system, the median interval between entry of the critical value into the laboratory information system and the writing of an order on the patient’s chart in response to the critical value decreased from 39.5 to 16 minutes (p=0.33). Kuperman et al. [11] reported the results of a randomized controlled trial of a similar automated paging system used to communicate 192 alerts (94 intervention, 98 controls) for 178 subjects. Mean response time was reduced from 4.6 to 4.1 hours (p=0.003, d = 0.434, CI = 0.148–0.720). Park et al. [10], using a pre/post design, measured the time interval from dispatch of a critical value result alert to acknowledgement by the responsible caregiver and reported a median decrease from 213 to 74.5 minutes (d = 0.414, CI = 0.143–0.685). After implementing an automated notification system, Piva et al. [13] documented a decrease from 30 to 11 minutes in the mean time from detection of a critical value to acknowledgement by the responsible clinician.

Table 1. Body of Evidence Summary for Automated Notification of Critical Values (CV)

| Study (Quality and Effect Size Ratings) | Population/Sample | Setting | Time period | Results |
|---|---|---|---|---|
| Etchells et al. 2010 | 165 critical values in 108 patients | 4 general medicine clinical teaching units | 02/2006–05/2006 | Median time to respond: Practice: 16 mins (IQR 2–141); Comparator: 39.5 mins (IQR 7–104.5) (p=0.33) |
| Kuperman et al. 1999 | 178 subjects (medical and surgical in-patients); 192 alerts (94 intervention/98 controls) | 720-bed tertiary care academic medical center | 12/1994–1/95 (medical); 9/95–10/95 (surgical) | Time to treat: Practice median time: 60 min; Comparator median time: 96 min (p=0.003); Practice mean 4.1 vs. Comparator mean 4.6 hours (p = 0.003); d = 0.434 (CI = 0.148–0.720) |
| Park et al. 2008 | Pre: 121 alert calls; Post: 96 alert calls | ICU and general wards of 2200-bed tertiary care urban academic medical center | Pre: 1/2001–12/2001; Post: 7/2005–6/2006 | Pre: Total: Median = 213 minutes; Mean = 343.3 (sd = 369.6), n = 121; Post: Total: Median = 74.5 minutes; Mean = 203.2 (sd = 294.1), n = 96; d = 0.414 (CI = 0.143–0.685) (p<0.001) |
| Piva et al. 2009 | Study period: 7,320 CVs (4,392 routine testing; 2,928 emergency testing); 82% found in inpatients | 300-bed teaching hospital and research center, annual test volume > 1 million | Pre: 1/2007–2/2007; Post: 1/2008–2/2008 | (1) Time to receipt: Pre: Average 30 min; Post: Average 11 min. (2) % Reported within 1 hour: Pre: <50% - unsuccessful; Post: 10.9% - unsuccessful |
| BODY OF EVIDENCE RATINGS | | | | |
| # Studies by Quality and Effect Size Ratings | 1 Good/Substantial; 3 Fair/Substantial | | | |
| Consistency | YES | | | |
| Overall Strength | SUGGESTIVE | | | |

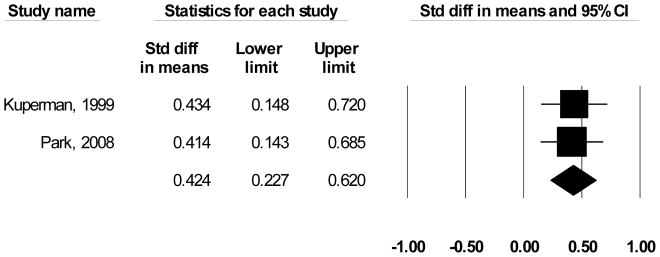

As shown in Table 1, only 1 of the 4 studies reporting findings was rated “good” [12], and only two reported sufficient data with which to calculate a standardized effect size [10, 11]. Since the results being summarized are based on means, Cohen’s d was selected to represent study findings [20]. Cohen’s d is calculated as the difference in means divided by their pooled standard deviation, so that “0” indicates that the two practices are equally successful and observed differences are quantified according to their location along a standardized normal distribution. The grand mean for improving timeliness of communicating critical values is d = 0.42 (CI = 0.23 – 0.62) and the findings for the two studies are homogeneous (Figure 4). Translating this result into a common language estimate [21], the time to report a randomly selected critical value using an automated notification system will be faster than a randomly selected manually reported critical value approximately 61.8 percent of the time. Using LMBP criteria, on the basis of the number of studies and their corresponding study quality and effect size ratings, the overall strength of evidence rating for automated notification systems is “suggestive.”

Figure 4. Random-effects Meta-Analysis of Improvement in Critical Values Reporting Time Using Automated Notification Systems*.

*Only findings that could be standardized to a common metric (effect size) are included in the figure
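The effect-size arithmetic described in this section can be sketched in Python using the summary statistics reported for Park et al. [10] in Table 1. The function names are hypothetical, and the large-sample variance formula used for the confidence interval is an assumption (one standard approximation), not a documented detail of the review's software. The common language statistic is computed as the normal probability Φ(d/√2).

```python
import math

def cohens_d(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Difference in means divided by the pooled standard deviation."""
    pooled_sd = math.sqrt(
        ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / (n_a + n_b - 2)
    )
    return (mean_a - mean_b) / pooled_sd

def d_confidence_interval(d, n_a, n_b, z=1.96):
    """95% CI for d from a common large-sample variance approximation (assumed)."""
    se = math.sqrt((n_a + n_b) / (n_a * n_b) + d ** 2 / (2 * (n_a + n_b)))
    return d - z * se, d + z * se

def common_language_effect(d):
    """Probability that a random draw from one group beats one from the other.

    Equals Phi(d / sqrt(2)); with Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
    this simplifies to 0.5 * (1 + erf(d / 2)).
    """
    return 0.5 * (1.0 + math.erf(d / 2.0))

# Park et al.: minutes to acknowledgement, pre (manual) vs. post (automated).
park_d = cohens_d(343.3, 369.6, 121, 203.2, 294.1, 96)
ci_low, ci_high = d_confidence_interval(park_d, 121, 96)
print(f"Park et al.: d = {park_d:.3f} (95% CI {ci_low:.3f} to {ci_high:.3f})")

# Common language estimate for the pooled effect reported in the text.
print(f"CL for d = 0.42: {common_language_effect(0.42):.1%}")
```

Under these assumptions the sketch gives d of about 0.414 with an interval of roughly 0.143 to 0.685 for Park et al., and a common language estimate of about 62 percent for the pooled effect, in line with the approximate figure quoted above.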

3.2 Call Center Practice Effectiveness Evidence

Two published and four unpublished studies contributed data on the effectiveness of call centers for improving the timeliness of critical values communication. One study [22] was omitted from the review because of a “poor” study quality rating. Saxena et al. [23] used a cross-sectional design in which the laboratory technologist called the “customer service” call center, and reported a decrease in average time to receipt of critical values information from 38 minutes to 10 minutes. Geisinger [24], using a pre/post design with a call center staffed by 21 FTEs, documented an increase from 50% to 95.5% (d = 1.684 [CI = 1.635–1.733], OR = 21.2 [CI = 19.4 – 23.2]) in calls completed within 30 minutes from the identification of the verified critical result to acknowledgement by the responsible licensed caregiver. Using a before/after design measuring the percentage of calls completed within one hour, University of Maryland [25] reported improvements from 76.7 percent to 95.7 percent (d = 0.697 [CI = 0.149–1.543], OR = 14.1 [CI = 5.2 – 38.4]), while Unpublished B [26], using a time-series analysis, reported improvements from 46.7 to 92.1 percent for the first series and from 49.2 to 100 percent for the second series of critical values calls completed within one hour (d = 3.826 [CI = 2.9874 – 4.778], OR = 1031.5 [CI = 183.5 – 5799.2]).

Two of the five studies reporting findings were rated “good” quality [25, 26], and four of the five studies reported sufficient data to calculate a standardized effect size [24–27]. Most of the data were based on dichotomized criteria (e.g., percent communicated within an hour); therefore, odds ratios were used to represent the findings [28]. An OR of 1 indicates no difference, while differences are distributed along a logarithmic scale between 0 and infinity. Values greater than 1 indicate support for improving timeliness through the use of call centers. Random-effects meta-analysis (Figure 5) indicates that results had relative heterogeneity but were still strongly supportive of implementing call centers (mean OR = 22.2 [CI = 17.1 – 28.7]). The relative heterogeneity is attributable to the extremely large effect size for Unpublished B (2009); removing that outlier returns a homogeneous mean OR = 20.8 for the improvement in timeliness from implementing call centers (CI = 19.6 – 22.2). Converting this latter value into the common language statistic [21], a randomly selected critical value will be reported by a call center faster than a randomly selected laboratory reported value approximately 88.6 percent of the time. Using LMBP criteria, on the basis of the number of studies and their corresponding study quality and effect size ratings, the overall strength of evidence rating for call centers is “moderate.”

Figure 5. Random-effects Meta-Analysis of Improvement in Critical Values Reporting Time Using Call Centers*.

*Only findings that could be standardized to a common metric (effect size) are included in the figure
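As an illustration of how an odds ratio follows from dichotomized timeliness data, the sketch below uses the Geisinger percentages reported above (50% and 95.5% of calls completed within 30 minutes). The conversion from an odds ratio to d uses the standard logit method, d = ln(OR) × √3 / π; that the review used this conversion is an assumption, and the function names are hypothetical. Confidence intervals are not computed because they require call counts that are not fully reported.

```python
import math

def odds_ratio(p_post, p_pre):
    """Odds of timely reporting after the practice relative to before it."""
    odds_post = p_post / (1.0 - p_post)
    odds_pre = p_pre / (1.0 - p_pre)
    return odds_post / odds_pre

def odds_ratio_to_d(or_value):
    """Logit-method conversion of an odds ratio to a standardized mean difference."""
    return math.log(or_value) * math.sqrt(3) / math.pi

# Geisinger: share of critical value calls completed within 30 minutes.
geisinger_or = odds_ratio(p_post=0.955, p_pre=0.50)
geisinger_d = odds_ratio_to_d(geisinger_or)
print(f"OR = {geisinger_or:.1f}, d = {geisinger_d:.3f}")
```

For these proportions the sketch yields an OR of about 21.2 and d of about 1.684, matching the point estimates reported for that study. An OR of exactly 1 maps to d = 0, which is why 1 marks "no difference" on the logarithmic scale.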

4.0 Conclusion and Recommendation

No recommendation is made for or against the use of automated notification systems in communicating critical values to responsible licensed healthcare providers for inpatients in hospital settings. Although multiple studies of automated notification systems provided evidence of substantial improvement in the timeliness of critical values notification, only one study was judged to be of “good” quality. Given LMBP criteria that multiple good studies are necessary to recommend a practice, the overall strength of evidence for automated notification systems is rated “suggestive.”

On the basis of moderate overall strength of evidence of effectiveness, call centers are recommended as a best practice to improve critical values notification in inpatient care settings. The moderate overall strength of evidence rating is due to sufficient evidence of practice effectiveness from five studies: two “good” and three “fair” studies reporting “substantial” improvement in the timeliness of communicating critical values information.

4.1 Additional Considerations

4.1.1 Additional benefits

Although the available evidence neither supports nor rejects automatic notification systems, it seems likely that health care enterprises will increasingly seek integrated technology systems to manage patient processes, including automated critical value notification. The electronic audit trail captured by the automated notification system can play an important role in performance monitoring and evaluation, including targeted interventions for clinicians who do not attend to critical results [19]. In addition, some observers have noted that the development of automated notification systems can productively lead to a re-examination of critical value policies and thresholds and the development of interpretative reporting support, particularly for critical results in areas such as coagulation disorders; hemoglobin and anemia evaluations; autoimmune disorders; serum protein analysis; immunophenotyping analysis; genetic and molecular diagnostics; endocrinology; toxicology; and other new tests with which clinicians may be less familiar [13]. For the use of call centers, the principal additional benefit appears to come from freeing laboratory workers from the time consuming diversion of locating the responsible caregiver.

4.1.2 Associated Harms

Automated notification systems may have unintended disadvantages, such as disrupting usual lines of communication, and providing too much/too frequent information [29]. The risk of losing back-up contact information must be properly anticipated [30]. There are also risks for patient privacy violations, with protected health information being misdirected and/or mobile communications devices being accessible to unauthorized users.

The use of call centers may require additional communications with laboratory staff when a responsible caregiver requires additional information that call center staff are unable to provide. No information is available about the frequency of this occurrence, but it may undermine the convenience of assigning critical value communication responsibilities to the call center.

4.1.3 Economic evaluation

Only one study provided any data related to an economic evaluation; [23] reported that 230 hours of Information Technology staff time were required over a 5-month period to develop the automated notification system. No other practice-specific economic evaluations (cost, cost-effectiveness, or cost-benefit analyses) were found in the search results described above for call centers. It may be observed, however, that call center-based critical value notification requires that the healthcare facility have sufficient call volume and be adequately staffed to communicate the calls. Call center agents must be properly trained, with automated policy and procedure manuals incorporated into the ‘help screens’ used by the call agents. When the combined volume of CV calls and other activities is not sufficient, it may not be economical to operate a call center solely for the purpose of reporting critical laboratory test values.

4.1.4 Feasibility of implementation

For an automated notification system to have a reasonable chance of succeeding, health system administrators must assure policies and procedures are in place that mandate two-way communication of required acknowledgment/confirmation of receipt. Policies concerning routing and escalation after unsuccessful notification attempts must be in place, and staff must remain proficient in the use of manual procedures in the event of a technology failure and/or when escalation protocols require that laboratory staff revert to manual contacts.

4.2 Future research needs

This review is restricted to evidence concerning communication methods, and does not focus on the extent to which these methods support effective clinical decision making. As Etchells et al. [12] and Valenstein [31] have noted, however, while improving communication with end users is important, the ultimate value of these improvements rests with how clinicians use the critical value information that is reported to them. Besides reducing clinicians’ and laboratorians’ workload, functional requirements for an effective critical value reporting system may include appropriate routing of results to an alternative receiver, and compliance with auditable standards.

Other industries have successfully dealt with challenges associated with timely and accurate reporting of critical events, such as air traffic control, first responders, and certain industries with concentrated hazardous materials. Successes have entailed clearly defining what criteria define critical results, improving communication with end users of the information, as well as appropriate routing of results to an alternative receiver [31]. Many industries have standardized protocols including the nuclear power industry [32], and chemical manufacturing plants [33]. Protocols have been developed for such specific components of critical value reporting as assessing specific time-critical control requirement [34], staff training in use of critical reporting systems [35], and effectiveness of in-place systems [36]. Laboratory medicine communities of practice may profit from the work already completed in other safety-critical industries. It would be worthwhile to review which of the effective practices developed in other industries can be translated to laboratory medicine critical value reporting.

4.3 Limitations

Simply improving the accuracy and time required to transmit critical values does not, of course, ensure better health outcomes for patients. Timely acquisition of the specimen, prompt management of specimen tests and verification of their results, and many additional decisions and reflex actions precipitated by receipt of a critical value are required to ensure better patient outcomes when critical values are present. Adopting best practices for improving communication of critical values information may be integral to, but is not sufficient for, improving patient health outcomes.

The LMBP systematic review methods are consistent with practice standards for systematic reviews but all of these methods are imperfect and include subjective assessments at multiple points that may produce bias. In particular, rating study quality depends on consensus assessments that may be affected by such things as rater experience and the criteria used. This systematic review may also be subject to publication bias, although unlike most systematic reviews this review includes unpublished studies which may mitigate that bias. Nonetheless, unpublished studies may be subject to a more general reporting bias in which institutions were more likely to share large and desirable effect sizes. The restriction to English language studies to satisfy the requirement of multiple reviewers for each study may also introduce bias.

Table 2. Body of Evidence Summary for Use of Call Centers in Critical Value (CV) Reporting

| Study (Quality and Effect Size Ratings) | Population/Sample | Setting | Time period | Results |
|---|---|---|---|---|
| Geisinger 2009 | Avg. 70 CV calls/day to inpatient units and ER. All CVs excluding Anatomic Pathology reported for GMC testing population; Post: 12,306 CV calls | >300 bed teaching hospital, >1 million tests/yr | Pre: 2006 (12 mos); Post: 1–6/2009 (6 mos) | % CV results reported within 30 min to responsible licensed caregiver: Pre (2006): 50%; Post (2009): 95.5%; d = 1.684 (CI = 1.635–1.733); OR = 21.2 (CI = 19.4 – 23.2) |
| Saxena et al. 2005 | All CV notifications; Pre: Not reported; Post: between 334–700; approximately 86% inpatients; 14% outpatients | Urban acute care teaching hospital, >700 beds | Pilot: 04/2003; Implementation: 11/2003 – 05/2004; Post: 12/2004 | Monthly average CV lab test notification time: Pre: 38 minutes; Post: 10 minutes |
| U Maryland, 2008 | All CV notifications; Pre: Approximately 6,600; Post: Approximately 3,000 | Urban, acute care teaching hospital, >700 beds | Pre: 3/28/08 – 4/27/08; Post: 4/28/08 – 5/14/08 | Percentage of calls within 1 hour: Pre: 76.7 (SD = 13.7); Post: 95.7 (SD = 2.1); d = 1.665 (CI = 1.616–1.714); OR = 20.5 (CI = 18.7 – 22.4) |
| Unpublished A, 2008 | Approximately 200 CV calls/day, inpatient only; includes all CV test results within time period | Large urban academic medical center in Mid-Atlantic U.S. with more than 600 beds; annually > 32,000 inpatients; 300,000 outpatients | 1 mo. pre-call center (3/26–4/27/08) and 1 mo. post-call center (4/28–5/28/08) | % CV results reported within 1 hour: Pre: 76.7% daily average (SD: 13.74; Variance: 188.69; Range: 37.5 – 95.3% daily); Post: 92.1% daily average (SD: 5.35; Variance: 28.62; Range: 71.6 – 99% daily) |
| Unpublished B, 2008 | A sample of 500–750 CV test results/mo; study population was CVs for routine outpatient laboratory work. Inpatient or STAT excluded. | Large Health Maintenance Organization (HMO) Laboratory; > 300 beds; >1 million tests/yr. | Pre: June 2004; Post: June–July 2009 | Timeliness of reporting (within 1 hr). N = 550–750 CVs monthly (2009). Pre (June 2004): 49.2% (~320) CVs reported within 1 hour (# of calls estimated, based upon range in 2009); Time 2 (June–July 2009): 100% of approximately 1,300 CVs reported within an hour; d = 3.826 (CI = 2.99 – 4.78); OR = 1031.5 (CI = 183.5 – 5799.2) |
| BODY OF EVIDENCE RATINGS | | | | |
| # Studies by Quality and Effect Size Ratings | 2 Good/Substantial; 3 Fair/Substantial | | | |
| Consistency | YES | | | |
| Overall Strength | MODERATE | | | |

Acknowledgments

Funding Source: CDC funding for the Laboratory Medicine Best Practices Initiative to Battelle Centers for Public Health Research and Evaluation under contract W911NF-07-D-0001/DO 0191/TCN 07235

Melissa Gustafson, Devery Howerton, Anne Pollock, Barbara Zehnbauer, LMBP Critical Values Reporting Expert Panel, LMBP Workgroup members, Submitters of unpublished studies

ABBREVIATIONS

- CDC: U.S. Centers for Disease Control and Prevention
- CV: Critical Value
- EHR: Electronic Health Record
- ISO: International Organization for Standardization
- IOM: Institute of Medicine
- LMBP: Laboratory Medicine Best Practices Initiative
- PICO: Population, Intervention/Practice, Comparator, Outcome
- SMS: Short message service, also often referred to as texting, sending text messages or text messaging

Appendix A LMBP Critical Value Reporting Expert Panel Members

Robert Christenson, Professor of Pathology and Medical and Research Technology, U Maryland Medical Center*

Dana Grzybicki, Professor of Pathology, U Colorado

Corinne Fantz, Co-Director, Core Laboratory, Pathology and Lab Medicine, Emory University

Lee Hilborne, Professor of Pathology and Laboratory Medicine, Quest Diagnostics/UCLA Medical School*

Kent Lewandrowski, Associate Chief of Pathology, Massachusetts General Hospital

Mary Nix, Project Officer, National Guideline Clearinghouse, Center for Outcomes and Evidence, AHRQ*

Rick Panning, Vice President, Laboratory Services, Allina Hospitals and Clinics

APPENDIX B LMBP Workgroup Members

Raj Behal, MD, MPH, Associate Chief Medical Officer, Senior Patient Safety Officer, Rush University Medical Center

Robert H. Christenson, PhD, DABCC, FACB, Professor of Pathology and Medical and Research Technology, University of Maryland Medical Center

John Fontanesi, PhD, Director, Center for Management Science in Health; Professor of Pediatrics and Family and Preventive Medicine, University of California, San Diego

Julie Gayken, MT(ASCP), Director of Laboratory Services, Anatomic & Clinical Pathology, Regions Hospital

Cyril (Kim) Hetsko, MD, FACP, Clinical Professor of Medicine, University of Wisconsin-Madison, Chief Medical Officer, COLA, Trustee, American Medical Association

Lee Hilborne, MD, MPH, Professor of Pathology and Laboratory Medicine, UCLA David Geffen School of Medicine, Center for Patient Safety and Quality; Quest Diagnostics

James Nichols, PhD, Director, Clinical Chemistry, Department of Pathology, Baystate Medical Center

Mary Nix, MS, MT(ASCP)SBB, Project Officer, National Guideline Clearinghouse; National Quality Measures Clearinghouse; Quality Tools; Innovations Clearinghouse, Center for Outcomes and Evidence, Agency for Healthcare Research and Quality

Stephen Raab, MD, Department of Laboratory Medicine, Memorial University of Newfoundland & Clinical Chief of Laboratory Medicine, Eastern Health Authority

Milenko Tanasijevic, MD, MBA, Director, Clinical Laboratories Division and Clinical Program Development, Pathology Department, Brigham and Women’s Hospital

Ann M. Vannier, MD, Regional Chief of Laboratory Medicine & Director, Southern California Kaiser Permanente Regional Reference Laboratories

Sousan S. Altaie, PhD (ex officio), Scientific Policy Advisor, Office of In Vitro Diagnostic Device Evaluation and Safety (OIVD), Center for Devices and Radiological Health (CDRH), FDA

Melissa Singer (ex officio), Centers for Medicare and Medicaid Services, Center for Medicaid & State Operations, Survey and Certification Group, Division of Laboratory Services

APPENDIX C LMBP Critical Value Reporting Systematic Review Eligible Studies

Included studies

Published

- Etchells E, Adhikari NK, Cheung C, et al. Real-time clinical alerting: effect of an automated paging system on response time to critical laboratory values: a randomised controlled trial. Qual Saf Health Care. 2010;19:99–102. doi: 10.1136/qshc.2008.028407.

- Kuperman GJ, Teich JM, Tanasijevic MJ, et al. Improving response to critical laboratory results with automation: results of a randomized controlled trial. J Am Med Inform Assoc. 1999;6(6):512–522. doi: 10.1136/jamia.1999.0060512.

- Park HI, Min WK, Lee W, et al. Evaluating the short message service alerting system for critical value notification via PDA telephones. Ann Clin Lab Sci. 2008;38:149–156.

- Piva E, Sciacovelli L, Zaninotto M, et al. Evaluation of effectiveness of a computerized notification system for reporting critical values. Am J Clin Pathol. 2009;131:432–441. doi: 10.1309/AJCPYS80BUCBXTUH.

- Saxena S, Kempf R, Wilcox S, Shulman IA, Wong L, Cunningham G, Vega E, Hall S. Critical laboratory value notification: a failure mode effects and criticality analysis. Jt Comm J Qual Patient Saf. 2005;31(9):495–506. doi: 10.1016/s1553-7250(05)31064-6.

Unpublished

- Geisinger 2009 (Unpublished)

- University of Maryland 2008 (Unpublished)

- Unpublished A 2008 (Unpublished)

- Unpublished B 2009 (Unpublished)

Excluded studies

Published

- Barenfanger J, Sautter RL, et al. Improving patient safety by repeating (read-back) telephone reports of critical information. Am J Clin Pathol. 2004;121(6):801–803. doi: 10.1309/9DYM-6R0T-M830-U95Q. (Excluded, not a practice of interest)

- Bates DW, Leape LL. Doing better with critical test results. Jt Comm J Qual Patient Saf. 2005;31(2):66–67. 61. doi: 10.1016/s1553-7250(05)31010-5. (Excluded, not a study)

- Blick KE. Information management’s key role in today’s critical care environment. MLO Med Lab Obs. 1997;(Suppl):20–24. quiz 34–25. (Excluded, not a study)

- Bria WF, 2nd, Shabot MM. The electronic medical record, safety, and critical care. Crit Care Clin. 2005;21(1):55–79. viii. doi: 10.1016/j.ccc.2004.08.001. (Excluded, not a study)

- Carraro P, Plebani M. Process control reduces the laboratory turnaround time. Clin Chem Lab Med. 2002;40(4):421–422. doi: 10.1515/CCLM.2002.068. (Excluded, not a study)

- Catrou PG. How critical are critical values? Am J Clin Pathol. 1997;108(3):245–246. doi: 10.1093/ajcp/108.3.245. (Excluded, not a study)

- Chen TC, Lin WR, et al. Computer laboratory notification system via short message service to reduce health care delays in management of tuberculosis in Taiwan. Am J Infect Control. 2011;39(5):426–430. doi: 10.1016/j.ajic.2010.08.019. (Excluded, not an outcome of interest)

- Dighe AS, Rao A, et al. Analysis of laboratory critical value reporting at a large academic medical center. Am J Clin Pathol. 2006;125(5):758–764. doi: 10.1309/R53X-VC2U-5CH6-TNG8. (Excluded, not a practice of interest)

- Emancipator K. Critical values: ASCP practice parameter. American Society of Clinical Pathologists. Am J Clin Pathol. 1997;108(3):247–253. doi: 10.1093/ajcp/108.3.247. (Excluded, not a study)

- Evans RS, Wallace CJ, et al. Rapid identification of hospitalized patients at high risk for MRSA carriage. J Am Medical Informatics Assoc. 2008;15(4):506–512. doi: 10.1197/jamia.M2721. (Excluded, not a practice of interest)

- Fitzpatrick K. Computer applications in health care. Physician Assist. 1993;17(7):57–58. 60–51. (Excluded, not a study)

- Hanna D, Griswold P, et al. Communicating critical test results: safe practice recommendations. Jt Comm J Qual Patient Saf. 2005;31(2):68–80. doi: 10.1016/s1553-7250(05)31011-7. (Excluded, not a study)

- Howanitz JH, Howanitz PJ. Laboratory results. Timeliness as a quality attribute and strategy. Am J Clin Pathol. 2001;116(3):311–315. doi: 10.1309/H0DY-6VTW-NB36-U3L6. (Excluded, not a study)

- Howanitz PJ. Errors in laboratory medicine: practical lessons to improve patient safety. Arch Pathol Lab Med. 2005;129(10):1252–1261. doi: 10.5858/2005-129-1252-EILMPL. (Excluded, not a practice of interest)

- Howanitz PJ, Steindel SJ, et al. Laboratory critical values policies and procedures: a college of American Pathologists Q-Probes Study in 623 institutions. Arch Pathol Lab Med. 2002;126(6):663–669. doi: 10.5858/2002-126-0663-LCVPAP. (Excluded, not a practice of interest)

- Jackson C, Macdonald M, et al. Improving communication of critical test results in a pediatric academic setting: key lessons in achieving and sustaining positive outcomes. Healthc Q. 2009;12(Spec No Patient):116–122. doi: 10.12927/hcq.2009.20978. (Excluded, not a practice of interest)

- Kirchner MJ, Funes VA, et al. Quality indicators and specifications for key processes in clinical laboratories: a preliminary experience. Clin Chem Lab Med. 2007;45(5):672–677. doi: 10.1515/CCLM.2007.122. (Excluded, not a practice of interest)

- Kuperman GJ, Hiltz FL, et al. Advanced alerting features: displaying new relevant data and retracting alerts. Proceedings : a conference of the American Medical Informatics Association/... AMIA Annual Fall Symposium. AMIA Fall Symposium; 1997. pp. 243–247. (Excluded, not a study)

- Kuperman GJ, Teich JM, et al. Improving response to critical laboratory results with automation: results of a randomized controlled trial. J Am Med Inform Assoc. 1999;6(6):512–522. doi: 10.1136/jamia.1999.0060512.

- Lippi G, Giavarina D, et al. National survey on critical values reporting in a cohort of Italian laboratories. Clin Chem Lab Med. 2007;45(10):1411–1413. doi: 10.1515/CCLM.2007.288. (Excluded, not a practice of interest)

- Novis DA, Walsh MK, et al. Continuous monitoring of stat and routine outlier turnaround times: two College of American Pathologists Q-Tracks monitors in 291 hospitals. Arch Pathol Lab Med. 2004;128(6):621–626. doi: 10.5858/2004-128-621-CMOSAR. (Excluded, not a practice of interest)

- Parl FF, O’Leary MF, Kaiser AB, et al. Implementation of a closed-loop reporting system for critical values and clinical communication in compliance with goals of the Joint Commission. Clin Chem. 2010;56:417–423. doi: 10.1373/clinchem.2009.135376. (Excluded, not a study)

- Plebani M. Errors in clinical laboratories or errors in laboratory medicine? Clin Chem Lab Med. 2006;44(6):750–759. doi: 10.1515/CCLM.2006.123. (Excluded, not a practice of interest)

- Poon EG, Kuperman GJ, Fiskio J, et al. Real-time notification of laboratory data requested by users through alphanumeric pagers. J Am Med Inform Assoc. 2002;9:217–222. doi: 10.1197/jamia.M1009. (Excluded, not a study)

- Saw S, Loh TP, Ang SBL, Yip JWL, Sethi SK. Meeting regulatory requirements by the use of cell phone text message notification with autoescalation and loop closure for reporting of critical laboratory results. Am J Clin Pathol. 2011;136:30–34. doi: 10.1309/AJCPUZ53XZWQFYIS. (Excluded, study quality criteria not met)

- Schiff GD. Introduction: Communicating critical test results. Jt Comm J Qual Patient Saf. 2005;31(2):63–65. 61. doi: 10.1016/s1553-7250(05)31009-9. (Excluded, not a study)

- Shabot MM, LoBue M, et al. Wireless clinical alerts for physiologic, laboratory and medication data. Proc AMIA Symp. 2000:789–793. doi: 10.1109/hicss.2000.926784. (Excluded, not an outcome of interest)

- Shahangian S, Stankovic AK, et al. Results of a survey of hospital coagulation laboratories in the United States, 2001. Arch Pathol Lab Med. 2005;129(1):47–60. doi: 10.5858/2005-129-47-ROASOH. (Excluded, not a practice of interest)

- Tate KE, Gardner RM, Scherting K. Nurses, pagers, and patient specific criteria: three keys to improve critical value reporting. Proc Annu Symp Comput Appl Med Care. 1995:164–168. (Excluded, not a study)

- Taylor PP. Use of handheld devices in critical care. Crit Care Nurs Clin North Am. 2005;17(1):45–50. x. doi: 10.1016/j.ccell.2004.09.006. (Excluded, not a study)

- Wagar EA, Stankovic AK, et al. Assessment monitoring of laboratory critical values: a College of American Pathologists Q-Tracks study of 180 institutions. Arch Pathol Lab Med. 2007;131(1):44–49. doi: 10.5858/2007-131-44-AMOLCV. (Excluded, not a practice of interest)

Unpublished

- Providence-Everett (2009). Unpublished network submission. (Excluded, study criteria not met)

APPENDIX D: Evidence Summary Tables – Automated Notification

| Bibliographic Information - Author(s) - Yr Published/Submitted - Publication - Author Affiliations - Funding | Study - Design - Facility/Setting - Time Period - Population/Sample - Comparator - Study bias | Practice - Description - Duration - Training - Staff/Other Resources - Cost | Outcome Measures - Description(s) - Recording method | Results/Findings - Type of Findings - Findings/Effect Size - Stat. Significance/Test(s) - Results/Conclusion Bias |

|---|---|---|---|---|

| Etchells E [1,3], Adhikari NKJ [1,2,3], Cheung C [1,3], Fowler R [1,2,3], Kiss A [3], Quan S [4], Sibbald W [1,2,3], Wong B [1,3] - Year: 2010 - Publication: Quality & Safety in Health Care - Affiliation: [1] Department of Medicine, University of Toronto, Toronto, Ontario, Canada. [2] Interdepartmental Division of Critical Care, University of Toronto, Toronto, Ontario, Canada. [3] Sunnybrook Health Sciences Centre, Toronto, Ontario, Canada. [4] University Health Network, Toronto, Ontario, Canada - Funding: New Age provided the system and services at a reduced cost; all other study costs funded by the University of Toronto Department of Medicine Quality Partners Program |

- Design: Randomized Controlled Study - Facility/Setting: Four general medicine clinical teaching units at Sunnybrook Health Sciences Centre, Toronto, Ontario – Canada. - Time period: 02/2006 – 05/2006 -Population/Sample: 165 critical values evaluated on 108 patients with full response time data. Note: Critical values for patients under care of ED or critical care physician excluded. Excluded critical values for troponin. - Comparator: Telephone call to the patient’s ward by laboratory technician - Study bias: If time of order was not documented, the time of administration of treatment was used to calculate response time. For pilot study, physicians did not consistently document times of their orders. |

-Description: Automated real-time paging system that sends critical laboratory values directly from laboratory information system to an alphanumeric pager carried by the responsible housestaff physician. - Duration: 4 months (02/2006 – 05/2006) - Training: Not reported except that they encouraged residents to document response times - Staff: Pager carried by senior resident on weekdays and call resident on nights and weekends, Study nurse reviewed written medical record. Laboratory technician verifies accuracy of test result and inputs into lab informational system (lab tech also telephones patient’s ward with critical lab value). 3 study physicians reviewed data for each critical value. - Other resources: Not reported - Cost: Not reported |

- Description: Time to respond (interval between the acceptance of the critical value into the laboratory information system to the writing of an order on the patient’s chart in response to the critical value). - Recording Method: Electronic patient record for laboratory results. Chart review by nurse for written medical record (medication and treatment handwritten orders) around the time of critical value. Study physicians reviewed data for each critical value. |

- Type of Findings: Comparison - Findings/Effect Size: Median time to respond Practice: 16 minutes (IQR 2–141 min) Comparator: 39.5 minutes (IQR 7–104.5 min) (p=0.33) ES = Not calculable from data provided - Statistical Significance/Test(s): Wilcoxon rank sum test, Hodges-Lehmann estimate of shift to calculate the median difference in response time, χ2, t tests - Results/conclusion biases: None noted. |

|

Quality Rating: 8 (Good) (10 point maximum) Effect Size Magnitude Rating: Substantial Relevance: Direct |

Study (3 pts maximum): 2 Potential study bias: sample selection methods may introduce a study bias that would affect results (when time of order was not documented, time of treatment was used to calculate response time) (−1) |

Practice (2 pts maximum): 2 | Outcome measures (2 pts. maximum): 2 |

Results/findings (3 pts maximum): 2 Data do not permit calculation of an effect size (−1) |

| Kuperman GJ [1,2]; Teich JM [1,2]; Tanasijevic MJ [2]; Ma’Luf N [2]; Rittenberg E [2]; Ashish Jha MA [2]; Fiskio J [1]; Winkelman J [2]; Bates DW [1,2] - Year: 1999 - Publication: Journal of the American Medical Informatics Association - Affiliations: [1] Partners HealthCare Systems, [2] Harvard Medical School - Funding: Partly from research grant (R01 - Agency for Health Care Policy and Research) |

- Design: Randomized Controlled Study - Facility/Setting: Brigham and Women’s Hospital; 720-bed tertiary-care academic medical center in Boston, MA - Time period: Medical: 12/1994 – 01/31/1995 Surgical: 09/01/1995 – 10/30/1995 - Population/Sample: 178 subjects (medical and surgical inpatients); 192 alerts (94 intervention, 98 controls); 4 laboratory tests with critical values and/or alert situations - Comparator: Critical values telephoned by lab technologists to patient floor (nursing staff). - Study bias: None noted |

- Description: Computer system to detect critical conditions and automatically notify the responsible physician via the hospital’s paging system. Physician identified from automated “coverage list” database that identifies primary physician for each patient at any given time. If alert not acknowledged after 15min, border of computer on patient’s floor turns red, nurse responds to alert; if after 30 min no acknowledgement, workstation in telecommunication beeps, phone operator reviews alert and calls floor. - Duration: 2 months at each service unit at different time periods (total 4 months). - Training: Not reported; outcomes assessed by trained reviewers. - Staff: Computer technicians, physician, nurses, unit secretary, telephone operator, reviewers and lab supervisor/manager; clinical alerting system, digital pager, computer workstation - Other resources: Not reported - Cost: Not reported |

- Description: Time to Treat (TTT) - Time interval from the filing of the alerting result to the ordering of appropriate treatment (other outcome of study: Time to Resolution - time interval from the filing of alerting result to the arrival time in the laboratory of a bedside test demonstrating the alerting condition was no longer present) - Recording Method: Occurrence log, Chart review |

- Type of Findings: Comparison - Findings/Effect Size: Time to treat: Practice median time: 1.0 hour (60 min) Comparator median time: 1.6 hours (96 min) (p=0.003); Practice mean, 4.1 vs. Comparator mean 4.6 hours, (p = 0.003) Physicians reviewed 65/94 intervention alerts (69%) – median time to treatment of 65 alerts: 0.5 hours. Nurses reviewed 7 alerts (7%) and 22 alerts (23%) reviewed by telecommunication staff who communicated them to floor. d = 0.434 (CI = 0.148–0.720) - Statistical Significance/Test(s): Wilcoxon rank sum statistic. - Results/conclusion biases: Favorable conclusions not supported; but instead are contradicted by reported findings. Authors note that differences are not significant but focus on direction of effect. |

|

Quality Rating: 7 (Fair) (10 point maximum) Effect Size Magnitude Rating: Substantial Relevance: Direct |

Study (3 pts maximum): 2 Study design: sample size too small and may not be representative of the results of the practice and may not be generalizable. (−1) |

Practice (2 pts maximum): 2 | Outcome measures (2 pts. maximum): 2 |

Results/findings (3 pts maximum): 1 Uncontrolled deviations: results reported not clearly attributable to practice being evaluated; conclusions not supported by work (−2) |

| Park H [1]*, Min WK [1], Lee W [1], Park H [2], Park CJ [1], Chi HS [1], Chun S [1] - Year: 2008 - Publication: Annals of Clinical & Laboratory Science. - Affiliations: [1] Asan Medical Center, University of Ulsan College of Medicine, Seoul, Korea; [2] Kangbuk Samsung Hospital, Sungkyunkwan University School of Medicine, Seoul, Korea; * Current affiliation: Catholic University of Korea, College of Medicine, Seoul, Korea - Funding: Korea Health Industry Dev Institute. |

- Design: Before-After - Facility/Setting: 2,200 bed tertiary care urban, academic medical center, Seoul Korea; ICU and general wards. - Time period: Two 12-month study periods: Pre: 01/01/2001 –12/31/2001 Post: 07/01/2005 – 06/30/2006 - Population/Sample: alerts for critical hyperkalemia: Pre: 121 alert calls (ICU: 56; general wards 65) Post: 96 alert calls (ICU: 31; general wards 65) - Comparator: Lab tech telephones nurses on inpatient floor to notify of patient CV. Nurse then informs physician of patient’s CV result. Call documented in lab log. - Study bias: None noted. |

- Description: Short text message service (SMS) system for notifying physicians of critical values by sending a message to their personal data assistant (PDA) phones. Text messages with patient information and test result are transmitted to the appropriate physician via PDA phones - Duration: 12 months (07/01/2005 – 06/30/2006) - Training: Not discussed. - Staff: Lab technicians, nurses, physicians - Other resources: Computer software and PDA phones for all physicians - Cost: Not reported. |

- Description: Time to receipt - Time interval in minutes from dispatching critical value result alert to acknowledgement by responsible caregiver (other outcome from study: Clinical response rate -the frequency of clinical responses divided by total # critical value alerts) - Recording Method: Occurrence log |

- Type of Findings: Pretest-Posttest - Findings/Effect Size: Time to receipt Pre: Total: Median = 213 minutes; Mean = 343.3 (sd = 369.6) n = 121 ICU: Median = 193 minutes; Mean = 306.9 (sd = 336.2) n = 56 General wards: Median = 249 minutes; Mean = 374.7 (sd = 396.1) n = 65 Post: Total: Median = 74.5 minutes; Mean = 203.2 (sd = 294.1) n = 96 ICU: Median = 93 minutes; Mean = 270.6 (sd = 366.7) n = 31 General wards: Median = 63 minutes; Mean = 171.1 (sd = 249.3) n = 65 d = 0.414 (CI = 0.143–0.685) - Statistical Significance/Test(s): Mann-Whitney U-test showed significant difference between clinical response times in pre and post (p<0.001). - Results/conclusion biases: Practices compared based on data collected during notably different time periods (2001 v. 2005) |

|

Quality Rating: 7 (Fair) (10 point maximum) Effect Size Magnitude Rating: Substantial Relevance: Direct |

Study (3 pts maximum): 1 Sample size too small: may not be representative of the results of the practice and may not be generalizable. (−1). Setting sufficiently distinctive and results may not be generalizable to other settings. (−1) |

Practice (2 pts maximum): 2 | Outcome measures (2 pts. maximum): 2 |

Results/findings (3 pts maximum): 2 Appropriateness of statistical analysis: Compares two practices and their estimates based on data collected during notably different time periods (2001 v. 2005) (−1) |

| Piva, E [1], Sciacovelli, L [1], Zaninotto, M [1], Laposata, M [2], Plebani, M [1] - Year: 2009 - Publication: American Journal of Clinical Pathology - Affiliations: [1] Department of Laboratory Medicine, Padua University School of Medicine, Padua, Italy; [2] Vanderbilt University Hospital, Nashville TN - Funding: Self-funded |

- Design: Before-After - Facility/Setting: Padua Hospital, teaching hospital and research center, >300 bed inpatient hospital; Annual test volume: >1 million. Padua, Italy - Time period: Pre: 01/2007–02/2007 (first 2 months of study) Study period: 01/2007–12/2007 (1 year) Post: 01/01/2008 – 02/28/08 (2 months) - Population/Sample: Study period: 7,320 CVs (4,392 routine testing; 2,928 emergency testing) 82% found in inpatients. Pre and post breakdown: Not reported - Comparator: Telephone CV notification system - Study bias: Did not exclude emergency critical values data |

- Description: Automated alerting system which involves the use of a computerized database (i.e., HIS; LIS) of test results. Once critical value identified and validated by clinical pathologist in charge, transmission of database creates an e-mail message for automated notification which generates an SMS (text) to cell phone of referring physician (clinician on duty) and at the department level (an alert message flashes on monitors until physician or nurse in charge of notification confirms message is received (flashing alert stops after 60 minutes). - Duration: 2 months (01/01/2008 – 02/28/08) - Training: Not discussed - Staff: physicians, clinical pathologist, nurses, laboratory - Other Resources: Not reported - Cost: Not reported |

- Description: (1) Time to receipt: - Time from detection of CV in minutes to acknowledgement by responsible clinician (2) Timeliness of reporting - % CV results reported within 1 hr:; # unsuccessful notifications w/in 1 hr/total # of CVs - Recording method: Medical reports and HCIS (health care information system) |

- Type of Findings: Pretest-Posttest - Findings/Effect Size: (1) Time to receipt - Pre: Average 30 min; Post: Average 11 min (2) % Reported within 1 hour Pre: >50% unsuccessful; Post: 10.9% unsuccessful ES = Not calculable from data provided - Stat. Significance/Test(s): None reported (used MedCalc software) - Results/Conclusion Bias: No post sample data (numerator or denominator); sample size only for pre-practice period. |

|

Quality Rating: 7 (Fair) (10 point maximum) Effect Size Magnitude Rating: Substantial Relevance: Direct |

Study (3 pts maximum): 2 Potential study bias: sample selection (includes emergency patients CV) may introduce a study bias that would affect results (−1) |

Practice (2 pts maximum): 2 | Outcome measures (2 pts. maximum): 2 |

Results/findings (3 pts maximum): 1 Appropriateness of statistical analysis: Does not provide data sufficient to allow/verify calculation of an effect size (−1) Sample sufficiency: Number of pre/post sample not reported (−2) |

| Saw S [1], Loh TP [1], Ang SBL [2], Yip JWL [3], Sethi SK [1] - Year: 2011 - Publication: American Journal of Clinical Pathology - Affiliation: [1] Department of Laboratory Medicine, National University Hospital, Singapore. [2] Department of Anaesthesia, National University Hospital, Singapore. [3] Department of Cardiology, National University Hospital, Singapore - Funding: Self-funded |

- Design: Before-After - Facility/Setting: National University Hospital, Singapore; 1,000-bed tertiary teaching hospital with a full suite of clinical services; laboratory receives in excess of 4,000 clinical samples daily - Time period: Pre: 03/2008 – 05/2008 Pilot: 06/2008 – 08/2008 Implementation: 04/2009 – 03/2010 Post: 03/2010 – 05/2010 -Population/Sample: approximately 4,692 critical laboratory results in each test period - Comparator: Manual call center system - Study bias: None |

-Description: Fully automated short message system (critical reportable results – CRR) using information technology engine that automatically alerts physicians to critical values. The CRR engine software was loaded onto the health care messaging system (HMS) –an existing platform used by call center to maintain and retrieve departmental physician rosters. Physician is required to reply within 10 minutes of critical value receipt, otherwise alert is sent to more senior physician from roster (and/or trigger manual intervention from call center). - Duration: 12 months (04/2009 – 03/2010) - Training: Not reported - Staff/Other resources: Steering committee with representatives from nursing professionals, various clinical divisional leaders, laboratory professionals, hospital administrators, information technology department, the call center, and a local private software development partner - Cost: Not reported |

- Description: Time to receipt (for pre and post: includes time to validate critical result); time to acknowledge Pre: acknowledgment by a person from ordering location and the reporting was considered complete. Post: acknowledgement by physician - Recording Method: All CRR-HMS transactions are electronically captured, and an audit trail traceable to the sender and receiver is recorded. |

- Type of Findings: Pretest-Posttest - Findings/Effect Size: Time to receipt/respond Pre: Median = 7.3 minutes (96.8% of critical results communicated within 1 hour); Mean = 14.6 minutes Post: Median = 11 minutes (92.9% of critical results acknowledged within 1 hour); Mean = 18.3 minutes Excluding time taken by laboratory to validate critical values at post: Median = 2.0 minutes; Mean = 4.7 minutes (validation time not available for pre) d = −0.462 (CI = −0.571 to −0.353) OR = 0.433 (CI = 0.355 – 0.527) - Statistical Significance/Test(s): Not discussed - Results/conclusion biases: CRR response includes delay in escalation logic; manual response time stopped after any staff acknowledged critical result. Both pre and post estimates include time to verify result |

|

Quality Rating: 6 (Fair - exclude) (10 point maximum) Effect Size Magnitude Rating: N/A Relevance: N/A Excluded due to multiple confounds and noticeable measurement bias |

Study (3 pts maximum): 3 | Practice (2 pts maximum): 2 |

Outcome measures (2 pts. maximum): 0 Non-comparable measures |

Results/findings (3 pts maximum): 1 Results not attributable to practice
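The effect sizes reported in the tables above can be reproduced from the summary statistics with standard formulas. The following is a minimal sketch, not necessarily the exact procedure used in this review: it assumes a pooled-standard-deviation standardized mean difference with a large-sample 95% confidence interval, and the logit method for converting an odds ratio to d. The function names are hypothetical and chosen for illustration only. Under those assumptions, the Park et al. total-sample means reproduce d = 0.414 (CI = 0.143–0.685), and the Saw et al. one-hour proportions reproduce OR = 0.433 and d = −0.462.

```python
import math


def cohens_d(mean_comp, sd_comp, n_comp, mean_prac, sd_prac, n_prac, z=1.96):
    """Standardized mean difference using a pooled SD, with an approximate CI.

    Computed as (comparator - practice), so a positive d favors the practice
    when a smaller outcome (e.g., shorter time to receipt) is better.
    """
    pooled_var = ((n_comp - 1) * sd_comp ** 2 + (n_prac - 1) * sd_prac ** 2) / (
        n_comp + n_prac - 2
    )
    d = (mean_comp - mean_prac) / math.sqrt(pooled_var)
    # Large-sample standard error of d
    se = math.sqrt(
        (n_comp + n_prac) / (n_comp * n_prac) + d ** 2 / (2 * (n_comp + n_prac))
    )
    return d, d - z * se, d + z * se


def odds_ratio(p_prac, p_comp):
    """Odds of a timely report under the practice relative to the comparator."""
    return (p_prac / (1 - p_prac)) / (p_comp / (1 - p_comp))


def odds_ratio_to_d(or_value):
    """Logit-method conversion of an odds ratio to a standardized difference."""
    return math.log(or_value) * math.sqrt(3) / math.pi


# Park et al.: time to receipt in minutes, total sample (pre = comparator, post = practice)
park_d, park_lo, park_hi = cohens_d(343.3, 369.6, 121, 203.2, 294.1, 96)

# Saw et al.: proportion of critical results communicated within 1 hour (post vs. pre)
saw_or = odds_ratio(0.929, 0.968)
saw_d = odds_ratio_to_d(saw_or)

print(f"Park: d = {park_d:.3f} (CI = {park_lo:.3f} to {park_hi:.3f})")
print(f"Saw:  OR = {saw_or:.3f}, d = {saw_d:.3f}")
```

Expressing both continuous outcomes (times) and dichotomous outcomes (percentage reported within a time window) on the same d scale is what allows studies with different outcome measures to be summarized together.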

APPENDIX E: Evidence Summary Tables – Call Centers

| Bibliographic Information - Author(s) - Yr Published/Submitted - Publication - Author Affiliations - Funding | Study - Design - Facility/Setting - Time Period - Population/Sample - Comparator - Study bias | Practice - Description - Duration - Training - Staff/Other Resources - Cost | Outcome Measures - Description(s) - Recording method | Results/Findings - Type of Findings - Findings/Effect Size - Stat. Significance/Test(s) - Results/Conclusion Bias |

|---|---|---|---|---|

| Geisinger Medical Center, Danville, PA, USA - Year: 2009 - LMBP Network Submission - Funding: Self-funded |

- Design: Before-After - Facility/Setting: Geisinger Medical Center, Danville, PA; teaching hospital with > 300 beds; >1 million tests/yr. - Time period: 1/2006--6/09 Pre: 2006 (12 mos.) Post: 1–6/2009 (6 mos.) - Population/Sample: Avg. 70 CV calls/day to inpatient units and ER. All CVs excluding Anatomic Pathology reported for GMC testing population; Post: 12,306 CV calls; Pre: sample size not reported. - Comparator: Passive system used by bench technologists using a written call log with no readback verification - Study bias: None noted |

- Description: Call center operates 24 hrs./7 days/wk. and is staffed by 21 FTEs. A centralized Client Service Contact Center with an integrated software application make critical value calls directly to a licensed practitioner who can take action on critical values. The Call Center must also verify and document readback of the critical value. The time interval is measured from the identification of the verified critical value to the receipt by the responsible licensed care giver. - Duration: 1/07 - practice ongoing - Training: Education materials provided - Staff: Call Center staff - Other resources: None reported - Cost: Not reported |

- Description: (1) Timeliness of reporting – Pre: written call log by bench technologists with no readback verification of critical values being called to someone: not necessarily a care provider. Post: % CV results reported within 30 min interval from identification of the verified critical result to acknowledgement by responsible licensed caregiver. - Recording Method: Vendor occurrence/ monitoring; No reliable method of tracking comparator rates (2006) as no monitoring system was in place to ensure that the results were given to care providers nor was there documentation of the readback of results. |

- Type of Findings: Pretest-Posttest - Findings/Effect Size: (1) % CV results reported within 30 min to responsible licensed caregiver Pre (2006): 50% Post (2009): 95.5% d = 1.684 (CI = 1.635–1.733) OR = 21.2 (CI = 19.4 – 23.2) - Statistical Significance/Test(s): Not reported - Results/conclusion biases: Data collected during notably different time periods (2006 and 2009); data not provided to support findings or statistical analysis |

|

Quality Rating: 6 (Fair) (10 point maximum) Effect Size Magnitude Rating: Substantial Relevance: Direct Include: expected downward bias from different measures not apparent |

Study (3 pts maximum): 3 | Practice (2 pts maximum): 2 |

Outcome measures (2 pts. maximum): 0 Different measures used to estimate rates |

Results/findings (3 pts maximum): 1; - Appropriateness of statistical analysis: Compares two practices with estimates based on data from notably different time periods - Data insufficient to allow/verify calculation of an effect size (no sample sizes reported) |

| Providence Regional Medical Center, Everett, WA, USA - Year: 2009 - LMBP Network Submission - Funding: Self-funded |

- Design: Observational - Facility/Setting: Providence Regional Medical Center in Everett, WA; > 300 beds; >1 million tests/yr. - Time period: 10/08–6/09 (3 consecutive calendar quarters) 2008 4th qtr: 10/08–12/08; 2009 1st qtr: 1/09–3/09; 2009 2nd qtr: 3/09–6/09 - Population/Sample: Call center: 108 outpatient-only hospital CV calls; Comparator: 1,162 inpatient-only hospital CV calls - Comparator: Inpatient CV test results communicated by laboratory techs - Study bias: |

- Description: Critical values results communicated by client services call center to designated physician or clinic staff. - Duration: 10/08–6/09 - Training: Client services staff trained on call center management software - Staff: Not reported - Other resources: Not reported - Cost: Not reported |

- Description: Timeliness of reporting - % CV results reported within 15 min. - Recording Method: Occurrence log |

- Type of Findings: Comparison: Call Center (outpatient) v. Techs (inpatient) - Findings/Effect Size: Timeliness within 15 min: 2008 4th qtr: Call Center: 97% (n=29); Techs: 99.8% (n=427). 2009 1st qtr: Call Center: 97% (n=32); Techs: 98% (n=329). 2009 2nd qtr: Call Center: 60% (n=47); Techs: 99% (n=406). ES = Not calculable from the data provided - Statistical Significance/Test(s): Not reported - Results/conclusion biases: Sample selection may explain unfavorable direction of results |

|

Quality Rating: 3 (Poor) (10 point maximum) Effect Size Magnitude Rating: N/A Relevance: Direct |

Study (3 pts maximum): 0; - Samples for the practices are sufficiently different to clearly nullify generalizability of the results – small number of outpatient only CV calls for call center vs. large number of inpatient only CV calls for comparator | Practice (2 pts maximum): 1; - An important aspect of implementation not well-described; staffing not reported | Outcome measures (2 pts. maximum): 2 | Results/findings (3 pts maximum): 0; - Sample sufficiency: Statistical power is not discussed AND the sample is likely too small to allow a robust estimate of the impact of a practice - Appropriateness of statistical analysis: Does not provide data sufficient to allow/verify calculation of an effect size |

| Saxena S (1,2); Kempf R (2), Wilcox S (2), Shulman IA (4), Wong L (2), Cunningham G (2), Vega E, Hall S (2) - Year: 2005 - Publication: Joint Comm J Qual Patient Saf. - Affiliations: [1] Keck School of Medicine, University Southern California. [2] Los Angeles County + University of Southern California Healthcare Network. - Funding: Self-funded |

- Design: Cross-sectional - Facility/Setting: LA County and Southern Calif Medical Center. Urban, acute care teaching hospital, >700 beds. - Time period: Pilot: 04/2003 Implementation: 11/2003 – 05/2004 Post: 12/2004 - Population/Sample: All CV notifications Pre: Not reported Post: between 334–700; approximately 86% inpatients; 14% outpatients - Comparator: Direct physician notification of critical laboratory values. - Study bias: Both practice and comparator include call center practice |

- Description: Centralized and standardized user-friendly system for notification of critical laboratory values (CLVs). Lab tech calls customer service center (CSC) staff, who directly communicate CLVs to the physician. - Duration: 8 months (5/2004 – 12/2004) - Training: 10 hrs. training CSC staff to use system - Staff: Interdisciplinary team with lab services director (network’s associate patient safety officer) as team lead; medical center lab director, assistant chief administrative laboratory manager, lab quality improvement coordinator, information tech representative, customer service center supervisor, and medical director of ambulatory services. - Other resources: Not reported - Cost: 230 hours IT time over 5-month period for development |

- Description: (1) Time to receipt of CV result in minutes (2) Timeliness of reporting - % CV results reported within 1 hour (3) Timeliness of reporting – % CV results reported within 15 min - Recording Method: Progress reports to the network’s quality improvement committee (QIC), chaired by chief medical officer |

- Type of Findings: Pretest-Posttest - Findings/Effect Size: (1) Monthly average CV lab test notification time: Pre: 38 minutes Post: 10 minutes (2) Noncomparative: For May 2004 – December 2004, almost all (99%–100%) notifications were completed within one hour (3) Noncomparative: For May 2004 – December 2004, 79%–83% of notifications were completed within 15 minutes. ES = Not calculable from the data provided - Statistical Significance/Test(s): Not discussed - Results/conclusion biases: No data sources provided for outcomes reported; no comparison period sample size reported; findings only compare last month before implementation with last month of measurement. |

|

Quality Rating: 7 (Fair) (10 point maximum) Effect Size Magnitude Rating: Substantial Relevance: Direct |

Study (3 pts maximum): 2; - Potential study bias: sample selection (includes emergency patient CVs) may introduce a study bias that would affect results (−1) |

Practice (2 pts maximum): 2 | Outcome measures (2 pts. maximum): 2 |

Results/findings (3 pts maximum): 1; - Sample sufficiency: Size of pre sample not reported (−2) |

| - Unpublished Study A - Year: 2008 - LMBP Network - Eastern USA - Funding: Self-funded |

- Design: Before-after - Facility/Setting: Large urban academic medical center in Mid-Atlantic U.S. with more than 600 beds; annually > 32,000 inpatients; 300,000 outpatients - Time period: Approximately 2 mos.; 1 mo. pre-call center (3/26–4/27/08) and 1 mo. post-call center (4/28–5/28/08) - Population/Sample: No sample size reported. Approximately 200 CV calls/day, likely inpatient only. Includes all CV test results within the time period, but not all patients, since the call center was not implemented in all areas (no details provided) - Comparator: Computer call queue software tracks time to report CVs to licensed caregivers without using call center. No other information on pre-call center practice; may have involved nursing staff receiving CV test results from lab and calling physicians. - Study bias: None |

- Description: Call center operates 24 hrs./7 days/wk with a 1 hour target threshold for all CV calls. Lab-certified CV test results go into call center computer queue for its staff to call licensed caregivers. Call Center staff asks caregiver to read-back the results and documents the read-back in the computer system. Utilizes escalation procedure to identify patient caregiver. - Duration: 1 month (Practice initiated on 4/28/08) - Training: Not discussed - Staff: Staffed by 1–3 medical technologists per shift - Other resources: Not noted - Cost: Not reported |

- Description: (1) Timeliness of reporting - % daily CV results reported within 1 hour (2) Time to receipt of result - Average daily time (min.) per CV test result notification (i.e., to report to licensed caregiver) - Recording Method: Person making call asks caregiver to read-back results. The read-back is recorded in the computer system, which tracks time from when result certified until caregiver notified (CV “TAT”) |

- Type of Findings: Pretest-Posttest - Findings/Effect Size: (1) % CV results reported within 1 hour: Pre: 76.7% daily average (SD: 13.74; Variance: 188.69; Range: 37.5 – 95.3% daily) Post: 92.1% daily average (SD: 5.35; Variance: 28.62; Range: 71.6 – 99% daily). (2) Noncomparative: Pre-Call Center only (3/26–4/21/08): Avg. daily CV notification time: 46.5 minutes (SD: 25.53; Range: 21 – 157); removing the single 157 min. outlier: 42.1 minutes (SD 12.25) d = 0.697 (CI = 0.149–1.543) OR = 14.1 (CI = 5.2 – 38.4) - Statistical Significance/Test(s): Not reported - Results/conclusion biases: No comparisons available on differences between areas where call center was and was not implemented. |

|

Quality Rating: 7 (Fair) (10 point maximum) Effect Size Magnitude Rating: Substantial Relevance: Direct |

Study (3 pts maximum): 2; - Study sample may not be representative of practice; call center not implemented hospital-wide; no information on population |

Practice (2 pts maximum): 2 | Outcome measures (2 pts. maximum): 2 |

Results/findings (3 pts maximum): 1; - Sample sufficiency: Measurement period may be insufficient to allow robust estimate of impact - Appropriateness of statistical analysis: Does not provide data sufficient to allow/verify calculation of an effect size (sample size) |

| - Unpublished - Call Center: Study B - Year: 2009 - LMBP Network Submission - Western USA - Funding: Self-funded |

- Design: Time-series - Facility/Setting: Western USA, Large Health Maintenance Organization (HMO) Laboratory; > 300 beds; >1 million tests/yr. - Time period: Pre: June 2004; Post: June–July 2009 - Population/Sample: A sample of 500–750 CV test results/mo; study population was CVs for routine outpatient laboratory work. Inpatient or STAT excluded. No sample sizes reported. - Comparator: Original CV protocol: Regional Lab notified collection laboratory, which then notified provider. - Study bias: None |

- Description: Call center operates 24 hrs./7 days/wk. 24/7 Adult & Advice Call Center that was already staffed with Advice RNs and Call Center MDs who were rotating Emergency Physicians. Two tracks were created, one for INR CVs and one for all other lab CVs - Duration: 3/09 - ongoing - Training: Not discussed - Staff: Staffing level unknown; skilled nursing staff call center; lab assistants occasionally assist in notification. - Other Resources: Not reported - Cost: Not reported |

Outcome Measure: Timeliness of reporting – % CV results reported within 1 hour - Recording Method: Internal quality control instrument; audit of electronic medical record |

- Type of Findings: Time series - Findings/Effect Size: Timeliness of reporting (within 1 hr) N = 550–750 CVs monthly (2009) Pre (June 2004): 49.2% (~320) CVs reported within 1 hour (# of calls estimated based upon range in 2009) Time 2 (June–July 2009): 100% of approximately 1,300 CVs reported within an hour d = 3.826 (CI = 2.9874 – 4.778) OR = 1031.5 (CI = 183.5 – 5799.2) - Statistical Significance/Test(s): Not reported - Results/conclusion biases: Actual Ns not reported |

|

Quality Rating: 8 (Good) (10 point maximum) Effect Size Magnitude Rating: Substantial Relevance: Direct |

Study (3 pts maximum): 3 | Practice (2 pts maximum): 2 | Outcome measures (2 pts. maximum): 2 |

Results/findings (3 pts maximum): 1; - Number of subjects not reported; time periods notably different |

| University of Maryland - Year: 2008 - Publication: Unpublished - Affiliations: - Funding: Self-funded |

- Design: Longitudinal - Facility/Setting: U Maryland Medical Center. Urban, acute care teaching hospital, >700 beds. - Time period: Pre: 3/28/08 – 4/27/08 Post: 4/28/08 – 5/14/08 (two days omitted from calculations as call center was not staffed) - Population/Sample: All CV notifications Pre: Approximately 6,600 Post: Approximately 3,000 - Comparator: Direct physician notification of critical laboratory values. - Study bias: |

- Description: When a critical result is certified, it goes into a call queue, which goes to the call center. - The information is color-coded on the computer screen of the person making the calls, depending on how long the result has been available: results coded yellow have been available (and waiting to be called) for less than 30 minutes. (All results start in yellow in the system.); results coded red have been available (and waiting to be called) for more than 30 minutes. - Duration: 48 days (3/28/08 – 5/14/08) - Training: 10 hrs. training CSC staff to use system - Staff: The call center operates 24 hours per day, 7 days a week. The call center is not yet fully staffed. When fully staffed, it will have 2 med techs for each day shift, and one for off-shifts and a total of 7 staff - Other resources: - Cost: |

- Description: Timeliness of reporting - % CV results reported within 1 hour to licensed caregiver. The first call is to the ordering physician. If that person cannot be reached, the caller phones the floor where the patient is located and asks to speak to the person taking care of the patient at that time. - Recording Method: The person making the call asks the caregiver to read back the results. The read-back (including who was called and when) is then documented in the computer system. |

- Type of Findings: Pretest-Posttest - Findings/Effect Size: Percentage of calls within 1 hour: Pre: 76.7 (SD = 13.7) Post: 95.7 (SD = 2.1) d = 1.665 (CI = 1.616–1.714) OR = 20.5 (CI = 18.7 – 22.4) - Statistical Significance/Test(s): None - Results/conclusion biases: Short measurement period, but sufficient as critical values were not rare events. |

|

Quality Rating: 8 (10 point maximum) Effect Size Magnitude Rating: Substantial Relevance: Direct |

Study (3 pts maximum): 2; - Potential study bias: sample selection (includes emergency patient CVs) may introduce a study bias that would affect results (−1) |

Practice (2 pts maximum): 2 | Outcome measures (2 pts. maximum): 2 |

Results/findings (3 pts maximum): 2; - Number of subjects not reported
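
Several rows of the evidence table report both an odds ratio and a standardized difference d for the same pre/post percentages. The review does not state how one was derived from the other, so the following is only a sketch under an assumption: that the odds ratio is formed from the two proportions and converted to d with the standard logit conversion, d = ln(OR) · √3 / π. The function names are illustrative and not from the source. Under that assumption, the 50% to 95.5% row gives values matching the reported OR = 21.2 and d = 1.684.

```python
import math


def odds_ratio(p_pre: float, p_post: float) -> float:
    """Odds ratio of 'reported on time' after vs. before the practice."""
    odds_pre = p_pre / (1.0 - p_pre)
    odds_post = p_post / (1.0 - p_post)
    return odds_post / odds_pre


def or_to_d(or_value: float) -> float:
    """Logit conversion from an odds ratio to a standardized difference d.

    Assumption: the review does not name its conversion; this is the
    commonly used d = ln(OR) * sqrt(3) / pi.
    """
    return math.log(or_value) * math.sqrt(3.0) / math.pi


# Pre (2006): 50% and Post (2009): 95.5% within 30 min, as listed in the table.
or_30_min = odds_ratio(0.50, 0.955)
d_30_min = or_to_d(or_30_min)

# The time-series row reports OR = 1031.5 alongside d = 3.826.
d_time_series = or_to_d(1031.5)

print(f"OR = {or_30_min:.1f}, d = {d_30_min:.3f}")
print(f"d from OR 1031.5 = {d_time_series:.3f}")
```

The conversion cannot be applied directly to a row with a 100% post rate (the odds are undefined), so any such row would need a continuity correction that the source does not describe.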

Footnotes

Laboratory Medicine Best Practices Workgroup member

See Appendix A for the LMBP Patient Specimen Identification Expert Panel Members. LMBP Workgroup members are listed at: https://www.futurelabmedicine.org/about/lmbp_workgroup/

Random-effects model assumes there is no common population effect size for the included studies and the studies’ effect size variation follows a distribution with the studies representing a random sample. This is in contrast to the fixed-effects model which assumes a single population effect size for all studies and that observed differences reflect random variation.
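
To make the contrast between the two models concrete, the sketch below pools the four call center odds ratios listed in the evidence table, first with fixed-effect (inverse-variance) weights and then with random-effects weights. Several things here are assumptions rather than the review's documented method: that these four rows are the ones pooled, that standard errors can be recovered from the reported 95% confidence intervals on the log scale, and that the between-study variance is estimated with the DerSimonian-Laird method, which the source does not name. Under these assumptions the random-effects estimate lands close to the average call center odds ratio reported in the abstract.

```python
import math

# (OR, lower 95% CI, upper 95% CI) for the four call center rows with an OR.
studies = [
    (21.2, 19.4, 23.2),
    (14.1, 5.2, 38.4),
    (1031.5, 183.5, 5799.2),
    (20.5, 18.7, 22.4),
]


def log_or_and_variance(or_value, lower, upper, z=1.96):
    """Recover ln(OR) and its variance from a reported 95% CI (assumed symmetric on the log scale)."""
    se = (math.log(upper) - math.log(lower)) / (2.0 * z)
    return math.log(or_value), se ** 2


def pool(effects, variances, tau2=0.0):
    """Inverse-variance pooled mean and standard error; tau2 = 0 is the fixed-effect model."""
    weights = [1.0 / (v + tau2) for v in variances]
    total = sum(weights)
    mean = sum(w * y for w, y in zip(weights, effects)) / total
    return mean, math.sqrt(1.0 / total)


def dersimonian_laird_tau2(effects, variances):
    """Method-of-moments estimate of the between-study variance."""
    weights = [1.0 / v for v in variances]
    fixed_mean, _ = pool(effects, variances)
    q = sum(w * (y - fixed_mean) ** 2 for w, y in zip(weights, effects))
    c = sum(weights) - sum(w * w for w in weights) / sum(weights)
    return max(0.0, (q - (len(effects) - 1)) / c)


effects, variances = zip(*(log_or_and_variance(*s) for s in studies))
tau2 = dersimonian_laird_tau2(effects, variances)

fixed_log_or, fixed_se = pool(effects, variances)
random_log_or, random_se = pool(effects, variances, tau2)

fixed_or = math.exp(fixed_log_or)
random_or = math.exp(random_log_or)
random_ci = (
    math.exp(random_log_or - 1.96 * random_se),
    math.exp(random_log_or + 1.96 * random_se),
)

print(f"fixed-effect OR   = {fixed_or:.1f}")
print(f"random-effects OR = {random_or:.1f} "
      f"(95% CI {random_ci[0]:.1f} to {random_ci[1]:.1f}), tau^2 = {tau2:.3f}")
```

Because the random-effects weights add the same between-study variance to every study, the two large, precise studies dominate less than they do under the fixed-effect model, and the pooled confidence interval widens accordingly.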

Human Subjects Protection