Abstract

Peer-reviewed publications are one measure of scientific productivity. From a project, program, or institutional perspective, publication tracking provides the quantitative data necessary to guide the prudent stewardship of federal, foundation, and institutional investments by identifying the scientific return for the types of support provided. In this article, the authors describe the Vanderbilt Institute for Clinical and Translational Research’s (VICTR’s) development and implementation of a semi-automated process through which publications are automatically detected in PubMed and adjudicated using a “just-in-time” workflow by a known pool of researchers (from Vanderbilt University School of Medicine and Meharry Medical College) who receive support from Vanderbilt’s Clinical and Translational Science Award. Since implementation, the authors have: (1) seen a marked increase in the number of publications citing VICTR support; (2) captured at a more granular level the relationship between specific resources/services and scientific output; (3) increased awareness of VICTR’s scientific portfolio; and (4) increased efficiency in complying with annual National Institutes of Health progress reports. They present the methodological framework and workflow, measures of impact for the first 30 months, and a set of practical lessons learned to inform others considering a systems-based approach for resource and publication tracking. They learned that contacting multiple authors from a single publication can increase the accuracy of the resource attribution process in the case of multidisciplinary scientific projects. They also found that combining positive (e.g., congratulatory e-mails) and negative (e.g., not allowing future resource requests until adjudication is complete) triggers can increase compliance with publication attribution requests.

When you can measure what you are speaking about, and express it in numbers, you know something about it; but when you cannot measure it, when you cannot express it in numbers, your knowledge is of a meagre and unsatisfactory kind.1

In this quote, Lord Kelvin argues for the importance of objective measurement. This concept has become a fundamental principle of management that applies in the commercial, non-profit, and even academic domains.

Within academia, publication tracking provides one objective measure of scientific quality and productivity.2,3 It also allows institutional leaders to evaluate the overall scientific portfolio of a specific program or the institution as a whole. Metrics such as publication frequency, total number of articles, average number of citations per article, citation rates, number of publications in top percentiles, measures of interdisciplinary nature and specialization, number of publications with particular keywords, types of journals, impact factor of journals, number of citations for combinations of funding sources, multi-institutional co-authorship, and other characteristics can be captured or calculated and linked with sources of support and funding.4 Within information science, the study of bibliometrics and author disambiguation can produce sophisticated, quantitative citation or content analysis of journal articles.5–8

All of these metrics are particularly useful for evaluation purposes when institutions are faced with resources that they must equitably distribute to the entire research community, such as those provided by the National Institutes of Health (NIH) through the Clinical and Translational Science Award (CTSA) program.9–13 Publication tracking provides institutional leaders with quantifiable metrics to ensure the prudent stewardship of federal, foundation, and institutional investments by allowing them to identify the greatest scientific return for various types of support. Institutions often resort to ad hoc surveys tied to grant reporting cycles to identify the publications that arose from particular funding sources.14 However, this method may not be exhaustive, and some publications or pertinent information may be missed due to imprecise recall by publication authors.

Searches in NIH databases such as the Research Portfolio Online Reporting Tools (RePORTER)15 and PubMed16 can provide coarse details about publications and their supporting grants. However, data from these systems indicate only that a grant supported a publication, not how or in what capacity it did so. That granular information is necessary for managing large-scale, service-oriented portfolios. Thus, in 2010, we developed a straightforward yet dynamic methodology for identifying recently published journal articles by a known pool of researchers receiving support from the CTSA program and attributing those articles to the support received.

In this article, we describe the methodological framework and workflow that allows researchers to attribute a journal article to specific institutional resources at or near the time of publication. Additionally, we outline the measures of impact used in this new automated workflow for citing publications over the first 30 months of its operation. Finally, we present a set of practical lessons learned to inform other institutions or programs seeking to employ a systems-based approach for resource tracking and the evaluation of scientific portfolios.

A Pilot Funding Model in Vanderbilt University’s CTSA Program

For a grant application to NIH or to another federal or non-profit agency to receive serious consideration, the applicants generally must provide substantial preliminary data.17 Yet funding for the pilot studies that generate such data is often slow to obtain and sometimes unavailable. The Vanderbilt Institute for Clinical and Translational Research (VICTR) was created in 2006 in part to stimulate new research ideas and to provide a home for researchers who want to plan and conduct scientific studies.18 To streamline an important component of translational science, namely moving swiftly from hypothesis to proof of concept and then to full-scale investigation, we created within VICTR an openly accessible, tiered model to support pilot funding initiatives. This model allows researchers to apply at any time, and for any level of support, for pilot funding; for the research infrastructure services needed to generate preliminary data; or for small projects that do not fit the scope of the traditional “R” award process.

Since October 2007, more than 1,700 pilot and supplemental awards for hypothesis-driven research have been granted to clinical and translational investigators. VICTR pilot funding is available to all faculty members, schools, departments, and disciplines across Vanderbilt University School of Medicine and Meharry Medical College (931 researchers from 217 departments and disciplines).

The VICTR Portfolio Management System

All VICTR pilot funding requests are created and submitted online through a single portal, StarBRITE.19 The application process starts with a “preliminary information” section, in which the principal investigator summarizes her study and provides coarse detail about the nature of the support she is requesting (e.g., a letter of support for a grant submission, resources to be funded by VICTR, or sponsor-reimbursed use of VICTR resource infrastructure). Based on the information entered in this first section, the system algorithmically narrows the options that appear in the next section, “pick resources,” presenting a list of resources, drawn from a pool of more than 250 items, tailored to the researcher’s specifications. Researchers can select any items from the list; each item includes selection instructions (e.g., quantity, rationale) and its associated cost or value. Selected items appear in a “shopping cart,” which displays the aggregate cost/value and can be reviewed by the research team.

The total amount of VICTR funding requested determines the review path and the level of scientific justification required. Vouchers (VICTR funding requests totaling less than $2,000) require minimal scientific justification and are typically reviewed administratively within 48 hours, with retrospective full scientific oversight. Expedited requests, totaling between $2,000 and $10,000, require a five-page NIH-style scientific proposal, relevant ancillary budget documents, and a researcher biosketch; they are pre-reviewed by VICTR staff and reviewed by at least one member of the VICTR Scientific Review Committee. Requests exceeding $10,000 require documentation similar to that for expedited requests but always undergo full Scientific Review Committee review and deliberation. In addition, research teams must specify the expected outcomes (e.g., publication, pilot data for a grant submission) and other information important for assessing the portfolio (e.g., research type and area, using categories similar to those in NIH reporting lists; phase of study).
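The tiered routing described above amounts to a threshold rule applied to the shopping-cart total. A minimal Python sketch follows, assuming the cart is a mapping from item names to dollar values; the names `ReviewPath`, `cart_total`, and `review_path` and the example cart items are hypothetical. Because the text does not say how a request of exactly $2,000 or $10,000 is routed, the boundary handling is also an assumption.

```python
from enum import Enum
from typing import Mapping

# Dollar thresholds from the text. Treating exactly $2,000 and exactly
# $10,000 as "expedited" is an assumption; the source gives only the ranges.
VOUCHER_LIMIT = 2_000
EXPEDITED_LIMIT = 10_000


class ReviewPath(Enum):
    VOUCHER = "administrative review, typically within 48 hours"
    EXPEDITED = "staff pre-review plus at least one committee member"
    FULL_COMMITTEE = "full Scientific Review Committee deliberation"


def cart_total(cart: Mapping[str, float]) -> float:
    """Aggregate cost/value of the items in a (hypothetical) shopping cart."""
    return sum(cart.values())


def review_path(total_requested: float) -> ReviewPath:
    """Route a request by the total VICTR funding it asks for."""
    if total_requested < 0:
        raise ValueError("requested amount cannot be negative")
    if total_requested < VOUCHER_LIMIT:
        return ReviewPath.VOUCHER
    if total_requested <= EXPEDITED_LIMIT:
        return ReviewPath.EXPEDITED
    return ReviewPath.FULL_COMMITTEE


# A hypothetical two-item cart totaling $3,500 is routed as expedited.
example_cart = {"core lab assay": 2_500.0, "biostatistics consult": 1_000.0}
print(review_path(cart_total(example_cart)).name)  # prints EXPEDITED
```

Keeping the thresholds as named constants makes it easy to adjust the tiers without touching the routing logic.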

The principal investigator finalizes the request and verifies that she will cite the VICTR funding in any subsequent publications associated with the resources requested.

Once the pilot funding application is submitted, the StarBRITE system facilitates a scientific review and awarding process. When an application is approved, StarBRITE provides resource-specific instructions for the researcher to redeem the award. Automated data feeds from institutional accounting systems inform the StarBRITE system when the award has been redeemed.

In addition to the pilot funding request and approval mechanism, VICTR also provides researchers with a number of tools and services (e.g., REDCap20 and ResearchMatch21) that they can request and use immediately without going through the pilot funding request and approval process. In these cases, StarBRITE records and maintains a comprehensive list of direct-to-researcher services used by individual projects and attributes their use to the principal investigators who lead these supported projects.

The closed-loop data and workflow methods embedded in the StarBRITE system support VICTR administrators in managing and tracking resources. Thus, administrators know which resources have been requested, provided, and used, and by which research teams. However, because future research outcomes (e.g., publications) are disconnected from this request and award process, evaluating the relative impact of VICTR resource distribution requires additional methods for capturing information and linking it with external databases.

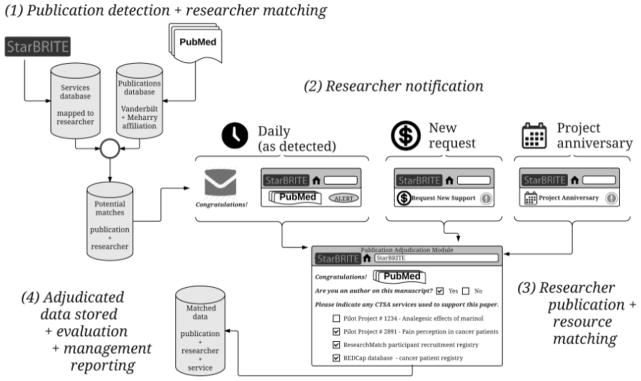

Designing a Systematic Approach to Automated Publication Matching

Figure 1 illustrates the StarBRITE workflow, designed to detect new publications, notify researchers, and identify when a publication acknowledges VICTR support. Fundamental components of our publication matching methodology include: (1) a “listening” service that detects new publications in PubMed that have been authored by VICTR researchers; (2) a multi-faceted notification process for contacting VICTR researchers when new publications are detected; (3) a straightforward adjudication process enabling researchers to match detected publications with specific VICTR services; and (4) program-wide evaluation and management dashboards generated from researcher-provided publication and resource attribution data. The notification and adjudication system is dynamic and easily accommodates new VICTR services as they are developed and offered to research teams. The listening service and notification process allow researchers to attribute resources within days of their publication appearing in PubMed. Key system components are described in more detail below.

Figure 1.

Publication detection, researcher notification, and adjudication workflow used to attribute journal articles to services offered by the Vanderbilt Institute for Clinical and Translational Research (VICTR).

Publication detection and researcher matching

In StarBRITE, we created an automated mechanism that queries PubMed nightly to identify any publications affiliated with Vanderbilt or Meharry that were added to the database in the previous six months. The six-month window ensures that we do not miss potential publications because of the order in which they are added to or curated in PubMed. Our method for computationally interrogating PubMed leverages the Application Programming Interface technology supplied by the National Library of Medicine.22
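For readers unfamiliar with the National Library of Medicine’s E-utilities, the nightly query can be approximated with a single ESearch request. The sketch below only builds the request URL and does not call the network; the affiliation strings, the 180-day approximation of the six-month window, the function name `build_nightly_query`, and the use of the Entrez date (`datetype=edat`) are assumptions, not the exact StarBRITE query.

```python
from typing import Sequence
from urllib.parse import urlencode

# NCBI E-utilities ESearch endpoint.
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_nightly_query(affiliations: Sequence[str], window_days: int = 180,
                        retmax: int = 10_000) -> str:
    """Return an ESearch URL for PubMed records with a matching affiliation
    that entered PubMed within the last `window_days` days."""
    # [ad] is PubMed's affiliation search field; OR the institutions together.
    term = " OR ".join(f'"{name}"[ad]' for name in affiliations)
    params = {
        "db": "pubmed",
        "term": term,
        "datetype": "edat",      # Entrez date, i.e., when the record was added
        "reldate": window_days,  # restrict to the last N days
        "retmax": retmax,        # ESearch returns at most 10,000 IDs per call
        "retmode": "json",
    }
    return f"{ESEARCH_URL}?{urlencode(params)}"


url = build_nightly_query(["Vanderbilt University", "Meharry Medical College"])
print(url)
```

In a full pipeline, the PMIDs in the JSON response (`esearchresult.idlist`) would then be passed to EFetch to retrieve each record’s author list for the cross-referencing step.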

For each new Vanderbilt or Meharry publication that StarBRITE detects, we pull the list of authors and cross-reference the names on that list with our known pool of VICTR-supported researchers. To do so, we use a researcher-to-resource mapping table that StarBRITE autogenerates when VICTR services are initially requested, awarded, and used. If any author has used one or more VICTR-supported resources, a notation is made in an underlying database table identifying the publication as a potential match for VICTR resources.
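The cross-referencing step can be sketched as an index lookup keyed on the loose first-initial-plus-last-name rule this article describes for matching. In this illustrative Python sketch, the hypothetical `Researcher` record stands in for StarBRITE’s researcher-to-resource mapping table, and `loose_key` and `candidate_matches` are invented names; real PubMed author records would need more careful name normalization.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Researcher:
    researcher_id: str
    last_name: str
    first_name: str
    resources_used: set[str] = field(default_factory=set)


def loose_key(last_name: str, first_name: str) -> str:
    """'Loose' match key: last name plus first initial, case-insensitive."""
    return f"{last_name.strip().lower()}|{first_name.strip()[:1].lower()}"


def candidate_matches(pmid: str, authors: list[tuple[str, str]],
                      researchers: list[Researcher]) -> list[tuple[str, str]]:
    """Return (pmid, researcher_id) pairs to flag as potential matches.

    `authors` holds (last_name, first_name) pairs from the PubMed record.
    Only researchers who have used at least one VICTR resource are indexed.
    """
    # Several supported researchers may share one loose key.
    index: dict[str, list[Researcher]] = {}
    for r in researchers:
        if r.resources_used:
            index.setdefault(loose_key(r.last_name, r.first_name), []).append(r)

    matches: list[tuple[str, str]] = []
    seen: set[str] = set()
    for last, first in authors:
        for r in index.get(loose_key(last, first), []):
            if r.researcher_id not in seen:  # flag each researcher once
                seen.add(r.researcher_id)
                matches.append((pmid, r.researcher_id))
    return matches


people = [Researcher("r1", "Doe", "Jane", {"REDCap"}),
          Researcher("r2", "Doe", "John", {"BioVU"}),
          Researcher("r3", "Roe", "Rita")]  # no resources used, never flagged
print(candidate_matches("123", [("Doe", "J A"), ("Roe", "Rita M")], people))
```

With these made-up names, the loose rule flags both Doe researchers for the same article; that false-positive cost is why adjudication begins by asking each researcher to confirm authorship.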

Researcher notification

In StarBRITE, we designed four methods for requesting publication/resource adjudication from researchers whenever new publications of interest are detected and logged. First, a congratulatory email is sent to the researcher listed as an author with instructions for the next steps for adjudication. Second, the researcher is presented with an “on until cleared” notification dialog (a visible, highlighted action-required reminder) each time she uses the StarBRITE system for any purpose. Third, the StarBRITE system has embedded mechanisms preventing researchers from requesting new or amending existing VICTR support until all previously identified publication/resource match candidates have been adjudicated. The final notification mechanism is tied to “anniversary reporting” feedback that is required from all researchers receiving VICTR support. With this mechanism, researchers are directed to a pre-populated StarBRITE web form, requesting a progress report and outcomes to date for the project. Previously adjudicated publication information is included in this anniversary report; the report also affords researchers the opportunity to supply information on publications not previously detected by the StarBRITE system (e.g., multi-center publications for which the corresponding author is at another institution).
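The second and third notification mechanisms hinge on the same condition: whether a researcher still has unadjudicated match candidates. A minimal sketch, assuming a simple in-memory list of match records; the `MatchStatus` values and the function names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class MatchStatus(Enum):
    PENDING = "pending"          # detected, not yet answered
    NOT_AUTHOR = "not_author"    # researcher answered "No"
    ADJUDICATED = "adjudicated"  # researcher answered "Yes" and chose resources


@dataclass
class PublicationMatch:
    pmid: str
    researcher_id: str
    status: MatchStatus = MatchStatus.PENDING


def pending_matches(researcher_id: str,
                    matches: list[PublicationMatch]) -> list[PublicationMatch]:
    """Match candidates this researcher still has to adjudicate."""
    return [m for m in matches
            if m.researcher_id == researcher_id and m.status is MatchStatus.PENDING]


def show_reminder(researcher_id: str, matches: list[PublicationMatch]) -> bool:
    """'On until cleared' dialog: shown while anything is pending."""
    return bool(pending_matches(researcher_id, matches))


def may_request_support(researcher_id: str, matches: list[PublicationMatch]) -> bool:
    """New or amended VICTR requests are blocked until nothing is pending."""
    return not show_reminder(researcher_id, matches)
```

Deriving both checks from one query keeps the reminder and the request block from ever disagreeing about what is outstanding.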

Publication/resource matching

The initial step in the publication/resource matching process is author disambiguation. After receiving notification of a newly detected publication (e.g., an e-mail or a StarBRITE “on until cleared” notification dialog), researchers are presented with two choices: (1) “Yes, I am the author of this publication”; or (2) “No, I am not the author of this publication.” If the researcher selects “No,” the StarBRITE system logs the author/publication match as false. If she selects “Yes,” she is redirected to a short form in the StarBRITE system that lists all of the VICTR-supported resources she has been awarded and used in the past and instructs her to indicate which resources contributed to the publication. As the final step in the adjudication process, researchers are asked to cite the CTSA grant in PubMed.16
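The two-branch adjudication can be sketched as a small record update. This is an illustration under stated assumptions, not StarBRITE’s code: the `Adjudication` record and `adjudicate` function are hypothetical, and a “Yes” with no resources selected is assumed to be allowed (an author whose article used no VICTR service).

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Iterable, Optional


@dataclass
class Adjudication:
    pmid: str
    researcher_id: str
    is_author: Optional[bool] = None  # None until the researcher answers
    attributed_resources: set[str] = field(default_factory=set)


def adjudicate(record: Adjudication, is_author: bool,
               resources_used: set[str], selected: Iterable[str] = ()) -> Adjudication:
    """Apply a researcher's answer to one detected publication.

    "No" logs the author/publication match as false. "Yes" stores whichever of
    the researcher's previously awarded and used resources she marks as having
    contributed (possibly none).
    """
    record.is_author = is_author
    if not is_author:
        record.attributed_resources = set()
        return record
    chosen = set(selected)
    unknown = chosen - resources_used
    if unknown:
        # The form lists only the researcher's own resources, so reject others.
        raise ValueError(f"not among this researcher's resources: {sorted(unknown)}")
    record.attributed_resources = chosen
    return record
```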

Storing adjudicated data, evaluation, and management reporting

After publication/resource adjudication is complete, the matched data are stored in StarBRITE and added to the appropriate dashboards to support management decisions in evaluating the scientific return on investment for individual projects and overall VICTR services.

Assessment of Response to and Effectiveness of Publication Matching

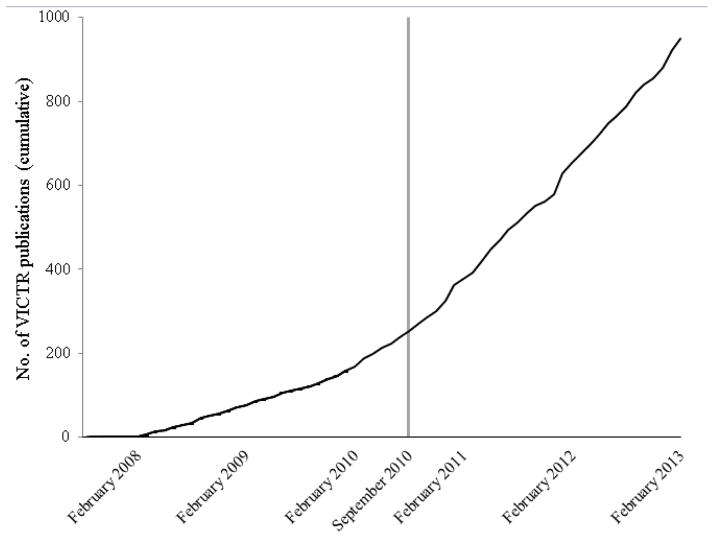

Figure 2 provides a cumulative count of the known VICTR-supported publications and their publication dates for the period between October 2007 and February 2013. It took several months for researchers to become aware of VICTR and the support available, and additional time for supported studies to progress to published articles. By February 2008, however, we were able to attribute approximately eight publications per month to VICTR-supported projects.

Figure 2.

Total number of publications that cited services offered by the Vanderbilt Institute for Clinical and Translational Research (VICTR), February 2008–February 2013. A new publication detection and adjudication system was launched in September 2010 (vertical grey line) and included: (1) immediate requests for researchers to adjudicate publications detected since the previous annual survey in February 2010; and (2) daily notifications and requests for adjudication when new publications are detected. Publications that appeared before February 2010 were adjudicated through an annual survey conducted each February; publications that appeared after February 2010 were adjudicated through the new just-in-time system.

Between October 2007 and February 2010, researchers attributed their publications to VICTR services as part of a yearly request for information coinciding with the required CTSA annual progress report. A new system was launched in September 2010. It recognizes, and queues for immediate adjudication, any publication authored by a known VICTR researcher that has been published since the February 2010 annual data collection survey. More importantly, the new system enables daily, rather than annual, detection and adjudication of publications. We observed a marked increase in the number of publications attributed to one or more VICTR services: by the end of our reporting period, approximately 22 publications per month were attributed to VICTR services.

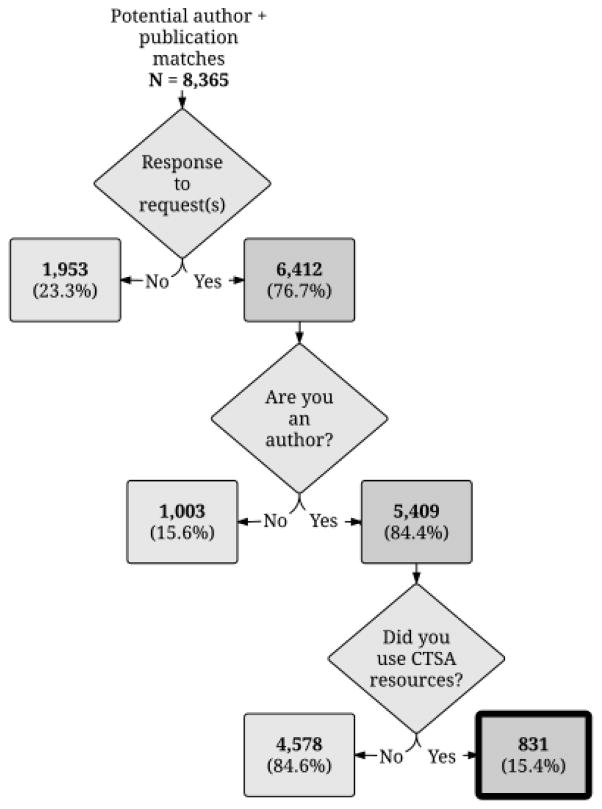

A quantitative summary of the publication matching, adjudication, and attribution processes between September 2010 and February 2013 is shown in Figure 3. Using a loose (first initial and last name) matching algorithm, the StarBRITE system notified every known VICTR-supported researcher whose name matched an author of a newly detected PubMed publication. Roughly 77% (6,412/8,365) of all potential researcher/publication matches were adjudicated, and 10% (831/8,365) were attributed to one or more VICTR services. Most researchers who responded to a matching request did so because of an e-mail notification (81%); fewer responded because of an “on until cleared” StarBRITE notification (14%), the mandatory requirement to adjudicate all known publications before requesting new resources (3%), or the anniversary reporting process (1%). Of the adjudicated potential researcher/publication matches, 16% (1,003/6,412) were determined not to be matches, highlighting the need for a low-burden adjudication workflow. We have not thoroughly analyzed the 23% (1,953/8,365) of potential matches for which a researcher was contacted but never responded. Some of these cases likely involve junior investigators (e.g., trainees, fellows) who received VICTR support and authored a publication before leaving the university. The StarBRITE system does not maintain a forwarding e-mail address, and access is automatically revoked once faculty and staff leave the university.

Figure 3.

Summary of the publication matching, adjudication, and attribution processes, September 2010–February 2013, used to attribute journal articles to services offered by the Vanderbilt Institute for Clinical and Translational Research (VICTR).
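Because the percentages in the summary above use two different denominators (all potential matches versus adjudicated matches only), it may help to recompute them explicitly. This short Python check uses only the counts reported in the text; the variable names and the `pct` helper are illustrative.

```python
# Counts reported in the text for September 2010 to February 2013.
potential_matches = 8_365
adjudicated = 6_412   # researcher answered the matching request
attributed = 831      # credited one or more VICTR services
not_a_match = 1_003   # answered "No, I am not the author"

no_response = potential_matches - adjudicated  # contacted, never answered


def pct(part: int, whole: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * part / whole)


summary = {
    "adjudicated": pct(adjudicated, potential_matches),
    "attributed": pct(attributed, potential_matches),
    "not_a_match": pct(not_a_match, adjudicated),  # denominator: adjudicated only
    "no_response": pct(no_response, potential_matches),
}
print(no_response, summary)
# prints: 1953 {'adjudicated': 77, 'attributed': 10, 'not_a_match': 16, 'no_response': 23}
```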

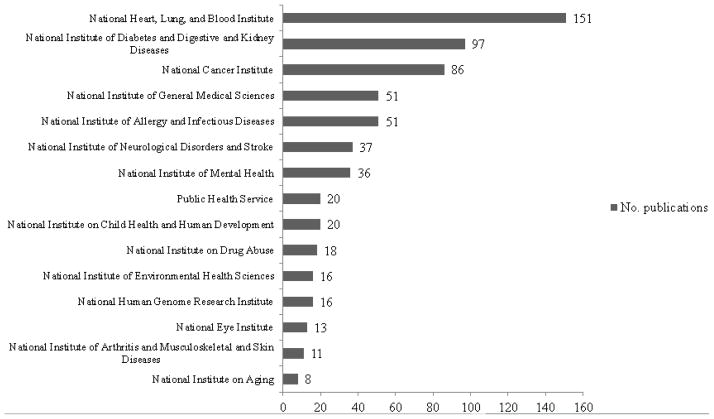

Although general attribution of VICTR services was sufficient to fulfill the annual reporting requirements of our funding organization, we sought more granular information to assist VICTR management decisions. Table 1 shows data generated by the StarBRITE system and used by VICTR leadership to assess the uptake and value added by selected services. Some services and tools were available during the entire reporting period (e.g., pilot program from 2007–2012), while others were added in later years (e.g., ResearchMatch in 2010). The data presented in Table 1 are automatically extracted as part of the researcher adjudication process and displayed in evaluation dashboards within the StarBRITE system.19 Other dashboards automatically created from data captured during the publication attribution adjudication process include data regarding the scientific focus areas supported and co-citations with NIH agencies. Figure 4 provides a snapshot of the scientific focus areas supported by VICTR. These and other dashboards inform and assist institutional leaders in assessing the impact of the entire portfolio of VICTR-supported services and tools.

Table 1.

Number of Publications per Year for which the Authors Credited Specific Vanderbilt Institute for Clinical and Translational Research (VICTR) Services as Supporting the Work Described

| Selected VICTR services [a] | 2007 | 2008 | 2009 | 2010 | 2011 | 2012 |
|---|---|---|---|---|---|---|
| REDCap data management platform20 | -- | 3 (1) | 25 (13) | 42 (21) | 82 (40) | 104 (36) |
| BioVU de-identified genetic database23 | -- | 1 (1) | 2 (1) | 18 (9) | 21 (13) | 16 (8) |
| De-identified research data warehouse24 | -- | 1 (1) | 2 (0) | 13 (4) | 14 (5) | 11 (3) |
| Studio25 [b] | 0 | 7 (6) | 2 (0) | 11 (3) | 19 (7) | 22 (5) |
| ResearchMatch21 [c] | -- | -- | -- | 4 (0) | 3 (0) | 15 (5) |
| Recruitment faculty/staff study notification [d] | -- | -- | -- | 5 (1) | 12 (3) | 33 (6) |
| VICTR pilot support projects19 | 8 (8) | 57 (54) | 68 (53) | 137 (102) | 165 (126) | 226 (138) |

[a] Cells give the number of publications per year. The numbers in parentheses indicate the number of publications for which the authors credited only that VICTR service as supporting the work described in the publication.

[b] The VICTR Studio program brings in experts to provide free, structured, project-specific feedback for medical researchers.

[c] ResearchMatch is a national registry to recruit volunteers for clinical research.

[d] Recruitment faculty/staff study notification is an automated e-mail service that invites faculty and staff to participate in clinical studies and trials.

Figure 4.

Number of publications co-sponsored by a National Institutes of Health agency and the Vanderbilt Institute for Clinical and Translational Research (VICTR).
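A Table 1 cell can be derived from adjudicated data by counting, per service and year, all publications that credit the service and, separately, those that credit it alone. The sketch assumes, hypothetically, that adjudications have already been merged to one record per PMID holding the publication year and the set of credited services; `service_year_counts` and `format_cell` are invented names, not StarBRITE’s dashboard code.

```python
from __future__ import annotations

from collections import defaultdict


def service_year_counts(
        publications: dict[str, tuple[int, set[str]]]
) -> dict[tuple[str, int], tuple[int, int]]:
    """Map (service, year) -> (publications crediting the service,
    publications crediting only that service).

    `publications` is keyed by PMID so each article is counted once.
    """
    total: dict[tuple[str, int], int] = defaultdict(int)
    sole: dict[tuple[str, int], int] = defaultdict(int)
    for year, services in publications.values():
        for service in services:
            total[(service, year)] += 1
            if len(services) == 1:
                sole[(service, year)] += 1
    return {key: (count, sole[key]) for key, count in total.items()}


def format_cell(counts: dict[tuple[str, int], tuple[int, int]],
                service: str, year: int) -> str:
    """Render a cell in the Table 1 style, e.g. '3 (1)'; '--' when absent."""
    if (service, year) not in counts:
        return "--"
    count, only = counts[(service, year)]
    return f"{count} ({only})"
```

In this sketch, “--” simply marks a service/year with no credited publications; Table 1 additionally distinguishes a service not yet offered (“--”) from one offered with zero publications (“0”), which would require each service’s start date.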

Lessons Learned

In this section, we describe our experience building and operating the StarBRITE portfolio management system and present advice that may be helpful to other institutions that want to deploy similar tracking and real-time evaluation methods.

Cast a wide net in gathering researcher input

We had initial concerns that contacting multiple researchers (all detected publication authors with known VICTR support) for input concerning a single publication might be overly burdensome with questionable value added. In practice, we found that contacting multiple authors of the same publication for adjudication improved the accuracy of the attribution process. In complex multidisciplinary scientific projects, authors’ responsibilities can be diverse during the planning, conduct, and scientific writing stages, and individual team members may be unaware of all VICTR services used.
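One plausible way to combine several co-authors’ answers, consistent with the observation that individual team members may not know every service used, is to take the union of their attributions while recording who reported each service. This is an illustrative sketch, not a description of how StarBRITE reconciles answers; the function name and data shapes are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict


def merge_author_attributions(answers: dict[str, set[str]]) -> dict[str, list[str]]:
    """Combine co-authors' answers for one publication.

    `answers` maps researcher_id -> services that co-author credited.
    Returns service -> sorted IDs of the co-authors who credited it, so a
    service any single author knew about is kept (a union), along with
    who reported it.
    """
    credited_by: dict[str, set[str]] = defaultdict(set)
    for researcher_id, services in answers.items():
        for service in services:
            credited_by[service].add(researcher_id)
    return {service: sorted(ids) for service, ids in sorted(credited_by.items())}
```

Keeping the provenance lets portfolio staff spot services credited by only one co-author, which may merit a follow-up question before the attribution is finalized.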

Anticipate author disambiguation issues

Given our “wide net” approach, based on a loose matching algorithm (first initial and last name), we were concerned about receiving complaints from researchers who were mistakenly contacted because of ambiguous author names. In practice, disambiguation problems were limited to a handful of cases. In one case, two VICTR-supported researchers shared a first initial and last name. One was a prolific author; the other was a research staff member with few known or expected publications. To eliminate future erroneous requests to the staff member, we built into the system an opt-out flag that enables the VICTR portfolio management team to designate individual VICTR-supported researchers to be ignored during the matching and adjudication process. In another case, the StarBRITE system was not detecting publications for a VICTR-supported researcher because the system knew him by his middle name rather than his first name. We remedied this and similar detection problems by adding a PubMed author string field to the VICTR researcher database. When this field is populated, the system searches detected publications for its value rather than for the automatically generated first initial and last name normally used.
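Both fixes described above can be expressed as small changes to the matching rule. In this hedged sketch, `ResearcherProfile`, its `pubmed_author_string` and `opted_out` fields, and `matches_author` are hypothetical names, and author names are compared in PubMed’s “Lastname Initials” display form (e.g., “Doe JA”); the researcher names are invented.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class ResearcherProfile:
    researcher_id: str
    last_name: str
    first_name: str
    pubmed_author_string: Optional[str] = None  # staff-entered override, e.g. "Roe JR"
    opted_out: bool = False                     # ignore during matching


def matches_author(profile: ResearcherProfile, pubmed_author: str) -> bool:
    """Does a PubMed-style author string (e.g. 'Doe JA') match this profile?"""
    if profile.opted_out:
        return False
    author = pubmed_author.strip().lower()
    if profile.pubmed_author_string:
        # Override: compare against the exact author string supplied by staff.
        return author == profile.pubmed_author_string.strip().lower()
    # Default loose rule: same last name and same first initial.
    last, _, initials = author.rpartition(" ")
    return last == profile.last_name.lower() and initials[:1] == profile.first_name[:1].lower()


# A researcher known internally by his middle name (Robert) who publishes as "Roe JR".
print(matches_author(ResearcherProfile("r3", "Roe", "Robert"), "Roe JR"))  # prints False
print(matches_author(ResearcherProfile("r3", "Roe", "Robert",
                                       pubmed_author_string="Roe JR"), "Roe JR"))  # prints True
```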

Build multiple trigger points

VICTR promotes a culture of service to the research enterprise and regularly requests feedback from this community to develop innovative solutions based on unmet needs. Research teams realize that evaluation is a key program component, understand the value of VICTR support, and recognize that this support relies on grant funding with progress reporting requirements. We have found that researchers will help with publication attribution if the process is not burdensome. In particular, congratulating researchers on a new publication and then asking for their help is effective. Only a minority of researchers ignored this adjudication request; in these cases, not allowing future VICTR support requests until adjudication is complete works well. Finally, a separate mechanism for researchers to report publications the system did not detect (e.g., a multi-center publication for which the corresponding author is at another institution) is necessary to capture all relevant publications.

Anticipate program evolution

Matching publications to VICTR-supported resources requires system-level data related to the use of each resource. Thus, we built into our notification and adjudication system the ability to easily create data feeds related to new VICTR services as they are added to the program. Since implementation of the VICTR program, eight new resources have become available and were easily incorporated into the StarBRITE system.
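The text notes that new services can be incorporated with little programming effort. One common way to get that property, shown here as an assumption rather than a description of StarBRITE’s architecture, is a registry in which each service contributes a single feed function and the researcher-to-resource map is rebuilt from all registered feeds. `FEEDS`, `register_service`, and `build_resource_map` are hypothetical names.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Callable, Iterable

# A feed returns IDs of researchers who requested, were awarded, or used a service.
ServiceFeed = Callable[[], Iterable[str]]

# Hypothetical registry: adding a service means registering one feed here.
FEEDS: dict[str, ServiceFeed] = {}


def register_service(name: str, feed: ServiceFeed) -> None:
    FEEDS[name] = feed


def build_resource_map() -> dict[str, set[str]]:
    """Rebuild researcher_id -> set of services from every registered feed."""
    resource_map: dict[str, set[str]] = defaultdict(set)
    for service, feed in FEEDS.items():
        for researcher_id in feed():
            resource_map[researcher_id].add(service)
    return dict(resource_map)
```

Because the matching step reads only the rebuilt map, a newly registered service becomes eligible for attribution without changes to detection, notification, or adjudication code.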

Consider a “just-in-time” workflow

Prior to designing and implementing the just-in-time publication adjudication process described in this article, we used a semi-automated matching process designed to help VICTR-supported researchers report their publications. Under the old process, we asked researchers once a year to provide information about all known VICTR-supported publications to fulfill annual reporting requirements for our CTSA grant. This process was similar to the anniversary reporting described earlier, except that all reporting was done in February to fulfill the requirements of a single CTSA grant progress report. The old process also included the automated retrieval of information from PubMed to assist with publication attribution. Although our comparison has confounding factors (e.g., a wider pool of researchers/staff contacted under the new system, and services added to VICTR and to the adjudication process over time), we believe that a major contributor to the increased rate of attribution is the switch to a just-in-time process rather than annual information collection.

Consider the potential for program management

Our current system lets us draw broader conclusions about our overall scientific portfolio and resource contributions. Simple dashboards (e.g., the data presented in Table 1 and Figure 4) are effective for real-time assessment and provide both a deeper understanding of resource use and a global view of the overall scientific portfolio. Combining resource use information with resource cost data enables VICTR leadership to make better-informed management decisions. Comparing resources awarded with publication history at the researcher level can also help in assessing future individual project support requests.

Conclusions and Next Steps

VICTR’s mission is to transform the way ideas and discoveries make their way back and forth across the translational research spectrum. The program accomplishes this goal in part by providing pilot funding and support to a large number of diverse research teams. Active portfolio management and evaluation are essential elements for creating and successfully running a large infrastructure program such as VICTR. Since implementing the just-in-time researcher notification and service-level adjudication system, we have seen measurable improvements in our ability to determine the scientific return on investment for VICTR-supported projects.

Building an automated system requires planning, initial investment, and maintenance. Creating our pilot project service request and fulfillment system in StarBRITE required a high degree of planning and approximately nine months of developer effort.19 Other VICTR services, such as REDCap and ResearchMatch, were developed independently over time.20,21 Because we started from a point where we could easily use StarBRITE to query and collate data on the use of all services, the publication attribution workflow we described here was relatively inexpensive to implement (approximately four weeks of programmer effort). Anticipating the future growth of CTSA services, we built a highly dynamic data architecture and can establish data feeds from new CTSA services that we want to include in the publication adjudication process using minimal resources (e.g., ten programming hours or less per new service).

Before we implemented our current portfolio management and publication adjudication system, gathering and reporting information to NIH required many dedicated weeks from VICTR support staff, numerous information-gathering surveys directed to VICTR researchers, and intensive manual review. Since implementing the StarBRITE portfolio management system, resource tracking and annual reporting have become almost entirely automated and require minimal effort by VICTR support staff.

We will continue to expand our system to accommodate new VICTR services as they are added. We are exploring additional ways of creating information dashboards centered on individual researchers and academic departments to further enhance the ability of the VICTR Scientific Review Committee to make informed decisions when considering new large project resource requests. We are also considering methods for using data obtained from the publication adjudication process to create just-in-time StarBRITE search functionality designed to “find experts” at Vanderbilt and Meharry (e.g., a mentor, a collaborator, or a VICTR Studio expert). Finally, we are creating and evaluating StarBRITE publication awareness tools to ensure that researchers comply with the NIH Public Access Policy.

Acknowledgments

Funding/Support: The Vanderbilt Institute for Clinical and Translational Research is supported in part by Vanderbilt’s Clinical and Translational Science Award (UL1TR000445) from the National Center for Advancing Translational Sciences.

Footnotes

Other disclosures: None reported.

Ethical approval: Reported as not applicable.

Previous presentations: Clinical and Translational Science Award Consortium Steering Committee Meeting, October 10-11, 2012, Rockville, MD; Clinical and Translational Science Award Administration Key Function Committee Meeting, October 9, 2012, Rockville, MD.

Contributor Information

Paul A. Harris, Director, Office of Research Informatics, Vanderbilt University School of Medicine, Nashville, Tennessee.

Jacqueline Kirby, Project manager, Vanderbilt Institute for Clinical and Translational Research, Vanderbilt University School of Medicine, Nashville, Tennessee.

Jonathan A. Swafford, Health systems analyst, Vanderbilt Institute for Clinical and Translational Research, Vanderbilt University School of Medicine, Nashville, Tennessee, at the time this article was written.

Terri L. Edwards, Program manager, Vanderbilt Institute for Clinical and Translational Research, Vanderbilt University School of Medicine, Nashville, Tennessee.

Minhua Zhang, Health systems analyst, Vanderbilt Institute for Clinical and Translational Research, Vanderbilt University School of Medicine, Nashville, Tennessee.

Tonya R. Yarbrough, Program manager, Vanderbilt Institute for Clinical and Translational Research, Vanderbilt University School of Medicine, Nashville, Tennessee.

Lynda D. Lane, Director of administration, Vanderbilt Institute for Clinical and Translational Research, Vanderbilt University School of Medicine, Nashville, Tennessee.

Tara Helmer, Research services consultant, Vanderbilt Institute for Clinical and Translational Research, Vanderbilt University School of Medicine, Nashville, Tennessee.

Gordon R. Bernard, Associate vice chancellor for research, Department of Medicine, Vanderbilt University School of Medicine, Nashville, Tennessee.

Jill M. Pulley, Director, Vanderbilt Institute for Clinical and Translational Research, Vanderbilt University School of Medicine, Nashville, Tennessee.

References

1. Thomson W. Electrical units of measurement. In: Popular Lectures and Addresses. Vol 1. Cambridge Library Collection – Physical Sciences. Cambridge, England: Cambridge University Press; 2011.

2. Agarwal P, Searls DB. Can literature analysis identify innovation drivers in drug discovery? Nat Rev Drug Discov. 2009;8:865–878. doi: 10.1038/nrd2973.

3. Kissin I. Can a bibliometric indicator predict the success of an analgesic? Scientometrics. 2011;86:785–795.

4. Philogene GS. Outcome Evaluation of the National Institutes of Health (NIH) Director's Pioneer Award (NDPA), FY 2004–2005. Washington, DC: National Institutes of Health; 2011. http://commonfund.nih.gov/sites/default/files/Pioneer_Award_Outcome%220Evaluation_FY2004-2005.pdf. Accessed February 18, 2015.

5. De Bellis N. Bibliometrics and Citation Analysis: From the Science Citation Index to Cybermetrics. Lanham, MD: Scarecrow Press; 2009.

6. Gurney T, Horlings E, van den Besselaar P. Author disambiguation using multi-aspect similarity indicators. Scientometrics. 2012;91:435–449. doi: 10.1007/s11192-011-0589-1.

7. Aronson AR, Lang FM. An overview of MetaMap: Historical perspective and recent advances. J Am Med Inform Assoc. 2010;17:229–236. doi: 10.1136/jamia.2009.002733.

8. Kang IS, Na SH, Lee S, et al. On co-authorship for author disambiguation. Inf Process Manag. 2009;45:84–97.

9. DiLaura R, Turisco F, McGrew C, Reel S, Glaser J, Crowley WF Jr. Use of informatics and information technologies in the clinical research enterprise within US academic medical centers: Progress and challenges from 2005 to 2007. J Investig Med. 2008;56:770–779. doi: 10.2310/JIM.0b013e3175d7b4.

10. Druss BG, Marcus SC. Tracking publication outcomes of National Institutes of Health grants. Am J Med. 2005;118:658–663. doi: 10.1016/j.amjmed.2005.02.015.

11. Hall KL, Stokols D, Stipelman BA, et al. Assessing the value of team science: A study comparing center- and investigator-initiated grants. Am J Prev Med. 2012;42:157–163. doi: 10.1016/j.amepre.2011.10.011.

12. Patel VM, Ashrafian H, Ahmed K, et al. How has healthcare research performance been assessed? A systematic review. J R Soc Med. 2011;104:251–261. doi: 10.1258/jrsm.2011.110005.

13. Patel VM, Ashrafian H, Almoudaris A, et al. Measuring academic performance for healthcare researchers with the H index: Which search tool should be used? Med Princ Pract. 2013;22:178–183. doi: 10.1159/000341756.

14. Ovseiko PV, Oancea A, Buchan AM. Assessing research impact in academic clinical medicine: A study using Research Excellence Framework pilot impact indicators. BMC Health Serv Res. 2012;12:478. doi: 10.1186/1472-6963-12-478.

15. National Institutes of Health. Research Portfolio Online Reporting Tools (RePORTER). http://projectreporter.nih.gov/. Accessed February 18, 2015.

16. U.S. National Library of Medicine. http://www.ncbi.nlm.nih.gov/pubmed. Accessed February 27, 2015.

17. Cech TR. Fostering innovation and discovery in biomedical research. JAMA. 2005;294:1390–1393. doi: 10.1001/jama.294.11.1390.

18. Kain K. Promoting translational research at Vanderbilt University's CTSA institute. Dis Model Mech. 2008;1:202–204. doi: 10.1242/dmm.001750.

19. Harris PA, Swafford JA, Edwards TL, et al. StarBRITE: The Vanderbilt University Biomedical Research Integration, Translation and Education portal. J Biomed Inform. 2011;44:655–662. doi: 10.1016/j.jbi.2011.01.014.

20. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap): A metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42:377–381. doi: 10.1016/j.jbi.2008.08.010.

21. Harris PA, Scott KW, Lebo L, Hassan N, Lightner C, Pulley J. ResearchMatch: A national registry to recruit volunteers for clinical research. Acad Med. 2012;87:66–73. doi: 10.1097/ACM.0b013e31823ab7d2.

22. Entrez Programming Utilities Help. Bethesda, MD: National Center for Biotechnology Information; 2010. http://www.ncbi.nlm.nih.gov/books/NBK25501/. Accessed February 18, 2015.

23. Pulley J, Clayton E, Bernard GR, Roden DM, Masys DR. Principles of human subjects protections applied in an opt-out, de-identified biobank. Clin Transl Sci. 2010;3:42–48. doi: 10.1111/j.1752-8062.2010.00175.x.

24. Danciu I, Cowan JD, Basford M, et al. Secondary use of clinical data: The Vanderbilt approach. J Biomed Inform. 2014;52:28–35. doi: 10.1016/j.jbi.2014.02.003.

25. Byrne DW, Biaggioni I, Bernard GR, et al. Clinical and translational research studios: A multidisciplinary internal support program. Acad Med. 2012;87:1052–1059. doi: 10.1097/ACM.0b013e31825d29d4.