Abstract

Scale is a widely used notion in computer vision and image understanding that evolved in the form of scale-space theory, whose key idea is to represent and analyze an image at various resolutions. Recently, we introduced a notion of local morphometric scale, referred to as “tensor scale”, using an ellipsoidal model that yields a unified representation of structure size, orientation and anisotropy. In that previous work, tensor scale was described using a 2-D algorithmic approach, and a precise analytic definition was missing. Moreover, applying tensor scale in 3-D within the previous framework is impractical because of its high computational complexity. In this paper, an analytic definition of tensor scale is formulated for n-dimensional (n-D) images that captures local structure size, orientation and anisotropy. An efficient computational solution in 2- and 3-D using several novel differential geometric approaches is presented, and the accuracy of the results is examined experimentally. A matrix representation of tensor scale is also derived, facilitating several operations, including tensor field smoothing to capture larger contextual knowledge. Finally, applications of tensor scale in image filtering and n-linear interpolation are presented, and their performance is examined in comparison with respective state-of-the-art methods. Specifically, tensor scale based image filtering is compared with gradient and Weickert’s structure tensor based diffusive filtering algorithms, and tensor scale based n-linear interpolation is evaluated in comparison with standard n-linear and windowed-sinc interpolation methods.

Keywords: Scale-space, Local scale, Morphometry, Tensor scale, Image filtering, Image interpolation

1. Introduction

Scale [1–3] may be thought of as the spatial resolution, or a range of resolutions, that ensures a sufficient yet compact data representation for a target knowledge learning process. Scale plays an important role in determining the optimum trade-off between noise smoothing and perception/detection of structures. In the image analysis and computer vision literature, the notion of scale evolved from the Marr–Hildreth–Koenderink–Witkin scale-space theory [1–4], whose key idea is to represent and analyze an image at various resolutions. This theory aids in breaking a computer vision or image-processing task into a hierarchy of tasks, starting with macro-structural properties and gradually progressing toward micro-structures, or vice versa. Often, an image representation at a specific scale is obtained by convolving the original image with a Gaussian smoothing kernel whose width is related to the chosen scale. While scale-space theory has proven useful in a wide range of applications [5–17], the notion of “local scale” or “space-variant resolution scheme” [18–22] emerged to overcome two major practical hurdles: (1) the lack of a common mechanism unifying knowledge extracted at multi-scale analyses, and (2) the absence of optimal scale localization. Knowledge of “local scale” may allow us to spatially tune the neighborhood size in different processes, leading to the selection of small neighborhoods in regions with fine detail or near an object boundary, versus large neighborhoods in deep interiors [23]. Also, “local scale” may be a vital piece of information for developing effective space-variant parameter control strategies [24].

Knowledge of “local scale” may lead to an effective and automatic mechanism to spatially control a process as a function of local scale [23,24]. In this direction, Saha and Udupa introduced a local morphometric scale using a spherical model [23,24] and studied its effectiveness in various image processing applications, including image segmentation [23,25–27], filtering [24], registration [28], and removal of partial volume effects in rendering [29]; see [30] for a survey on local scale. However, both scale-space theory and local scale theory implicitly use an isotropic model of scale, whereas most structures in the real world are anisotropic. This justifies the notion of a “tensor scale”, or “t-scale” in short: a regime of unified and simultaneous representation of local structure size, orientation and anisotropy. T-scale carries the promise of serving as a rich parametric descriptor of local structure and geometry that may benefit several applications, including object recognition, image preprocessing, registration and compression. Several applications, including biomedical, geological and satellite imaging, demand quantitative architectural analyses of quasi-random mesh-like structures [31,32]. Such applications are likely to benefit directly from t-scale, which provides unified knowledge of local size, orientation and anisotropy. Previously, we introduced a formulation of t-scale using an ellipsoidal model [30] and studied its usefulness in various image processing applications [30,31,33]. Andaló [33,34] presented an efficient computational solution for t-scale in binary images and demonstrated its usefulness in detecting salient points on a given contour. However, our earlier formulation lacks a precise analytic definition of t-scale, and its computation in 3- or higher-dimensional images is often infeasible.
Recently, the theory of generalized and curvature based local scale and their applications have been studied by Udupa and his research group [35–38].

In this paper, we introduce an analytic formulation of t-scale that describes the local structure geometry using a local structure-adaptive orthogonal system, and we present an efficient computational solution. We also demonstrate applications of t-scale in image filtering and interpolation. Several papers in the literature address the structure tensor [39–42], which is computed by convolving tensor products of intensity gradients with a Gaussian kernel. Although the structure tensor is a useful concept, it primarily captures information derived from the local gradient field and may not directly relate to local object geometry. For example, in a homogeneous region, where the gradient vanishes, the structure tensor may not carry meaningful information. Here, we formulate t-scale from a geometric perspective where, at each image point, the tensor provides direct information about local object geometry rather than about the gradient field, and thus provides more precise structural information useful in many applications.

2. Theory and algorithms

In our previous work [30], we introduced the concept of t-scale using an algorithmic approach but without a precise analytic definition. Moreover, the previous algorithmic framework is impractical for 3- and higher-dimensional images because of its high computational complexity. In the following, we first briefly describe the previous algorithmic definition [30] of t-scale; this is followed by a new analytic approach to define a local morphometric scale using a tensor model [43] and an effective computational solution in two and three dimensions (2-D and 3-D). In the later part of this section, we introduce the theory and algorithms for applications of t-scale to image filtering and interpolation.

2.1. Earlier algorithmic approach to t-scale

The notion of t-scale was motivated by the idea of representing local structures by an ellipsoid. In an earlier work, Saha [30] attempted to define t-scale at any image point p in a 2-D plane (or 3-D space) as the largest ellipse (ellipsoid in 3-D) that is centered at p and is contained inside the same object region defined by the continuity of homogeneity. However, that approach provided no analytic definition of the “largest ellipse” or “largest ellipsoid”. Rather, t-scale was defined algorithmically: t-scale at an image point p is computed by locating edge points visible from p along different directions, which are then used to compute the t-scale ellipse (ellipsoid in 3-D) at p. The basic steps of t-scale computation are as follows (see Fig. 1):

Step 1: Trace image intensity along a set of pairs of radially opposite sample lines emanating from p and approximately uniformly distributed over the angular space around p.

Step 2: Locate the closest edge point on each sample line (triangles and black dots).

Step 3: Reposition the edge locations on each pair of opposite sample lines according to the axial symmetry of an ellipse (black dots to white dots).

Step 4: Compute the t-scale at p using the best-fit ellipse derived from the repositioned edge points (triangles and white dots).

Fig. 1.

A schematic description of the algorithmic approach to define t-scale in 2-D. The method starts with edge locations (triangles and black dots) on sample lines emanating from the candidate image point. Following the axial symmetry of an ellipse, the edge points on each pair of radially opposite sample lines are repositioned (black dots to white dots). Finally, t-scale ellipse is computed from repositioned edge points (triangles and white dots).
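The four steps above can be sketched in Python for a binary 2-D object mask. This is a simplified illustration, not the implementation of [30]: the function names are hypothetical, an edge is taken to be the first sample that falls outside the mask, Step 3 is assumed to keep the shorter of the two opposite distances on both sample lines, and the best-fit ellipse of Step 4 is approximated by PCA of the repositioned points, with semi-axes taken as their largest projections.

```python
import numpy as np

def first_exit_distance(obj, p, direction, step=0.25):
    """Distance from p = (x, y) along a unit direction to the first sample outside obj."""
    h, w = obj.shape
    max_len = float(np.hypot(h, w))
    t = 0.0
    while t < max_len:
        t += step
        x = p[0] + t * direction[0]
        y = p[1] + t * direction[1]
        i, j = int(round(y)), int(round(x))  # obj is indexed as obj[row=y, col=x]
        if not (0 <= i < h and 0 <= j < w) or not obj[i, j]:
            return t
    return max_len

def earlier_tscale_2d(obj, p, n_pairs=8):
    """Simplified sketch of Steps 1-4; returns (major-axis unit vector, a, b)."""
    pts = []
    for ang in np.pi * np.arange(n_pairs) / n_pairs:  # one angle per opposite pair
        d = np.array([np.cos(ang), np.sin(ang)])
        # Steps 1-2: closest edge on each of the two radially opposite sample lines
        r_plus = first_exit_distance(obj, p, d)
        r_minus = first_exit_distance(obj, p, -d)
        # Step 3 (assumption): enforce axial symmetry using the shorter distance
        r = min(r_plus, r_minus)
        pts.extend([r * d, -r * d])
    pts = np.array(pts)  # coordinates relative to p
    # Step 4 (approximation): orientation from PCA, semi-axes from extreme projections
    cov = pts.T @ pts / len(pts)
    _, evecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
    major, minor = evecs[:, 1], evecs[:, 0]
    a = float(np.max(np.abs(pts @ major)))
    b = float(np.max(np.abs(pts @ minor)))
    return major, a, b

# Usage: an axis-aligned elliptical object with semi-axes 20 (x) and 8 (y)
yy, xx = np.mgrid[0:101, 0:101]
ellipse = ((xx - 50) / 20.0) ** 2 + ((yy - 50) / 8.0) ** 2 <= 1.0
major, a, b = earlier_tscale_2d(ellipse, (50.0, 50.0))
```

With only eight sample-line pairs, the recovered semi-axes are accurate to within roughly the sampling step; denser angular sampling would tighten the ellipse fit at a proportional cost.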

2.2. T-scale: an analytic definition

Let ℝ denote the set of real numbers and consider an image 𝕀 in ℝn where multiple objects are defined as partitions by M (n – 1)-D pseudo-Riemannian manifolds, say, m1, m2, …, mM; we refer to these manifolds as partitioning manifolds. First, consider a point p ∈ ℝn; we refer to mi as the nearest partitioning manifold for p if the distance between p and mi is shorter than that between p and mj for all j ≠ i. Next, consider p and a set of i orthogonal vectors τ1, τ2, …, τi; there exists a unique i-D subspace Wi that is parallel to each of the vectors τ1, τ2, …, τi and passes through the point p. An image is formed over the orthogonal complement of Wi (the (n – i)-D subspace through p orthogonal to τ1, τ2, …, τi), whose partitioning manifolds are the intersections of m1, m2, …, mM with this subspace; we refer to this image as the orthogonal complement image of 𝕀 induced by the vectors τ1, τ2, …, τi and the point p. Finally, t-scale at a point p ∈ ℝn in an image 𝕀 is an ordered sequence of n orthogonal vectors 〈τ1(p), τ2(p), …, τn(p)〉 inductively defined as follows:

τ1(p) is the vector from p to the closest point on the nearest partitioning manifold of 𝕀.

Given the first i orthogonal vectors, τ1(p), τ2(p), …, τi(p), the (i + 1)th vector τi+1(p) points from p to the closest point on the nearest partitioning manifold in the orthogonal complement image of 𝕀 induced by τ1(p), τ2(p), …, τi(p) and the point p.

Thus, although the nearest partitioning manifold defines the t-scale at a point, the above analytic formulation embraces the case of multiple objects. In 2- and 3-D, we refer to τ1(p), τ2(p) and τ3(p) (3-D only) as the primary, secondary and tertiary t-vectors of p; in general, “t-vector” refers to any of these vectors. The notion of t-scale defined above is schematically illustrated in Fig. 2 using a 3-D rabbit femur bone surface m1 (medium dark gray). As illustrated in the figure, t-scale at a given point p (solid black dot) in a 3-D image is an ordered sequence 〈τ1(p), τ2(p), τ3(p)〉 of three orthogonal t-vectors. The primary t-vector τ1(p) (black) defines the direction and distance to the closest point on the femur surface. The orthogonal complement plane (dark gray) and the 1-D partitioning manifold on it (light gray) are shown in the figure; note that this 1-D partitioning manifold is essentially the intersection between the plane and the original partitioning surface m1 in the 3-D image. The secondary vector τ2(p) (medium dark gray) is defined by the point on this 1-D manifold that is closest to p. Once τ1(p) and τ2(p) are found, the line (light gray) on which the tertiary vector τ3(p) lies is determined; the final direction and length of τ3(p) are defined by finding the closest point on the partitioning surface along this line. It may be noted that projections of the two dotted lines (light and medium dark gray) often indicate the two principal directions of m1 at the point where τ1(p) meets it; this observation is utilized in our computational solution for t-scale in 3-D.

Fig. 2.

An illustration of t-scale using a rabbit femur bone surface (medium dark gray) forming a 2-D manifold m1. The candidate spel is p (solid black dot); the point r on m1 closest to p gives the primary t-vector τ1(p) (black). The orthogonal complement plane and the 1-D manifold (its intersection with m1) are shown in dark and light gray, respectively. The secondary t-vector τ2(p) (solid medium dark line) is defined by the point on the 1-D manifold closest to p; finally, τ3(p) (solid light gray line) is given by the closest point on m1 along the line in the complement plane orthogonal to τ2(p). It may be noted that τ2(p) and τ3(p) often coincide with the principal directions of m1 at r; this observation is utilized for efficient computation of 3-D t-scale.
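For a 2-D image whose single partitioning manifold is a closed polygonal curve, the inductive definition can be evaluated directly: τ1(p) points to the closest point on the curve, and τ2(p) points to the closest intersection of the curve with the line through p orthogonal to τ1(p) (the orthogonal complement image in 2-D). The sketch below is illustrative only; the polygon representation and function names are assumptions, and p is assumed not to lie on the curve.

```python
import numpy as np

def closest_point_on_segment(p, a, b):
    ab = b - a
    t = np.clip(np.dot(p - a, ab) / np.dot(ab, ab), 0.0, 1.0)
    return a + t * ab

def tscale_2d_polygon(p, vertices):
    """Return (tau1, tau2) for point p and a closed polygonal partitioning curve."""
    p = np.asarray(p, dtype=float)
    V = np.asarray(vertices, dtype=float)
    segs = [(V[k], V[(k + 1) % len(V)]) for k in range(len(V))]
    # tau1: vector from p to the closest point on the partitioning curve
    cands = [closest_point_on_segment(p, a, b) for a, b in segs]
    tau1 = min(cands, key=lambda c: np.linalg.norm(c - p)) - p
    i1 = tau1 / np.linalg.norm(tau1)
    d = np.array([-i1[1], i1[0]])  # direction of the orthogonal complement line
    # tau2: closest intersection of the line p + s*d with the curve
    best = None
    for a, b in segs:
        M = np.column_stack([d, a - b])  # solve p + s*d = a + t*(b - a) for (s, t)
        if abs(np.linalg.det(M)) < 1e-12:
            continue  # segment parallel to the line
        s, t = np.linalg.solve(M, a - p)
        if 0.0 <= t <= 1.0 and abs(s) > 1e-9 and (best is None or abs(s) < abs(best)):
            best = s
    if best is None:
        raise ValueError("the orthogonal line does not meet the curve")
    return tau1, best * d

# Usage: rectangular object [0, 40] x [0, 10] and p = (15, 3)
tau1, tau2 = tscale_2d_polygon((15.0, 3.0), [(0, 0), (40, 0), (40, 10), (0, 10)])
```

Here τ1(p) = (0, −3) points to the bottom side and τ2(p) = (−15, 0) to the nearer of the two vertical sides, so the t-scale ellipse is elongated along the strip, as intended.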

Here, we present a matrix representation T(p) of t-scale at p, derived from the ordered sequence of orthogonal t-vectors 〈τ1(p), τ2(p), …, τn(p)〉, that facilitates the use of conventional tensor algebra. Let ij(p) denote the unit vector along τj(p) and let λj(p) be its magnitude. The matrix representation of t-scale is defined as follows:

The above equation represents t-scale using a symmetric positive semi-definite matrix, analogous to a covariance matrix. Such a compact formulation facilitates efficient realization of a direction-dependent anisotropic parameter control strategy under a predetermined physical model; see Section 2.4 for an example. A matrix formulation of t-scale will also be helpful in understanding the interaction between local t-scale structure and the scaling, rotation, translation and shear components of the local Jacobian matrix of an image deformation field [44–46].
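The displayed equation for T(p) is not reproduced in this copy of the text. The sketch below assumes the form T(p) = Σj λj(p)² ij(p) ij(p)ᵀ, chosen because it is symmetric positive semi-definite with eigenvectors ij(p) and because, under the covariance reading used in Section 2.4, the measure ζp(q), the square root of the variance of T(p) along ipq, then equals λj(p) along ij(p). Both function names are hypothetical.

```python
import numpy as np

def tscale_matrix(tvectors):
    """Assumed matrix form: T = sum_j tau_j tau_j^T = sum_j lambda_j^2 i_j i_j^T."""
    tvs = [np.asarray(t, dtype=float) for t in tvectors]
    n = len(tvs[0])
    T = np.zeros((n, n))
    for tau in tvs:
        T += np.outer(tau, tau)  # tau_j = lambda_j * i_j
    return T

def zeta(T, direction):
    """Square root of the 'variance' of T along a direction (covariance reading)."""
    u = np.asarray(direction, dtype=float)
    u = u / np.linalg.norm(u)
    return float(np.sqrt(u @ T @ u))

# Usage: orthogonal t-vectors of lengths 3 and 1
T = tscale_matrix([(3.0, 0.0), (0.0, 1.0)])
lengths = np.sqrt(np.linalg.eigvalsh(T))  # recovers the t-vector magnitudes (ascending)
```

Under this assumption the eigen-decomposition of T(p) returns the t-vector directions and lengths exactly, so no information in the ordered sequence is lost except the ordering and the signs of the t-vectors.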

2.3. An efficient computation of t-scale

A direct algorithmic implementation of t-scale computation from its definition faces two major hurdles: (1) object partitions are unknown in real images, and (2) the computational complexity is high in three or higher dimensions. Here, we outline our algorithmic solution for 3-D images, involving edge detection, distance transform and differential geometric approaches, which may be extended to higher dimensions. In an image, we often do not know the partitioning manifolds used to define t-scale. However, we may realistically assume that detected edge points lie on these hypothetical manifolds. Moreover, because these edge points are dense samples on the manifolds, the distance transform from these edge points closely approximates the unknown distance transform from the hypothetical partitioning manifolds. With this understanding, t-scale may be computed by applying gradient analyses and computational geometric approaches to the distance transform map from the image edge locations; in the rest of this section, “distance transform” refers to the distance transform from image edge locations.

Based on the above convention, the gradient of the distance transform map at any given point provides the direction of the nearest partitioning manifold, i.e., the direction of the primary t-vector; since the distance transform increases away from the manifold, the primary t-vector points along the negative gradient. The magnitude of the primary t-vector is given by the distance transform value at the candidate point. Once the primary t-vector is determined in 2-D, the secondary t-vector may be computed by locating the closest manifold along the line perpendicular to the primary t-vector. However, this computation is not trivial in 3-D, where the first step is to determine the principal directions on the local partitioning manifold (Fig. 2). This task is accomplished using a new algorithm based on computational geometric analysis of the distance transform. In the following, we describe the steps of t-scale computation, starting with basic definitions and notations.

In this paper, all computational and algorithmic developments are confined to 2- and 3-D images, although these methods may generalize to higher dimensions. Let ℤ denote the set of integers. It may be noted that ℝ2 and ℝ3 represent a 2-D plane and a 3-D space, while ℤ2 and ℤ3 denote a digital space in 2- and 3-D, respectively. We use ℤn as a common reference to ℤ2 and ℤ3. An n-D digital image is defined with an image intensity function f : ℤn → ℝ. Each element of an n-D digital space is referred to as a spel (an abbreviation of “spatial element”), whose position is denoted by Cartesian coordinates (x1, x2) or (x1, x2, x3), where x1, x2, x3 ∈ ℤ. For any two spels p, q ∈ ℤn, |p – q| denotes the Euclidean distance between the two spels. For any vector v ∈ ℝn, |v| gives its magnitude.

2.3.1. Edge detection and distance transform computation

The purpose of edge detection is to compute sample points on the unknown partitioning manifolds in a digital image. Here, we adopt an edge detection approach combining both Laplacian of Gaussian (LoG) and Derivative of Gaussian (DoG) operators. Specifically, an edge is located at a zero crossing of LoG if the absolute value of DoG there exceeds a predefined threshold. Since edge locations form a set of points in ℝn, a zero crossing of LoG may not coincide with a spel having integral coordinates. This problem is solved by analyzing the topological consistency of LoG sign alternation at spels over a 2ⁿ neighborhood; see Fig. 3 for the geometric classes of possible alternation patterns over a 2 × 2 × 2 neighborhood. Alternation patterns in a geometric class are identical under mirror reflection and/or rotations by integral multiples of 90°. Topologically consistent cases of LoG sign alternation are shown in Fig. 3a, where the points with identical LoG sign are 6-connected [47–49]; a few examples of topologically inconsistent alternation patterns are shown in Fig. 3b. Here, 6-connectivity is enforced for topological consistency because 26-connectivity allows two voxels with identical LoG sign to meet at a vertex, and such an object may not be locally separated by a pseudo-Riemannian manifold.

Fig. 3.

Different patterns of LoG sign alternation in a 2 × 2 × 2 neighborhood. Spels in a 2 × 2 × 2 neighborhood are marked with ± or ∓ (light gray), indicating that if the sign of LoG at a spel marked with ± is positive, then that at a spel marked with ∓ is negative, or vice versa. (a) All possible geometric classes of LoG sign alternations with a valid zero crossing. (b) A few examples of topologically inconsistent LoG sign alternations without a valid zero crossing.
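The 6-connectivity test on LoG sign patterns in a 2 × 2 × 2 neighborhood can be written compactly. In this sketch (function names hypothetical), a pattern yields a valid zero crossing only if the non-negative and the negative spels are both non-empty and each forms a single 6-connected set, where two corners of the cube are 6-adjacent when they differ in exactly one coordinate. Treating a LoG value of exactly zero as non-negative is an assumption.

```python
import itertools
import numpy as np

CORNERS = list(itertools.product((0, 1), repeat=3))

def is_six_connected(vertices):
    """True if the given cube corners form one non-empty 6-connected set."""
    vs = set(vertices)
    if not vs:
        return False
    start = next(iter(vs))
    seen, stack = {start}, [start]
    while stack:
        v = stack.pop()
        for k in range(3):  # 6-neighbors inside the cube differ in exactly one coordinate
            w = v[:k] + (1 - v[k],) + v[k + 1:]
            if w in vs and w not in seen:
                seen.add(w)
                stack.append(w)
    return seen == vs

def has_consistent_zero_crossing(log_cube):
    """log_cube: 2x2x2 LoG values; zero is treated as non-negative (assumption)."""
    cube = np.asarray(log_cube)
    pos = [c for c in CORNERS if cube[c] >= 0]
    neg = [c for c in CORNERS if cube[c] < 0]
    return is_six_connected(pos) and is_six_connected(neg)

# Usage: build a cube from the corners whose LoG is non-negative
def cube_with_positive(corners):
    cube = -np.ones((2, 2, 2))
    for c in corners:
        cube[c] = 1.0
    return cube

single = has_consistent_zero_crossing(cube_with_positive([(0, 0, 0)]))               # True
diagonal = has_consistent_zero_crossing(cube_with_positive([(0, 0, 0), (1, 1, 1)]))  # False
```

A single positive corner is consistent because the seven negative corners stay 6-connected, whereas two positive corners meeting only diagonally across the cube are rejected, matching the rationale given for 6-connectivity.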

A zero crossing of LoG is identified only for topologically consistent cases. To determine the edge location, first, a zero crossing is located for each pair of points with alternating LoG signs in the 2ⁿ neighborhood; the edge is then located at the mean of these zero crossings. The DoG value at the edge location is determined by n-linear interpolation (bilinear in 2-D, trilinear in 3-D) of DoG values at the grid locations in the 2ⁿ neighborhood. To our knowledge, the idea of using topological consistency to locate zero crossings is new. Finally, two DoG thresholds thrhigh and thrlow and a technique similar to hysteresis, originally proposed in Canny’s edge detection algorithm [50], are used to select both strong and weak edges while avoiding noisy zero crossings. The two thresholds thrhigh and thrlow were determined using the hysteresis threshold detection algorithm for the Canny edge detector [51,52]. Saha et al. [53] described an application-dependent training approach to determine different gradient parameters.
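One way to realize the localization step for an n-D cell is sketched below under stated assumptions: the “pairs of points with alternating LoG signs” are taken to be 6-adjacent corners (corners differing in one coordinate), each zero crossing is found by linear interpolation along that cell edge, and DoG is then interpolated n-linearly at the mean location. The topological consistency check and the hysteresis selection are not repeated here, and the function names are hypothetical.

```python
import itertools
import numpy as np

def nlinear(cell, x):
    """n-linear interpolation inside a unit cell with corner values cell[(0|1,)*n]."""
    cell = np.asarray(cell, dtype=float)
    value = 0.0
    for c in itertools.product((0, 1), repeat=cell.ndim):
        w = np.prod([x[k] if c[k] else 1.0 - x[k] for k in range(cell.ndim)])
        value += w * cell[c]
    return value

def locate_edge_in_cell(log_cell, dog_cell):
    """Mean of linear LoG zero crossings along cell edges, and the DoG value there."""
    log_cell = np.asarray(log_cell, dtype=float)
    n = log_cell.ndim
    crossings = []
    for c in itertools.product((0, 1), repeat=n):
        for k in range(n):
            if c[k] == 1:
                continue
            d = c[:k] + (1,) + c[k + 1:]     # 6-adjacent corner along axis k
            a, b = log_cell[c], log_cell[d]
            if (a >= 0) != (b >= 0):          # sign alternation along this cell edge
                x = np.array(c, dtype=float)
                x[k] = a / (a - b)            # linear zero crossing, in [0, 1]
                crossings.append(x)
    if not crossings:
        return None, None
    loc = np.mean(crossings, axis=0)
    return loc, nlinear(dog_cell, loc)

# Usage (2-D): LoG varies as x0 - 0.3 across the cell and DoG as 1 + 2 * x0
loc, dog = locate_edge_in_cell([[-0.3, -0.3], [0.7, 0.7]], [[1.0, 1.0], [3.0, 3.0]])
```

In this example both crossings lie at x0 = 0.3, so the edge is placed at (0.3, 0.5) and the interpolated DoG is 1.6.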

The distance transform is defined as a function, or an image, DT : ℤn → ℝ, where DT(p), for p ∈ ℤn, gives the Euclidean distance from p to the closest partitioning manifold. Here, the basic idea is to use edge locations in ℝn and compute a Euclidean distance transform from these locations. Let E denote the set of all edge points in an image; the binding box of an edge location e ∈ E is the 2ⁿ neighborhood surrounding that point. For every edge location e ∈ E, distance transform values are initialized at the 2ⁿ vertices of e’s binding box by directly measuring their distances from e. Following this initialization, the Euclidean distance transform values are propagated inward using a wave propagation algorithm similar to the one adopted in [54,55]. See Fig. 4 for results of edge location and distance transform computation.
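A 2-D sketch of this initialization-plus-propagation scheme is shown below. It is a generic nearest-feature propagation written for illustration, not the algorithm of [54,55]: each spel stores the edge point that currently gives its smallest distance and offers that edge point to its 8-neighbors, which accept it whenever it lowers their value. Propagation of this kind may be slightly inexact for some edge configurations; the function name is hypothetical.

```python
import numpy as np
from collections import deque

OFFSETS_8 = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]

def edge_distance_transform(shape, edge_points):
    """Approximate Euclidean DT on a 2-D grid from sub-pixel edge points (row, col)."""
    H, W = shape
    dt = np.full(shape, np.inf)
    nearest = np.zeros(tuple(shape) + (2,))  # edge point currently closest to each spel
    queue = deque()
    # Initialization: the 2^n = 4 vertices of each edge point's binding box
    for e in edge_points:
        e = np.asarray(e, dtype=float)
        r0, c0 = int(np.floor(e[0])), int(np.floor(e[1]))
        for r in (r0, r0 + 1):
            for c in (c0, c0 + 1):
                if 0 <= r < H and 0 <= c < W:
                    d = np.hypot(r - e[0], c - e[1])
                    if d < dt[r, c]:
                        dt[r, c], nearest[r, c] = d, e
                        queue.append((r, c))
    # Propagation: offer each spel's nearest edge point to its 8-neighbors
    while queue:
        r, c = queue.popleft()
        e = nearest[r, c]
        for dr, dc in OFFSETS_8:
            rr, cc = r + dr, c + dc
            if 0 <= rr < H and 0 <= cc < W:
                d = np.hypot(rr - e[0], cc - e[1])
                if d < dt[rr, cc] - 1e-12:
                    dt[rr, cc], nearest[rr, cc] = d, e
                    queue.append((rr, cc))
    return dt

# Usage: a horizontal edge sampled at row 2.5 on a 5 x 5 grid
dt = edge_distance_transform((5, 5), [(2.5, c) for c in range(5)])
```

Because distances are measured to the sub-pixel edge points themselves, spels adjacent to the edge receive 0.5 rather than 0 or 1, which is the accuracy gain this initialization is meant to provide.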

Fig. 4.

Results of t-scale computation. (a) A 2-D image slice from the Brainweb MR brain phantom data. (b) Computed edge locations (red) and gray scale distance transform. (c) A color coded illustration of 2-D t-scale. (d) Color coding disk at full intensity. (e–g) Same as (a–c) but for 3-D t-scale computation. Results are shown on one image slice; see text for further explanation. (h–j) Same as (e–g) but from another view. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

2.3.2. T-scale computation

As mentioned earlier, the primary t-vector τ1(p) at a spel p ∈ ℤn is computed from the gradient of the DT map at p as follows:

and the unit vector i1(p) along τ1(p) is

In this paper, we have used the Sobel gradient operator.
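Because the equations for τ1(p) and i1(p) are not reproduced in this copy, the following sketch assumes τ1(p) = −DT(p) ∇DT(p)/|∇DT(p)|, i.e., a vector of length DT(p) pointing down the distance gradient toward the nearest edge, with ∇DT estimated by a normalized 3 × 3 Sobel operator in 2-D. Returning zero vectors where the gradient vanishes (e.g., on a symmetric ridge of DT) is an assumption, as are the function names.

```python
import numpy as np

SOBEL_ROW = np.array([[-1, -2, -1], [0, 0, 0], [1, 2, 1]], dtype=float) / 8.0  # d/d(row)
SOBEL_COL = SOBEL_ROW.T                                                       # d/d(col)

def sobel_gradient_at(img, r, c):
    """Normalized Sobel estimate of (d/drow, d/dcol) at an interior spel."""
    win = img[r - 1:r + 2, c - 1:c + 2]
    return np.array([np.sum(win * SOBEL_ROW), np.sum(win * SOBEL_COL)])

def primary_tvector(dt, r, c, eps=1e-12):
    """Assumed form tau1 = -DT(p) * grad DT / |grad DT|; returns (tau1, i1)."""
    g = sobel_gradient_at(dt, r, c)
    norm = np.linalg.norm(g)
    if norm < eps:  # e.g. on a symmetric ridge of DT, where the direction is ambiguous
        return np.zeros(2), np.zeros(2)
    i1 = -g / norm
    return dt[r, c] * i1, i1

# Usage: DT of an edge lying half a spel above row 0 (distance = row + 0.5)
dt = (np.arange(11, dtype=float)[:, None] + 0.5) * np.ones((1, 11))
tau1, i1 = primary_tvector(dt, 5, 5)
```

The division by 8 makes the Sobel response equal to the true slope of a linear function; only the direction of the gradient matters for i1(p), while the length of τ1(p) comes from DT(p) itself.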

Once the primary t-vector τ1(p) is determined, computation of the secondary t-vector τ2(p) in 2-D is straightforward because the vector lies on the straight line Lp through p perpendicular to τ1(p). Thus, τ2(p) may be computed by locating the closest partitioning manifold along Lp. However, a difficulty is that the edge locations representing a partitioning manifold form a discrete set of points in ℝn; therefore, simply sampling along a straight line may miss the target manifold. This challenge is overcome by modifying the search process as follows. Let p + iΔLp, i = 1, 2, …, be the sample points on the line Lp; the jth sample point is sufficiently close to the target manifold along Lp if it satisfies the following two conditions:

DT(p+jΔLp) ⩽ δDT.

The angular difference between the two primary t-vectors τ1(p + jΔLp) and τ1(p + jΔLp + ΔLp) at the two successive sample points p + jΔLp and p + jΔLp + ΔLp is close to 180°; in this paper, we have used “⩾ 135°” to account for artifacts due to finite precision and other errors. It may be noted that, if the angular difference is less than 90°, the two points are on the same side of the partitioning manifold. Thus, the threshold of 135° was picked midway between the ideal crossing value of 180° and the 90° bound below which the two points fall on the same side of the partitioning manifold.

The value of δDT is determined by the density of edge locations and it should also define the sample interval size ΔLp; in this paper, we have used δDT = 1. Finally, the target manifold is located on the line Lp at a distance of jΔLp + DT(p + jΔLp) from p.
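The two stopping conditions translate into a marching search along both directions of Lp. In this sketch, DT and τ1 are supplied as callables (in practice they would be interpolated from the DT map, which is an assumption here), the sample spacing is `step`, and the closer of the two hits is returned as τ2(p). The rectangular test object and all function names are hypothetical.

```python
import numpy as np

def secondary_tvector_2d(p, i1, dt_fn, tau1_fn, step=0.5, dt_thresh=1.0,
                         min_angle_deg=135.0, max_steps=100000):
    """March along both directions of L_p (through p, orthogonal to i1); return tau2 or None."""
    p = np.asarray(p, dtype=float)
    d = np.array([-i1[1], i1[0]])                 # unit direction of L_p
    cos_thr = np.cos(np.deg2rad(min_angle_deg))
    best = None
    for u in (d, -d):
        for j in range(1, max_steps):
            q = p + j * step * u
            if dt_fn(q) > dt_thresh:              # condition 1: DT(q) <= delta_DT
                continue
            a, b = tau1_fn(q), tau1_fn(q + step * u)
            na, nb = np.linalg.norm(a), np.linalg.norm(b)
            if na < 1e-12:                         # q lies exactly on an edge
                dist = j * step
            elif nb > 1e-12 and np.dot(a, b) / (na * nb) <= cos_thr:
                dist = j * step + dt_fn(q)         # condition 2: angle >= 135 degrees
            else:
                continue
            if best is None or dist < np.linalg.norm(best):
                best = dist * u
            break
    return best

# Usage: the boundary of the rectangle [0, 20] x [0, 4] is the partitioning curve
def closest_on_box(q, w=20.0, h=4.0):
    x, y = q
    if 0.0 < x < w and 0.0 < y < h:               # inside: nearest of the four sides
        cands = [np.array([0.0, y]), np.array([w, y]), np.array([x, 0.0]), np.array([x, h])]
        return min(cands, key=lambda c: np.linalg.norm(c - q))
    return np.array([min(max(x, 0.0), w), min(max(y, 0.0), h)])  # outside: clamp

def dt_box(q):
    return float(np.linalg.norm(closest_on_box(q) - q))

def tau1_box(q):
    return closest_on_box(q) - q

p = np.array([6.2, 1.0])
i1 = tau1_box(p) / dt_box(p)                      # (0, -1): the nearest side is y = 0
tau2 = secondary_tvector_2d(p, i1, dt_box, tau1_box)
```

Inside the strip, condition 1 alone holds almost everywhere (DT = 1 along the search line), so it is the flip of τ1 across the side x = 0 that pins down τ2(p) = (−6.2, 0); this illustrates why both conditions are needed.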

Computation of the secondary t-vector τ2(p) is more challenging in 3-D than in 2-D. The primary reason is that the determination of τ1(p) only narrows τ2(p) down to a plane P perpendicular to τ1(p), and τ2(p) may lie along any direction in that plane. Although τ2(p) is uniquely defined in Section 2.2, its computation demands a search of the image geometry on the plane P. To keep the computation of t-scale confined to a local neighborhood, we choose τ2(p) along the maximum curvature direction at the closest point r on a partitioning manifold (see Fig. 2). A challenge is how to compute the maximum curvature direction at r, because an analytic expression of the partitioning surface is unknown; only discrete sample points on the surface, i.e., edge locations, are available. The estimation of curvature for discrete 3-D objects has been an important topic in computer graphics, and several methods have been proposed [56–59]. The basic idea behind our algorithm for detecting the maximum curvature direction is to first determine the primary t-vector τ1 at every spel in the neighborhood of p. The primary t-vector τ1 at a neighboring spel of p intersects the partitioning surface in the vicinity of r (see Fig. 5). More importantly, the angular inclination of τ1 with the plane P changes most rapidly along the direction of maximum principal curvature and most slowly along the direction of minimum principal curvature. In other words, the projection of the unit vector along τ1 onto P takes larger values along the maximum curvature direction and smaller values along the minimum curvature direction. Although our method is primarily based on this observation, to reduce the effect of noise and discretization, we determine the principal curvature directions using principal component analysis (PCA) of these projection vectors on P as follows.
Let q1, q2, …, qm be m points in the neighborhood of p, and let i′1(q) (solid vectors in Fig. 5b and c) be the projection of the unit vector i1(q) onto P. To enforce axial symmetry of the projection vectors, each projection vector i′1(q) (solid line; Fig. 5c) is accompanied by its opposite vector –i′1(q) (dotted line). PCA of all points represented by these vectors is applied to compute the two principal directions; the eigenvector corresponding to the larger eigenvalue gives the direction of maximum principal curvature, while the other eigenvector provides the direction of minimum curvature (see Fig. 5d). The secondary t-vector τ2(p) is chosen along the maximum curvature direction; its exact value is determined using the same algorithm adopted for detecting τ2(p) in 2-D. Finally, once the primary and secondary t-vectors are known, finding the tertiary t-vector in 3-D is equivalent to determining the secondary t-vector in 2-D.

Fig. 5.

Computation of the secondary t-vector τ2(p) in 3-D. (a) A partitioning surface with the primary t-vector τ1(p) (black) at the candidate point p and τ1(q) (gray) for several points q in the neighborhood. The plane P orthogonal to τ1(p) is indicated. (b) i′1(q), the projections of the unit vectors τ1(q)/|τ1(q)| onto P, are indicated for several points q in the neighborhood of p, along with the curve formed by the intersection of P and the partitioning surface. (c) Computation of principal directions using PCA of i′1(q) (solid) and –i′1(q) (dotted). (d) Projection of principal directions onto the partitioning surface.
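The PCA step can be sketched as follows, assuming the unit primary t-vectors of the neighbors are already available. The function name is hypothetical; the minimum-curvature direction is taken as the in-plane direction orthogonal to the maximum one (a choice that stays well defined when the projections span only one direction), and the sketch assumes the neighborhood is not planar, so that at least one projection is non-zero.

```python
import itertools
import numpy as np

def principal_directions(i1_p, i1_neighbors):
    """PCA of projected unit primary t-vectors; returns (max-curvature dir, min-curvature dir)."""
    n = np.asarray(i1_p, dtype=float)
    n = n / np.linalg.norm(n)                 # normal of the plane P
    V = np.asarray(i1_neighbors, dtype=float)
    proj = V - np.outer(V @ n, n)             # i1'(q): projections onto P
    pts = np.vstack([proj, -proj])            # add -i1'(q) for axial symmetry
    cov = pts.T @ pts / len(pts)              # mean is zero by construction
    _, evecs = np.linalg.eigh(cov)            # ascending eigenvalues
    e_max = evecs[:, -1]
    e_min = np.cross(n, e_max)                # in-plane direction orthogonal to e_max
    return e_max, e_min / np.linalg.norm(e_min)

# Usage: interior point of a cylinder whose axis is the z-axis
def i1_cylinder(q):
    v = np.array([q[0], q[1], 0.0])           # nearest surface point lies radially outward
    return v / np.linalg.norm(v)

p = np.array([3.0, 0.0, 0.0])
neighbors = [p + np.array(o) for o in itertools.product((-1, 0, 1), repeat=3) if any(o)]
e_max, e_min = principal_directions(i1_cylinder(p), [i1_cylinder(q) for q in neighbors])
```

For the cylinder, moving along the axis leaves τ1 unchanged while moving around the circumference tilts it, so PCA returns the circumferential direction (y) as the maximum-curvature direction and the axial direction (z) as the minimum-curvature direction.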

2.3.3. T-scale smoothing

A smoothing filter is often used to reduce noise in intensity images. However, smoothing a t-scale image is not as trivial as smoothing a scalar image. First, the matrix representation T(p) of t-scale at p is obtained as described in Section 2.2 to enable various tensor operations and statistical analyses [60]. Weickert [39] used component-wise Gaussian convolution of local structure tensors to obtain a smooth representation. To avoid producing negative eigenvalues in component-wise averaging, which would contradict the basic definition of t-scale, we have adopted the Log–Euclidean distance (L–E) approach [61]. The effectiveness of the L–E approach in diffusion tensor image (DTI) interpolation has been demonstrated in [61].

Let A = QΛQᵀ, where Q is a unitary matrix and Λ is a diagonal matrix with real nonnegative elements, be a symmetric positive semi-definite matrix. The logarithm (which requires all diagonal elements of Λ to be positive) and the exponential of this matrix are defined as follows:

A smoothing function using the L–E approach is defined using a discrete Gaussian kernel Gσ : ℤn → ℝ

where Gsupport is the support of the kernel and K is the scalar normalizing factor ensuring that ∑a∈Gsupport Gσ(a) = 1. Finally, the L–E based tensor smoothing algorithm is defined as follows using the kernel function Gσ defined above:

where ‘*’ is the convolution operator.
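The displayed equations for the matrix logarithm, exponential, kernel and smoothing step are not reproduced here. The sketch below uses the standard eigen-decomposition definitions log A = Q log(Λ) Qᵀ and exp A = Q exp(Λ) Qᵀ with a normalized 2-D Gaussian kernel, applied as exp(Gσ * log T). Clamping eigenvalues to a small `eps` before the logarithm (so that semi-definite t-scale matrices are accepted) and edge padding at image borders are assumptions; the function names are hypothetical.

```python
import numpy as np

def spd_log(A, eps=1e-6):
    """Matrix logarithm Q log(Lambda) Q^T of (stacked) symmetric matrices."""
    w, Q = np.linalg.eigh(A)
    w = np.maximum(w, eps)  # assumption: clamp zero eigenvalues so the log is defined
    return (Q * np.log(w)[..., None, :]) @ np.swapaxes(Q, -1, -2)

def sym_exp(L):
    """Matrix exponential Q exp(Lambda) Q^T of (stacked) symmetric matrices."""
    w, Q = np.linalg.eigh(L)
    return (Q * np.exp(w)[..., None, :]) @ np.swapaxes(Q, -1, -2)

def gaussian_kernel(sigma, radius):
    ax = np.arange(-radius, radius + 1)
    g = np.exp(-(ax[:, None] ** 2 + ax[None, :] ** 2) / (2.0 * sigma ** 2))
    return g / g.sum()  # normalization plays the role of K

def le_smooth(T, sigma=1.0, radius=2):
    """Log-Euclidean smoothing exp(G_sigma * log T) of a 2-D field T of shape (H, W, n, n)."""
    H, W = T.shape[:2]
    L = spd_log(T)
    G = gaussian_kernel(sigma, radius)
    Lp = np.pad(L, ((radius, radius), (radius, radius), (0, 0), (0, 0)), mode="edge")
    acc = np.zeros_like(L)
    for dr in range(2 * radius + 1):
        for dc in range(2 * radius + 1):
            # symmetric kernel, so this correlation equals the convolution
            acc += G[dr, dc] * Lp[dr:dr + H, dc:dc + W]
    return sym_exp(acc)

# Usage: the L-E mean of diag(1, 4) and diag(4, 1) is 2I (the Euclidean mean is 2.5I)
A, B = np.diag([1.0, 4.0]), np.diag([4.0, 1.0])
le_mean = sym_exp(0.5 * spd_log(A) + 0.5 * spd_log(B))
field = np.tile(np.diag([9.0, 1.0]), (6, 6, 1, 1))
smoothed = le_smooth(field)
```

Since the result is the exponential of a symmetric matrix, its eigenvalues are strictly positive by construction; the two-tensor example also shows the L–E average taking a geometric rather than arithmetic mean of the eigenvalues.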

2.4. T-scale based image filtering

In this section, we describe a t-scale based diffusive filtering method built primarily on the theory of anisotropic diffusion originally proposed by Perona and Malik [62] and subsequently studied by others [24,63]. The primary objective of the new t-scale based filtering is to govern the diffusion process in a space-variant and orientation-dependent fashion so that it fits the local image structures captured by t-scale. Anisotropic diffusion [62] was originally described to encourage diffusion within a region (characterized by low intensity gradients) while discouraging it across object boundaries (characterized by high intensity gradients). The anisotropic diffusion process at any spel p may be defined as follows:

where f is the image intensity function; t is the time variable; “div” is the divergence operator; V = GF is the diffusion flow vector; G is the diffusion conductance function; F is the intensity gradient vector; Δτ is the volume enclosed by the surface s surrounding p; and the vector surface element is u ds, where u is the unit vector orthogonal to, and outward-directed with respect to, the infinitesimal surface element ds. The key idea of anisotropic diffusion [62] is to spatially vary the conductance using a nonlinear, non-increasing function of gradient magnitude, e.g., G = exp(−|F|²/(2σ²)), resulting in a non-monotonic relation between flow and gradient magnitude. Guided by the original theory of Perona and Malik, a diffusive filtering process in a digital image is formulated as an iterative process as follows:

where fi represents the image intensity at the ith iteration; µα is the pixel adjacency relation; KD is a diffusion constant; Vi−1 is the intensity flow vector at the (i − 1)th iteration (see below for a precise definition); D(p, q) is the unit vector along the direction from p to q; and ‘·’ is the vector dot product operator. Assuming a uniform pixel adjacency relation, the diffusion constant KD should satisfy the following inequality to ensure a monotonic intensity variation with iterations [63]

Using standard 26-adjacency in 3-D, KD = 1/27. The flow vector Vi is determined by the following equation:

where

and Gi is an orientation- and space-adaptive conductance function at the ith iteration. As mentioned before, Gi should be a nonlinear function of the local intensity gradient Fi, which leads to a non-monotonic behavior of flow with gradients. Gaussian functions, as follows, are popularly used for Gi

where σ is the control parameter determining the degree of filtering. When σ is large, the degree of filtering is high, and the likelihood of blurring across boundaries and of smearing out regions containing fine details increases. On the other hand, when σ is small, the filtering process is conservative and more noise survives after filtering. In conventional diffusive filtering methods [62,63], the diffusion process adapts to the local gradient while the controlling parameter σ is kept fixed, limiting fine control over, and adaptivity to, local image structural properties. Weickert [39] introduced the notion of structure tensor to control the σ parameter and demonstrated its use in along-structure smoothing. Our motivation is to use a geometric tensor representation of local structures in filtering that facilitates along-structure smoothing while preserving boundary sharpness by discouraging cross-structure diffusion flow; a preliminary version of the t-scale based image filtering algorithm was presented in [30] using the old formulation of t-scale. Here, the controlling parameter σ is determined by local t-scale in a space- and direction-variant manner as follows:

The above formulation ensures a minimum diffusion of σmin during the filtering process; the second component in the expression on the r.h.s. uses a monotonically non-decreasing function χ to control the local diffusion process in a direction-variant manner using the two t-scale derived measures ζp(q) and ζq(p). The term σψ determines the sensitivity of the diffusion process to local t-scale measures. The t-scale measure ζp(q) is defined as follows:

where ipq is the unit vector along the direction from p to q. Note that, by considering t-scale T(p) as a covariance matrix, the measure ζp(q) gives the square root of the variance of the system along the vector ipq. Here, ζp(q) is treated as an approximate measure of the radial length of the ellipsoid T(p) along the direction ipq. In this paper, we have used the following functional form for χ

where σL is the maximum expected radial length of a t-scale ellipse, computed as the maximum DT value in the image. In all experimental results presented in Section 4, the parameter σψ is set to the overall noise level in the image, computed as described in [24], and σmin is set to 25% of σψ. Finally, in all experiments the filtering process was run for twenty iterations.
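The two ingredients of this subsection can be illustrated with a short Python/NumPy sketch. `zeta` evaluates ζp(q) = sqrt(ipqᵀ T(p) ipq), treating T(p) as a covariance matrix as described above. `diffusion_step` performs one explicit iteration of a flux-based diffusive filter on a 2-D image. The Gaussian conductance exp(−ΔI²/2σ²) and the callback `sigma_fn(p, q)` are assumptions for illustration only; the exact combination of σmin, σψ, χ, ζp(q) and ζq(p) defined in this section is not reproduced here and would be supplied through that callback. All function names are hypothetical.

```python
import numpy as np


def zeta(T_p, p, q):
    """Approximate radial length of the t-scale ellipsoid T(p) along i_pq.

    T(p) is treated as a covariance matrix, so the result is
    sqrt(i_pq^T T(p) i_pq), where i_pq is the unit vector from p to q.
    """
    d = np.asarray(q, dtype=float) - np.asarray(p, dtype=float)
    i_pq = d / np.linalg.norm(d)
    return float(np.sqrt(i_pq @ np.asarray(T_p, dtype=float) @ i_pq))


def diffusion_step(img, sigma_fn, dt=0.2):
    """One explicit 4-neighbour diffusion iteration on a 2-D image.

    ASSUMPTIONS (illustrative only): the conductance between neighbours p and q
    is exp(-(I(q) - I(p))**2 / (2 * sigma**2)), and sigma_fn(p, q) is a
    hypothetical callback returning the local, direction-variant sigma(p, q).
    """
    img = np.asarray(img, dtype=float)
    out = img.copy()
    rows, cols = img.shape
    for r in range(rows):
        for c in range(cols):
            flux = 0.0
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < rows and 0 <= cc < cols:
                    diff = img[rr, cc] - img[r, c]
                    sigma = sigma_fn((r, c), (rr, cc))
                    # Large sigma -> conductance near 1 -> stronger smoothing.
                    flux += np.exp(-diff ** 2 / (2.0 * sigma ** 2)) * diff
            out[r, c] = img[r, c] + dt * flux
    return out


# Parameter setting mirroring the text: sigma_min is 25% of sigma_psi.
sigma_psi = 10.0  # illustrative noise level, not a value from the paper
sigma_min = 0.25 * sigma_psi
```

With a symmetric `sigma_fn`, the flux exchanged between p and q is antisymmetric, so the mean intensity is conserved; dt = 0.2 keeps this explicit 4-neighbour scheme stable because each conductance is at most 1.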

2.5. T-scale based n-linear image interpolation

Linear interpolation is a widely used technique for image resampling [64]. For a one-dimensional discrete signal, the linear interpolation between two successive samples is defined by the straight line joining the sample points. In an n-D digital image, intensity values are known at spels p ∈ ℤⁿ with integral coordinates. Following the principle of linear interpolation, the intensity value at a location pc = (x1, x2, …, xn) ∈ ℝⁿ is determined as a weighted sum of the intensity values at the 2ⁿ vertices of the bounding box of pc. Let ⌊·⌋ and ⌈·⌉ denote the floor and ceiling operators. The vertices of the bounding box of pc are p1 = (⌊x1⌋, ⌊x2⌋, …, ⌊xn⌋), p2 = (⌈x1⌉, ⌊x2⌋, …, ⌊xn⌋), …, p2ⁿ = (⌈x1⌉, ⌈x2⌉, …, ⌈xn⌉). The estimated intensity value at pc is given as follows:
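
$$I(p_c) = \sum_{i=1}^{2^n} w_i(p_c)\, I(p_i),$$

where

$$w_i(p_c) = \prod_{k=1}^{n}\bigl(1 - \lvert x_k - x_k^{(i)} \rvert\bigr), \qquad p_i = \bigl(x_1^{(i)}, \ldots, x_n^{(i)}\bigr).$$
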
The basic idea of using t-scale in n-linear image interpolation is to introduce an anisotropic space in which distance increases more slowly along the direction of the local structure and faster in the cross-structure direction. A smaller value of ζpi(pc) indicates that the vertex pi is close to the partitioning manifold along the vector ipipc; therefore, the weight of pi in interpolating the intensity value at pc should be reduced to avoid cross-region mixing. Conversely, a larger value of ζpi(pc) means that pi is relatively far from the partitioning manifold along ipipc; therefore, a more generous weight may be assigned to pi to encourage along-edge smoothing. Accordingly, the t-scale based weights for linear interpolation are defined as follows:

Finally, the t-scale based linear interpolation procedure is defined by the following equation:

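A minimal Python sketch of standard n-linear interpolation over the 2ⁿ vertices of the bounding box is given below, assuming `img` is a NumPy array indexed by integer spel coordinates and `pc` lies inside the image domain. The optional `vertex_factor` hook is hypothetical: it only shows where a per-vertex factor that grows with ζpi(pc) could modulate the standard weights, with renormalisation so that the weights still sum to one. It is not the t-scale weight equation of this section, which is not reproduced here.

```python
import itertools

import numpy as np


def nlinear_interpolate(img, pc, vertex_factor=None):
    """n-linear interpolation of the n-D array `img` at the real location `pc`.

    vertex_factor(vertex, pc) is an optional, hypothetical hook returning a
    non-negative multiplier for each vertex (e.g. one that increases with
    zeta_{p_i}(p_c)); the modulated weights are renormalised to sum to one.
    """
    img = np.asarray(img, dtype=float)
    pc = np.asarray(pc, dtype=float)
    lo = np.floor(pc).astype(int)  # the vertex p_1 = (floor(x_1), ..., floor(x_n))
    frac = pc - lo
    upper = np.array(img.shape) - 1
    num = den = 0.0
    # Enumerate the 2^n vertices of the bounding box of pc.
    for offset in itertools.product((0, 1), repeat=img.ndim):
        offset = np.array(offset)
        vertex = np.minimum(lo + offset, upper)  # clamp at the last sample
        # Standard weight: product over axes of (1 - |x_k - vertex_k|).
        w = float(np.prod(np.where(offset == 1, frac, 1.0 - frac)))
        if vertex_factor is not None:
            w *= vertex_factor(tuple(int(v) for v in vertex), tuple(pc))
        num += w * img[tuple(vertex)]
        den += w
    return num / den if den > 0 else float("nan")
```

Without a `vertex_factor`, the weights already sum to one and the function reproduces ordinary bilinear or trilinear interpolation, which is exact for intensities that vary linearly along each axis.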
3. Experimental methods

In this section, we describe our experimental methods to examine the performance of the new t-scale computation as well as the performance of t-scale based filtering and interpolation methods. Three different experiments were designed as follows:

T-scale computation: To evaluate the accuracy of the new efficient t-scale computation method using 3-D Brainweb phantoms at various levels of noise and blur.

T-scale based image filtering: To evaluate the performance of the t-scale based anisotropic diffusive method and compare it with gradient and structure tensor based anisotropic diffusive methods on both 2- and 3-D images.

T-scale based n-linear image interpolation: To evaluate the performance of t-scale based n-linear image interpolation method in comparison with standard n-linear and windowed-sinc interpolation methods.

3.1. Accuracy of t-scale computation method

The purpose of our accuracy evaluation study is to examine the difference between the t-scale obtained using the efficient differential geometric approach and the true value computed directly from the definition. To perform this test, we generated phantom images at five levels of noise (8–20%) and blur (σblur: 0.5–2.5) from the simulated brain MR image available at brainweb.bic.mni.mcgill.ca/brainweb and from a 3-D pulmonary human computed tomography (CT) image. The Brainweb MR phantom data were downloaded with the following parameters: matrix size: 181 × 217 pixels, number of slices: 181, isotropic voxel size: 1 mm, noise: 3% and intensity non-uniformity: 20%. The pulmonary CT image was acquired using the following protocol: 120 kV, 100 effective mAs, pitch factor: 1.0, nominal collimation: 64 × 0.6 mm, image matrix: 512 × 512, number of slices: 518, in-plane resolution: (0.55 mm)² and slice thickness: 0.5 mm.

Because the definition of t-scale is based on an image representation with partitioning manifolds, true t-scale cannot be computed from a general image. To define the manifolds, we partitioned the image into three regions, namely, white matter, gray matter and background (Figs. 6b and 7b). The true t-scale was obtained from the partitioned image using a sample-line based approach [30] with a high angular sampling of 10,000 lines over the 3-D angular space. Test images for t-scale computation using the new differential geometric approach were derived from the original image by adding blur and white Gaussian noise (Figs. 6d and g, and 7d and g).

Fig. 6.

Accuracy of t-scale computation results. (a) An original image slice from the Brainweb MR phantom data. (b) Partitions of white and gray matter regions used to obtain ground-truth t-scale values. (c) True 3-D t-scale image computed by a dense spatial sampling approach on the hard partition image of (b). (d) Phantom image slice with blur and noise. (e) 3-D t-scale image computed for (d) using the proposed method. (f) Log–Euclidean error map for (e) as compared to (c). (g–i) Same as (d–f) but for the phantom at higher noise and blur.

Fig. 7.

Same as Fig. 6 but from the coronal view.

Let WT(p) be the true t-scale matrix at a spel p computed from the partitioned image and let Wtest(p) denote the t-scale matrix obtained from a test image by applying the differential geometric algorithm. Although the t-scale computation methods were applied to the entire image, the error analysis was confined to the white and gray matter regions to exclude the background; better results were obtained when the background region was included in the error analysis. Let Ω denote the region over which the error analysis is performed. The error of t-scale computation is defined as the average normalized Log–Euclidean distance between the true and the computed t-scales over the target region Ω as follows:

where ‖·‖ is the Euclidean norm of a positive definite symmetric matrix.
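The Log–Euclidean distance can be sketched in Python/NumPy as below: the matrix logarithm of each symmetric positive definite t-scale matrix is computed via an eigendecomposition, and the Euclidean (Frobenius) norm of the difference is taken. The per-spel normalisation by ‖log WT(p)‖ in `mean_normalized_error` is our assumption and not necessarily the normalisation used in the error definition above; all function names are hypothetical.

```python
import numpy as np


def spd_log(A):
    """Matrix logarithm of a symmetric positive definite matrix: V diag(log w) V^T."""
    w, V = np.linalg.eigh(np.asarray(A, dtype=float))
    return (V * np.log(w)) @ V.T


def log_euclidean_distance(A, B):
    """Frobenius norm of log(A) - log(B) for SPD matrices A and B."""
    return float(np.linalg.norm(spd_log(A) - spd_log(B), ord="fro"))


def mean_normalized_error(true_ts, test_ts):
    """Average over the region of ||log W_T - log W_test|| / ||log W_T||.

    ASSUMPTION: normalising each spel's distance by ||log W_T(p)|| is a guess,
    not the paper's stated normalisation.
    """
    errs = []
    for W_true, W_test in zip(true_ts, test_ts):
        ref = np.linalg.norm(spd_log(W_true), ord="fro")
        errs.append(log_euclidean_distance(W_true, W_test) / ref)
    return float(np.mean(errs))
```

Under this assumed normalisation, the relative error is undefined when WT(p) is the identity matrix, because its logarithm is zero.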

3.2. Evaluation of t-scale based image filtering

The purpose of this experiment is to examine the performance of the t-scale based filtering method as compared to gradient and structure tensor based diffusive filtering algorithms. The ITK implementation [65] of gradient-based diffusive filtering was used with its recommended values of 0.125, 3.0 and 5 for the time step, conductance parameter and number of iterations, respectively, for 3-D images. The structure tensor based diffusive filtering algorithm was implemented in accordance with the description in [39], using the parameter settings suggested by the author: 0.001 for the regularization parameter α, 1 for the threshold parameter C, 0.3 for the noise scale σ, 2 for the integration scale ρ, and 10 for the iteration time t. Three images were used in this experiment: (1) a phantom image generated with geometric structures at various scales, (2) a photographic image of an aquarium and (3) a lung CT image. Both the phantom and CT images were corrupted with five different levels of noise (8–20%), and the different filtering algorithms were applied to the noisy images to evaluate their performance. A measure of residual noise was used to assess the performance of each method; in addition, a measure of structure blurring was examined for the phantom image, since knowledge of the true structures is needed to define this measure.

Let I be an original phantom or lung image; I was corrupted by adding zero-mean Gaussian noise n, generating a noisy test image In = I + n. The noise level over the test region Ω in an image was defined as follows:

Note that percent noise is essentially an inverse of the signal-to-noise ratio (SNR), which is widely used as a measure of noise level. Let InF denote the image obtained by applying a filtering algorithm to the noisy image In. The residual noise in the filtered image is then nr = InF − I, and an overall measure of residual noise is defined as follows:

Relative contrast is defined for the phantom image to measure the structure-preserving property of a filtering method in terms of object-to-background contrast relative to residual noise. Let Oj and Bj denote the sets of object and background pixels in a phantom image that are no farther than m pixels from the object/background interface. Such pixels are identified in a binary image using standard morphological operations. We did not use the entire object/background regions to measure relative contrast because structure blurring does not arise in deep interiors; including such regions in the analysis would only reduce the sensitivity of the measurement. The performance of the different methods was analyzed for m = 1 and m = 2. Finally, the relative contrast in an image I is defined as

where µOj and σOj are the mean and standard deviation of intensities over Oj, while µBj and σBj denote the same quantities over Bj.
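The quantities used in this subsection can be sketched in Python/NumPy for 2-D images as follows. `boundary_bands` extracts Oj and Bj by m repeated 4-connected erosions and dilations of a binary object mask (one possible choice of "standard morphological operations"), and `band_statistics` returns µOj, σOj, µBj and σBj. `percent_noise` computes the RMS of a noise image over Ω as a percentage of a caller-supplied `reference` intensity. That normalisation, like all function names here, is an assumption, since the exact noise-level and relative-contrast equations are not reproduced.

```python
import numpy as np


def percent_noise(noise, region, reference):
    """100 * RMS of `noise` over the boolean `region`, relative to `reference`.

    ASSUMPTION: `reference` (e.g. an object/background contrast) stands in for
    the paper's normalisation, which is not reproduced here.
    """
    n = np.asarray(noise, dtype=float)[region]
    return 100.0 * float(np.sqrt(np.mean(n ** 2))) / reference


def _erode(mask):
    # 4-connected erosion; pixels outside the image count as background.
    p = np.pad(mask, 1, constant_values=False)
    return (p[1:-1, 1:-1] & p[:-2, 1:-1] & p[2:, 1:-1]
            & p[1:-1, :-2] & p[1:-1, 2:])


def _dilate(mask):
    # 4-connected dilation.
    p = np.pad(mask, 1, constant_values=False)
    return (p[1:-1, 1:-1] | p[:-2, 1:-1] | p[2:, 1:-1]
            | p[1:-1, :-2] | p[1:-1, 2:])


def boundary_bands(obj, m):
    """Object and background pixels within m 4-connected steps of the interface."""
    obj = np.asarray(obj, dtype=bool)
    inner, outer = obj.copy(), obj.copy()
    for _ in range(m):
        inner = _erode(inner)
        outer = _dilate(outer)
    return obj & ~inner, outer & ~obj


def band_statistics(img, obj, m):
    """Return (mu_O, sigma_O, mu_B, sigma_B) over the bands O_j and B_j."""
    O, B = boundary_bands(obj, m)
    img = np.asarray(img, dtype=float)
    return (float(img[O].mean()), float(img[O].std()),
            float(img[B].mean()), float(img[B].std()))
```

For a 3 × 3 square object, m = 1 keeps its 8 border pixels in Oj and the 12 edge-adjacent background pixels in Bj; using a full 8-connected structuring element instead would also pull in the diagonal corners.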

3.3. Evaluation of t-scale based n-linear interpolation

The performance of the t-scale based n-linear image interpolation method was evaluated using a phantom image and several medical images from different applications, and was compared with the standard n-linear and windowed-sinc interpolation methods [64]. A 3-D phantom image of size 512 × 512 × 512 was generated with a sinusoidally wavy (along the slice direction) pattern of geometric structures whose scales vary from 5 to 10 voxels. In addition, the following sets of medical images were used in our experiment:

The Brainweb MR phantom data described in Section 3.1.

Seven human pulmonary multi-detector CT images with a voxel size of 0.55 × 0.55 × 0.5 mm³ and an in-plane matrix size of 512 × 512, with the number of slices varying between 519 and 728.

Micro-CT images of four cadaveric distal tibia specimens at 28.8 µm isotropic resolution and 3-D image grid size of 768 × 768 × 512.

Five abdominal CT images with a voxel size of 0.59 × 0.59 × 1.00 mm³ and an in-plane matrix size of 512 × 512, with the number of slices varying between 64 and 319.

Starting from an original image I, a sub-sampled image Id was obtained at sub-sampling rates of 2, 3 or 4. A given interpolation method was applied to each sub-sampled image, producing an interpolated image Iint at the original resolution. The performance of the interpolation method was then measured by computing the average normalized absolute difference between the interpolated and the original images as follows:

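A minimal sketch of this evaluation protocol, assuming NumPy arrays, is shown below. `subsample` keeps every `rate`-th spel along each axis to form Id, and `percent_interpolation_error` computes the mean absolute difference between Iint and I as a percentage of the intensity range of I. The range normalisation and the function names are assumptions for illustration; the interpolated image must have the same shape as the original.

```python
import numpy as np


def subsample(img, rate):
    """Keep every `rate`-th sample along every axis (the image I_d in the text)."""
    img = np.asarray(img)
    return img[tuple(slice(None, None, rate) for _ in range(img.ndim))]


def percent_interpolation_error(original, interpolated):
    """Mean absolute difference as a percentage of the original intensity range.

    ASSUMPTION: normalising by max(I) - min(I) is an illustrative choice; the
    paper's normalisation is not reproduced here.
    """
    I = np.asarray(original, dtype=float)
    J = np.asarray(interpolated, dtype=float)
    return 100.0 * float(np.mean(np.abs(J - I))) / float(I.max() - I.min())
```

In this sketch, the sample Id[k] sits at position rate·k of the original grid, so an interpolator must evaluate the sub-sampled image at positions j/rate to return to the original resolution.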
4. Results and discussion

In this section, we discuss the results of the experiments described in the previous section. The performance of the t-scale computation algorithm is qualitatively illustrated in Fig. 4 using 2-D image slices from the Brainweb MR phantom data and the 3-D pulmonary human CT image. The result of the 2-D t-scale computation algorithm on a Brainweb MR phantom image slice, randomly selected from the mid-brain region, is illustrated in Fig. 4a–d. Results of edge location and gray scale distance transformation are presented in Fig. 4b. The color coding scheme by Saha [30] was adopted to display the 2-D t-scale image, where the t-scale at a pixel p represents an ellipse Γ(p). A color is assigned to Γ(p) such that the hue component indicates its orientation while the saturation and intensity components denote its anisotropy and thickness, respectively. The color coding disk at maximum intensity is shown in Fig. 4d. Results of 3-D t-scale computation on the pulmonary CT image are presented in Fig. 4e–j. The 3-D t-scale at a spel p represents an ellipsoid Γ(p). Since the three components of a color space can encode only an ellipse, in 3-D we depict the ellipse formed by the intersection between Γ(p) and the display plane (Fig. 4g and j). Results of both 2-D and 3-D t-scale computation are visually satisfactory. The new algorithm takes 3 s to compute 2-D t-scale for the Brainweb phantom image slice on a desktop with a 2.53 GHz Intel(R) Xeon(R) CPU running Linux, whereas the original sample-line based t-scale computation algorithm [30] takes 83 s for the same image. Since a 3-D implementation of the original sample-line based algorithm is not available, we estimated its expected computation time as follows. The 2-D sample-line based algorithm with 60 sample lines and 60 sample points per line takes approximately 1 min for an image of size 256 × 256.
Therefore, in 3-D with 900 sample lines (to maintain a comparable angular sampling rate) the total run time for a 512 × 512 × 518 image should be approximately
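
$$1\ \text{min} \times \frac{900}{60} \times \frac{512 \times 512 \times 518}{256 \times 256} \times 2 = 62{,}160\ \text{min} \approx 43\ \text{days}.$$
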
The factor of 2 accounts for trilinear interpolation in 3-D in place of bilinear interpolation in 2-D. In contrast, the new 3-D t-scale computation algorithm takes approximately 50 min to compute t-scale for the 3-D CT image.
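The color coding described above (hue for orientation, saturation for anisotropy, intensity for thickness) can be sketched with Python's standard `colorsys` module. We assume that a 2-D t-scale is given as a 2 × 2 symmetric positive definite matrix whose eigenvalues are the squared radii of the ellipse, consistent with reading ζp(q) as a radial length. The anisotropy measure 1 − minor/major, the use of the minor radius as thickness and the scaling constant `t_max` are hypothetical choices, not necessarily those of Saha [30].

```python
import colorsys
import math

import numpy as np


def tscale_color(T, t_max):
    """Map a 2-D t-scale ellipse to RGB (hue=orientation, sat=anisotropy, value=thickness).

    ASSUMPTIONS: T is a 2x2 SPD matrix whose eigenvalues are squared radii;
    anisotropy = 1 - minor/major; thickness = minor radius scaled by t_max.
    """
    w, V = np.linalg.eigh(np.asarray(T, dtype=float))  # ascending eigenvalues
    minor, major = math.sqrt(w[0]), math.sqrt(w[1])
    vx, vy = V[0, 1], V[1, 1]                # major-axis direction
    theta = math.atan2(vy, vx) % math.pi     # an axis orientation is defined modulo pi
    hue = theta / math.pi
    saturation = 1.0 - minor / major if major > 0 else 0.0
    value = min(1.0, minor / t_max)
    return colorsys.hsv_to_rgb(hue, saturation, value)
```

Mapping orientation modulo π onto the full hue circle ensures that an ellipse and the same ellipse rotated by 180° receive identical colors.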

4.1. Results of accuracy analysis

Results of the accuracy analysis of the efficient t-scale computation algorithm, as compared with the results obtained directly from the analytic definition, are qualitatively illustrated in Figs. 6 and 7. As observed in both figures, at moderate blur and noise, the agreement between the efficiently computed t-scale and the analytic t-scale computed in the absence of noise and blur is visually satisfactory. However, at very high noise and blur, fine structures are lost in the t-scale. Results of the quantitative analysis are presented in Table 1. As observed in the table, the performance of the algorithm degrades, i.e., the error increases, with both noise and blur. Based on these results, it may be reasonable to conclude that the differential geometric approach to t-scale is efficient and produces acceptable t-scale at moderate blur and noise.

Table 1.

Performance of the 3-D t-scale computation algorithm, relative to the analytic definition, at various levels of noise and blur. Each row indicates a specific noise level that increases from top (N1) to bottom (N5), and each column indicates a specific blur level that increases from left (B1) to right (B5). Results are reported as normalized Log–Euclidean difference (%) relative to the result generated by the dense spatial sampling method on the original binary phantom.

| | B1 | B2 | B3 | B4 | B5 |
|---|---|---|---|---|---|
| N1 | 4.07 | 5.52 | 6.13 | 7.20 | 8.90 |
| N2 | 4.17 | 5.63 | 6.42 | 7.54 | 9.11 |
| N3 | 4.46 | 5.75 | 6.59 | 7.73 | 9.29 |
| N4 | 4.62 | 5.80 | 6.70 | 8.21 | 9.40 |
| N5 | 5.17 | 6.10 | 7.13 | 8.79 | 9.78 |

4.2. Results of t-scale based image filtering

Here we compare the performance of the t-scale based image filtering algorithm with that of the gradient and structure tensor based methods in both 2- and 3-D. Fig. 8 illustrates the results of the three filtering algorithms on a photographic image of a fish in an aquarium containing visible noise. Results of the three filtering algorithms are presented in Fig. 8b–d. As observed in these figures, the t-scale based method (Fig. 8d) achieves the most visually apparent noise removal and boundary sharpening among the three results. This observation is confirmed in enlarged views (Fig. 8e–h) of a small box selected from the matching region in the original and the three filtered images. Fig. 9 illustrates results of the different filtering methods on a 2-D phantom image. As observed in the figure, at the finest scale, the gradient and structure tensor based filtering algorithms fail to maintain the separate identity of the three sinusoidal curves at several locations. In contrast, the t-scale based algorithm preserves the separation of the three curves at the finest scale while maximally cleaning noise over homogeneous regions. The superiority of the t-scale based filtering method on the phantom image is further confirmed by the quantitative analysis (Tables 2 and 3), where the t-scale based method achieves the lowest residual noise and the highest relative contrast among the three algorithms. In these tables, G-, S- and T-algorithms are used as abbreviations for gradient, structure tensor and t-scale based diffusive filtering algorithms, respectively.

Fig. 8.

A qualitative comparison among different diffusive filtering methods. (a) The original digital image with natural noise. (b–d) Smoothed images obtained using gradient (b), structure tensor (c) and t-scale (d) based diffusive filtering methods. (e–h) Zoomed-in displays of the matching region cropped from (a–d), respectively. Note that the t-scale based method outperforms the other two methods in smoothing along structures while preserving boundaries; the effect is more prominent in the zoomed displays (e–h).

Fig. 9.

Comparative results of image filtering in a 2-D phantom. (a) The original phantom image. (b) Degraded image after adding Gaussian white noise. (c–e) Results of gradient (c), structure tensor (d) and t-scale based (e) anisotropic diffusive filtering methods.

Table 2.

Results of quantitative comparison among three different methods in terms of residual noise after filtering on different images.

| Image | Original noise (%) | Residual noise (%): G-algorithm | Residual noise (%): S-algorithm | Residual noise (%): T-algorithm |
|---|---|---|---|---|
| 3-D Phantom | 8.0 | 7.8 | 7.7 | 5.9 |
| | 10.0 | 9.5 | 9.5 | 6.8 |
| | 12.0 | 11.1 | 11.3 | 7.8 |
| | 15.0 | 13.6 | 13.7 | 9.3 |
| | 20.0 | 17.3 | 17.8 | 11.4 |
| 3-D Lung CT | 8.0 | 7.5 | 8.7 | 4.4 |
| | 10.0 | 7.7 | 9.3 | 5.3 |
| | 12.0 | 9.5 | 9.9 | 5.9 |
| | 15.0 | 11.7 | 10.9 | 7.0 |
| | 20.0 | 14.8 | 14.5 | 9.4 |

Table 3.

Results of quantitative comparison among three different methods in terms of relative contrast after filtering on 3-D phantom image.

| Radius | Original relative contrast | Relative contrast after filtering: G-algorithm | Relative contrast after filtering: S-algorithm | Relative contrast after filtering: T-algorithm |
|---|---|---|---|---|
| 1 voxel | 9.1 | 8.3 | 8.1 | 9.5 |
| | 8.6 | 7.1 | 7.7 | 9.0 |
| | 8.0 | 7.3 | 7.3 | 8.5 |
| | 7.1 | 6.1 | 6.7 | 7.8 |
| | 6.0 | 4.9 | 5.5 | 6.7 |
| 2 voxels | 9.8 | 8.6 | 8.4 | 10.5 |
| | 9.2 | 7.3 | 8.0 | 10.1 |
| | 8.6 | 7.7 | 7.7 | 9.4 |
| | 7.7 | 6.5 | 7.1 | 8.8 |
| | 6.5 | 5.3 | 5.9 | 7.6 |

Fig. 10 illustrates the results of the three filtering methods on a 3-D pulmonary CT image. Fig. 10a presents an axial image slice from the original CT data; here, a MIP display of the image region covering ±10 slices around the target slice is used to depict partial 3-D information of the local pulmonary vasculature. The same image region after adding 12% white Gaussian noise is shown in Fig. 10b, while the results of the gradient, structure tensor and t-scale based filtering algorithms are presented in Fig. 10c–e. As observed in these results, the gradient-based diffusive filtering algorithm reduces some noise (Fig. 10c), although it blurs fine structures at several locations and residual noise remains visually apparent. While structure blurring is less prominent with the structure tensor based method (Fig. 10d), residual noise is visible and the peripheral vessels appear blurred. In contrast, the t-scale based filtering algorithm successfully removes noise (Fig. 10e) while preserving almost every fine structure visible in Fig. 10a. As mentioned in Section 3.2, relative contrast cannot be computed for this experiment because the true structures are unknown; the results of the quantitative evaluation of residual noise for the different filtering methods at various noise levels are presented in Table 2, which shows that, at all noise levels, the t-scale based method outperforms the other two methods by a large margin.

Fig. 10.

Results of 3-D image filtering. (a) An original image slice from a pulmonary CT image of a patient. (b) Degraded image after adding Gaussian white noise. (c–e) Results of 3-D image filtering using gradient (c), structure tensor (d) and t-scale (e) based diffusion.

4.3. Results of t-scale based n-linear interpolation

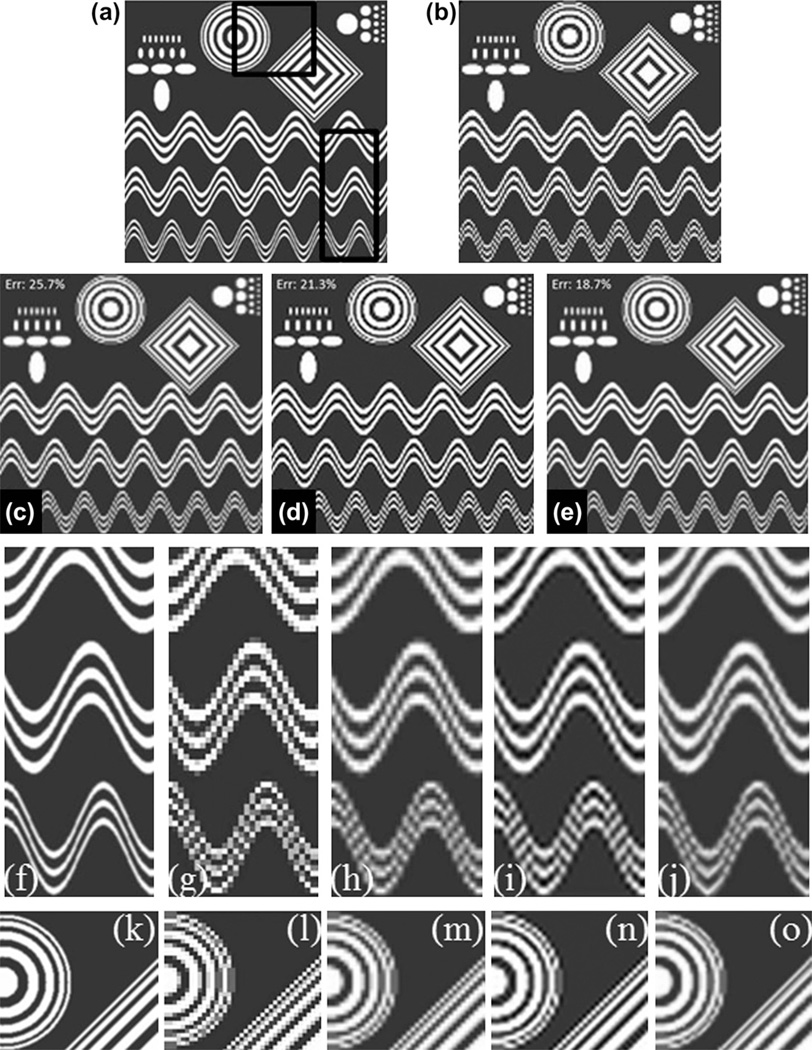

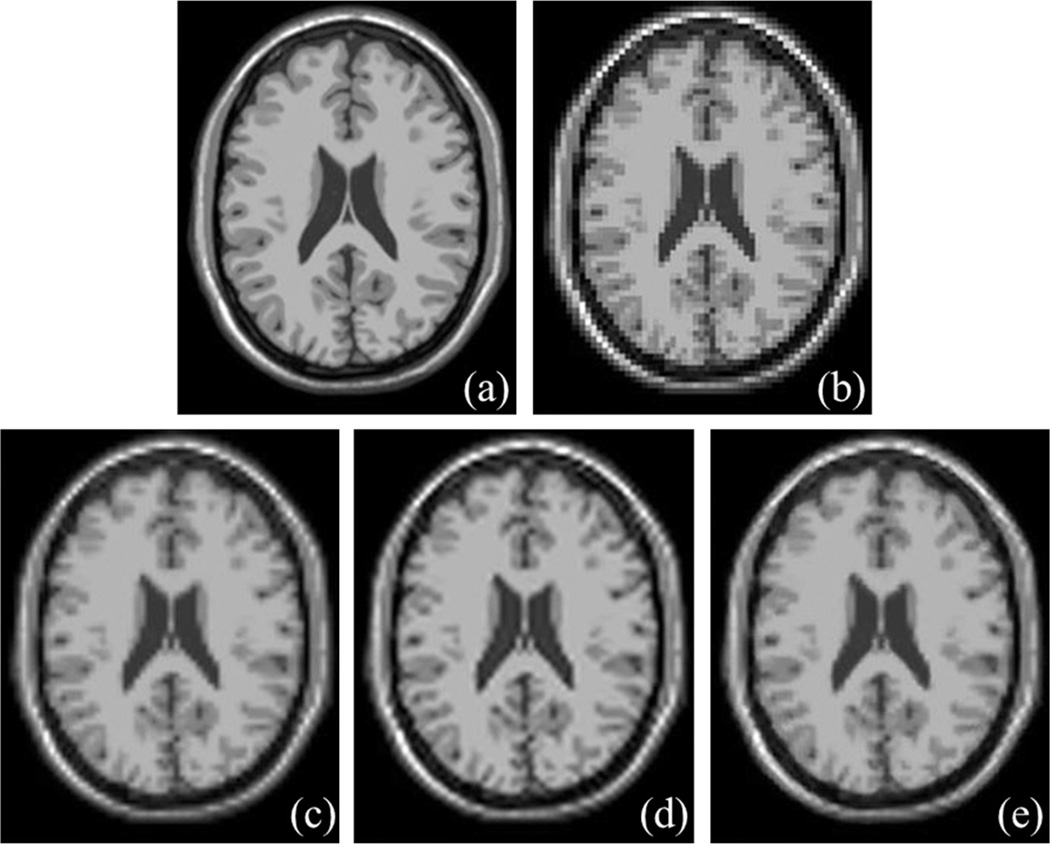

The performance of the t-scale based n-linear image interpolation method was examined and compared with the standard n-linear and windowed-sinc interpolation methods using a 3-D phantom and a set of medical images selected from different applications. Fig. 11 shows the results of applying the three interpolation methods to the 3-D phantom image after 4 × 4 × 4 down-sampling. The improvement in structure smoothness using t-scale based interpolation is visually apparent. The results of applying the three interpolation methods to the Brainweb MR phantom data after 4 × 4 × 4 down-sampling are shown in Fig. 12. The results suggest that the t-scale based method reduces blur along object boundaries, and the ringing artifacts of the windowed-sinc algorithm are absent from the t-scale based interpolation results (Fig. 12e). Note that, in all interpolation experiments, t-scale was computed from the sub-sampled images. For quantitative analyses, the three methods were compared at 2 × 2 × 2, 3 × 3 × 3 and 4 × 4 × 4 down-sampling rates, and the results are presented in Figs. 13–15.

Fig. 11.

Results of image interpolation on the phantom data. (a) An original image slice. (b) Sub-sampled image at the rate of 4. (c–e) Results using standard n-linear (c), windowed-sinc (d) and t-scale based n-linear (e) image interpolation. (f–j) Same as (a–e) but for a zoomed region marked in (a). (k–o) Same as (a–e) but for another zoomed region. Note that t-scale helps preserve small structures and produces smooth edges without causing the ringing artifacts visible in the result of the windowed-sinc method.

Fig. 12.

Results of image interpolation on the Brainweb MR phantom image. (a) An original image slice. (b) An image slice from the sub-sampled image at the rate of 3. (c–e) Results using standard n-linear (c), windowed-sinc (d) and t-scale based n-linear (e) interpolation methods. Note that t-scale produces crisper edges than standard n-linear interpolation without causing the ringing artifacts associated with the windowed-sinc method.

Fig. 13.

Performance of the three interpolation methods on the phantom and medical images selected from various clinical applications at a sub-sampling rate of 2 × 2 × 2. The percentage error was computed over the entire 3-D image, while a paired t-test was performed on the percentage errors of individual slices. The t-scale based n-linear method outperforms the standard n-linear method, while its performance relative to the windowed-sinc method varies across images. An “NS” (non-significant) mark indicates that the difference between the results of two methods is not statistically significant.

Fig. 15.

Same as Fig. 13 but for a sub-sampling rate of 4 × 4 × 4.

Compared with standard n-linear interpolation, the t-scale based method improved the interpolation results for all datasets at every down-sampling rate, and the improvements are statistically significant except for a few cases indicated in Figs. 13 and 14. Compared with the windowed-sinc algorithm, the t-scale based method improved the interpolation results except for the ankle dataset at 2 × 2 × 2 down-sampling. Moreover, for the lung and abdomen datasets, the windowed-sinc interpolation method performed even worse than the basic n-linear method.
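The per-slice paired comparison mentioned in the caption of Fig. 13 can be sketched as follows: given the percentage errors of two methods on the same slices, the paired t statistic is the mean per-slice difference divided by its standard error, with n − 1 degrees of freedom. This NumPy sketch stops at the statistic; the two-sided p-value would then come from Student's t distribution (for example, `scipy.stats.ttest_rel` returns both). The function name is hypothetical.

```python
import math

import numpy as np


def paired_t_statistic(errors_a, errors_b):
    """Paired t statistic and degrees of freedom for per-slice errors of two methods."""
    d = np.asarray(errors_a, dtype=float) - np.asarray(errors_b, dtype=float)
    n = d.size
    sd = d.std(ddof=1)                 # sample standard deviation of the differences
    t = d.mean() / (sd / math.sqrt(n))  # mean difference over its standard error
    return float(t), n - 1
```

Pairing by slice removes slice-to-slice variation in difficulty that is shared by both methods, which is why a paired test is more sensitive here than comparing the two error distributions independently.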

Fig. 14.

Same as Fig. 13 but for subsample rate of 3 × 3 × 3.

In general, it may be observed that, as the sampling becomes sparser, the improvement of t-scale over basic n-linear interpolation grows, while the results of the windowed-sinc method get worse. This observation may be explained by the fact that the use of structure information in the t-scale method leads to a local context-adaptive metric space that partially compensates for the information lost in sub-sampling. On the other hand, for the windowed-sinc method, the inclusion of a larger neighborhood may not add further meaningful information and may even worsen the results, because locally disconnected structures falling inside the extended neighborhood increase ringing artifacts.

5. Concluding remarks

In this paper, we have presented an analytic formulation of t-scale for n-D images together with an efficient computational solution in 2- and 3-D that is based on several new methods, including a gray scale distance transform and the computation of local principal curvature directions on the closest partitioning manifold represented by discrete edge points. Experimental results, in comparison with theoretical results derived under the ideal condition of object partitions without noise and blur, have demonstrated that the proposed efficient computation method yields acceptable results at moderate noise and blur, with image structures remaining visually apparent. Applications of t-scale in diffusive image filtering and n-linear interpolation have been presented, and their performance has been examined in comparison with respective state-of-the-art methods. Specifically, the performance of t-scale based filtering has been compared with gradient and structure tensor based diffusive filtering algorithms, and both qualitative and quantitative results have demonstrated improvements in image filtering using t-scale. The performance of t-scale based n-linear interpolation was compared with standard n-linear and windowed-sinc interpolation. Experimental results have shown a clear improvement using t-scale in n-linear interpolation; in comparison with the windowed-sinc interpolation method, the t-scale based n-linear interpolation has shown improved results except for the ankle dataset at the lowest sub-sampling rate (2 × 2 × 2). In summary, the new analytic formulation of t-scale captures rich contextual information of local structure with a practical computational solution and may benefit a large class of image processing and computer vision applications, including image filtering and interpolation.
Currently, we are investigating theoretical properties of t-scale and its applications to other image processing tasks, including image segmentation, registration and quantitative morphometric analysis.

Acknowledgment

This work was supported by the NIH Grant R01 AR054439.

Footnotes

This paper has been recommended for acceptance by Tinne Tuytelaars.

References

- 1.Marr D, Vision WH. Freeman and Company. San Francisco, CA: 1982. [Google Scholar]

- 2.Witkin AP. Scale-space filtering; West Germany. Presented at the 8th International Joint Conference Artificial Intelligence, Karlsruhe.1983. [Google Scholar]

- 3.Koenderink JJ. The structure of images. Biol. Cyber. 1984;50:363–370. doi: 10.1007/BF00336961. [DOI] [PubMed] [Google Scholar]

- 4.Marr D, Hildretch E. Theory of edge detection. Proc. Roy. Soc. London B- 1980;207:187–217. doi: 10.1098/rspb.1980.0020. [DOI] [PubMed] [Google Scholar]

- 5.Ferraro M, Bocclgnone G, Caell T. On the representation of image structures via scale space entropy conditions. IEEE Trans. Pattern Anal. Mach. Intell. 1999;21:1190–1203. [Google Scholar]

- 6.Leung Y, Zhang JS, Xu ZB. Clustering by scale space filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2000;22:1396–1410. [Google Scholar]

- 7.Lindeberg T. Scale-space for discrete signals. IEEE Trans. Pattern Recogn. Mach. Intell. 1990;12:234–254. [Google Scholar]

- 8.Lindeberg T. Effective scale: a natural unit for measuring scale-space lifetime. IEEE Trans. Pattern Recogn. Mach. Intell. 1993;15:1068–1074. [Google Scholar]

- 9.Lindeberg T. Scale-Space Theory in Computer Vision. Boston, MA: Kluwer Academic Publishers; 1994. [Google Scholar]

- 10.Lindeberg T. A scale selection principle for estimating image deformations. Image Vis. Comput. 1998;6:961–977. [Google Scholar]

- 11.Lovell BC, Bradley AP. The multiscale classifier. IEEE Trans. Pattern Anal. Mach. Intell. 1996;18:124–137. [Google Scholar]

- 12.Vincken KL, Koster ASE, Viergever MA. Probabilistic multiscale image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 1997;19:109–120. [Google Scholar]

- 13.Acton ST, Mukherjee DP. Scale space classification using area morphology. IEEE Trans. Image Process. 2000;9:623–635. doi: 10.1109/83.841939. [DOI] [PubMed] [Google Scholar]

- 14.Wang YP, Lee SL. Scale-space derived from B-splines. IEEE Trans. Pattern Anal. Mach. Intell. 1998;20:1040–1055. [Google Scholar]

- 15.Whitaker RT, Pizer SM. A multi-scale approach to nonuniform diffusion. Comput. Vis. Graph. Image Process.: Image Underst. 1993;57:99–110. [Google Scholar]

- 16.Wink O, Niessen WJ, Viergever MA. Multiscale vessel tracking. IEEE Trans. Med. Imaging. 2004;23:130–133. doi: 10.1109/tmi.2003.819920. [DOI] [PubMed] [Google Scholar]

- 17.Wong YFI. Nonlinear scale space filtering and multiresolution system. IEEE Trans. Image Process. 1995;4:774–787. doi: 10.1109/83.388079. [DOI] [PubMed] [Google Scholar]

- 18.Ahuja N. A transform for multiscale image segmentation by integrated edge and region detection. IEEE Trans. Pattern Anal. Mach. Intell. 1996;18:1211–1235. [Google Scholar]

- 19.Elder JH, Zucker SW. Local scale control for edge detection and blur estimation. IEEE Trans. Pattern Anal. Mach. Intell. 1998;20:699–716. [Google Scholar]

- 20.Liang P, Wang YF. Local scale controlled anisotropic diffusion with local noise estimate for image smoothing and edge detection; Bombay, India. Presented at the nternational Conference in Computer Vision.1998. [Google Scholar]

- 21.Pizer SM, Eberly D, Fritsch DS. Zoom-invariant vision of figural shape: the mathematics of core. Comput. Vis. Image Underst. 1998;69:55–71. [Google Scholar]

- 22.Tabb M, Ahuja N. Multiscale image segmentation by integrated edge and region detection. IEEE Trans. Image Process. 1997;6:642–655. doi: 10.1109/83.568922. [DOI] [PubMed] [Google Scholar]

- 23.Saha PK, Udupa JK, Odhner D. Scale-based fuzzy connected image segmentation: theory, algorithms, and validation. Comput. Vis. Image Underst. 2000;77:145–174. [Google Scholar]

- 24.Saha PK, Udupa JK. Scale based image filtering preserving boundary sharpness and fine structures. IEEE Trans. Med. Imaging. 2001;20:1140–1155. doi: 10.1109/42.963817. [DOI] [PubMed] [Google Scholar]

- 25.Jin Y, Laine AF, Imielinska C. Adaptive speed term based on homogeneity for level-set segmentation. San Diego, CA. Proceedings of SPIE: Medical Imaging; 2002. pp. 383–390. [Google Scholar]

- 26.Zhuge Y, Udupa JK, Saha PK. Vectorial scale-based fuzzy connected image segmentation. Comput. Vis. Image Underst. 2006;101:177–193. [Google Scholar]

- 27.Saha PK, Udupa JK. Fuzzy connected object delineation: axiomatic path strength definition and the case of multiple seeds. Comput. Vis. Image Underst. 2001;83:275–295. [Google Scholar]

- 28.Nyúl L, Udupa JK, Saha PK. Task specific comparison of 3D image registration methods; San Diego, CA. Proceedings of SPIE: Medical Imaging; 2001. pp. 1588–1598. [Google Scholar]

- 29.Souza ADA, Udupa JK, Saha PK. Volume rendering in the presence of partial volume effects. IEEE Trans. Med. Imaging. 2005;24:223–235. doi: 10.1109/tmi.2004.840295. [DOI] [PubMed] [Google Scholar]

- 30.Saha PK. Tensor scale: a local morphometric parameter with applications to computer vision and image processing. Comput. Vis. Image Underst. 2005;99:384–413. [Google Scholar]

- 31.Saha PK, Wehrli FW. A robust method for measuring trabecular bone orientation anisotropy at in vivo resolution using tensor scale. Pattern Recogn. 2004;37:1935–1944. [Google Scholar]

- 32.Saha PK, Xu Y, Duan H, Heiner A, Liang G. Volumetric topological analysis: a novel approach for trabecular bone classification on the continuum between plates and rods. IEEE Trans. Med. Imaging. 2010;29:1821–1838. doi: 10.1109/TMI.2010.2050779. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Andaló FA, Miranda PAV, da Silva Torres R, Falcão AX. Shape feature extraction and description based on tensor scale. Pattern Recogn. 2010;43:26–36. [Google Scholar]

- 34. Andaló FA, Miranda PAV, da Silva Torres R, Falcão AX. Detecting contour saliences using tensor scale. Presented at the IEEE International Conference on Image Processing; 2007.

- 35. Madabhushi A, Udupa JK, Souza A. Generalized scale: theory, algorithms, and application to image inhomogeneity correction. Comput. Vis. Image Underst. 2006;101:100–121.

- 36. Madabhushi A, Udupa JK. New methods of MR image intensity standardization via generalized scale. Med. Phys. 2006;33:3426–3434. doi: 10.1118/1.2335487.

- 37. Souza A, Udupa JK, Madabhushi A. Image filtering via generalized scale. Med. Image Anal. 2008;12:87–98. doi: 10.1016/j.media.2007.07.007.

- 38. Rueda S, Udupa JK, Bai L. Local curvature scale: a new concept of shape description. Proc. SPIE. 2008;6914.

- 39. Weickert J. Anisotropic Diffusion in Image Processing. Stuttgart, Germany: ECMI Series, Teubner-Verlag; 1998.

- 40. Köthe U. Edge and junction detection with an improved structure tensor. Pattern Recogn. Proc. 2003;2781:25–32.

- 41. Bigun J, Bigun T, Nilsson K. Recognition by symmetry derivatives and the generalized structure tensor. IEEE Trans. Pattern Anal. Mach. Intell. 2004;26:1590–1605. doi: 10.1109/TPAMI.2004.126.

- 42. Tao WB, Han SD, Wang DS, Tai XC, Wu XL. Image segmentation based on GrabCut framework integrating multiscale nonlinear structure tensor. IEEE Trans. Image Process. 2009;18:2289–2302. doi: 10.1109/TIP.2009.2025560.

- 43. Saha PK, Xu Z. An analytic approach to tensor scale with an efficient algorithm and applications to image filtering. Proceedings of Digital Image Computing: Techniques and Applications; Sydney, Australia; 2010. pp. 429–434.

- 44. Zhang H, Yushkevich PA, Alexander DC, Gee JC. Deformable registration of diffusion tensor MR images with explicit orientation optimization. Med. Image Anal. 2006;10:764–785. doi: 10.1016/j.media.2006.06.004.

- 45. Ou YM, Sotiras A, Paragios N, Davatzikos C. DRAMMS: Deformable registration via attribute matching and mutual-saliency weighting. Med. Image Anal. 2011;15:622–639. doi: 10.1016/j.media.2010.07.002.

- 46. Karacali B, Davatzikos C. Estimating topology preserving and smooth displacement fields. IEEE Trans. Med. Imaging. 2004;23:868–880. doi: 10.1109/TMI.2004.827963.

- 47. Saha PK, Chaudhuri BB. 3D digital topology under binary transformation with applications. Comput. Vis. Image Underst. 1996;63:418–429.

- 48. Kong TY, Rosenfeld A. Digital topology: introduction and survey. Comput. Vis. Graph. Image Process. 1989;48:357–393.

- 49. Saha PK, Chaudhuri BB, Chanda B, Dutta Majumder D. Topology preservation in 3D digital space. Pattern Recogn. 1994;27:295–300.

- 50. Sonka M, Hlavac V, Boyle R. Image Processing, Analysis, and Machine Vision. third ed. Toronto, Canada: Thomson Engineering; 2007.

- 51. Canny J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986;8:679–698.

- 52. Medina-Carnicer R, Munoz-Salinas R, Yeguas-Bolivar E, Diaz-Mas L. A novel method to look for the hysteresis thresholds for the Canny edge detector. Pattern Recogn. 2011;44:1201–1211.

- 53. Saha PK, Das B, Wehrli FW. An object class-uncertainty induced adaptive force and its application to a new hybrid snake. Pattern Recogn. 2007;40:2656–2671.

- 54. Saha PK, Wehrli FW, Gomberg BR. Fuzzy distance transform: theory, algorithms, and applications. Comput. Vis. Image Underst. 2002;86:171–190.

- 55. Grevera GJ. The dead reckoning signed distance transform. Comput. Vis. Image Underst. 2004;95:317–333.

- 56. Goldfeather J, Interrante V. A novel cubic-order algorithm for approximating principal direction vectors. ACM Trans. Graph. 2004;23:45–63.

- 57. Stokely EM, Wu SY. Surface parameterization and curvature measurement of arbitrary 3-D objects - 5 practical methods. IEEE Trans. Pattern Anal. Mach. Intell. 1992;14:833–840.

- 58. Soldea O, Magid E, Rivlin E. A comparison of Gaussian and mean curvature estimation methods on triangular meshes of range image data. Comput. Vis. Image Underst. 2007;107:139–159.

- 59. Rieger B, Timmermans FJ, van Vliet LJ, Verbeek PW. On curvature estimation of ISO surfaces in 3D gray-value images and the computation of shape descriptors. IEEE Trans. Pattern Anal. Mach. Intell. 2004;26:1088–1094. doi: 10.1109/TPAMI.2004.50.

- 60. Pennec X, Fillard P, Ayache N. A Riemannian framework for tensor computing. Int. J. Comput. Vision. 2006;66:41–66.

- 61. Arsigny V, Fillard P, Pennec X, Ayache N. Log-euclidean metrics for fast and simple calculus on diffusion tensors. Magn. Reson. Med. 2006;56:411–421. doi: 10.1002/mrm.20965.

- 62. Perona P, Malik J. Scale-space and edge detection using anisotropic diffusion. IEEE Trans. Pattern Anal. Mach. Intell. 1990;12:629–639.

- 63. Gerig G, Kubler O, Kikinis R, Jolesz FA. Nonlinear anisotropic filtering of MRI data. IEEE Trans. Med. Imaging. 1992;11:221–232. doi: 10.1109/42.141646.

- 64. Gonzalez RC, Wintz P. Digital Image Processing. Reading, MA: Addison-Wesley; 1987.

- 65. Ibanez L, Schroeder W. The ITK Software Guide. Kitware, Inc.; 2005.