Abstract

Object

Over the last decade image guidance systems have been widely adopted in neurosurgery. Nonetheless, the evidence supporting the use of these systems in surgery remains limited. The aim of this study was to compare simultaneously the effectiveness and safety of various image guidance systems against standard surgery.

Methods

In this preclinical randomized study 50 novice surgeons were allocated to: (1) no image guidance, (2) triplanar display, (3) always-on solid overlay, (4) always-on wire mesh overlay, and (5) on-demand inverse realism overlay. Each participant was asked to identify a basilar tip aneurysm in a validated model head. The primary outcomes were time to task completion (seconds), and tool path length (millimeters). The secondary outcomes were recognition of an unexpected finding (a surgical clip), and subjective depth perception using a Likert scale.

Results

The time to task completion and tool path length were significantly lower when utilizing any form of image guidance compared to no image guidance (p < 0·001 and p = 0·003, respectively). The tool path distance was also lower in groups utilizing augmented reality compared to triplanar display (p = 0·010). Always-on solid overlay resulted in the greatest inattentional blindness (20% recognition of unexpected finding). Wire mesh and on-demand overlays mitigated but did not negate inattentional blindness, and were comparable to triplanar display (40% recognition of unexpected finding in all groups). Wire mesh and inverse realism overlays also resulted in better subjective depth perception than always-on solid overlay (p = 0·031 and p = 0·008, respectively).

Conclusions

New augmented reality platforms may improve performance in less experienced surgeons. However, all image display modalities, including existing triplanar display, carry a risk of inattentional blindness.

Keywords: Neurosurgery, Minimally Invasive Surgery, Image Guidance, Augmented reality

INTRODUCTION

Image guidance systems are increasingly important tools in surgery, and have been widely adopted in neurosurgery over the last decade. Nonetheless, the evidence for the effectiveness and safety of these image guidance systems remains limited. Case-control studies report that the use of image guidance is associated with improved patient outcomes when compared to standard surgery,14 but commentators have suggested that clinical randomized studies are now neither practical nor ethical.17

The final component of all image guidance systems is the display of images in such a way that the surgeon is able to localize a point in the operative field unambiguously. Multi-planar reformatted (MPR) mode has been used since the advent of Computed Tomography (CT), and the triplanar display, presenting axial, sagittal and coronal views, remains the most widely used technique. A drawback of triplanar displays is that the surgeon must use the information from image slices to construct, in their own mind, a potentially complex three-dimensional representation of anatomical and pathological structures. Moreover, triplanar displays require that surgeons stop operating momentarily, apply a probe to the region of interest (potentially near critical neurovascular structures), and then take their eyes off the surgical field to view the image guidance monitors. The fusion of virtual three-dimensional models and the actual operating field to provide an augmented reality may therefore enhance the operating room workflow.

Augmented reality systems have been described, albeit to a limited extent, in combination with endoscopy to assist during minimally invasive surgical procedures.1,2,7,19 Case series utilizing augmented reality to aid surgical localization have generally been encouraging, suggesting that such technology may improve the operating room workflow,2,7,10,13 but no randomized studies have confirmed the effectiveness of such systems beyond standard triplanar image display thus far. In addition, recent studies have highlighted several concerns with the use of augmented reality.3,6,13,18

Arguably the greatest issue with augmented reality systems is that overlays may alter the attention of surgeons such that they fail to recognize critical events within the surgical field, such as unexpected complications. Preclinical studies have confirmed that augmented reality displays utilizing always-on solid overlays may exacerbate inattentional blindness, raising major concerns over patient safety that must be addressed before widespread adoption of the technology into mainstream surgical practice.3 The use of wire mesh rather than solid overlays, or on-demand rather than always-on augmented reality displays may mitigate or negate inattentional blindness.3

A further problem with augmented reality systems is that at present endoscopic live images are generally two-dimensional, and virtual overlays are therefore presented in kind, significantly impairing depth perception. The increasing availability of three-dimensional endoscopy allows for stereoscopic augmented reality systems, but many users continue to have difficulty appreciating the depth of solid overlays despite binocular and kinetic cues.6,13,18 It has been suggested that wire mesh rather than solid overlays might improve depth perception.13 Another potential solution termed ‘inverse realism’ provides ‘see through vision’ of the embedded virtual object whilst maintaining the salient anatomical structures of the exposed surface, which serve to partially occlude the object.9

The aim of this study was to compare simultaneously the effectiveness and safety of various image guidance systems utilizing triplanar and augmented reality displays, against standard surgery, using a keyhole neurosurgical approach as an exemplar.

METHODS

The Imperial College Joint Research Compliance Office (JRCO) approved the study protocol. The Consolidated Standards of Reporting Trials (CONSORT) statement was used in the preparation of this manuscript.16

Participants and study settings

Fifty novices were recruited from one university hospital. Participants were deemed suitable for inclusion if they had no prior experience of endoscopic or endoscope-assisted surgery (zero procedures performed). Written informed consent was obtained from all participants.

Trial design

A preclinical randomized study design was adopted, comparing: (1) no image guidance, (2) triplanar display of axial, sagittal and coronal images, (3) always-on solid overlay augmented reality, (4) always-on wire mesh overlay augmented reality, and (5) on-demand inverse realism augmented reality.

The Modeled Anatomical Replica for Training Young Neurosurgeons (MARTYN) head (Royal College of Surgeons of England, London, UK), with an accompanying circle of Willis including a basilar tip aneurysm, was utilized.11 The model consists of a gelatin-based brain, encased within a latex dura, and a polyurethane skull. A previous study has confirmed the MARTYN head is realistic (face validity), useful (content validity), and able to discriminate between surgeons of different experience (construct validity), with respect to the supraorbital subfrontal approach.12 A 25 × 15 mm left supraorbital craniotomy was fashioned using a high-speed drill (B. Braun, Melsungen, Germany). The high-speed drill was then used to remove the inner edge of the bone above the orbital rim, and the juga cerebralia. A simple “C” durotomy was performed, and the flap retracted basally.

A VisionSense III neuroendoscopy system (Visionsense, Petach Tikva, Israel) was used for visualization. The High Definition zero degree rigid endoscope is 4mm in diameter and 18cm in length, providing a resolution of 1920 × 1080 pixels. Images were displayed using a 42″ stereoscopic screen.

A CT scan of the MARTYN head was performed and the vascular tree and aneurysm manually segmented using itk-SNAP v2·4·0 (www.itksnap.org),20 and smoothed and decimated using MeshLab v1·3·2 (www.meshlab.sourceforge.net). The model head was fixed in place with a Mayfield clamp and a Budde-halo retractor system attached (Integra, New Jersey, USA). An NDI Polaris Optical Tracking System (Northern Digital Inc., Ontario, Canada) was used to track the endoscope and image guidance probe with respect to a reference frame. Rigid registration of surface fiducials was used to relate the head and CT scan coordinate frames. Manual alignment of a reference object was then used to determine the hand-eye transformation from the camera tracking frame to the camera frame defining the projection to display coordinates. Concatenated together, the results of these calibrations were used to map renderings of the CT segmentations onto captured images of the model head. Custom software was used to generate the different image display modalities and to record the probe path length (Figures 1 and 2).
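The calibration chain described above can be illustrated as a product of rigid homogeneous transforms followed by a pinhole projection. The following is a minimal sketch only, not the study's custom software: the function names, the example translations, and the camera intrinsics (focal lengths, and a principal point assumed to lie at the center of a 1920 × 1080 image) are all hypothetical.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 homogeneous transform matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(tx: float, ty: float, tz: float) -> Matrix:
    """Build a 4x4 transform that only translates (no rotation)."""
    return [[1.0, 0.0, 0.0, tx],
            [0.0, 1.0, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]


def compose(*transforms: Matrix) -> Matrix:
    """Concatenate transforms left to right; the rightmost is applied first."""
    result = translation(0.0, 0.0, 0.0)  # identity
    for t in transforms:
        result = matmul(result, t)
    return result


def project_to_pixels(point_ct: Sequence[float], camera_from_ct: Matrix,
                      fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Map a CT-space point into display coordinates with a pinhole model."""
    homogeneous = [point_ct[0], point_ct[1], point_ct[2], 1.0]
    xc, yc, zc = (sum(camera_from_ct[row][k] * homogeneous[k] for k in range(4))
                  for row in range(3))
    if zc <= 0:
        raise ValueError("point is behind the camera")
    return (fx * xc / zc + cx, fy * yc / zc + cy)


# Hypothetical calibration results (pure translations, in millimeters).
reference_from_ct = translation(10.0, 0.0, 0.0)        # fiducial registration
tracked_camera_from_reference = translation(0.0, 0.0, 100.0)  # optical tracking
camera_from_tracked_camera = translation(-10.0, 0.0, 0.0)     # hand-eye calibration

camera_from_ct = compose(camera_from_tracked_camera,
                         tracked_camera_from_reference,
                         reference_from_ct)

# Assumed intrinsics: focal length 1000 px, principal point at image center.
pixel = project_to_pixels((0.0, 0.0, 0.0), camera_from_ct,
                          fx=1000.0, fy=1000.0, cx=960.0, cy=540.0)
print(pixel)
```

In this toy configuration the CT origin ends up on the optical axis 100 mm in front of the camera, so it projects to the assumed image center. A real system would use full rotations as well as translations, and would typically also model lens distortion.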

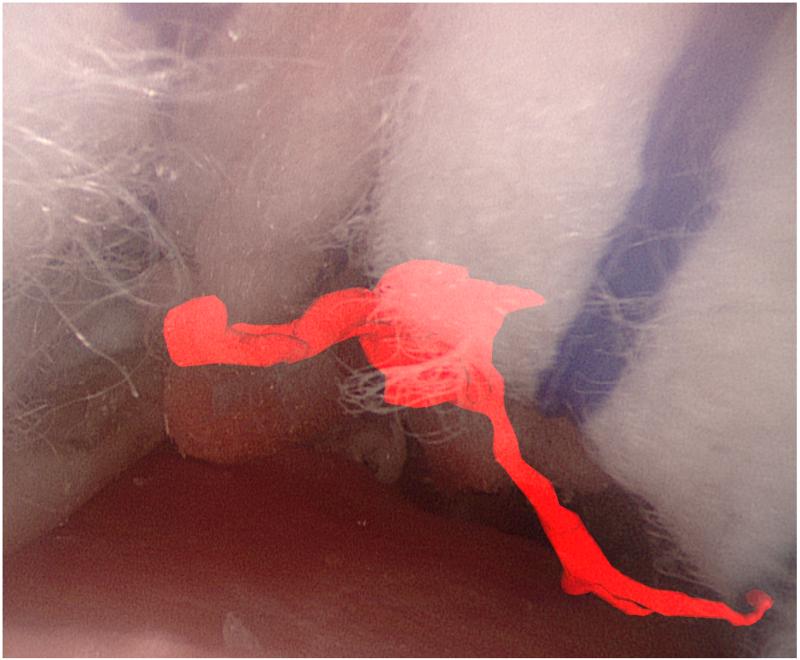

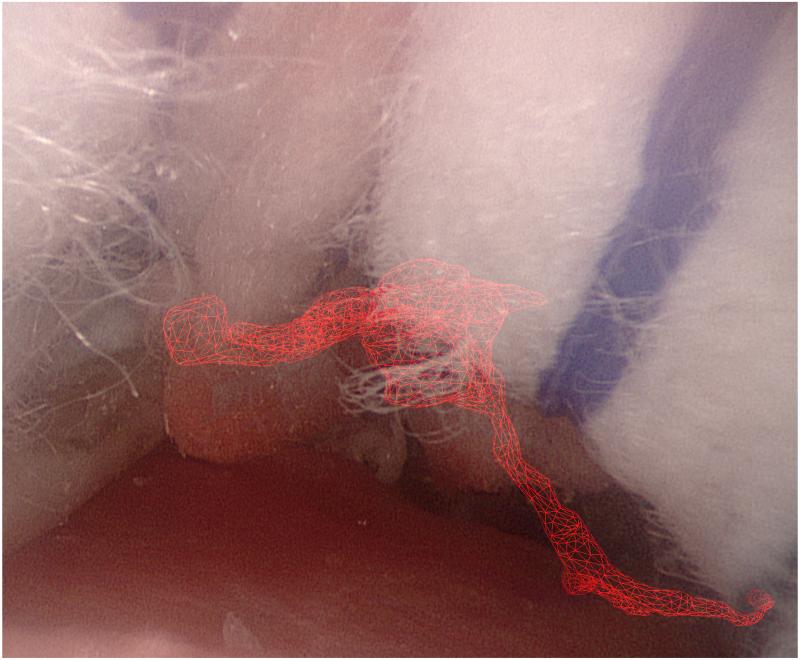

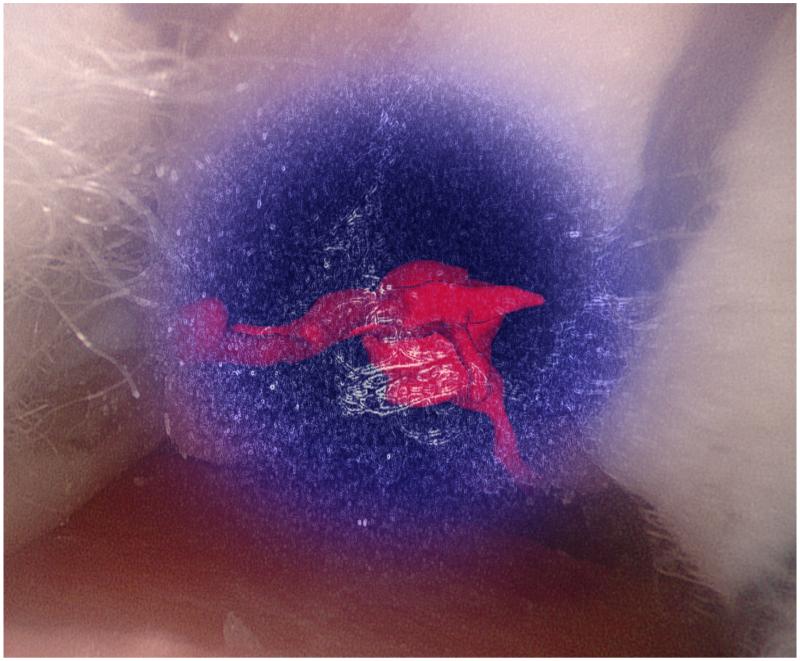

Figure 1.

Augmented reality overlays of the segmented vascular tree (red): (a) no image guidance, (b) always-on solid overlay, (c) always-on wire mesh overlay, and (d) on-demand inverse realism overlay.

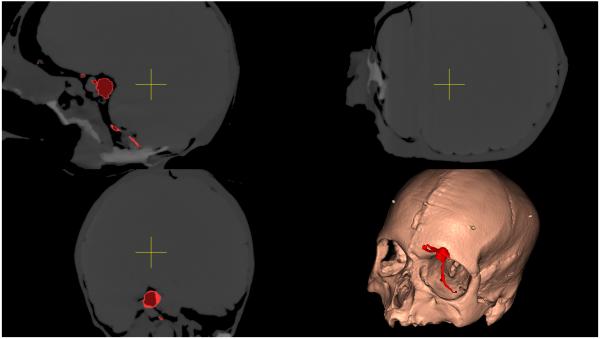

Figure 2.

Triplanar image guidance display of the segmented vascular tree (red) with axial, coronal and sagittal sections centered over the probe tip.

Participants were randomly allocated using a computer-generated sequence into five groups to determine which image display modality was utilized. Blocked randomization was used to ensure that ten participants were allocated to each group. Each participant was shown a short video demonstrating the endoscopic supraorbital approach in the MARTYN head, and given several minutes to familiarize themselves with the image guidance system. They were then asked to identify the basilar tip aneurysm using a probe, with instructions to minimize their exposure and manipulation of brain tissue with use of cottonoid patties (Codman and Shurtleff, Massachusetts, USA), as they deemed appropriate. The task was considered complete when users applied the probe to the aneurysm.
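As an illustration of blocked randomization, the sketch below generates an allocation sequence for 50 participants across the five study arms. The block size (one participant per arm in each block), the seed, and the function name are assumptions made for the example; the block size and software actually used are not stated here.

```python
import random
from collections import Counter
from typing import List, Sequence

ARMS = [
    "no image guidance",
    "triplanar display",
    "always-on solid overlay",
    "always-on wire mesh overlay",
    "on-demand inverse realism overlay",
]


def blocked_allocation(n_participants: int, arms: Sequence[str], seed: int) -> List[str]:
    """Return an allocation sequence built from shuffled blocks.

    Each block contains every arm exactly once (assumed block size),
    so the arms stay balanced after every complete block.
    """
    if n_participants % len(arms) != 0:
        raise ValueError("participants must divide evenly into blocks")
    rng = random.Random(seed)  # seeded so the sequence is reproducible
    sequence: List[str] = []
    for _ in range(n_participants // len(arms)):
        block = list(arms)
        rng.shuffle(block)
        sequence.extend(block)
    return sequence


allocation = blocked_allocation(50, ARMS, seed=2014)
counts = Counter(allocation)
for arm in ARMS:
    print(arm, counts[arm])
```

Because every block holds each arm once, ten blocks guarantee exactly ten participants per arm regardless of the seed.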

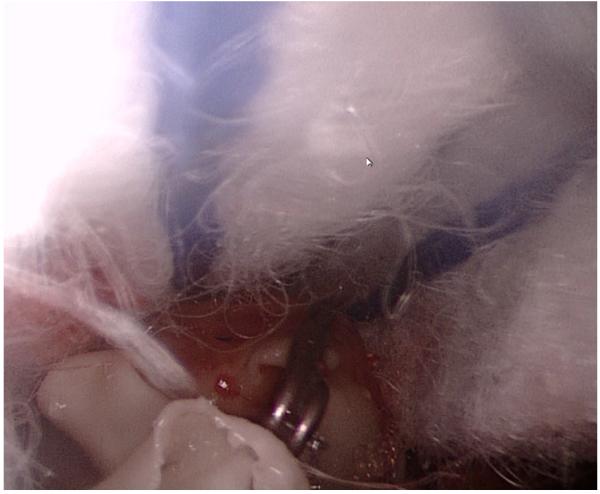

A surgical clip was placed over the left posterior cerebral artery, approximately 5mm from the aneurysm, and within the surgical trajectory, providing an unexpected finding to assess inattentional blindness (Figure 3).

Figure 3.

Endoscopic image demonstrating cottonoid patties (top), and an unexpected aneurysm clip (lower center), within the operative field.

Outcomes

The primary outcomes were time to task completion (seconds), and tool path length (millimeters). The secondary outcomes were recognition of the unexpected finding (prompted), and subjective depth perception (5-point Likert scale). Whereas participants were aware of the image display modality they were using, the data analysts were blinded to their allocation.
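Tool path length can be understood as the cumulative distance travelled by the tracked probe tip. The sketch below sums the straight-line distances between successive samples; the function name and sample coordinates are hypothetical, and any filtering or resampling applied by the study software is not represented.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def path_length_mm(positions: Sequence[Point]) -> float:
    """Sum the Euclidean distances between consecutive tip positions (mm)."""
    return sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))


# Hypothetical tracked probe-tip positions in millimeters.
samples = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 12.0)]
total = path_length_mm(samples)
print(total)
```

With these three samples the two segments measure 5 mm and 12 mm, giving a total of 17 mm.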

Statistical analysis

The sample size was calculated on the basis of recently published work, preliminary data, and anticipated ease of participant recruitment.12 It was estimated that to detect a reduction in time to task completion from 152 to 92 seconds (SD 48 seconds), with a two-sided 5% significance level and a power of 80%, a sample size of at least 10 participants was necessary in each group.
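For readers wishing to check the arithmetic, the sketch below applies the standard normal-approximation formula for comparing two means, n = 2(z₁₋α/₂ + z₁₋β)²σ²/Δ², to the values quoted above. It is an assumption that this is the formula the calculation was based on; the function name is illustrative.

```python
from statistics import NormalDist


def per_group_sample_size(mean_control: float, mean_treatment: float,
                          sd: float, alpha: float = 0.05,
                          power: float = 0.80) -> float:
    """Normal-approximation sample size per group for a two-sided
    comparison of two means with a common standard deviation."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    delta = abs(mean_control - mean_treatment)
    return 2 * (z_alpha + z_beta) ** 2 * sd ** 2 / delta ** 2


# Values taken from the text: 152 s versus 92 s, SD 48 s.
n_per_group = per_group_sample_size(152.0, 92.0, 48.0)
print(round(n_per_group, 2))
```

Under these assumptions the formula yields a value of approximately 10 participants per group, in line with the stated sample size.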

Data were analyzed with SPSS v 20·0 (IBM, Illinois, USA). The median and interquartile ranges were calculated for all outcome measures, and nonparametric tests performed, with a value of p < 0·05 considered statistically significant. We compared the time to task completion, tool path length, and subjective depth perception using the Kruskal-Wallis one-way analysis of variance. We compared the proportion of users recognizing the unexpected finding using the chi-square test or Fisher’s exact test (if fewer than 80% of the cells had an expected frequency of 5 or greater). If a significant difference was identified in any of the outcomes, we then directly compared the following groups, with the Bonferroni correction (n = 4; p < 0·0125): no image guidance versus any image guidance, triplanar image display versus any augmented reality display, always-on solid overlay versus on-demand inverse realism, and always-on solid overlay versus always-on wire mesh overlay.

RESULTS

Baseline demographic data

The demographics of the participants are summarized in Table 1. Of the 50 participants recruited, 35 were medical students and 15 were junior doctors; none had yet embarked on formal surgical training. There was no significant difference in demographics or experience between the groups. All participants that were enrolled completed the study, and no losses occurred after randomization.

Table 1.

Demographics of participants.

| | Age, median (interquartile range) | Sex, male:female | Handedness, right:left |
|---|---|---|---|
| No image guidance (n=10) | 24 (22 – 25) | 4:6 | 8:2 |
| Triplanar display (n=10) | 23.5 (21 – 26.5) | 4:6 | 10:0 |
| Always-on solid overlay (n=10) | 24.5 (22.3 – 25) | 6:4 | 10:0 |
| Always-on wire mesh overlay (n=10) | 21.5 (20.3 – 24) | 4:6 | 9:1 |
| On-demand inverse realism overlay (n=10) | 23.5 (22 – 24.5) | 5:5 | 10:0 |
| All groups (n=50) | 23.5 (21.3 – 25) | 23:27 | 47:3 |
| p | 0.664 | 0.863 | 0.225 |

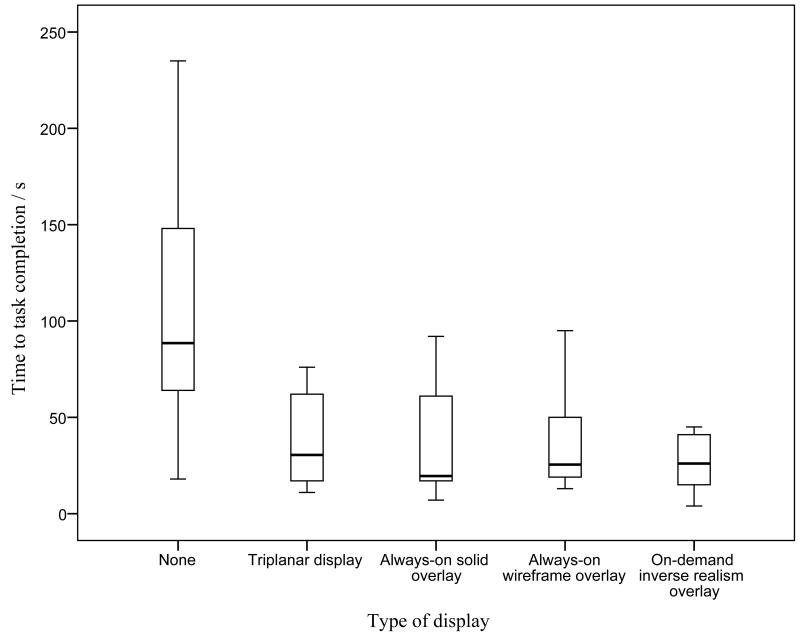

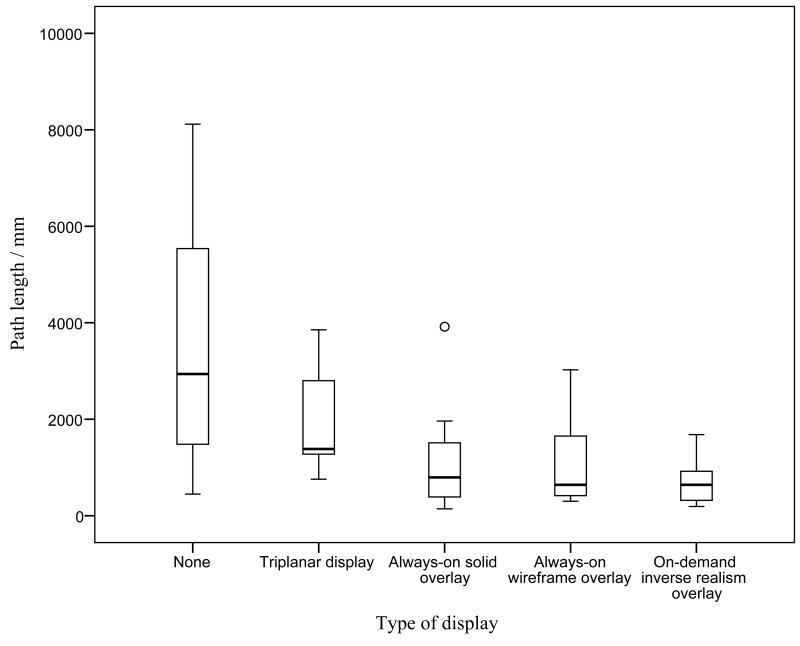

Primary Outcomes

The median and interquartile ranges of the primary outcomes are summarized in Table 2. The time to task completion and tool path length differed significantly between the study groups (p = 0·007 and p = 0·002, respectively); they are illustrated in Figures 4 and 5. In subsequent analysis, the time to task completion and tool path length were significantly lower when utilizing any form of image guidance (triplanar display, always-on solid overlay, always-on wire mesh overlay, and on-demand inverse realism overlay) compared to no image guidance (p < 0·001 and p = 0·003, respectively). The tool path length was also lower in groups utilizing augmented reality (always-on solid overlay, always-on wire mesh overlay, and on-demand inverse realism) compared to triplanar image display (p = 0·010). There was no significant difference in the primary outcomes between the different augmented reality overlays.

Table 2.

Summary of results according to image guidance used.

| | Time to task completion (s)<sup>a</sup> | Path length (mm)<sup>a</sup> | Recognition of unexpected finding<sup>b</sup> | Depth perception<sup>a, c</sup> |
|---|---|---|---|---|
| No image guidance | 88.5 (61.8 – 150.5) | 2938.5 (1411.8 – 5955.8) | 9/10 (90.0%) | N.A. |
| Triplanar display | 30.5 (16.5 – 64.8) | 1384.0 (1183.8 – 2873.0) | 4/10 (40.0%) | N.A. |
| Always-on solid overlay | 19.5 (16.3 – 64.3) | 795.0 (371.0 – 1624.8) | 2/10 (20.0%) | 2.0 (1.0 – 3.0) |
| Always-on wire mesh overlay | 25.5 (17.8 – 52.8) | 641.0 (406.5 – 1798.5) | 4/10 (40.0%) | 3.5 (2.0 – 4.0) |
| On-demand inverse realism overlay | 26.0 (13.0 – 41.5) | 639.5 (314.5 – 1068.8) | 4/10 (40.0%) | 4.0 (2.8 – 4.0) |
| p | 0.007 | 0.002 | 0.025 | 0.015 |

<sup>a</sup> Values reported are median (interquartile range), and probability using the Kruskal-Wallis one-way analysis of variance.

<sup>b</sup> Values reported are number of participants (percentage), and probability using Fisher’s exact test.

<sup>c</sup> Likert scale: 5 = excellent depth perception; 1 = poor depth perception.

Figure 4.

Graph illustrating time to completion with different image guidance displays.

Figure 5.

Graph illustrating path distance with different image guidance displays. Circle represents an outlier (greater than 1.5 times the interquartile range).

Secondary Outcomes

The secondary outcomes are summarized in Table 2. All videos were reviewed and the surgical clip was visible in all cases. The proportion of participants that recognized the unexpected finding in the various study groups was significantly different (p = 0·025). All but one participant recognized the aneurysm clip in the control arm (90% recognition of unexpected finding) while less than half recognized it when utilizing any form of image guidance, including conventional triplanar display (p = 0·003). Always-on solid overlay resulted in the greatest inattentional blindness (20% recognition of unexpected finding). Always-on wire mesh and on-demand overlays mitigated but did not negate inattentional blindness, and were comparable to triplanar displays (40% recognition of unexpected finding in all groups). The subjective depth perception also varied significantly in the augmented reality groups (p = 0·015). Solid overlay resulted in significantly worse subjective depth perception than wire mesh and inverse realism (p = 0·031 and p = 0·008, respectively).
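The comparison of recognition rates between the control arm and the pooled image guidance arms can be reproduced from the counts in Table 2 (9/10 versus 14/40). The sketch below implements a two-sided Fisher's exact test directly from the hypergeometric distribution and compares the result with the Bonferroni-corrected threshold; it assumes this pairwise comparison used Fisher's exact test, and the function name is illustrative.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Sums the probabilities of all tables with the same margins that are
    no more likely than the observed table.
    """
    row1, col1 = a + b, a + c
    n = a + b + c + d

    def prob(x: int) -> float:
        # Hypergeometric probability of x in the top-left cell.
        return comb(col1, x) * comb(n - col1, row1 - x) / comb(n, row1)

    p_observed = prob(a)
    lowest = max(0, row1 - (n - col1))
    highest = min(row1, col1)
    # Small relative tolerance guards against floating-point ties.
    return sum(prob(x) for x in range(lowest, highest + 1)
               if prob(x) <= p_observed * (1 + 1e-9))


# Rows: no image guidance, any image guidance.
# Columns: recognized the clip, did not recognize the clip.
p_value = fisher_exact_two_sided(9, 1, 14, 26)
bonferroni_alpha = 0.05 / 4  # four planned pairwise comparisons
print(round(p_value, 4), p_value < bonferroni_alpha)
```

Under these assumptions the computed value rounds to the reported p = 0·003 and falls below the corrected threshold of 0·0125.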

DISCUSSION

Image guidance technology is among the most rapidly emerging innovations in surgery.5 Although the evidence for the use of image guidance in surgery remains limited, systems utilizing triplanar display have already achieved substantial clinical penetration in neurosurgery. The recent development and refinement of augmented reality displays offers the possibility of improved operating room workflow.1,2,7,19 In the coming years, technological advances are likely to promote further the dissemination of augmented reality technology in surgery. This randomized study is the first to simultaneously compare the effectiveness and safety of image guidance systems utilizing triplanar and augmented reality displays, against standard surgery, in a validated preclinical model. To this end, this study’s findings confirm the utility of image guidance systems in neurosurgery, and suggest that new augmented reality platforms with always-on wire mesh or on-demand inverse realism overlays may result in improved surgical performance. However, it must be acknowledged that all image display modalities, including existing triplanar display, carry a risk of inattentional blindness.

Image guidance systems have two potential roles in surgery: first, to help guide the overall surgical approach to pathology; and second, to facilitate unambiguous tissue dissection, particularly in the context of oncological resection.4 Currently, most image guidance systems are designed for the former, allowing surgeons to define precisely a narrow surgical corridor to deep-seated pathology, thus minimizing the risk of iatrogenic injury. In the present preclinical randomized study, all image guidance systems resulted in significantly reduced time to completion and tool path length compared to standard surgery (p < 0·001 and p = 0·003, respectively). Surprisingly, no clinical randomized studies have evaluated the role of contemporary image guidance systems to help define the surgical trajectory, and some commentators have suggested that performing such studies now would be neither practical nor ethical.17 Perhaps the largest case-control study in the surgical literature reported on patients undergoing meningioma resection at the National Hospital for Neurology and Neurosurgery (NHNN), and concluded that the use of image guidance was associated with shorter operating times, reduced blood loss, and fewer major complications than standard surgery.14

In the present study, image guidance with augmented reality displays led to a significantly reduced tool path length compared to image guidance with existing triplanar display (p = 0·010). Augmented reality displays may improve the surgical workflow by obviating the need for the surgeon to repeatedly stop operating, apply the probe to the region of interest, and turn away from the operating field to view the image guidance monitor. In a previous preclinical randomized study, augmented reality improved the accuracy of surgical trainees in identifying skull base landmarks on a cadaver, compared to no image guidance.2 The investigators noted that they did not include a triplanar display as a comparison, and could therefore not comment on the relative merit of augmented reality over existing image guidance platforms. Augmented reality systems have also been developed and applied clinically to minimally invasive surgery.7,8 The most cited of these studies is that of Kawamata et al., who developed an augmented reality system consisting of a 2·7 mm rigid endoscope (Olympus, Tokyo, Japan), an optical tracking system, and a controller to overlay endoscopic live images with wire mesh models of tumors and neighboring anatomical landmarks.7 In a series of 12 patients with pituitary tumors undergoing endoscopic transnasal transsphenoidal hypophysectomy, the authors felt that the system improved operative workflow.

Recently, the use of augmented reality has been reported as being associated with inattentional blindness in surgeons, raising important safety concerns. Dixon et al. compared 32 surgeons of varying experience performing an endonasal navigation exercise on a cadaver.3 Surgeons were randomized into groups with or without augmented reality. Although the group with augmented reality was more accurate, they were less likely to identify unexpected findings. The authors of the study speculate that augmented reality may have led to perceptual blindness in a number of ways including attentional tunneling, increased visual clutter and jitter, and an additional camouflage effect. In the present study, all image guidance displays resulted in considerable inattentional blindness (p = 0·003), despite the surgical clip being clearly visible in all the videos reviewed. Always-on solid overlay was associated with the greatest inattentional blindness (20% recognition of unexpected findings). Wire mesh and on-demand overlays mitigated but did not negate inattentional blindness (40% recognition of unexpected findings in all cases). Interestingly, image guidance with existing triplanar displays also resulted in comparable inattentional blindness (40% recognition of unexpected findings), suggesting that it is the cognitive load rather than the overlay per se that is important.

An additional concern with augmented reality systems has been the issue of subjective depth perception. At present, the majority of endoscopes in clinical use are two-dimensional, with augmented reality overlays presented in kind. The recent introduction of three-dimensional and high definition endoscopes may allow for stereoscopic augmented reality systems. In keeping with existing literature in the field, the present study found that even with such binocular cues, many users continue to struggle to appreciate the depth of solid overlays (median 2·0/5 on Likert scale).6,9,13,18 To this end, wire mesh and inverse realism overlays resulted in better subjective depth perception (median 3·5/5 and 4·0/5 on Likert scale, respectively).

Limitations

This study has a number of limitations. All the study participants were novices yet to embark on formal surgical training. Selection of novices, rather than intermediates or experts, allowed for a relatively homogeneous sample; this was essential as the assessment of inattentional blindness with an unexpected finding meant a crossover study design could not be adopted.

Novice participants may have had difficulty in identifying the aneurysm, or recognizing the surgical clip as an abnormal finding. However, all participants observed a short video introducing the MARTYN head and demonstrating the Circle of Willis and basilar tip aneurysm. Moreover, the fact that 90% of participants in the control group did recognize the aneurysm clip, suggests the observed inattentional blindness was the result of the use of image guidance rather than surgical inexperience.

None of the study participants had prior experience with image guidance, and all were given a few minutes to familiarize themselves with the equipment. It is likely that greater familiarity would have resulted in improved performance and less inattentional blindness.

The supraorbital subfrontal approach was selected as an exemplar keyhole approach but it is infrequently performed even among experienced neurosurgeons. The approach was chosen as it is recognized as technically challenging, and may benefit from image guidance.15 In addition, a preclinical model with face, content, and construct validity was readily available.12

The MARTYN head is comparatively low fidelity, particularly with respect to its internal structure. The result is that the triplanar view lacks anatomical detail, and this may have had an impact on task performance. However, the use of segmented rather than plain images likely mitigated this (see Figure 2).

Generalizability

The generalizability of this study is likely to depend on several factors including the experience of the surgeon, and the complexity of the surgical approach. With greater experience, surgeons learn to use anatomical landmarks to guide their surgical trajectory, and the benefits of image guidance almost certainly lessen. Similarly, in relatively straightforward surgical approaches, there may be little advantage to the use of image guidance.

CONCLUSIONS

In this study, the use of image guidance systems with augmented reality overlays significantly reduced the time to task completion and tool path length, but also increased the risk of inattentional blindness. These findings support the need for less experienced surgeons using image guidance systems, particularly when undertaking complex approaches, to be carefully supervised by experts who are less cognitively loaded and better able to identify potential complications.

ACKNOWLEDGEMENTS

We thank Y. Saleh, G. Herbert, M. Edmondson, L. Chacko, and S. Morris, for their assistance in recruiting participants. We also thank M. Cooke and the Royal College of Surgeons of England for developing and providing the MARTYN model head.

Funding:

H. J. Marcus is supported by an Imperial College Wellcome Trust Clinical Fellowship.

Footnotes

Disclosure:

G.Z. Yang holds a patent in ‘non-photorealistic rendering augmented reality displays’.

References

- 1. Caversaccio M, Garcia Giraldez J, Thoranaghatte R, Zheng G, Eggli P, Nolte LP, et al. Augmented reality endoscopic system (ARES): preliminary results. Rhinology. 2008;46:156–158.

- 2. Dixon BJ, Daly MJ, Chan H, Vescan A, Witterick IJ, Irish JC. Augmented image guidance improves skull base navigation and reduces task workload in trainees: a preclinical trial. Laryngoscope. 2011;121:2060–2064. doi: 10.1002/lary.22153.

- 3. Dixon BJ, Daly MJ, Chan H, Vescan AD, Witterick IJ, Irish JC. Surgeons blinded by enhanced navigation: the effect of augmented reality on attention. Surgical endoscopy. 2012 doi: 10.1007/s00464-012-2457-3.

- 4. Hughes-Hallett A, Mayer EK, Marcus HJ, Cundy TP, Pratt PJ, Darzi AW, et al. Augmented Reality Partial Nephrectomy: Examining the Current Status and Future Perspectives. Urology. 2014;83:266–273. doi: 10.1016/j.urology.2013.08.049.

- 5. Hughes-Hallett A, Mayer EK, Marcus HJ, Cundy TP, Pratt PJ, Parston G, et al. Quantifying Innovation in Surgery. Annals of Surgery. 2014 doi: 10.1097/SLA.0000000000000662.

- 6. Johnson LG, Edwards P, Hawkes D. Surface transparency makes stereo overlays unpredictable: the implications for augmented reality. Studies in health technology and informatics. 2003;94:131–136.

- 7. Kawamata T, Iseki H, Shibasaki T, Hori T. Endoscopic augmented reality navigation system for endonasal transsphenoidal surgery to treat pituitary tumors: technical note. Neurosurgery. 2002;50:1393–1397. doi: 10.1097/00006123-200206000-00038.

- 8. Kockro RA, Tsai YT, Ng I, Hwang P, Zhu C, Agusanto K, et al. Dex-ray: augmented reality neurosurgical navigation with a handheld video probe. Neurosurgery. 2009;65:795–807. doi: 10.1227/01.NEU.0000349918.36700.1C. discussion 807-798.

- 9. Lerotic M, Chung AJ, Mylonas G, Yang GZ. Pq-space based non-photorealistic rendering for augmented reality. Medical image computing and computer-assisted intervention: MICCAI … International Conference on Medical Image Computing and Computer-Assisted Intervention. 2007;10:102–109. doi: 10.1007/978-3-540-75759-7_13.

- 10. Lovo EE, Quintana JC, Puebla MC, Torrealba G, Santos JL, Lira IH, et al. A novel, inexpensive method of image coregistration for applications in image-guided surgery using augmented reality. Neurosurgery. 2007;60:366–371. doi: 10.1227/01.NEU.0000255360.32689.FA. discussion 371-362.

- 11. Marcus HJ, Darzi A, Dipankar N. Surgical Simulation to Evaluate Surgical Innovation: Preclinical Studies With MARTYN. Bulletin of The Royal College of Surgeons of England. 2013;95:299.

- 12. Marcus HJ, Hughes-Hallett A, Pratt P, Yang GZ, Darzi A, Nandi D. Validation of MARTYN to simulate the keyhole supraorbital subfrontal approach. Bulletin of The Royal College of Surgeons of England. 2014;96:120–121.

- 13. Maurer CR, Sauer F, Hu B, Bascle B, Geiger B, Wenzel F, et al. Augmented-reality visualization of brain structures with stereo and kinetic depth cues: system description and initial evaluation with head phantom. Proc. SPIE. 2001;4319:445.

- 14. Paleologos TS, Wadley JP, Kitchen ND, Thomas DG. Clinical utility and cost-effectiveness of interactive image-guided craniotomy: clinical comparison between conventional and image-guided meningioma surgery. Neurosurgery. 2000;47:40–47. doi: 10.1097/00006123-200007000-00010. discussion 47-48.

- 15. Reisch R, Marcus H, Koechlin N, Hugelshofer M, Stadie A, Kockro R. Transcranial endoscope-assisted keyhole surgery: anterior fossa. Innovative Neurosurgery. 2013;1:77–89.

- 16. Schulz KF, Altman DG, Moher D, Group C. CONSORT 2010 statement: updated guidelines for reporting parallel group randomised trials. BMJ. 2010;340:c332. doi: 10.1136/bmj.c332.

- 17. Smith TL, Stewart MG, Orlandi RR, Setzen M, Lanza DC. Indications for image-guided sinus surgery: the current evidence. Am J Rhinol. 2007;21:80–83. doi: 10.2500/ajr.2007.21.2962.

- 18. Swan JE, 2nd, Jones A, Kolstad E, Livingston MA, Smallman HS. Egocentric depth judgments in optical, see-through augmented reality. IEEE transactions on visualization and computer graphics. 2007;13:429–442. doi: 10.1109/TVCG.2007.1035.

- 19. Thoranaghatte RU, Giraldez JG, Zheng G. Landmark based augmented reality endoscope system for sinus and skull-base surgeries. Conf Proc IEEE Eng Med Biol Soc. 2008;2008:74–77. doi: 10.1109/IEMBS.2008.4649094.

- 20. Yushkevich PA, Piven J, Hazlett HC, Smith RG, Ho S, Gee JC, et al. User-guided 3D active contour segmentation of anatomical structures: significantly improved efficiency and reliability. Neuroimage. 2006;31:1116–1128. doi: 10.1016/j.neuroimage.2006.01.015.