Abstract

Purpose

To develop a consensus opinion regarding capturing diagnosis-timing in coded hospital data.

Methods

As part of the World Health Organization International Classification of Diseases-11th Revision initiative, the Quality and Safety Topic Advisory Group is charged with enhancing the capture of quality and patient safety information in morbidity data sets. One such feature is a diagnosis-timing flag. The Group has undertaken a narrative literature review, scanned national experiences focusing on countries currently using timing flags, and held a series of meetings to derive formal recommendations regarding diagnosis-timing reporting.

Results

The completeness of diagnosis-timing reporting continues to improve with experience and use; studies indicate that it enhances risk-adjustment and may have a substantial impact on hospital performance estimates, especially for conditions/procedures that involve acutely ill patients. However, studies suggest that its reliability varies, is better for surgical than medical patients (kappa in hip fracture patients of 0.7–1.0 versus kappa in pneumonia of 0.2–0.6) and is dependent on coder training and setting. It may allow simpler and more precise specification of quality indicators.

Conclusions

As the evidence indicates that a diagnosis-timing flag improves the ability of routinely collected, coded hospital data to support outcomes research and the development of quality and safety indicators, the Group recommends that a classification of ‘arising after admission’ (yes/no), with permitted designations of ‘unknown or clinically undetermined’, will facilitate coding while providing flexibility when there is uncertainty. Clear coding standards and guidelines with ongoing coder education will be necessary to ensure reliability of the diagnosis-timing flag.

Keywords: world health organization, international classification of diseases, diagnosis-timing indicators, present-on-admission flag, condition-onset flag, patient safety, hospital performance

Introduction

Hospital morbidity data coded in the International Classification of Diseases (ICD) are widely used internationally in surveillance, funding, research and measurement of quality of care and performance. Until recently a limitation has been an inability to time the onset of conditions; for example, to distinguish whether a coded diagnosis was present-on-admission or arose during the hospital stay. A sizable proportion of the latter diagnoses may represent adverse events or complications of care that are of particular interest for quality and safety reporting, or for adjusting hospital payment based on diagnosis-related groups.

In this paper, we summarize the experience and evidence regarding the use of diagnosis-timing indicators, and offer recommendations to countries with ICD-coded hospital data systems. These recommendations are a product of the work of the Quality and Safety Topic Advisory Group (QS-TAG) of the World Health Organization Family of International Classifications (WHO-FIC), which has been developing an improved ontology for quality and safety-related diagnoses in the 11th Revision of the International Classification of Diseases (ICD-11). In the course of its work, the QS-TAG recognized that ICD-coded data would be more useful for many purposes, including international comparison and benchmarking, if standards and definitions for data reporting could be harmonized across countries [1–3]. The international implementation of diagnosis-timing indicators represents an important opportunity for future enhancement of coded hospital data systems.

Sources of information

Two members of the Group (PR and VS) conducted the scholarly literature review, searching titles and abstracts in PubMed and Google Scholar for 1991 to 2010 and subsequently updating the search to August 2014. The search used the following keywords: ‘present-on-admission’, ‘present at admission’, ‘diagnosis-type’, ‘diagnosis timing’, ‘date stamped’, ‘date stamping’, ‘hospital-acquired conditions’, ‘condition present at admission (CPAA)’, ‘hospital associated conditions’ and hyphenated versions of each phrase. This information was supplemented by interviews with stakeholders, informal surveys and/or environmental scans of coding practices in countries that participate in the WHO-FIC Network (http://www.who.int/classifications/network/en/), reviews of scholarly and gray literature sources (including white papers, agency reports, etc.) and relevant web sources (including agency and organizational websites and reports). We did not use MeSH headings in our search and acknowledge that this may have affected the literature included in the review.

The results of the initial literature review were then presented to QS-TAG (see Acknowledgements for membership) over three sequential meetings from 2010 through 2012. Information from the literature review is described in a narrative format below, as the literature review undertaken is not amenable to systematic review of a focused question. The resulting narrative review informed the development of QS-TAG recommendations. These formal QS-TAG recommendations for the harmonized implementation of diagnosis-timing reporting reflect consensus by all members of the Group.

Coding schemes for classification of diagnosis timing

At least three countries have introduced a field to record diagnosis-timing. Canada was the pioneer in 1976 when it introduced diagnosis-type as a mandatory field in its coded hospital data (Table 1) [4]. Notably, the Canadian categories mix diagnosis-timing with whether a diagnosis was ‘most responsible’ for the treatment provided and whether it was a ‘morphology code.’

Table 1.

Chronology of diagnosis-timing adoption

| Year | Location | Name of field | Categories |
|---|---|---|---|
| 1976 | All Canadian provinces except Quebec | Diagnosis-type | In Canada, the indicator is a single-digit numerical code, with diagnosis-type 2 representing diagnoses that have arisen after admission. As well: ‘M’ for most responsible diagnosis/main condition; ‘Type (1)’ for a condition that existed pre-admission, comorbid conditions that were active and notable during a stay; ‘Type (3)’ for a condition for which a patient may or may not have received treatment, but which is a comorbidity and ‘Type (4)’ for a morphology code. |
| 1992 | Victoria, Australia | Vic Prefix | ‘P’ for a primary diagnosis for which the patient received treatment or investigation; ‘A’ for an associated condition which may have been the underlying disease for the condition being treated; ‘C’ for a condition which was not present at the time of admission and ‘M’ for a morphology code (Now superseded by Condition Onset Flag below). |
| 1994 | California, USA | Condition Present at Admission Modifier | The POA field, one for each diagnosis field, could take on one of three values: ‘present at admission’; ‘not present at admission’ and a state-specific value for ‘uncertain or unknown’ (Now superseded by Diagnosis timing reporting below). |
| 1996 | New York, USA | | |
| 2002 | Wisconsin, USA | | |
| 2006 | Australia | Diagnosis Onset Type | ‘1’ for primary condition; ‘2’ for post-admit condition and ‘9’ for unknown or uncertain (Now superseded by Condition Onset Flag below). |
| 2007 | USA | Diagnosis-timing reporting | ‘Y’ for present at time of inpatient admission; ‘N’ for not present at time of inpatient admission; ‘U’ for documentation insufficient; ‘W’ for clinically undetermined and ‘1’ for exempt from POA reporting. |
| 2008 | Australia | Condition Onset Flag | ‘1’ for condition with onset during the episode of admitted patient care; ‘2’ for condition not noted as arising during the episode of admitted patient care and ‘9’ for not reported. |

Beginning in 1992, the State of Victoria, Australia introduced the ‘Vic Prefix’ to its hospital data [5]. With evidence mounting as to the utility of diagnosis-timing, Australia introduced the Diagnosis Onset Type nationally in 2006 [6, 7] (Table 1).

The US States of New York, California and Wisconsin began requiring use of a ‘Present-on-admission’ flag by all non-federal hospitals in 1994, 1996 and 2002 respectively [8] and in October 2007, the US Centers for Medicare & Medicaid Services introduced mandatory diagnosis-timing reporting on hospital inpatient claims for Medicare beneficiaries. Only ‘critical access’ hospitals, mostly small hospitals in rural areas, were exempt (5% of discharges, 25–30% of hospitals nationwide) [9]. At least 38 US states, representing over 80% of the nation's population, are now collecting diagnosis-timing data in their all-payer inpatient discharge data sets [10]. The US will transition to ICD-10-CM on 1 October 2015. Its approach (Table 1) includes a separate ‘exempt’ category that includes diagnosis codes for which diagnosis-timing status is irrelevant or inherent to the code, such as codes from Chapter 21 of ICD-10 [Persons with potential health hazards related to socioeconomic and psychosocial circumstances (Z55-Z65)]. Exempt codes are intended to reduce burden on hospital coders. The US scheme also distinguishes two reasons for missing information: ‘documentation insufficient to determine if condition was present at the time of inpatient admission’ versus ‘provider unable to clinically determine whether the condition was present at the time of inpatient admission.’ The rationale for this distinction rests on recent efforts to reduce hospital payment for selected hospital-acquired conditions, in order to discourage hospitals from using ‘insufficient documentation’ as an excuse to avoid financial penalties. However, we are not aware of empirical evidence that requiring clinicians to explain missing diagnosis-timing actually reduces gaming behavior by hospitals.
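
To illustrate how the national schemes in Table 1 might be collapsed to a common ‘arose after admission’ concept, a minimal sketch follows. The lookup tables and the function name are hypothetical and built only from the category descriptions in Table 1; in particular, the handling of the US ‘exempt’ value and of Canadian diagnosis types other than 2 is an assumption made for this example, not a rule taken from any national standard.

```python
from typing import Optional

# Hypothetical lookup tables derived from the category descriptions in Table 1.
# True  = condition arose after admission
# False = condition did not arise after admission
# None  = unknown, undetermined, exempt or not reported
US_POA = {"Y": False, "N": True, "U": None, "W": None, "1": None}
AUSTRALIA_COF = {"1": True, "2": False, "9": None}
# Assumption: Canadian types M, 1 and 3 are treated as not arising after
# admission; type 4 (morphology code) carries no timing information.
CANADA_DIAGNOSIS_TYPE = {"M": False, "1": False, "2": True, "3": False, "4": None}

SCHEMES = {"US": US_POA, "AU": AUSTRALIA_COF, "CA": CANADA_DIAGNOSIS_TYPE}


def arose_after_admission(scheme: str, code: str) -> Optional[bool]:
    """Translate a national diagnosis-timing code into a common flag.

    Codes that are not in the lookup table are returned as None (unknown).
    """
    return SCHEMES[scheme].get(code.strip().upper())


print(arose_after_admission("US", "N"))  # US 'N' = not present at admission
print(arose_after_admission("AU", "2"))  # not noted as arising during the episode
```

Note that the direction of the US flag is the reverse of the Australian one: a US ‘N’ and an Australian ‘1’ both denote a condition that arose in hospital.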

Completeness and variation of diagnosis-timing reporting

In the US, only 0.2% of all secondary diagnoses from non-federal acute care hospitals in California during 2003 had missing diagnosis-timing flags, whereas a much higher proportion of New York discharges (6–8%) had missing flags [11, 12]. Moreover, within New York, substantial geographic variation across regions was found in the classification of conditions as uncertain in their diagnosis-timing [13]. However, there was also evidence of improving diagnosis-timing coding over time; the percentage of secondary diagnoses reported as uncertain or missing diagnosis-timing status in California declined from 1.3% in 1998 to 0.2% in 2004 [14]. Across all diagnoses on Medicare inpatient claims in 2011, 77.57% were reported as present on admission, 6.44% as not present on admission, 15.73% as exempt, 0.23% as ‘inadequate documentation,’ and 0.02% as ‘cannot be clinically determined’ [15].

An analysis of over 5 million secondary diagnosis fields in 2011–2012 data from the State of Victoria, Australia, indicates that with review and feedback, it is possible to ensure near-full completeness of diagnosis-timing flags. The accuracy of the coding is subsequently assessed in an independently conducted audit program (Personal communication, Department of Health, Victoria).
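
Completeness figures of the kind reported above are simple tabulations of the flag values across diagnosis fields. The sketch below shows one way this could be computed; the function name and the sample values are made up for illustration and are not drawn from any of the cited data sets.

```python
from collections import Counter


def timing_distribution(flags):
    """Percentage of diagnosis fields carrying each timing value.

    Blank or None values are counted under the label 'missing'.
    """
    counts = Counter((f or "").strip().upper() or "missing" for f in flags)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {value: 100 * n / total for value, n in counts.items()}


# Made-up flags using the US value set (Y, N, U, W, 1).
sample = ["Y", "Y", "N", "", "1", None, "Y", "U"]
dist = timing_distribution(sample)
print(dist["Y"], dist["missing"])
```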

Validity of diagnosis-timing reporting

Several studies suggest that at least some hospitals misclassify hospital-acquired complications as present-on-admission.

For example, using nurse-recoded data from 1200 randomly sampled adult medical-surgical records from three hospitals in Calgary, Alberta, Canada as a gold standard, 9 of 12 complications were over-classified as present-on-admission (under-identified as arising in hospital) by the original hospital coders: acute myocardial infarction (AMI) by 40%, atrial fibrillation by 5%, atelectasis by 13%, bowel obstruction by 20%, cerebrovascular disease by 1%, pleural effusion by 5%, pneumonia by 3%, respiratory failure by 7% and urinary tract infection (UTI) by 2% [16]. Only acute post-hemorrhagic anemia, acute renal failure and heart failure were more likely to be classified as present-on-admission on recoding than on the hospital's original coding.

Almost all of the publicly available information about diagnosis-timing accuracy in the US comes from two states, California [11, 17] and New York [11, 12], one hospital system (University of Michigan) [18] and Medicare inpatient claims data. Diagnosis-timing information may be more accurate for surgical patients than for medical patients. When randomly sampled medical records for community-acquired pneumonia (CAP) and hip fracture in California were re-abstracted, agreement on diagnosis-timing reporting was high for hip fracture patients (kappa 0.7–1.0). In contrast, agreement on diagnosis-timing status for CAP patients was lower (kappa 0.2–0.6) [19]. Among 1059 records of patients admitted for AMI, heart failure, CAP or percutaneous coronary intervention (PCI) to 48 hospitals in California, diagnosis-timing reporting accuracy (with a gold standard based on two-abstractor agreement and physician adjudication) ranged from 60% for septicemia among patients admitted for pneumonia to 82% for pulmonary edema in the setting of AMI [20]. Finally, among 318 randomly sampled Medicare inpatient claims with one of five conditions of interest (catheter-associated urinary tract infection, venous catheter-associated infection, stage III or IV pressure ulcer, falls with trauma or severe manifestations of poor glycemic control) reported as present on admission, only five (1.6%) were found to have been hospital-acquired [21].

The training and setting of the person assigning diagnosis-timing status may affect its reliability and validity. There was moderate agreement between nurses' and coders' assignment of diagnosis-timing status (kappa = 0.4) among 184 cases with any of 12 Patient Safety Indicators (PSIs) from the Agency for Healthcare Research and Quality (AHRQ); coders were more likely to misclassify apparent in-hospital events as present-on-admission than nurses [22]. Incorrectly classifying diagnoses that arose in hospital as present-on-admission was slightly more frequent than the reverse (14 versus 12%), with for-profit hospitals nearly twice as likely as other hospitals to over-report present-on-admission status, whereas teaching hospitals were about twice as likely to underreport present-on-admission status [20]. In a subsequent simulation study, the same authors found that ‘inaccuracy in POA recording is not the primary cause of differences in hospital rank when POA is added to risk-adjustment models of AMI mortality’ (i.e. less than 5% of hospitals changed ranks by at least 10% due to POA inaccuracy, compared with 25% that changed ranks by at least 10% when risk-adjustment was limited to POA diagnoses) [23].
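
The agreement statistics quoted in this section are kappa values, which correct the observed agreement between two raters for the agreement expected by chance. As a reminder of how such a figure is obtained, a minimal sketch of Cohen's kappa follows; the ten paired flags are invented for illustration and do not come from any of the cited studies.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Proportion of items on which the two raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance from each rater's marginal frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


# Made-up flags for ten records (Y = present on admission, N = not present).
coder = ["Y", "Y", "N", "Y", "N", "Y", "Y", "N", "Y", "Y"]
nurse = ["Y", "N", "N", "Y", "N", "Y", "N", "N", "Y", "Y"]
print(round(cohen_kappa(coder, nurse), 2))  # prints 0.6
```

In this example the raters agree on 8 of 10 records (0.8), chance agreement is 0.5, and kappa is therefore (0.8 − 0.5)/(1 − 0.5) = 0.6.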

When physician documentation is ambiguous, coders may tend to classify diagnoses as present-on-admission as their default option, especially at for-profit hospitals. Furthermore, there will always be clinical scenarios where coders will need to make difficult judgments on diagnosis timing (e.g. whether pneumonia was truly hospital-acquired if it was diagnosed early in the hospital stay). However, this type of clinical nuance is a challenge inherent to all disease coding, because coders in most health systems are not clinicians and must work within the constraints of often-incomplete documentation in clinical records.

Implications for risk-adjustment

Using diagnosis-timing information in comorbidity-based risk-adjustment has several consequences [24–28]. First, the odds ratios for in-hospital mortality associated with the presence of some comorbidities decrease, presumably because these comorbidities confer greater risk when they arise acutely during a hospitalization than when they are chronic. Second, with diagnosis-timing information, additional covariates that would otherwise be excluded from risk-adjustment (because of uncertainty as to whether they arose during hospitalization) can be added to enhance the discrimination of multivariable risk-adjustment models. This enhancement may shrink associations between hospital characteristics (e.g. surgical volume) and adjusted outcomes toward the null, presumably due to reduced confounding [29].
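
One way to picture the role of the flag in risk-adjustment is as a filter applied before comorbidity covariates are derived. The sketch below is an illustration under stated assumptions: the record layout, the function name and the three comorbidity groups (defined here by ICD-10 code prefixes) are hypothetical, and excluding diagnoses of unknown timing is a conservative choice made for the example rather than a recommendation from the literature reviewed.

```python
def comorbidity_covariates(diagnoses, comorbidity_groups):
    """Return the comorbidity groups that were present on admission.

    diagnoses: list of (icd_code, arose_after_admission) pairs, where the
        second element is True, False or None (unknown).
    comorbidity_groups: dict mapping a group name to a tuple of ICD code
        prefixes.
    """
    present = set()
    for code, arose_after in diagnoses:
        if arose_after is not False:
            continue  # skip in-hospital and unknown-timing diagnoses
        for group, prefixes in comorbidity_groups.items():
            if code.startswith(prefixes):
                present.add(group)
    return present


# Hypothetical groups and a hypothetical stay.
groups = {
    "diabetes": ("E10", "E11"),
    "heart_failure": ("I50",),
    "renal_failure": ("N17",),
}
stay = [("E11.9", False), ("I50.0", True), ("N17.9", None)]
print(comorbidity_covariates(stay, groups))  # only diabetes is retained
```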

Implications for assessing hospital complications and mortality

Among approaches that use coded hospital data to assess complications of care, the impact of diagnosis-timing has been studied most extensively for the AHRQ PSIs [30]. Several authors have applied diagnosis-timing information from various settings to the PSIs to estimate the percentage of adverse (numerator) events captured by these PSIs that appear to be ‘false positive’, because diagnosis-timing reporting indicates that [23] they did not arise in hospital [11, 22, 31–33]. Among the current PSIs, in the absence of diagnosis-timing information, the true positive rate is generally high (i.e. over 70%) for iatrogenic pneumothorax, postoperative hemorrhage or hematoma, postoperative physiologic or metabolic derangement, postoperative respiratory failure, postoperative wound dehiscence and accidental puncture or laceration. The true positive rate is generally low (i.e. less than 50%) for pressure ulcer, postoperative hip fracture and postoperative deep vein thrombosis or pulmonary embolism. Other PSIs, such as Central Venous Catheter-Related Bloodstream Infection and Postoperative Sepsis, demonstrate intermediate or inconsistent results across studies [34]. PSI events that are over-reported as present-on-admission may compromise the sensitivity of the PSIs even as the positive predictive value improves. This tradeoff between sensitivity and positive predictive value may have different implications for different PSIs. Notably, diagnosis-timing indicators may allow the redefinition of PSIs by dropping exclusion criteria intended to avoid capturing events with a higher likelihood of being present-on-admission [34].
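
The ‘true positive’ percentages discussed above amount to the share of indicator-flagged events whose triggering diagnosis is recorded as having arisen in hospital. A minimal sketch of that calculation follows; the function name and sample values are hypothetical, and leaving events of unknown timing out of the denominator is an assumption made for this example.

```python
def share_arising_in_hospital(flagged_events):
    """Fraction of flagged events whose diagnosis arose after admission.

    flagged_events: iterable with one entry per flagged discharge:
        True = arose after admission, False = present on admission,
        None = timing unknown (excluded from the denominator).
    Returns None when no event has known timing.
    """
    known = [e for e in flagged_events if e is not None]
    if not known:
        return None
    return sum(known) / len(known)


# Made-up example: five flagged discharges, one with unknown timing.
events = [True, True, False, True, None]
print(share_arising_in_hospital(events))  # prints 0.75
```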

Diagnosis-timing reporting also has an impact on improving hospital mortality models [24, 28, 29, 35, 36]. For example, two risk-adjustment models for in-hospital mortality based on data from 23 Canadian hospitals, with and without use of diagnosis-type indicators, differed in ranking 19 of the 23 hospitals, with approximately half going up in the rankings, and half going down [24]. Similar findings have been reported across multiple conditions and procedures, from coronary artery bypass surgery to aspiration pneumonia.

Recommendations from the QS-TAG regarding diagnosis-timing reporting

Despite imperfect validity, the evidence to date indicates that a diagnosis-timing flag improves the ability of routinely collected, coded hospital discharge data to support outcomes research and the development of quality and safety indicators. As more countries adopt diagnosis-timing reporting, it is important to consider how the reporting categories are constructed and defined, to enhance the international comparability of these data in the future.

The QS-TAG recommends a three-level diagnosis-timing designation of ‘condition arising after admission (i.e. during hospitalization)’ (yes/no), with a permitted designation of ‘unknown/clinically undetermined’ (Table 2). This classification will facilitate accurate, reliable coding while providing flexibility when there is coding uncertainty. The ‘unknown/clinically undetermined’ option reduces coder burden and minimizes the collection of meaningless data. One category for missing information should be sufficient in jurisdictions where policy-makers do not plan to use diagnosis-timing information to determine payment or set penalties. By contrast, we judged the Canadian approach to be complicated, as the options are not mutually exclusive, no option exists to report when diagnosis-timing cannot be determined and the rationale for reporting chronic diagnoses that do not require treatment or other intervention is unclear.

Table 2.

WHO TAG Recommendation for a diagnosis-timing flag

| Designation | Definition |
|---|---|
| Y | A condition arising after admission |
| N | A condition NOT arising after admission |
| U | Unknown or clinically undetermined |
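
For readers who work with coded data, the three designations in Table 2 could be represented as a small enumerated type. The sketch below is one possible representation, not part of the recommendation itself; the class and function names are hypothetical, and treating blank or unrecognized values as ‘unknown’ is an assumption made for this example.

```python
from enum import Enum


class DiagnosisTiming(Enum):
    """Three-level flag following Table 2."""
    AROSE_AFTER_ADMISSION = "Y"
    NOT_AROSE_AFTER_ADMISSION = "N"
    UNKNOWN = "U"


def parse_timing(raw):
    """Parse a raw field value into a DiagnosisTiming member.

    Assumption: blank, None or unrecognized values are mapped to UNKNOWN.
    """
    try:
        return DiagnosisTiming((raw or "").strip().upper())
    except ValueError:
        return DiagnosisTiming.UNKNOWN


print(parse_timing(" y "))  # DiagnosisTiming.AROSE_AFTER_ADMISSION
print(parse_timing(None))   # DiagnosisTiming.UNKNOWN
```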

The WHO has conceptually endorsed the QS-TAG recommendations for inclusion of a diagnosis-timing mechanism in ICD-11. However, the recommended approach to code diagnosis timing in ICD-11 is still being finalized by the WHO at this time (May 2015). The current operational proposal is for diagnosis-timing codes to reside in a new ‘Extension codes’ section of the ICD-11. The plan is for this new Extension codes section to be available primarily for coding causes of hospital morbidity (it will be less applicable to the mortality coding use case). Constructs to be captured in the new Extension codes section of ICD-11 include the diagnosis-timing concept discussed here, as well as code concepts for laterality (i.e. left vs right side of body), ‘family history of …’, ‘personal history of …’ and a diagnostic ‘rule out …’ concept. Information on timing of diagnosis will be captured by linking, through clustering, an extension code for ‘diagnosis arising during hospital stay’ with a diagnosis code for the condition in question that arose during hospitalization, or alternatively an extension code for ‘present on admission’ when there is a desire to explicitly flag a diagnosis as being present at admission. This mechanism is the leading idea for diagnosis timing in ICD-11 because it resides within the classification itself, where it will be available internationally in a unified format.

There are several challenges to the introduction of diagnosis-timing coding. First, timing a diagnosis depends on the quality of medical record documentation and the training and judgment of the coder. Even in systems that have had diagnosis-timing coding for more than 20 years, the accuracy or reliability of these timing variables is only moderate. Having an explicit option for ‘unknown’ or ‘uncertain’ may improve coders' ability to report this information without substantially compromising the usefulness of the data. Second, the primary use of coded hospital data for payment purposes may lead coders to neglect diagnosis-timing reporting or to over-report hospital-acquired conditions as present-on-admission, depending on how diagnosis-timing information is used in public reporting programs and in setting payment rates. Third, the addition of diagnosis-timing may require more time for coders to complete a hospital record.

In order to ensure completeness and accuracy of diagnosis-timing coding, we recommend coding guidelines to support complete coding of diagnosis-timing status for all conditions that affect care during a hospital stay. Such guidelines and coding standards should be provided to help clinicians and coders to handle ambiguity. For example, Official Guidelines for Coding and Reporting in the US instruct coders to ‘assign Y for conditions diagnosed during the admission that were clearly present but not diagnosed until after admission occurred: diagnoses are considered present-on-admission if at the time of admission they are documented as suspected, possible, rule out, differential diagnosis, or constitute an underlying cause of a symptom that is present at the time of admission’ [10]. The QS-TAG in its recommendations to the WHO will encourage countries to adopt similar guidelines for diagnosis-timing implementation in ICD-11 (or sooner if countries wish to consider implementation in existing ICD-10 coded data systems).

Training or retraining of clinicians will be needed to reinforce how their medical record documentation will be used, and thus the importance of documenting whether each diagnosis was present on admission. Similarly, regular training (and re-training) of coders will be required to improve and confirm their ability to use available documentation to code diagnosis-timing. Such coding can be complex, especially with incomplete or conflicting information in the medical record. Some countries use routine code-recode audits with feedback to ensure system-wide coding quality, while other countries use more focused or payer-specific audit programs. While there may be an increase in coding burden associated with international adoption of a coding approach to diagnosis timing, three countries have successfully adopted such coding and the resulting information has enhanced case mix systems and strengthened the quality and safety use case. Clearly, the experience of these three countries suggests that the benefits of this additional coding have been sufficient to justify continuation of the practice.

The literature on diagnosis-timing is growing, but is currently limited to specific US states, Canada and Australia. We recommend that more research on the completeness of coding, reliability and validity of diagnosis-timing be conducted, as this will support efforts to improve its accuracy and use. Some evidence of the reliability of diagnosis-timing may come from the code-recode audits conducted by government data custodians to ensure the quality of coded data. We encourage the release of these results into the public domain.

Finally, the introduction of diagnosis-timing coding may have implications for the size and complexity of hospital data sets, especially with a renewed emphasis on coding both chronic comorbidities and acute complications that affect care during the stay. In relation to these latter considerations, the QS-TAG is also exploring the implications of code clustering mechanisms that explicitly link two or more related codes, and coding guidelines for setting the maximum number of diagnosis fields in hospital data sets (Fig. 1).

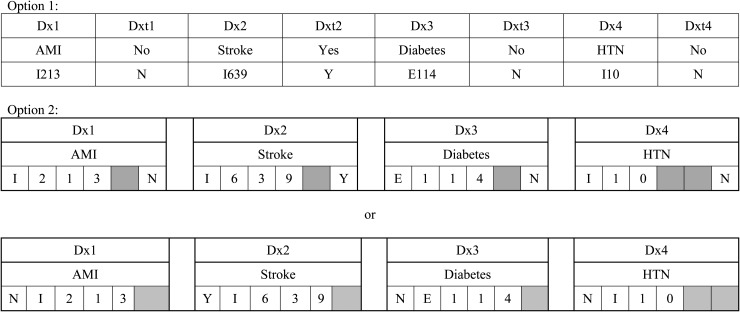

Figure 1.

Two options of how a diagnosis-timing flag could be included in hospital morbidity data. We describe how a diagnosis-timing flag (classified based on whether a diagnosis ‘arose after admission’) can provide valuable insights into the timing of diagnostic codes using the following scenario: a patient visits the Emergency Department due to severe chest pain and is admitted to hospital with an AMI. The AMI is treated, but the patient suffers a stroke, which extends his or her hospital stay. The patient has diabetes and hypertension on admission. In this scenario, the AMI is the reason for admission because the symptom chest pain is the clinical manifestation of an AMI, which is diagnosed shortly after admission and is coded as diagnosis1. AMI does not arise after admission; consequently the diagnosis-timing flag1 associated with diagnosis1 would equal ‘N’ for ‘No’ (Option 1). Stroke arises during hospitalization (diagnosis2 = stroke; diagnosis-timing flag2 = ‘Y’ = ‘Yes’), whereas both diabetes and hypertension are present-on-admission (diagnosis3 = diabetes, diagnosis-timing flag3 = ‘N’; diagnosis4 = hypertension, diagnosis-timing flag4 = ‘N’). An alternative format of diagnosis-timing assignment would be to add an extra digit to the diagnostic ICD code, rather than have it be a separate field (Option 2).
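
The two record layouts described for Fig. 1 can be sketched in a few lines. This is an illustration only: the ICD-10 codes chosen for the scenario, the field names and the choice to append the flag letter directly to the code in Option 2 are assumptions made for the example, not a specification of either option.

```python
# Illustrative ICD-10 codes for the scenario (assumed for this example),
# paired with the Table 2 flag: Y = arose after admission, N = did not.
stay = [
    ("I21.9", "N"),  # AMI, the reason for admission
    ("I64", "Y"),    # stroke, arose during hospitalization
    ("E11.9", "N"),  # diabetes, present on admission
    ("I10", "N"),    # hypertension, present on admission
]


def option_1(diagnoses):
    """Option 1: a separate flag field alongside each diagnosis field."""
    record = {}
    for i, (code, flag) in enumerate(diagnoses, start=1):
        record[f"diagnosis{i}"] = code
        record[f"timing_flag{i}"] = flag
    return record


def option_2(diagnoses):
    """Option 2: the flag appended to the diagnosis code as an extra character."""
    return {
        f"diagnosis{i}": code + flag
        for i, (code, flag) in enumerate(diagnoses, start=1)
    }


print(option_1(stay))
print(option_2(stay))
```

Option 1 keeps the diagnosis code unchanged but doubles the number of fields, whereas Option 2 keeps the number of fields fixed but requires every consumer of the data to strip the final character before looking up the code.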

Conclusion

As there is evidence that diagnosis-timing assignment has great potential to improve morbidity information in ICD-coded hospital data, the QS-TAG formally endorses the international implementation of diagnosis-timing flags in ICD-11. The preceding review of the issue supports a constructive dialog amongst the health data coding community about diagnosis-timing reporting in order to improve coding practices and advance the international harmonization of hospital data.

Funding

This work was supported by the Agency for Healthcare Research and Quality (grant number 5R13HS020543-02); National Center for Advancing Translational Sciences; National Institutes of Health (grant number UL1 TR000040); Canadian Patient Safety Institute and the Canadian Institute for Health Information. Drs W.A.G. and H.Q. are funded as Alberta Innovates Health Solutions Senior Health Scholars.

Acknowledgements

The content is solely the responsibility of the authors and does not necessarily represent the official views of the AHRQ; the National Institutes of Health (NIH); the Canadian Patient Safety Institute (CPSI) and the Canadian Institute for Health Information (CIHI). The WHO QS-TAG membership includes: W.A.G. (co-chair), H.A.P. (co-chair), Marilyn Allen, S.B., B.B., Cyrille Colin, S.E.D., Alan Forster, Yana Gurevich, James Harrison, Lori Moskal, William Munier, Donna Pickett, H.Q., P.S.R., Brigitta Spaeth-Rublee, Danielle Southern and V.S. The authors acknowledge the expertise and contributions of the following individuals at TAG meetings: David Van der Zwaag, Christopher Chute, Eileen Hogan, Ginger Cox, Bedirhan Ustun and Nenad Kostanjek. They have all contributed to both the TAG work plan and some aspects of the paper.

References

- 1.Ghali WA, Pincus HA, Southern DA, et al. ICD-11 for quality and safety: overview of the WHO Quality and Safety Topic Advisory Group. Int J Qual Health Care 2013;25:621–5. [DOI] [PubMed] [Google Scholar]

- 2.Drosler SE, Romano PS, Sundararajan V, et al. How many diagnosis fields are needed to capture safety events in administrative data? Findings and recommendations from the WHO ICD-11 Topic Advisory Group on Quality and Safety. Int J Qual Health Care 2014;26:16–25. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Quan H, Moskal L, Forster AJ, et al. International variation in the definition of ‘main condition’ in ICD-coded health data. Int J Qual Health Care 2014;26:511–515. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Canadian Institute for Health Information. Diagnosis Typing: Current Canadian and International Practices 2004. Available from: http://secure.cihi.ca/cihiweb/en/downloads/Diagnosis_Typing_Background_v1.pdf (27 March 2012, date last accessed).

- 5.State Government of Victoria. Victorian Additions to Australian Coding Standards 2001. Available from: http://www.health.vic.gov.au/hdss/icdcoding/vicadditions/vicadd01.pdf (23 March 2012, date last accessed).

- 6.Australian Institute for Health and Welfare. Episode of Admitted Patient Care—Diagnosis Onset Type, Code N 2006. Available from: http://meteor.aihw.gov.au/content/index.phtml/itemId/270192 (2 April 2012, date last accessed).

- 7.Australian Institute for Health and Welfare. Episode of Admitted Patient Care—Condition Onset Flag, Code N 2008. Available from: http://meteor.aihw.gov.au/content/index.phtml/itemId/354816 (2 April 2012, date last accessed).

- 8.Kassed C, Kowlessar N, Pfuntner A, et al. The Case for the Present-on-Admission (POA) Indicator: Update 2011 Methods Report. HCUP Methods Series Report #2011-05. ONLINE U.S. Agency for Healthcare Research and Quality, November 1, 2011. (2 April 2012, date last accessed). [Google Scholar]

- 9.Medicare Learning Network. Hospital-Acquired Conditions and Present on Admission Indicator Reporting Provision: Department of Health and Human Services Centers for Medicare & Medicaid Services ; Available from: http://www.cms.gov/Outreach-and-Education/Medicare-Learning-Network-MLN/MLNProducts/Downloads/wPOAFactSheet.pdf (2 October 2014, date last accessed).

- 10.Centers, for, Medicare, &, Medicaid, Services. Hospital-Acquired Conditions (Present on Admission Indicator) Coding: Centers for Medicare & Medicaid Services; 2014. Available from: http://www.cms.gov/Medicare/Medicare-Fee-for-Service-Payment/HospitalAcqCond/Coding.html (2 October 2014, date last accessed).

- 11.Houchens RL, Elixhauser A, Romano PS. How often are potential patient safety events present on admission? Jt Comm J Qual Patient Saf 2008;34:154–63. [DOI] [PubMed] [Google Scholar]

- 12.Pine M, Fry DE, Jones B, et al. Screening algorithms to assess the accuracy of present-on-admission coding. Perspect Health Inf Manag 2009;6:2. [PMC free article] [PubMed] [Google Scholar]

- 13.Rangachari P. Coding for quality measurement: the relationship between hospital structural characteristics and coding accuracy from the perspective of quality measurement. Perspect Health Inf Manag 2007;4:3. [PMC free article] [PubMed] [Google Scholar]

- 14. Coffey R, Milenkovic M, Andrews RM. The Case for the Present-on-Admission (POA) Indicator. Report #2006-01. U.S. Agency for Healthcare Research and Quality; June 2006. Available from: http://www.hcup-us.ahrq.gov/reports/methods/2006_1.pdf (27 March 2012, date last accessed).

- 15. Research Triangle International. Centers for Medicare & Medicaid Services RTI Evaluation of FY 2011 Data, Chart A. Research Triangle International; 2011. Available from: http://www.rti.org/reports/cms/ (2 October 2014, date last accessed).

- 16. Quan H, Parsons GA, Ghali WA. Assessing accuracy of diagnosis-type indicators for flagging complications in administrative data. J Clin Epidemiol 2004;57:366–72.

- 17. Glance LG, Osler TM, Mukamel DB, et al. Impact of the present-on-admission indicator on hospital quality measurement: experience with the Agency for Healthcare Research and Quality (AHRQ) Inpatient Quality Indicators. Med Care 2008;46:112–9.

- 18. Meddings J, Saint S, McMahon LF, Jr. Hospital-acquired catheter-associated urinary tract infection: documentation and coding issues may reduce financial impact of Medicare's new payment policy. Infect Control Hosp Epidemiol 2010;31:627–33.

- 19. Haas J, Luft H, Romano PS, et al. Report for the California Hospital Outcomes Project. Community-acquired pneumonia, 1996: Model Development and Validation. Office of Statewide Health Planning and Development, 2010.

- 20. Goldman LE, Chu PW, Osmond D, et al. The accuracy of present-on-admission reporting in administrative data. Health Serv Res 2011;46(6pt1):1946–62.

- 21. RTI International. Accuracy of Coding in the Hospital-Acquired Conditions—Present on Admission Program. Final Report. Centers for Medicare & Medicaid Services, 2012.

- 22. Bahl V, Thompson MA, Kau TY, et al. Do the AHRQ patient safety indicators flag conditions that are present at the time of hospital admission? Med Care 2008;46:516–22.

- 23. Goldman LE, Chu PW, Bacchetti P, et al. Effect of Present-on-Admission (POA) reporting accuracy on hospital performance assessments using risk-adjusted mortality. Health Serv Res 2014;50:922–38.

- 24. Ghali WA, Quan H, Brant R. Risk adjustment using administrative data: impact of a diagnosis-type indicator. J Gen Intern Med 2001;16:519–24.

- 25. Glance LG, Dick AW, Osler TM, et al. Accuracy of hospital report cards based on administrative data. Health Serv Res 2006;41(4 Pt 1):1413–37.

- 26. Pine M, Jordan HS, Elixhauser A, et al. Enhancement of claims data to improve risk adjustment of hospital mortality. JAMA 2007;297:71–6.

- 27. Roos LL, Stranc L, James RC, et al. Complications, comorbidities, and mortality: improving classification and prediction. Health Serv Res 1997;32:229–38; discussion 39–42.

- 28. Stukenborg GJ, Wagner DP, Harrell FE, Jr, et al. Hospital discharge abstract data on comorbidity improved the prediction of death among patients hospitalized with aspiration pneumonia. J Clin Epidemiol 2004;57:522–32.

- 29. Stukenborg GJ, Kilbridge KL, Wagner DP, et al. Present-at-admission diagnoses improve mortality risk adjustment and allow more accurate assessment of the relationship between volume of lung cancer operations and mortality risk. Surgery 2005;138:498–507.

- 30. AHRQ. Quality Indicators—Guide to Patient Safety Indicators. Department of Health and Human Services Agency for Healthcare Research and Quality, 2003.

- 31. Naessens JM, Campbell CR, Berg B, et al. Impact of diagnosis-timing indicators on measures of safety, comorbidity, and case mix groupings from administrative data sources. Med Care 2007;45:781–8.

- 32. Scanlon MC, Harris JM, II, Levy F, et al. Evaluation of the Agency for Healthcare Research and Quality pediatric quality indicators. Pediatrics 2008;121:e1723–31.

- 33. Jackson TJ, Michel JL, Roberts R, et al. Development of a validation algorithm for ‘present on admission’ flagging. BMC Med Inform Decis Mak 2009;9:48.

- 34. McConchie S, Shepheard J, Waters S, et al. The AusPSIs: the Australian version of the Agency of Healthcare Research and Quality patient safety indicators. Aust Health Rev 2009;33:334–41.

- 35. Dalton JE, Glance LG, Mascha EJ, et al. Impact of present-on-admission indicators on risk-adjusted hospital mortality measurement. Anesthesiology 2013;118:1298–306.

- 36. Stukenborg GJ. Hospital mortality risk adjustment for heart failure patients using present on admission diagnoses: improved classification and calibration. Med Care 2011;49:744–51.