Abstract

Purpose

Prospective motion correction for MRI and other imaging modalities is commonly based on the assumption of affine motion, i.e. rotations, shearing, scaling and translations. In addition, it often involves transformations between different reference frames, especially for applications with an external tracking device. The goal of this work is to develop a computational framework for motion correction based on homogeneous transforms.

Theory and Methods

The homogeneous representation of affine transformations uses 4×4 transformation matrices applied to 4-dimensional augmented vectors. It is demonstrated how homogeneous transforms can be used to describe the motion of slice objects during an MRI scan. Furthermore, we extend the concept of homogeneous transforms to gradient and k-space vectors, and show that the 4th dimension of an augmented k-space vector encodes the complex phase of the corresponding signal sample due to translations.

Results

The validity of describing motion tracking in real space and k-space using homogeneous transformations only is demonstrated in phantom experiments.

Conclusion

Homogeneous transformations allow for a conceptually simple, consistent and computationally efficient theoretical framework for motion correction applications.

Introduction

Motion correction in MRI is becoming increasingly important due to its ability to maximize effective spatial resolution and image quality by virtually freezing patient motion. This is achieved by locking the scan geometry to the mobile object reference frame, based on feedback from a system that tracks object motion. Object motion can be derived from MR navigators (1-3), from MR-visible markers (4,5), or from external tracking devices (e.g. optical camera systems (6-9)). Once information about object motion is available, motion correction can be performed prospectively by updating the imaging gradients and slice objects (6,7,9), retrospectively by implicitly or explicitly updating the k-space trajectory used during image reconstruction (10), or by a combination of both approaches (11,12). Many motion correction techniques are based on the assumption of rigid body object motion, and calculations commonly involve several coordinate systems (i.e. reference frames). This is particularly true for external tracking devices, whose internal coordinate system is different from that of the scanner (7-9,13).

Rigid body motion involves rotations and translations. Homogeneous coordinates and transformations (represented by augmented 4-dimensional, or 4D, vectors and 4×4 matrices) allow for a matrix formulation for both translations and rotations. Homogeneous transforms have been previously used in motion-corrected MRI. For example, Aksoy et al. (6,11) used homogeneous matrices to model the calibration process and calculate gradient updates. The effect of affine transformations on the k-space signal is described in (14) and (15). However, most current commercial implementations still describe position in terms of slice offsets (i.e. translations), and the orientation of slices and the field-of-view in terms of gradient rotations (8,9). Additionally, gradient orientation and slice offsets are usually treated as completely separate entities. These approaches may lead to lengthy and complicated calculations when transformations between multiple reference frames are involved.

In this work, we demonstrate how the complete chain of transformations including the camera reference frame, the initial slice orientation and position and resulting updates can be described using homogeneous transformations only. We further introduce augmented 4D gradient and k-space vectors that allow for a direct application of homogeneous transformations to k-space coordinates and gradient orientations.

Theory

Reference frames

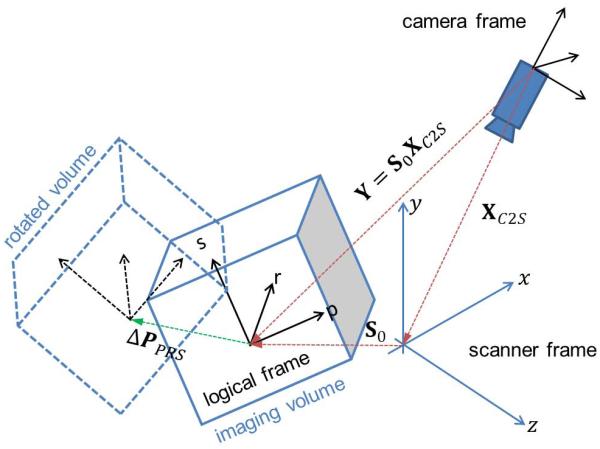

A motion correction system with an external tracking device typically involves three stationary reference frames: camera, scanner and the logical frame at the start of the scan (Figure 1). The camera frame is the internal reference frame of the tracking device. The stationary scanner frame is the reference frame of the MRI machine as defined by the orientation and direction of the physical gradient fields (XYZ-frame) used for spatial encoding. The logical frame depends on the initial orientation of the imaging volume or slice, and describes the spatial encoding process in terms of logical gradients (e.g. phase, read, slice direction or PRS-frame).

Figure 1.

Reference frames and transformations for a typical system using an external motion tracking device (here: camera) that does not directly track in the scanner frame.

Motion correction is commonly conceptualized and implemented as a two-step process. In step 1, the primary tracking data (in the internal reference frame of the tracking system) are transformed into scanner coordinates (XYZ), and object motion is calculated in scanner coordinates. This is achieved using an experimentally determined “cross-calibration” transformation, which describes the camera pose in the XYZ-frame (9,11,16). In step 2, motion in scanner coordinates is used to adjust the gradient orientation matrix, slice positions and FOV, i.e. to update the logical reference frame.

One major reason for using a two-step approach is that external motion tracking systems are not fully integrated into commercial scanners. Consequently, the external tracking system typically acts as an independent front-end that provides tracking data to the scanner. Updates to the logical reference frame are subsequently performed on the scanner using the internal software algorithms available. Furthermore, since current scanner environments typically treat the gradient matrix, slice objects, and FOV properties as separate entities, calculations for rotations and translations are usually performed separately as well, which can result in rather complex algorithms. In the following sections, we describe how the consistent use of homogeneous transformations can substantially simplify the calculations required for motion correction, and lead to more efficient implementations.

Rigid body motion and homogeneous transformations

Rigid body motion involves a combination of rotations (3×3 matrix R̂) and translations (3×1 column vector d̂ = (dx, dy, dz)T). However, rotations and translations of a 3-dimensional vector r̂ can be expressed as a single matrix multiplication if the position vector r̂ is augmented by a 4th dimension. The augmented 4×1 vector r is of the form r = (r̂, 1)T = (rx, ry, rz, 1)T with the 4th coefficient set to a constant value of 1. Here, the hat is used to indicate regular 3D vectors and matrices, and omitted when using augmented 4D coordinates (homogeneous transforms). Transformation of an augmented vector r is then written as

$$r' = A\,r \qquad [1]$$

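where A is a 4×4 homogeneous transform matrix of the form $A = \begin{pmatrix} \hat{R} & \hat{d} \\ 0 & 1 \end{pmatrix}$. It can be easily verified that Eq. [1] is equivalent to a vector rotation and subsequent translation, i.e. the first three components of the transformed vector are R̂r̂ + d̂ and the 4th component remains 1. Furthermore, it is easy to prove that the transpose of A is $A^T = \begin{pmatrix} \hat{R}^T & 0 \\ \hat{d}^T & 1 \end{pmatrix}$ and the inverse is $A^{-1} = \begin{pmatrix} \hat{R}^T & -\hat{R}^T\hat{d} \\ 0 & 1 \end{pmatrix}$.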
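The two properties stated above can be checked numerically. The following is a minimal NumPy sketch, assuming the block form A = [[R̂, d̂], [0, 1]]; the helper names `make_transform` and `invert_rigid` and all numerical values are illustrative assumptions and do not come from the original work.

```python
import numpy as np

def make_transform(R, d):
    """Assemble the 4x4 homogeneous matrix A = [[R, d], [0, 1]]."""
    A = np.eye(4)
    A[:3, :3] = R
    A[:3, 3] = d
    return A

def invert_rigid(A):
    """Closed-form inverse of a rigid transform: [[R^T, -R^T d], [0, 1]]."""
    R, d = A[:3, :3], A[:3, 3]
    return make_transform(R.T, -R.T @ d)

# Illustrative values (assumed): 30 degree rotation about z, translation in mm.
theta = np.deg2rad(30.0)
R = np.array([[np.cos(theta), -np.sin(theta), 0.0],
              [np.sin(theta),  np.cos(theta), 0.0],
              [0.0,            0.0,           1.0]])
d = np.array([5.0, -2.0, 10.0])
A = make_transform(R, d)

r_hat = np.array([1.0, 2.0, 3.0])   # ordinary 3D position
r = np.append(r_hat, 1.0)           # augmented 4D position (4th component = 1)

r_moved = A @ r                     # Eq. [1]: one matrix multiplication
A_inv = invert_rigid(A)
```

Here `r_moved[:3]` should agree with `R @ r_hat + d`, and `A_inv @ A` should be the identity up to rounding. The closed-form inverse avoids a general 4×4 inversion, which may matter when updates are computed in real time.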

Representing slices and imaging volume as homogeneous matrices

First, rather than treating slice (or imaging volume) offsets and the rotation matrix as two separate objects, we represent a slice object as a single homogeneous matrix that combines translations and rotations. Specifically, a given slice is characterized by the offset of its center relative to (physical) isocenter, as well as its rotation relative to the scanner reference frame (Figure 1). Thus, the initial slice object is represented by its homogeneous matrix S0 relative to the XYZ-frame. S0 maps the augmented gradient vector gPRS = (phase, read, slice, 0)T (augmented gradient vectors are introduced below) from the logical frame to the physical frame by

$$g_{XYZ} = S_0\,g_{PRS} \qquad [2]$$
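As an illustration of a slice object stored as a single homogeneous matrix, the sketch below builds a hypothetical S0 and applies it to an augmented gradient vector (4th component 0) and to an augmented position (4th component 1). The base orientation, the helper `rot_z` and the numbers are assumptions chosen for illustration; they are not the vendor-specific slice description used in the experiments.

```python
import numpy as np

def rot_z(deg):
    """Rotation by `deg` degrees about the third axis."""
    t = np.deg2rad(deg)
    c, s = np.cos(t), np.sin(t)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

# Hypothetical slice: the columns of R0 are the phase, read and slice
# directions expressed in the XYZ-frame (in-plane rotation of 15 degrees).
R0 = rot_z(15.0)
offset = np.array([19.3, 21.0, 10.0])   # slice center relative to isocenter, mm

S0 = np.eye(4)
S0[:3, :3] = R0
S0[:3, 3] = offset

# Augmented gradient vector: 4th component 0, so only the rotation acts on it.
g_prs = np.array([0.0, 1.0, 0.0, 0.0])  # unit read gradient
g_xyz = S0 @ g_prs

# Augmented position: 4th component 1, so the offset is applied as well.
center_prs = np.array([0.0, 0.0, 0.0, 1.0])
center_xyz = S0 @ center_prs
```

With these assumptions, `g_xyz[:3]` is the read direction in scanner coordinates and `center_xyz[:3]` is the slice offset, so one matrix carries both pieces of information that are otherwise stored separately.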

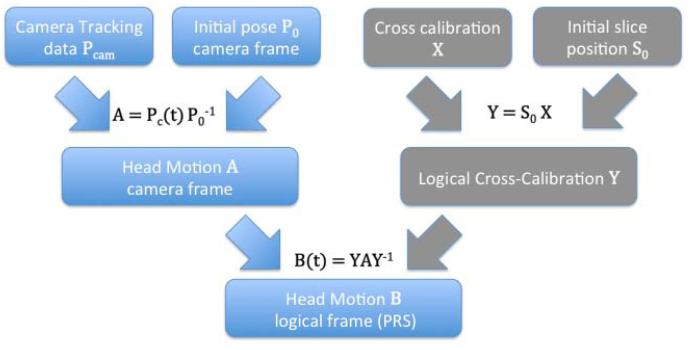

“Logical cross calibration” and transforming motion from the camera to logical frame

Once slice objects are represented as homogeneous matrices, it is possible to describe the process of motion correction in a simplified framework. First, we define the “logical cross calibration” Y as the pose of the camera relative to the logical frame. Specifically, for any slice i with initial orientation S0i, the associated logical cross calibration Yi is defined as:

$$Y_i = S_{0i}^{-1}\,X \qquad [3]$$

where X is the “conventional” cross-calibration (from camera to physical coordinate frame).

The primary measurement of object motion occurs in the reference frame of the tracking device, and is calculated relative to the initial pose of the tracking marker Pcam(t = t0) at time point t = t0, typically at the start of the imaging sequence. Pcam(t) represents a subsequent pose of the marker at time t in the camera frame. Object motion A(t) as seen by the camera is then given by

$$A(t) = P_{cam}(t)\,P_{cam}(t_0)^{-1} \qquad [4]$$

The logical cross calibration Yi from Eq. [3] allows the direct transformation of poses in the camera frame into poses in the logical frame, resulting in Pprs(t0) = YiPcam(t0) and Pprs(t) = YiPcam(t). Therefore, motion B(t) in the logical PRS-frame is given by

$$B(t) = P_{prs}(t)\,P_{prs}(t_0)^{-1} = Y_i\,A(t)\,Y_i^{-1} \qquad [5]$$

As shown in Figure 2, the proposed algorithm starts by initializing the logical cross calibration (for each slice) at the start of a given scan. Subsequent motion correction updates in logical coordinates can then be calculated as per Eq. [5], and require a single matrix operation. It is then trivial to extract the necessary rotational and translational changes from the homogeneous matrix B(t), and update the rotation matrix and slice position accordingly.
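The update procedure described above can be sketched in a few lines of NumPy. The sketch assumes the following reading of the definitions in the text: Y = S0⁻¹X for the logical cross calibration, A(t) = Pcam(t) Pcam(t0)⁻¹ for motion in the camera frame, and B(t) = Y A(t) Y⁻¹ for motion in the logical frame. All poses, the cross-calibration X and the helper names (`rot`, `hom`, `motion_in_logical_frame`) are hypothetical.

```python
import numpy as np

def rot(axis, deg):
    """Rotation matrix about `axis` by `deg` degrees (Rodrigues formula)."""
    a = np.asarray(axis, dtype=float)
    a = a / np.linalg.norm(a)
    K = np.array([[0.0, -a[2], a[1]],
                  [a[2], 0.0, -a[0]],
                  [-a[1], a[0], 0.0]])
    t = np.deg2rad(deg)
    return np.eye(3) + np.sin(t) * K + (1.0 - np.cos(t)) * (K @ K)

def hom(R, d):
    """4x4 homogeneous matrix [[R, d], [0, 1]]."""
    A = np.eye(4)
    A[:3, :3] = R
    A[:3, 3] = d
    return A

# Hypothetical inputs (units: degrees and mm).
X = hom(rot([0, 1, 0], 40.0), [0.0, 120.0, -300.0])   # camera -> XYZ
S0 = hom(rot([0, 0, 1], 15.0), [19.3, 21.0, 10.0])    # logical -> XYZ
P0 = hom(rot([1, 0, 0], 10.0), [10.0, 20.0, 400.0])   # marker pose at t0
P_t = hom(rot([1, 1, 0], 25.0), [12.0, 18.0, 405.0])  # marker pose at t

# Initialization, done once per slice at the start of the scan.
Y = np.linalg.inv(S0) @ X
Y_inv = np.linalg.inv(Y)

def motion_in_logical_frame(P_t, P0, Y, Y_inv):
    """Motion B(t) in the PRS-frame from marker poses in the camera frame."""
    A_t = P_t @ np.linalg.inv(P0)   # motion as seen by the camera
    return Y @ A_t @ Y_inv          # same motion expressed in the logical frame

B = motion_in_logical_frame(P_t, P0, Y, Y_inv)
R_update, d_update = B[:3, :3], B[:3, 3]   # rotation and translation updates
```

As a consistency check, `B` should equal `(Y @ P_t) @ inv(Y @ P0)`, i.e. the motion computed directly from the poses expressed in the logical frame. In a real-time setting, `np.linalg.inv(P0)` could also be cached, since the reference pose does not change during the scan.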

Figure 2.

One-step transformation from motion in the camera frame directly to motion in the logical frame. For each slice, a “logical cross calibration” Y is calculated and stored at the start of the scan, and then used repeatedly in calculating motion updates in logical coordinates.

Augmented K-space

In this section, we extend the use of homogeneous coordinates to k-space and the MR signal equation, and introduce augmented 4D gradient and k-space vectors.

The received signal s(t) in MRI is commonly written as

| [6] |

with ρ(r̂) being the complex-valued spin density at spatial location r̂ = (rx, ry, rz)T. Φ(r̂, t) is the accumulated phase of a single spin at position r̂ due to the influence of the temporally varying gradient field ĝ = (gx, gy, gz)T. The phase variation Φ at position r̂ is given by integrating the effects of the magnetic field gradient over time:

$$\Phi(\hat{r}, t) = \gamma \int_0^t \hat{g}(\tau)^T\,\hat{r}\;d\tau \qquad [7]$$

By defining the k-space variable as $\hat{k}(t) = \gamma \int_0^t \hat{g}(\tau)\,d\tau$, with γ the gyromagnetic ratio, and under the assumption of a stationary object, Eq. [7] can be rewritten as the scalar product of the spatial position with the k-space vector:

$$\Phi(\hat{r}, t) = \hat{r}^T\,\hat{k}(t) \qquad [8]$$

Phase evolution in case of rigid body motion and homogeneous representation

Equations [6] to [8] demonstrate that spatial encoding in MRI is ultimately based on a periodic phase pattern imposed on the object’s magnetization, as determined by the scalar product of r̂ and k̂. In analogy to the augmented 4×1 spatial vector r = (r̂, 1)T = (rx, ry, rz, 1)T, we now define an augmented gradient vector as g = (gx, gy, gz, c0)T, with the 4th component c0 yet to be determined. We also define an augmented k-space vector as

$$k = (\hat{k}, \varphi_0)^T = (k_x, k_y, k_z, \varphi_0)^T \qquad [9]$$

The 4th component φ0 is still undetermined (as is c0), but it will become clear below that φ0 represents an initial phase. Rewriting the phase evolution of Eq. [7] during rigid body motion for an initial position r0 in terms of homogeneous coordinates yields:

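$$\Phi(r, t) = \gamma \int_0^t \left(A(\tau)\,r_0\right)^T g_0(\tau)\,d\tau = \gamma \int_0^t r_0^T\,A(\tau)^T\,g_0(\tau)\,d\tau \qquad [10]$$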

In general, both A(t) and g0(t) are time dependent and therefore cannot be separated under the integral. However, for many motion tracking applications it is reasonable to assume that no motion occurs during short (millisecond) periods of gradient encoding; this corresponds to a zero order Taylor approximation in time for A. Therefore, for the remainder of this paper, we assume that A has no time dependence. Consequently, A can be separated from the integral in Eq.[10], which then becomes:

$$\Phi(r, t) = r_0^T\,A^T\,\gamma \int_0^t g_0(\tau)\,d\tau = r_0^T\,A^T\,k_0(t) \qquad [11]$$

The phase evolution is now given as the scalar product of the initial pose r0 with a transformed augmented k-space vector k = ATk0. Since the phase Φ(r, t) is a scalar, its value based on Eq. [11] has to be identical to that determined with the conventional approach, i.e. using separate rotations and translations. First we calculate the transformed augmented k-space vector k:

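$$k = A^T k_0 = \begin{pmatrix} \hat{R}^T & 0 \\ \hat{d}^T & 1 \end{pmatrix} \begin{pmatrix} \hat{k}_0 \\ \varphi_0 \end{pmatrix} = \begin{pmatrix} \hat{R}^T \hat{k}_0 \\ \hat{d}^T \hat{k}_0 + \varphi_0 \end{pmatrix} = \begin{pmatrix} k_x \\ k_y \\ k_z \\ \varphi \end{pmatrix} \qquad [12]$$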

Here, (kx, ky, kz)T are the components of a rotated k-space vector k̂ = R̂Tk̂0. The fourth component φ equals the sum of φ0 plus the scalar product of the translations with the non-rotated k-space vector, as in the case of a translation only. The scalar product for spatial encoding as per Eq. [11] then becomes:

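$$r_0^T\,k = \hat{r}_0^T\,\hat{k} + \varphi = \hat{r}_0^T\,\hat{R}^T\hat{k}_0 + \hat{d}^T\hat{k}_0 + \varphi_0 = (\hat{R}\,\hat{r}_0 + \hat{d})^T\,\hat{k}_0 + \varphi_0 \qquad [13]$$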
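The agreement between the homogeneous and the conventional phase calculation can be verified numerically. The sketch below assumes A = [[R̂, d̂], [0, 1]] and an augmented k-space vector of the form (kx, ky, kz, φ0); the rotation, translation, k-space sample and position are arbitrary illustrative values.

```python
import numpy as np

# Illustrative rigid motion (assumed values): 20 degrees about x, shift in mm.
t = np.deg2rad(20.0)
R = np.array([[1.0, 0.0,        0.0],
              [0.0, np.cos(t), -np.sin(t)],
              [0.0, np.sin(t),  np.cos(t)]])
d = np.array([3.0, -1.5, 7.0])

A = np.eye(4)
A[:3, :3] = R
A[:3, 3] = d

# Augmented k-space sample (kx, ky, kz, phi0), here in rad/mm with phi0 = 0.
k0 = np.array([0.3, -0.1, 0.2, 0.0])

# Transformed augmented k-space vector.
k = A.T @ k0

# Augmented initial position of a spin.
r0_hat = np.array([4.0, -7.0, 2.0])
r0 = np.append(r0_hat, 1.0)

# Phase from homogeneous coordinates: a single 4D scalar product.
phase_homogeneous = r0 @ k

# Phase from the conventional approach: rotate, translate, then 3D product.
phase_conventional = (R @ r0_hat + d) @ k0[:3] + k0[3]
```

The first three components of `k` are the rotated k-space vector `R.T @ k0[:3]`, and the 4th component collects the translation-induced phase `d @ k0[:3]` in addition to the initial value `k0[3]`.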

Finally, rewriting the MRI signal equation [6] in terms of homogeneous coordinates yields:

| [14] |

Consequently, homogeneous coordinates and homogeneous k-space vectors allow the signal equation in the presence of rigid body motion to be written in a very compact form:

| [15] |

Affine motion

Changes in reference frames (for instance, from the camera frame to the scanner frame) involve translations and rotations only, and can therefore be represented as rigid body transformations. Consequently, the calculations in the previous sections were based on the assumption of rigid body motion. However, a more general class of movements involves affine transformations, i.e. additional non-rigid motion components such as shear or compression and inflation (scaling). For instance, cardiac and abdominal movements have been approximated using affine transformations (17). Importantly, our theory can be readily generalized to include arbitrary affine transformations. This is achieved by replacing the rotation matrix R̂ in the 4×4 matrix with a (linear) affine transformation matrix F̂.
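A short numerical sketch of the affine generalization follows. It assumes the same block structure, with the rotation replaced by an invertible linear matrix F̂; the particular shear and scaling values are hypothetical. Note that for a general F̂ the inverse of the linear block is F̂⁻¹ rather than its transpose, so the closed-form inverse for rigid motion has to be adapted accordingly.

```python
import numpy as np

# Hypothetical linear part combining scaling and shear (invertible).
F = np.array([[1.10, 0.05, 0.00],
              [0.00, 0.95, 0.02],
              [0.00, 0.00, 1.00]])
d = np.array([2.0, -4.0, 1.0])   # translation in mm (assumed)

A = np.eye(4)
A[:3, :3] = F
A[:3, 3] = d

# Inverse of an affine transform: [[F^-1, -F^-1 d], [0, 1]].
F_inv = np.linalg.inv(F)
A_inv = np.eye(4)
A_inv[:3, :3] = F_inv
A_inv[:3, 3] = -F_inv @ d

# The phase identity still holds with the transformed k-space vector A^T k0.
k0 = np.array([0.25, 0.10, -0.30, 0.0])   # (kx, ky, kz, phi0)
k = A.T @ k0

r0_hat = np.array([-3.0, 6.0, 1.5])
r0 = np.append(r0_hat, 1.0)

phase_homogeneous = r0 @ k
phase_conventional = (F @ r0_hat + d) @ k0[:3] + k0[3]
```

Only the linear block changes; the 4th component of `k` still carries the translation-induced phase, exactly as in the rigid case.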

Methods

Experiments were performed on a 3T Tim Trio scanner (Siemens, Erlangen) using a 12-channel RF coil. Validation of Eqs. [5], [12] and [15] was performed on a structured phantom that was imaged in two different positions using a 3D-GRE sequence (TR/TE = 10/5 ms, 2 mm isotropic resolution). The initial scan geometry (FOV) was along the sagittal direction, rotated by 15° around the slice axis with additional off-center shifts of 19.3, 21.0, and 10 mm along the x, y, and z-axes. The vendor-specific description of the initial slice position was converted to the homogeneous representation of the slice position and orientation S0.

Motion between the two phantom positions was recorded using an optical tracking device (in-bore camera system, Metria Innovation, (18)). The motion approximately consisted of a 60° rotation around the scanner’s xy-axis and 10 mm translations along all three axes. Retrospective motion correction was implemented using an iterative augmented SENSE (10) reconstruction. The forward model (encoding matrix) was updated incorporating motion feedback according to Eq. [15]. Since no intra-scan motion took place, a single transformation was applied to the entire 3D k-space: starting with a Cartesian trajectory, all k-space vectors were transformed using Eq. [12]. The starting value of the 4th component of the augmented k-space vector was set to zero for the original Cartesian trajectory.
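The trajectory update described above can be illustrated with a toy forward model. The sketch below is not the augmented SENSE reconstruction used in this work; it only checks, for a handful of hypothetical point sources, that the signal of the moved object sampled on the original Cartesian grid equals the signal of the unmoved object evaluated on the transformed augmented trajectory k = ATk0. The sign convention of the exponent, the rotation axis and all numerical values are assumptions.

```python
import numpy as np

def rot_z(deg):
    """Rotation by `deg` degrees about z."""
    t = np.deg2rad(deg)
    c, s = np.cos(t), np.sin(t)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def signal(points, density, k_hat):
    """Signal of point sources; ASSUMED convention s = sum rho * exp(+i r.k)."""
    phase = k_hat @ points.T                     # (n_samples, n_points)
    return (density * np.exp(1j * phase)).sum(axis=1)

rng = np.random.default_rng(0)
points = rng.uniform(-50.0, 50.0, size=(5, 3))   # hypothetical object, mm
density = rng.uniform(0.5, 1.5, size=5)

# Static inter-scan motion (illustrative: 60 degrees about z, 10 mm shifts).
R = rot_z(60.0)
d = np.array([10.0, 10.0, 10.0])
A = np.eye(4)
A[:3, :3] = R
A[:3, 3] = d

# Augmented Cartesian trajectory, one row per sample, 4th component phi0 = 0.
axis = np.linspace(-0.5, 0.5, 4)                 # rad/mm
kx, ky, kz = np.meshgrid(axis, axis, axis, indexing="ij")
k0 = np.stack([kx.ravel(), ky.ravel(), kz.ravel(), np.zeros(kx.size)], axis=1)

# Row-wise application of k = A^T k0 to the whole trajectory.
k = k0 @ A

# Signal of the moved object on the original grid ...
moved = points @ R.T + d
s_moved = signal(moved, density, k0[:, :3])

# ... equals the signal of the unmoved object on the transformed trajectory,
# multiplied by the phase stored in the 4th component.
s_model = np.exp(1j * k[:, 3]) * signal(points, density, k[:, :3])
```

Writing the trajectory as an N×4 array lets the whole update be a single matrix product, and the 4th column can be applied directly as a per-sample phase factor in the encoding model.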

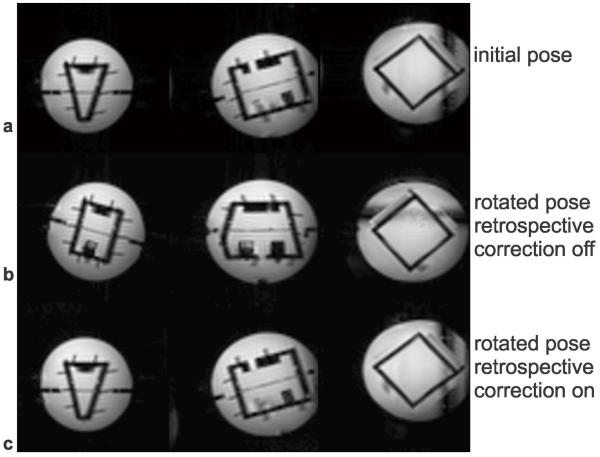

Results

Figure 3a shows three orthogonal slices of the phantom in its initial position. After moving (rotating and translating) the phantom, uncorrected images demonstrate substantial movement (Figure 3b). Retrospective motion correction, using image reconstruction according to Eq.[15], results in images that closely match those in the initial position (Figure 3c). This demonstrates that Equation [15] makes it possible to remove the effect of the static inter-scan motion between Figure 3a and 3b.

Figure 3.

Retrospective correction of inter-scan motion based on augmented k-space vectors.

Discussion

We demonstrate that the complete process of transformations between reference frames in MRI, and medical imaging in general, can be described using a homogeneous representation of rigid body motion by 4×4 homogeneous matrices and vectors. This framework models all changes in reference frames, and allows efficient calculation of arbitrary (oblique) volume / slice orientations as well as actual gradient and receiver phase updates for prospective motion correction (by introducing 4D augmented gradients and k-space vectors). Furthermore, it is possible to include non-rigid body motion like shear and scaling motion in this framework.

Assuming that slices are prospectively updated to track object motion, our framework of homogeneous transformations is valid for 2D multi-slice imaging as well because, from a signal perspective, slice excitation and encoding are identical to acquiring a thin 3D volume with no phase encoding along the slice direction. In practice, the description of a stack of slices would require an array of initial slice positions S0i (e.g. with different translational components in case of non-oblique slices) and consequently, an array of logical cross calibrations Yi as well. However, if slice objects are not updated prospectively, then the disturbance of the steady-state in case of through-plane motion is not addressed by our alternative mathematical framework.
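For a stack of slices, the arrays S0i and Yi can be handled with batched matrix operations. The following sketch assumes Yi = S0i⁻¹X and Bi = Yi A Yi⁻¹; the slice spacing, the cross-calibration X, the camera-frame motion `A_t` and the helper `hom_z` are hypothetical.

```python
import numpy as np

def hom_z(deg, d):
    """Homogeneous matrix: rotation by `deg` degrees about z, translation d."""
    t = np.deg2rad(deg)
    c, s = np.cos(t), np.sin(t)
    A = np.eye(4)
    A[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    A[:3, 3] = d
    return A

n_slices = 5
slice_gap = 4.0                                   # mm (assumed)

# Stack of non-oblique slices that differ only in their offset along z.
S0 = np.tile(np.eye(4), (n_slices, 1, 1))         # shape (n_slices, 4, 4)
S0[:, 2, 3] = (np.arange(n_slices) - (n_slices - 1) / 2) * slice_gap

X = hom_z(35.0, [0.0, 100.0, -250.0])             # hypothetical camera -> XYZ
A_t = hom_z(8.0, [1.0, -2.0, 0.5])                # hypothetical camera-frame motion

# One logical cross calibration per slice, computed once at scan start.
Y = np.linalg.inv(S0) @ X                         # broadcasts over the stack
Y_inv = np.linalg.inv(Y)

# Per-slice motion in logical coordinates for the current tracking sample.
B = Y @ A_t @ Y_inv                               # shape (n_slices, 4, 4)
```

Because the slices in this example share one orientation, the rotational part of `B` is the same for every slice, while the translational part generally differs with the slice offset.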

Our initial motivation for the given formalism was a direct consequence of the need for a cross-calibration between the camera and the scanner in case of optical motion tracking. The derivation, however, applies to all tracking systems with an internal reference frame that is independent of the scanner’s reference frame. Consequently, the same framework is also useful for MR-navigators that are not acquired in the same logical coordinate system as defined by the orientation and position of the imaging field-of-view.

In conclusion, the use of homogeneous vectors and matrices makes it possible to transform motion from the camera into the scanner frame via two simple matrix multiplications. Our new approach is conceptually simpler and computationally more efficient than the common two-step process (from camera to physical to logical frame) and than treating rotations and translations separately, although the conventional approach is in no way incorrect. More generally, we believe that many aspects of MRI or other medical imaging modalities involving transformations of volumes or slices are best described as a change in reference frames, and are most conveniently formulated in terms of homogeneous transformations and augmented k-space vectors.

Acknowledgements

This project was supported by grants NIH 1R01 DA021146 and 3R01 DA021146-05S1 (BRP), NIH U54 56883 (SNRP), and NIH K02-DA16991.

References

- 1. Ehman RL, Felmlee JP. Adaptive technique for high-definition MR imaging of moving structures. Radiology. 1989;173(1):255–263. doi: 10.1148/radiology.173.1.2781017.

- 2. White N, Roddey C, Shankaranarayanan A, Han E, Rettmann D, Santos J, Kuperman J, Dale A. PROMO: Real-time prospective motion correction in MRI using image-based tracking. Magn Reson Med. 2010;63(1):91–105. doi: 10.1002/mrm.22176.

- 3. Tisdall MD, Hess AT, Reuter M, Meintjes EM, Fischl B, van der Kouwe AJ. Volumetric navigators for prospective motion correction and selective reacquisition in neuroanatomical MRI. Magn Reson Med. 2012;68(2):389–399. doi: 10.1002/mrm.23228.

- 4. Haeberlin M, Kasper L, Barmet C, Brunner DO, Dietrich BE, Gross S, Wilm BJ, Kozerke S, Pruessmann KP. Real-time motion correction using gradient tones and head-mounted NMR field probes. Magn Reson Med. 2014. doi: 10.1002/mrm.25432.

- 5. Ooi MB, Aksoy M, Maclaren J, Watkins RD, Bammer R. Prospective motion correction using inductively coupled wireless RF coils. Magn Reson Med. 2013. doi: 10.1002/mrm.24845.

- 6. Aksoy M, Forman C, Straka M, Skare S, Holdsworth S, Hornegger J, Bammer R. Real-time optical motion correction for diffusion tensor imaging. Magn Reson Med. 2011;66(2):366–378. doi: 10.1002/mrm.22787.

- 7. Qin L, van Gelderen P, Derbyshire JA, Jin F, Lee J, de Zwart JA, Tao Y, Duyn JH. Prospective head-movement correction for high-resolution MRI using an in-bore optical tracking system. Magn Reson Med. 2009;62(4):924–934. doi: 10.1002/mrm.22076.

- 8. Schulz J, Siegert T, Reimer E, Labadie C, Maclaren J, Herbst M, Zaitsev M, Turner R. An embedded optical tracking system for motion-corrected magnetic resonance imaging at 7T. MAGMA. 2012;25(6):443–453. doi: 10.1007/s10334-012-0320-0.

- 9. Zaitsev M, Dold C, Sakas G, Hennig J, Speck O. Magnetic resonance imaging of freely moving objects: prospective real-time motion correction using an external optical motion tracking system. Neuroimage. 2006;31(3):1038–1050. doi: 10.1016/j.neuroimage.2006.01.039.

- 10. Bammer R, Aksoy M, Liu C. Augmented generalized SENSE reconstruction to correct for rigid body motion. Magn Reson Med. 2007;57(1):90–102. doi: 10.1002/mrm.21106.

- 11. Aksoy M, Forman C, Straka M, Cukur T, Hornegger J, Bammer R. Hybrid prospective and retrospective head motion correction to mitigate cross-calibration errors. Magn Reson Med. 2012;67(5):1237–1251. doi: 10.1002/mrm.23101.

- 12. Maclaren J, Lee KJ, Luengviriya C, Speck O, Zaitsev M. Combined prospective and retrospective motion correction to relax navigator requirements. Magn Reson Med. 2011;65(6):1724–1732. doi: 10.1002/mrm.22754.

- 13. Forman C, Aksoy M, Hornegger J, Bammer R. Self-encoded marker for optical prospective head motion correction in MRI. Medical image analysis. 2011;15(5):708–719. doi: 10.1016/j.media.2011.05.018.

- 14. Atkinson D, Hill DL. Reconstruction after rotational motion. Magn Reson Med. 2003;49(1):183–187. doi: 10.1002/mrm.10333.

- 15. Schechter G. Respiratory Motion of the Heart: Implications for Magnetic Resonance Coronary Angiography. Johns Hopkins University; 2004.

- 16. Zahneisen B, Lovell-Smith C, Herbst M, Zaitsev M, Speck O, Armstrong B, Ernst T. Fast noniterative calibration of an external motion tracking device. Magn Reson Med. 2014;71(4):1489–1500. doi: 10.1002/mrm.24806.

- 17. Nehrke K, Bornert P. Prospective correction of affine motion for arbitrary MR sequences on a clinical scanner. Magn Reson Med. 2005;54(5):1130–1138. doi: 10.1002/mrm.20686.

- 18. Maclaren J, Armstrong BS, Barrows RT, Danishad KA, Ernst T, Foster CL, Gumus K, Herbst M, Kadashevich IY, Kusik TP, Li Q, Lovell-Smith C, Prieto T, Schulze P, Speck O, Stucht D, Zaitsev M. Measurement and correction of microscopic head motion during magnetic resonance imaging of the brain. Plos One. 2012;7(11):e48088. doi: 10.1371/journal.pone.0048088.