Abstract

Human body postures convey useful information for understanding others’ emotions and intentions. To investigate at which stage of visual processing emotional and movement-related information conveyed by bodies is discriminated, we examined event-related potentials elicited by laterally presented images of bodies with static postures and implied-motion body images with neutral, fearful or happy expressions. At the early stage of visual structural encoding (N190), we found a difference in the sensitivity of the two hemispheres to observed body postures. Specifically, the right hemisphere showed an N190 modulation both for the motion content (i.e. all the observed postures implying body movements elicited greater N190 amplitudes compared with static postures) and for the emotional content (i.e. fearful postures elicited the largest N190 amplitude), while the left hemisphere showed a modulation only for the motion content. In contrast, at a later stage of perceptual representation, reflecting selective attention to salient stimuli, an increased early posterior negativity was observed for fearful stimuli in both hemispheres, suggesting an enhanced processing of motivationally relevant stimuli. The observed modulations, both at the early stage of structural encoding and at the later processing stage, suggest the existence of a specialized perceptual mechanism tuned to emotion- and action-related information conveyed by human body postures.

Keywords: body postures, emotion perception, visual structural encoding, N190, early posterior negativity (EPN)

INTRODUCTION

Human body postures comprise a biologically salient category of stimuli, whose efficient perception is crucial for social interaction. Although in natural environments human bodies and faces are usually integrated into a unified percept, the neural networks underlying the processing of these two categories of stimuli, though closely related, seem to be distinct. In particular, neuroimaging evidence has demonstrated selective responses to human bodies in two focal brain regions: the extrastriate body area (EBA), located in the lateral occipitotemporal cortex (Downing et al., 2001), and the fusiform body area (FBA), in the posterior fusiform gyrus (Peelen and Downing, 2005; Taylor et al., 2007). Interestingly, both EBA and FBA responses generalize to schematic depictions of bodies, suggesting that body representation in these two areas is independent of low-level image features (Downing et al., 2001; Peelen et al., 2006).

As is the case with faces (e.g. Adolphs, 2002), the perceptual processing of bodies seems to represent a specialized mechanism, in which perception is configural (i.e. based on relations among the features of the stimulus), rather than based on the analysis of single body features. This is suggested, for example, by the inversion effect, a phenomenon in which bodies presented upside-down are more difficult to recognize than inverted objects (Reed et al., 2003). At the electrophysiological level, event-related potentials (ERPs) in response to bodies show a prominent negative deflection at occipitotemporal electrodes peaking in a range between 150 and 230 ms after stimulus presentation (Stekelenburg and de Gelder, 2004; Meeren et al., 2005; Van Heijnsbergen et al., 2007; Minnebusch et al., 2010). More specifically, Thierry et al. (2006) found a negative component peaking at 190 ms post-stimulus onset (N190), reflecting the structural visual encoding of bodies, which was distinct in terms of latency, amplitude and spatial distribution compared with the typical negative component elicited by the visual encoding of faces (i.e. the N170; Rossion and Jacques, 2008). The neural generators responsible for the negative deflection in response to bodies are thought to be located in a restricted area of the lateral occipitotemporal cortex, corresponding to EBA, as suggested by source localization analysis (Thierry et al., 2006), magnetoencephalographic recordings (Meeren et al., 2013) and electroencephalogram (EEG)-fMRI correlation studies (Taylor et al., 2010).

Studies on the perceptual processing of faces have shown that the component reflecting visual encoding (N170) is modulated by the emotional expressions of faces processed both explicitly (Batty and Taylor, 2003; Stekelenburg and de Gelder, 2004) and implicitly (Pegna et al., 2008, 2011; Cecere et al., 2014), suggesting that relevant emotional signals are able to influence the early stages of structural face encoding. In addition, non-emotional face movements, such as gaze and mouth movements, seem to be encoded at an early stage of visual processing and to modulate the N170 amplitude (Puce et al., 2000; Puce and Perrett, 2003; Rossi et al., 2014). At a later stage of visual processing (typically around 300 ms after stimulus onset), salient emotional faces are known to modulate the amplitude of the early posterior negativity (EPN), which reflects stimulus-driven attentional capture, in which relevant stimuli are selected for further processing (Sato et al., 2001; Schupp et al., 2004a; Frühholz et al., 2011; Calvo and Beltran, 2014).

Although faces represent a primary source of information about others’ states (Adolphs, 2002), human bodies can also be a powerful tool for inferring the internal states of others (de Gelder et al., 2010). Indeed, body postures convey information about others’ actions and emotions, both of which are useful for interpreting goals, intentions and mental states. Neuroimaging studies have shown that motion and emotion-related information conveyed by bodies activates a broad network of brain regions (Allison et al., 2000; de Gelder, 2006; Peelen and Downing, 2007). On the one hand, the observation of human motion increases activation in occipitotemporal areas close to and partly overlapping with EBA (Kourtzi and Kanwisher, 2000; Senior et al., 2000; Peelen and Downing, 2005), the superior temporal sulcus (STS), the parietal cortex (Bonda et al., 1996) and the premotor and motor cortices (Grèzes et al., 2003; Borgomaneri et al., 2014a), which might take part in perceiving and reacting to body postures (Rizzolatti and Craighero, 2004; Urgesi et al., 2014). On the other hand, emotional body postures, compared with neutral body postures, enhance activation not only at the approximate location of EBA, the fusiform gyrus and STS but also in the amygdala (de Gelder et al., 2004; Van de Riet et al., 2009) and other cortical (e.g. orbitofrontal cortex, insula) and subcortical structures (e.g. superior colliculus, pulvinar) known to be involved in emotional processing (Hadjikhani and de Gelder, 2003; Peelen et al., 2007; Grèzes et al., 2007; Pichon et al., 2008).

Although the pattern of neural activation for bodies conveying motion and emotion-related information suggests a similarity between perceptual mechanisms for faces and bodies, it is still unclear whether, like the information conveyed by faces, the information conveyed by body postures is already encoded at the early stage of structural representation and is therefore able to guide visual selective attention to favor the recognition of potentially relevant stimuli. Thus, this study was designed to investigate, using the high temporal resolution of ERPs, whether the structural encoding of bodies, reflected in the N190 component, and visual selective attention, measured by the subsequent EPN component, are influenced by motion and emotion-related information represented in body postures. To this end, EEG was recorded from healthy participants performing a visual task in which they were shown pictures of bodies. These bodies had static postures (without implied motion or emotional content), implied-motion postures without emotional content or implied-motion postures expressing emotion (fear or happiness). In addition, stimuli were peripherally presented to the left or the right of a central fixation point to investigate whether the two hemispheres differentially contribute to the processing of body postures. In keeping with previous evidence that the right hemisphere plays a prominent role in responding to bodies (Chan et al., 2004; Taylor et al., 2010) and processing emotional information (Gainotti et al., 1993; Làdavas et al., 1993; Adolphs et al., 2000; Borod, 2000), a more detailed perceptual analysis of the different body postures was expected in the right hemisphere, compared with the left. More specifically, a low-level discrimination of motion-related information, reflected by an enhancement of the N190 component in response to postures with implied motion (either neutral or emotional) compared with static postures, was expected in both hemispheres.
In contrast, discrimination of emotional content, reflected by an enhanced N190 in response to fearful compared with happy bodies, was only expected in the right hemisphere. Finally, at a later stage of visual processing, the salience of fearful body postures was expected to increase visual selective attention, resulting in an enhanced EPN component. Unlike the emotion-related modulation of the N190, we expected the EPN enhancement for salient fearful postures to occur in both hemispheres, since attention-related emotional modulations are known to occur in a widespread bilateral network of brain regions, including extrastriate occipital cortex, superior and inferior parietal areas and medial prefrontal regions (for a review, Pourtois and Vuilleumier, 2006).

METHODS

Participants

Twenty-two right-handed healthy volunteers (two males; mean age: 21.6 years; range: 20–26 years) took part in the experiment. They all had normal or corrected-to-normal vision. Since alexithymia is a relatively stable personality trait (Nemiah et al., 1976; Taylor et al., 1991), which is known to affect emotion recognition and processing (Jessimer and Markham, 1997; Parker et al., 2005), all volunteers underwent a screening for alexithymia, using the 20-item Toronto Alexithymia Scale (TAS-20; Taylor et al., 2003). Only volunteers with scores in the normal range (TAS score: >39 and <61) were selected to participate. Participants were informed about the procedure and the purpose of the study and gave written informed consent. The study was designed and performed in accordance with the ethical principles of the Declaration of Helsinki and was approved by the Ethics Committee of the Psychology Department at the University of Bologna.

Experimental task

The experimental session was run in a sound-attenuated and dimly lit room. Participants sat in a relaxed position on a comfortable chair in front of a 17’’ PC monitor (refresh rate 60 Hz) at a distance of ∼57 cm. Prior to the experiment, a short practice session was administered to familiarize the participants with the task.

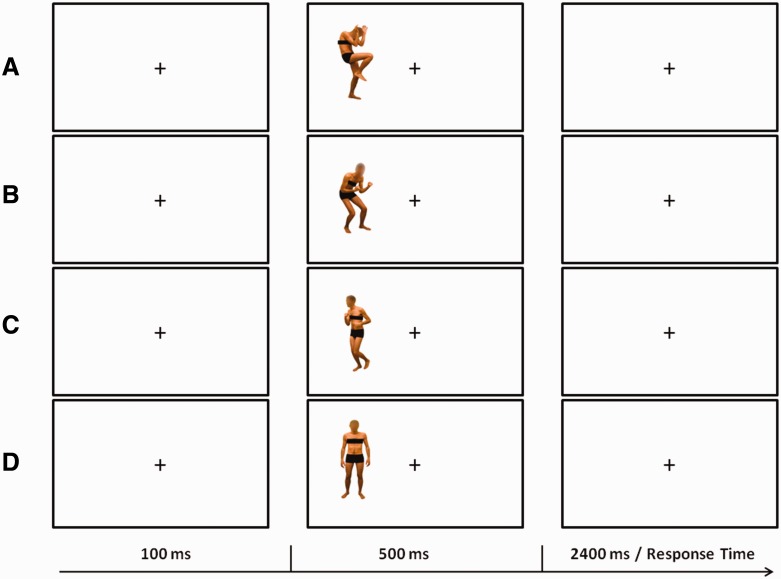

The stimuli were presented on a PC running Presentation software (Version 0.60; www.neurobs.com) and consisted of 64 static color pictures of human bodies (two males and two females; 10° × 16°) with the faces blanked out. The images were selected from a validated database (Borgomaneri et al., 2012, 2014a). Half of the stimuli were the original pictures and the other half were mirror-reflected copies. The stimuli depicted bodies in postures that either implied no motion (static body postures) or implied motion with a neutral, fearful or happy expression. In particular, the body images included 16 static body postures (static body stimuli; S) in which neither motion nor emotion was implied, 16 neutral body postures in which motion was implied (neutral body stimuli; N), 16 fearful body postures in which motion was implied (fearful body stimuli; F) and 16 happy body postures in which motion was implied (happy body stimuli; H; Figure 1). Two independent psychophysical studies (Borgomaneri et al., 2012, 2014a) provided evidence that N, F and H are subjectively rated as conveying the same amount of implied motion information and as conveying more body motion information than S stimuli. Moreover, H and F were rated as more arousing than N and S. Critically, although H and F were rated as conveying positive and negative emotional valence, respectively, these two classes of stimuli received comparable arousal ratings. The stimuli were displayed against a white background, 11° to the left [left visual field (LVF) presentation] or the right [right visual field (RVF) presentation] of the central fixation point (2°). Each trial started with a central fixation period (100 ms), followed by the stimulus (500 ms).
Participants were asked to keep their gaze fixed on the central fixation and decide whether the presented stimulus was emotional (fearful or happy) or non-emotional (static or neutral) by pressing one of two vertically arranged buttons on the keyboard. The task was selected to balance the number of stimuli assigned to each response while maximizing the number of correct responses to minimize the rate of rejected epochs. Behavioral responses were recorded during an interval of 2400 ms. Half of the subjects pressed the upper button with the middle finger to emotional stimuli and the lower button with the index finger to non-emotional stimuli, while the remaining half performed the task with the opposite button arrangement. Eye movements were monitored throughout the task with electrooculogram (EOG; see below).

Fig. 1.

Graphical representation of the trial structure in the behavioral task. The figure depicts example trials with stimuli showing fearful (A), happy (B), neutral (C) and static body postures (D).

Participants performed 12 blocks in an experimental session of ∼45 min. In half of the blocks, the stimuli were presented in the LVF, while in the remaining half, they were presented in the RVF. Blocks with LVF and RVF presentation were interleaved, and the sequence of the blocks was counterbalanced between participants. In each block, 67 trials were randomly presented (16 trials × 4 body stimuli: static, motion neutral, motion fearful, motion happy = 64 trials + 3 practice trials). Each participant completed a total of 768 trials (384 trials in the LVF and 384 in the RVF).
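The trial counts above follow from simple bookkeeping. The short Python sketch below reproduces that arithmetic; the variable names are illustrative and are not taken from the original experiment scripts.

```python
# Trial bookkeeping for the block design described above.
# All names are illustrative; only the numbers come from the text.
BLOCKS = 12
STIMULUS_TYPES = ("static", "neutral", "fearful", "happy")
TRIALS_PER_TYPE_PER_BLOCK = 16
PRACTICE_TRIALS_PER_BLOCK = 3

# Experimental trials in one block (practice trials excluded).
experimental_per_block = TRIALS_PER_TYPE_PER_BLOCK * len(STIMULUS_TYPES)

# Trials actually presented in one block (practice trials included).
presented_per_block = experimental_per_block + PRACTICE_TRIALS_PER_BLOCK

# Experimental trials over the whole session and per visual field.
total_experimental = BLOCKS * experimental_per_block
per_visual_field = total_experimental // 2

# Half of the blocks use each visual field, so each stimulus type
# appears this many times per visual field.
per_type_per_field = (BLOCKS // 2) * TRIALS_PER_TYPE_PER_BLOCK

print(experimental_per_block, presented_per_block,
      total_experimental, per_visual_field, per_type_per_field)
```

Under this design, each stimulus type contributes 96 experimental trials per visual field before any epochs are rejected.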

EEG recording

EEG was recorded with Ag/AgCl electrodes (Fast’n Easy-Electrodes, Easycap, Herrsching, Germany) from 27 electrode sites (Fp1, F3, F7, FC1, C3, T7, CP1, P3, P7, O1, PO7, Fz, FCz, Cz, CPz, Pz, Fp2, F4, F8, FC2, C4, T8, CP2, P4, P8, O2, PO8) and the right mastoid. The left mastoid was used as the reference electrode. The ground electrode was placed on the right cheek. Impedances were kept below 5 kΩ. All electrodes were off-line re-referenced to the average of all electrodes. Vertical and horizontal EOG was recorded from above and below the left eye and from the outer canthi of both eyes. EEG and EOG were recorded with a band-pass of 0.01–100 Hz and amplified by a BrainAmp DC amplifier (Brain Products, Gilching, Germany). The amplified signals were digitized at a sampling rate of 500 Hz and off-line filtered with a 40-Hz low-pass filter.

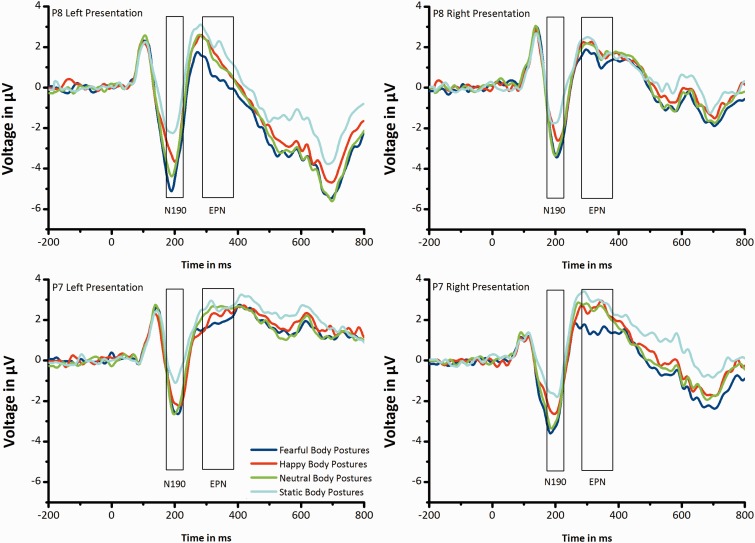

ERP data analysis

ERP data were analyzed using custom routines in MATLAB 7.0.4 (The Mathworks, Natick, MA) and EEGLAB 5.03 (Delorme and Makeig, 2004; http://www.sccn.ucsd.edu/eeglab). Segments of 200 ms before and 800 ms after stimulus onset were extracted from the continuous EEG. The baseline window ran from −100 ms to 0 ms relative to stimulus onset. Epochs with incorrect responses were rejected (5.8% per body stimulus type). In addition, epochs contaminated with large artifacts were identified using two methods from the EEGLAB toolbox (Delorme et al., 2007): (i) an epoch was excluded whenever the voltage on an EOG channel exceeded 100 μV to remove epochs with large EOG peaks and (ii) an epoch was excluded whenever the joint probability of a trial exceeded five standard deviations to remove epochs with improbable data (mean excluded epochs: 9.6%). Remaining blinks and EOG horizontal artifacts were corrected using a multiple adaptive regression method (Automatic Artifact Removal Toolbox Version 1.3; http://www.germangh.com/eeglab_plugin_aar/index.html; Gratton et al., 1983), based on the Least Mean Squares algorithm. Finally, epochs were discarded from the analysis when saccadic movements (>30 μV on horizontal EOG channels) were registered in a time window of 500 ms following stimulus onset (1.73%). The remaining epochs (mean: 83 epochs per body stimulus type) were averaged separately for each participant and each body stimulus type. The N190 amplitude was quantified as the mean amplitude in a time window of 160–230 ms post-stimulus presentation (Figure 2). Scalp topographies for the N190 component were calculated as mean amplitude in a time window of 160–230 ms post-stimulus presentation (Figure 3g and h). In addition, the EPN was calculated as the mean amplitude in a time window of 290–390 ms post-stimulus presentation (Figure 2).
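The baseline correction and mean-amplitude measures described above can be illustrated with a minimal Python sketch. It is not the authors' MATLAB/EEGLAB code: the function names, the half-open treatment of each window and the synthetic epoch are assumptions made for illustration. Only the sampling rate (500 Hz), the epoch limits (−200 to 800 ms) and the time windows come from the text.

```python
# Minimal sketch of baseline correction and mean-amplitude measurement
# for a single-channel epoch. Function names and the synthetic data are
# hypothetical; the timing parameters are those reported in the text.
SAMPLING_RATE_HZ = 500      # one sample every 2 ms
EPOCH_START_MS = -200       # epoch runs from -200 ms to +800 ms


def ms_to_index(t_ms):
    """Convert a latency relative to stimulus onset into a sample index."""
    return round((t_ms - EPOCH_START_MS) * SAMPLING_RATE_HZ / 1000)


def mean_amplitude(epoch, start_ms, end_ms):
    """Mean voltage in the window [start_ms, end_ms) (half-open by assumption)."""
    window = epoch[ms_to_index(start_ms):ms_to_index(end_ms)]
    return sum(window) / len(window)


def baseline_correct(epoch, start_ms=-100, end_ms=0):
    """Subtract the mean of the pre-stimulus baseline from every sample."""
    baseline = mean_amplitude(epoch, start_ms, end_ms)
    return [value - baseline for value in epoch]


# Synthetic epoch (microvolts): a constant 2.0 offset with a 3.0 drop
# between 160 and 230 ms, standing in for an N190-like deflection.
n_samples = ms_to_index(800)
epoch = [2.0] * n_samples
for i in range(ms_to_index(160), ms_to_index(230)):
    epoch[i] -= 3.0

corrected = baseline_correct(epoch)
n190 = mean_amplitude(corrected, 160, 230)   # N190 window
epn = mean_amplitude(corrected, 290, 390)    # EPN window
print(n190, epn)
```

In practice the same measure would be taken on the per-participant average waveform for each electrode, visual field and body stimulus type.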

Fig. 2.

Grand-average ERPs elicited by fearful, happy, neutral and static body postures. ERP waveforms at the representative electrodes P8 (A,B) and P7 (C,D) when stimuli were presented in the LVF (A,C) and in the RVF (B,D).

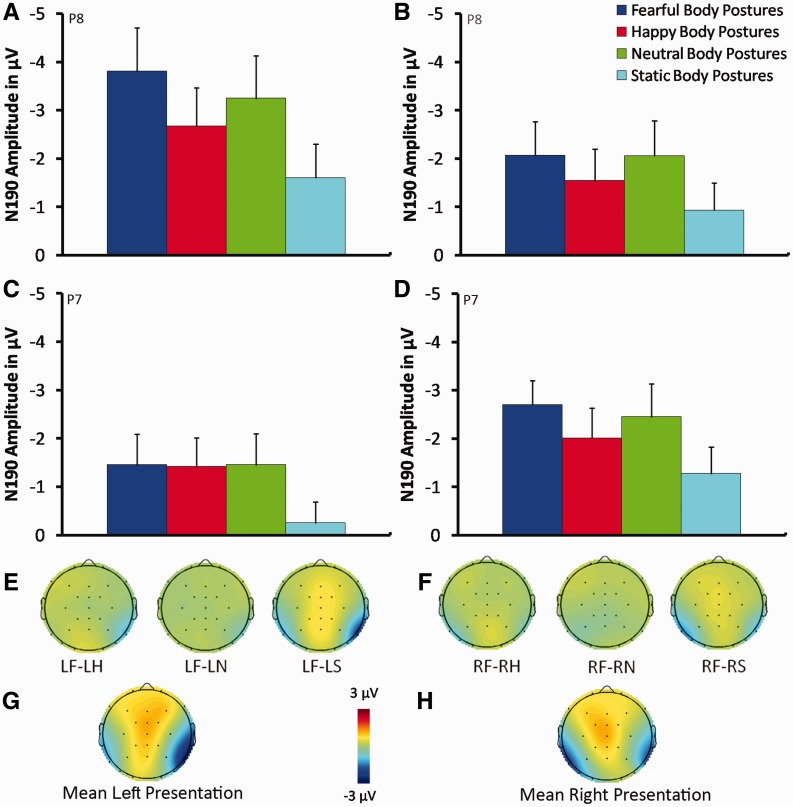

Fig. 3.

Mean N190 amplitude elicited by fearful, happy, neutral and static body postures from electrode P8 in the right hemisphere (A, B) and electrode P7 in the left hemisphere (C, D) when stimuli were presented in the LVF (A, C) and in the RVF (B, D). Scalp topographies of the difference in mean N190 amplitude between fearful and other body stimuli (happy, neutral and static) when stimuli were presented in the LVF (E) and in the RVF (F) in a time window of 160–230 ms. (G) and (H) represent scalp topographies of the mean N190 amplitude averaged for all body stimuli (fearful, happy, neutral and static body postures) in a time window of 160–230 ms when stimuli were presented in the LVF and RVF, respectively. Error bars represent standard error of the mean (SEM). LF, left fearful body posture; LH, left happy body posture; LN, left neutral body posture; LS, left static body posture; RF, right fearful body posture; RH, right happy body posture; RN, right neutral body posture; RS, right static body posture.

Both the N190 and the EPN mean amplitudes were analyzed with a three-way analysis of variance (ANOVA) with electrode (P8, P7), visual field (LVF, RVF) and body stimulus (static: S; neutral: N; fearful: F; happy: H) as within-subjects variables. To compensate for violations of sphericity, Greenhouse–Geisser corrections were applied whenever appropriate (Greenhouse and Geisser, 1959) and corrected P values (but uncorrected degrees of freedom) are reported. Post-hoc comparisons were performed using the Newman–Keuls test.
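To make the sphericity correction concrete, the following Python sketch computes the Greenhouse–Geisser epsilon for a single within-subjects factor from a participants × conditions table. The text does not say which software performed the ANOVAs, so this is only an illustration of the standard estimate (double-centering the condition covariance matrix); the function name and the amplitude values are hypothetical.

```python
# Sketch of the Greenhouse-Geisser epsilon for one within-subjects factor.
# `data` holds one row per participant and one column per condition.
# The function name and the example values are hypothetical.
def greenhouse_geisser_epsilon(data):
    n = len(data)          # participants
    k = len(data[0])       # levels of the within-subjects factor

    # Sample covariance matrix of the k conditions.
    means = [sum(row[j] for row in data) / n for j in range(k)]
    cov = [[sum((row[i] - means[i]) * (row[j] - means[j]) for row in data) / (n - 1)
            for j in range(k)] for i in range(k)]

    # Double-centre the covariance matrix (remove row, column and grand means).
    row_mean = [sum(cov[i]) / k for i in range(k)]
    grand_mean = sum(row_mean) / k
    centred = [[cov[i][j] - row_mean[i] - row_mean[j] + grand_mean
                for j in range(k)] for i in range(k)]

    # epsilon = trace(S)^2 / ((k - 1) * sum of squared entries of S)
    trace = sum(centred[i][i] for i in range(k))
    sum_squares = sum(centred[i][j] ** 2 for i in range(k) for j in range(k))
    return trace ** 2 / ((k - 1) * sum_squares)


# Hypothetical mean amplitudes (microvolts) for five participants in the
# four body stimulus conditions (static, neutral, fearful, happy).
amplitudes = [
    [-1.2, -2.5, -3.1, -2.0],
    [-0.8, -2.9, -2.7, -2.2],
    [-1.5, -2.1, -3.4, -1.6],
    [-1.0, -3.0, -2.6, -2.4],
    [-1.9, -2.4, -3.9, -1.8],
]
epsilon = greenhouse_geisser_epsilon(amplitudes)
print(epsilon)
```

Epsilon ranges from 1/(k − 1) (maximal violation) to 1 (sphericity holds). The correction multiplies both degrees of freedom by epsilon before the P value is looked up, which is why corrected P values can be reported alongside uncorrected degrees of freedom.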

RESULTS

ERP results

N190

The mean N190 amplitude averaged for all body stimuli (fearful, happy, neutral and static body postures) reached a maximum negative deflection in a time window of 160–230 ms on electrodes P7 and P8, as shown in the scalp topographies (Figure 3g and h). Electrodes P7 and P8 were therefore chosen as electrodes of interest in the N190 analyses, in line with previous studies (Stekelenburg and de Gelder, 2004; Thierry et al., 2006). Grand average waveforms for the electrodes P7 and P8 are shown in Figure 2.

The ANOVA showed a significant main effect of Body stimulus (F(3, 63) = 29.14; P < 0.0001; ηp² = 0.58), a significant Electrode × Visual field interaction (F(1, 21) = 11.84; P = 0.002; ηp² = 0.36) and, more importantly, a significant Electrode × Visual field × Body stimulus interaction (F(3, 63) = 7.43; P = 0.0003; ηp² = 0.26). This interaction was further explored with two-way ANOVAs with Visual field (LVF, RVF) and Body stimulus (static, neutral, fearful, happy) as within-subjects factors for the two electrodes (P8 and P7) separately, to investigate possible differences between the two hemispheres.
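The effect sizes reported alongside each F statistic appear consistent with partial eta squared, which can be recovered from F and its degrees of freedom as F·df1 / (F·df1 + df2). The Python sketch below applies this relation to the three effects above; the function name is illustrative, and identifying the effect size as partial eta squared is an inference from the reported values rather than something the text states.

```python
# Partial eta squared recovered from an F statistic and its degrees of freedom.
# The function name is illustrative.
def partial_eta_squared(f_value, df_effect, df_error):
    """Return F * df_effect / (F * df_effect + df_error)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Effects reported for the three-way ANOVA on the N190 amplitude.
reported = {
    "Body stimulus": (29.14, 3, 63),
    "Electrode x Visual field": (11.84, 1, 21),
    "Electrode x Visual field x Body stimulus": (7.43, 3, 63),
}
for label, (f_value, df_effect, df_error) in reported.items():
    print(label, round(partial_eta_squared(f_value, df_effect, df_error), 2))
```

The same relation can be used to sanity-check the effect sizes reported for the follow-up ANOVAs.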

The results of the ANOVA on the N190 amplitude over electrode P8, located in the right hemisphere, revealed a significant main effect of Visual field (F(1, 21) = 6.74; P = 0.016; ηp² = 0.24), with larger amplitudes for stimuli presented in the contralateral LVF (−2.83 μV), compared with the ipsilateral RVF (−1.65 μV; P = 0.016). Moreover, the main effect of Body stimulus was significant (F(3, 63) = 18.42; P < 0.0001; ηp² = 0.47). Post-hoc analyses showed a significantly smaller N190 amplitude in response to static postures (−1.27 μV) compared with all the motion postures (all Ps ≤ 0.001; H: −2.10 μV; N: −2.65 μV; F: −2.93 μV). In addition, a significant difference was found between the emotional postures, with a significantly larger N190 amplitude for fearful postures (−2.93 μV; P = 0.003) compared with happy postures (−2.10 μV). More importantly, the Visual field × Body stimulus interaction was significant (F(3, 63) = 6.99; P = 0.0007; ηp² = 0.25). Post-hoc analyses revealed that, in both the LVF and the RVF, static postures (S-LVF: −1.60 μV; S-RVF: −0.93 μV) elicited a significantly smaller N190 compared with all the motion postures (LVF: all Ps ≤ 0.0001; H-LVF: −2.66 μV; N-LVF: −3.24 μV; F-LVF: −3.80 μV; RVF: all Ps ≤ 0.0006; H-RVF: −1.54 μV; N-RVF: −2.06 μV; F-RVF: −2.07 μV). Also, in both the LVF and the RVF, the N190 amplitude was significantly larger for fearful postures than for happy postures (F-LVF vs H-LVF: P = 0.0001; F-RVF vs H-RVF: P = 0.01). In addition, in the LVF, the N190 amplitude was significantly larger for fearful postures (F-LVF: −3.80 μV) than for neutral postures (N-LVF: −3.24 μV; P = 0.001; Figure 3a, b, e and f).

The ANOVA for electrode P7, located in the left hemisphere, revealed a significant main effect of Visual field (F(1, 21) = 11.44; P = 0.002; ηp² = 0.35), with larger N190 amplitudes for stimuli presented in the contralateral RVF (−2.11 μV) compared with the ipsilateral LVF (−1.14 μV; P = 0.002). In addition, the main effect of Body stimulus was significant (F(3, 63) = 14.26; P < 0.0001; ηp² = 0.4). Post-hoc comparisons revealed a significantly smaller N190 amplitude in response to static postures (−0.76 μV), compared with all the motion postures (all Ps ≤ 0.0001; H: −1.71 μV; N: −1.95 μV; F: −2.08 μV; Figure 3c and d). However, in contrast to the results from electrode P8, the N190 amplitude recorded from electrode P7 did not significantly differ between fearful and happy body postures (P = 0.24). No other comparisons were significant (all Ps > 0.57).

EPN

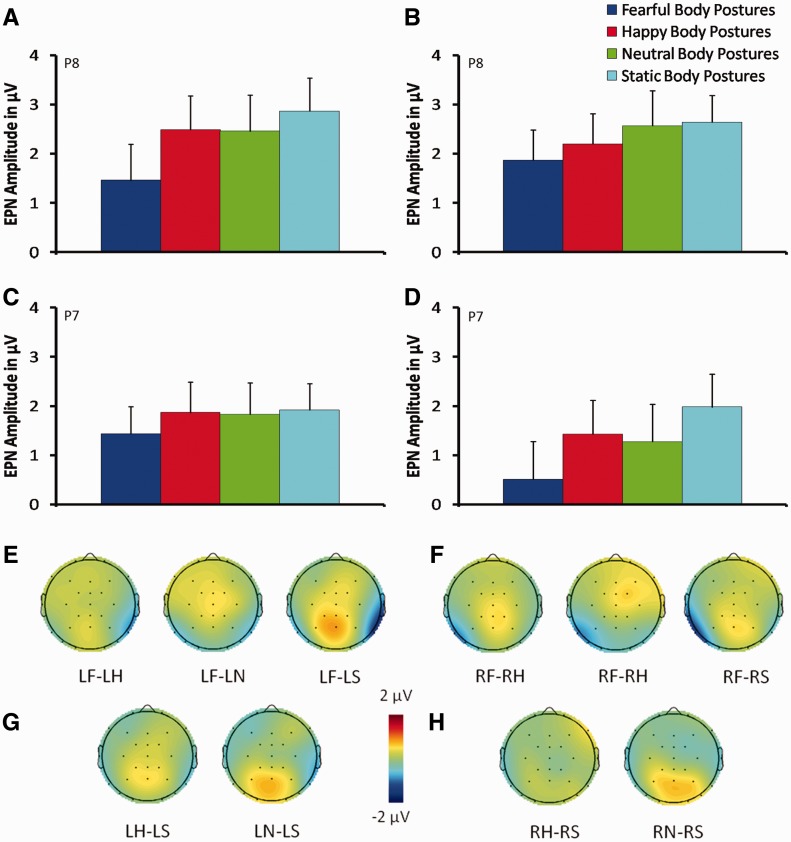

The subsequent EPN amplitudes were measured at the same electrode locations as the N190, in a time window of 290–390 ms post-stimulus onset (Figure 2).

The ANOVA showed a significant main effect of Body stimulus (F(3, 63) = 14.87; P < 0.0001; ηp² = 0.41) and, more interestingly, a significant Electrode × Visual field × Body stimulus interaction (F(3, 63) = 9.07; P = 0.0002; ηp² = 0.3). This interaction was further explored with two-way ANOVAs with Visual field (LVF, RVF) and Body stimulus (static, neutral, fearful, happy) as within-subjects factors for the two electrodes (P8 and P7) separately, to investigate possible differences between the two hemispheres.

The ANOVA for electrode P8, in the right hemisphere, revealed a significant main effect of Body stimulus (F(3, 63) = 7.71; P < 0.0002; ηp² = 0.27), showing a significantly more negative amplitude in response to fearful postures (0.97 μV), compared with the remaining postures (all Ps ≤ 0.006; H: 1.64 μV; N: 1.55 μV; S: 1.95 μV). The Visual field × Body stimulus interaction was also significant (F(3, 63) = 5.63; P = 0.003; ηp² = 0.21). Post-hoc analyses revealed that, in both the LVF and the RVF, fearful body postures (F-LVF: 0.51 μV; F-RVF: 1.43 μV) elicited a more negative amplitude than each of the remaining postures (LVF: all Ps ≤ 0.0001; H-LVF: 1.42 μV; N-LVF: 1.27 μV; S-LVF: 1.98 μV; RVF: all Ps ≤ 0.03; H-RVF: 1.86 μV; N-RVF: 1.83 μV; S-RVF: 1.92 μV; see Figure 4a, b, e and f). In addition, in the LVF, happy (1.42 μV) and neutral (1.27 μV) body postures showed a significantly more negative amplitude than static body postures (1.98 μV; all Ps ≤ 0.02; Figure 4a, b, g and h).

Fig. 4.

Mean EPN amplitude elicited by fearful, happy, neutral and static body postures from electrode P8 in the right hemisphere (A, B) and electrode P7 in the left hemisphere (C, D) when stimuli were presented in the LVF (A, C) and in the RVF (B, D). Scalp topographies of the difference in mean EPN amplitude between fearful and other body stimuli (happy, neutral and static) when stimuli were presented in the LVF (E) and in the RVF (F) in a time window of 290–390 ms. Scalp topographies of the difference in mean EPN amplitude between static and other body stimuli (happy, neutral) when stimuli were presented in the LVF (G) and in the RVF (H) in a time window of 290–390 ms. Error bars represent standard error of the mean (SEM). LF, left fearful body posture; LH, left happy body posture; LN, left neutral body posture; LS, left static body posture; RF, right fearful body posture; RH, right happy body posture; RN, right neutral body posture; RS, right static body posture.

The ANOVA for electrode P7, in the left hemisphere, revealed a significant main effect of Body stimulus (F(3, 63) = 7.47; P = 0.0002; ηp² = 0.26). Post-hoc comparisons revealed that the amplitude was significantly more negative in response to fearful postures (1.66 μV), compared with the remaining postures (all Ps ≤ 0.006; H: 2.34 μV; N: 2.51 μV; S: 2.75 μV; Figure 4c–f). No other comparisons were significant (all Ps > 0.48).

Behavioral results

Reaction times (RTs), accuracy scores and inverse efficiency scores (IES = reaction times/accuracy) were analyzed with separate ANOVAs with Visual field (LVF, RVF) and Body stimulus (static, neutral, fearful, happy) as within-subjects variables. The analysis on RTs revealed a significant main effect of Body stimulus (F(3, 63) = 31.81; P < 0.0001; ηp² = 0.6), showing faster RTs for static body postures (654 ms) compared with fearful (756 ms), happy (764 ms) and neutral postures (797 ms; all Ps < 0.0001). In addition, RTs for neutral body postures were significantly slower than for fearful (P = 0.02) and happy body postures (P = 0.03). The ANOVA performed on the accuracy scores revealed a significant main effect of Body stimulus (F(3, 63) = 8.42; P < 0.0001; ηp² = 0.29), showing that participants were slightly more accurate in responding to static body postures (98%), compared with fearful (93%; P = 0.004), happy (93%; P = 0.007) and neutral postures (90%; P = 0.0001). Finally, the ANOVA on inverse efficiency scores revealed a significant main effect of Body stimulus (F(3, 63) = 21.62; P < 0.0001; ηp² = 0.5), showing significantly lower scores (reflecting better performance) for static body postures (661 ms), compared with fearful (811 ms), happy (822 ms) and neutral postures (891 ms; all Ps ≤ 0.0001). In addition, IES for the neutral body postures was significantly higher than for the remaining postures (all Ps < 0.02).
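As an illustration of the inverse efficiency score defined above, the Python sketch below divides a mean reaction time by accuracy. It assumes that accuracy is expressed as a proportion of correct responses (so the score stays in milliseconds) and that scores are computed per participant before averaging; the function name and the participant values are hypothetical.

```python
# Inverse efficiency score (IES) = mean reaction time / accuracy.
# Assumption: accuracy is a proportion in (0, 1], so IES is in milliseconds.
# The function name and the participant values below are hypothetical.
def inverse_efficiency(mean_rt_ms, accuracy):
    if not 0 < accuracy <= 1:
        raise ValueError("accuracy must be a proportion in (0, 1]")
    return mean_rt_ms / accuracy


# (mean RT in ms, proportion correct) for three made-up participants
# in a single condition.
participants = [(640.0, 0.97), (668.0, 1.00), (702.0, 0.94)]
scores = [inverse_efficiency(rt, acc) for rt, acc in participants]
group_mean_ies = sum(scores) / len(scores)
print(scores, group_mean_ies)
```

Lower scores indicate better performance. Averaging per-participant scores is not, in general, the same as dividing the group mean RT by the group mean accuracy.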

DISCUSSION

Seeing images of bodies elicits a robust negative deflection peaking at 190 ms post-stimulus onset (N190), reflecting the early structural encoding of these stimuli (Thierry et al., 2006), and a subsequent relative negativity (EPN) indexing attentional engagement to salient stimuli (Schupp et al., 2006; Olofsson et al., 2008). This study revealed that information concerning both the presence of motion and the emotions expressed by different body postures modulates the early stage of the visual encoding of bodies and the attentional engagement process, as reflected by changes in the amplitudes of the N190 and the EPN, respectively.

In particular, laterally presented pictures of bodies in different postures strongly modulated the N190 component. Interestingly, this component showed differential sensitivity to the observed body postures in the two cerebral hemispheres. On the one hand, the right hemisphere showed a modulation of the N190 both for the motion content (i.e. all the postures implying motion elicited larger N190 amplitudes compared with static, no-motion body postures) and for the emotional content (i.e. fearful postures elicited the largest N190 amplitude). On the other hand, the left hemisphere showed a modulation of the N190 only for the motion content, with no modulation for the emotional content. These findings suggest partially distinct roles of the two cerebral hemispheres in the visual encoding of emotional and motion information from bodies. In addition, at a later stage of perceptual representation reflecting selective attention to salient stimuli, an enlarged EPN was observed for fearful stimuli in both hemispheres, reflecting an enhanced processing of motivationally relevant stimuli (Schupp et al., 2006; Olofsson et al., 2008).

Electrophysiological studies suggest that, akin to the N170 for faces, the N190 component represents the process of extracting abstract and relevant properties of the human body form for categorization (Thierry et al., 2006) and is considered the earliest component indexing structural features of human bodies (Taylor et al., 2010). Our study expands these ideas by demonstrating that the stage of structural encoding reflected by the N190 entails not only the categorization of the visual stimulus as a body but also an analysis of motion-related and emotional features of the body posture. In other words, the visual encoding stage involves not only a perceptual representation of the form, configuration and spatial relations between the different body parts (Taylor et al., 2007, 2010), but it also reflects a discrimination between body postures conveying information about the presence of actions and emotions.

It has been argued that EBA (i.e. the putative neural generator of the N190; Thierry et al., 2006; Taylor et al., 2010) has a pivotal role in creating a cognitively unelaborated but perceptually detailed visual representation of the human body (Peelen and Downing, 2007; Downing and Peelen, 2011), which is forwarded to higher cortical areas for further analysis. On the other hand, EBA is thought to be modulated by top-down signals from multiple neural systems, including those involved in processing emotion and action information (Downing and Peelen, 2011). Thus, the finding that the N190 is sensitive to information about motion and emotions conveyed by human body postures suggests that emotion- and action-related signals are rapidly extracted from visual stimuli and can exert a fast top-down modulation of the neural processing reflecting structural encoding of bodies in occipitotemporal areas, i.e. the N190.

The smaller N190 amplitudes for static bodies than for bodies with implied motion suggest that both hemispheres operate a perceptual distinction between bodies with static postures and bodies performing actions. Because of the highly adaptive value of motion perception, observers typically extract motion-related information from static images where motion is implied (Freyd, 1983; Verfaillie and Daems, 2002). Occipitotemporal visual areas have been suggested to encode dynamic visual information from static displays of “moving” objects (e.g. human area MT, Kourtzi and Kanwisher, 2000; STS, when implied motion information is extracted from pictures of biological entities, Puce and Perrett, 2003; Perrett et al., 2009) and to respond to static images of human body postures implying an action (Peigneux et al., 2000; Kourtzi et al., 2008). Thus, the static snapshots of moving bodies used here were not only a necessary methodological substitute for real motion that was required to reliably record ERPs but also a sufficient substitute for understanding how the human visual system represents human body movements.

Notably, action observation is also known to activate a wide frontoparietal network of sensorimotor regions involved in action planning and execution. Indeed, observing images of humans during ongoing motor acts is known to enhance the excitability of the motor system (Urgesi et al., 2010; Borgomaneri et al., 2012; Avenanti et al., 2013a,b), where the perceived action is dynamically simulated (Gallese et al., 2004; Nishitani et al., 2004; Keysers and Gazzola, 2009; Gallese and Sinigaglia, 2011). Such motor simulation appears to emerge very early in time (<100 ms after stimulus onset in some cases, e.g. van Schie et al., 2008; Lepage et al., 2010; Ubaldi et al., 2015; Rizzolatti et al., 2014) and is thought to facilitate visual perception through feedback connections from motor to visual areas (Wilson and Knoblich, 2005; Kilner et al., 2007; Schippers and Keysers, 2011; Avenanti et al., 2013a; Tidoni et al., 2013). Thus, the observed enhancement of structural encoding for postures implying motion and action compared with static postures seems to indicate increased perceptual representation of the bodies, possibly triggered by fast action simulation processes in interconnected frontoparietal areas.

On the other hand, a finer perceptual distinction, discriminating not only the presence of action but also the emotional content of that action, is evident only in the right hemisphere, where the N190 was differentially modulated by fearful and happy body postures, with fearful postures eliciting the largest N190 amplitude. This emotional modulation of structural encoding might reflect an adaptive mechanism, in which the perceptual representation of body stimuli signaling potential threats is enhanced by top-down modulations. In line with this, neuroimaging studies have shown that fearful bodies increase the BOLD signal in the temporo-occipital areas from which the N190 originates and in nearby visual areas (Hadjikhani and de Gelder, 2003; Peelen et al., 2007; Grèzes et al., 2007; Pichon et al., 2008; Van de Riet et al., 2009). Importantly, fearful bodies are known to enhance activation in the amygdala (Hadjikhani and de Gelder, 2003; de Gelder et al., 2004; Van de Riet et al., 2009), the key subcortical structure for signaling fear and potential threat (Adolphs, 2013; LeDoux, 2014). Notably, the magnitude of amygdala activation predicts activity in EBA and FBA during perception of emotional bodies (Peelen et al., 2007). Therefore, the enhanced N190 over the right occipitotemporal electrodes might reflect a rapid and distant functional influence of the amygdala on interconnected visual cortices, useful for processing threat signals efficiently and implementing fast motor reactions (Vuilleumier et al., 2004; Borgomaneri et al., 2014b). Similarly, somatosensory and motor regions, crucial to the processing of threat-related expressions (Adolphs et al., 2000; Pourtois et al., 2004; Banissy et al., 2010; Borgomaneri et al., 2014a), might also participate in this top-down influence. Indeed, somato-motor regions are connected to occipitotemporal areas via the parietal cortex (Keysers et al., 2010; Rizzolatti et al., 2014) and exert a critical influence on visual recognition of emotional expressions quite early in time (i.e. 100–170 ms after stimulus onset; Pitcher et al., 2008; Borgomaneri et al., 2014a), which may be compatible with the observed N190 modulation.

Although previous electrophysiological findings showed a modulation by fearful body expressions at the stage of the P1 component (i.e. before structural encoding of the stimulus has taken place; Meeren et al., 2005; Van Heijnsbergen et al., 2007), the potentials peaking in the range of the N1 seem to offer more reliable measures of both face- and body-selective perceptual mechanisms. Indeed, earlier potentials such as the P1 could be modulated to a greater degree by low-level features of the stimuli, as they are highly sensitive to physical properties of visual stimuli (Halgren et al., 2000; Rossion and Jacques, 2008).

The observed emotional modulation of the N190 exclusively over the right hemisphere is in keeping with the idea of a possible right hemisphere advantage in processing emotions (Gainotti et al., 1993; Làdavas et al., 1993; Adolphs et al., 2000; Borod, 2000). Alternatively, the more detailed modulation of structural encoding processes observed in the right hemisphere could be due to a higher sensitivity to human bodies, as suggested by preferential activation in response to body stimuli in the right EBA (Downing et al., 2001; Chan et al., 2004; Saxe et al., 2006) and in a broad network of right cortical areas (Caspers et al., 2010). In keeping with the idea of a right hemisphere advantage in processing emotional body postures, recent transcranial magnetic stimulation studies have shown that motor excitability over the right (but not the left) hemisphere is sensitive to the emotional content of the observed body posture at a latency compatible with the initial part of the N190 component (Borgomaneri et al., 2014a). This suggests a strict functional coupling between visual and motor representations during the processing of emotional body postures, which might favor perception of and adaptive motor responses to threatening stimuli.

Interestingly, at a later stage of visual processing (i.e. 300 ms post-stimulus onset), the EPN component was enhanced for fearful stimuli in both hemispheres. The EPN is a relative negativity for emotional stimuli (Schupp et al., 2006). This emotional modulation reflects attentional capture driven by salient emotional stimuli and might index the degree of attention needed to recognize relevant signals (Olofsson et al., 2008). Previous studies have shown increases in the amplitude of the EPN in response to both emotional scenes (Schupp et al., 2003, 2004b; Thom et al., 2014) and emotional faces (Sato et al., 2001; Schupp et al., 2004a; Frühholz et al., 2011; Calvo and Beltrán, 2014). Similar to the findings of the present study, the EPN is also enhanced during observation of hand gestures, with a greater effect for negatively valenced gestures (Flaisch et al., 2009, 2011). This suggests that viewing isolated body parts with emotional relevance also modulates this component. The present results add to the previous studies by showing strong EPN sensitivity to whole body expressions of fear, supporting the idea that fearful body postures represent a highly salient category of stimuli, able to engage selective visual attention to favor explicit recognition of potentially threatening signals (de Gelder et al., 2004, 2010; Kret et al., 2011; Borgomaneri et al., 2014a). Notably, our data suggest that attentional processes are enhanced by fearful postures in both hemispheres, indicating that, at later stages of visual processing, both the right and the left hemispheres contribute to engaging attentional resources to aid recognition of salient emotional stimuli. However, it is interesting to note that the right hemisphere also maintains a higher capacity to discriminate between the different body postures at this later stage, as suggested by an increased negativity for happy and neutral body postures compared with static body postures.

Interestingly, the emotional modulations observed both at the early stage of structural encoding and at the later attentional engagement stage might be a by-product of the interaction between movement and emotion-related information conveyed by emotional body postures. Indeed, bodies express emotions through movements, therefore providing concurrent motion-related information. Further studies are needed to disentangle the contributions of emotion and movement-related information by investigating ERP modulations in response to emotional body postures with a minimal amount of motion content (e.g. sad body postures). Overall, these results suggest that information pertaining to motion and emotion in human bodies is already differentially processed at the early stage of visual structural encoding (N190), in which a detailed representation of the form and configuration of the body is created.

At this early stage, the right hemisphere seems prominent in processing the emotional content of body postures, as shown by the effects of laterally presented body postures on structural encoding. At a later stage of visual processing (EPN), the relevant and salient information represented by fearful body postures recruits visual attention networks in both hemispheres, which might facilitate recognition of potentially dangerous stimuli. Finally, the modulations observed in the visual processing of different body postures, both at the visual encoding and attentional engagement stages, are reminiscent of modulations seen in visual face processing (Batty and Taylor, 2003; Stekelenburg and de Gelder, 2004; Schupp et al., 2004a; Frühholz et al., 2011; Calvo and Beltrán, 2014), suggesting that face and body processing might involve distinct but similar perceptual mechanisms. This highly efficient and specialized structural encoding, and the subsequent attentional engagement for salient stimuli, may represent an adaptive mechanism for social communication that facilitates inferences about the goals, intentions and emotions of others.

Conflict of Interest

None declared.

Acknowledgments

E.L. was supported by grants from the University of Bologna (FARB 2012); A.A. was supported by grants from the Cogito Foundation (R-117/13) and Ministero Istruzione Università Ricerca (Futuro in Ricerca 2012, RBFR12F0BD); and M.E.M. was supported by a grant from the Deutsche Forschungsgemeinschaft (MA 4864/3-1).

We are grateful to Sara Borgomaneri for her help in providing and selecting the experimental stimuli and for her comments on the results. We are grateful to Brianna Beck for her help in editing the manuscript.

REFERENCES

- Adolphs R. Recognizing emotion from facial expressions: psychological and neurological mechanisms. Behavioral and Cognitive Neuroscience Reviews. 2002;1:21–62. doi: 10.1177/1534582302001001003.

- Adolphs R. The biology of fear. Current Biology. 2013;23:R79–93. doi: 10.1016/j.cub.2012.11.055.

- Adolphs R, Damasio H, Tranel D, Cooper G, Damasio AR. A role for somatosensory cortices in the visual recognition of emotion as revealed by three-dimensional lesion mapping. The Journal of Neuroscience. 2000;20:2683–90. doi: 10.1523/JNEUROSCI.20-07-02683.2000.

- Allison T, Puce A, McCarthy G. Social perception from visual cues: role of the STS region. Trends in Cognitive Sciences. 2000;4:267–78. doi: 10.1016/s1364-6613(00)01501-1.

- Avenanti A, Annella L, Candidi M, Urgesi C, Aglioti SM. Compensatory plasticity in the action observation network: virtual lesions of STS enhance anticipatory simulation of seen actions. Cerebral Cortex. 2013a;23:570–80. doi: 10.1093/cercor/bhs040.

- Avenanti A, Candidi M, Urgesi C. Vicarious motor activation during action perception: beyond correlational evidence. Frontiers in Human Neuroscience. 2013b;7:185. doi: 10.3389/fnhum.2013.00185.

- Banissy MJ, Sauter DA, Ward J, Warren JE, Walsh V, Scott SK. Suppressing sensorimotor activity modulates the discrimination of auditory emotions but not speaker identity. The Journal of Neuroscience. 2010;30:13552–7. doi: 10.1523/JNEUROSCI.0786-10.2010.

- Batty M, Taylor MJ. Early processing of the six basic facial emotional expressions. Cognitive Brain Research. 2003;17:613–20. doi: 10.1016/s0926-6410(03)00174-5.

- Bonda E, Petrides M, Ostry D, Evans A. Specific involvement of human parietal systems and the amygdala in the perception of biological motion. The Journal of Neuroscience. 1996;16:3737–44. doi: 10.1523/JNEUROSCI.16-11-03737.1996.

- Borgomaneri S, Gazzola V, Avenanti A. Motor mapping of implied actions during perception of emotional body language. Brain Stimulation. 2012;5:70–6. doi: 10.1016/j.brs.2012.03.011.

- Borgomaneri S, Gazzola V, Avenanti A. Transcranial magnetic stimulation reveals two functionally distinct stages of motor cortex involvement during perception of emotional body language. Brain Structure and Function. 2014a. doi: 10.1007/s00429-014-0825-6.

- Borgomaneri S, Gazzola V, Avenanti A. Temporal dynamics of motor cortex excitability during perception of natural emotional scenes. Social Cognitive and Affective Neuroscience. 2014b;9:1451–7. doi: 10.1093/scan/nst139.

- Borod JC, editor. The Neuropsychology of Emotion. New York: Oxford University Press; 2000. pp. 80–103.

- Calvo MG, Beltrán D. Brain lateralization of holistic versus analytic processing of emotional facial expressions. Neuroimage. 2014;92:237–47. doi: 10.1016/j.neuroimage.2014.01.048.

- Caspers S, Zilles K, Laird AR, Eickhoff SB. ALE meta-analysis of action observation and imitation in the human brain. Neuroimage. 2010;50:1148–67. doi: 10.1016/j.neuroimage.2009.12.112.

- Cecere R, Bertini C, Maier ME, Làdavas E. Unseen fearful faces influence face encoding: evidence from ERPs in hemianopic patients. Journal of Cognitive Neuroscience. 2014;26:2564–77. doi: 10.1162/jocn_a_00671.

- Chan AW, Peelen MV, Downing PE. The effect of viewpoint on body representation in the extrastriate body area. Neuroreport. 2004;15:2407–10. doi: 10.1097/00001756-200410250-00021.

- de Gelder B. Towards the neurobiology of emotional body language. Nature Reviews Neuroscience. 2006;7:242–9. doi: 10.1038/nrn1872.

- de Gelder B, Snyder J, Greve D, Gerard G, Hadjikhani N. Fear fosters flight: a mechanism for fear contagion when perceiving emotion expressed by a whole body. Proceedings of the National Academy of Sciences of the United States of America. 2004;101:16701–6. doi: 10.1073/pnas.0407042101.

- de Gelder B, Van den Stock J, Meeren HK, Sinke C, Kret ME, Tamietto M. Standing up for the body. Recent progress in uncovering the networks involved in the perception of bodies and bodily expressions. Neuroscience & Biobehavioral Reviews. 2010;34:513–27. doi: 10.1016/j.neubiorev.2009.10.008.

- Delorme A, Makeig S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods. 2004;134:9–21. doi: 10.1016/j.jneumeth.2003.10.009.

- Delorme A, Sejnowski T, Makeig S. Enhanced detection of artifacts in EEG data using higher-order statistics and independent component analysis. Neuroimage. 2007;34:1443–9. doi: 10.1016/j.neuroimage.2006.11.004.

- Downing PE, Jiang Y, Shuman M, Kanwisher N. A cortical area selective for visual processing of the human body. Science. 2001;293:2470–3. doi: 10.1126/science.1063414.

- Downing PE, Peelen MV. The role of occipitotemporal body-selective regions in person perception. Cognitive Neuroscience. 2011;2:186–203. doi: 10.1080/17588928.2011.582945.

- Flaisch T, Häcker F, Renner B, Schupp HT. Emotion and the processing of symbolic gestures: an event-related brain potential study. Social Cognitive and Affective Neuroscience. 2011;6:109–18. doi: 10.1093/scan/nsq022.

- Flaisch T, Schupp HT, Renner B, Junghöfer M. Neural systems of visual attention responding to emotional gestures. Neuroimage. 2009;45:1339–46. doi: 10.1016/j.neuroimage.2008.12.073.

- Freyd JJ. The mental representation of movement when static stimuli are viewed. Perception & Psychophysics. 1983;33:575–81. doi: 10.3758/bf03202940.

- Frühholz S, Jellinghaus A, Herrmann M. Time course of implicit processing and explicit processing of emotional faces and emotional words. Biological Psychology. 2011;87:265–74. doi: 10.1016/j.biopsycho.2011.03.008.

- Gainotti G, Caltagirone C, Zoccolotti P. Left/right and cortical/subcortical dichotomies in the neuropsychological study of human emotions. Cognition & Emotion. 1993;7:71–93.

- Gallese V, Keysers C, Rizzolatti G. A unifying view of the basis of social cognition. Trends in Cognitive Sciences. 2004;8:396–403. doi: 10.1016/j.tics.2004.07.002.

- Gallese V, Sinigaglia C. What is so special about embodied simulation? Trends in Cognitive Sciences. 2011;15:512–9. doi: 10.1016/j.tics.2011.09.003.

- Gratton G, Coles MG, Donchin E. A new method for off-line removal of ocular artifact. Electroencephalography and Clinical Neurophysiology. 1983;55:468–84. doi: 10.1016/0013-4694(83)90135-9.

- Greenhouse SW, Geisser S. On methods in the analysis of profile data. Psychometrika. 1959;24:95–112.

- Grèzes J, Pichon S, de Gelder B. Perceiving fear in dynamic body expressions. Neuroimage. 2007;35:959–67. doi: 10.1016/j.neuroimage.2006.11.030.

- Grèzes J, Tucker M, Armony J, Ellis R, Passingham RE. Objects automatically potentiate action: an fMRI study of implicit processing. European Journal of Neuroscience. 2003;17:2735–40. doi: 10.1046/j.1460-9568.2003.02695.x.

- Hadjikhani N, de Gelder B. Seeing fearful body expressions activates the fusiform cortex and amygdala. Current Biology. 2003;13:2201–5. doi: 10.1016/j.cub.2003.11.049.

- Halgren E, Raij T, Marinkovic K, Jousmäki V, Hari R. Cognitive response profile of the human fusiform face area as determined by MEG. Cerebral Cortex. 2000;10:69–81. doi: 10.1093/cercor/10.1.69.

- Jessimer M, Markham R. Alexithymia: a right hemisphere dysfunction specific to recognition of certain facial expressions? Brain and Cognition. 1997;34:246–58. doi: 10.1006/brcg.1997.0900.

- Keysers C, Gazzola V. Expanding the mirror: vicarious activity for actions, emotions, and sensations. Current Opinion in Neurobiology. 2009;19:666–71. doi: 10.1016/j.conb.2009.10.006.

- Keysers C, Kaas JH, Gazzola V. Somatosensation in social perception. Nature Reviews Neuroscience. 2010;11:417–28. doi: 10.1038/nrn2833.

- Kilner JM, Friston KJ, Frith CD. Predictive coding: an account of the mirror neuron system. Cognitive Processing. 2007;8:159–66. doi: 10.1007/s10339-007-0170-2.

- Kourtzi Z, Kanwisher N. Activation in human MT/MST by static images with implied motion. Journal of Cognitive Neuroscience. 2000;12:48–55. doi: 10.1162/08989290051137594.

- Kourtzi Z, Krekelberg B, Van Wezel RJ. Linking form and motion in the primate brain. Trends in Cognitive Sciences. 2008;12:230–6. doi: 10.1016/j.tics.2008.02.013.

- Kret ME, Pichon S, Grèzes J, de Gelder B. Similarities and differences in perceiving threat from dynamic faces and bodies. An fMRI study. Neuroimage. 2011;54:1755–62. doi: 10.1016/j.neuroimage.2010.08.012.

- Làdavas E, Cimatti D, Pesce MD, Tuozzi G. Emotional evaluation with and without conscious stimulus identification: evidence from a split-brain patient. Cognition & Emotion. 1993;7:95–114.

- LeDoux JE. Coming to terms with fear. Proceedings of the National Academy of Sciences. 2014;111:2871–8. doi: 10.1073/pnas.1400335111.

- Lepage JF, Tremblay S, Théoret H. Early non-specific modulation of corticospinal excitability during action observation. European Journal of Neuroscience. 2010;31:931–7. doi: 10.1111/j.1460-9568.2010.07121.x.

- Meeren HK, van Heijnsbergen CC, de Gelder B. Rapid perceptual integration of facial expression and emotional body language. Proceedings of the National Academy of Sciences of the United States of America. 2005;102:16518–23. doi: 10.1073/pnas.0507650102.

- Meeren HK, de Gelder B, Ahlfors SP, Hämäläinen MS, Hadjikhani N. Different cortical dynamics in face and body perception: an MEG study. PloS One. 2013;8:e71408. doi: 10.1371/journal.pone.0071408.

- Minnebusch DA, Keune PM, Suchan B, Daum I. Gradual inversion affects the processing of human body shapes. Neuroimage. 2010;49:2746–55. doi: 10.1016/j.neuroimage.2009.10.046.

- Nemiah JC, Freyberger H, Sifneos PE. Alexithymia: a view of the psychosomatic process. In: Hill OW, editor. Modern Trends in Psychosomatic Medicine. Vol. 3. London: Butterworths; 1976. pp. 430–9.

- Nishitani N, Avikainen S, Hari R. Abnormal imitation-related cortical activation sequences in Asperger's syndrome. Annals of Neurology. 2004;55:558–62. doi: 10.1002/ana.20031.

- Olofsson JK, Nordin S, Sequeira H, Polich J. Affective picture processing: an integrative review of ERP findings. Biological Psychology. 2008;77:247–65. doi: 10.1016/j.biopsycho.2007.11.006.

- Parker PD, Prkachin KM, Prkachin GC. Processing of facial expressions of negative emotion in alexithymia: the influence of temporal constraint. Journal of Personality. 2005;73:1087–107. doi: 10.1111/j.1467-6494.2005.00339.x.

- Peelen MV, Atkinson AP, Andersson F, Vuilleumier P. Emotional modulation of body-selective visual areas. Social Cognitive and Affective Neuroscience. 2007;2:274–83. doi: 10.1093/scan/nsm023.

- Peelen MV, Downing PE. Selectivity for the human body in the fusiform gyrus. Journal of Neurophysiology. 2005;93:603–8. doi: 10.1152/jn.00513.2004.

- Peelen MV, Downing PE. The neural basis of visual body perception. Nature Reviews Neuroscience. 2007;8:636–48. doi: 10.1038/nrn2195.

- Peelen MV, Wiggett AJ, Downing PE. Patterns of fMRI activity dissociate overlapping functional brain areas that respond to biological motion. Neuron. 2006;49:815–22. doi: 10.1016/j.neuron.2006.02.004.

- Pegna AJ, Darque A, Berrut C, Khateb A. Early ERP modulation for task-irrelevant subliminal faces. Frontiers in Psychology. 2011;2:88. doi: 10.3389/fpsyg.2011.00088.

- Pegna AJ, Landis T, Khateb A. Electrophysiological evidence for early non-conscious processing of fearful facial expressions. International Journal of Psychophysiology. 2008;70:127–36. doi: 10.1016/j.ijpsycho.2008.08.007.

- Peigneux P, Salmon E, Van Der Linden M, et al. The role of lateral occipitotemporal junction and area MT/V5 in the visual analysis of upper-limb postures. Neuroimage. 2000;11:644–55. doi: 10.1006/nimg.2000.0578.

- Perrett DI, Xiao D, Barraclough NE, Keysers C, Oram MW. Seeing the future: natural image sequences produce “anticipatory” neuronal activity and bias perceptual report. Quarterly Journal of Experimental Psychology. 2009;62:2081–104. doi: 10.1080/17470210902959279.

- Pichon S, de Gelder B, Grèzes J. Emotional modulation of visual and motor areas by dynamic body expressions of anger. Social Neuroscience. 2008;3:199–212. doi: 10.1080/17470910701394368.

- Pitcher D, Garrido L, Walsh V, Duchaine BC. Transcranial magnetic stimulation disrupts the perception and embodiment of facial expressions. The Journal of Neuroscience. 2008;28:8929–33. doi: 10.1523/JNEUROSCI.1450-08.2008.

- Pourtois G, Sander D, Andres M, Grandjean D, Reveret L, Olivier E, Vuilleumier P. Dissociable roles of the human somatosensory and superior temporal cortices for processing social face signals. European Journal of Neuroscience. 2004;20:3507–15. doi: 10.1111/j.1460-9568.2004.03794.x.

- Pourtois G, Vuilleumier P. Dynamics of emotional effects on spatial attention in the human visual cortex. Progress in Brain Research. 2006;156:67–91. doi: 10.1016/S0079-6123(06)56004-2.

- Puce A, Perrett D. Electrophysiology and brain imaging of biological motion. Philosophical Transactions of the Royal Society of London. Series B: Biological Sciences. 2003;358:435–45. doi: 10.1098/rstb.2002.1221.

- Puce A, Smith A, Allison T. ERPs evoked by viewing facial movements. Cognitive Neuropsychology. 2000;17:221–39. doi: 10.1080/026432900380580.

- Reed CL, Stone VE, Bozova S, Tanaka J. The body-inversion effect. Psychological Science. 2003;14:302–8. doi: 10.1111/1467-9280.14431.

- Rizzolatti G, Cattaneo L, Fabbri-Destro M, Rozzi S. Cortical mechanisms underlying the organization of goal-directed actions and mirror neuron-based action understanding. Physiological Reviews. 2014;94:655–706. doi: 10.1152/physrev.00009.2013.

- Rizzolatti G, Craighero L. The mirror-neuron system. Annual Review of Neuroscience. 2004;27:169–92. doi: 10.1146/annurev.neuro.27.070203.144230.

- Rossi A, Parada FJ, Kolchinsky A, Puce A. Neural correlates of apparent motion perception of impoverished facial stimuli: a comparison of ERP and ERSP activity. Neuroimage. 2014;98:442–59. doi: 10.1016/j.neuroimage.2014.04.029.

- Rossion B, Jacques C. Does physical interstimulus variance account for early electrophysiological face sensitive responses in the human brain? Ten lessons on the N170. Neuroimage. 2008;39:1959–79. doi: 10.1016/j.neuroimage.2007.10.011.

- Sato W, Kochiyama T, Yoshikawa S, Matsumura M. Emotional expression boosts early visual processing of the face: ERP recording and its decomposition by independent component analysis. Neuroreport. 2001;12:709–14. doi: 10.1097/00001756-200103260-00019.

- Saxe R, Jamal N, Powell L. My body or yours? The effect of visual perspective on cortical body representations. Cerebral Cortex. 2006;16:178–82. doi: 10.1093/cercor/bhi095.

- Schippers MB, Keysers C. Mapping the flow of information within the putative mirror neuron system during gesture observation. Neuroimage. 2011;57:37–44. doi: 10.1016/j.neuroimage.2011.02.018.

- Schupp HT, Flaisch T, Stockburger J, Junghöfer M. Emotion and attention: event-related brain potential studies. Progress in Brain Research. 2006;156:31–51. doi: 10.1016/S0079-6123(06)56002-9.

- Schupp HT, Junghöfer M, Weike AI, Hamm AO. Attention and emotion: an ERP analysis of facilitated emotional stimulus processing. Neuroreport. 2003;14:1107–10. doi: 10.1097/00001756-200306110-00002.

- Schupp HT, Junghöfer M, Weike AI, Hamm AO. The selective processing of briefly presented affective pictures: an ERP analysis. Psychophysiology. 2004b;41:441–9. doi: 10.1111/j.1469-8986.2004.00174.x.

- Schupp HT, Öhman A, Junghöfer M, Weike AI, Stockburger J, Hamm AO. The facilitated processing of threatening faces: an ERP analysis. Emotion. 2004a;4:189–200. doi: 10.1037/1528-3542.4.2.189.

- Senior C, Barnes J, Giampietro V, et al. The functional neuroanatomy of implicit-motion perception or ‘representational momentum’. Current Biology. 2000;10:16–22. doi: 10.1016/s0960-9822(99)00259-6.

- Stekelenburg JJ, de Gelder B. The neural correlates of perceiving human bodies: an ERP study on the body-inversion effect. Neuroreport. 2004;15:777–80. doi: 10.1097/00001756-200404090-00007.

- Taylor GJ, Bagby RM, Parker JD. The 20-item Toronto Alexithymia Scale: IV. Reliability and factorial validity in different languages and cultures. Journal of Psychosomatic Research. 2003;55:277–83. doi: 10.1016/s0022-3999(02)00601-3.

- Taylor GJ, Michael Bagby R, Parker JD. The alexithymia construct: a potential paradigm for psychosomatic medicine. Psychosomatics. 1991;32:153–64. doi: 10.1016/s0033-3182(91)72086-0.

- Taylor JC, Wiggett AJ, Downing PE. Functional MRI analysis of body and body part representations in the extrastriate and fusiform body areas. Journal of Neurophysiology. 2007;98:1626–33. doi: 10.1152/jn.00012.2007.

- Taylor JC, Wiggett AJ, Downing PE. fMRI-adaptation studies of viewpoint tuning in the extrastriate and fusiform body areas. Journal of Neurophysiology. 2010;103:1467–77. doi: 10.1152/jn.00637.2009.

- Thierry G, Pegna AJ, Dodds C, Roberts M, Basan S, Downing P. An event-related potential component sensitive to images of the human body. Neuroimage. 2006;32:871–9. doi: 10.1016/j.neuroimage.2006.03.060.

- Thom N, Knight J, Dishman R, Sabatinelli D, Johnson DC, Clementz B. Emotional scenes elicit more pronounced self-reported emotional experience and greater EPN and LPP modulation when compared to emotional faces. Cognitive, Affective, & Behavioral Neuroscience. 2014;14:849–60. doi: 10.3758/s13415-013-0225-z.

- Tidoni E, Borgomaneri S, Di Pellegrino G, Avenanti A. Action simulation plays a critical role in deceptive action recognition. The Journal of Neuroscience. 2013;33:611–23. doi: 10.1523/JNEUROSCI.2228-11.2013.

- Ubaldi S, Barchiesi G, Cattaneo L. Bottom-up and top-down visuomotor responses to action observation. Cerebral Cortex. 2015;25:1032–41. doi: 10.1093/cercor/bht295.

- Urgesi C, Candidi M, Avenanti A. Neuroanatomical substrates of action perception and understanding: an anatomic likelihood estimation meta-analysis of lesion-symptom mapping studies in brain injured patients. Frontiers in Human Neuroscience. 2014;8:344. doi: 10.3389/fnhum.2014.00344.

- Urgesi C, Maieron M, Avenanti A, Tidoni E, Fabbro F, Aglioti SM. Simulating the future of actions in the human corticospinal system. Cerebral Cortex. 2010;20:2511–21. doi: 10.1093/cercor/bhp292.

- Van de Riet WA, Grèzes J, de Gelder B. Specific and common brain regions involved in the perception of faces and bodies and the representation of their emotional expressions. Social Neuroscience. 2009;4:101–20. doi: 10.1080/17470910701865367.

- Van Heijnsbergen CCRJ, Meeren HKM, Grèzes J, de Gelder B. Rapid detection of fear in body expressions, an ERP study. Brain Research. 2007;1186:233–41. doi: 10.1016/j.brainres.2007.09.093.

- van Schie HT, Koelewijn T, Jensen O, Oostenveld R, Maris E, Bekkering H. Evidence for fast, low-level motor resonance to action observation: an MEG study. Social Neuroscience. 2008;3:213–28. doi: 10.1080/17470910701414364.

- Verfaillie K, Daems A. Representing and anticipating human actions in vision. Visual Cognition. 2002;9:217–32.

- Vuilleumier P, Richardson MP, Armony JL, Driver J, Dolan RJ. Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nature Neuroscience. 2004;7:1271–8. doi: 10.1038/nn1341.

- Wilson M, Knoblich G. The case for motor involvement in perceiving conspecifics. Psychological Bulletin. 2005;131:460. doi: 10.1037/0033-2909.131.3.460.