Highlights

- Junior psychiatrists' notes were compared before and after the use of a template.
- Template notes were rated as more thorough, organized, useful and comprehensible.
- An audit revealed template notes contained more clinically relevant information.

Keywords: Mental state examination, Electronic health records, OPCRIT+, Psychiatry, Junior doctors, Documentation, National Health Service, Semi-structured, Audit

Abstract

Objectives

The mental state examination (MSE) provides crucial information for healthcare professionals in the assessment and treatment of psychiatric patients and is a potentially valuable source of data for mental health researchers accessing electronic health records (EHRs). We wished to establish whether the documentation of MSEs by junior doctors within a large United Kingdom mental health trust improved following the introduction of an EHR-based semi-structured MSE assessment template (OPCRIT+).

Methods

First, three consultant psychiatrists, using a modified version of the Physician Documentation Quality Instrument-9 (PDQI-9), blindly rated fifty MSEs written using OPCRIT+ and fifty normal MSEs written with no template. Second, we conducted an audit to compare how frequently individual components of the MSE were documented in the normal MSEs and in the OPCRIT+ MSEs.

Results

PDQI-9 ratings indicated that the OPCRIT+ MSEs were more ‘Thorough’, ‘Organized’, ‘Useful’ and ‘Comprehensible’, as well as being of higher overall quality, than the normal MSEs. The audit found that the normal MSEs contained fewer mentions of the individual components ‘Thought content’, ‘Anxiety’ and ‘Cognition & Insight’.

Conclusions

These results indicate that a semi-structured assessment template significantly improves the quality of MSE recording by junior doctors within EHRs. Future work should focus on whether such improvements translate into better patient outcomes and have the ability to improve the quality of information available on EHRs to researchers.

1. Introduction

The primary purpose of medical records is communication amongst the healthcare team, to enable seamless patient care [1–3]. However, they also provide an important source of managerial, financial and statistical information, a source of evidence in the event of litigation and a potentially valuable resource for teaching and research [1,3,4].

Despite their importance, medical record practices have a long history of criticism. Common concerns include records that are untimed or undated, use imprecise language or are illegible, lack a logical structure, are poorly synthesized, are difficult to retrieve and prone to loss, lack information relating to the aims of clinical management, or are simply erroneous [1,2,4–8].

Considerable hope has been placed on the improvements that electronic health records (EHRs) might bring. Many reports [4,8–17] have proposed numerous ways in which, in addition to solving the problems detailed above, EHRs might benefit health providers: the instant availability of notes; the ability to retrieve data in a variety of ways (e.g. test results for a specific date range); the elimination of storage issues; the ability for more than one user at a time to access a patient's notes; improvements in security and confidentiality; the potential to incorporate automated decision support systems; and improved exchange of clinical information between an institution's clinical systems or between institutions themselves. The use of EHRs has even been associated with a reduction in mortality during hospitalization [14]. However, several studies have raised criticisms of EHRs [9,15,18], suggesting that they can increase the time it takes physicians to write notes (although see Sola et al. [17]) and can lead to more redundancy and poorer formatting. Concerns have also been raised about the use of the ‘copy and paste’ function, which can lead to the propagation of inaccurate or false information [19].

It is also widely acknowledged that EHRs have the potential to significantly benefit medical research through the reuse of patient data gathered during routine clinical care [16,20]. One example is EHR-based phenotyping for ‘pragmatic clinical trials’. Richesson et al. [21] list some of the potential benefits of embedding clinical trials directly in the healthcare system: (1) access to larger research populations, allowing the detection of smaller clinical effects; (2) easier identification and study of rare disorders; and (3) a reduction in the expense and logistical challenges entailed by current randomized controlled trial methodologies. However, although EHRs clearly hold enormous potential for researchers, concerns do exist about the quality, completeness and accuracy of the available data [21].

A key issue surrounding the quality of EHR data, for both clinical and research use, is the optimal level of structure for the electronic notes deployed. Medical records can be seen as existing on a continuum between ‘structured/coded’ data and ‘unstructured/clinical narrative’ data (e.g. a complete blood count vs. a ‘presenting complaint’), with each end of the spectrum offering advantages and disadvantages for clinical and research use. In psychiatry, for example, the clinician will frequently record the patient history in a narrative style, using a document that has no defined structure. Whilst this gives the clinician the freedom to express anything they wish and allows the relevant facts to be documented in a manner that is understandable to other professionals, there are several disadvantages. First, providing the clinician with no defined structure may increase the likelihood that issues will be under-explored or overlooked entirely. Second, for research use in particular, uncoded data require natural language processing (NLP) technologies to harvest the relevant information and, as yet, such tools have shortcomings that limit their use with unstructured, narrative data [20,22,23].

A considerable number of studies, mostly conducted in non-EHR settings and in medical specialities other than psychiatry, have advocated [15,24], or investigated the consequences of, introducing structured and/or coded templates into medical record practices. Fernando et al. [22], for example, reviewed ten studies and concluded that the main outcome of structuring and/or coding the ‘patient history’ was an increase in the completeness of information. Several studies have specifically advocated the semi-structured approach investigated in this report (i.e. narrative text organized under standardized headings). These reports have demonstrated that physicians prefer reading such documents, and that semi-structured notes can improve completeness, accuracy and organization, aid the retrospective location of data in records and significantly improve the performance of NLP tools (see Johnson et al. [25] for review). However, some studies have commented on controversial, or even negative, aspects of more structured documentation practices (see Harrop and Amegavie [2] for review). For example, it is unknown whether improved documentation outcomes, such as an increase in completeness, are correlated with improved quality of care. Furthermore, clinical staff can oppose the use of more structured forms [2]; clinicians may feel that these documents are overly prescriptive and restrict their clinical freedom.

This study specifically investigated the potential benefits of introducing a semi-structured template for documenting the mental state examination (MSE) in EHRs. The MSE is a vital aspect of clinical assessment in psychiatric practice and is concerned with understanding the patient's current thoughts and mood. In conjunction with comprehensive history taking, it is an important component of accurate professional formulation, diagnosis and consequent treatment in mental health care [26]. The symptomatology data contained within the MSE also make this part of the clinical record a primary target for research use. In the United Kingdom, neither national guidelines nor the majority of psychiatric training schemes specify a definitive structure for MSEs, so the nature of MSE documentation differs from clinician to clinician [26]. However, commonly used components do exist, typically: appearance, behaviour, mood and affect, speech, thought process, thought content, perception, cognition and insight.

Several studies have previously investigated changes in MSE documentation practice following the introduction of more structured formats. However, to the best of our knowledge, this issue has only been addressed in paper-based health records and not within EHRs. Kareem and Ashby [26] reported an improvement in the documentation of MSEs amongst psychiatric trainees following the introduction of a ‘standardized format’, with correctly recorded components increasing from 69% to 83%. Dinniss et al. [27] reported increases in the documentation of all MSE components following the introduction of a standardised admission form; however, these increases were only significant for ‘Appearance and behaviour’, ‘Speech’ and ‘Cognition’. In addition to being the first study to investigate this issue within EHRs, our study adds a more subjective assessment element: whereas both of the above studies only audited the frequency with which specific MSE components were recorded, we also report quality ratings given to the MSEs by consultant psychiatrists using a medical documentation rating tool.

The aim of this study was to establish whether, amongst junior doctors using EHRs on National Health Service (NHS) psychiatric hospital wards, the introduction of a semi-structured assessment template (OPCRIT+) would improve the quality of documentation of patients' mental states compared with the existing system of no template. To achieve this, we first asked three consultant psychiatrists working within the same health trust to blindly rate one hundred MSEs, produced under both formats, using an instrument designed for assessing the quality of medical documentation. Second, we conducted an audit to record how many components of the MSE (e.g. ‘Appearance & Behaviour’) were missed in the normal MSEs compared with those written using the semi-structured template.

2. Methods

2.1. Semi-structured assessment template

The semi-structured assessment template used was OPCRIT+ [28]. This system is hosted on the South London and Maudsley (SLaM) NHS Trust's ‘electronic Patient Journey System’ (ePJS), which is the primary clinical record-keeping system within SLaM across both inpatient and outpatient teams. OPCRIT+ is based on the original OPCRIT [29] and, in addition to an MSE template consisting of a series of free-text areas under standardized MSE headings, contains a suite of proprietary algorithms which, via a symptom and psychiatric history checklist, produce research-quality ICD-10 and DSM-IV diagnoses [30]. This study was concerned only with the MSE template. SLaM funded the creation of OPCRIT+ as a data collection and diagnostic tool, but also to provide structure and guidance (via hyperlinks leading to related information) for junior clinicians in their assessment of patients.

The MSE template in OPCRIT+ contains the following components: ‘Appearance and Behaviour’; ‘Speech and Form of Thought’; ‘Mood, Affect and Associated Features’; ‘Anxiety, Trauma and Associated Features’; ‘Thought Content’; ‘Perceptions’; ‘Cognition’ and ‘Insight and Capacity’. Following discussions with senior level psychiatrists, for the purposes of this study text relating to ‘Capacity’ was removed, as it was deemed an atypical MSE component.

2.2. Location

SLaM serves a local population of approximately 1.1 million, provides nationally available specialist services and delivers inpatient care for approximately 5300 people per year. MSEs were extracted from ePJS via the National Institute for Health Research (NIHR) Biomedical Research Centre and Dementia Unit's (BRC/U) Case Register Interactive Search (CRIS) system [31], which allows all documented clinical information from the Trust to be accessed for analysis by researchers whilst preserving patient anonymity. MSEs were selected only from wards upon which OPCRIT+ had been introduced (approximately twenty), which included both specialist and general acute adult inpatient units.

2.3. Mental state examinations

For this study we chose to use only MSEs completed by junior clinicians (Foundation Year Two, Core Trainee and General Practice Vocational Trainee Scheme level), as they were the group most regularly undertaking MSEs on the wards concerned. Prior to the introduction of OPCRIT+, typical practice was to document MSEs in one of two types of electronic note within ePJS: ‘Event’ forms or ‘Ward Progress Note’ forms. These are essentially blank free-text boxes with no defined structure (MSEs written in them are hereafter referred to as ‘normal MSEs’). Fifty MSEs completed via OPCRIT+ and fifty normal MSEs were extracted from ePJS following the inclusion/exclusion criteria detailed in Section 2.3.1. All clinicians on the wards concerned had been given training and support in the use of OPCRIT+. During training, clinicians were advised that the purpose of OPCRIT+ was both to promote thorough record keeping and to enable information to be accessed for research purposes via CRIS. All normal MSEs used originated from the same wards as the OPCRIT+ MSEs but were written prior to the introduction of OPCRIT+ into the Trust. Furthermore, due to clinician rotation, no doctor wrote both OPCRIT+ and normal MSEs used in this analysis.

2.3.1. MSE inclusion/exclusion criteria

MSEs of both types were eligible for inclusion in the study if (1) the clinician who documented the MSE was working full-time on the ward where the patient was based (MSEs completed by a ‘duty’ doctor visiting the ward were excluded) and (2) the MSE was documented during the patient's first ward stay of an inpatient episode (MSEs documented on a ward to which the patient had been transferred were excluded).

Normal MSEs were included if they had been documented between the 1st of December 2008 and the 1st of May 2009; the introduction of OPCRIT+ within the Trust began shortly afterwards. OPCRIT+ MSEs were excluded if text was missing from any of the MSE components, as it was deemed that the template had not been used correctly in these cases.

The OPCRIT+ and normal MSE samples were matched on the proportion documented on ‘specialist’ versus ‘general psychiatric’ wards and on the reason the MSE was undertaken (e.g. an ‘admission clerking’ or a ‘ward-round’). The total number of doctors who completed each MSE type was equivalent, as was the total number of wards from which each MSE type came.

2.4. Rating tool

To rate the quality of the MSEs, we used the ‘Physician Documentation Quality Instrument-9’ (PDQI-9) [19], an abbreviated version of the full twenty-two-item PDQI [11]. After discussions with the instrument's author, we removed three further items: ‘Up-to-date’, ‘Accurate’ and ‘Synthesized’. These were deemed irrelevant in the context of this project, given that the MSEs were read and rated as standalone electronic notes rather than as part of a full set of patient records. The PDQI-9 has been shown to have good criterion-related and discriminant validity, internal consistency and inter-rater reliability for rating patient notes [19]. Each of the remaining six items (‘Thorough’, ‘Useful’, ‘Organized’, ‘Comprehensible’, ‘Succinct’ and ‘Internally Consistent’) was rated on a five-point Likert scale ranging from 1 (‘Not at all’) to 5 (‘Extremely’), with a descriptor of ideal characteristics anchoring the highest value of the scale. We added the similarly scaled summary item ‘General Impression of Note Quality’ (GNQ), as used by Stetson et al. [19] in the development of the PDQI-9.

2.4.1. Raters

Three consultant psychiatrists from within SLaM (MK, FG, GC) were recruited to rate the MSEs. None of the raters were involved in the development or dissemination of OPCRIT+ and all were blind to the research question. Each psychiatrist rated the one hundred MSEs in a different random order.

2.5. Statistical analyses

All statistical analyses were undertaken using the Statistical Package for the Social Sciences (SPSS), version 22. The inter-rater reliability of the three raters' ratings for each of the seven judgements was tested using a two-way random, consistency intra-class correlation (ICC). Following Chinn [32], we used an ICC coefficient threshold of 0.6 when determining whether to consider a judgement further. We then used t-tests to compare OPCRIT+ MSEs with normal MSEs on those PDQI-9 ratings found to have adequate inter-rater reliability. For the audit, two of the authors (P.B. and J.R.) reviewed the normal MSEs to calculate the proportion that mentioned each of the MSE components covered by OPCRIT+; where there was uncertainty, agreement was reached by consensus. Differences between the two MSE types were then assessed with Fisher's exact tests.
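For readers wishing to reproduce the reliability step outside SPSS, the following Python sketch computes a two-way consistency ICC from a ratings matrix (one row per MSE, one column per rater) using the standard mean-squares formulas. It is an illustration under our own assumptions, not the SPSS procedure itself: the function name `icc_consistency` and the toy data are hypothetical, and because the text does not state whether single-measure or average-measure coefficients were reported, both are returned. The consistency coefficient is computed identically whether raters are treated as random or fixed effects.

```python
from typing import Sequence, Tuple


def icc_consistency(ratings: Sequence[Sequence[float]]) -> Tuple[float, float]:
    """Two-way consistency ICC for an n_targets x k_raters ratings matrix.

    Hypothetical helper (not SPSS code). Returns (ICC(C,1), ICC(C,k)):
    the single-measure and average-measure consistency coefficients.
    """
    n = len(ratings)      # number of rated targets (here, MSEs)
    k = len(ratings[0])   # number of raters
    grand_mean = sum(x for row in ratings for x in row) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Partition the total sum of squares into targets, raters and residual.
    ss_total = sum((x - grand_mean) ** 2 for row in ratings for x in row)
    ss_targets = k * sum((m - grand_mean) ** 2 for m in row_means)
    ss_raters = n * sum((m - grand_mean) ** 2 for m in col_means)
    ss_residual = ss_total - ss_targets - ss_raters

    ms_targets = ss_targets / (n - 1)
    ms_residual = ss_residual / ((n - 1) * (k - 1))

    # "Consistency" ignores systematic differences between raters: the
    # rater sum of squares is removed rather than counted as error.
    icc_single = (ms_targets - ms_residual) / (ms_targets + (k - 1) * ms_residual)
    icc_average = (ms_targets - ms_residual) / ms_targets
    return icc_single, icc_average


# Toy example (hypothetical data): three targets rated by two raters.
single, average = icc_consistency([[1, 2], [2, 1], [3, 3]])
print(f"ICC(C,1) = {single:.2f}, ICC(C,k) = {average:.2f}")
```

The toy example prints ICC(C,1) = 0.50 and ICC(C,k) = 0.67. In the present design, each judgement would correspond to a 100 x 3 matrix (one hundred MSEs, three consultants), and a judgement would be retained only if its coefficient reached the 0.6 threshold.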

2.6. Ethics

Ethical approval for CRIS as an anonymised data resource for secondary analyses was provided by Oxfordshire REC in 2008 (Reference 08/H0606/71), in accordance with the Declaration of Helsinki, as well as by the Institute of Psychiatry's Institutional Review Board. Individual patient consent is therefore not necessary for CRIS projects as all data is anonymised at the point of extraction. However, all projects require approval by the CRIS oversight committee and this was granted for this project.

3. Results

3.1. PDQI-9 and GNQ inter-rater reliability ratings

ICC coefficients indicated that rating reliability between the three consultants was adequate for five of the seven judgements: ‘Comprehensible’ (0.61), ‘Organized’ (0.61), GNQ (0.76), ‘Thorough’ (0.81) and ‘Useful’ (0.81). However, ratings for ‘Internally Consistent’ (0.39) and ‘Succinct’ (−0.1) were unacceptable in terms of their agreement and were therefore not considered further.

3.2. PDQI-9 and GNQ ratings

Mean and standard deviation ratings for the remaining PDQI-9 judgements and the GNQ are displayed in Table 1. OPCRIT+ MSEs were rated significantly higher than normal MSEs for being ‘Thorough’ (t(298) = 5.95, p < 0.001), ‘Useful’ (t(293) = 5.15, p < 0.001), ‘Organized’ (t(296) = 6.84, p < 0.001) and ‘Comprehensible’ (t(296) = 4.39, p < 0.001). Ratings were also significantly higher for GNQ (t(293) = 5.83, p < 0.001).

Table 1.

Mean and standard deviation ratings of three consultant psychiatrists for fifty OPCRIT+ and fifty normal MSEs written by junior clinicians on inpatient wards. Each judgment was made on a five-point Likert scale with one indicating ‘Not at all’ and five indicating ‘Extremely’.

| Judgement | Mean OPCRIT+ MSE rating (s.d.) | Mean normal MSE rating (s.d.) |
|---|---|---|
| Thorough | 3.27 (0.83)* | 2.65 (0.98) |
| Useful | 3.38 (0.89)* | 2.81 (1.01) |
| Organized | 3.29 (0.81)* | 2.63 (0.88) |
| Comprehensible | 3.42 (0.80)* | 3.00 (0.86) |
| GNQ | 3.32 (0.80)* | 2.74 (0.91) |

*p < 0.01.
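The t statistics reported in Section 3.2 can be approximately recovered from the summary statistics in Table 1. The Python sketch below assumes a pooled-variance (Student's) two-sample t-test with 150 ratings per group (fifty MSEs, each rated by three consultants), which is what the reported df of 298 implies; the function name `pooled_t` is hypothetical. Because the means and s.d.s are rounded to two decimals, and some judgements had a few missing ratings (df of 293 or 296), the reconstructed values are expected to differ slightly from those reported.

```python
import math


def pooled_t(mean1: float, sd1: float, n1: int, mean2: float, sd2: float, n2: int) -> float:
    """Student's two-sample t statistic (pooled variance) from summary statistics."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error


# Assumption: 150 ratings per group (50 MSEs x 3 raters), as implied by df = 298.
N_PER_GROUP = 150
table1 = {
    # judgement: (OPCRIT+ mean, OPCRIT+ s.d., normal mean, normal s.d.)
    "Thorough": (3.27, 0.83, 2.65, 0.98),
    "Useful": (3.38, 0.89, 2.81, 1.01),
    "Organized": (3.29, 0.81, 2.63, 0.88),
    "Comprehensible": (3.42, 0.80, 3.00, 0.86),
    "GNQ": (3.32, 0.80, 2.74, 0.91),
}

for name, (m1, s1, m2, s2) in table1.items():
    t = pooled_t(m1, s1, N_PER_GROUP, m2, s2, N_PER_GROUP)
    print(f"{name}: t({2 * N_PER_GROUP - 2}) = {t:.2f}")
```

Run as written, each reconstructed statistic lies within 0.1 of the corresponding reported value (for example, about 5.91 for ‘Thorough’ against the reported 5.95).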

3.3. Descriptive data & audit

The mean number of words was higher in the OPCRIT+ MSEs than in the normal MSEs (228 (s.d. 96) vs. 148 (s.d. 86)), a statistically significant difference (Mann-Whitney U = 604, p < 0.001). Whereas 100% of the OPCRIT+ MSEs used structured headings, only 86% of the normal MSEs did (Fisher's exact test, p < 0.05). Table 2 details the proportion of normal MSEs that commented on each of the MSE components used by OPCRIT+ (100% of the OPCRIT+ MSEs mentioned all of these components, although in many cases this was the mention of a ‘null’ finding).

4. Discussion

The continued take-up of EHRs in health care systems around the world has the potential to significantly improve clinical care and opens up a wealth of possibilities for routinely collected clinical data to be re-used in a variety of research applications. However, a pertinent question remains as to the degree of structure that should be applied to the electronic notes that comprise the EHR, as there are both advantages and disadvantages to the use of increased structure. This study set out to investigate whether MSEs written by junior clinicians would be improved via the use of a semi-structured assessment template; blind ratings of semi-structured and normal MSEs by three consultant psychiatrists, together with an audit of the individual components missed in the normal MSEs, offered strong evidence that they are.

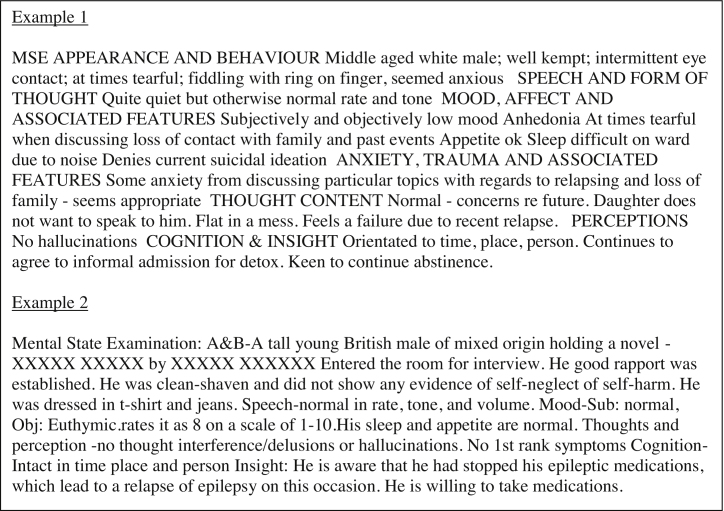

In relation to the consultants' ratings, the OPCRIT+ MSEs were scored significantly higher than the normal MSEs on the PDQI-9 judgements of ‘Thorough’, ‘Useful’, ‘Organized’ and ‘Comprehensible’, as well as on ‘General Impression of Note Quality’. On the five-point scale, mean judgements were between 0.42 and 0.66 points higher for the OPCRIT+ MSEs, with the greatest difference for how ‘Organized’ they were thought to be (Table 1). Across all five judgements, this equated to a mean 11% improvement in the quality of MSEs written with the semi-structured template (see Fig. 1 for examples). Unfortunately, we could not consider the two PDQI-9 judgements for which inter-rater reliability was not of an acceptable standard. In the case of ‘Internally consistent’, the judgement may have confused the consultants: MSEs are typically brief documents stating a few core observations, and so probably have little room to be internally inconsistent (i.e. they rarely have enough content to contradict themselves). In terms of the ‘Succinct’ judgement, it may simply be that one consultant's notion of a succinct MSE differs from another's, leading to inconsistent ratings.
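The text does not state how the 11% figure was derived. One reading consistent with Table 1 is that it expresses the mean difference across the five judgements as a share of the maximum score of five; the Python sketch below checks this arithmetic under that assumption (variable names are illustrative only). Expressed instead relative to the mean normal-MSE rating, the same difference would be roughly 21%, so the choice of denominator matters when interpreting the figure.

```python
# Mean ratings from Table 1 as (OPCRIT+ mean, normal mean).
table1_means = {
    "Thorough": (3.27, 2.65),
    "Useful": (3.38, 2.81),
    "Organized": (3.29, 2.63),
    "Comprehensible": (3.42, 3.00),
    "GNQ": (3.32, 2.74),
}
SCALE_MAX = 5  # top of the five-point Likert scale

diffs = [opcrit - normal for opcrit, normal in table1_means.values()]
mean_diff = sum(diffs) / len(diffs)
mean_normal = sum(normal for _, normal in table1_means.values()) / len(table1_means)

# Assumed derivation: mean difference as a percentage of the scale maximum.
pct_of_scale_max = 100 * mean_diff / SCALE_MAX
# Alternative denominator, shown for comparison: the mean normal-MSE rating.
pct_of_normal_mean = 100 * mean_diff / mean_normal

print(f"differences range {min(diffs):.2f} to {max(diffs):.2f}; mean {mean_diff:.2f} points")
print(f"{pct_of_scale_max:.1f}% of scale maximum; {pct_of_normal_mean:.1f}% of mean normal rating")
```

The mean difference is 0.57 points, which is 11.4% of the scale maximum and about 20.6% of the mean normal-MSE rating of 2.77.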

Fig. 1.

Example of an OPCRIT+ MSE (Example 1) and a normal MSE (Example 2). Both examples were ranked twenty-fifth out of fifty in their group, based on the mean of the three raters' mean scores for ‘Thorough’, ‘Useful’, ‘Organized’, ‘Comprehensible’ and ‘General Impression of Note Quality’. This mean of means was 3.3 for the OPCRIT+ MSE and 2.8 for the normal MSE (out of a maximum of five). All typos are as in the original text.

With regard to the audit, owing to the semi-structured nature of the OPCRIT+ MSEs, all individual components were mentioned 100% of the time, even if this was often a report of a null finding (e.g. ‘Patient A exhibited no abnormal perceptions.’). In the normal MSEs, however, the only component that clinicians mentioned without fail was ‘Appearance and Behaviour’; the remaining components were mentioned between 12% and 98% of the time (Table 2). Three of these differences reached statistical significance: ‘Thought content’ (90%), ‘Cognition and Insight’ (88%) and ‘Anxiety, Trauma and Associated Features’ (12%). Besides demonstrating that a semi-structured template increases the frequency with which individual MSE components are mentioned, the results show a clear dichotomy between how often ‘Anxiety’ was documented and how often the other components were. There are at least two potential reasons for this. First, the majority of psychiatric training schemes, including that offered by SLaM, set no specific requirements for the minimum components that must be reported in an MSE, and these results suggest that junior clinicians may not be taught that reporting ‘Anxiety’ is important. Second, as the study was undertaken solely with inpatients, all other components of the MSE (e.g. ‘Speech and Form of Thought’) may have been deemed more pertinent to the inpatient setting than ‘Anxiety’, which may be mentioned more frequently in outpatient or community settings. Anxiety was not being reported as part of another component of the normal MSEs (e.g. ‘Mood’), as the audit looked for mention of ‘Anxiety’ throughout the entire text, not just as a separate component. In summary, if ‘Anxiety’ is included, the normal MSEs in total reported 18% fewer components than the OPCRIT+ MSEs; if ‘Anxiety’ is discounted, the figure is 6%.
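The 18% and 6% figures follow from Table 2. Because every component was assessed in the same fifty normal MSEs, the mean of the per-component percentages equals total mentions divided by total possible mentions; the short Python sketch below performs that calculation (the variable and function names are illustrative only).

```python
# Percentage of normal MSEs mentioning each component (Table 2).
# OPCRIT+ MSEs mentioned every component in 100% of cases.
normal_pct = {
    "Appearance and behaviour": 100,
    "Speech and form of thought": 94,
    "Mood, affect and associated features": 98,
    "Anxiety, trauma and associated features": 12,
    "Thought content": 90,
    "Perceptions": 94,
    "Cognition and insight": 88,
}


def mean_shortfall(percentages):
    """Mean percentage-point shortfall relative to 100% coverage."""
    return 100 - sum(percentages) / len(percentages)


with_anxiety = mean_shortfall(list(normal_pct.values()))
without_anxiety = mean_shortfall(
    [pct for name, pct in normal_pct.items() if not name.startswith("Anxiety")]
)
print(f"Including anxiety: {with_anxiety:.1f}% fewer components")
print(f"Excluding anxiety: {without_anxiety:.1f}% fewer components")
```

This gives a shortfall of about 17.7 percentage points with ‘Anxiety’ included (rounded to 18%) and exactly 6 points without it.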

Table 2.

The proportion of normal MSEs mentioning each of the MSE components covered in OPCRIT+. Fisher's exact tests identify the three components that were mentioned significantly less often than in the OPCRIT+ MSEs (100%).

| MSE component | Normal MSEs mentioning component (%) |
|---|---|
| Appearance and behaviour | 100 |
| Speech and form of thought | 94 |
| Mood, affect and associated features | 98 |
| Anxiety, trauma and associated features | 12** |
| Thought content | 90* |
| Perceptions | 94 |
| Cognition and insight | 88* |

*p < 0.05 (one-tailed).

**p < 0.01 (one-tailed).
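The significance markers in Table 2 can be checked with a one-tailed Fisher's exact test on each 2 × 2 table (normal vs. OPCRIT+ MSEs; component mentioned vs. not mentioned). The Python sketch below implements the test directly from the hypergeometric distribution using only the standard library. The function name is hypothetical, and the counts are back-calculated from the percentages on the assumption that each percentage is of fifty MSEs (e.g. 88% = 44/50). Where SciPy is available, `scipy.stats.fisher_exact(table, alternative="less")` should give the same one-tailed p-values.

```python
from math import comb


def fisher_one_tailed_less(a: int, b: int, c: int, d: int) -> float:
    """One-tailed Fisher's exact test for the 2 x 2 table [[a, b], [c, d]].

    Hypothetical helper. Rows are groups (normal, OPCRIT+); columns are
    (mentioned, not mentioned). With all margins fixed, the top-left cell X
    is hypergeometric; the function returns P(X <= a), i.e. the probability
    of the first group having this few mentions or fewer by chance.
    """
    n_first = a + b              # size of the first group (normal MSEs)
    n_mentioned = a + c          # MSEs mentioning the component, both groups
    n_total = a + b + c + d
    n_not = n_total - n_mentioned
    lowest = max(0, n_first - n_not)  # smallest feasible value of X
    numerator = sum(
        comb(n_mentioned, x) * comb(n_not, n_first - x) for x in range(lowest, a + 1)
    )
    return numerator / comb(n_total, n_first)


N = 50  # MSEs per group
table2 = {
    # Table 2 percentages for normal MSEs; OPCRIT+ MSEs were 100% throughout.
    "Appearance and behaviour": 100,
    "Speech and form of thought": 94,
    "Mood, affect and associated features": 98,
    "Anxiety, trauma and associated features": 12,
    "Thought content": 90,
    "Perceptions": 94,
    "Cognition and insight": 88,
}

for component, pct in table2.items():
    mentioned = round(pct * N / 100)  # assumption: percentage of 50 MSEs
    p = fisher_one_tailed_less(mentioned, N - mentioned, N, 0)
    print(f"{component}: {mentioned}/{N} vs {N}/{N}, one-tailed p = {p:.4f}")
```

Under these assumptions, ‘Cognition and insight’ gives p ≈ 0.013 and ‘Thought content’ p ≈ 0.028 (both below 0.05 but not 0.01), ‘Anxiety, trauma and associated features’ gives p far below 0.01, and ‘Speech and form of thought’ gives p = 4/33 ≈ 0.12, matching the markers in the table.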

The improvements in MSE documentation seen with OPCRIT+, whilst statistically significant according to a number of measures, might still be seen as fairly modest. Subjectively, the consultants rated the semi-structured MSEs approximately 11% better than the normal MSEs, whereas objectively, the normal MSEs missed between 6% and 18% of the components mentioned in the OPCRIT+ MSEs, depending on whether ‘Anxiety’ is considered. In fact, the figure of 11% for the PDQI-9 judgements is misleading, as the consultants were judging OPCRIT+ MSEs that always mentioned ‘Anxiety’, whereas, as already noted, anxiety appears not to have been a consistent consideration when writing a normal MSE. If we therefore presume, for argument's sake, that the real improvement in MSE documentation after the introduction of a semi-structured template is around 10%, is this a figure that would make the introduction of such a template worthwhile for other psychiatric healthcare institutions? Although it is not the focus of this report, a variety of issues arose during the implementation of OPCRIT+ which indicated that more structured documents can be unpopular with those who have to complete them, and that this can have a detrimental effect on staff morale and relations with management. It is also unclear from this study whether such improvements would translate into better clinical care (e.g. via improved transmission of key patient information) or higher-quality data for use in research. Future studies should address these questions, as they are vital in deciding whether the imposition of more structured electronic notes is worthwhile.

A further point of interest from our study was the difference in length between the OPCRIT+ and normal MSEs. We observed a statistically significant 54% increase in the mean length of MSEs completed with the OPCRIT+ template. Whilst the greater length may have contributed to the OPCRIT+ MSEs being rated by the consultants as more ‘Thorough’, and perhaps also as more ‘Useful’, it may also have been one reason for some of the disgruntlement amongst the clinicians using the form; extra time on ‘paperwork’ is rarely popular. However, the increased MSE length may have reflected more in-depth clinical assessments prompted by the semi-structured template. Such an outcome would likely represent a significant clinical benefit but, unfortunately, we did not investigate this.

There are several limitations to our study. First, our sample size of one hundred MSEs was too small; this left the audit underpowered to detect significant differences in the frequency with which ‘Speech and Form of Thought’ and ‘Perceptions’ were mentioned. Second, although the consultants rated the MSEs blind to whether they had been written with or without the semi-structured template, the fact that 50% of the MSEs contained specific headings such as ‘Anxiety, Trauma and Associated Features’ may have allowed them to infer the nature of the research question, which may in turn have biased their ratings. Lastly, although the majority of MSEs within the Trust are written by junior doctors, which is why we used only this group, more experienced clinicians do document a small proportion of MSEs, and it would be of interest to see whether these results extend to them.

In summary, our results indicate that MSEs documented by junior doctors are improved, in the opinion of consultants and via more consistent documentation of individual components, when they are written with a semi-structured template. Future studies should assess whether these improvements translate into better clinical care and higher quality data for researchers, as these are two vital considerations that may offset the institutional challenges that can arise from the introduction of more structured forms within the EHR.

Author contributions

Conceived and designed the experiments: SL, JR, MH, RS, MB, MB, SL, PM, MA, GS, PB

Performed the experiments: SL, JR, MK, FG, GC, MH, RS, MB, MB, SL, PM, MA, SN, GS, PB

Analyzed the data: SL, PB

Contributed reagents/materials/analysis tools: JR, MK, FG, GC, MH, RS, MB, MB, SL, PM, MA, SN, GS, PB

Wrote the paper: SL, JR, MK, FG, RS, MB, MB, PM, MA, GS, PB

Conflict of interest

None of the authors report any conflicts of interest.

Summary points.

- We investigated whether the introduction of a semi-structured assessment template for mental state examinations (MSEs) improved the quality of junior doctors' documentation in the electronic health records of a large psychiatric National Health Service trust.
- Three consultant psychiatrists blindly rated fifty MSEs composed with the assistance of the template and fifty without. Those composed with the template were judged significantly more ‘Thorough’, ‘Organized’, ‘Useful’ and ‘Comprehensible’, and of higher overall quality.
- A subsequent audit of individual MSE components found that MSEs composed without the template contained significantly fewer mentions of ‘Thought content’, ‘Anxiety’ and ‘Cognition & Insight’.
- This is the first report investigating this issue in electronic health records. Future work should investigate whether the improvements in documentation practice seen with the use of a semi-structured template translate into better patient outcomes and an improvement in the quality of data accessible for reuse by researchers.

Acknowledgements

This study was funded by the National Institute for Health Research (NIHR) Biomedical Research Centre and Dementia Unit at South London and Maudsley National Health Service Foundation Trust and King's College London. Gunter Schumann is also part-funded by the European Union-funded FP6 Integrated Project IMAGEN (Reinforcement-related behaviour in normal brain function and psychopathology) (LSHM-CT-2007-037286), the FP7 project ADAMS (Genomic variations underlying common neuropsychiatric diseases and disease related cognitive traits in different human populations) (242257) and the Innovative Medicines Initiative project EU-AIMS (115300-2), as well as the Medical Research Council Programme Grant “Developmental pathways into adolescent substance abuse” (93558) and the Swedish Research Council FORMAS.

References

- 1.Abdelrahman W., Abdelmageed A. Medical record keeping: clarity, accuracy, and timeliness are essential. BMJ Careers. 2014 [Google Scholar]

- 2.Harrop M., Amegavie L. Developing a paediatric asthma review pro forma. Nurs. Stand. 2005;19:33–40. doi: 10.7748/ns2005.02.19.22.33.c3803. [DOI] [PubMed] [Google Scholar]

- 3.Oladipo A., Narang L., Sathiyathasan S., Hakeem-Habeeb Y., Mustaq V. A prospective audit of the quality of documentation of gynaecological operations. J. Obstet. Gynaecol. 2011;31:510–513. doi: 10.3109/01443615.2011.587913. (The Journal of the Institute of Obstetrics and Gynaecology) [DOI] [PubMed] [Google Scholar]

- 4. Pullen I., Loudon J. Improving standards in clinical record keeping. Adv. Psychiatr. Treat. 2006;12:280–286.

- 5. Reiser S.J. The clinical record in medicine. Part 2: Reforming content and purpose. Ann. Intern. Med. 1991;114:980–985. doi: 10.7326/0003-4819-114-11-980.

- 6. Burnum J.F. The misinformation era: the fall of the medical record. Ann. Intern. Med. 1989;110:482–484. doi: 10.7326/0003-4819-110-6-482.

- 7. Campling E.A., Devlin H.B., Hoile R.W., Lunn J.N. 1991/1992. The Report of the National Confidential Enquiry into Perioperative Deaths 1991/1992.

- 8. Audit Commission. Audit Commission; London: 1995. Setting the Record Straight: A Study of Hospital Medical Records.

- 9. Embi P.J., Yackel T.R., Logan J.R., Bowen J.L., Cooney T.G., Gorman P.N. Impacts of computerized physician documentation in a teaching hospital: perceptions of faculty and resident physicians. J. Am. Med. Inf. Assoc.: JAMIA. 2004;11:300–309. doi: 10.1197/jamia.M1525.

- 10. Thompson A., Jacob K., Fulton J. Anonymized dysgraphia. J. R. Soc. Med. 2003;96:51. doi: 10.1258/jrsm.96.1.51-a.

- 11. Stetson P.D., Morrison F.P., Bakken S., Johnson S.B., eNote Research Team. Preliminary development of the physician documentation quality instrument. J. Am. Med. Inf. Assoc.: JAMIA. 2008;15:534–541. doi: 10.1197/jamia.M2404.

- 12. Dolin R.H., Alschuler L., Beebe C., Biron P.V., Boyer S.L., Essin D., Kimber E., Lincoln T., Mattison J.E. The HL7 clinical document architecture. J. Am. Med. Inf. Assoc.: JAMIA. 2001;8:552–569. doi: 10.1136/jamia.2001.0080552.

- 13. Dolin R.H., Alschuler L., Boyer S., Beebe C., Behlen F.M., Biron P.V., Shabo Shvo A. HL7 clinical document architecture, release 2. J. Am. Med. Inf. Assoc.: JAMIA. 2006;13:30–39. doi: 10.1197/jamia.M1888.

- 14. Amarasingham R., Plantinga L., Diener-West M., Gaskin D.J., Powe N.R. Clinical information technologies and inpatient outcomes: a multiple hospital study. Arch. Intern. Med. 2009;169:108–114. doi: 10.1001/archinternmed.2008.520.

- 15. Lewis R., Adler D.A., Dixon L.B., Goldman B., Hackman A.L., Oslin D.W., Siris S.G., Valenstein M. The psychiatric note in the era of electronic communication. J. Nerv. Ment. Dis. 2011;199:212–213. doi: 10.1097/NMD.0b013e318216376f.

- 16. Health and Social Care Information Centre, Academy of Medical Royal Colleges. Health and Social Care Information Centre, Academy of Medical Royal Colleges; London: 2013. Standards for the clinical structure and content of patient records.

- 17. Sola C.L., Bostwick J.M., Sampson S. Benefits of an electronic consultation-liaison note system: better notes faster. Acad. Psychiatry. 2007;31:61–63. doi: 10.1176/appi.ap.31.1.61.

- 18. Poissant L., Pereira J., Tamblyn R., Kawasumi Y. The impact of electronic health records on time efficiency of physicians and nurses: a systematic review. J. Am. Med. Inf. Assoc.: JAMIA. 2005;12:505–516. doi: 10.1197/jamia.M1700.

- 19. Stetson P.D., Bakken S., Wrenn J.O., Siegler E.L. Assessing electronic note quality using the physician documentation quality instrument (PDQI-9). Appl. Clin. Inf. 2012;3:164–174. doi: 10.4338/ACI-2011-11-RA-0070.

- 20. Jensen P.B., Jensen L.J., Brunak S. Mining electronic health records: towards better research applications and clinical care. Nat. Rev. Genet. 2012;13:395–405. doi: 10.1038/nrg3208.

- 21. Richesson R.L., Hammond W.E., Nahm M., Wixted D., Simon G.E., Robinson J.G., Bauck A.E., Cifelli D., Smerek M.M., Dickerson J., Laws R.L., Madigan R.A., Rusincovitch S.A., Kluchar C., Califf R.M. Electronic health records based phenotyping in next-generation clinical trials: a perspective from the NIH Health Care Systems Collaboratory. J. Am. Med. Inf. Assoc.: JAMIA. 2013;20:e226–e231. doi: 10.1136/amiajnl-2013-001926.

- 22. Fernando B., Kalra D., Morrison Z., Byrne E., Sheikh A. Benefits and risks of structuring and/or coding the presenting patient history in the electronic health record: systematic review. BMJ Qual. Saf. 2012;21:337–346. doi: 10.1136/bmjqs-2011-000450.

- 23. Ohno-Machado L. Realizing the full potential of electronic health records: the role of natural language processing. J. Am. Med. Inf. Assoc.: JAMIA. 2011;18:539. doi: 10.1136/amiajnl-2011-000501.

- 24. Allan N., Bell D., Pittard A. Resuscitation of the written word: meeting the standard for cardiac arrest documentation. Clin. Med. 2011;11:348–352. doi: 10.7861/clinmedicine.11-4-348.

- 25. Johnson S.B., Bakken S., Dine D., Hyun S., Mendonca E., Morrison F., Bright T., Van Vleck T., Wrenn J., Stetson P. An electronic health record based on structured narrative. J. Am. Med. Inf. Assoc.: JAMIA. 2008;15:54–64. doi: 10.1197/jamia.M2131.

- 26. Kareem O.S., Ashby C. Mental state examinations by psychiatric trainees in a community NHS trust. Psychiatric Bull. 2000;24:109–110.

- 27. Dinniss S., Dawe J., Cooper M. Psychiatric admission booking: audit of the impact of a standardised admission form. Psychiatric Bull. 2006;30:334–336.

- 28. Rucker J., Newman S., Gray J., Gunasinghe C., Broadbent M., Brittain P., Baggaley M., Denis M., Turp J., Stewart R., Lovestone S., Schumann G., Farmer A., McGuffin P. OPCRIT+: an electronic system for psychiatric diagnosis and data collection in clinical and research settings. Br. J. Psychiatry. 2011;199:151–155. doi: 10.1192/bjp.bp.110.082925.

- 29. McGuffin P., Farmer A., Harvey I. A polydiagnostic application of operational criteria in studies of psychotic illness. Development and reliability of the OPCRIT system. Arch. Gen. Psychiatry. 1991;48:764–770. doi: 10.1001/archpsyc.1991.01810320088015.

- 30. Brittain P.J., Stahl D., Rucker J., Kawadler J., Schumann G. A review of the reliability and validity of OPCRIT in relation to its use for the routine clinical assessment of mental health patients. Int. J. Methods Psychiatric Res. 2013;22:110–137. doi: 10.1002/mpr.1382.

- 31. Stewart R., Soremekun M., Perera G., Broadbent M., Callard F., Denis M., Hotopf M., Thornicroft G., Lovestone S. The South London and Maudsley NHS Foundation Trust Biomedical Research Centre (SLAM BRC) case register: development and descriptive data. BMC Psychiatry. 2009;9:51. doi: 10.1186/1471-244X-9-51.

- 32. Chinn S. Statistics in respiratory medicine. 2. Repeatability and method comparison. Thorax. 1991;46:454–456. doi: 10.1136/thx.46.6.454.