Abstract

Head-mounted video cameras (with and without an eye camera to track gaze direction) are being increasingly used to study infants’ and young children’s visual environments and provide new and often unexpected insights about the visual world from a child’s point of view. The challenge in using head cameras is principally conceptual and concerns the match between what these cameras measure and the research question. Head cameras record the scene in front of faces and thus answer questions about those head-centered scenes. In this “tools of the trade” article, we consider the unique contributions provided by head-centered video, the limitations and open questions that remain for head-camera methods, and the practical issues of placing head-cameras on infants and analyzing the generated video.

Pre-crawlers, crawlers, and walkers have different visual experiences of objects, of space, and of social partners (Adolph, Tamis-LeMonda, Ishak, Karasik & Lobo, 2008; Bertenthal & Campos, 1990; Kretch, Franchak, Brothers & Adolph, 2012; Soska & Adolph, 2014). Because the body’s morphology and behavior change dramatically and systematically in early development, there are concomitant developmental changes in visual environments, changes that are likely to play an explanatory role in development across many domains (see Smith, 2013; Byrge, Sporns & Smith, 2014). However, we are at the earliest stages of understanding the specific properties of children’s environments and how they change with development. This paper is about how head cameras, by capturing a child-centered perspective on the visual world, may contribute to an understanding of the role of developmentally changing visual environments in developmental process.

The central challenge in using head cameras to capture the “child’s view” is conceptual and concerns the relevant scales at which environments may be measured. The conceptual problem derives from the fact that eyes and heads typically move together but do not always move together (see Schmitow, Sternberg, Billard & von Hofsten, 2013). Because heads and eyes typically move together, there has been considerable interest in whether head cameras might provide usable data for studying looking behavior and visual attention; however, because heads and eyes do not always move together, there are also limits to what can be inferred from head-camera data alone (Aslin, 2008; 2012; Schmitow, Sternberg, Billard & von Hofsten, 2013). In the first section, we set the background by considering this larger conceptual issue. We then consider the unique role of head cameras in capturing visual scenes linked to the wearer’s bodily posture and location. Next, we turn to open and theoretically important questions concerning heads, eyes, and their alignment that are also relevant to assessing the limits and potential contributions of head cameras. Finally, we consider the practical issues in using head cameras.

Before proceeding, it is helpful to make explicit the relation between head cameras and head-mounted eye trackers as measuring devices. Head-mounted eye trackers are simply head-mounted cameras with an added camera directed at the eye to capture gaze direction. Algorithms are then used to estimate pupil orientation and corneal reflections from the eye camera and to project that information onto the head-camera view of the scene. There are many complexities in this step (see Aslin, 2012; Holmqvist et al., 2011; Nyström & Holmqvist, 2010; Wass, Smith & Johnson, 2012). Further, although the psychological significance of fixations has been studied in adults (e.g., Nuthmann, Smith, Engbert & Henderson, 2010), little is known about the meaning of the frequencies and durations of infant and toddler fixations, which are not adult-like (see Wass et al., 2012). We do not consider these issues but instead focus on the unique contributions provided by the head-mounted camera, whether used alone or as part of a head-mounted eye-tracking system. But keep in mind that, with the one exception of knowing the momentary direction of eye gaze, every contribution and every limitation concerning the video recorded from a head camera applies both to head cameras used alone and to head cameras used as part of a head-mounted eye-tracking system.
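The projection step can be illustrated with one common calibration scheme: while the wearer looks at a handful of known targets, a low-order polynomial is fit from eye-camera features to head-camera pixel coordinates. The sketch below assumes a second-order polynomial, one 2-D pupil-minus-corneal-reflection feature per sample, and at least six calibration targets. The function names are hypothetical, and commercial eye-tracking systems may use quite different models.

```python
import numpy as np


def design_matrix(px, py):
    """Second-order polynomial terms of eye-camera feature coordinates."""
    px = np.asarray(px, dtype=float)
    py = np.asarray(py, dtype=float)
    return np.column_stack([np.ones_like(px), px, py, px * py, px**2, py**2])


def fit_calibration(eye_xy, scene_xy):
    """Least-squares fit mapping eye-camera features to head-camera pixels.

    eye_xy:   (n, 2) pupil-minus-corneal-reflection vectors recorded while the
              wearer looks at n known calibration targets (assumed input).
    scene_xy: (n, 2) pixel positions of those targets in the head-camera image.
    Returns a (6, 2) coefficient matrix, one column per image axis.
    """
    eye_xy = np.asarray(eye_xy, dtype=float)
    A = design_matrix(eye_xy[:, 0], eye_xy[:, 1])
    coeffs, *_ = np.linalg.lstsq(A, np.asarray(scene_xy, dtype=float), rcond=None)
    return coeffs


def project_gaze(coeffs, eye_xy):
    """Project new eye-camera samples into head-camera pixel coordinates."""
    eye_xy = np.asarray(eye_xy, dtype=float)
    return design_matrix(eye_xy[:, 0], eye_xy[:, 1]) @ coeffs
```

With a 3-by-3 grid of calibration targets there are nine equations for six coefficients per image axis, so the least-squares fit also averages over some measurement noise.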

Three views on development

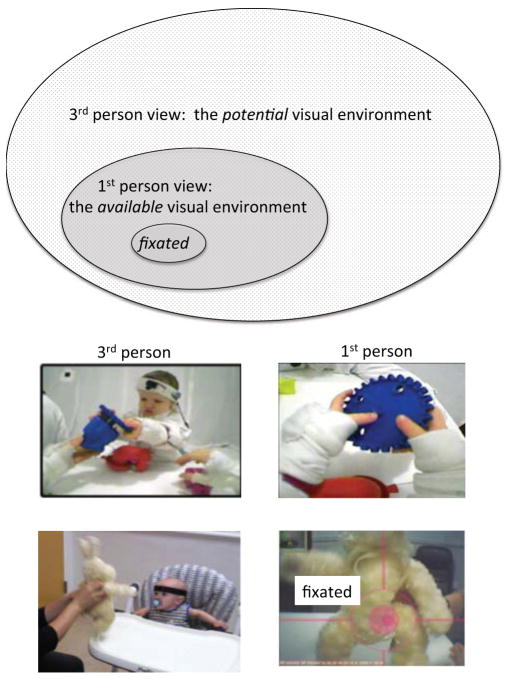

Figure 1 shows the spatial scales of three perspectives on the visual environment: a third-person view, a first-person view, and fixations within the first-person view. Long before the invention of small head cameras or eye trackers, developmental researchers put video cameras on tripods and recorded third-person (observer) views of children’s environments. Because much of this broad scene may be out of the child’s view at any moment, the room-size observer view may be considered a measure of the child’s potential environment. Coded properties of these third-person views have repeatedly been shown to predict developmental outcomes in many domains (e.g., Cartmill et al., 2013; Rodriguez & Tamis-LeMonda, 2011). However, cameras on tripods capture the same view regardless of the child’s age and actions. For example, the parent’s face, the ceiling fan, and small spots of dirt, as well as the toys on the rug, may all be part of a recorded 3rd person view and thus all within the potential environment of the studied child. However, the overhead fan is more visually available to a 3-month-old infant who is often in an infant seat on the table than it is to an 8-month-old who is often sitting or crawling on the floor. Likewise, the crawling 8-month-old has more visual access to the dirt spots on the rug than does the 3-month-old.

Figure 1.

Three spatial scales for measuring the visual environment: the 3rd person view of the visual environment that could potentially be seen by the child; the 1st person view of the available visual environment, which is directly linked to the child’s bodily location and posture; and the fixated elements of the 1st person view.

Head cameras replace the tripod with the child’s own body and measure the available visual environment, the scene that is in front of the wearer’s face. This view varies as a function of the child’s location, posture, and activity. The evidence from head-camera studies to date indicates that the composition and statistical properties of these child views change considerably over the first two years of life. For example, for very young infants, the in-front-of-face scene is often full of other people’s faces, whereas the in-front-of-face scenes for older infants contain many more views of hands on objects (Frank et al., 2012; Sugden, Mohamed-Ali & Moulson, 2013; Jayaraman, Fausey & Smith, 2013). One study (Kretch, Franchak, Brothers & Adolph, 2012) compared the views of crawling and walking infants: while crawling, infants had limited views of their social partners’ faces and of potential goal objects. When infants were crawling, the head-camera images showed the floor, and infants had to stop crawling and sit up to look at their social partners or the goal object. The head-camera images from walking infants, in contrast, showed continuous views of social partners and goal objects. In brief, the unique contribution of the head camera derives from the fact that it captures the region of the visual environment directly in front of the child, a moving region that changes in perspective, depth of field, and contents as the child’s body, posture, and activities change from moment to moment and over developmental time.

Eye-trackers capture fixations within the recorded first person view. By adding the measure of eye-gaze direction to the head-mounted camera, the researcher increases the spatial and temporal precision of the measured visual environment to determine just where in the head-camera-captured scene the perceiver directed gaze. Studies using head-mounted eye tracking systems have yielded new insights into how infants and children use visual cues to reach for and grasp objects (Corbetta, Guan & Williams, 2012), how they search for goal objects while moving in large physically complex spaces (Franchak & Adolph, 2012), how they coordinate head movements and eye-gaze (Schmitow et al, 2013), and how they coordinate visual attention with a social partner (Yu & Smith, 2013).

All three of these perspectives on the visual environment – the potential information in the 3rd person view, the available information in the first person view, and the fixated information within the first person view – are relevant to understanding the visual environments of developing children. But they provide different information that may be suited to different questions about the visual environment.

Scenes

The unique contribution of the head camera is that it measures scenes: what wearers have the opportunity to see. Researchers need these child-centered views in part because we, from our adult perspectives, do not have good intuitions about how the world looks to infants and toddlers, and because these scenes may differ considerably from those available to adults. For example, Smith, Yu and Pereira (2011; see also Yoshida & Smith, 2008) and Yu and Smith (2012) recorded head-camera videos from parents and toddlers as they played together with objects. The toddler view of a scene often contained a single object that was large and dominating (see Figure 1). In contrast, the parent view of a scene was broader and encompassed all the toys in play. In another study, Yurovsky, Smith and Yu (2013) presented adults with scenes of parents naming objects for their toddlers. A beep replaced the name, and the adult’s task was to guess, given the video clip, which object was named. Adults were much better able to predict the named object from a series of child views than from a series of observer views, a result indicating that child views contain unique information not available from other views.

We also need to measure these scenes for a well-founded account of visual development. In a recent review of gaps in developmental vision science, Braddick and Atkinson (2011) called for a description of the statistical structure of child-experienced scenes. They noted the considerable progress that has been made by studying the statistics of natural scenes (from third-person-perspective photographs of the physical world) and how properties of the mature visual system appear to be adaptations to the statistical regularities in those scenes (see Simoncelli, 2003). The developing visual system does not have access to all the kinds of scenes used to study natural statistics in adult vision. Instead, the visual scenes encountered by developing infants are more selective and are ordered in systematic ways across development. By recording the scenes in front of developing children’s faces, head cameras provide a direct way to collect the developmentally appropriate scenes needed to determine their statistical properties. Although statistical analyses of the properties that characterize a large corpus of developmentally indexed head-camera scenes are just beginning (Jayaraman, Fausey & Smith, 2013), this would seem to be a critical step towards understanding the role of visual environments in visual and cognitive development. The value of a developmental study of the natural statistics of scenes is supported by several recent head-camera studies that have shown direct links between the contents of head-camera images and independent measures of performance in the domains of causality and agency (Cicchino, Aslin & Rakison, 2011), object name learning (Yu & Smith, 2012; Pereira, Yu & Smith, 2013; Yurovsky, Smith & Yu, 2013), and visual object recognition (James et al., 2013). These studies provide direct evidence of the validity of head-camera images as measures of developmentally relevant properties of visual environments.
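As an illustration of the kind of statistic at issue, one classic property of natural-scene photographs is that their amplitude spectra fall off roughly as 1/f, that is, with a log-log slope near -1. The sketch below estimates that slope for a single grayscale frame. It assumes frames have already been decoded and converted to gray, uses arbitrary binning choices, and is not an analysis reported in the studies cited here.

```python
import numpy as np


def amplitude_spectrum_slope(image, n_bins=30):
    """Estimate the log-log slope of the radially averaged amplitude spectrum.

    image: 2-D grayscale array, e.g. one head-camera frame converted to gray.
    Returns the slope of log(amplitude) against log(spatial frequency).
    """
    img = np.asarray(image, dtype=float)
    img = img - img.mean()  # remove the DC component
    amp = np.abs(np.fft.fftshift(np.fft.fft2(img)))

    # Spatial frequency (cycles/pixel) of every FFT coefficient, shifted to match amp.
    h, w = img.shape
    fy = np.fft.fftshift(np.fft.fftfreq(h))
    fx = np.fft.fftshift(np.fft.fftfreq(w))
    fy_grid, fx_grid = np.meshgrid(fy, fx, indexing="ij")
    radius = np.hypot(fy_grid, fx_grid)

    # Log-spaced frequency bins between a low cutoff and the Nyquist frequency.
    edges = np.logspace(np.log10(2.0 / min(h, w)), np.log10(0.5), n_bins + 1)
    log_f, log_a = [], []
    for lo, hi in zip(edges[:-1], edges[1:]):
        in_bin = (radius >= lo) & (radius < hi)
        if in_bin.any():
            log_f.append(np.log(radius[in_bin].mean()))
            log_a.append(np.log(amp[in_bin].mean()))

    slope, _intercept = np.polyfit(log_f, log_a, 1)
    return float(slope)
```

Applied frame by frame to head-camera video from infants of different ages, such a measure could be compared across developmental groups. Windowing each frame before the FFT would reduce edge artifacts, which this sketch ignores.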

Scenes versus Fixations

Infants and adults typically turn heads and eyes in the same direction to attend to a visual event (e.g., Bloch & Carchon, 1992; Daniel & Lee, 1990; Taylor, Hayhoe, Land & Ballard, 2011; von Hofsten, Vishton, Spelke, Feng, & Rosander, 1998; Yoshida & Smith, 2008). The likelihood that head and eyes move together may be particularly high in young children (Nakagawa & Sukigara, 2013; Murray et al., 2007). Eyes typically lead infant heads by just fractions of a second (Schmitow et al., 2013; Yoshida & Smith, 2008). These facts foster the idea that head cameras by themselves might work as measures of attention and looking behavior (Aslin, 2008; Schmitow et al., 2013). The problem is that although heads and eyes tend to move in the same direction, head movements undershoot eye movements at both horizontal and vertical extremes. Schmitow et al. (2013) measured eye and head movements in 6- and 12-month-olds. Head movements were always smaller than eye movements. The undershoot was less than 5° when the target was less than 30° from the body-defined center, but was over 10° when the target was laterally extreme (80°). In light of the full pattern of their findings, Schmitow et al. concluded that head-mounted cameras are suitable for measuring horizontal looking direction in a task (such as toy play on a table) in which the main visual events deviate only moderately (±50°) from midline. In their view, in such geometrically constrained contexts, head movements are sufficiently correlated with eye movements to allow reasonable inferences. However, head movements are not sufficient in many contexts. Our view is that eye cameras, which are designed to measure gaze direction precisely, are the best method for measuring looking behavior. Head-mounted cameras capture the scenes in front of faces, and the research questions that head cameras can best answer are questions at the level of scenes, not gaze within scenes.

For scene-level questions about the contents of the visual environment, the relevant methodological limit is not where the eyes are but whether the head-camera captures the relevant scene information. This is a much more complicated question than it might first appear. We know that when viewers orient to a new target, head cameras miss those targets at the extremes. But orienting, that is, turning heads and eyes to a new target, is a momentary event and those “extreme” targets, if attended, do not remain in the periphery and outside of the central region of the head-camera image for long. As yet, there is no precise quantification of just how much or for how long information is missing from the head camera view given directional shifts in eyes and heads.
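One way such a quantification might be set up, given a synchronized eye camera or simulated eye-in-head angles, is to count the samples in which gaze falls outside the head camera's field of view and to measure how long each such episode lasts. The sketch below is a hypothetical illustration that handles only the horizontal dimension and treats the camera axis as the head's straight-ahead direction; real data would also need calibration of that axis.

```python
import numpy as np


def out_of_view_episodes(eye_in_head_deg, half_fov_deg, sample_rate_hz):
    """Summarize when gaze falls outside a head camera's horizontal field of view.

    eye_in_head_deg: (n,) horizontal eye-in-head angles in degrees, where 0 is
                     the camera's optical axis (assumed input).
    half_fov_deg:    half of the camera's horizontal field of view.
    sample_rate_hz:  sampling rate of the angle series.
    Returns (proportion of samples out of view, list of episode durations in s).
    """
    outside = np.abs(np.asarray(eye_in_head_deg, dtype=float)) > half_fov_deg
    # Pad with False so every run of out-of-view samples has a start and an end.
    padded = np.concatenate([[False], outside, [False]]).astype(int)
    changes = np.diff(padded)
    starts = np.flatnonzero(changes == 1)
    ends = np.flatnonzero(changes == -1)
    durations = (ends - starts) / sample_rate_hz
    return float(outside.mean()), durations.tolist()
```

Because eye-in-head angle equals gaze direction minus head direction, the head undershoot reported by Schmitow et al. (2013) is exactly what this angle series would capture during orienting.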

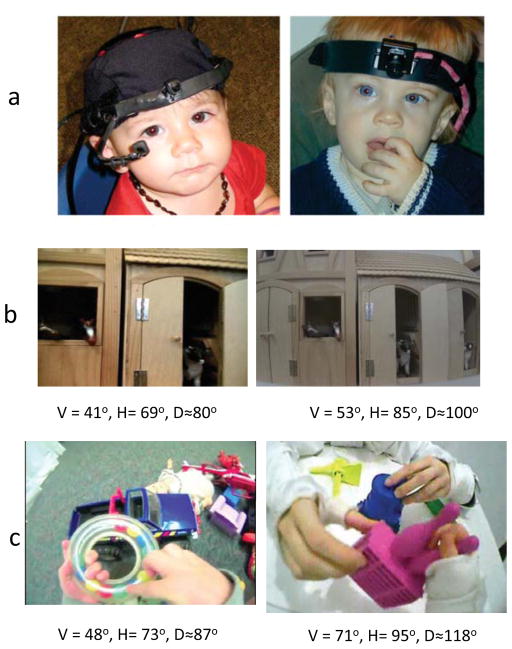

We do know that head cameras systematically miss available visual information because the lenses on current head cameras are not as broad as the visual field. Visual fields are classically measured in terms of shifts in eye gaze to stimulus onsets in the periphery from a fixation at center. The evidence suggests that infants detect onsets in the periphery up to 90° from center and, by 16 months, up to 170° horizontally and vertically (Cummings et al., 1988; Tabuchi et al., 2003). Head cameras (with fields of view ranging from 60° to 100° diagonally, as shown in Figure 2) do not capture the full visual field so defined. Again, the psychological relevance of the missed information is not clear because the effective visual field depends on the task (de Schonen, McKenzie, Maury, & Bresson, 1978; Ruff & Rothbart, 1996). In particular, the size of the effective visual field for an infant to detect a stimulus onset in the periphery will not be the same as that for discriminating objects, nor the same in an empty field as in a crowded one (Farzin, Rivera & Whitney, 2010; Whitney & Levi, 2011), nor the same when the perceiver is moving in 3-dimensional space versus just watching events on a screen (Foulsham, Walker & Kingstone, 2011), nor when an attended object is held versus not held (Gozli, West, & Pratt, 2012). The developmental study of effective visual field sizes for different kinds of visual tasks is critical to understanding the utility and limitations of head-mounted cameras; it is also critical to understanding the development of visual processing. In sum, head cameras are imprecise in the timing of transitions between scenes and miss information at the edges of the scene; nonetheless, by measuring the scenes directly in front of infant faces, head cameras may capture the most important segment of the available information, allowing researchers to study how the properties of visual scenes change with development and with activities.

Figure 2.

Panel a shows head and eye cameras on a hat (left) and a head camera on a band (right). The four panels in b and c show images from four different cameras with different vertical (V) and horizontal (H) fields of view (and the diagonal, D, measure of field of view). The two views in b were taken with each camera placed on a tripod 14 inches in front of a toy barn (a reachable distance for a toddler). The two views in c were taken while the head cameras were being worn by toddlers during toy play.
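Figure 2 reports vertical, horizontal, and diagonal fields of view. For an ideal rectilinear (distortion-free) lens these are related through the tangents of the half-angles, and the same geometry determines where an object at a given angle from the camera axis lands in the image. The sketch below assumes a 4:3 sensor and no lens distortion; wide-angle head cameras often depart from this pinhole model, so its numbers are approximations only.

```python
import math


def hv_from_diagonal(diag_fov_deg, aspect_w=4, aspect_h=3):
    """Horizontal and vertical FOV (degrees) implied by a diagonal FOV,
    assuming a rectilinear lens and the given sensor aspect ratio."""
    half_d = math.tan(math.radians(diag_fov_deg) / 2)
    diag = math.hypot(aspect_w, aspect_h)
    h_fov = 2 * math.degrees(math.atan(half_d * aspect_w / diag))
    v_fov = 2 * math.degrees(math.atan(half_d * aspect_h / diag))
    return h_fov, v_fov


def pixel_offset(angle_deg, h_fov_deg, width_px):
    """Horizontal pixel offset from the image center of a point lying
    angle_deg off the camera axis, or None if it falls outside the image."""
    if abs(angle_deg) >= 90:
        return None  # behind or beside the camera: never imaged
    focal_px = (width_px / 2) / math.tan(math.radians(h_fov_deg) / 2)
    x = focal_px * math.tan(math.radians(angle_deg))
    return x if abs(x) <= width_px / 2 else None
```

For a 100° diagonal lens on a 4:3 sensor, `hv_from_diagonal(100)` gives roughly 87° horizontally and 71° vertically, well short of the 170° visual field reported for 16-month-olds.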

Aligned Heads and Eyes

One can have most confidence in the scenes captured by head cameras when the heads and eyes are aligned. Critically, multiple lines of evidence also suggest that aligned heads and eyes are relevant to the effective attentional field. This idea runs contrary to traditional approaches that focus on eye gaze alone and equate gaze direction and gaze duration with attention (Fantz, 1964). However, by both behavioral and neural measures, attention and looking are not the same (see Johnson, Posner & Rothbart, 1991; Robertson, Watamura & Wilbourn, 2012; Smith & Yu, 2013). Further, studies of adults indicate that aligned heads and eyes are better for visual processing than misaligned heads and eyes (e.g., Einhäuser et al., 2007; Thaler & Todd, 2009; Jovancevic & Hayhoe, 2009). If perceivers typically align their eyes and heads, and if visual processing is optimal when those heads and eyes are aligned, then head cameras, with their head-centered view, may provide a measure of optimal views for attention and learning.

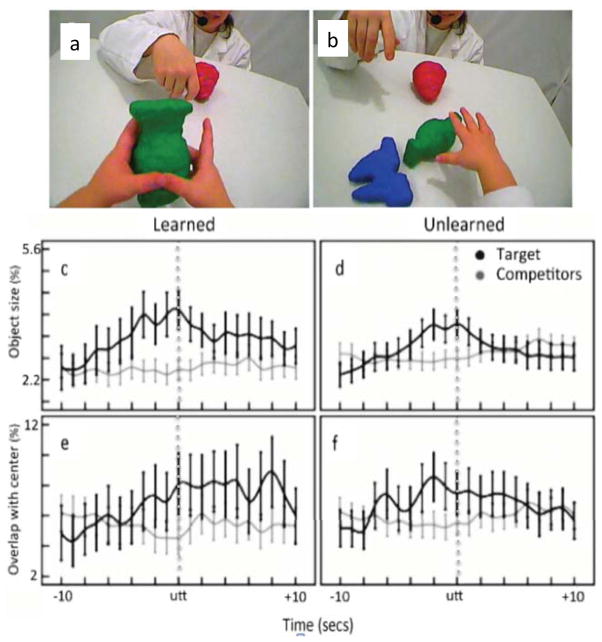

Consistent with this idea is evidence from research using third-person camera views to study infant visual attention during object play (e.g., Kannass & Oakes, 2008; Ruff & Capozzoli, 2003). These studies suggest that sustained attention is associated with minimal head movements and objects at midline, a posture consistent with aligned heads and eyes (Ruff & Capozzoli, 2003). If attention is optimal when heads and eyes are aligned and the attended object is at the child’s midline, then head-camera images in which a target object is centered should be indicative of optimal attention. Recent findings from head-camera studies support this prediction (Yu & Smith, 2012; Pereira, Smith & Yu, 2012). In these studies, parents named objects as infants played with them. Subsequently, infants were tested to determine whether they had learned the names. The head-mounted camera images were analyzed to determine the properties of naming events that did and did not lead to learning. As shown in Figure 3, for learned object names, the named object was bigger in image size and more centered in the head-camera image than competitor objects. Moreover, for learned object names, the proximity and centering of the object extended for several seconds before and after the parent uttered the name. These results provide direct evidence for a role of joint head and eye direction in visual processing; they also illustrate how head cameras can offer insights beyond the contents of scenes, into the importance of the stability of those views.

Figure 3.

Results from head-camera studies linking visual size and centering of a named object to learning. Panels a and b show example head-camera images during two naming moments when later testing showed the child had learned the name (a) and had not learned the name (b). Panels c and d show the image size (% pixels) of the named target object (black) and the mean of the other in-view, competitor objects (gray) for the 20-second window around the naming utterance (utt) for naming moments that led to the learning of the object name (c) or did not (d). Panels e and f show the overlap of the image of the named target (black) and the mean overlap of the images of the competitor objects (gray) with the center of the head-camera image for the 20-s window around the naming utterance (utt) for naming moments that led to the learning of the object name (e) or did not (f). See Yu and Smith (2012) and Pereira, Smith, and Yu (2013) for technical details and related graphs. Error bars represent standard errors.
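The two measures plotted in Figure 3, image size (% pixels) and overlap with the image center, can be approximated from per-frame bounding boxes. The sketch below is a simplification and not the published procedure (see Yu and Smith, 2012, for that): each object is reduced to an axis-aligned box, "overlap" is defined here as the fraction of the box lying inside the center, and the central region is assumed to be a rectangle spanning 60% of the frame's width and height (36% of its pixels). Both the definition and the `center_frac` parameter are hypothetical choices.

```python
def size_and_centering(box, img_w, img_h, center_frac=0.6):
    """Image-size and center-overlap measures for one object in one frame.

    box:         (x0, y0, x1, y1) bounding box in pixels, e.g. from annotation.
    center_frac: width and height of the central region as a fraction of the
                 frame; 0.6 x 0.6 covers 36% of the pixels (assumed choice).
    Returns (% of frame pixels covered by the box,
             fraction of the box lying inside the central region).
    """
    x0, y0, x1, y1 = box
    area = max(0.0, x1 - x0) * max(0.0, y1 - y0)

    # Central region: a centered rectangle of center_frac x center_frac.
    cx0 = img_w * (1 - center_frac) / 2
    cy0 = img_h * (1 - center_frac) / 2
    cx1, cy1 = img_w - cx0, img_h - cy0

    # Width and height of the intersection between box and central region.
    ix = max(0.0, min(x1, cx1) - max(x0, cx0))
    iy = max(0.0, min(y1, cy1) - max(y0, cy0))

    pct_pixels = 100.0 * area / (img_w * img_h)
    center_overlap = (ix * iy) / area if area > 0 else 0.0
    return pct_pixels, center_overlap
```

Computed for the named object and for each competitor in every frame of a window around a naming utterance, these two numbers yield time courses of the kind shown in panels c through f.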

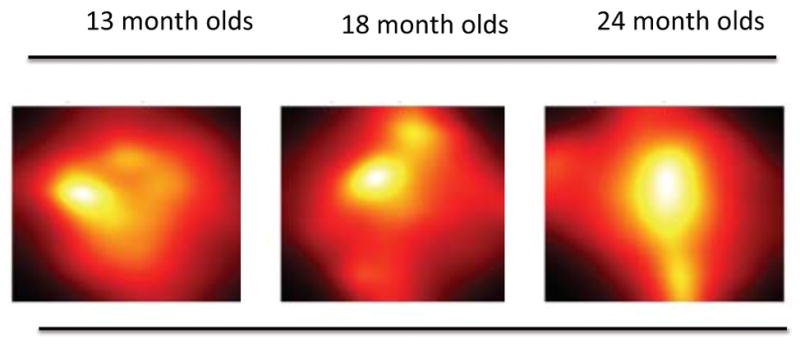

In light of these issues, we have begun using head-mounted eye trackers to study how 13- to 24-month-old infants distribute eye gaze within the head-camera image (using the 118° diagonal head cameras shown in Figure 2). The “heat maps” in Figure 4 show the gaze distribution within the head-camera image for infants during a 6-minute session in which they played with toys on a table. The infants were free to move, and they moved their heads a lot: more than 63% (SD = 10.9) of the time, head position was changing at a speed greater than 2 inches per second, and more than 71% (SD = 11.8) of the time, head rotation was changing at a speed of more than 30° per second. Nonetheless, as is evident in the heat maps in Figure 4, eye gaze is concentrated in one region of the head-camera images, with over 80% of gaze falling within the center of the image (a region covering 36% of the pixels). Although gaze distributions in broader contexts need to be measured, these data suggest that measures of the statistical properties of head-camera images may be sufficient to capture developmentally important contents. Comparisons of gaze distributions in adults wearing head-mounted eye trackers while acting in the world versus watching the same scenes on a screen also show that adults center their fixations when acting in a 3-dimensional world; in contrast, when passively watching the same video on a screen, they distribute their eye gaze more widely (Foulsham, Walker & Kingstone, 2011). This is a reminder that what we know about gaze distributions from eye-tracking studies of infants looking at small screens while sitting in laboratories may not apply to gaze distributions when those same infants are acting in a 3-dimensional world.

Figure 4.

Gaze density as measured by an eye camera (black, low; white, high) within the head-camera images during a 6-minute toy play period for 13- (n = 18), 18- (n = 18), and 24-month-olds (n = 16).
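The summary numbers above (the share of gaze inside a central region covering 36% of the pixels, a gaze heat map, and the share of time the head moved faster than a threshold) can each be computed in a few lines. The sketch assumes gaze has already been projected into head-camera pixel coordinates and that head position or rotation is available as a time-stamped series. Treating a vector of rotation angles with a Euclidean norm is a crude approximation of angular speed. All function names are hypothetical.

```python
import numpy as np


def gaze_in_center(gaze_xy, img_w, img_h, center_frac=0.6):
    """Fraction of gaze samples inside a centered region of the image.
    center_frac=0.6 gives a region covering 0.6 * 0.6 = 36% of the pixels."""
    g = np.asarray(gaze_xy, dtype=float)
    mx = img_w * (1 - center_frac) / 2
    my = img_h * (1 - center_frac) / 2
    inside = ((g[:, 0] >= mx) & (g[:, 0] <= img_w - mx) &
              (g[:, 1] >= my) & (g[:, 1] <= img_h - my))
    return float(inside.mean())


def gaze_heat_map(gaze_xy, img_w, img_h, bins=(32, 24)):
    """Normalized 2-D histogram of gaze positions (rows = y, columns = x).
    bins is (number of x bins, number of y bins)."""
    g = np.asarray(gaze_xy, dtype=float)
    counts, _, _ = np.histogram2d(g[:, 1], g[:, 0], bins=(bins[1], bins[0]),
                                  range=[[0, img_h], [0, img_w]])
    return counts / counts.sum()


def proportion_fast(samples, times_s, threshold):
    """Proportion of inter-sample intervals whose speed exceeds threshold.
    samples: (n, d) head positions (e.g. inches) or rotation angles (degrees)."""
    s = np.asarray(samples, dtype=float)
    dt = np.diff(np.asarray(times_s, dtype=float))
    speed = np.linalg.norm(np.diff(s, axis=0), axis=1) / dt
    return float((speed > threshold).mean())
```

Averaging `gaze_heat_map` outputs over the infants in an age group would produce density maps analogous to those in Figure 4, although the bin sizes and any smoothing used there are not specified in the text.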

Practical matters

Head cameras are not expensive (less than $500 for everything, excluding computers and servers for storage). A variety of small video cameras with different properties are commercially available (see Sugden et al., 2013; Smith et al., 2009; Frank et al., 2009). The critical issues are field of view (in general, the larger the better; see Figure 2), distortion (more likely for wide-angle views), video storage (digital storage cards or a cable to a computer are preferred, as Wi-Fi and Bluetooth communication often fails), weight, and the ability to mount the camera in a way that infants tolerate.

Our success rate in placing head cameras on infants is about 75%. Success is very high with infants under a year and more problematic at 15–18 months. Placement is best done in one move; hesitation and multiple attempts increase the likelihood that the infant will refuse. However, experimenters who practice placing hats and devices on toddlers, and parents (who have lots of experience putting hats on babies), can readily do this. Depending on the purpose of the experiment, we mount head cameras with and without eye trackers (as shown in Figure 2) on headbands or on hats. The critical issues in choosing how to mount the gear are: (1) the ability to place the system on the child in a single move; (2) placement low enough on the forehead for a front-of-face view; and (3) no movement once placed. This last criterion is critical not just for the stability of the captured images: if the headwear jiggles, toddlers notice and pull it off. We have found that anything that draws attention to the gear (including exploring the equipment or talking about it before placing it on the child) increases refusals. Placement is done in three steps: (1) We desensitize the infant to hand actions near the head by asking the parent to lightly touch (or stroke) the child’s head and hair several times. The experimenter who will be the “placer” does the same. (2) In the laboratory, we use three experimenters: one to place the head camera, one to distract the child, and one to monitor the head-camera view. The placer puts on the head camera when the child is distracted with a push-button toy so that the child’s hands are busy. The distracting experimenter or parent helps at this stage by gently pushing the child’s hands toward the engaging toy so that they do not go to the head. (3) When the child is clearly engaged with the toy, the placer tightens and adjusts the head camera.
We adjust the camera so that when the infant’s hands make contact with an object, the object is centered in the head-camera field. For recording natural environments in the home, we fit a hat and camera to the individual infant, and parents then put the hat on the child at home for recording.

Data annotation

Head-mounted cameras, like traditional room cameras, yield large amounts of data that must be coded, a time-consuming task in which developmentalists are already expert. However, there are remarkable advances in computer-assisted hand-coding systems, as well as more automatic analysis tools, that may help with this task. We provide some leads here:

The Datavyu Coding System (originally OpenSHAPA) is a free, open-source, event-based coding system that supports fine-grained dynamic and sequential hand coding of data, and data analysis, for very large data sets (see Sanderson et al., 1994; Adolph et al., 2012; http://datavyu.org/).

There are a number of algorithm-assisted approaches to coding the contents of head cameras, including the coding of faces (Frank, Vul & Johnson, 2009; Frank, 2012). One useful system is VATIC (Video Annotation Tool from Irvine, California; http://mit.edu/vondrick/vatic/), a free, online, interactive video annotation tool for putting bounding boxes around objects to measure their size and location (Vondrick, Patterson & Ramanan, 2012). Advances in machine learning also make it possible to train automatic coders for specific classes of objects and their locations in images (Fergus, Fei-Fei, Perona & Zisserman, 2010; Smith, Yu & Pereira, 2011).
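Tools of this kind save effort by asking annotators to draw boxes only on some keyframes and filling in the frames in between. The simplest version of that idea is linear interpolation, sketched below under the assumption that boxes are stored as `(x0, y0, x1, y1)` tuples keyed by frame index. This is a hypothetical helper, not VATIC's own code, and VATIC can also use more sophisticated tracking between keyframes.

```python
def interpolate_boxes(keyframes):
    """Linearly interpolate bounding boxes between annotated keyframes.

    keyframes: dict {frame_index: (x0, y0, x1, y1)} drawn by a human annotator.
    Returns a dict with a box for every frame from the first to the last keyframe.
    """
    frames = sorted(keyframes)
    boxes = {}
    for f0, f1 in zip(frames, frames[1:]):
        b0, b1 = keyframes[f0], keyframes[f1]
        for f in range(f0, f1):
            t = (f - f0) / (f1 - f0)  # 0 at f0, approaching 1 at f1
            boxes[f] = tuple(a + t * (b - a) for a, b in zip(b0, b1))
    boxes[frames[-1]] = tuple(keyframes[frames[-1]])
    return boxes
```

Linear interpolation works well when objects move smoothly between keyframes; with the rapid head movements typical of infant head-camera video, keyframes may need to be closely spaced.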

The Open Source Computer Vision library (OpenCV; http://opencv.org) offers a whole toolbox for visual and image processing, including measures of lower-level visual properties such as optical flow, motion vectors, and contrast. For relevant infant studies measuring optic flow patterns in head-camera images, see Burling, Yoshida and Nagai (2013) and Raudies, Gilmore, Kretch, Franchak and Adolph (2012).
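Dense optical flow is available in OpenCV (for example, `cv2.calcOpticalFlowFarneback`). Two even simpler low-level measures can be computed with NumPy alone, as sketched below: RMS contrast, defined here as the standard deviation of intensities scaled to [0, 1] (other definitions normalize by mean luminance), and mean absolute frame difference, a crude proxy for image motion that, unlike optical flow, carries no direction. The Rec. 601 luminance weights are one common choice, not a requirement.

```python
import numpy as np


def to_gray(frame_rgb):
    """Luminance from an RGB frame (0-255) using Rec. 601 weights."""
    f = np.asarray(frame_rgb, dtype=float)
    return 0.299 * f[..., 0] + 0.587 * f[..., 1] + 0.114 * f[..., 2]


def rms_contrast(gray):
    """RMS contrast: standard deviation of intensities scaled to [0, 1]."""
    return float((np.asarray(gray, dtype=float) / 255.0).std())


def motion_energy(prev_gray, next_gray):
    """Mean absolute difference between consecutive frames (0-255 scale)."""
    prev = np.asarray(prev_gray, dtype=float)
    nxt = np.asarray(next_gray, dtype=float)
    return float(np.abs(nxt - prev).mean())
```

In head-camera video, frame-difference measures mix the wearer's own head motion with motion in the scene; separating the two is one reason the cited studies turned to optic flow patterns.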

Finally, Itti, Koch and Niebur (1998) proposed a widely used procedure for creating salience maps from images (www.klab.caltech.edu/~harel/share/gbvs.php). Although their precise measures probably do not constitute a proper psychological description of stimulus salience for infants, the method provides a well-defined procedure through which to measure the attention-getting properties of head-camera images.
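The core ingredient of such models is center-surround contrast: a region is salient to the extent that it differs from its neighborhood. The sketch below computes a single-channel, single-scale version as the absolute difference between a fine and a coarse Gaussian blur of image intensity. It is a toy illustration only; the full Itti, Koch and Niebur model combines intensity, color, and orientation channels across multiple scales with a normalization step, none of which is reproduced here. The sigma values are arbitrary assumptions, and the image is assumed to be larger than the blur kernels.

```python
import numpy as np


def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian kernel truncated at 3 sigma."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x**2 / (2 * sigma**2))
    return k / k.sum()


def blur(img, sigma):
    """Separable Gaussian blur (rows, then columns) with zero padding."""
    k = gaussian_kernel(sigma)
    tmp = np.apply_along_axis(np.convolve, 1, img, k, mode="same")
    return np.apply_along_axis(np.convolve, 0, tmp, k, mode="same")


def center_surround_map(gray, center_sigma=2.0, surround_sigma=8.0):
    """|fine blur - coarse blur| of intensity, scaled so the maximum is 1."""
    g = np.asarray(gray, dtype=float)
    cs = np.abs(blur(g, center_sigma) - blur(g, surround_sigma))
    peak = cs.max()
    return cs / peak if peak > 0 else cs
```

Zero padding makes image borders look like strong edges when the border region is bright; a production implementation would pad by reflection instead.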

Summary

Head cameras measure the scene that is directly in front of the wearer. It seems highly likely that the statistical properties of these scenes play an important role in the development of the human visual system (Braddick & Atkinson, 2011). Moreover, vision is not just about eyes; because eyes are “head mounted,” the coordination of heads and eyes plays a role in sustained attention and in learning. The unique contribution of head cameras is that they capture the head-centered child’s view, one relevant view of the environment. However, there is still much we need to know to understand both the utility and limitations of this method. These open questions about limitations are also theoretically important questions about how heads, eyes, and bodies create visual environments.

Acknowledgments

We thank Sven Bambach for the gaze distribution data. Support was provided in part by NIH grant 5R21HD068475.

References

- Aslin RN. Headed in the right direction: A commentary on Yoshida and Smith. Infancy. 2008;13(3):275–278.

- Aslin RN. Infant eyes: A window on cognitive development. Infancy. 2012;17(1):126–140. doi: 10.1111/j.1532-7078.2011.00097.x.

- Adolph KE, Gilmore RO, Freeman C, Sanderson P, Millman D. Toward open behavioral science. Psychological Inquiry. 2012a;23(3):244–247. doi: 10.1080/1047840x.2012.705133.

- Adolph KE, Tamis-LeMonda CS, Ishak S, Karasik LB, Lobo SA. Locomotor experience and use of social information are posture specific. Developmental Psychology. 2008;44(6):1705. doi: 10.1037/a0013852.

- Bertenthal BI, Campos JJ. A systems approach to the organizing effects of self-produced locomotion during infancy. Advances in Infancy Research. 1990;6:1–60. Retrieved from psycnet.apa.org/psycinfo/1990-30563-001.

- Bloch H, Carchon I. On the onset of eye-head coordination in infants. Behavioural Brain Research. 1992;49(1):85–90. doi: 10.1016/s0166-4328(05)80197-4.

- Braddick O, Atkinson J. Development of human visual function. Vision Research. 2011;51(13):1588–1609. doi: 10.1016/j.visres.2011.02.018.

- Burling JM, Yoshida H, Nagai Y. The significance of social input, early motion experiences, and attentional selection. Proceedings of the 3rd IEEE International Conference on Development and Learning and on Epigenetic Robotics. 2013 Aug.

- Byrge L, Sporns O, Smith LB. Developmental process emerges from extended brain-body-behavior networks. Trends in Cognitive Sciences. 2014. doi: 10.1016/j.tics.2014.04.010. Under revision.

- Cartmill EA, Armstrong BF, Gleitman LR, Goldin-Meadow S, Medina TN, Trueswell JC. Quality of early parent input predicts child vocabulary 3 years later. Proceedings of the National Academy of Sciences. 2013;110(28):11278–11283. doi: 10.1073/pnas.1309518110.

- Cicchino JB, Aslin RN, Rakison DH. Correspondences between what infants see and know about causal and self-propelled motion. Cognition. 2011;118(2):171–192. doi: 10.1016/j.cognition.2010.11.005.

- Clearfield MW. Learning to walk changes infants’ social interactions. Infant Behavior and Development. 2011;34(1):15–25. doi: 10.1016/j.infbeh.2010.04.008.

- Corbetta D, Guan Y, Williams JL. Infant eye tracking in the context of goal-directed actions. Infancy. 2012;17(1):102–125. doi: 10.1111/j.1532-7078.2011.0093.x.

- Cummings MF, Van Hof-Van Duin J, Mayer DL, Hansen RM, Fulton AB. Visual fields of young children. Behavioural Brain Research. 1988;29(1):7–16. doi: 10.1016/0166-4328(88)90047-2.

- Daniel BM, Lee DN. Development of looking with head and eyes. Journal of Experimental Child Psychology. 1990;50(2):200–216. doi: 10.1016/0022-0965(90)90039-b.

- de Schonen S, McKenzie B, Maury L, Bresson F. Central and peripheral object distances as determinants of the effective visual field in early infancy. Perception. 1978;7(5):499–506. doi: 10.1068/p070499.

- Einhäuser W, Schumann F, Bardins S, Bartl K, Böning G, Schneider E, König P. Human eye-head co-ordination in natural exploration. Network: Computation in Neural Systems. 2007;18(3):267–297. doi: 10.1080/09548980701671094.

- Fantz RL. Visual experience in infants: Decreased attention to familiar patterns relative to novel ones. Science. 1964;146:668–670. doi: 10.1126/science.146.3644.668.

- Farzin F, Rivera SM, Whitney D. Spatial resolution of conscious visual perception in infants. Psychological Science. 2010;21(10):1502–1509. doi: 10.1177/0956797610382787.

- Fergus R, Fei-Fei L, Perona P, Zisserman A. Learning object categories from internet image searches. Proceedings of the IEEE. 2010;98(8):1453–1466.

- Franchak JM, Kretch KS, Soska KC, Adolph KE. Head-mounted eye tracking: a new method to describe infant looking. Child Development. 2011;82(6):1738–1750. doi: 10.1111/j.1467-8624.2011.01670.x.

- Franchak JM, Adolph KE. What infants know and what they do: Perceiving possibilities for walking through openings. Developmental Psychology. 2012;48(5):1254. doi: 10.1037/a0027530.

- Frank MC, Vul E, Saxe R. Measuring the development of social attention using free viewing. Infancy. 2012;17(4):355–375. doi: 10.1111/j.1532-7078.2011.00086.x.

- Frank MC, Vul E, Johnson SP. Development of infants’ attention to faces during the first year. Cognition. 2009;110(2):160–170. doi: 10.1016/j.cognition.2008.11.010.

- Frank MC. Measuring children’s visual access to social information using face detection. Proceedings of the 33rd Annual Meeting of the Cognitive Science Society. 2012.

- Foulsham T, Walker E, Kingstone A. The where, what and when of gaze allocation in the lab and the natural environment. Vision Research. 2011;51(17):1920–1931. doi: 10.1016/j.visres.2011.07.002.

- Holmqvist K, Nyström M, Andersson R, Dewhurst R, Jarodzka H, van de Weijer J. Eye tracking: A comprehensive guide to methods and measures. Oxford, UK: Oxford University Press; 2011. [Google Scholar]

- Itti L, Koch C, Niebur E. A model of saliency-based visual attention for rapid scene analysis. Pattern Analysis and Machine Intelligence, IEEE Transactions on. 1998;20(11):1254–1259. [Google Scholar]

- James KH, Jones SS, Smith LB, Swain SN. Young children’s self-generated object views and object recognition. Journal of Cognition and Development. 2013. doi: 10.1080/15248372.2012.749481.

- Jayaraman S, Fausey CM, Smith LB. Visual statistics of infants’ ordered experiences. Journal of Vision. 2013;13(9):735.

- Johnson MH, Posner MI, Rothbart MK. Components of visual orienting in early infancy: Contingency learning, anticipatory looking, and disengaging. Journal of Cognitive Neuroscience. 1991;3(4):335–344. doi: 10.1162/jocn.1991.3.4.335.

- Jovancevic-Misic J, Hayhoe M. Adaptive gaze control in natural environments. Journal of Neuroscience. 2009;29:6234–6238. doi: 10.1523/JNEUROSCI.5570-08.2009.

- Kannass KN, Oakes LM. The development of attention and its relations to language in infancy and toddlerhood. Journal of Cognition and Development. 2008;9(2):222–246.

- Karasik LB, Adolph KE, Tamis-LeMonda CS, Zuckerman AL. Carry on: Spontaneous object carrying in 13-month-old crawling and walking infants. Developmental Psychology. 2012;48(2):389–397. doi: 10.1037/a0026040.

- Kretch K, Franchak J, Brothers J, Adolph K. What infants see depends on locomotor posture. Journal of Vision. 2012;12(9):182.

- Lobo MA, Galloway JC. Postural and object-oriented experiences advance early reaching, object exploration, and means–end behavior. Child Development. 2008;79(6):1869–1890. doi: 10.1111/j.1467-8624.2008.01231.x.

- Murray K, Lillakas L, Weber R, Moore S, Irving E. Development of head movement propensity in 4–15 year old children in response to visual step stimuli. Experimental Brain Research. 2007;177(1):15–20. doi: 10.1007/s00221-006-0645-x.

- Nakagawa A, Sukigara M. Variable coordination of eye and head movements during the early development of attention: A longitudinal study of infants aged 12–36 months. Infant Behavior and Development. 2013;36(4):517–525. doi: 10.1016/j.infbeh.2013.04.002.

- Nuthmann A, Smith TJ, Engbert R, Henderson JM. CRISP: A computational model of fixation durations in scene viewing. Psychological Review. 2010;117(2):382–405. doi: 10.1037/a0018924.

- Nyström M, Holmqvist K. An adaptive algorithm for fixation, saccade, and glissade detection in eye tracking data. Behavior Research Methods. 2010;42(1):188–204. doi: 10.3758/BRM.42.1.188.

- Pereira AF, James KH, Jones SS, Smith LB. Early biases and developmental changes in self-generated object views. Journal of Vision. 2010;10(11):22. doi: 10.1167/10.11.22.

- Pereira AF, Smith LB, Yu C. A bottom-up view of toddler word learning. Psychonomic Bulletin & Review. 2013:1–8. doi: 10.3758/s13423-013-0466-4.

- Raudies F, Gilmore RO, Kretch KS, Franchak JM, Adolph KE. Understanding the development of motion processing by characterizing optic flow experienced by infants and their mothers. Proceedings of the 2012 IEEE International Conference on Development and Learning and Epigenetic Robotics (ICDL); 2012 Nov.

- Robertson SS, Johnson SL. Embodied infant attention. Developmental Science. 2009;12(2):297–304. doi: 10.1111/j.1467-7687.2008.00766.x.

- Robertson SS, Watamura SE, Wilbourn MP. Attentional dynamics of infant visual foraging. Proceedings of the National Academy of Sciences. 2012;109(28):11460–11464. doi: 10.1073/pnas.1203482109.

- Rodriguez ET, Tamis-LeMonda CS. Trajectories of the home learning environment across the first 5 years: Associations with children’s vocabulary and literacy skills at prekindergarten. Child Development. 2011;82(4):1058–1075. doi: 10.1111/j.1467-8624.2011.01614.x.

- Ruff HA, Capozzoli MC. Development of attention and distractibility in the first 4 years of life. Developmental Psychology. 2003;39(5):877. doi: 10.1037/0012-1649.39.5.877.

- Ruff HA, Rothbart MK. Attention in early development. New York: Oxford University Press; 1996.

- Sanderson PM, Scott JJP, Johnston T, Mainzer J, Watanabe LM, James JM. MacSHAPA and the enterprise of Exploratory Sequential Data Analysis (ESDA). International Journal of Human-Computer Studies. 1994;41:633–681. doi: 10.1006/ijhc.1994.1077.

- Schmitow C, Stenberg G, Billard A, von Hofsten C. Using a head-mounted camera to infer attention direction. International Journal of Behavioral Development. 2013;37(5):468–474.

- Simoncelli EP. Vision and the statistics of the visual environment. Current Opinion in Neurobiology. 2003;13(2):144–149. doi: 10.1016/s0959-4388(03)00047-3.

- Smith LB. It’s all connected: Pathways in visual object recognition and early noun learning. American Psychologist. 2013;68(8):618. doi: 10.1037/a0034185.

- Smith LB, Yu C. Visual attention is not enough: Individual differences in statistical word-referent learning in infants. Language Learning and Development. 2013;9(1):25–49. doi: 10.1080/15475441.2012.707104.

- Smith LB, Yu C, Pereira AF. Not your mother’s view: The dynamics of toddler visual experience. Developmental Science. 2011;14(1):9–17. doi: 10.1111/j.1467-7687.2009.00947.x.

- Soska KC, Adolph KE. Postural position constrains multimodal object exploration in infants. Infancy. 2014;19(2):138–161. doi: 10.1111/infa.12039.

- Soska KC, Adolph KE, Johnson SP. Systems in development: Motor skill acquisition facilitates three-dimensional object completion. Developmental Psychology. 2010;46(1):129. doi: 10.1037/a0014618.

- Sugden NA, Mohamed-Ali MI, Moulson MC. I spy with my little eye: Typical, daily exposure to faces documented from a first-person infant perspective. Developmental Psychobiology. 2013;56:249–261. doi: 10.1002/dev.21183.

- Tabuchi A, Maeda F, Haruishi K, Nakata D, Nishimura N. Visual field in young children. Neuro Ophthalmology. 2003;20(3):342–349.

- Tatler BW, Hayhoe MM, Land MF, Ballard DH. Eye guidance in natural vision: Reinterpreting salience. Journal of Vision. 2011;11(5):5. doi: 10.1167/11.5.5.

- Thaler L, Todd JT. The use of head/eye-centered, hand-centered and allocentric representations for visually guided hand movements and perceptual judgments. Neuropsychologia. 2009;47:1227–1244. doi: 10.1016/j.neuropsychologia.2008.12.039.

- von Hofsten C, Vishton P, Spelke ES, Feng Q, Rosander K. Predictive action in infancy: Tracking and reaching for moving objects. Cognition. 1998;67(3):255–285. doi: 10.1016/s0010-0277(98)00029-8.

- Vondrick C, Patterson D, Ramanan D. Efficiently scaling up crowdsourced video annotation. International Journal of Computer Vision. 2012:1–21. Retrieved from http://arxiv.org/abs/1212.2278.

- Wass SV, Smith TJ, Johnson MH. Parsing eye-tracking data of variable quality to provide accurate fixation duration estimates in infants and adults. Behavior Research Methods. 2012:1–22. doi: 10.3758/s13428-012-0245-6.

- Whitney D, Levi DM. Visual crowding: A fundamental limit on conscious perception and object recognition. Trends in Cognitive Sciences. 2011;15(4):160–168. doi: 10.1016/j.tics.2011.02.005.

- Yu C, Smith LB. Embodied attention and word learning by toddlers. Cognition. 2012;125:244–262. doi: 10.1016/j.cognition.2012.06.016.

- Yu C, Smith LB. Joint attention without gaze following: Human infants and their parents coordinate visual attention to objects through eye-hand coordination. PLoS One. 2013;8(11):e79659. doi: 10.1371/journal.pone.0079659.

- Yurovsky D, Smith LB, Yu C. Statistical word learning at scale: The baby’s view is better. Developmental Science. 2013;16:959–966. doi: 10.1111/desc.12036.