Abstract

Context

Researchers have identified high exposure to game conditions, low back dysfunction, and poor endurance of the core musculature as strong predictors for the occurrence of sprains and strains among collegiate football players.

Objective

To refine a previously developed injury-prediction model through analysis of 3 consecutive seasons of data.

Design

Cohort study.

Setting

National Collegiate Athletic Association Division I Football Championship Subdivision football program.

Patients or Other Participants

For 3 consecutive years, all 152 team members (age = 19.7 ± 1.5 years, height = 1.84 ± 0.08 m, mass = 101.08 ± 19.28 kg) presented for a mandatory physical examination on the day before initiation of preseason practice sessions.

Main Outcome Measure(s)

Associations between preseason measurements and the subsequent occurrence of a core or lower extremity sprain or strain were established for 256 player-seasons of data. We used receiver operating characteristic analysis to identify optimal cut points for dichotomous categorizations of cases as high risk or low risk. Both logistic regression and Cox regression analyses were used to identify a multivariable injury-prediction model with optimal discriminatory power.

Results

Exceptionally good discrimination between injured and uninjured cases was found for a 3-factor prediction model that included equal to or greater than 1 game as a starter, Oswestry Disability Index score equal to or greater than 4, and poor wall-sit–hold performance. The existence of at least 2 of the 3 risk factors demonstrated 56% sensitivity, 80% specificity, an odds ratio of 5.28 (90% confidence interval = 3.31, 8.44), and a hazard ratio of 2.97 (90% confidence interval = 2.14, 4.12).

Conclusions

High exposure to game conditions was the dominant injury risk factor for collegiate football players, but a surprisingly mild degree of low back dysfunction and poor core-muscle endurance appeared to be important modifiable risk factors that should be identified and addressed before participation.

Key Words: clinical decision making, primary injury prevention, low back pain

Key Points

- A 3-factor prediction model that includes 2 modifiable injury risk factors can be used to identify collegiate football players who might benefit from targeted risk-reduction interventions.
- A mild degree of low back dysfunction and a suboptimal level of core-muscle endurance appeared to be important injury risk factors that should be identified and addressed.
- High exposure to game conditions was a dominant injury risk factor.
- The combination of high exposure to game conditions with a potentially modifiable risk factor was associated with a substantially increased risk of core or lower extremity sprain or strain.

Injury prevention is mentioned in virtually every definition of sports medicine, but very little research evidence is available to support specific procedures for reduction of injury risk. A 4-step model to guide sports injury-prevention research and practice was introduced more than 20 years ago by van Mechelen et al.1 The model subsequently was modified to incorporate additional concepts,2,3 but very little progress has been made beyond the initial step of documenting injury incidences for various populations.4,5 Risk factors for some specific types of injury have been identified, but little information in the literature has supported specific screening procedures to identify individual athletes who possess elevated injury risk.6–8 The relative lack of evidence for the effectiveness of specific interventions for reducing injury incidence may be explained by the highly injury-specific and sport-specific nature of many risk factors9 and the cumulative effects, and possibly interactive effects, of multiple risk factors in creating injury susceptibility.3,10–13

Injury prevention is typically categorized as a clinical-practice domain that is distinct from injury rehabilitation, but some overlap exists. A previously sustained injury is a well-established risk factor for subsequent injury, which often may be attributable to suboptimal clinical management.14,15 Furthermore, intrinsic injury risk factors may affect the rate at which an athlete's functional capabilities are restored after an injury. An individual's capacity to tolerate the external loads imposed by sport-related activities largely depends on tissue stiffness,11 which is potentially modifiable through training-induced adaptations in neuromuscular function. Furthermore, injury-induced neural inhibition of muscle function can produce subtle and persistent performance deficiencies among highly active elite athletes.16 Most injuries do not completely remove athletes from participation,15 which may result in an unrecognized, persistent increase in injury susceptibility.

A clinical prediction model can provide a quantitative estimate of the likelihood that an individual who possesses a particular combination of factors will ultimately develop a particular condition or experience an adverse event at some time.17 The combination of simple core-muscle–endurance test results, survey responses, anthropometric measurements, and recorded exposures to game conditions has been shown to differentiate the preseason profiles of collegiate football players who subsequently sustained core or lower extremity sprains or strains from players who did not, which was represented quantitatively by an odds ratio (OR).8 The maximum time that static body positions can be maintained against gravity has been reported to provide highly reliable measurements of core-muscle endurance.18 Wilkerson et al8 administered 4 tests in the same sequence: (1) back-extension hold, (2) 60° trunk-flexion hold, (3) side-bridge hold, and (4) bilateral wall-sit hold. Surveys that were originally designed to quantify joint function to document treatment outcome can be modified for use as discriminative instruments before injury occurrence.19 Researchers8 have suggested that well-validated outcome survey instruments can undergo minor modifications to obtain preparticipation joint function scores that have value for injury prediction. Self-perception of the preparticipation functional status of the lower back, knees, and ankles and feet has been quantified by 3 surveys with well-established psychometric properties: (1) the Oswestry Disability Index (ODI),20,21 (2) the International Knee Documentation Committee Subjective Knee Form,22 and (3) the sports component of the Foot and Ankle Ability Measure.23

Wilkerson et al8 observed that the odds for occurrence of a core or lower extremity sprain or strain over 1 football season were 16 times greater for players who had at least 3 of the following characteristics: (1) trunk-flexion hold time equal to or less than 161 seconds, (2) bilateral wall-sit–hold time equal to or less than 88 seconds, (3) ODI score equal to or greater than 6, and (4) starting in 3 or more games or playing in all 11 games. With game exposure removed from the analysis, the odds for injury among players with at least 2 of the 3 potentially modifiable risk factors were 4 times greater than the odds for players with 0 or 1 factor. In subsequent years, the core-muscle–endurance tests were modified to increase their difficulty and thereby shorten the time required for their administration. The modifications improved efficiency of administration, although their effect on predictive power differed between tests. Two subsequent single-season analyses confirmed the validity of the original multifactor model, but the results also demonstrated that the model could be simplified without substantial loss of predictive power (G.B.W., unpublished data, 2011, 2012). Therefore, the purpose of our study was to analyze 3 consecutive seasons of combined data for preseason status, game exposures, and injury occurrences to derive a refined model for prediction of core or lower extremity sprain or strain during participation in collegiate football.

METHODS

Participants

The prospective cohort study design included all members of a National Collegiate Athletic Association Division I Football Championship Subdivision football team who were present for a preparticipation examination immediately before the initiation of preseason practice sessions in 2009 (n = 83), 2010 (n = 88), and 2011 (n = 85). The cohort consisted of 152 individuals (age = 19.7 ± 1.5 years, height = 1.84 ± 0.08 m, mass = 101.08 ± 19.28 kg) who were members of the team for the duration of a given season, which yielded 256 player-seasons of data over the 3 consecutive seasons (17 208 practice session and game exposures). Players who participated in more than 1 season were treated as separate cases for each season, which is a widely accepted practice for such multiyear studies.24–27 Among the 152 players who contributed data to the analysis, 33 participated in all 3 seasons, 38 participated in 2 seasons, and 81 participated in 1 season. Information acquired at the preparticipation examination included responses to 3 previously identified surveys for quantification of joint-specific function.8 All injuries that resulted from participation in practice sessions, conditioning sessions, or games were documented from the start of the preseason practice period until the end of the season. An injury was operationally defined as a core or lower extremity sprain or strain that required the attention of an athletic trainer and that limited football participation to any extent for at least 1 day after its occurrence. Fractures, dislocations, contusions, lacerations, abrasions, and overuse syndromes were excluded to limit the analysis to injuries that were most likely to result from an insufficient neuromuscular response to dynamic loading of muscles and joints. All participants provided written informed consent, and the study was approved by the Institutional Review Board of the University of Tennessee at Chattanooga.

Procedures

Variations of 3 core-muscle–endurance tests that involved maintenance of a specified postural position for as long as possible (trunk-flexion hold, wall-sit hold, back-extension hold) were administered each year. To simultaneously accelerate the process of test administration and improve sensitivity for detection of injury risk, the tests were modified during the 3-year period. For example, the trunk-flexion hold at 60° (sitting position) was performed the first year with the knees in 90° of flexion, the elbows in full flexion, and the upper extremities in 90° of abduction. For the second year, the upper extremities were maintained in an elevated overhead position that corresponded to the 60° position of the trunk. The result was a reduction in average hold duration from 141 seconds to 110 seconds. Administration of the test was further accelerated the third year by maintaining the knees in a fully extended position, which decreased the average hold duration to 75 seconds. Whereas the test modifications accomplished the goal of faster test administration, the relative contribution of the trunk-flexion hold to the predictive power of multifactor prediction models decreased.

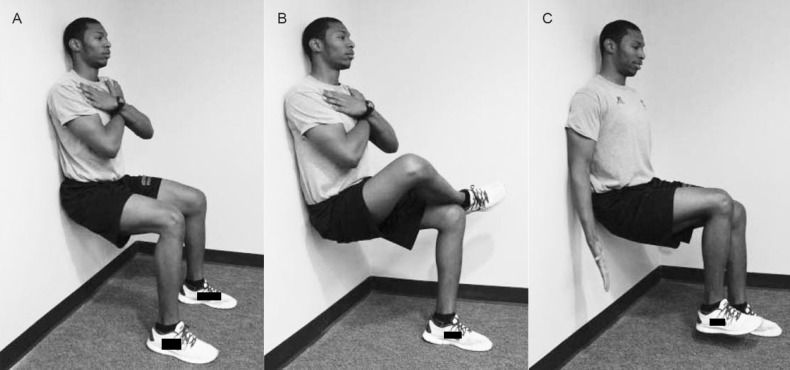

For the first year, athletes performed the wall-sit–hold test with body mass equally distributed between the lower extremities and with the knees and hips maintained in 90° of flexion (Figure 1). For the second year, we devised a unilateral test that athletes performed with the nonsupporting lower leg crossed on top of the thigh of the supporting extremity in a figure-4 position; each extremity was tested separately. The test was improved further the third year by having athletes slightly lift the foot to remove all body-mass support. The result was a 65% reduction in average test duration from 79 seconds the first year to 28 seconds the third year, while maintaining the discriminative power of the test (ie, OR > 2). Test-retest reliability for the unilateral foot-lift version of the wall-sit hold (average of right and left extremity values) has been assessed in a convenience sample of 14 players who performed the test twice within a 48-hour interval, which demonstrated an intraclass correlation coefficient (2,1) of 0.85 and a standard error of measurement value of 3.5 seconds (K. Miyazaki, MS, ATC, unpublished data, 2011).

Figure 1.

Versions of wall-sit–hold test administered over 3-season study period. A, Bilateral support of body mass. B, Unilateral support of body mass with nonsupporting extremity in figure-4 position. C, Unilateral support of body mass with foot lift of nonsupporting extremity.

Data Analysis

Receiver operating characteristic (ROC) analysis was used to identify cut points for preseason posture-hold test results, survey-derived joint-function scores, anthropometric measurements, and subsequent exposure to game conditions during each season. The initial single-season prediction model established greater likelihood of injury for players who had at least 3 of 4 risk factors, which included a high level of exposure to game conditions, suboptimal low back function, and poor performance on 1 or both of 2 different tests of core-muscle endurance (ie, trunk-flexion hold and wall-sit hold).8 The following year, a single-season analysis yielded a 3-factor model that eliminated the modified trunk-flexion hold and produced the identical OR derived from the original 4-factor model. In addition, the definition of a high level of game exposure was simplified from starter status for 3 or more games or playing in all 11 games to starter status for 1 or more games. The more complex operational definition of starter originally was chosen on the basis of its slightly larger observed effect as measured by OR estimates (OR = 8.66 versus OR = 7.65). Subsequent analyses demonstrated that either definition of starter status provided a reasonably comparable indication of the effect of high-level exposure to game conditions, so we adopted the simpler method to designate starter status.

To validate the predictive power of the 3 risk factors that were identified by both of the single-season analyses, we combined and analyzed data for 3 consecutive seasons. Other dichotomized variables that had been measured in a consistent manner each year were also assessed for predictive value by separate cross-tabulation analyses. Cut points for dichotomization of each variable were determined by ROC analysis of the 3-season combined dataset, with the exception of the trunk-flexion hold and wall-sit hold. Given that technique changes dramatically reduced average test duration for the core-muscle–endurance tests from year to year, we used the ROC-derived cut point for a given testing procedure for each successive year to classify cases as high risk or low risk. Cross-tabulation analysis was performed to calculate the OR for each predictor variable. Logistic regression analysis was used to assess the relative contributions of predictor variables to the discriminatory power of a multivariable model, and a confidence interval (CI) function was created to assess both the magnitude and precision of OR values for the multivariable model. Predictor variables retained by the logistic regression analysis were entered into a Cox regression analysis to model the instantaneous probability for injury occurrence across the course of a football season (ie, cumulative hazard). We used IBM SPSS Statistics (version 21; IBM Corporation, Armonk, NY) to analyze the data.
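The ROC-based dichotomization described above can be sketched in a few lines. The criterion used to pick the optimal cut point is not stated in the text, so the sketch below assumes Youden's J (sensitivity + specificity − 1) as one common choice. The function name `youden_cut_point` and the hold times are hypothetical and serve only as illustration; they are not study data.

```python
from typing import List, Tuple


def youden_cut_point(values: List[float], injured: List[bool],
                     high_risk_if_low: bool = True) -> Tuple[float, float, float]:
    """Return (cut point, sensitivity, specificity) that maximizes Youden's J.

    Assumption: Youden's J is the optimality criterion; the paper does not
    name the criterion it used.
    """
    n_pos = sum(injured)
    n_neg = len(injured) - n_pos
    best = None
    for cut in sorted(set(values)):
        # For hold times, short durations are high risk (value <= cut);
        # for scores such as the ODI, high values are high risk (value >= cut).
        if high_risk_if_low:
            flagged = [v <= cut for v in values]
        else:
            flagged = [v >= cut for v in values]
        tp = sum(f and y for f, y in zip(flagged, injured))
        tn = sum((not f) and (not y) for f, y in zip(flagged, injured))
        sens = tp / n_pos
        spec = tn / n_neg
        j = sens + spec - 1
        if best is None or j > best[0]:
            best = (j, cut, sens, spec)
    return best[1], best[2], best[3]


# Hypothetical hold times (seconds) and injury outcomes, for illustration only.
hold_times = [20, 25, 28, 30, 33, 40, 45, 50]
was_injured = [True, True, True, False, False, False, True, False]
cut, sens, spec = youden_cut_point(hold_times, was_injured)
print(cut, sens, spec)
```

Applying the same function separately to each season's test version would mirror the year-specific cut points used for the trunk-flexion hold and wall-sit hold.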

RESULTS

A total of 132 core or lower extremity sprains and strains were sustained by 82 of 152 individual players during 17 208 player-exposures (7.7 per 1000 player-exposures). Among 71 players who participated in either 2 or 3 seasons, only 19 were injured during more than 1 season, and only 2 of 33 players who participated in all 3 seasons sustained injuries during each season. Over the 3-season study period, 5 players sustained 3 different injuries and 19 players sustained 2 different injuries within the same season. For the 256 player-seasons, 103 players sustained at least 1 injury during a given season (ie, 103 cases).
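The cohort accounting and the incidence rate can be checked with simple arithmetic. The short sketch below uses only counts reported in the Participants and Results sections; the variable names are ours.

```python
# Players grouped by number of seasons contributed (from the Participants section).
players_by_seasons = {3: 33, 2: 38, 1: 81}

individual_players = sum(players_by_seasons.values())
player_seasons = sum(seasons * n for seasons, n in players_by_seasons.items())

# Injury counts and exposures reported in the Results section.
injuries = 132
player_exposures = 17208
rate_per_1000 = 1000 * injuries / player_exposures

# Cases (player-seasons with at least 1 injury) plus additional injuries
# within the same season: 5 players with 3 injuries, 19 players with 2.
injured_cases = 103
injuries_from_cases = injured_cases + 5 * 2 + 19 * 1

print(individual_players, player_seasons, round(rate_per_1000, 1), injuries_from_cases)
```

The totals agree with the reported 152 players, 256 player-seasons, 7.7 injuries per 1000 player-exposures, and 132 injuries.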

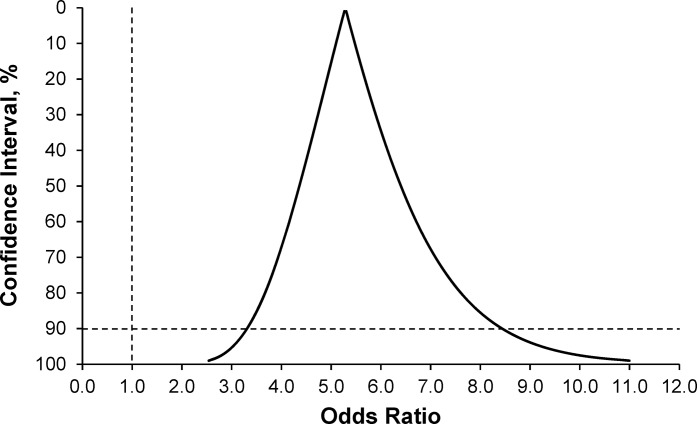

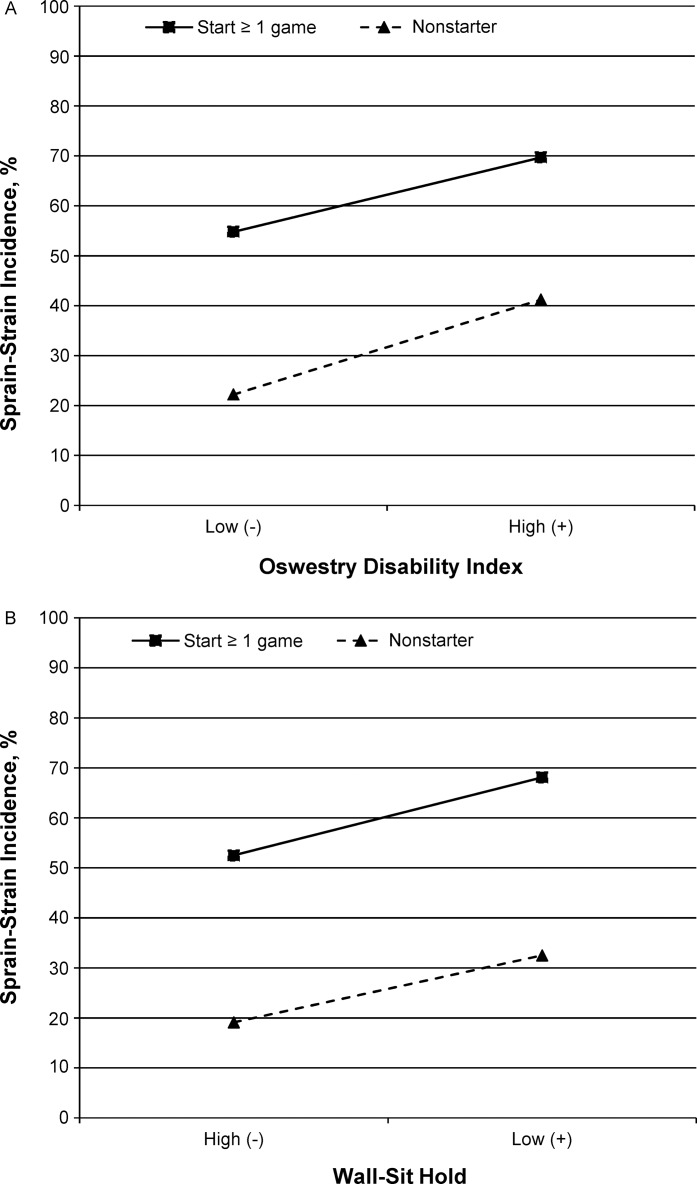

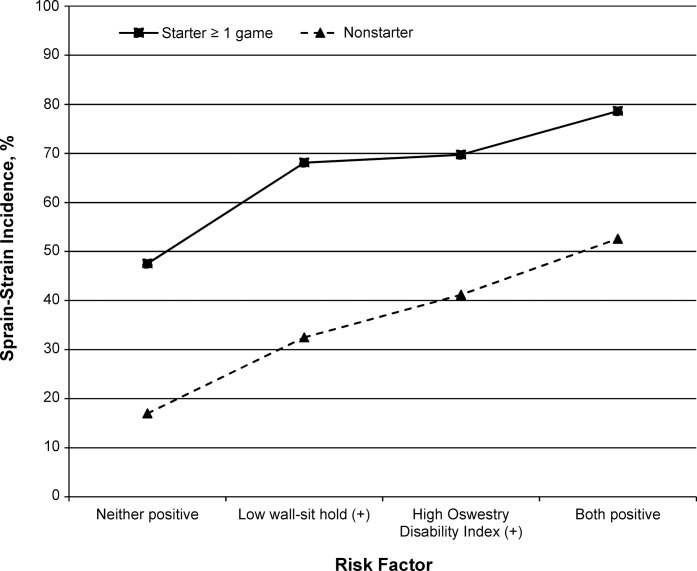

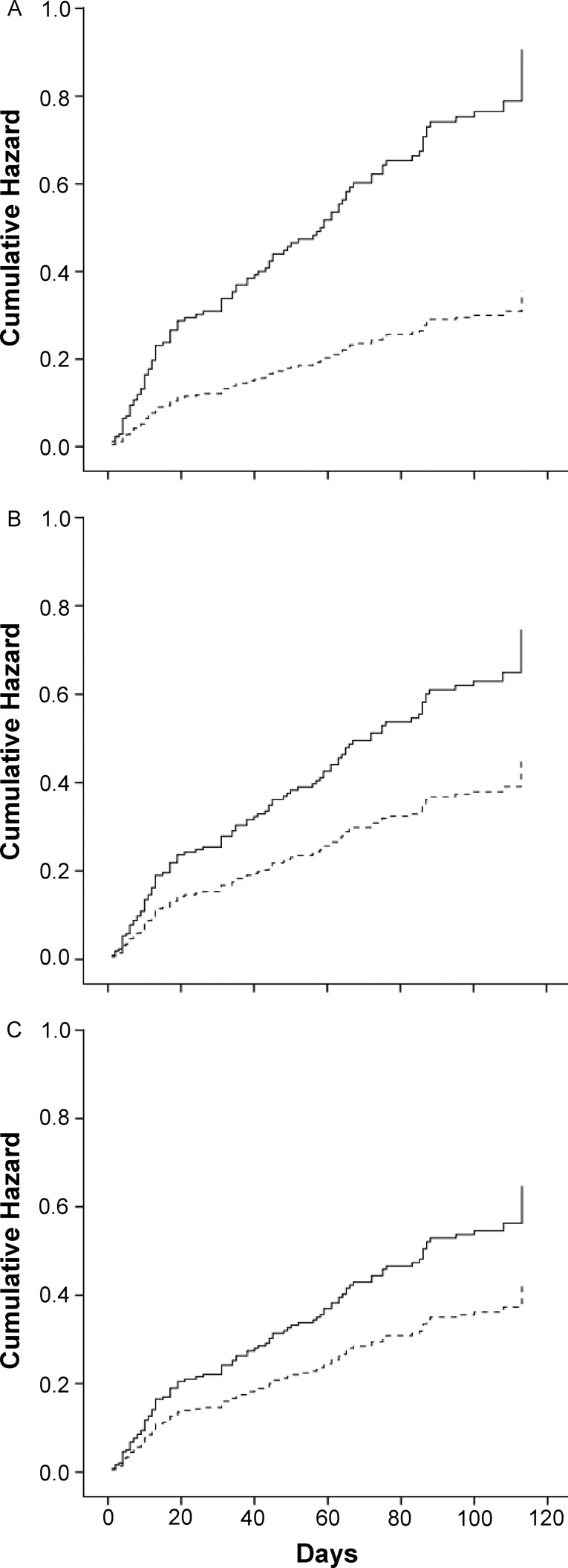

The results of separate analyses of the 3-season aggregated dataset for 7 predictor variables are presented in Table 1. The results of logistic regression and Cox regression analyses demonstrated that inclusion of the trunk-flexion hold in a 4-factor model was not superior to the simpler 3-factor model (Table 2). The 3-factor logistic regression model was associated strongly with the dichotomous outcome (χ²₃ = 43.64, P < .001), and the Hosmer-Lemeshow goodness-of-fit test demonstrated an exceptional level of agreement between observed and predicted values (χ²₆ = 1.43, P = .96). A Cox regression model that included the same 3 factors was also associated strongly with the outcome variable (χ²₃ = 36.72, P < .001). Subsequent ROC analysis demonstrated that the existence of at least 2 of the 3 risk factors (starter ≥1 game, ODI score ≥4, and wall-sit hold ≤cut point specific to test version) provided exceptionally good discrimination between injured and uninjured cases (Table 3). A CI function graph that illustrates the magnitude and precision of the 3-factor prediction model OR value is presented in Figure 2. Follow-up analysis of injury-incidence graphs for various combinations of risk factors did not demonstrate evidence of interactions among factors (Figure 3), and the addition of interaction terms to the logistic regression analysis did not demonstrate an effect for any combination of factors (starter × ODI: P = .68; starter × wall-sit hold: P = .87; ODI × wall-sit hold: P = .53). A progressive increase in injury incidence was clearly associated with an increase in the number of risk factors (Table 4, Figure 4). The cumulative hazard predicted by the Cox regression equation for both levels of each factor (adjusted for the effects of the other 2 factors in the 3-factor model) is depicted in Figure 5.

Table 1.

Results of Cross-Tabulation Analysis of Dichotomized Variables

| Variable | Odds Ratio (90% Confidence Interval) | Pᵃ | Sensitivity | Specificity |
| --- | --- | --- | --- | --- |
| Starter status ≥1 game | 4.03 (2.58, 6.29) | <.001 | 0.61 | 0.72 |
| Games played ≥4 | 2.78 (1.76, 4.38) | <.001 | 0.74 | 0.50 |
| Oswestry Disability Index ≥4 | 2.32 (1.47, 3.67) | .002 | 0.41 | 0.77 |
| Trunk-flexion hold ≤161 (2009), 96 (2010), 68 (2011) s | 2.27 (1.47, 3.49) | .001 | 0.65 | 0.55 |
| Estimated mass moment of inertia ≥450 kg · m² | 2.08 (1.13, 3.84) | .04 | 0.18 | 0.90 |
| Wall-sit hold ≤88 (2009), 41 (2010), 30 (2011) s | 1.94 (1.27, 2.97) | .007 | 0.58 | 0.58 |
| Body mass index ≥30.5 | 1.88 (1.21, 2.90) | .01 | 0.45 | 0.70 |

ᵃ Fisher exact test 1-sided P value.

Table 2.

Logistic Regression and Cox Regression Results (90% Confidence Intervals)

| Predictor Set | Odds Ratio | Hazard Ratio |
| --- | --- | --- |
| 4-factor model | | |
| Starter ≥1 game | 4.14 (2.59, 6.61) | 2.48 (1.77, 3.47) |
| Oswestry Disability Index ≥4 | 2.19 (1.33, 3.60) | 1.65 (1.18, 2.29) |
| Wall-sit hold ≤88 (2009), 41 (2010), 30 (2011) s | 1.82 (1.10, 3.00) | 1.36 (0.96, 1.92) |
| Trunk-flexion hold ≤161 (2009), 96 (2010), 68 (2011) s | 1.72 (1.05, 2.83) | 1.38 (0.96, 1.99) |
| 4-factor model ≥2 positive factors | 5.47 (2.95, 10.16) | 2.79 (2.01, 3.87) |
| 3-factor model | | |
| Starter ≥1 game | 4.22 (2.65, 6.72) | 2.55 (1.82, 3.57) |
| Oswestry Disability Index ≥4 | 2.26 (1.38, 3.70) | 1.66 (1.19, 2.31) |
| Wall-sit hold ≤88 (2009), 41 (2010), 30 (2011) s | 2.22 (1.40, 3.53) | 1.51 (1.09, 2.10) |
| 3-factor model ≥2 positive factors | 5.28 (3.31, 8.44) | 2.97 (2.14, 4.12) |

Table 3.

Results of Cross-Tabulation Analysis of Final Prediction Modelᵃ

| Status | Injury, No. | No Injury, No. | Total, No. |
| --- | --- | --- | --- |
| High risk (≥2 risk factors) | 58 | 30 | 88 |
| Low risk (0 or 1 risk factor) | 45 | 123 | 168 |
| Total | 103 | 153 | 256 |

ᵃ Fisher exact 1-sided P < .001, sensitivity = 0.56, specificity = 0.80, positive likelihood ratio = 2.87, negative likelihood ratio = 0.54, and odds ratio = 5.28 (90% confidence interval = 3.31, 8.44).
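The summary statistics reported for Table 3 follow directly from the 4 cell counts. The sketch below recomputes them. The CI uses the Woolf (log-odds) approximation, which is our assumption; the paper does not state which interval method SPSS applied. The function name `two_by_two_summary` is illustrative.

```python
from math import exp, log, sqrt
from statistics import NormalDist


def two_by_two_summary(tp, fp, fn, tn, confidence=0.90):
    """Summarize a 2x2 table: tp/fn are injured cases, fp/tn are uninjured cases."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    odds_ratio = (tp * tn) / (fp * fn)
    # Woolf standard error of the log odds ratio (assumed method).
    se_log_or = sqrt(1 / tp + 1 / fp + 1 / fn + 1 / tn)
    # Two-sided critical value, e.g. about 1.645 for a 90% interval.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "lr_positive": sensitivity / (1 - specificity),
        "lr_negative": (1 - sensitivity) / specificity,
        "odds_ratio": odds_ratio,
        "ci_lower": exp(log(odds_ratio) - z * se_log_or),
        "ci_upper": exp(log(odds_ratio) + z * se_log_or),
    }


# Table 3 counts: high risk = 58 injured, 30 uninjured;
# low risk = 45 injured, 123 uninjured.
summary = two_by_two_summary(tp=58, fp=30, fn=45, tn=123)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

Rounded to 2 decimal places, these values agree with those reported for Table 3, which suggests that the reported interval is consistent with a log-odds approximation.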

Figure 2.

Confidence-interval function graph for point estimate of exposure-outcome association defined by 3-factor prediction model (≥2 positive factors).
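A CI function of this kind can be approximated by computing interval limits for the same point estimate over a range of confidence levels. The sketch below does so for the Table 3 counts, assuming a Woolf (log-odds) standard error; the exact procedure used to draw Figure 2 is not described, and the function name `or_confidence_function` and the chosen levels are illustrative.

```python
from math import exp, log, sqrt
from statistics import NormalDist


def or_confidence_function(a, b, c, d, levels=(0.50, 0.80, 0.90, 0.95, 0.99)):
    """Return the odds ratio and (level, lower, upper) limits for each level.

    a, b = injured and uninjured counts in the high-risk group;
    c, d = injured and uninjured counts in the low-risk group.
    """
    point = (a * d) / (b * c)
    se = sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # Woolf approximation (assumed)
    limits = []
    for level in levels:
        z = NormalDist().inv_cdf(0.5 + level / 2)
        limits.append((level, exp(log(point) - z * se), exp(log(point) + z * se)))
    return point, limits


point, limits = or_confidence_function(58, 30, 45, 123)
for level, lower, upper in limits:
    print(f"{level:.0%} CI: {lower:.2f} to {upper:.2f}")
```

Plotting the lower and upper limits against the confidence level gives a curve that narrows toward the point estimate; a tall, narrow curve indicates a precise estimate.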

Figure 3.

Lower extremity sprain and strain incidence for starter (≥1 game) versus nonstarter status. A, Oswestry Disability Index score ≥4 (high) versus <4 (low). B, Wall-sit hold time ≤cut point specific to test version (low) versus >cut point (high).

Table 4.

Injury Occurrence in Relation to Number of Risk Factors

| Risk Factors | Injury | No Injury | Incidence, % |
| --- | --- | --- | --- |
| 0 | 9 | 47 | 16 |
| 1 | 36 | 76 | 32 |
| 2 | 45 | 25 | 64 |
| 3 | 13 | 5 | 72 |
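The incidence column of Table 4, and its relation to the dichotomized high-risk versus low-risk classification in Table 3, can be reproduced from the row counts. The sketch below is a simple arithmetic check; the variable names are ours.

```python
# Table 4 counts: number of risk factors -> (injured, not injured).
table4 = {0: (9, 47), 1: (36, 76), 2: (45, 25), 3: (13, 5)}

# Injury incidence (%) for each number of risk factors.
incidence_pct = {
    k: round(100 * injured / (injured + uninjured))
    for k, (injured, uninjured) in table4.items()
}

# Collapse to the 2-level classification used in Table 3 (>=2 factors = high risk).
high_risk = tuple(sum(table4[k][i] for k in (2, 3)) for i in (0, 1))
low_risk = tuple(sum(table4[k][i] for k in (0, 1)) for i in (0, 1))

print(incidence_pct)
print("high risk:", high_risk, "low risk:", low_risk)
```

Collapsing rows 2 and 3 gives the 58 injured and 30 uninjured high-risk cases of Table 3, and rows 0 and 1 give the 45 injured and 123 uninjured low-risk cases.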

Figure 4.

Lower extremity sprain and strain incidence for starter (≥1 game) versus nonstarter status in relation to potentially modifiable injury risk profile.

Figure 5.

Cox regression equation prediction of injury hazard for high-risk (solid lines) versus low-risk (dashed lines) status for each factor (adjusted for the effects of the other 2 factors included in the 3-factor model). A, Starter (high risk: ≥1 game). B, Oswestry Disability Index (high risk: score ≥4). C, Wall-sit hold (high risk: time ≤cut point specific to test version).
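For clinical use, the 3-factor rule reduces to counting positive factors. The sketch below is one possible encoding under stated assumptions: the cut points are those listed in Tables 1 and 2, the wall-sit cut point is selected by the season in which that test version was used, and the function names and example players are hypothetical.

```python
# Wall-sit-hold cut points (seconds) by season of the test version (Tables 1 and 2).
WALL_SIT_CUT_S = {2009: 88, 2010: 41, 2011: 30}
ODI_CUT = 4        # Oswestry Disability Index score >= 4 is high risk
STARTS_CUT = 1     # >= 1 game as a starter is high risk


def count_risk_factors(games_started, odi_score, wall_sit_s, season):
    """Count how many of the 3 model factors are positive for one player-season."""
    factors = [
        games_started >= STARTS_CUT,
        odi_score >= ODI_CUT,
        wall_sit_s <= WALL_SIT_CUT_S[season],
    ]
    return sum(factors)


def is_high_risk(games_started, odi_score, wall_sit_s, season):
    """High risk is defined as at least 2 of the 3 factors."""
    return count_risk_factors(games_started, odi_score, wall_sit_s, season) >= 2


# Hypothetical players, for illustration only.
print(is_high_risk(games_started=5, odi_score=2, wall_sit_s=25, season=2011))
print(is_high_risk(games_started=0, odi_score=4, wall_sit_s=50, season=2010))
```

Starter status is known only after the season begins, so a preseason application of this rule would need a projected value for `games_started`.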

DISCUSSION

The refined prediction model demonstrated a high degree of accuracy in discriminating injured cases from uninjured cases, which suggests that optimal function of the lumbar spine and fatigue resistance of the core musculature are important considerations for preventing core and lower extremity sprains and strains among collegiate football players. The dominant injury risk factor was clearly a high volume of exposure to game conditions, but the level of injury risk among both starters and nonstarters appeared to be increased substantially by either a relatively mild degree of low back dysfunction or a deficiency in core-muscle endurance. We observed that the 2 potentially modifiable factors had comparable magnitudes of effect on injury risk, but the combined factors elevated the level of injury risk beyond that observed for the existence of only 1 factor among both starters and nonstarters.

Sports medicine practitioners tend to focus on the mechanism by which an inciting event produces a pathologic condition, which is the final link in a chain of injury causation.3,10,11 If preventing a sport-related injury is possible, some objective means is needed to predict that an injury is likely to occur. Such information could be used to guide implementation of a specific intervention that is designed to improve the individual's capacity to prevent an injury by either avoiding or tolerating the transfer of energy from the external environment to body tissues.11,12 A prospective cohort study design provides the only feasible method to quantify the strength of associations between preparticipation characteristics and subsequent injury occurrence. Such exposure-outcome associations can provide evidence that strongly suggests a causative influence, provided that systematic bias is avoided, random error is minimized, and the analysis rules out the influence of possible confounding factors.3,13

The observed incidence rate of 7.7 core or lower extremity sprains and strains per 1000 player-exposures for our cohort was relatively close to the estimated national incidence rate of 6.1 per 1000 player-exposures for National Collegiate Athletic Association collegiate football.28 The strong precision of the OR and hazard ratio point estimates (ie, narrow CIs) suggested that random error did not exert a major influence on the results. Given that starter status clearly had the greatest effect on injury risk, a high volume of exposure to game conditions represented a potentially important confounding factor that needed to be thoroughly assessed. The results of the logistic regression analysis and of the stratified analyses of starter versus nonstarter status suggested that the influence of the 2 potentially modifiable risk factors in the prediction model (ie, low back dysfunction and core-muscle fatigue) incrementally increased injury risk in a manner that was comparable for starters and nonstarters. Despite the lack of a confounding effect, the profound influence of starter status on injury risk made the incremental influence of 1 or more other risk factors a serious concern that should be addressed.

The preparticipation ODI score demonstrated poor sensitivity (41%) but good specificity for identifying players who avoided injury (77%). Whereas many players who report a mild degree of low back dysfunction may avoid injury, athletes who report the absence of low back symptoms or functional limitations appear to be less susceptible to core and lower extremity sprains and strains. Given that the ODI survey items were developed to assess low back dysfunction in the general population, a survey instrument specifically designed for young competitive athletes may offer a more precise representation of the influence of low back symptoms on sport-specific performance capabilities29 and thereby provide greater discriminatory power. Our results suggested that therapeutic remediation of any low back symptoms should be a high priority before a football player is exposed to high-intensity practice drills and game conditions. Relatively minor or intermittent low back symptoms could be associated with subtle and persistent alterations in neuromuscular activation patterns that elevate injury risk.16

Rapid fatigue of the core musculature and low back dysfunction have been related to impaired neuromuscular control of the body's center of mass, inhibition of lower extremity muscles, and elevated risk for lower extremity injury.30–35 The unilateral wall-sit–hold test appeared to effectively identify athletes who experience rapid fatigue in muscles that are important for maintenance of lumbar spine, pelvis, hip, knee, and ankle positioning. The muscle-activation patterns required to maintain the unilateral wall-sit–hold position may relate to the ability to avoid excessive hip adduction and knee valgus during dynamic activities, which needs to be assessed using electromyographic analysis. Given that the test imposes simultaneous demands on the quadriceps and hamstrings, rapid fatigue in either muscle group might indicate a diminished ability to dynamically stabilize the knee joint. Further assessment of the reliability of wall-sit–hold duration measurements is also needed.

The reliability of exposure-outcome associations derived from cohort studies is highly dependent on the number of criterion-positive (eg, injured) cases. For example, inclusion of the trunk-flexion hold in the original 4-factor prediction model was based on an analysis of injury data for a cohort of 83 players. Subsequent single-season analyses did not replicate the predictive value of the trunk-flexion hold for the separate cohorts of 88 and 85 players, whereas the other 3 predictors consistently demonstrated strong predictive value from year to year. The broad operational definition of injury as any core or lower extremity sprain or strain ensured a relatively large number of injured cases, whereas a narrower definition might have yielded less reliable estimates of exposure-outcome associations. Much larger datasets are needed to generate reliable injury-prediction models for different age and sex groups, different sports, and specific types of injuries.

A limitation of this study was the operational definition of an injury as any core or lower extremity sprain or strain. For example, the estimated mass moment of inertia (MMOI) around a horizontal axis through the ankle has been identified as a risk factor for lateral ankle sprain.36 Our univariable analysis of MMOI revealed that a cut point of equal to or greater than 450 kg · m² was associated with an OR of 2.08 (90% CI lower limit = 1.13), but it did not contribute substantially to the power of the multivariable model for predicting core and lower extremity sprains and strains. A relatively large amount of upper body mass could elevate the risk for any lower extremity sprain or strain, but its influence may be greatest at the most distal joints. Furthermore, the exclusion of a variable from the final prediction model should not be interpreted as an indication that it completely lacks predictive value. In future research on ankle injuries, investigators might identify an interaction between estimated MMOI and some modifiable factor (such as postural balance deficiency, muscle weakness, or structural malalignment) that would further support a highly individualized approach to injury prevention.

Another limitation of this study was the possibility that important predictors of collegiate football injury risk were not included in our 3-season cumulative analysis. For example, computerized neurocognitive testing was not included as a standard component of the preparticipation assessment until the last year of the study period. Authors37 of a recently completed single-season univariable analysis suggested that neurocognitive reaction time was a strong predictor of lower extremity sprains and strains, but a larger dataset is needed to establish its importance in relation to the factors that have been confirmed as predictors of injury risk through this 3-season analysis. The anterior reach component of the Star Excursion Balance Test also has been identified recently as a strong predictor of ankle and knee injuries among collegiate football players.38 Much more research is needed to develop prediction models for specific injury types (eg, acute trauma, chronic instability, overuse syndrome) at specific locations (eg, joint, bone, muscle group) in specific populations (eg, age group, sex, sport).

Although a relatively broad operational definition of injury can preclude identification of risk factors that are specific to a given type of injury (eg, lateral ankle sprain), it may identify other risk factors that contribute to multiple types of injuries. Thus, a prediction model for an outcome that is broadly defined may provide greater clinical utility than one that is highly specific to a single type of injury. Furthermore, a complex mathematical model that requires data derived from multiple time-consuming test procedures is not likely to be used by most practicing clinicians. A clinical prediction guide can provide an individualized estimate of injury risk that is more accurate than a clinician's intuitive assessment, but practical considerations dictate that the guide's components must be easy to remember and simple to apply.17 Our multiyear effort to reduce the time required to administer screening tests, while maintaining or improving the predictive power of their results, yielded a clinical prediction guide that has fewer components than the one originally derived from a single season of data and that retains a high degree of prediction accuracy.

CONCLUSIONS

The results of our analysis supported simplification of the previously developed 4-factor prediction model to a 3-factor model that includes 2 modifiable injury risk factors. A relatively mild degree of low back dysfunction and a suboptimal level of core-muscle endurance appeared to be important injury risk factors that should be identified and addressed. Exposure to game conditions was the dominant injury risk factor for collegiate football players; when it was combined with a potentially modifiable factor that adversely affects core function, the risk for a core or lower extremity sprain or strain appeared to increase substantially.

REFERENCES

- 1.van Mechelen W, Hlobil H, Kemper HC. Incidence, severity, aetiology, and prevention of sports injuries: a review of concepts. Sports Med. 1992;14(2):82–99. doi: 10.2165/00007256-199214020-00002. [DOI] [PubMed] [Google Scholar]

- 2. Finch C. A new framework for research leading to sports injury prevention. J Sci Med Sport. 2006;9(1–2):3–9. doi: 10.1016/j.jsams.2006.02.009.

- 3. Meeuwisse WH. Assessing causation in sport injury: a multifactorial model. Clin J Sport Med. 1994;4(3):166–170.

- 4. Chalmers DJ. Injury prevention in sport: not yet part of the game? Inj Prev. 2002;8(suppl 4):iv22–iv25. doi: 10.1136/ip.8.suppl_4.iv22.

- 5. Van Tiggelen D, Wickes S, Stevens V, Roosen P, Witvrouw E. Effective prevention of sports injuries: a model integrating efficacy, efficiency, compliance and risk-taking behavior. Br J Sports Med. 2008;42(8):648–652. doi: 10.1136/bjsm.2008.046441.

- 6. Cameron KL. Commentary: time for a paradigm shift in conceptualizing risk factors in sports injury research. J Athl Train. 2010;45(1):58–60. doi: 10.4085/1062-6050-45.1.58.

- 7. Parkkari J, Kujala U, Kannus P. Is it possible to prevent sports injuries? Review of controlled clinical trials and recommendations for future work. Sports Med. 2001;31(14):985–995. doi: 10.2165/00007256-200131140-00003.

- 8. Wilkerson GB, Giles J, Seibel D. Prediction of core and lower extremity strains and sprains in college football players: a preliminary study. J Athl Train. 2012;47(3):264–272. doi: 10.4085/1062-6050-47.3.17.

- 9. Emery CA. Risk factors for injury in child and adolescent sport: a systematic review of the literature. Clin J Sport Med. 2003;13(4):256–268. doi: 10.1097/00042752-200307000-00011.

- 10. Bahr R, Holme I. Risk factors for sports injuries: a methodological approach. Br J Sports Med. 2003;37(5):384–392. doi: 10.1136/bjsm.37.5.384.

- 11. Bahr R, Krosshaug T. Understanding injury mechanisms: a key component of preventing injuries in sport. Br J Sports Med. 2005;39(6):324–329. doi: 10.1136/bjsm.2005.018341.

- 12. McIntosh AS. Risk compensation, motivation, injuries, and biomechanics in competitive sport. Br J Sports Med. 2005;39(1):2–3. doi: 10.1136/bjsm.2004.016188.

- 13. Meeuwisse WH. Athletic injury etiology: distinguishing between interaction and confounding. Clin J Sport Med. 1994;4(3):171–175.

- 14. Creighton DW, Shrier I, Shultz R, Meeuwisse WH, Matheson GO. Return-to-play in sport: a decision-based model. Clin J Sport Med. 2010;20(5):379–385. doi: 10.1097/JSM.0b013e3181f3c0fe.

- 15. Meeuwisse W, Tyreman H, Hagel B, Emery C. A dynamic model of etiology in sport injury: the recursive nature of risk and causation. Clin J Sport Med. 2007;17(3):215–219. doi: 10.1097/JSM.0b013e3180592a48.

- 16. Hides JA, Stanton W, McMahon S, Sims K, Richardson CA. Effect of stabilization training on multifidus muscle cross-sectional area among young elite cricketers with low back pain. J Orthop Sports Phys Ther. 2008;38(3):101–108. doi: 10.2519/jospt.2008.2658.

- 17. Guyatt GH. Determining prognosis and creating clinical decision rules. In: Haynes RB, Sackett DL, Guyatt GH, Tugwell P, eds. Clinical Epidemiology: How To Do Clinical Practice Research. 3rd ed. Philadelphia, PA: Lippincott Williams & Wilkins; 2006. pp. 323–355.

- 18. McGill SM, Childs A, Liebenson C. Endurance times for low back stabilization exercises: clinical targets for testing and training from a normal database. Arch Phys Med Rehabil. 1999;80(8):941–944. doi: 10.1016/s0003-9993(99)90087-4.

- 19. Cosby NL, Hertel J. Clinical assessment of ankle injury outcomes: case scenario using the foot and ankle ability measure. J Sport Rehabil. 2011;20(1):89–99. doi: 10.1123/jsr.20.1.89.

- 20. Fairbank JC, Pynsent PB. The Oswestry Disability Index. Spine (Phila Pa 1976). 2000;25(22):2940–2952. doi: 10.1097/00007632-200011150-00017.

- 21. Fritz JM, Irrgang JJ. A comparison of a modified Oswestry Low Back Pain Disability Questionnaire and the Quebec Back Pain Disability Scale. Phys Ther. 2001;81(2):776–788. doi: 10.1093/ptj/81.2.776.

- 22. Irrgang JJ, Anderson AF, Boland AL, et al. Development and validation of the International Knee Documentation Committee Subjective Knee Form. Am J Sports Med. 2001;29(5):600–613. doi: 10.1177/03635465010290051301.

- 23. Carcia CR, Martin RL, Drouin JM. Validity of the Foot and Ankle Ability Measure in athletes with chronic ankle instability. J Athl Train. 2008;43(2):179–183. doi: 10.4085/1062-6050-43.2.179.

- 24. Beynnon BD, Vacek PM, Murphy D, Alosa D, Paller D. First-time inversion ankle ligament trauma: the effects of sex, level of competition, and sport on the incidence of injury. Am J Sports Med. 2005;33(10):1485–1491. doi: 10.1177/0363546505275490.

- 25. McHugh MP, Tyler TF, Mirabella MR, Mullaney MJ, Nicholas SJ. The effectiveness of a balance training intervention in reducing the incidence of noncontact ankle sprains in high school football players. Am J Sports Med. 2007;35(8):1289–1294. doi: 10.1177/0363546507300059.

- 26. Rothman KJ, Greenland S. Cohort studies. In: Rothman KJ, Greenland S, Lash TL, eds. Modern Epidemiology. 3rd ed. Philadelphia, PA: Lippincott Williams & Wilkins; 2008. pp. 100–102.

- 27. Tyler TF, McHugh MP, Mirabella MR, Mullaney MJ, Nicholas SJ. Risk factors for noncontact ankle sprains in high school football players: the role of previous ankle sprains and body mass index. Am J Sports Med. 2006;34(3):471–475. doi: 10.1177/0363546505280429.

- 28. Marshall SW, Corlette J. NCAA Injury Surveillance Program Falls Sports Qualifying Report: 2004–2009 Academic Years. Indianapolis, IN: Datalys Center for Sports Injury Research and Prevention; 2009.

- 29. d'Hemecourt PA, Zurakowski D, d'Hemecourt CA, et al. Validation of a new instrument for evaluating low back pain in the young athlete. Clin J Sport Med. 2012;22(3):244–248. doi: 10.1097/JSM.0b013e318249a3ce.

- 30. Hammill RR, Beazell JR, Hart JM. Neuromuscular consequences of low back pain and core dysfunction. Clin Sports Med. 2008;27(3):449–462. doi: 10.1016/j.csm.2008.02.005.

- 31. Hart JM, Fritz JM, Kerrigan DC, Saliba EN, Gansneder BM, Ingersoll CD. Reduced quadriceps activation after lumbar paraspinal fatiguing exercise. J Athl Train. 2006;41(1):79–86.

- 32. Leetun DT, Ireland ML, Willson JD, Ballantyne BT, Davis IM. Core stability measures as risk factors for lower extremity injury in athletes. Med Sci Sports Exerc. 2004;36(6):926–934. doi: 10.1249/01.mss.0000128145.75199.c3.

- 33. Nadler SF, Malanga GA, DePrince M, Stitik TP, Feinberg JH. The relationship between lower extremity injury, low back pain, and hip muscle strength in male and female collegiate athletes. Clin J Sport Med. 2000;10(2):89–97. doi: 10.1097/00042752-200004000-00002.

- 34. Suter E, Lindsay D. Back muscle fatigability is associated with knee extensor inhibition in subjects with low back pain. Spine (Phila Pa 1976). 2001;26(16):E361–E366. doi: 10.1097/00007632-200108150-00013.

- 35. Zazulak BT, Hewett TE, Reeves NP, Goldberg B, Cholewicki J. Deficits in neuromuscular control of the trunk predict knee injury risk: a prospective biomechanical-epidemiologic study. Am J Sports Med. 2007;35(7):1123–1130. doi: 10.1177/0363546507301585.

- 36. Milgrom C, Shiamkovitch N, Finestone A, et al. Risk factors for lateral ankle sprain: a prospective study among military recruits. Foot Ankle. 1991;12(1):26–30. doi: 10.1177/107110079101200105.

- 37. Wilkerson GB. Neurocognitive reaction time predicts lower extremity sprains and strains. Int J Athl Ther Train. 2012;17(6):4–9.

- 38. Ford A, Gribble PA, Pfile KR, et al. Star Excursion Balance Test as a predictor of ankle and knee injuries in collegiate football athletes. J Athl Train. 2012;47(suppl 3):S44. [abstract]