Abstract

The degree to which face-specific brain regions are specialized for different kinds of perceptual processing is debated. The present study parametrically varied demands on featural, first-order configural, or second-order configural processing of faces and houses in a perceptual matching task. The goal was to determine the extent to which perceptual differentiation was selective for faces regardless of processing type (domain-specific account), specialized for specific types of perceptual processing regardless of category (process-specific account), engaged in category-optimized processing (i.e., configural face processing or featural house processing), or generalized (i.e., differentiation that crosses category and processing type boundaries). Regions of interest were identified in a separate localizer run or with a similarity regressor in the face-matching runs. Generalized perceptual differentiation was the predominant principle accounting for fMRI signal modulation in most regions. Nearly all regions showed perceptual differentiation for both faces and houses for more than one processing type, even if the region was identified as face-preferential in the localizer run. Consistent with process-specificity, some regions (right fusiform face area and occipito-temporal cortex, and right lateral occipital complex) showed perceptual differentiation for first-order processing of faces and houses, but not for featural or second-order processing. Somewhat consistent with domain-specificity, the right inferior frontal gyrus showed perceptual differentiation only for faces in the featural matching task. These findings demonstrate that most regions involved in perceptual differentiation of faces are also involved in differentiation of other visually homogeneous categories.

The brain basis of face recognition is widely studied with fMRI to identify the neural components that reveal the nature of perceptual and cognitive processing specific to faces versus other object categories. However, one unanswered question is whether face network components are more strongly tuned to category delineations, preferring faces over other objects, or are driven by certain cognitive and perceptual processes that are more strongly invoked by faces. We suggest that examining the perceptual processes associated with face recognition is pivotal for characterizing the degree of specialization for faces in the brain.

A core set of brain regions in human occipito-temporal cortex responds preferentially to faces, including the “fusiform face area” (FFA, located in the lateral middle fusiform gyrus; Kanwisher, McDermott, & Chun, 1997), the occipital face area (OFA, situated in inferior occipital cortex; Gauthier et al., 2000; Rossion et al., 2003), and the face-selective superior temporal sulcus (for a review, see Haxby et al., 2000). The greater response to faces than to objects under various conditions has led to a domain-specific account of neural specialization for faces (Rhodes, Byatt, Michie, & Puce, 2004; Yovel & Kanwisher, 2004). A strong form of this account suggests that faces induce a greater response than non-faces regardless of the type of processing used to categorize a stimulus as a face or to identify individual faces (Figure 1), and regardless of the degree of familiarity or expertise with the non-face comparison categories. For example, Yovel and Kanwisher (2004) showed that the FFA responded more to faces than to houses but did not respond differentially to processing of facial features (the shape and size of the eyes, nose, or mouth) versus the spacing of features (2nd order processing), nor did the FFA prefer either processing type for houses. Rhodes et al. (2004) also showed that the FFA responded more to faces than to another visually homogeneous category (Lepidoptera) and that Lepidoptera expertise did not modulate the FFA response. In the domain-specific account, face-specific regions are tuned to faces and potentially to all relevant aspects of face processing, but the processing in these regions is not co-opted for other categories or for different levels of category expertise.

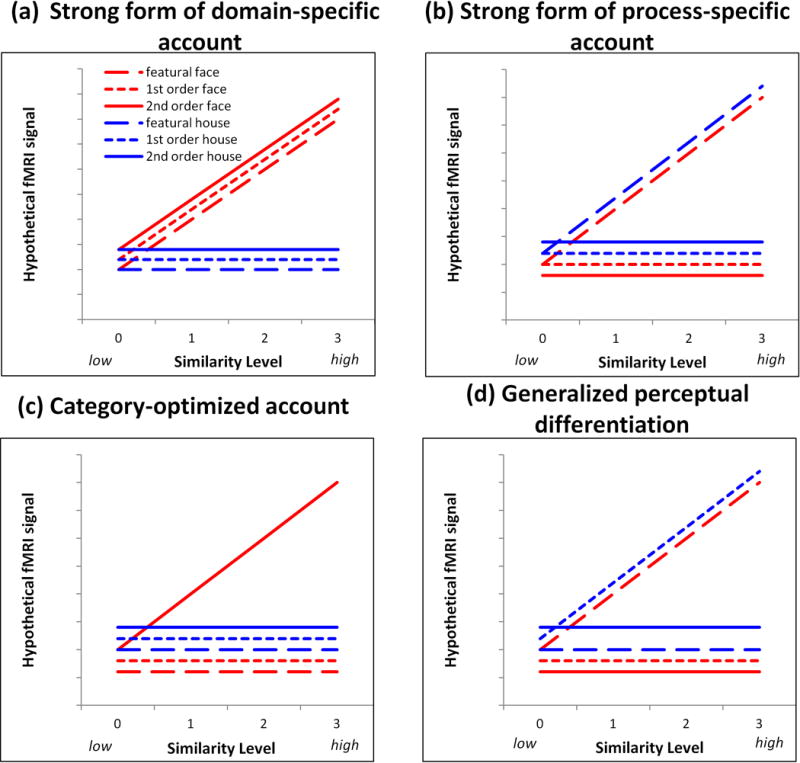

Figure 1.

Hypotheses associated with the different accounts of information processing that could occur in brain regions involved in face and object processing. Hypothetical fMRI signal is shown on the y-axis and similarity level is shown on the x-axis. Face conditions are shown in red; house conditions are shown in blue. A flat line indicates no significant modulation by similarity for a given condition whereas a sloped line indicates significant similarity modulation as a reflection of processing the information associated with that condition.

Alternatively, Gauthier, Tarr, Anderson, Skudlarski, and Gore (1999) promoted the perceptual expertise account, which is closely aligned with process specificity. When individuals are trained to discriminate items from a visually homogeneous non-face category (i.e., Greebles), the FFA is strongly activated once expertise is established (see also Xu et al., 2005). They argued that the right FFA supports the process of making fine distinctions among items from visually similar categories for which an individual has expertise. Other findings also support process specificity by showing that the FFA responds to non-faces even in the absence of expertise (Haist, Lee, & Stiles, 2010; Joseph & Gathers, 2002). This suggests that the FFA is linked to processing information that is strongly associated with faces (such as high within-category visual similarity) but that is not reserved only for faces.

One challenge in assessing the domain- and process-specificity accounts is that finding more activation to a given category or process relative to another does not by itself establish that a neural node is specific or specialized for face processing (Joseph, 2001; Joseph & Gathers, 2002). A greater fMRI signal for faces may be driven by factors that are not directly related to domain-relevant processing. For example, Yovel and Kanwisher’s (2004) finding that the FFA responded more to faces than to houses, but did not respond differentially to featural and 2nd order processing, may be explained by task difficulty. They reported that discriminating upright faces was harder than discriminating upright houses, but that performance did not differ between featural and 2nd order processing for these stimuli. The FFA may therefore have responded more to faces than to houses because of the greater effort required for discrimination.

The FFA’s lack of a differential response to different types of perceptual processing (featural or 2nd order) could mean either that it is not engaged in these types of processing or that it is engaged in all processing types to the same degree. Liu, Harris, and Kanwisher (2010) reported that the FFA is sensitive to the presence of facial features and to their 1st order configuration (i.e., the arrangement of the eyes above the nose, which is above the mouth), and that sensitivity to these two kinds of information is correlated, suggesting that the FFA is engaged in both featural and 1st order configural processing. However, other components of the face network may be even more strongly engaged in these processing types, a possibility that can be addressed with a voxel-wise whole-brain analysis, as conducted by Maurer et al. (2007). They showed that contrasting 2nd order configural with featural processing of faces did not isolate the FFA but instead isolated an FFA-adjacent region as well as right frontal regions (whereas contrasting featural with 2nd order processing isolated left frontal cortex). Lobmaier, Klaver, Loenneker, Martin, and Mast (2008) also compared featural and configural processing directly and did not find a greater right FFA response to configural information. The left FFA, left lingual gyrus, and left parietal cortex, however, showed a greater response to facial featural information. Another way to probe the neural substrates of processing type is through face inversion, which may disrupt configural processing, more strongly engage featural processing, or both. Some studies show no difference in FFA activation to inverted and upright faces (Joseph et al., 2006; Leube et al., 2003), but others show an enhanced FFA response (Yovel & Kanwisher, 2004). However, inversion is an indirect approach to examining differences in featural and 2nd order processing.
In sum, findings are mixed as to whether the FFA is engaged to the same degree for different types of face processing or whether other regions are responsible for such processing. Direct comparisons of processing types must ensure that the conditions are equated for difficulty, and a lack of differential response to processing types cannot by itself be taken as evidence for domain-specificity.

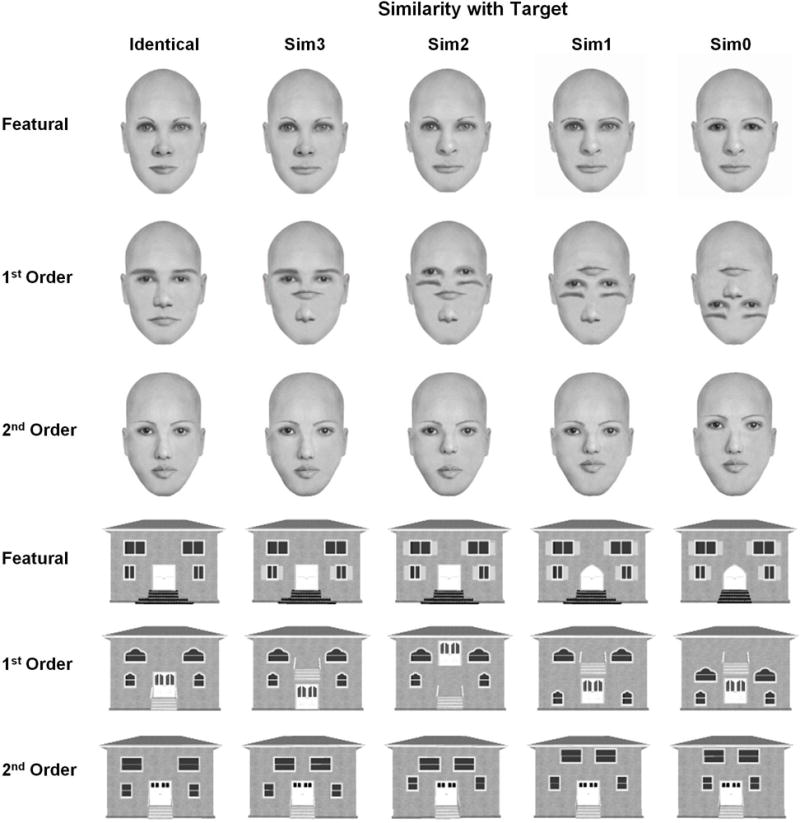

To directly probe the degree of domain- and process-specificity for faces, the present study parametrically manipulated degree of similarity related to three different types of perceptual face processing (1st order configural, 2nd order configural and featural). Parametric manipulation of similarity has the advantage of changing demands on processing in a graded fashion, thereby directly tapping into differential processing of perceptual information in a quantitative manner. For example, two featurally dissimilar faces will be easier to discriminate than two faces sharing several features (Figure 2). The greater difficulty of discriminating two similar faces is related to the featural similarity manipulation in this example. We expect that increasing the similarity of two stimuli will require a greater degree of processing for that specific type of information (featural, 1st order or 2nd order) and will result in monotonically increasing functions for behavioral performance (increased reaction time or errors) and fMRI signal. Prior research has shown that parametrically varied perceptual similarity of objects successfully modulates fMRI signal in brain regions like the lateral occipital complex (LOC; Drucker & Aguirre, 2009) and ventral temporal cortex (Joseph & Gathers, 2003; Liu, Steinmetz, Farley, Smith, & Joseph, 2008). Similarity manipulations that use morphing of face identities also modulate ERP response amplitude proportionally (Kahn, Harris, Wolk, & Aguirre, 2010).

Figure 2.

Sample stimuli used in the present study. A sample target stimulus is shown in the “Identical” column. The Sim3–Sim0 columns illustrate progressively less similarity with the target as more features, 1st order relations, or 2nd order relations are changed. For example, featural face changes were created by changing the lips of the target face (Sim3), the lips and nose (Sim2), the lips, nose, and eyebrows (Sim1), or the lips, nose, eyebrows, and eyes (Sim0). Although single stimuli are illustrated here, stimuli were presented in pairs, so that the target and the Sim3 stimulus form a Sim3 pair.

The present parametric design addresses the following concerns with prior studies. First, this approach directly manipulates the processing types of interest rather than relying on an indirect approach, such as inversion, to infer what type of perceptual information is processed in different brain regions. Second, the present design does not rely exclusively on a qualitative comparison of different processing types, which may or may not be equated for difficulty. The main hypothesis is that if a given region is engaged in a specific type of processing, that region will show modulation by greater demands on that processing (i.e., a monotonically increasing similarity function). In the present framework, modulation of fMRI signal by increasing processing demands is the main evidence for processing of different kinds of perceptual information, with less emphasis on differences in fMRI signal magnitude between qualitatively different conditions (such as the difference in average response magnitude between featural and 2nd order processing). Third, like Yovel and Kanwisher (2004), the present approach compares faces with another visually homogeneous category (houses), which, as with faces, have a constant external contour while the same relations or types of features are systematically changed across similarity levels. Fourth, the present study compares three types of perceptual processing that are relevant for faces and objects: featural, 1st order configural, and 2nd order configural. Prior fMRI studies (Liu et al., 2010; Lobmaier et al., 2008; Maurer et al., 2007; Yovel & Kanwisher, 2004) compared only two of these types of processing in the same study.

Regions were isolated with (1) a localizer task that presented blocks of faces, objects, and visual textures to define face-preferential and object-preferential regions, and (2) the perceptual differentiation task, in which a similarity-weighted regressor representing the four similarity levels in Figure 2 isolated regions modulated by featural, 1st order, or 2nd order similarity in the face condition. Within each region (isolated by either method), percent signal change in the matching task was extracted for each similarity level, category (faces or houses), and processing type (featural, 1st order, or 2nd order). Follow-up ANOVAs determined whether each region was also tuned to the non-preferred category, to particular processing types, or to both.

If a given region of the face network is domain-specific, then it should be sensitive to face processing and insensitive to processing type. This account predicts that the highest order interaction for that region would be a Category × Similarity interaction of the form shown in Figure 1a. Alternatively, if a given region is process-specific, then it should be sensitive to one processing type but not differentially sensitive to category, yielding a Processing Type × Similarity interaction of the form shown in Figure 1b. A third account, referred to as “category-optimized,” hypothesizes that face processing engages configural processing to a greater degree than does non-face processing, whereas house processing engages featural processing to a greater degree than does face processing. In this case, the highest order interaction would be a Category × Processing Type × Similarity interaction of the form shown in Figure 1c. A final possibility (generalized perceptual differentiation) is that a brain region is engaged in perceptual differentiation in a generalized sense. This account also predicts a Category × Processing Type × Similarity interaction, but of the form shown in Figure 1d. In this case, perceptual differentiation is not easily reduced to clear-cut category and processing type distinctions because the effects of these variables are non-additive.
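To make the four predicted patterns concrete, the sketch below maps the set of Category × Processing Type cells that show monotonic similarity modulation onto the account each pattern would favor. It is a hypothetical strict-matching illustration, not the procedure used here (the study adjudicates among accounts with ANOVA interactions and simple effects); the function name, cell labels, and the "unclassified" fallback for partial patterns are assumptions introduced for illustration.

```python
from itertools import product

CATEGORIES = ("face", "house")
PROCESSES = ("featural", "first_order", "second_order")


def classify_account(differentiates):
    """Map cells with monotonic similarity modulation onto an account.

    `differentiates` is a hypothetical dict {(category, process): bool}
    marking which Category x Processing Type cells showed perceptual
    differentiation. Matching is strict; partial patterns are "unclassified".
    """
    cells = {cell for cell in product(CATEGORIES, PROCESSES)
             if differentiates.get(cell, False)}
    if not cells:
        return "none"
    # Domain-specific (strong form): faces differentiate for every processing type, houses never.
    if cells == {("face", p) for p in PROCESSES}:
        return "domain-specific"
    # Process-specific: one processing type differentiates for both categories.
    for p in PROCESSES:
        if cells == {(c, p) for c in CATEGORIES}:
            return "process-specific"
    # Category-optimized: configural processing for faces, featural for houses.
    if cells == {("face", "first_order"), ("face", "second_order"),
                 ("house", "featural")}:
        return "category-optimized"
    # Generalized: differentiation crosses both category and processing-type lines.
    categories = {c for c, _ in cells}
    processes = {p for _, p in cells}
    if len(categories) == 2 and len(processes) >= 2:
        return "generalized"
    return "unclassified"


faces_all = {("face", p): True for p in PROCESSES}
print(classify_account(faces_all))  # domain-specific
```

Because processing type was manipulated between subjects, each cell in such a table would summarize a group-level result rather than a single participant's data.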

Method

Participants

Fifty-nine healthy right-handed volunteers (mean age = 26.5 years, SD = 6.0, range 18–42; 29 men) were compensated or received course credit for participation. Data from eight participants were excluded because of excessive head motion (> 1.75 mm), leaving 51 participants for analysis. No participants reported neurological or psychiatric diagnoses or pregnancy, and all provided informed consent before participating. All procedures were approved by the University’s Institutional Review Board.

Design and Stimuli

This was a 2 (category: faces, houses) × 3 (processing type: featural, 1st order, 2nd order) × 4 (similarity level: 0, 1, 2, 3, where 0 indicated no features or relations in common and 3 indicated that 3 features or relations were in common between paired stimuli) mixed blocked design. Participants were assigned to the featural (n=16, 8 men, mean age = 27.1, SD = 5.2), 1st order (n=17, 8 men, mean age = 25.8, SD = 5.6), or 2nd order (n=18, 10 men, mean age = 27.1, SD = 7.4) processing condition. Category and similarity were manipulated within-subjects.

Photo-realistic faces were constructed using FACES 4.0 software (IQ Biometrix, Redwood Shores, CA), and house stimuli were created using Chief Architect 10.06a (Coeur d’Alene, ID). Adobe Photoshop 5.5 (San Jose, CA) was used for 1st order configuration manipulations. Twenty-four faces were initially constructed so that none of their features overlapped, and these served as the basis for making featural, 1st order, and 2nd order changes and for constructing stimulus pairs. Although no pair was repeated, the same face (or house) appeared up to five times in different similarity conditions across runs. Forty-eight identical (same) pairs per category and processing type were used (referred to as sim4; see Figure 2).

Featural changes

For each original face, distracter faces were constructed so that 1, 2, 3, or 4 features (eyes, nose, mouth, or eyebrows) were replaced, yielding 4 similarity (sim) levels (and 96 unique faces for each processing type). Sim0-sim3 faces respectively shared 0–3 common features with the target face. The feature changed for each sim level was counterbalanced across all stimulus pairs so that feature replacement was not confounded with sim level. The same procedures were used for house features (door, steps, lower-level and upper-level windows).
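One way to counterbalance which features are replaced at each sim level is a Latin-square-style rotation of the feature order across targets, with replacements nested so that each lower sim level adds one more replaced feature (as in the Figure 2 example). The sketch below assumes this rotation scheme; the text states only that feature replacement was counterbalanced, not how, and `replaced_features` is a hypothetical helper.

```python
from collections import Counter

FEATURES = ("eyes", "nose", "mouth", "eyebrows")
N_TARGETS = 24  # original faces, from the text


def replaced_features(target, sim):
    """Features replaced in the distractor for `target` at sim level `sim`.

    sim3 replaces 1 feature and sim0 replaces all 4. The feature order is
    rotated by target index (a hypothetical counterbalancing scheme), and
    lower sim levels extend the higher level's replacements (nested changes).
    """
    k = target % len(FEATURES)
    order = FEATURES[k:] + FEATURES[:k]
    return order[:len(FEATURES) - sim]


# Count how often each feature is replaced at each sim level.
tally = {sim: Counter() for sim in range(4)}
n_distractors = 0
for target in range(N_TARGETS):
    for sim in range(4):
        tally[sim].update(replaced_features(target, sim))
        n_distractors += 1

print(n_distractors)   # 96 distractors per processing type
print(dict(tally[3]))  # each feature is replaced 6 times at sim3
```

With 24 targets and 4 rotations, every feature is replaced equally often at every sim level, so feature identity is not confounded with sim level.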

1st order changes

The 1st order face changes were: (a) eyes above nose, (b) eyes above mouth, (c) nose above mouth, or (d) eyebrows above eyes. The 1st order house changes were: (a) lower windows above / level with the door, (b) upper windows above steps, (c) door above steps, or (d) upper windows above door. The relation changed for each sim level was counterbalanced across all stimulus pairs so that relation replacement was not confounded with sim level.

2nd Order Changes

The 2nd order face changes were: (a) horizontal distance between the centroids of the two eyes, (b) vertical distance between the centroid of the nose and the top of the forehead, (c) vertical distance between the centroid of the mouth and the top of the forehead, and (d) vertical distance between the center of the two eyes and the top of the forehead. For faces, an initial spacing change of 2 SD from the Farkas (1994) norms was used but was increased to 3 SD after the 2 SD change proved too difficult to detect. The house changes were: (a) horizontal distance between the centroids of the two lower windows, (b) horizontal distance between the centroids of the two upper windows, (c) vertical distance between the center of the lower windows and the bottom of the roof, and (d) vertical distance between the center of the upper windows and the bottom of the roof. Again, the relation changed for each sim level was counterbalanced across all pairs to avoid confounding with sim level.

Procedure

Each participant completed five functional runs in counterbalanced order: two face-matching runs, two house-matching runs, and a face localizer run. Each face and house run consisted of eight task blocks, two per sim level. Each task block (27.5 s) consisted of eight trials: five “different” trials at the block’s sim level and three sim4 (“same”) trials. This 5:3 ratio of “different” to “same” trials was used because the process of interest, perceptual differentiation, is most relevant on “different” trials; the ratio allowed us to sample more of the relevant behavior while including enough “same” trials that responding was not completely biased toward “different.” Prior studies (e.g., Joseph & Gathers, 2003) showed that performance on “same” trials did not vary as a function of similarity level, whereas performance on “different” trials did, as expected.

For each trial, participants saw either two faces or two houses for 2900 ms followed by a fixation interval for 538 ms. Participants indicated whether the two stimuli were the same (index finger) or different (middle finger) using a fiber-optic response pad (MRA Inc., Washington, PA). Participants could respond at any point during the trial. A 12.5-s rest period occurred between blocks and each task block onset was triggered by a scanner pulse. The face localizer run consisted of nine blocks (3 each of face, object, or texture) lasting 17.5 s each, with 10 interleaved fixation blocks (12.5 s each). During each block, 10 different yearbook faces, common objects or visual textures appeared for 1000 ms followed by a fixation of 750 ms. Participants pressed a button each time a stimulus appeared to ensure attentive processing.

fMRI Data Acquisition and Analysis

Images were acquired using a Siemens 3T Trio MRI system (Erlangen, Germany): one 109-volume (272.5 s) face localizer scan and four 133-volume (332.5 s) task scans (gradient echo EPI; TE = 30 ms, TR = 2500 ms, flip angle = 80°, FOV = 22.4 × 22.4 cm, interleaved acquisition, 38 contiguous 3.5-mm axial slices for the face localizer scan and 40 slices for the task scans). Hence, the total number of brain volumes used to sample perceptual differentiation behavior was 352 per subject (88 task-block volumes per run × 4 functional runs). A T1-weighted MPRAGE (TE = 2.56 ms, TR = 1690 ms, TI = 1100 ms, FOV = 25.6 × 22.4 cm, flip angle = 12°, 176 contiguous 1-mm sagittal slices) and a field map were also collected. E-Prime software (version 1, www.pstnet.com; Psychology Software Tools), running on a Windows computer connected to the MR scanner, presented visual stimuli and recorded the time of each MR pulse, visual stimulus onset, and behavioral responses.
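The run timing can be checked with simple arithmetic. The sketch below uses the trial, block, rest, and TR values reported in the Procedure and acquisition parameters; the assumption that a rest period also preceded the first block and followed the last one (nine rests per run) is ours, chosen because it reproduces the reported 133 volumes per task run.

```python
TR = 2.5                      # s
TRIAL_S = 2.900 + 0.538       # stimulus + fixation per trial, s
TRIALS_PER_BLOCK = 8
BLOCK_S = 27.5                # reported block length, s
BLOCKS_PER_RUN = 8
REST_S = 12.5                 # rest between blocks, s
N_RESTS = BLOCKS_PER_RUN + 1  # assumption: rest before the first and after the last block
TASK_RUNS = 4

block_from_trials = TRIALS_PER_BLOCK * TRIAL_S              # about 27.504 s
task_vols_per_run = round(BLOCKS_PER_RUN * BLOCK_S / TR)    # 88 volumes
run_s = BLOCKS_PER_RUN * BLOCK_S + N_RESTS * REST_S         # 332.5 s
run_vols = round(run_s / TR)                                # 133 volumes
total_task_vols = TASK_RUNS * task_vols_per_run             # 352 volumes

print(task_vols_per_run, run_s, run_vols, total_task_vols)
```

The same TR gives 109 × 2.5 s = 272.5 s for the localizer scan.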

Preprocessing and statistical analysis were conducted using FSL (v. 4.1.7, FMRIB, Oxford University, Oxford, UK). For each subject, preprocessing included motion correction with MCFLIRT, brain extraction using BET, spatial smoothing with a 7-mm FWHM Gaussian kernel, and temporal high-pass filtering (cutoff = 100 s). Statistical analyses were performed at the single-subject level (GLM, FEAT v. 5.98). Each localizer time series was modeled with three explanatory variables (EVs: face, object, and texture) relative to the fixation baseline, each convolved with a double-gamma HRF and accompanied by its temporal derivative. Contrasts of interest were face > fixation, object > fixation, texture > fixation, face > object, face > texture, and object > texture. For each subject, face localizer contrast maps were registered to the MNI-152 template via the subject’s high-resolution T1-weighted anatomical image (12-parameter affine transformation; FLIRT), yielding images with a spatial resolution of 2 × 2 × 2 mm. Mixed-effects group analyses (FLAME1+2) yielded the group-level statistical parametric map of each contrast. Group-level maps were cluster thresholded (Worsley, 2001) at a corrected significance of p = 0.05, with Z > 2.44 for faces or Z > 4.86 for objects (minimum cluster size = 831 voxels or 1 voxel, respectively). A higher threshold was used for objects because the object clusters were large and not easily decomposed at a lower threshold. Face-preferential regions were then isolated using logical combination (Joseph & Gathers, 2002; Joseph, Partin, & Jones, 2002; Joseph, Gathers, & Bhatt, 2011) of the group-level contrasts: face > object and face > texture and face > fixation. Object-preferential regions were identified by logical combination of object > texture and object > face and object > fixation.
The logical combination was conducted at the group level rather than the individual-subject level to avoid the possibility that a given subject would show no above-threshold activation for the combination of contrasts, which would produce zeros in the follow-up analyses. Because logical intersection was applied to the cluster-thresholded maps, the resulting clusters could be smaller than the minimum cluster size. Hypothesis testing was conducted with confirmatory repeated measures ANOVAs across subjects, with Bonferroni-corrected (alpha = .017) post-hoc tests. These ANOVAs tested the main effect of category (face, object, texture) on percent signal change relative to baseline, with planned contrasts using face (or object, for object-preferential regions) as the reference category. All of the face-preferential regions in Table 1 showed a significantly greater response to faces than to objects or textures (all p’s < .017; similarly for object-preferential regions), except the left amygdala, in which one comparison fell short of significance (p = .02). In addition, all regions showed face (object) > fixation in a one-sample t-test against 0 (all p’s < .017). Therefore, the preferential regions in Table 1 showed a stronger response to the condition of interest (face or object) than to the three other conditions (the two other categories and fixation).
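The logical combination amounts to a voxelwise AND across thresholded contrast maps. The toy sketch below represents each group-level map as a dictionary from voxel indices to Z values and keeps voxels that exceed the threshold in every map. It omits the cluster-extent correction that FSL applies before intersection, and the maps and values are hypothetical.

```python
def suprathreshold(zmap, z_thresh):
    """Voxels whose Z value exceeds z_thresh (no cluster-extent step)."""
    return {vox for vox, z in zmap.items() if z > z_thresh}


def logical_intersection(zmaps, z_thresh):
    """Voxels that survive the threshold in every map (logical AND)."""
    masks = [suprathreshold(m, z_thresh) for m in zmaps]
    return set.intersection(*masks)


# Hypothetical group-level Z values at three voxels (i, j, k indices).
face_gt_object = {(0, 0, 0): 4.1, (0, 1, 0): 3.0, (1, 0, 0): 1.9}
face_gt_texture = {(0, 0, 0): 5.2, (0, 1, 0): 2.1, (1, 0, 0): 3.3}
face_gt_fixation = {(0, 0, 0): 6.0, (0, 1, 0): 4.4, (1, 0, 0): 2.8}

face_preferential = logical_intersection(
    [face_gt_object, face_gt_texture, face_gt_fixation], z_thresh=2.44)
print(face_preferential)  # {(0, 0, 0)}
```

Because only voxels surviving all three maps are kept, an intersected region can be smaller than any of the clusters it came from, which is why some Table 1 regions fall below the minimum cluster size.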

Table 1.

Regions of Interest Isolated by the Face Localizer Task

| Region | Size | x | y | z | Category F(1, 48) | Similarity F(3, 144) | Processing type F(2, 48) | C × S F(3, 144) | C × P F(2, 48) | P × S F(6, 144) | C × P × S F(6, 144) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| RFFA | 312 | 43 | −52 | −21 | 28.1<sup>a</sup> | – | – | 5.48<sup>a</sup> | 5.4<sup>b</sup> | 2.6<sup>c</sup> | – |
| ROFA | 319 | 37 | −79 | −16 | – | 3.3<sup>c</sup> | 3.5<sup>c</sup> | – | 4.9<sup>c</sup> | – | – |
| RAMG | 963 | 24 | −12 | −12 | 25.4<sup>a</sup> | – | – | – | – | – | – |
| RIFG | 1531 | 47 | 26 | 7 | 14.5<sup>a</sup> | 4.9<sup>b</sup> | – | 3.57<sup>c</sup> | – | – | 2.4<sup>c</sup> |
| RoLOC\* | 416 | 35 | −82 | 9 | 72.1<sup>a</sup> | 10.6<sup>a</sup> | – | – | 3.6<sup>c</sup> | 2.5<sup>c</sup> | – |
| RtLOC\* | 31 | 44 | −62 | −4 | 5.2<sup>c</sup> | 5.1<sup>a</sup> | – | – | 11.9<sup>a</sup> | – | – |
| RfLOC\* | 273 | 28 | −43 | −14 | 223.5<sup>a</sup> | – | – | 2.96<sup>c</sup> | 6.7<sup>b</sup> | – | – |
| RCAS | 592 | 15 | −92 | −1 | 13.9<sup>b</sup> | – | – | – | 4.8<sup>c</sup> | – | – |
| LAMG | 410 | −20 | −12 | −12 | 16.5<sup>a</sup> | – | – | – | – | – | – |
| LoLOC\* | 306 | −35 | −85 | 9 | 59.0<sup>a</sup> | 8.5<sup>a</sup> | – | 3.55<sup>c</sup> | 6.1<sup>b</sup> | – | – |
| LtLOC\* | 329 | −42 | −66 | −2 | 19.7<sup>a</sup> | 9.6<sup>a</sup> | – | – | 25.0<sup>a</sup> | – | 2.8<sup>c</sup> |
| LfLOC\* | 308 | −29 | −46 | −13 | 175<sup>a</sup> | – | – | 3.3<sup>c</sup> | 6.4<sup>b</sup> | – | – |

Notes. Coordinates (x, y, z) are in mm. Category, Similarity, and Processing type are main effects; C × S, C × P, P × S, and C × P × S are interactions (C: category; P: processing type; S: similarity). A dash indicates a nonsignificant effect. <sup>a</sup> p < .001. <sup>b</sup> p < .01. <sup>c</sup> p < .05. \* These regions were isolated as object-preferential; all other regions are face-preferential. AMG: amygdala; CAS: calcarine sulcus; FFA: fusiform face area; IFG: inferior frontal gyrus; L: left; OFA: occipital face area; fLOC: lateral occipital complex, fusiform portion; oLOC: lateral occipital complex, occipital portion; tLOC: lateral occipital complex, temporal portion; R: right. Size is in voxels with spatial resolution of 2 × 2 × 2 mm. Minimum cluster extent for each individual contrast prior to logical combination was 831 to 920 voxels for face-preferential contrasts (Z > 2.44) and 1 voxel for object-preferential contrasts (Z > 4.86). However, cluster sizes could be smaller than this after logical intersection. In addition, the LOC was manually divided into three segments based on anatomical boundaries, leading to smaller clusters.

For the face and house runs, the voxel-wise analyses modeled all sim-level blocks with one task EV (all with the same event strength) and a second similarity EV (higher sim blocks were assigned a higher event strength). This approach controls for the overall task effect while isolating fMRI signal modulation due to sim. Assigning the values 1 through 4 as event strengths for the different sim-level blocks is consistent with the experimental manipulation, in that each successive similarity level shares one more feature or spatial relation with the target than the prior level. Statistical maps from mixed-effects group analyses (FLAME1+2) for the task and similarity EVs were cluster thresholded (Z > 3.1, corrected significance of p = 0.05; minimum cluster size = 225 voxels) and then combined using logical intersection (“and”).
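The two EVs can be sketched as boxcars sampled once per volume. This is a minimal illustration under stated assumptions: the block order is hypothetical, a 12.5-s rest is assumed before every block and after the last, and HRF convolution (double-gamma in FEAT) is omitted. The similarity heights are left at 1–4 as described.

```python
REST_VOLS = 5    # 12.5 s rest / 2.5 s TR
BLOCK_VOLS = 11  # 27.5 s block / 2.5 s TR


def block_regressors(block_sims):
    """Unconvolved task and similarity EVs sampled once per volume.

    block_sims: sim level (0-3) of each task block, in presentation order.
    Assumes a rest before every block and one after the last block.
    The task EV has height 1 in every block; the similarity EV has
    height sim + 1, so sim0..sim3 blocks get event strengths 1..4.
    """
    task, similarity = [], []
    for sim in block_sims:
        task += [0.0] * REST_VOLS + [1.0] * BLOCK_VOLS
        similarity += [0.0] * REST_VOLS + [float(sim + 1)] * BLOCK_VOLS
    task += [0.0] * REST_VOLS
    similarity += [0.0] * REST_VOLS
    return task, similarity


# Hypothetical block order: two blocks per sim level.
order = [0, 3, 1, 2, 2, 1, 3, 0]
task, similarity = block_regressors(order)
print(len(task), sum(task), sum(similarity))  # 133 88.0 220.0
```

Mean-centering the similarity heights is a common way to reduce their correlation with the task EV; whether that was done here is not stated, so the sketch keeps the raw 1–4 values.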

For each face-preferential, object-preferential, and face-matching ROI, percent signal change relative to fixation (all EV heights of 1) was extracted for each sim level and category from each subject’s first-level analysis (using Featquery). For the ROIs from the localizer task, the percent signal change extracted from the matching runs was logically independent of the signal used to define the ROIs. Each matching-task ROI was defined from only 1 of the 6 Category × Processing Type conditions (e.g., featural faces); therefore, 83% (5/6) of the data were logically independent of the data used to define the ROI. In fact, 67% (4/6) of the percent signal change data in matching-task ROIs were completely independent because they came from different subjects (processing type was a between-subjects factor). We acknowledge that within featural-face ROIs, for example, we would expect the effect of similarity to be significant for featural faces because that is how the ROI was defined. However, the critical aspect of hypothesis testing was whether the similarity effect also emerged for the other 5 conditions. Therefore, although there is a small degree of dependence in the data, the critical hypotheses are based on data that are logically independent of the data used to define the ROIs.

For each ROI, percent signal change for the 4 sim levels × 2 categories was submitted to a 3 (processing type: featural, 1st order, 2nd order) × 4 (sim) × 2 (category: face, house) repeated measures ANOVA with processing type as the between-subjects factor. Reaction time on each trial was log-transformed to normalize the RT distribution. Outliers were defined as trials more than three standard deviations above or below the mean RT (0.1% of the data). Error rate and correct log-transformed reaction time (logRT) were initially analyzed with a 3 (processing type) × 4 (sim level) × 2 (category) × 2 (trial type: same, different) repeated measures ANOVA, with processing type as a between-subjects factor, to demonstrate that the similarity manipulation was more pronounced for “different” trials. Having established this (see Results), we then collapsed over trial types and conducted a 3 (processing type) × 4 (sim level) × 2 (category) repeated measures ANOVA for errors and logRT. Collapsing over trial type for the analysis of errors and logRT was also consistent with the ROI repeated measures ANOVAs, because the block design did not allow deconvolution of the fMRI signal for specific trials. For all repeated measures ANOVAs, results from the univariate tests are reported because there were no sphericity violations, following guidelines by Hertzog and Rovine (1985). When interactions with sim emerged, we used simple effects analysis (Keppel & Zedeck, 1989) and planned polynomial contrasts to determine whether the sim effect was monotonically increasing. A monotonically increasing effect was indicated by (a) a significant linear fit with a positive slope or (b) a significant quadratic fit in which repeated contrasts of successive sim levels indicated that at least one sim level was greater than the prior level.
If the best polynomial fit was cubic, or if the quadratic fit indicated local decreases for any adjacent sim levels, then the sim effect was not monotonic. Establishing positive monotonicity was critical for concluding that perceptual differentiation was exhibited in a given ROI. Below, when we report simple effects of sim, those effects were monotonically increasing according to the above definition.
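The monotonicity criterion can be expressed as a decision rule. The sketch below computes orthogonal polynomial contrasts for four sim levels (linear −3, −1, 1, 3; quadratic 1, −1, −1, 1; cubic −1, 3, −3, 1) and repeated contrasts of successive levels as one-sample t-tests on per-subject contrast scores, which for a single group is equivalent to a within-subjects contrast F = t². It is an illustration, not the analysis reported here: it ignores the between-subjects factor, takes the critical t as an input, and treats the "best" fit as the significant component with the largest |t|, which is an assumption.

```python
import math
import statistics

LINEAR = (-3, -1, 1, 3)
QUADRATIC = (1, -1, -1, 1)
CUBIC = (-1, 3, -3, 1)


def t_stat(scores):
    """One-sample t statistic of contrast scores against zero."""
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)
    if sd == 0:
        return math.copysign(math.inf, mean) if mean else 0.0
    return mean / (sd / math.sqrt(len(scores)))


def contrast_t(data, weights):
    """t for a polynomial contrast; data rows are subjects, columns sim0..sim3."""
    return t_stat([sum(w * y for w, y in zip(weights, row)) for row in data])


def repeated_t(data):
    """t for each successive difference: sim1-sim0, sim2-sim1, sim3-sim2."""
    return [t_stat([row[i + 1] - row[i] for row in data]) for i in range(3)]


def monotonic_increasing(data, t_crit):
    """Apply the monotonicity rule; t_crit is a two-tailed critical t."""
    fits = {"linear": contrast_t(data, LINEAR),
            "quadratic": contrast_t(data, QUADRATIC),
            "cubic": contrast_t(data, CUBIC)}
    significant = {k: t for k, t in fits.items() if abs(t) > t_crit}
    if not significant:
        return False
    # Assumption: "best fit" = significant component with the largest |t|.
    if max(significant, key=lambda k: abs(significant[k])) == "cubic":
        return False
    # (a) significant linear trend with a positive slope.
    if "linear" in significant and fits["linear"] > 0:
        return True
    # (b) significant quadratic trend, no local decrease, at least one increase.
    if "quadratic" in significant:
        steps = repeated_t(data)
        return (all(t >= -t_crit for t in steps)
                and any(t > t_crit for t in steps))
    return False


# Hypothetical percent signal change for 5 subjects at sim0..sim3.
increasing = [[1.0, 2.0, 3.0, 4.0], [1.1, 2.2, 2.9, 4.1], [0.9, 1.8, 3.1, 3.9],
              [1.0, 2.1, 3.0, 4.2], [1.2, 1.9, 3.2, 4.0]]
print(monotonic_increasing(increasing, t_crit=2.776))  # True (2.776: df = 4)
```

An inverted-U profile fails the rule even when its quadratic component is significant, because the last repeated contrast shows a local decrease.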

Results

Behavioral Results

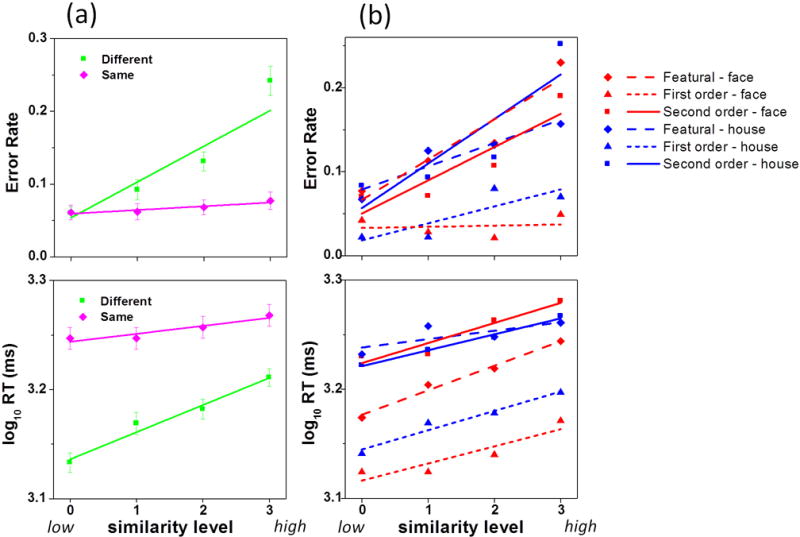

Behavior was first analyzed with a Similarity × Same/Different repeated measures ANOVA to establish that the effect of similarity was more pronounced on “different” than on “same” trials. This interaction was significant for both errors, F(3, 165) = 41.0, p < .0001, and logRT, F(3, 165) = 43.3, p < .0001. As shown in Figure 3a, modulation of behavior by similarity was driven more strongly by “different” than by “same” trials. The contribution of “same” trials was constant across sim levels for errors and nearly constant for logRT. Because the block design did not allow us to examine “same” and “different” responses separately in the fMRI data, and because the contribution of “same” trials to the similarity effect was nearly constant, all subsequent analyses collapsed across “same” and “different” trials.

Figure 3.

(a) log-transformed Reaction Time (logRT) and error rates as a function of similarity level and same/different responding. (b) RT and errors in each of the 6 experimental conditions. Error bars are standard errors.

As expected, both error rate and logRT increased as a function of perceptual similarity (Figure 3), demonstrating that this manipulation effectively modulated perceptual discrimination performance. For logRT, the main effect of similarity, F(3, 144) = 97.7, p = .0001, and the Category × Sim × Processing Type interaction, F(6, 144) = 2.3, p = .039, were significant. Simple main effects of sim in each of the six conditions (3 processing types × 2 categories) were all significant (p’s < .001). The main effect of processing type, F(2, 48) = 19.6, p = .0001, showed that 1st order responding was faster than 2nd order or featural responding. The main effect of category, F(1, 48) = 13.6, p = .001, indicated that RTs were longer for houses than for faces. This effect was further qualified by the Category × Processing Type interaction, F(2, 48) = 8.1, p = .001: responses to houses were slower than responses to faces for featural (p = .002) and 1st order processing (p = .004) but not for 2nd order processing.

For errors, the main effect of sim, F(3, 144) = 60.7, p = .0001, and the Category × Sim × Processing Type interaction, F(6, 144) = 4.7, p = .0001, were significant. Simple main effects of sim were significant for all processing type and category conditions (p’s < .002) except for 1st order face processing (p = .44). The main effect of processing type, F(2, 48) = 20.6, p = .0001, showed that 1st order processing was easier than 2nd order or featural processing. The Category × Processing Type interaction, F(2, 48) = 4.0, p = .024, and simple effects of category for each processing type indicated that houses were more difficult than faces for 2nd order processing (p = .015); error rates for houses and faces did not differ for featural and 1st order processing.

Regions of Interest from the Localizer Task

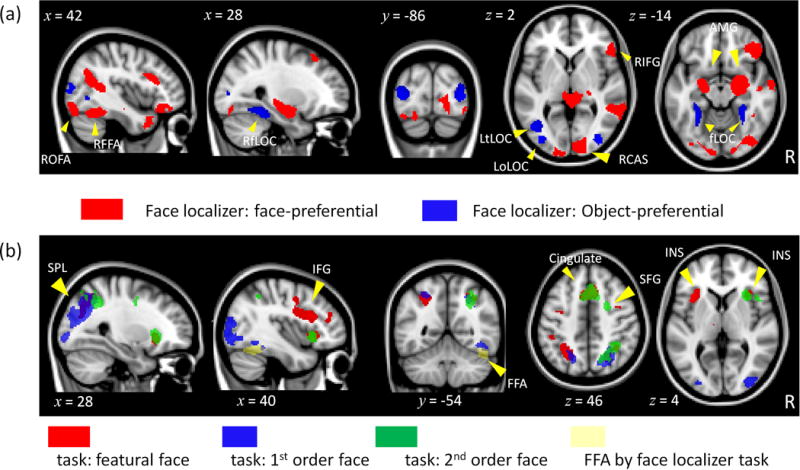

Face- or object-preferential regions are outlined in Table 1, with activation maps shown in Figure 4a. As expected, face-preferential regions included the right FFA, bilateral OFA, right inferior frontal gyrus (IFG), right STS, bilateral amygdala (AMG) and bilateral calcarine sulcus (CAS). Object-preferential regions included a large expanse of bilateral occipito-temporal cortex consistent with the lateral occipital complex (LOC; Malach et al., 1995). We manually divided the LOC into three portions: occipital, fusiform and temporal. ROIs with negative percent signal change in the matching task (brain stem, medial prefrontal cortex, right temporal pole, right STS, right temporal–parietal junction) and ROIs with no significant main effects or interactions (left OFA, left CAS) were not included in Table 1. The repeated measures ANOVA conducted in each face- (or object-) preferential region was expected to reveal a main effect of category, with fMRI signal greater for faces than houses or vice versa. The category main effect was significant in all ROIs except the right OFA and the right CAS (Table 1). However, the critical test was whether a region showed perceptual differentiation for the non-preferred category and for different processing types. Although the right FFA showed a sim effect only for faces (significant Category × Sim interaction and significant simple effects of sim for faces, p’s < .003), this sim trend was not monotonically increasing. Instead, the right FFA showed evidence for process specificity: the sim effect was significant and monotonically increasing only for 1st order processing (p < .001). The right IFG showed a significant 3-way interaction, with simple main effects of sim for featural and 1st order faces (p’s < .01), but the trend was monotonically increasing only for featural faces.
The right occipital LOC showed perceptual differentiation only for 1st order processing (Processing × Sim interaction; simple effect of sim, p < .009) regardless of category, thereby supporting process specificity. The left temporal LOC showed perceptual differentiation only for 1st order houses (significant three-way interaction; simple effect of sim, p < .004). The right OFA showed evidence for generalized perceptual differentiation: the main effect of sim was not further qualified by category or processing type.

Figure 4.

Group-level activation maps for (a) face-preferential (Z > 2.44, p = .05, corrected) and object-preferential (Z > 4.86, p = .05, corrected) activation in the localizer run and (b) featural (red), 1st order (blue) and 2nd order (green) matching in the task runs (Z > 3.1, p = .05, corrected).

Regions of Interest from the Face-matching Run

Regions that emerged from the logical combination of the task and sim-weighted EVs for faces for each processing type are listed in Table 2 and illustrated in Figure 4b. Featural face processing was associated with the most extensive activation, which included bilateral activation in the insula (INS), superior parietal lobule (SPL) and IFG, as well as activation in the cerebellum and anterior cingulate. Second-order and featural processing overlapped in the cingulate, right INS and right SPL, but 2nd order processing uniquely recruited the right superior frontal gyrus (SFG). First-order processing was associated with activation in bilateral SPL, which partially overlapped with featural and 2nd order activation, and in a right fusiform region somewhat consistent with the right FFA. The repeated measures ANOVA conducted in each region was expected to reveal monotonically increasing similarity functions for the processing type and category that isolated the region in the voxel-wise analysis. However, the critical test was how the region responded to the category and processing types not isolated in the voxel-wise analysis. As shown in Table 2, the majority of regions showed a significant Category × Sim × Processing interaction. Analysis of simple effects of sim for each condition revealed that these regions showed perceptual differentiation that crossed category and processing type boundaries, consistent with generalized perceptual differentiation. None of these regions showed perceptual differentiation only for 2nd order faces or featural houses (the category-optimized account). Three regions (the cerebellum from the featural task, and the anterior cingulate and right SFG from the 2nd order task) showed a Category × Sim interaction, which is potentially consistent with domain-specificity. However, analysis of simple effects of sim in all three regions revealed perceptual differentiation for both faces and houses, with different slopes for the two categories.
No region showed only a Processing × Sim interaction, which would have been consistent with process specificity.
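The logical combination of the task and sim-weighted EV maps can be pictured as a voxel-wise conjunction. The sketch below is a simplified, hypothetical illustration rather than the authors' pipeline: it assumes each map is a flattened list of Z values, keeps a voxel only if both Z values exceed 3.1 (the voxel threshold reported in the Table 2 notes), and omits the cluster-extent correction that was applied to each contrast before combination.

```python
# Voxel-wise Z threshold reported for the task runs.
Z_THRESHOLD = 3.1


def logical_intersection(z_task, z_sim, threshold=Z_THRESHOLD):
    """Return indices of voxels that exceed `threshold` in BOTH maps.

    z_task, z_sim: hypothetical flattened Z maps of equal length, e.g. from
    the task EV and the sim-weighted EV for one processing type.
    """
    if len(z_task) != len(z_sim):
        raise ValueError("Z maps must contain the same number of voxels")
    return [
        i
        for i, (z_a, z_b) in enumerate(zip(z_task, z_sim))
        if z_a > threshold and z_b > threshold
    ]


# Toy example: only voxels 0 and 3 survive in both maps.
print(logical_intersection([4.0, 2.0, 3.5, 5.1], [3.2, 4.0, 1.0, 3.3]))  # [0, 3]
```

Because a voxel must pass both tests, a surviving cluster can be smaller than either input cluster, which is consistent with some Table 2 regions falling below the per-contrast minimum extent.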

Table 2.

Regions of Interest Isolated in the Face-Matching Runs.

| Region | Size (voxels) | x (mm) | y (mm) | z (mm) | Category F(1, 48) | Similarity F(3, 144) | Processing type F(2, 48) | C × S F(3, 144) | C × P F(2, 48) | P × S F(6, 144) | C × P × S F(6, 144) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Cingulate (F) | 520 | 2.3 | 19.0 | 41.9 | – | 12.5a | 6.8b | 3.8c | – | – | 2.3c |
| RINS (F) | 378 | 36.0 | 20.8 | −3.5 | – | 18.6a | 8.0b | 5.0b | – | – | 2.9c |
| LINS (F) | 322 | −32.8 | 20.5 | −0.7 | – | 8.7a | 6.1b | 5.6b | – | – | 3.5b |
| RIFG (F) | 856 | 46.7 | 14.6 | 22.9 | 8.3b | 11.1a | 4.6c | 3.6c | – | – | 4.4a |
| LIFG (F) | 125 | −42.7 | 2.9 | 27.7 | – | 7.3a | – | – | 6.4b | – | 3.0b |
| RSPL (F) | 283 | 24.8 | −65.6 | 40.5 | 63.4a | 18.0a | – | 3.3c | 5.9b | – | 3.0b |
| LSPL (F) | 387 | −27.0 | −56.1 | 44.0 | 24.0a | 15.6a | – | – | 5.8b | – | 2.9c |
| Cerebellum (F) | 229 | −7.4 | −75.6 | −30.1 | – | 8.3a | – | 4.1b | – | – | – |
| LSPL (1) | 766 | −26.8 | −72.8 | 30.9 | 95.9a | 13.3a | – | 3.0c | 10.9a | – | 2.3c |
| RSPL (1) | 2142 | 31.3 | −74.7 | 22.3 | 56.3a | 13.2a | – | 3.3c | 8.6b | 2.7c | – |
| Above RFFA (1) | 206 | 46.0 | −54.9 | −13.0 | – | 4.9b | – | 5.8b | 2.8c | – | |
| RSPL (2) | 684 | 26.7 | −56.3 | 46.3 | 39.0a | 18.8a | 5.1c | 3.0c | – | 2.6c | 3.3b |
| RINS (2) | 484 | 35.1 | 20.2 | −1.4 | – | 18.9a | 7.2b | 4.7b | – | – | 3.1b |
| Cingulate (2) | 842 | 3.0 | 18.0 | 43.1 | – | 11.4a | 7.6b | 3.6c | – | – | – |
| RSFG (2) | 116 | 24.0 | −0.1 | 45.8 | 23.0a | 11.6a | 7.0b | 3.8c | – | – | – |

Notes.

a p < .001; b p < .01; c p < .05; – indicates non-significant results. C: category; S: similarity; P: processing type. Regions were isolated from featural (F), first-order (1), or second-order (2) face processing. INS: insula; IFG: inferior frontal gyrus; L: left; R: right; SPL: superior parietal lobule; FFA: fusiform face area; SFG: superior frontal gyrus. Size is in voxels, with a spatial resolution of 2 × 2 × 2 mm. Minimum cluster extent for each individual contrast prior to logical combination was 225 to 305 voxels (Z > 3.1); cluster sizes could be smaller than this after logical intersection.

Aggregate regions

Given the large number of ROIs in Tables 1 and 2 and the fact that several of these ROIs overlapped, we aggregated the ROIs into six broad anatomical groupings: (a) amygdala, (b) occipito-temporal cortex, (c) occipital cortex, (d) insula-anterior cingulate, (e) lateral frontal cortex, and (f) parietal cortex (Table 3). Percent signal change was averaged over individual ROIs in each aggregate group (by category, sim and processing type conditions) and critical hypotheses outlined in Figure 1 were tested using repeated measures ANOVAs (Table 3) with category and similarity as repeated factors and processing type as the between-subjects factor.
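Averaging percent signal change over the component ROIs of an aggregate region is a simple per-subject operation. The sketch below is hypothetical: it assumes a dictionary that maps each ROI name to one subject's percent signal change per (category, sim level) cell. Processing type does not appear as a key because it was a between-subjects factor, so each subject contributes only category × sim cells.

```python
from statistics import mean


def aggregate_signal(roi_signal, components):
    """Average percent signal change across the ROIs in one aggregate region.

    roi_signal: {roi_name: {(category, sim_level): percent_signal_change}}
                for a single subject (hypothetical layout).
    components: ROI names belonging to the aggregate region.
    """
    cells = roi_signal[components[0]].keys()
    return {cell: mean(roi_signal[roi][cell] for roi in components) for cell in cells}


# Toy example for the amygdala aggregate (made-up values, two cells only).
subject = {
    "RAMG (FL)": {("face", 1): 0.4, ("house", 1): 0.2},
    "LAMG (FL)": {("face", 1): 0.6, ("house", 1): 0.4},
}
print(aggregate_signal(subject, ["RAMG (FL)", "LAMG (FL)"]))
```

The averaged cells for every subject would then feed the Category × Similarity × Processing Type ANOVA in the same way as the individual ROIs.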

Table 3.

Aggregate Region of Interest Analysis

| Region | Components | Category F(1, 48) | Similarity F(3, 144) | Processing type F(2, 48) | C × S F(3, 144) | C × P F(2, 48) | P × S F(6, 144) | C × P × S F(6, 144) |
|---|---|---|---|---|---|---|---|---|
| Amygdala | RAMG (FL), LAMG (FL) | 21.8a | – | – | – | – | – | – |
| Occipital | RoLOC (FL), RtLOC (FL), RfLOC (FL), RCAS (FL), LoLOC (FL), LtLOC (FL), LfLOC (FL) | 111.1a | 5.1b | – | 3.3c | 13.0a | – | – |
| Occipito-temporal | RFFA (FL), ROFA (FL), Above RFFA (1) | 9.7c | 2.8c | – | 3.7c | 4.9c | 2.2c | – |
| Parietal | RSPL (F), LSPL (F), RSPL (1), LSPL (1), RSPL (2) | 60.9a | 17.8a | – | 3.0c | 7.2b | 2.4c | 2.8c |
| Insula-cingulate | RINS (F), LINS (F), Cingulate (F), RINS (2), Cingulate (2) | – | 14.4a | 8.5b | 5.2b | – | – | 3.1b |
| Lateral prefrontal | RIFG (FL), RIFG (F), LIFG (F), RSFG (2) | – | 9.4a | 3.8c | 3.9c | – | – | 3.1b |

Notes.

a p < .001; b p < .01; c p < .05; – indicates non-significant results. C: category; S: similarity; P: processing type. Regions were isolated from face localizer (FL), featural (F), first-order (1), or second-order (2) matching. L: left; R: right; AMG: amygdala; CAS: calcarine sulcus; FFA: fusiform face area; IFG: inferior frontal gyrus; INS: insula; fLOC: lateral occipital complex, fusiform portion; oLOC: lateral occipital complex, occipital portion; tLOC: lateral occipital complex, temporal portion; OFA: occipital face area; SFG: superior frontal gyrus; SPL: superior parietal lobule.

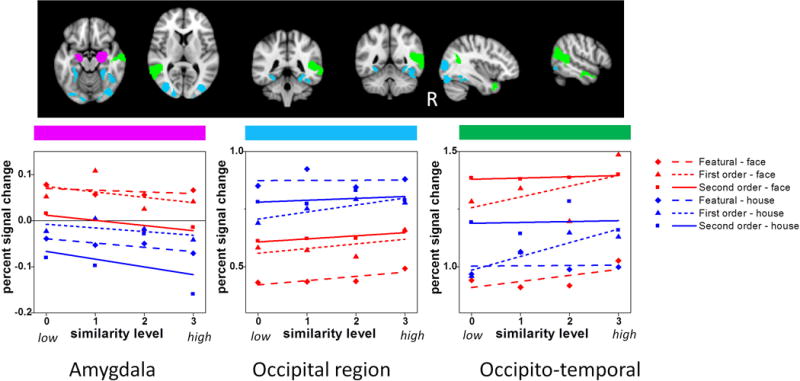

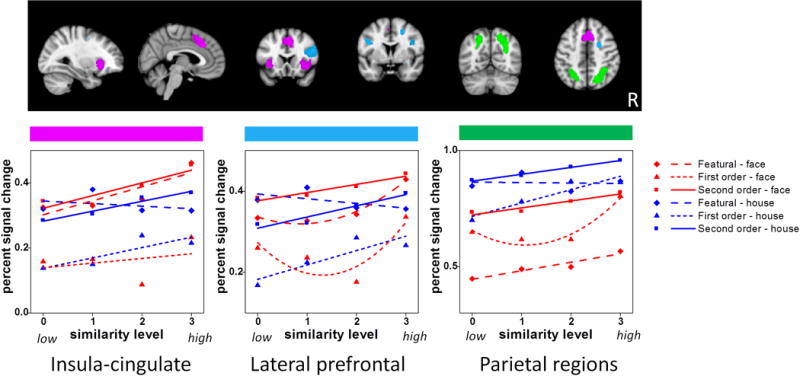

As shown in Figure 5, the amygdala showed a face preference but no effect of similarity or processing type and no interactions. Occipital cortex showed a significant Category × Similarity interaction, but sim effects were significant for both faces (p = .002) and houses (p = .044); the interaction was driven by slightly different shapes of the similarity function for each category. The occipito-temporal region showed a significant Category × Similarity interaction in which the sim effect was significant for faces (p = .006) but not for houses (p = .112), consistent with domain specificity. However, the significant Processing Type × Sim interaction, consistent with process specificity, indicated that the sim effect was significant only for 1st order (p = .001) but not for featural (p = .12) or 2nd order (p = .45) processing. The remaining three regions (insula-cingulate, lateral prefrontal and parietal cortex; Figure 6) showed evidence for generalized perceptual differentiation, given the significant Category × Similarity × Processing Type interactions. In these regions, simple effects of sim were significant for featural faces and 1st order houses. Lateral prefrontal and parietal cortex also showed a significant simple effect of sim for 1st order faces, whereas insula-cingulate cortex also showed a significant simple effect of sim for 2nd order faces.

Figure 5.

fMRI signal plotted as a function of similarity, category and processing type in three aggregate ROIs: (a) amygdala, (b) occipital region, and (c) occipito-temporal region.

A supplemental ANOVA explored hemispheric differences in the aggregate regions by adding a repeated factor of hemisphere for all regions except the occipito-temporal region, which comprised only right-hemisphere ROIs. The main effect of hemisphere was significant for the occipital (right > left, F(1, 48) = 69.5, p = .0001), insula-cingulate (right > left, F(1, 48) = 68.2, p = .0001) and lateral frontal (left > right, F(1, 48) = 10.8, p = .002) regions. In the lateral frontal region, a Hemisphere × Category × Processing Type interaction, F(2, 48) = 8.1, p = .001, indicated greater left-hemisphere activation for featural face and house processing but no hemisphere effect for the other conditions. Because hemisphere did not interact with similarity, this left-hemisphere preference does not appear to be related to perceptual differentiation.

Concerns About Task Difficulty

To address whether fMRI signal in the matching-task ROIs was driven by task difficulty apart from the similarity manipulation, we examined the association between percent signal change relative to baseline in the face conditions (averaged across sim levels) and behavioral performance (averaged across sim levels) in these regions (see Joseph & Gathers, 2003). We examined signal magnitude rather than cluster extent because cluster extent depends heavily on arbitrary thresholding. If fMRI signal is driven by task difficulty unrelated to similarity modulation, then participants who performed more poorly (as indexed by longer RTs and more errors) should also produce greater signal; that is, correlations between fMRI signal and these performance measures should be positive. Spearman correlations were conducted for each ROI in Table 2. For featural ROIs, correlations were computed using only the subjects in the featural condition, and likewise for the 1st and 2nd order ROIs. Only the right superior frontal gyrus showed a correlation, and it was negative (between face percent signal change and face logRT in the 2nd order condition): higher fMRI signal was associated with faster responding, which indicates that this region was associated with better performance, not with task difficulty. Therefore, greater fMRI signal in similarity-modulated regions reflects perceptual differentiation rather than greater effort or resources devoted to processing apart from the similarity manipulation.
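Spearman's rho, used in this check, is the Pearson correlation computed on ranks, with tied values given their average rank. The sketch below implements only the coefficient, not its significance test, and the per-subject values in the example are invented for illustration. Under the task-difficulty account, a positive rho between a region's face percent signal change and face logRT would be expected; a negative rho, as in the example, corresponds to higher signal with faster responses.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation (assumes neither input is constant)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def spearman_rho(x, y):
    """Spearman correlation = Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


psc = [0.2, 0.5, 0.3, 0.8, 0.6]     # hypothetical face percent signal change
log_rt = [6.9, 6.7, 6.8, 6.5, 6.6]  # hypothetical face logRT, same subjects
print(spearman_rho(psc, log_rt))    # -1.0
```

In practice a statistics library routine would also supply the p value needed to decide whether a correlation is significant.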

Discussion

The present study examined the degree to which face and object processing regions exhibit domain-specificity (i.e., perceptual differentiation of faces or houses but little sensitivity to processing type), process-specificity (i.e., perceptual differentiation for a particular processing type but little sensitivity to category), category-optimized processing (i.e., perceptual differentiation for configural face processing or featural house processing) or generalized perceptual differentiation (i.e., perceptual differentiation crossing category and processing type distinctions). Similarity was parametrically varied for three different processing types, directly manipulating the component process of perceptual differentiation of featural, 1st order or 2nd order information in faces and houses. Evidence for a strong domain-specific account of perceptual differentiation was minimal, whereas evidence for generalized perceptual differentiation was more abundant. Each account is discussed below.

Evidence for Domain-specificity

The right FFA, right IFG and aggregate occipito-temporal region showed evidence for perceptual differentiation of faces but not houses, consistent with domain-specificity. However, the right FFA and occipito-temporal region also showed 1st order perceptual differentiation that was not specific to faces, consistent with process-specificity. In addition, the right IFG from the localizer task showed perceptual differentiation for featural face processing, but this effect did not persist in the aggregate analysis. These findings suggest that the right FFA and occipito-temporal cortex are involved in both face and 1st order processing, and that the right IFG is involved in both featural and face processing. Maurer, Le Grand, and Mondloch (2002) suggested that the right FFA is sensitive to 1st order face information and may be involved in making the basic distinction between faces and objects. Following this, we suggest that the right FFA and surrounding occipito-temporal regions may process 1st order information in a stimulus en route to making this face/non-face determination. In other words, this aggregate region processes 1st order information in both faces and houses but also uses this 1st order information to accumulate evidence that a stimulus is a face, which leads to a bias toward processing faces over houses.

However, other findings suggest that the right FFA is involved in featural and 2nd order processing in addition to 1st order processing (Liu et al., 2010; Maurer et al., 2007; Yovel & Kanwisher, 2004). One possible reason that the present study did not find evidence for featural and 2nd order processing in the FFA is that the simultaneous matching task used here may have emphasized analytical processing of the elements of a face more than the sequential matching or 1-back tasks used in these other studies. With simultaneous matching, the discrepant features can be directly compared and perceptually analyzed within the same time interval. With sequential matching, the first stimulus must be briefly remembered in order to compare it with the second stimulus, which may engage holistic processing because the individual features may be easier to encode as an integrated percept. Consequently, with sequential matching, both the configural and featural conditions may have engaged holistic strategies, so the lack of a differential response in the right FFA in those studies may have been driven by holistic processing rather than by featural or 2nd order processing per se. The right FFA responds more strongly to holistic than to parts-based processing of faces (e.g., Axelrod & Yovel, 2010; Harris & Aguirre, 2010; Rossion et al., 2000; Schiltz & Rossion, 2006). Although the present tasks did not require holistic processing, the current findings are consistent with the idea that the right FFA responds only weakly to featural or analytical processing.

The right and left AMG showed a preference for faces over houses in the matching task but no sensitivity to processing type and no similarity modulation. Because the faces used in this study varied little in facial expression, the AMG activation was unlikely to be related to processing emotion or expression. The AMG has been described as a salience detector (Santos, Mier, Kirsh, & Meyer-Lindenberg, 2011) rather than a region that responds only to threatening stimuli. All faces, regardless of emotional content, are salient to humans and may be given attentional priority for processing (Palermo & Rhodes, 2007). We suggest that the AMG may be involved in detecting the presence of faces. Differential processing of featural and configural information did not emerge in the AMG, although Sato, Kochiyama, and Yoshikawa (2011) reported that the AMG showed a reduced response to inverted faces, thereby implicating it in configural processing. However, inversion is an indirect test of configural processing and may make a stimulus less face-like, and consequently less salient, which would itself reduce the amygdala response.

Evidence for Process-specificity

Consistent with process-specificity, some regions showed evidence for processing only 1st order information, regardless of category (right FFA, right oLOC and left tLOC). However, as discussed above, sensitivity that was exclusive to 1st order processing did not persist in the aggregate analysis, and the right FFA and occipito-temporal cortex also showed evidence for domain-specificity. Although the evidence for 1st order specificity was somewhat weak, we suggest that the general function of regions sensitive to 1st order processing is to initially determine whether a stimulus is a face or non-face. The present task did not require this determination, but modulation of fMRI signal by 1st order information suggests sensitivity to disruptions in 1st order processing, which implies that these regions normally process 1st order information. The left lateral frontal cortex showed a preference for featural processing, but this preference was not specific to faces. This appears to be the only region that showed evidence for process specificity in the hemisphere analysis, but the hemispheric effect did not interact with similarity. Nevertheless, the preference for featural processing in the left lateral frontal cortex is consistent with the findings of Maurer et al. (2007) for face stimuli, although the present study showed that this preference is not face-specific.

Evidence for Category-optimized Processing

Evidence for category-optimized processing was minimal: no region showed perceptual differentiation selectively for configural face or featural house information. This is surprising given the importance of 2nd order processing for faces (e.g., Diamond & Carey, 1986). The lack of evidence for category-optimized processing may have been due to the use of a perceptual matching task rather than face identification or emotion recognition tasks, which may preferentially emphasize different types of perceptual information in faces. Kadosh, Henson, Kadosh, Johnson, and Dick (2010) examined changes in identity, expression and gaze and found that fusiform and inferior occipital activation was highly overlapping for identity and expression processing. They suggested that this overlap was due to demands on both featural and configural processing. Similarly, psychophysical studies have shown that featural and configural information processing are not as separable as once thought (Sekuler et al., 2004). The present results likewise showed that featural and 2nd order face processing are not readily separable in terms of neural substrates.

Evidence for Generalized Perceptual Differentiation

Nearly all regions showed perceptual differentiation for more than one category and processing type, even though the voxel-wise analyses isolated regions that either preferred faces (in the localizer run) or showed perceptual differentiation of faces (in the task runs). Regions involved in differentiating faces almost always differentiated houses. This is not surprising given that perceptual differentiation is a component process of discriminating items within visually homogenous categories. Many of the regions typically attributed to face processing may instead reflect a process of making fine distinctions among stimuli that are highly similar in shape. Using house stimuli that were well matched to the face stimuli in the number of features and the spatial relations among those features revealed very few category differences. Instead, the degree of perceptual similarity was a stronger influence on performance and fMRI signal in most regions. In addition, the influence of perceptual similarity was not driven by task difficulty, because many regions that showed differentiation in the more difficult conditions (e.g., 2nd order or featural processing) also showed differentiation in the easier condition (1st order processing).

The right OFA showed generalized perceptual differentiation that was not qualified by higher order interactions. Others have suggested that the right OFA is as essential as the right FFA in face processing (Rossion et al., 2003), shows more face specialization than the right FFA in adults (Joseph et al., 2011), acts early in the face processing stream (Harris & Aguirre, 2008) by passing information along to the FFA (Fairhall & Ishai, 2007; Haxby, Hoffman, & Gobbini, 2000), and builds up a face representation hierarchically by analytically processing features (Pitcher, Walsh, Yovel, & Duchaine, 2007). The present study did not show that the right OFA was preferentially sensitive to featural face information, in contrast to Pitcher et al.’s study, in which rTMS disrupted 1-back matching of faces that differed in featural but not in 2nd order information. Pitcher et al. also showed that the disruption of featural processing occurred only in an early time window (60–110 ms after stimulus onset) and not in later windows. Because fMRI cannot resolve processing at the temporal resolution of double-pulse TMS, such an effect could not be detected in the present study. Nevertheless, any preference for featural face processing was not dominant enough to drive responses in the right OFA in the present study.

The present finding of generalized perceptual differentiation in the right OFA is consistent with another study (Haist et al., 2010) showing that the right OFA was involved in differentiating stimuli from visually homogenous categories (faces or watches). In that study, the right OFA was slightly more sensitive to perceptual differentiation than the right FFA. Based on that finding and the present results, we suggest that the right OFA is involved in perceptual differentiation of items within the same category, as opposed to making a face versus non-face distinction, which relies on 1st order information (as in the right FFA). Generalized perceptual differentiation in the service of making fine within-category distinctions is consistent with the idea that the right OFA acts early in processing (Fairhall & Ishai, 2007; Haxby et al., 2000; cf. Kadosh et al., 2010) and is as essential as the right FFA in face discrimination (Rossion et al., 2003).

Cortical Distribution of Information Processing

The matching task was associated with only minimal activation in occipito-temporal regions (except the right fusiform region from 1st order face matching), which may be surprising in light of many studies that have isolated functional regions like the FFA, OFA and LOC. However, face localizer tasks (which strongly implicate occipito-temporal regions) do not necessarily isolate processing that is relevant for higher-level face processing (Berman et al., 2010; Ng, Ciaramitaro, Anstis, Boynton, & Fine, 2006). In addition, others have noted that face processing relies on an extended network (Haxby et al., 2000), including frontal regions (Chan & Downing, 2011; Fairhall & Ishai, 2007; Maurer et al., 2007). Prior studies have also demonstrated that superior parietal cortex is involved in perceptual discrimination of non-face items that are highly similar in shape (e.g., Joseph & Gathers, 2003), consistent with the present findings.

The heavy involvement of frontal and parietal regions in perceptual differentiation of two visually homogenous categories, coupled with the finding that category and processing type effects were not purely additive in most brain regions, suggests that processing different kinds of perceptual information likely occurs in a distributed brain system. Interestingly, the aggregate analysis showed that information processing in the amygdala was described only by a main effect of category, whereas in the LOC / occipital cortex the category effect was further qualified by two 2-way interactions, and in the occipito-temporal region all three 2-way interactions were significant. In parietal and frontal regions (as well as insula-cingulate cortex), the higher order 3-way interaction was significant. This suggests that information processing in regions associated with “early” processing stages (amygdala, occipital or occipito-temporal cortex) is driven by category or processing type but not by the integration of that information, whereas regions associated with higher-order processing show more complex integration of information (as indexed by the 3-way interactions). A process of evidence accumulation may occur simultaneously in multiple brain regions during the matching task before a final perceptual decision is made, as described by Ploran et al. (2007). In other words, many regions are involved in perceptual differentiation, and these regions may interact such that perceptual differentiation in one region is further qualified by category or processing type in other regions. Face processing, then, may rely on some of the same cognitive operations (and neural substrates) that are engaged for object processing, but face processing may be distinguished from object processing by the interaction of multiple activated regions that accumulate perceptual evidence in favor of faces.
The distributed nature of this information processing may reflect the fact that perceptual differentiation is a component process of many higher-order face tasks, such as identification or emotion recognition. Had these other tasks been employed, there might have been greater evidence for domain-specific or category-optimized processing.

Figure 6.

fMRI signal plotted as a function of similarity, category and processing type in three aggregate ROIs: (a) parietal region, (b) insula-cingulate region, and (c) lateral prefrontal region.

Acknowledgments

This publication was supported by NIH grant R01 HD 052724-04 and by a pilot grant from Autism Speaks. The contents are solely the responsibility of the authors and do not necessarily represent the official views of the NIH or Autism Speaks.

References

- Axelrod V, Yovel G. External facial features modify the representation of internal facial features in the fusiform face area. NeuroImage. 2010;52:720–725. doi: 10.1016/j.neuroimage.2010.04.027. [DOI] [PubMed] [Google Scholar]

- Berman MG, Park J, Gonzalez R, Polk TA, Gehrke A, Knaffla S, Jonides J. Evaluating functional localizers: the case of the FFA. Neuroimage. 2010;50:56–71. doi: 10.1016/j.neuroimage.2009.12.024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chan AWY, Downing PE. Faces and eyes in human lateral prefrontal cortex. Frontiers in Human Neuroscience. 2011;5:51. doi: 10.3389/fnhum.2011.00051. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Diamond R, Carey S. Why faces are and are not special: An effect of expertise. Journal of Experimental Psychology: General. 1986;115:107–117. doi: 10.1037//0096-3445.115.2.107. [DOI] [PubMed] [Google Scholar]

- Drucker DM, Aguirre GK. Different spatial scales of shape similarity representation in lateral and ventral LOC. Cerebral Cortex. 2009;19:2269–2280. doi: 10.1093/cercor/bhn244. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fairhall SL, Ishai A. Effective connectivity within the distributed cortical network for face perception. Cerebral Cortex. 2007;17:2400–2406. doi: 10.1093/cercor/bhl148. [DOI] [PubMed] [Google Scholar]

- Gauthier I, Tarr MJ, Anderson AW, Skudlarski P, Gore JC. Activation of the middle fusiform ‘face area’ increases with expertise in recognizing novel objects. Nature Neuroscience. 1999;2:568–573. doi: 10.1038/9224. [DOI] [PubMed] [Google Scholar]

- Gauthier I, Tarr MJ, Moylan J, Skudlarski P, Gore JC, Anderson AW. The fusiform “face area” is part of a network that processes faces at the individual level. Journal of Cognitive Neuroscience. 2000;12:495–504. doi: 10.1162/089892900562165. [DOI] [PubMed] [Google Scholar]

- Haist F, Lee K, Stiles J. Individuating faces and common objects produces equal responses in putative face-processing areas in the ventral occipitotemporal cortex. Frontiers in Human Neurosciences. 2010;4:181. doi: 10.3389/fnhum.2010.00181. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Harris AM, Aguirre GK. The effects of parts, wholes, and familiarity on face-selective responses in MEG. Journal of Vision. 2008;8:1–12. doi: 10.1167/8.10.4.

- Harris AM, Aguirre GK. Neural tuning for face wholes and parts in human fusiform gyrus revealed by fMRI adaptation. Journal of Neurophysiology. 2010;104:336–345. doi: 10.1152/jn.00626.2009.

- Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends in Cognitive Sciences. 2000;4:223–233. doi: 10.1016/s1364-6613(00)01482-0.

- Hertzog C, Rovine M. Repeated measures analysis of variance in developmental research: Selected issues. Child Development. 1985;56:787–809.

- Joseph JE. Functional neuroimaging studies of category specificity in object recognition: A critical review and meta-analysis. Cognitive, Affective, & Behavioral Neuroscience. 2001;1:119–136. doi: 10.3758/cabn.1.2.119.

- Joseph JE, Gathers AD. Natural and manufactured objects activate the ‘fusiform face area’. NeuroReport. 2002;13:935–938. doi: 10.1097/00001756-200205240-00007.

- Joseph JE, Gathers AD. Effects of structural similarity on neural substrates for object recognition. Cognitive, Affective, & Behavioral Neuroscience. 2003;3:1–16. doi: 10.3758/cabn.3.1.1.

- Joseph JE, Gathers AD, Liu X, Corbly CR, Whitaker SK, Bhatt RS. Neural developmental changes in processing inverted faces. Cognitive, Affective, & Behavioral Neuroscience. 2006;6:223–235. doi: 10.3758/cabn.6.3.223.

- Joseph JE, Gathers AD, Bhatt R. Progressive and regressive developmental changes in neural substrates for face processing: Testing specific predictions of the Interactive Specialization account. Developmental Science. 2011;14:227–241. doi: 10.1111/j.1467-7687.2010.00963.x.

- Joseph JE, Partin DJ, Jones KM. Hypothesis testing for selective, differential, and conjoined brain activation. Journal of Neuroscience Methods. 2002;118:129–140. doi: 10.1016/s0165-0270(02)00122-x.

- Kadosh KC, Henson RN, Kadosh RC, Johnson MH, Dick F. Task-dependent activation of face-sensitive cortex: an fMRI adaptation study. Journal of Cognitive Neuroscience. 2010;22:903–917. doi: 10.1162/jocn.2009.21224.

- Kahn DA, Harris AM, Wolk DA, Aguirre GK. Temporally distinct neural tuning of perceptual similarity and prototype bias. Journal of Vision. 2010;10(10):1–12. doi: 10.1167/10.10.12.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: A module in human extrastriate cortex specialized for face perception. Journal of Neuroscience. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Keppel G, Zedeck S. Data analysis for research designs. New York: Freeman; 1989.

- Leube DT, Yoon HW, Rapp A, Erb M, Grodd W, Bartels M, Kircher TTJ. Brain regions sensitive to the face inversion effect: A functional magnetic resonance imaging study in humans. Neuroscience Letters. 2003;342:143–146. doi: 10.1016/s0304-3940(03)00232-5.

- Liu J, Harris A, Kanwisher N. Perception of face parts and face configurations: An fMRI study. Journal of Cognitive Neuroscience. 2010;22:203–211. doi: 10.1162/jocn.2009.21203.

- Liu X, Steinmetz NA, Farley AB, Smith CD, Joseph JE. Mid-fusiform activation during object discrimination reflects the process of differentiating structural descriptions. Journal of Cognitive Neuroscience. 2008;20:1711–1726. doi: 10.1162/jocn.2008.20116.

- Lobmaier JS, Klaver P, Loenneker T, Martin E, Mast FW. Featural and configural face processing strategies: evidence from a functional magnetic resonance imaging study. NeuroReport. 2008;19:287–291. doi: 10.1097/WNR.0b013e3282f556fe.

- Malach R, Reppas J, Benson R, Kwong K, Jiang H, Kennedy W, Tootell R. Object-related activity revealed by functional magnetic resonance imaging in human occipital cortex. Proceedings of the National Academy of Sciences. 1995;92:8135–8139. doi: 10.1073/pnas.92.18.8135.

- Maurer D, Craven KM, Le Grand R, Mondloch CJ, Springer MV, Lewis CL, Grady CL. Neural correlates of processing facial identity based on features versus their spacing. Neuropsychologia. 2007;45:1438–1451. doi: 10.1016/j.neuropsychologia.2006.11.016.

- Maurer D, Le Grand R, Mondloch CJ. The many faces of configural processing. Trends in Cognitive Sciences. 2002;6:255–260. doi: 10.1016/s1364-6613(02)01903-4.

- Ng M, Ciaramitaro VM, Anstis S, Boynton GM, Fine I. Selectivity for the configural cues that identify the gender, ethnicity, and identity of faces in human cortex. Proceedings of the National Academy of Sciences. 2006;103:19552–19557. doi: 10.1073/pnas.0605358104.

- Palermo R, Rhodes G. Are you always on my mind? A review of how face perception and attention interact. Neuropsychologia. 2007;45:75–92. doi: 10.1016/j.neuropsychologia.2006.04.025.

- Pitcher D, Walsh V, Yovel G, Duchaine B. TMS evidence for the involvement of the right occipital face area in early face processing. Current Biology. 2007;17:1568–1573. doi: 10.1016/j.cub.2007.07.063.

- Ploran EJ, Nelson SM, Velanova K, Donaldson DI, Petersen SE, Wheeler ME. Evidence accumulation and the moment of recognition: Dissociating perceptual decision processes using fMRI. Journal of Neuroscience. 2007;27:11912–11924. doi: 10.1523/JNEUROSCI.3522-07.2007.

- Rhodes G, Byatt G, Michie PT, Puce A. Is the fusiform face area specialized for faces, individuation, or expert individuation? Journal of Cognitive Neuroscience. 2004;16:189–203. doi: 10.1162/089892904322984508.

- Rossion B, Caldara R, Seghier M, Schuller A, Lazeyras F, Mayer E. A network of occipito-temporal face sensitive areas besides the right middle fusiform gyrus is necessary for normal face processing. Brain. 2003;126:2381–2395. doi: 10.1093/brain/awg241.

- Rossion B, Dricot L, Devolder A, Bodart JM, Crommelinck M, De Gelder B, Zoontjes R. Hemispheric asymmetries for whole-based and part-based face processing in the human fusiform gyrus. Journal of Cognitive Neuroscience. 2000;12:793–802. doi: 10.1162/089892900562606.

- Santos A, Mier D, Kirsh A, Meyer-Lindenberg A. Evidence for a general face salience signal in human amygdala. NeuroImage. 2011;54:3111–3116. doi: 10.1016/j.neuroimage.2010.11.024.

- Sato W, Kochiyama T, Yoshikawa S. The inversion effect for neutral and emotional facial expressions on amygdala activity. Brain Research. 2011;1378:84–90. doi: 10.1016/j.brainres.2010.12.082.

- Schiltz C, Rossion B. Faces are represented holistically in the human occipito-temporal cortex. NeuroImage. 2006;32:1385–1394. doi: 10.1016/j.neuroimage.2006.05.037.

- Sekuler AB, Gaspar CM, Gold JM, Bennett PJ. Inversion leads to quantitative, not qualitative, changes in face processing. Current Biology. 2004;14:391–396. doi: 10.1016/j.cub.2004.02.028.

- Worsley KJ. Statistical analysis of activation images. In: Jezzard P, Matthews PM, Smith SM, editors. Functional MRI: An Introduction to Methods. Oxford University Press; 2001.

- Xu Y. Revisiting the role of the fusiform face area in visual expertise. Cerebral Cortex. 2005;15:1234–1242. doi: 10.1093/cercor/bhi006.

- Yovel G, Kanwisher N. Face perception: domain specific, not process specific. Neuron. 2004;44:889–898. doi: 10.1016/j.neuron.2004.11.018.