Abstract

Objective

This paper describes the University of Michigan's nine-year experience in developing and using a full-text search engine designed to facilitate information retrieval (IR) from narrative documents stored in electronic health records (EHRs). The system, called the Electronic Medical Record Search Engine (EMERSE), functions similarly to Google but is equipped with special functions for handling challenges unique to retrieving information from medical text.

Materials and Methods

Key features that distinguish EMERSE from general-purpose search engines are discussed, with an emphasis on functions crucial to (1) improving medical IR performance and (2) assuring search quality and consistency of results regardless of users' medical background, stage of training, or level of technical expertise.

Results

Since its initial deployment, EMERSE has been enthusiastically embraced by clinicians, administrators, and clinical and translational researchers. To date, the system has been used to support more than 750 research projects yielding 80 peer-reviewed publications. In several evaluation studies, EMERSE demonstrated very high sensitivity and specificity as well as greatly improved chart review efficiency.

Discussion

Increased availability of electronic data in healthcare does not automatically guarantee increased availability of information. The success of EMERSE at our institution illustrates that free-text EHR search engines can be a valuable tool to help practitioners and researchers retrieve information from EHRs more effectively and efficiently, enabling critical tasks such as patient case synthesis and research data abstraction.

Conclusion

EMERSE, available free of charge for academic use, represents a state-of-the-art medical IR tool with proven effectiveness and user acceptance.

Keywords: Electronic Health Records (E05.318.308.940.968.625.500), Search Engine (L01.470.875), Information Storage and Retrieval (L01.470)


I. Background and Significance

In addition to improving patient care delivery, the widespread adoption of electronic health records (EHRs) in the U.S. has created unprecedented opportunities for increased access to clinical data, enabling multiple secondary use purposes such as quality assurance, population health management, and clinical and translational research. The broader use of clinical data for discovery, surveillance, and improving care provides great potential to transform the U.S. healthcare system into a self-learning vehicle—or a “Learning Health System”—to advance our knowledge in a wide range of clinical and policy domains.1,2

However, the benefits of electronically captured clinical data have yet to be fully realized for a number of reasons. Foremost is the continued popularity of free-text documentation in EHRs. While structured data entry is desirable, unstructured clinical documentation is likely to persist because clinicians need to express their thoughts flexibly and to preserve the complexity and nuances of each patient.3,4 Recent studies have shown that clinicians often revert to free-text entry even when coding options are provided,3,5-7 and that free text is still needed for complex tasks such as clinical trial recruitment.8

The challenges to extracting information locked in medical text should not be underestimated.9 Factors contributing to this complexity include clinicians' frequent use of interchangeable terms, acronyms and abbreviations,10 as well as negation and hedge phrases.11-13 Ambiguities may also arise due to a lack of standard grammar and punctuation usage10 and the inherent difficulties for computer systems to process context-sensitive meanings,14 anaphora and coreferences,15 and temporal relationships.16 Even the way in which clinical notes are created (e.g., via dictation/transcription vs. typing) can result in distinct linguistic properties that pose postprocessing challenges.17 Indeed, a paradox has been noted in the biomedical informatics literature that increased availability of electronic patient notes does not always lead to increased availability of information.18

Automated data extraction methods, including natural language processing, hold great promise for transforming unstructured clinical notes into a structured, codified, and thus computable format.19 However, the use of such tools is often associated with considerable upfront costs in software setup and in training the algorithms for optimal performance. Further, despite significant research advancements, the precision and recall of such tools are not yet up to par for meeting the requirements of many sophisticated chart abstraction tasks, and existing tools' lack of generalizability often necessitates building customized solutions for the specific needs and data characteristics of each problem.20-23

As such, search engines, or information retrieval (IR) systems more generally, offer an effective, versatile, and scalable solution that can augment the value of unstructured clinical data.24-29 Search engines help human reviewers quickly pinpoint where information of interest is located, while leaving to human judgment the difficult problems that computers cannot yet solve. The need for end user training is also minimized, as healthcare practitioners and researchers are already familiar with how search engines work through their day-to-day interactions with general-purpose web search engines such as Google and literature search tools such as PubMed. This antecedent familiarity is important because in healthcare, clinicians, administrators, and researchers often lack time to attain mastery of informatics tools.

Surprisingly, despite a growing need for IR tools in healthcare settings for both operational and research purposes, very few successful implementations have been reported in the literature.26,27,30 In this paper, we describe the University of Michigan's (UM) nine-year experience in developing and using a web-based full-text medical search engine designed to facilitate information retrieval from narrative EHR documents. The system, called the Electronic Medical Record Search Engine (EMERSE), has been used by numerous research groups in over 750 clinical and translational studies yielding 80 peer-reviewed publications to date (e.g., 31-35). As part of the results validation process, several studies explicitly examined the efficacy of EMERSE and concluded that the system was instrumental in ensuring the quality of chart review while significantly reducing manual effort.33,36,37 To the best of our knowledge, this is the first comprehensive description of an EHR search tool that has been used in a production setting by many real end users for multiple years and for a wide variety of clinical, operational, and research tasks. We believe our experience in designing, building, maintaining, and disseminating EMERSE will provide useful insights to researchers who have a similar need for an EHR search engine and will help potential users of such a tool understand what types of problems it can help solve.

In the following sections, we first describe the background and architecture of EMERSE, followed by a presentation of various usage metrics and the results of user adoption and effectiveness evaluations. Note that the primary purpose of this paper is to provide a comprehensive description of the design and features of EMERSE, especially those that deviate significantly from traditional IR systems and those that are well received among end users of EMERSE, most of whom are clinicians, healthcare administrators, and clinical researchers. It should also be noted that there is no universally accepted benchmark, or ‘gold standard,’ with which to measure the performance of a system such as EMERSE that is designed to serve a wide range of purposes in a wide variety of clinical contexts. For example, when trying to identify a subset of potential study subjects from a large pool of candidates, precision may be most important. By contrast, when trying to identify all patients affected by a faulty pacemaker, recall may be most important. Therefore, our goal is not to evaluate the IR performance of EMERSE and compare it to that of other search engines (e.g., PubMed) or algorithms developed for IR competitions (e.g., TREC), but rather to describe EMERSE in the context of the mostly empty landscape of EHR-specific IR tools. Such information is important for understanding how to achieve better penetration of IR systems such as EMERSE in everyday healthcare settings.

II. Methods and Materials

A. History and Current Status

EMERSE has been operational since 2005 and has undergone multiple rounds of interface and architectural revisions based on end user feedback, usability testing, and the changing technology environment. The National Center for Advancing Translational Sciences, the National Library of Medicine (NLM), the UM Comprehensive Cancer Center, the Michigan Institute for Clinical and Health Research, and the UM Medical Center Information Technology department provided funding support for the software's continued development and evaluation.

EMERSE was originally designed to work with the University of Michigan Health System's (UMHS) legacy homegrown EHR system, CareWeb, deployed in 1998. In 2012, EMERSE was overhauled so it could integrate data from our newly implemented commercial EHR system, Epic (Epic Systems Corporation, Verona, WI), locally renamed MiChart. During the overhaul, significant efforts were made to make EMERSE as platform-independent and vendor-neutral as possible. At present, users at UMHS can use EMERSE to search through 81.7 million clinical documents: 36.4 million from CareWeb, 10.6 million from Epic (MiChart), 10.4 million radiology reports, 23.2 million narrative pathology reports, and 1.2 million other genres of documents such as electroencephalography and pulmonary function studies.

EMERSE has also been adapted to work with VistA, the health IT architecture of the Department of Veterans Affairs (VA), and has been used at the VA Hospital in Ann Arbor, Michigan since 2006 to support various VA research initiatives.38-40 This adapted version can be readily adopted by other VA facilities nationwide because they all share the same underlying IT infrastructure. EMERSE is available free of charge for academic use. Note that due to reasons such as local customization, not all features described in this paper are present in all versions of the software. Additional details, as well as a demonstration version of EMERSE, are available at http://project-emerse.org.

B. User Interface Design and Software Architecture

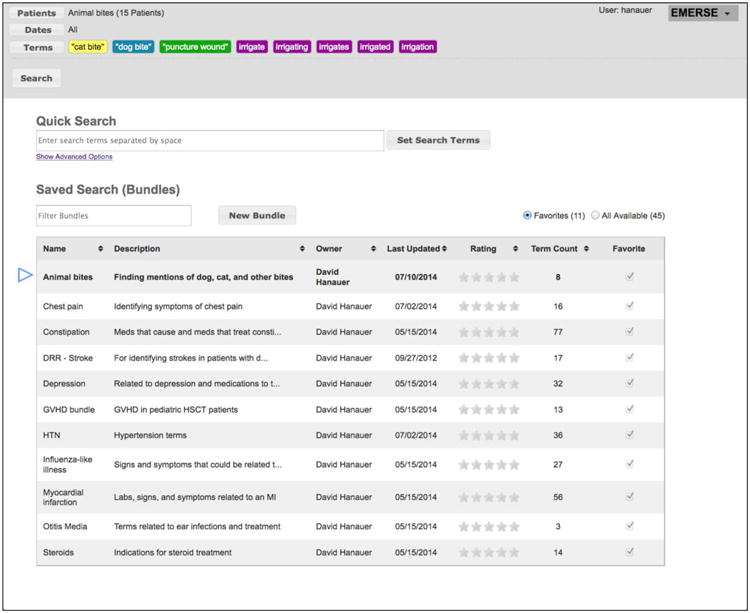

Figure 1 exhibits the main workspace of EMERSE where users construct search queries and subsequently submit the queries to the backend IR engine for processing. Search terms can be entered rapidly as a Quick Search or they can be activated from Search Term Bundles pre-stored in the system.

Figure 1.

EMERSE main workspace. Screen capture of EMERSE showing where search terms can be entered. Search keywords can be quickly typed into the Quick Search text box. Available pre-saved collections of search terms (i.e., search term bundles) are listed further down on the same page. In this example, a search term bundle named “Animal bites” has been selected and the terms belonging to the bundle are shown at the top.

Quick Search

As with Google, the most common way of using EMERSE is to type keywords into a simple text entry box. Search terms may contain single words or multi-word phrases (e.g., “sick sinus syndrome”), wild cards (e.g., “hyperten*”), and other operators (e.g., ^ for case sensitivity). In earlier versions of EMERSE, advanced users could also write sophisticated search queries using regular expressions. This function was dropped during the 2012 overhaul due to lack of use. Searches in EMERSE are case insensitive by default, but an option allows users to enforce case sensitivity, for example to distinguish “FROM” (full range of motion) from the common word “from.” Likewise, stop words are retained in the document indices because many are legitimate acronyms of medical concepts, e.g., OR: operating room; IS: incentive spirometry; IT: intrathecal.
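To make these Quick Search conventions concrete, the following Python sketch translates one search term into a regular expression. It is not EMERSE's query parser: the leading `^` as the case-sensitivity marker, the expansion of `*` to any run of word characters, and the helper name `compile_term` are assumptions for illustration. Stop words are deliberately not removed, so acronyms such as OR stay searchable.

```python
import re


def compile_term(term: str) -> re.Pattern:
    """Translate a Quick Search-style term into a compiled regular expression.

    Assumed (hypothetical) syntax:
      - a leading '^' makes the term case-sensitive; the default is case-insensitive
      - '*' matches any run of word characters (e.g. 'hyperten*')
      - words in a multi-word phrase may be separated by any whitespace
    """
    case_sensitive = term.startswith("^")
    if case_sensitive:
        term = term[1:]
    parts = []
    for word in term.split():
        # Escape regex metacharacters, then turn the escaped '*' back into a wildcard.
        parts.append(re.escape(word).replace(r"\*", r"\w*"))
    pattern = r"\b" + r"\s+".join(parts) + r"\b"
    return re.compile(pattern, 0 if case_sensitive else re.IGNORECASE)


note = "Pt taken to the OR. Hx of hypertensive disease; FROM in all joints, went home from clinic."
print(compile_term("hyperten*").findall(note))  # ['hypertensive']
print(compile_term("^FROM").findall(note))      # ['FROM']
print(compile_term("from").findall(note))       # ['FROM', 'from']
```

Matching on word boundaries keeps "from" from firing inside longer words, while the case-sensitive form isolates the abbreviation.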

Exclusion criteria can be entered to instruct the system not to include certain words and phrases in the search. This feature has been utilized particularly in handling negations. For example, the UMHS Department of Ophthalmology developed a “search term bundle” (see below) to look in surgeon notes for perioperative complications (Appendix A.1). The query contains only one search term, “complications,” while excluding 51 phrases that unambiguously rule out the possibility of perioperative complications (e.g., “without any complications”) or that mention complications in an irrelevant context (e.g., “diabetes with neurologic complications”).
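One plausible way to implement this kind of exclusion is to mask excluded phrases before counting hits, so that an inclusion term only counts when it falls outside every excluded phrase. The sketch below assumes that semantics; the function name `count_included_hits` is hypothetical, and only two of the 51 exclusions are shown.

```python
import re


def count_included_hits(text, include_terms, exclude_phrases):
    """Count inclusion-term hits that do not fall inside an excluded phrase.

    Excluded phrases are blanked out first (replaced by spaces of equal length,
    so character offsets are preserved), then inclusion terms are counted.
    """
    masked = text
    for phrase in exclude_phrases:
        masked = re.sub(re.escape(phrase), lambda m: " " * len(m.group()),
                        masked, flags=re.IGNORECASE)
    hits = 0
    for term in include_terms:
        hits += len(re.findall(r"\b" + re.escape(term) + r"\b", masked, flags=re.IGNORECASE))
    return hits


exclusions = ["without any complications", "diabetes with neurologic complications"]
note_a = "Procedure completed without any complications. Hx of diabetes with neurologic complications."
note_b = "Postoperative course notable for complications including hyphema."
print(count_included_hits(note_a, ["complications"], exclusions))  # 0
print(count_included_hits(note_b, ["complications"], exclusions))  # 1
```

Masking instead of deleting keeps positions stable, which matters if hits are later highlighted in the original text.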

Search Term Bundles and Collaborative Search

EMERSE provides a special “collaborative search” mechanism that allows users to save their search queries as “search term bundles” which can be reused and shared with others. Examples include a bundle that contains 28 search terms enumerating common ways in which apathy or indifference may be described in clinician notes (Appendix A.2), and another that lists 70 concepts for identifying infections in hematopoietic stem cell transplant patients (Appendix A.3). This collaborative search feature was inspired by social information foraging and crowdsourcing techniques found on the Web that leverage users' collective wisdom to perform collaborative tasks such as IR. The resulting search term bundles not only provide a means for end users to preserve and collectively refine search knowledge, but also ensure that users apply standardized sets of search terms consistently. In prior work assessing adoption of this feature, we found that about half of the searches performed in EMERSE used pre-stored search term bundles, one-third of which drew on search knowledge shared by other users.41
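A search term bundle can be thought of as a small record holding an owner, a list of terms, optional exclusions, and a sharing scope. The Python sketch below is a hypothetical data model, not EMERSE's schema; the class and field names, and the use of `"*"` to mean "shared with everyone", are assumptions, and the example terms are illustrative rather than the Appendix A.2 bundle.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SearchTermBundle:
    """Hypothetical model of a saved, optionally shared set of search terms."""
    name: str
    owner: str
    terms: list[str]
    exclusions: list[str] = field(default_factory=list)
    # User IDs the bundle is shared with; "*" means every user (an assumption).
    shared_with: set[str] = field(default_factory=set)

    def visible_to(self, user: str) -> bool:
        return user == self.owner or user in self.shared_with or "*" in self.shared_with


def bundles_for(user, bundles):
    """Bundles a user may reuse: their own plus those others have shared."""
    return [b for b in bundles if b.visible_to(user)]


apathy = SearchTermBundle("Apathy", "alice", ["apathy", "apathetic", "indifferent"], shared_with={"*"})
draft = SearchTermBundle("Draft", "bob", ["work in progress"])
print([b.name for b in bundles_for("carol", [apathy, draft])])  # ['Apathy']
```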

Handling of Spelling Errors and Logic Validation

Medical terminology contains many difficult-to-spell words (e.g., “ophthalmology”) which can be challenging even to seasoned clinicians. It is also not uncommon for misspellings to make their way into official patient records. These can be words incorrectly spelled such as “infectoin” instead of “infection,” or words that were spelled correctly (thus eluding detection by a spell checker) but were nevertheless incorrect, such as “prostrate” instead of “prostate.”

To address these issues, EMERSE incorporates a medical spelling checker to alert users about potentially misspelled words in their search queries. In addition, EMERSE offers an option for users to include potentially misspelled forms of the search terms in the search. The implementation of these features was based on phonetic matching and sequence comparison algorithms provided in open-source spell check APIs (Jazzy in earlier versions of EMERSE and Apache Lucene in the overhauled version),42,43 and a customized dictionary containing about 6,200 common spelling alternatives that we manually curated from the search logs of EMERSE over the years. The dictionary includes, for example, 24 misspelled forms of the word “diarrhea,” e.g., “diarrheae” and “diarheea.” Besides spelling mistakes, EMERSE also checks user-submitted search queries for common logic errors, e.g., the same keywords appearing on both the inclusion and exclusion lists.
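The combination described above (a phonetic key, a sequence-comparison distance, and a curated misspelling dictionary) can be sketched in Python as follows. This is not the Jazzy or Lucene implementation: American Soundex and Levenshtein distance are swapped in as familiar stand-ins, the two-edit threshold is an assumption, and `CUSTOM_MISSPELLINGS` is a tiny illustrative excerpt. The last function shows the inclusion/exclusion overlap check.

```python
def soundex(word: str) -> str:
    """American Soundex code (letter + 3 digits), used here as the phonetic key."""
    groups = {"1": "bfpv", "2": "cgjkqsxz", "3": "dt", "4": "l", "5": "mn", "6": "r"}
    codes = {c: d for d, letters in groups.items() for c in letters}
    word = word.lower()
    if not word:
        return ""
    result, prev = [], codes.get(word[0], "")
    for ch in word[1:]:
        code = codes.get(ch, "")
        if code and code != prev:
            result.append(code)
        if ch not in "hw":  # h and w do not separate letters with the same code
            prev = code
    return (word[0].upper() + "".join(result) + "000")[:4]


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = cur
    return prev[-1]


# Tiny illustrative excerpt of a curated misspelling dictionary.
CUSTOM_MISSPELLINGS = {"diarrhea": ["diarrheae", "diarheea"]}


def misspelling_variants(term, vocabulary, max_distance=2):
    """Likely misspellings of `term`: same phonetic key and few edits, plus curated forms."""
    term = term.lower()
    key = soundex(term)
    found = {w for w in vocabulary
             if w != term and soundex(w) == key and edit_distance(w, term) <= max_distance}
    found.update(CUSTOM_MISSPELLINGS.get(term, []))
    return sorted(found)


def logic_errors(include, exclude):
    """Flag terms that appear on both the inclusion and exclusion lists."""
    overlap = {t.lower() for t in include} & {t.lower() for t in exclude}
    return [f"'{t}' appears on both the inclusion and exclusion lists" for t in sorted(overlap)]


vocab = ["infection", "infectoin", "infektion", "injection", "inflection"]
print(misspelling_variants("infection", vocab))  # ['infectoin', 'infektion']
```

Requiring both a phonetic match and a small edit distance filters out correctly spelled but different words such as "injection", which is one edit away yet sounds different.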

Query Recommendation

EMERSE users who are tasked with reviewing medical documents do not necessarily possess adequate clinical knowledge (e.g., they may be student research assistants). Through observations of users and analyses of search logs, we also found that even clinicians with extensive clinical experience may have difficulty creating the ‘minimally necessary’ set of search terms to ensure reasonably inclusive search results.44 For example, when looking for “myocardial infarction,” users often failed to include common synonyms such as “heart attack,” “cardiac arrest,” and its acronym “MI.”

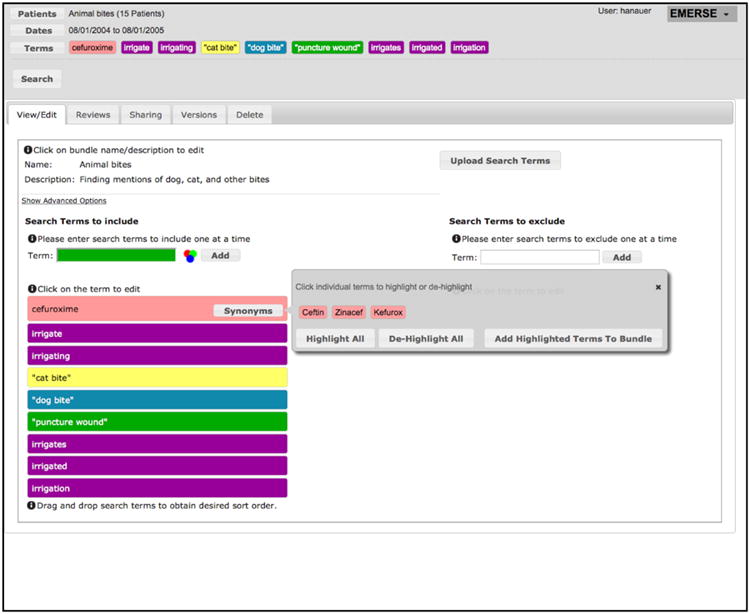

To improve search quality and reduce user variation, significant effort was made to build a query expansion function that recommends alternative terms users may consider adding to a query (Figure 2). These alternative terms can be acronyms and synonyms of the searched keywords, as well as generic names for brand-name drugs and vice versa. The knowledge base underlying this feature, currently consisting of about 78,000 terms representing approximately 16,000 concepts, was derived from multiple sources including medical dictionaries, drug lexicons, and an empirical synonym set manually curated from the search logs of EMERSE and from the pre-stored search term bundles. The query recommendation feature is available with the ‘Quick Search’ option as well as with the ‘Bundles’ option.

Figure 2.

Screen capture of EMERSE showing the editing of a search term bundle. In the example, the term “cefuroxime” was added by the user and the system provided additional suggestions. To aid in recognizing terms in the search results, the Bundles feature will highlight synonyms and related concepts using the same color, which can be overridden by users.
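The recommendation step can be pictured as a lookup from each entered term to the concept(s) it belongs to, followed by listing the other surface forms of those concepts. The Python sketch below is a toy stand-in for the roughly 78,000-term knowledge base; the class name and concept IDs (`C_MI`, `C_CEFUROXIME`) are hypothetical, and the entries are illustrative only.

```python
from collections import defaultdict


class SynonymKnowledgeBase:
    """Toy term-to-concept map used to suggest additional query terms."""

    def __init__(self, concepts):
        # concepts: concept ID -> surface terms (synonyms, acronyms, generic/brand names)
        self.concepts = concepts
        self.term_to_concepts = defaultdict(set)
        for cid, terms in concepts.items():
            for t in terms:
                self.term_to_concepts[t.lower()].add(cid)

    def suggest(self, query_terms):
        """Other terms for the same concepts, excluding anything already entered."""
        entered = {t.lower() for t in query_terms}
        suggestions = []
        for t in query_terms:
            for cid in sorted(self.term_to_concepts.get(t.lower(), ())):
                for alt in self.concepts[cid]:
                    if alt.lower() not in entered and alt not in suggestions:
                        suggestions.append(alt)
        return suggestions


kb = SynonymKnowledgeBase({
    "C_MI": ["myocardial infarction", "heart attack", "MI"],
    "C_CEFUROXIME": ["cefuroxime", "Zinacef", "Ceftin"],
})
print(kb.suggest(["myocardial infarction"]))  # ['heart attack', 'MI']
print(kb.suggest(["Ceftin"]))                 # ['cefuroxime', 'Zinacef']
```

Mapping terms to concepts rather than directly to each other lets a brand name pull in both the generic name and sibling brands.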

With funding from the NLM, we also developed an experimental extension to EMERSE that leverages the nomenclatures included in the Unified Medical Language System® (UMLS®) Metathesaurus for more comprehensive query expansion. This experimental extension also incorporates MetaMap, NLM's named entity recognition engine,45 to enable the use of additional text features such as term frequency, inverse document frequency, and document length penalization to improve the relevance ranking of the documents that EMERSE returns.
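Term frequency, inverse document frequency, and document length penalization are the three ingredients of the well-known Okapi BM25 scoring function. The Python sketch below uses BM25 as a stand-in to show how they combine; it is not the ranking used in the EMERSE extension, and the parameter defaults (`k1=1.2`, `b=0.75`) are conventional textbook values rather than values from this work.

```python
import math
from collections import Counter


def bm25_rank(query_terms, docs, k1=1.2, b=0.75):
    """Return document indices ordered by Okapi BM25 score (best first).

    docs: list of token lists. k1 dampens repeated terms (tf saturation);
    b controls how strongly long documents are penalized.
    """
    n = len(docs)
    avgdl = sum(len(d) for d in docs) / n
    df = Counter()
    for d in docs:
        df.update(set(d))  # document frequency: number of docs containing each term
    scores = []
    for idx, d in enumerate(docs):
        tf = Counter(d)
        score = 0.0
        for t in query_terms:
            if tf[t] == 0:
                continue
            idf = math.log(1 + (n - df[t] + 0.5) / (df[t] + 0.5))
            length_norm = tf[t] + k1 * (1 - b + b * len(d) / avgdl)
            score += idf * tf[t] * (k1 + 1) / length_norm
        scores.append((score, idx))
    return [idx for _, idx in sorted(scores, key=lambda s: -s[0])]


docs = [["fever"] + ["note"] * 9, ["fever", "note"], ["rash", "note"]]
print(bm25_rank(["fever"], docs))  # [1, 0, 2]
```

Both of the first two documents mention "fever" once, but the shorter one ranks higher because of the length penalty.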

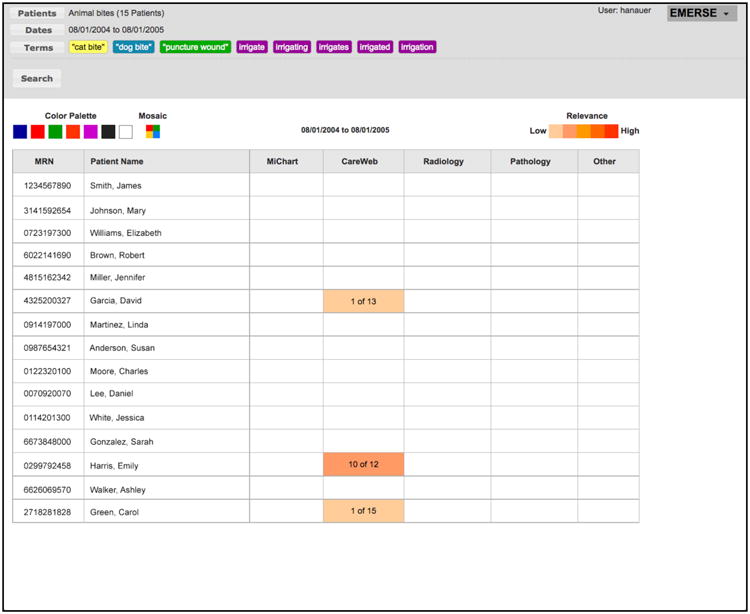

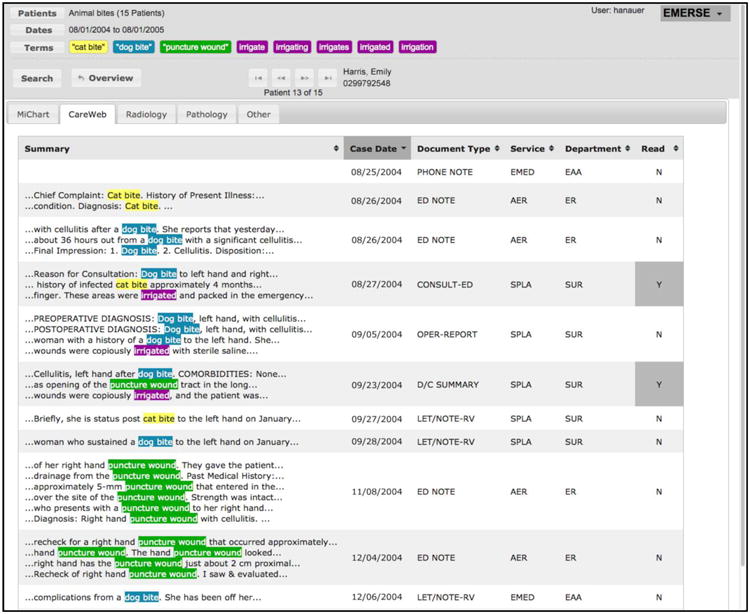

Multilevel Data Views with Visual Cues

EMERSE presents search results through multilevel data views and uses visual cues to help users quickly scan through returned documents. The data view at the highest level, the Overview, provides users a succinct summary of patients (rows) and document sources (columns) where the “hits” of a search are found (Figure 3). Each cell in the Overview shows the number of documents with hits and the total number of documents for that patient, with a color gradation indicating the ‘intensity’ of the hits. Clicking on a cell in the Overview takes users to the Summaries view, where documents are presented in reverse chronological order with text snippets revealing the context in which the search terms appear (Figure 4). Clicking on a snippet then reveals the full document in the Documents view.

Figure 3.

The Overview screen, presenting the search results for eight terms related to animal bites and wound cleaning. Each row on the Overview screen represents a patient and each column represents a document source. All patient identifiers have been replaced with realistic substitutes.

Figure 4.

The document Summaries view where the rows represent each of the documents belonging to a specific patient. Documents that do not contain any of the search terms are also included for viewing if needed.
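The Overview can be understood as a patient-by-source grid in which each cell records how many documents matched and how many exist. The Python sketch below builds that grid under assumed names (`Document`, `overview`, `intensity` are hypothetical), taking the matching rule as any text predicate; the shading ratio is one simple way to drive a color gradation, not necessarily the one EMERSE uses.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Document:
    patient_id: str
    source: str   # e.g. "Radiology", "Pathology"
    date: str     # ISO date string, e.g. "2014-03-01"
    text: str


def overview(documents, matches):
    """Map (patient, source) -> (documents with a hit, total documents).

    `matches` is any predicate text -> bool, e.g. built from compiled search terms.
    """
    cells = defaultdict(lambda: [0, 0])
    for doc in documents:
        cell = cells[(doc.patient_id, doc.source)]
        cell[1] += 1
        if matches(doc.text):
            cell[0] += 1
    return {key: tuple(v) for key, v in cells.items()}


def intensity(cell):
    """Fraction of a cell's documents that contain hits (0.0 to 1.0), for shading."""
    hits, total = cell
    return hits / total if total else 0.0


docs = [
    Document("p1", "Radiology", "2014-01-01", "dog bite to hand"),
    Document("p1", "Radiology", "2014-02-01", "normal chest"),
    Document("p1", "Pathology", "2014-01-05", "benign"),
    Document("p2", "Radiology", "2014-03-01", "cat bite"),
]
grid = overview(docs, lambda text: "bite" in text)
print(grid[("p1", "Radiology")], intensity(grid[("p1", "Radiology")]))  # (1, 2) 0.5
```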

There are two important design aspects of EMERSE that deviate from a general-purpose search engine with respect to these views. The first is that documents are grouped and displayed by patient and, within each patient, by source system (e.g., pathology, radiology). The second is that all documents for a patient are retrieved and made available to the user, including those without a hit for the query terms. This is visible in the top row of the results shown in Figure 4: no snippets are displayed on that row, indicating that the document does not contain any of the search terms used. Retaining such documents in the results is still important in many common scenarios of EHR search because, even though they do not contain the exact search terms, they may still be relevant to the user's task (e.g., patient screening).
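The Summaries behavior described above, listing every document for a patient newest first and attaching snippets only where hits occur, can be sketched in Python as follows. The tuple layout, the `width` of context around each hit, and the function name `summaries` are assumptions for illustration.

```python
import re


def summaries(documents, patient_id, pattern, source=None, width=30):
    """List all documents for one patient, newest first, each with its hit snippets.

    documents: iterable of (patient_id, source, iso_date, text) tuples (assumed layout).
    Documents without hits are kept, with an empty snippet list.
    """
    rows = []
    for pid, src, date, text in documents:
        if pid != patient_id or (source is not None and src != source):
            continue
        snippets = [text[max(0, m.start() - width): m.end() + width]
                    for m in pattern.finditer(text)]
        rows.append((date, src, snippets))
    rows.sort(key=lambda r: r[0], reverse=True)  # ISO dates sort chronologically as strings
    return rows


docs = [
    ("p1", "Radiology", "2014-01-01", "Dog bite to left hand."),
    ("p1", "Radiology", "2014-02-01", "Normal chest film."),
    ("p2", "Radiology", "2014-03-01", "Cat bite."),
]
for row in summaries(docs, "p1", re.compile(r"\bbite\b", re.IGNORECASE)):
    print(row)
```

The no-hit document still appears, first because it is newest, with an empty snippet list; a reviewer can open it even though it lacks the exact terms.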

Matched keywords in returned documents (or in text snippets displayed in the Summaries view) are automatically highlighted using a palette of 18 system-assigned colors so that the terms stand out from their surroundings (Figure 2). For search terms included in pre-stored bundles, users have additional control over the choice of colors; for example, they can override system-assigned colors to group similar medical concepts logically by color (e.g., all narcotic medications in green and all stool softeners in orange). This feature makes rapid visual scanning of search results easier, especially when many search terms are involved. The use of distinct colors for different concepts is not commonly supported by general-purpose search engines, but this feature has been highly appreciated by our users.
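A minimal sketch of the color assignment described above, assuming colors are handed out in order and wrap around after the eighteenth term, with user overrides taking precedence. The palette entries are placeholders (the actual EMERSE colors are not given), and `assign_colors` is a hypothetical name.

```python
from itertools import cycle

# 18 placeholder color names; the real palette is not specified in the text.
PALETTE = [f"color-{i:02d}" for i in range(1, 19)]


def assign_colors(terms, overrides=None):
    """Map each search term to a highlight color.

    System colors are assigned in order, wrapping after 18 terms. `overrides`
    maps a term to a user-chosen color so related concepts can share one color;
    an overridden term does not consume a system color.
    """
    overrides = overrides or {}
    system = cycle(PALETTE)
    return {term: overrides.get(term) or next(system) for term in terms}


colors = assign_colors(
    ["morphine", "oxycodone", "docusate", "fever"],
    {"morphine": "green", "oxycodone": "green", "docusate": "orange"},
)
print(colors)  # {'morphine': 'green', 'oxycodone': 'green', 'docusate': 'orange', 'fever': 'color-01'}
```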

Patient Lists and Date Range

EMERSE allows users to supply a predefined list of patients to limit the scope of a search, for example, a cohort of patients who have passed preliminary trial eligibility screening. Such lists may be prepared ad hoc (e.g., patients currently staying in an inpatient unit) or identified systematically through claims data, disease-specific patient registries, or research informatics tools such as the i2b2 Workbench.46 We have developed a plug-in for the i2b2 Workbench so that users can directly transfer patient cohorts identified in i2b2 to EMERSE as predefined patient lists ready for searching. Searches in EMERSE can also be bounded by a date range. This feature is used by the UMHS Infection Control and Epidemiology Department to identify cases of postoperative surgical site infections in one-month blocks.
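Restricting a search to a patient list and a date window amounts to filtering the candidate documents before matching. The Python sketch below shows this filter together with a helper that produces calendar-month windows like those used for monthly surveillance. The function names and the `(patient_id, date, text)` layout are assumptions; inclusive bounds are a design choice made here for illustration.

```python
import calendar
from datetime import date


def filter_scope(documents, patient_ids=None, start=None, end=None):
    """Keep documents whose patient is in `patient_ids` and whose date is in [start, end].

    documents: iterable of (patient_id, doc_date, text) tuples (assumed layout).
    A None argument means "no restriction" on that dimension.
    """
    allowed = set(patient_ids) if patient_ids is not None else None
    return [d for d in documents
            if (allowed is None or d[0] in allowed)
            and (start is None or d[1] >= start)
            and (end is None or d[1] <= end)]


def month_blocks(year):
    """(first day, last day) for each calendar month of `year`."""
    return [(date(year, m, 1), date(year, m, calendar.monthrange(year, m)[1]))
            for m in range(1, 13)]


docs = [
    ("p1", date(2015, 2, 10), "wound erythema"),
    ("p2", date(2015, 2, 11), "wound clean"),
    ("p1", date(2015, 3, 1), "follow-up"),
]
february = month_blocks(2015)[1]
print(february)                                 # (datetime.date(2015, 2, 1), datetime.date(2015, 2, 28))
print(len(filter_scope(docs, ["p1"], *february)))  # 1
```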

Security

EMERSE is hosted behind the UMHS firewall in a computing environment certified for storing protected health information. Access to EMERSE is limited to authorized personnel who have patient data access privileges or, among those using it for research, have provided evidence of training in responsible research practices and proof of valid institutional review board approvals, including demonstration of a need to review identifiable patient information. At each login, users must complete a brief attestation form to document their intended use. Audit trail logs are kept for each use session. Searching clinical documents for clinical or operational purposes generally does not require removal of identifiers. For research, de-identification would be desirable in certain settings but is not currently mandated by our health system. Nevertheless, we are exploring de-identification of the entire document corpus to reduce privacy concerns.

Technical Implementation

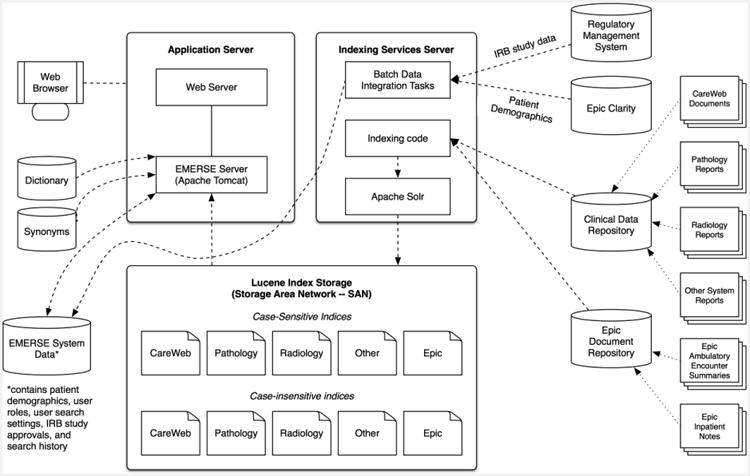

The technical architecture of the most recent version of EMERSE is illustrated in Figure 5. The system uses a variety of open-source components including Apache Lucene, Spring, and Hibernate, with a code base written in Java. Free-text documents are indexed nightly using Apache Solr without additional processing. Document repositories are recommended for indexing but are not needed at EMERSE runtime. Further, while our institution utilizes Epic as its EHR, EMERSE was built to be vendor-neutral and contains no dependencies on the EHR itself. Additional implementation details can be found in our online Technical Manual (http://project-emerse.org/software.html), including directions on how to configure EMERSE to work with local data sources.

Figure 5.

The Technical Architecture of EMERSE. Data are captured from the sources of EHR data (right side) mainly via HL7 interfaces and added to locally-maintained document repositories separate from the EHR. The Indexing services server routinely updates the Lucene-based indices used by the main search application from these databases. Note that while having all documents indexed in both a case-sensitive and case-insensitive index increases storage requirements, this approach allows for faster response time for most search scenarios.
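The trade-off in the caption, storing each document in both a case-sensitive and a case-insensitive index, can be illustrated with a toy in-memory inverted index. This Python sketch is not Lucene or Solr; the class name, the simple `\w+` tokenizer, and the `^` prefix as the case-sensitivity marker are assumptions. One plausible reason for the speed benefit is that a case-sensitive query becomes a direct lookup rather than a case-insensitive lookup followed by re-checking each hit.

```python
import re
from collections import defaultdict

TOKEN = re.compile(r"\w+")


class DualCaseIndex:
    """Two inverted indexes over the same documents: case-folded and case-preserving."""

    def __init__(self):
        self.folded = defaultdict(set)  # lower-cased token -> doc IDs
        self.exact = defaultdict(set)   # original-case token -> doc IDs

    def add(self, doc_id, text):
        # No stop-word removal: tokens such as 'OR', 'IS', and 'IT' stay indexed.
        for tok in TOKEN.findall(text):
            self.folded[tok.lower()].add(doc_id)
            self.exact[tok].add(doc_id)

    def lookup(self, term):
        """Single-token lookup; a leading '^' (assumed marker) selects the exact-case index."""
        if term.startswith("^"):
            return set(self.exact.get(term[1:], ()))
        return set(self.folded.get(term.lower(), ()))


idx = DualCaseIndex()
idx.add(1, "Patient taken to the OR.")
idx.add(2, "Fever or chills.")
print(idx.lookup("or"), idx.lookup("^OR"))  # {1, 2} {1}
```

Each token is stored twice, which is the storage cost noted in the caption.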

III. Results

The most revealing result in evaluating a practical informatics tool such as EMERSE is perhaps whether people use the system in their everyday work. In this section, we present usage statistics of EMERSE, typical use scenarios, and results of several evaluation studies that assessed the IR performance and end user satisfaction of the system.

A. Usage Statistics and Typical Use Scenarios

As of March 2015, EMERSE has had 1,137 registered users who represent nearly all clinical and operational departments at UMHS, covering a majority of medical specialties and professions. These users have collectively logged in more than 138,000 times. EMERSE currently stores 961 search term bundles created by users, each containing on average 32 search terms (median 18; range 1-774).

Several UMHS operational departments have incorporated EMERSE into their routine data synthesis and reporting work. For example, the cancer registrars at our cancer center use EMERSE to abstract genetic and biomarker testing results for submission to our tumor registry. Coding teams in the Department of Health Information Management use the system as a computer-assisted coding tool to improve efficiency in evaluating and managing inpatient billing code assignments, as well as for regulatory compliance reviews. EMERSE has also helped the coding team improve hospital reimbursements by aiding in the identification of supporting information (e.g., proof of pneumonia on admission) for claims that had previously been rejected by insurers and for which the team lacked the manpower to perform manual chart reviews. Professionals in the Department of Infection Control and Epidemiology use EMERSE to monitor the incidence of surgical site infections, a process that previously required labor-intensive manual chart auditing.47

In the clinical setting, EMERSE has enabled providers to answer questions such as “for this patient in clinic today, what medication worked well for her severe migraine headache several years ago,” without having to read every note. EMERSE has also often been used to obtain ‘prior authorization’ clearance for medications initially denied by insurance companies. With the system, clinic staff can rapidly identify supporting evidence as to when and why cheaper, more ‘preferable’ medications have failed for a patient.

Researchers at UMHS use EMERSE to perform a variety of chart abstraction tasks ranging from cohort identification and eligibility determination to augmenting phenotypic data for laboratory-based translational research linking biomarkers to patient outcomes.35,48-50 The research studies that EMERSE has supported span many topic areas in health sciences.35,51,52 Several papers explicitly acknowledged the enabling role EMERSE played, noting that it allowed the investigators to “systematically identify data within the electronic medical record”33 and helped to accomplish “a standardized, accurate, and reproducible electronic medical record review.”36,37 Table 1 lists 14 peer-reviewed publications from 2014 alone that were supported by EMERSE. A full list of the publications supported by EMERSE to date, of which we are aware, is provided in Appendix B.

Table 1. Peer-reviewed publications supported by EMERSE, 2014 only.

| Publication Title | How EMERSE was used | Journal | PubMed Identifier |
|---|---|---|---|
| Anticoagulant Complications in Facial Plastic and Reconstructive Surgery | Data abstraction from operative and other notes for wound healing, infection, bleeding, return to the operating room, and other factors. | JAMA Facial Plast Surg | 25541679 |
| Proton pump inhibitors and histamine 2 blockers are associated with improved overall survival in patients with head and neck squamous carcinoma. | To identify mentions of medications taken by the patients in the study. | Cancer Prev Res | 25468899 |
| Transcutaneous biopsy of adrenocortical carcinoma is rarely helpful in diagnosis, potentially harmful, but does not affect patient outcome | To obtain data about staging, reason for biopsy, number of biopsies, institution performing the biopsy, complications, and other factors. | Eur J Endocrinol | 24836548 |
| Engraftment syndrome after allogeneic hematopoietic cell transplantation predicts poor outcomes | To identify patients with engraftment syndrome using a set of 82 search terms. | Biol Blood Marrow Transplant | 24892262 |
| Osteochondromas after radiation for pediatric malignancies: a role for expanded counseling for skeletal side effects | To identify patients who developed osteochondromas after hematopoietic stem cell transplant and total body irradiation. | J Pediatr Orthop | 23965908 |
| Unbiased identification of patients with disorders of sex development | Chart reviews to locate features described in the notes that helped identify patients with disorders of sex development. | PLoS One | 25268640 |
| Changes in characteristics of hepatitis C patients seen in a liver centre in the United States during the last decade | Medical records review, including treatment status and clinical status at the time of presentation. | J Viral Hepat | 25311830 |
| Symptomatic subcapsular and perinephric hematoma following ureteroscopic lithotripsy for renal calculi | Chart review of clinical notes to identify patients whose surgery was complicated by a symptomatic hematoma. | J Endourol | 25025758 |
| Comparison of Second-Echelon Treatments for Ménière's Disease | Data abstraction for and identification of patients with Ménière's Disease. | JAMA Otolaryngol Head Neck Surg | 25057891 |
| A Combined Paging Alert and Web-Based Instrument Alters Clinician Behavior and Shortens Hospital Length of Stay in Acute Pancreatitis | Finding data of interest for chart reviews. | Am J Gastroenterol | 24594946 |
| Adjuvant therapies, patient and tumor characteristics associated with survival of adult patients with adrenocortical carcinoma | Chart reviews for a variety of clinical parameters including stage, pathology data, evaluation of hormone secretion, surgical approach, and treatment modalities. | J Clin Endocrinol Metab | 24302750 |
| Population-based incidence and prevalence of systemic lupus erythematosus: The Michigan Lupus Epidemiology & Surveillance (MILES) Program | Data abstraction for a variety of clinical characteristics for patients with systemic lupus erythematosus. | Arthritis Rheum | 24504809 |
| The association between race and gender, treatment attitudes, and antidepressant treatment adherence | Eligibility determination based on chart review of factors such as clinically significant depression and a new recommendation for antidepressant treatment. | Int J Geriatr Psychiatry | 23801324 |
| Aneurysms in abdominal organ transplant recipients | To identify patients with mention of an arterial aneurysm in the clinical documentation among patients undergoing liver or kidney transplants over an 11-year period. | J Vasc Surg | 24246534 |

B. Results of Evaluation of IR Performance

We evaluated the IR performance of EMERSE in the context of several clinical and informatics studies. For example, in a psychiatric trial,53 we assessed the efficiency of eligibility screening with and without the system and showed that the team assisted by EMERSE achieved significant time savings while maintaining accuracy compared to the team performing manual chart review. In another study aimed at improving the process of data submission to the American College of Surgeons National Surgical Quality Improvement Program (ACS NSQIP), we demonstrated that automated data extraction procedures powered by EMERSE attained high sensitivity (100.0% and 92.8%, respectively) and high specificity (93.0% and 95.9%, respectively) in identifying postoperative complications, comparing favorably with existing ACS NSQIP datasets manually prepared by clinical surgical nurses.54 Finally, in collaboration with pediatric cardiologists at UMHS, we compared the performance of EMERSE with that of three specialty surgical registries in identifying a rare tachyarrhythmia associated with congenital heart surgery. EMERSE was the best-performing method, yielding the highest sensitivity (96.9%) with comparable performance on other evaluation dimensions.55
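For readers less familiar with these measures, sensitivity is TP / (TP + FN) and specificity is TN / (TN + FP), computed by comparing search-flagged cases against a reference standard. The Python sketch below shows that computation on sets of patient IDs; the function name and the toy IDs in the usage line are hypothetical and are not data from the studies cited.

```python
def evaluate(flagged, reference, population):
    """Sensitivity and specificity of search-flagged cases against a reference standard.

    flagged: IDs identified via search; reference: true cases; population: all IDs reviewed.
    """
    flagged, reference, population = set(flagged), set(reference), set(population)
    tp = len(flagged & reference)               # true cases found
    fn = len(reference - flagged)               # true cases missed
    fp = len(flagged - reference)               # non-cases flagged
    tn = len(population - flagged - reference)  # non-cases correctly left out
    ratio = lambda num, den: num / den if den else float("nan")
    return {"sensitivity": ratio(tp, tp + fn), "specificity": ratio(tn, tn + fp)}


# Toy IDs for illustration only.
result = evaluate(flagged={0, 1, 2, 5}, reference={0, 1, 2, 3}, population=range(10))
print(result["sensitivity"])  # 0.75
```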

C. Results of End User Satisfaction Survey

Periodic satisfaction surveys have been conducted as part of an ongoing effort to collect users' feedback on the usefulness and usability of EMERSE. The most recent survey received responses from 297 users (60.5% of all active users at the time). According to the survey, the three largest user groups of EMERSE were clinicians with research responsibilities (22.2%), data analysts/managers (21.5%), and full-time researchers (14.4%). Over a quarter of the respondents worked in hematology and oncology (26.7%), followed by pediatrics (17.5%), general medicine (8.7%), and general surgery (8.3%). The respondents also included 17 (5.7%) undergraduate students, 17 (5.7%) non-medical graduate students, and 13 (4.4%) medical students approved to participate in clinical research as student research assistants.

The survey questionnaire solicited end users' opinions about EMERSE, such as the extent to which the system facilitates data extraction tasks (“solves my problem or facilitates the tasks I face”) and how use of the system compares with manual chart review (“has expanded my ability to conduct chart reviews to areas previously impossible with manual review”). The results are shown in Table 2. Across all items, EMERSE users reported a high level of satisfaction with the system, and the vast majority believed that the system improved time efficiency and helped them find data that they “might have otherwise missed or overlooked.”

Table 2. End user satisfaction survey results.

| | Survey item | Does not meet (%) | Meets or exceeds (%) | Does not apply (%) |
|---|---|---|---|---|
| 1. | Enables effective searching | 0.4 | 92.6 | 7.0 |
| 2. | Solves my problem or facilitates the tasks I face | 1.3 | 88.7 | 10.0 |
| 3. | Saves overall time and effort | 0.4 | 95.3 | 4.3 |
| 4. | Allows me to get accurate answers | 0.9 | 88.9 | 10.2 |
| 5. | Helps me find data I might have otherwise missed or overlooked | 0.4 | 93.1 | 6.5 |
| 6. | Has expanded my ability to conduct chart reviews to areas previously impossible with manual review | 1.8 | 78.8 | 19.4 |

IV. Discussion

Healthcare practitioners and researchers continue to seek efficient and effective informatics tools to support their needs for retrieving information from narrative patient records. EHR search engines, which work similarly to Google but are equipped with features to accommodate the unique characteristics of medical text, provide a viable solution for meeting such needs. The success of EMERSE at our institution demonstrates the potential of using EHR search engines in clinical, operational, and research settings, enabling critical tasks such as patient case synthesis and research data abstraction.

However, only a handful of studies in the biomedical informatics literature have discussed the design and use of EHR search engines (e.g., Harvard's Queriable Patient Inference Dossier [QPID] system and Columbia's CISearch system);24,26-30,56,57 even fewer have rigorously evaluated the systems' usability and IR performance, or reported usage metrics. There remains a paucity of knowledge about how well existing systems have been used in practice and how their design and implementation can be further improved. We believe this paper helps to address this knowledge gap.

We learned several important lessons by interacting with end users of EMERSE and incorporating their feedback to continuously improve the system. First, we found that users in healthcare desire simple tools and are willing to sacrifice efficiency for less learning and more cognitive comfort: some spent hours scrutinizing ‘imperfect’ search results returned by rudimentary queries rather than trying the readily available advanced search features that could have improved the results.44 For example, early generations of EMERSE supported regular expressions, allowing users to construct sophisticated queries that could yield the desired results with high precision. An analysis of the search logs, however, revealed that this feature was minimally used.44 Second, we found that the quality of search queries submitted by end users is generally poor; in particular, queries often fail to include essential alternative phrasings.44 As a result, several new functions, such as computer-aided query recommendation, were introduced to help users construct high-quality queries.
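To illustrate why omitted alternative phrasings matter, the Python sketch below expands a user's term with synonyms and compiles all of them into one case-insensitive, word-bounded regular expression. It is a minimal sketch under stated assumptions: the synonym table, the sample note snippets, and the function names are hypothetical, and this is not the query recommendation method used in EMERSE.

```python
from __future__ import annotations

import re

# Hypothetical synonym table for illustration only; not EMERSE's vocabulary.
SYNONYMS: dict[str, list[str]] = {
    "myocardial infarction": ["mi", "heart attack", "stemi", "nstemi"],
}

# Hypothetical note snippets used in the usage example.
SAMPLE_NOTES = [
    "Pt admitted with acute myocardial infarction.",
    "History of MI in 2009, on aspirin.",
    "Denies chest pain; no heart attack history reported.",
    "Mild anemia, otherwise unremarkable.",
]


def expand_query(term: str, synonyms: dict[str, list[str]]) -> list[str]:
    """Return the lowercased term followed by any known alternative phrasings."""
    key = term.lower()
    return [key] + synonyms.get(key, [])


def build_pattern(phrases: list[str]) -> re.Pattern[str]:
    """Compile one case-insensitive regex matching any phrase as whole words."""
    # Longest phrases first so a longer phrase wins when alternatives overlap;
    # re.escape makes characters such as '.' or '+' match literally.
    ordered = sorted(phrases, key=len, reverse=True)
    body = "|".join(re.escape(p) for p in ordered)
    return re.compile(r"\b(?:" + body + r")\b", re.IGNORECASE)


def matching_notes(notes: list[str], pattern: re.Pattern[str]) -> list[int]:
    """Return the indices of notes that contain at least one match."""
    return [i for i, note in enumerate(notes) if pattern.search(note)]


if __name__ == "__main__":
    naive = build_pattern(["myocardial infarction"])
    expanded = build_pattern(expand_query("myocardial infarction", SYNONYMS))
    print("naive:", matching_notes(SAMPLE_NOTES, naive))        # [0]
    print("expanded:", matching_notes(SAMPLE_NOTES, expanded))  # [0, 1, 2]
```

The literal query finds only the first note, while the expanded query also finds the abbreviation "MI" and the lay phrase "heart attack". The third match is a negated mention, a reminder that keyword retrieval surfaces candidate documents and the user still has to read each hit in context.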

Third, users of EMERSE embraced the collaborative search feature more readily than anticipated. They were enthusiastic not only about adopting search term bundles shared by others but also about creating bundles and making them widely available. This sharing of search knowledge also extends beyond EMERSE: several studies enabled by the system published the search terms they used so that other researchers could replicate the results.36,58-64 As more studies leverage EHR data for clinical and translational research, a central repository of the search terms used to extract data from EHRs could serve as a useful, shareable knowledge base.
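A search term bundle can be thought of as a named, shareable list of terms. The Python sketch below shows one hypothetical way to represent such a bundle, merge bundles contributed by different users without duplicating terms, and serialize a bundle to JSON so that it could be published alongside a study. The class, field, and function names are assumptions for illustration and do not describe EMERSE's internal data model.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field


@dataclass
class SearchTermBundle:
    """Hypothetical representation of a shareable, named set of search terms."""
    name: str
    owner: str
    terms: list[str] = field(default_factory=list)
    shared: bool = False

    def to_json(self) -> str:
        """Serialize the bundle so it can be published with a study's methods."""
        return json.dumps(asdict(self), indent=2)

    @classmethod
    def from_json(cls, text: str) -> SearchTermBundle:
        """Rebuild a bundle from its published JSON form."""
        return cls(**json.loads(text))


def merge_bundles(name: str, owner: str, *bundles: SearchTermBundle) -> SearchTermBundle:
    """Combine bundles, dropping case-insensitive duplicates and keeping first-seen order."""
    seen: set[str] = set()
    merged: list[str] = []
    for bundle in bundles:
        for term in bundle.terms:
            key = term.lower()
            if key not in seen:
                seen.add(key)
                merged.append(term)
    return SearchTermBundle(name=name, owner=owner, terms=merged)


if __name__ == "__main__":
    # Hypothetical bundles from two users.
    a = SearchTermBundle("smoking", "analyst_a", ["tobacco", "cigarettes"], shared=True)
    b = SearchTermBundle("smoking-extra", "analyst_b", ["Tobacco", "smoker"], shared=True)
    combined = merge_bundles("smoking-combined", "analyst_c", a, b)
    print(combined.terms)  # ['tobacco', 'cigarettes', 'smoker']
    print(combined.to_json())
```

Storing bundles in a plain, versionable format such as JSON is one way a central repository of search terms could make published queries easy to reuse and audit.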

Lastly, because the goal of EHR search engines is to help users retrieve relevant documents rather than to provide a precise answer to the question at hand, the usability of such systems, particularly in organizing the search results returned, is critical to their success. An EHR search engine with a well-designed user interface could thus be even more effective than a system with superior IR performance but a suboptimal interface. In EMERSE, we implemented many visual and cognitive aids to help users iteratively explore search results, identify the text of interest, and read it in its surrounding context to better interpret its meaning. Based on user feedback, we believe that the usability of the system has played a substantial role in growing our user base and retaining existing users. Nevertheless, building IR functionality into EHRs is complex, and much remains to be done to better understand the varied needs of the many individuals and groups who wish to extract information from narrative clinical documents.

V. Conclusion

This paper reports the University of Michigan's nine-year experience in developing and using EMERSE, a full-text search engine designed to facilitate retrieval of information from narrative clinician notes stored in EHRs. Given its substantial adoption across a wide range of use scenarios, EMERSE has proven to be a valuable addition to the clinical, operational, and research enterprise at our institution. Other institutions interested in adopting EMERSE can obtain the software at no cost for academic use.

Supplementary Material

Highlights.

EMERSE is an information retrieval system designed for free-text clinical notes

EMERSE supports varied tasks including infection control surveillance and research

Sharing and reusing search terms helps to ensure standardized search results

Synonym recommendations are important to broaden users' ability to find concepts

EMERSE is available at no cost for academic use

Acknowledgments

This work was supported in part by the Informatics Core of the University of Michigan Cancer Center in addition to support received from the National Institutes of Health through the UMCC Support Grant (CA46592), the Michigan Institute for Clinical and Health Research through the Clinical Translational Science Award (UL1RR024986), and the National Library of Medicine (HHSN276201000032C). We would also like to acknowledge the support of Stephen Gendler and Kurt Richardson from the UMHS Medical Center Information Technology Team, Frank Manion from the Comprehensive Cancer Center, and Thomas Shanley from the CTSA-supported Michigan Institute for Clinical and Health Research.

Footnotes

Contributorship Statement: David Hanauer contributed to the conception and design of the production system as well as the experimental extension to EMERSE, acquisition, analysis and interpretation of the data, and drafting the manuscript.

James Law contributed to the conception, design, and coding of the production system, analysis and interpretation of the data, and drafting the manuscript.

Ritu Khanna contributed to the conception, design, and coding of the production system, analysis and interpretation of the data, and drafting the manuscript.

Qiaozhu Mei contributed to the conception and design of the experimental extension to EMERSE, the analysis and interpretation of the data, and drafting the manuscript.

Kai Zheng contributed to the conception and design of the experimental extension to EMERSE, the analysis and interpretation of the data, and drafting the manuscript.

All authors approved the final version to be published and agree to be accountable for all aspects of the work.

Conflict of Interest Statement: EMERSE was developed at the University of Michigan and is currently offered at no cost for academic use. For commercial use, a non-academic license is offered by the University of Michigan for a small fee. As inventor of EMERSE, David Hanauer is entitled to a portion of the royalties received by the University of Michigan.


References

1. Friedman C, Rigby M. Conceptualising and creating a global learning health system. Int J Med Inform. 2013;82(4):e63–71. doi: 10.1016/j.ijmedinf.2012.05.010.

2. Friedman CP, Wong AK, Blumenthal D. Achieving a nationwide learning health system. Sci Transl Med. 2010;2(57):57cm29. doi: 10.1126/scitranslmed.3001456.

3. Ford E, Nicholson A, Koeling R, et al. Optimising the use of electronic health records to estimate the incidence of rheumatoid arthritis in primary care: what information is hidden in free text? BMC Med Res Methodol. 2013;13(1):105. doi: 10.1186/1471-2288-13-105.

4. Rosenbloom ST, Denny JC, Xu H, et al. Data from clinical notes: a perspective on the tension between structure and flexible documentation. J Am Med Inform Assoc. 2011;18(2):181–6. doi: 10.1136/jamia.2010.007237.

5. Madani S, Shultz E. An Analysis of Free Text Entry within a Structured Data Entry System. AMIA Annu Symp Proc. 2012:947.

6. Zheng K, Hanauer DA, Padman R, et al. Handling anticipated exceptions in clinical care: investigating clinician use of ‘exit strategies’ in an electronic health records system. J Am Med Inform Assoc. 2011;18(6):883–9. doi: 10.1136/amiajnl-2011-000118.

7. Zhou L, Mahoney LM, Shakurova A, et al. How many medication orders are entered through free-text in EHRs?--a study on hypoglycemic agents. AMIA Annu Symp Proc. 2012;2012:1079–88.

8. Raghavan P, Chen JL, Fosler-Lussier E, et al. How essential are unstructured clinical narratives and information fusion to clinical trial recruitment? AMIA Joint Summits on Translational Science proceedings AMIA Summit on Translational Science. 2014;2014:218–23.

9. Edinger T, Cohen AM, Bedrick S, et al. Barriers to retrieving patient information from electronic health record data: failure analysis from the TREC Medical Records Track. AMIA Annu Symp Proc. 2012;2012:180–8.

10. Barrows RC, Jr, Busuioc M, Friedman C. Limited parsing of notational text visit notes: ad-hoc vs. NLP approaches. Proc AMIA Symp. 2000:51–5.

11. Agarwal S, Yu H. Biomedical negation scope detection with conditional random fields. J Am Med Inform Assoc. 2010;17(6):696–701. doi: 10.1136/jamia.2010.003228.

12. Hanauer DA, Liu Y, Mei Q, et al. Hedging their mets: the use of uncertainty terms in clinical documents and its potential implications when sharing the documents with patients. AMIA Annu Symp Proc. 2012;2012:321–30.

13. Mitchell KJ, Becich MJ, Berman JJ, et al. Implementation and evaluation of a negation tagger in a pipeline-based system for information extract from pathology reports. Stud Health Technol Inform. 2004;107(Pt 1):663–7.

14. Jagannathan V, Mullett CJ, Arbogast JG, et al. Assessment of commercial NLP engines for medication information extraction from dictated clinical notes. Int J Med Inform. 2009;78(4):284–91. doi: 10.1016/j.ijmedinf.2008.08.006.

15. Chapman WW, Savova GK, Zheng J, et al. Anaphoric reference in clinical reports: characteristics of an annotated corpus. J Biomed Inform. 2012;45(3):507–21. doi: 10.1016/j.jbi.2012.01.010.

16. Tang B, Wu Y, Jiang M, et al. A hybrid system for temporal information extraction from clinical text. J Am Med Inform Assoc. 2013;20(5):828–35. doi: 10.1136/amiajnl-2013-001635.

17. Zheng K, Mei Q, Yang L, et al. Voice-dictated versus typed-in clinician notes: linguistic properties and the potential implications on natural language processing. AMIA Annu Symp Proc. 2011;2011:1630–8.

18. Christensen T, Grimsmo A. Instant availability of patient records, but diminished availability of patient information: a multi-method study of GP's use of electronic patient records. BMC Med Inform Decis Mak. 2008;8:12. doi: 10.1186/1472-6947-8-12.

19. Jha AK. The promise of electronic records: around the corner or down the road? JAMA. 2011;306(8):880–1. doi: 10.1001/jama.2011.1219.

20. Friedman C, Rindflesch TC, Corn M. Natural language processing: state of the art and prospects for significant progress, a workshop sponsored by the National Library of Medicine. J Biomed Inform. 2013;46(5):765–73. doi: 10.1016/j.jbi.2013.06.004.

21. Nadkarni PM, Ohno-Machado L, Chapman WW. Natural language processing: an introduction. J Am Med Inform Assoc. 2011;18(5):544–51. doi: 10.1136/amiajnl-2011-000464.

22. Stanfill MH, Williams M, Fenton SH, et al. A systematic literature review of automated clinical coding and classification systems. J Am Med Inform Assoc. 2010;17(6):646–51. doi: 10.1136/jamia.2009.001024.

23. Zeng QT, Goryachev S, Weiss S, et al. Extracting principal diagnosis, co-morbidity and smoking status for asthma research: evaluation of a natural language processing system. BMC Med Inform Decis Mak. 2006;6:30. doi: 10.1186/1472-6947-6-30.

24. Biron P, Metzger MH, Pezet C, et al. An information retrieval system for computerized patient records in the context of a daily hospital practice: the example of the Léon Bérard Cancer Center (France). Appl Clin Inform. 2014;5(1):191–205. doi: 10.4338/ACI-2013-08-CR-0065.

25. Gardner M. Information retrieval for patient care. BMJ. 1997;314(7085):950–3. doi: 10.1136/bmj.314.7085.950.

26. Gregg W, Jirjis J, Lorenzi NM, et al. StarTracker: an integrated, web-based clinical search engine. AMIA Annu Symp Proc. 2003:855.

27. Natarajan K, Stein D, Jain S, et al. An analysis of clinical queries in an electronic health record search utility. Int J Med Inform. 2010;79(7):515–22. doi: 10.1016/j.ijmedinf.2010.03.004.

28. Tawfik AA, Kochendorfer KM, Saparova D, et al. “I Don't Have Time to Dig Back Through This”: The Role of Semantic Search in Supporting Physician Information Seeking in an Electronic Health Record. Performance Improvement Quarterly. 2014;26(4):75–91.

29. Zalis M, Harris M. Advanced search of the electronic medical record: augmenting safety and efficiency in radiology. J Am Coll Radiol. 2010;7(8):625–33. doi: 10.1016/j.jacr.2010.03.011.

30. Lowe HJ, Ferris TA, Hernandez PM, et al. STRIDE--An integrated standards-based translational research informatics platform. AMIA Annu Symp Proc. 2009;2009:391–5.

31. Blayney DW, McNiff K, Hanauer D, et al. Implementation of the Quality Oncology Practice Initiative at a university comprehensive cancer center. J Clin Oncol. 2009;27(23):3802–7. doi: 10.1200/JCO.2008.21.6770.

32. Braley TJ, Segal BM, Chervin RD. Sleep-disordered breathing in multiple sclerosis. Neurology. 2012;79(9):929–36. doi: 10.1212/WNL.0b013e318266fa9d.

33. DiMagno MJ, Spaete JP, Ballard DD, et al. Risk models for post-endoscopic retrograde cholangiopancreatography pancreatitis (PEP): smoking and chronic liver disease are predictors of protection against PEP. Pancreas. 2013;42(6):996–1003. doi: 10.1097/MPA.0b013e31827e95e9.

34. Hanauer DA, Ramakrishnan N, Seyfried LS. Describing the relationship between cat bites and human depression using data from an electronic health record. PLoS One. 2013;8(8):e70585. doi: 10.1371/journal.pone.0070585.

35. Paczesny S, Braun TM, Levine JE, et al. Elafin is a biomarker of graft-versus-host disease of the skin. Sci Transl Med. 2010;2(13):13ra2. doi: 10.1126/scitranslmed.3000406.

36. Debenedet AT, Raghunathan TE, Wing JJ, et al. Alcohol use and cigarette smoking as risk factors for post-endoscopic retrograde cholangiopancreatography pancreatitis. Clin Gastroenterol Hepatol. 2009;7(3):353–8e4. doi: 10.1016/j.cgh.2008.11.020.

37. DeBenedet AT, Saini SD, Takami M, et al. Do clinical characteristics predict the presence of small bowel angioectasias on capsule endoscopy? Dig Dis Sci. 2011;56(6):1776–81. doi: 10.1007/s10620-010-1506-9.

38. Kim HM, Smith EG, Ganoczy D, et al. Predictors of suicide in patient charts among patients with depression in the Veterans Health Administration health system: importance of prescription drug and alcohol abuse. J Clin Psychiatry. 2012;73(10):e1269–75. doi: 10.4088/JCP.12m07658.

39. Kim HM, Smith EG, Stano CM, et al. Validation of key behaviourally based mental health diagnoses in administrative data: suicide attempt, alcohol abuse, illicit drug abuse and tobacco use. BMC Health Serv Res. 2012;12:18. doi: 10.1186/1472-6963-12-18.

40. Smith EG, Kim HM, Ganoczy D, et al. Suicide risk assessment received prior to suicide death by Veterans Health Administration patients with a history of depression. J Clin Psychiatry. 2013;74(3):226–32. doi: 10.4088/JCP.12m07853.

41. Zheng K, Mei Q, Hanauer DA. Collaborative search in electronic health records. J Am Med Inform Assoc. 2011;18(3):282–91. doi: 10.1136/amiajnl-2011-000009.

42. The Java Open Source Spell Checker. http://jazzy.sourceforge.net/

43. SpellChecker. http://wiki.apache.org/lucene-java/SpellChecker

44. Yang L, Mei Q, Zheng K, et al. Query log analysis of an electronic health record search engine. AMIA Annu Symp Proc. 2011;2011:915–24.

45. Aronson AR, Lang FM. An overview of MetaMap: historical perspective and recent advances. J Am Med Inform Assoc. 2010;17(3):229–36. doi: 10.1136/jamia.2009.002733.

46. Murphy SN, Weber G, Mendis M, et al. Serving the enterprise and beyond with informatics for integrating biology and the bedside (i2b2). J Am Med Inform Assoc. 2010;17(2):124–30. doi: 10.1136/jamia.2009.000893.

47. Friedman C, Sturm LK, Chenoweth C. Electronic chart review as an aid to postdischarge surgical site surveillance: increased case finding. Am J Infect Control. 2001;29(5):329–32. doi: 10.1067/mic.2001.114401.

48. Ferrara JL, Harris AC, Greenson JK, et al. Regenerating islet-derived 3-alpha is a biomarker of gastrointestinal graft-versus-host disease. Blood. 2011;118(25):6702–8. doi: 10.1182/blood-2011-08-375006.

49. Harris AC, Ferrara JL, Braun TM, et al. Plasma biomarkers of lower gastrointestinal and liver acute GVHD. Blood. 2012;119(12):2960–3. doi: 10.1182/blood-2011-10-387357.

50. Paczesny S, Krijanovski OI, Braun TM, et al. A biomarker panel for acute graft-versus-host disease. Blood. 2009;113(2):273–8. doi: 10.1182/blood-2008-07-167098.

51. Gao M, Gemmete JJ, Chaudhary N, et al. A comparison of particulate and onyx embolization in preoperative devascularization of juvenile nasopharyngeal angiofibromas. Neuroradiology. 2013;55(9):1089–96. doi: 10.1007/s00234-013-1213-2.

52. Jensen KM, Taylor LC, Davis MM. Primary care for adults with Down syndrome: adherence to preventive healthcare recommendations. J Intellect Disabil Res. 2013;57(5):409–21. doi: 10.1111/j.1365-2788.2012.01545.x.

53. Seyfried L, Hanauer DA, Nease D, et al. Enhanced identification of eligibility for depression research using an electronic medical record search engine. Int J Med Inform. 2009;78(12):e13–8. doi: 10.1016/j.ijmedinf.2009.05.002.

54. Hanauer DA, Englesbe MJ, Cowan JA, Jr, et al. Informatics and the American College of Surgeons National Surgical Quality Improvement Program: automated processes could replace manual record review. J Am Coll Surg. 2009;208(1):37–41. doi: 10.1016/j.jamcollsurg.2008.08.030.

55. Zampi JD, Donohue JE, Charpie JR, et al. Retrospective database research in pediatric cardiology and congenital heart surgery: an illustrative example of limitations and possible solutions. World J Pediatr Congenit Heart Surg. 2012;3(3):283–7. doi: 10.1177/2150135112440462.

56. Campbell EJ, Krishnaraj A, Harris M, et al. Automated before-procedure electronic health record screening to assess appropriateness for GI endoscopy and sedation. Gastrointest Endosc. 2012;76(4):786–92. doi: 10.1016/j.gie.2012.06.003.

57. Scheufele EL, Housman D, Wasser JS, et al. i2b2 and Keyword Search of Narrative Clinical Text. Annu Symp Proc. 2011:1950.

58. Asmar R, Beebe-Dimmer JL, Korgavkar K, et al. Hypertension, obesity and prostate cancer biochemical recurrence after radical prostatectomy. Prostate Cancer Prostatic Dis. 2013;16(1):62–6. doi: 10.1038/pcan.2012.32.

59. Ghaziuddin N, Dhossche D, Marcotte K. Retrospective chart review of catatonia in child and adolescent psychiatric patients. Acta Psychiatr Scand. 2012;125(1):33–8. doi: 10.1111/j.1600-0447.2011.01778.x.

60. Jensen KM, Cooke CR, Davis MM. Fidelity of Administrative Data When Researching Down Syndrome. Med Care. 2013. doi: 10.1097/MLR.0b013e31827631d2.

61. Jensen KM, Davis MM. Health care in adults with Down syndrome: a longitudinal cohort study. J Intellect Disabil Res. 2013;57(10):947–58. doi: 10.1111/j.1365-2788.2012.01589.x.

62. O'Lynnger TM, Al-Holou WN, Gemmete JJ, et al. The effect of age on arteriovenous malformations in children and young adults undergoing magnetic resonance imaging. Childs Nerv Syst. 2011;27(8):1273–9. doi: 10.1007/s00381-011-1434-9.

63. Patrick SW, Davis MM, Sedman AB, et al. Accuracy of hospital administrative data in reporting central line-associated bloodstream infections in newborns. Pediatrics. 2013;131 Suppl 1:S75–80. doi: 10.1542/peds.2012-1427i.

64. Singer K, Subbaiah P, Hutchinson R, et al. Clinical course of sepsis in children with acute leukemia admitted to the pediatric intensive care unit. Pediatr Crit Care Med. 2011;12(6):649–54. doi: 10.1097/PCC.0b013e31821927f1.
