Abstract

Recent fMRI decoding studies have demonstrated that early retinotopic visual areas exhibit similar patterns of activity during the perception of a stimulus and during the maintenance of that stimulus in working memory. These findings provide support for the sensory recruitment hypothesis that the mechanisms underlying perception serve as a foundation for visual working memory. However, a recent study by Ester, Serences, and Awh (2009) found that the orientation of a peripheral grating maintained in working memory could be classified from both the contralateral and ipsilateral regions of the primary visual cortex (V1), implying that, unlike perception, feature-specific information was maintained in a nonretinotopic manner. Here, we evaluated the hypothesis that early visual areas can maintain information in a spatially specific manner and will do so if the task encourages the binding of feature information to a specific location. To encourage reliance on spatially specific memory, our experiment required observers to retain the orientations of two laterally presented gratings. Multivariate pattern analysis revealed that the orientation of each remembered grating was classified more accurately based on activity patterns in the contralateral than in the ipsilateral regions of V1 and V2. In contrast, higher extrastriate areas exhibited similar levels of performance across the two hemispheres. A time-resolved analysis further indicated that the retinotopic specificity of the working memory representation in V1 and V2 was maintained throughout the retention interval. Our results suggest that early visual areas provide a cortical basis for actively maintaining information about the features and locations of stimuli in visual working memory.

Keywords: visual short-term memory, fMRI, pattern classification, decoding, feature-based attention, imagery

Introduction

Visual working memory refers to our ability to retain a small amount of visual information with high fidelity for a short period of time (Baddeley, 1986; Cowan, 2008; Zhang & Luck, 2008). Both neurophysiological and human neuroimaging studies have shown that higher-order brain areas, including the prefrontal, temporal, and parietal cortices, are involved in working memory maintenance (Fuster & Alexander, 1971; Miller, Erickson, & Desimone, 1996; Miyashita & Chang, 1988; Pessoa, Gutierrez, Bandettini, & Ungerleider, 2002; Ranganath & D'Esposito, 2005; Todd & Marois, 2004; Xu & Chun, 2006). However, recent human imaging studies have shown that early visual areas, including the primary visual cortex (V1), also support the active maintenance of visual information (Harrison & Tong, 2009; Serences, Ester, Vogel, & Awh, 2009). For example, in the study by Harrison and Tong (2009), participants viewed two sequentially presented oriented gratings and were subsequently cued to retain one of the two orientations during the retention interval. Using multivoxel pattern analysis (MVPA, Kamitani & Tong, 2005; Norman, Polyn, Detre, & Haxby, 2006; Tong & Pratte, 2012), they showed that fMRI activity patterns in both the striate and extrastriate visual areas could be used to successfully classify which of the stimuli was being retained. Moreover, pattern classifiers trained on cortical responses to unattended presentations of low-contrast gratings could successfully classify orientations being held in memory. These results suggest that features are maintained in working memory using the same mechanisms that underlie visual perception, an idea referred to as the sensory recruitment hypothesis (see also Pasternak & Greenlee, 2005).

The sensory recruitment hypothesis is also supported by behavioral evidence. For example, the visual features of a stimulus, such as its spatial frequency, color, motion direction, or orientation, can be held in memory for well over 10 s with a precision that nearly matches that of immediate perceptual judgments (see Magnussen & Greenlee, 1999 for a review). Moreover, working memory performance is better when the sample and test stimuli are presented in the same spatial location than when they appear in different locations (Hollingworth, 2006, 2007; Zaksas, Bisley, & Pasternak, 2001). Taken together, these behavioral findings provide support for the proposal that visual working memory relies on the same neural representations that underlie visual perception with a retinotopically specific representation of local feature information.

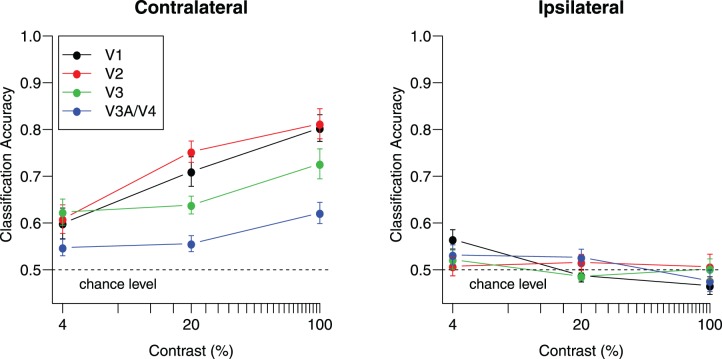

If the neural mechanisms underlying visual working memory are similar to those underlying vision itself, then signatures of orientation processing in early visual areas during viewing should also be observed during the retention of orientation in working memory. One such characteristic of orientation processing is retinotopic specificity: Reliable orientation information should be found primarily at the corresponding retinotopic location in the visual cortex. Figure 1 shows orientation classification performance reported by Tong, Harrison, Dewey, and Kamitani (2012), in which participants viewed two simultaneously presented gratings in the left and right visual fields with independent orientations. The authors showed that classification performance improves as a function of stimulus contrast in the corresponding contralateral visual cortex but remains at chance level in the ipsilateral visual cortex. Tong et al. (2012) also found that this contralateral specificity holds across a range of stimulus spatial frequencies. Although above-chance classification has been found in the ipsilateral visual cortex in studies involving feature-based attention, even here, decoding of the attended stimulus is typically better for contralateral than ipsilateral cortical regions (e.g., Jehee, Brady, & Tong, 2011; Kamitani & Tong, 2005; Serences & Boynton, 2007; but see Anderson, Ester, Serences, & Awh, 2013 for a recent exception).

Figure 1.

Classification accuracy for viewed gratings increases as a function of stimulus contrast in the contralateral visual cortex but falls around chance-level performance in the ipsilateral visual cortex. Adapted with permission from Tong et al. (2012). Separate gratings were shown in each visual field with independent orientations on each block (45° or 135°) at one of three contrast levels. The left panel shows performance for contralateral hemispheres, which is significantly above chance for all visual areas at all contrast levels (all ps < 0.05, one-tailed t test). The right panel shows performance for the ipsilateral hemispheres, and all areas at all contrast levels failed to deviate significantly from chance with the single exception of V1 at 4% contrast, t(9) = 3.15, p < 0.05, uncorrected. Error bars indicate ±1 SEM based on data from 10 participants.

If perception and visual working memory share a common substrate, then one would expect that the neural representation of a remembered stimulus in early visual areas should be specific to the retinotopic location in which the stimulus was studied. A study by Ester et al. (2009) addressed this question by presenting a single grating in either the left or right visual field. A postcue indicated whether the grating's orientation should be maintained during the subsequent retention interval or not. MVPA was used to classify the retained orientation and applied separately to the activity patterns in left V1 and right V1. Surprisingly, the remembered orientation could be classified based on activity patterns in the hemisphere ipsilateral to the location of the originally presented stimulus. Moreover, classification accuracy was equivalent in V1 regions both ipsilateral and contralateral to the stimulus location. This result was taken to suggest that features in working memory are not retained in a retinotopically specific manner but, rather, are represented in a spatially global fashion in the primary visual cortex. This lack of retinotopic specificity was quite unexpected, not only because it is discordant with the notion that visual working memory relies on neural mechanisms that support perception, but also because of the behavioral evidence of retinotopic specificity in working memory tasks (Hollingworth, 2006, 2007; Zaksas et al., 2001).

The results of Ester et al. (2009) provide compelling evidence that maintained information about a visual feature can spread to other locations in the visual cortex—even to the opposite hemisphere. These findings are consistent with fMRI reports of spatial spreading of feature-based attention to unstimulated portions of the visual field (Jehee et al., 2011; Serences & Boynton, 2007). However, is it necessarily the case that these areas can only maintain visual information by relying on nonspatiotopic representations? Perhaps under different circumstances, one might find that the visual system is capable of maintaining the representation of specific features at specific spatial locations.

The goal of the present study was to evaluate the hypothesis that early visual areas should be capable of maintaining information in a spatially specific manner and will do so if the working memory task encourages the binding of featural information to specific visual locations. In the study by Ester et al. (2009), participants only had to retain the orientation of a single peripheral grating. As a consequence, they could potentially rely on a strategy of maintaining information about that single orientation in a manner that was not bound to the original stimulus location. For example, participants might prefer to maintain such information by relying on foveal representations in early visual areas or by relying on most of the visual cortex. Although such strategies would lead to bilateral representations of the orientation, these results may not necessarily reflect a fundamental property of working memory representations in early visual areas.

In the present study, we encouraged the maintenance of spatially specific information by requiring our participants to remember the orientations of two gratings presented in separate hemifields (see Figure 2). This experimental design was expected to encourage participants to maintain a representation of each stimulus orientation at the corresponding location to avoid potentially confusing which of the two orientations appeared at which location. We applied multivariate pattern analysis to fMRI activity patterns corresponding to the retention interval to determine whether reliable orientation information was maintained in both the contralateral and ipsilateral regions of interest (ROIs) of the early visual areas. If working memory relies on spatially global representations, then one would expect to find equally good classification performance across the contralateral and ipsilateral representations in the visual cortex. However, if working memory is spatially specific and our task successfully motivates participants to take advantage of this specificity, then we expect to find better classification performance in the contralateral representation than in the ipsilateral representation.

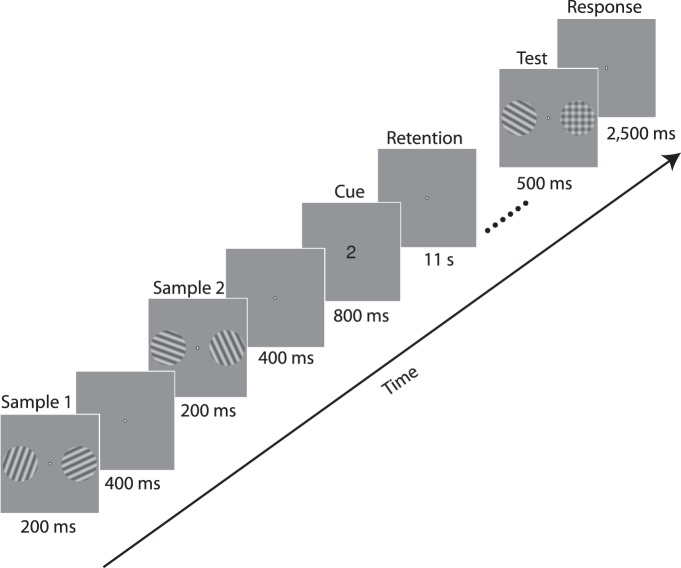

Figure 2.

Trial structure. Two sample gratings were presented bilaterally at the beginning of each trial and were soon followed by two more gratings. A central cue of “1” or “2” then indicated which pair of gratings was to be retained during the subsequent retention interval. Following retention, a grating was presented in either the left or right position, rotated slightly clockwise or counterclockwise relative to the original sample grating presented in that location; a plaid stimulus appeared in the untested location. Participants indicated the direction of the orientation change within the response period.

Experiment

Methods

Participants

Eight adults (three male), ages 22–39, with normal or corrected-to-normal visual acuity participated in both a retinotopic scanning session and an experimental session. All participants provided informed written consent, and the study was approved by the Vanderbilt University Institutional Review Board.

Data acquisition

All MRI data were collected with a Philips 3T Intera Achieva scanner using an eight-channel head coil. Functional acquisitions were standard gradient-echo echoplanar T2*-weighted images, consisting of 28 slices aligned perpendicular to the calcarine sulcus (TR 2000 ms; TE 35 ms; flip angle 80°; FOV = 192 × 192 mm; slice thickness 3 mm with no gap; in-plane resolution 3 × 3 mm), acquired with no SENSE acceleration. This slice prescription covered the entire occipital lobe and parts of the posterior parietal and temporal cortices. During a separate retinotopic mapping session, a high-resolution 3-D anatomical T1-weighted image was acquired (FOV = 256 × 256; resolution = 1 × 1 × 1 mm), and this image was used to construct an inflated representation of the cortical surface. A custom bite-bar system was used to minimize head motion.

Stimuli and design

This experiment relied on a postcuing working memory paradigm (Harrison & Tong, 2009) to distinguish memory-specific activity from stimulus-driven activity; the structure of a representative trial is shown in Figure 2. Each trial began with the 200-ms presentation of two sine wave gratings (radius 4°; spatial frequency 1.2 c/°; contrast 0.2), centered at locations 5.5° to the left and right of fixation. Following a brief interstimulus interval, two more gratings were presented at the same locations. The orientations of the two left-field gratings were approximately 20° and 110°, and those of the two right-field gratings were 65° and 155° (jittered by up to ±5° on each trial), presented in a randomized order. Following a brief poststimulus period, a retroactive cue of “1” or “2” was presented, instructing participants to retain either the first or second pair of stimuli, respectively, during the subsequent 11-s retention interval. Following retention, a test grating was presented in one visual location, and a plaid stimulus was presented in the other. The orientation of the test grating was rotated clockwise or counterclockwise relative to the grating studied in that location. Participants indicated the direction of rotation from study to test with a button press (within 3 s of stimulus onset). The magnitude of the orientation change was set to 8° at the beginning of each session and adjusted slightly between runs (range 8°–10°) to ensure that the participant's accuracy remained near 75% correct.

Each 8.8-min experimental run consisted of 16 trials that lasted 16 s each, which were interleaved with 16-s fixation-rest periods. Conditions within each run were counterbalanced in a 2 × 2 × 2 × 2 factorial design across factors of left item order (20° first vs. 110° first), right item order (65° first vs. 155° first), which set of gratings was cued (first vs. second), and which location was probed at test (left vs. right). The order of these conditions was randomized within each run. Each participant completed 9 or 10 runs of the experiment, thereby providing 144–160 total sample trials for fMRI analysis.
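The counterbalancing scheme described above can be illustrated with a minimal Python sketch. The factor labels and the function name `build_run` are hypothetical and chosen only for illustration. The run-duration check assumes that a fixation period both precedes and follows every trial (16 trials plus 17 fixation periods), which is one arrangement consistent with the stated 8.8-min run length but is not specified in the text.

```python
from itertools import product
import random

# Factor levels taken from the design description; the labels are illustrative.
LEFT_ORDER = ("20_first", "110_first")
RIGHT_ORDER = ("65_first", "155_first")
CUED_SET = (1, 2)
PROBE_SIDE = ("left", "right")


def build_run(seed=None):
    """Return the 16 cells of the 2 x 2 x 2 x 2 design in random order."""
    trials = [
        {"left_order": lo, "right_order": ro, "cued_set": cue, "probe_side": side}
        for lo, ro, cue, side in product(LEFT_ORDER, RIGHT_ORDER, CUED_SET, PROBE_SIDE)
    ]
    random.Random(seed).shuffle(trials)
    return trials


run = build_run(seed=0)
n_trials = len(run)

# Assumption: fixation periods bracket every trial, so there is one more
# fixation period than there are trials; each period lasts 16 s.
run_minutes = (n_trials * 16 + (n_trials + 1) * 16) / 60
```

Each run then contains every combination of the four factors exactly once, so 9 or 10 runs yield the 144–160 trials reported above.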

Regions of interest

During the experimental session, participants completed two visual localizer runs in addition to the experimental runs. In the localizer runs, a single grating was presented in either the left or right visual field, alternating position every 12 s. The grating flickered on and off every 200 ms with a random phase, and one of the four orientations used in the experiment was chosen randomly for each presentation. The grating had a slightly smaller radius (3.8°) and the same contrast and spatial frequency as the experimental stimuli. Standard regression analyses were used to identify voxels that preferentially responded to the left stimulus region over the right and vice versa.

In a session separate from the main experiment, standard retinotopic mapping procedures were used to delineate visual areas V1, V2, V3, V3A, V3B, and V4 (DeYoe et al., 1996; Engel, Glover, & Wandell, 1997; Sereno et al., 1995) in the left and right hemispheres. These cortical visual areas were defined on the inflated surface, which was constructed using Freesurfer (Dale, Fischl, & Sereno, 1999). The results of the functional localizer were transformed to this surface using Freesurfer's boundary-based registration (Greve & Fischl, 2009) so that the localizer maps could be overlaid with retinotopic maps. ROIs were identified separately in each hemisphere by taking the 60 most responsive voxels in the appropriate left- or right-hemisphere localizer that lay within area V1, V2, or V3 for each participant. For example, voxels that responded more to the left visual field stimulus in the localizer were used to select voxels in the right hemisphere of V1. The number of voxels used in our main decoding analysis was chosen a priori as it ensured the selection of strongly responsive voxels in areas V1 through V3 and matched the ROI size used by Ester et al. (2009). Areas V3A, V3B, and V4 were combined as fewer active voxels were available in these regions, and the 60 most responsive voxels across all three areas were used to define a combined ROI, V3AB/V4. In addition, an ROI combining all visual areas (labeled “V1–V4”) was constructed by pooling the 60 most responsive voxels from each of the four ROIs (V1, V2, V3, and V3AB/V4), yielding an ROI of 240 voxels.
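The voxel-selection step can be sketched as follows. This is a minimal illustration rather than the analysis code used in the study: the function name `select_roi_voxels`, the flat per-voxel lists, and the toy values are all assumptions, and the localizer statistic is assumed to be signed so that larger values indicate a stronger preference for the contralateral stimulus.

```python
def select_roi_voxels(localizer_stat, area_mask, n_voxels=60):
    """Return indices of the most responsive voxels inside one visual area.

    localizer_stat: per-voxel statistic from the localizer contrast
                    (assumed: larger means more responsive).
    area_mask:      per-voxel booleans marking membership in the
                    retinotopically defined area.
    """
    candidates = [i for i, inside in enumerate(area_mask) if inside]
    ranked = sorted(candidates, key=lambda i: localizer_stat[i], reverse=True)
    return ranked[:n_voxels]


# Toy example with six voxels (hypothetical values) and a 3-voxel ROI.
stat = [0.5, 3.2, -1.0, 2.1, 4.0, 1.7]
mask = [True, True, True, False, True, True]
roi = select_roi_voxels(stat, mask, n_voxels=3)
```

Voxel 3 is excluded despite its strong response because it lies outside the area mask, which mirrors the requirement that selected voxels fall within the retinotopic boundaries.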

Data preprocessing

fMRI data for each participant were simultaneously motion-corrected and aligned to the mean of one run using FSL's MCFLIRT motion-correction algorithm (Jenkinson, Bannister, Brady, & Smith, 2002). The data were then high-pass filtered (cutoff = 50 s) in the temporal domain, spatially smoothed (Gaussian FWHM = 2 mm), and registered to the cortical surface to identify voxels lying within each ROI. We have previously found that modest spatial smoothing using a 2-mm Gaussian kernel can improve orientation classification performance (Swisher et al., 2010) and did so here; however, we also confirmed that the overall patterns of results did not depend on this preprocessing step. The time-series data for each voxel were converted to percentage signal change units separately for each run by dividing the signal by its mean intensity over each run. Outliers were then removed from the signal by truncating any voxel responses that deviated from zero by more than three of that voxel's standard deviations. These data were then averaged over the acquisitions corresponding to the retention interval, shifted by 4 s to account for hemodynamic lag (i.e., the acquisitions starting at 6, 8, 10, and 12 s into the trial). For a given ROI, the result was a matrix of mean voxel responses, for which each row corresponded to a trial and each column was a separate voxel's response. This data matrix and the corresponding label vectors denoting the left-stimulus and right-stimulus orientations served as input for the pattern classification analysis.
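For a single voxel in a single run, the scaling, truncation, and averaging steps might look like the sketch below. Several details are assumptions made for illustration: percentage signal change is computed as 100 × (signal / mean − 1) so that the baseline is zero, the standard deviation is taken about zero across the run, and acquisition index 0 is assumed to begin at trial onset. All function names are hypothetical.

```python
from math import sqrt
from statistics import mean

TR = 2.0  # seconds, from the acquisition parameters
RETENTION_ONSETS = (6, 8, 10, 12)  # acquisition start times within a trial (s)


def percent_signal_change(run_series):
    """Scale one voxel's run by its mean; baseline is zero (assumed form)."""
    baseline = mean(run_series)
    return [100.0 * (v / baseline - 1.0) for v in run_series]


def truncate_outliers(psc, n_sd=3.0):
    """Clip values lying more than n_sd standard deviations from zero."""
    sd_about_zero = sqrt(mean(v * v for v in psc))
    limit = n_sd * sd_about_zero
    return [max(-limit, min(limit, v)) for v in psc]


def retention_mean(psc, trial_onset_index):
    """Average the acquisitions starting 6, 8, 10, and 12 s after trial onset."""
    indices = [trial_onset_index + int(t / TR) for t in RETENTION_ONSETS]
    return mean(psc[i] for i in indices)


# Toy run of ten acquisitions for one voxel (hypothetical intensities).
raw = [100, 102, 98, 100, 101, 103, 104, 102, 96, 94]
psc = truncate_outliers(percent_signal_change(raw))
trial_response = retention_mean(psc, trial_onset_index=0)
```

Repeating `retention_mean` for every trial and every voxel in an ROI would give the trial-by-voxel matrix that serves as classifier input.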

Multivoxel pattern classification

The goal of pattern classification is to quantify the extent to which a group of voxels conveys information about a stimulus condition or cognitive state, such as the orientation being maintained during the retention interval (for reviews, see Norman et al., 2006; Tong & Pratte, 2012). We used a leave-one-run-out cross-validation procedure (Kamitani & Tong, 2005) whereby linear support vector machines (SVMs; Vapnik, 1998) with cost parameters of 0.01 were trained to discriminate between orientation conditions based on activity patterns from all but one run. This trained model was then used to identify the remembered orientation in each trial in the left-out test run. This procedure was repeated iteratively until all runs had served as the test run once, which ensured independence between the training and test data sets. Consequently, the classifier is expected to perform at chance level if no information about the orientation condition is present in the voxel activity pattern. The accuracy of this classification procedure averaged across all trials serves as the dependent measure of the orientation information present in a given ROI. We assessed whether classification accuracy scores were above chance for a given ROI using a one-tailed t test as the large number of samples that were collected for each participant (144–160) ensured that the binomially distributed accuracy scores were well approximated by a normal distribution. We also performed a nonparametric bootstrapping test by repeatedly shuffling the orientation labels at random and performing classification, producing a null distribution of accuracy scores. The proportion of this null distribution that lies above the observed accuracy scores serves as a p value for assessing above-chance classification, and the pattern of results from this analysis was virtually identical to that obtained using the t test.
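The logic of leave-one-run-out cross-validation and the label-shuffling null distribution can be sketched in plain Python. Note that this sketch substitutes a simple nearest-class-mean classifier for the linear SVM used in the study, purely to keep the example self-contained; the function names, the synthetic data, and the choice to count null accuracies at or above the observed value are likewise assumptions.

```python
import random


def nearest_mean_predict(train_X, train_y, x):
    """Stand-in classifier (not an SVM): pick the class with the closest mean."""
    best_label, best_dist = None, float("inf")
    for label in sorted(set(train_y)):
        rows = [r for r, y in zip(train_X, train_y) if y == label]
        centroid = [sum(col) / len(rows) for col in zip(*rows)]
        dist = sum((a - b) ** 2 for a, b in zip(x, centroid))
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


def leave_one_run_out_accuracy(X, y, runs):
    """Train on all runs but one, test on the held-out run, repeat for each run."""
    correct = 0
    for test_run in sorted(set(runs)):
        train = [i for i, r in enumerate(runs) if r != test_run]
        test = [i for i, r in enumerate(runs) if r == test_run]
        train_X = [X[i] for i in train]
        train_y = [y[i] for i in train]
        for i in test:
            correct += nearest_mean_predict(train_X, train_y, X[i]) == y[i]
    return correct / len(y)


def permutation_p_value(X, y, runs, n_perm=200, seed=0):
    """Proportion of label-shuffled accuracies at or above the observed one."""
    rng = random.Random(seed)
    observed = leave_one_run_out_accuracy(X, y, runs)
    null = []
    for _ in range(n_perm):
        shuffled = list(y)
        rng.shuffle(shuffled)
        null.append(leave_one_run_out_accuracy(X, shuffled, runs))
    return sum(a >= observed for a in null) / n_perm


# Synthetic data: 4 runs x 4 trials, 5 voxels, strongly separable classes.
data_rng = random.Random(1)
X, y, runs = [], [], []
for run in range(4):
    for trial in range(4):
        label = trial % 2
        X.append([label * 1.0 + data_rng.gauss(0, 0.1) for _ in range(5)])
        y.append(label)
        runs.append(run)

accuracy = leave_one_run_out_accuracy(X, y, runs)
p_value = permutation_p_value(X, y, runs)
```

Because every test trial comes from a run that the classifier never saw during training, accuracy on shuffled labels hovers around chance, which is what makes the null distribution a meaningful reference.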

Two separate SVMs were trained across trials for each region of interest. One was trained to classify trials based on the remembered orientation presented in the left visual field (20° vs. 110°), and the other was trained to classify orientations presented in the right visual field (65° vs. 155°). The relationship between the stimulus location used to provide labels to train the SVM and the visual area of interest can be categorized as ipsilateral or contralateral. For example, classification accuracy in left V1 for stimuli in the left visual field is ipsilateral whereas classification accuracy in right V1 for stimuli in the left visual field is contralateral. Classification accuracy was averaged across hemispheres separately for each visual area as no differences in performance were observed across hemispheres. The result was a measure of classification accuracy for predicting the orientation of both contralateral and ipsilateral gratings for each visual area.
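The mapping between ROI hemisphere, stimulus location, and the ipsilateral or contralateral category can be written out explicitly. The sketch below is illustrative only; the function names and the accuracy values are hypothetical and are not results from the study.

```python
def laterality(roi_hemisphere, stimulus_field):
    """Classify an ROI/stimulus pairing as ipsilateral or contralateral."""
    valid = ("left", "right")
    if roi_hemisphere not in valid or stimulus_field not in valid:
        raise ValueError("hemisphere and field must be 'left' or 'right'")
    return "ipsilateral" if roi_hemisphere == stimulus_field else "contralateral"


def average_by_laterality(accuracy):
    """Average accuracies over hemispheres within each laterality category.

    accuracy maps (roi_hemisphere, stimulus_field) -> classification accuracy.
    """
    pooled = {"contralateral": [], "ipsilateral": []}
    for (hemisphere, field), value in accuracy.items():
        pooled[laterality(hemisphere, field)].append(value)
    return {name: sum(vals) / len(vals) for name, vals in pooled.items()}


# Hypothetical accuracies for one visual area in one participant.
v1_accuracy = {
    ("left", "left"): 0.50,
    ("left", "right"): 0.60,
    ("right", "left"): 0.58,
    ("right", "right"): 0.52,
}
summary = average_by_laterality(v1_accuracy)
```

Each visual area therefore contributes one contralateral and one ipsilateral score per participant, each the mean of two hemisphere-specific classifiers.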

Results

Behavioral results

Average behavioral performance on the task was 74% (range: 63%–81%). A repeated-measures ANOVA revealed that behavioral performance did not depend on whether the left or right stimulus was tested, F(1, 7) = 0.02, p = 0.90, nor on whether the first or second stimulus was tested, F(1, 7) = 3.1, p = 0.12.

Pattern classification

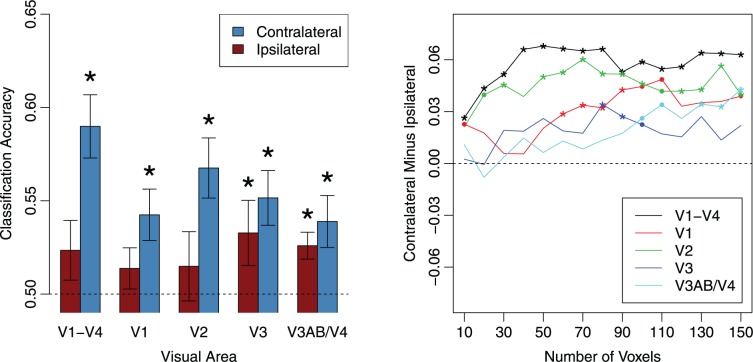

Classifiers were trained on voxel activity patterns to identify the orientation represented during the retention interval, and the accuracy of this classification analysis served as a measure of the amount of orientation information maintained in the activity patterns of a given visual area. The left panel of Figure 3 shows classification accuracy for each visual area separately for contralateral and ipsilateral stimulus-hemisphere relationships (averaged over left and right hemispheres). Overall classification accuracy was lower than that found by Harrison and Tong (2009), which involved retaining a single, large central grating (∼75% decoding accuracy for individual areas), but comparable to the accuracy found by Ester et al. (2009) during the maintenance of a peripheral grating. Additional analyses confirmed that overall decoding accuracy was comparable when the first or second set of gratings had to be retained, similar to previous reports (Harrison & Tong, 2009), indicating that cortical information about the first set of gratings could be actively maintained and was robust to potential interference from the second set of gratings.

Figure 3.

Classification accuracy for orientations held in working memory. Left: Orientation classification performance for gratings presented to the ipsilateral and contralateral hemifields. Error bars denote standard errors; stars denote significant (p < 0.05) deviations from chance-level performance. Right: Contralateral advantage in classification as a function of the number of voxels used in each ROI. Asterisks denote statistically significant effects (p < 0.05); dots denote marginal significance (p < 0.10).

For the contralateral hemisphere, classification performance was significantly greater than chance level as indicated by one-tailed t tests in the combined V1–V4 ROI, t(7) = 5.30, p = 0.0006; V1, t(7) = 3.08, p = 0.009; V2, t(7) = 4.19, p = 0.002; V3, t(7) = 3.52, p = 0.005; and V3AB/V4, t(7) = 2.79, p = 0.01. In contrast, classification performance for the ipsilateral hemisphere was not significantly above chance in the combined V1–V4 ROI, t(7) = 1.47, p = 0.09; V1, t(7) = 1.25, p = 0.13; or V2, t(7) = 0.78, p = 0.23, but was above chance in V3, t(7) = 1.88, p = 0.05, and V3AB/V4, t(7) = 3.60, p = 0.004. These results indicate that information about a laterally presented grating was evident in the bilateral activity patterns of higher extrastriate visual areas, but in early visual areas V1 and V2, reliable information was found only in the hemisphere contralateral to the stimulus being decoded.

Although we find positive evidence in contralateral visual areas and nonsignificant results in ipsilateral V1 and V2, the lack of reliable ipsilateral classification in early areas could arguably be attributed to noise in the fMRI signal and insufficient statistical sensitivity. As a consequence, one cannot rule out the possibility that there might exist some information in the ipsilateral regions of V1 and V2 on the basis of such null results. However, the critical question in this study is whether classification performance is significantly higher in contralateral than ipsilateral hemispheres for each ROI, which would directly suggest at least some degree of retinotopic specificity.

To determine whether the classification performance was higher in the contralateral than the ipsilateral hemispheres, we performed a repeated-measures ANOVA with visual area (V1, V2, V3, and V3AB/V4) and ipsilateral-contralateral condition as separate factors. This analysis revealed a main effect of lateralization, F(1, 7) = 13.05, p < 0.01; no effect of visual area, F(3, 21) = 1.46, p = 0.25; and marginal evidence for an interaction between lateralization and visual area, F(3, 21) = 2.39, p = 0.10. Planned comparisons revealed that classification performance was significantly higher for contralateral than ipsilateral relationships for areas V1, t(7) = 5.00, p < 0.002, and V2, t(7) = 3.39, p = 0.01, and for the ROI that combined the activity patterns of visual areas V1 through V4, t(7) = 5.13, p = 0.001. However, performance did not reliably differ in areas V3, t(7) = 1.13, p = 0.29, or V3AB/V4, t(7) = 1.45, p = 0.19. These results suggest that the orientations of the lateral gratings were maintained in a retinotopically specific manner in the earliest cortical visual areas V1 and V2. The marginal interaction between visual area and the ipsilateral-contralateral factor was also suggestive of a gradual shift in how these gratings are represented in different visual areas during working memory maintenance, with representations becoming less retinotopically specific in higher visual areas.
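The t statistics used for the comparisons against chance and for the planned contralateral-versus-ipsilateral comparisons follow the standard one-sample and paired forms, sketched below. The function names and the per-participant accuracies are hypothetical and serve only to show that eight participants yield 7 degrees of freedom. Converting t to a p value requires the Student t distribution, which the Python standard library does not provide, so that step is omitted.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(values, null_value=0.0):
    """Return (t, degrees of freedom) for a one-sample t test."""
    n = len(values)
    standard_error = stdev(values) / sqrt(n)
    return (mean(values) - null_value) / standard_error, n - 1


def paired_t(a, b):
    """Paired t test, computed as a one-sample test on the differences."""
    return one_sample_t([x - y for x, y in zip(a, b)])


# Hypothetical accuracies for eight participants (not data from the study).
contra = [0.60, 0.58, 0.62, 0.55, 0.59, 0.61, 0.57, 0.60]
ipsi = [0.52, 0.50, 0.55, 0.51, 0.49, 0.53, 0.52, 0.50]

t_chance, df_chance = one_sample_t(contra, null_value=0.5)
t_paired, df_paired = paired_t(contra, ipsi)
```

The paired form is appropriate here because each participant contributes both a contralateral and an ipsilateral score for the same visual area.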

We conducted an additional analysis to determine whether the effects we observed were consistent across a range of ROI sizes based on the activation maps obtained from our independent visual localizer experiment. The right panel of Figure 3 shows the difference between classification performance in the contralateral and ipsilateral hemispheres plotted as a function of the number of voxels selected from each ROI. Positive values indicate a contralateral advantage. Across a wide range of ROI sizes, decoding performance was significantly higher in contralateral than ipsilateral hemispheres for the combined V1–V4 ROI, V1, and also V2, indicating that this contralateral advantage is reliable and robust. Although we decided a priori to use an ROI size of 60 voxels to match the selection criterion used by Ester et al. (2009), it is worth noting that with a larger ROI size of 80–90 voxels, area V3 also exhibits a significant contralateral advantage.

Time-resolved pattern classification

In addition to classification of BOLD activity patterns averaged over the duration of the retention interval, it is possible to perform a time-resolved analysis by classifying orientation separately for each fMRI time point (cf. Albers, Kok, Toni, Dijkerman, & de Lange, 2013; Harrison & Tong, 2009). Specifically, SVMs were trained and tested separately on activity patterns at each time point using a leave-one-run-out cross-validation procedure. To improve statistical power, we first performed this analysis by combining the activity patterns across early visual areas V1–V4.

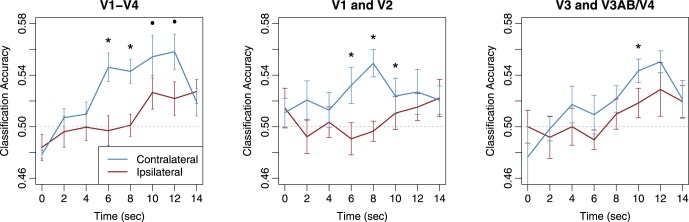

The left panel of Figure 4 shows that reliable information emerges over time in the contralateral hemisphere, reaching statistical significance by time point 6 s with statistically significant or marginally significant classification performance observed at time points 6 s through 12 s. The rise in information over the first 6 s is consistent with the BOLD hemodynamic lag, and the sustained component thereafter agrees with previous reports showing the maintenance of item-specific information in early visual areas throughout the delay period (Harrison & Tong, 2009). In contrast, performance for ipsilateral V1–V4 is generally lower, appears to rise later in time, and does not reach statistical significance. Planned comparisons with a one-tailed t test indicated that classification performance was significantly greater for the contralateral than the ipsilateral representation at the time points of 6 s, t(7) = 4.19, p < 0.01, and 8 s, t(7) = 4.08, p < 0.01, and the difference was marginally significant at 10 s, t(7) = 2.08, p = 0.08, and 12 s, t(7) = 2.19, p = 0.06. The results of this time-resolved analysis show that the contralateral-over-ipsilateral advantage in classification accuracy is not idiosyncratic; rather, it persists for a large proportion of the retention interval and is robust enough to be detectable at even a single acquisition.

Figure 4.

Time-resolved classification of remembered orientation for activity patterns in a combined V1–V4 ROI (left), an ROI combining visual areas V1 and V2 (middle), and an ROI combining areas V3 and V3AB/V4 (right). Pattern classifiers were trained and tested separately for each BOLD acquisition in each ROI. Error bars denote standard errors, asterisks denote significant differences between contralateral (blue curve) and ipsilateral (red curve) classification performance at each time point (p < 0.05, not corrected for multiple comparisons), and dots denote marginal significance (p < 0.10).

We also conducted the time-resolved classification analysis separately for areas V1–V2 combined and for V3–V4 combined, motivated by the fact that our main analyses indicated retinotopic specificity in V1 and V2. The middle panel of Figure 4 shows the time-resolved classification analysis for V1 and V2 combined, and the results again suggest significant classification performance in the contralateral hemisphere throughout the retention interval but very little evidence for information in the ipsilateral hemisphere. In addition, contralateral performance was significantly higher than ipsilateral performance at the time points of 6 s, t(7) = 3.27, p = 0.007; 8 s, t(7) = 3.52, p = 0.005; and 10 s, t(7) = 1.89, p = 0.05. In contrast, the right panel of Figure 4 suggests that in V3 and V3AB/V4, classification performance rises very slowly over the retention period in both contralateral and ipsilateral hemispheres. In these higher extrastriate areas, there appears to be somewhat better classification for the contralateral representation although this advantage was only significant at 10 s, t(7) = 1.94, p = 0.046. Taken together, the results indicate that early visual areas V1 and V2 maintain a greater amount of information about the contralateral stimulus than the ipsilateral stimulus throughout the delay period.

Discussion

According to the sensory recruitment hypothesis, visual working memory representations are maintained in early visual areas using the same neural machinery that underlies visual perception. Because retinotopic specificity is a defining feature of sensory processing in early visual areas, the sensory recruitment hypothesis predicts that representations in visual working memory should also exhibit retinotopic specificity. We tested this prediction by requiring participants to retain the orientations of two laterally presented gratings. We measured the amount of item-specific orientation information in the hemispheres contralateral and ipsilateral to the location of each remembered grating using multivariate pattern classification. In early visual areas V1 and V2, classification of the retained orientation was greater in the contralateral than in the ipsilateral hemisphere. These results suggest that the oriented gratings were retained in working memory in a retinotopically specific manner, supporting the notion that perception and memory share a common substrate in early visual areas (Harrison & Tong, 2009; Serences et al., 2009).

Behavioral evidence has shown that visual features, such as orientation and spatial frequency, can be retained for many seconds with minimal loss in precision beyond what is observed in immediate perceptual judgments (Magnussen & Greenlee, 1999; Zhang & Luck, 2009). Moreover, in working memory tasks, visual features are reported with higher precision when the probe stimulus appears in the same location as the original sample stimulus (Hollingworth, 2006, 2007; Zaksas et al., 2001). Our work corroborates these behavioral observations, supporting the role of early visual areas in maintaining a precise, retinotopically specific representation of a visual stimulus in working memory.

Our results differ from those of Ester et al. (2009), who found that the orientation of a laterally presented grating held in memory could be classified equally well in the contralateral and ipsilateral V1, suggesting a spatially global representation. We suspect that this discrepancy reflects a critical difference in design between their study and our own. In our study, participants were required to retain the orientations of two gratings presented simultaneously. We designed this task to encourage participants to not just remember the orientations, but to remember where each orientation was presented. In contrast, the Ester et al. study required only a single grating to be maintained, and this task provided little incentive to retain the orientation of the grating in the spatial location in which it was studied. For example, one possible strategy might be to represent the lateral grating at the fovea, potentially increasing performance and making classification in the ipsilateral hemisphere possible. The time course analysis of our data (Figure 4) further supports the possibility that transformations in visual working memory are reflected in early visual areas: Over the course of the 11-s retention interval, classification appears to become less retinotopically specific. We suspect that this pattern of results may reflect an online transformation of information in visual working memory, such as a loss of retinotopic specificity. However, this hypothesis should be considered tentative, and more research will be needed to determine how such online transformations are reflected in representations in early visual areas.

We found that the spatial specificity of working memory representations was strongest in areas V1 and V2, but appeared to become more retinotopically diffuse in higher extrastriate visual areas. These findings concur with fMRI studies of retinotopic mapping, which find evidence of BOLD responses to ipsilateral stimulation in higher visual areas, including areas V3A and V4 (Dumoulin & Wandell, 2008; Tootell, Mendola, Hadjikhani, Liu, & Dale, 1998). Although this pattern of results suggests a similarity between perception and memory maintenance, it also raises an important question: If memory-related activity in early visual areas results from top-down feedback progressing downward through the visual hierarchy, then how can a representation beginning with poor retinotopic specificity in high-level areas lead to greater specificity as information makes its way back to V1? Alternatively, if visual information is stored directly in V1 during working memory maintenance with information propagating forward through the visual stream and losing spatial specificity along the way, what is the role of feedback in maintaining such representations in V1? Although much more work is needed to address these questions, we believe that fMRI pattern classification provides a promising avenue for examining them.

Previous fMRI studies have shown that, in simple perception tasks, reliable orientation information can only be found in the activity patterns of contralateral visual areas, with negligible information found in ipsilateral visual areas (see Figure 1; Kamitani & Tong, 2005; Tong et al., 2012). However, when participants must attend to a particular visual feature, such as the orientation of a grating or the motion of a set of drifting dots, feature-based attention can lead to the spreading of attended information to the ipsilateral hemisphere. For example, Serences and Boynton (2007) showed that attending to the motion of a unilateral motion stimulus led to direction-specific activity patterns not only in contralateral visual areas but also in ipsilateral visual areas, which showed weaker but reliable direction information. Similarly, Jehee et al. (2011) found that attending to the orientation of a lateral grating improved orientation classification performance in contralateral visual areas (from ∼70% to ∼80% accuracy) and also boosted the performance of ipsilateral visual areas from chance level to ∼60% accuracy. These studies of feature-based attention indicate that feature information can spread to the ipsilateral hemisphere but that a contralateral advantage persists due to the presence of retinotopically specific stimulus information.

Likewise, here we observe some information about an actively remembered stimulus in ipsilateral regions of higher extrastriate areas, but we find generally better classification in contralateral than ipsilateral visual areas V1 and V2. Taken together, these findings suggest that representations of both attended features and features held in working memory involve some degree of retinotopic specificity but that feature-specific information may also spread to other regions depending on the task demands. The concepts of working memory and attention are clearly intertwined (e.g., Engle, 2002), and the nature of retinotopic specificity in feature-based attention may play an important role in our understanding of retinotopic specificity in working memory.

The concepts of visual working memory and mental imagery are also closely related (Kosslyn, Ganis, & Thompson, 2001; Luck & Vogel, 2013), and the possibility that these cognitive functions may rely on common perceptual mechanisms has been a focus of recent discussions (Tong, 2013). In particular, a recent fMRI study by Albers et al. (2013) demonstrated that orientations held in working memory led to activity patterns in early visual areas that were very similar to those resulting from acts of mental imagery. The authors also found that performing a mental rotation task on an oriented grating led to the predicted updating of the orientation represented in early visual areas. These experiments, together with behavioral evidence demonstrating an influence of mental imagery on perception (Pearson, Clifford, & Tong, 2008), suggest that the same neural machinery responsible for perception supports both working memory and imagery and presumably does so in similar ways. The idea that other higher-level cognitive processes such as mental imagery rely on early sensory processing in the same way that working memory does provides a powerful framework for understanding the relationships between these processes. Indeed, the strongest formulations of the sensory recruitment hypothesis can be attributed to an extensive line of research on visual imagery (see Kosslyn et al., 2001 for a review). However, more work will be needed to determine the extent to which working memory and mental imagery rely on overlapping or distinct mechanisms.

We used MVPA to measure the amount of orientation-specific information present in early visual areas during working memory maintenance. Differences in mean BOLD amplitude across the contralateral and ipsilateral hemispheres could simply reflect differences in attentional allocation and need not indicate anything about the strength of feature representations. Pattern classification, in contrast, provides a powerful tool for measuring the strength of those representations. However, since the earliest use of MVPA to classify orientation signals from visual areas, the source of the signal that allows for this classification has been debated. Some researchers have suggested that the orientation information present in fMRI signals arises from local anisotropies in the distribution of orientation columns in early visual areas (Alink, Krugliak, Walther, & Kriegeskorte, 2013; Kamitani & Tong, 2005; Swisher et al., 2010), whereas others have argued that low-frequency biases in the layout of orientation-tuned neurons largely account for successful orientation classification (Freeman, Brouwer, Heeger, & Merriam, 2011; Sasaki et al., 2006). Based on our own research, we find evidence of orientation information at both local and global spatial scales. That being said, the goals of the present study on visual working memory are distinct from and orthogonal to this ongoing debate. Regardless of the source of the orientation signal, it undoubtedly reflects information about the orientation of the remembered stimulus in the visual cortex.
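To illustrate why pattern classification can reveal information that mean amplitude cannot, the sketch below simulates two orientations that evoke the same voxel-averaged response but opposite fine-scale patterns. All values are simulated, and every function and variable name is hypothetical. The nearest-centroid classifier with leave-one-trial-out cross-validation is a simple stand-in chosen for brevity; it is not the classifier or cross-validation scheme used in this study.

```python
import random


def make_trials(n_trials, n_voxels, signal, rng):
    """Simulate trials for two orientations with matched mean amplitude.

    Orientation 0 adds +signal to even voxels and -signal to odd voxels;
    orientation 1 does the reverse. With an even number of voxels, the
    expected voxel-averaged response is identical for both orientations.
    """
    trials = []
    for k in range(n_trials):
        label = k % 2
        sign = 1.0 if label == 0 else -1.0
        pattern = [
            sign * signal * (1.0 if v % 2 == 0 else -1.0) + rng.gauss(0.0, 1.0)
            for v in range(n_voxels)
        ]
        trials.append((pattern, label))
    return trials


def centroid(patterns):
    """Voxel-wise mean of a list of activity patterns."""
    n = len(patterns)
    return [sum(col) / n for col in zip(*patterns)]


def sq_dist(a, b):
    """Squared Euclidean distance between two patterns."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def loo_accuracy(trials):
    """Leave-one-trial-out accuracy of a nearest-centroid classifier."""
    correct = 0
    for i, (test_pattern, test_label) in enumerate(trials):
        train = trials[:i] + trials[i + 1:]
        cents = {
            lab: centroid([p for p, l in train if l == lab])
            for lab in (0, 1)
        }
        predicted = min(cents, key=lambda lab: sq_dist(test_pattern, cents[lab]))
        correct += predicted == test_label
    return correct / len(trials)


rng = random.Random(0)  # fixed seed so the simulation is repeatable
# Simulated region carrying orientation information in its voxel pattern
informative_roi = make_trials(n_trials=40, n_voxels=40, signal=1.0, rng=rng)
# Simulated region carrying no orientation information (noise only)
uninformative_roi = make_trials(n_trials=40, n_voxels=40, signal=0.0, rng=rng)

acc_informative = loo_accuracy(informative_roi)
acc_uninformative = loo_accuracy(uninformative_roi)
print(acc_informative, acc_uninformative)
```

In this simulation, accuracy for the informative region should be near ceiling, whereas accuracy for the noise-only region should hover around chance (0.5), even though the two orientations do not differ in expected mean amplitude in either region.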

The present finding of retinotopic specificity during the active maintenance of visual orientation provides further evidence to support the sensory recruitment hypothesis and complements a growing body of research on the functional role of early visual areas in visual working memory. Recent studies have demonstrated the presence of stimulus-specific information in early visual areas during working memory tasks, not only for stimulus orientation (Harrison & Tong, 2009; Serences et al., 2009), but also for color (Serences et al., 2009), motion direction (Riggall & Postle, 2012), stimulus contrast (Xing, Ledgeway, McGraw, & Schluppeck, 2013), and more complex visual patterns (Christophel, Hebart, & Haynes, 2012). Although it remains to be determined whether retinotopically specific representations would be found in early visual areas for feature domains other than stimulus orientation, based on the current findings, we would predict that this would likely be the case. Indeed, typical visual working memory tasks require participants to maintain precise information about what features are where in the visual field (e.g., Bays & Husain, 2008; Zhang & Luck, 2008). Given the precision with which early visual areas can encode spatial and featural information to support perception, these cortical areas would appear to provide an ideal site for maintaining high-resolution information about stimuli that are no longer in view.

Acknowledgments

We thank E. C. Lorenc for technical assistance. This research was supported by grants from the National Science Foundation (BCS-1228526) and the National Eye Institute grant R01-EY017082 to FT and the National Eye Institute grant F32-EY022569 to MP and was facilitated by an NIH P30-EY008126 center grant to the Vanderbilt Vision Research Center.

Commercial relationships: none.

Corresponding author: Michael S. Pratte.

Email: prattems@gmail.com.

Address: Psychology Department and Vanderbilt Vision Research Center, Vanderbilt University, Nashville, TN, USA.

Contributor Information

Michael S. Pratte, Email: prattems@gmail.com.

Frank Tong, Email: frank.tong@vanderbilt.edu.

References

- Albers A. M., Kok P., Toni I., Dijkerman H. C., de Lange F. P. (2013). Shared representations for working memory and mental imagery in early visual cortex. Current Biology, 23 (15), 1427–1431.

- Alink A., Krugliak A., Walther A., Kriegeskorte N. (2013). fMRI orientation decoding in V1 does not require global maps or globally coherent orientation stimuli. Frontiers in Psychology, 4, 493.

- Anderson D. E., Ester E. F., Serences J. T., Awh E. (2013). Attending multiple items decreases the selectivity of population responses in human primary visual cortex. Journal of Neuroscience, 33 (22), 9273–9282. [Retracted]

- Baddeley A. (1986). Working memory. Oxford, UK: Oxford University Press.

- Bays P. M., Husain M. (2008). Dynamic shifts of limited working memory resources in human vision. Science, 321 (5890), 851–854.

- Christophel T. B., Hebart M. N., Haynes J. D. (2012). Decoding the contents of visual short-term memory from human visual and parietal cortex. Journal of Neuroscience, 32 (38), 12983–12989.

- Cowan N. (2008). What are the differences between long-term, short-term, and working memory? Progress in Brain Research, 169, 323–338.

- Dale A. M., Fischl B., Sereno M. I. (1999). Cortical surface-based analysis. I. Segmentation and surface reconstruction. Neuroimage, 9 (2), 179–194.

- DeYoe E. A., Carman G. J., Bandettini P., Glickman S., Wieser J., Cox R., Neitz J. (1996). Mapping striate and extrastriate visual areas in human cerebral cortex. Proceedings of the National Academy of Sciences, USA, 93 (6), 2382–2386.

- Dumoulin S. O., Wandell B. A. (2008). Population receptive field estimates in human visual cortex. Neuroimage, 39 (2), 647–660.

- Engel S. A., Glover G. H., Wandell B. A. (1997). Retinotopic organization in human visual cortex and the spatial precision of functional MRI. Cerebral Cortex, 7 (2), 181–192.

- Engle R. W. (2002). Working memory capacity as executive attention. Current Directions in Psychological Science, 11 (1), 19–23.

- Ester E. F., Serences J. T., Awh E. (2009). Spatially global representations in human primary visual cortex during working memory maintenance. Journal of Neuroscience, 29 (48), 15258–15265.

- Freeman J., Brouwer G. J., Heeger D. J., Merriam E. P. (2011). Orientation decoding depends on maps, not columns. Journal of Neuroscience, 31 (13), 4792–4804.

- Fuster J. M., Alexander G. E. (1971). Neuron activity related to short-term memory. Science, 173 (3997), 652–654.

- Greve D. N., Fischl B. (2009). Accurate and robust brain image alignment using boundary-based registration. Neuroimage, 48 (1), 63–72.

- Harrison S. A., Tong F. (2009). Decoding reveals the contents of visual working memory in early visual areas. Nature, 458 (7238), 632–635.

- Hollingworth A. (2006). Scene and position specificity in visual memory for objects. Journal of Experimental Psychology: Learning, Memory, and Cognition, 32 (1), 58–69.

- Hollingworth A. (2007). Object-position binding in visual memory for natural scenes and object arrays. Journal of Experimental Psychology: Human Perception and Performance, 33 (1), 31–47.

- Jehee J. F., Brady D. K., Tong F. (2011). Attention improves encoding of task-relevant features in the human visual cortex. Journal of Neuroscience, 31 (22), 8210–8219.

- Jenkinson M., Bannister P., Brady M., Smith S. (2002). Improved optimization for the robust and accurate linear registration and motion correction of brain images. Neuroimage, 17 (2), 825–841.

- Kamitani Y., Tong F. (2005). Decoding the visual and subjective contents of the human brain. Nature Neuroscience, 8 (5), 679–685.

- Kosslyn S. M., Ganis G., Thompson W. L. (2001). Neural foundations of imagery. Nature Reviews Neuroscience, 2 (9), 635–642.

- Luck S. J., Vogel E. K. (2013). Visual working memory capacity: From psychophysics and neurobiology to individual differences. Trends in Cognitive Sciences, 17 (8), 391–400.

- Magnussen S., Greenlee M. W. (1999). The psychophysics of perceptual memory. Psychological Research, 62 (2–3), 81–92.

- Miller E. K., Erickson C. A., Desimone R. (1996). Neural mechanisms of visual working memory in prefrontal cortex of the macaque. Journal of Neuroscience, 16 (16), 5154–5167.

- Miyashita Y., Chang H. S. (1988). Neuronal correlate of pictorial short-term memory in the primate temporal cortex. Nature, 331 (6151), 68–70.

- Norman K. A., Polyn S. M., Detre G. J., Haxby J. V. (2006). Beyond mind-reading: Multi-voxel pattern analysis of fMRI data. Trends in Cognitive Sciences, 10 (9), 424–430.

- Pasternak T., Greenlee M. W. (2005). Working memory in primate sensory systems. Nature Reviews Neuroscience, 6 (2), 97–107.

- Pearson J., Clifford C. W., Tong F. (2008). The functional impact of mental imagery on conscious perception. Current Biology, 18 (13), 982–986.

- Pessoa L., Gutierrez E., Bandettini P., Ungerleider L. (2002). Neural correlates of visual working memory: fMRI amplitude predicts task performance. Neuron, 35 (5), 975–987.

- Ranganath C., D'Esposito M. (2005). Directing the mind's eye: Prefrontal, inferior and medial temporal mechanisms for visual working memory. Current Opinion in Neurobiology, 15 (2), 175–182.

- Riggall A. C., Postle B. R. (2012). The relationship between working memory storage and elevated activity as measured with functional magnetic resonance imaging. Journal of Neuroscience, 32 (38), 12990–12998.

- Sasaki Y., Rajimehr R., Kim B. W., Ekstrom L. B., Vanduffel W., Tootell R. B. (2006). The radial bias: A different slant on visual orientation sensitivity in human and nonhuman primates. Neuron, 51 (5), 661–670.

- Serences J. T., Boynton G. M. (2007). Feature-based attentional modulations in the absence of direct visual stimulation. Neuron, 55 (2), 301–312.

- Serences J. T., Ester E. F., Vogel E. K., Awh E. (2009). Stimulus-specific delay activity in human primary visual cortex. Psychological Science, 20 (2), 207–214.

- Sereno M. I., Dale A. M., Reppas J. B., Kwong K. K., Belliveau J. W., Brady T. J., Tootell R. B. (1995). Borders of multiple visual areas in humans revealed by functional magnetic resonance imaging. Science, 268 (5212), 889–893.

- Swisher J. D., Gatenby J. C., Gore J. C., Wolfe B. A., Moon C. H., Kim S. G., Tong F. (2010). Multiscale pattern analysis of orientation-selective activity in the primary visual cortex. Journal of Neuroscience, 30 (1), 325–330.

- Todd J. J., Marois R. (2004). Capacity limit of visual short-term memory in human posterior parietal cortex. Nature, 428 (6984), 751–754.

- Tong F. (2013). Imagery and visual working memory: One and the same? Trends in Cognitive Sciences, 17 (10), 489–490.

- Tong F., Harrison S. A., Dewey J. A., Kamitani Y. (2012). Relationship between BOLD amplitude and pattern classification of orientation-selective activity in the human visual cortex. Neuroimage, 63 (3), 1212–1222.

- Tong F., Pratte M. S. (2012). Decoding patterns of human brain activity. Annual Review of Psychology, 63, 483–509.

- Tootell R. B., Mendola J. D., Hadjikhani N. K., Liu A. K., Dale A. M. (1998). The representation of the ipsilateral visual field in human cerebral cortex. Proceedings of the National Academy of Sciences, USA, 95 (3), 818–824.

- Vapnik V. (1998). Statistical learning theory. New York: Wiley-Interscience.

- Xing Y., Ledgeway T., McGraw P. V., Schluppeck D. (2013). Decoding working memory of stimulus contrast in early visual cortex. Journal of Neuroscience, 33 (25), 10301–10311.

- Xu Y., Chun M. M. (2006). Dissociable neural mechanisms supporting visual short-term memory for objects. Nature, 440 (7080), 91–95.

- Zaksas D., Bisley J. W., Pasternak T. (2001). Motion information is spatially localized in a visual working-memory task. Journal of Neurophysiology, 86 (2), 912–921.

- Zhang W., Luck S. J. (2008). Discrete fixed-resolution representations in visual working memory. Nature, 453 (7192), 233–235.

- Zhang W., Luck S. J. (2009). Sudden death and gradual decay in visual working memory. Psychological Science, 20 (4), 423–428.